by Scott Muniz | Jan 27, 2023 | Security, Technology

This article is contributed. See the original author and article here.

The Internet Systems Consortium (ISC) has released security advisories that address vulnerabilities affecting multiple versions of the ISC’s Berkeley Internet Name Domain (BIND) 9. A remote attacker could exploit these vulnerabilities to potentially cause denial-of-service conditions and system failures.

CISA encourages users and administrators to review the following ISC advisories, CVE-2022-3094, CVE-2022-3488, CVE-2022-3736, and CVE-2022-3924, and to apply the necessary mitigations.

by Contributed | Jan 27, 2023 | Technology

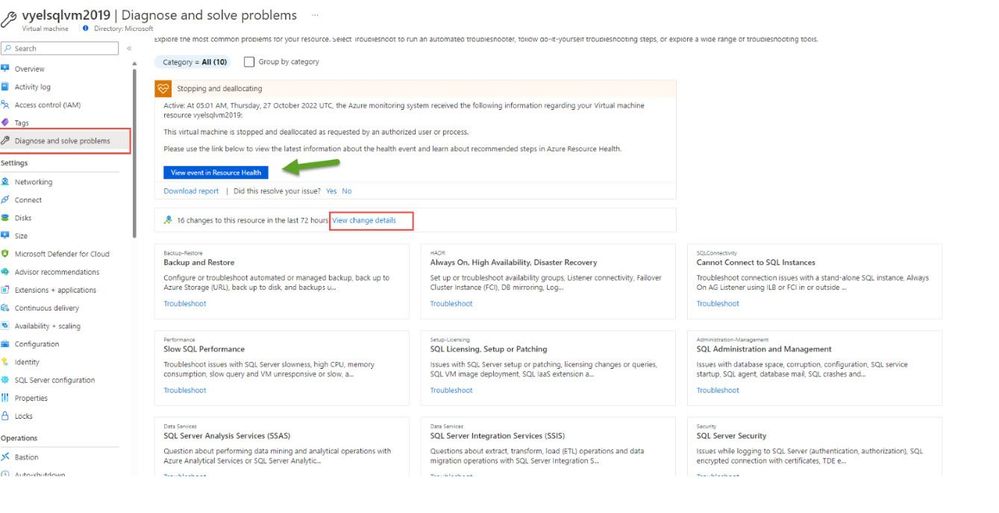

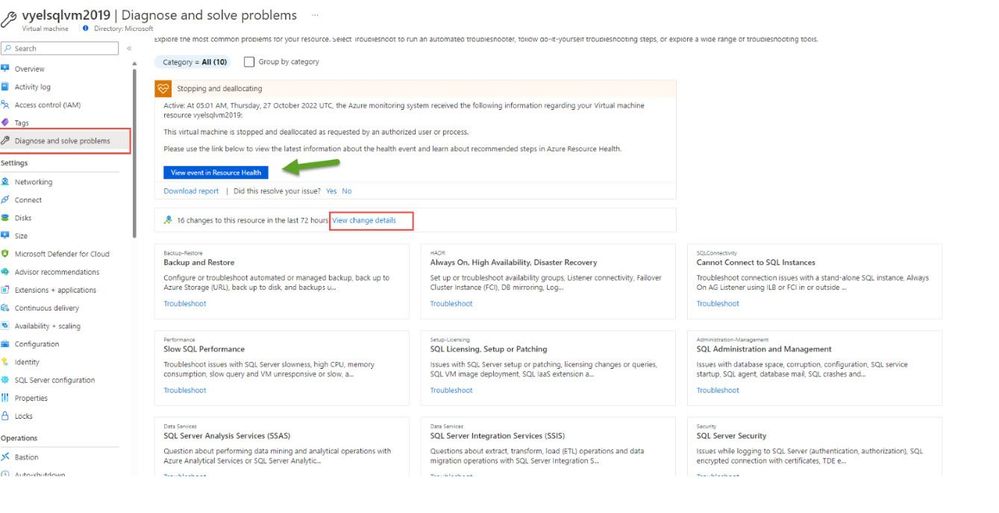

Software fails, hardware breaks, and you can run into issues when trying new things. Whenever we encounter an issue on Azure, we quickly open our favorite search engine, weed through the results, and look for answers that pertain to that issue. But what if I told you that we have a blade in the Azure Portal that can help you diagnose and resolve issues, find authoritative troubleshooting resources (tools, guided steps, and articles), and easily get additional help as needed?

The Diagnose and Solve Problems blade helps Azure customers troubleshoot and solve Azure service issues through service and resource health insights, automated troubleshooters (insight diagnostics), curated troubleshooting guides (common solutions), and additional troubleshooting tools provided by service teams.

This blade is available for all Azure resources. In this blog post, we will use SQL Server on Azure VM as an example.

The Diagnose and Solve Problems blade contains two sections:

- Common Problems

- Troubleshooting Tools

Common Problems

Each common problem has a title, a category, and a brief description, making it easier for customers to make the right selection. Customers can also search or use filters to look for a problem or a tool, and problems can be grouped by category.

The following categories are listed:

- Administration-Management

- Backup-Restore

- Data Services

- HADR

- Performance

- Security

- Setup-Licensing

- SQL Connectivity

- Change

- Health
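To picture how searching, filtering, and grouping by category fit together, here is a minimal Python sketch. It is not how the portal is implemented: the `CommonProblem` record and the `search`, `filter_by_category`, and `group_by_category` helpers are hypothetical, and the sketch assumes each problem carries only the title, category, and description described above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CommonProblem:
    # Hypothetical record: each common problem has a title, a category, and a description.
    title: str
    category: str
    description: str


def search(problems, keyword):
    """Return problems whose title or description contains the keyword (case-insensitive)."""
    needle = keyword.lower()
    return [p for p in problems
            if needle in p.title.lower() or needle in p.description.lower()]


def filter_by_category(problems, category):
    """Keep only the problems that belong to the given category."""
    return [p for p in problems if p.category == category]


def group_by_category(problems):
    """Map each category to the titles of its problems, preserving input order."""
    groups = defaultdict(list)
    for p in problems:
        groups[p.category].append(p.title)
    return dict(groups)
```

For example, searching a list of problems for "restore" returns only the entries whose title or description mentions it, while grouping the same list yields one bucket per category, such as Backup-Restore or Performance.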

The page also shows the health of the resource and details of any changes made to the resource in the last 72 hours.

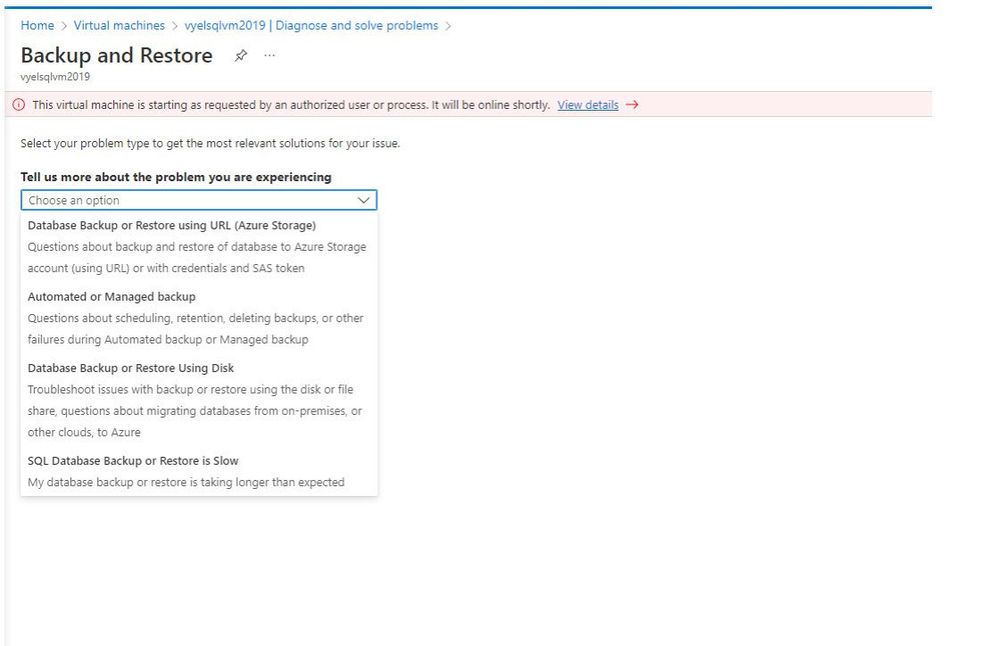

Once you identify the problem you are having, clicking the “Troubleshoot” button opens a new page where you can tell us more about the problem. Depending on the information provided and the problem scenario chosen, the page suggests ways to fix the issue.

I chose Backup and Restore as the problem. Clicking it takes me to a new page that asks me to describe the problem I am experiencing in more detail and offers further options that match my specific problem.

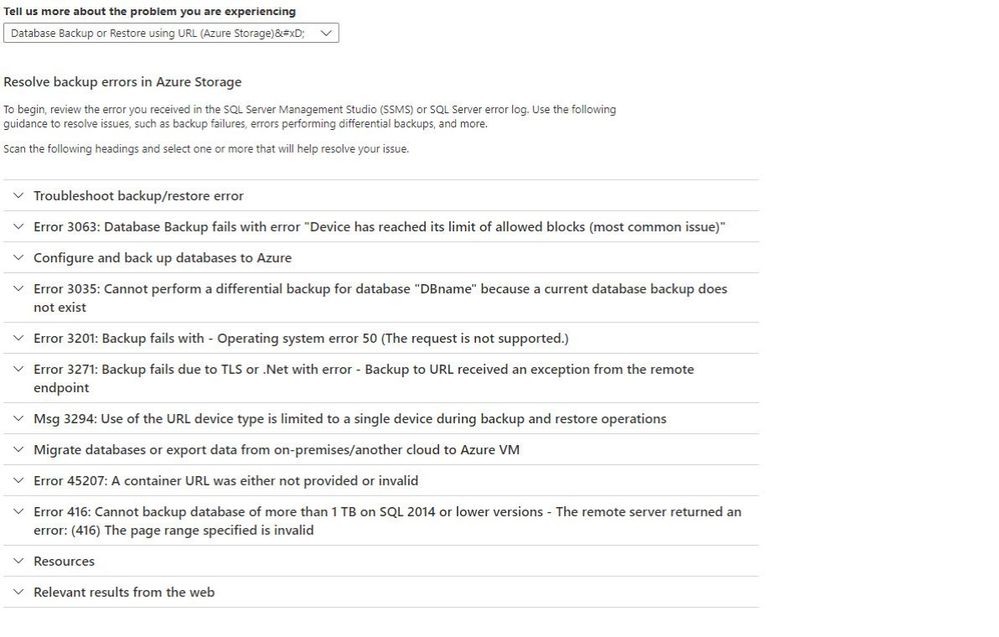

I chose “Database Backup or Restore using URL (Azure Storage)” as the issue that matches my problem. The page then lists common causes of issues related to backup or restore using URL.

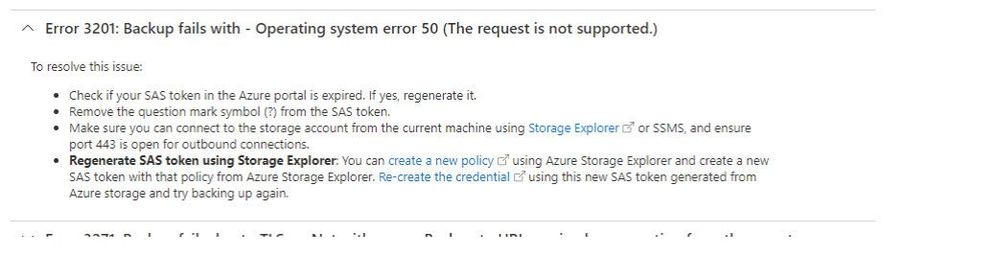

Assuming the error message I am getting is “Error 3201: Backup fails with – Operating system error 50”, clicking that headline shows me the steps to resolve the issue.

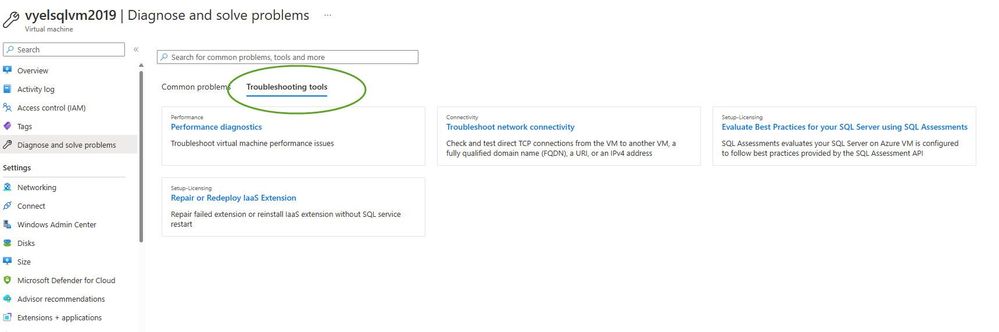

Troubleshooting Tools

Clicking the Troubleshooting Tools tab for SQL Server on Azure VM gives you four options:

- Performance Diagnostics

- Troubleshoot network connectivity

- Evaluate Best Practices for your SQL Server using SQL Assessments

- Repair or Redeploy IaaS extension

Performance Diagnostics

PerfInsights is a self-help diagnostics tool that collects diagnostic data, produces analytic reporting, summarizes system information, and produces system log output. It helps troubleshoot virtual machine performance problems in Azure across various scenarios, including SQL Server, Windows OS, Linux, Azure storage, and more.

For SQL Server on Azure VM, PerfInsights captures let you review SQL Server findings, compare storage analysis against Azure storage thresholds, review resource reporting, and review system summary information, which makes it much easier to document your Azure SQL virtual machine environment.

PerfInsights can be run on virtual machines as a standalone tool, directly from the portal by using Performance Diagnostics for Azure virtual machines, or by installing the Azure Performance Diagnostics VM extension.

If you are experiencing performance problems with virtual machines, run this tool before contacting support. More information can be found here.

Troubleshoot Network Connectivity

The connection troubleshoot feature of Network Watcher checks a direct TCP connection from a virtual machine (VM) to another VM, a fully qualified domain name (FQDN), a URI, or an IPv4 address. Network scenarios are complex: they are implemented using network security groups, firewalls, user-defined routes, and resources provided by Azure, and these complex configurations make connectivity issues challenging to troubleshoot. Network Watcher helps reduce the time it takes to find and detect connectivity issues, and the results it returns can indicate whether a connectivity issue is caused by the platform or by a user configuration.
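Network Watcher runs its check from the Azure side and can indicate whether a failure comes from the platform or from user configuration. As a rough local analogue only, the Python sketch below tests whether a direct TCP handshake to a host and port succeeds from the machine running it. The `check_tcp_connection` helper is hypothetical and is not part of Network Watcher or any Azure SDK; it cannot tell you which security group, firewall, or route blocked a connection.

```python
import socket


def check_tcp_connection(host, port, timeout=3.0):
    """Try to open a direct TCP connection and return (reachable, detail).

    This is a local probe from the current machine, not Network Watcher:
    it reports success or the error raised, nothing about where a block occurred.
    """
    try:
        # create_connection resolves the host (FQDN or IPv4 address) and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True, "connected"
    except OSError as exc:
        # Refused connections, timeouts, and DNS lookup failures are all OSError subclasses.
        return False, f"{type(exc).__name__}: {exc}"
```

For example, `check_tcp_connection("myvm.contoso.com", 1433)` (a hypothetical host name; 1433 is SQL Server's default port) returns `(True, "connected")` only if the handshake completes within the timeout.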

More information can be found here.

SQL Best Practices Assessment

SQL best practices assessment evaluates the configuration of your Azure SQL VM against best practices in areas such as indexes, deprecated features, trace flag usage, and statistics. Assessment results are uploaded to your Log Analytics workspace using the Microsoft Monitoring Agent (MMA).

To enable SQL best practices assessment, your SQL Server on Azure VM (SQL Server 2012 or later) must be registered with the SQL IaaS Agent extension in full mode. Registering your VM is easy and provides additional benefits that help you manage your SQL Server on Azure VM.
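The two prerequisites for the assessment can be expressed as a simple checklist. This Python sketch assumes you already know the SQL Server major version and the extension registration details for the VM; the `SqlVmInfo` fields and the `assessment_blockers` function are hypothetical and do not mirror any Azure API. It relies on SQL Server 2012 reporting major version 11.

```python
from dataclasses import dataclass

# SQL Server 2012 reports major version 11; later releases report higher numbers.
MIN_MAJOR_VERSION = 11


@dataclass
class SqlVmInfo:
    # Hypothetical snapshot of a VM; field names are illustrative, not an Azure schema.
    sql_major_version: int
    registered_with_iaas_extension: bool
    management_mode: str  # example values: "Full", "Lightweight"


def assessment_blockers(vm):
    """List unmet prerequisites for SQL best practices assessment (empty list means ready)."""
    blockers = []
    if vm.sql_major_version < MIN_MAJOR_VERSION:
        blockers.append("SQL Server 2012 or later is required")
    if not vm.registered_with_iaas_extension:
        blockers.append("VM is not registered with the SQL IaaS Agent extension")
    elif vm.management_mode.lower() != "full":
        # Registered, but not in the full mode the assessment needs.
        blockers.append("SQL IaaS Agent extension must be in full mode")
    return blockers
```

Returning a list of reasons rather than a single boolean makes it clear which step still needs attention before enabling the assessment.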

More information can be found here.

Repair or Redeploy IaaS Extension

Repair the SQL IaaS Agent extension if its status is “Failed”. This operation also installs the latest SQL IaaS Agent extension if it is not already installed.

There is also a “Force repair” option. Selecting it enables the Repair button even when the SQL IaaS Agent extension is in the “Succeeded” provisioning state, which lets you upgrade an extension that is already in that state to the latest version.
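The repair rules for the extension boil down to a small decision function. This is a hypothetical Python sketch of the behavior as this post describes it, not the portal's code; `repair_enabled` is an illustrative name, and returning `False` for any other provisioning state is an assumption.

```python
from typing import Optional


def repair_enabled(provisioning_state: Optional[str], force_repair: bool = False) -> bool:
    """Decide whether the Repair action applies to the SQL IaaS Agent extension.

    provisioning_state is None when the extension is not installed.
    """
    if provisioning_state is None:
        return True  # repair also installs the latest extension
    if provisioning_state == "Failed":
        return True  # a failed extension can always be repaired
    if provisioning_state == "Succeeded":
        return force_repair  # "Force repair" upgrades a healthy extension to the latest version
    return False  # assumption: other states (e.g. "Updating") do not allow repair
```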

For Azure SQL, similar scenarios are curated for both Azure SQL Database and Azure SQL Managed Instance. We will explore them in another blog post.

I encourage you all to take advantage of the Diagnose and Solve Problems blade to quickly identify and resolve your issues. If you cannot resolve an issue there, you can always contact Azure Support to troubleshoot it further.

by Scott Muniz | Jan 26, 2023 | Security, Technology

Today, the Joint Cyber Defense Collaborative (JCDC) announced its 2023 Planning Agenda. This release marks a major milestone in the continued evolution and maturation of the collaborative’s planning efforts. JCDC’s Planning Agenda brings together government and private sector partners to develop and execute cyber defense plans that achieve specific risk reduction goals focused on systemic risk, collective cyber response, and high-risk communities.

Through this effort, CISA and partners across government and the private sector will take steps to measurably reduce some of the most significant cyber risks facing the global cyber community. This effort also aims to deepen our collaborative capabilities to enable more rapid action when the need arises.

CISA encourages organizations to review JCDC’s Planning Agenda webpage and CISA Executive Assistant Director Eric Goldstein’s blog post on this effort for a deeper understanding of the collaborative’s joint cyber defense plans. Visit CISA.gov/JCDC to learn about other ways JCDC is uniting the global cyber community in the collective defense of cyberspace.
