Automated Machine Learning on the M5 Forecasting Competition

This article is contributed. See the original author and article here.

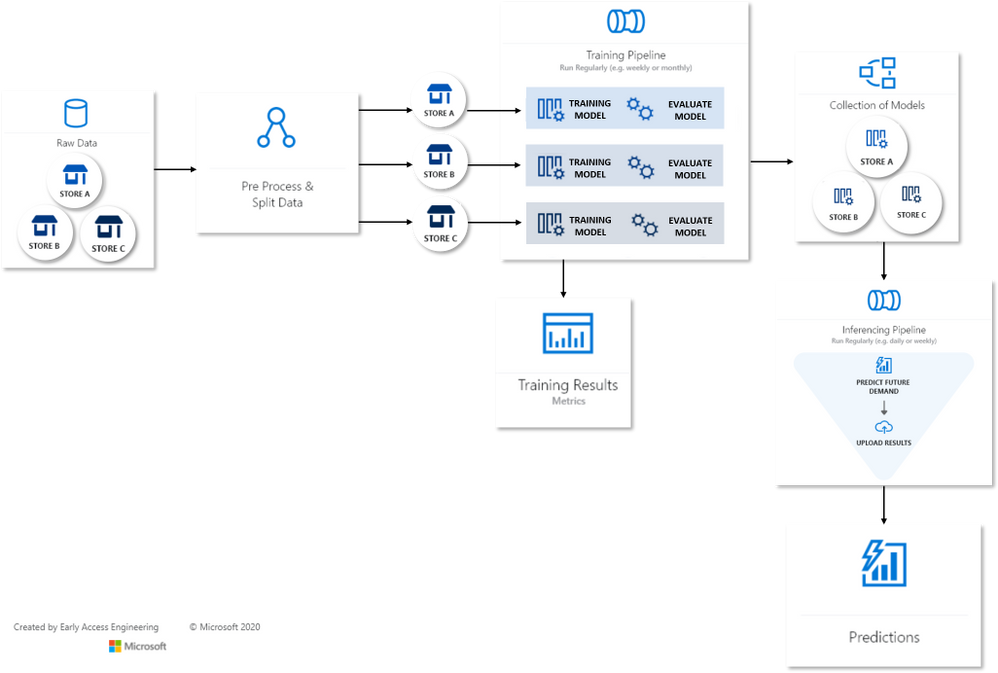

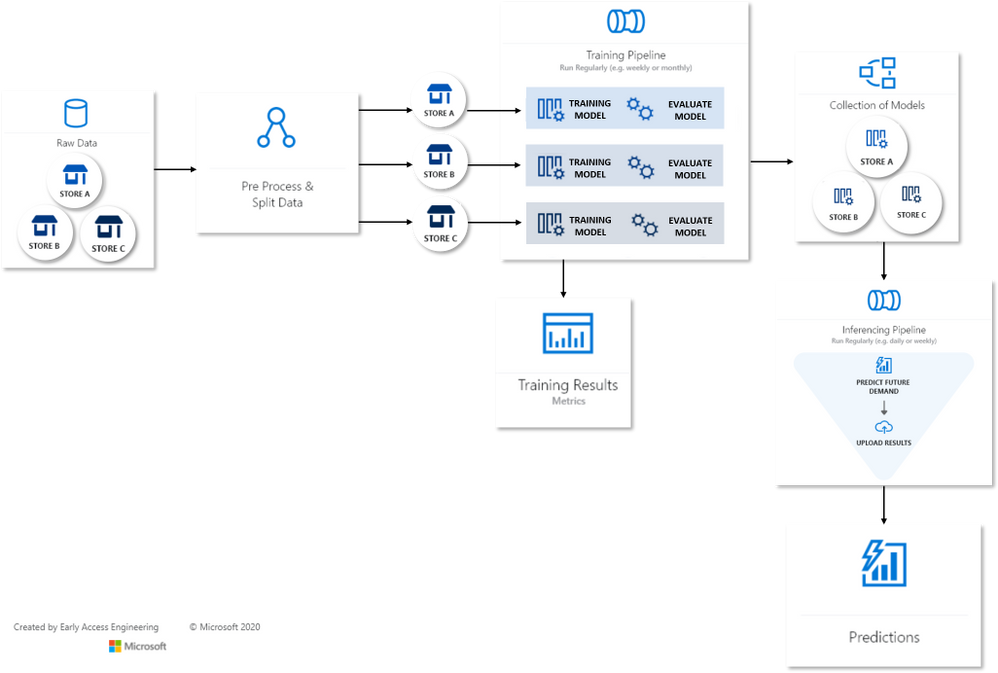

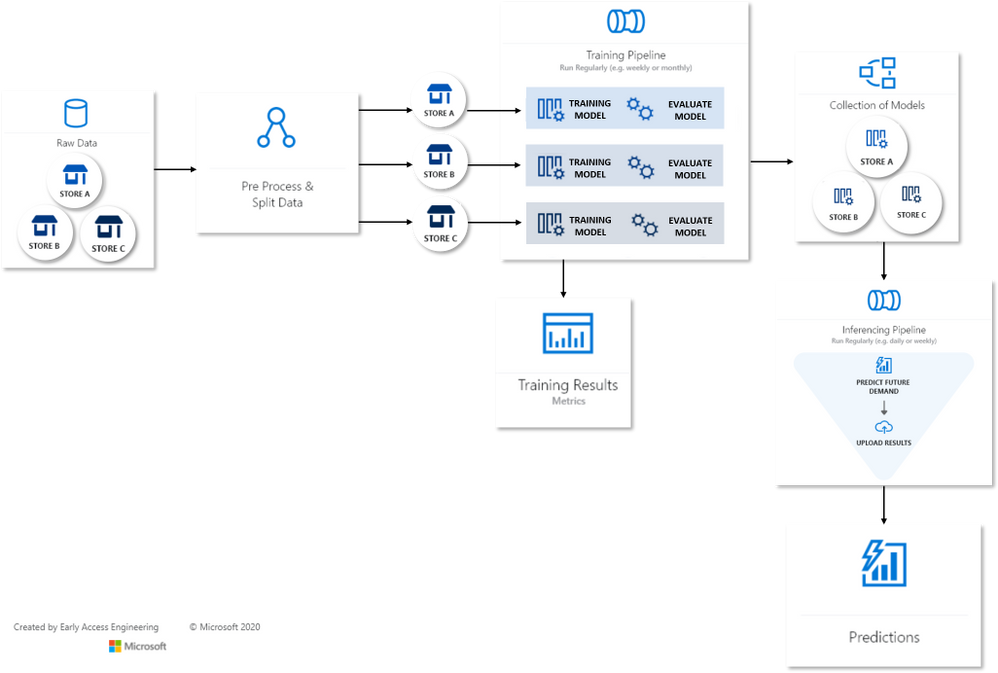

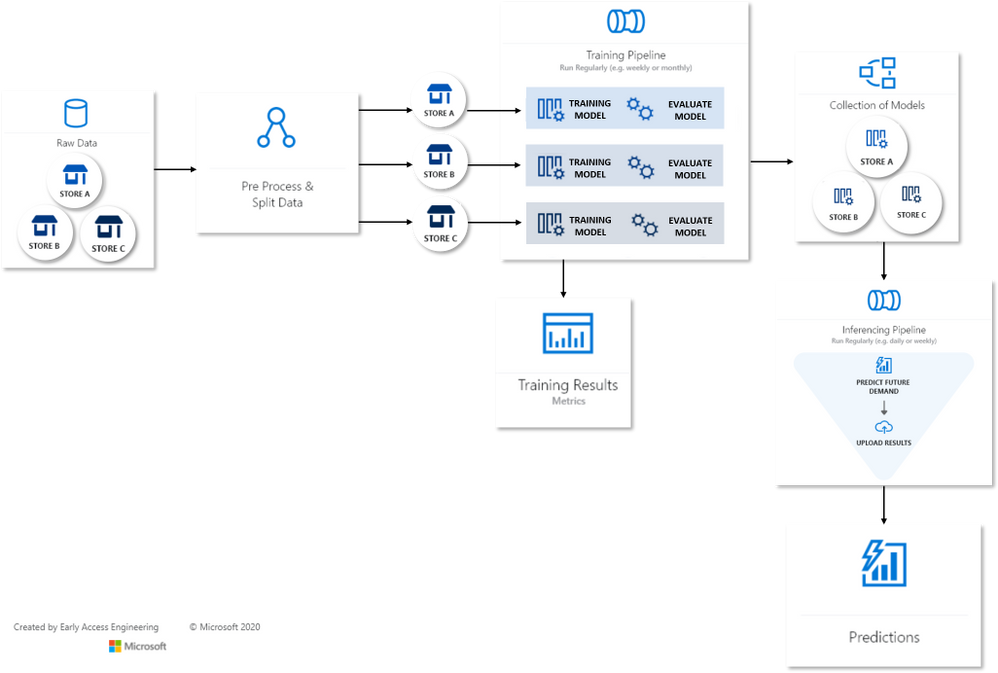

Many Models Flow Map


https://www.youtube-nocookie.com/embed/hsNc_QjYwfw

At the beginning of November, Microsoft held its second Ignite of the year, announcing or further clarifying details around many of the latest and upcoming features expected to roll out to Microsoft 365. However, because many US Federal cloud tenants see features months (if not longer) after they hit the commercial tenant, these users are often left wondering “what’s next for us?” instead of sharing the excitement commercial tenant owners have coming out of these conferences.

In this episode, we meet with Microsoft architect John Moh (LinkedIn) to discuss our favorite ways to stay up to date on what’s available to us in the GCC, GCC-H, and DOD tenants!


TL;DR: Using minimal API, you can create a Web API in just 4 lines of code by leveraging new features like top-level statements and more.

There are many reasons for wanting to create an API in a few lines of code:

There are a few differences:

The using directive and the namespace declaration are gone as well, so this code:

```csharp
using System;

namespace Application
{
    class Program
    {
        static void Main(string[] args)
        {
            Console.WriteLine("Hello World!");
        }
    }
}
```

is now this code:

```csharp
Console.WriteLine("Hello World!");
```

Routes are set up with a Map[VERB] function, such as MapGet(), which takes a route and a function to invoke when that route is hit.

To get started with minimal API, make sure that .NET 6 is installed. Then you can scaffold an API from the command line, like so:

```shell
dotnet new web -o MyApi -f net6.0
```

Once you run that, you get a folder MyApi with your API in it.

What you get is the following code in Program.cs:

```csharp
var builder = WebApplication.CreateBuilder(args);

var app = builder.Build();

if (app.Environment.IsDevelopment())
{
    app.UseDeveloperExceptionPage();
}

app.MapGet("/", () => "Hello World!");

app.Run();
```

To run it, type dotnet run. One difference you may notice is the port. The template picks random ports from a range rather than the 5000/5001 you may be used to. You can, however, configure the ports as needed. Learn more on this docs page.

OK, so you have a minimal API. What’s going on with the code?

```csharp
var builder = WebApplication.CreateBuilder(args);
```

On the first line, you create a builder instance. builder has a Services property, so you can add capabilities such as Swagger, CORS, Entity Framework, and more. Here’s an example where you set up Swagger capabilities (this requires the Swashbuckle.AspNetCore NuGet package):

```csharp
// Goes at the top of Program.cs; OpenApiInfo lives in this namespace.
using Microsoft.OpenApi.Models;

builder.Services.AddEndpointsApiExplorer();

builder.Services.AddSwaggerGen(c =>
{
    c.SwaggerDoc("v1", new OpenApiInfo { Title = "Todo API", Description = "Keep track of your tasks", Version = "v1" });
});
```

Here’s the next line:

```csharp
var app = builder.Build();
```

Here we create an app instance. Via the app instance, we can do things like start the app with app.Run(), set up routes with app.MapGet(), and add Swagger middleware with app.UseSwagger().

With the following code, a route and a route handler are configured:

```csharp
app.MapGet("/", () => "Hello World!");
```

The MapGet() method sets up a new route. It takes the route "/" as its first argument and a route handler, the function () => "Hello World!", as its second argument.

To start the app, and have it serve requests, the last thing you do is call Run() on the app instance like so:

```csharp
app.Run();
```

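To add another route, you can write:

```csharp
app.MapGet("/pizza", () => new Pizza(1, "Margherita"));

// In Program.cs, type declarations such as this record must come after all top-level statements.
public record Pizza(int Id, string Name);
```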

Now you have code that looks like this:

```csharp
var builder = WebApplication.CreateBuilder(args);

var app = builder.Build();

if (app.Environment.IsDevelopment())
{
    app.UseDeveloperExceptionPage();
}

app.MapGet("/pizza", () => new Pizza(1, "Margherita"));

app.MapGet("/", () => "Hello World!");

app.Run();

// The record sits below app.Run(): type declarations must follow all top-level statements.
public record Pizza(int Id, string Name);
```

Were you to run this code with dotnet run and navigate to /pizza, you would get a JSON response:

```json
{
  "id": 1,
  "name": "Margherita"
}
```
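Minimal APIs serialize return values with System.Text.Json using its web defaults, which include camelCase property names. That is why Id and Name come back as id and name. The console sketch below reproduces that response body under this assumption. The PizzaJson helper is hypothetical and exists only for illustration.

```csharp
using System.Text.Json;

// Same shape as the record returned by the /pizza route.
public record Pizza(int Id, string Name);

public static class PizzaJson
{
    // JsonSerializerDefaults.Web enables camelCase property names and
    // case-insensitive property matching when deserializing.
    private static readonly JsonSerializerOptions WebOptions = new(JsonSerializerDefaults.Web);

    // Serializes compactly (no indentation), like an HTTP response body.
    public static string Serialize(Pizza pizza) => JsonSerializer.Serialize(pizza, WebOptions);
}
```

Calling PizzaJson.Serialize(new Pizza(1, "Margherita")) yields {"id":1,"name":"Margherita"}. The earlier response was only pretty-printed for readability.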

Let’s take all our learnings so far and put them into an app that supports GET and POST. Let’s also show how easily you can use route and query parameters:

```csharp
var builder = WebApplication.CreateBuilder(args);

var app = builder.Build();

if (app.Environment.IsDevelopment())
{
    app.UseDeveloperExceptionPage();
}

// In-memory data for the demo.
var pizzas = new List<Pizza>()
{
    new Pizza(1, "Margherita"),
    new Pizza(2, "Al Tonno"),
    new Pizza(3, "Pineapple"),
    new Pizza(4, "Meat meat meat")
};

app.MapGet("/", () => "Hello World!");

// Route parameter: {id} binds to the int id argument.
app.MapGet("/pizzas/{id}", (int id) => pizzas.SingleOrDefault(p => p.Id == id));

// Query parameters: /pizzas?page=1&pageSize=2 binds page and pageSize.
app.MapGet("/pizzas", (int? page, int? pageSize) =>
{
    if (page.HasValue && pageSize.HasValue)
    {
        return pizzas.Skip((page.Value - 1) * pageSize.Value).Take(pageSize.Value);
    }
    else
    {
        return pizzas;
    }
});

// The JSON request body is deserialized into a Pizza.
app.MapPost("/pizza", (Pizza pizza) => pizzas.Add(pizza));

app.Run();

public record Pizza(int Id, string Name);
```
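The paging branch of the /pizzas handler can be pulled out into a plain method and checked without starting a server. The sketch below uses a hypothetical PizzaPaging helper that is not part of the original app. It keeps the handler's 1-based page numbering: page 1 skips nothing, page 2 skips one page's worth of items, and so on.

```csharp
using System.Collections.Generic;
using System.Linq;

public record Pizza(int Id, string Name);

public static class PizzaPaging
{
    // Mirrors the /pizzas handler. When both values are present, skip the
    // earlier pages and take one page. Otherwise, return the full list.
    public static IEnumerable<Pizza> Page(IEnumerable<Pizza> pizzas, int? page, int? pageSize)
    {
        if (page.HasValue && pageSize.HasValue)
        {
            // (page - 1) * pageSize items belong to the pages before this one.
            return pizzas.Skip((page.Value - 1) * pageSize.Value).Take(pageSize.Value);
        }

        return pizzas;
    }
}
```

With the four pizzas above, page 2 with pageSize 2 yields Pineapple and Meat meat meat. Like the original handler, the helper does not validate its inputs. For a page of 0 or less, Skip receives a non-positive count and skips nothing.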

Run this app with dotnet run.

In your browser, try various things. For example, navigate to /pizzas/2: the route parameter {id} matches the 2, so the result is filtered down to the one item that matches.

Check out these Learn modules on using minimal API.


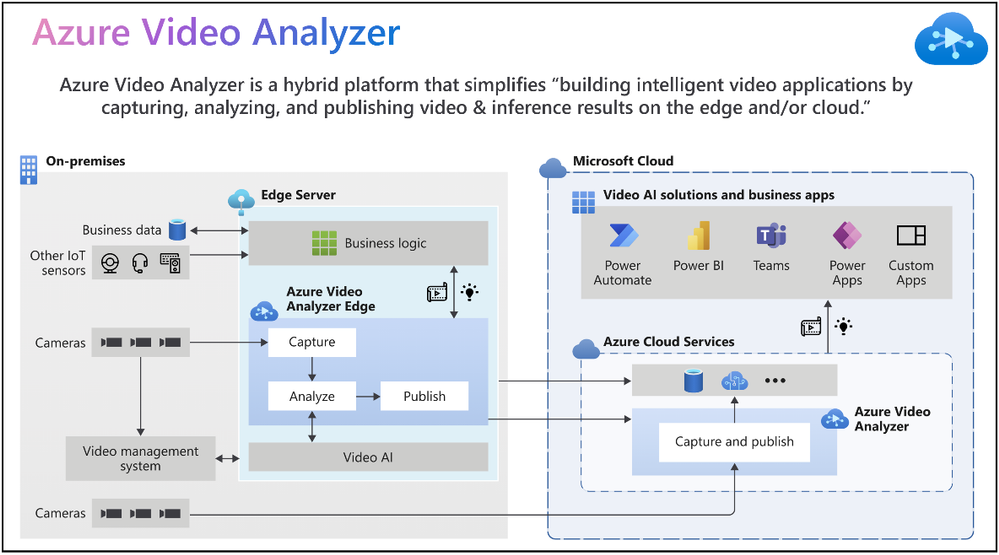

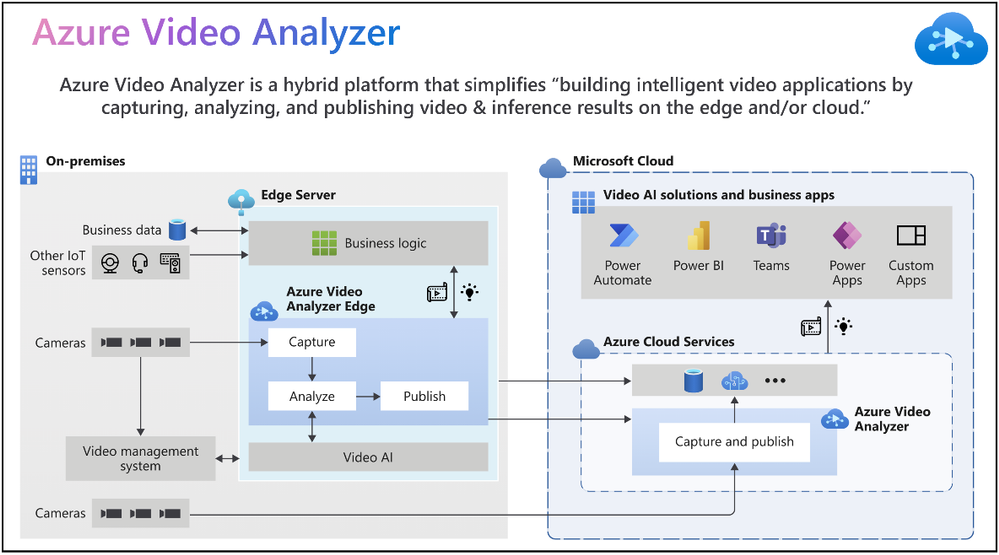

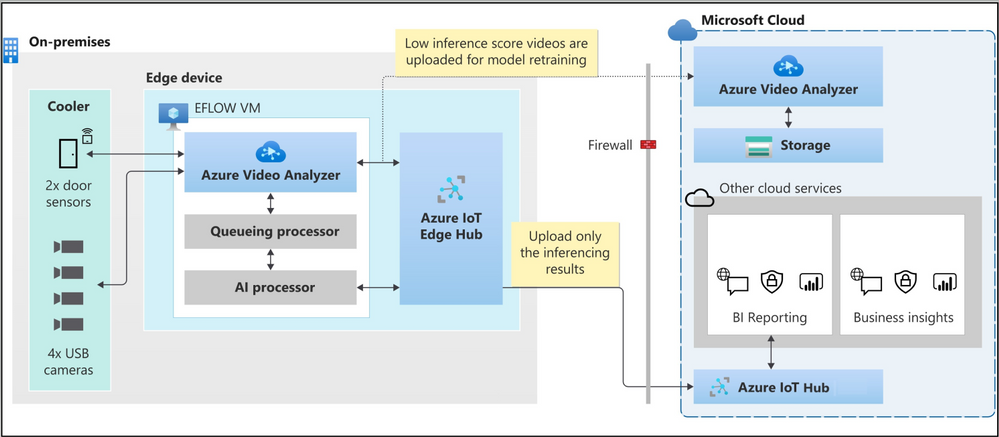

Intelligent video applications, built using existing cameras or newer smart cameras along with edge gateways, are at the core of a massive wave of innovation benefiting our customers.

According to the IoT Signals report, the vast majority of companies with a video AI solution strategy see it as an integral part of their IoT solution. Yet building such a solution can be complicated. Azure Video Analyzer is an Azure Applied AI service that greatly reduces the effort needed to build intelligent video applications by capturing, analyzing, and publishing video and inference on the edge or in the cloud.

“Vision and AI capabilities on edge devices are helping companies create breakthrough applications,” said Moe Tanabian, Vice President and General Manager of Azure Edge Devices, Platform & Services, Microsoft. “With the latest updates to Azure Video Analyzer, we are making it easier than ever for our customers to build comprehensive AI-powered solutions with actionable insights from videos.”

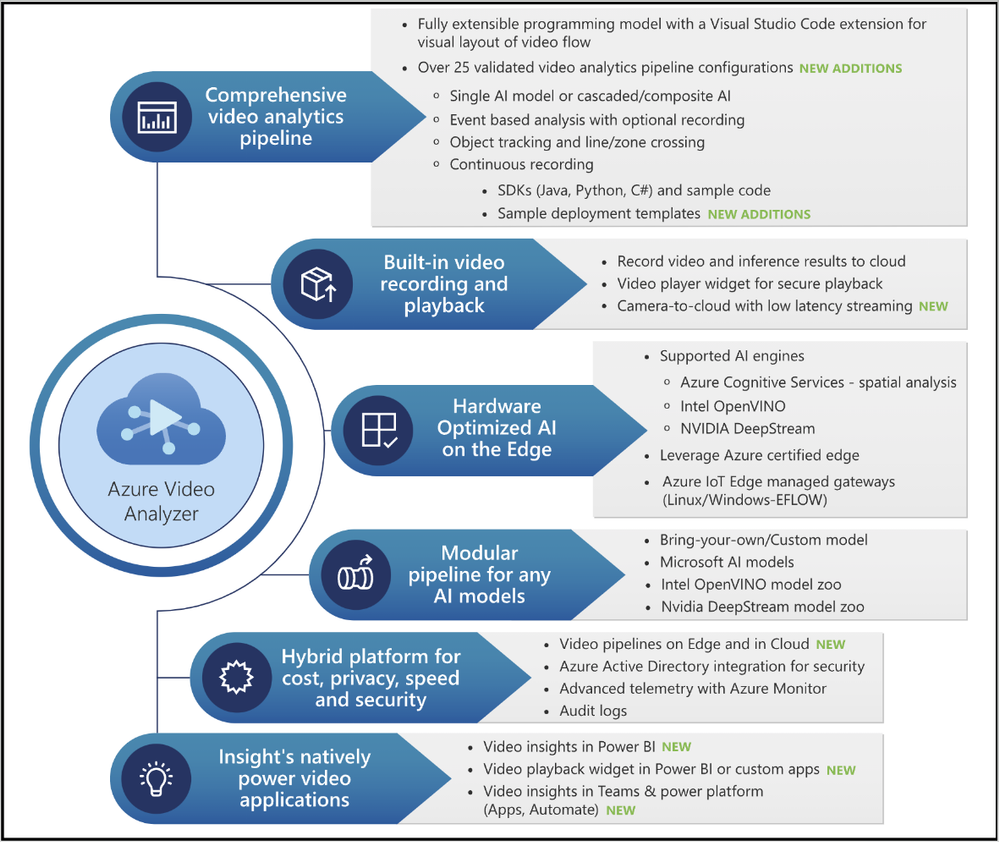

Figure 1: Azure Video Analyzer overview

Since its introduction earlier this year, Azure Video Analyzer capabilities have grown significantly to meet your needs. The Ignite 2021 (November) release provides you with the following new capabilities and enhancements:

The following illustration provides an overview of both existing Azure Video Analyzer capabilities and the new capabilities made available for Ignite 2021.

Figure 2: Azure Video Analyzer capabilities

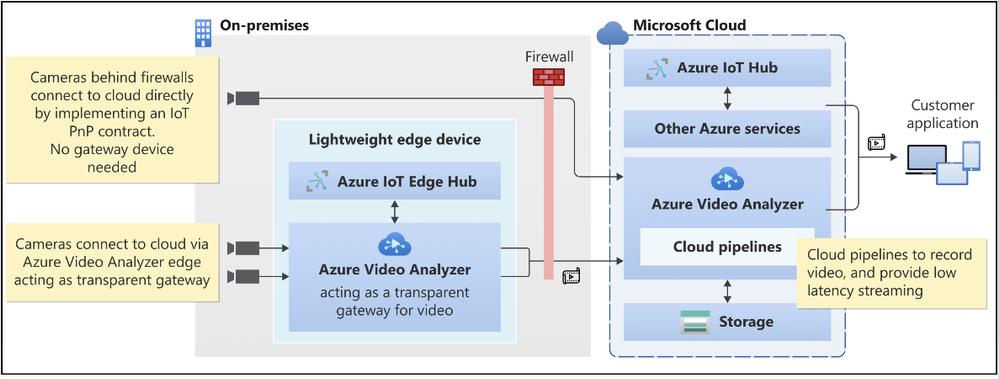

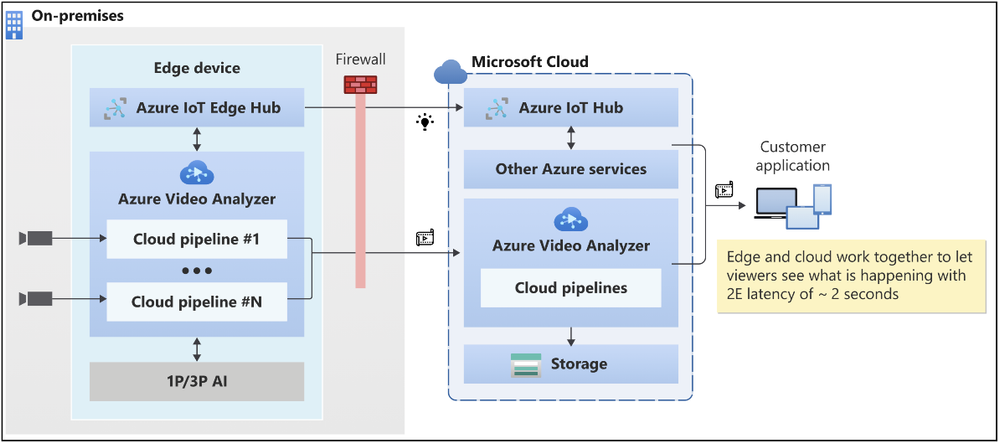

Video Surveillance as a Service (VSaaS) solutions combine ease of use with the scalability and manageability of cloud computing, making them attractive to enterprises adopting cloud-native solutions. Such solutions offer the same benefits as other cloud services, such as managed infrastructure for IT teams, easy customization and integration, and remote access from anywhere. Our customers can take advantage of the following features to achieve the desired outcome in these areas.

Figure 3: Camera to Azure Video Analyzer Cloud (with or without gateway)

Figure 4: Azure Video Analyzer low latency video playback

“Safety, security and productivity are essential elements for the growth and sustainability of every society and business. Together Axis Communications and Azure Video Analyzer are empowering developers with the tools to rapidly build and deploy AI-powered solutions that improve operational agility, optimize business efficiency and enhance safety and security,” said Fredrik Nilsson, Vice President, Americas, Axis Communications.

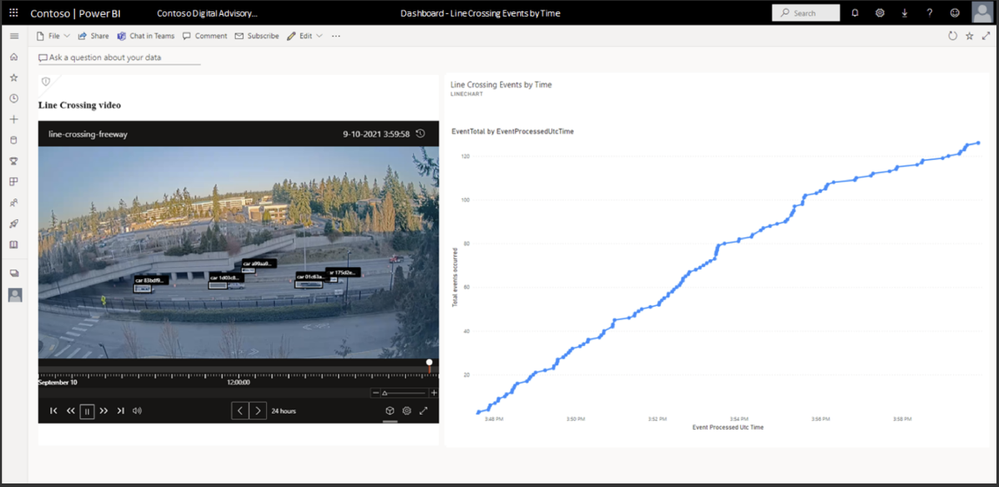

Visualization of AI inference data is necessary to make sense of the data generated by video AI systems. Customers need data visualization to detect anomalies, spot trends, and so on. With this objective in mind:

These product investments in Azure Video Analyzer will enable you to visualize actionable insights quickly.

Figure 5: Azure Video Analyzer insights visualization

“We were impressed with the comprehensiveness of the Azure Video Analyzer to build out solutions quickly. The integrations between the Azure Video Analyzer and Scenera’s Platform as a Service will help our customers gain valuable business insights using the solution’s scene-based event analytics,” said Andrew Wajs, CTO & Founder of Scenera.

Manageability of Windows devices and the need to run Linux-based containers are key drivers for our investments in “Edge for Linux on Windows” (EFLOW). To strengthen this commitment further, we now guide our developer ecosystem through the PowerShell experience of running EFLOW with Azure Video Analyzer.

Figure 6: Azure Video Analyzer + EFLOW powering inventory management solution

Finally, use the following resources to learn more about the overall product and services capabilities.

Please contact the Azure Video Analyzer product team at videoanalyzerhelp@microsoft.com for feedback and deeper engagement discussions.


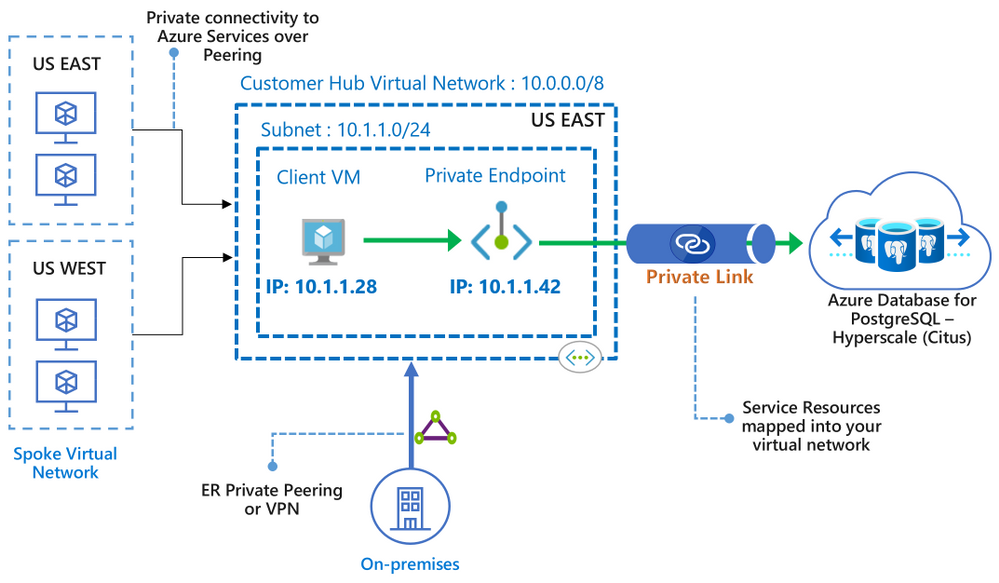

We recently announced the preview of Azure Private Link support for the Hyperscale (Citus) option in our Azure Database for PostgreSQL managed service.

Private Link enables you to create private endpoints for Hyperscale (Citus) nodes, which are exposed as private IPs within your Virtual Network. Private Link essentially brings Hyperscale (Citus) inside your Virtual Network and gives your application direct connectivity to the managed database service.

With Private Link, communications between your Virtual Network and the Hyperscale (Citus) service travel over the Microsoft backbone network privately and securely, eliminating the need to expose the service to the public internet.

If you’re not familiar, Hyperscale (Citus) is an option in the Azure Database for PostgreSQL managed service that enables you to scale out your Postgres database horizontally. Hyperscale (Citus) leverages the Citus open source extension to Postgres, effectively transforming Postgres into a distributed database.

As with all the other Azure PaaS services that support Azure Private Link, the Private Link integration with Hyperscale (Citus) in our PostgreSQL managed service implements the same battle-tested Azure Private Link technology, provides the same consistent experiences, and has the following features:

To learn more about Private Link technology and PaaS services that support Private Link functionality, you can review the general Azure Private Link documentation.

Figure 1: Architecture diagram depicting the secure and private connectivity to Hyperscale (Citus) in the Azure Database for PostgreSQL managed service—when using Private Link


In this “how to” blog post about the Private Link preview¹ for Hyperscale (Citus), you can learn how to bring your Hyperscale (Citus) server groups inside your Virtual Network by creating and managing private endpoints on your server groups. You will also learn some of the details to be aware of when using Private Link with Hyperscale (Citus).

Let’s take a walk through these 4 scenarios for using Azure Private Link with Hyperscale (Citus):

Before you can create a Hyperscale (Citus) server group with a private endpoint, or add a private endpoint to an existing Hyperscale (Citus) server group, you first need to set up a resource group and a virtual network with a subnet that has enough available private IPs:

As the database admin or owner, you can create a private endpoint on the coordinator node when you are provisioning a new Hyperscale (Citus) server group. For help on how to provision a Hyperscale (Citus) server group, take a look at this tutorial.

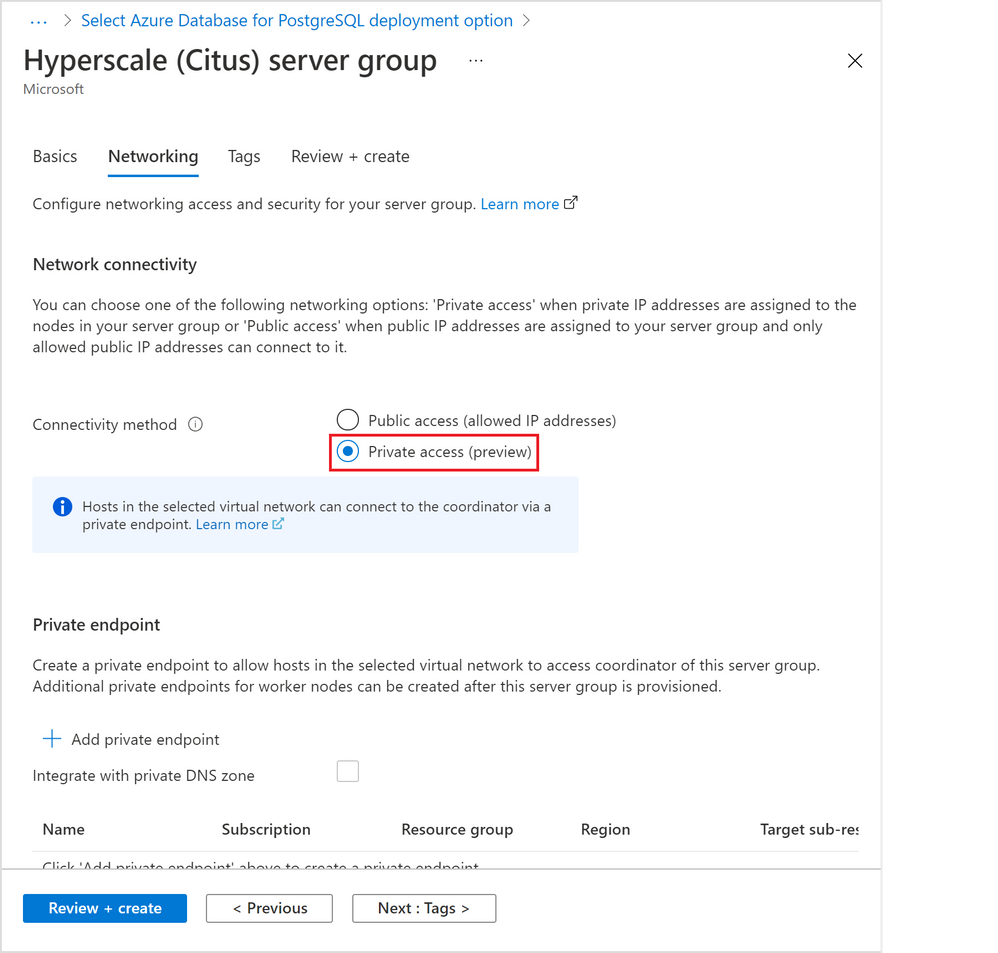

In the “Networking” tab (Figure 2 below), select the “Private access” radio button for the “Connectivity method”.

Figure 2: Screen capture from the Azure portal showing the option to create a Hyperscale (Citus) server group with private access connectivity


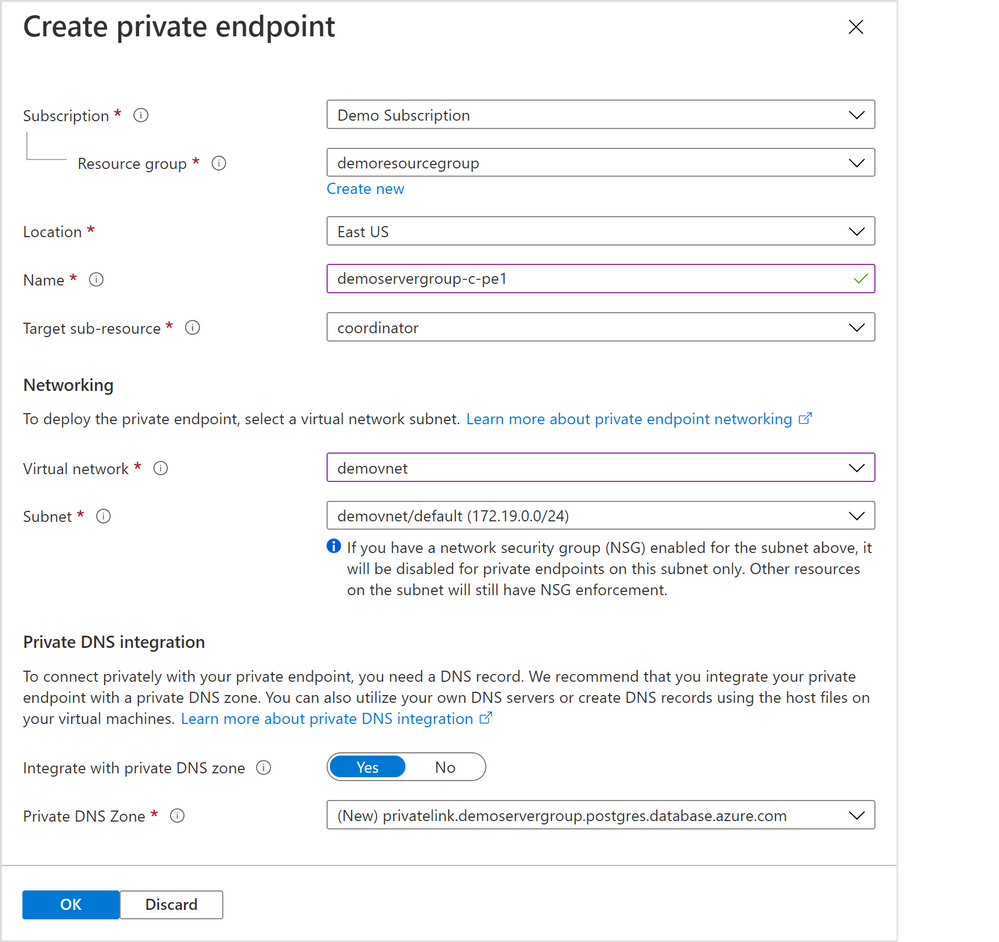

A “Create private endpoint” screen will appear (Figure 3 below). If this screen doesn’t appear, or you close it accidentally, you can reopen it manually by clicking “+ Add private endpoint” in the “Networking” tab shown above.

Figure 3: Screen capture from the Azure portal showing the “Create private endpoint” screen when “Private access” is selected as the connectivity method


Select the appropriate resource group, location, name, and networking values for your private endpoint. If you are just experimenting with Citus on Azure, the default values should work for most cases.

Please pay special attention to the networking configuration. It specifies the Virtual Network and Subnet from which the new private endpoint’s private IP will be allocated. In particular, make sure there are enough private IPs available in the selected subnet.
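For a rough capacity check, compare the subnet size with the number of private endpoints you plan to create. The sketch below is a hypothetical helper, not an Azure API. It relies on Azure reserving five addresses in every subnet. It also assumes each private endpoint consumes one private IP, which you should confirm for your own setup.

```csharp
using System;

public static class SubnetCapacity
{
    // Azure reserves five addresses in every subnet: the network address,
    // the default gateway, two addresses for Azure DNS, and the broadcast address.
    private const int AzureReservedAddresses = 5;

    // Usable IPv4 addresses for a prefix length such as 24 (a /24 subnet).
    public static long UsableAddresses(int prefixLength)
    {
        if (prefixLength < 0 || prefixLength > 29)
        {
            throw new ArgumentOutOfRangeException(nameof(prefixLength), "The smallest IPv4 subnet Azure supports is /29.");
        }

        long total = 1L << (32 - prefixLength);
        return total - AzureReservedAddresses;
    }

    // Hypothetical check: do the endpoints still fit alongside the addresses
    // already in use? Assumes one private IP per private endpoint.
    public static bool HasRoomFor(int prefixLength, long alreadyUsed, int endpointsNeeded) =>
        UsableAddresses(prefixLength) - alreadyUsed >= endpointsNeeded;
}
```

For example, a /29 subnet leaves 3 usable addresses under these assumptions. That is enough for a coordinator endpoint plus two worker endpoints, provided nothing else uses the subnet.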

The rest of the steps are exactly the same as in the tutorial for creating a Hyperscale (Citus) server group.

You can also create a private endpoint on a node in an existing Hyperscale (Citus) server group.

In fact, if you need to create a private endpoint on a worker node in a cluster, you must first create the database cluster and then add the private endpoint to the worker node.

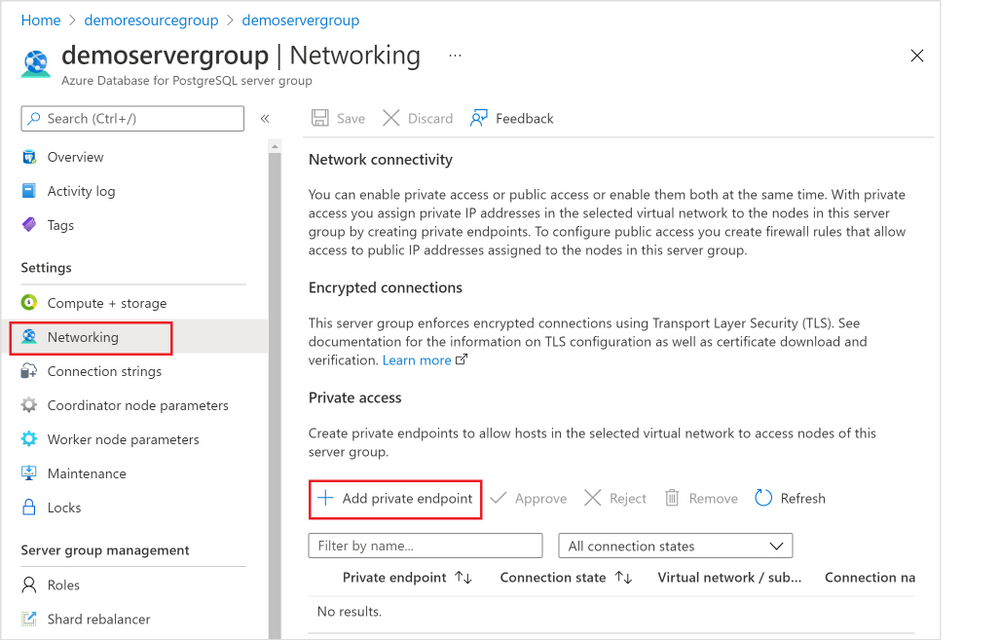

There are two places you can do this. The first is the “Networking” blade for the Hyperscale (Citus) server group.

1. Navigate to the “Networking” blade for the Hyperscale (Citus) server group (Figure 4 below) and click “+ Add private endpoint”.

Figure 4: Screen capture from the Azure portal showing the “+ Add Private Endpoint” button in the Networking blade for Hyperscale (Citus) in the Azure Database for PostgreSQL managed service


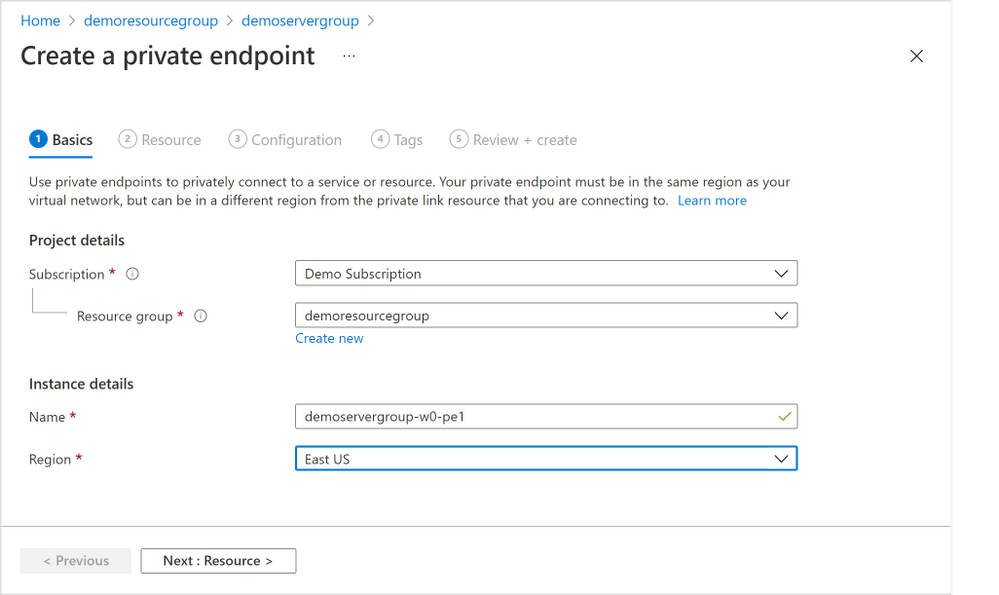

2. In the “Basics” tab (Figure 5 below), select the appropriate “Subscription”, “Resource group”, and “Region” information where you want your private endpoint to be created, and enter a meaningful “Name” for the private endpoint, e.g., you can use a naming convention like “ServerGroupName-NodeName-pe”. Select “Next: Resource >”.

Figure 5: Screen capture from the Azure portal showing the “Basics” tab for the “Create a private endpoint” flow


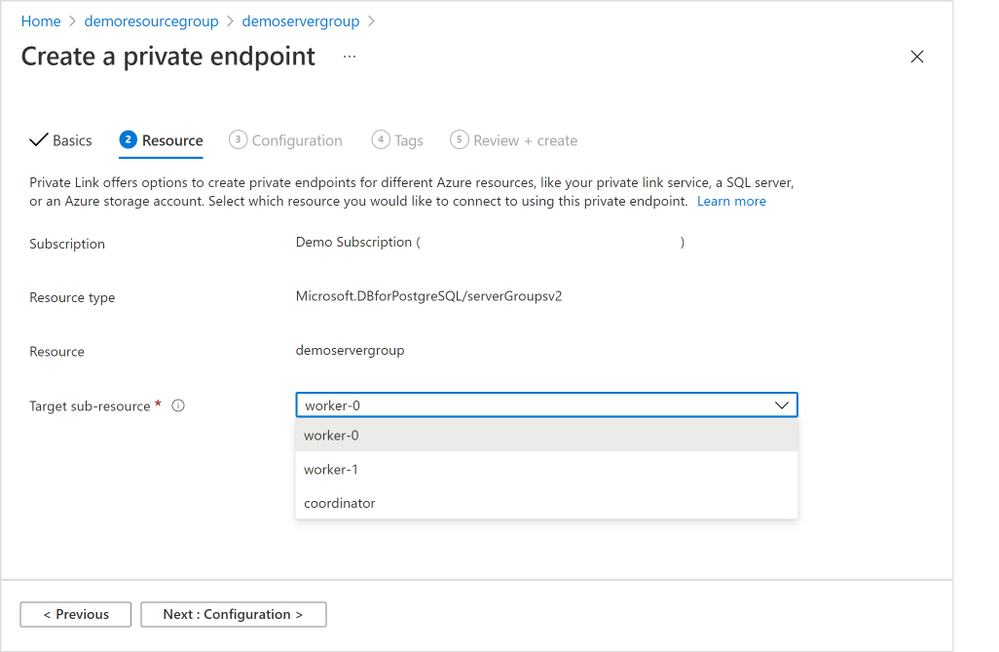

3. In the “Resource” tab (Figure 6 below), choose the target node of the Hyperscale (Citus) server group. Generally, “coordinator” is the desired node unless you have reasons to access the Hyperscale (Citus) worker nodes. (If you need private endpoints for all the worker nodes, you will need to repeat this process for each target sub-resource.) Select “Next: Configuration >”.

Figure 6: Screen capture from the Azure portal showing the “Resource” tab for the “Create a private endpoint” flow


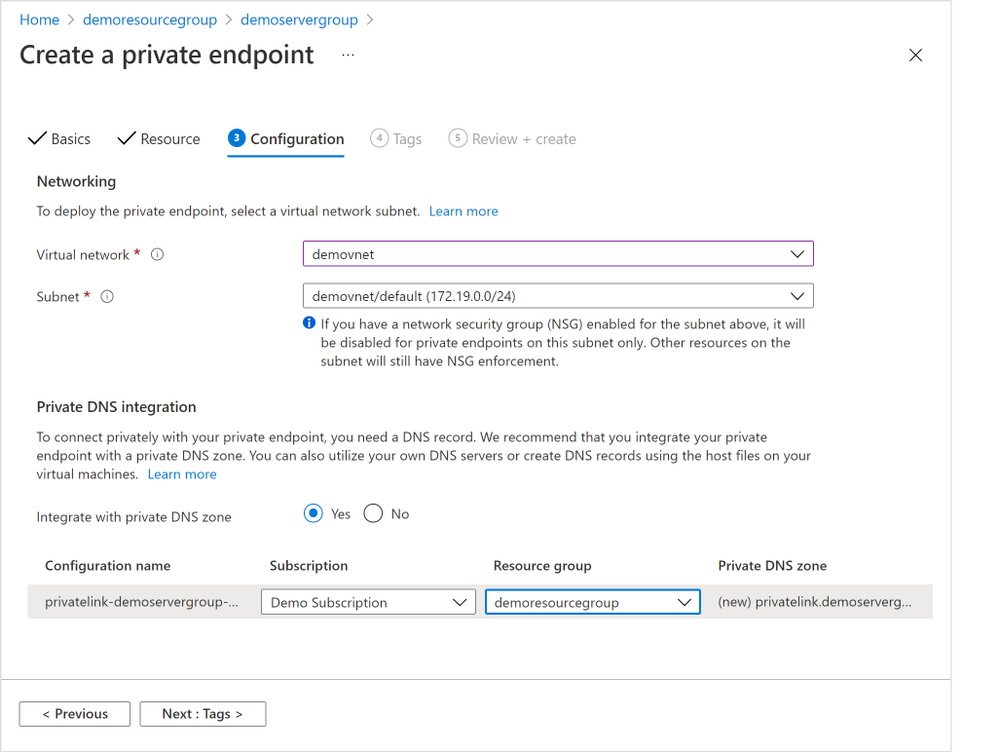

4. In the “Configuration” tab (Figure 7 below), choose the “Virtual network” and “Subnet” from which the private IP for the private endpoint will be allocated.

It’s not required, but it is highly recommended that you create all your private endpoints for the same Hyperscale (Citus) server group in the same Virtual Network / Subnet.

Select the “Yes” radio button next to “Integration with private DNS zone” to have private DNS integration.

Figure 7: Screen capture from the Azure portal showing the “Configuration” tab for the “Create a private endpoint” flow


5. Finish the rest of the steps by adding any tags you want, reviewing the settings and selecting “Create” to create the private endpoint.
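After the private endpoint and private DNS integration are in place, you can sanity-check them. Resolve the server group’s host name from a machine inside the Virtual Network and confirm that it returns a private address. The sketch below is a hypothetical helper. It assumes your Virtual Network uses the RFC 1918 IPv4 ranges (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16). The host name you pass in is whatever the Azure portal shows for your node.

```csharp
using System.Linq;
using System.Net;
using System.Net.Sockets;

public static class PrivateDnsCheck
{
    // True when an IPv4 address falls in an RFC 1918 private range.
    // Assumption: the Virtual Network address space uses these ranges.
    public static bool IsRfc1918(IPAddress address)
    {
        if (address.AddressFamily != AddressFamily.InterNetwork)
        {
            return false;
        }

        byte[] b = address.GetAddressBytes();
        return b[0] == 10
            || (b[0] == 172 && b[1] >= 16 && b[1] <= 31)
            || (b[0] == 192 && b[1] == 168);
    }

    // Resolves a host name (a placeholder for your node's host name) and
    // reports whether every returned address is private. Throws a
    // SocketException if the name does not resolve.
    public static bool ResolvesOnlyToPrivateIps(string hostName)
    {
        IPAddress[] addresses = Dns.GetHostAddresses(hostName);
        return addresses.Length > 0 && addresses.All(IsRfc1918);
    }
}
```

From a VM in a Virtual Network linked to the private DNS zone, calling ResolvesOnlyToPrivateIps with the coordinator’s host name should return true. From outside that network, the name may resolve to a public address instead.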

If you need to create private endpoints for more than one Hyperscale (Citus) server group, or for multiple Azure managed services (perhaps you also manage other databases besides Postgres), you can create a private endpoint using the generic private endpoint creation process provided by the Azure Networking team.

You might also want to use generic private endpoint resource creation if you don’t have access to the Hyperscale (Citus) server group. For example, you might be a network admin rather than a database admin, or you might need to create a private endpoint to a database in another subscription you don’t have access to.

1. From the home page of the Azure portal, select the “Create a resource” button and search for “Private Endpoint”. Click the “Create” button (Figure 8 below) to start creating a private endpoint.

Figure 8: Screen capture from the Azure portal showing the “Create” page for “Create a resource” of Private Endpoint


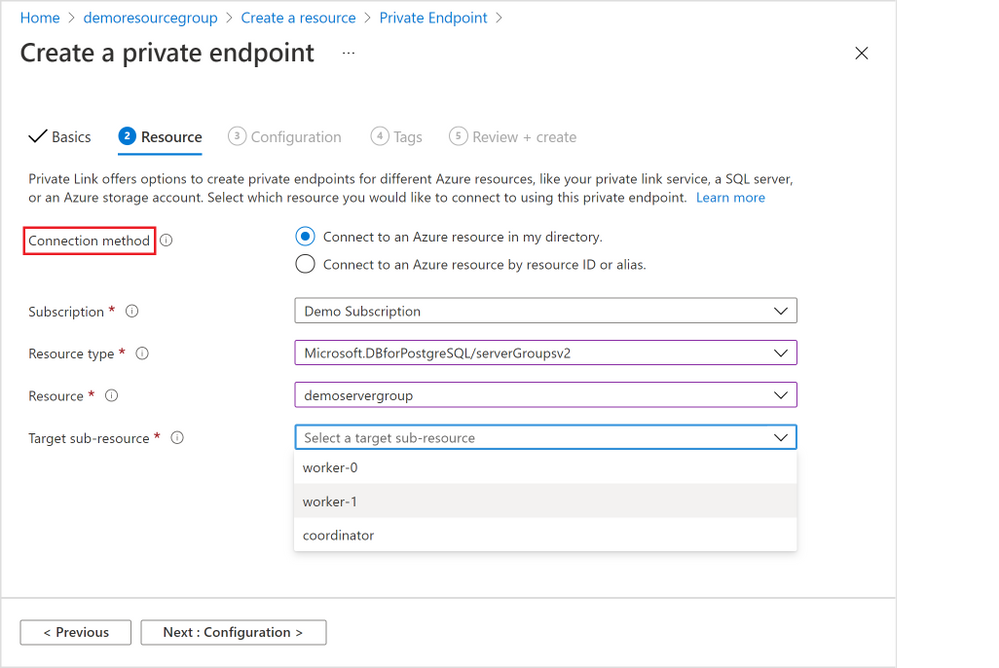

2. All the rest of the steps should be the same as illustrated in the section above, except for the “Resource” tab step (Figure 9 below).

For the “Resource” tab step, you will need to select the “Connection method” based on your permission to the Hyperscale (Citus) server group on which you want to create a private endpoint. You can learn more in the “Access to a private link resource using approval workflow” docs.

Figure 9: Screen capture from the Azure portal showing the “Resource” tab for the “Create a private endpoint” flow when you are using Private Endpoint resource creation


As mentioned above, there are different connection and approval methods based on your permission on the Hyperscale (Citus) server group.

As the Hyperscale (Citus) server group owner or admin, you can manage all the private endpoint connections created on your server group.

Just like adding a Private Endpoint for an existing server group, there are two places you as the Hyperscale (Citus) server group admin can manage the private endpoint connections.

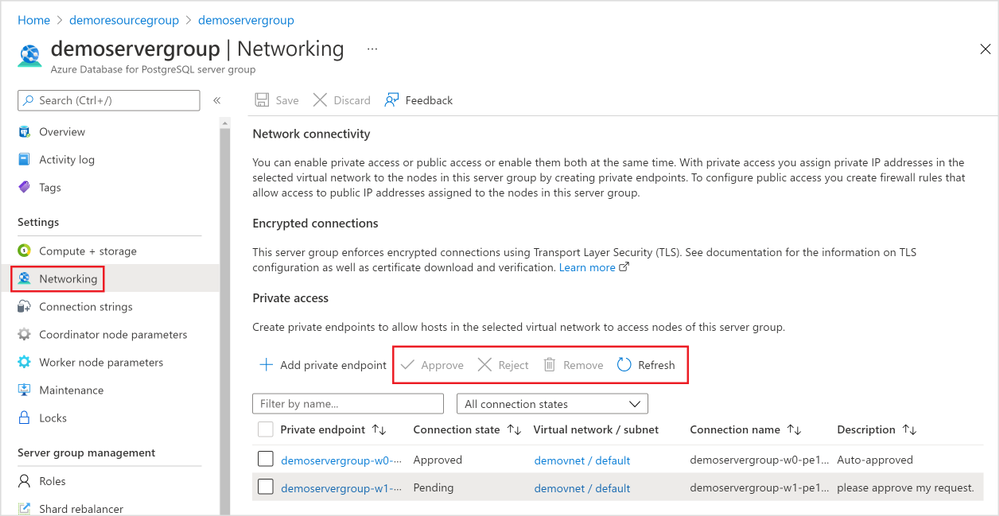

The 1st place is again using the Hyperscale (Citus) server group’s “Networking” blade (Figure 10 below).

Figure 10: Screen capture from the Azure portal showing management options for a Private Endpoint Connection in the Networking blade for Hyperscale (Citus) in the Azure Database for PostgreSQL managed service


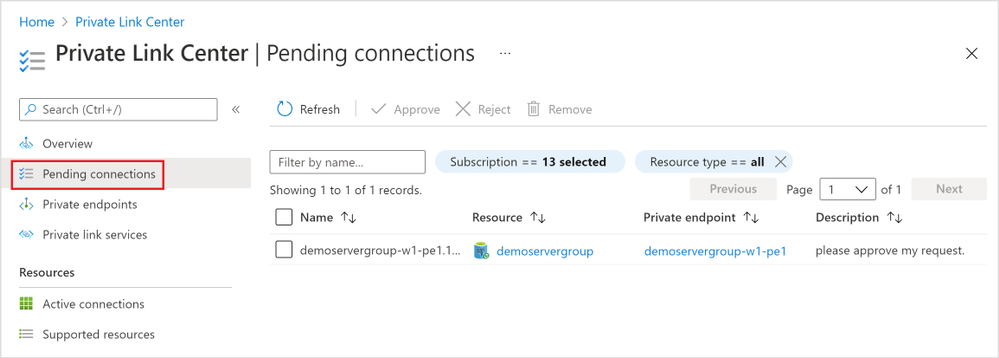

The 2nd place you can manage the private endpoint connections is the “Private Link Center”. Search for “Private Link” in the Azure portal, and you will be taken to the “Private Link Center”.

1. The “Pending connections” blade (Figure 11 below) in the “Private Link Center” lists all the private endpoints that are in the “Pending” state. You can filter by “Subscription”, “Name”, and “Resource Type” to find the private endpoints you want to manage.

Figure 11: Screen capture from the Azure portal showing all “Pending connections” in the “Private Link Center”


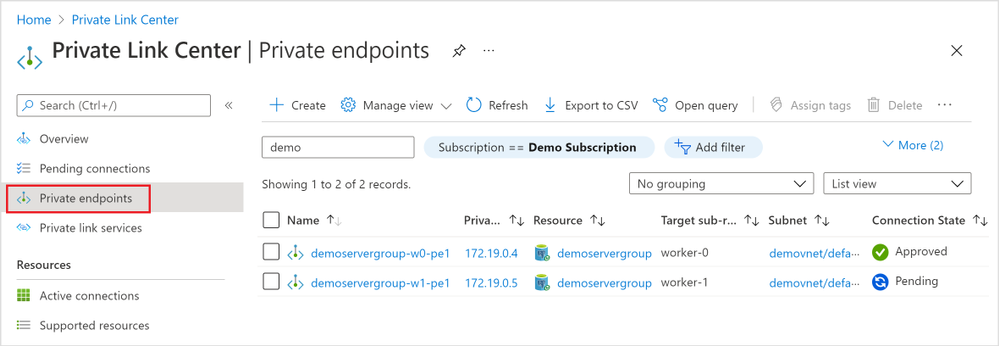

2. The “Private endpoints” blade (Figure 12 below) in the “Private Link Center” lists all the private endpoints in all connection states. Again, you can filter by “Subscription”, “Name”, and “Resource Type” to find the private endpoints you want to manage.

Figure 12: Screen capture from the Azure portal showing all “Private endpoints” in the “Private Link Center”


With the preview of Azure Private Link for Hyperscale (Citus), you can now bring your Hyperscale (Citus) server groups (new or existing) into your private Virtual Network space. You can create and manage private endpoints for any or all of the Hyperscale (Citus) database nodes.

If you want to learn more about using Hyperscale (Citus) to shard Postgres on Azure, you can:

Your feedback and questions are welcome. You can always reach out to our team of Postgres experts at Ask Azure DB for PostgreSQL.

Footnotes
