by Scott Muniz | Oct 11, 2022 | Security, Technology

This article is contributed. See the original author and article here.

| actian — psql |

If folder security is misconfigured for Actian Zen PSQL before Patch Update 1 for Zen 15 SP1 (v15.11.005), Patch Update 4 for Zen 15 (v15.01.017), or Patch Update 5 for Zen 14 SP2 (v14.21.022), an attacker with file read/write access can remove specific security files in order to reset the master password and gain access to the database. |

2022-09-30 |

8.8 |

CVE-2022-40756

MISC

MISC |

| apache — airflow |

In Apache Airflow, prior to version 2.4.1, deactivating a user wouldn’t prevent an already authenticated user from being able to continue using the UI or API. |

2022-10-07 |

8.1 |

CVE-2022-41672

CONFIRM

CONFIRM |

| apache — commons_jxpath |

Those using JXPath to interpret untrusted XPath expressions may be vulnerable to a remote code execution attack. All JXPathContext class functions that process an XPath string are vulnerable, except the compile() and compilePath() functions. An attacker can use the XPath expression to load any Java class from the classpath, resulting in code execution. |

2022-10-06 |

9.8 |

CVE-2022-41852

MISC |

| arubanetworks — instant |

There are buffer overflow vulnerabilities in multiple underlying services that could lead to unauthenticated remote code execution by sending specially crafted packets destined to the PAPI (Aruba Networks AP management protocol) UDP port (8211). Successful exploitation of these vulnerabilities results in the ability to execute arbitrary code as a privileged user on the underlying operating system of Aruba InstantOS 6.4.x: 6.4.4.8-4.2.4.20 and below; Aruba InstantOS 6.5.x: 6.5.4.23 and below; Aruba InstantOS 8.6.x: 8.6.0.18 and below; Aruba InstantOS 8.7.x: 8.7.1.9 and below; Aruba InstantOS 8.10.x: 8.10.0.1 and below; ArubaOS 10.3.x: 10.3.1.0 and below. Aruba has released upgrades for Aruba InstantOS that address these security vulnerabilities. |

2022-10-06 |

9.8 |

CVE-2022-37888

MISC |

| asus — rt-ax56u_firmware |

A stack overflow vulnerability exists in the httpd service in ASUS RT-AX56U Router Version 3.0.0.4.386.44266. This vulnerability is caused by the strcat function called by “caupload” input handle function allowing the user to enter 0xFFFF bytes into the stack. This vulnerability allows an attacker to execute commands remotely. The vulnerability requires authentication. |

2022-10-06 |

8.8 |

CVE-2021-40556

CONFIRM

MISC |

| autodesk — autocad |

A maliciously crafted X_B, CATIA, or PDF file, when parsed through Autodesk AutoCAD 2023 and 2022, can be used to write beyond the allocated buffer. This vulnerability can lead to arbitrary code execution. |

2022-10-03 |

7.8 |

CVE-2022-33885

MISC |

| autodesk — autocad |

A maliciously crafted MODEL and SLDPRT file can be used to write beyond the allocated buffer while parsing through Autodesk AutoCAD 2023 and 2022. The vulnerability exists because the application fails to handle crafted MODEL and SLDPRT files, which causes an unhandled exception. An attacker can leverage this vulnerability to execute arbitrary code. |

2022-10-03 |

7.8 |

CVE-2022-33886

MISC |

| autodesk — autocad |

A maliciously crafted PDF file when parsed through Autodesk AutoCAD 2023 causes an unhandled exception. An attacker can leverage this vulnerability to cause a crash or read sensitive data or execute arbitrary code in the context of the current process. |

2022-10-03 |

7.8 |

CVE-2022-33887

MISC |

| autodesk — autocad |

A maliciously crafted Dwg2Spd file, when processed through the Autodesk DWG application, could lead to a memory corruption vulnerability by write access violation. This vulnerability, in conjunction with other vulnerabilities, could lead to code execution in the context of the current process. |

2022-10-03 |

7.8 |

CVE-2022-33888

MISC |

| autodesk — autocad |

Parsing a maliciously crafted X_B file can force Autodesk AutoCAD 2023 and 2022 to read beyond allocated boundaries. This vulnerability in conjunction with other vulnerabilities could lead to code execution in the context of the current process. |

2022-10-03 |

7.5 |

CVE-2022-33884

MISC |

| autodesk — autodesk_desktop |

Under certain conditions, an attacker could create an unintended sphere of control through a vulnerability present in file delete operation in Autodesk desktop app (ADA). An attacker could leverage this vulnerability to escalate privileges and execute arbitrary code. |

2022-10-03 |

9.8 |

CVE-2022-33882

MISC |

| autodesk — design_review |

A maliciously crafted GIF or JPEG file, when parsed through Autodesk Design Review 2018 or AutoCAD 2023 and 2022, could be used to write beyond the allocated heap buffer. This vulnerability could lead to arbitrary code execution. |

2022-10-03 |

7.8 |

CVE-2022-33889

MISC |

| autodesk — design_review |

A maliciously crafted PCT or DWF file when consumed through DesignReview.exe application could lead to memory corruption vulnerability. This vulnerability in conjunction with other vulnerabilities could lead to code execution in the context of the current process. |

2022-10-03 |

7.8 |

CVE-2022-33890

MISC |

| autodesk — moldflow_synergy |

A maliciously crafted file consumed through the Moldflow Synergy, Moldflow Adviser, Moldflow Communicator, and Advanced Material Exchange applications could lead to a memory corruption vulnerability. This vulnerability, in conjunction with other vulnerabilities, could lead to code execution in the context of the current process. |

2022-10-03 |

7.8 |

CVE-2022-33883

MISC |

| autodesk — subassembly_composer |

A maliciously crafted PKT file when consumed through SubassemblyComposer.exe application could lead to memory corruption vulnerability. This vulnerability in conjunction with other vulnerabilities could lead to code execution in the context of the current process. |

2022-10-03 |

7.8 |

CVE-2022-41301

MISC |

| axiosys — bento4 |

Bento4 v1.6.0-639 was discovered to contain a heap overflow via the AP4_BitReader::ReadBits function in mp4mux. |

2022-10-03 |

8.8 |

CVE-2022-41428

MISC |

| axiosys — bento4 |

Bento4 v1.6.0-639 was discovered to contain a heap overflow via the AP4_Atom::TypeFromString function in mp4tag. |

2022-10-03 |

8.8 |

CVE-2022-41429

MISC |

| axiosys — bento4 |

Bento4 v1.6.0-639 was discovered to contain a heap overflow via the AP4_BitReader::ReadBit function in mp4mux. |

2022-10-03 |

8.8 |

CVE-2022-41430

MISC |

| backdropcms — backdrop_cms |

Backdrop CMS 1.22.0 has an unrestricted file upload vulnerability via ‘themes’ that allows attackers to achieve remote code execution. |

2022-10-07 |

7.2 |

CVE-2022-42092

MISC |

| billing_system_project_project — billing_system_project |

Billing System Project v1.0 was discovered to contain a remote code execution (RCE) vulnerability via the component /php_action/createProduct.php. |

2022-09-30 |

7.2 |

CVE-2022-41437

MISC |

| billing_system_project_project — billing_system_project |

Billing System Project v1.0 was discovered to contain a SQL injection vulnerability via the id parameter at /phpinventory/edituser.php. |

2022-09-30 |

7.2 |

CVE-2022-41439

MISC |

| billing_system_project_project — billing_system_project |

Billing System Project v1.0 was discovered to contain a SQL injection vulnerability via the id parameter at /phpinventory/editcategory.php. |

2022-09-30 |

7.2 |

CVE-2022-41440

MISC |

| bookingultrapro — booking_ultra_pro_appointments_booking_calendar |

Multiple Cross-Site Request Forgery (CSRF) vulnerabilities in Booking Ultra Pro plugin <= 1.1.4 at WordPress. |

2022-09-30 |

8.8 |

CVE-2021-36854

CONFIRM

CONFIRM |

| bus_pass_management_system_project — bus_pass_management_system |

Bus Pass Management System 1.0 was discovered to contain a SQL injection vulnerability via the searchdata parameter at /buspassms/download-pass.php. |

2022-09-30 |

9.8 |

CVE-2022-35156

MISC

MISC

MISC |

| cisco — ios_xe |

A vulnerability in the DHCP processing functionality of Cisco IOS XE Wireless Controller Software for the Catalyst 9000 Family could allow an unauthenticated, remote attacker to cause a denial of service (DoS) condition. This vulnerability is due to the improper processing of DHCP messages. An attacker could exploit this vulnerability by sending malicious DHCP messages to an affected device. A successful exploit could allow the attacker to cause the device to reload, resulting in a DoS condition. |

2022-09-30 |

7.5 |

CVE-2022-20847

CISCO |

| cisco — ios_xe |

A vulnerability in the UDP processing functionality of Cisco IOS XE Software for Embedded Wireless Controllers on Catalyst 9100 Series Access Points could allow an unauthenticated, remote attacker to cause a denial of service (DoS) condition. This vulnerability is due to the improper processing of UDP datagrams. An attacker could exploit this vulnerability by sending malicious UDP datagrams to an affected device. A successful exploit could allow the attacker to cause the device to reload, resulting in a DoS condition. |

2022-09-30 |

7.5 |

CVE-2022-20848

CISCO |

| cisco — ios_xe |

A vulnerability in the processing of Control and Provisioning of Wireless Access Points (CAPWAP) Mobility messages in Cisco IOS XE Wireless Controller Software for the Catalyst 9000 Family could allow an unauthenticated, remote attacker to cause a denial of service (DoS) condition on an affected device. This vulnerability is due to a logic error and improper management of resources related to the handling of CAPWAP Mobility messages. An attacker could exploit this vulnerability by sending crafted CAPWAP Mobility packets to an affected device. A successful exploit could allow the attacker to exhaust resources on the affected device. This would cause the device to reload, resulting in a DoS condition. |

2022-09-30 |

7.5 |

CVE-2022-20856

CISCO |

| cisco — ios_xe |

A vulnerability in the processing of malformed Common Industrial Protocol (CIP) packets that are sent to Cisco IOS Software and Cisco IOS XE Software could allow an unauthenticated, remote attacker to cause an affected device to unexpectedly reload, resulting in a denial of service (DoS) condition. This vulnerability is due to insufficient input validation during processing of CIP packets. An attacker could exploit this vulnerability by sending a malformed CIP packet to an affected device. A successful exploit could allow the attacker to cause the affected device to unexpectedly reload, resulting in a DoS condition. |

2022-09-30 |

7.5 |

CVE-2022-20919

CISCO |

| cisco — ios_xe |

A vulnerability in the web UI feature of Cisco IOS XE Software could allow an authenticated, remote attacker to perform an injection attack against an affected device. This vulnerability is due to insufficient input validation. An attacker could exploit this vulnerability by sending crafted input to the web UI API. A successful exploit could allow the attacker to execute arbitrary commands on the underlying operating system with root privileges. To exploit this vulnerability, an attacker must have valid Administrator privileges on the affected device. |

2022-09-30 |

7.2 |

CVE-2022-20851

CISCO |

| cisco — sd-wan_vbond_orchestrator |

Multiple vulnerabilities in the CLI of Cisco SD-WAN Software could allow an authenticated, local attacker to gain elevated privileges. These vulnerabilities are due to improper access controls on commands within the application CLI. An attacker could exploit these vulnerabilities by running a malicious command on the application CLI. A successful exploit could allow the attacker to execute arbitrary commands as the root user. |

2022-09-30 |

7.8 |

CVE-2022-20818

CISCO |

| cisco — sd-wan_vmanage |

Multiple vulnerabilities in the CLI of Cisco SD-WAN Software could allow an authenticated, local attacker to gain elevated privileges. These vulnerabilities are due to improper access controls on commands within the application CLI. An attacker could exploit these vulnerabilities by running a malicious command on the application CLI. A successful exploit could allow the attacker to execute arbitrary commands as the root user. |

2022-09-30 |

7.8 |

CVE-2022-20775

CISCO |

| cisco — sd-wan_vsmart_controller |

A vulnerability in the CLI of stand-alone Cisco IOS XE SD-WAN Software and Cisco SD-WAN Software could allow an authenticated, local attacker to delete arbitrary files from the file system of an affected device. This vulnerability is due to insufficient input validation. An attacker could exploit this vulnerability by injecting arbitrary file path information when using commands in the CLI of an affected device. A successful exploit could allow the attacker to delete arbitrary files from the file system of the affected device. |

2022-09-30 |

7.1 |

CVE-2022-20850

CISCO |

| cloudflare — goflow |

sflow decode package does not employ sufficient packet sanitisation which can lead to a denial of service attack. Attackers can craft malformed packets causing the process to consume large amounts of memory resulting in a denial of service. |

2022-09-30 |

7.5 |

CVE-2022-2529

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the or_where() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40824

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the where_in() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40825

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the or_having() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40826

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the where() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40827

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the or_where_not_in() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40828

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the or_like() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40829

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the where_not_in() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40830

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the like() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40831

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the having() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40832

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the or_where_in() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40833

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via the or_not_like() function in system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40834

MISC |

| codeigniter — codeigniter |

B.C. Institute of Technology CodeIgniter <=3.1.13 is vulnerable to SQL injection via system\database\DB_query_builder.php. |

2022-10-07 |

9.8 |

CVE-2022-40835

MISC |
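
The CodeIgniter entries above all describe SQL injection through query-builder functions. As a general illustration only (this is not CodeIgniter's patch, and the table and function names are hypothetical), the following sketch uses Python's standard `sqlite3` module to contrast building a query by string concatenation with binding the value as a parameter.

```python
import sqlite3


def make_demo_db():
    # Hypothetical in-memory table used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable: attacker-controlled text becomes part of the SQL statement.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Safer: the value is bound as a parameter and is never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()
```

With an input such as `x' OR '1'='1`, the concatenated query matches every row, while the parameterized query treats the whole string as a literal name.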

| creativedream_file_uploader_project — creativedream_file_uploader |

Arbitrary file upload vulnerability in php uploader |

2022-10-03 |

9.8 |

CVE-2022-40721

MISC

MISC

MLIST |

| css-what_project — css-what |

The package css-what before 2.1.3 is vulnerable to Regular Expression Denial of Service (ReDoS) due to the use of an insecure regular expression in the re_attr variable of index.js. The vulnerability can be triggered via the parse function. |

2022-09-30 |

7.5 |

CVE-2022-21222

CONFIRM

CONFIRM |

| dairy_farm_shop_management_system_project — dairy_farm_shop_management_system |

Dairy Farm Shop Management System 1.0 is vulnerable to SQL Injection via bwdate-report-ds.php file. |

2022-09-30 |

9.8 |

CVE-2022-40943

MISC

MISC |

| dairy_farm_shop_management_system_project — dairy_farm_shop_management_system |

Dairy Farm Shop Management System 1.0 is vulnerable to SQL Injection via sales-report-ds.php file. |

2022-09-30 |

9.8 |

CVE-2022-40944

MISC

MISC

MISC |

| dedecms — dedecms |

DedeCMS 5.7.98 has a file upload vulnerability in the background. |

2022-10-03 |

7.2 |

CVE-2022-40886

MISC |

| dell — hybrid_client |

Dell Hybrid Client versions below 1.8 contain a Zip Slip vulnerability in the UI. An attacker with guest privileges could potentially exploit this vulnerability, leading to modification of system files. |

2022-09-30 |

7.1 |

CVE-2022-34429

MISC |
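
Zip Slip, named in the Dell Hybrid Client entry above, refers to archive entries whose names (for example `../evil.txt`) resolve outside the extraction directory. The sketch below is a generic illustration in Python, not Dell's fix; `safe_extract` is a hypothetical helper that rejects such entries before anything is written.

```python
import os
import zipfile


def safe_extract(archive, dest_dir):
    """Extract a zip archive, rejecting entries that would land outside dest_dir."""
    dest_root = os.path.realpath(dest_dir)
    with zipfile.ZipFile(archive) as zf:
        for member in zf.namelist():
            target = os.path.realpath(os.path.join(dest_root, member))
            # commonpath equals dest_root only when target lies inside it.
            if os.path.commonpath([dest_root, target]) != dest_root:
                raise ValueError(f"blocked path traversal entry: {member}")
        # All entries were checked, so extraction stays within dest_root.
        zf.extractall(dest_root)
```

Validating every entry before extracting any of them means a hostile archive is refused as a whole rather than partially unpacked.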

| fasterxml — jackson-databind |

In FasterXML jackson-databind before 2.14.0-rc1, resource exhaustion can occur because of a lack of a check in primitive value deserializers to avoid deep wrapper array nesting, when the UNWRAP_SINGLE_VALUE_ARRAYS feature is enabled. |

2022-10-02 |

7.5 |

CVE-2022-42003

MISC

MISC

MISC |

| fasterxml — jackson-databind |

In FasterXML jackson-databind before 2.13.4, resource exhaustion can occur because of a lack of a check in BeanDeserializer._deserializeFromArray to prevent use of deeply nested arrays. An application is vulnerable only with certain customized choices for deserialization. |

2022-10-02 |

7.5 |

CVE-2022-42004

MISC

MISC

MISC |
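
Both jackson-databind entries above involve resource exhaustion from deeply nested arrays. The sketch below illustrates the general mitigation of bounding nesting depth before parsing; it is written in Python rather than Java, is not the jackson-databind patch, and `parse_with_depth_limit` and its default limit of 64 are assumptions made for the example.

```python
import json


def parse_with_depth_limit(text, max_depth=64):
    """Parse JSON text, refusing input whose arrays/objects nest too deeply."""
    depth = 0
    in_string = False
    escaped = False
    for ch in text:
        if in_string:
            # Brackets inside string literals do not count as nesting.
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
        elif ch in "[{":
            depth += 1
            if depth > max_depth:
                raise ValueError("JSON nesting too deep")
        elif ch in "]}":
            depth -= 1
    # Depth is within bounds, so hand the text to the real parser.
    return json.loads(text)
```

The scan is linear in the input length and runs before the parser allocates any nested structures.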

| flyte — flyteadmin |

FlyteAdmin is the control plane for the data processing platform Flyte. Users who enable Flyte’s default authorization server without changing the default clientid hashes will be exposed to the public internet. In an effort to make enabling authentication easier for Flyte administrators, the default configuration for Flyte Admin allows access for Flyte Propeller even after turning on authentication via a hardcoded hashed password. This password is also set on the default Flyte Propeller configmap in the various Flyte Helm charts. Users who enable auth but do not override this setting in Flyte Admin’s configuration may unknowingly allow public traffic in by way of this default password, with attackers effectively impersonating Propeller. This only applies to users who have not specified the ExternalAuthorizationServer setting. Using an external auth server automatically turns off this default configuration, so those deployments are not susceptible to this vulnerability. This issue has been addressed in version 1.1.44. Users should manually set the staticClients in the selfAuthServer section of their configuration if they intend to rely on Admin’s internal auth server. Again, users who use an external auth server are automatically protected from this vulnerability. |

2022-10-06 |

7.5 |

CVE-2022-39273

MISC

CONFIRM

MISC |

| generex — cs141_firmware |

Generex CS141 before 2.08 allows remote command execution by administrators via a web interface that reaches run_update in /usr/bin/gxserve-update.sh (e.g., command execution can occur via a reverse shell installed by install.sh). |

2022-10-06 |

7.2 |

CVE-2022-42457

MISC

MISC

MISC |

| google — android |

Improper protection in IOMMU prior to SMR Oct-2022 Release 1 allows unauthorized access to secure memory. |

2022-10-07 |

7.8 |

CVE-2022-39854

MISC |

| gridea — gridea |

Gridea version 0.9.3 allows an external attacker to execute arbitrary code remotely on any client attempting to view a malicious markdown file through Gridea. This is possible because the application has the ‘nodeIntegration’ option enabled. |

2022-09-30 |

7.8 |

CVE-2022-40274

MISC

MISC |

| hitachi — storage_plug-in |

Incorrect Privilege Assignment vulnerability in Hitachi Storage Plug-in for VMware vCenter allows remote authenticated users to cause privilege escalation. This issue affects: Hitachi Storage Plug-in for VMware vCenter 04.8.0. |

2022-10-06 |

8.8 |

CVE-2022-2637

MISC |

| htmly — htmly |

Directory Traversal vulnerability in htmly before 2.8.1 allows remote attackers to perform arbitrary file deletions via modified file parameter. |

2022-09-30 |

8.1 |

CVE-2021-33354

MISC |

| ibm — qradar_security_information_and_event_manager |

IBM QRadar SIEM 7.4 and 7.5 data node rebalancing does not function correctly when using encrypted hosts which could result in information disclosure. IBM X-Force ID: 225889. |

2022-10-07 |

7.5 |

CVE-2022-22480

XF

CONFIRM |

| ibm — websphere_automation_for_ibm_cloud_pak_for_watson_aiops |

IBM WebSphere Automation for Cloud Pak for Watson AIOps 1.4.2 is vulnerable to cross-site request forgery, caused by improper cookie attribute setting. IBM X-Force ID: 226449. |

2022-10-07 |

8.8 |

CVE-2022-22493

XF

CONFIRM |

| ikus-soft — rdiffweb |

Allocation of Resources Without Limits or Throttling in GitHub repository ikus060/rdiffweb prior to 2.5.0a4. |

2022-10-06 |

9.8 |

CVE-2022-3273

MISC

CONFIRM |

| ikus-soft — rdiffweb |

Allocation of Resources Without Limits or Throttling in GitHub repository ikus060/rdiffweb prior to 2.5.0a3. |

2022-09-30 |

7.5 |

CVE-2022-3371

CONFIRM

MISC |

| ikus-soft — rdiffweb |

Path Traversal in GitHub repository ikus060/rdiffweb prior to 2.4.10. |

2022-10-06 |

7.5 |

CVE-2022-3389

CONFIRM

MISC |

| innovaphone — innovaphone_firmware |

AP Manager in Innovaphone before 13r2 Service Release 17 allows command injection via a modified service ID during app upload. |

2022-09-30 |

9.8 |

CVE-2022-41870

MISC |

| joplinapp — joplin |

Joplin version 2.8.8 allows an external attacker to execute arbitrary commands remotely on any client that opens a link in a malicious markdown file, via Joplin. This is possible because the application does not properly validate the schema/protocol of existing links in the markdown file before passing them to the ‘shell.openExternal’ function. |

2022-09-30 |

7.8 |

CVE-2022-40277

MISC

MISC |
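
The Joplin entry above attributes the issue to links being passed to ‘shell.openExternal’ without validating their scheme. A minimal sketch of scheme validation follows, written in Python for illustration; it is not Joplin's code, and both `is_safe_external_link` and the allowlist contents are assumptions.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; a real application would choose its own schemes.
ALLOWED_SCHEMES = {"http", "https", "mailto"}


def is_safe_external_link(url):
    """Return True only if the link uses an explicitly allowed scheme."""
    scheme = urlsplit(url.strip()).scheme.lower()
    return scheme in ALLOWED_SCHEMES
```

An allowlist is used rather than a blocklist so that unknown or newly registered protocol handlers are refused by default.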

| lighttpd — lighttpd |

A resource leak in gw_backend.c in lighttpd 1.4.56 through 1.4.66 could lead to a denial of service (connection-slot exhaustion) after a large amount of anomalous TCP behavior by clients. It is related to RDHUP mishandling in certain HTTP/1.1 chunked situations. Use of mod_fastcgi is, for example, affected. This is fixed in 1.4.67. |

2022-10-06 |

7.5 |

CVE-2022-41556

MISC

MISC

MISC |

| linuxfoundation — dapr_dashboard |

Dapr Dashboard v0.1.0 through v0.10.0 is vulnerable to Incorrect Access Control that allows attackers to obtain sensitive data. |

2022-10-03 |

7.5 |

CVE-2022-38817

MISC

MISC |

| microsoft — exchange_server |

Microsoft Exchange Server Elevation of Privilege Vulnerability. |

2022-10-03 |

8.8 |

CVE-2022-41040

MISC

CERT-VN |

| microsoft — exchange_server |

Microsoft Exchange Server Remote Code Execution Vulnerability. |

2022-10-03 |

8.8 |

CVE-2022-41082

MISC

CERT-VN |

| mojoportal — mojoportal |

mojoPortal v2.7 was discovered to contain an arbitrary file upload vulnerability which allows attackers to execute arbitrary code via a crafted PNG file. |

2022-09-30 |

8.8 |

CVE-2022-40341

MISC

MISC |

| moodle — moodle |

A remote code execution risk when restoring backup files originating from Moodle 1.9 was identified. |

2022-09-30 |

9.8 |

CVE-2022-40314

MISC

MISC |

| moodle — moodle |

A limited SQL injection risk was identified in the “browse list of users” site administration page. |

2022-09-30 |

9.8 |

CVE-2022-40315

MISC

MISC |

| moodle — moodle |

Enabling and disabling installed H5P libraries did not include the necessary token to prevent a CSRF risk. |

2022-10-06 |

8.8 |

CVE-2022-2986

MISC

MISC |
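
The Moodle H5P entry above notes that a state-changing action lacked the token needed to prevent CSRF. As a generic sketch of that token pattern (in Python, not Moodle's implementation; the `session` dictionary and function names are hypothetical), a server can issue a random per-session token and compare it on each state-changing request.

```python
import hmac
import secrets


def issue_csrf_token(session):
    """Store a fresh random token in the session and return it for the form."""
    token = secrets.token_urlsafe(32)
    session["csrf_token"] = token
    return token


def verify_csrf_token(session, submitted):
    """Accept the request only if the submitted token matches the session's."""
    expected = session.get("csrf_token")
    if not expected or not submitted:
        return False
    # Constant-time comparison avoids leaking the token through timing.
    return hmac.compare_digest(expected.encode(), submitted.encode())
```

A forged cross-site request cannot read the victim's session token, so it fails the comparison even though the browser attaches the session cookie.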

| moodle — moodle |

Recursive rendering of Mustache template helpers containing user input could, in some cases, result in an XSS risk or a page failing to load. |

2022-09-30 |

7.1 |

CVE-2022-40313

MISC

MISC |

| mybb — mybb |

MyBB is free and open source forum software. The value of the _Mail Settings_ → _Additional Parameters for PHP’s mail() function_ setting (`mail_parameters`), in connection with the configured mail program’s options and behavior, may allow access to sensitive information and Remote Code Execution (RCE). The vulnerable module requires Admin CP access with the `_Can manage settings?_` permission and may depend on configured file permissions. MyBB 1.8.31 resolves this issue with the commit `0cd318136a`. Users are advised to upgrade. There are no known workarounds for this vulnerability. |

2022-10-06 |

7.2 |

CVE-2022-39265

MISC

CONFIRM

MISC

MISC |

| najeebmedia — frontend_file_manager |

The Frontend File Manager Plugin WordPress plugin before 21.3 allows any authenticated user, such as a subscriber, to rename a file to an arbitrary extension, like PHP, which could allow them to upload arbitrary files to the server and achieve RCE. |

2022-10-03 |

8.8 |

CVE-2022-3125

MISC |

| nedi — nedi |

In certain NeDi products, a vulnerability in the web UI of NeDi login & Community login could allow an unauthenticated, remote attacker to affect the integrity of a device via a User Enumeration vulnerability. The vulnerability is due to insecure design, where a difference in the forgot password utility could allow an attacker to determine whether a user is valid, enabling a brute force attack against valid users. This affects NeDi for OS X <= 1.0.7, NeDi for Suse <= 1.0.7, and NeDi for FreeBSD <= 1.0.7. |

2022-10-06 |

9.1 |

CVE-2022-40895

MISC

MISC

MISC |

| octopus — octopus_server |

In affected versions of Octopus Deploy it is possible to bypass rate limiting on login using null bytes. |

2022-09-30 |

9.8 |

CVE-2022-2778

MISC |

| omron — cx-programmer |

OMRON CX-Programmer 9.78 and prior is vulnerable to an Out-of-Bounds Write, which may allow an attacker to execute arbitrary code. |

2022-10-06 |

9.8 |

CVE-2022-3396

CONFIRM |

| omron — cx-programmer |

OMRON CX-Programmer 9.78 and prior is vulnerable to an Out-of-Bounds Write, which may allow an attacker to execute arbitrary code. |

2022-10-06 |

9.8 |

CVE-2022-3397

CONFIRM |

| omron — cx-programmer |

OMRON CX-Programmer 9.78 and prior is vulnerable to an Out-of-Bounds Write, which may allow an attacker to execute arbitrary code. |

2022-10-06 |

9.8 |

CVE-2022-3398

CONFIRM |

| online_diagnostic_lab_management_system_project — online_diagnostic_lab_management_system |

An arbitrary file upload vulnerability in the component /php_action/editFile.php of Online Diagnostic Lab Management System v1.0 allows attackers to execute arbitrary code via a crafted PHP file. |

2022-10-07 |

7.2 |

CVE-2022-41512

MISC |

| online_diagnostic_lab_management_system_project — online_diagnostic_lab_management_system |

Online Diagnostic Lab Management System v1.0 was discovered to contain a SQL injection vulnerability via the id parameter at /diagnostic/edittest.php. |

2022-10-07 |

7.2 |

CVE-2022-41513

MISC |

| online_diagnostic_lab_management_system_project — online_diagnostic_lab_management_system |

Online Diagnostic Lab Management System v1.0 is vulnerable to SQL Injection via /diagnostic/editclient.php?id=. |

2022-10-07 |

7.2 |

CVE-2022-42073

MISC |

| online_diagnostic_lab_management_system_project — online_diagnostic_lab_management_system |

Online Diagnostic Lab Management System v1.0 is vulnerable to SQL Injection via /diagnostic/editcategory.php?id=. |

2022-10-07 |

7.2 |

CVE-2022-42074

MISC |

| online_leave_management_system_project — online_leave_management_system |

Online Leave Management System v1.0 was discovered to contain a SQL injection vulnerability via the id parameter at /leave_system/classes/Master.php?f=delete_department. |

2022-10-06 |

7.2 |

CVE-2022-41355

MISC |

| online_pet_shop_we_app_project — online_pet_shop_we_app |

Online Pet Shop We App v1.0 was discovered to contain a SQL injection vulnerability via the id parameter at /pet_shop/admin/?page=maintenance/manage_category. |

2022-10-07 |

7.2 |

CVE-2022-41377

MISC |

| online_pet_shop_we_app_project — online_pet_shop_we_app |

Online Pet Shop We App v1.0 was discovered to contain a SQL injection vulnerability via the id parameter at /pet_shop/admin/?page=inventory/manage_inventory. |

2022-10-07 |

7.2 |

CVE-2022-41378

MISC |

| open_source_sacco_management_system_project — open_source_sacco_management_system |

Open Source SACCO Management System v1.0 was discovered to contain a SQL injection vulnerability via the id parameter at /sacco_shield/ajax.php?action=delete_loan. |

2022-10-07 |

7.2 |

CVE-2022-41514

MISC |

| open_source_sacco_management_system_project — open_source_sacco_management_system |

Open Source SACCO Management System v1.0 was discovered to contain a SQL injection vulnerability via the id parameter at /sacco_shield/ajax.php?action=delete_payment. |

2022-10-07 |

7.2 |

CVE-2022-41515

MISC |

| orchest — orchest |

Impact: In a CSRF attack, an innocent end user is tricked by an attacker into submitting a web request that they did not intend. This may cause actions to be performed on the website that can include inadvertent client or server data leakage, change of session state, or manipulation of an end user’s account. Patch: Upgrade to v2022.09.10 to patch this vulnerability. Workarounds: Rebuild and redeploy the Orchest `auth-server` with this commit: https://github.com/orchest/orchest/commit/c2587a963cca742c4a2503bce4cfb4161bf64c2d. References: https://en.wikipedia.org/wiki/Cross-site_request_forgery and https://cwe.mitre.org/data/definitions/352.html. For more information: if you have any questions or comments about this advisory, open an issue in https://github.com/orchest/orchest or email rick@orchest.io. |

2022-09-30 |

8.1 |

CVE-2022-39268

MISC

MISC

MISC

CONFIRM |

| phpipam — phpipam |

phpipam v1.5.0 was discovered to contain a header injection vulnerability via the component /admin/subnets/ripe-query.php. |

2022-10-03 |

9.8 |

CVE-2022-41443

MISC |

| pjsip — pjsip |

PJSIP is a free and open source multimedia communication library written in C. In versions of PJSIP prior to 2.13, the PJSIP parser, PJMEDIA RTP decoder, and PJMEDIA SDP parser are affected by a buffer overflow vulnerability. Users connecting to untrusted clients are at risk. This issue has been patched; the fix is available as commit c4d3498 in the master branch and will be included in releases 2.13 and later. Users are advised to upgrade. There are no known workarounds for this issue. |

2022-10-06 |

9.8 |

CVE-2022-39244

MISC

CONFIRM |

| pjsip — pjsip |

PJSIP is a free and open source multimedia communication library written in C. When processing certain packets, PJSIP may incorrectly switch from using SRTP media transport to using basic RTP upon SRTP restart, causing the media to be sent insecurely. The vulnerability impacts all PJSIP users that use SRTP. The patch is available as commit d2acb9a in the master branch of the project and will be included in version 2.13. Users are advised to manually patch or to upgrade. There are no known workarounds for this vulnerability. |

2022-10-06 |

9.1 |

CVE-2022-39269

MISC

CONFIRM |

| pyup — dependency_parser |

dparse is a parser for Python dependency files. Versions of dparse before 0.5.2 contain a regular expression that is vulnerable to Regular Expression Denial of Service. All users parsing index server URLs with dparse are impacted by this vulnerability. A patch has been applied in version `0.5.2`, and all users are advised to upgrade to `0.5.2` as soon as possible. Users unable to upgrade should avoid passing index server URLs in the source file to be parsed. |

2022-10-06 |

7.5 |

CVE-2022-39280

MISC

MISC

MISC

CONFIRM |

| realvnc — vnc_server |

RealVNC VNC Server before 6.11.0 and VNC Viewer before 6.22.826 on Windows allow local privilege escalation via MSI installer Repair mode. |

2022-09-30 |

7.8 |

CVE-2022-41975

MISC |

| samsung — factorycamera |

Path traversal vulnerability in AtBroadcastReceiver in FactoryCamera prior to version 3.5.51 allows attackers to write arbitrary files with FactoryCamera privileges. |

2022-10-07 |

7.8 |

CVE-2022-39858

MISC |

| semtech — loramac-node |

LoRaMac-node is a reference implementation and documentation of a LoRa network node. Versions of LoRaMac-node prior to 4.7.0 are vulnerable to a buffer overflow. Improper size validation of the incoming radio frames can lead to a 65280-byte out-of-bounds write. The function `ProcessRadioRxDone` implicitly expects incoming radio frames to have a payload of at least one byte. An empty payload leads to a 1-byte out-of-bounds read of user controlled content when the payload buffer is reused. This allows an attacker to craft a FRAME_TYPE_PROPRIETARY frame with size -1, which results in a 65280-byte out-of-bounds memcopy, likely with partially attacker-controlled data. Corrupting a large part of the data section is likely to cause a DoS. If the large out-of-bounds write does not immediately crash the device, the attacker may gain control over the execution due to now controlling large parts of the data section. Users are advised to upgrade either by updating their package or by manually applying the patch commit `e851b079`. |

2022-10-06 |

9.8 |

CVE-2022-39274

MISC

MISC

CONFIRM |

| simple_cold_storage_management_system_project — simple_cold_storage_management_system |

Simple Cold Storage Management System v1.0 is vulnerable to SQL injection via /csms/classes/Master.php?f=delete_message. |

2022-10-06 |

7.2 |

CVE-2022-42241

MISC |

| simple_cold_storage_management_system_project — simple_cold_storage_management_system |

Simple Cold Storage Management System v1.0 is vulnerable to SQL injection via /csms/classes/Master.php?f=delete_booking. |

2022-10-06 |

7.2 |

CVE-2022-42242

MISC |

| simple_cold_storage_management_system_project — simple_cold_storage_management_system |

Simple Cold Storage Management System v1.0 is vulnerable to SQL injection via /csms/admin/storages/manage_storage.php?id=. |

2022-10-06 |

7.2 |

CVE-2022-42243

MISC |

| simple_cold_storage_management_system_project — simple_cold_storage_management_system |

Simple Cold Storage Management System v1.0 is vulnerable to SQL injection via /csms/admin/storages/view_storage.php?id=. |

2022-10-06 |

7.2 |

CVE-2022-42249

MISC |

| simple_cold_storage_management_system_project — simple_cold_storage_management_system |

Simple Cold Storage Management System v1.0 is vulnerable to SQL injection via /csms/admin/inquiries/view_details.php?id=. |

2022-10-06 |

7.2 |

CVE-2022-42250

MISC |

| simple_e-learning_system_project — simple_e-learning_system |

A SQL injection vulnerability was discovered in Sourcecodester Simple E-Learning System 1.0, in the classCode parameter of /vcs/classRoom.php?classCode=. |

2022-10-07 |

9.8 |

CVE-2022-40872

MISC |

| snyk — cli |

Snyk CLI before 1.996.0 allows arbitrary command execution, affecting Snyk IDE plugins and the snyk npm package. Exploitation could follow from the common practice of viewing untrusted files in the Visual Studio Code editor, for example. The original demonstration was with shell metacharacters in the vendor.json ignore field, affecting snyk-go-plugin before 1.19.1. This affects, for example, the Snyk TeamCity plugin (which does not update automatically) before 20220930.142957. |

2022-10-03 |

7.8 |

CVE-2022-40764

MISC

MISC

MISC

MISC |

| solarwinds — orion_platform |

A component of Orion Platform was vulnerable to SQL injection. An authenticated attacker could leverage this for privilege escalation or remote code execution. |

2022-09-30 |

8.8 |

CVE-2022-36961

MISC

MISC |

| sonicjs — sonicjs |

SonicJS through 0.6.0 allows file overwrite. It has the following mutations that are used for updating files: fileCreate and fileUpdate. Both of these mutations can be called without any authentication to overwrite any files on a SonicJS application, leading to Arbitrary File Write and Delete. |

2022-10-01 |

9.1 |

CVE-2022-42002

MISC

MISC |

| swmansion — react_native_reanimated |

Versions of the package react-native-reanimated before 3.0.0-rc.1 are vulnerable to Regular Expression Denial of Service (ReDoS) due to improper usage of a regular expression in the parser of Colors.js. |

2022-09-30 |

7.5 |

CVE-2022-24373

CONFIRM

CONFIRM

CONFIRM

CONFIRM |

| sylabs — singularity_image_format |

sylabs/sif is the Singularity Image Format (SIF) reference implementation. In versions prior to 2.8.1, the `github.com/sylabs/sif/v2/pkg/integrity` package did not verify that the hash algorithm(s) used are cryptographically secure when verifying digital signatures. A patch is available in version >= v2.8.1 of the module. Users are encouraged to upgrade. Users unable to upgrade may independently validate that the hash algorithm(s) used for metadata digest(s) and signature hash are cryptographically secure. |

2022-10-06 |

9.8 |

CVE-2022-39237

CONFIRM

MISC |

| tooljet — tooljet |

Account takeover: the user information returned by the application exposes the password hash, which an attacker can crack. It also exposes the forgot_password_token, which an attacker can use to send a password reset request and change the password. |

2022-10-07 |

7.5 |

CVE-2022-3422

CONFIRM

MISC |

| veritas — netbackup |

An issue was discovered in Veritas NetBackup through 10.0 and related Veritas products. The NetBackup Primary server is vulnerable to a SQL Injection attack affecting the NBFSMCLIENT service. |

2022-10-03 |

9.8 |

CVE-2022-42302

MISC |

| veritas — netbackup |

An issue was discovered in Veritas NetBackup through 10.0 and related Veritas products. The NetBackup Primary server is vulnerable to a second-order SQL Injection attack affecting the NBFSMCLIENT service by leveraging CVE-2022-42302. |

2022-10-03 |

9.8 |

CVE-2022-42303

MISC |

| veritas — netbackup |

An issue was discovered in Veritas NetBackup through 10.0 and related Veritas products. The NetBackup Primary server is vulnerable to a SQL Injection attack affecting idm, nbars, and SLP manager code. |

2022-10-03 |

9.8 |

CVE-2022-42304

MISC |

| veritas — netbackup |

An issue was discovered in Veritas NetBackup through 10.0.0.1 and related Veritas products. The NetBackup Primary server is vulnerable to an XML External Entity (XXE) Injection attack through the DiscoveryService service. |

2022-10-03 |

9.8 |

CVE-2022-42307

MISC |

| veritas — netbackup |

An issue was discovered in Veritas NetBackup through 10.0.0.1 and related Veritas products. The NetBackup Primary server is vulnerable to an XML External Entity (XXE) injection attack through the nbars process. |

2022-10-03 |

8.8 |

CVE-2022-42301

MISC |

| veritas — netbackup |

An issue was discovered in Veritas NetBackup through 10.0.0.1 and related Veritas products. The NetBackup Primary server is vulnerable to a denial of service attack through the DiscoveryService service. |

2022-10-03 |

7.5 |

CVE-2022-42299

MISC |

| veritas — netbackup |

An issue was discovered in Veritas NetBackup through 10.0.0.1 and related Veritas products. The NetBackup Primary server is vulnerable to a Path traversal attack through the DiscoveryService service. |

2022-10-03 |

7.5 |

CVE-2022-42305

MISC |

| veritas — netbackup |

An issue was discovered in Veritas NetBackup through 8.2 and related Veritas products. An attacker with local access can delete arbitrary files by leveraging a path traversal in the pbx_exchange registration code. |

2022-10-03 |

7.1 |

CVE-2022-42308

MISC |

| vmware — rabbitmq |

RabbitMQ is a multi-protocol messaging and streaming broker. In affected versions the shovel and federation plugins perform URI obfuscation in their worker (link) state. The encryption key used to encrypt the URI was seeded with a predictable secret. This means that in case of certain exceptions related to Shovel and Federation plugins, reasonably easily deobfuscatable data could appear in the node log. Patched versions correctly use a cluster-wide secret for that purpose. This issue has been addressed, and patched versions `3.10.2`, `3.9.18`, and `3.8.32` are available. Users unable to upgrade should disable the Shovel and Federation plugins. |

2022-10-06 |

7.5 |

CVE-2022-31008

MISC

CONFIRM |

| web-based_student_clearance_system_project — web-based_student_clearance_system |

A vulnerability was found in SourceCodester Web-Based Student Clearance System. It has been classified as critical. Affected is an unknown function of the file /Admin/login.php of the component POST Parameter Handler. The manipulation of the argument txtusername leads to sql injection. It is possible to launch the attack remotely. The exploit has been disclosed to the public and may be used. VDB-210246 is the identifier assigned to this vulnerability. |

2022-10-07 |

9.8 |

CVE-2022-3414

N/A

N/A |

by Contributed | Oct 10, 2022 | Technology

During Cybersecurity Awareness Month, it’s important to focus not only on the dangers of data breaches but also on how to build with products like Microsoft Power Platform and Dataverse—products that are designed to help keep your organization’s data protected. Use the resources on Microsoft Learn to explore ways to support security in your organization, whether you’re a traditional solution architect, a business user, or an IT pro. April Dunnam, Power Platform Cloud Advocate, notes that anyone building a low-code solution should have a security-first mindset. She explains, “Your solution will be better designed, and then it’s going to be long-standing.”

Dataverse and Microsoft Power Platform—better together

Dataverse is a critical and foundational component of Microsoft Power Platform. It’s what the platform runs on, and it’s secure by design. You can configure many layers of security in Dataverse. As April points out, “You can use the [Microsoft Power Platform] admin center to define different data loss prevention policies to determine which connectors you can and can’t use, all the way down to a really granular level.” She recommends checking out the new managed environments that apply to both Microsoft Power Platform and Dataverse, which make it easier to manage some of the out-of-the-box security features. For every solution, you can configure additional security capabilities. For more information, go to the Microsoft Learn module Create and manage environments in Dataverse.

As part of your cybersecurity strategy, April also recommends setting up a Center of Excellence and using the toolkit, because, as she observes, “[It] augments the capabilities of Microsoft Power Platform and fosters an internal community who can think ahead and put together best practices to enable secure low-code solutions.” To learn more, explore the Microsoft Learn module Get started with Microsoft Power Platform Center of Excellence.

April is all for using Microsoft Power Platform tools “right out of the box” for building security solutions. She observes, “These intuitive, user-friendly tools guide us through making sure that the applications and solutions we build are secure. So, with just a little bit of work and a little bit of reading some of the Microsoft Learn material to get a good understanding, you’re ready to come up to speed on making sure that the solutions that you build are secure.” For details, check out the Microsoft Learn module Introduction to Microsoft Power Platform security and governance.

Use a collection to discover more security content

April encourages the use of Microsoft Learn resources, saying, “Learning paths and modules are so helpful for getting a basic understanding of security to help you set your department up for success. Later, you can transition to more advanced content as you strategize for your organization in a role like solution architect.” April recommends the Cybersecurity Awareness – Microsoft Power Platform collection, which offers a basic understanding of Dataverse security capabilities. Plus, it explores fundamental Microsoft Power Platform security concepts.

Earn a Microsoft Certification

People who follow April know that she’s a passionate Microsoft Power Platform advocate with many certifications. She observes, “People are always asking me, ‘What should I do next on my path?’” April has a couple of recommendations for validating your skills by earning an industry-recognized Microsoft Certification. She says, “If you’re building some secure applications for your team, then look into earning a Microsoft Certified: Power Platform Functional Consultant Associate certification. [Pass Exam PL-200.] If you’re at a senior level, doing things like deploying scalable applications and managing security across the environment for an organization, then explore earning a Microsoft Certified: Power Platform Solution Architect Expert certification. [Pass Exam PL-600 and a prerequisite.]”

Keep learning with April Dunnam

Now that you’ve gotten some key tips on how to handle security issues, it’s time to dive deep into Microsoft Power Platform and Dataverse on April Dunnam’s YouTube channel. Watch for her upcoming video “Why SharePoint Experts are using Dataverse”, and be sure to check out her show—The Low Code Revolution—to build apps efficiently and expand your skill set.

by Contributed | Oct 8, 2022 | Technology

Next.js is one of the most popular JavaScript frameworks for building complex, server-driven React applications, combining the features that make React a useful UI library with server-side rendering, built-in API support and SEO optimizations.

With today’s preview release, we’re improving support for Next.js on Azure Static Web Apps.

What’s new

In this preview we’re focusing on making zero-config Next.js deployments even easier than before by including support for Server-Side Rendering (SSR) and Incremental Static Regeneration (ISR), API Routes, advanced image compression, and Next.js Auth. In this post, we want to highlight three features that make building Next.js apps on Azure more powerful.

Server-Side Rendering

When we first launched Static Web Apps, we ensured we had support for Next.js, but our focus was on Static-Site Generation, or SSG. SSG takes the application and compiles static HTML from it, which is then served. While SSG is useful in some scenarios, it doesn’t support dynamic updates to the content of the page per request.

This is where Server-Side Rendering, or SSR, comes in. With SSR you can inject data from a backend data source before the HTML is sent to the client, aka in the pre-rendering phase, allowing for more contextual, real-time updates to the data. Check out Next.js’s docs for more on SSR.

For this demo we’ll add a getServerSideProps function to our index.js file that has the current timestamp:

    export async function getServerSideProps() {
      const data = JSON.stringify({ time: new Date() });
      return { props: { data } };
    }

We can then consume this in the component:

    export default function Home({ data }) {
      const serverData = JSON.parse(data);
      // snip

And then output the date timestamp.
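To make the data flow concrete, here is a minimal sketch that runs in plain Node.js, outside of Next.js. It reuses the getServerSideProps logic from above without the `export` keyword, and a hypothetical `formatTime` helper stands in for what the component does with its props. It is an illustration only, not part of the sample app.

```javascript
// Same logic as the getServerSideProps shown above, minus `export`,
// so that it can run as a plain Node.js script.
async function getServerSideProps() {
  // JSON.stringify turns the Date into an ISO 8601 string.
  const data = JSON.stringify({ time: new Date() });
  return { props: { data } };
}

// Hypothetical helper standing in for the Home component's use of its props.
function formatTime(data) {
  const serverData = JSON.parse(data);
  // After the JSON round trip, `time` is a string, so rebuild a Date before using it.
  return new Date(serverData.time).toISOString();
}

getServerSideProps().then(({ props }) => {
  console.log("Rendered at", formatTime(props.data));
});
```

The point to notice is that the timestamp reaches the component as a string, which is why the component parses the JSON before using it.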

API routes

API routes allow us to build a backend API for the client-side components of our Next.js app to communicate with and get data from other systems. These are added to a project by creating an api folder within our Next.js app and defining JavaScript (or TypeScript) files with exported functions that Next.js will turn into APIs that can be called to return JSON to the client.

API routes can be as simple as masking an external service, or as complex as hosting a GraphQL server, which we’re doing in the example below.

Here you’ll see that we called the API route, /graphql, and got back a GraphQL payload response. You’ll find this sample on GitHub.
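For something simpler than the GraphQL sample, a minimal API route might look like the sketch below. The file name `pages/api/time.js` and the payload are assumptions made for illustration. In a real Next.js project the handler would be the file's default export, and the mock response object exists only so the sketch can run in plain Node.js.

```javascript
// Hypothetical pages/api/time.js. In a Next.js project this function would be
// the file's default export (`export default function handler(req, res)`).
function handler(req, res) {
  if (req.method !== "GET") {
    // Reject anything other than GET with 405 Method Not Allowed.
    res.status(405).json({ error: "Method not allowed" });
    return;
  }
  res.status(200).json({ time: new Date().toISOString() });
}

// Minimal stand-in for the response object, used only to exercise the handler here.
function createMockResponse() {
  const res = { statusCode: null, body: null };
  res.status = (code) => { res.statusCode = code; return res; };
  res.json = (payload) => { res.body = payload; return res; };
  return res;
}

const res = createMockResponse();
handler({ method: "GET" }, res);
console.log(res.statusCode, res.body);
```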

Image optimisation

When it comes to ensuring your website is optimized for all web clients, Web Vitals is a valuable measure. To help with this, Next.js has an Image component and image optimisation. This feature of Next.js also makes it easier to create responsive images on your website and optimise the image being sent to the browser based on the dimensions of the viewport and whether the image is currently visible or not.

You can see this in action where we have deployed the Next.js image example.

Deploying with the new Next.js support

When it comes to deploying an application to leverage these Next.js features, you need to select Next.js from the Build Presets and leave the rest of the options at their defaults, as SSR Next.js applications are the default for Static Web Apps. If you wish to use Next.js as a Static Site Generator, you’ll need to add the environment variable is_static_export to your deployment pipeline, set it to true, and set the output location to out.

Common Questions

Can I still use SSG?

Yes! Static rendered Next.js applications are still supported on Static Web Apps, and we encourage you to keep using them if they are the right model for your applications.

Existing Next.js SSG sites should be unaffected by the launch today, although it is encouraged that you add the is_static_export environment flag to your deployment pipeline, ensuring that Static Web Apps correctly identifies the SSG.

Should I use SSR over SSG?

This is very much an “it depends” answer. The support announced today for SSR is preview support, meaning that it is not recommended for production workloads. For production, we still encourage people to use SSG as their preferred model when working with Next.js. This echoes the recommendation from Next.js themselves that SSG should be the preferred model when publishing sites.

SSG sites have a performance benefit over SSR, as the HTML files are created at build time rather than runtime, meaning there is less work for the server to do when producing content, thus increasing performance.

But if you’re looking to use features like dynamic routing, have a very large site that has hundreds (or thousands) of pages, or want to fetch data before sending to the client, SSR will be a better fit for you and worth exploring.

If you’re still unsure which approach to use, check out this excellent guide from Next.js themselves.

Can I use Azure Functions or BYO Backends as well as API routes?

No, if you’re deploying a hybrid Next.js application then no additional backend will be available for the site as API routes can be used to achieve much of the same functionality.

Next steps

This is all exciting and if you’re like me and can’t wait to try out the new features, check out the sample repo from this post then head on over to our documentation and get started with your next Next.js application today.

by Contributed | Oct 8, 2022 | Technology

Hi,

In this article, I will walk you through some typical challenges when building AKS platforms. I will also reflect on the AKS impact over the Hub & Spoke topology, which is widely adopted by organizations.

Quick recap of the Hub & Spoke

I won’t spend much time on this because most of you already know what the Hub & Spoke model is all about. Microsoft has already documented this here; the proposed diagram is a little bit naïve, but the essentials are there. The Hub & Spoke model is a network-centric architecture where everything ends up in a virtual network in one way or another. This gives companies greater control over the network traffic. There are many variants, but the spokes are virtual networks dedicated to business workloads, while the hubs play a control role, mostly to rule and inspect east-west traffic (spoke to spoke & DC to DC) and south-north traffic (coming from outside the private perimeter and going outside). On top of increased control over the network traffic, the Hub & Spoke model aims at sharing some services across workloads, such as DNS to name just one.

Most companies rely on network virtual appliances (NVA) to rule the network traffic, although we see a growing adoption of Azure Firewall.

Today, most PaaS services can be plugged into the Hub & Spoke model in one way or another:

- Through VNET Integration for outbound traffic

- Through Private Link for inbound traffic

- Through Microsoft-managed virtual networks for many data services.

- Natively, such as App Service Environments, Azure API Management, etc. and of course AKS!!

That is why we see a growing adoption of this model. The ultimate purpose of Hub & Spoke is to isolate workloads from the Internet and have increased control over internet-facing workloads that are functionally required to be public (e.g. a mobile app talking to an API, a B2C offering, an e-business site, etc.).

The Hub & Spoke model gives companies the opportunity to:

- Route traffic as they wish

- Use layer-4 & 7 firewalls

- Use IDS/IPS and TLS inspection

- Do network micro-segmentation and workload isolation

Some companies push micro-segmentation very far, for example by allocating a dedicated virtual network to each and every asset, which is only peered with the required capabilities (internet in-out, DC, etc.), while others share some zones across applications. However, no matter what they do, they will still rely on network security groups and next gen firewalls to govern their traffic.

AKS, the elephant in the Hub & Spoke room

AKS is not a service like others, it has a vast ecosystem and a different approach to networking. An AKS cluster is typically meant to host more than a single application, and you can’t afford to “simply” rely on the Hub & Spoke to manage network traffic. Kubernetes is aimed at abstracting away infrastructure components such as nodes, load balancers etc. Most K8s solutions are based on dynamic rules and programmable networks…this is light years away from the rather static approach of NSGs and NVAs. A single AKS cluster might host hundreds of applications…That is why I consider AKS as the elephant in the Hub & Spoke room here. Somehow, AKS “breaks” the hub & spoke model, at least for East-West traffic. South-North traffic remains more controllable using traditional techniques, as it involves the cluster boundaries (IN and OUT).

Network plugins

Before talking about how you can isolate apps in AKS, let’s have a look at the different networking options for the cluster itself:

- Kubenet: K8s native network plugin. Often used by companies because of its IP friendliness. Only nodes get a routable IP while pods get NAT IPs. Kubenet comes with some limitations, such as a maximum of 400 nodes per cluster, imposed by the underlying route table and its UDR limit (400 routes max). Note that 400 nodes is a theoretical limit because you are likely to add your own routes on top of the AKS ones, which further reduces the maximum number of nodes you can have. Also, if you provision new node pools during cluster upgrades, that limit will be even lower. At this stage, you can’t use virtual nodes with Kubenet. NAT is also supposed to incur a performance penalty, but I never perceived any visible effect. By default, you can’t leverage K8s network policies with Kubenet, but Calico Policy comes to the rescue. Lastly, because of NAT, you can’t use NSGs and NVAs the same way you would with VMs or Azure CNI (more on that later).

- Azure CNI: The Azure Container Network Interface for K8s. In a nutshell, with Azure CNI, every pod instance gets a routable IP assigned, hence a potential high usage of IPs. With Azure CNI, you can leverage Azure Networking as if AKS was just a bunch of mere VMs. You also have K8s network policies available

- Bring your own CNI: features will vary according to the CNI vendor. Mind the fact that you won’t have MS support for CNI-related issues.

- AKS CNI Overlay (early days in 10/2022): this makes me think of some sort of managed Kubenet. It has the benefits of Kubenet (IP friendly) but overcomes some of its limitations.
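The Kubenet node ceiling mentioned in the list above is simple arithmetic, sketched below. The 400-route limit comes from the text. The function name and the sample numbers (25 custom routes, 150 temporary nodes during an upgrade) are made up for illustration, and the model assumes Kubenet consumes one route per node.

```javascript
// Route limit of the underlying route table, as stated in the text.
const UDR_ROUTE_LIMIT = 400;

// Simplified, hypothetical model: one route per node, minus whatever
// routes are already taken by your own UDRs or by temporary upgrade nodes.
function maxKubenetNodes(customRoutes, extraNodesDuringUpgrade = 0) {
  const remaining = UDR_ROUTE_LIMIT - customRoutes - extraNodesDuringUpgrade;
  return Math.max(0, remaining);
}

console.log(maxKubenetNodes(0));       // 400: the theoretical ceiling
console.log(maxKubenetNodes(25));      // 375: your own routes eat into the budget
console.log(maxKubenetNodes(25, 150)); // 225: temporary nodes from a new pool during an upgrade
```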

We will see later what impact the network plugin may have over traffic management, but let us focus first on a more concrete example.

South-North and East-West traffic

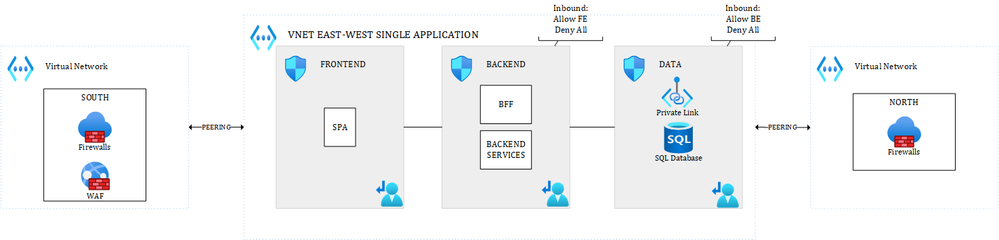

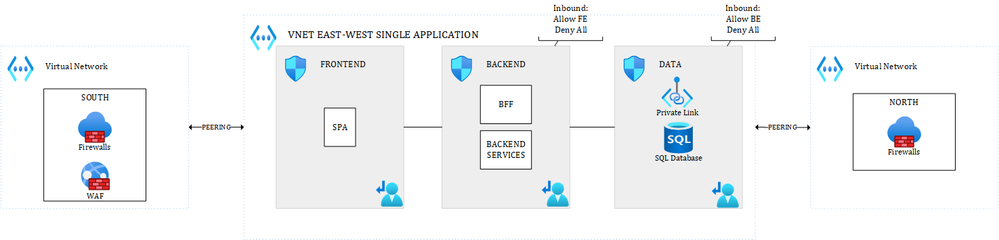

In a typical Hub & Spoke approach, a N-TIER architecture for a single application could look like this (simplified views):

Figure 1 – East-West and South-North traffic – Multiple Hubs

where the different layers of the application are talking to each other through the system routes and controlled by Network Security Groups (which is commonly accepted). Only traffic coming from outside the VNET (South) or leaving the VNET (North) would be routed through an NVA or Azure Firewall. In the above diagram, there are dedicated hubs for North & South traffic.

An alternative to this could be:

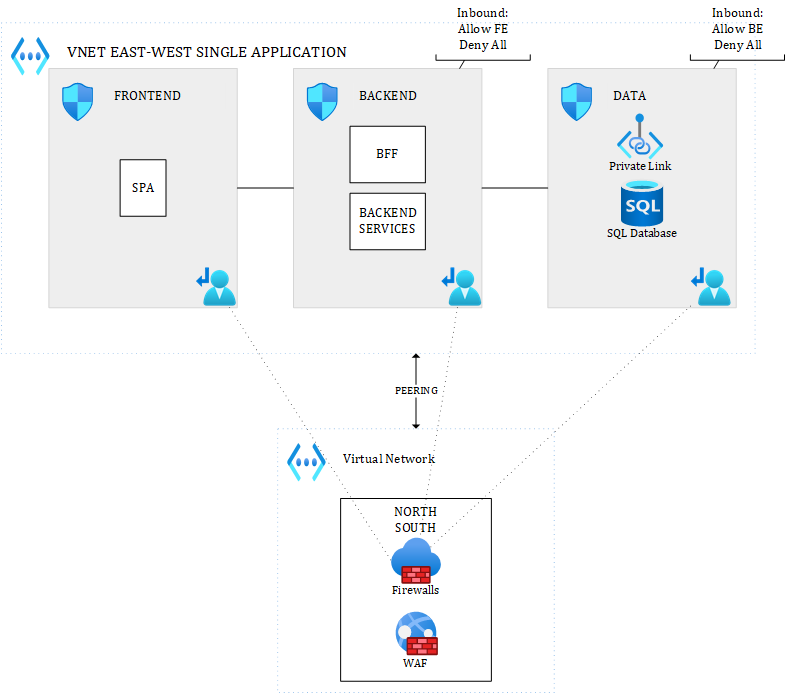

Figure 2 – Alternative for East-West and South-North traffic – Single Hub

where you’d have a single hub for South-North traffic, and where you might optionally route internal VNET traffic (East-West) of that single application to an NVA or Azure Firewall, if you really want to enforce IDPS everywhere and/or TLS inspection.

With UDRs and peering, everything is possible. The number of hubs you want to use depends on you. While multiple hubs improve visibility, they incur extra costs (at least one firewall or NVA per hub, and even more for HA and/or DR setup). Costs can rise pretty quickly.

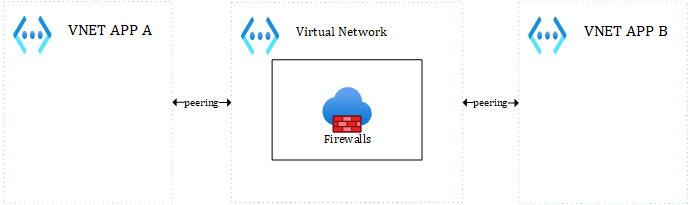

For East-West traffic involving multiple applications, you would simply handle this with a hub (either the main hub or an integration hub) in the middle (very typical this one):

Figure 3 – East-West traffic with the Hub in the middle

Whatever you put (VMs, ASE, APIM, other PaaS services…) inside the different subnets, all of this makes perfect sense in a Hub & Spoke network, but what about AKS?

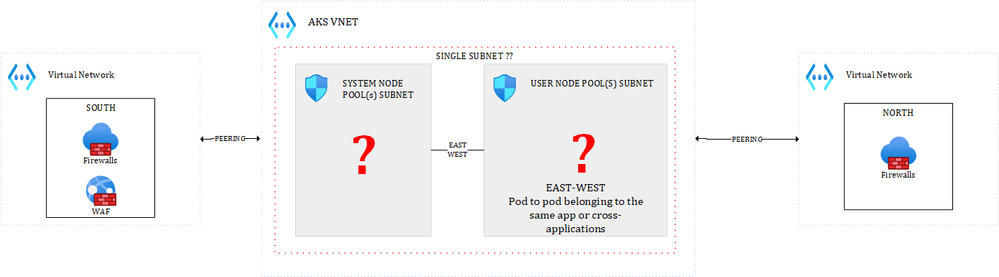

As a reminder, an AKS cluster is a set of node pools (system and user node pools). A best practice is to have at least one dedicated system node pool and one or more user node pools. While it is a best practice, this is by no means enforced by Azure. Each node pool, except the system one, will result in 0 to n nodes (virtual machines), depending on the defined scaling settings. Ultimately, these nodes will end up in one or more subnets, as illustrated below:

Figure 4 – Subnets for worker nodes

Before diving into East-West traffic, let us focus on South-North for a second, because that is the easiest. You can simply peer VNET(s) to the AKS one to filter out what comes inside the cluster (where you’ll also have an ingress controller), and what goes outside of the cluster (where you could have an egress controller). Egress traffic could be initiated by a pod reaching out to Azure data services, other VNETs or Internet.

Regarding East-West and the above diagram, a few questions arise:

- Are you going to have one subnet for system node pools and one for user node pools?

- Are you going to combine altogether?

- Will it be one subnet per user node pool???

Note that there is already one sure thing: you cannot use different VNETs to rule East-West traffic across applications hosted in the same cluster. Your best possible boundary is the subnet. But, even though subnets could be used as a boundary, should you use NSGs, NVAs, etc. for internal traffic? Relying on them is certainly not a cloud native approach, but it does not mean you can’t use them. Let’s first make the assumption that you want to go the cloud native way. For this, you’d rely on:

- A service mesh such as Istio, Linkerd, Open Service Mesh, NGINX Service Mesh, etc. to apply layer-7 policies (access controls, mTLS, etc.)

- K8s network policies or Calico Network policies to apply layer-4 policies. Note that Calico also integrates with Istio.

And you would rely on this, independently of the underlying nodes that are being used by the cluster. This approach is fine, as long as:

- You master Service Meshes and Network Policies, which is not an easy task :).

- The process initiating or receiving traffic is a container running in the cluster.

Relying “only” on a Service Mesh and Network Policies to manage internal cluster traffic can still leave some doors open to the following types of attacks:

- Escape from containers type of vulnerabilities, where the process would have access to the underlying OS. In such a case, the execution context will be insensitive to your mesh & network policies

- Operators logged onto the cluster worker nodes themselves, or host-level processes. Same as above, they could perform lateral movements escaping the control of your mesh & network policies.

With proper Azure Policy in place, you can mitigate item number 1, at least by making sure that containers do not run as root, are not able to escalate privileges, etc. Making sure images only come from trusted registries and are built on trusted base images will also greatly help, but restricting container capabilities (CAPS) is the best way to go. Of course, if there is an OS-level vulnerability or an admission controller vulnerability, you could still be at risk.
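As an illustration of the container-level hardening just described, the sketch below builds a pod manifest as a JavaScript object and prints it as JSON, which kubectl accepts as well as YAML. The pod name, image, and registry are hypothetical. The securityContext fields are standard Kubernetes settings, but which of them your Azure Policy assignments actually enforce is something to verify in your own environment.

```javascript
// Hypothetical pod: the name, image, and registry are placeholders.
const hardenedPod = {
  apiVersion: "v1",
  kind: "Pod",
  metadata: { name: "hardened-app" },
  spec: {
    containers: [
      {
        name: "app",
        image: "trustedregistry.example.com/app:1.0.0",
        securityContext: {
          runAsNonRoot: true,              // refuse to start if the image runs as root
          allowPrivilegeEscalation: false, // block privilege gains after start
          readOnlyRootFilesystem: true,    // no writes to the container file system
          capabilities: { drop: ["ALL"] }, // start from zero Linux capabilities
        },
      },
    ],
  },
};

// Save the output to a file and apply it with `kubectl apply -f <file>`.
console.log(JSON.stringify(hardenedPod, null, 2));
```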

Item number 2 can be mitigated with strict access policies, making sure that not everybody has access to the private SSH keys. This is more of a malicious insider threat, which you should handle like any other malicious insider threat.

But by the way, why would you even want to go the Cloud native way and use a Service Mesh combined with Calico (or K8s network policies)?

– To build a zero-trust environment, based on layer-4 and layer-7 rules.

– To rely 100% on automation, since everything is “as code”, leading to predictable and repeatable outputs

– To benefit from the built-in elasticity and resilience of K8s, where pods can be re-scheduled in case of adverse event to any node that can accommodate the required resources.

– To benefit from smart load balancers that understand application protocols such as gRPC, HTTP/2, etc.

– To benefit from rolling upgrades and modern deployment techniques such as blue/green, A/B testing, etc.

– To have a greater visibility thanks to the built-in observability mechanisms that are part of service meshes

– To have more robust applications, which you can stress with chaos engineering techniques, again built-in in multiple meshes

All of these are very valid reasons to go the Cloud native way, but most organizations are just not ready yet for this mindset shift.

Risks highlighted above might be acceptable under the following circumstances:

– You host multiple applications, which are closely related to each other (same family, same business line, etc.)

– You host a single application in the cluster (yes, it happens)

– You host multiple applications belonging to a single tenant

It is of course up to you to define where to put the limits. However, if you host multiple assets belonging to different customers, in other words, if you have a true multi-tenant cluster, you will want to make sure a given customer cannot access another one. In that case, relying only on Service Meshes and K8s network policies might be more risky, and this is where subnet segregation and NSGs might come into play.

Multitenant clusters

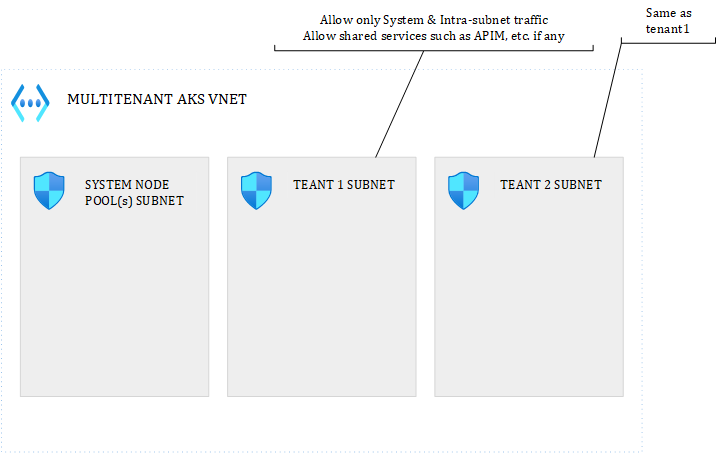

Let us explore the possibilities for true multi-tenant clusters. Something you can end up with would look like this:

Figure 5 – Multitenancy in AKS – Possible setup

I got rid of the South-North traffic to focus only on East-West. You could isolate each tenant in a dedicated subnet and define the NSG rules you want. UDRs can be added on top.

When network plugin matters

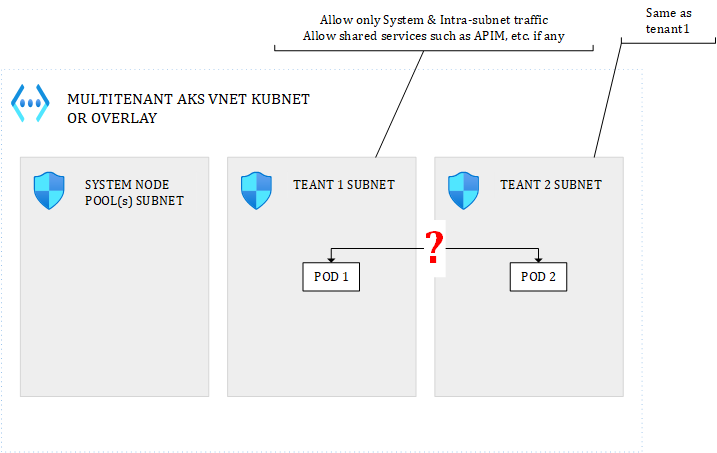

With such a topology, network plugins matter. Let’s see how:

Figure 6 – POD to POD with Kubenet

With the Kubenet plugin, when POD1 and POD2 talk to each other, the NSGs see the NAT IP, not even the IP of the underlying node on which the pod is running. Because the mapping between each AKS node and a POD CIDR range is unpredictable, there is no way you could use the pod or underlying node IP in the NSG. With Kubenet, you’d be forced to allow the entire POD CIDR range for every subnet you have; otherwise, you might even block pods belonging to the same tenant from talking to each other. To isolate tenants, you’d then need to rely on:

– NSGs for the subnet ranges, not to govern which pods can or cannot talk to each other, but to isolate tenant nodes from each other and mitigate lateral movement in case of a container escape and direct access to the underlying VMs.

– K8s network policies to prevent lateral movement from within the cluster, optionally adding layer-7 policies with a mesh.

You would have to combine both NSGs and K8s network policies.
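To make the K8s side of that combination more concrete, here is a minimal sketch of a network policy that only admits ingress traffic from pods of the same namespace. It assumes one namespace per tenant (the name `tenant1` is hypothetical) and a cluster where a network policy engine such as Calico is enabled; it only builds the manifest and prints it as JSON, which `kubectl apply -f` accepts.

```python
import json


def same_namespace_only_policy(namespace: str) -> dict:
    """Build a NetworkPolicy manifest that only admits ingress
    coming from pods located in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": "allow-same-namespace-only",  # hypothetical name
            "namespace": namespace,
        },
        "spec": {
            # Empty selector: the policy applies to every pod in the namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A bare podSelector under "from" matches pods of this namespace only,
            # so traffic from other namespaces (tenants) is dropped.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


# Assumption: each tenant lives in its own namespace.
policy = same_namespace_only_policy("tenant1")
print(json.dumps(policy, indent=2))
```

The policy covers pod-to-pod traffic inside the cluster; the NSGs remain in charge of what happens between the tenant subnets at the node level.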

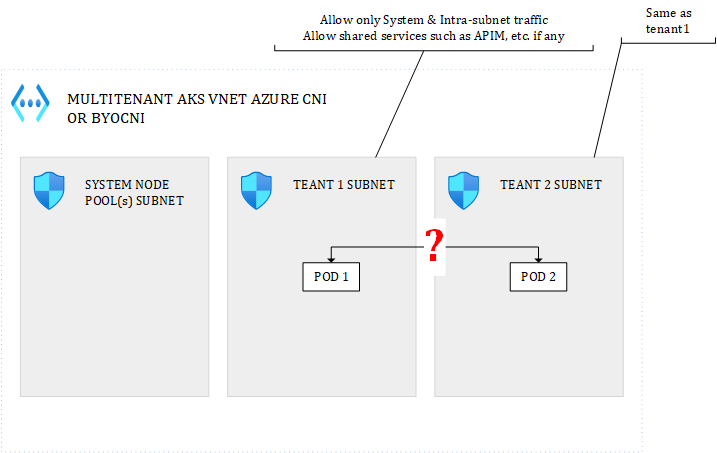

With CNI:

Figure 7 – POD to POD with CNI

POD1 and POD2 would see each other’s assigned routable IP, and so would the NSG. Therefore, whitelisting only intra-subnet traffic at the NSG level would do the job. However, I would discourage the use of POD IPs in NSG rules because POD IPs are very volatile. Out of the box, there is no way to assign a static IP to a POD. This can be achieved with some CNI plugins, but I don’t think it is a good idea. I would recommend working with subnet-wide and/or node-level rules, and not going any deeper. Of course, you can also use internal K8s network policies to apply fine-grained rules.
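The subnet-wide reasoning can be sketched as follows. This is a toy model of the decision an NSG would make, not a call to any Azure API; the tenant names and address ranges are made up for illustration.

```python
from ipaddress import ip_address, ip_network

# Hypothetical tenant subnets; the ranges are illustrative only.
TENANT_SUBNETS = {
    "tenant1": ip_network("10.1.0.0/24"),
    "tenant2": ip_network("10.2.0.0/24"),
}


def subnet_of(ip: str):
    """Return the tenant whose subnet contains the IP, or None."""
    addr = ip_address(ip)
    for tenant, network in TENANT_SUBNETS.items():
        if addr in network:
            return tenant
    return None


def is_allowed(src_ip: str, dst_ip: str) -> bool:
    """Subnet-wide rule: allow traffic only inside a single tenant subnet."""
    src_tenant = subnet_of(src_ip)
    return src_tenant is not None and src_tenant == subnet_of(dst_ip)


print(is_allowed("10.1.0.4", "10.1.0.9"))  # same tenant subnet
print(is_allowed("10.1.0.4", "10.2.0.9"))  # crosses tenant subnets
```

Because the rule only looks at the subnet range, it keeps working when pods are re-created with new IPs, which is the reason for not going deeper than subnet or node level.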

Managing resilience and availability

Ok, but how do you make sure pods from tenant1 are not scheduled to tenant2 nodes? Well, there are multiple ways to achieve this in K8s. You can use node selectors, node affinity, and taints & tolerations. By attaching appropriate taints or labels to your node pools, e.g. tenant=tenant1, tenant=tenant2, etc., you could achieve this easily.
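As a rough illustration of how labels and taints work together, the sketch below models the scheduling decision in a heavily simplified way. It is not the real scheduler: only exact-match tolerations and node selectors are considered, and all class and pool names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str = "NoSchedule"


@dataclass
class NodePool:
    name: str
    labels: dict
    taints: list = field(default_factory=list)


@dataclass
class Pod:
    name: str
    node_selector: dict = field(default_factory=dict)
    # Simplification: a toleration is modeled as the exact taint it tolerates.
    tolerations: list = field(default_factory=list)


def can_schedule(pod: Pod, pool: NodePool) -> bool:
    # Node selector: every requested label must be present on the node pool.
    labels_ok = all(pool.labels.get(k) == v for k, v in pod.node_selector.items())
    # Taints: every taint on the node pool must be tolerated by the pod.
    taints_ok = all(taint in pod.tolerations for taint in pool.taints)
    return labels_ok and taints_ok


pool1 = NodePool("pool1", {"tenant": "tenant1"}, [Taint("tenant", "tenant1")])
pool2 = NodePool("pool2", {"tenant": "tenant2"}, [Taint("tenant", "tenant2")])
tenant1_pod = Pod("app", {"tenant": "tenant1"}, [Taint("tenant", "tenant1")])
stray_pod = Pod("stray")  # no selector, no toleration

print(can_schedule(tenant1_pod, pool1))
print(can_schedule(tenant1_pod, pool2))
print(can_schedule(stray_pod, pool1))
```

The taint keeps foreign pods out of a tenant's node pool, while the node selector keeps the tenant's pods from landing elsewhere, which is why the two are usually combined.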

Great, but isn’t there something that bothers you here? What about elasticity and K8s’ built-in ability to re-schedule pods on healthy nodes in case of node failures? With these silos in place, you’d put your availability at risk. Well, this is indeed not ideal, but there are some possibilities:

- Define each node pool as zone-redundant to maximize each tenant’s resilience

- Define each node pool with a minimum of 2 or 3 nodes (one per zone) to maximize each tenant’s active/active availability

Because all nodes belonging to the same node pool are tainted/labelled the same way, K8s would still be able to re-schedule pods accordingly should a given node suffer from a hardware/software failure. Of course, such a setup would require many nodes (mind Kubenet’s 400-node limit) and would incur huge costs.
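A quick back-of-the-envelope calculation shows how fast the node count grows. The sketch assumes one node pool per tenant with one node per zone, and it ignores system node pools and any scale-out beyond the minimum; the tenant count of 50 is arbitrary.

```python
KUBENET_MAX_NODES = 400  # the Kubenet limit mentioned above


def nodes_required(tenants: int, zones: int = 3, nodes_per_zone: int = 1) -> int:
    """Minimum node count when each tenant gets its own zone-redundant pool."""
    return tenants * zones * nodes_per_zone


def max_tenants(zones: int = 3, nodes_per_zone: int = 1,
                limit: int = KUBENET_MAX_NODES) -> int:
    """Largest number of tenants that fits under the node limit."""
    return limit // (zones * nodes_per_zone)


print(nodes_required(50))  # 50 tenants x 3 zones x 1 node = 150 nodes
print(max_tenants())       # 400 // 3 = 133 tenants at most
```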

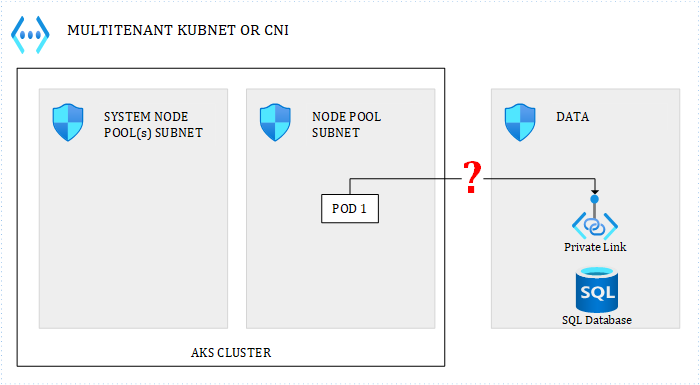

CNI or Kubenet, impact on Egress

What will a data service (or anything else) see when a POD calls it?

Figure 8 – Egress traffic

- with Kubenet, the NSG around the data subnet will see the underlying node IP

- with CNI, the NSG will see the POD IP

Remember that with Kubenet, for internal traffic, even if it involves multiple nodes on different subnets, NSGs will see the NAT IP. However, for traffic leaving the cluster, the underlying node IP is seen. With CNI, it makes no difference whether traffic targets an internal or external service.
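The behavior described in this article can be summarized in a small lookup function. It only restates the text above as code and does not query any real network; the function name and the sample IPs are hypothetical.

```python
def source_ip_seen_by_nsg(plugin: str, leaves_cluster: bool,
                          pod_ip: str, node_ip: str, nat_ip: str) -> str:
    """Return the source IP an NSG would see, as described in this article."""
    if plugin == "cni":
        # With CNI, pods have routable IPs, internal or external traffic alike.
        return pod_ip
    if plugin == "kubenet":
        # With Kubenet, traffic leaving the cluster shows the node IP,
        # while pod-to-pod traffic inside the cluster shows the NAT IP.
        return node_ip if leaves_cluster else nat_ip
    raise ValueError(f"unknown network plugin: {plugin}")


# Illustrative addresses only.
ips = {"pod_ip": "10.244.1.7", "node_ip": "10.1.0.4", "nat_ip": "10.244.1.1"}

print(source_ip_seen_by_nsg("kubenet", leaves_cluster=True, **ips))
print(source_ip_seen_by_nsg("kubenet", leaves_cluster=False, **ips))
print(source_ip_seen_by_nsg("cni", leaves_cluster=True, **ips))
```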

Combining best of both worlds

Beyond being multi-tenant or not, one setup which can be interesting is the following:

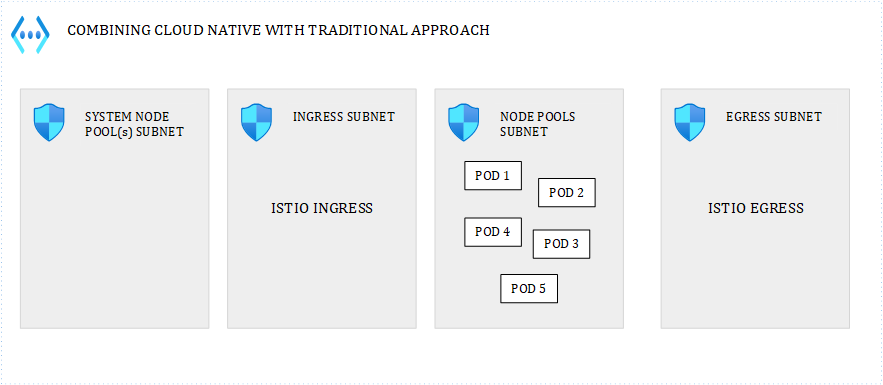

Figure 9 – Combining Cloud native with traditional approach

In this setup, you isolate key cluster features such as ingress & egress. For example, a dedicated egress subnet for the egress controller (Istio’s, for example) could help you enforce egress rules everywhere, by allowing only that subnet to get out of the cluster, while still giving flexibility to the ones managing the cluster.

Conclusion

The most cost-friendly and most cloud native approach to handling East-West traffic consists of relying on K8s built-in mechanisms and ecosystem solutions such as Service Meshes & Calico, to abstract away the infrastructure and leverage the full K8s potential in terms of elasticity, resilience and self-healing capabilities. However, we have seen some limits of that approach in some very specific scenarios. I would still advocate working the cloud native way, unless you really deal with highly sensitive workloads and/or true multi-tenancy.

South-North traffic is not a game changer, whether you go cloud native or not. You will keep using WAFs and Azure Firewall/NVAs to manage that type of network traffic. Bringing back NSGs (and potentially NVAs) for East-West traffic can be challenging, and the chosen network plugin dramatically impacts the NSG/UDR configuration. It can also harm other non-functional requirements such as high availability, scalability and maintainability.
