by Contributed | Dec 21, 2022 | Technology

This article is contributed. See the original author and article here.

I have already written a few articles on AKS, so feel free to have a look at them as I will not repeat what I’ve already written there. For the record, here are the links:

As a side note, this post is about how to harden ingress using Network Security Groups on top of internal network policies. Probably, 99% of the clusters worldwide are hardened using network policies only. If your organization is part of the remaining 1% and wants to harden clusters further, this post is for you.

In AKS, ingress is used to let external components/callers interact with resources that are inside the cluster. Ingress is handled by an ingress controller. There are many of them (Nginx, Istio, Traefik, AGIC, etc.), but whatever technology you choose, you will rely on a load balancer (LB) service to serve the traffic. When you instruct K8s to provision a service of type LoadBalancer, it tells the cloud provider (Microsoft in this case) to provision an LB. This LB might be public facing or internal. The recommended approach is to provision an internal LB that is proxied by a Web Application Firewall (WAF) (Front Door+PLS or Application Gateway, or even any third party WAF). API traffic is also often proxied by a more specific API gateway.

The default behavior of an LB service in K8s is to load balance the traffic twice:

- Firstly, at the level of the Azure LB, which will forward traffic to any healthy AKS node

- Secondly, at the level of K8s itself.

Because the Azure Load Balancer has no clue about the actual workload of the AKS cluster, it will blindly forward traffic to any “healthy” node, no matter what the pod density is. To prevent imbalanced load balancing, K8s adds its own bits to achieve an evenly distributed load that considers the overall cluster capacity. While this sounds like the perfect thing on earth, it comes with two major caveats:

- K8s might add an extra hop between the Azure Load Balancer and the actual target, thus impacting latency.

- No matter whether there is an extra hop or not, K8s will apply source network address translation (SNAT) to the incoming traffic, thus hiding the actual client IP. The ingress controller will therefore not be able to apply traffic restriction rules based on the client IP.

When you provision an LB service, for example, following the steps documented here, you will end up with a service whose externalTrafficPolicy is set to Cluster. The Cluster mode takes care of the imbalanced load balancing. It performs the extra K8s bits to optimize load balancing and comes with the caveats described earlier.

This problem is known and documented, so why am I blogging about this? Well, this is because the load balancing algorithm that you choose at the level of the LB service also has an impact on how you can restrict (or not) incoming traffic at the level of the Network Security Groups, and that is not something I could find guidance on.

If you do not want to restrict ingress traffic in any way, you can stop reading here. Otherwise, keep on reading.

Lab environment

Let us deep dive into the internals of AKS ingress with a concrete example.

For this, let us use the following lab:

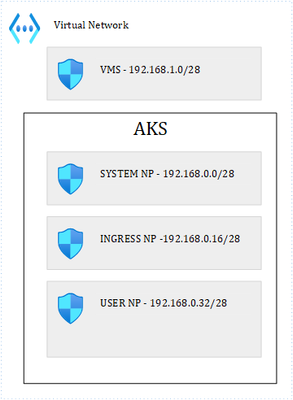

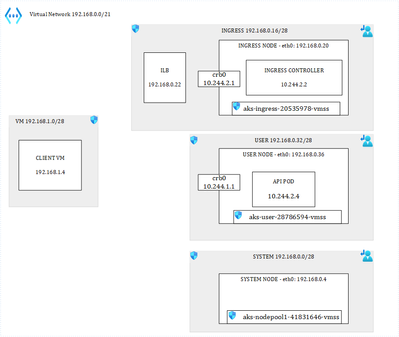

Here we have 1 VNET and 4 subnets. 3 subnets are used by the AKS cluster: 1 for the system node pool, 1 for ingress and the last one for user pods. I have labelled the Ingress and User node pools so that I can schedule pods accordingly. It is important to point out that our cluster is based on Kubenet, which adds a layer of complexity in terms of how networking works with K8s. The VM subnet holds our client VM, used to perform client calls to an API that is exposed through the ingress controller. Ultimately, we end up with this:

Load balancer with default behavior

I have provisioned the following LB service in AKS:

    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        meta.helm.sh/release-name: ingress-nginx
        meta.helm.sh/release-namespace: ingress
        service.beta.kubernetes.io/azure-load-balancer-health-probe-request-path: /healthz
        service.beta.kubernetes.io/azure-load-balancer-internal: "true"
        service.beta.kubernetes.io/azure-load-balancer-internal-subnet: ingress
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.5.1
        helm.sh/chart: ingress-nginx-4.4.0
      name: ingress-nginx-controller
      namespace: ingress
    spec:
      allocateLoadBalancerNodePorts: true
      externalTrafficPolicy: Cluster
      internalTrafficPolicy: Cluster
      ipFamilies:
      - IPv4
      ipFamilyPolicy: SingleStack
      loadBalancerIP: 192.168.0.22
      ports:
      - appProtocol: http
        name: http
        nodePort: 31880
        port: 80
        protocol: TCP
        targetPort: http
      selector:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
      sessionAffinity: None
      type: LoadBalancer

Notice the Azure-specific annotations at the top, telling Azure to provision an internal load balancer in a specific subnet, and with the /healthz endpoint for the health probe.

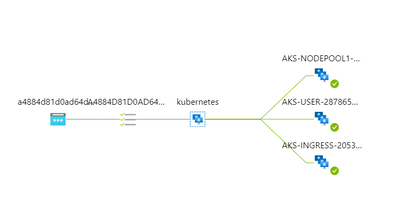

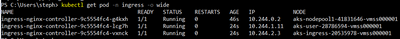

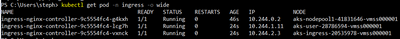

I’m only using HTTP, but the same logic applies to HTTPS. I have three nodes in the cluster:

I also have deployed one API and a few privileged containers, for later use:

Each privileged container runs on its corresponding node (one on the system node, one on the ingress node, and another one on the user node). The API runs on the user node. My ingress controller runs a single pod (for now) on the Ingress node:

Lastly, I deployed the following ingress rule:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: weather
    spec:
      ingressClassName: nginx
      rules:
      - host: demoapi.eyskens.corp
        http:
          paths:
          - path: /weatherforecast
            pathType: Prefix
            backend:
              service:
                name: simpleapi
                port:
                  number: 8080

Upon the deployment of the K8s LB service, Azure creates its own LB service, which after a few minutes, shows the status of the health probe checks:

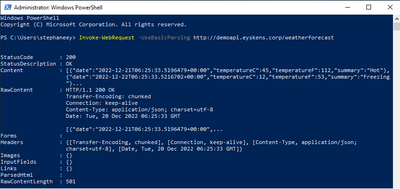

Each AKS node is being probed by the load balancer. So far so good, we can run our first API call from the client VM:

Our ingress controller sees the call and logs it:

    192.168.0.4 - - [20/Dec/2022:06:25:33 +0000] "GET /weatherforecast HTTP/1.1" 200 513 "-" "Mozilla/5.0 (Windows NT; Windows NT 10.0; en-US) WindowsPowerShell/5.1.17763.3770" 180 0.146 [default-simpleapi-8080] [] 10.244.1.5:8080 513 0.146 200 76e041740b70be9b36dd4fda52f97410

Everything works well until the security architect asks you how you control who can call this service. So, let us pretend that our client VM is the only one that is allowed to call the service. We could simply specify a rule at the level of the ingress controller itself. We know the IP of our VM, so let’s give it a try:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: weather
      annotations:
        nginx.ingress.kubernetes.io/whitelist-source-range: 192.168.1.4
    spec:
      ingressClassName: nginx
      rules:
      - host: demoapi.eyskens.corp
        http:
          paths:
          - path: /weatherforecast
            pathType: Prefix
            backend:
              service:
                name: simpleapi
                port:
                  number: 8080

The only change is the added whitelist-source-range annotation. Surprise: we now get a Forbidden response from our client VM:

Looking at the NGINX logs reveals the problem:

…*24932 access forbidden by rule, client: 10.244.2.1, …

The client is 10.244.2.1 (cbr0 of the ingress node) instead of our VM (192.168.1.4). Why is that? That is because of caveat number 2 described earlier. The client IP is always hidden by the SNAT operation because the service is in Cluster mode. Well, is the client always 10.244.2.1? No, just run a few hundred queries from the same client VM, and you will notice things like this:

    2022/12/20 07:03:45 [error] 98#98: *39317 access forbidden by rule, client: 10.244.2.1, server: demoapi.eyskens.corp, request: "GET /weatherforecast HTTP/1.1", host: "demoapi.eyskens.corp"
    2022/12/20 07:03:45 [error] 98#98: *39317 access forbidden by rule, client: 10.244.2.1, server: demoapi.eyskens.corp, request: "GET /weatherforecast HTTP/1.1", host: "demoapi.eyskens.corp"
    2022/12/20 07:03:48 [error] 98#98: *39361 access forbidden by rule, client: 192.168.0.36, server: demoapi.eyskens.corp, request: "GET /weatherforecast HTTP/1.1", host: "demoapi.eyskens.corp"

We can see our cbr0 again, but also 192.168.0.36, which is the IP of our user node. Because you want to understand how things work internally, you decide to analyze traffic from the ingress node itself. You basically exec into the privileged container that is running on that node:

    kubectl exec ingresspriv-5cfc6468c8-f8bjf -it -- /bin/sh

Then, you go onto the node itself, and run a tcpdump:

chroot /host

tcpdump -i cbr0|grep weatherforecast

Where you can indeed see that traffic is coming from everywhere:

    07:15:29.484576 IP aks-user-28786594-vmss000001.internal.cloudapp.net.35448 > 10.244.2.2.http: Flags [P.], seq 14689:14845, ack 26227, win 16411, length 156: HTTP: GET /weatherforecast HTTP/1.1
    07:15:29.499647 IP aks-user-28786594-vmss000001.internal.cloudapp.net.35448 > 10.244.2.2.http: Flags [P.], seq 14845:15001, ack 26506, win 16416, length 156: HTTP: GET /weatherforecast HTTP/1.1
    07:15:29.538515 IP aks-user-28786594-vmss000001.internal.cloudapp.net.35448 > 10.244.2.2.http: Flags [P.], seq 15001:15157, ack 26785, win 16414, length 156: HTTP: GET /weatherforecast HTTP/1.1
    07:15:29.552183 IP aks-user-28786594-vmss000001.internal.cloudapp.net.35448 > 10.244.2.2.http: Flags [P.], seq 15157:15313, ack 27064, win 16413, length 156: HTTP: GET /weatherforecast HTTP/1.1
    07:15:29.552630 IP aks-nodepool1-41831646-vmss000001.internal.cloudapp.net.24631 > 10.244.2.2.http: Flags [P.], seq 1:181, ack 1, win 16416, length 180: HTTP: GET /weatherforecast HTTP/1.1
    07:15:29.604062 IP aks-user-28786594-vmss000001.internal.cloudapp.net.35448 > 10.244.2.2.http: Flags [P.], seq 15313:15469, ack 27343, win 16412, length 156: HTTP: GET /weatherforecast HTTP/1.1
    07:15:29.620439 IP aks-user-28786594-vmss000001.internal.cloudapp.net.35448 > 10.244.2.2.http: Flags [P.], seq 15469:15625, ack 27622, win 16411, length 156: HTTP: GET /weatherforecast HTTP/1.1
    07:15:29.637675 IP aks-nodepool1-41831646-vmss000001.internal.cloudapp.net.24631 > 10.244.2.2.http: Flags [P.], seq 181:337, ack 280, win 16414, length 156: HTTP: GET /weatherforecast HTTP/1.1
    07:15:29.666067 IP aks-nodepool1-41831646-vmss000001.internal.cloudapp.net.24631

This is perfectly normal: in Cluster mode, every single node of the cluster is a potential client of the ingress pod. So, there is no way to filter traffic by client IP from the perspective of the ingress controller.
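To make the observation above concrete, here is a minimal Python sketch of what the ingress pod sees as the source address, and how a source-range allowlist then reacts. It is a simplified model built only from the addresses logged in this lab, not NGINX's or kube-proxy's actual implementation. The function names `source_seen_by_ingress` and `is_allowed` are hypothetical, and the Local policy (covered later in this post) is included only for contrast.

```python
import ipaddress

# Addresses observed in the lab of this post.
CLIENT_VM = "192.168.1.4"
INGRESS_CBR0 = "10.244.2.1"  # cbr0 gateway of the ingress node
USER_NODE = "192.168.0.36"   # IP of the user node


def source_seen_by_ingress(policy: str, lb_target: str) -> str:
    """Return the source IP the ingress pod observes (simplified model).

    lb_target is the node picked by the Azure LB: "ingress" (hosts the
    ingress pod) or "user" (does not host it).
    """
    if policy == "Local":
        # Only nodes hosting an ingress pod pass the health probe,
        # and the client IP is preserved (no SNAT).
        if lb_target != "ingress":
            raise ValueError("no local endpoint: the LB does not send traffic here")
        return CLIENT_VM
    if policy == "Cluster":
        # Traffic is SNATed: a same-node delivery shows cbr0, a forwarded
        # packet shows the IP of the forwarding node.
        return INGRESS_CBR0 if lb_target == "ingress" else USER_NODE
    raise ValueError(f"unknown policy: {policy}")


def is_allowed(source_ip: str, allowlist: list[str]) -> bool:
    """Check a source IP against CIDR entries, as a source-range rule would."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(cidr, strict=False) for cidr in allowlist)


ALLOWLIST = ["192.168.1.4/32"]  # only the client VM

for policy, target in [("Cluster", "ingress"), ("Cluster", "user"), ("Local", "ingress")]:
    seen = source_seen_by_ingress(policy, target)
    verdict = "allowed" if is_allowed(seen, ALLOWLIST) else "forbidden"
    print(f"{policy:8} via {target:8} -> source {seen:13} {verdict}")
```

In this model, both Cluster paths are rejected by the allowlist even though the real caller is the allowed VM, which matches the Forbidden responses in the logs above.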

Never mind, let us use the NSG instead of the ingress controller to restrict traffic to our VM only. That’s even better: let’s stop the illegal traffic sooner and not even hit our ingress controller. Naturally, you tell the security architect that you have a plan and come up with the following NSG rules for the ingress NSG:

You basically allow the client VM to talk to the LB, and some system ports, but you deny everything else. You tell the security architect to relax about the “Any” destination since none of the internal cluster IPs is routable or visible to the outside world anyway (Kubenet), so only what is exposed through an ingress rule will be “visible”. For the two other subnets, you decide to be even more restrictive. You basically use the same rules as shown above, except for rule 100. You’re pretty sure it should be ok, and that no one else on earth but your client VM will ever be able to consume that API. Because you are a thoughtful person, you still decide to enable the NSG flow logs to make sure you capture traffic, in case you need to troubleshoot things later on.

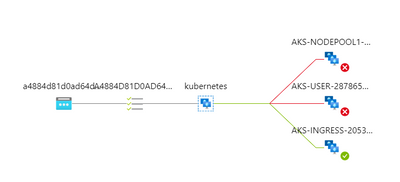

So, you retry and you realize that a few queries go through while others are blocked, until every call from the client VM is going through again. What could be the culprit? Your first reaction is to look at the Azure Load Balancer itself, and you’ll notice that it is not in great shape anymore:

Why is that? It is because of the NSG that you defined at the level of the Ingress subnet, which broke it entirely, although you had authorized the AzureLoadBalancer tag. So, you decide to remove the NSG, wait a few minutes, and inspect again what comes into the CBR0 of the Ingress node, to figure it out from the inside:

tcpdump -i cbr0

This is what comes out of the dump:

    08:36:46.835356 IP aks-user-28786594-vmss000001.internal.cloudapp.net.54259 > 10.244.2.2.http: Flags [P.], seq 199:395, ack 125, win 1024, length 196: HTTP: GET /healthz HTTP/1.1
    08:36:48.382720 IP aks-nodepool1-41831646-vmss000001.internal.cloudapp.net.8461 > 10.244.2.2.http: Flags [P.], seq 197:391, ack 125, win 16384, length 194: HTTP: GET /healthz HTTP/1.1

You see that all nodes are directly probing the /healthz endpoint on the ingress node, so the NSG rules described earlier are too restrictive. You start realizing that ruling AKS traffic with NSGs is a risky business. The non-ingress nodes are considered unhealthy by the Azure LB because they are unable to probe the ingress node. The ingress node is still considered healthy because it can probe itself and the AzureLoadBalancer tag is allowed. That explains why, ultimately, everything goes through: only the ingress node receives traffic from the LB, and its NSG already allows the client VM. If you leave the cluster as is, you have basically broken the built-in LB algorithm of K8s that protects against imbalanced traffic, and the other nodes will keep trying (and failing) to probe the ingress one. Because you want to optimize load balancing and avoid further issues, you add this rule to your ingress NSG:

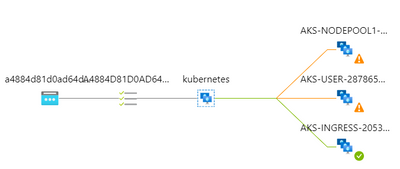

Where you basically allow every node to probe on port 80 (remember we only focus on HTTP). You can safely put Any as destination since the destination is the IP of the Ingress Pod, which you can’t guess in advance. After a few minutes, the Azure LB seems to be in a better shape:

And yet a few minutes later, it’s all green again.

Now that you have restored the ingress, you’re back to square one: some requests go through but not all. First, you check the NSG flow logs of the User node pool and realize that flow tuples such as this one are blocked by the NSG:

    ["1671521722,192.168.1.4,192.168.0.22,50326,80,T,I,D,B,,,,"]

Here you see that our client VM is trying to hit port 80 of our Azure Load Balancer. Wait, didn’t we say that the Cluster mode hides the original client IP? Yes, that’s right, but only from the inside, not from the outside. The NSGs still see the original IP. OK, but why do I find these logs in the NSG of the user node? Well, because each node of the cluster can potentially forward traffic to the destination. So, it boils down to the following conclusion when using the Cluster mode:

- All nodes must be granted inbound access to the ingress node(s) for the health probes.

- Because each node is potentially forwarding the traffic itself, you must allow the callers (our VM in this case, an Application Gateway, an APIM in reality) in each subnet.

- You must accept de-facto cluster-wide node-level lateral movements to these endpoints/ports.
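When you go through NSG flow logs to reach this kind of conclusion, it helps to decode the tuples rather than read them by eye. The following is a minimal sketch that parses the blocked tuple shown above, assuming the version 2 flow tuple layout (timestamp, source IP, destination IP, source port, destination port, protocol, direction, decision, state, then packet and byte counters). The `FlowTuple` class and `parse_flow_tuple` function are hypothetical names used for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowTuple:
    timestamp: int   # Unix epoch seconds
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str    # "T" = TCP, "U" = UDP
    direction: str   # "I" = inbound, "O" = outbound
    decision: str    # "A" = allowed, "D" = denied
    state: str       # "B" = begin, "C" = continuing, "E" = end


def parse_flow_tuple(raw: str) -> FlowTuple:
    """Parse the first nine fields of a comma-separated flow tuple.

    The trailing packet/byte counters are ignored here; they are empty
    for flows in the "B" (begin) state, as in the example from this post.
    """
    fields = raw.split(",")
    if len(fields) < 9:
        raise ValueError(f"expected at least 9 fields, got {len(fields)}")
    ts, src, dst, sport, dport, proto, direction, decision, state = fields[:9]
    return FlowTuple(int(ts), src, dst, int(sport), int(dport),
                     proto, direction, decision, state)


flow = parse_flow_tuple("1671521722,192.168.1.4,192.168.0.22,50326,80,T,I,D,B,,,,")
if flow.decision == "D":
    print(f"DENIED {flow.src_ip}:{flow.src_port} -> {flow.dst_ip}:{flow.dst_port}")
```

Run against the flow logs of each subnet's NSG, a helper like this makes it easy to spot that the denied source is the original client VM, not a node.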

But what if the security architect cannot live with that? Let us explore the second option.

LB with externalTrafficPolicy set to Local.

The only thing to do is to switch the service’s externalTrafficPolicy to Local:

    Type:                     LoadBalancer
    IP Family Policy:         SingleStack
    IP Families:              IPv4
    IP:                       10.0.58.7
    IPs:                      10.0.58.7
    IP:                       192.168.0.22
    LoadBalancer Ingress:     192.168.0.22
    Port:                     http  80/TCP
    TargetPort:               http/TCP
    NodePort:                 http  31880/TCP
    Endpoints:                10.244.2.2:80
    Session Affinity:         None
    External Traffic Policy:  Local

After a minute, you’ll notice this at the level of the Azure Load Balancer:

Don’t worry, this is the expected behavior. When you switch the K8s service to Local, K8s stops adding its own bits to the load balancing algorithm. The only way to prevent the Azure Load Balancer from sending traffic to a non-ingress-enabled node, is to mark these nodes as unhealthy. Any probe to the healthz endpoint will return a 503 answer from non-ingress-enabled nodes (example with the user node):

    kubectl exec userpriv-5d879699c8-b2cww -it -- /bin/sh
    chroot /host
    root@aks-user-28786594-vmss000001:/# curl -v http://localhost:31049/healthz
    *   Trying 127.0.0.1...
    * TCP_NODELAY set
    * Connected to localhost (127.0.0.1) port 31049 (#0)
    > GET /healthz HTTP/1.1
    > Host: localhost:31049
    > User-Agent: curl/7.58.0
    > Accept: */*
    >
    < HTTP/1.1 503 Service Unavailable
    < Content-Type: application/json
    < X-Content-Type-Options: nosniff
    < Date: Tue, 20 Dec 2022 11:57:34 GMT
    < Content-Length: 105
    <
    {
        "service": {
            "namespace": "ingress",
            "name": "ingress-nginx-controller"
        },
        "localEndpoints": 0
    * Connection #0 to host localhost left intact
    }root@aks-user-28786594-vmss000001:/#

The same probe returns a 200 on every ingress-enabled node. But what exactly is an ingress-enabled node? It’s simply a node that is hosting an ingress controller pod. So, with this, you can control the ingress entry point much better, and you can also directly use a whitelist-source-range annotation. This time, the ingress controller will see the real client IP since there is no more SNAT happening.
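As an illustration of how the probe result maps to load balancer rotation, here is a minimal sketch that interprets the health check response shown above. The user node payload is the one returned in this lab. The ingress node payload is an assumption (a 200 with one local endpoint, since this lab runs a single ingress pod), and `node_receives_traffic` is a hypothetical helper name.

```python
import json


def node_receives_traffic(status_code: int, body: str) -> bool:
    """Return True when the probe result keeps the node in LB rotation.

    In Local mode, a node is healthy only when it hosts at least one
    endpoint of the service, reported as localEndpoints in the payload.
    """
    payload = json.loads(body)
    return status_code == 200 and payload.get("localEndpoints", 0) > 0


# Payload returned by the user node in this post (HTTP 503).
USER_NODE_BODY = """
{
  "service": {"namespace": "ingress", "name": "ingress-nginx-controller"},
  "localEndpoints": 0
}
"""

# Assumed payload for the ingress node (HTTP 200, one ingress pod).
INGRESS_NODE_BODY = """
{
  "service": {"namespace": "ingress", "name": "ingress-nginx-controller"},
  "localEndpoints": 1
}
"""

print("user node in rotation:   ", node_receives_traffic(503, USER_NODE_BODY))
print("ingress node in rotation:", node_receives_traffic(200, INGRESS_NODE_BODY))
```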

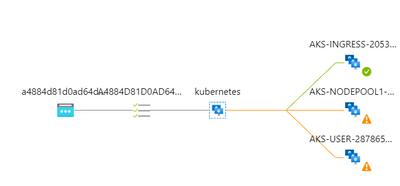

You still must pay attention to where your ingress controller pods are running. Remember that I have dedicated one node pool in its own subnet for ingress and I have forced the pod scheduling on that node pool through a nodeSelector. Should I omit this, and scale my replicas to 3, I could end up with the following situation where AKS would schedule pods across nodes of different node pools:

This would lead to a situation where every node hosting an instance of the ingress controller, whatever its node pool, would show up as healthy again at the level of the Azure Load Balancer. Therefore, you’d be hitting the NSGs of the subnets hosting those nodes. So, if you want to concentrate the ingress traffic on a single entry point, you need to:

- Dedicate a node pool with 1 or more nodes (more for HA) and enable Availability Zones for your ingress pods to run on.

- Make sure to force the pod scheduling of the ingress controller onto that node pool.

- Make sure you whitelist your clients (APIM, Application Gateway, etc.) at the level of the NSG of the ingress subnet. You do not need to whitelist other nodes because in Local mode, nodes do not probe the ingress node anymore. If you run tcpdump -i cbr0 on the ingress node, you’ll see only traffic from the node’s own pod CIDR.

Conclusion

Whether you are using Kubenet or CNI, ruling AKS ingress with Network Security Groups is not something you can improvise. Using the native load balancing algorithm (Cluster), you should end up with optimized load balancing, but you must accept the fact that you have to live with lateral movements across the entire cluster. You can of course try to compensate with additional network policies, but OS-level traffic would still be allowed (mind the container escape risk). Using the Local mode, you can control the incoming traffic much better, at the level of the NSGs as well as at the level of the ingress controller itself. You can run any number of ingress controller pods, as long as you schedule them on identified nodes. The downside is that you might end up with imbalanced traffic. This is a trade-off!

by Scott Muniz | Dec 19, 2022 | Security, Technology

| pacparser -- pacparser | A vulnerability classified as problematic was found in pacparser up to 1.3.x. Affected by this vulnerability is the function pacparser_find_proxy of the file src/pacparser.c. The manipulation of the argument url leads to buffer overflow. Attacking locally is a requirement. Upgrading to version 1.4.0 is able to address this issue. The name of the patch is 853e8f45607cb07b877ffd270c63dbcdd5201ad9. It is recommended to upgrade the affected component. The associated identifier of this vulnerability is VDB-215443. | 2022-12-13 | not yet calculated | CVE-2019-25078 MISC MISC MISC MISC |
| zhimengzhe -- ibarn | File upload vulnerability in function upload in action/Core.class.php in zhimengzhe iBarn 1.5 allows remote attackers to run arbitrary code via avatar upload to index.php. | 2022-12-15 | not yet calculated | CVE-2020-20588 MISC |
| netgate -- multiple_products | Cross Site Scripting (XSS) vulnerability in Netgate pfSense 2.4.4-Release-p3 and Netgate ACME package 0.6.3 allows remote attackers to run arbitrary code via the RootFolder field to acme_certificate_edit.php page of the ACME package. | 2022-12-15 | not yet calculated | CVE-2020-21219 MISC MISC |
| easywebpack-cli -- easywebpack-cli | Directory Traversal vulnerability in easywebpack-cli before 4.5.2 allows attackers to obtain sensitive information via crafted GET request. | 2022-12-15 | not yet calculated | CVE-2020-24855 MISC |
| ibm -- spectrum_protect_plus | IBM Spectrum Protect Plus 10.1.0 through 10.1.12 discloses sensitive information due to unencrypted data being used in the communication flow between Spectrum Protect Plus vSnap and its agents. An attacker could obtain information using man in the middle techniques. IBM X-Force ID: 182106. | 2022-12-14 | not yet calculated | CVE-2020-4497 MISC MISC |
| apache -- zeppelin | The improper Input Validation vulnerability in "Move folder to Trash" feature of Apache Zeppelin allows an attacker to delete arbitrary files. This issue affects Apache Zeppelin version 0.9.0 and prior versions. | 2022-12-16 | not yet calculated | CVE-2021-28655 MISC |
| sourcecodester -- online_grading_system | A SQL injection vulnerability in Sourcecodester Online Grading System 1.0 allows remote attackers to execute arbitrary SQL commands via the uname parameter. | 2022-12-16 | not yet calculated | CVE-2021-31650 MISC |
| inikulin -- replicator | A deserialization issue discovered in inikulin replicator before 1.0.4 allows remote attackers to run arbitrary code via the fromSerializable function in TypedArray object. | 2022-12-15 | not yet calculated | CVE-2021-33420 MISC MISC MISC MISC |
| hp -- omen_gaming_hub_sdk | Potential security vulnerabilities have been identified in an OMEN Gaming Hub SDK package which may allow escalation of privilege and/or denial of service. HP is releasing software updates to mitigate the potential vulnerabilities. | 2022-12-12 | not yet calculated | CVE-2021-3437 MISC |
| solarwinds -- serv-u_ftp_server | Common encryption key appears to be used across all deployed instances of Serv-U FTP Server. Because of this an encrypted value that is exposed to an attacker can be simply recovered to plaintext. | 2022-12-16 | not yet calculated | CVE-2021-35252 MISC MISC MISC |
| feehi_cms -- feehi_cms | Cross Site Scripting (XSS) vulnerability in Feehi CMS thru 2.1.1 allows attackers to run arbitrary code via the user name field of the login page. | 2022-12-15 | not yet calculated | CVE-2021-36572 MISC |
| hp -- workstation_bios | A potential security vulnerability has been identified in certain HP Workstation BIOS (UEFI firmware) which may allow arbitrary code execution. HP is releasing firmware mitigations for the potential vulnerability. | 2022-12-12 | not yet calculated | CVE-2021-3661 MISC |
| ruoyi -- ruoyi | Deserialization issue discovered in Ruoyi before 4.6.1 allows remote attackers to run arbitrary code via weak cipher in Shiro framework. | 2022-12-16 | not yet calculated | CVE-2021-38241 MISC |
| hp -- multiple_products | A potential security vulnerability has been identified in OMEN Gaming Hub and in HP Command Center which may allow escalation of privilege and/or denial of service. HP has released software updates to mitigate the potential vulnerability. | 2022-12-12 | not yet calculated | CVE-2021-3919 MISC |
| hp -- multiple_products | Certain HP Print products and Digital Sending products may be vulnerable to potential remote code execution and buffer overflow with use of Link-Local Multicast Name Resolution or LLMNR. | 2022-12-12 | not yet calculated | CVE-2021-3942 MISC |
| seacms -- seacms | An issue was discovered in /Upload/admin/admin_notify.php in Seacms 11.4 allows attackers to execute arbitrary php code via the notify1 parameter when the action parameter equals set. | 2022-12-15 | not yet calculated | CVE-2021-39426 MISC |
| 188jianzhan -- 188jianzhan | Cross site scripting vulnerability in 188Jianzhan 2.10 allows attackers to execute arbitrary code via the username parameter to /admin/reg.php. | 2022-12-15 | not yet calculated | CVE-2021-39427 MISC |
| eyoucms -- eyoucms | Cross Site Scripting (XSS) vulnerability in Users.php in eyoucms 1.5.4 allows remote attackers to run arbitrary code and gain escalated privilege via the filename for edit_users_head_pic. | 2022-12-15 | not yet calculated | CVE-2021-39428 MISC |
| rsfirewall -- rsfirewall | RSFirewall tries to identify the original IP address by looking at different HTTP headers. A bypass is possible due to the way it is implemented. | 2022-12-15 | not yet calculated | CVE-2021-4226 MISC |
| chbrown -- rfc6902 | A vulnerability classified as problematic has been found in chbrown rfc6902. This affects an unknown part of the file pointer.ts. The manipulation leads to improperly controlled modification of object prototype attributes ('prototype pollution'). The exploit has been disclosed to the public and may be used. The name of the patch is c006ce9faa43d31edb34924f1df7b79c137096cf. It is recommended to apply a patch to fix this issue. The associated identifier of this vulnerability is VDB-215883. | 2022-12-15 | not yet calculated | CVE-2021-4245 N/A N/A N/A |
| roxlukas -- lmeve | A vulnerability was found in roxlukas LMeve and classified as critical. Affected by this issue is some unknown functionality of the component Login Page. The manipulation of the argument X-Forwarded-For leads to sql injection. The attack may be launched remotely. The name of the patch is 29e1ead3bb1c1fad53b77dfc14534496421c5b5d. It is recommended to apply a patch to fix this issue. The identifier of this vulnerability is VDB-216176. | 2022-12-17 | not yet calculated | CVE-2021-4246 N/A N/A |
| hp -- jumpstart | A potential security vulnerability has been identified in the HP Jumpstart software, which might allow escalation of privilege. HP is recommending that customers uninstall HP Jumpstart and use myHP software. | 2022-12-12 | not yet calculated | CVE-2022-1038 MISC |

| google — android |

In multiple locations of NfcService.java, there is a possible disclosure of NFC tags due to a confused deputy. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-199291025 |

2022-12-16 |

not yet calculated |

CVE-2022-20199

MISC |

| google — android |

In onCreate of WifiDppConfiguratorActivity.java, there is a possible way for a guest user to add a WiFi configuration due to a missing permission check. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-224772890 |

2022-12-16 |

not yet calculated |

CVE-2022-20503

MISC |

| google — android |

In multiple locations of DreamManagerService.java, there is a missing permission check. This could lead to local escalation of privilege and dismissal of system dialogs with User execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-225878553 |

2022-12-16 |

not yet calculated |

CVE-2022-20504

MISC |

| google — android |

In openFile of CallLogProvider.java, there is a possible permission bypass due to a path traversal error. This could lead to local escalation of privilege with User execution privileges needed. User interaction is not needed for exploitationProduct: AndroidVersions: Android-13Android ID: A-225981754 |

2022-12-16 |

not yet calculated |

CVE-2022-20505

MISC |

| google — android |

In onCreate of WifiDialogActivity.java, there is a missing permission check. This could lead to local escalation of privilege from a guest user with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-226133034 |

2022-12-16 |

not yet calculated |

CVE-2022-20506

MISC |

| google — android |

In onMulticastListUpdateNotificationReceived of UwbEventManager.java, there is a possible arbitrary code execution due to a missing bounds check. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-246649179 |

2022-12-16 |

not yet calculated |

CVE-2022-20507

MISC |

| google — android |

In onAttach of ConfigureWifiSettings.java, there is a possible way for a guest user to change WiFi settings due to a permissions bypass. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-218679614 |

2022-12-16 |

not yet calculated |

CVE-2022-20508

MISC |

| google — android |

In mapGrantorDescr of MessageQueueBase.h, there is a possible out of bounds write due to a missing bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-244713317 |

2022-12-16 |

not yet calculated |

CVE-2022-20509

MISC |

| google — android |

In getNearbyNotificationStreamingPolicy of DevicePolicyManagerService.java, there is a possible way to learn about the notification streaming policy of other users due to a permissions bypass. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-235822336 |

2022-12-16 |

not yet calculated |

CVE-2022-20510

MISC |

| google — android |

In getNearbyAppStreamingPolicy of DevicePolicyManagerService.java, there is a missing permission check. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-235821829 |

2022-12-16 |

not yet calculated |

CVE-2022-20511

MISC |

| google — android |

In navigateUpTo of Task.java, there is a possible way to launch an intent handler with a mismatched intent due to improper input validation. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-238602879 |

2022-12-16 |

not yet calculated |

CVE-2022-20512

MISC |

| google — android |

In decrypt_1_2 of CryptoPlugin.cpp, there is a possible out of bounds read due to a missing bounds check. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-244569759 |

2022-12-16 |

not yet calculated |

CVE-2022-20513

MISC |

| google — android |

In acquireFabricatedOverlayIterator, nextFabricatedOverlayInfos, and releaseFabricatedOverlayIterator of Idmap2Service.cpp, there is a possible out of bounds write due to a use after free. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-245727875 |

2022-12-16 |

not yet calculated |

CVE-2022-20514

MISC |

| google — android |

In onPreferenceClick of AccountTypePreferenceLoader.java, there is a possible way to retrieve protected files from the Settings app due to a confused deputy. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-220733496 |

2022-12-16 |

not yet calculated |

CVE-2022-20515

MISC |

| google — android |

In rw_t3t_act_handle_check_ndef_rsp of rw_t3t.cc, there is a possible out of bounds read due to an integer overflow. This could lead to remote information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-224002331 |

2022-12-16 |

not yet calculated |

CVE-2022-20516

MISC |

| google — android |

In getMessagesByPhoneNumber of MmsSmsProvider.java, there is a possible access to restricted tables due to SQL injection. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-224769956 |

2022-12-16 |

not yet calculated |

CVE-2022-20517

MISC |

| google — android |

In query of MmsSmsProvider.java, there is a possible access to restricted tables due to SQL injection. This could lead to local information disclosure with User execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-224770203 |

2022-12-16 |

not yet calculated |

CVE-2022-20518

MISC |

| google — android |

In onCreate of AddAppNetworksActivity.java, there is a possible way for a guest user to configure WiFi networks due to a missing permission check. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-224772678 |

2022-12-16 |

not yet calculated |

CVE-2022-20519

MISC |

| google — android |

In onCreate of various files, there is a possible tapjacking/overlay attack. This could lead to local escalation of privilege or denial of service with User execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-227203202 |

2022-12-16 |

not yet calculated |

CVE-2022-20520

MISC |

| google — android |

In sdpu_find_most_specific_service_uuid of sdp_utils.cc, there is a possible way to crash Bluetooth due to a missing null check. This could lead to local denial of service with no additional execution privileges needed. User interaction is needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-227203684 |

2022-12-16 |

not yet calculated |

CVE-2022-20521

MISC |

| google — android |

In getSlice of ProviderModelSlice.java, there is a missing permission check. This could lead to local escalation of privilege from the guest user with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-227470877 |

2022-12-16 |

not yet calculated |

CVE-2022-20522

MISC |

| google — android |

In IncFs_GetFilledRangesStartingFrom of incfs.cpp, there is a possible out of bounds read due to a missing bounds check. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-228222508 |

2022-12-16 |

not yet calculated |

CVE-2022-20523

MISC |

| google — android |

In compose of Vibrator.cpp, there is a possible arbitrary code execution due to a use after free. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-228523213 |

2022-12-16 |

not yet calculated |

CVE-2022-20524

MISC |

| google — android |

In enforceVisualVoicemailPackage of PhoneInterfaceManager.java, there is a possible leak of visual voicemail package name due to a permissions bypass. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-229742768 |

2022-12-16 |

not yet calculated |

CVE-2022-20525

MISC |

| google — android |

In CanvasContext::draw of CanvasContext.cpp, there is a possible out of bounds write due to a missing bounds check. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-229742774 |

2022-12-16 |

not yet calculated |

CVE-2022-20526

MISC |

| google — android |

In HalCoreCallback of halcore.cc, there is a possible out of bounds read due to a missing bounds check. This could lead to local information disclosure from the NFC firmware with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-229994861 |

2022-12-16 |

not yet calculated |

CVE-2022-20527

MISC |

| google — android |

In findParam of HevcUtils.cpp, there is a possible out of bounds read due to a missing bounds check. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-230172711 |

2022-12-16 |

not yet calculated |

CVE-2022-20528

MISC |

| google — android |

In multiple locations of WifiDialogActivity.java, there is a possible limited lockscreen bypass due to a logic error in the code. This could lead to local escalation of privilege in wifi settings with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-231583603 |

2022-12-16 |

not yet calculated |

CVE-2022-20529

MISC |

| google — android |

In strings.xml, there is a possible permission bypass due to a misleading string. This could lead to remote information disclosure of call logs with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-231585645 |

2022-12-16 |

not yet calculated |

CVE-2022-20530

MISC |

| google — android |

In placeCall of TelecomManager.java, there is a possible way to determine whether an app is installed, without query permissions, due to side channel information disclosure. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-231988638 |

2022-12-16 |

not yet calculated |

CVE-2022-20531

MISC |

| google — android |

In getSlice of WifiSlice.java, there is a possible way to connect a new WiFi network from the guest mode due to a missing permission check. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-232798363 |

2022-12-16 |

not yet calculated |

CVE-2022-20533

MISC |

| google — android |

In registerLocalOnlyHotspotSoftApCallback of WifiManager.java, there is a possible way to determine whether an app is installed, without query permissions, due to side channel information disclosure. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-233605242 |

2022-12-16 |

not yet calculated |

CVE-2022-20535

MISC |

| google — android |

In registerBroadcastReceiver of RcsService.java, there is a possible way to change preferred TTY mode due to a missing permission check. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-235100180 |

2022-12-16 |

not yet calculated |

CVE-2022-20536

MISC |

| google — android |

In createDialog of WifiScanModeActivity.java, there is a possible way for a Guest user to enable location-sensitive settings due to a missing permission check. This could lead to local escalation of privilege from the Guest user with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-235601169 |

2022-12-16 |

not yet calculated |

CVE-2022-20537

MISC |

| google — android |

In getSmsRoleHolder of RoleService.java, there is a possible way to determine whether an app is installed, without query permissions, due to side channel information disclosure. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-235601770 |

2022-12-16 |

not yet calculated |

CVE-2022-20538

MISC |

| google — android |

In parameterToHal of Effect.cpp, there is a possible out of bounds write due to a missing bounds check. This could lead to local escalation of privilege in the audio server with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-237291425 |

2022-12-16 |

not yet calculated |

CVE-2022-20539

MISC |

| google — android |

In SurfaceFlinger::doDump of SurfaceFlinger.cpp, there is a possible arbitrary code execution due to a use after free. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-237291506 |

2022-12-16 |

not yet calculated |

CVE-2022-20540

MISC |

| google — android |

In phNxpNciHal_ioctl of phNxpNciHal.cc, there is a possible out of bounds read due to a missing bounds check. This could lead to local information disclosure with System execution privileges needed. User interaction is needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-238083126 |

2022-12-16 |

not yet calculated |

CVE-2022-20541

MISC |

| google — android |

In multiple locations, there is a possible display crash loop due to improper input validation. This could lead to local denial of service with system execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-238178261 |

2022-12-16 |

not yet calculated |

CVE-2022-20543

MISC |

| google — android |

In onOptionsItemSelected of ManageApplications.java, there is a possible bypass of profile owner restrictions due to a missing permission check. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-238745070 |

2022-12-16 |

not yet calculated |

CVE-2022-20544

MISC |

| google — android |

In bindArtworkAndColors of MediaControlPanel.java, there is a possible way to crash the phone due to improper input validation. This could lead to remote denial of service with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-239368697 |

2022-12-16 |

not yet calculated |

CVE-2022-20545

MISC |

| google — android |

In getCurrentConfigImpl of Effect.cpp, there is a possible out of bounds write due to a missing bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-240266798 |

2022-12-16 |

not yet calculated |

CVE-2022-20546

MISC |

| google — android |

In multiple functions of AdapterService.java, there is a possible way to manipulate Bluetooth state due to a missing permission check. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-240301753 |

2022-12-16 |

not yet calculated |

CVE-2022-20547

MISC |

| google — android |

In setParameter of EqualizerEffect.cpp, there is a possible out of bounds write due to improper input validation. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-240919398 |

2022-12-16 |

not yet calculated |

CVE-2022-20548

MISC |

| google — android |

In authToken2AidlVec of KeyMintUtils.cpp, there is a possible out of bounds write due to an incorrect bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-242702451 |

2022-12-16 |

not yet calculated |

CVE-2022-20549

MISC |

| google — android |

In multiple locations, there is a possible way to launch arbitrary protected activities due to a confused deputy. This could lead to local escalation of privilege with User execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-242845514 |

2022-12-16 |

not yet calculated |

CVE-2022-20550

MISC |

| google — android |

In btif_a2dp_sink_command_ready of btif_a2dp_sink.cc, there is a possible out of bounds read due to a use after free. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-243922806 |

2022-12-16 |

not yet calculated |

CVE-2022-20552

MISC |

| google — android |

In onCreate of LogAccessDialogActivity.java, there is a possible way to bypass a permission check due to a tapjacking/overlay attack. This could lead to local escalation of privilege with System execution privileges needed. User interaction is needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-244155265 |

2022-12-16 |

not yet calculated |

CVE-2022-20553

MISC |

| google — android |

In removeEventHubDevice of InputDevice.cpp, there is a possible OOB read due to a use after free. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-245770596 |

2022-12-16 |

not yet calculated |

CVE-2022-20554

MISC |

| google — android |

In ufdt_get_node_by_path_len of ufdt_convert.c, there is a possible out of bounds read due to a missing bounds check. This could lead to local information disclosure with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-246194233 |

2022-12-16 |

not yet calculated |

CVE-2022-20555

MISC |

| google — android |

In launchConfigNewNetworkFragment of NetworkProviderSettings.java, there is a possible way for the guest user to add a new WiFi network due to a missing permission check. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-246301667 |

2022-12-16 |

not yet calculated |

CVE-2022-20556

MISC |

| google — android |

In MessageQueueBase of MessageQueueBase.h, there is a possible out of bounds read due to a missing bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-247092734 |

2022-12-16 |

not yet calculated |

CVE-2022-20557

MISC |

| google — android |

In registerReceivers of DeviceCapabilityListener.java, there is a possible way to change preferred TTY mode due to a permissions bypass. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-236264289 |

2022-12-16 |

not yet calculated |

CVE-2022-20558

MISC |

| google — android |

In revokeOwnPermissionsOnKill of PermissionManager.java, there is a possible way to determine whether an app is installed, without query permissions, due to side channel information disclosure. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android-13Android ID: A-219739967 |

2022-12-16 |

not yet calculated |

CVE-2022-20559

MISC |

| google — android |

Product: AndroidVersions: Android kernelAndroid ID: A-212623833References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20560

MISC |

| google — android |

In TBD of aud_hal_tunnel.c, there is a possible memory corruption due to a use after free. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-222162870References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20561

MISC |

| google — android |

In various functions of ap_input_processor.c, there is a possible way to record audio during a phone call due to a logic error in the code. This could lead to local information disclosure with User execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-231630423References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20562

MISC |

| google — android |

In TBD of ufdt_convert, there is a possible out of bounds read due to memory corruption. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-242067561References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20563

MISC |

| google — android |

In _ufdt_output_strtab_to_fdt of ufdt_convert.c, there is a possible out of bounds write due to an incorrect bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-243798789References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20564

MISC |

| google — android |

In l2cap_chan_put of l2cap_core, there is a possible use after free due to improper locking. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-165329981References: Upstream kernel |

2022-12-16 |

not yet calculated |

CVE-2022-20566

MISC |

| google — android |

In pppol2tp_create of l2tp_ppp.c, there is a possible use after free due to a race condition. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-186777253References: Upstream kernel |

2022-12-16 |

not yet calculated |

CVE-2022-20567

MISC |

| google — android |

In (TBD) of (TBD), there is a possible way to corrupt kernel memory due to a use after free. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-220738351References: Upstream kernel |

2022-12-16 |

not yet calculated |

CVE-2022-20568

MISC |

| google — android |

In thermal_cooling_device_stats_update of thermal_sysfs.c, there is a possible out of bounds write due to improper input validation. This could lead to local escalation of privilege in the kernel with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-229258234References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20569

MISC |

| google — android |

Product: AndroidVersions: Android kernelAndroid ID: A-230660904References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20570

MISC |

| google — android |

In extract_metadata of dm-android-verity.c, there is a possible way to corrupt kernel memory due to a use after free. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-234030265References: Upstream kernel |

2022-12-16 |

not yet calculated |

CVE-2022-20571

MISC |

| google — android |

In verity_target of dm-verity-target.c, there is a possible way to modify read-only files due to a missing permission check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-234475629References: Upstream kernel |

2022-12-16 |

not yet calculated |

CVE-2022-20572

MISC |

| google — android |

In sec_sysmmu_info of drm_fw.c, there is a possible out of bounds read due to improper input validation. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-237582191References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20574

MISC |

| google — android |

In read_ppmpu_info of drm_fw.c, there is a possible out of bounds read due to an incorrect bounds check. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-237585040References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20575

MISC |

| google — android |

In externalOnRequest of rilapplication.cpp, there is a possible out of bounds write due to a missing bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-239701761References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20576

MISC |

| google — android |

In OemSimAuthRequest::encode of wlandata.cpp, there is a possible out of bounds write due to a missing bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-241762281References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20577

MISC |

| google — android |

In RadioImpl::setGsmBroadcastConfig of ril_service_legacy.cpp, there is a possible stack clash leading to memory corruption. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-243509749References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20578

MISC |

| google — android |

In RadioImpl::setCdmaBroadcastConfig of ril_service_legacy.cpp, there is a possible stack clash leading to memory corruption. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-243510139References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20579

MISC |

| google — android |

In ufdt_do_one_fixup of ufdt_overlay.c, there is a possible out of bounds write due to an incorrect bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-243629453References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20580

MISC |

| google — android |

In the Pixel camera driver, there is a possible use after free due to a logic error in the code. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-245916120References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20581

MISC |

| google — android |

In ppmp_unprotect_mfcfw_buf of drm_fw.c, there is a possible out of bounds write due to improper input validation. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-233645166References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20582

MISC |

| google — android |

In ppmp_unprotect_mfcfw_buf of drm_fw.c, there is a possible out of bounds write due to improper input validation. This could lead to local escalation of privilege in S-EL1 with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-234859169References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20583

MISC |

| google — android |

In page_number of shared_mem.c, there is a possible code execution in secure world due to improper input validation. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-238366009References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20584

MISC |

| google — android |

In valid_out_of_special_sec_dram_addr of drm_access_control.c, there is a possible EoP due to improper input validation. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-238716781References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20585

MISC |

| google — android |

In valid_out_of_special_sec_dram_addr of drm_access_control.c, there is a possible EoP due to improper input validation. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-238718854References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20586

MISC |

| google — android |

In ppmp_validate_wsm of drm_fw.c, there is a possible EoP due to improper input validation. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-238720411References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20587

MISC |

| google — android |

In sysmmu_map of sysmmu.c, there is a possible EoP due to a precondition check failure. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-238785915References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20588

MISC |

| google — android |

In valid_va_secbuf_check of drm_access_control.c, there is a possible information disclosure due to improper input validation. This could lead to local information disclosure with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-238841928References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20589

MISC |

| google — android |

In valid_va_sec_mfc_check of drm_access_control.c, there is a possible information disclosure due to improper input validation. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-238932493References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20590

MISC |

| google — android |

In ppmpu_set of ppmpu.c, there is a possible information disclosure due to a logic error in the code. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-238939706References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20591

MISC |

| google — android |

In ppmp_validate_secbuf of drm_fw.c, there is a possible information disclosure due to improper input validation. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-238976908References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20592

MISC |

| google — android |

In pop_descriptor_string of BufferDescriptor.h, there is a possible out of bounds read due to a missing bounds check. This could lead to local information disclosure with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-239415809References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20593

MISC |

| google — android |

In updateStart of WirelessCharger.cpp, there is a possible out of bounds write due to a missing bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-239567689References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20594

MISC |

| google — android |

In getWpcAuthChallengeResponse of WirelessCharger.cpp, there is a possible out of bounds read due to a missing bounds check. This could lead to local information disclosure with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-239700137References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20595

MISC |

| google — android |

In sendChunk of WirelessCharger.cpp, there is a possible out of bounds write due to a missing bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-239700400References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20596

MISC |

| google — android |

In ppmpu_set of ppmpu.c, there is a possible EoP due to an integer overflow. This could lead to local escalation of privilege with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-243480506References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20597

MISC |

| google — android |

In sec_media_protect of media.c, there is a possible EoP due to an integer overflow. This could lead to local escalation of privilege of secure mode MFC Core with no additional execution privileges needed. User interaction is not needed for exploitation.Product: AndroidVersions: Android kernelAndroid ID: A-242357514References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20598

MISC |

| google — android |

In Pixel firmware, there is a possible exposure of sensitive memory due to a missing bounds check. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation. Product: Android. Versions: Android kernel. Android ID: A-242332706. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20599

MISC |

| google — android |

In TBD of TBD, there is a possible out of bounds write due to memory corruption. This could lead to local escalation of privilege with System execution privileges needed. User interaction is not needed for exploitation. Product: Android. Versions: Android kernel. Android ID: A-239847859. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20600

MISC |

| google — android |

Product: Android. Versions: Android kernel. Android ID: A-204541506. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20601

MISC |

| google — android |

Product: Android. Versions: Android kernel. Android ID: A-211081867. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20602

MISC |

| google — android |

In SetDecompContextDb of RohcDeCompContextOfRbId.cpp, there is a possible out of bounds write due to a missing bounds check. This could lead to remote code execution with System execution privileges needed. User interaction is not needed for exploitation. Product: Android. Versions: Android kernel. Android ID: A-219265339. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20603

MISC |

| google — android |

In SAECOMM_SetDcnIdForPlmn of SAECOMM_DbManagement.c, there is a possible out of bounds read due to a missing bounds check. This could lead to remote information disclosure from a single device with no additional execution privileges needed. User interaction is not needed for exploitation. Product: Android. Versions: Android kernel. Android ID: A-230463606. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20604

MISC |

| google — android |

In SAECOMM_CopyBufferBytes of SAECOMM_Utility.c, there is a possible out of bounds read due to an incorrect bounds check. This could lead to remote information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation. Product: Android. Versions: Android kernel. Android ID: A-231722405. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20605

MISC |

| google — android |

In SAEMM_MiningCodecTableWithMsgIE of SAEMM_RadioMessageCodec.c, there is a possible out of bounds read due to a missing bounds check. This could lead to remote information disclosure with System execution privileges needed. User interaction is not needed for exploitation. Product: Android. Versions: Android kernel. Android ID: A-233230674. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20606

MISC |

| google — android |

In the Pixel cellular firmware, there is a possible out of bounds write due to a missing bounds check. This could lead to remote code execution with LTE authentication needed. User interaction is not needed for exploitation. Product: Android. Versions: Android kernel. Android ID: A-238914868. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20607

MISC |

| google — android |

In Pixel cellular firmware, there is a possible out of bounds read due to an incorrect bounds check. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation. Product: Android. Versions: Android kernel. Android ID: A-239239246. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20608

MISC |

| google — android |

In Pixel cellular firmware, there is a possible out of bounds read due to a missing bounds check. This could lead to local information disclosure with no additional execution privileges needed. User interaction is not needed for exploitation. Product: Android. Versions: Android kernel. Android ID: A-239240808. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20609

MISC |

| google — android |

In cellular modem firmware, there is a possible out of bounds read due to a missing bounds check. This could lead to remote code execution with LTE authentication needed. User interaction is not needed for exploitation. Product: Android. Versions: Android kernel. Android ID: A-240462530. References: N/A |

2022-12-16 |

not yet calculated |

CVE-2022-20610

MISC |

| qualcomm — snapdragon |

Memory corruption in Core due to improper configuration in boot remapper. |

2022-12-15 |

not yet calculated |

CVE-2022-22063

MISC |

| codex-team — editor.js |

Editor.js is a block-style editor with clean JSON output. Versions prior to 2.26.0 are vulnerable to Code Injection via pasted input. The processHTML method passes pasted input into wrapper’s innerHTML. This issue is patched in version 2.26.0. |

2022-12-15 |

not yet calculated |

CVE-2022-23474

MISC

MISC |

| bigbluebutton — bigbluebutton |

BigBlueButton is an open source web conferencing system. Versions prior to 2.4-rc-6 are vulnerable to Insertion of Sensitive Information Into Sent Data. The moderators-only webcams lock setting is not enforced on the backend, which allows an attacker to subscribe to viewers’ webcams, even when the lock setting is applied. (The required streamId was being sent to all users even with lock setting applied). This issue is fixed in version 2.4-rc-6. There are no workarounds. |

2022-12-17 |

not yet calculated |

CVE-2022-23488

MISC

MISC |

| bigbluebutton — bigbluebutton |

BigBlueButton is an open source web conferencing system. Versions prior to 2.4.0 expose sensitive information to Unauthorized Actors. This issue affects meetings with polls, where the attacker is a meeting participant. Subscribing to the current-poll collection does not update the client UI, but does give the attacker access to the contents of the collection, which include the individual poll responses. This issue is patched in version 2.4.0. There are no workarounds. |

2022-12-16 |

not yet calculated |

CVE-2022-23490

MISC

MISC |

| informalsystems — tendermint-rs |

Tendermint is a high-performance blockchain consensus engine for Byzantine fault tolerant applications. Versions prior to 0.28.0 contain a potential attack via Improper Verification of Cryptographic Signature, affecting anyone using the tendermint-light-client and related packages to perform light client verification (e.g. IBC-rs, Hermes). The light client does not check that the chain IDs of the trusted and untrusted headers match, resulting in a possible attack vector where someone who finds a header from an untrusted chain that satisfies all other verification conditions (e.g. enough overlapping validator signatures) could fool a light client. The attack vector is currently theoretical, and no proof-of-concept exists yet to exploit it on live networks. This issue is patched in version 0.28.0. There are no workarounds. |

2022-12-15 |

not yet calculated |

CVE-2022-23507

MISC |

| flavorjones — loofah |

Loofah is a general library for manipulating and transforming HTML/XML documents and fragments, built on top of Nokogiri. Loofah < 2.19.1 contains an inefficient regular expression that is susceptible to excessive backtracking when attempting to sanitize certain SVG attributes. This may lead to a denial of service through CPU resource consumption. This issue is patched in version 2.19.1. |

2022-12-14 |

not yet calculated |

CVE-2022-23514

MISC

MISC |

| flavorjones — loofah |

Loofah is a general library for manipulating and transforming HTML/XML documents and fragments, built on top of Nokogiri. Loofah >= 2.1.0, < 2.19.1 is vulnerable to cross-site scripting via the image/svg+xml media type in data URIs. This issue is patched in version 2.19.1. |

2022-12-14 |

not yet calculated |

CVE-2022-23515

MISC

MISC

MISC |

| flavorjones — loofah |

Loofah is a general library for manipulating and transforming HTML/XML documents and fragments, built on top of Nokogiri. Loofah >= 2.2.0, < 2.19.1 uses recursion for sanitizing CDATA sections, making it susceptible to stack exhaustion and raising a SystemStackError exception. This may lead to a denial of service through CPU resource consumption. This issue is patched in version 2.19.1. Users who are unable to upgrade may be able to mitigate this vulnerability by limiting the length of the strings that are sanitized. |

2022-12-14 |

not yet calculated |

CVE-2022-23516

MISC |

| helm — helm |

Helm is a tool for managing Charts, pre-configured Kubernetes resources. Versions prior to 3.10.3 are subject to Uncontrolled Resource Consumption, resulting in Denial of Service. Input to functions in the _strvals_ package can cause a stack overflow. In Go, a stack overflow cannot be recovered from. Applications that use functions from the _strvals_ package in the Helm SDK can suffer a Denial of Service when that input causes the package to panic. This issue has been patched in 3.10.3. SDK users can validate that strings supplied by users won’t create large arrays causing significant memory usage before passing them to the _strvals_ functions. |

2022-12-15 |

not yet calculated |

CVE-2022-23524

MISC |

| helm — helm |

Helm is a tool for managing Charts, pre-configured Kubernetes resources. Versions prior to 3.10.3 are subject to NULL Pointer Dereference in the _repo_ package. The _repo_ package contains a handler that processes the index file of a repository. For example, the Helm client adds references to chart repositories where charts are managed. The _repo_ package parses the index file of the repository and loads it into structures Go can work with. Some index files can cause array data structures to be created causing a memory violation. Applications that use the _repo_ package in the Helm SDK to parse an index file can suffer a Denial of Service when that input causes a panic that cannot be recovered from. The Helm Client will panic with an index file that causes a memory violation panic. Helm is not a long running service so the panic will not affect future uses of the Helm client. This issue has been patched in 3.10.3. SDK users can validate that index files are correctly formatted before passing them to the _repo_ functions. |

2022-12-15 |

not yet calculated |

CVE-2022-23525

MISC

MISC |

| helm — helm |