by Contributed | Aug 22, 2022 | Technology

This article is contributed. See the original author and article here.

We are excited to announce that you can now block suspicious entities when submitting emails, URLs, or attachments for Microsoft to review. In the Microsoft 365 Defender portal (https://security.microsoft.com), security operations teams can now block the sender or domain, URL, or attachment while submitting suspicious emails, URLs, or attachments from the admin submission flyout panel. You no longer need to switch to the Tenant allow/block list page to block a suspicious entity.

Let’s look at how it works!

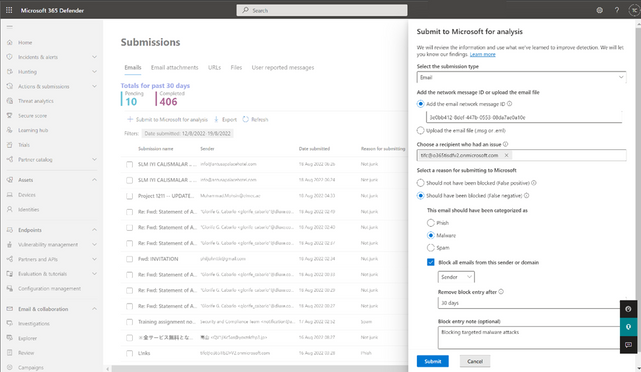

Blocking email addresses or domains through email admin submission flyout

From the Emails tab under the submissions portal in Actions & submissions in the Microsoft 365 Defender portal, select Submit to Microsoft for analysis to report a phishing, malware, or spam email. You can choose to block the sender or domain and provide a block expiry date and optional notes. Make sure that you have the required permissions before submitting to Microsoft.

To learn more about blocking email addresses or domains in the Tenant allow/block list, see Allow or block emails using the Tenant Allow/Block List.

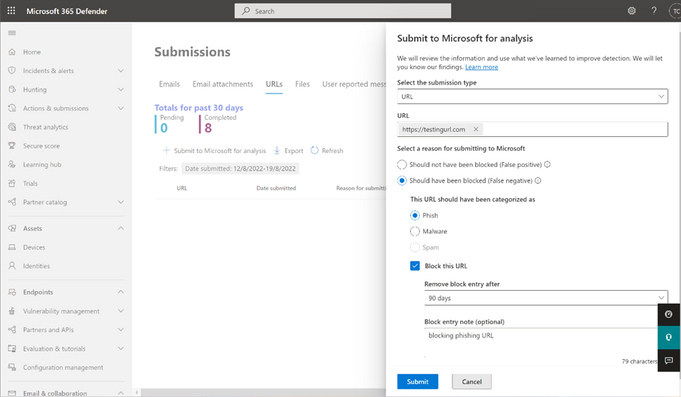

Blocking URLs through the URL admin submission flyout

From the URLs tab under the submissions portal in Actions & submissions in the Microsoft 365 Defender portal, select Submit to Microsoft for analysis to report a phishing or malware URL. You can choose to block the URL and provide a block expiry date and optional notes. Make sure that you have the required permissions before submitting to Microsoft.

To learn more about blocking URLs in the Tenant allow/block list, see Allow or block URLs using the Tenant Allow/Block List.

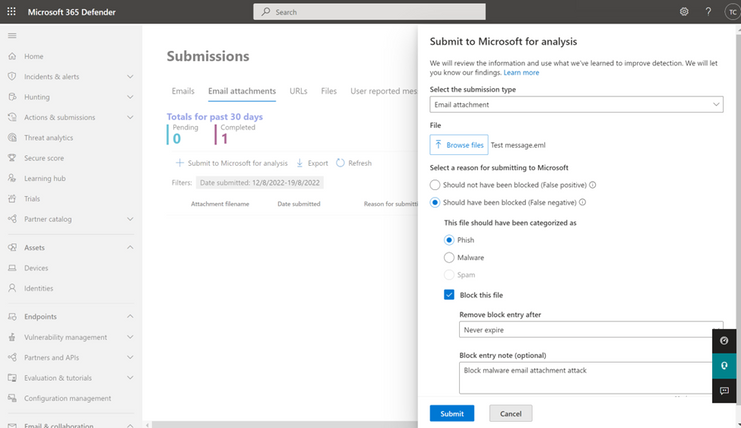

Blocking email attachments through the email attachment admin submission flyout

From the Email attachments tab under the submissions portal in Actions & submissions in the Microsoft 365 Defender portal, select Submit to Microsoft for analysis to report a phishing or malware email attachment. You can choose to block the email attachment and provide a block expiry date and optional notes. Make sure that you have the required permissions before submitting to Microsoft.

To learn more about blocking email attachments in the Tenant allow/block list, see Allow or block files using the Tenant Allow/Block List.

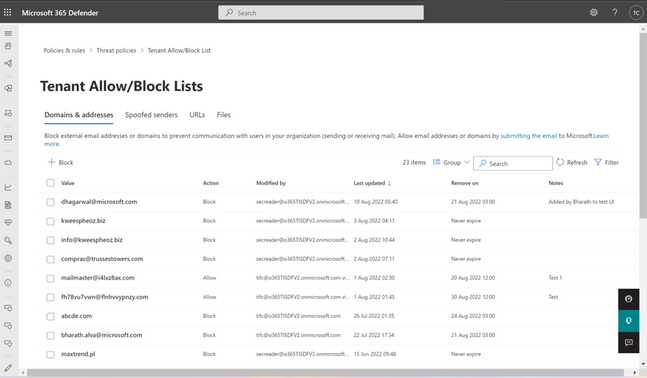

Viewing blocked entities from the admin submission flyout

All blocked entities created from the admin submission panel for URLs, email attachments, and emails show up in the Tenant allow/block list under the URL, file, and Domains & addresses tabs, respectively.

To learn more about Tenant allow/block list, see View entries in the Tenant Allow/Block List.

All other aspects of the submission experience, such as submitting a sample for analysis and viewing the results, remain unchanged.

Let us know what you think!

The experience will start rolling out by the end of August. You can expect to see these changes over the next few weeks. The new submissions experience will be available to customers with Exchange Online Protection, Defender for Office 365 Plan 1, Defender for Office 365 Plan 2, including those with Office 365 E5, Microsoft 365 E5, or Microsoft 365 E5 Security licenses.

We’re excited for you to try out these new capabilities. Let us know what you think using the Defender for Office 365 forum.

by Contributed | Aug 19, 2022 | Technology

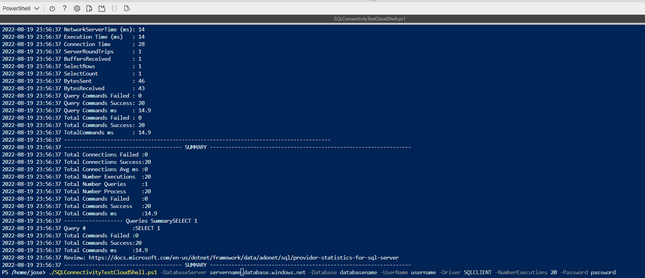

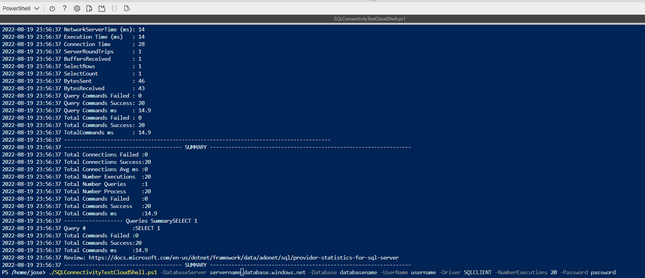

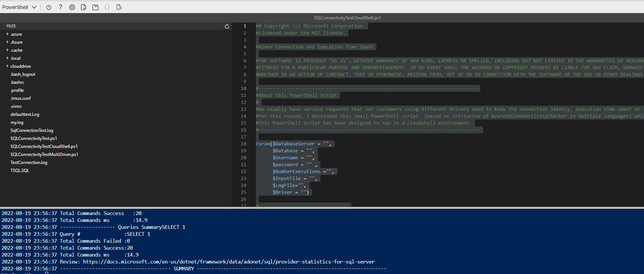

We often receive service requests from customers who, using different drivers, need to measure connection latency or execution time, or to validate a connection. This article provides example code for performing these operations.

We received feedback asking for a way to run the test directly in CloudShell, without deploying or having a virtual machine on Azure. In this URL you can find the version for CloudShell.

Unfortunately, this CloudShell version supports only SQLCLIENT and ODBC, but the remaining features are the same.

To execute the PowerShell script in CloudShell:

- Upload the file to your $home folder using the upload/download file option in CloudShell.

- Run the PowerShell script.

- The script creates a log file in the same folder with the results of the execution. You do not need to modify the PowerShell script; just execute it as follows:

./SQLConnectivityTestCloudShell.ps1 -DatabaseServer servername.database.windows.net -Database databasename -UserName username -Driver SQLCLIENT -NumberExecutions 20 -Password password

Example of how to execute the PowerShell script

Example of how to edit the file, if needed
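
To illustrate the kind of measurement described above (repeating an operation a fixed number of times and recording how long each run takes), here is a minimal sketch in Python. It is not the PowerShell script's implementation: `measure_latency` and `fake_connect_and_query` are hypothetical names, and the stand-in operation only sleeps instead of opening a real database connection.

```python
import statistics
import time
from typing import Callable


def measure_latency(operation: Callable[[], None], executions: int = 20) -> dict:
    """Run `operation` repeatedly and summarize elapsed times in milliseconds."""
    if executions < 1:
        raise ValueError("executions must be at least 1")
    timings_ms = []
    for _ in range(executions):
        start = time.perf_counter()
        operation()  # in a real test: open a connection and run a trivial query
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "executions": len(timings_ms),
        "min_ms": min(timings_ms),
        "avg_ms": statistics.mean(timings_ms),
        "max_ms": max(timings_ms),
    }


def fake_connect_and_query() -> None:
    """Hypothetical stand-in for connecting to a server and running a query."""
    time.sleep(0.001)


if __name__ == "__main__":
    # 20 runs, mirroring the -NumberExecutions 20 value in the command above.
    print(measure_latency(fake_connect_and_query, executions=20))
```

Replacing `fake_connect_and_query` with a function that opens a connection through the driver under test would give per-driver numbers that can be compared side by side.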

Enjoy!

by Contributed | Aug 19, 2022 | Technology

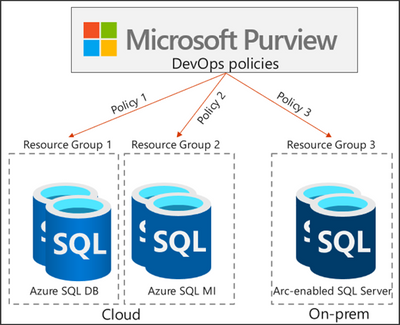

Microsoft Purview access policies enable customers to manage access to different data systems across their entire data estate, all from a central location in the cloud. These policies are access grants that can be created through Microsoft Purview Studio, avoiding the need for code. They dictate whether a set of AAD principals (users, groups, etc.) should be allowed or denied a specific type of access (e.g., Read, Modify) to a data source or a data asset within it. These policies get communicated to and get natively enforced by the data source.

DevOps policies are a special type of Microsoft Purview access policies. They leverage Microsoft Purview’s understanding of the customer’s data estate to simplify access provisioning for IT operations and security auditing functions. Access to system metadata is crucial for DBAs and other DevOps users to do their jobs. That access can be granted and revoked efficiently and at scale from Microsoft Purview. Microsoft Purview DevOps policies support a couple of permissions for SQL-type data sources: SQL Performance Monitoring and SQL Security Auditing. They can be configured on individual data sources, resource groups, and subscriptions. Beyond the UI, they also support an API that can be called from other DevOps tools.

A sample scenario of how this works

Bob and Alice are DevOps users at their company. Given their role, they need to log in to dozens of Azure SQL logical servers to monitor their performance so that critical DevOps processes don’t break. Their manager, Mateo, creates an AAD group and includes Alice and Bob. He then uses Microsoft Purview DevOps policies (Policy 1 in the diagram below) to grant this AAD group access to the Resource Group (Resource Group 1) that hosts the Azure SQL servers.

Here are the benefits:

- Mateo does not have to create local logins in each logical server

- The policies from Microsoft Purview improve security by helping limit local privileged access. In the scenario, Mateo grants only the minimum access that Bob and Alice need to monitor performance.

- When new Azure SQL servers are added to the Resource Group, Mateo does not need to update the policies in Microsoft Purview for them to be effective on the new logical servers.

- If Alice or Bob leaves the job and is backfilled, Mateo just updates the AAD group, without having to make any changes to the servers or to the policies he created in Microsoft Purview.

- At any point in time, Mateo or the company’s auditor can see what access has been granted directly in Microsoft Purview Studio.
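
The reasoning behind these benefits can be made concrete with a small conceptual model in Python. This is only an illustrative sketch, not the Microsoft Purview API or its enforcement logic: the class names, the server names, the group name, and the replacement user "Carol" are all hypothetical. The point it shows is that a policy scoped to a resource group and granted to a group keeps working when servers or members change, without editing the policy itself.

```python
from dataclasses import dataclass, field


@dataclass
class ResourceGroup:
    name: str
    servers: set = field(default_factory=set)


@dataclass
class Group:
    name: str
    members: set = field(default_factory=set)


@dataclass
class DevOpsPolicy:
    """Grants one permission to a group over everything in a resource group."""
    group: Group
    scope: ResourceGroup
    permission: str

    def allows(self, user: str, server: str, permission: str) -> bool:
        # Membership and scope are looked up at check time, so later changes
        # to the group or the resource group take effect automatically.
        return (
            permission == self.permission
            and user in self.group.members
            and server in self.scope.servers
        )


# Hypothetical names for the scenario: Resource Group 1 hosts the SQL servers.
rg1 = ResourceGroup("Resource Group 1", {"sql-server-a", "sql-server-b"})
devops = Group("devops-monitoring", {"Alice", "Bob"})
policy1 = DevOpsPolicy(devops, rg1, "SQL Performance Monitoring")

# A new server is added to the resource group: the policy is not touched.
rg1.servers.add("sql-server-c")

# Bob leaves and is backfilled by Carol: only the group is updated.
devops.members.discard("Bob")
devops.members.add("Carol")

print(policy1.allows("Alice", "sql-server-c", "SQL Performance Monitoring"))
print(policy1.allows("Bob", "sql-server-a", "SQL Performance Monitoring"))
```

In this model the policy object is created once and never edited afterwards, which mirrors why Mateo only has to maintain the AAD group and the resource group contents.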

Access provisioning for SQL Performance Monitoring and SQL Security Auditing is already supported from Microsoft Purview in public preview. We are now adding a new simplified UX and API for these types of policies. If you are interested in test-driving it, you can sign up for the private preview of this new feature through this link: https://forms.office.com/r/fycGz59PU9

by Contributed | Aug 19, 2022 | Technology

We are excited to announce that the iOS and Android apps for Microsoft To Do (a tool for managing and sharing tasks and lists) are rolling out to GCC (Government Community Cloud) users. The rollout begins in late August and is planned to be completed by mid-September. This launch adds the mobile apps to the web and Outlook functionality that GCC accounts already have today.

Using To Do on iOS and Android means that users can bring the power and flexibility of task list management wherever they go, with an intuitive UI closely resembling the experience users know from the web app:

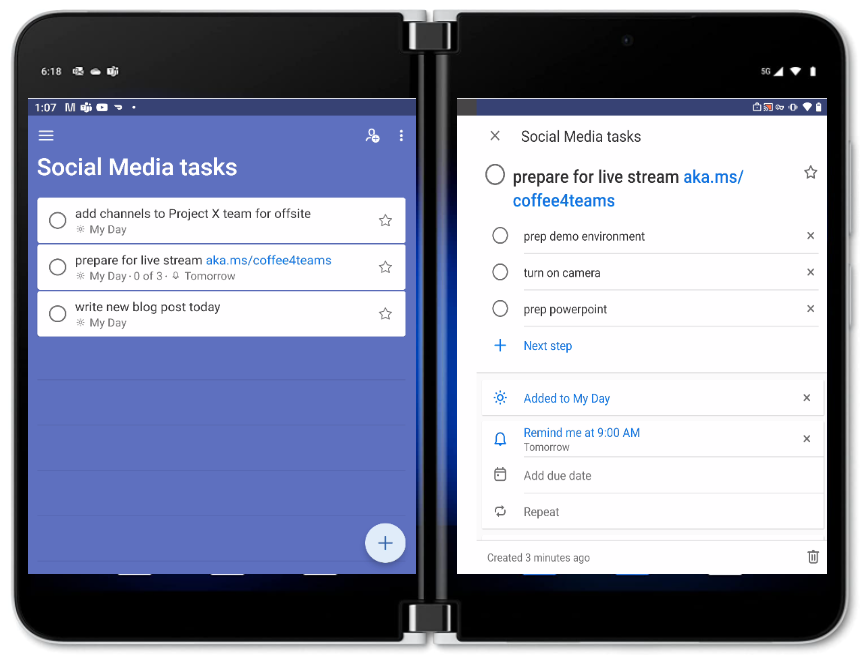

Surface Duo users will also appreciate the two-screen experience of the Android app, which lets them view their list of tasks on one screen while viewing the details of a particular task on the other.

No admin action is needed to enable To Do mobile app access for GCC tenants. Features that remain unavailable in GCC at this time (consistent with the limitations of To Do Web) include shared list notifications and the ability to share task lists outside your organization.

For more information, check out the support page.

For more info from me on collaboration & teamwork, follow me at TeamworkCowbell (blog | Twitter | YouTube) or at ricardo303, SharePointCowbell, and LinkedIn.

by Contributed | Aug 18, 2022 | Technology

Takeaways

- Tietoevry created the Connect Academy, which reskills existing employees, including those whose current skills are based on legacy technologies.

- Microsoft Learn content, particularly Azure instructor-led training and certifications, is at the core of the Connect Academy curriculum. Extensive resources, like hands-on labs, complement the experiential learning in the program.

- A holistic process at Tietoevry integrates business strategy, resourcing needs, executive sponsorship, and individual career development.

- The program plans for business needs and places employees immediately into their new roles—while still training—providing mentorship, on-the-job learning, and billable assignments.

- Reskilling internal employees is more efficient than trying to secure new external resources with appropriate skills from the current highly competitive job market.

- Employees are valued and retained, and they’re able to plan the next phases of their careers, including ongoing learning and certification. They join approximately 2,500 employees company-wide who have already earned 3,600 Azure certifications as part of the overall Tietoevry skilling goal.

Finnish company Tietoevry is the largest IT services provider in the Nordics, with 24,000 employees worldwide serving customers in 90 countries and regions. As the company’s customers move to the cloud, the skills required of Tietoevry teams are changing accordingly. Hiring new talent is difficult and expensive—current IT skills are in demand everywhere. At the same time, the company has a base of great employees with years of productive experience in legacy technologies.

Many organizations face similar situations, but Tietoevry created an extraordinarily holistic plan for reskilling that serves the company’s needs, values and retains its employees, and builds robust teams to serve its customers. To address the challenge, in 2021, Tietoevry created its Connect Academy, a comprehensive program that focuses on reskilling internal employees on Microsoft technologies, with simultaneous new job placement within the company.

A ‘Keep Learning’ culture

One of the pillars of the Tietoevry culture is “Keep Learning,” which means that the company and its employees seek to continue developing their skills—now and in the future—and to stay curious and relevant. “Our employees do value learning very highly, and that’s why we want to support them in this,” says Lelde Saleniece, Tietoevry’s People Development Consultant. “The [Connect] Academy is one great example of how we do that, how we support them—not just in upskilling but in reskilling, as well. We care for them and their knowledge, and we want them to stay here.”

The Connect Academy begins with an analysis of business needs and the resources required to meet them. After the analysis, the candidate selection phase starts and everyone can apply. Line managers nominate candidates, and then, in the “handshake” step, candidates go through a career-planning stage, join their new teams, and begin training—including job shadowing and assignments. Microsoft Learn training resources are key, notes Lelde. “Microsoft has been very helpful, and definitely, without the support and collaboration, this wouldn’t be as good a program as it is.”

Microsoft Learn resources are at the core of the experiential learning journey, reports Lead Cloud Advisor Bjørn Sigurd Hove, who is a mentor for learners in the program. The certifications that learners earn demonstrate mastery, he observes, “But in my view, the road to that certification is maybe more important.” In addition to instructor-led training, he explains, “I stressed that they should use the study guides, which have links to resources on each and every subject.” Bjørn also points learners to the Microsoft Learn labs for hands-on experience. “The certification is just the end goal of this journey, but the journey is most important.”

The training component is intense—two months of dedicated time for classwork, labs, exam preparation, and certification exams. Raja Ali, a Tietoevry employee who completed the Connect Academy, used all the offered Microsoft Learn self-study resources. “The real deal was when you were sitting down and working on Microsoft Learn,” he recalls. “The coolest thing was the sandbox experience you get in Microsoft Learn, when you’re reading something and they ask you to actually do the activity right there.”

The many benefits of this process start with meeting the business needs, ensuring that “we are not training people just for the sake of the training, but we are training people to the actual roles and actual jobs,” notes Ari Lehtovaara, Head of the Connect Academy. “The main idea is to give them basic knowledge so that they can fit into the team and start working.”

Tietoevry Line Manager Niklas Klasén welcomes reskilled employees onto his team. He points out that selecting candidates for the academy is important, given how hard it is to recruit suitable talent from outside the company. Their background is important. “For me,” Niklas emphasizes, “being able to combine the skills that people already have from their long IT careers with the new cloud experience, that has been a very valuable concept for us.”

At every step of the two-month program, the Connect Academy assists employees and supports them in their new roles. They join their new teams immediately when training starts, with a line manager and mentor to help them.

Partnering to build skills and careers in the cloud space

Legacy skills can be helpful to the team, Niklas explains. “Let’s say, for example, they worked with networking on-prem or in our own datacenters. They are now subject matter experts when it comes to connecting that datacenter to the cloud and are a key resource when it comes to migrating from the datacenter, as well.”

With Microsoft Learn partnering to provide technical product knowledge at the core of the Connect Academy, Tietoevry has created a thoughtfully strategic way forward for the company and, most of all, for its employees. As Bjørn points out, “That program gives our colleagues a new career in the cloud space, and I’m really proud of that.”

For more details on Tietoevry’s learning journey, check out the following Microsoft Customer Tech Talks episode.