
Admittedly, Dapr is still in its early days: it is in Alpha at the time of writing. Still, I see enormous potential for it in the coming years. As I often say, Microservices architectures bring many benefits but also suffer from drawbacks, one of which is increased technical complexity. Dapr aims to reduce that complexity, or at least to make it easier to develop assets in a Microservices way.

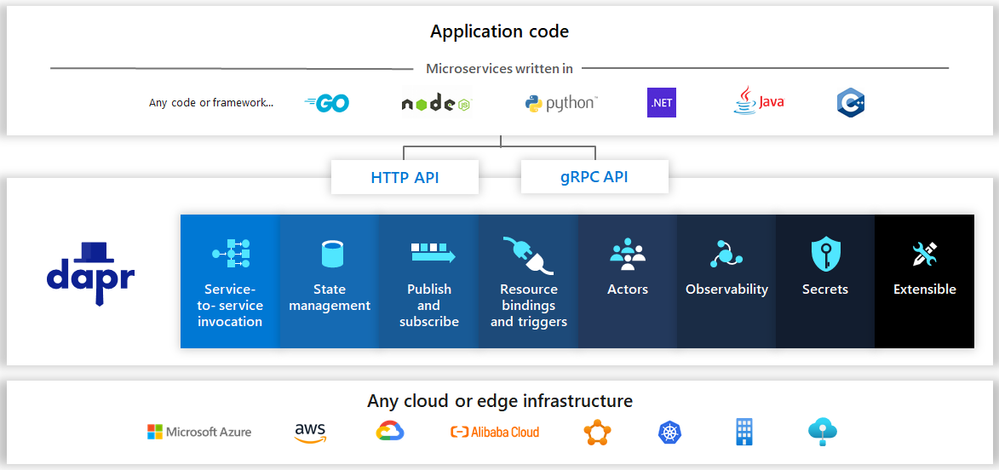

The value proposal of Dapr is to offer an abstraction between your application code and underlying backend systems used in the following areas:

Image from https://github.com/dapr/dapr

for any infrastructure environment. They support an impressive list of connectors:

Dapr can be used from any programming language, since the only thing the application code ever sees is HTTP/gRPC calls to … localhost. For instance, you can target another Dapr-enabled service by calling http://localhost:3500/v1.0/invoke/service, or a state store through http://localhost:3500/v1.0/state/anystatestore. The operation segment (invoke, state, publish, secrets, workflows, etc.) tells Dapr what your application is dealing with. One can also leverage Dapr bindings for any Event-Driven scenario, with input bindings and pub/sub mechanisms.
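To make the "everything is a call to localhost" idea concrete, here is a minimal Python sketch that only builds the sidecar URLs an application would call. It assumes the sidecar listens on port 3500, as in the URLs above; the helper name `dapr_url` and the `order 42` key are hypothetical and only there for illustration.

```python
from urllib.parse import quote

DAPR_PORT = 3500  # assumption: sidecar HTTP port, as in the URLs above


def dapr_url(operation: str, *parts: str, port: int = DAPR_PORT) -> str:
    """Build a sidecar URL such as http://localhost:3500/v1.0/state/anystatestore."""
    # Percent-encode each path segment so names with spaces or slashes stay valid.
    path = "/".join(quote(part, safe="") for part in parts)
    return f"http://localhost:{port}/v1.0/{operation}/{path}"


# The operation segment tells Dapr what the application is dealing with.
invoke_url = dapr_url("invoke", "service")
state_url = dapr_url("state", "anystatestore")
# Hypothetical key, used here only to show the encoding of a path segment.
state_key_url = dapr_url("state", "anystatestore", "order 42")
```

Whatever the language, the application code stays this thin: it never references the backend behind the state store or the service, only the sidecar.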

On top of the areas depicted in the above picture, Dapr also ships with a Logic Apps wrapper that one can use to execute self-hosted Logic Apps workflows. Self-hosted Azure Functions (which you can run anywhere) also offer an extension that has built-in Dapr bindings; to know more, see https://cloudblogs.microsoft.com/opensource/2020/07/01/announcing-azure-functions-extension-for-dapr/

Should you be using Dapr from within Kubernetes, you can combine it with best practices and other products such as Service Meshes. I have tested Dapr together with Linkerd and it works fine. To give a concrete example of how to use Dapr combined with best practices, I built a Dapr workflow (based on Logic Apps) that does the following:

- The workflow is triggered by an HTTP call and receives a text to be translated.
- The first step extracts a secret (the Cognitive Service API key) from Azure Key Vault using Dapr secrets.
- The second step calls the Translation Service using the extracted key.
- The third and last step saves the translated text into an Azure Blob Storage using Dapr bindings.

The Logic Apps wrapper (depicted above as the workflow engine) is bound to AAD Pod Identities and is granted a GET secret access policy to Azure Key Vault so that no credentials have to be stored anywhere. Here is the workflow code:

```json
{
  "definition": {
    "$schema": "https://schema.management.azure.com/providers/Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#",
    "actions": {
      "GetAPIKey": {
        "inputs": {
          "method": "GET",
          "uri": "http://localhost:3500/v1.0/secrets/azurekeyvault/translator-ap-key"
        },
        "runAfter": {},
        "type": "Http"
      },
      "CallTranslationService": {
        "inputs": {
          "body": [ { "Text": "@triggerBody()?['Message']" } ],
          "headers": {
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": "@body('GetAPIKey')?['translator-ap-key']",
            "Ocp-Apim-Subscription-Region": "westeurope"
          },
          "method": "POST",
          "retryPolicy": {
            "count": 3,
            "interval": "PT5S",
            "maximumInterval": "PT30S",
            "minimumInterval": "PT5S",
            "type": "exponential"
          },
          "uri": "https://api.cognitive.microsofttranslator.com/translate?api-version=3.0&to=fr"
        },
        "runAfter": {
          "GetAPIKey": [
            "Succeeded"
          ]
        },
        "type": "Http"
      },
      "SaveTranslation": {
        "type": "Http",
        "inputs": {
          "method": "POST",
          "uri": "http://localhost:3500/v1.0/bindings/translation",
          "body": {
            "data": "@replace(string(body('CallTranslationService')), '\"', '\\\"')",
            "operation": "create"
          },
          "headers": {
            "Content-Type": "application/json"
          }
        },
        "runAfter": {
          "CallTranslationService": [
            "Succeeded"
          ]
        }
      },
      "Response": {
        "inputs": {
          "body": {
            "value": "@body('CallTranslationService')"
          },
          "statusCode": 200
        },
        "runAfter": {
          "SaveTranslation": [
            "Succeeded"
          ]
        },
        "type": "Response"
      }
    },
    "contentVersion": "1.0.0.0",
    "outputs": {},
    "parameters": {},
    "triggers": {
      "manual": {
        "inputs": {
          "method": "POST",
          "schema": {
            "properties": {
              "Message": {
                "type": "string"
              }
            },
            "type": "object"
          }
        },
        "kind": "Http",
        "type": "Request"
      }
    }
  }
}
```
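For readers who do not use Logic Apps, the same three steps can be sketched as plain HTTP calls. The Python below is an illustration under assumptions, not the workflow engine's behavior: the function names `http_json` and `translate_and_save` are hypothetical, the retry policy is left out, and the secrets endpoint is assumed to return a JSON object keyed by the secret name (which is what the `@body('GetAPIKey')?['translator-ap-key']` expression above relies on).

```python
import json
from typing import Callable, Optional
from urllib import request

DAPR = "http://localhost:3500/v1.0"
TRANSLATOR = "https://api.cognitive.microsofttranslator.com/translate?api-version=3.0&to=fr"

# Transport signature: (method, url, headers, body) -> decoded JSON response (or None)
Transport = Callable[[str, str, dict, Optional[object]], object]


def http_json(method, url, headers, body=None):
    """Default transport: send JSON over HTTP using only the standard library."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    req = request.Request(url, data=data, headers=headers, method=method)
    with request.urlopen(req) as resp:
        raw = resp.read()
    return json.loads(raw) if raw else None


def translate_and_save(message: str, send: Transport = http_json):
    # Step 1: read the API key through the Dapr secrets endpoint.
    secret = send("GET", f"{DAPR}/secrets/azurekeyvault/translator-ap-key", {}, None)
    api_key = secret["translator-ap-key"]

    # Step 2: call the Translator service with the extracted key.
    headers = {
        "Content-Type": "application/json",
        "Ocp-Apim-Subscription-Key": api_key,
        "Ocp-Apim-Subscription-Region": "westeurope",
    }
    translation = send("POST", TRANSLATOR, headers, [{"Text": message}])

    # Step 3: store the result through the "translation" output binding.
    payload = {"data": json.dumps(translation), "operation": "create"}
    send("POST", f"{DAPR}/bindings/translation",
         {"Content-Type": "application/json"}, payload)
    return translation
```

The `send` parameter is injectable on purpose: passing a stub makes it possible to exercise the flow without a running sidecar, and it mirrors the fact that the code only ever knows about localhost endpoints and component names.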

If you look at the URLs of actions 1 and 3, you’ll notice that they target localhost with a /secrets/ and a /bindings/ operation respectively. But here is the million-dollar question: how does Dapr know what the real targets are (in this case, Azure Key Vault and Azure Storage)? Answer: through components. The one below defines Azure Key Vault:

```yaml
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: azurekeyvault
  namespace: default
spec:
  type: secretstores.azure.keyvault
  metadata:
  - name: vaultName
    value: sey----ks
  - name: spnClientId
    value: 3cc97f71-----------fbb5c4d4dbf
```

You’ll notice the link with AAD Pod Identities, since the component has a placeholder (spnClientId) to define which SPN has to be used. The second component used by the workflow is an Azure Blob Storage:

```yaml
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: translation
spec:
  type: bindings.azure.blobstorage
  metadata:
  - name: storageAccount
    value: seykeda
  - name: storageAccessKey
    secretKeyRef:
      name: storageKey
      key: storageKey
  - name: container
    value: daprdocs
auth:
  secretStore: azurekeyvault
```

Notice how this one refers to the secret store component: it states that the related secret store is azurekeyvault (the name of my secret store) and that the Storage Account key is stored in the storageKey secret. This keeps things secure, since no sensitive information is disclosed anywhere but within Key Vault itself. Also, remember that it could be any of the supported secret stores. The workflow knows nothing about Azure Storage or Azure Key Vault, which could be replaced by anything else by overwriting the existing components.
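The indirection between a component and its secret store can be pictured with a toy model. The Python below is not Dapr's implementation; `resolve_metadata` is a hypothetical helper, the dictionary mirrors the translation component above, and the in-memory `stores` mapping stands in for Key Vault with a placeholder value.

```python
def resolve_metadata(component: dict, secret_stores: dict) -> dict:
    """Return the component's metadata as plain name -> value pairs.

    Toy model only: entries with a `secretKeyRef` are looked up in the
    secret store named by `auth.secretStore`; other entries are used as-is.
    """
    store_name = component.get("auth", {}).get("secretStore")
    resolved = {}
    for item in component["spec"]["metadata"]:
        if "secretKeyRef" in item:
            ref = item["secretKeyRef"]
            secret = secret_stores[store_name][ref["name"]]
            resolved[item["name"]] = secret[ref["key"]]
        else:
            resolved[item["name"]] = item["value"]
    return resolved


# Same shape as the translation component defined above.
translation = {
    "metadata": {"name": "translation"},
    "spec": {
        "type": "bindings.azure.blobstorage",
        "metadata": [
            {"name": "storageAccount", "value": "seykeda"},
            {"name": "storageAccessKey",
             "secretKeyRef": {"name": "storageKey", "key": "storageKey"}},
            {"name": "container", "value": "daprdocs"},
        ],
    },
    "auth": {"secretStore": "azurekeyvault"},
}

# Stand-in for Key Vault; the value is a placeholder, not a real key.
stores = {"azurekeyvault": {"storageKey": {"storageKey": "<placeholder>"}}}

settings = resolve_metadata(translation, stores)
```

Swapping the secret store then amounts to changing the name under `auth.secretStore` and registering another store under that name; neither the component's consumers nor the workflow would need to change.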

Among the more advanced topics, Dapr provides an actor implementation based on the Virtual Actor pattern, exposed through the Java/.NET/.NET Core SDKs. Some SDKs are also available to implement a Dapr-aware gRPC client/server.

Bottom line: Dapr is definitely a framework to keep an eye on, given its fast growth and a first production-ready release that should come around December 2020.
