by Scott Muniz | Sep 10, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Today, I worked on a service request in which our customer needed to know how to run a BULK INSERT that reads a CSV file using a Managed Identity credential. Below, I would like to share how I configured this.

We have to configure two elements: Azure SQL Database and Storage Account.

Storage Account:

- First of all, we need a general-purpose v2 storage account with blob storage.

- Using PowerShell, you need to register your Azure SQL server with Azure Active Directory, running the following commands:

Connect-AzAccount

Select-AzSubscription -SubscriptionId <subscriptionId>

Set-AzSqlServer -ResourceGroupName your-database-server-resourceGroup -ServerName your-SQL-servername -AssignIdentity

- Under your storage account, navigate to Access Control (IAM), and select Add role assignment. Assign the Storage Blob Data Contributor Azure role to the server hosting your Azure SQL Database, which you registered with Azure Active Directory (AAD) in the previous step.

Azure SQL Database:

- Open SQL Server Management Studio and connect to the database.

- Open a new query and run the following commands.

- Create the database credential:

CREATE DATABASE SCOPED CREDENTIAL MyCredential WITH IDENTITY = 'Managed Identity'

CREATE EXTERNAL DATA SOURCE [MyDataSource]

WITH (

TYPE = BLOB_STORAGE, LOCATION = 'https://storageaccountname.blob.core.windows.net/backup', CREDENTIAL = MyCredential

);

CREATE TABLE [dbo].[MyDummyTable] (ID INT)

BULK INSERT [dbo].[MyDummyTable]

FROM 'info.txt'

WITH (DATA_SOURCE = 'MyDataSource'

, FIELDTERMINATOR = '\t')

When you run the bulk insert command, you might get an Access denied error. I saw that, after adding the identity and the RBAC role assignment on the storage account, these changes take some minutes to become effective.
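Since the role assignment can take a few minutes to propagate, one option is to retry the load with increasing waits instead of failing on the first Access denied error. The following is a minimal sketch in Python, not part of the original walkthrough: `retry_until_ready` is a hypothetical helper, `operation` is whatever callable runs your BULK INSERT, and `PermissionError` stands in for the access-denied exception your database driver actually raises.

```python
import time


def retry_until_ready(operation, attempts=5, initial_delay=30.0, sleep=time.sleep):
    """Call `operation` until it succeeds or `attempts` is exhausted.

    The wait doubles after every failure. `PermissionError` is an
    assumption: replace it with the access-denied error raised by the
    database driver you use.
    """
    delay = initial_delay
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except PermissionError:
            if attempt == attempts:
                # Still failing after all retries: probably not a propagation delay.
                raise
            sleep(delay)
            delay *= 2
```

With the defaults, the helper waits 30, 60, 120 and 240 seconds between the five attempts, which covers the "some minutes" window without retrying forever.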

Enjoy!

by Scott Muniz | Sep 10, 2020 | Azure, Technology, Uncategorized

The Azure Stream Analytics service makes it easy to ingest, process, and analyze streaming data from an event source (Event Hub/IoT Hub/Blob Storage), enabling powerful insights to drive real-time actions. But before you publish your Stream Analytics query to the cloud to run 24×7, did you know you can test your query in 3 different ways?

Benefits of testing the query

Here are the key benefits of testing your query:

- Development productivity: Fast iteration to test and fix the query in a single box environment

- Test before deploying: You can test the query logic with live data or local data without deploying to the cloud

- Query behavior consistency: For live input, the queries will output the same results

- Fast results: You can view query results in under 4 seconds

- Free: Test your query for free

Here are 3 ways you can test your query

| | 1. Azure Portal | 2. VS Code | 3. Visual Studio |
| --- | --- | --- | --- |
| Platform type | Web client | Editor | IDE |
| Query execution | Test runs in the cloud without charge | Test runs on your machine | Test runs on your machine |
| Test scenario 1: Local data (JSON/CSV). You can test your Stream Analytics query by using a local file. | Yes | Yes | Yes |
| Test scenario 2: Sample data. You can test your Stream Analytics query against a snapshot/sample of live stream input. | Yes (Event Hub, IoT Hub, Blob Storage). SQL reference data supported. | No | No |
| Test scenario 3: Live data. You can test your Stream Analytics query against live stream input. | No | Yes (Event Hub, IoT Hub, Blob Storage). Blob/SQL reference data supported. | Yes (Event Hub, IoT Hub, Blob Storage). Blob/SQL reference data supported. |
| Job diagram availability while testing the query | No | Yes. You can view the data & metrics of each query step in each intermediate result set to find the source of an issue. | Yes. You can view the data & metrics of each query step in each intermediate result set to find the source of an issue. |
| Documentation | Local data, Sample data, Job diagram | Local data, Live data, Job diagram | Local data, Live data, Job diagram |
| Limitations | No support for Event ordering in testing. C# UDFs and deserializers not supported. Local input size <2 MB for testing. Queries with <1 min execution time are supported for testing. | Support for Event ordering. C# UDFs and deserializers support expected by end of year. | Support for Event ordering. C# UDFs and deserializers are supported. |

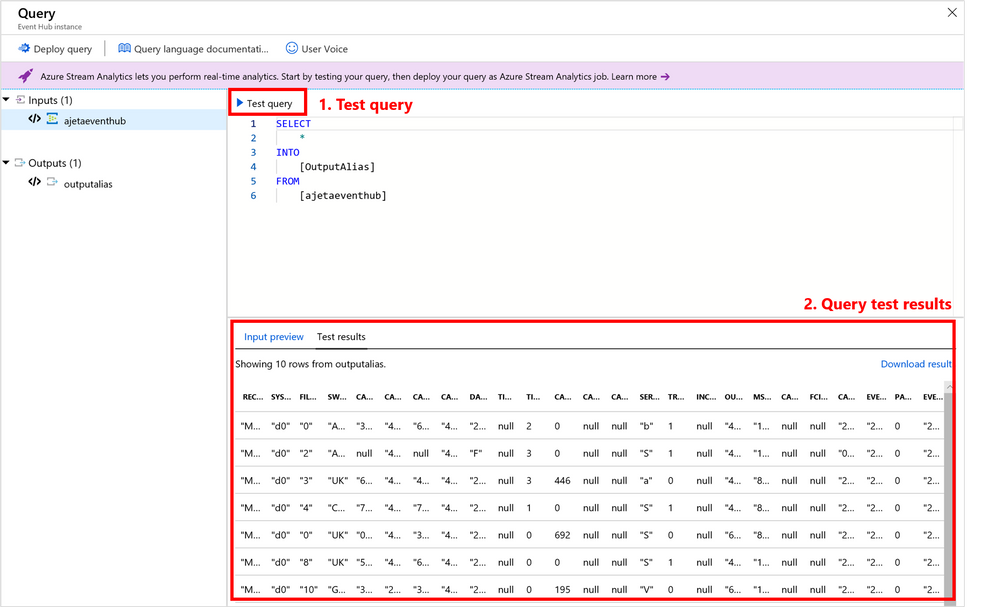

1. Azure Portal

Test your query in Azure portal by using a local file or sample of live data

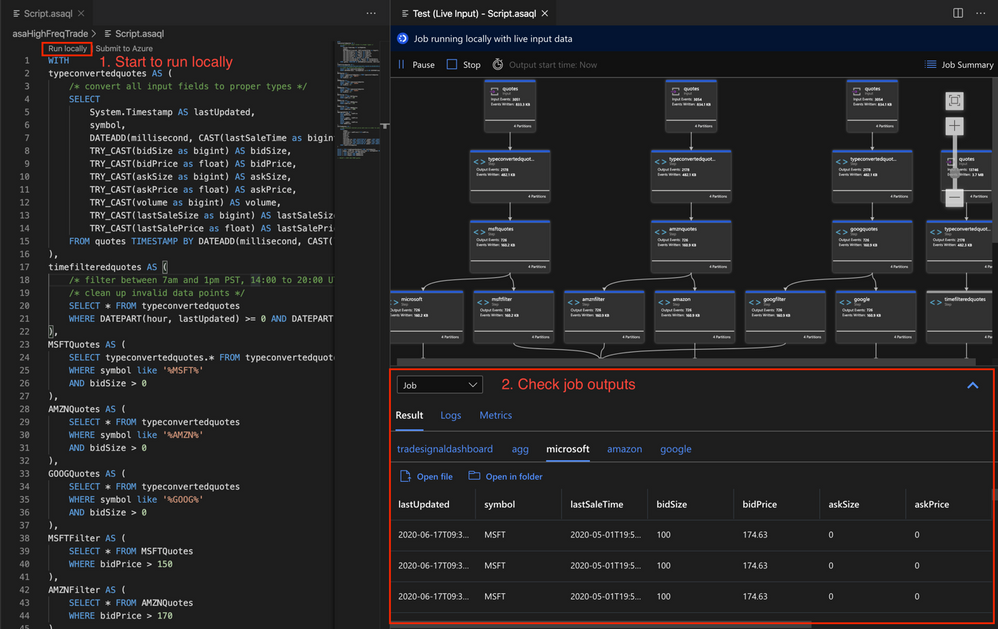

2. VS Code

Test your query in VS Code by using a local file or live data

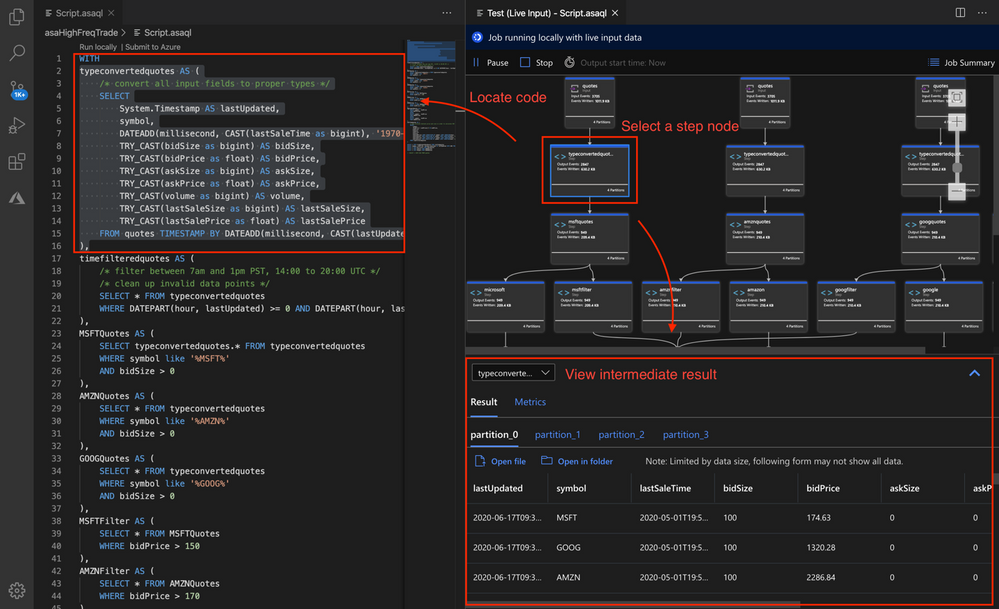

Use Job Diagram to see intermediate results

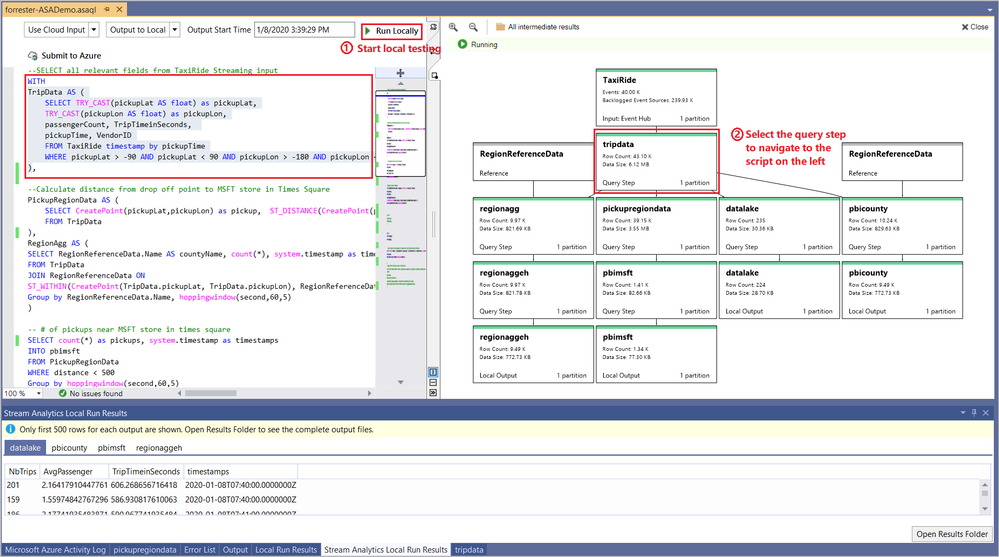

3. Visual Studio

Test your query in Visual Studio by using a local file or live data

Query testing should only be used for functional testing purposes. It does not replace the performance and scalability tests you would perform in the cloud. This testing feature should not be used for production purposes since running in a local environment doesn’t guarantee any service SLA.

Feedback and engagement

Engage with us and get early glimpses of new features by following us on Twitter at @AzureStreaming.

The Azure Stream Analytics team is highly committed to listening to your feedback and letting our users’ voices influence our future investments. We welcome you to join the conversation and make your voice heard via our UserVoice page.

by Scott Muniz | Sep 10, 2020 | Uncategorized

Excited for the FIRST ever virtual Microsoft Ignite? The Humans of IT Community will be bringing a compilation of stories where humanity and technology intersect all in one track so that you can discover how to empower your community, build meaningful careers, be more productive and achieve more!

We know this is the post you’ve been waiting for.

As many of you have probably guessed, Humans of IT will be back at Microsoft Ignite 2020! Join us during our 8 Humans of IT sessions at Microsoft Ignite 2020! P.S – If you haven’t already heard, Microsoft Ignite is free to attend this year, so what are you waiting for? Register today!

8 Sessions You Don’t Want to Miss at Microsoft Ignite 2020

Tuesday 9/22 11:30AM PST: Leveraging Tech for Good: Empowering Nonprofits in a Global Pandemic

Speakers: Dux Raymond Sy, Shing Yu, Mario H Trentim, Foyin Olajide-Bello

Come hear from our panel about the vital role technology plays in helping nonprofits digitize and develop strong business continuity processes in the new normal. In this session, you will also discover how you can play your part in supporting the broader community and contributing your tech skills for good.

Tuesday 9/22 3:15PM PST: How to be a Social Technologist in a Virtual World

Speakers: Grace Macjones, Scott Hanselman

What does it take to be a social technologist in this new normal? Are you visible online, and an even better question: should you be? How do you show up on virtual Teams meetings or events? We cover GitHub, Twitter, LinkedIn, StackOverflow, Blogging, Podcasting etc. and share tips on setting up your own webcam and home AV equipment (Spoiler alert: It doesn’t have to be fancy!) as we aim to understand what a well-rounded technologist looks like in today’s virtual world. We also cover staying safe (and sane!) online, being both appropriately private and public, and how to be authentic while not giving away too many personal things.

Tuesday 9/22 1:45PM PST: Sustaining Human Connection in a Virtual World with Technology

Speakers: Megan Lawrence, Michael Bohan, Allexa Laycock, Leah Katz-Hernandez

Come learn about how Microsoft uses human-centered research to build technology that strives to match the natural ways in which our brains work and helps minimize strain so we can stay productive as we adjust to the new world of remote work.

Wednesday 9/23 7:15AM PST: You + Power Platform: Be the Hero Of Your Own Story

Speakers: Jeremiah Marble, Gomolemo Mohapi, Ashlee Culmsee, Joe Camp, Mary Thompson

Have you ever felt like you’re a supporting or background character in this whole production known as work and life? Well, let’s end that nonsense TODAY and have you emerge as the hero of your own story. Come and hear from these 4 amazing humans who decided to be the active hero of their own stories…using the Power Platform as the sword as they marched into the modern battle. You’ll walk away from this session, 100% inspired with real, practical steps on how you, too, can #DoTheThing.

Wednesday 9/23 11:30AM PST: The Intersection of AI and Humanity: Solving World Challenges through AI Innovation

Speakers: Siddharta Chatuverdi, Alice Piterova, Kelvin Summoogum

The world we live in is constantly evolving, and humanity as a whole is now grappling with increasingly complex and challenging issues. Yet, innovative solutions can be found in the most surprising places. Join us in this session to hear from a panel of innovators at the forefront of technology, and be inspired by their stories of how they successfully combine their imagination and creativity with the power of AI to help solve health, societal and humanitarian challenges.

Wednesday 9/23 1:00 PM: Choose Your Adventure: How to Hack Your Tech Career and Carve The Path YOU Want

Speakers: Iasia Brown, Ryen Macababbad, Chloé Brown, Karen Alarcon

Want to learn how to manage up (“manage your manager”)? Looking to overcome the notorious Imposter Syndrome that continues to be a challenge for many of us in tech? While we cannot solve all the world’s problems, we can come together as #HumansofIT and share tips on how to hack your tech careers and discover tools you can use to keep calm and thrive in the new normal.

Wednesday 9/23 2:30PM: Want to start a startup? Passing on the collective experience of 50 founders in just 30mins

Speakers: Annie Parker, Lahini Arunachalam, Kai Frazier, Mikaela Jade

Would you like to learn from the collective advice of 50 badass women and other under-represented founders from around the world who have recently started companies, including what they learnt along the way, so you can learn from their successes and failures? Join Microsoft for Startups Execs Annie Parker and Lahini Arunachalam in this session as they walk through the findings of recent customer research based on conversations with some of the most amazing founders. They will also be bringing some special guests to share their inspiring stories with us.

Wednesday 9/23 7:30 PM: Mentoring Future Technologists in Tech: Students Get Real

Speakers: Tammy Richardson, Errol Blaize Jr, Kaylynn Armstrong, Trent Dalcourt, Lauren Gray, Desha Wise

Join us in this session to hear from our Microsoft Ignite Humans of IT Student Ambassadors from five Historically Black Colleges and Universities (HBCUs) about how they discovered their passion for technology, their personal development journeys, and their goals to transform the future of tech. Gain insights on what students from underrepresented communities are looking for in their future tech employers, and how tech professionals like you can help support their growth.

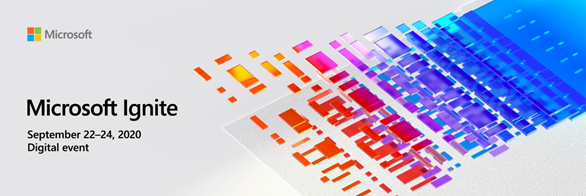

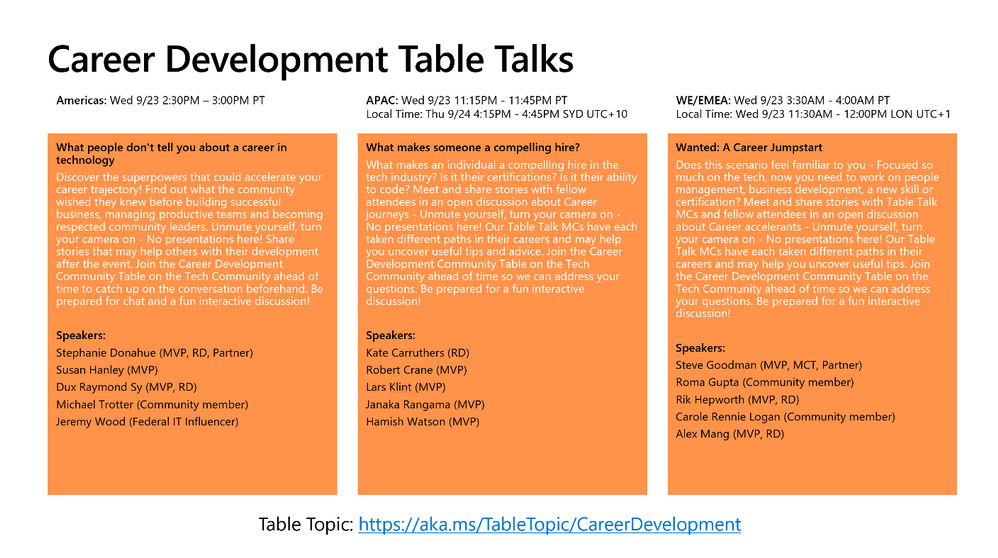

Human-focused Microsoft Ignite Table Talks

Looking for ways to interact and connect with your peers? Be sure to check out the community table talks! Share insights with other attendees in an on-going live chat. Then, tune in to hear community leads highlight what’s been discussed! Our top picks of the lot are:

See you at Microsoft Ignite 2020, #HumansofIT! Register online to secure your virtual seat today!

#HumansofIT

#MSIgnite

#CommunityRocks

#ConnectionZone

by Scott Muniz | Sep 10, 2020 | Uncategorized

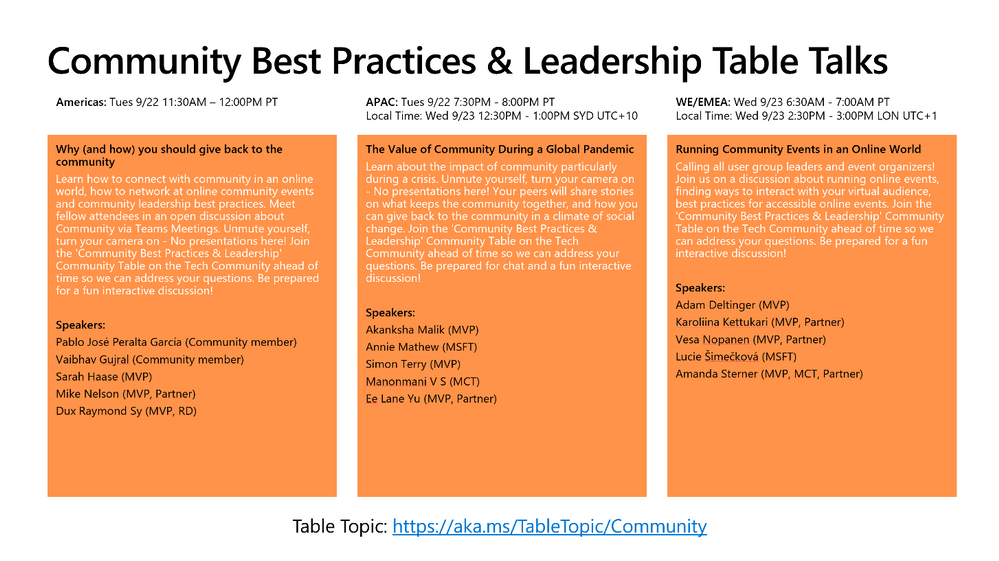

Microsoft Ignite 2020 is quickly approaching. The world has changed a lot since the new Yammer was announced last November and this year’s event will be entirely digital, making it available to attendees instantly around the globe.

The new Yammer is now generally available, which means we’ve got a lot to share about what’s new and how you can use Yammer to drive community and employee experience.

To get started, check out our latest video.

You can find the entire Ignite session catalog at https://myignite.microsoft.com on September 22nd, but here is a list of our dedicated sessions if you’re looking to learn more about Yammer and employee engagement.

Hero

Enabling collaboration, communication, and knowledge sharing with Microsoft Teams, SharePoint, Project Cortex, and more (Repeats 3x)

Organizations are moving to a hybrid workplace to support the needs of remote and onsite employees. In the 2nd part of a 2-part series, we look at how teams can collaborate fluidly with the latest innovations across Microsoft Teams, SharePoint, and OneDrive. We also look at how organizations can improve employee engagement, communications, and knowledge sharing through SharePoint, Yammer, and Project Cortex.

Employee Engagement and Communities in Microsoft 365 (Repeats 3x)

Learn how Microsoft 365 powers employee engagement using Yammer, Microsoft Teams, SharePoint, and Stream to empower leaders to connect with their organizations, align people to common goals, and to drive cultural transformation. Dive into the latest innovations including live events, new Yammer experiences and integrations, and the intelligent intranet and see how it all comes together.

Roadmap

Engage employees with the new Yammer: Communities, Communications, Knowledge Sharing – Session 1101

The new Yammer is here. See how Yammer has been completely redesigned to power leadership engagement, company-wide communication, and knowledge sharing. This session will cover what’s new and what’s next for Yammer.

Skilling

Supercharging communications and employee engagement with Microsoft 365 – Session 1045

This session provides both the strategy and tools needed to drive communications in your company. Learn how to keep everyone informed and engaged using SharePoint, Yammer, Microsoft Teams, and live events.

Driving open sharing and knowledge in communities – Session 1069

Capture, shape, and share knowledge across your organization using communities and your intranet. See how Yammer creates more resilient, intelligent organizations that drive innovation, responsiveness, and engagement at every level.

Yammer Governance in a Microsoft 365 World – Session 1100

Your one stop session for administering your Yammer network and managing compliance, including a double-click into the latest Yammer data governance features like eDiscovery, local data residency, and Native Mode.

Engaging employees with Yammer and Microsoft 365 – Session 1043

Similar to the Digital Breakout, see how Yammer, SharePoint, Stream, and Teams work together to deliver a modern workplace solution to engage employees and accelerate cultural transformation across organizational silos, geographic boundaries, and remote workstyles.

Optimize your new Yammer Communities – Session 1102

A lot has changed in the new Yammer. In this one-stop shop for community management, you’ll learn what’s new and what’s possible for Yammer community admins. Including:

- the latest features

- managing communications and campaigns

- branding a community

- hosting live events

- community moderation

- and more!

There will also be three (3x) Ask the Experts opportunities running in different timezones to ensure everyone has a chance to connect with our engineering and marketing teams for Q&A. Add them to your session calendar:

Wednesday, September 23 — 1:00pm-1:30pm PT

Wednesday, September 23 — 9:00pm-9:30pm PT

Thursday, September 24 — 5:00am-5:30am PT

Stay tuned! There will be more ways to connect and consume content at Microsoft Ignite 2020 and Yammer will appear in a number of other Microsoft 365 sessions as well. In the meantime, be sure to watch our blog for the latest updates and follow us on Twitter for more news, timing, and discussions.

Michael Holste is a Senior Product Marketing Manager for Yammer and Employee Engagement in Microsoft 365.

by Scott Muniz | Sep 10, 2020 | Uncategorized

Hi Everyone,

Zoheb here again with my colleague Peter Chaukura from Microsoft South Africa and today we will be sharing some details on how we helped one of our SMC customers reduce the attack vector by enabling Azure AD Password Protection.

If you have not read the first blog, which covers the background, do give it a read before continuing here: How the Microsoft Mission Critical Team helped secure AAD

Hope you found the initial blog a valuable read.

Let me continue our story about Protecting your Passwords in Azure AD.

The Problem:

Through internal audits, our customer had found that there was a high usage of “Common Passwords” in their organization. They discovered that password spray attacks were on the rise, and they had no protection other than the “password meets complexity requirements” setting under the password policy in their Active Directory environment.

This SMC customer urgently needed a way to block weak passwords from the domain and understand the usage of these weak passwords across the organization as well as the impact these may have.

In other words, they were looking to find out how many users have weak passwords in the organization before enforcing Password Protection in their environment.

The Solution:

As the Mission Critical Trusted Advisor, we stepped in and informed our customer that it is possible to block weak passwords by using Azure AD Password Protection. We also had the answer to their more critical question “is it even possible to view how many users have weak password in my organization?”

Before I share details on how we helped implement this, let us try to understand the basics of this feature.

Azure AD Password Protection detects and blocks known weak passwords and their variants from a global Microsoft curated list. In addition, you can specify custom banned words or phrases that are unique to your organization. The on-premises deployment of Azure AD Password Protection uses the same global and custom banned password lists that are stored in Azure AD, and it does the same checks for on-premises password changes as Azure AD does for cloud-based changes. These checks are performed during password change and password reset events against on-premises Active Directory Domain Services (AD DS) domain controllers.

There are two modes in Azure AD Password Protection as described below:

AUDIT MODE: Microsoft recommends that initial deployment and testing always starts out in Audit mode.

- Audit mode is intended to run the software in a “what if” mode.

- Each DC agent service evaluates an incoming password according to the currently active policy.

- “Bad” passwords result in event log messages but are accepted.

ENFORCE MODE: Enforce mode is intended as the final configuration.

- A password that is considered unsecure according to the policy is rejected.

- When a password is rejected by the Azure AD Password Protection DC (domain controller) agent, the end user experience is identical to what they would see if their password were rejected by traditional on-premises password complexity enforcement.

Read more details here. Ban-Weak-Passwords-on-premises

The Solution

Our SMC customer was specifically looking to enable Password Protection and wanted some mechanism that could give more details on the current weak password status before enforcing the “Azure AD Password Protection” feature.

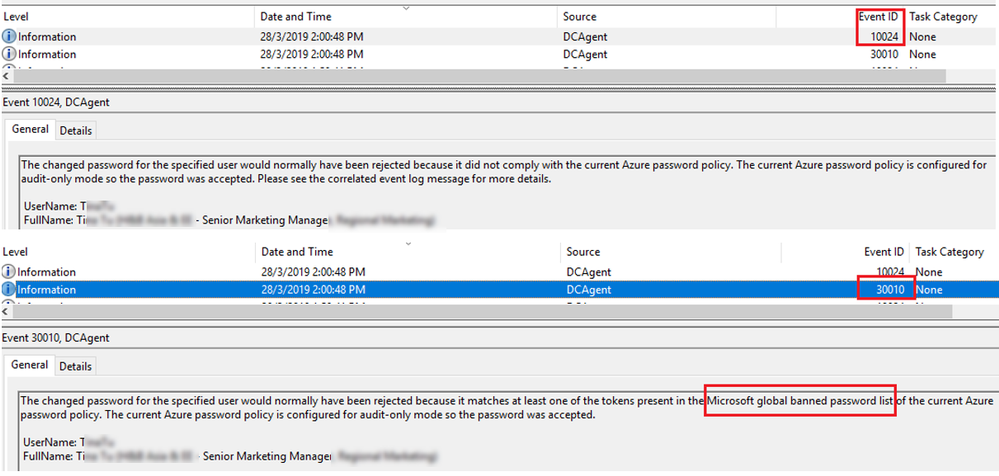

We told them that the events have more details. To see sample events, please refer to the documentation below. https://docs.microsoft.com/en-us/azure/active-directory/authentication/howto-password-ban-bad-on-premises-monitor

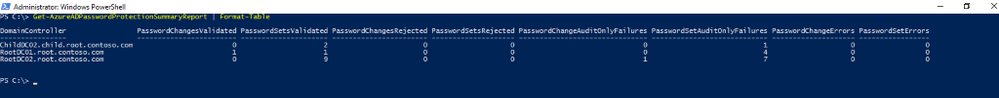

Peter also suggested the Get-AzureADPasswordProtectionSummaryReport cmdlet, which generates a summary report as shown.

But the customer was not happy with the above output and was looking for something more detailed. They asked Peter for something with the capabilities below:

- Status of Weak Passwords across Domains in Forest

- Password Compliance report based on Microsoft banned Password list.

- User details who have weak passwords

- Did users change Password or reset?

- Password Policy count

- Visual dashboard which can be updated regularly/Automatically.

Clearly, nothing built in by default gives a visual view of an organization’s “Password compliance”.

So, Peter helped build Power BI dashboards to ingest data extracted from the domain controller event log Applications and Services Logs\Microsoft\AzureADPasswordProtection\DCAgent\Admin.

NOTE: This dashboard gets fully populated only after all or most of the users have reset or changed their password at least once, so it may take a full password life cycle of your organization to get an overview of the weak passwords in use.

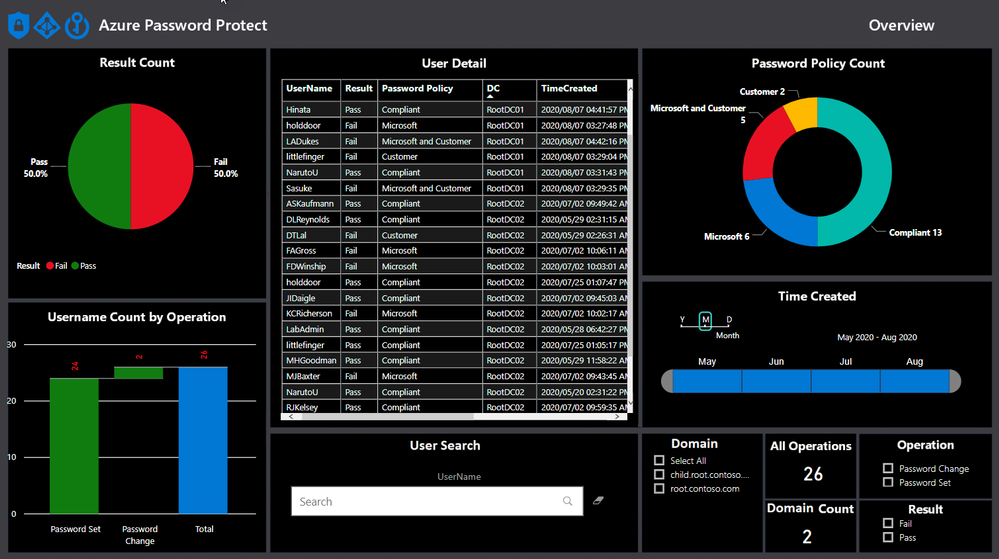

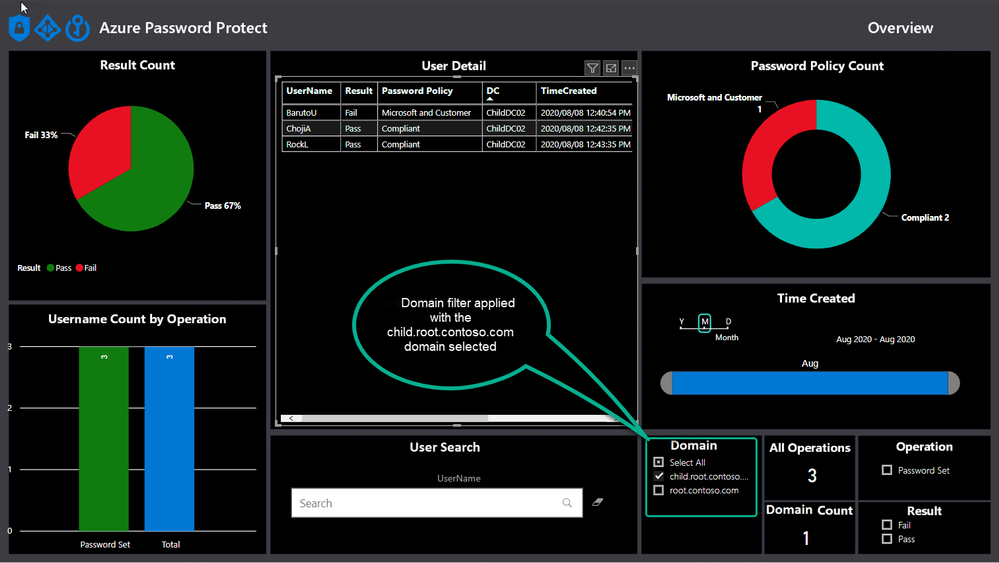

The Custom Azure AD Password Protection Power BI Dashboard

How do we collect the data and build the dashboard?

- We collected events (10024, 30010) from all domain controllers where the Azure AD Password Protection DC agent is installed and exported them into a CSV file.

- The collection of event log entries is done via a PowerShell script that is configured to run as a scheduled task.

- The CSV file is then ingested into a Power BI dashboard.

- Build a dashboard view in Power BI using this data as shown below!

If you are new to Power BI and not sure how to create a dashboard using data from an Excel file, go check out this small video and the blog on step by step instructions to do this. https://docs.microsoft.com/en-us/power-bi/create-reports/service-dashboard-create
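Before loading the export into Power BI, it can be useful to sanity-check the CSV. The sketch below, in Python, is an illustration rather than the script used for the customer: the column names (`Domain`, `UserName`, `Operation`) are hypothetical, since the post does not show the export format, so adjust them to whatever your collection script writes.

```python
import csv
import io
from collections import Counter


def summarize_events(csv_text):
    """Count exported DC agent events per operation and per domain.

    The column names used here (Domain, UserName, Operation) are
    assumptions about the export format, not a documented schema.
    """
    by_operation = Counter()
    by_domain = Counter()
    users = set()
    for row in csv.DictReader(io.StringIO(csv_text)):
        by_operation[row["Operation"]] += 1
        by_domain[row["Domain"]] += 1
        # Count each account once per domain, ignoring case differences.
        users.add((row["Domain"].lower(), row["UserName"].lower()))
    return {
        "by_operation": dict(by_operation),
        "by_domain": dict(by_domain),
        "distinct_users": len(users),
    }
```

The three values correspond loosely to the totals, the per-domain filter, and the per-user details that the dashboard views described below present visually.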

Power BI dashboard breakdown

Result Count

The view shows the overall status in terms of total statistics relating to accounts with weak passwords and policy-compliant passwords.

The default view shows the result without any filters turned on and will change when filters are applied, like the domain filter, as shown below.

Username Count by Operation

Azure AD Password Protection operations take place whenever a password is changed or reset and this view helps to draw a nice picture around how many passwords are being set and changed, as well as the total.

A password change is when a user chooses a new password after proving they have knowledge of the old password. For example, a password change is what happens when a user logs into Windows and is then prompted to choose a new password.

A password set (sometimes called a password reset) is when an administrator replaces the password on an account with a new password, for example by using the Active Directory Users and Computers management tool.

User details

This view shows all the details about the operations to answer questions like:

- Which user account?

- On which domain controller?

- At what time?

- Which password policy was applied?

- Was it a password set or change operation?

The information can easily be exported to CSV from Power BI to leverage the data for further analysis or to target specific users for training around weak passwords.

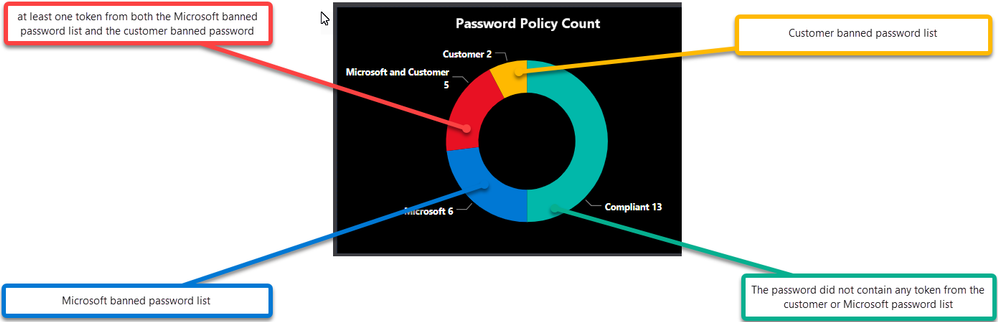

Password Policy Count

Publishing the Dashboard

PowerBI allows you to publish the dashboard via the PowerBI gateway, which allows users and administrators with permission to view the dashboard from any location or device.

We assisted the customer in publishing this dashboard, and with the data being updated daily via the scheduled task, it allows the most recent data to be viewed.

This can also be implemented on the free Power BI Desktop version.

Indicators

- A lot of password set operations resulting in failures to comply with policy might be an indicator that password admins/service desk staff are setting weaker initial passwords for users.

- Many operations for password sets compared to password changes might be an indication that the Service Desk is resetting passwords and not checking the “change password at next logon” tick box.

- A high failure rate means a greater impact of the policy change in the environment. Ideally, try to reduce the failure count before changing AAD Password Protection to Enforce mode.

- Many passwords matching the customer policy might indicate a greater risk of password spray attacks from internal bad actors using commonly used passwords in the environment.

CONCLUSION

In conclusion, we greatly raised awareness of password security at our customer, and their Identity Admins can now view the status of their password security from anywhere with our Power BI dashboard.

If you want this to be implemented for you, feel free to contact your Microsoft Customer Success Account Manager (previously known as TAM) and someone can help you.

Hope this helps,

Zoheb & Peter

by Scott Muniz | Sep 10, 2020 | Uncategorized

Hello,

My name is Jeffrey Worline, and I am a Senior Support Escalation Engineer on the Windows Performance Team at Microsoft. This blog addresses troubleshooting memory leaks occurring in a process, including identification and data collection. This article assumes that you have already identified the process that is leaking. If you have not yet been able to identify where your leak is, please see my blog: MYSTERY MEMORY LEAK: WHERE DID MY MEMORY GO!

The first thing we need to determine is whether the memory consumption is being caused by private data, heap data, or some other memory type, because each memory type needs to be addressed differently.

- Download a Windows Sysinternals tool called VMMap

VMMap is a utility for analyzing virtual and physical memory. It shows a breakdown of a process’s committed virtual memory types as well as the amount of physical memory (working set) assigned by the operating system to those types.

This tool is used to attach to an individual process allowing a snapshot to be taken to see the memory map for that process.

- Simply launch VMMap and from the process list it displays, pick the instance showing the high private working set.

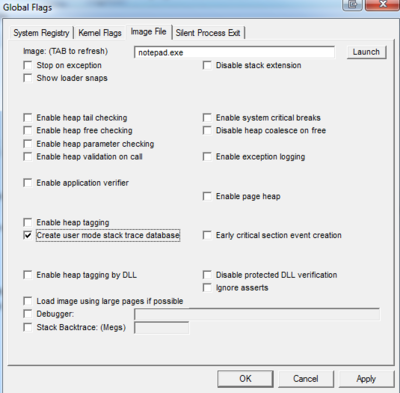

If the high memory is being caused by Heap, you will need to enable User Stack Tracking (UST) against the process using gflags.exe which is part of the Debugging Tools for Windows.

Note: If the high memory shows as Private Data or some other type other than heap, simply continue with getting procdump when memory usage is high.

Scenario A: Uniquely named process with high memory by Heap

- Download Debugging Tools for Windows

You only need the standalone version, since we need just the debugging tools and not the whole WDK package.

Disclaimer: The intent of this blog is not to teach you how to debug. If you are not familiar with debugging process dumps, please open a case with Microsoft support for assistance.

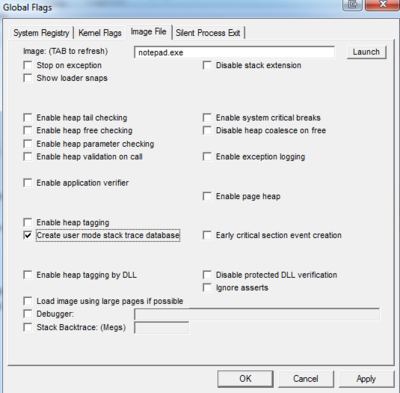

- Go to the directory where you installed the tool and you will find gflags.exe as one of the files, right-click on it and select “Run as administrator.”

- Click on “Image File” tab.

- Type in the process name, for example notepad.exe

- Hit the keyboard TAB key to refresh

- Place check mark in “Create user mode stack trace database.”

Note: Be sure to reverse your gflag setting also by unchecking the “Create user mode stack trace database” when no longer needed.

- Click “OK”.

- The process or service will need to be restarted for the setting to take effect.

- Get procdump of process when memory is high.

Scenario B: High memory occurring in svchost.exe process by Heap

If the svchost process contains more than one service, you will need to break each service out to run in its own svchost process to determine which service is causing the high memory. Once that has been determined, you then need to uniquely name the svchost process the service runs in and enable UST against it. You do not want to enable UST globally against all svchost processes, as that would cause a serious performance hit.

Note: We don’t ever want to enable UST against svchost.exe as that would enable against any and all instances of svchost.exe running and could cause a performance hit on the machine because of the overhead.

- Use Task Manager to document the PID of the service that is demonstrating high memory usage.

- From administrative command prompt run following command:

tasklist /svc

- Using PID you documented from Task Manager, locate that svchost process and document the services that it is hosting.

- Break each service out into its own svchost process if it is a shared svchost process hosting several services by running following command for each service:

sc config <service name> type= own

replace <service name> with actual service name

Note: there is no space in “type=” and there is a space between “= own”

- Restart the service for setting to take effect.

- Verify the services are running in their own svchost process by running tasklist /svc from command prompt again.

- At this point, you have broken each service out into its own svchost process; now identify which service was driving up memory usage before proceeding to next step.

- Once the service has been identified, from an administrative command prompt change to the c:\windows\system32 folder if needed and run the following command:

copy svchost.exe <unique name>

Replace <unique name> with something that represents the service. Example for wmi service – wmisvchost.exe

- Launch registry editor (Start > Run > “regedit.exe”) and navigate to HKLM\System\CurrentControlSet\Services, then to the appropriate key for the service whose svchost process you are uniquely naming.

- Modify the existing ImagePath from %systemroot%\system32\svchost.exe -k netsvcs to %systemroot%\system32\<unique name> -k netsvcs

Replace <unique name> with the name used in step 8 (the copy command). In this example that would be: %systemroot%\system32\wmisvchost.exe -k netsvcs

Note: Backup the registry key before modifying it

- Restart the service.

- Use gflags as noted earlier to enable “Create user mode stack trace database” against the uniquely named svchost process, then restart the service to apply the new settings.

Note: It is important that you go back and reverse what you did in step 4 (the sc config command) and change the ImagePath back to the original once you no longer need the service to be broken out and uniquely named. Failure to do so can prevent future hotfixes associated with that service from being installed.

To reverse, replace sc config <service name> type= own with sc config <service name> type= share

Reverse your gflag setting also by unchecking the “Create user mode stack trace database”.

Reverse your setting in the registry under the service key for the ImagePath.

- Get a procdump of the process when memory is high.
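Finding the shared svchost instances (steps 2 to 4 above) can be partly scripted. The sketch below, in Python, assumes you saved the output of `tasklist /svc /fo csv`, the CSV form of the command used above, on an English-language system where the header columns are "Image Name", "PID" and "Services". The function name is illustrative.

```python
import csv
import io


def shared_svchost_services(tasklist_csv):
    """Map PID -> service names for svchost.exe instances hosting >1 service.

    Expects the text produced by `tasklist /svc /fo csv`. The header names
    are those of an English-language Windows install (assumption).
    """
    shared = {}
    for row in csv.DictReader(io.StringIO(tasklist_csv)):
        if row["Image Name"].lower() != "svchost.exe":
            continue
        # The Services column is a comma-separated list inside one CSV field.
        services = [s.strip() for s in row["Services"].split(",") if s.strip()]
        if len(services) > 1:
            shared[int(row["PID"])] = services
    return shared
```

Each service returned for the PID you noted in Task Manager is a candidate for the `sc config <service name> type= own` command in step 4.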

Directions for Procdump

- Download Procdump tool from Sysinternals

Note: Use procdump if dumping 32-bit process and use procdump64 if dumping a 64-bit process

- From an administrative command prompt, navigate to the directory where you downloaded and unzipped the procdump tool.

- Run the following command to dump a uniquely named process:

procdump -ma <process name>

The -ma switch is to perform a full memory dump of the process.

Replace <process name> with actual process name.

If there is more than one instance of a process running with the same name you must dump the process by PID as opposed to name.

e.g. procdump -ma <PID>

Replace <PID> with actual PID of the process.
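The name-versus-PID rule above can be captured in a small helper. This is a sketch in Python with a hypothetical function name; it only builds the command line (using the `-ma` switch and the procdump/procdump64 choice described above) and leaves running it to you.

```python
def procdump_args(process_name, running, pid=None, is_64bit=True):
    """Build the procdump command line for a full (-ma) memory dump.

    `running` is a list of (image_name, pid) tuples describing the
    processes currently running, for example parsed from tasklist output.
    """
    exe = "procdump64" if is_64bit else "procdump"
    matches = [p for name, p in running if name.lower() == process_name.lower()]
    if not matches:
        raise LookupError(f"{process_name} is not running")
    if pid is not None:
        if pid not in matches:
            raise ValueError(f"PID {pid} is not an instance of {process_name}")
        return [exe, "-ma", str(pid)]
    if len(matches) > 1:
        # Several instances share the name, so the dump must target a PID.
        raise ValueError(f"{process_name} has several instances; pass pid")
    return [exe, "-ma", process_name]
```

Refusing to guess when several instances share a name mirrors the guidance above: a dump of the wrong instance is of little use when chasing a leak.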

Next Up: MYSTERY MEMORY LEAK: WHERE DID MY MEMORY GO!

– Jeffrey Worline
