by Contributed | Jun 9, 2021 | Technology

This article is contributed. See the original author and article here.

As IT Administrators, we all face daily challenges solving problems for our constituents. As we have seen over the last year, the world can use our help. In our small corner of the world, we would like to do our part to give back. When we, as IT Admins, use self-help resources to fix issues without opening support cases, we enable Microsoft to repurpose a portion of these savings towards social good.

Therefore, we invite you to join us in our effort to give back to communities in need! The Diagnostics for Social Good campaign is designed to help solve your technical issues while at the same time helping support global communities.

How it Works

IT admins run customer diagnostics in the Microsoft 365 admin center to resolve issues without logging a support request, and Microsoft will donate to global non-profits that support COVID-19 relief efforts in India as well as vaccination supplies across the world. (Read more about the Microsoft 365 admin center here.) Learn more about covered scenarios and how to run the diagnostics below:

Why we are focused on Diagnostics for Social Good

We all have an opportunity to change the world. In the technology industry, we are privileged to be able to have a broad impact on millions of people. We know what’s possible and we know that we can contribute to help. As we introduce our new Diagnostics for Social Good initiative, we want to recognize the opportunity we all have to support others. As we move forward, we are looking for feedback and areas where we can help IT admins keep their technology environment healthy while giving back.

Microsoft has demonstrated a passion for giving to address critical issues facing communities locally and abroad. Giving has become part of our culture, and it is one way we live our mission to empower every person and organization on the planet to achieve more.

Our business practices and policies reflect our commitment to making a positive impact around the globe, and we work continuously to apply the power of technology to earn and sustain the trust of our customers and partners, and the communities in which we live and work.

by Contributed | Jun 9, 2021 | Technology

The 21.06 Azure Sphere OS quality update is now available for evaluation in the Retail Eval feed. The retail evaluation period provides 14 days for backwards compatibility testing. During this time, please verify that your applications and devices operate properly with this release before it is deployed broadly via the Retail feed. The Retail feed will continue to deliver OS version 21.04 until we publish 21.06 in two weeks. Note: there was no 21.05 update.

This evaluation release of 21.06 includes enhancements and bug fixes for the OS only; it does not include an updated SDK.

Areas of special focus for compatibility testing with the 21.06 release should include:

- Apps and functionality utilizing SPI Flash.

- Apps and functionality utilizing wolfSSL.

For more information on Azure Sphere OS feeds and setting up an evaluation device group, see Azure Sphere OS feeds and Set up devices for OS evaluation.

For self-help technical inquiries, please visit Microsoft Q&A or Stack Overflow. If you require technical support and have a support plan, please submit a support ticket in Microsoft Azure Support or work with your Microsoft Technical Account Manager. If you would like to purchase a support plan, please explore the Azure support plans.

![[Event Recap] Humans of IT @ Microsoft Build (May 25th-27th, 2021)](https://www.drware.com/wp-content/uploads/2021/06/fb_image-112.png)

by Contributed | Jun 9, 2021 | Technology

We hope you were able to attend the Humans of IT sessions at Microsoft Build 2021! If you missed the live sessions, no worries! Read on for a recap and links to view the sessions on demand.

Over 70,000 software engineers and web developers from all over the world came together virtually for a 48-hour event that was jam-packed with topics covering Windows, Microsoft Azure, and other Microsoft technologies. Humans of IT had the opportunity to host five sessions over the course of this event, covering topics that aligned with the human side of developing and highlighted people using tech for good.

Microsoft Build: Day 1 (Tuesday, May 25)

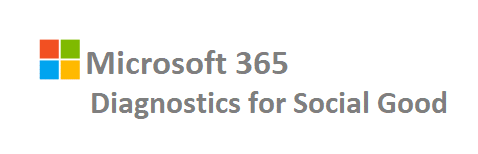

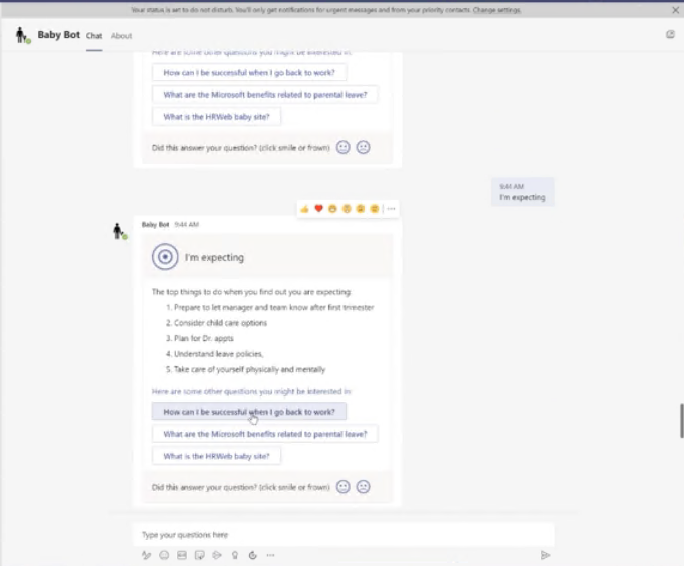

The Humans of IT sessions within the Connection Zone kicked off with Thao Le and Nirupa Kalathur discussing how the BabyBot can enable easier access to information and help close communication gaps between HR and employees. Thao began the session by explaining what exactly the BabyBot does and how it can be used, then shared a heartwarming video highlighting the benefits of the bot. Nirupa finished the session with a demo before taking some time to answer questions from the audience.

What viewers thought of this session:

- “Baby Bot was eye-opening! I found myself thinking of all the ways it would be beneficial to use w/in my own company. New Hire Bot, New Manager/Lead Bot, just so many places it would be useful and much more user friendly for how people think vs placing documents or handbooks or on a SharePoint site”

- “This was a good session with powerful insight on how to connect HR with employees. I am excited to see what else the bot can do for HR and other departments.”

Jay Harris and Chris Koenig wrapped up Day 1 of the Humans of IT sessions and taught attendees about how they can use their developer skills to give back with GiveCamp. Chris kicked off this session discussing the background for GiveCamp and how he came up with the idea. Jay spoke about how GiveCamp got to where it is today, how other developers can participate, and upcoming events they are hosting.

Microsoft Build: Day 2 (Wednesday, May 26)

Day 2 of the Microsoft Build Humans of IT sessions kicked off with Chris Gill, who spoke about languishing and his experience with it. He shared tips that helped him and that may help others dealing with languishing.

What viewers thought of this session:

- “I left this session feeling a sense of calm and with some concrete things to work on. Thank you.”

- “These type of sessions are extremely valuable, we need to remove the stigmatism of mental health and be transparent about how we are feeling. Openly talking about our struggles allows others to understand they are not alone and that provides relief.”

- “Please have more talks like this in future events. This session was relevant to my current professional situation.”

The Day 2 Humans of IT sessions wrapped up with Leslie Ramirez and Leomaris Reyes discussing how MVPs from the Dominican Republic went to Haiti to create the first Microsoft Developers Community in Haiti. Hear about their story, what inspired them, and how they made it happen.

Microsoft Build: Day 3 (Thursday, May 27)

The final Humans of IT session at Build had quite the lineup, with a panel including Kolaru Adeola, Clement Dike, Ayodeji Folarin, Bettirose Ngugi, Oluwaseyi Oluwawumiju, and Samuel Omodadepo, expertly moderated by David Okeyode. They discussed their passion for working with grassroots communities and shared their individual stories of how they are all working towards the same goal of transforming the future of tech in Africa.

We are truly grateful to have been able to share the voices of so many amazing speakers and (virtually) encourage and inspire thousands of attendees this year on a global scale in a deeply meaningful, human way.

We hope you enjoyed the Humans of IT track @ Microsoft Build as much as we did, and we’ll see you next year!

Missed a session and want to catch up? Or perhaps you want to re-watch them all over again?

Access all the Humans of IT session recordings here.

Share your Microsoft Build experience with us:

- What was your favorite Humans of IT session? Why?

- What do you hope to see from Humans of IT at the next virtual conference?

We want to hear from you in the comments below. Thank you for being a part of the community and this MS Build experience.

See you next time!

#HumansofIT

#MSBuild

#CommunityRocks

#ConnectionZone

by Contributed | Jun 9, 2021 | Technology

So, you have a frontend app written in a SPA framework like Angular, React, Vue, or maybe Svelte? And you want to find the quickest way to get it to the Cloud? There are many ways to do so, such as GitHub or Azure Storage, but there’s also the Azure Static Web Apps service.

There are a few moving parts that you may or may not use:

- Frontend: your app, written in a framework like Angular, React.js, Vue.js, etc.

- API: your backend, built with Azure Functions, so it’s serverless, which is great for your wallet and also for architecting with events in the Cloud.

- Auth: always a bit cumbersome to set up, but great once it’s up and running.

Your problem

So, you want to get all this deployed? Before you get that far, you want to make sure that you can test everything on your own machine first, but can you? In a lot of cases you can’t, and that leads you to mock certain things, like the API or the auth part. So when you deploy, you are fairly confident it will work, but you are still crossing your fingers. Does it really have to be that way? Why can’t you have a great developer experience where everything is testable offline?

Well, Azure Static Web Apps does come with a CLI tool for this, and it delivers on the promise: you can develop your app 100% locally and deploy only when you feel ready, if you want. Doesn’t that sound nice? Of course it does!

CLI features

You are probably a bit tentative at this point. You like the sales pitch but aren’t 100% convinced.

Hmm ok, show me the features?

Sure, here they are:

- Serve static app assets, or proxy to your app dev server

- Serve API requests, or proxy to APIs running in Azure Functions Core Tools

- Emulate authentication and authorization

- Emulate Static Web Apps configuration, including routing

Ok, sounds good, but I bet it’s a pain to install?

Not so much, it’s a single npm command (you need Node.js installed), like so:

npm install -g @azure/static-web-apps-cli

and to start you type:

swa start

Hmm, sounds simple enough, so where’s my app at?

It’s at http://localhost:4280.

The API

Ok, nice, you let me serve up my frontend. What about the API you said I could have?

Because it’s using Azure Functions, you need the libraries to run that, so it’s a one-time install of Azure Functions Core Tools, like so:

npm install -g azure-functions-core-tools@3 --unsafe-perm true

and then there’s the command for starting both the frontend and the API:

swa start ./my-dist --api ./api-folder

Ok, I’m liking this more and more, you said something about auth too?

Authentication

There’s a local authentication API too. Auth lives at http://localhost:4280/.auth/login/ followed by the name of a provider, which is something like Twitter, Facebook, etc.

Wait, and that will just work?

Yep :)
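And if you’re wondering what your API sees once someone is logged in: Azure Static Web Apps passes user information to API functions in an x-ms-client-principal request header, which carries Base64-encoded JSON, and the local emulator is meant to mirror that. Here’s a minimal sketch in Python of decoding such a header. The function name and the sample values are made up for illustration; only the header name and the field names come from the Static Web Apps documentation.

```python
import base64
import json


def decode_client_principal(header_value: str) -> dict:
    """Decode the Base64-encoded JSON carried in x-ms-client-principal."""
    raw = base64.b64decode(header_value)
    return json.loads(raw.decode("utf-8"))


# Hypothetical sample, shaped like the documented client principal.
sample = {
    "identityProvider": "twitter",
    "userId": "abc123",
    "userDetails": "someuser",
    "userRoles": ["anonymous", "authenticated"],
}

# Build a header value the way the platform would: JSON, then Base64.
header = base64.b64encode(json.dumps(sample).encode("utf-8")).decode("ascii")

principal = decode_client_principal(header)
print(principal["identityProvider"], principal["userRoles"])
```

In a real function you would read the header from the incoming request instead of building it yourself, and treat a missing header as an anonymous user.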

Ok, that’s it, you got me. I’ll go check it out. Where was it again?

The docs are here https://github.com/Azure/static-web-apps-cli

Summary

This article talked about the local emulator for Azure Static Web Apps. It’s really competent and capable of serving up your entire app locally: frontend, API, and even auth.

by Contributed | Jun 9, 2021 | Technology

The following courses will guide you to becoming an Azure Defender for IoT Ninja.

Curriculum

This training program includes over 22 modules. For each module, the post includes a video, and/or a presentation, along with supporting information when relevant: product documentation, blog posts, and additional resources.

The modules are organized into the following groups:

- Overview

- Basic Features

- Deployment

- Sentinel Integration

- Advanced

Check back often as additional items will be published regularly.

Overview

Azure Defender for IoT enables IT and OT teams to auto-discover their unmanaged IoT/OT assets, identify critical vulnerabilities, and detect anomalous or unauthorized behavior — without impacting IoT/OT stability or performance.

Azure Defender for IoT delivers insights within minutes of being connected to the network, leveraging patented IoT/OT-aware behavioral analytics and machine learning to eliminate the need to configure any rules, signatures, or other static IOCs. To capture the traffic, it uses an on-premises network sensor deployed as a virtual or physical appliance connected to a SPAN port or tap. The sensor implements non-invasive passive monitoring with Network Traffic Analysis (NTA) and Layer 7 Deep Packet Inspection (DPI) to extract detailed IoT/OT information in real-time.

This section provides background information on IoT and OT networks and an overview of the Microsoft Azure Defender for IoT platform.

Basic Features

Learn about the core features of the platform including asset discovery, deployment options, reporting, alert handling, event timeline, risk assessment, attack vector simulations, and data mining and baselining.

Deployment

This section provides details on the deployment and tuning specifics. Learn about the differences between on-premises-only and cloud-connected options. Walk through the licensing components within the Azure portal.

Sentinel Integration

For cloud-connected options, remote sensors will send logging and analysis data to Azure. Once in the cloud, logging and asset data may be forwarded to Sentinel. All of the tools within Sentinel become available including automation/playbooks, workbooks, threat hunting and analytics, incident handling, notebooks, and more.

Advanced

Learn about advanced features and integrations including custom alerts, MITRE framework, enterprise data integration, large scale deployments, SOC integration, and more.

Azure Defender for IoT Product Documentation

You may find product documentation in the Azure portal:

by Contributed | Jun 9, 2021 | Technology

We have released for general availability Microsoft.Data.SqlClient 3.0. This .NET Data Provider for SQL Server provides general connectivity to the database and supports all the latest SQL Server features for applications targeting .NET Framework, .NET Core, and .NET Standard.

To try out the new package, add a NuGet reference to Microsoft.Data.SqlClient in your application.

If you’ve been following our preview releases, you know we’ve been busy working to add features and improve stability and performance of the Microsoft.Data.SqlClient library.

Some of the highlights of new features in 3.0 over the 2.1 release of Microsoft.Data.SqlClient include:

There are a few minor breaking changes in 3.0 over previous releases.

- .NET Framework 4.6.1 is the new minimum .NET Framework version supported

- The User Id connection property now requires Client Id instead of Object Id for User-Assigned Managed Identity when using the Active Directory Managed Identity authentication option

- SqlDataReader now returns a DBNull value instead of an empty byte[] for RowVersion/Timestamp values. Legacy behavior can be enabled by setting an AppContext switch.

For the full list of added features, fixes, and changes in Microsoft.Data.SqlClient 3.0, please see the Release Notes.

Again, to try out the new package, add a NuGet reference to Microsoft.Data.SqlClient in your application. If you encounter any issues or have any feedback, head over to the SqlClient GitHub repository and submit an issue.

David Engel

by Contributed | Jun 9, 2021 | Technology

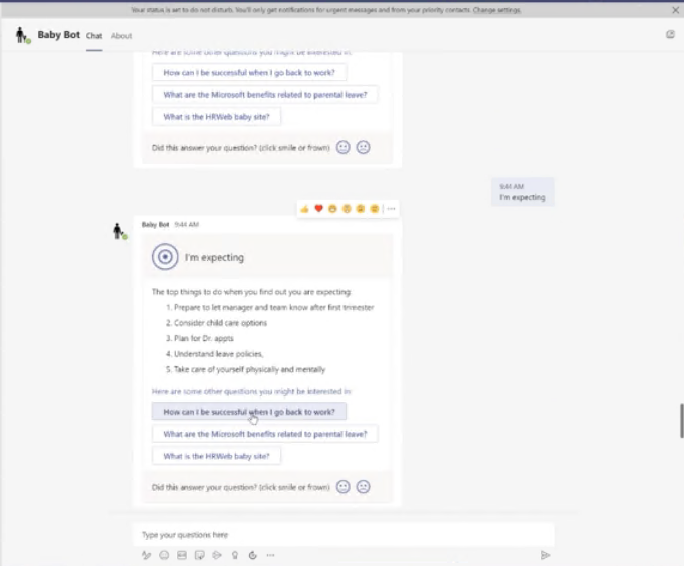

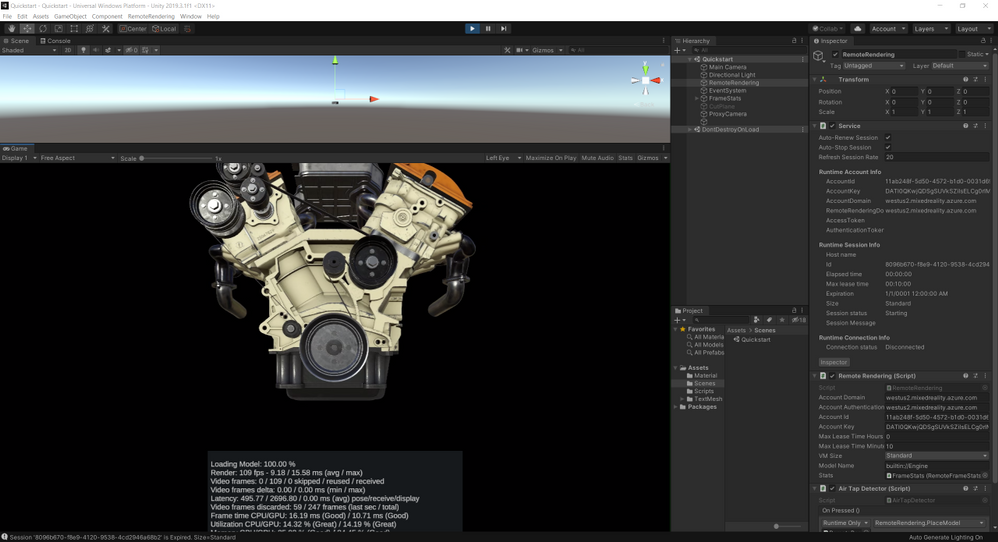

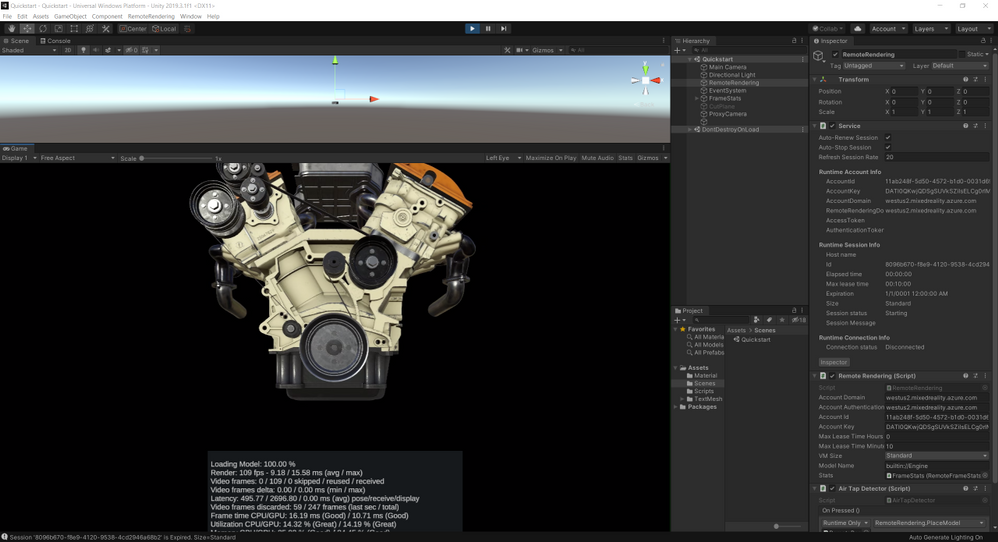

Mixed reality merges the digital and physical worlds. A key piece of the digital world is visualizing 3D models. Being able to view and interact with valuable 3D assets in mixed reality is improving workflows across many industries.

Azure Remote Rendering allows you to view these 3D models in mixed reality without the limitation of edge compute.

What is Azure Remote Rendering

Remote Rendering is a Mixed Reality Azure service that enables you to render high quality, interactive 3D content in Azure and stream it to devices like HoloLens 2 in real time. This lets you experience your 3D content in mixed reality with full detail, going beyond the limits of low powered devices. We offer an intuitive SDK backed by a powerful cloud service that makes it easy for you to integrate into your existing apps or any new apps.

Why use Azure Remote Rendering

As industries move to take advantage of mixed reality, being able to view complex 3D models unlocks a ton of business value. While HoloLens 2 is an incredible, untethered computer, it’s still limited in its processing power. To work around the limitation, designers and developers have traditionally resorted to a process called decimation where detail is removed from a model to enable visualization on mixed reality headsets. Decimation not only simplifies the model but can also take a large amount of time.

With Remote Rendering, you can skip the decimation process and view your complex 3D models in HoloLens 2 without any loss of detail.

Azure Remote Rendering Use Cases

Remote Rendering can bring value across many industries that require complex 3D models. Two use cases I want to highlight are 3D design review and layout visualization.

3D Design Review

Whether you are building a car engine or designing a new skyscraper, the ability to view the model in 3D and in mixed reality allows you to see how the product fits together. In addition to the benefits of viewing your product in 3D and spotting any issues, using Remote Rendering can create common understanding and speed up decision making, without having to go through a time intensive decimation process.

Layout Visualization

Imagine you are designing a factory line for a new product. With Remote Rendering and HoloLens 2, you can view the entire factory line in the real space to easily spot any errors, misalignments or missing pieces, thereby saving valuable time and avoiding costly rework.

Learn Module

We created a Microsoft Learn module to help you get started with Remote Rendering! In this module, we explore foundational concepts for Remote Rendering and take you through the process of creating the Azure resource followed by rendering a model in Unity. No device is required to complete the module; however, you’re welcome to deploy to a HoloLens to try out the experience in your real-world environment.

Check out the video preview and visit aka.ms/learn-arr to get started!

by Contributed | Jun 9, 2021 | Technology

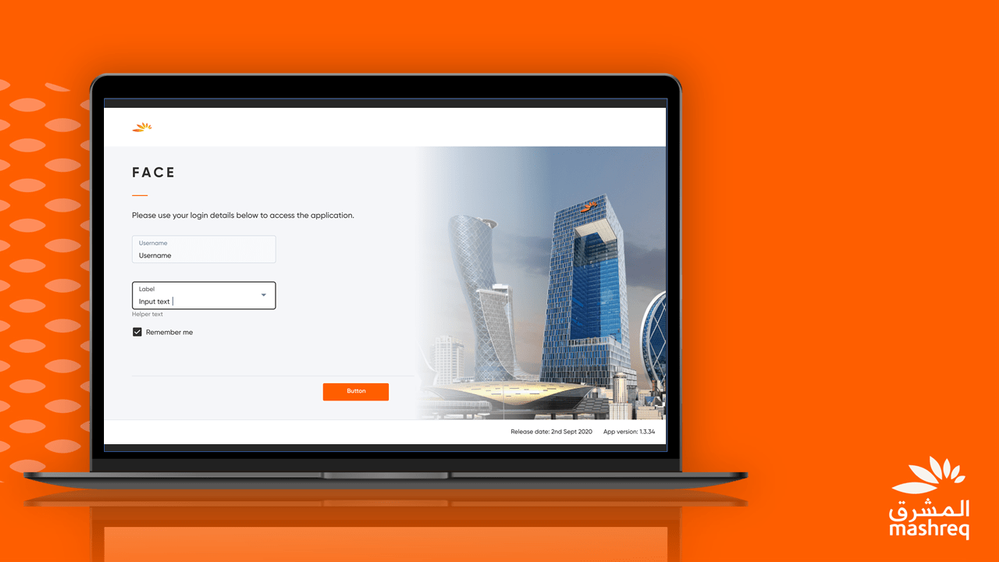

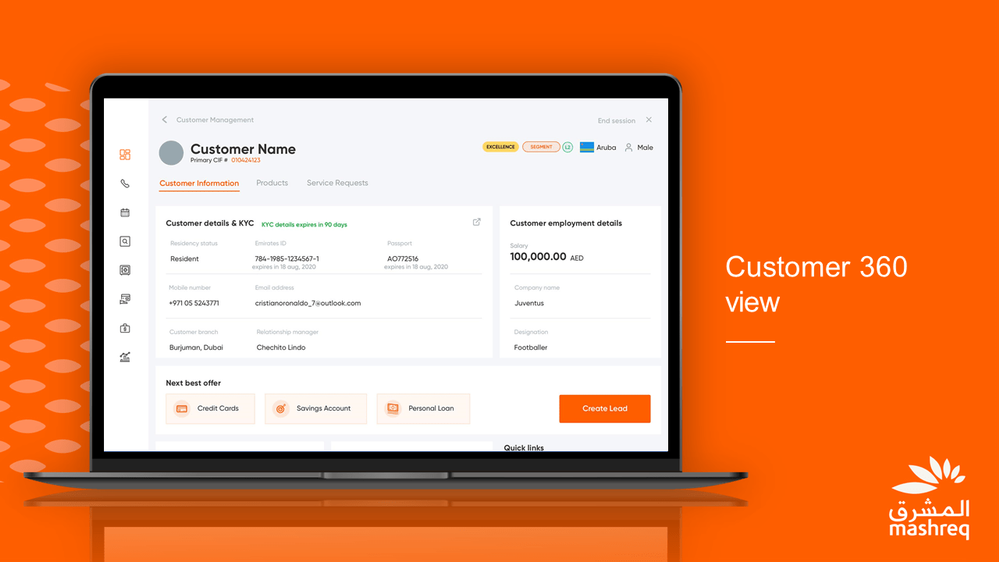

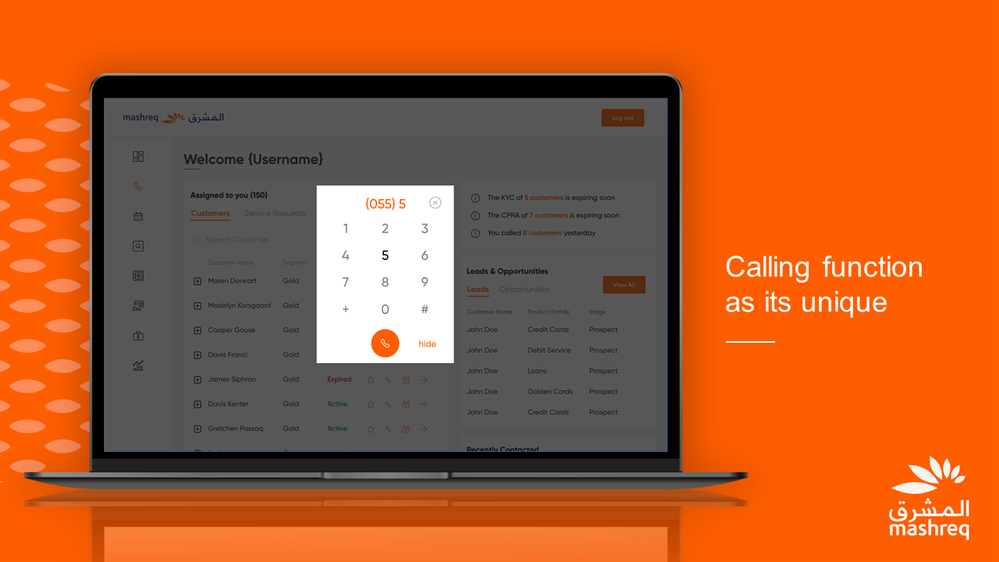

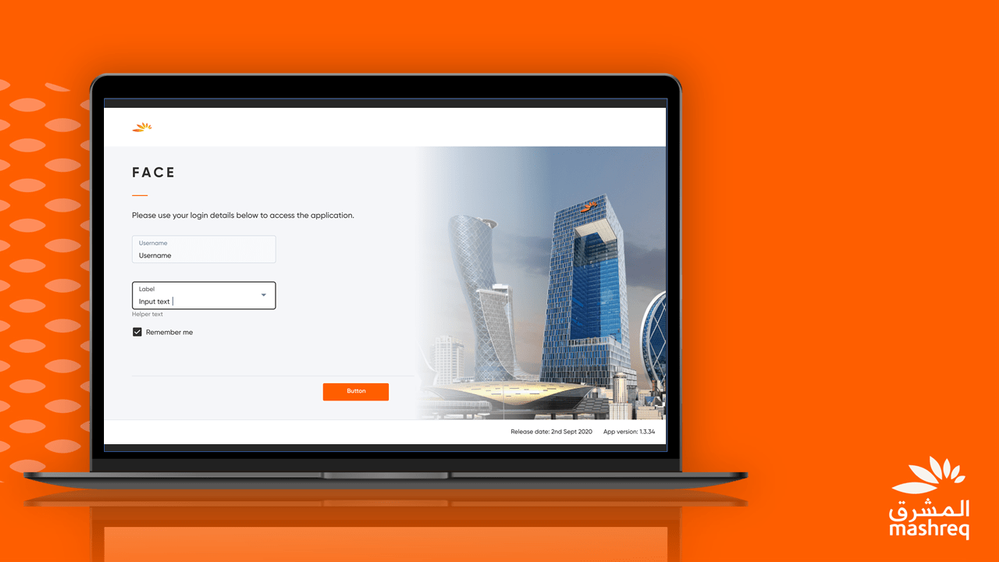

Introduction

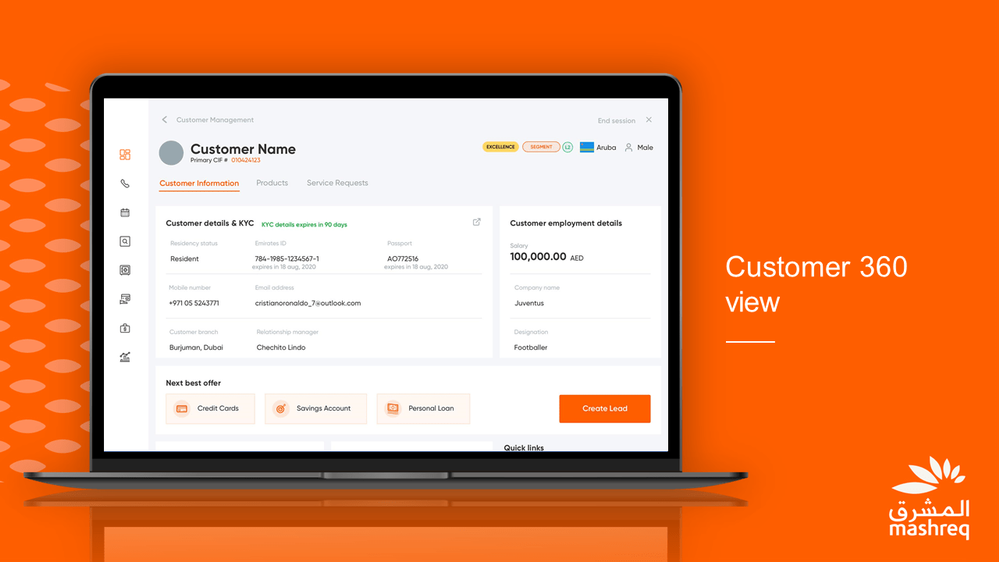

Mashreq is the oldest regional bank based in the UAE, with a strong presence in most GCC countries and a leading international network with offices in Asia, Europe, and the United States. Based in Dubai, they have 15 domestic branches and 11 international ones, with more than 2,000 Windows devices deployed across all of them. Mashreq is a leader in digital transformation in the banking sector. In 2019, they started their digital transformation journey with Microsoft 365 and Dynamics 365, and later switched to Surface Pro devices for their frontline employees.

Mashreq developed an application, the Universal Banker App (UB App), with React Native to empower frontline employees working at branches to serve customers across a broad range of inquiries and journeys. The application helped them get closer to the customer, improving the customer experience and reducing service time. After the success in one business unit, they decided to deploy the tool to all other business groups. However, they faced several challenges:

- The UB App was built for Android, while the other business groups were using Windows PCs and Microsoft Surface devices.

- The UB App was optimized for touch input, which is best for mobile devices, while the other business groups often work with a mouse and keyboard setup.

- The Mashreq development team had limited experience with Windows native development.

Thanks to React Native for Windows, Mashreq was able to address all these challenges in a very short time and developed the Mashreq FACE App for the Windows platform.

Reusing the existing investments to deliver a first-class experience on Windows

React Native for Windows has enabled the development team to reuse the assets they built with React Native for the Android application. “React Native for Windows allowed us to extend the same experience of the original Android application with maximum code reusability,” said Anubhav Jain, Digital Product Lead at Mashreq. Thanks to the modularity provided by React Native, the development team was able to build a native Windows experience tailored for the other business groups by utilizing the existing components they had built for the Android version.

Thanks to the investments made by Microsoft in React Native, it is no longer a mobile-only cross-platform framework; it’s also a great solution for cross-platform scenarios on the desktop. This has enabled Mashreq to provide a first-class experience to the employees who interact with the application on Windows, whether they are using PCs optimized for mouse and keyboard or belong to departments that have adopted Microsoft Surface to provide a great touch experience.

React Native for Windows has enabled the development team at Mashreq to develop with their existing workforce and allowed them to iterate faster on the project targeting multiple platforms.

React Native for Windows has enabled Mashreq not only to reuse their existing skills and code to bring their application to Windows, but also to enhance it by tailoring it with specific Windows features.

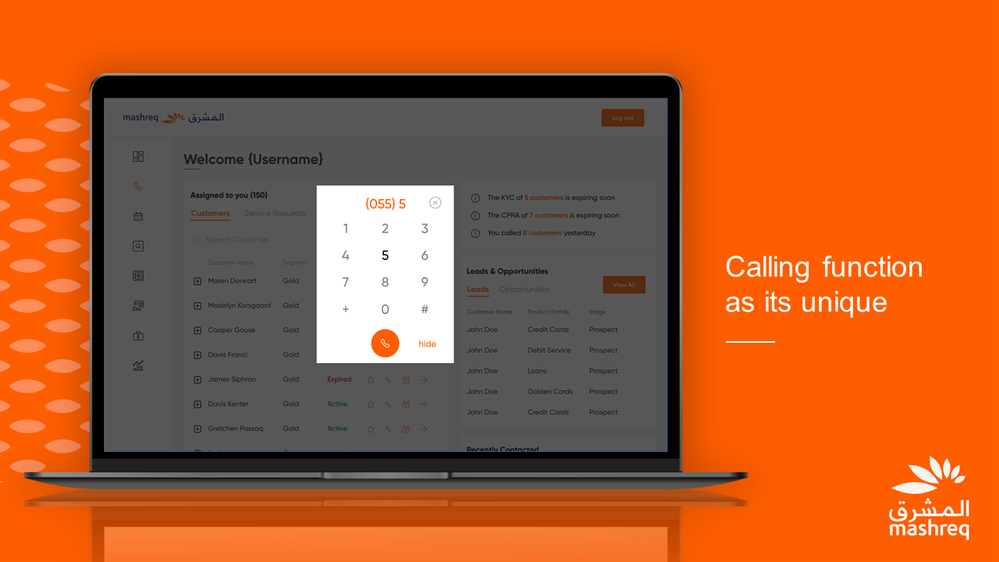

As part of their digital transformation journey, Mashreq has also deployed Microsoft Teams as the official communication app, both internally and for external customers. The development team has integrated Microsoft Teams into the FACE App on Windows, enabling employees to call customers with just one click using Microsoft Teams communication capabilities.

Since React Native for Windows generates a native Windows application, it empowers developers to support a wide range of Windows-only scenarios, like interacting with specialized hardware. This has enabled Mashreq to implement specialized biometric authentication in FACE. By connecting to various types of biometric devices (such as card readers and fingerprint readers), Mashreq can validate customer information simply by scanning the government-issued ID card and the customer’s fingerprint. By working closely with Microsoft, Mashreq has been able to integrate into the Windows version of the FACE application the components required to enable the biometric authentication process while complying with government regulations. This integration makes it possible to securely process financial and non-financial transactions.

Conclusion

Thanks to React Native for Windows, Mashreq will be able to seamlessly evolve its apps on Android and Windows while continuing its digital transformation journey and gradually adopting more Teams- and Windows-specific capabilities.

by Scott Muniz | Jun 9, 2021 | Security

This article was originally posted by the FTC. See the original article here.

Every day, the FTC is working to protect people from fraud. Today, the FTC announced that it returned $30 million in one of these cases.

The FTC reached a settlement with Career Education Corp. (CEC) over how the company promoted its schools. According to the FTC, CEC bought sales leads from companies that used deceptive websites to collect people’s contact information. The websites seemed to offer help finding jobs, enlisting in the military, and getting government benefits. Instead, the FTC says CEC used the information collected by these websites to make high-pressure sales calls to market its schools.

The FTC is mailing 8,050 checks averaging more than $3,700 each to people who paid thousands of dollars to CEC after engaging with one of these websites. The checks will expire 90 days after mailing, on September 9, 2021. If you have questions about these refunds, call 1-833-916-3603.

The FTC’s interactive map shows how much money and how many checks the FTC mailed to each state in this case, as well as in other recent FTC cases. There are also details about the FTC’s refund process and a list of recent cases that resulted in refunds at ftc.gov/refunds.

If you want to report a fraud, scam, or bad business practice to the FTC, visit ReportFraud.ftc.gov.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Jun 9, 2021 | Technology

I am part of the team working on the digital transformation of Microsoft campuses across the globe. The idea is to provide experiences like room occupancy, people count, thermal comfort, etc. to all our employees. To power these experiences, we deal with real estate such as buildings, the IoT devices and sensors installed in those buildings, and of course employees. Our system ingests data from all kinds of IoT devices and sensors, then processes and stores it in Azure Digital Twins (ADT for short).

Since we are dealing with Microsoft buildings across the globe, which results in a lot of data, customers often ask us about using ADT at scale: the challenges we faced and how we overcame them.

In this article, we share how we worked with ADT at scale. We touch upon the areas that we focused on:

- Our approach to handling:

  - Model, Twin, and Relationship limits that impact schema and data.

  - Transaction limits (DT API, DT Query API, etc.) that deal with continuous workload.

- Scale testing ADT under load.

- Design optimizations.

- Monitoring and alerting for a running ADT instance.

So, let’s begin our journey at scale.

ADT Limits

Before we get into how to deal with scale, let’s understand the limits that ADT imposes. ADT has various limits, categorized as Functional Limits and Rate Limits.

Functional limits refer to the limits on the number of objects that can be created like Models, Twins, and Relationships to name a few. These primarily deal with the schema and amount of data that can be created within ADT.

Rate limits refer to the limits placed on ADT transactions. Every developer should be aware of them, because the code we write directly consumes these rates.

Many of the limits defined by ADT are “Adjustable”, which means the ADT team is open to changing them based on your requirements via a support ticket.

For further details and a full list of limitations, please refer to Service limits – Azure Digital Twins | Microsoft Docs.

Functional Limits (Model, Twin and Relationships)

The first step in dealing with scale is to figure out whether ADT will be able to hold the entire dataset that we want it to. This starts with the schema, followed by the data.

Schema

The first step, as with any system, is to define the schema, which is the structure needed to hold the data. In ADT, the schema is defined in terms of Models. Once the Models were defined, it was straightforward to see that the number of Models was within the ADT limits.

While the initial set of Models may be within the limit, it is also important to ensure that there is enough capacity left for future enhancements like new models or different versions of existing models.

One learning from this exercise was to regularly clean up old Models and old Model versions after they are decommissioned, to free up capacity.

Data

Once the schema was taken care of, we checked the amount of data that we wanted to store. For this, we looked at the number of Twins and the Relationships needed for each Twin. There are limits for incoming and outgoing relationships, so we needed to assess their impact as well.

During the data check, we ran into a challenge: one twin had more incoming relationships than the ADT limits allow. This meant that we had to go back and modify the original schema. We restructured it by removing the incoming relationship and creating a property instead, flattening the schema.

To elaborate on our scenario: we were trying to create a relationship linking Employees to the Building where they sit and work. For some bigger buildings, the number of employees exceeded the supported ADT limit for incoming relationships, so we added a property to the Employee model that refers to the Building directly, instead of a relationship.
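As a rough illustration of that restructuring (not the team’s actual models), the two shapes could look like the DTDL interfaces below, written here as Python dictionaries. The model IDs, the relationship name `worksIn`, and the property name `buildingId` are all hypothetical.

```python
import json

# Hypothetical model IDs and names, for illustration only.
BUILDING_MODEL_ID = "dtmi:example:Building;1"

# Before: every Employee twin points at its Building through a relationship,
# so a large building accumulates one incoming relationship per employee.
employee_with_relationship = {
    "@id": "dtmi:example:Employee;1",
    "@type": "Interface",
    "@context": "dtmi:dtdl:context;2",
    "contents": [
        {"@type": "Relationship", "name": "worksIn", "target": BUILDING_MODEL_ID},
    ],
}

# After: the link is flattened into a plain property on the Employee model,
# so the Building twin no longer receives an incoming relationship per employee.
employee_with_property = {
    "@id": "dtmi:example:Employee;2",
    "@type": "Interface",
    "@context": "dtmi:dtdl:context;2",
    "contents": [
        {"@type": "Property", "name": "buildingId", "schema": "string"},
    ],
}


def relationship_names(model: dict) -> list:
    """Return the names of all Relationship entries in a DTDL interface."""
    return [c["name"] for c in model["contents"] if c["@type"] == "Relationship"]


print(relationship_names(employee_with_relationship))
print(relationship_names(employee_with_property))
print(json.dumps(employee_with_property, indent=2))
```

The trade-off is that the link is no longer traversable as a graph edge. Under these assumed names, finding the employees in a building becomes a property filter in the query rather than a relationship join.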

With that out of the way, we moved on to checking the number of Twins. Keep in mind that the Twin limit applies to all kinds of twins, including those with incoming and outgoing relationships. Estimating the number of twins in our system was easier, as we knew the number of buildings and other related data that would flow into the system.

As in the case of Models, we also looked at our future growth to ensure we have enough buffer to cater to new buildings in the future.

Pro Tip: We wrote a tool to simulate the creation of twins and relationships as per our requirements, to test out the limits. The tool was also of great help in benchmarking our needs. Don’t forget to clean up afterwards.
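The tool itself is not shown in the article. As a minimal offline sketch of the same idea, the snippet below generates synthetic employee-to-building links and flags any building whose incoming relationship count would exceed a limit. The function names, the campus sizes, and the limit value are assumptions for illustration; take the real limit from the ADT service limits page.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, List, Tuple


def simulate_links(buildings: Dict[str, int]) -> Iterator[Tuple[str, str]]:
    """Yield (employee_twin_id, building_twin_id) pairs for a synthetic campus."""
    for building_id, headcount in buildings.items():
        for n in range(headcount):
            yield f"{building_id}-emp-{n}", building_id


def over_incoming_limit(links: Iterable[Tuple[str, str]], limit: int) -> List[str]:
    """Return the target twins whose incoming relationship count exceeds limit."""
    incoming = Counter(target for _source, target in links)
    return sorted(twin for twin, count in incoming.items() if count > limit)


# Hypothetical campus: building id -> number of employees.
campus = {"bldg-a": 120, "bldg-b": 6000}

# Placeholder limit; look up the current value before relying on it.
flagged = over_incoming_limit(simulate_links(campus), limit=5000)
print(flagged)
```

Running a dry simulation like this before creating anything in a live instance also means there is nothing to clean up.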

Rate Limits (Twin APIs / Query APIs / Query Units /…)

Now that we knew ADT could handle the data we were going to store, the next step was to check the rate limits. Most ADT rate limits are per second, e.g., the Twin API operations RPS limit, Query Units consumed per second, etc.

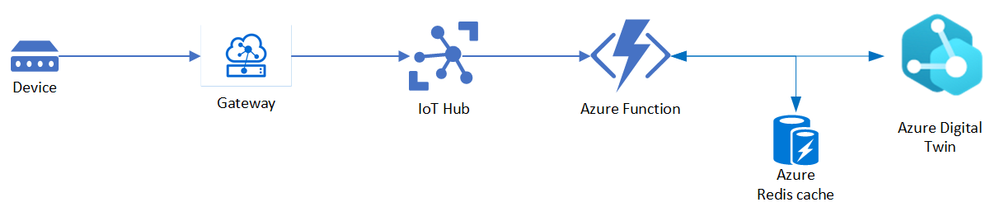

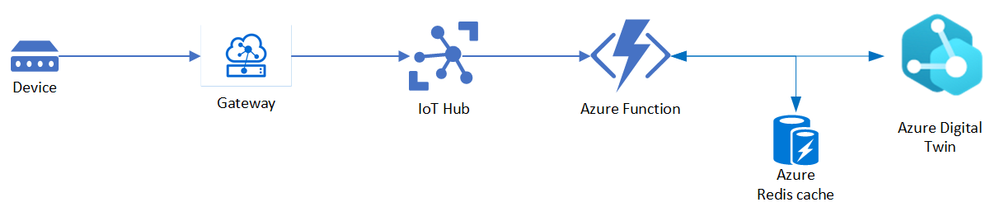

Before we get into details about rate limits, here’s a simplified view of our main sensor reading processing pipeline, which is where the bulk of processing happens and which therefore contributes most of the load. The main processing component is implemented as an Azure Function that sits between IoT Hub and ADT and ingests the sensor readings into ADT.

This is pretty similar to the approach suggested by the ADT team for ingesting IoT Hub telemetry. Please refer to Ingest telemetry from IoT Hub – Azure Digital Twins | Microsoft Docs for more information on the approach and a few implementation details.

To identify the load that our Azure Function will process continuously, we looked at the sensor readings that will be ingested across the buildings. We also looked at the frequency at which each sensor sends a reading, which gave us a specific load per hour and per day.

From this we identified our daily load, which in turn gave us a per-second load, or RPS as we call it. We expected to process around 100 sensor readings per second. Our load was mostly consistent throughout the day, as we process data from buildings all over the world.

| Metric | Value |
| --- | --- |
| Load (RPS) | 100 |
| Load (per day) | ~8.5 million readings |

Once the load data was available, we needed to convert the load into the total number of ADT operations. So, we identified the number of ADT operations we would perform for every sensor reading that is ingested. For each reading, we identified the following items:

- Operation type, i.e., Twin API vs. Query API operation.

- Number of ADT operations required to process every reading.

- Query charge (or Query Unit consumption) for Query operations. This is available as part of the response header returned by the ADT client method.

This analysis gave us the following per-reading numbers.

| Metric | Value |
| --- | --- |
| Twin API operations per reading | 2 |
| Query API operations per reading | 2 |
| Avg Query Units consumed per query | 30 |

Multiplying the above numbers by the load gave us the expected ADT operations per second.
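As a worked example of that multiplication, here is a minimal Python sketch using the numbers from the tables above. The class and function names are ours, chosen for illustration, and the result covers only the sensor reading pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerReadingCost:
    """ADT operations needed to process one sensor reading."""
    twin_api_ops: int
    query_api_ops: int
    avg_query_units: int  # average Query Units consumed by one query


def expected_adt_load(readings_per_second: int, cost: PerReadingCost) -> dict:
    """Convert an ingestion rate into per-second ADT consumption."""
    twin_api_rps = readings_per_second * cost.twin_api_ops
    query_api_rps = readings_per_second * cost.query_api_ops
    return {
        "twin_api_rps": twin_api_rps,
        "query_api_rps": query_api_rps,
        # Every query consumes Query Units, so scale by the query rate.
        "query_units_per_second": query_api_rps * cost.avg_query_units,
    }


# Numbers taken from the tables above: 100 readings/s, 2 + 2 operations, 30 QU.
load = expected_adt_load(100, PerReadingCost(2, 2, 30))
print(load)
```

With these inputs the pipeline alone accounts for 200 Twin API operations, 200 Query API operations, and 6,000 Query Units per second.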

Apart from the sensor reading processing, we also had a few other components, such as background jobs and APIs for querying data. We added the ADT usage from these components on top of the processing numbers calculated above to get the final numbers.

Armed with these numbers, we calculated the actual ADT consumption we expected to hit when we go live. Since these numbers were within the ADT rate limits, we were good.

Again, as with Models and Twins, we must ensure there is some buffer; otherwise future growth will be restricted.

Additionally, when a service limit is reached, ADT will throttle the requests. For more suggestions from the ADT team on working with limits, please refer to Service limits – Azure Digital Twins | Microsoft Docs.

Scale

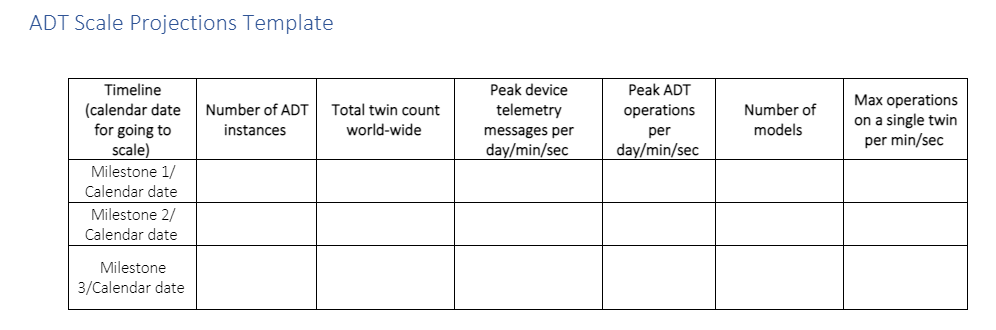

For future scale requirements, the first thing we did was to work out our future load projections. We expected to grow to up to twice the current rate in the next 2 years. So, we simply doubled all the numbers we got above to arrive at our future scale needs.
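The projection and the buffer check can be sketched in a few lines of Python. The current figures are the pipeline-only numbers derived from the tables earlier in this section. The limit values are hypothetical placeholders, not the real ADT service limits, so look up the actual values in the service limits documentation.

```python
def project(current: dict, growth_factor: int = 2) -> dict:
    """Scale every per-second figure by the expected growth."""
    return {name: value * growth_factor for name, value in current.items()}


def utilisation(projected: dict, limits: dict) -> dict:
    """Fraction of each limit the projected load would consume."""
    return {name: projected[name] / limits[name] for name in projected}


# Pipeline-only load derived earlier (100 readings/s, 2 + 2 ops, 30 QU per query).
current_load = {
    "twin_api_rps": 200,
    "query_api_rps": 200,
    "query_units_per_second": 6000,
}

# HYPOTHETICAL limits for illustration only; not the real ADT limits.
assumed_limits = {
    "twin_api_rps": 1000,
    "query_api_rps": 1000,
    "query_units_per_second": 10000,
}

future_load = project(current_load)  # twice the current rate
usage = utilisation(future_load, assumed_limits)
needs_attention = sorted(name for name, used in usage.items() if used > 1.0)

for name, used in usage.items():
    print(f"{name}: {future_load[name]} per second, {used:.0%} of assumed limit")
print("Exceeds assumed limit:", needs_attention)
```

Any metric that lands above 100% of its limit, as Query Units do under these assumed values, is a candidate for an adjustable-limit conversation with the ADT team.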

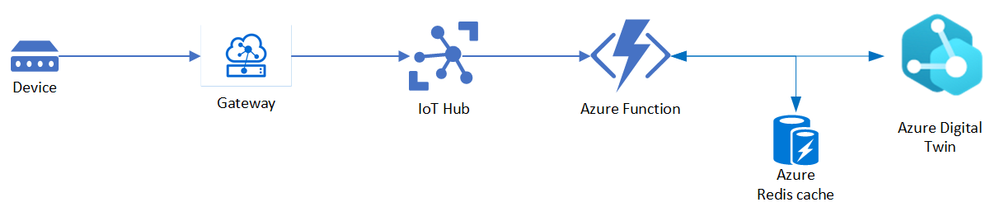

The ADT team provides a template (sample below) that helps in organizing all this information in one place.

Once the load numbers and future projections were available, we worked with the ADT team on the adjustable limits and on getting the green light for our scale numbers.

Performance Test

With these numbers in hand and the green light from the ADT team, we ran performance tests on our system to validate the scale and the ADT limits. We used AKS-based clusters to simulate the ingestion of sensor readings, while also running all our other background jobs at the same time.

We ran multiple rounds of performance tests at different loads, such as X and 2X, and gathered metrics on ADT performance. We also ran endurance tests, in which we applied varying loads continuously for a day or two to measure performance.

Design Optimization (Cache / Edge Processing)

Typically, sensors send a lot of unchanged readings. For example, a motion sensor will report false for a long duration, and once it changes to true it will most likely stay true for some time before going back to false. As such, we don’t need to process every reading, and such “no change” readings can be filtered out.

With this principle in mind, we added a cache component to our processing pipeline, which reduced the load on ADT. Using the cache, we were able to reduce our ADT operations by around 50%. This let us support a higher load, with the added advantage of faster processing.
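A minimal sketch of the “no change” filter, assuming an in-process dictionary as the cache. The article does not say which cache technology was used, and a shared cache would be needed once the function scales out to several instances. The class name and sensor IDs are hypothetical.

```python
class LastValueCache:
    """Remembers the last value seen per sensor to drop unchanged readings."""

    def __init__(self) -> None:
        self._last_values: dict = {}

    def has_changed(self, sensor_id: str, value) -> bool:
        """Return True (and remember the value) only when the reading differs."""
        if sensor_id in self._last_values and self._last_values[sensor_id] == value:
            return False  # same as last time: no ADT call needed
        self._last_values[sensor_id] = value
        return True


# A motion sensor that mostly repeats itself.
readings = [
    ("motion-1", False),
    ("motion-1", False),
    ("motion-1", True),
    ("motion-1", True),
    ("motion-1", False),
]

cache = LastValueCache()
forwarded = [reading for reading in readings if cache.has_changed(*reading)]
print(f"{len(forwarded)} of {len(readings)} readings forwarded to ADT")
```

Only readings that pass the filter go on to the Twin API and Query API calls, which is where the reduction in ADT operations comes from.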

Another change we made to optimize our sensor traffic was to add edge processing. We introduced an Edge module that acts as the gateway between the devices where the readings are generated and IoT Hub, which receives and stores them.

The Gateway module processes the data closer to the physical devices and helped us filter out certain readings based on defined rules, e.g., separating health readings from telemetry readings. We also used this module to enrich the sensor readings sent to IoT Hub, which helped reduce the overall processing time.
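The filter-and-enrich step can be illustrated with a short Python sketch. The message fields (`kind`, `device_id`), the metadata lookup, and the function name are all hypothetical; the article does not describe the actual message schema or rule format used by the Gateway module.

```python
from typing import Optional

# HYPOTHETICAL device metadata known at the edge, used to enrich readings.
DEVICE_METADATA = {
    "dev-42": {"building": "B1", "floor": 3},
}


def process_at_edge(message: dict) -> Optional[dict]:
    """Drop health readings and enrich telemetry before it goes upstream."""
    if message.get("kind") == "health":
        return None  # rule: health readings are not forwarded as telemetry
    enriched = dict(message)  # copy so the caller's message is untouched
    enriched.update(DEVICE_METADATA.get(message["device_id"], {}))
    return enriched


incoming = [
    {"device_id": "dev-42", "kind": "telemetry", "motion": True},
    {"device_id": "dev-42", "kind": "health", "battery": 87},
]

outgoing = [m for m in map(process_at_edge, incoming) if m is not None]
print(outgoing)
```

Attaching the building context at the edge means the downstream function does not have to look it up again for every reading.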

Pro-active Monitoring and Alerts

All said and done, we have tested everything at scale and things look good. But does that mean we will never run into a problem or never reach a limit? The answer is “No”. Since there is no guarantee, we need to prepare ourselves for such an eventuality.

ADT provides various Out of the Box (OOB) metrics that help in tracking the Twin count and the RPS for Twin API and Query API operations. We can always write our own code to track more metrics if required, but in our case we didn’t need to, as the OOB metrics met our requirements.

To proactively monitor system behavior, we created a dashboard in Application Insights for monitoring ADT, with widgets that track consumption against each of the important ADT limits. Here’s what our widgets look like:

| Widget | Min | Avg | Max |
| --- | --- | --- | --- |
| Query API Operations RPS | 50 | 80 | 400 |
| Twin API Operations RPS | 100 | 200 | 500 |

Twin and Model Count

| Model | Model Consumption % | Twin Count | Twin Consumption % |
| --- | --- | --- | --- |
| 100 | 10% | 80 K | 40% |

To be notified of any discrepancy in the system, we have alerts configured to raise a flag if we consistently hit the ADT limits over a period. As an example, we have alerts defined at various levels for Twin count: a warning at 80% of capacity and a critical error at 95%, so that the team can act.
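The threshold logic behind such alerts is simple enough to sketch in Python. This only illustrates the 80% and 95% levels mentioned above and is not how the alerts were actually configured. The 200,000 twin limit is an assumption, back-calculated from the sample widget (80 K twins shown as 40%), and the function name is ours.

```python
def twin_capacity_alert(
    twin_count: int,
    twin_limit: int,
    warning_pct: float = 80.0,
    critical_pct: float = 95.0,
) -> str:
    """Classify twin usage as 'ok', 'warning' or 'critical'."""
    used_pct = 100.0 * twin_count / twin_limit
    if used_pct >= critical_pct:
        return "critical"
    if used_pct >= warning_pct:
        return "warning"
    return "ok"


ASSUMED_TWIN_LIMIT = 200_000  # assumption: derived from the sample widget values

for count in (80_000, 165_000, 195_000):
    print(count, twin_capacity_alert(count, ASSUMED_TWIN_LIMIT))
```

A production alert would also require the condition to hold over a period before firing, as described above, so that a short spike does not page anyone.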

Here is an example of how this helped us: once, due to a bug (or was it a feature?), a piece of code kept adding unwanted Twins overnight. In the morning we started getting alerts about Twin capacity crossing the 80% limit, which notified us of the issue and led to us fixing it and cleaning up.

Summary

I will leave you with a simple summary: when dealing with ADT at scale, work within the various ADT limits and design your system with them in mind. Plan to performance test your system to catch issues early, and incorporate changes as required. Set up regular monitoring and alerting so that you can track system behavior continuously.

Last but not least, keep in mind that dealing with scale is not a one-time thing but continuous work: you need to keep evolving, testing, and optimizing your system as it grows and as you add more features to it.
