by Contributed | Jun 25, 2021 | Technology

This article is contributed. See the original author and article here.

Guest blog by Electronic and Information Engineering Project team at Imperial College.

Introduction

We are a group of students studying engineering at Imperial College London!

Teams Project Repo and Resources

For a full breakdown of our project see https://r15hil.github.io/ICL-Project15-ELP/

Project context

The Elephant Listening Project (ELP) began in 2000; our client, Peter Howard Wrege, took over as director in 2007 (https://elephantlisteningproject.org/).

ELP focuses on helping to protect one of the three types of elephant: the forest elephant, which has a unique lifestyle and is adapted to living in dense vegetation. Forest elephants depend on fruits found in the forest and are known as the architects of the rainforest because they disperse seeds throughout it. They are extremely hard to track because the rainforest canopy is so thick that the forest floor cannot be seen from above, so ELP uses acoustic recorders to track the elephants through their vocalisations. Currently there is one acoustic recorder per 25 square kilometres. Each device can detect elephant calls within a 1 km radius and gunshots within a 2-2.5 km radius of the recording site.

The recordings are written to an SD card, and collecting the cards from all the sites takes a total of 21 days in the forest. This happens every 4 months. Once all the data has been collected, it goes through a simple ‘template detector’, which holds 7 examples of gunshot sounds: cross-correlation analysis is carried out against these examples, and locations in the audio file with a high correlation are flagged as gunshots.

The problem with the simple cross-correlation method is that it produces an extremely large number of false positives, triggered by sounds such as falling tree branches and even raindrops hitting the recording device. As a result, analysing the detections takes 15-20 days; with a better detector, this is predicted to drop to 2-3 days!
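A template detector of the kind described above can be sketched as follows. This is a minimal illustration in Python with NumPy, not ELP's actual detector: the normalisation, the 0.9 threshold and the synthetic "gunshot" (a decaying impulse) are all assumptions made for the example.

```python
import numpy as np

def normalized_xcorr(signal, template):
    """Slide `template` over `signal` and return the normalised correlation at each lag."""
    n = len(template)
    t = template - template.mean()
    t_norm = np.linalg.norm(t)
    scores = np.zeros(len(signal) - n + 1)
    for lag in range(len(scores)):
        window = signal[lag:lag + n]
        w = window - window.mean()
        denom = np.linalg.norm(w) * t_norm
        scores[lag] = float(np.dot(w, t) / denom) if denom > 0 else 0.0
    return scores

def flag_candidates(signal, templates, threshold=0.9):
    """Return the sample offsets where any template correlates above `threshold`."""
    flagged = set()
    for template in templates:
        scores = normalized_xcorr(signal, template)
        flagged.update(np.flatnonzero(scores >= threshold).tolist())
    return sorted(flagged)

# Synthetic example: a decaying impulse hidden in low-level noise at sample 400.
rng = np.random.default_rng(0)
template = np.exp(-np.arange(50) / 10.0)
signal = 0.01 * rng.standard_normal(1000)
signal[400:450] += template
hits = flag_candidates(signal, [template])
```

Any sound with a sharp onset and a short decay correlates well with such a template, which is one way to see why raindrops and falling branches end up flagged alongside real gunshots.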

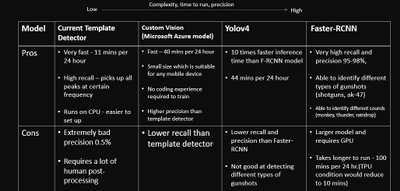

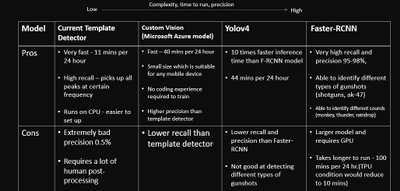

Currently the detector has extremely high recall but very poor precision (0.5%!). The ideal scenario would be to improve the precision by removing these false positives, so that when a gunshot is flagged, it is in fact a gunshot (high precision).
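To put the 0.5% figure in perspective, the short sketch below works through the arithmetic. The function names are our own, and the counts in the last line are illustrative rather than measured.

```python
def precision(true_positives, false_positives):
    """Fraction of flagged events that are real gunshots."""
    return true_positives / (true_positives + false_positives)

def recall(true_positives, false_negatives):
    """Fraction of real gunshots that were flagged."""
    return true_positives / (true_positives + false_negatives)

def false_alarms_per_true_event(p):
    """How many false positives accompany each true positive at precision p."""
    return (1.0 - p) / p

# At 0.5% precision, roughly 199 false alarms have to be reviewed per real gunshot.
template_detector = false_alarms_per_true_event(0.005)

# Illustrative counts only: 5 real gunshots among 1000 flagged events.
example_precision = precision(true_positives=5, false_positives=995)
```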

The expectation is a much better detector than the one in place, one that can detect gunshots with very high precision and reasonable accuracy. Distinguishing between automatic weapons (e.g. AK-47) and shotguns is also important, since automatic weapons are usually used to poach elephants. This is an important first step towards real-time detection.

Key Challenges

The dataset we had was very varied and sometimes noisy! It consisted of thousands of hours of audio recordings of the rainforest and a spreadsheet of the gunshots located in the files. The gunshots we were given varied, some close to the recorder and some far, sometimes multiple shots and sometimes single shots.

A lot of sounds in the rainforest sound like gunshots, but they are relatively easy to distinguish using a frequency representation, which brought us to our initial approach.

Approach(es)

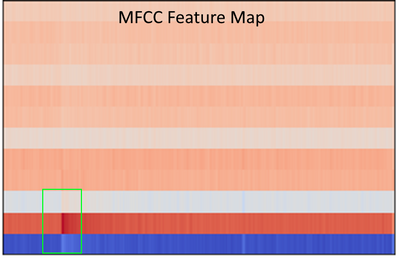

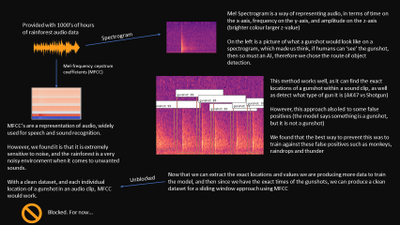

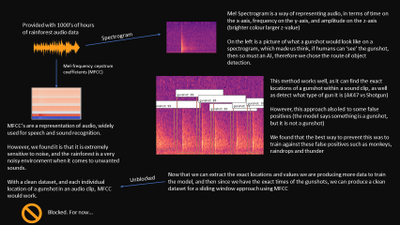

Our first approach was to use a convolutional neural network (CNN), trained on mel-frequency cepstral coefficients (MFCCs).

MFCCs are a well-known way to tackle audio classification with machine learning.

MFCCs extract features from the frequency, amplitude and time components of a sound. However, MFCCs are very sensitive to noise, and because the rainforest contains so many different sounds, testing on 24-hour sound clips did not work. The gunshot data used to train our model also included other sounds (noise), so the model learnt to recognise arbitrary forest background noises instead of gunshots. Because we lacked the time and the expertise in identifying gunshots, checking and relabelling the whole dataset manually so that it contained only clean gunshots was not viable. Hence, we decided to use a computer vision method as a second approach.

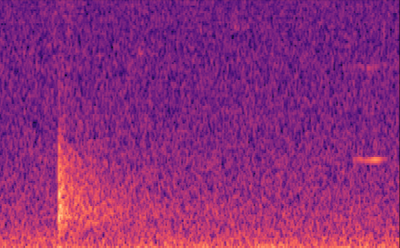

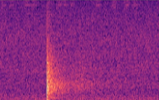

We focused on labelling gunshots on a visual representation of sound called the mel-spectrogram, which allows us to ignore the parts of the audio clip that are just noise and focus specifically on the gunshot.

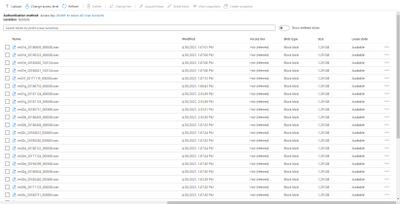

Labelling the data for our computer vision (object detection) model was extremely easy. We used the Data Labelling service on Azure Machine Learning to label gunshots on our mel-spectrograms and build the dataset for training our object detection models. These models (YOLOv4 and Faster R-CNN) use XML annotation files in training, whereas Azure Machine Learning exports the labelled data as a JSON file in COCO format. Converting from COCO to the XML format we needed was straightforward.

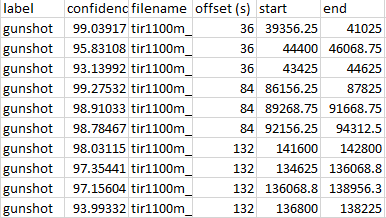

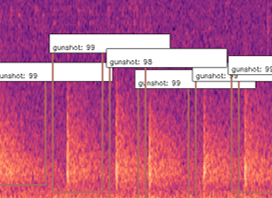

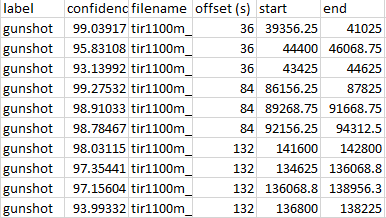

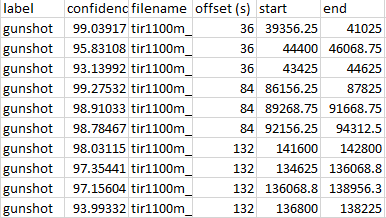

With the new object detectors, we were able to find the exact start and end times of gunshots and save them to a spreadsheet!

This is extremely significant: our system not only detects gunshots but also generates clean, labelled gunshot data, and with this data more standard audio machine learning methods, such as those based on MFCCs, can be used.

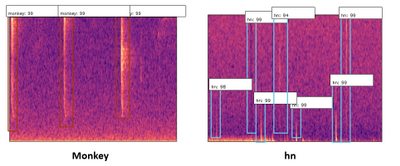

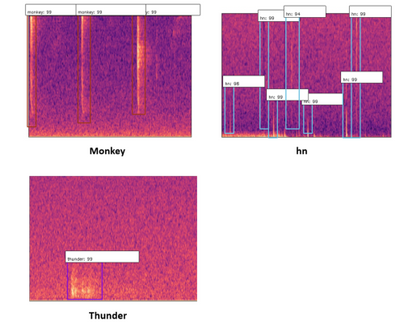

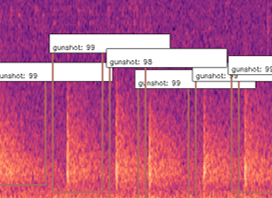

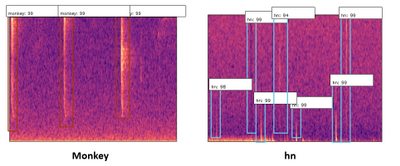

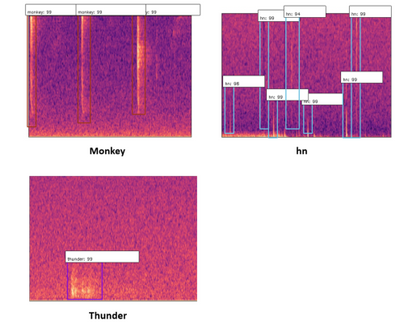

To expand on this, we also found that our detector was picking up “gunshot like” sound events such as thunder, monkey alarm calls and raindrops hitting the detector (‘hn’ in the image below). This meant that we could use that data to train our model further, to say ‘that is not a gunshot, it is a…’, training for the other sound events to be detected too.

Our Design History – Overview

Concept

The concept of our system was to train a machine learning model to classify sound: a sound classification model would detect whether or not there was a gunshot.

Signal Processing

Trying to detect gunshots directly from the sound waveform is hard because it does not give you much information to work with, only amplitude over time. Hence, we needed to do some signal processing to extract some features from the sound.

Initially we began research by reading papers and watching video lectures in signal processing. All sources of research we used can be found in “Code and resources used”.

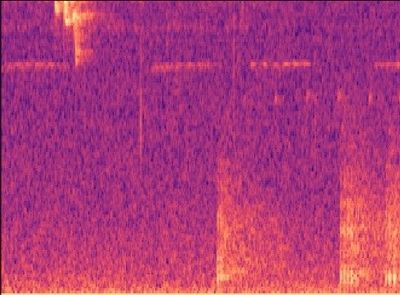

We found two common signal processing techniques for analysing sound: the Mel-Frequency Cepstral Coefficients (MFCCs, on the left below) and the Mel-Spectrogram (on the right below).

Since MFCCs were a smaller representation and most commonly used in audio machine learning for speech and sound classification, we believed it would be a great place to start, and build a dataset on. We also built a dataset with Mel-spectrograms.
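As a rough illustration of what a Mel-spectrogram is, the sketch below computes one with NumPy alone. It is not the project's pre-processing code: the 8 kHz sample rate, FFT size, hop length and number of mel bands are all assumed values, and the test signal is a plain 440 Hz tone rather than rainforest audio. MFCCs would be obtained by additionally applying a discrete cosine transform to these log-mel values.

```python
import numpy as np

def hz_to_mel(hz):
    return 2595.0 * np.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters spaced evenly on the mel scale, shape (n_mels, n_fft // 2 + 1)."""
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2)
    hz_points = mel_to_hz(mel_points)
    bank = np.zeros((n_mels, len(fft_freqs)))
    for m in range(n_mels):
        left, centre, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - left) / (centre - left)
        falling = (right - fft_freqs) / (right - centre)
        bank[m] = np.clip(np.minimum(rising, falling), 0.0, None)
    return bank

def mel_spectrogram(y, sr, n_fft=1024, hop=256, n_mels=40):
    """Return a (n_mels, n_frames) array of mel-band power in decibels."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(y) - n_fft) // hop
    frames = np.stack([y[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    mel_power = mel_filterbank(sr, n_fft, n_mels) @ power.T
    return 10.0 * np.log10(np.maximum(mel_power, 1e-10))

# A 12-second 440 Hz tone stands in for a real audio clip.
sr = 8000
t = np.arange(12 * sr) / sr
clip = np.sin(2 * np.pi * 440.0 * t)
spec = mel_spectrogram(clip, sr)
```

The result is a 2D array (mel band by time frame) that can be rendered as an image, which is what makes the later computer vision approach possible.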

Machine Learning – Multi-layer perceptron (MLP)

The multi-layer perceptron is a common machine learning model used for tasks such as regression and classification. From our knowledge of machine learning, we believed this network would not perform well because of its ‘global approach’ limitation, where all values are passed into the network at once. It would also be extremely complex for the sound representations we are using, as we would have to flatten all the 3D arrays into a single 1D array and input all of this data at once.

Convolutional Neural Network (CNN)

CNNs are mainly applied to visual 2D data, so we do not have to flatten our data as we would for an MLP. A CNN can classify an image to determine whether or not a gunshot is present, since it “scans through” the data looking for patterns, in our case a gunshot pattern. We could use either MFCCs or Mel-spectrograms to train it.

Recurrent Neural Network (RNN)

A recurrent neural network is a special type of network that can recognise sequences, so we believed it would work very well for detecting gunshots. A gunshot typically has an initial impulse followed by a trailing echo; an RNN would be able to learn this pattern and output whether the sound was a gunshot or something else. This would be trained with MFCCs.

Object Detection

Although it usually requires a larger architecture, object detection would work well for detecting gunshots on Mel-spectrograms because of a gunshot’s unique shape. We can detect not only whether a gunshot is present but also how many are present, because object detection classifies regions of the image rather than the entire image as a CNN would.

Implementation – The Dataset

The data we were given was a key factor and had a direct impact on which models worked for us and which did not.

We were given thousands of hours of audio data, each file being 24 hours long. Because we were provided with only ‘relative’ gunshot locations in the audio files, our dataset was very noisy: a gunshot usually occurred within 12 seconds of the given time, and sometimes there were multiple gunshots. This is why we took a 12-second window in our signal processing, to ensure that all of our clips contain the gunshot(s). Given the limited time on this project, it was not realistic to listen to all of the sound clips and relabel the data by ear; gunshots are also sometimes very hard to distinguish from other rainforest sounds by ear, and many of those sounds were new to us. Luckily, in our testing of different machine learning techniques, we found an approach that solves the problem of detecting gunshots in noisy data and also builds a clean dataset for the future!
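The 12-second windowing can be sketched as below. This assumes the window starts at the labelled time and is clamped to the end of the 24-hour file; the function name and the 8 kHz sample rate in the example are hypothetical.

```python
def clip_bounds(label_time_s, sample_rate, window_s=12.0, file_duration_s=24 * 3600):
    """Return (start_sample, end_sample) of the window that begins at the labelled time.

    The start is clamped so the window never runs past the end of the file.
    """
    start_s = max(0.0, min(label_time_s, file_duration_s - window_s))
    start = int(round(start_s * sample_rate))
    end = start + int(round(window_s * sample_rate))
    return start, end

# A label one hour and half a second into the file, at an assumed 8 kHz sample rate.
start, end = clip_bounds(3600.5, sample_rate=8000)

# A label 5 seconds before the end of the file is pulled back to fit a full window.
late_start, late_end = clip_bounds(86395.0, sample_rate=8000)
```

The returned sample indices can be used to slice the audio array before computing the spectrogram for that clip.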

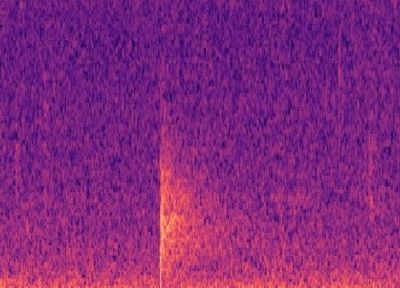

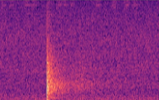

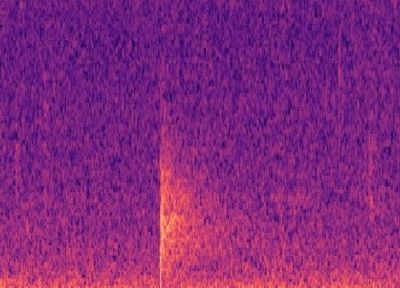

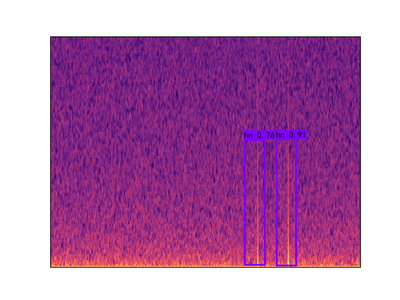

CNN with Mel-Spectrogram

Building a simple CNN with only a few convolutional layers, with a dense layer classifying whether or not there is a gunshot, worked quite well, with precision and recall above 80% on our validation sets! However, this method did not work well on noisy images, that is, Mel-spectrograms containing many different sounds, such as the one below. Although the precision and recall are OK, we cannot guarantee that a gunshot will be the only sound occurring at any one time. Below you can see an example of a gunshot which can be detected using a CNN, and a gunshot that cannot be detected using a CNN.

CNN and RNN with MFCC

This method suffered from a similar noise problem to the CNN with Mel-Spectrogram. However, with a clean dataset, we think an RNN with MFCC would work extremely well!

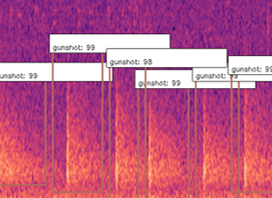

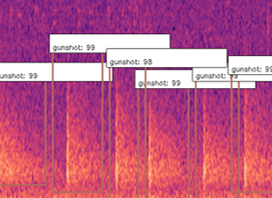

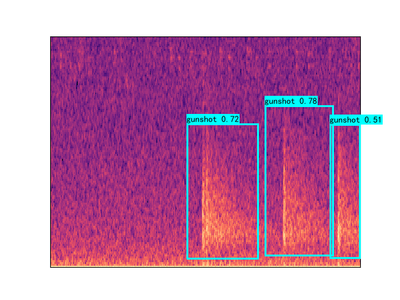

Object Detection with Mel-Spectrogram

Object detection does not classify the entire image as a CNN would; it classifies regions of the image, and so it can ignore other sound events on a Mel-spectrogram. In our testing we found that object detection worked very well in detecting gunshots on a mel-spectrogram, and could even be trained to detect other sound events such as raindrops hitting the acoustic recorder, monkey alarm calls and thunder!

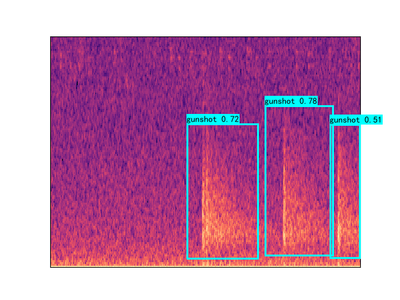

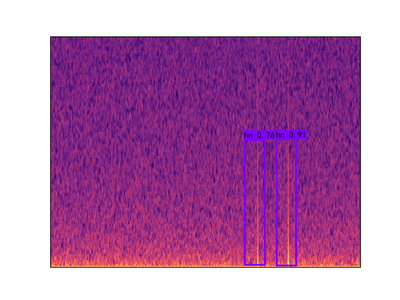

This also allowed us to get the exact start and end times of gunshots, and hence to automatically build a clean dataset containing the exact locations of these sound events, which can be used to train networks in the future. The method also allowed us to count the gunshots by seeing how many were detected in the 12 s window, as you can see below.

Build – Use of cloud technology

In this project, the use of cloud technology allowed us to speed up many processes, such as building the dataset. We used Microsoft Azure to store our thousands of hours of sound files, and Azure Machine Learning Services to train some of our models, pre-process our data and build our dataset!

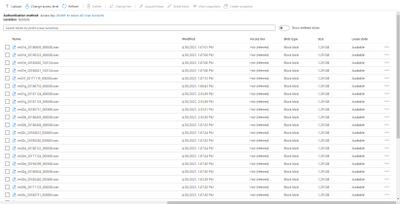

Storage

To store all of our sound files, we used Blob Storage on Azure. This meant that we could import these large 24-hour audio files into Azure Machine Learning Services to build our dataset. Azure Machine Learning Services allowed us to create Jupyter (.ipynb) notebooks to run our scripts, which find the gunshots in the files, extract them and build our dataset.

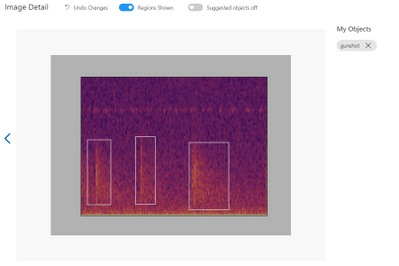

Data labelling

Azure ML also provided us with a Data Labelling service, where we could label gunshots on our Mel-spectrograms and build the dataset for training our object detection models. These models (YOLOv4 and Faster R-CNN) use XML annotation files in training, whereas Azure ML studio exports the labelled data as a JSON file in COCO format. Converting from COCO to the XML format we needed was straightforward.
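A COCO-to-XML conversion along these lines can be sketched with the Python standard library. This is not the project's conversion script. It assumes the XML target is the Pascal VOC layout and that each COCO `bbox` holds absolute pixel values `[x, y, width, height]`; if an export stores normalised coordinates instead, they would need to be multiplied by the image size first. The file name and box values in the example are made up.

```python
import json
import xml.etree.ElementTree as ET

def coco_to_voc(coco):
    """Return {file_name: XML string} in Pascal VOC layout from a COCO dictionary."""
    categories = {c["id"]: c["name"] for c in coco["categories"]}
    by_image = {}
    for ann in coco["annotations"]:
        by_image.setdefault(ann["image_id"], []).append(ann)

    documents = {}
    for image in coco["images"]:
        root = ET.Element("annotation")
        ET.SubElement(root, "filename").text = image["file_name"]
        size = ET.SubElement(root, "size")
        ET.SubElement(size, "width").text = str(image["width"])
        ET.SubElement(size, "height").text = str(image["height"])
        ET.SubElement(size, "depth").text = "3"  # assumed RGB spectrogram images
        for ann in by_image.get(image["id"], []):
            x, y, w, h = ann["bbox"]  # COCO: top-left corner plus width and height
            obj = ET.SubElement(root, "object")
            ET.SubElement(obj, "name").text = categories[ann["category_id"]]
            box = ET.SubElement(obj, "bndbox")
            # VOC stores the two opposite corners instead.
            ET.SubElement(box, "xmin").text = str(int(round(x)))
            ET.SubElement(box, "ymin").text = str(int(round(y)))
            ET.SubElement(box, "xmax").text = str(int(round(x + w)))
            ET.SubElement(box, "ymax").text = str(int(round(y + h)))
        documents[image["file_name"]] = ET.tostring(root, encoding="unicode")
    return documents

# Made-up export containing one labelled gunshot on one spectrogram image.
COCO_TEXT = """
{"images": [{"id": 1, "file_name": "clip_0001.png", "width": 600, "height": 400}],
 "categories": [{"id": 1, "name": "gunshot"}],
 "annotations": [{"image_id": 1, "category_id": 1, "bbox": [120, 200, 40, 150]}]}
"""
voc = coco_to_voc(json.loads(COCO_TEXT))
```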

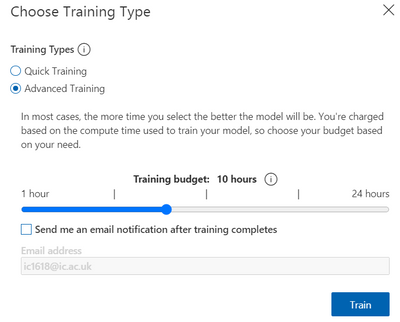

Custom Vision

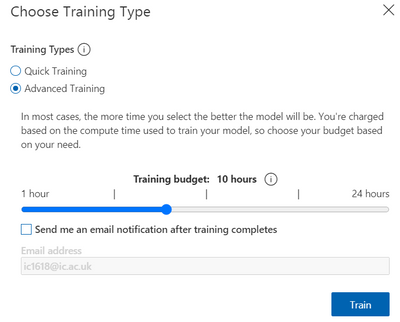

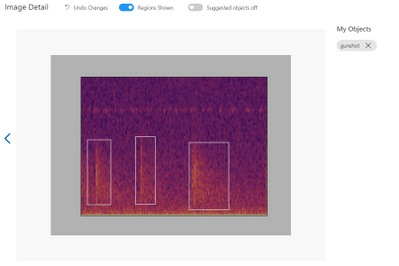

The Custom Vision model is the simplest of the three models to train. We used Azure Custom Vision, which allowed us to upload images, label them and train models with no code at all!

Custom Vision is a part of the Azure Cognitive Services provided by Microsoft which is used for training different machine learning models in order to perform image classification and object detection. This service provides us with an efficient method of labelling our sound events (“the objects”) for training models that can detect gunshot sound events in a spectrogram.

First, we upload a set of images that represents our dataset and tag them by drawing a bounding box around each sound event (“around the object”). Once the labelling is done, the dataset is used for training. The model is trained in the cloud, so no coding is needed in the training process. The only setting we have to adjust when training is the time budget. A longer time budget generally means better learning; however, once the model cannot be improved any further, training stops even if a longer time was assigned.

The service provides charts of the model’s precision and recall. By adjusting the probability threshold and the overlap threshold of our model, we can see how the precision and recall change. This is helpful when trying to find the optimal probability threshold for detecting the gunshots.
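The effect of the probability threshold can be illustrated with a small sweep. The scores and labels below are invented for the example and are not results from our models; treating an empty detection set as precision 1.0 is a convention chosen here.

```python
def precision_recall_at(threshold, detections, n_true_events):
    """detections: list of (score, is_true_gunshot) pairs from a validation set."""
    kept = [is_true for score, is_true in detections if score >= threshold]
    true_positives = sum(kept)
    precision = true_positives / len(kept) if kept else 1.0
    recall = true_positives / n_true_events
    return precision, recall

# Hypothetical detections: four real gunshots found, one missed entirely, two false alarms.
detections = [(0.99, True), (0.95, True), (0.90, False),
              (0.80, True), (0.60, False), (0.55, True)]

for threshold in (0.5, 0.7, 0.9, 0.98):
    p, r = precision_recall_at(threshold, detections, n_true_events=5)
    print(f"threshold={threshold:.2f} precision={p:.2f} recall={r:.2f}")
```

Raising the threshold removes false alarms (higher precision) at the cost of discarding lower-scoring real gunshots (lower recall), which is the trade-off the charts make visible.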

The final model can be exported in many formats for many uses; we used the TensorFlow export. The exported system contains two Python files for object detection, a .pb file that contains the model, a JSON file with metadata properties and some .txt files. We adapted the system from the standard package provided. The predict.py file reads the audio files from the sounds folder, preprocesses them into spectrograms and runs inference on each spectrogram to detect gunshots; it exports labelled pictures of the identified gunshots to the cache/images folder. Once all the sound files have been checked, a CSV is generated with the start and end times of the gunshots detected in the audio.

YOLOv4 – Introduction

YOLO stands for You Only Look Once. YOLOv4 is an object detector that combines a number of deep learning techniques and is based entirely on convolutional neural networks (CNNs). Its primary advantages over other object detectors are its speed and relatively high precision.

The objective of Microsoft Project 15 is to detect gunshots in the forest. Conventional audio classification is neither effective nor efficient for this project, because the labelled gunshot locations in the training set are inaccurate. We therefore turned to computer vision methods such as YOLOv4 for the detection. First, the raw audio files are cut into thousands of 12-second clips, each represented as a Mel-spectrogram. Then, using the labelled gunshot locations, the images that contain gunshots are picked out to form the training set. These pre-processing steps are the same as for the other detection techniques used in Project 15; detailed explanations can be found at https://r15hil.github.io/ICL-Project15-ELP/.

In this project, YOLOv4 was trained and deployed on an NVIDIA GeForce GTX 1060 GPU; training took approximately one hour.

Implementation

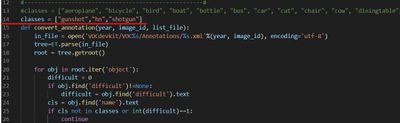

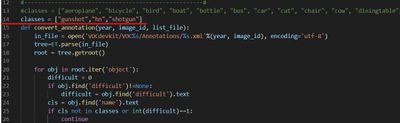

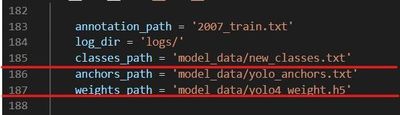

1. Load Training Data

To train the model, the first step is to load the training data in yolov4-keras-master/VOCdevkit/VOC2007/Annotations. The data has to be in XML form and include the type of each object and the position of its bounding box.

Then run the voc_annotations file to save the training data information into a .txt file. Remember to modify the classes list on line 14 before running the file.

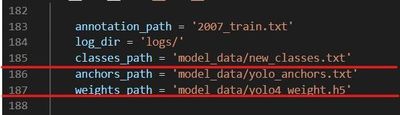

2. Train the Model

The train.py file is used to train the model. Before running the file, make sure the following file paths are correct.

3. Test

For a more detailed testing tutorial, follow How to run.

More Explanations

We turned to YOLOv4 after training and testing the Faster R-CNN method. The Faster R-CNN model achieves 95%-98% precision on gunshot detection, a huge improvement over the current detector. The YOLOv4 model was trained on exactly the same data as the Faster R-CNN model, so its detection results are not the main focus of this document. In brief, YOLOv4 achieves 85% precision but assigns detected events lower probabilities than Faster R-CNN does.

We still built the YOLOv4 model because it gives our clients more options, so they can choose the most appropriate one for real-world conditions. Although the Faster R-CNN model has the best detection performance, its obvious disadvantage is its running time: on a similar GPU, Faster R-CNN needs 100 minutes to process a 24-hour audio file, while YOLOv4 takes only 44 minutes.
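The timing figures above translate into throughput as follows. The helper names are our own, and the clip count assumes the 24-hour file is cut into non-overlapping 12-second windows, which is a simplification.

```python
def real_time_factor(audio_hours, processing_minutes):
    """Seconds of audio processed per second of compute."""
    return (audio_hours * 60.0) / processing_minutes

def clips_per_file(audio_hours, window_s=12):
    """Number of non-overlapping windows in a file (assumed layout)."""
    return int(audio_hours * 3600 // window_s)

def seconds_per_clip(processing_minutes, n_clips):
    """Average compute time spent on each clip."""
    return processing_minutes * 60.0 / n_clips

n_clips = clips_per_file(24)                      # 7200 clips per 24-hour file
faster_rcnn_speed = real_time_factor(24, 100)     # 14.4x real time
yolov4_speed = real_time_factor(24, 44)           # roughly 32.7x real time
faster_rcnn_per_clip = seconds_per_clip(100, n_clips)
yolov4_per_clip = seconds_per_clip(44, n_clips)
```

Both models run much faster than real time, which matters for the longer-term goal of real-time detection.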

Faster R-CNN – Introduction

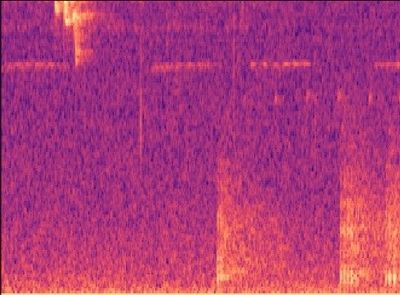

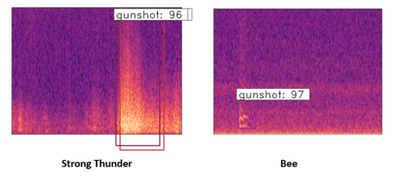

Figure1. Gunshot and some example sounds that cause errors for the current detectors

The Faster R-CNN code provided above is used to tackle the gunshot detection problem of Microsoft Project 15 (see Project 15 and Documentation). We solve gunshot detection with an object detection method rather than an audio classification network because of the following conditions.

- Only a limited set of forest gunshot data was provided, with imprecisely labelled true locations; a 12 s clip is generally needed to capture the single or multiple gunshots that follow a label. Imprecisely labelled means that the true location provided is not the exact start of the gunshot: the gunshot may occur anywhere from one to twelve seconds after the given location. The number of gunshots after a true location also ranges from a single gunshot to over 30.

- We did not have enough time to relabel the audio dataset manually, and we had limited knowledge for telling gunshots apart from similar sounds in the forest. In some cases, even gunshot experts find it difficult to distinguish a gunshot from similar forest sounds, e.g. a falling tree branch.

- Forest gunshots are fired at different angles and may be fired from miles away, so online gunshot datasets cannot be applied to this task directly.

- The detector currently used in the forest has only 0.5% precision. It picks up sounds such as raindrops and thunder, shown in Figure1, so its detections need long manual post-processing. Hence, the audio dataset we were given is limited and contains mistakes.

The above conditions make audio classification methods such as MFCC with a CNN difficult to apply. The object detection method provides an easier approach within a limited time. It allows faster labelling through the Mel-spectrogram images shown above, which also make gunshots easy to identify without specialist knowledge of how gunshots sound. The Faster R-CNN model achieves a high precision of 95%-98% on gunshot detection. The detection bounding box can also be used to recalculate both the start and end times of the gunshot. This reduces manual post-processing time from 20 days to less than an hour. A more standard dataset can also be generated for any audio classification algorithm in future development.

Set up

tensorflow 1.13-gpu (CUDA 10.0)

keras 2.1.5

h5py 2.10.0

The pre-trained model ‘retrain.hdf5’ can be downloaded from here.

The more detailed tutorial on setting up can be found here.

Pre-trained model

The latest model is trained on a multi-class dataset including AK-47 gunshot, shotgun, monkey, thunder and hn, where hn stands for hard negative. Hard negatives are mistakes that the current detector makes very frequently, which are mostly a peak without an echo-shaped spectrum: for example, any sound with the shape of a raindrop (just a peak without an echo) that may appear in a different frequency range from a raindrop. The initial model was trained only on the gunshot and hn datasets because the manually labelled dataset we were given was limited. The network was then run with a low threshold value, e.g. 0.6, over thousands of hours of forest sound files to collect more false positives and true gunshots. The model was then re-trained with those hard negatives. This technique is also called hard negative mining. Figure2 shows the three hard negative classes (monkey, thunder, hn) used to train the latest model.

Figure2. Three types of hard negative used in training
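The mining step can be sketched as below. This is an illustration of the general idea rather than the project's code: the dictionary layout of a detection, the interval-overlap test and the example values are all hypothetical, and in practice a person reviews the low-threshold detections to decide which are real gunshots.

```python
def overlaps(a, b):
    """True when two (start_s, end_s) intervals share any time."""
    return a[0] < b[1] and b[0] < a[1]

def mine_hard_negatives(detections, true_gunshots, threshold=0.6):
    """Split detections scoring >= threshold into confirmed gunshots and hard negatives.

    detections: list of dicts with 'start', 'end' and 'score' keys (hypothetical layout).
    true_gunshots: list of (start_s, end_s) intervals confirmed by a reviewer.
    """
    confirmed, hard_negatives = [], []
    for det in detections:
        if det["score"] < threshold:
            continue
        span = (det["start"], det["end"])
        if any(overlaps(span, truth) for truth in true_gunshots):
            confirmed.append(det)
        else:
            hard_negatives.append(det)
    return confirmed, hard_negatives

detections = [
    {"start": 10.2, "end": 11.0, "score": 0.95},  # overlaps a confirmed gunshot
    {"start": 40.0, "end": 40.3, "score": 0.70},  # confident but wrong: a hard negative
    {"start": 70.0, "end": 70.5, "score": 0.40},  # below the threshold, ignored
]
true_gunshots = [(10.0, 11.5)]
confirmed, hard_negatives = mine_hard_negatives(detections, true_gunshots)
```

The hard negatives collected this way are added to the training set under their own label (such as hn) before the model is re-trained.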

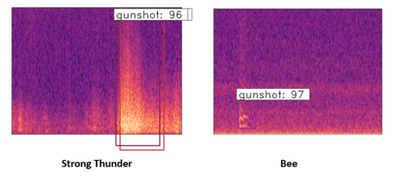

The model also distinguishes between the two types of gunshot, AK-47 and shotgun, shown in Figure3.

Figure3. Mel-spectrum image of AK-47 and shotgun

Result

The testing results shown in Table1 are based on experiments on over 400 hours of forest sound clips given by our clients. More detailed information on the models other than Faster R-CNN can be found at https://r15hil.github.io/ICL-Project15-ELP/.

| Model name | Current template detector | Custom AI (Microsoft) | YOLOV4 | Faster R-CNN |
|---|---|---|---|---|
| Gunshot detection precision | 0.5% | 60% | 85% | 95% |

Table1. Testing results from different models

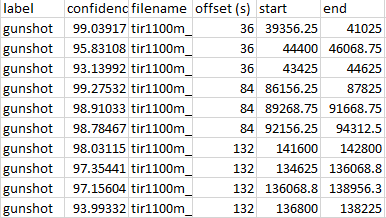

The model also generates a CSV file, shown in Figure4. It includes the file name, label, offset, start time and end time. The offset is the time at the left edge of the Mel-spectrogram, where each Mel-spectrogram is produced from a 12 s sound clip. The start and end times can then be calculated from the offset and the coordinates of the detection bounding box. This CSV file therefore provides a more standard dataset, in which the true location is more likely to sit just before the gunshot happens and each extracted sound clip is likely to contain a single gunshot. Such a dataset contains less noise and fewer labelling errors, which will benefit future development of any audio classification method.
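The calculation of start and end times from the offset and the bounding box can be sketched as follows. It assumes the spectrogram spans the full image width with a linear time axis; the column names, the 600-pixel width and the example row are hypothetical.

```python
import csv
import io

CLIP_SECONDS = 12.0

def box_to_times(offset_s, xmin, xmax, image_width):
    """Map the horizontal pixel coordinates of a box to absolute times in the audio file."""
    seconds_per_pixel = CLIP_SECONDS / image_width
    return offset_s + xmin * seconds_per_pixel, offset_s + xmax * seconds_per_pixel

def detections_to_csv(rows, image_width):
    """rows: iterable of (file_name, label, offset_s, xmin, xmax) tuples."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["file_name", "label", "offset", "start_time", "end_time"])
    for file_name, label, offset_s, xmin, xmax in rows:
        start, end = box_to_times(offset_s, xmin, xmax, image_width)
        writer.writerow([file_name, label, offset_s, round(start, 2), round(end, 2)])
    return out.getvalue()

# One detection in the clip that starts 3600 s into a made-up recording.
csv_text = detections_to_csv([("site_A.wav", "gunshot", 3600, 100, 250)], image_width=600)
```

With a 600-pixel-wide image, each pixel covers 0.02 s, so a box from pixel 100 to pixel 250 corresponds to a sound event from 3602 s to 3605 s in the original file.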

Figure4. example of csv file for gunshot labelling

Current model weaknesses and Future development

The number of hard negatives is still limited after 400 hours of sound clip testing. Hard negatives such as bees and strong thunder (shown in Figure5) are the main prediction errors of the current model. To improve the model further, more hard negatives should be found by running the network with a lower threshold over thousands of hours of sound clips; the hard negatives obtained can then be used to re-train and improve the model. The current model can already avoid those false positives with a threshold of around 0.98, but this reduces the recall rate by 5-10%. The missed gunshots are mainly long-distance gunshots, which is also a consequence of the very few long-distance gunshots in the training dataset.

Figure5. Mel-spectrum image of the strong thunder and bee

Reference

https://github.com/yhenon/keras-rcnn

Results

| Model name | Current template detector | Custom AI (Microsoft) | YOLOV4 | Faster R-CNN |
|---|---|---|---|---|
| Gunshot detection precision | 0.5% | 60% | 85% | 95% |

Table1. Testing results from different models

What we learnt

Throughout this capstone project we learnt a wide range of things, not only to do with technologies but also the importance and safety of forest elephants, and how big of an impact they have on rainforests.

Poaching these elephants reduces seed dispersal, essentially undermining the diversity of rainforests and the spread of fruits and plants across the forest, on which many animals depend.

“Without intervention to stop poaching, as much as 96 percent of Central Africa’s forests will undergo major changes in tree-species composition and structure as local populations of elephants are extirpated, and surviving populations are crowded into ever-smaller forest remnants,” explained John Poulsen from Duke University’s Nicholas School of the Environment.

In terms of technologies, we learnt a lot about signal processing through MFCCs and mel-spectrograms; how to train and use different object detection models; feature extraction from data; how to use Azure ML studio and blob storage.

Above all, we learnt that in projects with a set goal, there will always be obstacles but tackling those obstacles is really when your creative and innovative ideas come to life.

For full documentation and code visit https://r15hil.github.io/ICL-Project15-ELP/

Learning Resources

Classify endangered bird species with Custom Vision – Learn | Microsoft Docs

Classify images with the Custom Vision service – Learn | Microsoft Docs

Build a Web App with Refreshable Machine Learning Models – Learn | Microsoft Docs

Explore computer vision in Microsoft Azure – Learn | Microsoft Docs

Introduction to Computer Vision with PyTorch – Learn | Microsoft Docs

Train and evaluate deep learning models – Learn | Microsoft Docs

PyTorch Fundamentals – Learn | Microsoft Docs

by Contributed | Jun 25, 2021 | Technology


ATTACK SURFACE REDUCTION: WHY IS IMPORTANT AND HOW TO CONFIGURE IN PRODUCTION

Silvio Di Benedetto is founder and CEO at Inside Technologies. He is a Digital Transformation helper, and Microsoft MVP for Cloud Datacenter Management. Silvio is a speaker and author, and collaborates side-by-side with some of the most important IT companies including Microsoft, Veeam, Parallels, and 5nine to provide technical sessions. Follow him on Twitter @s_net.

WSP Deployer: Deploy WSP SharePoint 2019 Solutions using PowerShell

Mohamed El-Qassas is a Microsoft MVP, SharePoint StackExchange (StackOverflow) Moderator, C# Corner MVP, Microsoft TechNet Wiki Judge, Blogger, and Senior Technical Consultant with +10 years of experience in SharePoint, Project Server, and BI. In SharePoint StackExchange, he has been elected as the 1st Moderator in the GCC, Middle East, and Africa, and ranked as the 2nd top contributor of all the time. Check out his blog here.

My favorite Power BI announcements from the Business Application Summit

Marc Lelijveld is a Data Platform MVP, Power BI enthusiast, and public speaker who is passionate about anything which transforms data into action. Currently employed as a Data & AI consultant in The Netherlands, Marc is often sharing his thoughts, experience, and best-practices about Microsoft Data Platform with others. For more on Marc, check out his blog.

Microsoft Whiteboard in Teams meeting has new look and tools

Vesku Nopanen is a Principal Consultant in Office 365 and Modern Work and passionate about Microsoft Teams. He helps and coaches customers to find benefits and value when adopting new tools, methods, ways of working and practices into daily work-life equation. He focuses especially on Microsoft Teams and how it can change organizations’ work. He lives in Turku, Finland. Follow him on Twitter: @Vesanopanen

Teams Real Simple with Pictures: Getting Hands on with Boards

Chris Hoard is a Microsoft Certified Trainer Regional Lead (MCT RL), Educator (MCEd) and Teams MVP. With over 10 years of cloud computing experience, he is currently building an education practice for Vuzion (Tier 2 UK CSP). His focus areas are Microsoft Teams, Microsoft 365 and entry-level Azure. Follow Chris on Twitter at @Microsoft365Pro and check out his blog here.

by Contributed | Jun 25, 2021 | Technology


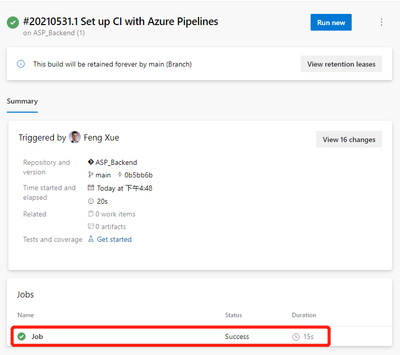

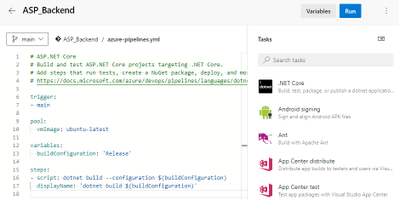

Configure the CI pipeline

The source and target are ready, let’s configure the CI pipeline first.

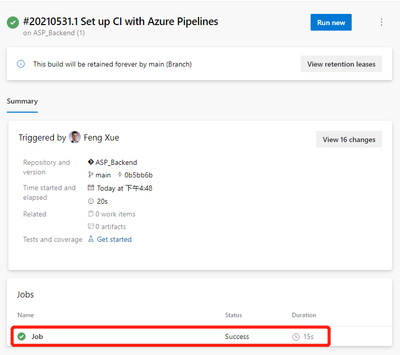

Create a pipeline and build the back-end project first

In the Azure DevOps console, click Pipelines under Pipelines in the left navigation, then click the New pipeline button in the upper right corner.

Follow the wizard. For Where is your code?, choose Azure Repos Git.

For Select a repository, select the ASP_Backend repository prepared earlier.

For Configure your pipeline, click the Show more button, and then click ASP.NET Core.

Click the Save and run button in the upper right corner. In the panel that pops up, keep the default values, then click the Save and run button in the lower right corner to run the pipeline. You are then taken to the pipeline execution page. After a minute or so the job finishes, and a green check icon in front of Job indicates that this first step, the ASP.NET build, was successful.

The integrated pipeline was created successfully, and the first task was completed. But until now, nothing has been generated or saved. It doesn’t matter, let’s go step by step to explain in detail, leading you to create a pipeline from scratch. Next, let’s save the artifact.

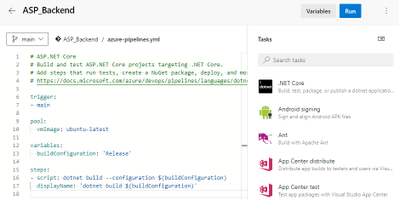

Save the artifact

Let’s go back to the pipeline we just created and click the Edit button in the upper right corner; you can see that it is a YAML file.

The file lists the concrete steps taken by this pipeline. There is only one step for now, which builds the current back-end project using dotnet build. Let’s add another 2 steps.

    - task: DotNetCoreCLI@2
      displayName: 'dotnet publish'
      inputs:
        command: publish
        publishWebProjects: false
        projects: '**/*.csproj'
        arguments: '--configuration $(BuildConfiguration) --output $(build.artifactstagingdirectory)'
        zipAfterPublish: true

    - task: PublishBuildArtifacts@1
      inputs:
        PathtoPublish: '$(Build.ArtifactStagingDirectory)/'

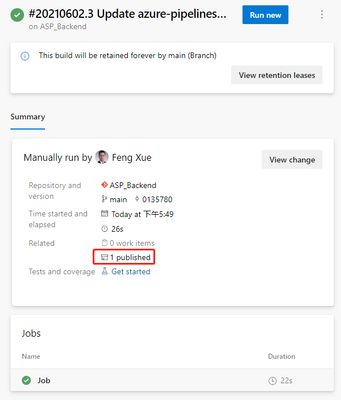

The first of the two new steps publishes the build output to the specified path, and the second saves the published output as a pipeline artifact.

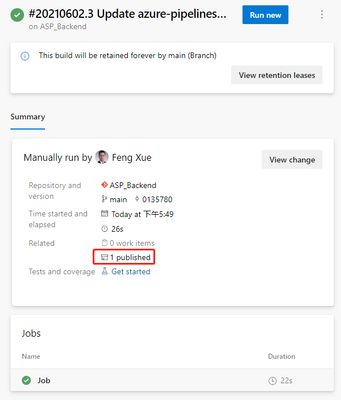

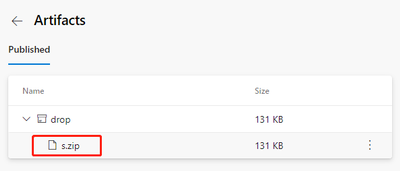

Click the Save button in the upper right corner, then the Run button, and wait patiently for a while for our updated Pipeline to finish. Then come back to the results page of the task execution and you’ll see 1 Published under Related.

Clicking on this 1 Published link will take you to the following artifact page.

We can download this artifact file, s.zip, by clicking its name. After downloading, list the contents of the archive with the unzip command; you can see that the package contains the files built from the back-end project.

unzip -l s.zip
Archive:  s.zip
  Length  Date      Time   Name
--------  --------  -----  ----
  138528  06-02-21  09:50  ASP_Backend
   10240  06-02-21  09:50  ASP_Backend.Views.dll
   19136  06-02-21  09:50  ASP_Backend.Views.pdb
  106734  06-02-21  09:50  ASP_Backend.deps.json
   11264  06-02-21  09:50  ASP_Backend.dll
   20392  06-02-21  09:50  ASP_Backend.pdb
     292  06-02-21  09:50  ASP_Backend.runtimeconfig.json
   62328  04-23-21  18:32  Microsoft.AspNetCore.SpaServices.Extensions.dll
     162  06-02-21  09:50  appsettings.Development.json
     196  06-02-21  09:50  appsettings.json
     487  06-02-21  09:50  web.config
       0  06-02-21  09:50  wwwroot/
    5430  06-02-21  09:50  wwwroot/favicon.ico
--------                   -------
  375189                   13 files
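The same check can be scripted instead of run by hand. The following is a minimal sketch using Python's standard `zipfile` module; the helper names `list_archive` and `summarize` are hypothetical and not part of the pipeline, and the demo builds a small in-memory archive rather than assuming the real s.zip is present.

```python
import io
import zipfile


def list_archive(source):
    """Return (size_in_bytes, name) pairs for every entry, similar to `unzip -l`."""
    with zipfile.ZipFile(source) as archive:
        return [(info.file_size, info.filename) for info in archive.infolist()]


def summarize(entries):
    """Return (total_bytes, entry_count), like the footer line of `unzip -l`."""
    return sum(size for size, _ in entries), len(entries)


if __name__ == "__main__":
    # Build a tiny in-memory archive so the sketch runs without the real s.zip.
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("appsettings.json", "{}")
        archive.writestr("web.config", "<configuration />")

    entries = list_archive(buffer)
    for size, name in entries:
        print(f"{size:>9}  {name}")
    total, count = summarize(entries)
    print(f"{total:>9}  {count} files")
```

To inspect a downloaded artifact, pass its path instead of the buffer, for example `list_archive("s.zip")`.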

At this point, back-end builds and artifacts are saved. Let’s take a look at the build of the front end. Before we can build the front end, we need to include a second source repository into the current pipeline.

by Contributed | Jun 25, 2021 | Technology


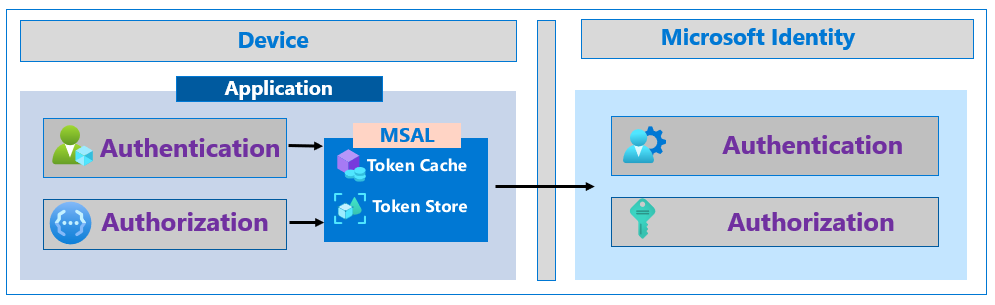

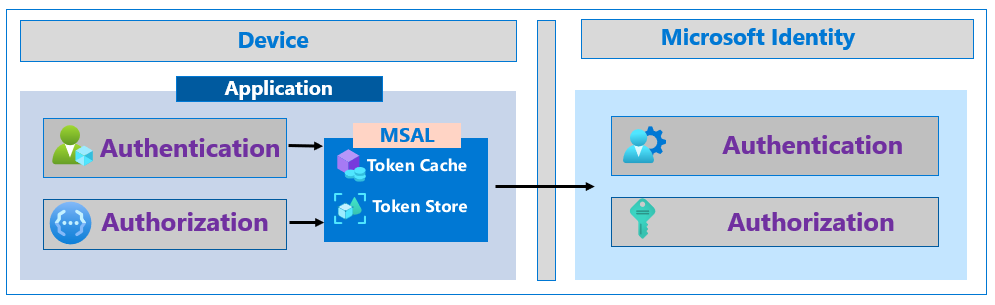

Call Summary:

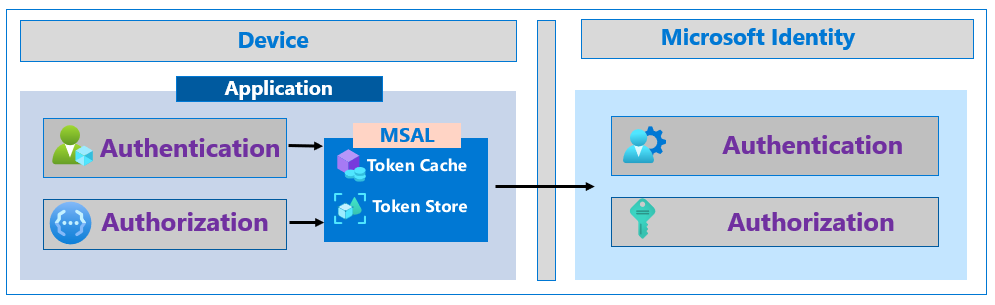

This month’s in-depth topic: increasing the resilience of the authentication and authorization applications you develop. The session covered tips for adding and increasing resiliency in apps that sign in users and in apps without users; using a Microsoft Authentication Library, and best practices to follow if you use a different library; authorization with JWT and Microsoft Continuous Access Evaluation (CAE), with a demo and tips on evaluating and adopting CAE; and resilient methods for fetching metadata and validating tokens, including customized token validation as needed. This session was delivered by Microsoft Program Managers Harish Suresh | @harish_suresh and Kyle Marsh | @kylemar and was recorded on June 17, 2021. Q&A took place live and in chat throughout the call.


by Scott Muniz | Jun 25, 2021 | Security, Technology


Citrix has released security updates to address vulnerabilities in Hypervisor. An attacker could exploit these vulnerabilities to cause a denial-of-service condition.

CISA encourages users and administrators to review Citrix Security Update CTX316325 and apply the necessary updates.

by Contributed | Jun 25, 2021 | Technology


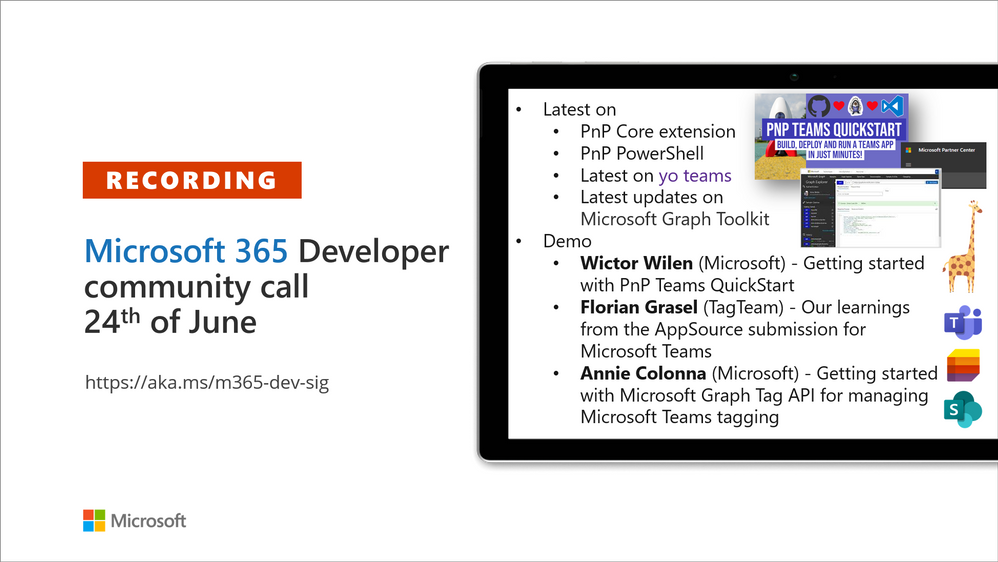

Recording of the Microsoft 365 – General M365 development Special Interest Group (SIG) community call from June 24, 2021.

Call Summary

Summer break and community call schedule updates reviewed. Preview the new Microsoft 365 Extensibility look book gallery. Looking to get started with Microsoft Teams development? Don’t miss out on our Teams samples gallery (updated sample browser in June), and the new Microsoft 365 tenant – script samples gallery – scripts for PowerShell and CLIs. Sign up and attend one of a growing list of events hosted by Sharing is Caring this month. Announced PnP Recognition Program. Check out the new PnP Teams Quickstart. Latest updates on PnP projects covered off. Added Teams SSO Provider, sample and other components to Microsoft Graph Toolkit (MGT) v.2.2.0 GA.

Open-source project status: (Bold indicates new this call)

| Project | Current Version | Release/Status |
| --- | --- | --- |
| PnP .NET Libraries – PnP Framework | v1.5.0 GA | Version 1.6.0 – Summer 2021 |
| PnP .NET Libraries – PnP Core SDK | v1.2.0 GA | Version 1.3.0 – Summer 2021 |
| PnP PowerShell | v1.6.0 GA | |
| Yo teams – generator-teams | v3.2.0 GA | v3.3.0 Preview soon |
| Yo teams – yoteams-build-core | v1.2.0 GA, v1.2.1 Preview | |
| Yo teams – yoteams-deploy | v1.1.0 GA | |
| Yo teams – msteams-react-base-component | v3.1.0 | |
| Microsoft Graph Toolkit (MGT) | v2.2.0 GA | Added Teams SSO Provider in Preview |

Additionally, 1 new Teams sample was delivered in the last 2 weeks. Great work! The host of this call was David Warner II (Catapult Systems) | @DavidWarnerII. Q&A takes place in chat throughout the call.

Actions:

- Register for Sharing is Caring Events:

- First Time Contributor Session – June 29th (EMEA, APAC & US friendly times available)

- Community Docs Session – TBD

- PnP – SPFx Developer Workstation Setup – TBD

- PnP SPFx Samples – Solving SPFx version differences using Node Version Manager – June 24th

- Ask Me Anything – Teams Dev – July 13th

- First Time Presenter – June 30th

- More than Code with VSCode – TBD

- Maturity Model Practitioners – 3rd Tuesday of month, 7:00am PT

- PnP Office Hours – 1:1 session – Register

- PnP Buddy System – Request a Buddy

- Download the recurrent invite for this call – http://aka.ms/m365-dev-sig

- Call attention to your great work by using the #PnPWeekly on Twitter.

Microsoft Teams Development Samples: (https://aka.ms/TeamsSampleBrowser)

Thank you for joining today’s PnP Community call. It’s a full house!

Demos delivered in this session

Getting started with PnP Teams QuickStart – create a Teams SSO tab in 15 minutes using browser-based Codespaces currently in preview. Follow presenter as he creates a new Teams tab, registers it in Azure AD, accesses Graph for presence courtesy of Microsoft/Teamsfx js library, deploys app in App Store, and deletes app when done. PnP Teams Quick Start is based on GitHub Codespaces = your virtual machine in the cloud.

Our learnings from the AppSource submission for Microsoft Teams – a first timer documents the journey – a 7-step process going from idea to app in AppSource. Solid tips beyond the process that every product team should consider ranging from extension opportunities and testing to devices and post publishing maintenance. Prepare to fail gracefully and learn openly as the journey includes working closely with a Microsoft submissions team that’s completely interested in your success.

Getting started with Microsoft Graph Tag API for managing Microsoft Teams tagging – this presentation focuses on people centric tags used in Teams to categorize, to @mention and to start a chat. Teams makes it nearly effortless to create, manage and use tags to connect people and groups. New Beta APIs, available week of June 28th, address many tag management challenges – permissions, membership updates, tapping data that exists outside immediate org. Glimpse at what’s next.

Thank you for your work. Samples are often showcased in Demos.

Topics covered in this call

Resources:

Additional resources around the covered topics and links from the slides.

General resources:

Upcoming Calls | Recurrent Invites:

The bi-weekly General Microsoft 365 Dev Special Interest Group calls are aimed at anyone interested in general Microsoft 365 development topics, including Microsoft Teams, Bots, Microsoft Graph, CSOM, REST, site provisioning, PnP PowerShell, PnP Sites Core, Site Designs, Microsoft Flow, PowerApps, column formatting, and list formatting. More details on the Microsoft 365 community are available from http://aka.ms/m365pnp. We also welcome community demos, so let us know if you are interested in doing a live demo in these calls!

You can download the recurrent invite from http://aka.ms/m365-dev-sig. You are welcome to join in the discussion. If you have any questions, comments, or feedback, feel free to provide your input as comments to this post as well. More details on the Microsoft 365 community and options to get involved are available from http://aka.ms/m365pnp.

“Sharing is caring”

Microsoft 365 PnP team, Microsoft – 25th of June 2021

by Contributed | Jun 25, 2021 | Technology


Many security announcements were shared this week, including the general availability of Azure Key Vault Managed Hardware Security Module, a general data scientist role added to the RBAC capabilities in Azure Machine Learning, eliminating data silos with large-scale NFS workloads in Azure Blob Storage, Microsoft Defender unmanaged device protection capabilities, and a security-focused Microsoft Learn Module of the week.

Azure Key Vault Managed Hardware Security Module (HSM) reaches general availability

Managed HSM offers a fully managed, highly available, single-tenant, high-throughput, standards-compliant cloud service to safeguard cryptographic keys for your cloud applications, using FIPS 140-2 Level 3 validated HSMs.

Key features and benefits:

- Fully managed, highly available, single-tenant, high-throughput HSM as a service: No need to provision, configure, patch, and maintain HSMs for key management. Each HSM cluster uses a separate customer-specific security domain that cryptographically isolates your HSM cluster.

- Access control, enhanced data protection, and compliance: Centralize key management and set permissions at key level granularity. Managed HSM uses FIPS 140-2 Level 3 validated HSMs to help you meet compliance requirements. Use private endpoints to connect securely and privately from your applications.

- Integrated with Azure services: Encrypt data at rest with a customer managed key in Managed HSM for Azure Storage, Azure SQL, and Azure Information Protection. Get complete logs of all activity via Azure Monitor and use Log Analytics for analytics and alerts. Some third party solutions are also integrated with Managed HSM.

- Uses the same API as Key Vault: Managed HSM allows you to store and manage HSM-keys for your cloud applications using the same Key Vault APIs, which means migrating from vaults to managed HSM pools is very simple.

Azure Key Vault Managed HSM is another service that is built on Azure’s confidential computing platform. Azure confidential computing protects the confidentiality and integrity of your data and code while it’s processed in the public cloud.

Learn more.

Azure Machine Learning public preview announcements for June 2021

The RBAC capabilities in Azure Machine Learning now offer a new pre-built role defined for the general data scientist user. When assigned, this role allows a user to perform all actions within a workspace, except for creating or deleting compute resources and any workspace-level operations.

The Text Classification labeling capability in Azure Machine Learning studio allows users to create text labeling projects and assign labels to their text documents. It supports both multi-label and multi-class text classification project types.

Environments in Azure Machine Learning studio lets you create and edit environments through the UI. You can also view both custom and curated environments in your workspace, as well as details about properties, dependencies, and image build logs.

How to assign built-in roles.

How to create labeling projects.

Learn more about environments UI.

Modernize large-scale NFS workloads and eliminate data silos with Azure Blob Storage

Network File System (NFS) 3.0 protocol support for Azure Blob Storage, Microsoft’s object storage platform for storing large-scale data, is now generally available. Many organizations from industries such as manufacturing, media, life science, financial services, and automotive embraced this feature during the preview and are deploying their workloads in production. They have been using NFS 3.0 for a wide array of workloads such as high-performance computing (HPC), analytics, and backup.

To get started, check out this video on introducing NFS 3.0 support for Azure Blob Storage and read more about the NFS 3.0 protocol support in Azure Blob Storage.

Microsoft Defender for Endpoint Unmanaged device protection capabilities are now generally available

Microsoft recently announced the general availability of a new set of capabilities that gives Microsoft Defender for Endpoint customers visibility over unmanaged devices running on their networks, addressing some of the greatest risks to an organization’s cybersecurity posture. This release delivers the following set of new capabilities:

- Discovery of unmanaged workstations, servers, and mobile endpoints (Windows, Linux, macOS, iOS, and Android) that haven’t been onboarded and secured. Additionally, network devices (e.g.: switches, routers, firewalls, WLAN controllers, VPN gateways and others) can be discovered and added to the device inventory using periodic authenticated scans of preconfigured network devices.

- Onboard discovered, unmanaged endpoint and network devices connected to your networks to Defender for Endpoint. Integrated new workflows and new security recommendations in the threat and vulnerability management experience make it easy to onboard and secure these devices.

- Review assessments and address threats and vulnerabilities on newly discovered devices to create security recommendations that can be used to address issues on devices helping to reduce an organization’s threat and risk exposure.

To read more about our new device discovery and assessment capabilities, check out:

Community Events

- Patch and Switch – It has been a fortnight and Patch and Switch are back to share the stories they have amassed over the past two weeks.

MS Learn Module of the Week

Protect against threats with Microsoft Defender for Endpoint

Learn how Microsoft Defender for Endpoint can help your organization stay secure.

In this module, you will learn how to:

- Define the capabilities of Microsoft Defender for Endpoint.

- Understand how to hunt threats within your network.

- Explain how Microsoft Defender for Endpoint can remediate risks in your environment.

Learn more here: Protect against threats with Microsoft Defender for Endpoint

Let us know in the comments below if there are any news items you would like to see covered in the next show. Be sure to catch the next AzUpdate episode and join us in the live chat.

by Contributed | Jun 25, 2021 | Technology

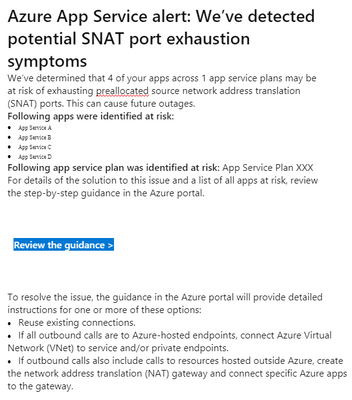


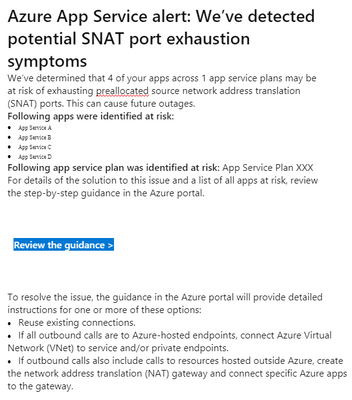

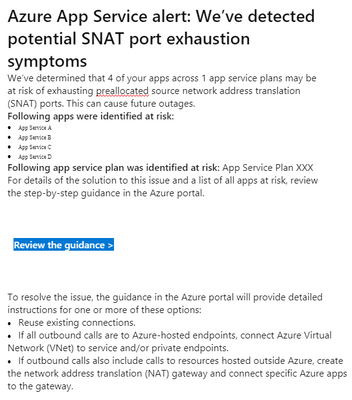

Recently, Azure App Service users may have received an email alert about a potential SNAT port exhaustion risk for their services. Here is a sample email.

This post is about looking at this alert rationally.

On its own, this alert does not mean there is a drop in the availability or performance of our app services.

If we suspect that availability or performance has degraded and that SNAT port exhaustion is a possible cause, we can quickly check whether any of the symptoms below correlate with the alert.

- Slow response times on all or some of the instances in a service plan.

- Intermittent 5xx or Bad Gateway errors

- Timeout error messages

- Could not connect to external endpoints (like SQLDB, Service Fabric, other App services etc.)

SNAT ports are consumed only when there are outbound connections from App Service plan instances to public endpoints, so if the ports are exhausted there must be delays or failures in those outbound calls. The symptoms above help confirm whether we are on the right track in looking into SNAT port exhaustion.
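Since every new outbound connection to a public endpoint draws on the SNAT port budget, a common way to lower consumption is to reuse connections rather than open a new one per call. The sketch below illustrates that idea only; `ConnectionPool` and its `connection_factory` parameter are hypothetical names, not an App Service API, and the class is deliberately simple (one connection per endpoint, no thread safety).

```python
import http.client


class ConnectionPool:
    """Hand out one reusable connection object per (host, port) pair."""

    def __init__(self, connection_factory=http.client.HTTPSConnection):
        self._factory = connection_factory
        self._connections = {}
        self.created = 0  # number of connection objects created so far

    def get(self, host, port=443):
        """Return the existing connection for this endpoint, creating it once."""
        key = (host, port)
        if key not in self._connections:
            self._connections[key] = self._factory(host, port)
            self.created += 1
        return self._connections[key]

    def close_all(self):
        """Close every pooled connection and forget it."""
        for connection in self._connections.values():
            connection.close()
        self._connections.clear()


if __name__ == "__main__":
    pool = ConnectionPool()
    first = pool.get("example.com")
    second = pool.get("example.com")
    # Both calls return the same object, so only one connection was created.
    print(first is second, pool.created)
    pool.close_all()
```

Most HTTP and database client libraries ship their own pooling, which is usually preferable to a hand-written pool like this one.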

If we do observe slowness or failures in outbound calls that correlate with the email alert, we can refer to the guidance section of the alert email and to the document Troubleshooting intermittent outbound connection errors in Azure App Service – Azure App Service | Microsoft Docs for further troubleshooting.

by Contributed | Jun 24, 2021 | Technology


For years, a successful actuarial services company relied on a vital financial application that ran on Alpha hardware—a server well past its end-of-life date. To modernize its infrastructure with as little risk as possible, the company turned to Stromasys Inc., experts in cross-platform server virtualization solutions. In a matter of days, the company was running its mission-critical application on Azure. Soon after, it began to offer the software as a service (SaaS) to other companies, turning the formerly high-maintenance legacy software into a growing profit center.

The challenge of the not-so-modern mainframe

Aging servers are vulnerable servers. Stromasys was founded in 1998 with a mission to help companies running core applications on servers from an earlier generation, such as SPARC, VAX, Alpha, and HP 3000. With headquarters in Raleigh, North Carolina, Stromasys is a wholly owned subsidiary of Stromasys SA in Geneva, Switzerland. Its virtualization solutions are used by top organizations worldwide.

Stromasys developed a niche in the computer industry by recognizing the need for specialized virtualization environments that could replace servers nearing their end of life. Stromasys solutions can host applications designed for Solaris, VMS, Tru64 UNIX, and MPE/iX operating systems. By rehosting applications on Azure using emulation software—known as a lift-and-shift migration—organizations can safely phase out legacy hardware in a matter of days and immediately begin taking advantage of the scalability and flexibility of cloud computing.

The financial services industry has been among the first to adopt Stromasys server emulation solutions. A lift-and-shift approach is a quick, safe way to move legacy workloads to the cloud. Actuarial services are all about risk assessment, and the Stromasys customer knew it needed to reduce the risk associated with running mission-critical software on a decades-old Alpha system.

The legendary Digital Equipment Corporation (DEC) introduced the AlphaServer in 1994. Even after the system was officially retired in 2007, organizations around the world continued to trust the Alpha’s underlying OpenVMS and Tru64 UNIX operating systems for their proven stability. Stromasys saw an opportunity, and in 2006, it began offering an Alpha hardware emulation solution, Charon-AXP. Today, HP recognizes Charon-AXP as a valid Alpha replacement platform to run OpenVMS or Tru64.

The actuarial services company had kept its AlphaServer running through the years with help from user groups that located vintage hardware components. However, parts can be hard to find for any classic machine—from cars to computers.

“Our business had exclusively involved on-premises solutions,” explains Thomas Netherland, global head of Alliances & Channels at Stromasys. “So we were surprised and intrigued when the customer opted for the cloud. They simply did not want to be in the IT infrastructure business.”

The actuarial services company wanted to take advantage of the scalability, security, and other benefits that come with Azure. This is when Stromasys decided as a company to get serious about offering cloud-ready solutions.

“Stromasys solutions on Azure extend the lives of mission-critical legacy applications.”

– Thomas Netherland: global head of Alliances & Channels, Stromasys Inc.

Hardware emulation in a virtual environment

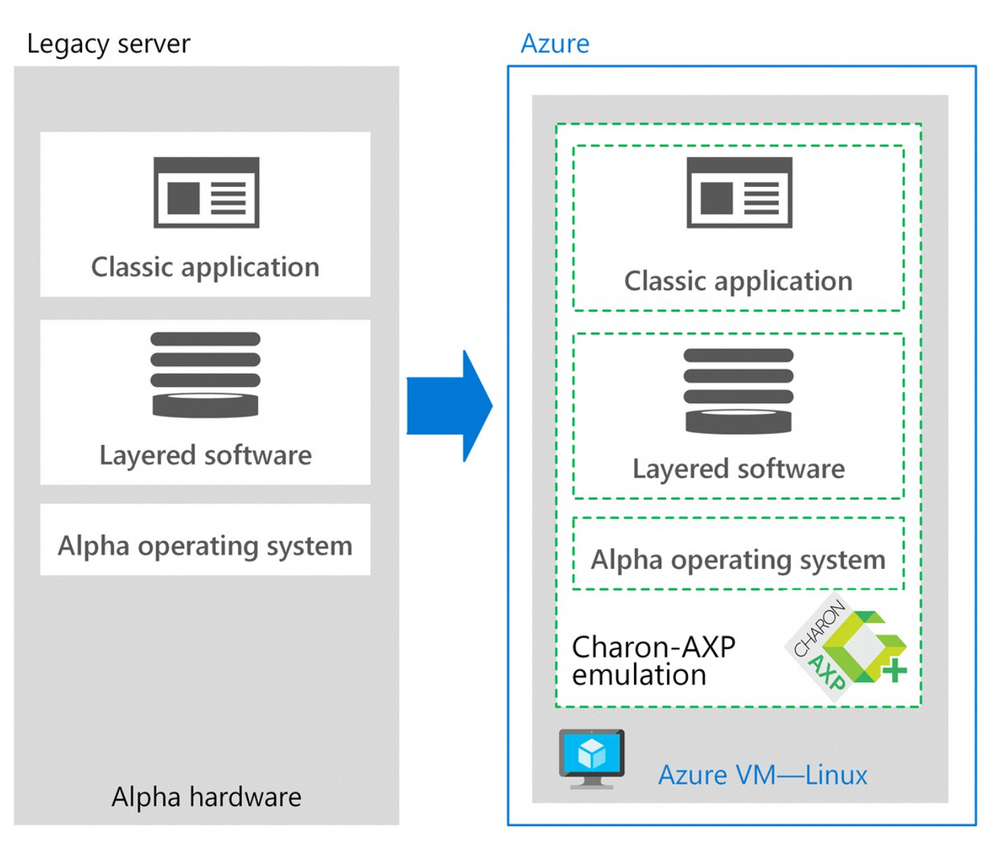

Stromasys and Microsoft worked together to find a solution for the actuarial services customer. Stromasys proposed using Charon-AXP, one of a family of legacy system cross-platform hypervisors. With this emulator, the customer could phase out its aging and increasingly expensive hardware and replace it quickly and safely with an enterprise-grade, virtual Alpha environment on Azure that uses an industry-standard x86 platform.

According to Dave Clements, a systems engineer at Stromasys, Charon means no risky migration projects. “There are no changes to the original software, operating system, or layered products—so no need for source code and no application recompile,” he says. In addition, the actuarial services company didn’t have to worry about recertifying its application, because the legacy code is untouched.

Charon-AXP creates a virtual Alpha environment on an Azure virtual machine (VM), which is used to isolate and manage the resources for a specific application on a single instance. Charon-AXP presents an Alpha hardware interface to the original Alpha software, which cannot detect a difference. After the user programs and data are copied to the VM, the legacy application continues to run unchanged.

The engineers didn’t know how well Charon-AXP would perform in the cloud, so they set up a proof-of-solution test. “We wanted to ensure that the Azure infrastructure processor speed was enough to compensate for the additional CPU overhead introduced by Charon,” says Netherland. Turns out, it wasn’t a problem. The clock speed of most legacy systems is on the order of hundreds of megahertz (MHz), as opposed to the several gigahertz (GHz) offered by VMs on Azure. Performance was the same or better.

The entire migration process, including the proof of solution test, took only two weeks.

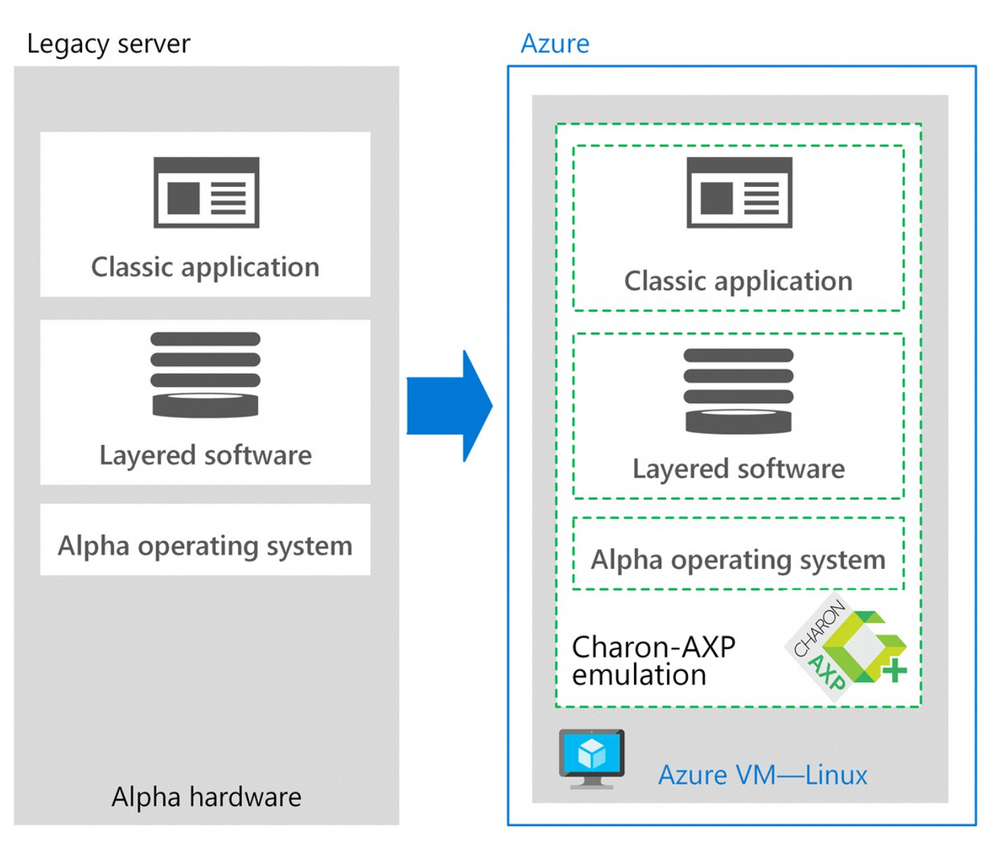

The following image demonstrates the architectural differences:

“We like Azure because of the high processor speeds that are available and for the support available from Microsoft and our reseller community.”

– Dave Clements: systems engineer, Stromasys Inc.

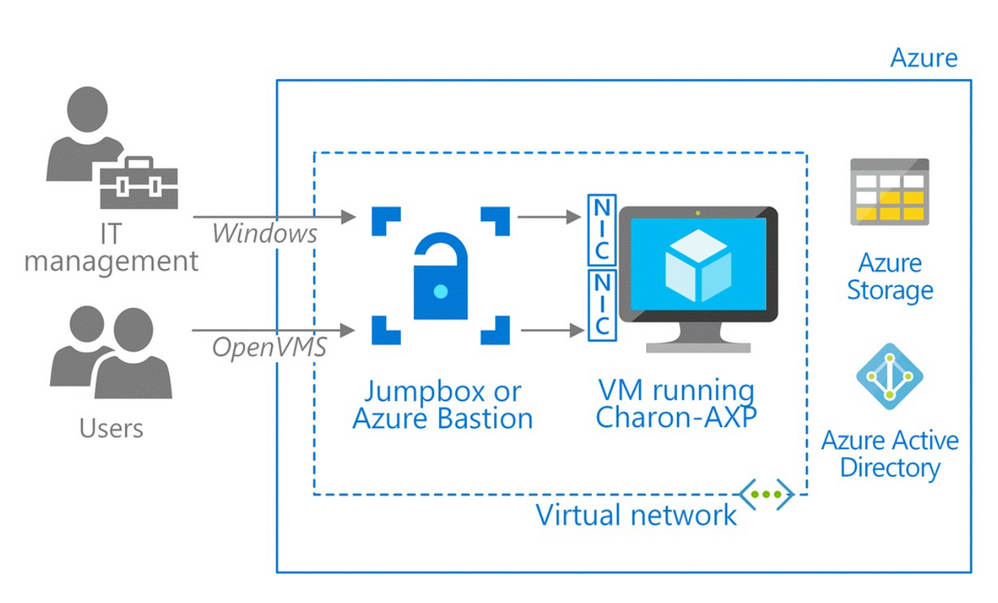

Architecture on Azure

The original application ran on a DEC Alpha ES40 server with four CPUs (1 GHz), 16 GB of RAM, and 400 GB of storage. The new architecture on Azure includes Charon-AXP for Windows on a VM with eight CPUs (3 GHz), 32 GB of RAM, and 500 GB of storage.

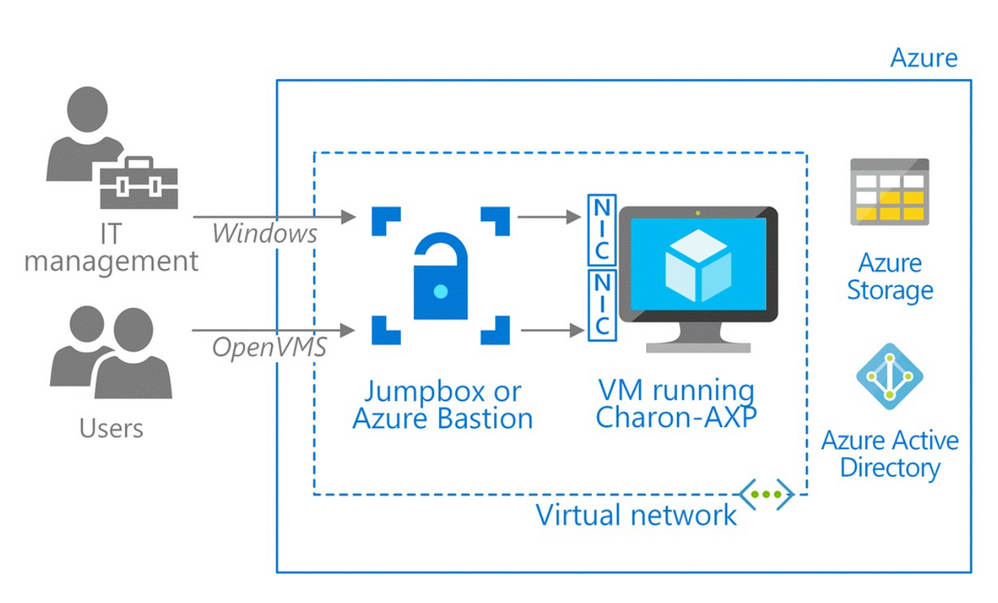

During proof-of-solution testing, Stromasys engineers created multiple virtual network interfaces to provide separate networks paths, depending on the type of user. One path is provisioned for Windows only, to give IT managers access for host configuration and management tasks. The other network path provides OpenVMS users and administrators access to the OpenVMS operating system and applications. This gives users access to their applications through their organization’s preferred type of connection—for example, Secure Shell (SSH), a virtual private network (VPN), or a public IP address.

“Two network interfaces keep the connections separate, which is our preference for security and ease of use,” explains Sandra Levitt, an engineer at Stromasys. “It lets users connect the way they’re used to.” A best practice is to configure the VM running Charon behind a jumpbox or a service, such as Azure Bastion, which uses Secure Sockets Layer (SSL) to provide access without any exposure through public IP addresses.

For this customer, the engineers set up a VPN to accelerate communications between the legacy operating system running in the company’s datacenter and Charon on Azure. Users connect to the VM running the application using remote desktop protocol (RDP).

The new architecture also improves the company’s business continuity. Azure Backup backs up the VMs, and the internal OpenVMS backup agents protect the application.

The following image demonstrates the Azure architecture:

“Our relationship with Microsoft started with this customer, and now we work closely with the Azure migration services team. This partnership has really helped us succeed with our customers.”

– Dave Clements: systems engineer, Stromasys Inc.

A legacy is reinvented as SaaS

Before working with this customer, Stromasys hadn’t ventured far into the cloud. Running Charon-AXP on Azure showed Stromasys and the customer how a lift-and-shift migration can transform a legacy application. Azure provides a modern platform for security with storage that can expand and contract as the company’s usage varies, while the pay-as-you-go pricing makes Azure cost effective.

After the legacy application was running in a Charon-AXP emulator on Azure, the actuarial services company began offering its solution as a service to other financial companies. In effect, the company reinvented its mainframe application as a SaaS option. Two major insurance companies immediately signed up for this service.

“Their focus shifted from managing the hardware and software to just managing their real business,” says Clements. “All that without touching the legacy code.”

“Azure allows our customers to take full advantage of the benefits of a modern infrastructure.”

– Dave Clements: systems engineer, Stromasys Inc.

by Contributed | Jun 24, 2021 | Technology


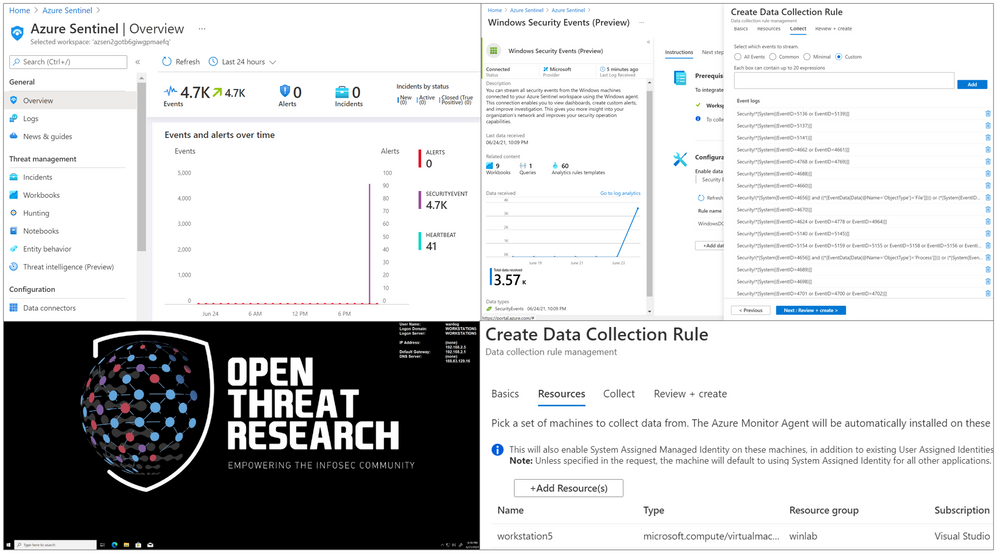

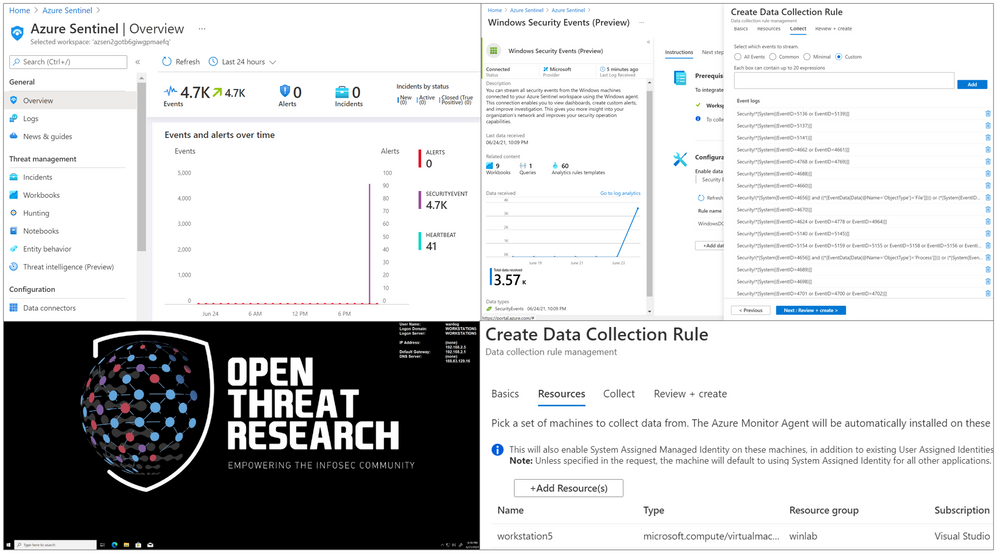

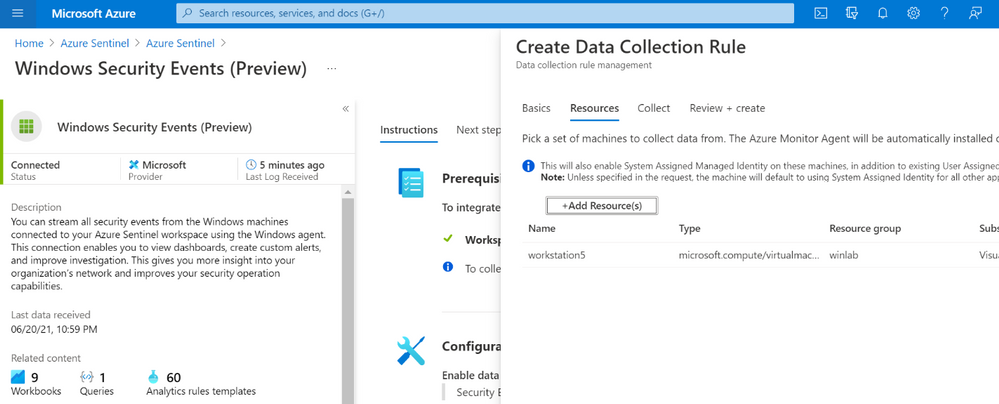

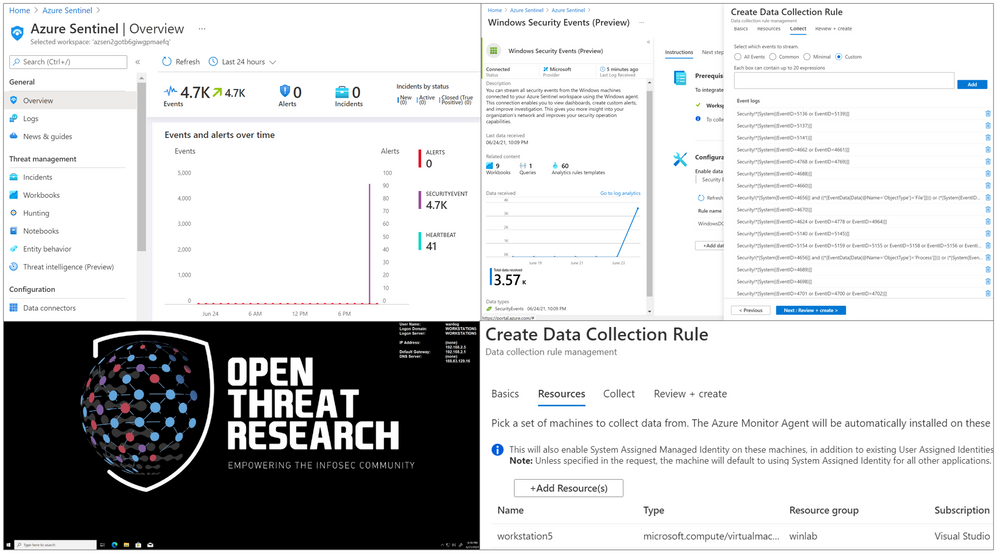

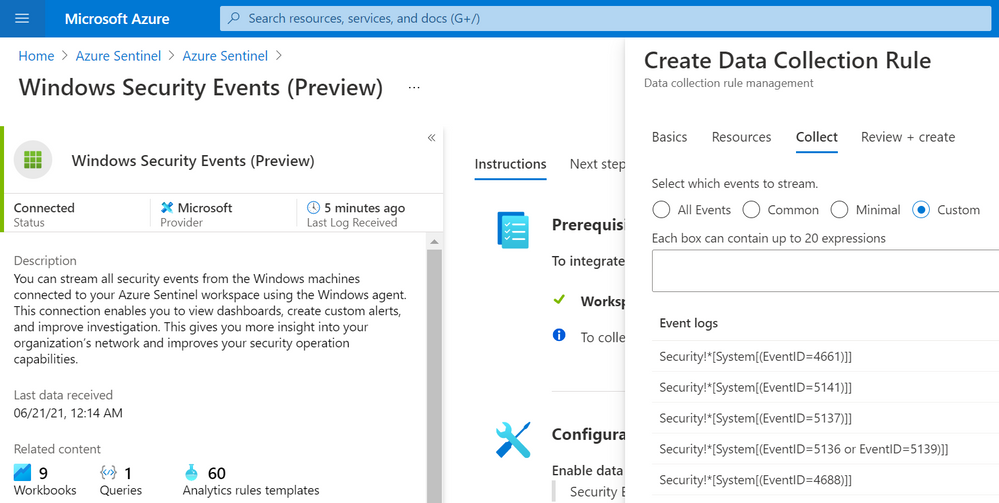

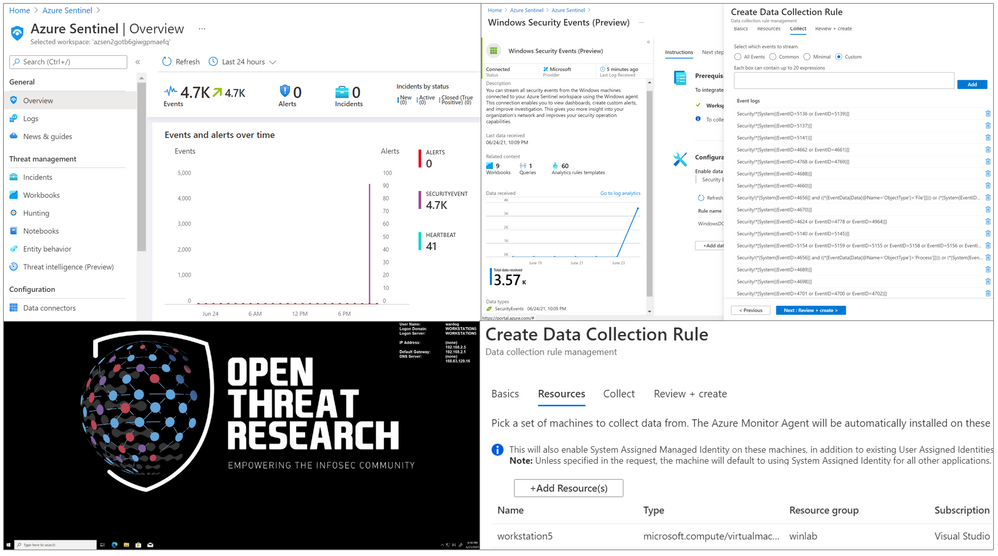

Last week, on Monday, June 14th, 2021, a new version of the Windows Security Events data connector reached public preview. This is the first data connector created leveraging the new generally available Azure Monitor Agent (AMA) and Data Collection Rules (DCR) features from the Azure Monitor ecosystem. As with any other new feature in Azure Sentinel, I wanted to expedite the testing process and empower others in the InfoSec community through a lab environment to learn more about it.

In this post, I will talk about the new features of the new data connector and how to automate the deployment of an Azure Sentinel instance with the connector enabled, the creation and association of DCRs, and the installation of the AMA on a Windows workstation. This is an extension of a blog post I wrote last year (2020), where I covered the collection of Windows security events via the Log Analytics Agent (Legacy).

Recommended Reading

I highly recommend reading the following blog posts to learn more about the announcement of the new Azure Monitor features and the Windows Security Events data connector:

Azure Sentinel To-Go!?

Azure Sentinel2Go is an open-source project maintained and developed by the Open Threat Research community to automate the deployment of an Azure Sentinel research lab and a data ingestion pipeline to consume pre-recorded datasets. Every environment I release through this initiative is an environment I use and test while performing research as part of my role in the MSTIC R&D team. Therefore, I am constantly trying to improve the deployment templates as I cover more scenarios. Feedback is greatly appreciated.

A New Version of the Windows Security Events Connector?

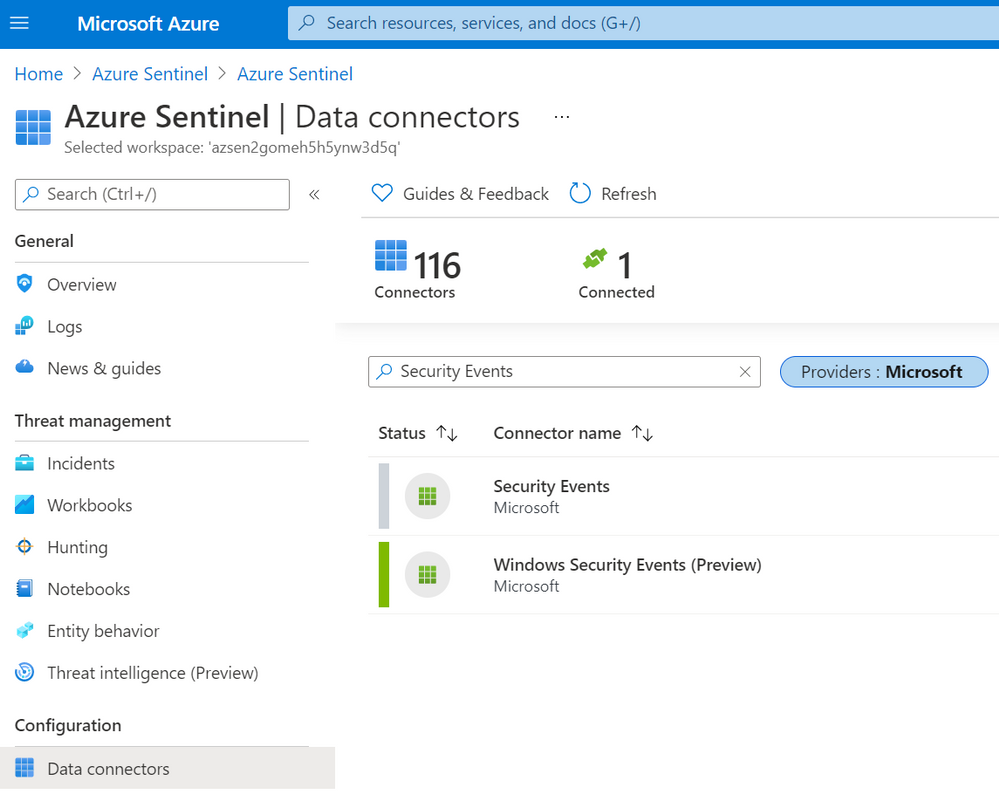

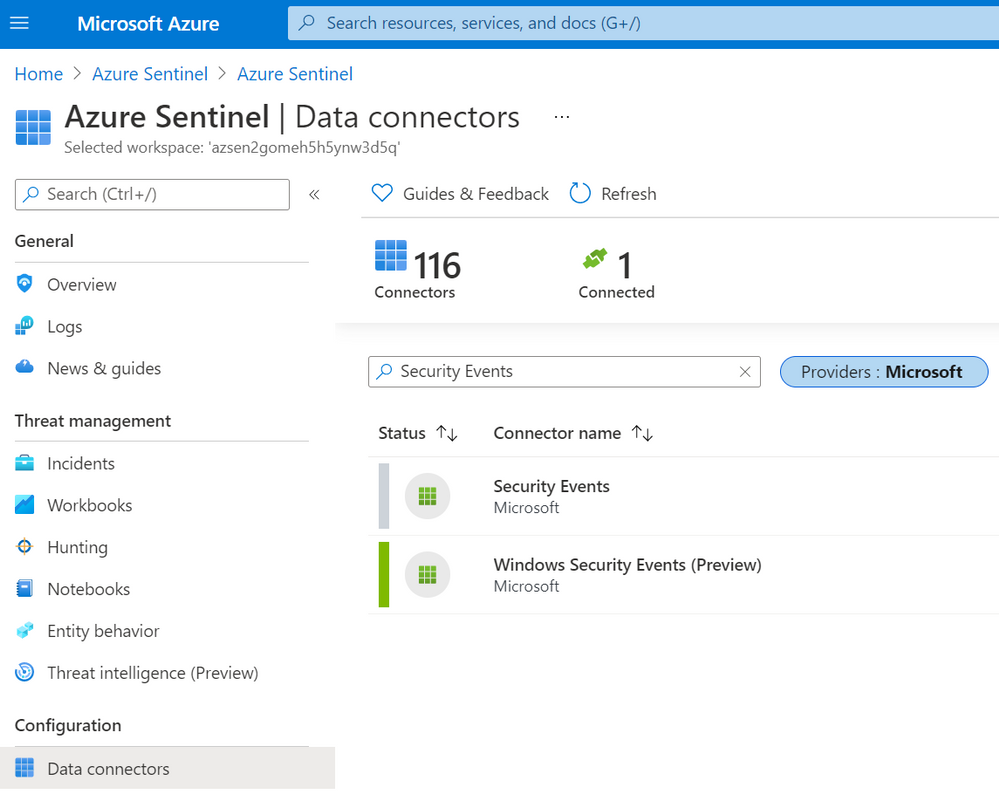

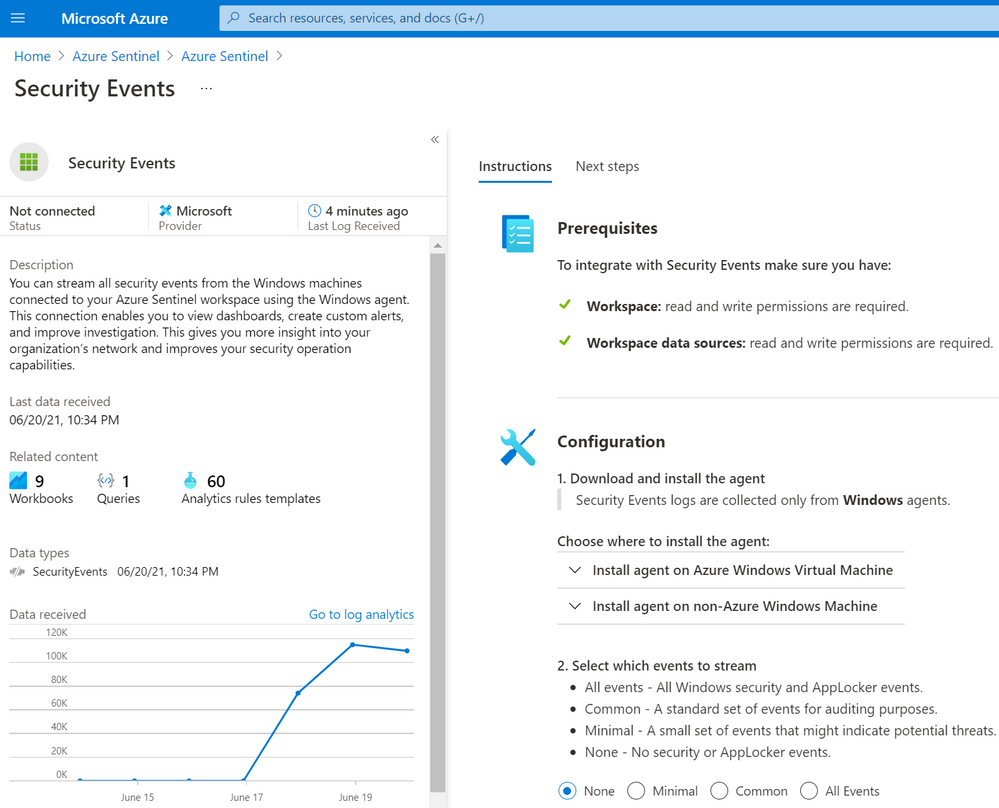

According to Microsoft docs, the Windows Security Events connector lets you stream security events from any Windows server (physical or virtual, on-premises or in any cloud) connected to your Azure Sentinel workspace. After last week, there are now two versions of this connector:

- Security events (legacy version): Based on the Log Analytics Agent (Usually known as the Microsoft Monitoring Agent (MMA) or Operations Management Suite (OMS) agent).

- Windows Security Events (new version): Based on the new Azure Monitor Agent (AMA).

In your Azure Sentinel data connector’s view, you can now see both connectors:

A New Version? What is New?

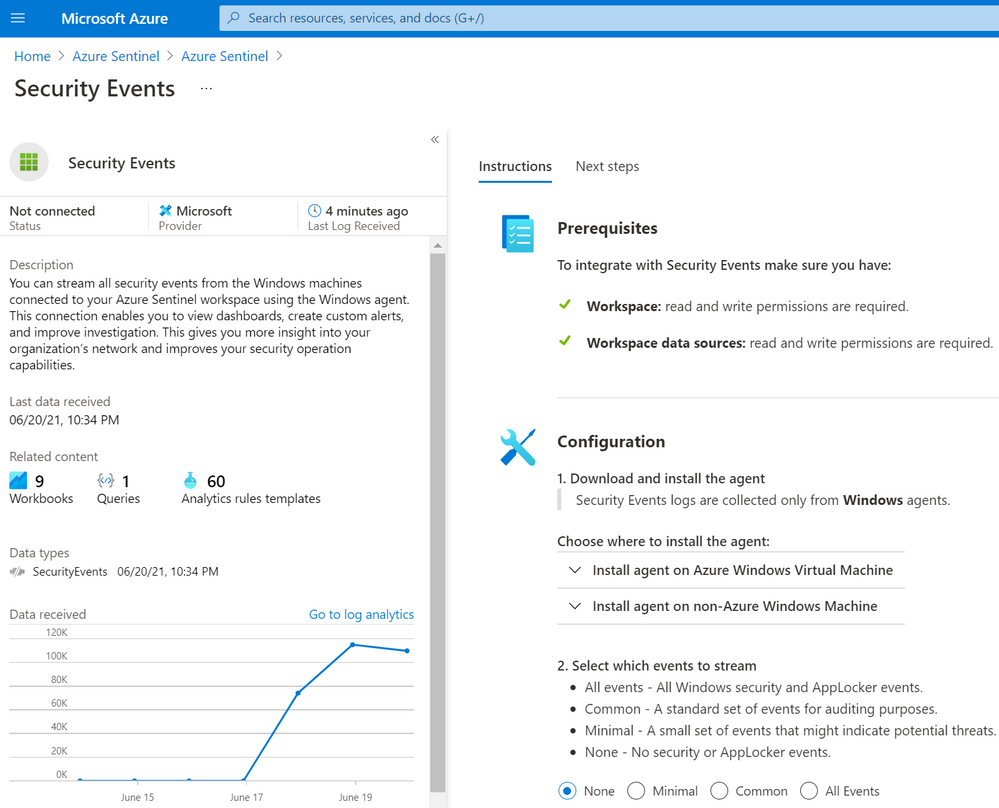

Data Connector Deployment

Besides using the Log Analytics Agent to collect and ship events, the old connector uses the Data Sources resource from the Log Analytics Workspace resource to set the collection tier of Windows security events.

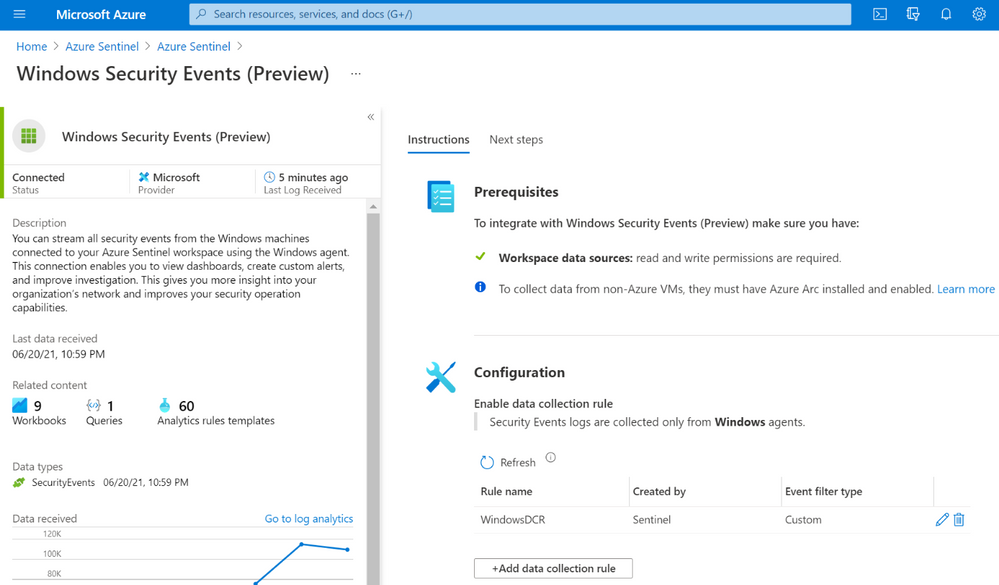

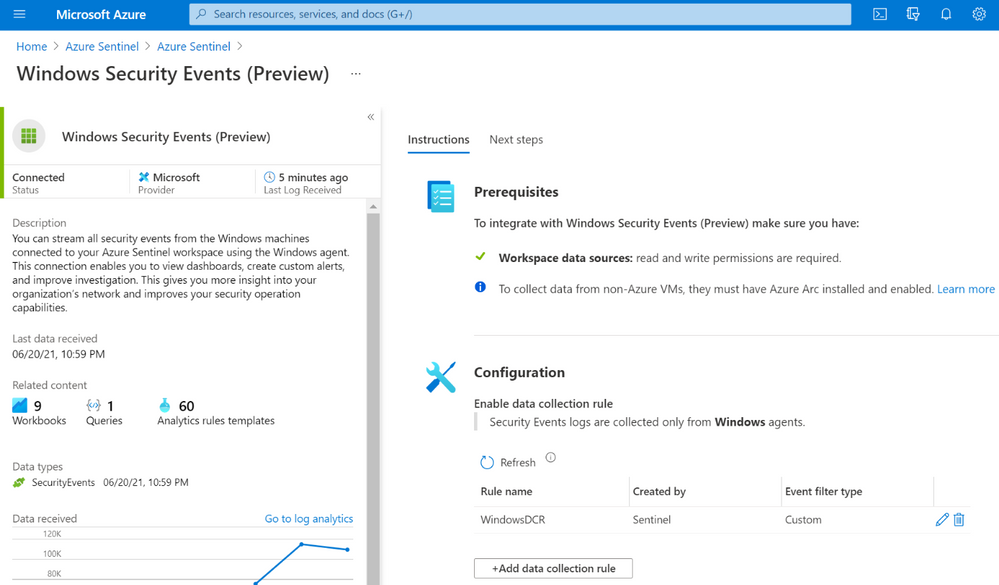

The new connector, on the other hand, uses a combination of Data Collection Rules (DCR) and Data Collection Rule Associations (DCRA). DCRs define what data to collect and where it should be sent. Here is where we can set it to send data to the Log Analytics workspace backing our Azure Sentinel instance.

In order to apply a DCR to a virtual machine, one needs to create an association between the machine and the rule. A virtual machine may have an association with multiple DCRs, and a DCR may have multiple virtual machines associated with it.
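The many-to-many relationship between machines and rules can be pictured with a small in-memory model. This is only an illustrative sketch: `AssociationRegistry` and the machine and rule names are hypothetical, and nothing here calls Azure.

```python
from collections import defaultdict


class AssociationRegistry:
    """Track many-to-many links between machines and data collection rules."""

    def __init__(self):
        self._rules_by_machine = defaultdict(set)
        self._machines_by_rule = defaultdict(set)

    def associate(self, machine, rule):
        """Record one association; repeating the same pair has no extra effect."""
        self._rules_by_machine[machine].add(rule)
        self._machines_by_rule[rule].add(machine)

    def rules_for(self, machine):
        """Return the sorted names of the rules associated with a machine."""
        return sorted(self._rules_by_machine.get(machine, ()))

    def machines_for(self, rule):
        """Return the sorted names of the machines associated with a rule."""
        return sorted(self._machines_by_rule.get(rule, ()))


if __name__ == "__main__":
    registry = AssociationRegistry()
    # Hypothetical names: one machine with two rules, one rule with two machines.
    registry.associate("WORKSTATION5", "dcr-security-events")
    registry.associate("WORKSTATION5", "dcr-applocker")
    registry.associate("WORKSTATION6", "dcr-security-events")
    print(registry.rules_for("WORKSTATION5"))
    print(registry.machines_for("dcr-security-events"))
```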

For more detailed information about setting up the Windows Security Events connector with both Log Analytics Agent and Azure Monitor Agents manually, take a look at this document.

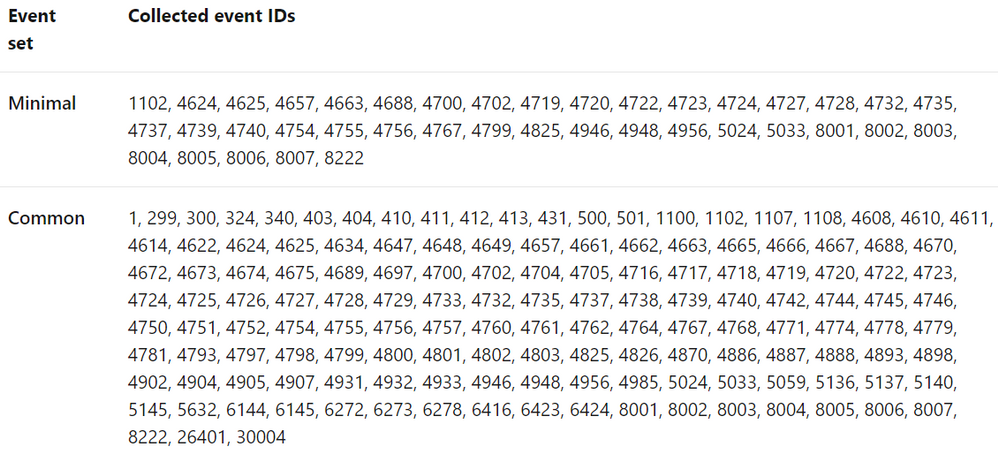

Data Collection Filtering Capabilities

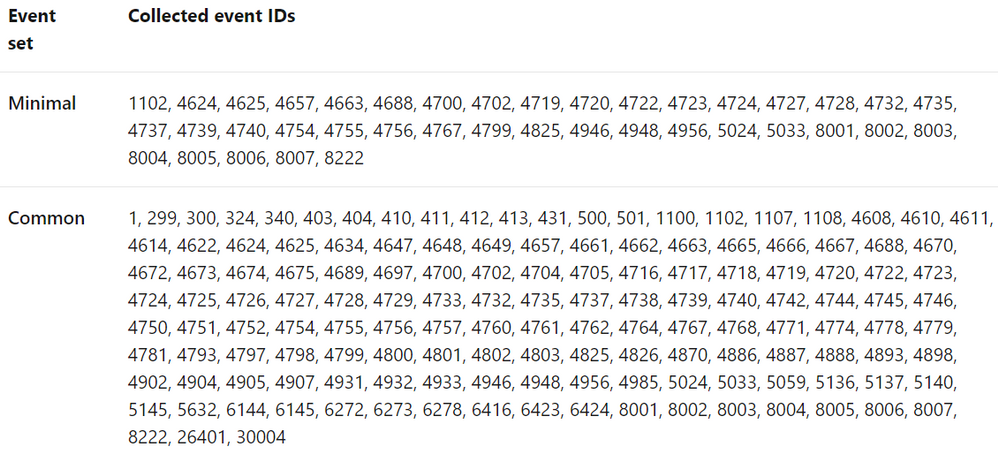

The old connector is not flexible enough to choose what specific events to collect. For example, these are the only options to collect data from Windows machines with the old connector:

- All events – All Windows security and AppLocker events.

- Common – A standard set of events for auditing purposes. The Common event set may contain some types of events that aren’t so common. This is because the main point of the Common set is to reduce the volume of events to a more manageable level, while still maintaining full audit trail capability.

- Minimal – A small set of events that might indicate potential threats. This set does not contain a full audit trail. It covers only events that might indicate a successful breach, and other important events that have very low rates of occurrence.

- None – No security or AppLocker events. (This setting is used to disable the connector.)

According to Microsoft docs, these are the pre-defined security event collection groups depending on the tier set:

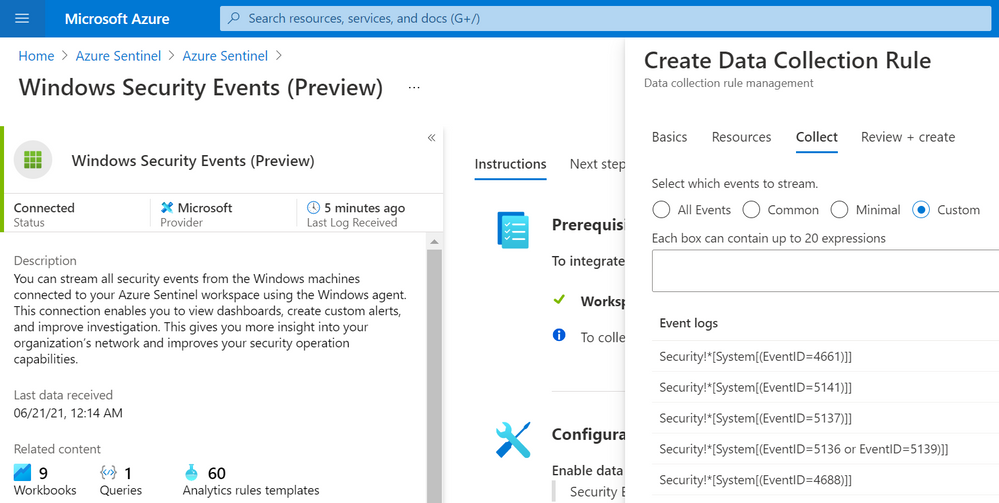

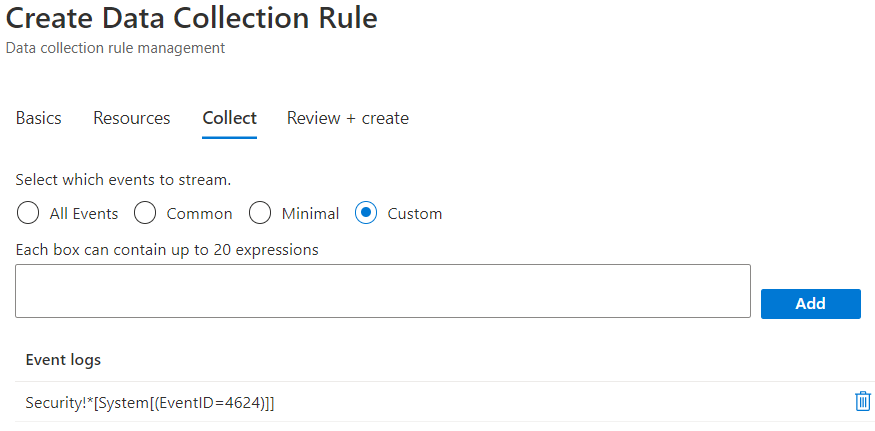

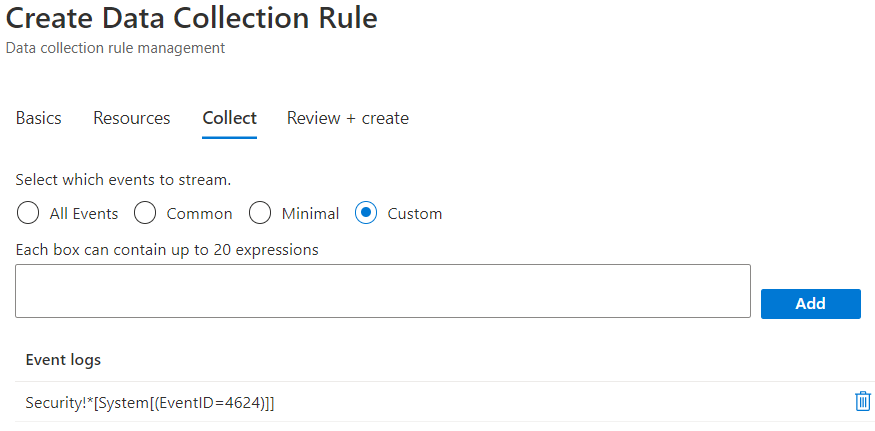

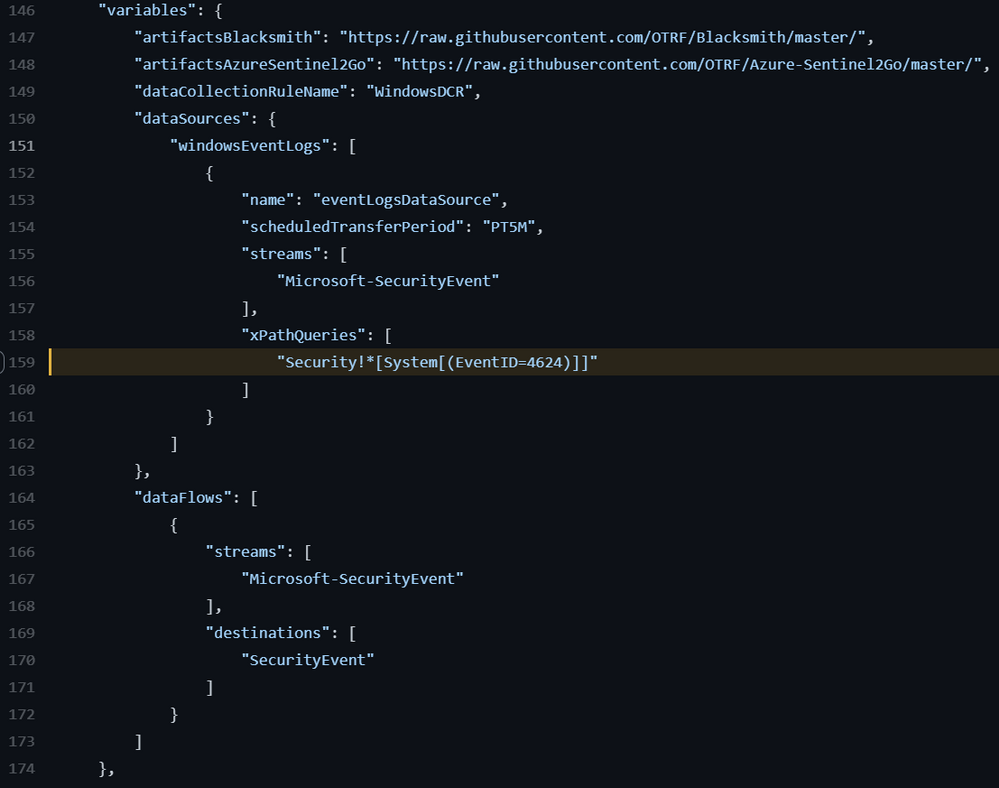

On the other hand, the new connector allows custom data collection via XPath queries. These XPath queries are defined during the creation of the data collection rule and are written in the form of LogName!XPathQuery. Here are a few examples:

- Collect only Security events with Event ID = 4624

Security!*[System[(EventID=4624)]]

- Collect only Security events with Event ID = 4624 or Security Events with Event ID = 4688

Security!*[System[(EventID=4624 or EventID=4688)]]

- Collect only Security events with Event ID = 4688 and with a process name of consent.exe.

Security!*[System[(EventID=4688)]] and *[EventData[Data[@Name='ProcessName']='C:\Windows\System32\consent.exe']]
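When several event IDs need to be collected, the LogName!XPathQuery string can be generated rather than typed by hand. The helper below is a minimal sketch; the function name `event_id_query` is hypothetical, and it only covers the simple "one or more event IDs" form shown in the first two examples above.

```python
def event_id_query(log_name, event_ids):
    """Build a 'LogName!XPathQuery' string that matches any of the event IDs."""
    ids = [int(event_id) for event_id in event_ids]  # reject non-numeric IDs early
    if not ids:
        raise ValueError("at least one event ID is required")
    clause = " or ".join(f"EventID={event_id}" for event_id in ids)
    return f"{log_name}!*[System[({clause})]]"


if __name__ == "__main__":
    print(event_id_query("Security", [4624]))
    print(event_id_query("Security", [4624, 4688]))
```

The two calls reproduce the first two queries listed above.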

You can select the custom option to select which events to stream:

Important!

Based on the new connector docs, make sure to query only Windows Security and AppLocker logs. Events from other Windows logs, or from security logs from other environments, may not adhere to the Windows Security Events schema and won’t be parsed properly, in which case they won’t be ingested to your workspace.

Also, the Azure Monitor agent supports XPath queries for XPath version 1.0 only. I recommend reading the XPath 1.0 Limitation documentation before writing XPath queries.

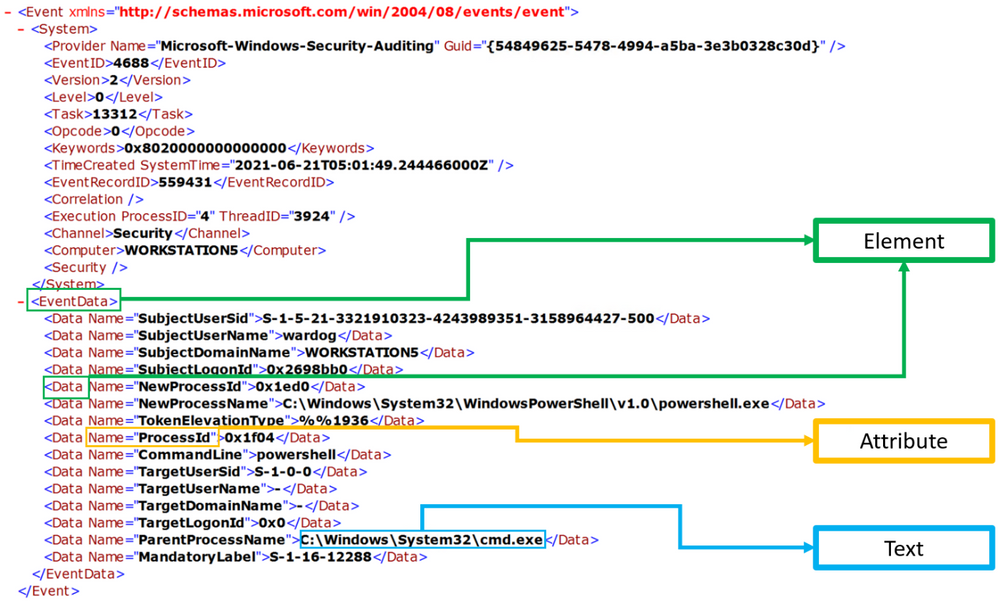

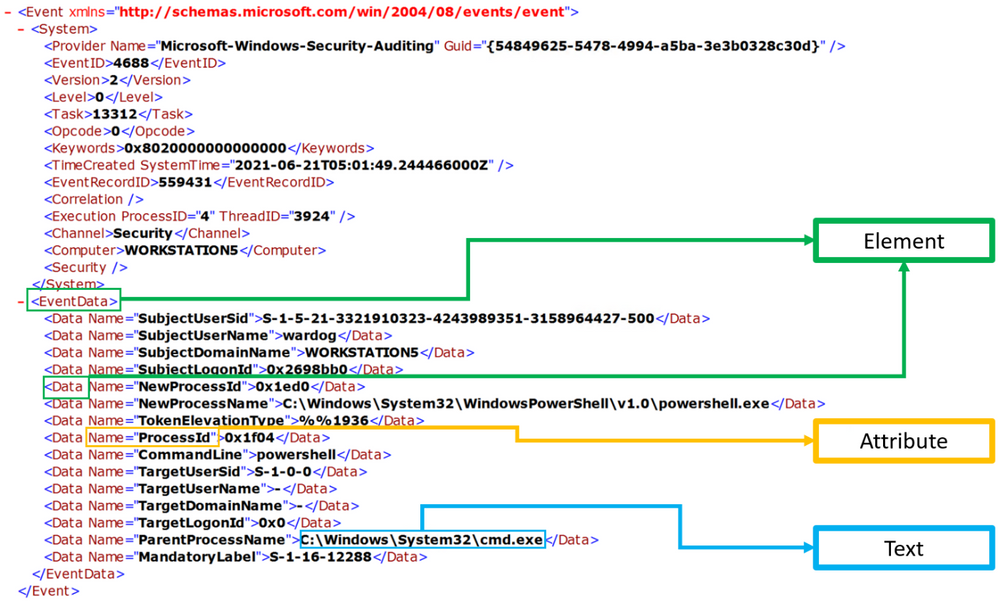

XPath?

XPath stands for XML (Extensible Markup Language) Path language, and it is used to explore and model XML documents as a tree of nodes. Nodes can be represented as elements, attributes, and text.

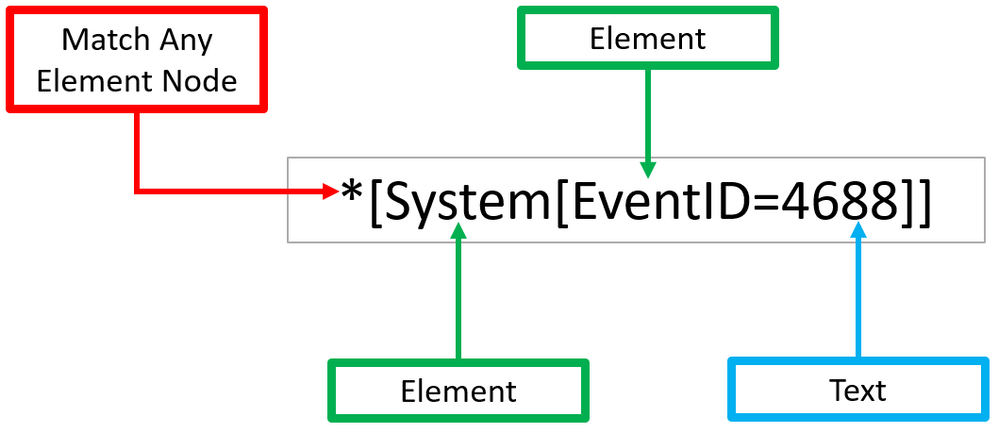

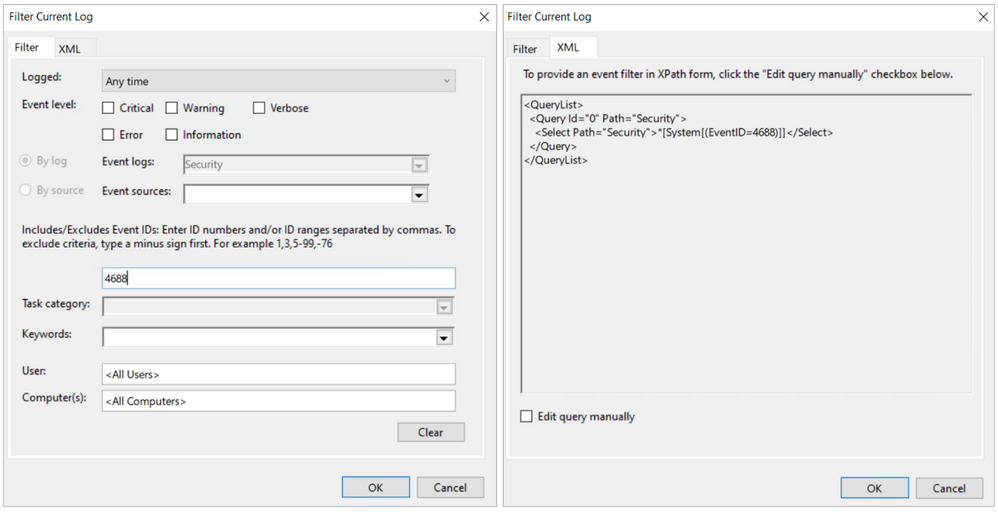

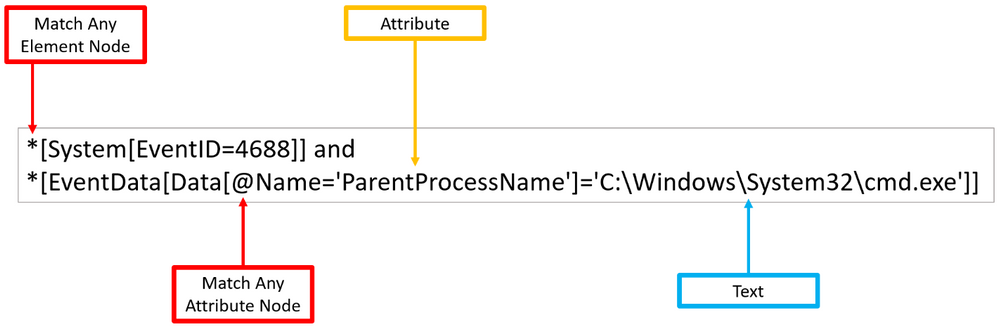

In the image below, we can see a few node examples in the XML representation of a Windows security event:

XPath Queries?

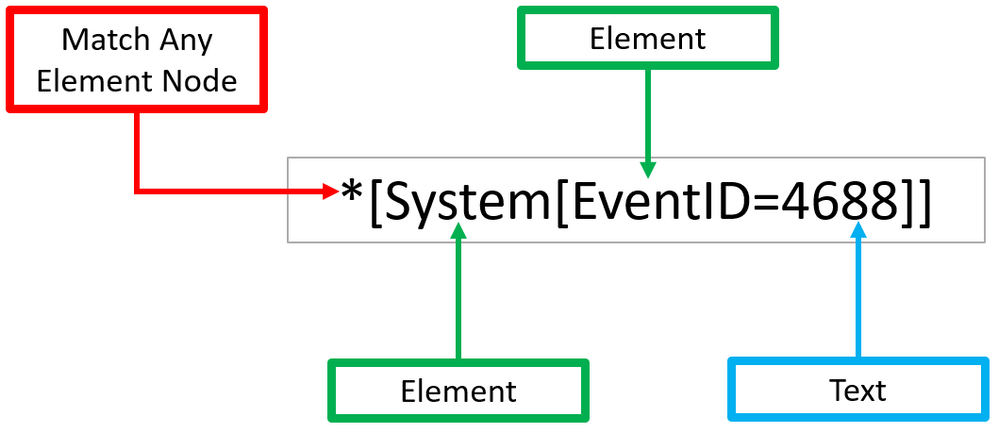

XPath queries are used to search for patterns in XML documents and leverage path expressions and predicates to find a node or filter specific nodes that contain a specific value. Wildcards such as ‘*’ and ‘@’ are used to select nodes and predicates are always embedded in square brackets “[]”.

Matching any element node with ‘*’

Using our previous Windows Security event XML example, we can process Windows Security events using the wildcard ‘*’ at the `Element` node level.

The example below walks through two ‘Element’ nodes to get to the ‘Text’ node of value ‘4688’.

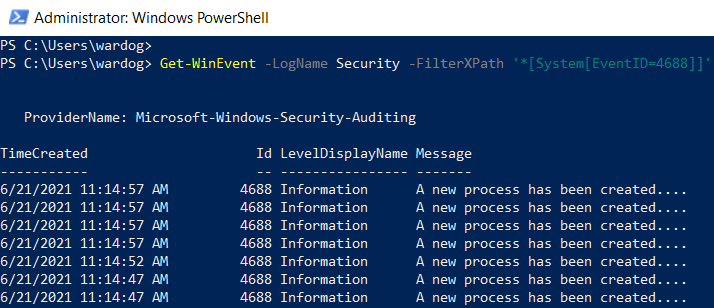

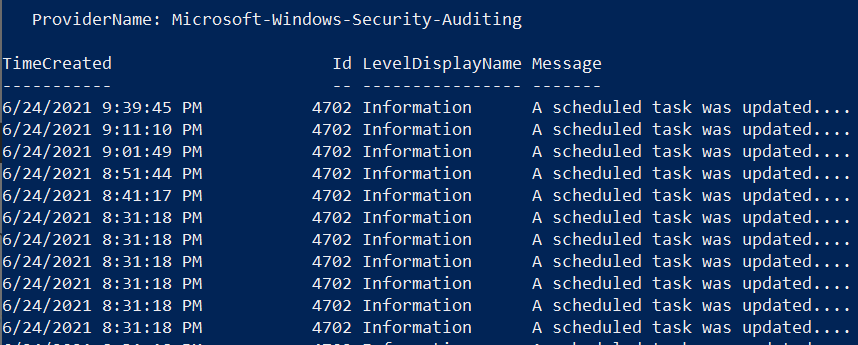

You can test this basic ‘XPath’ query via PowerShell.

- Open a PowerShell console as ‘Administrator’.

- Use the Get-WinEvent command to pass the XPath query.

- Use the ‘Logname’ parameter to define what event channel to run the query against.

- Use the ‘FilterXPath’ parameter to set the XPath query.

Get-WinEvent -LogName Security -FilterXPath '*[System[EventID=4688]]'

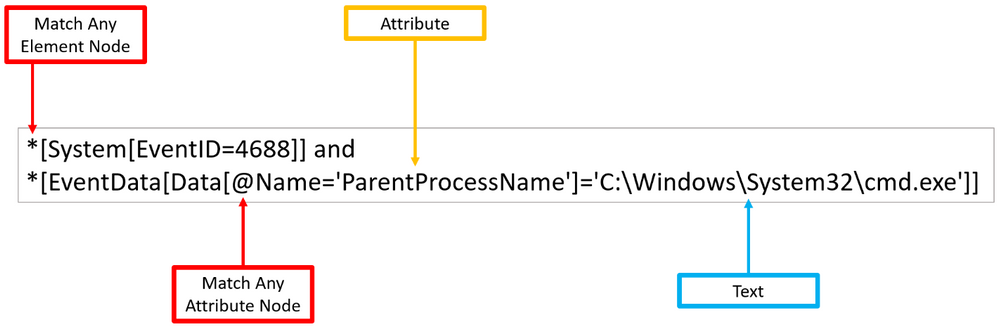

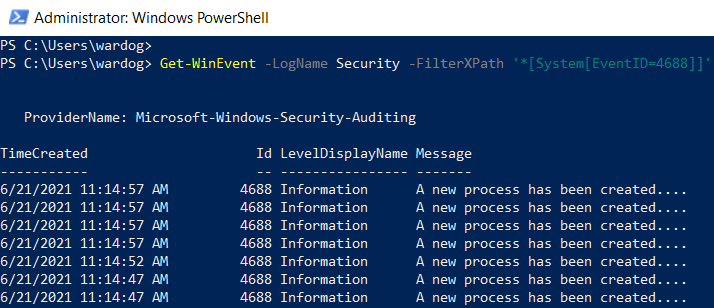

Matching any attribute node with ‘@’

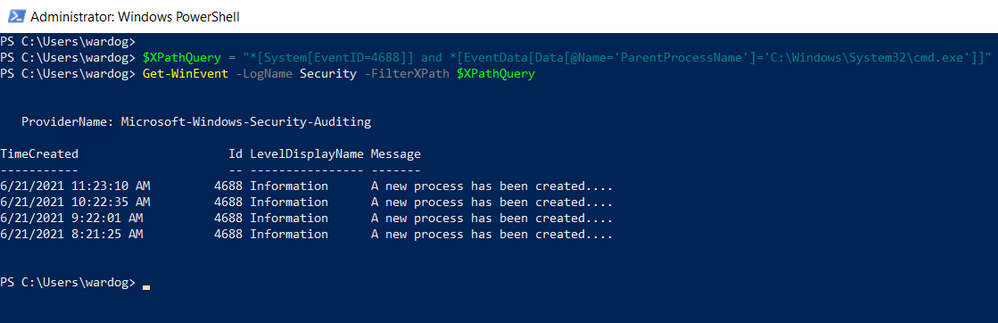

As shown before, ‘Element’ nodes can contain ‘Attributes’ and we can use the wildcard ‘@’ to search for ‘Text’ nodes at the ‘Attribute’ node level. The example below extends the previous one and adds a filter to search for a specific ‘Attribute’ node that contains the following text: ‘C:\Windows\System32\cmd.exe’.

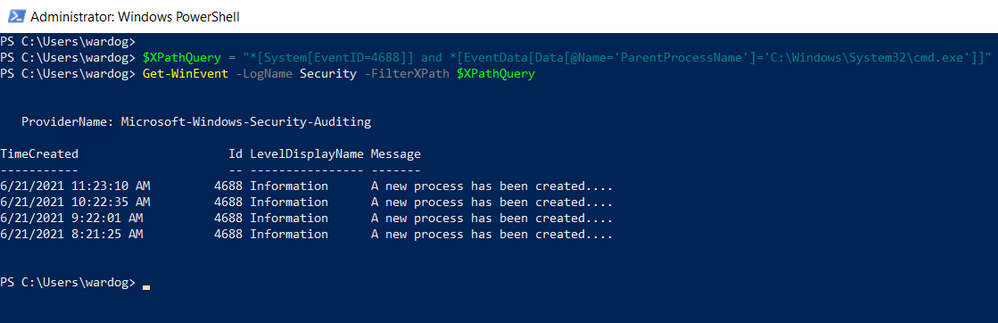

Once again, you can test the XPath query via PowerShell as Administrator.

$XPathQuery = "*[System[EventID=4688]] and *[EventData[Data[@Name='ParentProcessName']='C:\Windows\System32\cmd.exe']]"

Get-WinEvent -LogName Security -FilterXPath $XPathQuery

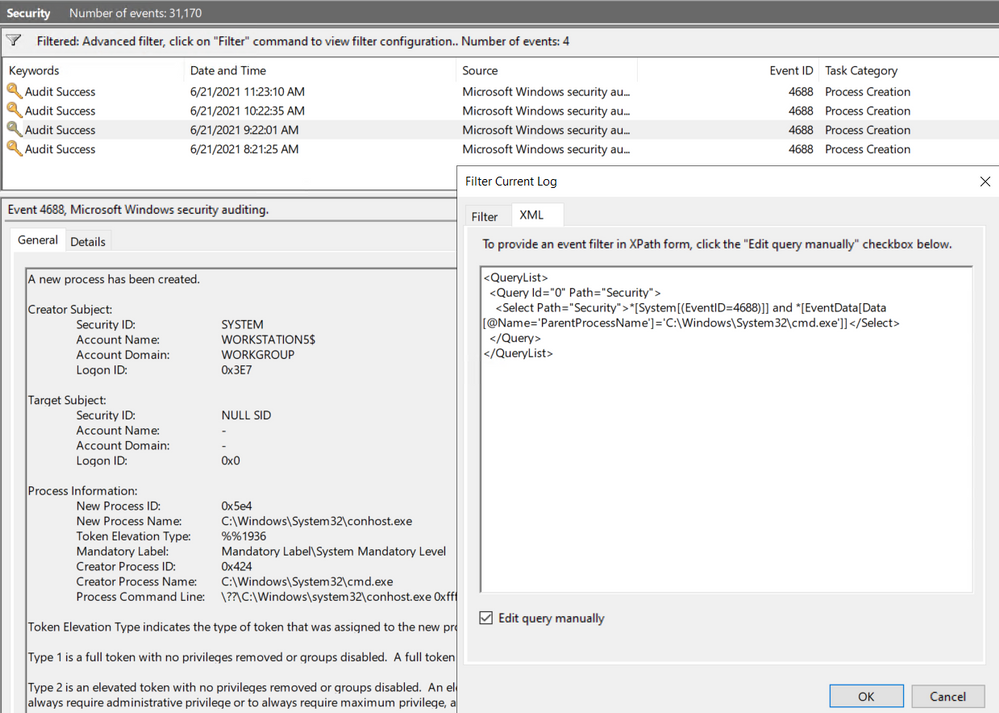

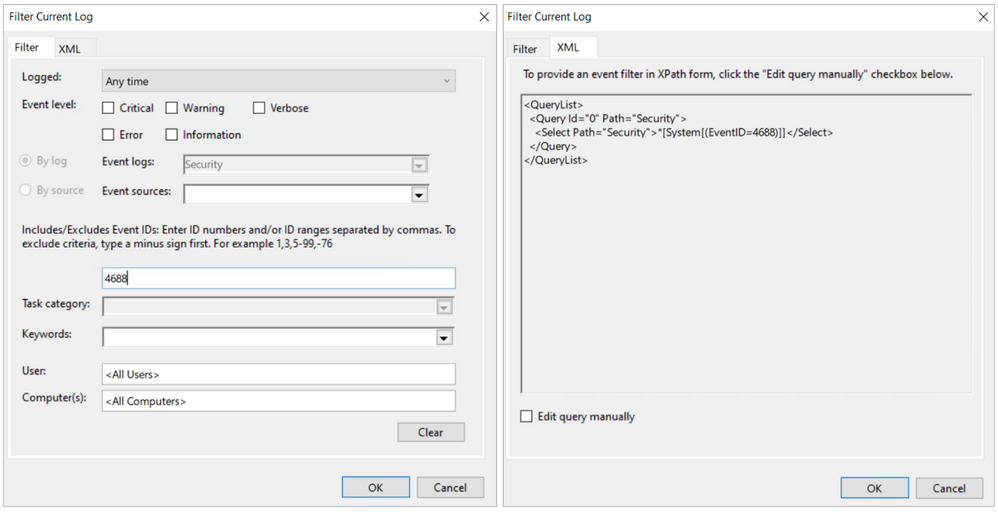

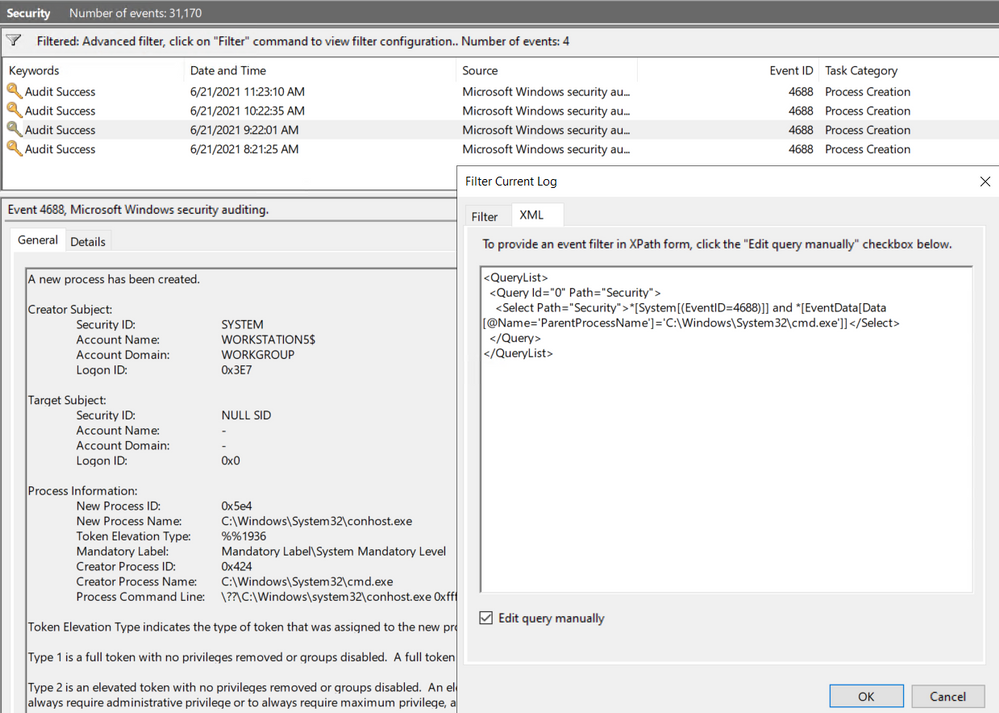
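The attribute filter can be sketched offline as well. The example below again relies on Python's `xml.etree.ElementTree`, with a namespace-free sample event that I made up for illustration (the `whoami.exe` value and the `data_value` helper are assumptions). It selects the ‘Data’ element by its ‘Name’ attribute and then compares its text.

```python
import xml.etree.ElementTree as ET

# Simplified, namespace-free stand-in for a 4688 (process creation) event.
EVENT = r"""
<Event>
  <System><EventID>4688</EventID></System>
  <EventData>
    <Data Name="NewProcessName">C:\Windows\System32\whoami.exe</Data>
    <Data Name="ParentProcessName">C:\Windows\System32\cmd.exe</Data>
  </EventData>
</Event>
"""


def data_value(event, name):
    """Return the text of the EventData/Data node whose Name attribute is name."""
    node = event.find(f"EventData/Data[@Name='{name}']")
    return None if node is None else node.text


event = ET.fromstring(EVENT)
# Both conditions must hold, like the 'and' in the XPath query above.
is_match = (
    event.find("System[EventID='4688']") is not None
    and data_value(event, "ParentProcessName") == r"C:\Windows\System32\cmd.exe"
)
print(is_match)  # prints True
```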

Can I Use XPath Queries in Event Viewer?

Every time you add a filter through the Event Viewer UI, you can also get to the XPath query representation of the filter. The XPath query is part of a QueryList node which allows you to define and run multiple queries at once.

We can take our previous example where we searched for a specific attribute and run it through the Event Viewer Filter XML UI.

<QueryList>
  <Query Id="0" Path="Security">
    <Select Path="Security">*[System[(EventID=4688)]] and *[EventData[Data[@Name='ParentProcessName']='C:\Windows\System32\cmd.exe']]</Select>
  </Query>
</QueryList>
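If you want to generate this wrapper instead of typing it by hand, a minimal sketch could look like the following. It uses Python's standard library only, and `build_query_list` is a hypothetical helper name of mine. Building the XML through an API, rather than string concatenation, keeps quoting and escaping consistent.

```python
import xml.etree.ElementTree as ET


def build_query_list(channel, xpath, query_id=0):
    """Wrap one XPath query in a QueryList/Query/Select structure."""
    query_list = ET.Element("QueryList")
    query = ET.SubElement(query_list, "Query", Id=str(query_id), Path=channel)
    select = ET.SubElement(query, "Select", Path=channel)
    select.text = xpath
    return ET.tostring(query_list, encoding="unicode")


xpath = (
    "*[System[(EventID=4688)]] and "
    r"*[EventData[Data[@Name='ParentProcessName']='C:\Windows\System32\cmd.exe']]"
)
xml_text = build_query_list("Security", xpath)
print(xml_text)
```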

Now that we have covered some of the main changes and features of the new version of the Windows Security Events data connector, it is time to show you how to create a lab environment for you to test your own XPath queries for research purposes and before pushing them to production.

Deploy Lab Environment

- Identify the right Azure resources to deploy.

- Create deployment template.

- Run deployment template.

Identify the Right Azure Resources to Deploy

As mentioned earlier in this post, the old connector uses the Data Sources resource from the Log Analytics Workspace resource to set the collection tier of Windows security events.

This is the Azure Resource Manager (ARM) template I use in Azure-Sentinel2Go to set it up:

Azure-Sentinel2Go/securityEvents.json at master · OTRF/Azure-Sentinel2Go (github.com)

Data Sources Azure Resource

{
    "type": "Microsoft.OperationalInsights/workspaces/dataSources",
    "apiVersion": "2020-03-01-preview",
    "location": "eastus",
    "name": "WORKSPACE/SecurityInsightsSecurityEventCollectionConfiguration",
    "kind": "SecurityInsightsSecurityEventCollectionConfiguration",
    "properties": {
        "tier": "All",
        "tierSetMethod": "Custom"
    }
}

However, the new connector uses a combination of Data Collection Rules (DCR) and Data Collection Rule Associations (DCRA).

This is the ARM template I use to create data collection rules:

Azure-Sentinel2Go/creation-azureresource.json at master · OTRF/Azure-Sentinel2Go (github.com)

Data Collection Rules Azure Resource

{
    "type": "microsoft.insights/dataCollectionRules",
    "apiVersion": "2019-11-01-preview",
    "name": "WindowsDCR",
    "location": "eastus",
    "tags": {
        "createdBy": "Sentinel"
    },
    "properties": {
        "dataSources": {
            "windowsEventLogs": [
                {
                    "name": "eventLogsDataSource",
                    "scheduledTransferPeriod": "PT5M",
                    "streams": [
                        "Microsoft-SecurityEvent"
                    ],
                    "xPathQueries": [
                        "Security!*[System[(EventID=4624)]]"
                    ]
                }
            ]
        },
        "destinations": {
            "logAnalytics": [
                {
                    "name": "SecurityEvent",
                    "workspaceId": "AZURE-SENTINEL-WORKSPACEID",
                    "workspaceResourceId": "AZURE-SENTINEL-WORKSPACERESOURCEID"
                }
            ]
        },
        "dataFlows": [
            {
                "streams": [
                    "Microsoft-SecurityEvent"
                ],
                "destinations": [
                    "SecurityEvent"
                ]
            }
        ]
    }
}
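For quick experiments it can help to generate this resource instead of editing JSON by hand. The sketch below mirrors the field names and values of the resource above. The function `build_dcr` and its parameters are my own assumptions for illustration, not part of Azure-Sentinel2Go or any Azure SDK.

```python
import json


def build_dcr(name, location, workspace_id, workspace_resource_id, xpath_queries):
    """Return a data collection rule resource shaped like the example above."""
    stream = "Microsoft-SecurityEvent"
    destination = "SecurityEvent"
    return {
        "type": "microsoft.insights/dataCollectionRules",
        "apiVersion": "2019-11-01-preview",
        "name": name,
        "location": location,
        "tags": {"createdBy": "Sentinel"},
        "properties": {
            "dataSources": {
                "windowsEventLogs": [
                    {
                        "name": "eventLogsDataSource",
                        "scheduledTransferPeriod": "PT5M",
                        "streams": [stream],
                        "xPathQueries": list(xpath_queries),
                    }
                ]
            },
            "destinations": {
                "logAnalytics": [
                    {
                        "name": destination,
                        "workspaceId": workspace_id,
                        "workspaceResourceId": workspace_resource_id,
                    }
                ]
            },
            # The data flow must reference the same stream and destination names.
            "dataFlows": [{"streams": [stream], "destinations": [destination]}],
        },
    }


rule = build_dcr(
    "WindowsDCR",
    "eastus",
    "AZURE-SENTINEL-WORKSPACEID",
    "AZURE-SENTINEL-WORKSPACERESOURCEID",
    ["Security!*[System[(EventID=4624)]]"],
)
print(json.dumps(rule, indent=4))
```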

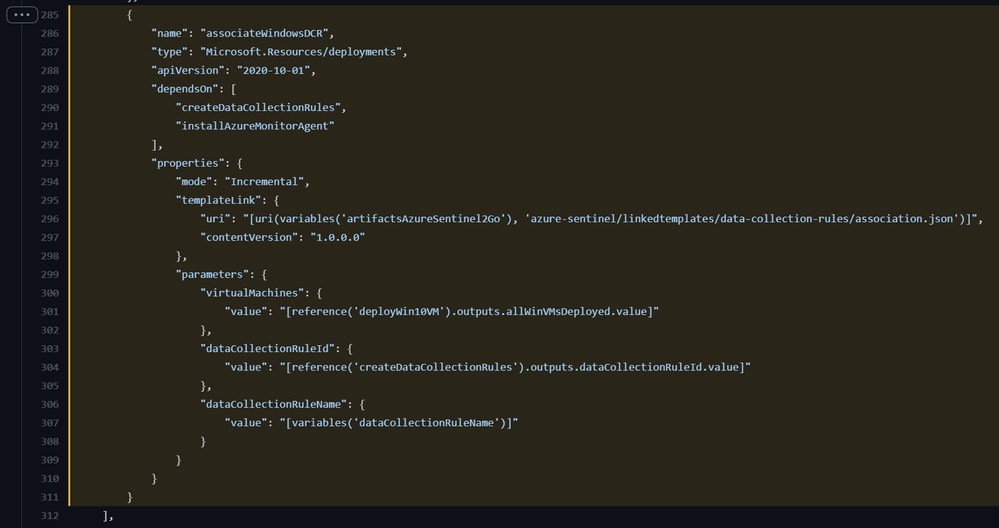

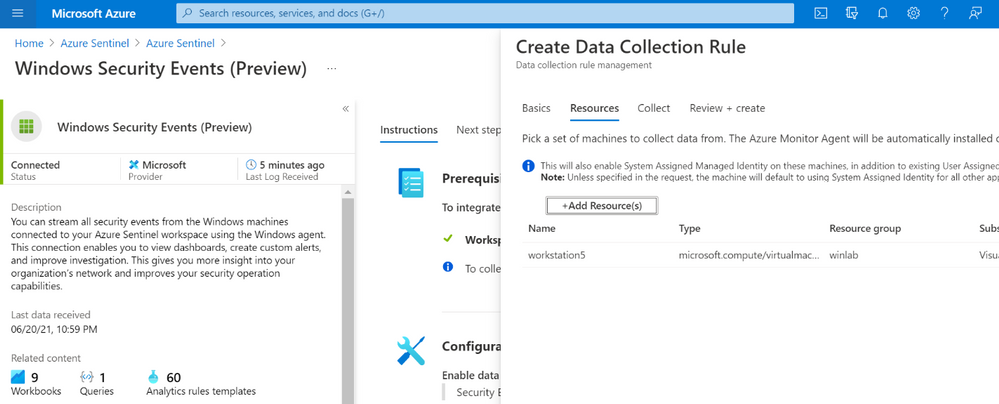

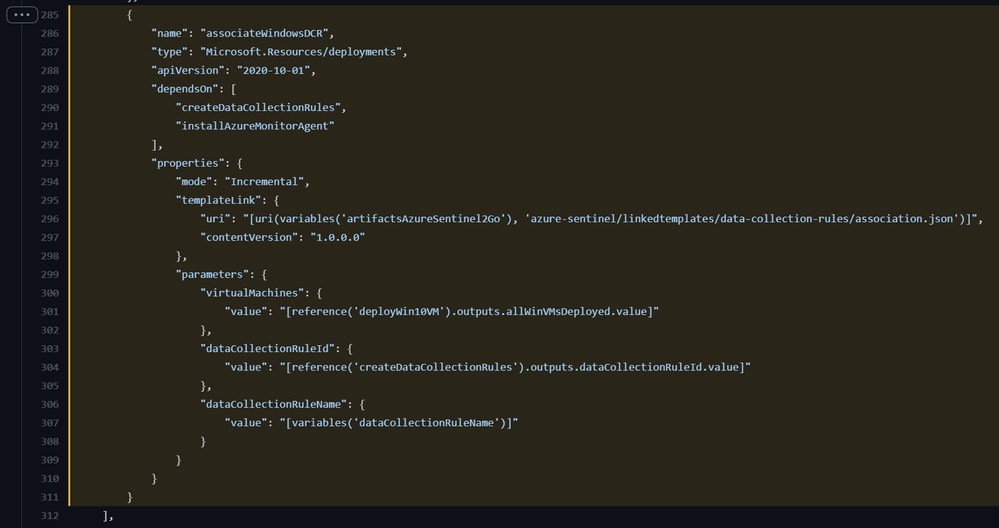

One additional step in the setup of the new connector is the association of the DCR with Virtual Machines.

This is the ARM template I use to create DCRAs:

Azure-Sentinel2Go/association.json at master · OTRF/Azure-Sentinel2Go (github.com)

Data Collection Rule Associations Azure Resource

{
    "name": "WORKSTATION5/microsoft.insights/WindowsDCR",
    "type": "Microsoft.Compute/virtualMachines/providers/dataCollectionRuleAssociations",
    "apiVersion": "2019-11-01-preview",
    "location": "eastus",
    "properties": {
        "description": "Association of data collection rule. Deleting this association will break the data collection for this virtual machine.",
        "dataCollectionRuleId": "DATACOLLECTIONRULEID"
    }
}
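The association name follows a VM/provider/rule pattern, which is easy to get wrong when several machines are involved. A small sketch, assuming a hypothetical helper `build_dcra` that simply reproduces the fields of the resource above:

```python
def build_dcra(vm_name, dcr_name, dcr_id, location):
    """Return a DCR association resource for one virtual machine."""
    return {
        # Name pattern taken from the example: <VM>/microsoft.insights/<DCR name>
        "name": f"{vm_name}/microsoft.insights/{dcr_name}",
        "type": "Microsoft.Compute/virtualMachines/providers/dataCollectionRuleAssociations",
        "apiVersion": "2019-11-01-preview",
        "location": location,
        "properties": {
            "description": "Association of data collection rule.",
            "dataCollectionRuleId": dcr_id,
        },
    }


association = build_dcra("WORKSTATION5", "WindowsDCR", "DATACOLLECTIONRULEID", "eastus")
print(association["name"])  # prints WORKSTATION5/microsoft.insights/WindowsDCR
```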

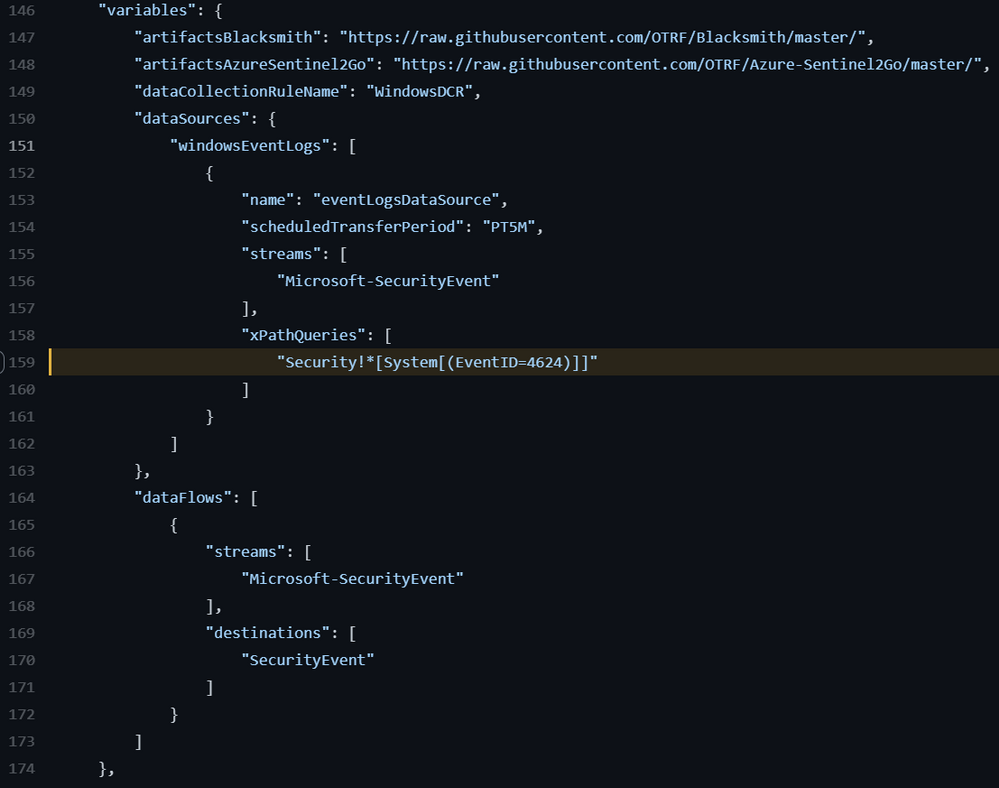

What about the XPath Queries?

As shown in the previous section, the XPath query is part of the “dataSources” section of the data collection rule resource. It is defined under the ‘windowsEventLogs’ data source type.

"dataSources": {
    "windowsEventLogs": [
        {
            "name": "eventLogsDataSource",
            "scheduledTransferPeriod": "PT5M",
            "streams": [
                "Microsoft-SecurityEvent"
            ],
            "xPathQueries": [
                "Security!*[System[(EventID=4624)]]"
            ]
        }
    ]
}
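Note that each entry in the XPath queries list combines the event channel and the XPath query, separated by ‘!’. The sketch below shows one way to build and split such entries. Both helper names are hypothetical, and the builder only covers simple Event ID filters like the ones used in this post.

```python
def build_dcr_xpath(channel, event_ids):
    """Build a '<channel>!<xpath>' entry that filters on one or more Event IDs."""
    condition = " or ".join(f"EventID={event_id}" for event_id in event_ids)
    return f"{channel}!*[System[({condition})]]"


def split_dcr_xpath(entry):
    """Split an entry into (channel, xpath) at the first '!'."""
    channel, _, xpath = entry.partition("!")
    return channel, xpath


entry = build_dcr_xpath("Security", [4624])
print(entry)                   # prints Security!*[System[(EventID=4624)]]
print(split_dcr_xpath(entry))  # prints ('Security', '*[System[(EventID=4624)]]')
```

The XPath part returned by `split_dcr_xpath` is what you would pass to Get-WinEvent with the ‘FilterXPath’ parameter to validate the query locally.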

Create Deployment Template

We can easily add all those ARM templates to an ‘Azure Sentinel & Win10 Workstation’ basic template. We just need to make sure we install the Azure Monitor Agent instead of the Log Analytics one, and enable the system-assigned managed identity in the Azure VM.

Template Resource List to Deploy:

- Azure Sentinel Instance

- Windows Virtual Machine

  - Azure Monitor Agent installed

  - System-assigned managed identity enabled

- Data Collection Rule

  - Log Analytics Workspace ID

  - Log Analytics Workspace Resource ID

- Data Collection Rule Association

  - Data Collection Rule ID

  - Windows Virtual Machine Resource Name

The following ARM template can be used for our first basic scenario:

Azure-Sentinel2Go/Win10-DCR-AzureResource.json at master · OTRF/Azure-Sentinel2Go (github.com)

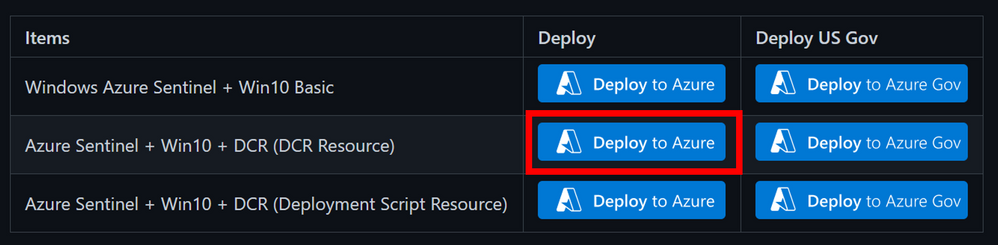

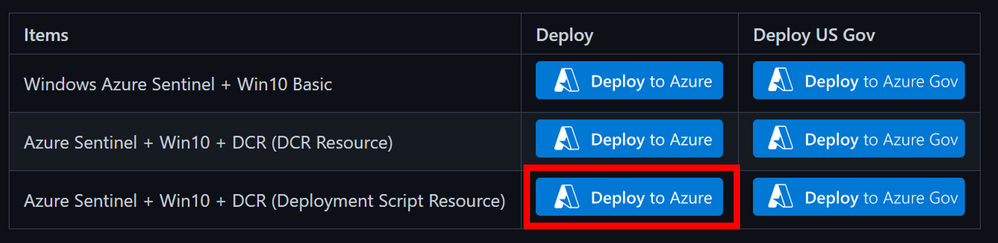

Run Deployment Template

You can deploy the ARM template via a “Deploy to Azure” button or via Azure CLI.

“Deploy to Azure” Button

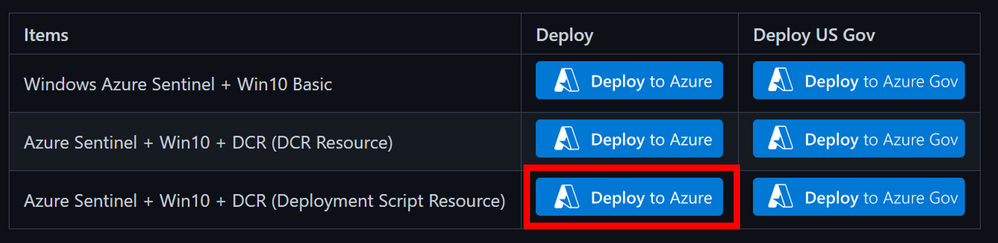

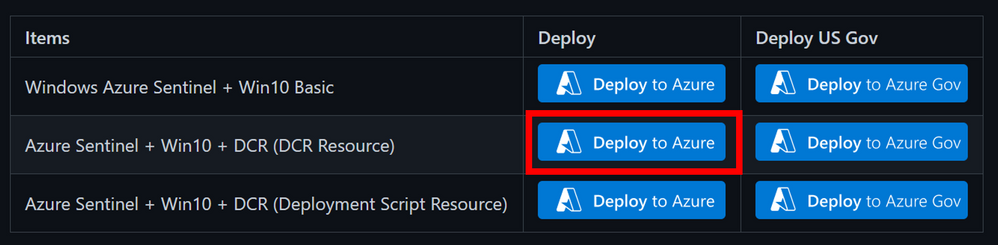

- Browse to the Azure Sentinel2Go repository.

- Go to grocery-list/Win10/demos.

- Click on the “Deploy to Azure” button next to “Azure Sentinel + Win10 + DCR (DCR Resource)”.

- Fill out the required parameters:

  - adminUsername: admin user to create in the Windows workstation.

  - adminPassword: password for the admin user.

  - allowedIPAddresses: public IP address to restrict access to the lab environment.

- Wait 5-10 minutes and your environment should be ready.

Azure CLI

- Download demo template.

- Open a terminal where you can run Azure CLI (e.g. PowerShell).

- Log in to your Azure Tenant locally.

az login

- Create Resource Group (Optional)

az group create -n AzSentinelDemo -l eastus

- Deploy ARM template locally.

az deployment group create -f ./Win10-DCR-AzureResource.json -g MYRESOURCEGROUP --parameters adminUsername=MYUSER adminPassword=MYUSERPASSWORD allowedIPAddresses=x.x.x.x

- Wait 5-10 mins and your environment should be ready.

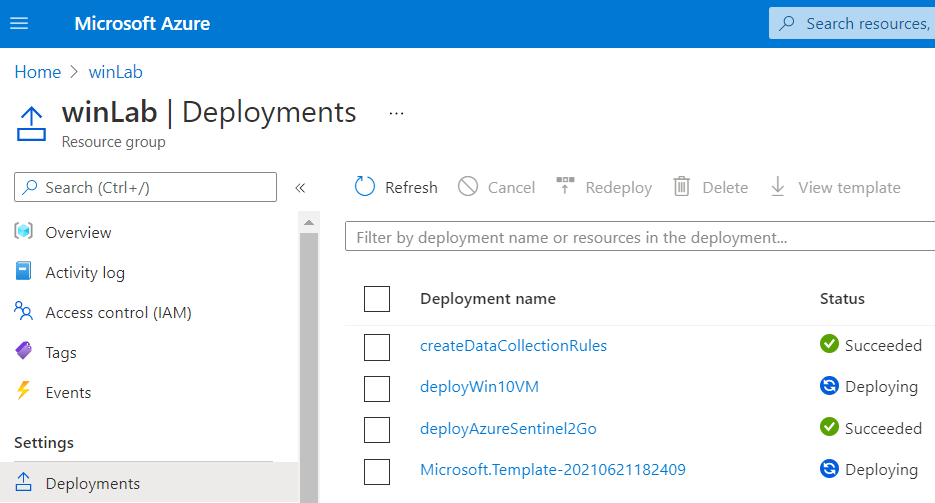

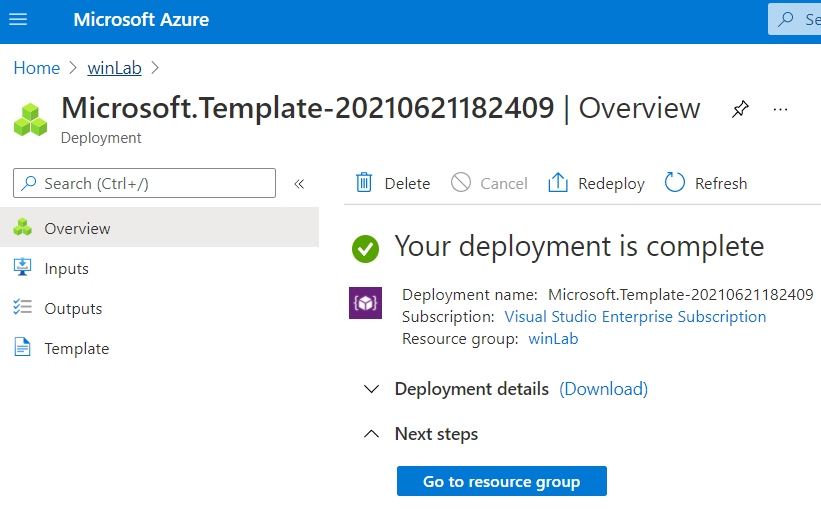

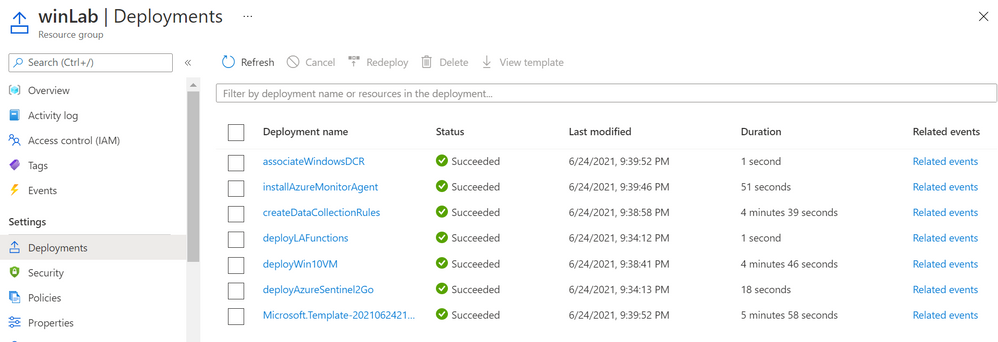

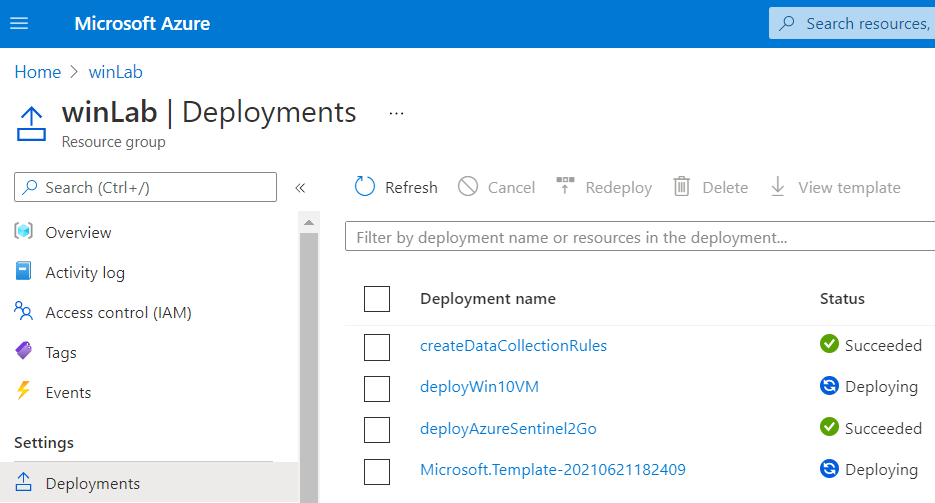

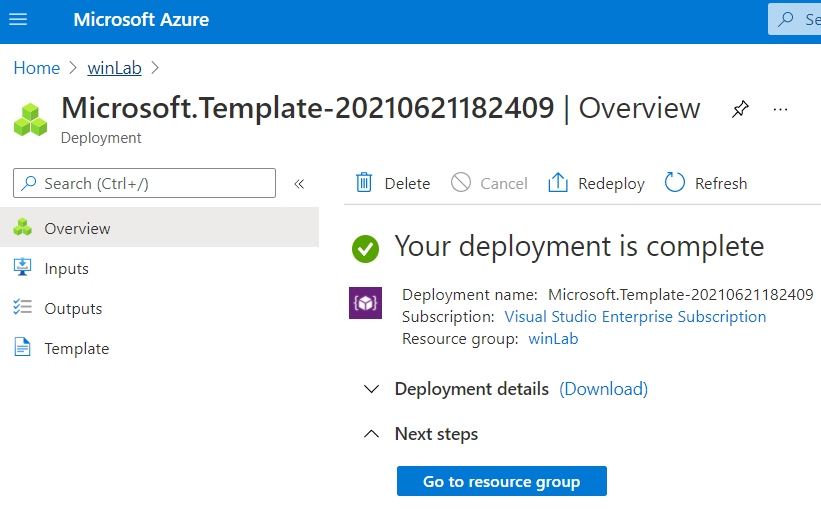

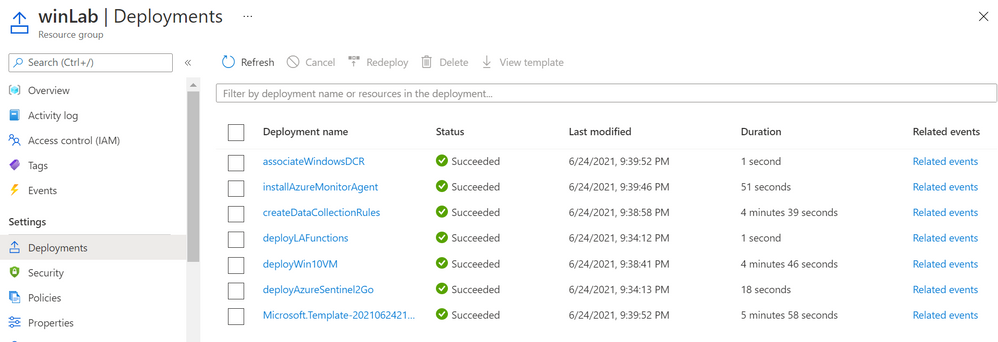

Whether you use the UI or the CLI, you can monitor your deployment by going to Resource Group > Deployments:

Verify Lab Resources

Once your environment is deployed successfully, I recommend verifying every resource that was deployed.

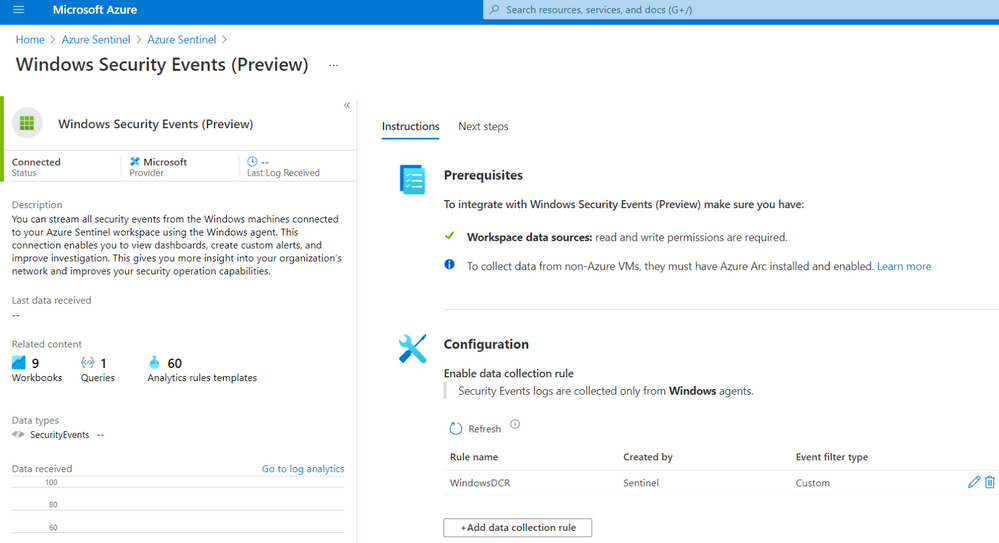

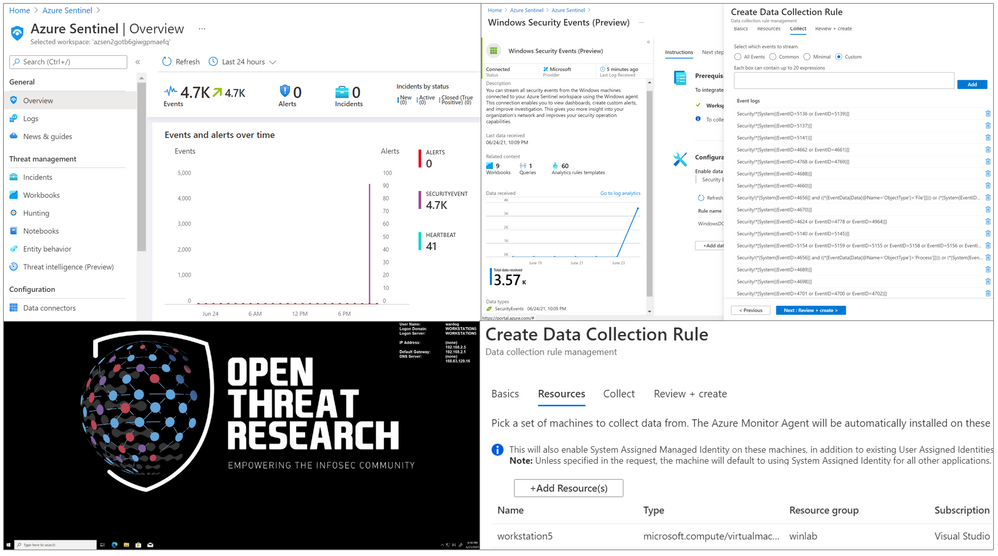

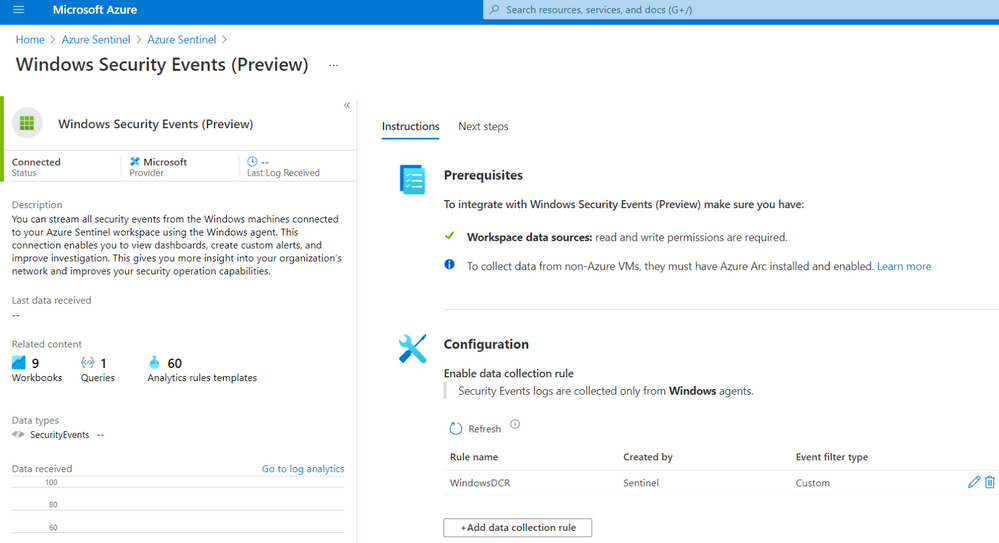

Azure Sentinel New Data Connector

You will see the Windows Security Events (Preview) data connector enabled with a custom Data Collection Rule (DCR):

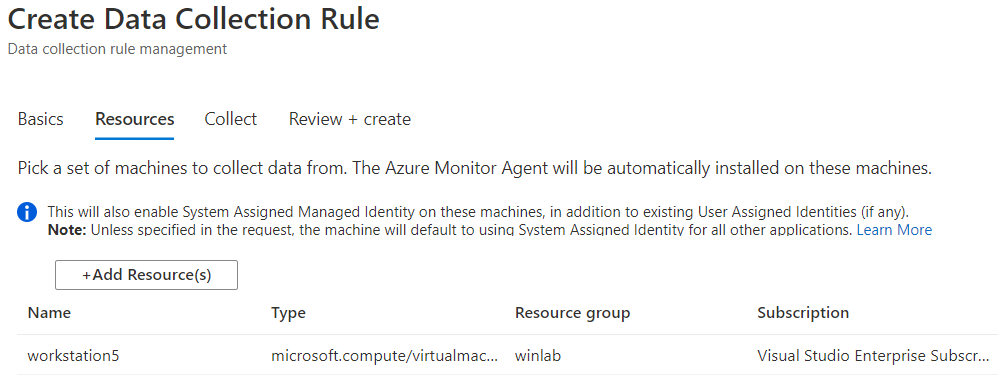

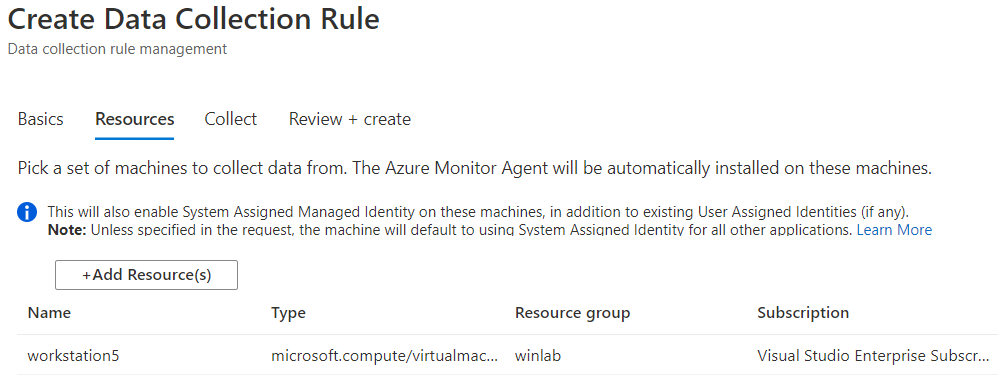

If you edit the custom DCR, you will see the XPath query and the resource that it got associated with. The image below shows the association of the DCR with a machine named workstation5.

You can also see that the data collection is set to custom and, for this example, we only set the event stream to collect events with Event ID 4624.

Windows Workstation

I recommend connecting to the Windows workstation over RDP using its public IP address. Go to your resource group and select the Azure VM. You should see the public IP address on the right side of the screen. Logging in will generate authentication events, which will be captured by the custom DCR associated with the endpoint.

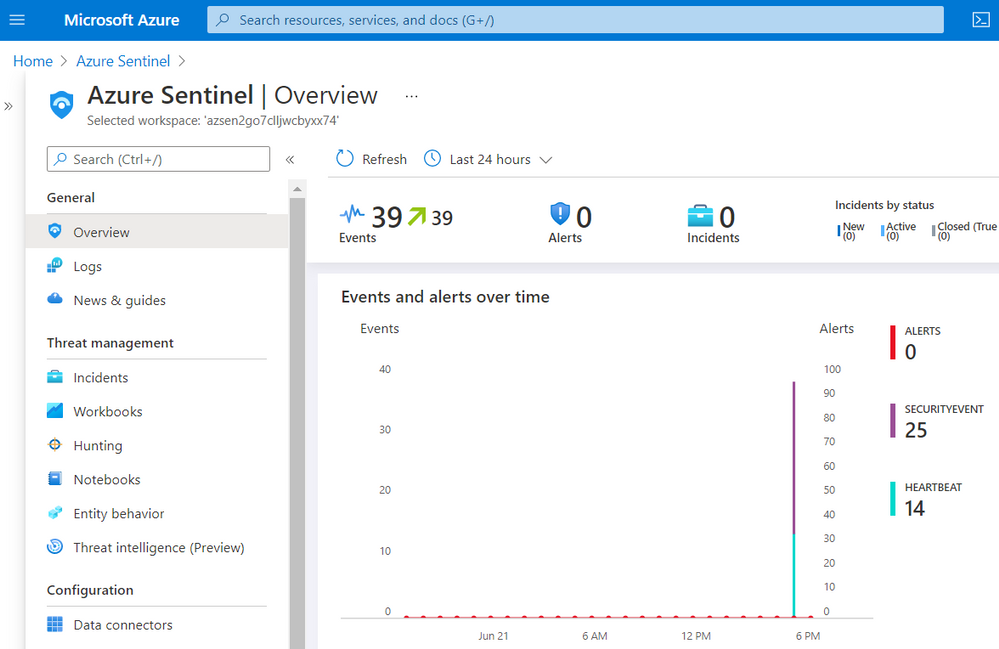

Check Azure Sentinel Logs

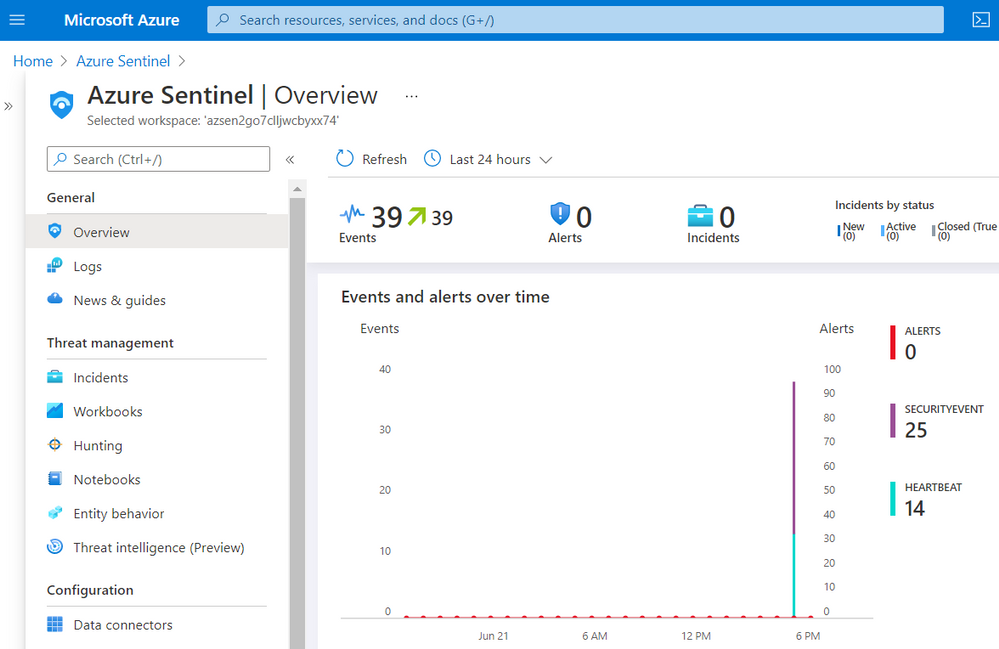

Go back to your Azure Sentinel, and you should start seeing some events on the Overview page:

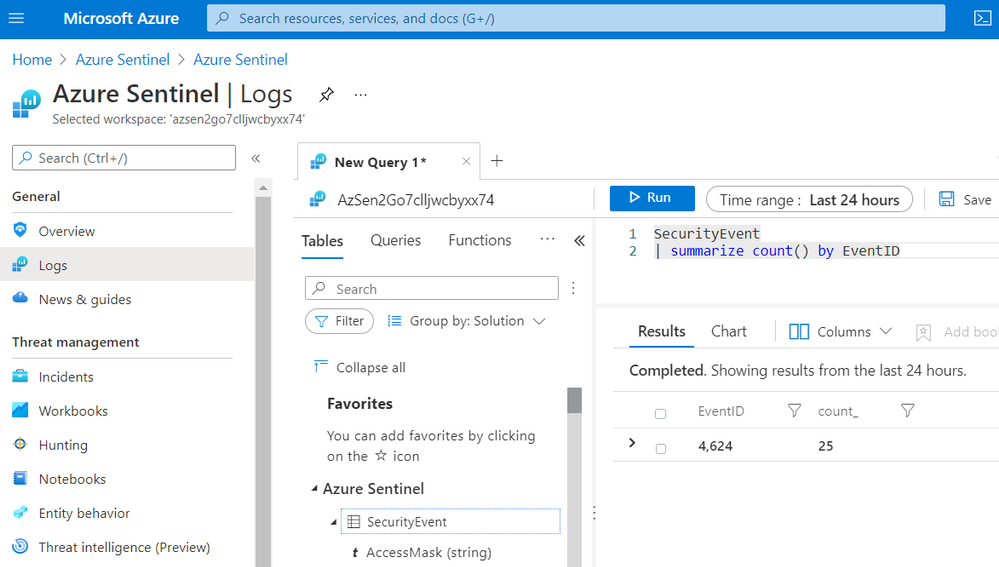

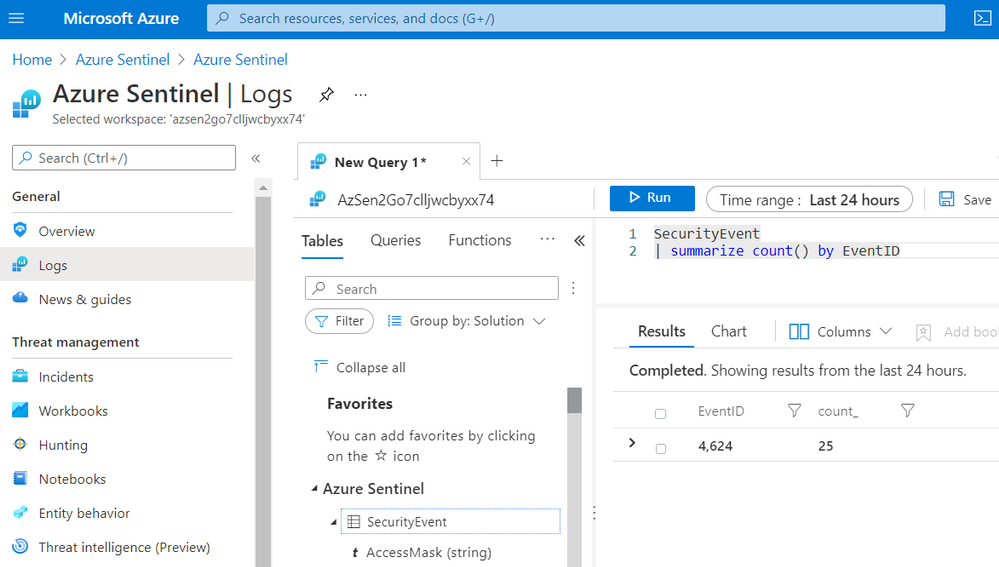

Go to Logs and run the following KQL query:

SecurityEvent
| summarize count() by EventID

As you can see in the image below, only events with Event ID 4624 were collected by the Azure Monitor Agent.
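If KQL is new to you, `summarize count() by EventID` groups the rows by Event ID and counts each group. A rough Python equivalent, using made-up rows in place of the SecurityEvent table (the rows and the account values are purely illustrative):

```python
from collections import Counter

# Hypothetical rows standing in for the SecurityEvent table.
rows = [
    {"EventID": 4624, "Computer": "workstation5", "Account": "user1"},
    {"EventID": 4624, "Computer": "workstation5", "Account": "user2"},
    {"EventID": 4624, "Computer": "workstation5", "Account": "user1"},
]

# Group by EventID and count the rows in each group.
counts = Counter(row["EventID"] for row in rows)
for event_id, count in counts.items():
    print(event_id, count)  # prints 4624 3
```

With the custom DCR in place, a single group is what you would expect to see, since only one Event ID is collected.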

You might be asking yourself, “Who would only want to collect events with Event ID 4624 from a Windows endpoint?”. Believe it or not, some network environments can only collect certain events because of bandwidth constraints. This custom filtering capability is therefore very useful for covering more use cases and even saving storage!

Any Good XPath Queries Repositories in the InfoSec Community?

Now that we know the internals of the new connector and how to deploy a simple lab environment, we can test multiple XPath queries depending on your organization and research use cases and bandwidth constraints. There are a few projects that you can use.

Palantir WEF Subscriptions

One of many repositories out there that contain XPath queries is the ‘windows-event-forwarding’ project from Palantir. The XPath queries are inside the Windows Event Forwarding (WEF) subscriptions. We could take all the subscriptions and parse them programmatically to extract all the XPath queries, saving them in a format that can be used as part of the automatic deployment.

You can follow the steps in this document, available in Azure Sentinel To-go, to extract XPath queries from the Palantir project.

Azure-Sentinel2Go/README.md at master · OTRF/Azure-Sentinel2Go (github.com)

OSSEM Detection Model + ATT&CK Data Sources

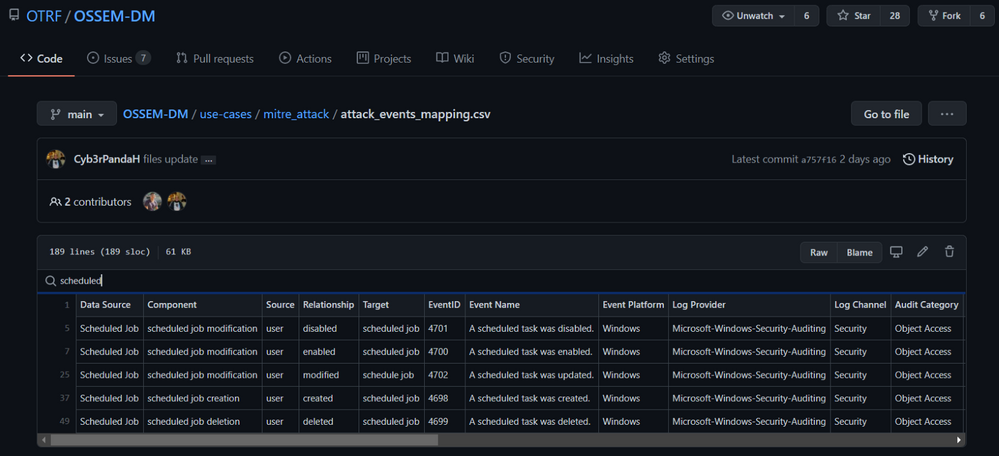

From a community perspective, another great resource you can use to extract XPath queries from is the Open Source Security Event Metadata (OSSEM) Detection Model (DM) project. It is a community-driven effort to help researchers model attack behaviors from a data perspective and share relationships identified in security events across several operating systems.

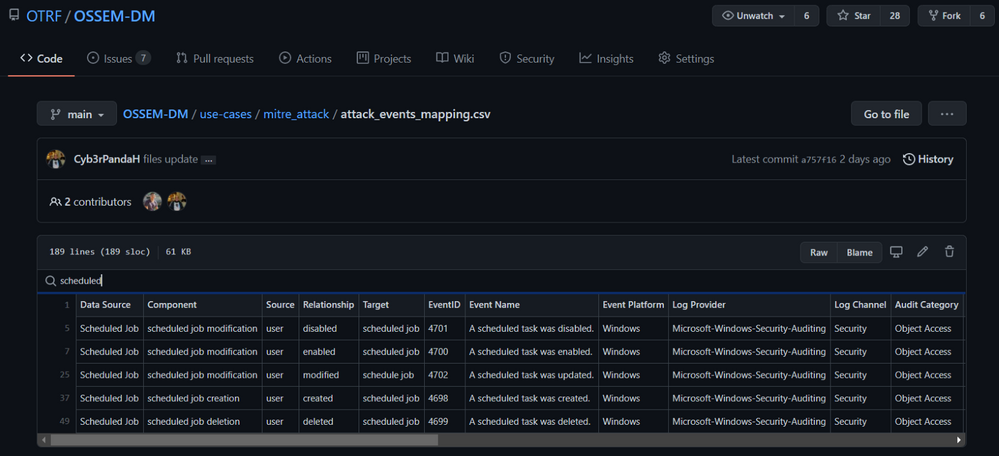

One of the use cases from this initiative is to map all security events in the project to the new ‘Data Sources’ objects provided by the MITRE ATT&CK framework. In the image below, we can see how the OSSEM DM project provides an interactive document (.CSV) for researchers to explore the mappings (Research output):

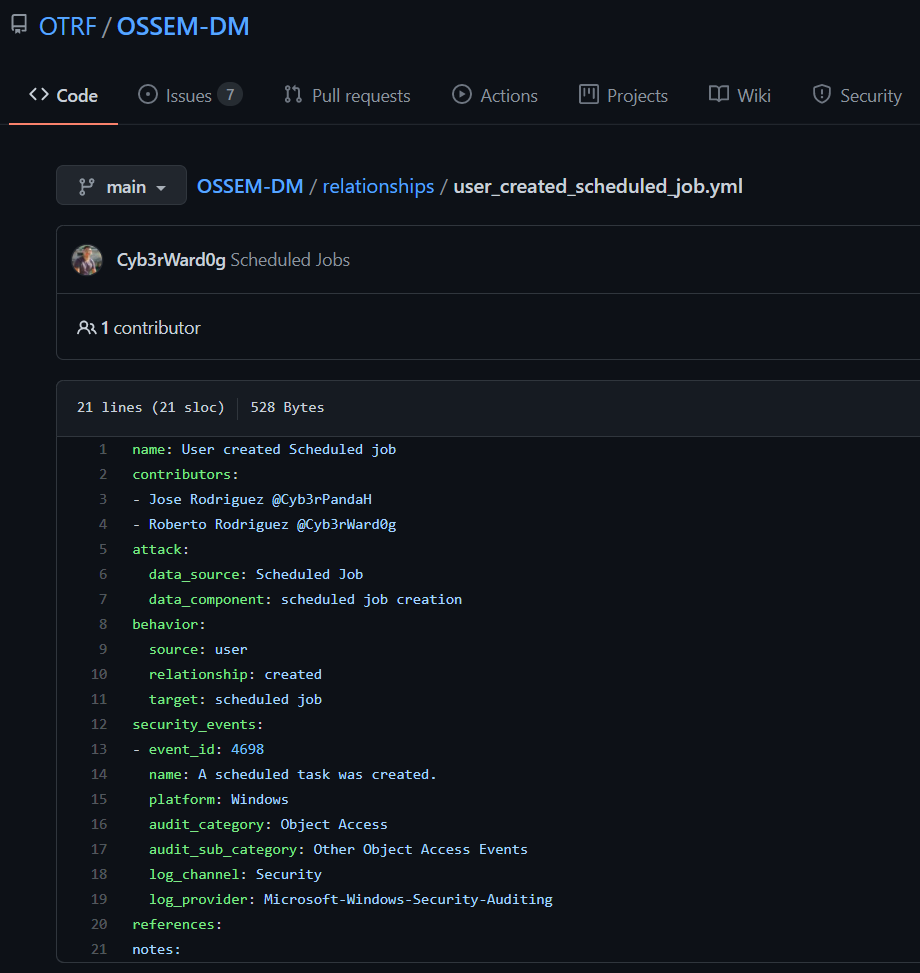

One of the advantages of this project over others is that all its data relationships are in YAML format, which makes them easy to translate to other formats such as XML. We can use the Event IDs defined in each data relationship documented in OSSEM DM and create XML files with XPath queries in them.

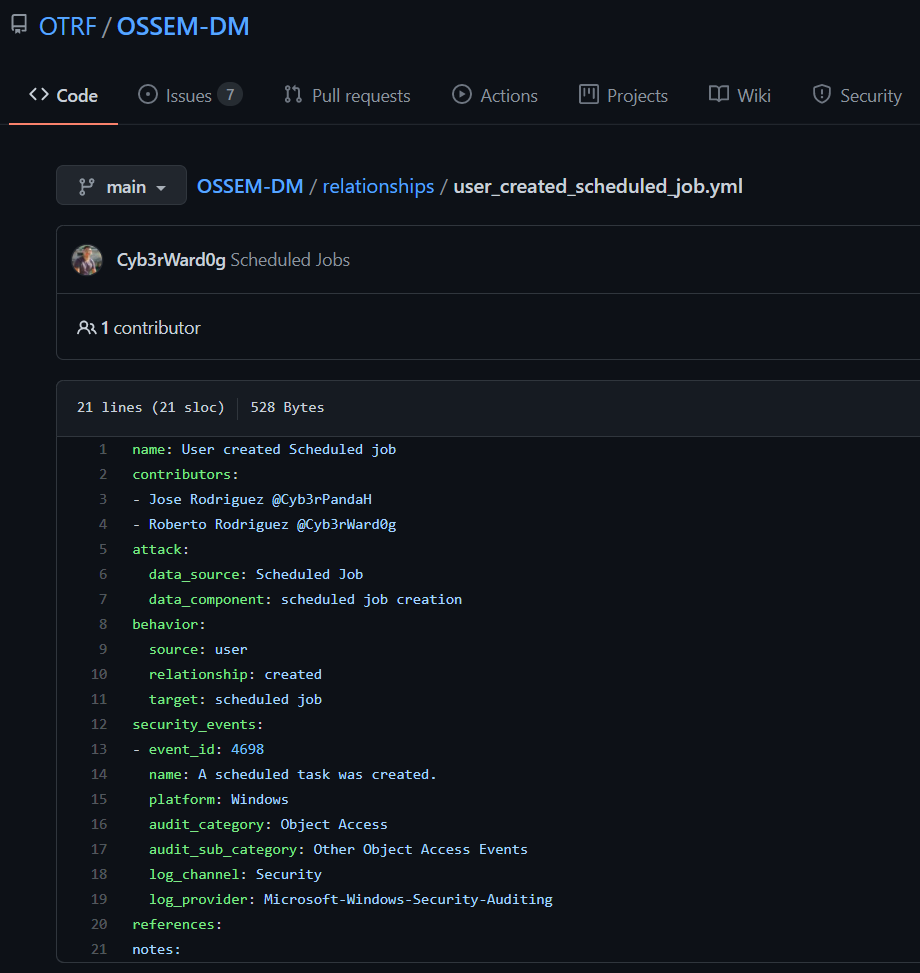

Exploring OSSEM DM Relationships (YAML Files)

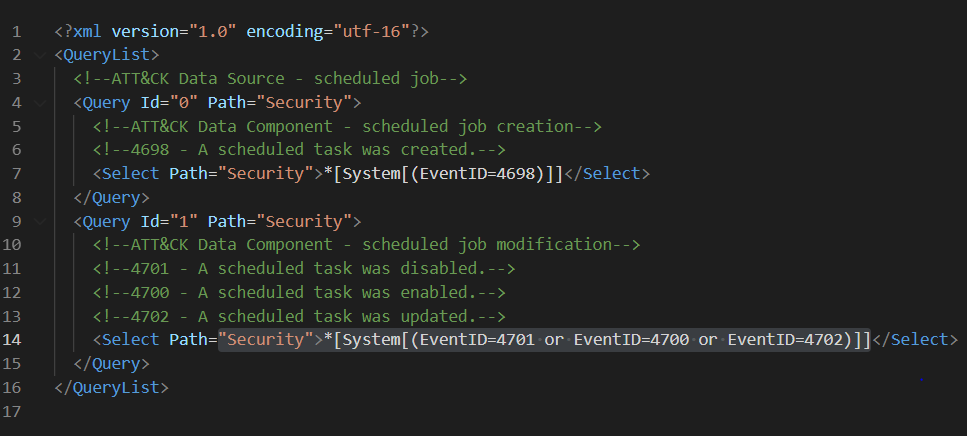

Let’s say we want to use relationships related to scheduled jobs in Windows.

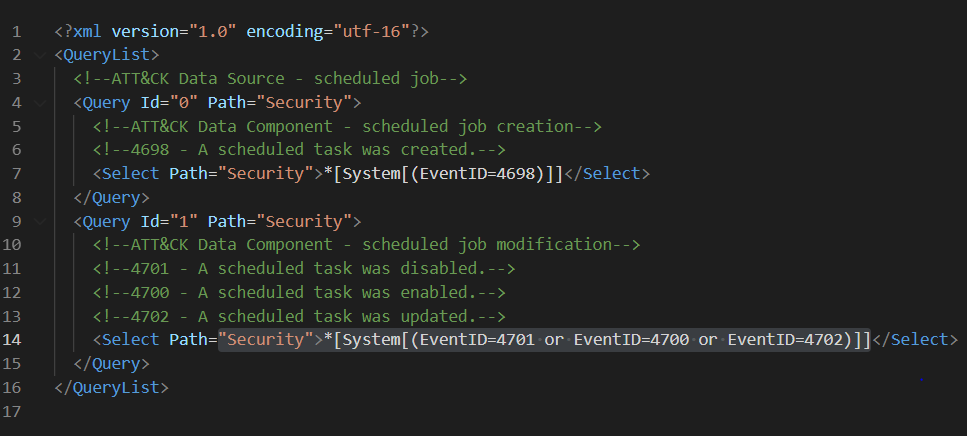

Translate YAML files to XML Query Lists

We can process all the YAML files and export the data to XML files. One thing that I like about this OSSEM DM use case is that we can group the XML files by ATT&CK data sources. This can help organizations organize their data collection in a way that can be mapped to detections or other ATT&CK based frameworks internally.

We can use the QueryList format to document all ‘scheduled jobs relationships’ XPath queries in one XML file.

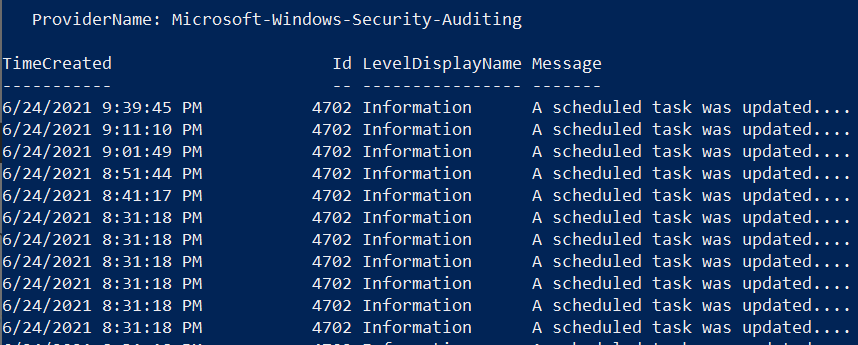

I like to document my XPath queries in this format first because it speeds up validating them locally on a Windows endpoint. You can use that XML file in a PowerShell command to query Windows Security events and make sure there are no syntax issues:

[xml]$scheduledjobs = Get-Content .\scheduled-job.xml

Get-WinEvent -FilterXml $scheduledjobs

Translate XML Query Lists to DCR Data Source:

Finally, once the XPath queries have been validated, we could simply extract them from the XML files and put them in a format that could be used in ARM templates to create DCRs. Do you remember the dataSources property of the DCR Azure resource we talked about earlier? What if we could get the values of the windowsEventLogs data source directly from a file instead of hardcoding them in an ARM template? The example below is how it was previously being hardcoded.

"dataSources": {
    "windowsEventLogs": [
        {
            "name": "eventLogsDataSource",
            "scheduledTransferPeriod": "PT5M",
            "streams": [
                "Microsoft-SecurityEvent"
            ],
            "xPathQueries": [
                "Security!*[System[(EventID=4624)]]"
            ]
        }
    ]
}
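The extraction step itself is small. As a rough sketch, assuming a QueryList file shaped like the Event Viewer example from earlier, the following Python code pulls every ‘Select’ query out of the XML and prefixes it with its channel, which is the format the DCR expects. The sample queries and the `to_dcr_queries` helper are illustrative assumptions, not the output of the OSSEM project.

```python
import xml.etree.ElementTree as ET

# Illustrative QueryList; the Event IDs are examples for scheduled tasks.
QUERY_LIST = """
<QueryList>
  <Query Id="0" Path="Security">
    <Select Path="Security">*[System[(EventID=4698)]]</Select>
    <Select Path="Security">*[System[(EventID=4699 or EventID=4702)]]</Select>
  </Query>
</QueryList>
"""


def to_dcr_queries(query_list_xml):
    """Convert every Select element into a '<channel>!<xpath>' string."""
    root = ET.fromstring(query_list_xml)
    return [
        f"{select.get('Path')}!{select.text.strip()}"
        for select in root.iter("Select")
    ]


for query in to_dcr_queries(QUERY_LIST):
    print(query)
```

The resulting list can be dropped into the XPath queries property of a ‘windowsEventLogs’ data source.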

We could use the XML files created after processing OSSEM DM relationships mapped to ATT&CK data sources to create the following document. We can pass the URL of the document as a parameter in an ARM template to deploy our lab environment:

Azure-Sentinel2Go/ossem-attack.json at master · OTRF/Azure-Sentinel2Go (github.com)

Wait! How Do You Create the Document?

The OSSEM team is contributing and maintaining the JSON file from the previous section in the Azure Sentinel2Go repository. However, if you want to go through the whole process on your own, Jose Rodriguez (@Cyb3rpandah) was kind enough to write every single step to get to that output file in the following blog post:

OSSEM Detection Model: Leveraging Data Relationships to Generate Windows Event XPath Queries (openthreatresearch.com)

Ok, But, How Do I Pass the JSON file to our Initial ARM template?

In our initial ARM template, we had the XPath query as an ARM template variable as shown in the image below.

We could also have it as a template parameter. However, it is not flexible enough to define multiple DCRs or even update the whole DCR Data Source object (Think about future coverage beyond Windows logs).

Data Collection Rules – CREATE API

For more complex use cases, I would use the DCR Create API. This can be executed via a PowerShell script, which can also be used inside an ARM template via deployment scripts. Keep in mind that the deployment script resource requires an identity to execute the script. A user-assigned managed identity can be created at deployment time and used to create the DCRs programmatically.
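To give an idea of what such a call involves, here is a minimal sketch that only builds the HTTP request and never sends it. It assumes the standard Azure Resource Manager URL layout and the preview API version used in the templates above. The function name, the placeholder token and the body shape are my assumptions, so check them against the official API reference before relying on them.

```python
import json
import urllib.request


def build_dcr_put_request(subscription_id, resource_group, rule_name, body, token):
    """Build (but do not send) a PUT request that creates or updates a DCR."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        "/providers/Microsoft.Insights/dataCollectionRules/"
        f"{rule_name}?api-version=2019-11-01-preview"
    )
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Assumed body shape: location plus the 'properties' object of the DCR resource.
body = {"location": "eastus", "properties": {"dataSources": {"windowsEventLogs": []}}}
request = build_dcr_put_request("SUBSCRIPTIONID", "MYGROUP", "WinDCR", body, "TOKEN")
print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes it easy to inspect the URL and payload before anything touches your subscription.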

PowerShell Script

If you have an Azure Sentinel instance without the data connector enabled, you can use the following PowerShell script to create DCRs in it. This is good for testing and it also works in ARM templates.

Keep in mind that you need a file that defines the structure of the windowsEventLogs data source object used in the creation of DCRs. We created that in the previous section, remember? Here is where we can use the OSSEM Detection Model XPath Queries File ;)

Azure-Sentinel2Go/ossem-attack.json at master · OTRF/Azure-Sentinel2Go (github.com)

FileExample.json

{
    "windowsEventLogs": [
        {
            "Name": "eventLogsDataSource",
            "scheduledTransferPeriod": "PT1M",
            "streams": [
                "Microsoft-SecurityEvent"
            ],
            "xPathQueries": [
                "Security!*[System[(EventID=5141)]]",
                "Security!*[System[(EventID=5137)]]",
                "Security!*[System[(EventID=5136 or EventID=5139)]]",
                "Security!*[System[(EventID=4688)]]",
                "Security!*[System[(EventID=4660)]]",
                "Security!*[System[(EventID=4656 or EventID=4661)]]",
                "Security!*[System[(EventID=4670)]]"
            ]
        }
    ]
}

Run Script

Once you have a JSON file similar to the one in the previous section, you can run the script from a PowerShell console:

.\Create-DataCollectionRules.ps1 -WorkspaceId xxxx -WorkspaceResourceId xxxx -ResourceGroup MYGROUP -Kind Windows -DataCollectionRuleName WinDCR -DataSourcesFile FileExample.json -Location eastus -Verbose

One thing to remember is that you can only have 10 Data Collection Rules. That limit is separate from the number of XPath queries inside one DCR. If you attempt to create more than 10 DCRs, you will get the following error message:

ERROR

VERBOSE: @{Headers=System.Object[]; Version=1.1; StatusCode=400; Method=PUT;
Content={"error":{"code":"InvalidPayload","message":"Data collection rule is invalid","details":[{"code":"InvalidProperty","message":"'Data Sources. Windows Event Logs' item count should be 10 or less. Specified list has 11 items.","target":"Properties.DataSources.WindowsEventLogs"}]}}}
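A quick local check of the data sources file can catch this before the API does. The sketch below is an assumption-laden helper of mine, not part of the PowerShell script: it checks only the item count quoted in the error message (10 ‘Windows Event Logs’ items) and that each query has the ‘channel!xpath’ shape.

```python
MAX_WINDOWS_EVENT_LOG_ITEMS = 10  # limit quoted in the error message


def check_data_sources(data_sources):
    """Return a list of problems found; an empty list means the checks passed."""
    items = data_sources.get("windowsEventLogs")
    if not isinstance(items, list) or not items:
        return ["'windowsEventLogs' must be a non-empty list"]

    problems = []
    if len(items) > MAX_WINDOWS_EVENT_LOG_ITEMS:
        problems.append(
            f"'windowsEventLogs' has {len(items)} items, "
            f"limit is {MAX_WINDOWS_EVENT_LOG_ITEMS}"
        )
    for index, item in enumerate(items):
        for query in item.get("xPathQueries", []):
            # Every entry should look like '<channel>!<xpath>'.
            if "!" not in query:
                problems.append(f"item {index}: query lacks a channel prefix: {query}")
    return problems


example = {
    "windowsEventLogs": [
        {
            "Name": "eventLogsDataSource",
            "scheduledTransferPeriod": "PT1M",
            "streams": ["Microsoft-SecurityEvent"],
            "xPathQueries": ["Security!*[System[(EventID=4688)]]"],
        }
    ]
}
print(check_data_sources(example))  # prints []
```

In practice you would load the file with `json.load` and pass the resulting dictionary to the check.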