by Contributed | Oct 7, 2021 | Dynamics 365, Microsoft 365, Technology

This article is contributed. See the original author and article here.

Dynamics 365 Sales helps teams better understand business relationships, take actions based on insights, and close opportunities faster. One of the many ways the app helps sales teams succeed is enabling them to build segments for use in assignment rules to ensure leads and opportunities are routed to the right sellers.

What is a segment?

A segment is a collection of leads and opportunities that are grouped together based on certain conditions, such as location, deal value, language, and product. You can create segments for both lead and opportunity entities. For details, see this article: Create and activate a segment.

You can use segments in assignment rules and sequences. With segments, you can choose the set of characteristics a lead or opportunity should have in order to get assigned to relevant sellers or to connect to a certain sequence, without the need to create the same conditions repeatedly.

When a new lead or opportunity is created in Dynamics 365 Sales and matches the conditions of a specified segment, it automatically becomes a member of that segment and is assigned to sellers and connected to a sequence based on how you build your organization’s process automation.

Let’s take an example to understand how it works.

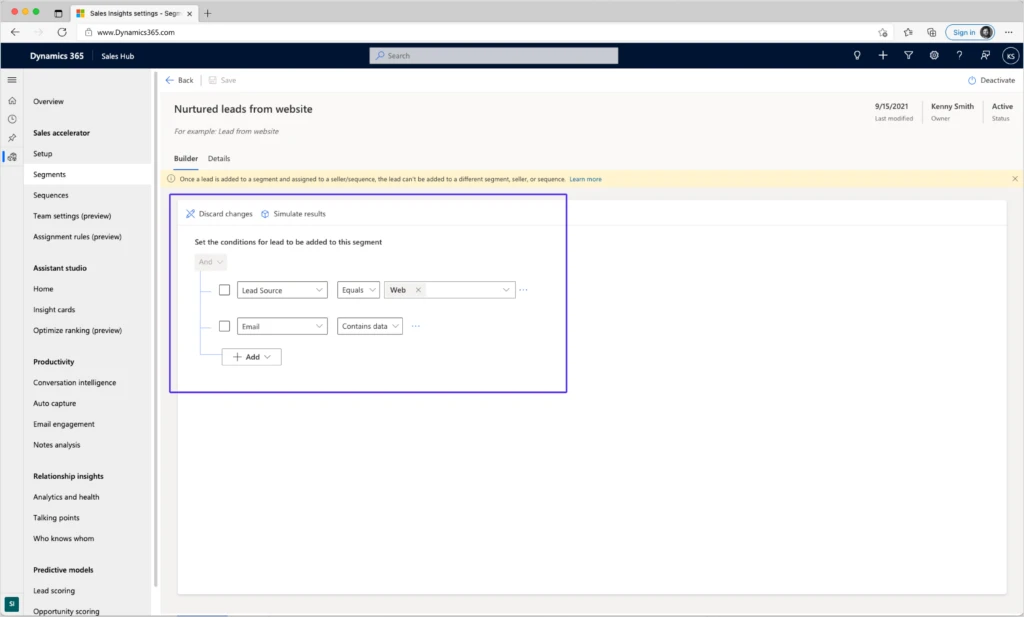

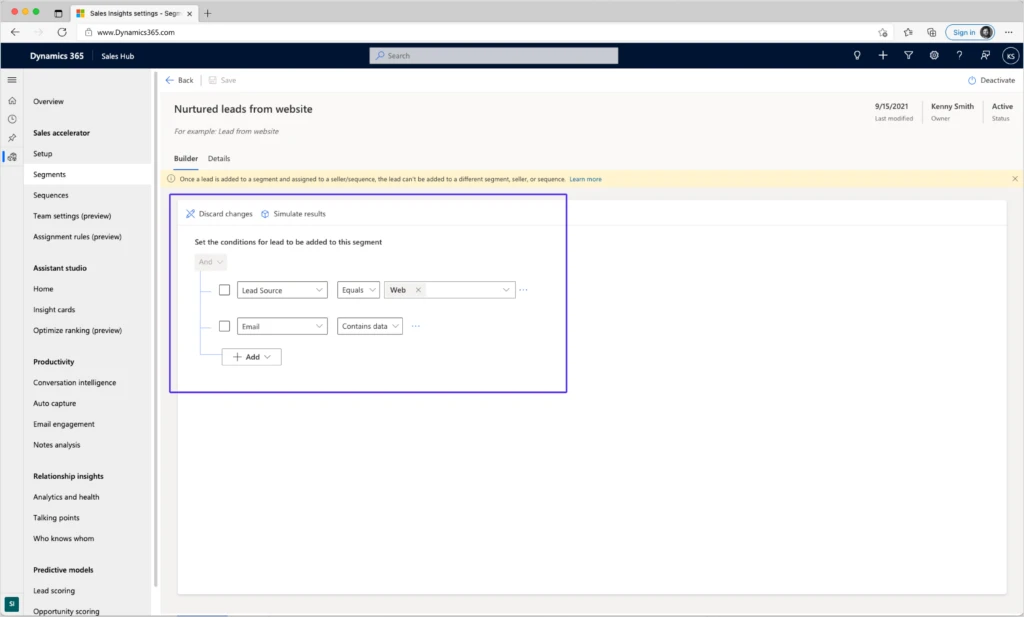

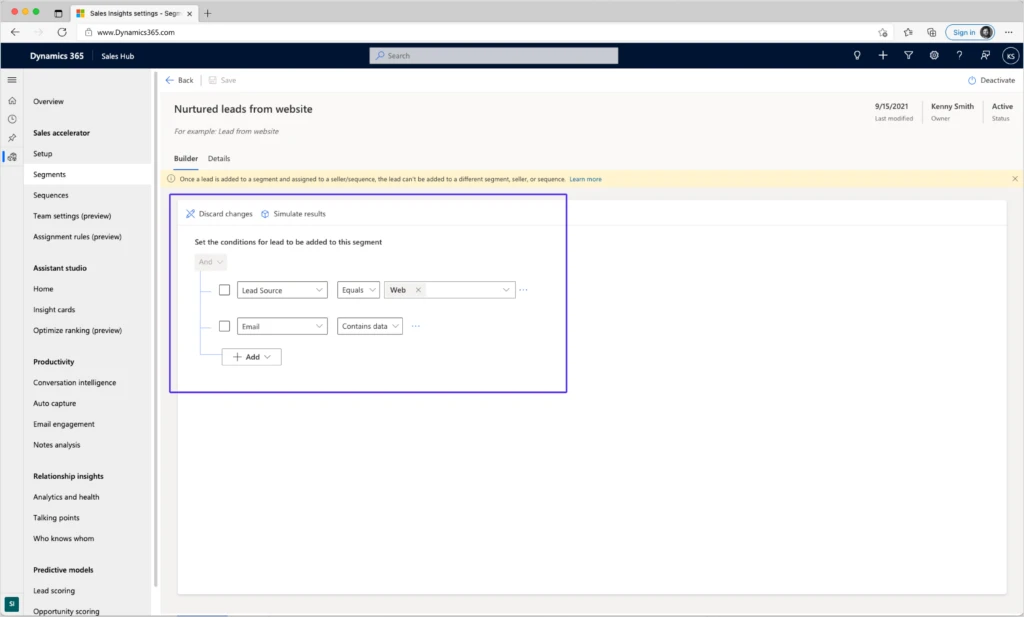

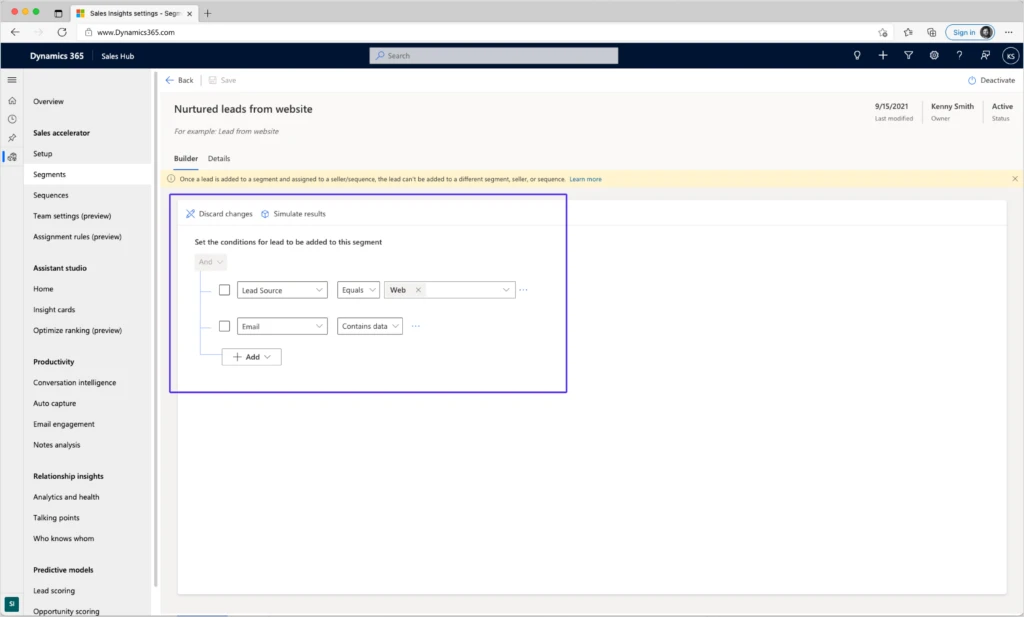

Define segment conditions

The following segment is defined with simple conditions to catch all leads that come from the company’s website and have an email address.

A segment can also include more complex parameters, using groups of AND/OR conditions or a link to a related entity. For example, you can create a segment that captures all opportunities that involve interest in printers or monitors and that are related to one of two relevant accounts.
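To make the idea of grouped AND/OR conditions concrete, here is a minimal Python sketch. It is not how Dynamics 365 Sales implements segments; the record shape (a plain dict), the field names (`source`, `email`, `product`, `account`), the account names, and every helper function are assumptions made up for illustration.

```python
from typing import Callable

# Hypothetical record shape: a plain dict of field name -> value.
Record = dict

# A condition is any function that takes a record and returns True or False.
Condition = Callable[[Record], bool]


def all_of(*conditions: Condition) -> Condition:
    """AND group: every condition must hold."""
    return lambda record: all(c(record) for c in conditions)


def any_of(*conditions: Condition) -> Condition:
    """OR group: at least one condition must hold."""
    return lambda record: any(c(record) for c in conditions)


def field_equals(name: str, value) -> Condition:
    """True when the named field equals the given value."""
    return lambda record: record.get(name) == value


def field_in(name: str, values) -> Condition:
    """True when the named field is one of the allowed values."""
    allowed = set(values)
    return lambda record: record.get(name) in allowed


def has_value(name: str) -> Condition:
    """True when the named field is present and non-empty."""
    return lambda record: bool(record.get(name))


# Simple segment: leads from the website that have an email address.
nurtured_leads_from_website = all_of(
    field_equals("source", "website"),
    has_value("email"),
)

# More complex segment: opportunities for printers OR monitors,
# AND related to one of two accounts (account names are hypothetical).
printer_or_monitor_opps = all_of(
    any_of(field_equals("product", "printer"), field_equals("product", "monitor")),
    field_in("account", ["Contoso", "Fabrikam"]),
)
```

Modeling each condition as a function keeps the AND and OR groups composable, so a nested group is just another condition.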

Simulate segment members

You can simulate the results based on existing data in your system, to make sure the segment will catch the lead or opportunity with the desired characteristics. The simulation results are not actual members of that segment and are just an example of the types of leads the segment will capture when it is activated.

Important highlights and limitations

- A lead or opportunity is evaluated for a segment when it is created, and again whenever it is updated. For example, a lead can enter the system without a populated email address; if an email address is added later during a nurturing process, the lead becomes a member of the “Nurtured leads from website” segment in the above example.

- Your segment must be activated to catch new leads or opportunities.

- A lead or opportunity can become a member of only one segment. If a lead matches the conditions of more than one segment, it will randomly become a member of one of them.

- When a lead is added to a segment and assigned to a seller or connected to a sequence, it can’t be added to a different segment, seller, or sequence.

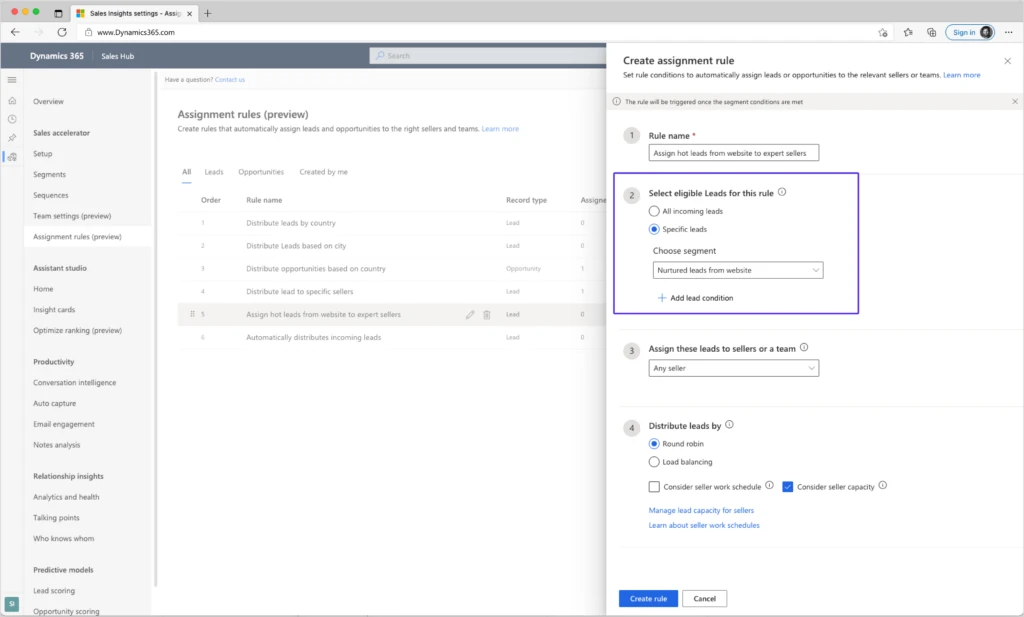

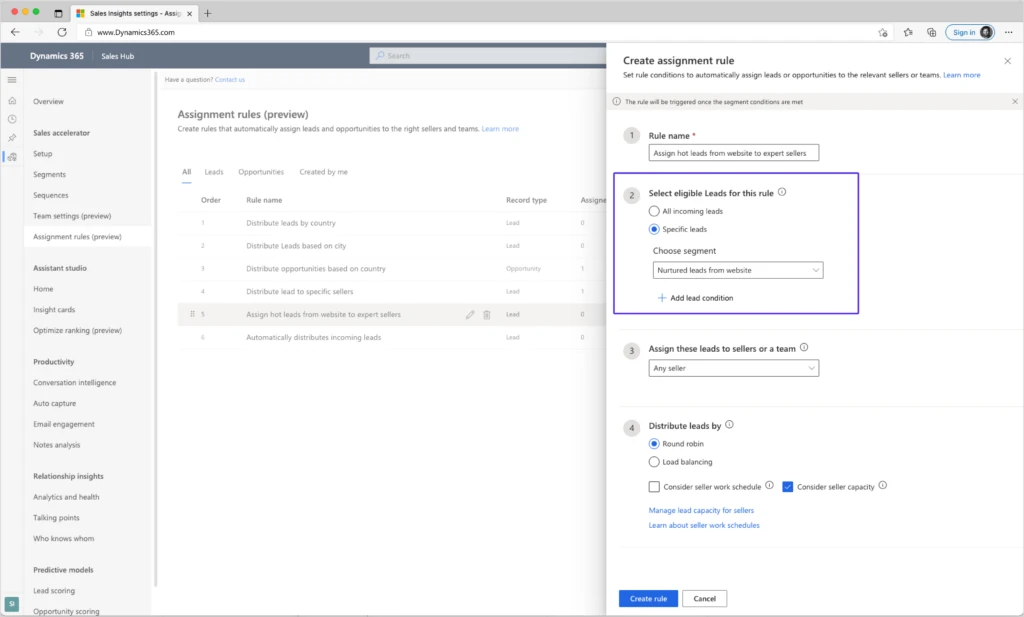

How to use a segment in assignment rules

When creating assignment rules for a lead or opportunity, you can use a segment to define the type of record that will be assigned to sellers via each rule. You can create multiple rules based on the same segment and add specific conditions to each rule to match your business process.

In the following example, we select the “Nurtured leads from website” segment. This means that all leads that become members of that segment will be assigned to sellers according to this rule’s conditions.

We can add another condition on top of that segment to route the hot leads from the segment to the most experienced sellers.

For this scenario, we can create two rules: one rule to capture hot leads from the segment, and another rule to catch all remaining leads from the segment.

By placing the rule for assigning hot leads above the default rule, hot leads will be evaluated first and will be assigned to experienced sellers. The rest of the leads will be assigned by the default rule.
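The effect of rule order can be sketched in a few lines of Python. This is an illustration of first-match evaluation only, not the product's implementation; the `AssignmentRule` class, the `rating` field, and the seller names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Lead = dict  # hypothetical record shape: field name -> value


@dataclass
class AssignmentRule:
    name: str
    segment: str                       # segment the rule is based on
    condition: Callable[[Lead], bool]  # extra rule-specific condition
    sellers: list                      # hypothetical pool of seller names


def first_matching_rule(lead: Lead, rules: list) -> Optional[AssignmentRule]:
    """Return the first rule, in list order, whose segment and condition match."""
    for rule in rules:
        if lead.get("segment") == rule.segment and rule.condition(lead):
            return rule
    return None


# The hot-leads rule is placed above the default rule, so it is evaluated first.
rules = [
    AssignmentRule(
        "Hot leads",
        "Nurtured leads from website",
        lambda lead: lead.get("rating") == "hot",
        ["experienced seller A", "experienced seller B"],
    ),
    AssignmentRule(
        "Default",
        "Nurtured leads from website",
        lambda lead: True,  # catches every remaining lead in the segment
        ["seller C", "seller D"],
    ),
]

hot = {"segment": "Nurtured leads from website", "rating": "hot"}
warm = {"segment": "Nurtured leads from website", "rating": "warm"}

print(first_matching_rule(hot, rules).name)   # Hot leads
print(first_matching_rule(warm, rules).name)  # Default
```

If the two rules were swapped, the catch-all default rule would match every lead first and the hot-leads rule would never apply. How a specific seller is picked from a rule's pool is left out of this sketch.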

Next steps

For more information about segments, read the documentation:

The post Automatically route deals to the right sellers by using segments in assignment rules appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Oct 7, 2021 | Technology

Are you looking to modernize your on-premises databases to Azure SQL? Join Alexandra Ciortea, Raymond Truong, Wenjing Wang, and Anna Hoffman to understand how you can size your Azure SQL target accordingly, based on the current performance and business requirements. We will walk you through several approaches and models that can suit your needs.

by Scott Muniz | Oct 7, 2021 | Security, Technology

In coordination with the Office of Management and Budget (OMB), the Federal Chief Information Security Officer Council (FCISO) Trusted Internet Connections (TIC) Subcommittee, and the General Services Administration, CISA has released Trusted Internet Connections 3.0 Remote User Use Case. The Remote User Use Case provides federal agencies with guidance on applying network and multi-boundary security for agencies that permit remote users on their networks. In accordance with OMB Memorandum M-19-26, this use case builds off TIC 3.0 Interim Telework Guidance originally released in Spring 2020.

The TIC 3.0 Remote User Use Case considers additional security patterns agencies may face with remote users and includes four new security capabilities:

- User Awareness and Training,

- Domain Name Monitoring,

- Application Container, and

- Remote Desktop Access.

In conjunction with the Remote User Use Case, CISA has also released Response to Comments on TIC 3.0 Remote User Use Case and the Pilot Process Handbook. These additional documents provide feedback on the Remote User Use Case and describe the process by which agencies should conduct TIC 3.0 pilots.

CISA encourages all federal government agencies and organizations to review the TIC 3.0 Remote User Use Case and visit the CISA TIC page for updates and additional information on the TIC program.

by Scott Muniz | Oct 6, 2021 | Security, Technology

Mozilla has released security updates to address vulnerabilities in Firefox and Firefox ESR. An attacker could exploit some of these vulnerabilities to take control of an affected system.

CISA encourages users and administrators to review the Mozilla security advisories for Firefox 93, Firefox ESR 78.15, and Firefox ESR 91.2.

by Scott Muniz | Oct 6, 2021 | Security, Technology

The Apache Software Foundation has released Apache HTTP Server version 2.4.50 to address two vulnerabilities. An attacker could exploit these vulnerabilities to take control of an affected system. One vulnerability, CVE-2021-41773, has been exploited in the wild.

The Cybersecurity and Infrastructure Security Agency (CISA) encourages users and administrators to review the Apache HTTP Server 2.4.50 vulnerabilities page and apply the necessary update.

by Contributed | Oct 6, 2021 | Technology

Building a great product means listening to what our customers need, and we’ve heard loud and clear from our customers that Zero Trust adoption is more important than ever. In the 2021 Zero Trust Adoption Report, we learned that 96% of security decision-makers state that Zero Trust is critical to their organization’s success, and 76% of organizations have at least started implementing a Zero Trust strategy. Over the next couple of years, Zero Trust strategy is expected to remain the top security priority, and organizations anticipate increasing their investment.

Zero Trust adoption has been accelerated by the U.S. government as well. In May 2021, the White House signed an executive order calling for improvement to the nation’s cybersecurity, including advancing towards a Zero Trust architecture. More recently, the Office of Management and Budget released a draft federal strategy for moving towards Zero Trust architecture, with key goals to be achieved by 2024. Microsoft has published customer guidance and resources for meeting Executive Order objectives.

These government and industry imperatives create a huge opportunity for Microsoft and our partners to enhance support for our customers as they move towards an end-to-end Zero Trust security posture. At Microsoft, we strive to make it easy for partners, such as independent software vendors, to integrate with us so customers can easily adopt the most comprehensive security solutions. We recognize that customers take varied paths on their journey to Zero Trust and have multiple security solutions in their environment. When we work together to meet these needs, we build stronger protections for our companies and nations.

To support partner integration and Zero Trust readiness, we recently released partner integration guidance at our Zero Trust Guidance Center. This guidance is organized across the pillars of Zero Trust, supporting integrations across a wide variety of products and partners. Examples include:

We applaud those who are embracing a Zero Trust approach for security solutions. We will close out with a few examples of how ISV partners, F5 and Yubico, have benefited from this integration guidance in the Zero Trust Guidance Center.

F5 and Microsoft rescue a county from malware

Many companies rely on line-of-business applications that were developed before the adoption of the latest authentication protocols like SAML and OIDC. This means organizations must manage multiple ways to authenticate users, which complicates the user experience and increases costs.

BIG-IP Access Policy Manager (APM) is F5’s access management proxy solution that centralizes access to apps, APIs, and data. BIG-IP APM integrates with Microsoft Azure AD to provide conditional access to the BIG-IP APM user interface.

Last year, Durham County enhanced security across a hybrid environment with Azure AD and F5 BIG-IP APM in the wake of a serious cybersecurity incident. F5 BIG-IP APM gave employees the unified solution they needed to access legacy on-premises apps. F5 used Azure AD to apply security controls to all their apps, enforce multifactor authentication, and use fine-tuned policies based on circumstances like employee login location. In addition, self-service password reset powered by the solution reduced help desk calls for passwords by 80%.

Government of Nunavut turns to Yubico and Microsoft to build phishing resistance following ransomware attack

In 2019, the Canadian government of Nunavut experienced a spear phishing attack that took down critical IT resources for the territory. In the wake of the attack, protecting identities and applications was a top priority.

Together, Azure AD and YubiKey offered a solution that upgraded the security of the Government of Nunavut and fit their unique needs. The Government of Nunavut wanted to implement a phishing-resistant authentication solution. In addition, the government agencies used a variety of Windows-based systems, and, because of their remote locations, had inconsistent network access. To address these needs, they adopted YubiKeys, which are hardware devices that can be used for multi-factor authentication with no network, power source, or client software. You can read the full story from Yubico and learn more from the video below.

Learn more

We are incredibly proud of the work our partners are doing to provide customers with critical cybersecurity solutions using the principles of Zero Trust. Check out our newly published partner integration guidance for Zero Trust readiness to learn more about opportunities.

Learn more about Microsoft identity:

by Contributed | Oct 6, 2021 | Technology

Getting started with Azure Purview for data governance is quick and easy. First, if you don’t already have an Azure account, get instant access and $200 of credit to try Azure Purview by signing up for a free account.

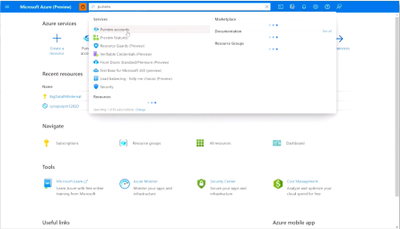

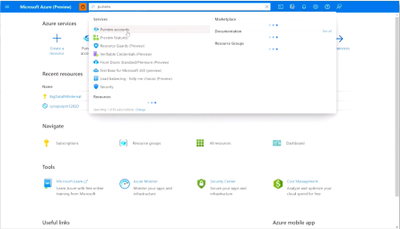

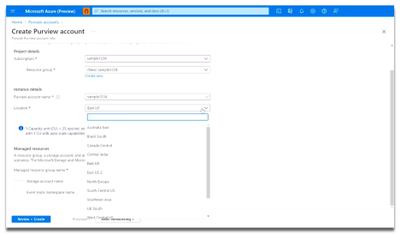

After you create an Azure account, sign in to the Azure portal and search for Purview accounts.

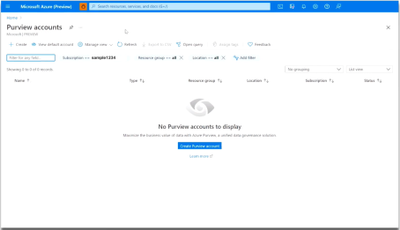

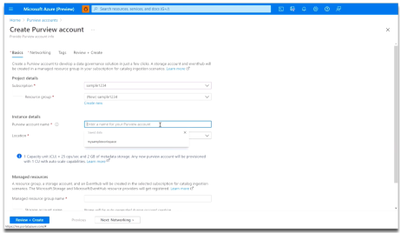

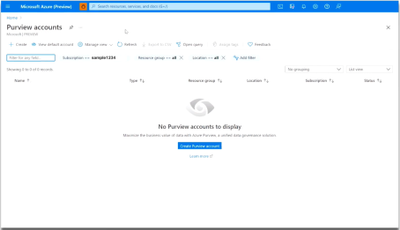

Then select Create to start an Azure Purview account. Note that you can add only one Azure Purview account at a time.

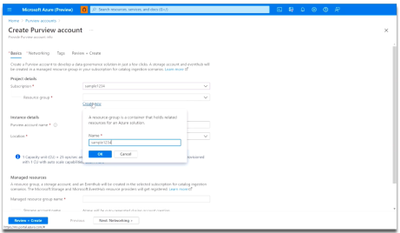

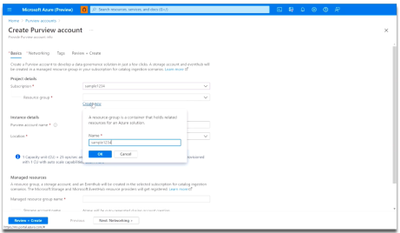

In the Basics tab, select an existing Resource group or create a new one.

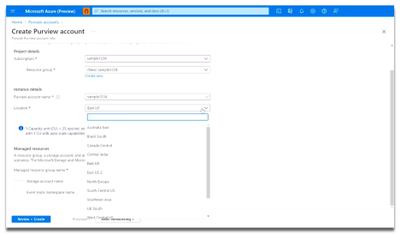

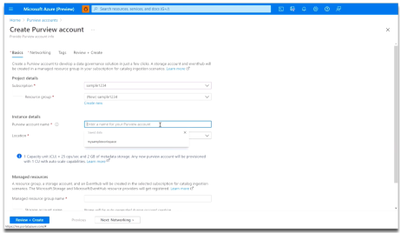

Now, enter a name for your Azure Purview account. Note that spaces and symbols are not allowed.

Next, choose your Location.

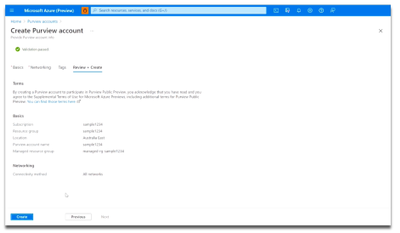

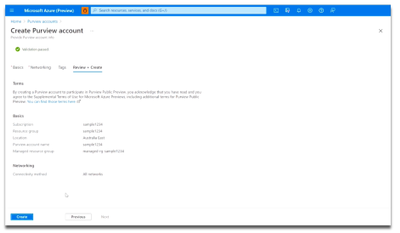

Finally, select the Review + Create button, then the Create button. Your Azure Purview account will be ready in a few minutes!

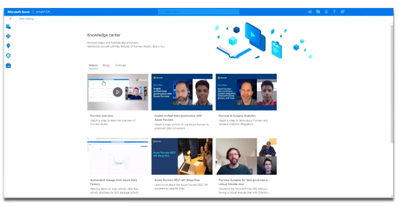

Once you’ve launched an Azure Purview account, be sure to first visit the Knowledge center in the Azure Purview Studio.

The Knowledge center can be accessed via the home page.

Here, you can watch videos to learn more about the capabilities of Azure Purview, read blog posts about the latest product announcements, and work through tutorials to get started with registering and scanning new data sources. Learn how to set up a business glossary and take a tour of the Azure Purview Studio to familiarize yourself with key features.

Try Azure Purview today

by Contributed | Oct 6, 2021 | Dynamics 365, Microsoft 365, Technology

Many organizations face supply chain challenges that can be addressed through digital transformation, yet organizational limitations make this transformation difficult. Disparate and disconnected enterprise systems can prevent companies from gaining end-to-end visibility into their supply chains, while outdated technology can create a lack of business continuity during disruptions or lead to an inability to rapidly adapt to changing customer demand. New technologies that enable and automate data-driven decision-making should be central to any strategy designed to meet these challenges. Microsoft Dynamics 365 Intelligent Order Management is a nimble and modern, open platform that empowers businesses to do exactly that.

Intelligent fulfillment optimization

Dynamics 365 Intelligent Order Management comes with a fulfillment optimization engine that intelligently manages order complexities, while also providing real-time end-to-end visibility into all inventory and order flows. Companies that ship direct-to-consumer, such as retailers, manufacturers, and distributors, face considerable challenges in this arena. This is one reason that in a recent Economist survey sponsored by Microsoft, ninety-nine percent of OEMs (original equipment manufacturers) believe that the digital transformation of their supply chain is important to meeting their organizations’ strategic objectives.1

The challenge comes from the complexity created by combining a myriad of order sources, such as e-commerce marketplaces, physical stores, and call centers, with a growing number of fulfillment options, including distribution centers, third-party logistics providers, and supplier drop-shipping, and any number of inventory holding locations from which physical inventory can be pulled to fulfill customer orders. Leveraging and optimizing the use of these options and assets is crucial for businesses seeking to profitably deliver on their order promise.

With our rules-based, event-driven, AI-infused fulfillment optimization engine, we make it easier than ever to connect to any of these enterprise applications via simple API interfaces. The result is that companies can quickly connect their existing order management systems, such as ERP and WMS, and extend these with our pre-built partner connectors. By giving businesses this unprecedented flexibility, Intelligent Order Management plays a pivotal role in digitally transforming the supply chain while also making supply chains more resilient.
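As a rough illustration of what a rules-based fulfillment decision can look like, the Python sketch below picks the cheapest single source that can cover an order. It is not the Intelligent Order Management engine or its API; the `FulfillmentSource` class, the `pick_source` function, the cost figures, and the single "cheapest feasible source" rule are all assumptions chosen to keep the example small.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FulfillmentSource:
    name: str             # e.g. a distribution center or third-party logistics provider
    on_hand: int          # units available at this source
    cost_per_unit: float  # hypothetical cost of shipping one unit


def pick_source(quantity: int, sources: list) -> Optional[FulfillmentSource]:
    """Pick the cheapest single source that can cover the whole order."""
    feasible = [s for s in sources if s.on_hand >= quantity]
    if not feasible:
        return None  # no single source can fulfill; order splitting is out of scope
    return min(feasible, key=lambda s: s.cost_per_unit)


# Hypothetical inventory snapshot across three kinds of fulfillment options.
sources = [
    FulfillmentSource("distribution center", on_hand=5, cost_per_unit=2.0),
    FulfillmentSource("third-party logistics", on_hand=50, cost_per_unit=3.5),
    FulfillmentSource("supplier drop-ship", on_hand=500, cost_per_unit=4.0),
]

small_order = pick_source(3, sources)   # fits the cheapest source
large_order = pick_source(20, sources)  # too big for the distribution center
```

A real engine would weigh many more factors, such as delivery time, carrier options, and split shipments; the point here is only that adding order sources and inventory locations multiplies the combinations a rule has to consider.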

Learn more in our recent e-book: The Savvy CSCO’s Guide to Transforming Order Management.

Spotlight on ShipStation integration

Last month we introduced our new integration with ShipStation. This month, we want to look at how ShipStation is used within Intelligent Order Management with a simple walkthrough.

Learn more in our recent blog post: Dynamics 365 Intelligent Order Management accelerates adaptability.

For those who haven’t had an opportunity to check out the recent blog above, ShipStation is a cloud-based e-commerce shipping platform specializing in creating shipping labels. With ShipStation, users can automatically import all e-commerce orders, create labels with discounted rates, send out shipping notification emails to customers, and send shipment details back to the order source. By integrating ShipStation with the Intelligent Order Management platform, users can bring all their carriers and order sources together within one unified solution.

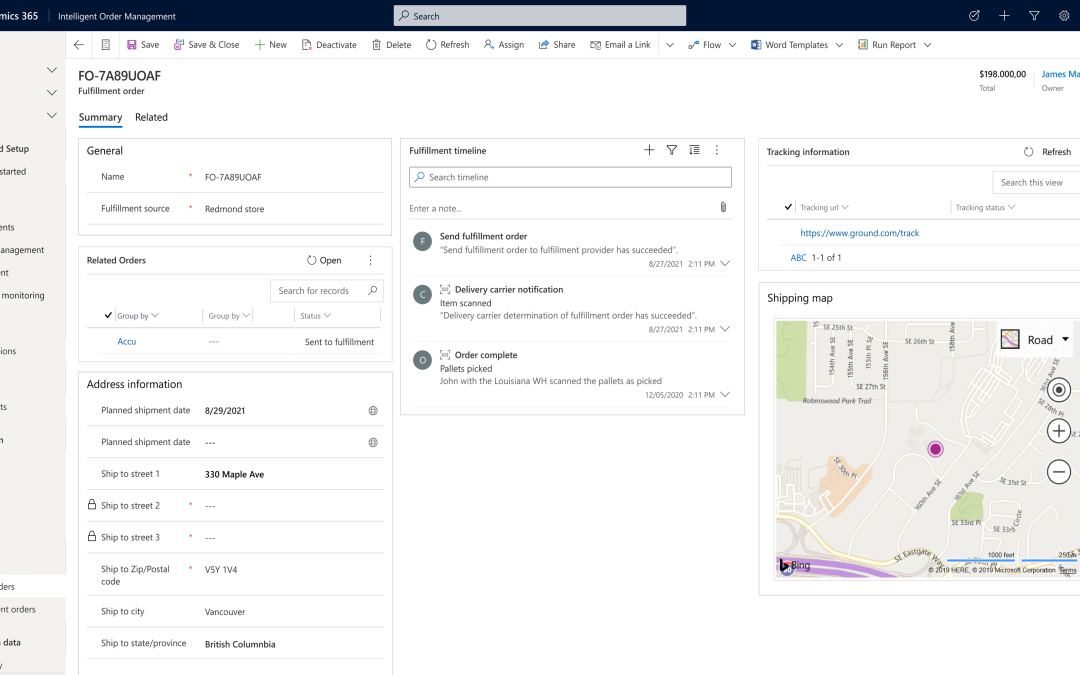

When an order is received and ShipStation is selected for use, carrier service and delivery timeline information from ShipStation are captured in real-time by Intelligent Order Management to provide users with visibility of the shipment details.

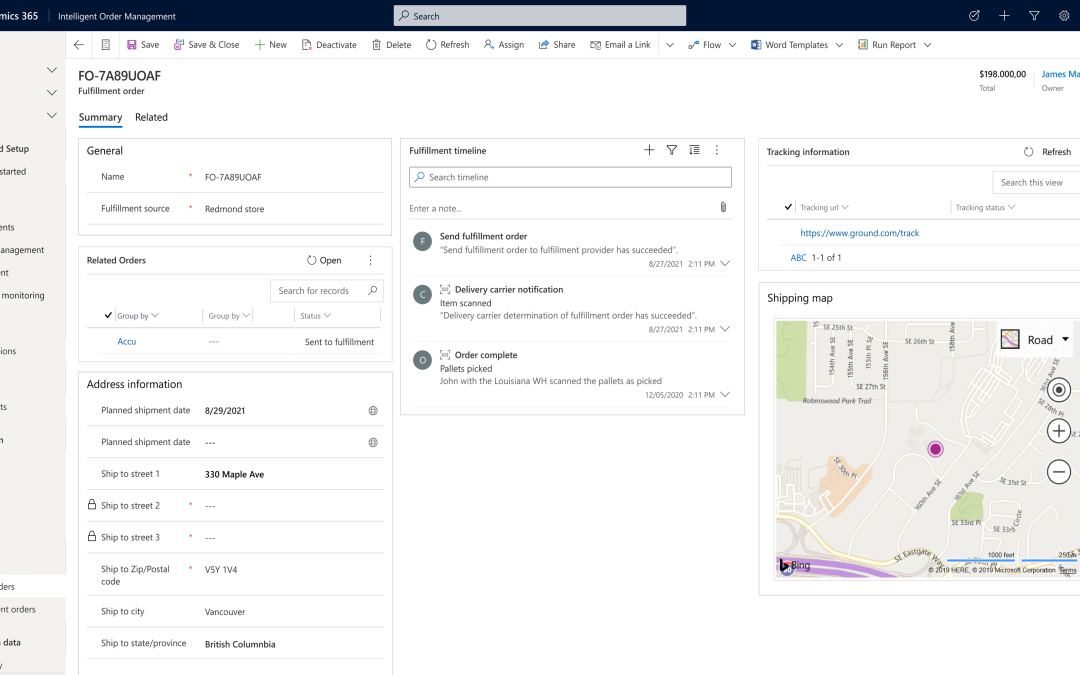

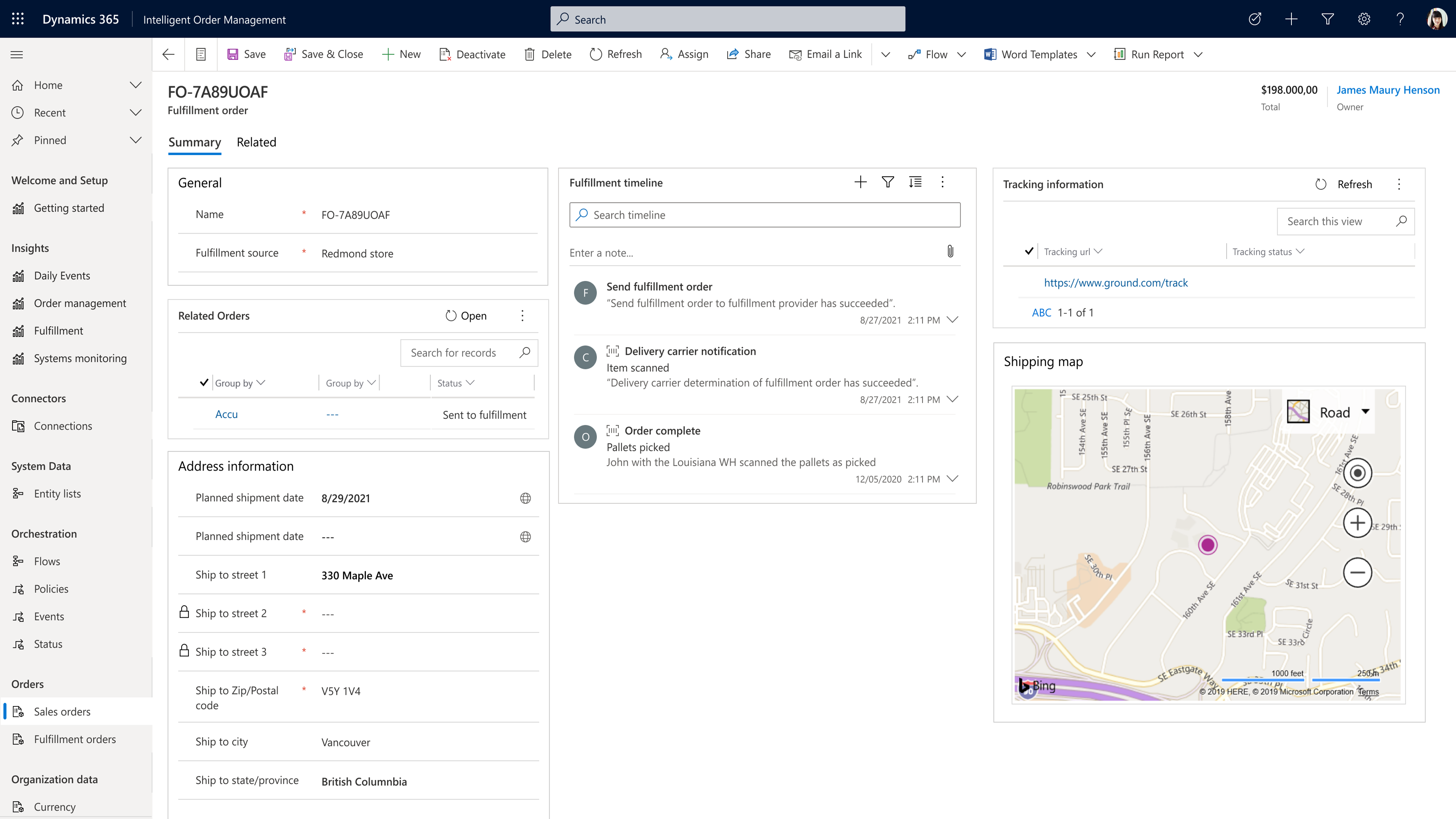

The following screenshot shows the ShipStation information integrated into the Intelligent Order Management application. As you can see, Intelligent Order Management gives you visibility into the full details on the creation of the fulfillment order, including its unique timeline, which is added as a map to show how the delivery route looks.

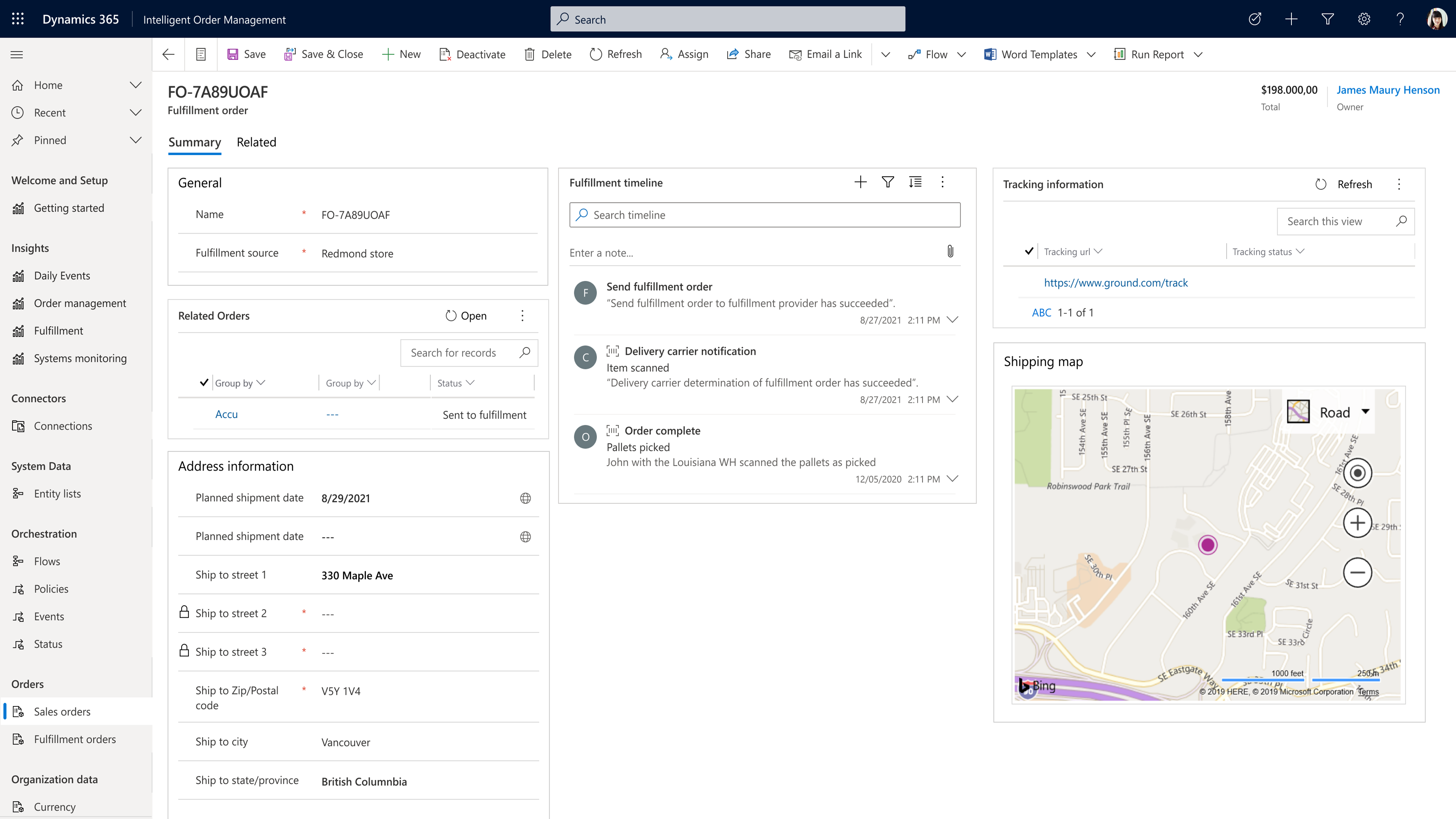

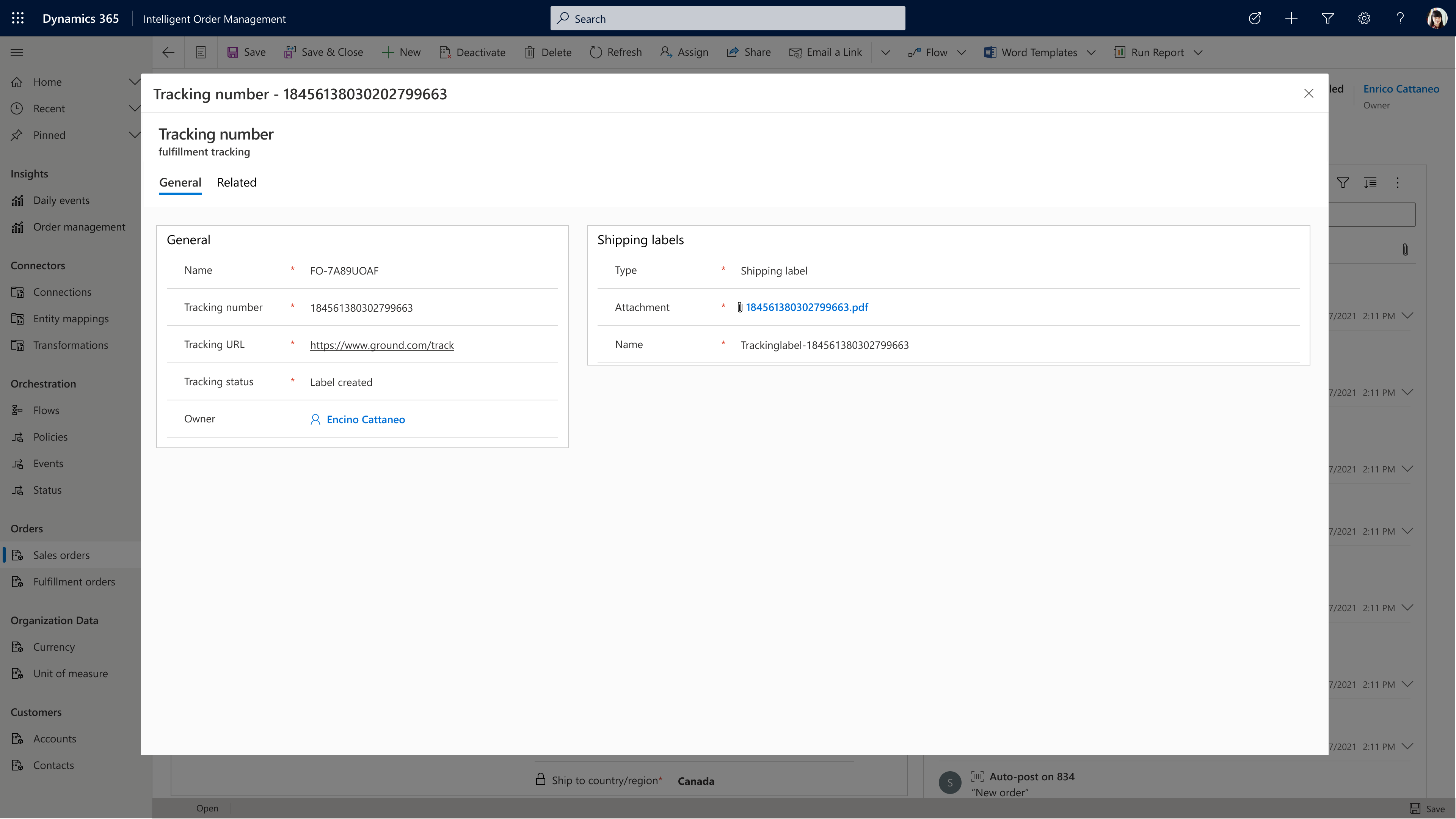

In the screenshot below, you can see that ShipStation has also generated a tracking number that is automatically shown in Intelligent Order Management.

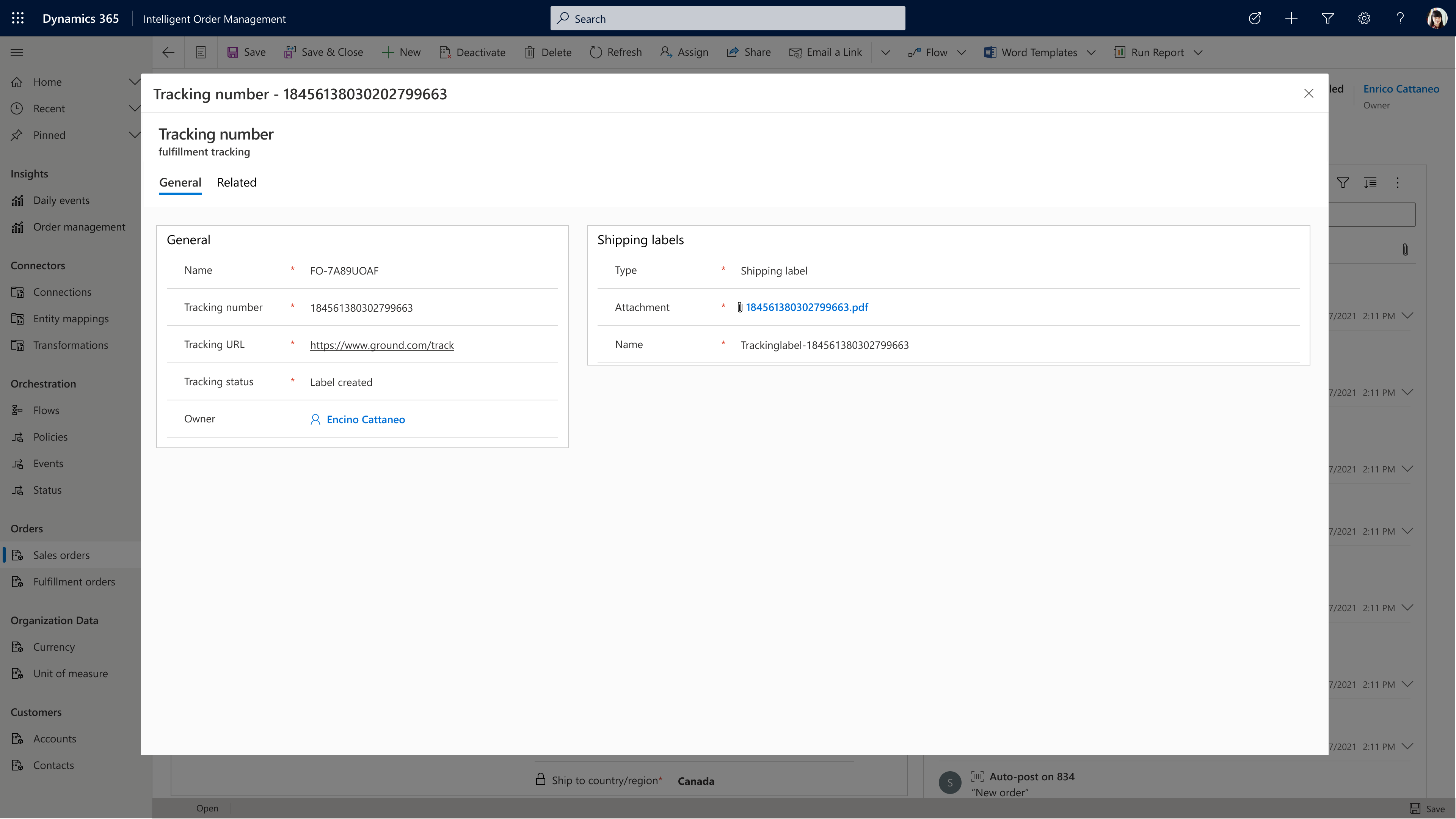

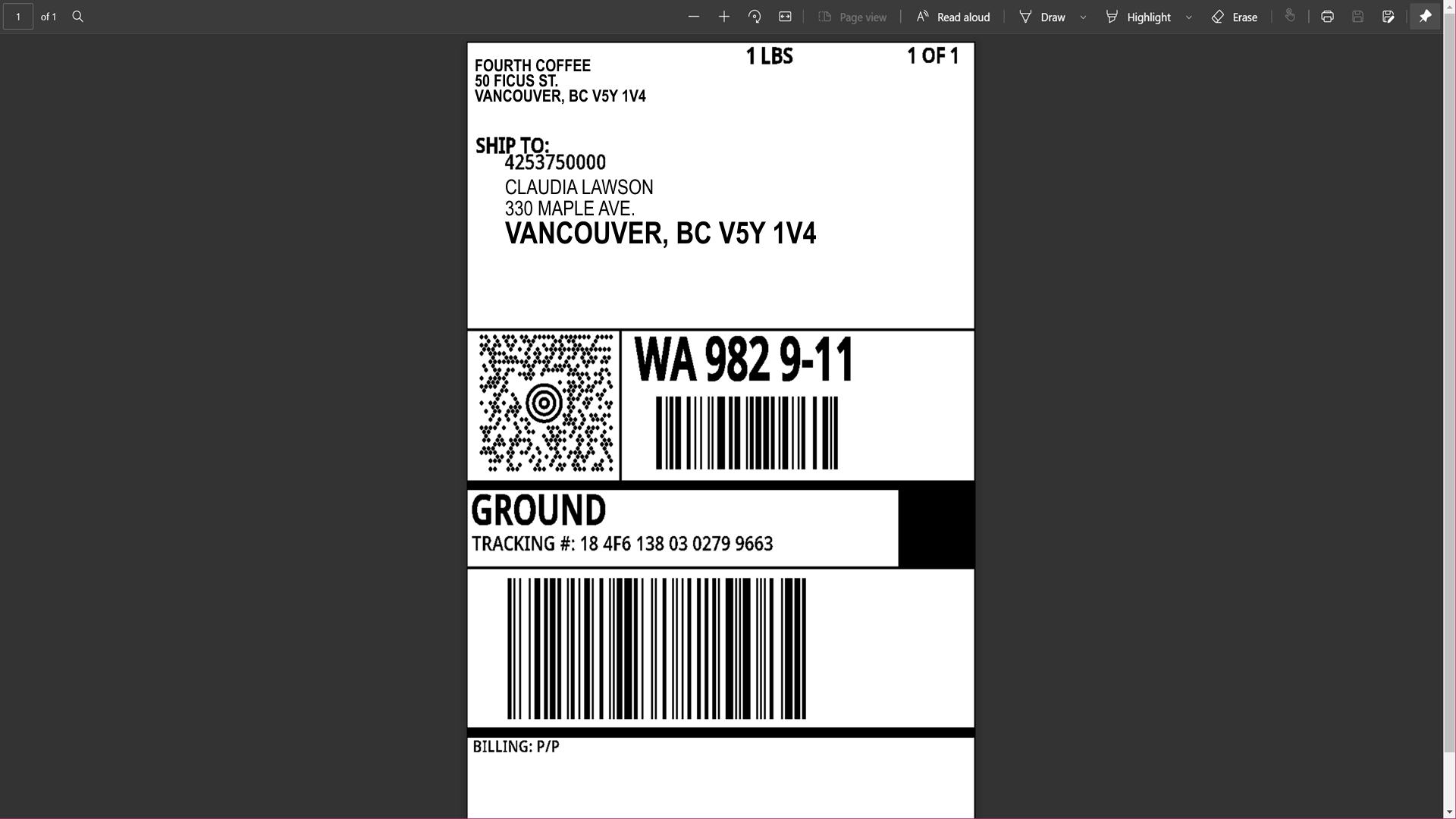

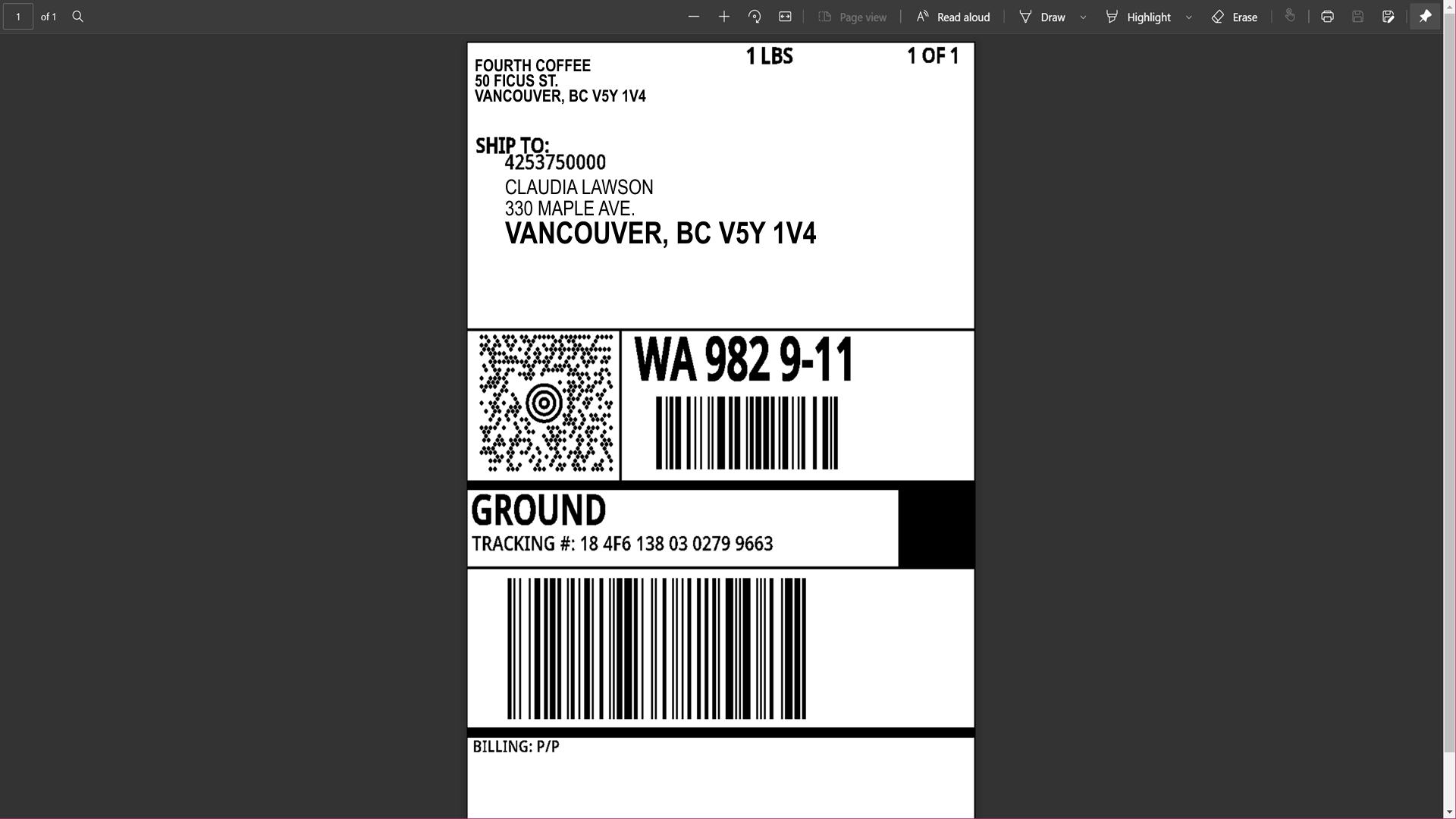

The shipping label is also available with a single click, without ever leaving the application, as shown below.

The label information generated by ShipStation is then passed back to the warehouse, which receives the pick list of items that need to be shipped to fulfill the order.

By combining new partner connectors like ShipStation with our fulfillment optimization engine, users of Intelligent Order Management can fulfill orders better, faster, and cheaper than ever before.

What’s next?

Organizations that ship direct-to-consumer need technology solutions to have end-to-end visibility into their supply chains, which will help them to achieve supply chain resiliency. A central part of a supply chain digital transformation is an order management solution with a fulfillment optimization engine. Dynamics 365 Intelligent Order Management comes with a powerful fulfillment optimization engine that allows companies to simplify the complexity of managing numerous orders, fulfillment, and inventory sources. On top of this, we have added new provider connectors such as ShipStation so that companies can act before orders become late, gain access to discounted shipping rates, and offer customers greater visibility into order delivery status with email notifications.

If you would like to discover how you can turn order fulfillment into a competitive advantage and enhance your customers’ experience, you can get started today with a free trial of Dynamics 365 Intelligent Order Management or check out our recent webinar to learn more.

1 The Economist Intelligence Unit. Putting customers at the centre of the OEM supply chain. 2019.

The post Dynamics 365 Intelligent Order Management enables fulfillment optimization and supply chain resiliency appeared first on Microsoft Dynamics 365 Blog.

by Scott Muniz | Oct 6, 2021 | Security

This article was originally posted by the FTC. See the original article here.

For-profit colleges sometimes use overblown — or flat-out false — promises to attract new students and their money. The FTC is ramping up its efforts to stop shady practices on campus. The Commission is sending a Notice of Penalty Offenses to the largest 70 for-profits, warning them that the FTC will not stand for unfair or deceptive practices.

Why the heads-up? Under federal law, the FTC may put companies on notice that some practices have been found to be unfair or deceptive in administrative cases with final cease and desist orders, other than consent orders. If a company knows about (the law says has “actual knowledge” about) the orders and uses those same deceptive marketing tactics, the FTC can sue the company in federal court for civil penalties. The Notice outlines those prohibited practices: claims about the career or earning prospects of their graduates, the percentage of graduates that get jobs in their chosen field, whether the school can help a graduate get a job, and more. These are just the kinds of information a student would want to know before committing to a program — and it’s exactly how some for-profit schools market their programs.

The FTC has been going after false and misleading claims in education for nearly a century, but fraud in this sector persists. Most recently, the University of Phoenix agreed to a $191 million judgment to settle the FTC’s charges that, to attract students, it used deceptive ads that falsely touted its relationships with and job opportunities at companies such as AT&T, Yahoo!, Microsoft, Twitter, and The American Red Cross. In another matter, DeVry University paid $100 million to settle the FTC’s charges that the for-profit misrepresented the employment and salary prospects of its graduates. Additionally, the Commission has published a guide for vocational schools describing practices that the agency determined are deceptive.

Servicemembers and student veterans are often the targets of schools’ deceptive marketing. For-profit schools have had a strong incentive to enroll veterans because of the education benefits servicemembers can use to pay for college. This has led to aggressive targeting of servicemembers, veterans, and their families. For example, the FTC’s case against Career Education Corporation (“CEC”) charged it with recruiting prospective students using marketers who falsely claimed to be affiliated with the U.S. military, tricking students who were looking to serve their country.

There are tools to help veterans, servicemembers, and all kinds of students navigate the education marketplace and blow the whistle on bad actors. If you have a federal student loan and feel like a school misled you or broke the law, apply for loan forgiveness through the Department of Education’s (ED’s) Borrower Defense to Repayment procedures. If you’re getting started (or re-started), ED’s Opportunity Centers are designed to help prospective students (including people of modest means, first-generation college students, and veterans) apply for admission to college and arrange for financial aid and loans. Find one near you.

Servicemembers: talk with your Personal Financial Manager to get hands-on help with your next steps. And vets can call the VA’s GI Bill Hotline to discuss questions about education benefits: 1-888-GIBILL-1 (1-888-442-4551), or visit the VA site to learn more. Before enrolling, you can find out important information about any school — including whether it’s a for-profit school — at the U.S. Department of Education’s sites, College Scorecard or College Navigator. The FTC’s Military Consumer site also has helpful advice on finding and paying for school.
