by Scott Muniz | Feb 8, 2022 | Security, Technology

This article is contributed. See the original author and article here.

Mozilla has released security updates to address vulnerabilities in Firefox and Firefox ESR. An attacker could exploit some of these vulnerabilities to take control of an affected system.

CISA encourages users and administrators to review the Mozilla security advisories for Firefox 97 and Firefox ESR 91.6 and apply the necessary updates.

by Contributed | Feb 7, 2022 | Dynamics 365, Microsoft 365, Technology

For SOLEVO Group, like many other businesses, having a finance system that meets their needs for agility, flexibility, and scale was incredibly important, so in 2019 they implemented Microsoft Dynamics 365 Finance. Paired with interconnected tools such as Microsoft Power Platform, Dynamics 365 gave them a high degree of visibility into the past and present state of their business, but SOLEVO wanted more insights into where the business was headed.

SOLEVO was one of our first customers to implement finance insights, gaining access to more accurate payment predictions and cash flow forecasts, as well as prescriptive tools that would streamline the budgeting process. We recently sat down with Martin Sengel, the CIO of SOLEVO Group, and Vacaba Diaby, SOLEVO’s Global Credit Risk Officer, to learn about their experience working with finance insights and hear how it was transforming their business.

Interview with SOLEVO

What business goals and challenges are you trying to solve for? What are you hoping finance insights can unlock to help achieve this?

“Our Global Risk Officer at SOLEVO wanted to have more insights on Customer Payments, especially to take automatic actions based on the historical analysis for each customer. We hope that finance insights can help us setup a process more proactive and help him get a better overview. We wanted to have a Cashflow forecast directly in Finance Operations in combination with an optimization our budget for each department.”

Why was Microsoft the right solution? What were the key benefits that led you to choose Microsoft for your business solutions? Were AI and machine learning (ML)-powered capabilities a key differentiator in your vendor selection process?

“Microsoft was the right solution for us to replace our old ERP systems back in 2019. We wanted a global solution that support not only ERP Processes but also a publisher who has a perfect fit and understanding of our business, offering regular updates and evolutions and a range of interconnected tools such as Power Platform, Cloud Azure and all Dynamics 365 applications. Dynagile Consulting was the right partner to help us integrate Dynamics 365 Finance Operations in all our countries and help us right now to leverage the ERP with the Power Platform, Azure Data and now AI and ML including of course finance insights. AI and ML-powered capabilities was indeed a key selection for us when we had taken this selection.”

How will finance insights help you re-imagine the way you operate your ERP business and respond to customers and trends? What is the impact of being able to operate in this new way?

“Finance insights will help us to have intelligence/insights on our data in the system. Top management of SOLEVO have already Power BI Reporting to investigate the past, but what about the future/predictions? Finance insights will provide for us directly in the ERP some key elements for our key users to take action. It will save time, and therefore money, with an end-to-end automated solution process. Budgeting processes will be reduced every year now, a more accurate and intelligent company cash flow and when we will receive payments. At the end, it will provide a rich and intelligent financial system that drives decision making and helps us take actions to respond effectively to current and anticipated business challenges.”

What kinds of insights do you hope to gain from the solution, especially with regards to revenue, growth, and planning? How will you be able to make more intelligent decisions and drive operational efficiency through these insights and business automations?

“With COVID-19 challenge, it was very important for us to have a better accuracy on the Customer Payment Predictions and an intelligent Cash Flow forecast. All the way of the three solutions provided by finance insights, this will speed up all our finance processes facilitating the planning of our teams. Of course, that will lead to drive operational efficiency and therefore to revenue.”

Do you have any details on specific scenarios being enabled or problems that will be solved by the deployment of these technologies?

“For example, thanks to finance insights, one of our use cases will be automating credit collection letters and notifications to our Global Risk Officer with the Customer Payment Prediction based on each customer for each legal entity. Based on the confidence score and on the estimated average number of days late which is refreshed every day with our latest data from Dynamics 365 Finance and scored for new or updated rows we will send a notification via Power Automate to the end customer and the regional CFO, if we found this customer as high-risk.”

Learn more

In a short time, finance insights have already had a significant impact on SOLEVO’s business, bringing a layer of intelligence to their operations. Not only has it provided more accurate forecasts, which are critical for strategic planning, it has also saved them time and money by allowing them to automate processes such as credit collection letters and notifications. With a rich and intelligent financial system in place, SOLEVO is now better positioned to make strategic decisions and respond more effectively to current and future business challenges.

To learn more about how finance insights can transform your financial operations, read our finance insights announcement blog, check out our support documentation, or watch our webinar on reshaping the future of financials through AI with Ray Wang from Constellation Research Inc.

The post SOLEVO Group transforms their financial operations with finance insights appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Feb 7, 2022 | Technology

It’s a challenging time in software security; migration to the modern cloud, the largest number of remote workers ever, and a global pandemic impacting staffing and supply chains all contribute to changes in organizations. Unfortunately, these changes also give bad actors opportunities to exploit organizations:

“Cybercriminals are targeting and attacking all sectors of critical infrastructure, including healthcare and public health, information technology (IT), financial services, and energy sectors. Ransomware attacks are increasingly successful, crippling governments and businesses, and the profits from these attacks are soaring.”

– Microsoft Digital Defense Report, Oct 2021

For years Microsoft Office has shipped powerful automation capabilities called active content, the most common kind being macros. While we provided a notification bar to warn users about these macros, users could still decide to enable them by clicking a button. Bad actors send macros in Office files to end users, who unknowingly enable them; malicious payloads are then delivered, and the impact can be severe, including malware, compromised identity, data loss, and remote access. See more in this blog post.

“A wide range of threat actors continue to target our customers by sending documents and luring them into enabling malicious macro code. Usually, the malicious code is part of a document that originates from the internet (email attachment, link, internet download, etc.). Once enabled, the malicious code gains access to the identity, documents, and network of the person who enabled it.”

– Tom Gallagher, Partner Group Engineering Manager, Office Security

For the protection of our customers, we need to make it more difficult to enable macros in files obtained from the internet.

Changing Default Behavior

We’re introducing a default change for five Office apps that run macros:

VBA macros obtained from the internet will now be blocked by default.

For macros in files obtained from the internet, users will no longer be able to enable content with a click of a button. Instead, a message bar will notify users that the macros are blocked and offer a button to learn more. The default is more secure and is expected to keep more users safe, including home users and information workers in managed organizations.

“We will continue to adjust our user experience for macros, as we’ve done here, to make it more difficult to trick users into running malicious code via social engineering while maintaining a path for legitimate macros to be enabled where appropriate via Trusted Publishers and/or Trusted Locations.”

– Tristan Davis, Partner Group Program Manager, Office Platform

This change only affects Office on devices running Windows and only affects the following applications: Access, Excel, PowerPoint, Visio, and Word. The change will begin rolling out in Version 2203, starting with Current Channel (Preview) in early April 2022. Later, the change will be available in the other update channels, such as Current Channel, Monthly Enterprise Channel, and Semi-Annual Enterprise Channel.

At a future date to be determined, we also plan to make this change to Office LTSC, Office 2021, Office 2019, Office 2016, and Office 2013.

End User Experience

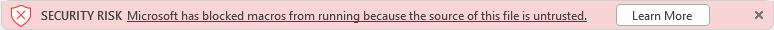

When a user opens an attachment, or downloads an untrusted Office file containing macros from the internet, a message bar displays a Security Risk warning that the file contains Visual Basic for Applications (VBA) macros obtained from the internet, along with a Learn More button.

A message bar displays a Security Risk showing blocked VBA macros from the internet

The Learn More button goes to an article for end users and information workers that contains information about the security risk of bad actors using macros, safe practices to prevent phishing & malware, and instructions on how to enable these macros by saving the file and removing the Mark of the Web (MOTW).

What is Mark of the Web (MOTW)?

The MOTW is an attribute that Windows adds to files when they are sourced from an untrusted location (Internet or Restricted Zone). The files must be saved to an NTFS file system; the MOTW is not added to files on FAT32-formatted devices.
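
To make the attribute more concrete, here is a minimal Python sketch of how a script might check for it. It relies on a detail the article does not spell out: on NTFS, the mark is stored in an alternate data stream named `Zone.Identifier`, whose text contains a `ZoneId` value (3 for the Internet zone, 4 for Restricted Sites). The function names are hypothetical, and this is an illustration rather than how Office itself performs the check.

```python
import configparser
from typing import Optional

# Zone IDs treated as untrusted: 3 = Internet, 4 = Restricted Sites.
UNTRUSTED_ZONES = {3, 4}


def parse_zone_id(stream_text: str) -> Optional[int]:
    """Extract ZoneId from the text of a Zone.Identifier stream, if present."""
    parser = configparser.ConfigParser(interpolation=None)
    try:
        parser.read_string(stream_text)
        return parser.getint("ZoneTransfer", "ZoneId")
    except (configparser.Error, ValueError):
        # Missing section/option or a non-numeric value: treat as "no zone".
        return None


def has_mark_of_the_web(stream_text: Optional[str]) -> bool:
    """True when the stream text marks the file as Internet or Restricted."""
    if stream_text is None:
        return False
    return parse_zone_id(stream_text) in UNTRUSTED_ZONES


def read_zone_identifier(path: str) -> Optional[str]:
    """Read the alternate data stream (works only on Windows with NTFS)."""
    try:
        with open(path + ":Zone.Identifier", "r", encoding="utf-8",
                  errors="replace") as stream:
            return stream.read()
    except OSError:
        # No stream, non-NTFS volume, or non-Windows platform.
        return None
```

On a Windows machine, `has_mark_of_the_web(read_zone_identifier(path))` would combine the two steps; on a FAT32 volume the stream cannot exist, so the check returns `False`, which matches the behavior described above.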

IT Administrator Options

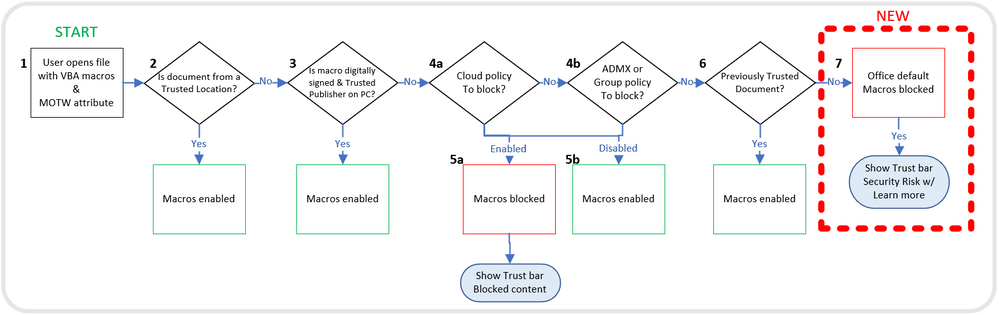

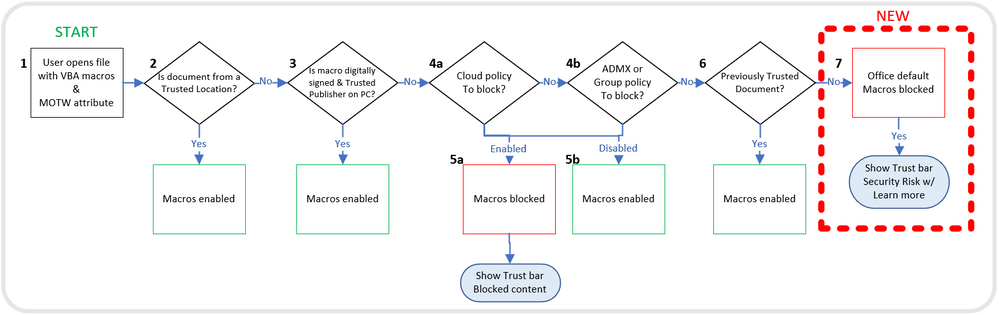

This chart shows the evaluation flow for Office files with VBA macros and MOTW:

Evaluation flow for Office files with VBA macros and MOTW

Organizations can use the “Block macros from running in Office files from the Internet” policy to prevent users from inadvertently opening files from the internet that contain macros. Microsoft recommends enabling this policy, and if you do enable it, your organization won’t be affected by this default change.

“Setting policy is a powerful tool for IT Admins to protect their organizations. For years we’ve recommended blocking macros obtained from the internet in our security baselines, and many customers have done so. I’m pleased Microsoft is taking the next step to securing everyone with this policy by default!”

– Hani Saliba, Partner Director of Engineering, Office Calc

Additionally, there are two other options to know your files are safe:

- Opening files from a Trusted Location

- Opening files with digitally signed macros and providing the certificate to the user, who then installs it as a Trusted Publisher on their local machine
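
The rules described in this post can be summarized in a small Python sketch. It is deliberately simplified: the actual evaluation flow in the chart has more branches (policy settings among them), and the order of checks here is an assumption. The function and parameter names are hypothetical; only the list of affected apps, the Windows-only scope, and the two trust exceptions come from the text above.

```python
# Apps affected by the new default, as listed in this post.
AFFECTED_APPS = {"Access", "Excel", "PowerPoint", "Visio", "Word"}


def blocked_by_new_default(
    app: str,
    on_windows: bool,
    has_motw: bool,
    in_trusted_location: bool,
    signed_by_trusted_publisher: bool,
) -> bool:
    """Return True if the new default would block VBA macros in the file.

    Simplified sketch: policy settings and other branches of the real
    evaluation flow are intentionally left out.
    """
    if not on_windows or app not in AFFECTED_APPS:
        return False  # the change does not apply here
    if not has_motw:
        return False  # file is not marked as coming from the internet
    if in_trusted_location or signed_by_trusted_publisher:
        return False  # explicit trust keeps a path for legitimate macros
    return True
```

For example, a Word file downloaded from the internet and opened from an ordinary folder on Windows would be blocked, while the same file opened from a Trusted Location would not.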

To learn more about how to get ready for this change and recommendations for managing VBA macros in Office files, read this article for Office admins.

Thank you,

Office Product Group

VBA Team & Office Security Team

More helpful information on the threats of Ransomware:

Continue the conversation by joining us in the Microsoft 365 Tech Community! Whether you have product questions or just want to stay informed with the latest updates on new releases, tools, and blogs, Microsoft 365 Tech Community is your go-to resource to stay connected!

by Scott Muniz | Feb 7, 2022 | Security, Technology

The Federal Bureau of Investigation (FBI) has released a Flash report detailing indicators of compromise (IOCs) associated with attacks using LockBit 2.0, a Ransomware-as-a-Service that employs a wide variety of tactics, techniques, and procedures, creating significant challenges for defense and mitigation.

CISA encourages users and administrators to review the IOCs and technical details in FBI Flash CU-000162-MW and apply the recommended mitigations.

by Contributed | Feb 6, 2022 | Technology

Moved from: bobsql.com

I received a question from Jonathan after he read over prior posts on locking. I cannot take credit for either the question or the answer; I am just the middleman for this transaction.

Question:

“The way I have understood lock partitioning and a regular index rebuild to interact, is that the final SCH-M lock for the object is taken by acquiring it across all of the lock partitions, in ascending order to avoid any deadlock conditions. More precisely I understood that it does not even attempt to acquire the SCH-M in lock partition 4 say, until it has acquired it successfully in lock partition 3. Once the SCH-M is acquired in the final partition it’s good to go.

If we attempt the same with low priority waits for the index rebuild … on a non-partitioned system it seems straightforward, if my SCH-M request is blocked by an existing SCH-S, and a new SCH-S request arrives, it is not blocked by me. If we apply the same behavior on each lock partition in ascending order, then at the point where I am trying to acquire SCH-M in the final partition, that would mean I already have acquired it in all the lower partitions, and I would be blocking processes on every other scheduler.

Do you know how this works?” – Jonathan Kehayias

The developer’s (Panagiotis) answer:

The goal of WAIT_AT_LOW_PRIORITY is to avoid blocking any other requests while waiting for a lock. In the case of lock partitioning, the lock might be acquired on a few partitions and then end up waiting on partition 4 because there is a conflicting lock being held there. If we simply waited with low priority on partition 4, we would not block any new requests on partition 4, but since we are holding locks on earlier partitions the user requests would be blocked. Based on that, when WAIT_AT_LOW_PRIORITY is used, we wait with low priority on the first partition and if acquired we attempt to lock all other partitions without waiting. If we can’t take the lock on partition 4, we will unlock all earlier partitions to eliminate blocking and start waiting with low priority on 4. Once the lock on partition 4 is acquired, we follow the same process of acquiring the next partitions without waiting, cycling back to partition 0 once we hit the last partition.
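
The acquisition loop described above can be sketched as a small Python simulation. This is a toy model, not SQL Server code: `ToyPartitionedLock`, its methods, and the trace strings are all hypothetical, and the low-priority wait is simulated by assuming the conflicting holder releases its lock while we wait.

```python
from typing import List, Set


class ToyPartitionedLock:
    """Toy model: each partition may have a conflicting lock held by others."""

    def __init__(self, num_partitions: int, conflicts: Set[int]) -> None:
        self.num_partitions = num_partitions
        self.conflicts = set(conflicts)  # partitions with a conflicting holder
        self.held: Set[int] = set()      # partitions we currently hold
        self.trace: List[str] = []

    def try_lock(self, p: int) -> bool:
        """Attempt the lock without waiting."""
        if p in self.conflicts:
            self.trace.append(f"try {p}: blocked")
            return False
        self.held.add(p)
        self.trace.append(f"try {p}: acquired")
        return True

    def wait_low_priority(self, p: int) -> None:
        """Simulated low-priority wait: the conflicting holder finishes."""
        self.conflicts.discard(p)
        self.held.add(p)
        self.trace.append(f"wait {p}: acquired")

    def unlock_all(self) -> None:
        self.trace.append(f"release {sorted(self.held)}")
        self.held.clear()


def acquire_all_low_priority(lock: ToyPartitionedLock) -> None:
    """Acquire every partition, never holding some while waiting on another."""
    start = 0
    while True:
        lock.wait_low_priority(start)  # the only place we wait
        blocked_at = None
        # Visit the remaining partitions in order, wrapping past the last one.
        for step in range(1, lock.num_partitions):
            p = (start + step) % lock.num_partitions
            if not lock.try_lock(p):
                blocked_at = p
                break
        if blocked_at is None:
            return  # every partition is now held
        lock.unlock_all()   # stop blocking requests on earlier partitions
        start = blocked_at  # wait with low priority on the contended one
```

With six partitions and a conflict on partition 4, the trace shows partitions 0 through 3 being acquired and then released, a low-priority wait on 4, and finally 5, 0, 1, 2, and 3 acquired without waiting.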

Bob Dorr

by Contributed | Feb 5, 2022 | Technology

Moved from: bobsql.com

Download attachment to read the full content.

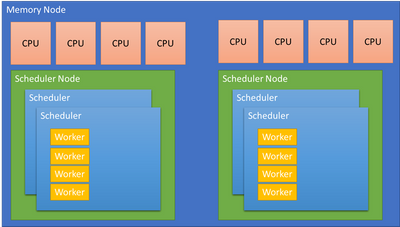

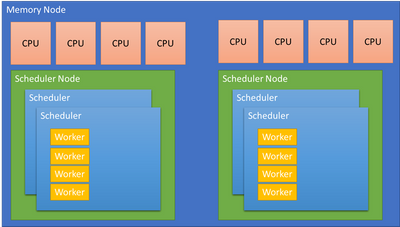

SQL Server uses 3 types of affinity to control where the SQL Server worker threads execute. Before explaining the different scheduler affinity types let me clarify some terminology.

Node Types

SQL Server makes a specific distinction between scheduling and memory nodes.

Scheduling nodes: sys.dm_os_nodes

Memory nodes: sys.dm_os_memory_nodes

A scheduling node is used to group a set of SQLOS schedulers. A scheduling node:

- Must remain within a single memory node.

- Can be configured to use a subset of the CPUs presented by the OS from the same memory node.

For example: A memory node with 64 CPUs is a complete operating system scheduler group. SQL Server may choose to divide the memory node, allowing for better partitioning and performance. The Soft-NUMA feature may take the 64 CPUs and create 8 scheduling nodes, each managing 8 CPUs, or 4 scheduling nodes, each managing 16 CPUs, and so on. The decision is performance driven.

A memory node represents the memory associated with a group of CPUs from the physical hardware. SQL Server aligns schedulers and other partitioned structures with the memory node to reduce access to remote NUMA node memory when possible. A memory node may have one or more scheduling nodes, but a scheduling node can only be assigned to a single memory node.
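
The 64-CPU example can be illustrated with a few lines of Python. The function name and the even-split rule are assumptions made for illustration; SQL Server's actual division is performance driven and is not reproduced here. The sketch only shows the shape of the result: each scheduling node receives CPUs from exactly one memory node.

```python
from typing import List


def split_memory_node(cpu_ids: List[int], cpus_per_node: int) -> List[List[int]]:
    """Divide one memory node's CPUs into scheduling nodes of a fixed size."""
    if cpus_per_node <= 0:
        raise ValueError("cpus_per_node must be positive")
    return [
        cpu_ids[start:start + cpus_per_node]
        for start in range(0, len(cpu_ids), cpus_per_node)
    ]


memory_node_cpus = list(range(64))           # one memory node with 64 CPUs
eight_by_eight = split_memory_node(memory_node_cpus, 8)
four_by_sixteen = split_memory_node(memory_node_cpus, 16)
```

Here `eight_by_eight` holds 8 scheduling nodes of 8 CPUs each and `four_by_sixteen` holds 4 scheduling nodes of 16 CPUs each, matching the two layouts mentioned in the example.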

…

by Scott Muniz | Feb 4, 2022 | Security

This article was originally posted by the FTC. See the original article here.

When identity theft happens, it’s hard to know where to begin. That’s why the FTC created IdentityTheft.gov, a one-stop resource for people to report identity theft to law enforcement and to get step-by-step instructions on how to recover from any type of identity theft.

The first step in avoiding identity theft, or stopping the damage, is placing a fraud alert on your credit report. This makes it harder for a thief to open new credit in your name, and lets you get free copies of your credit report from each of the three credit bureaus. Next, read through your reports and note any accounts or transactions that don’t belong. Then, go to IdentityTheft.gov.

When you report at IdentityTheft.gov, you’ll answer questions and give details about what happened. Include information about any problems you spotted on your credit reports. IdentityTheft.gov will use that information to create your personalized:

- Identity Theft Report, which shows that someone stole your identity, and

- recovery plan with step-by-step advice to help you fix problems.

Your Identity Theft Report, recovery plan, and sample letters from IdentityTheft.gov will help you repair problems caused by identity theft. Your recovery plan may tell you to:

Learn more about protecting your identity and recovering from identity theft at ftc.gov/idtheft.

by Contributed | Feb 4, 2022 | Technology

Many organizations want to provide Microsoft Teams to their employees, but not in a “no strings attached” way. With our customers, we often see the need for a provisioning solution, so people aren’t creating teams without thinking.

With “traditional” team creation (i.e. the built-in “Create a team” functionality) a lot of the teams that get created don’t leverage the full potential that Microsoft Teams has to offer. When a team is created as a blank slate the owner has to know what the possibilities are and how to set it up, to create a team fit for their need. On the other hand, templates don’t satisfy users’ needs either. There is no such thing as “one size fits all”. Again, to modify it, the user needs to have a certain level of knowledge.

A different approach

To tackle these two challenges when it comes to Microsoft Teams provisioning, we had a vision of a provisioning solution where we blend learning with the process of creating your team. We provide the why, the user decides the what, and the tool takes care of the how. This is how ProvisionGenie was born.

ProvisionGenie is a tool that guides the user through the creation process. Someone who desires a team can start up the application from inside Microsoft Teams, so they stay in the flow of their work. They will need to provide some basic information such as the name, description, and members of the team. Then the tool continues with some of the essential building blocks of a great Microsoft Teams team: channels, lists and libraries. The user gets information on why they would care about these things, and they can customize them to their liking.

An enterprise-ready solution

ProvisionGenie is built with companies in mind: a scalable database, a reliable workflow engine and a Teams-like user interface.

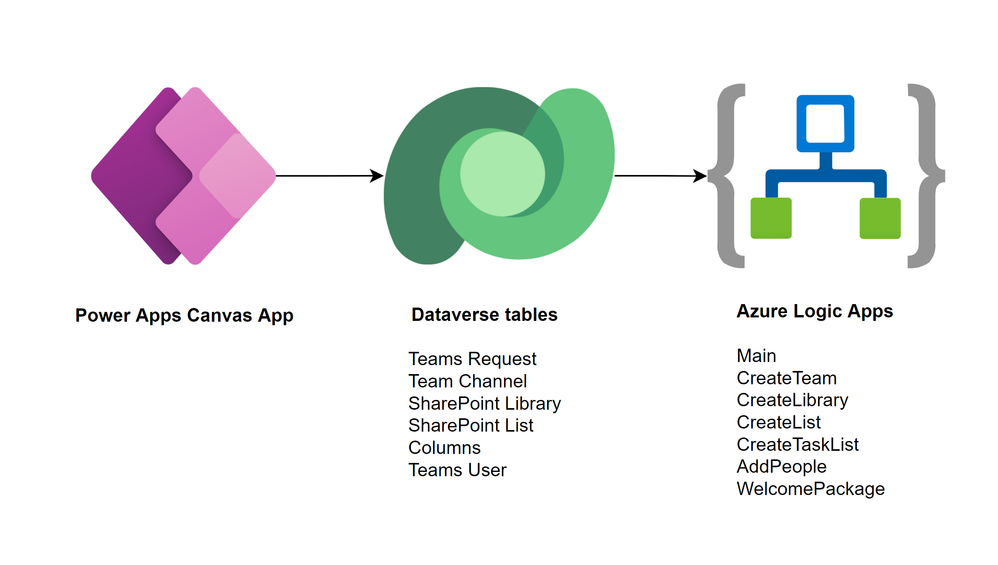

The data is stored in Microsoft Dataverse, the relational database built into the Power Platform that allows for advanced security scenarios. In the current version, we focus on the front-end for the users of the application. By storing our data in Dataverse however, we can expand this relatively easily in the future with a model-driven application for administrators.

Azure Logic Apps take care of the workflows and logic of creating the team with its resources. Logic Apps not only offer better permission management, they can also be deployed across tenants automatically. They are also more scalable, and therefore more performant, than their low-code counterpart, Power Automate.

Finally, a Power Apps canvas app is used to create a beautiful user interface that fits seamlessly into Microsoft Teams. With a canvas app, there is full control over the look and feel of the UI. The canvas app provides the different options to the user and saves the configuration in Dataverse. There is no direct link between the canvas app and the Logic Apps.
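
The decoupling described above, where the app only writes configuration to the database and the workflow only reads from it, can be sketched in Python. Everything here is a stand-in: `RequestStore` plays the role of Dataverse, `submit_request` the canvas app, and `process_pending` the Logic Apps workflow. None of these names come from ProvisionGenie, and how the real workflow is triggered is not covered in this post.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TeamRequest:
    name: str
    description: str
    members: List[str]
    channels: List[str] = field(default_factory=list)
    status: str = "pending"


class RequestStore:
    """In-memory stand-in for the database that sits between both sides."""

    def __init__(self) -> None:
        self.rows: List[TeamRequest] = []

    def save(self, request: TeamRequest) -> None:
        self.rows.append(request)

    def pending(self) -> List[TeamRequest]:
        return [row for row in self.rows if row.status == "pending"]


def submit_request(store: RequestStore, request: TeamRequest) -> None:
    """Front-end side: save the configuration and nothing else."""
    store.save(request)


def process_pending(store: RequestStore) -> List[str]:
    """Workflow side: pick up saved requests without calling the front end."""
    created = []
    for request in store.pending():
        # A real workflow would create the team and its channels here.
        request.status = "provisioned"
        created.append(request.name)
    return created
```

Because the two functions share only the store, either side could be replaced or extended (for example, with an administrator app) without changing the other.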

A community driven initiative

ProvisionGenie was born out of the collaboration of two community members because we wanted to provide a different solution for Microsoft Teams provisioning.

We decided quite early in the process that we wanted this to be a solution for the community, by the community. Therefore, this is an open-source project which you can find on GitHub (<<link>>) and to which everyone is welcome to contribute.

Bios

Carmen Ysewijn

Power Platform Architect | Microsoft Business Applications MVP

Carmen is a Business Applications MVP and Power Platform Architect at Qubix (Belgium) with a passion for finding the right solution for any challenge that arises. With this solution-oriented approach, she helps customers improve their business processes. She loves to share the knowledge she gains along the way on her blog or by speaking at conferences.

Luise Freese

Microsoft MVP, Microsoft 365 Consultant, Power Platform Developer

Luise helps customers around the globe to improve their business processes and to get rid of everything that only keeps them busy without adding value in a meaningful way. She is a member of the M365 PnP team and supports developers in extending Microsoft 365. She loves all things community, open-source, stickers, and the number 42.

To write your own blog on a topic of interest as a guest blogger in the Microsoft Teams Community, please submit your idea here: https://aka.ms/TeamsCommunityBlogger

by Contributed | Feb 4, 2022 | Business, Microsoft 365, Microsoft Viva, Technology

The pandemic has fundamentally changed how people value where they work, how they work, and most importantly—why they work. One year ago today, we announced Microsoft Viva to help accelerate this transformation.

The post Microsoft Viva celebrates 1 year: Transforming the employee experience appeared first on Microsoft 365 Blog.

by Scott Muniz | Feb 4, 2022 | Security

Lots of people recently got an email or letter about free credit monitoring through the Equifax settlement. That’s because the settlement with Equifax was just approved by a court. So now, if you signed up for credit monitoring as part of that settlement, you can take a few steps to switch it on. The email or letter tells you how. Learn more at the FTC’s official site for information: ftc.gov/Equifax.

Remember that you don’t have to pay for credit monitoring as part of this settlement, and nobody will call, text, or email out of the blue to ask you for your credit card or bank account numbers, or to “help” you get your free credit monitoring. Anyone who does is a scammer, so please tell the FTC at ReportFraud.ftc.gov.

Learn more about the settlement and free credit monitoring at ftc.gov/Equifax.
