At Microsoft Ignite 2022, we are showcasing new AI, automation, and collaborative solutions that help your business be more efficient, engage better across teams and departments, and deliver more breakthrough customer experiences. By better connecting people, data, and processes, your business can be more agile and reduce complexity, so you can do more with less.

In a special Into Focus session, we will showcase these and other new investments introduced in our second biannual release wave, release wave 2, to help you adapt to change, innovate, and modernize your processes across the organization, from sales to service to supply chain and finance.

Product updates at a glance

Be more efficient with new AI and automation updates:

- Empower sellers to focus on closing deals with Microsoft Viva Sales, the new seller experience application that is now generally available.

- Make every sales conversation more useful and engaging with conversation intelligence, available for no extra charge in Microsoft Dynamics 365 Sales Enterprise and Viva Sales.

- Create more consistent sales processes with sequencing for sales in Dynamics 365 Sales.

- Enable service agents to better serve customers with AI-generated conversation summaries in Microsoft Dynamics 365 Customer Service.

Collaborate in the flow of work with new integrations between Microsoft Teams and Microsoft Dynamics 365:

- Solve complex service cases faster by easily collaborating with subject matter experts over Teams, right within Microsoft Dynamics 365 Customer Service.

- Enable Teams users to access Dynamics 365 Business Central data, even without a Dynamics 365 license.

Improve employee and customer experiences with AI and automation

We continue to infuse AI and automation into business processes, driving more effective customer journeys. The updates we are introducing will help marketers, sellers, and service agents to hyper-personalize customer experiences.

Introducing unlimited conversation intelligence for Dynamics 365 Sales Enterprise and Viva Sales

Sellers can take advantage of new AI capabilities that help them prioritize their work and surface in-context collaboration experiences so that they can reclaim time to engage more authentically and efficiently.

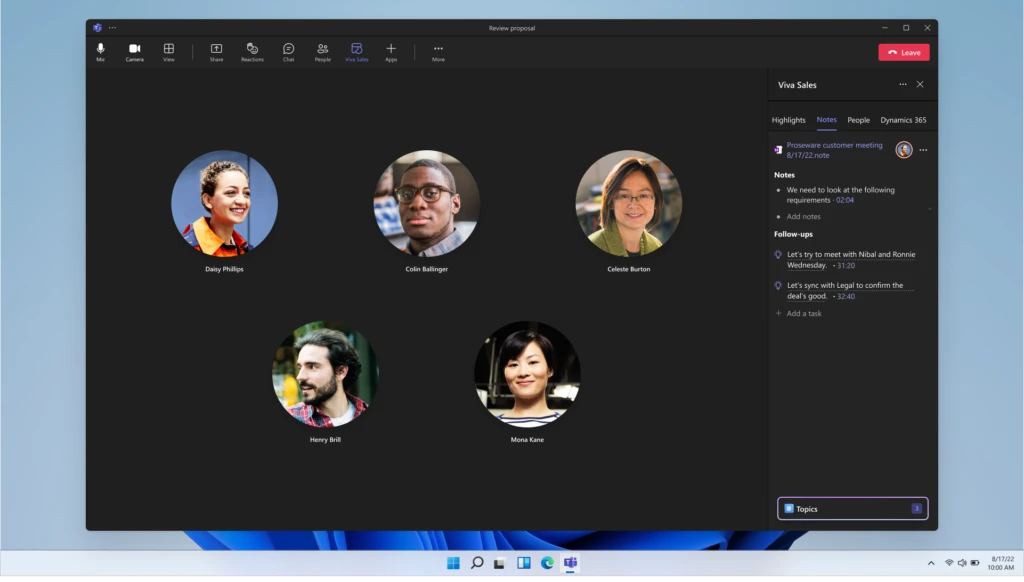

Now generally available, Viva Sales is a new seller experience application that brings together any customer relationship management (CRM) system, Microsoft 365, and Teams to provide a more streamlined and AI-powered selling experience. Viva Sales captures customer insights and deal insights from Microsoft 365, including Outlook emails and Teams chats, and then populates them within any CRM system, eliminating manual data entry and freeing time to focus on selling.

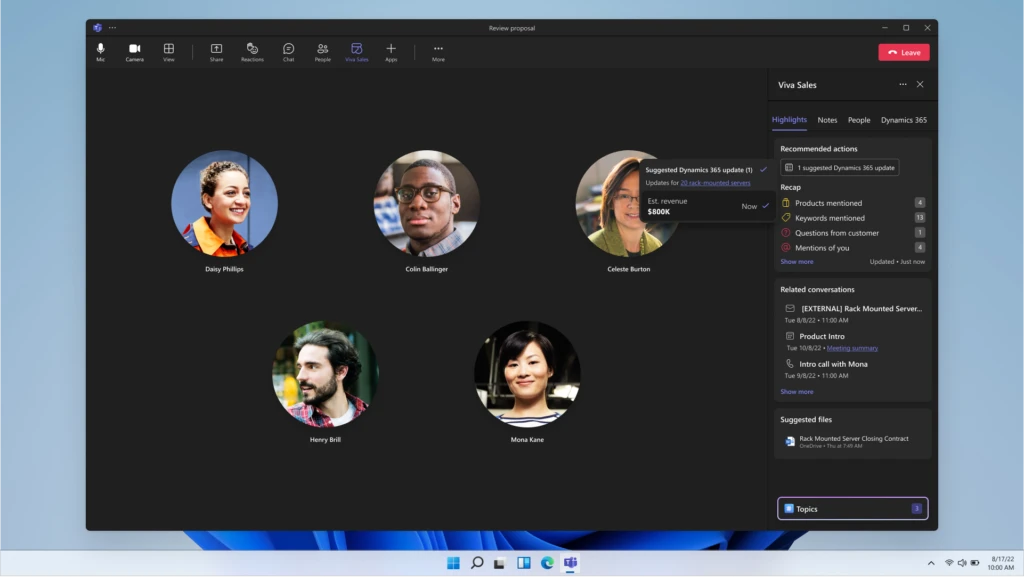

Additionally, while meeting with customers in a Teams call, sellers can record and transcribe the meeting to get a rich summary using conversation intelligence. The meeting summary helps sellers understand the overall sentiment of the call and tracks helpful conversation key performance indicators (KPIs), such as a seller’s talk-to-listen ratio.

We are confident that conversation intelligence will change the way sellers engage with customers, and we want to make it available to as many sellers as possible. That’s why we are announcing that users of both Microsoft Dynamics 365 Sales Enterprise and Viva Sales will have full access to conversation intelligence capabilities at no extra charge.

In addition, we are announcing several feature updates, coming to preview early next year, for sales conversation intelligence, including:

- Real-time, in-conversation content suggestions: As sellers engage customers on a Teams call or meeting, they will be guided with content to share or help inform the conversation, such as product and pricing details and competitive battle cards, all surfaced in real time as they chat.

- Recommended resources and insights: Content, such as talking points, important mentions, customer sentiment, and conversation style, helps provide a deeper understanding of what comprises winning sales strategies and how sellers’ behaviors directly correlate to business results.

- Email intelligence: This supports sellers by suggesting prompts for updates needed in the CRM.

Learn more about conversation intelligence in this detailed blog post. Also, read more about what’s possible with Viva Sales.

Enable sales journey orchestration with new sequencing for sales features

We are also introducing new AI-powered sequencing for sales capabilities for Dynamics 365 Sales that help create consistent step-by-step activities for sellers to perform in the selling process, giving them a better understanding of what to do next. With sequencing for sales, sellers are empowered to build similar customer journeys, ensuring the best experiences for their customers and their sellers.

All of these updates join a host of new features for Dynamics 365 Sales and Dynamics 365 Marketing, which introduce moments-based marketing with real-time journey orchestration, using AI to market at scale and achieve higher levels of marketing maturity.

Watch 2022 release wave 2 release highlights for Dynamics 365 Sales.

Empower service agents to always exceed customer expectations

As a frontline for customer satisfaction and retention, service agents need modern tools to scale the personalized support customers need. In the 2022 release wave 2, our focus is on enriching contact centers with AI and automation across every engagement channel.

Earlier this year, we introduced the Microsoft Digital Contact Center Platform, an open, extensible, and collaborative contact center solution. The platform is powered by Dynamics 365, Teams, Microsoft Power Platform, and Nuance, delivering best-in-class AI that powers self-service experiences, live customer engagements, collaborative agent experiences, business process automation, advanced telephony, and fraud prevention capabilities.

We are introducing the ability to automate an AI-generated conversation summary in Dynamics 365 Customer Service when an agent uses the embedded Teams capabilities. This accelerates issue resolution with an auto-generated structured conversation summary that shares context, including the summary of the customer issue and the result of the resolution tried by the agent. In addition, agents can review a customer’s previous chat history to get visibility and context to conversations. This is especially helpful in scenarios where a customer service agent has a case transferred to them.

The feature is in preview as part of Dynamics 365 Customer Service and is expected to be generally available in October 2022.

Watch 2022 release wave 2 release highlights for Dynamics 365 Customer Service.

More ways to collaborate in the flow of work with Teams and Dynamics 365

At last year’s Microsoft Ignite, we introduced Context IQ, a set of capabilities for Dynamics 365 and Microsoft 365 that enables business users to access documents and records, colleagues across the organization, and conversations in the flow of work, whether from within Dynamics 365 or Microsoft 365 applications.

We’ve already embedded Teams within Dynamics 365 Sales, helping sellers to team up with other sellers and subject matter experts to close deals faster. Now, we’re extending the integrated Teams experience to other Dynamics 365 applications.

Now in preview and slated for general availability in October 2022, service agents can engage with colleagues over Teams chat right from within Dynamics 365 Customer Service. This enables agents to solve complex service cases faster by easily collaborating with subject matter experts within the organization, such as agents from other departments, supervisors, customer service peers, or support experts. Chats over Teams will be linked directly to customer service records, enabling a contextual experience.

For small and medium-sized businesses (SMBs), 2022 release wave 2 updates for Dynamics 365 Business Central include new ways to collaborate and share data over Teams. Starting November 4, 2022, Teams users will have access to Business Central data from within the collaboration app, regardless of whether they have a Dynamics 365 license. Admins will be able to set permission and access rules to restrict access to business records. Business Central users can invite people from across the organization to connect and collaborate in the flow of work, no matter where they work.

With Business Central embedded in Teams, people can collaborate on critical initiatives and projects directly where they connect. SMBs can now ensure that all team members are empowered with Context IQ: access to the right information and insights contextually, wherever and however they work.

Learn more

These updates are just a few of the hundreds of new and updated capabilities in the 2022 release wave 2. We invite you to virtually attend our Microsoft Ignite Into Focus session on Wednesday, October 12, streaming live at 3:00 PM Pacific Time and then available on demand, for a first look at the innovations coming to market. In addition to overviews of the innovations in release wave 2, we will spotlight customers using these new technologies to drive better operational outcomes and customer success.

Be sure to also check out our roadmap for detailed release plans for Dynamics 365 and Power Platform.

The post Live from Microsoft Ignite 2022: Introducing new AI, automation, and collaboration capabilities for Dynamics 365 appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.
