by Contributed | Jul 7, 2021 | Technology

This article is contributed. See the original author and article here.

Are you a software developer who extends and customizes Microsoft Power Platform? The Microsoft Certified Power Platform Developer Associate certification is designed for you. Do you wonder how you can use your Microsoft Power Platform skills to help advance your career and to earn the recognition you deserve? A Microsoft Certification validates to the world that you have these development skills—whether you’re just starting out and growing your dev skills, looking to use your dev skills to advance your career, or wanting to change to a new, in-demand development career.

Software development is core to the digital transformation in organizations around the world. Companies are investing in evolving technology, and they need devs who understand the next generation of automation. A Microsoft white paper on role-based tech training and certifications explains that the need for workers who are deeply knowledgeable about the latest technology is skyrocketing. If you want to pursue these opportunities, certification could give you a boost. Sixty-one percent of respondents in a Nigel Frank survey believe that certifications can give you an edge in the job market. In that survey, one participant observed, “Certifications are ‘worldwide,’ so I am able to prove to partners worldwide that I am familiar with the product, and not just based on local references.”

You can add your certifications to your résumé and LinkedIn page to alert employers to your expertise. In this post in our series on discovering your career path, we look at the benefits of achieving an industry-recognized Microsoft Certified: Power Platform Developer Associate certification after passing Exam PL-400, and we suggest other certifications that can be useful for devs—whether you’re just starting out or brushing up on your skills as a seasoned professional.

The developer role and certification

Greg Hurlman, a Microsoft developer with more than 20 years of experience and a member of the Microsoft Power Platform Advocacy team, shares a helpful message with the traditional developer community—using Power Apps saves time and development effort. He points out, “I’ve been coding forever, but I will create an app with Power Apps first before I try to create anything with custom code—just because it’s so much faster and does so much of what you’re trying to do.”

You fit the profile for this developer role if you’re passionate about designing, developing, securing, and extending Microsoft Power Platform solutions. Developers who earn this certification must be able to create solution components (including application enhancements), custom user experiences, system integrations, data conversions, custom process automations, and custom visualizations. You need a strong applied knowledge of Microsoft Power Platform services and a basic understanding of DevOps practices for Microsoft Power Platform. In addition, your background should include development experience using JavaScript, JSON, TypeScript, C#, HTML, .NET, Azure, Microsoft 365, RESTful Web Services, ASP.NET, and Power BI.

You should have experience working with app makers, functional consultants, solution architects, and IT administrators. Your responsibilities are typically varied and include:

- Creating technical designs and Power Apps.

- Configuring Dataverse, Power Apps, and business process automation.

- Extending the platform and the user experience.

- Developing integrations.

Benefits of certification

Even developers with established skills need to stay ahead of the continuing changes in IT and technology. The role-based technical training white paper, mentioned earlier, reports that data shows the average life of a skill is about five years and that many technical professionals are only somewhat confident in their cloud computing abilities. Training and certification can help build that confidence.

There’s a growing demand for workers to build the next generation of automation. In the Global Knowledge 2020 IT Skills and Salary Report, over half of global IT decision-makers report that they expect their organization to invest in Microsoft technology this year. The survey also uncovered that IT professionals have plenty of opportunities to boost their pay. Those who earned a certification or learned a new skill could see an increase of as much as $12,000 a year.

In addition to highlighting your specific technical skills, a certification also indicates that you’re willing to learn new technology to get ahead and stay ahead. Employers are building tech-intensive organizations where people have not only the skills they’re looking for but also a willingness to embrace a culture of learning.

In the Pearson VUE report on the value of IT certification, many professionals report numerous personal benefits from having earned certifications, such as more credibility in the workplace and greater self-confidence. In the Global Knowledge survey, mentioned earlier, 93 percent said certified employees add more value, close skills gaps, are more productive than their non-certified peers, and meet their clients’ needs better.

Obtaining a variety of certifications can help you as you explore different pathways to success. Certifications give you career flexibility and earning options. The Global Knowledge survey found that the more certifications someone holds, the higher their salary tends to be.

Other certifications for you

Depending on your development skills and what you want to do with them, investigate the different Microsoft Power Platform certifications available to you. A Microsoft Certification signals that you have the skills that organizations are looking for when they hire and advance employees. Certification, combined with your drive and abilities, can help open career doors for you.

Many developers hold multiple certifications. We recently profiled April Dunnam, a Microsoft developer on the Microsoft Power Platform Advocacy team. Dunnam, who had been a Power Apps and Power Automate Most Valuable Professional (MVP), noted that certification helped her break into her development career during a recession and that her multiple certifications signaled to prospective employers her readiness for other roles.

Those with experience creating application enhancements, custom user experiences, system integrations, data conversions, and custom visualizations with Microsoft Power Platform might consider earning a Microsoft Certified: Power Platform Functional Consultant Associate certification (pass Exam PL-200). This and your Microsoft Power Platform Developer Associate certification can help set you up for later success with a Microsoft Certified: Power Platform Solution Architect Expert certification (pass Exam PL-600).

How do I get started?

Technical acumen, agility, and flexibility characterize traditional developers. You’re driven to solve problems and create automated solutions. These days, you might not be following a traditional career path, but you have successfully built, customized, and extended Microsoft Power Platform apps.

However, if you don’t feel ready to take the development certification exam just yet, we recommend that you work closely with a Microsoft Power Platform or Power Apps developer that you know and take on a project or two to deepen your skills. Get some real-life experience designing, configuring, and customizing Power Apps. Explore training opportunities to deepen your programming skills, or take an instructor-led course. No matter where you are on your journey, we have training that can help you get underway.

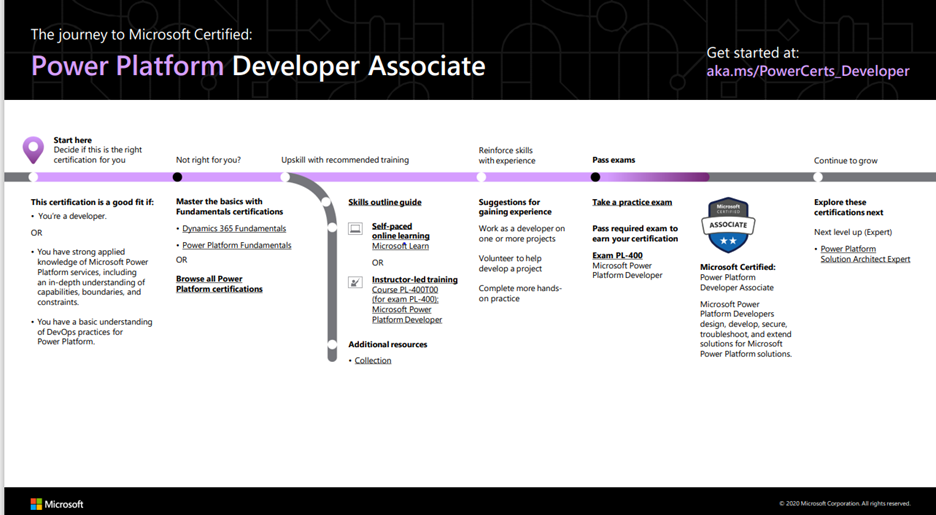

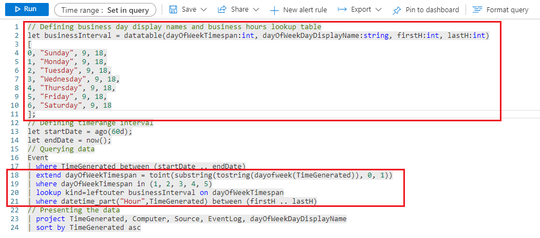

To get started with this certification, check out our Microsoft Power Platform Developer Associate certification journey map. You can also find the journey map in the resources section on the Microsoft Power Platform Developer Associate certification and exam pages.

To map out your journey, follow the sequence on the Microsoft Power Platform Developer Associate journey map. Start at the beginning by deciding whether this certification is a good fit for you.

Next, choose the training you’ll need to be prepared. To understand what you’ll be measured on when you take the exam, review the Exam PL-400 skills outline guide. Then sign up for the training that fits your learning style and experience:

A practice exam is available for this certification. You can take a trial run for the Microsoft Power Platform Developer Associate exam with the PL-400 Microsoft Official Practice Test. All the exam objectives are covered in depth, so you’ll find what you need to be ready for any question.

After you pass the exam and earn your certification, you can continue to broaden your technical expertise by taking one of the other certifications described in this blog post or by exploring other certification offerings. Consider our associate and expert certifications when you achieve the next milestones on your career journey. Map out the possibilities with Microsoft Power Platform and Dynamics 365 certification paths.

Where’s my community?

Join other developers in a community! Share your career journey with devs who have similar interests in Microsoft Power Platform technologies. Here are some places where you can share and gather information and learn from your peers and others.

Celebrate with the world: Post your badge on LinkedIn

When you earn a certification or learn a new skill, celebrate your accomplishment with your network. It often takes less than a minute to update your LinkedIn profile and share your achievements, highlight your skills, and help boost your career potential. Here’s how:

- If you’ve earned a certification already, follow the instructions in the congratulations email you received. Or find your badge on your Certification Dashboard, and follow the instructions there to share it. (You’ll be transferred to the Acclaim website.)

- For more information on badges, check out the Certification Badges FAQ.

- To add specific skills to your LinkedIn profile, go to your profile page and update the Skills and endorsements section. Tip: We recommend that you choose skills listed in the skills outline guide for your certification.

It’s easy to renew your certification

When your Microsoft Power Platform Developer Associate certification is about to expire, we’ve got good news. You can renew your current certifications by passing a free renewal assessment on Microsoft Learn, anytime within six months before your certification expires. For more details, read our blog post, Stay current with in-demand skills through free certification renewals.

Microsoft Power Platform resources

Ready to discover your career path?

Career prospects for developers are looking good. You’ve probably heard that millions of apps will be created in the next few years. To set yourself apart and ensure that you’re ready to be part of this tech intensity, remember that certifications validate your technical skills and experience. They’re a sign to employers that you’re ready to build for the future.


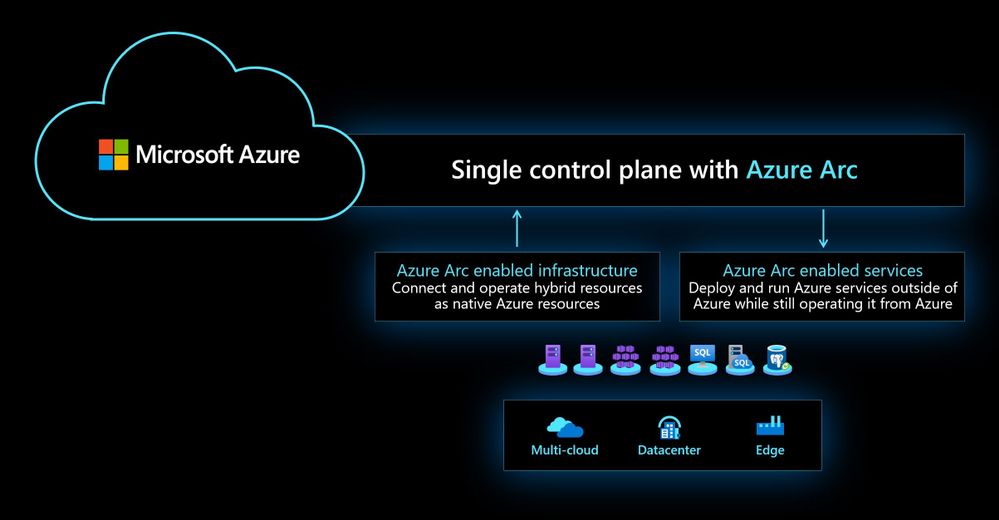

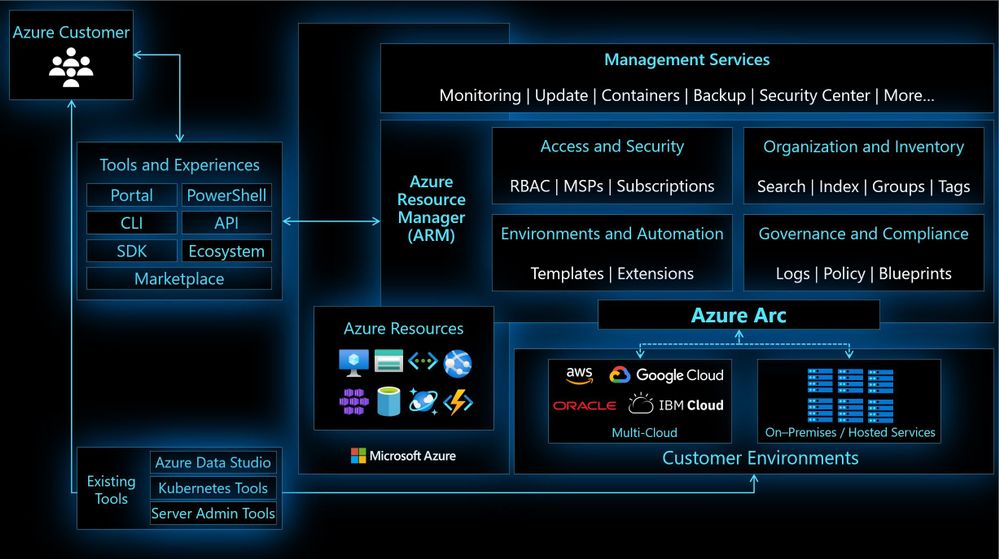

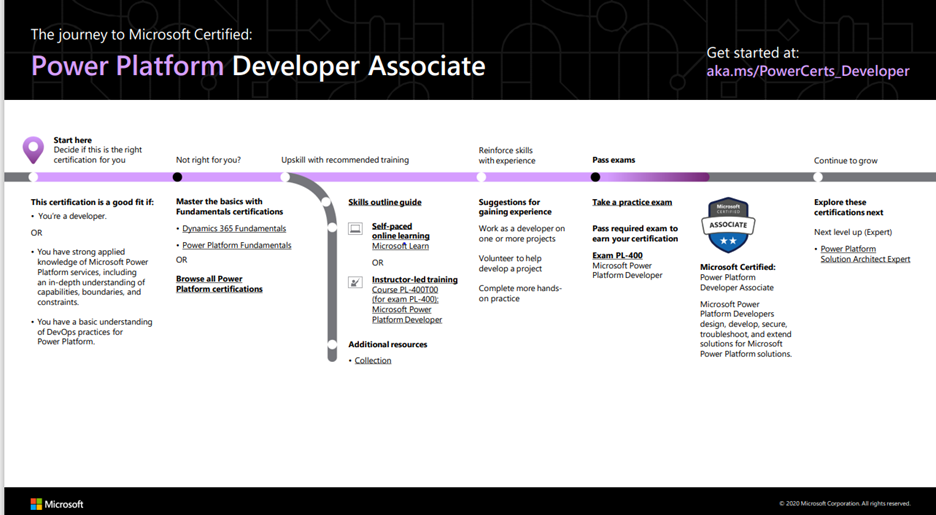

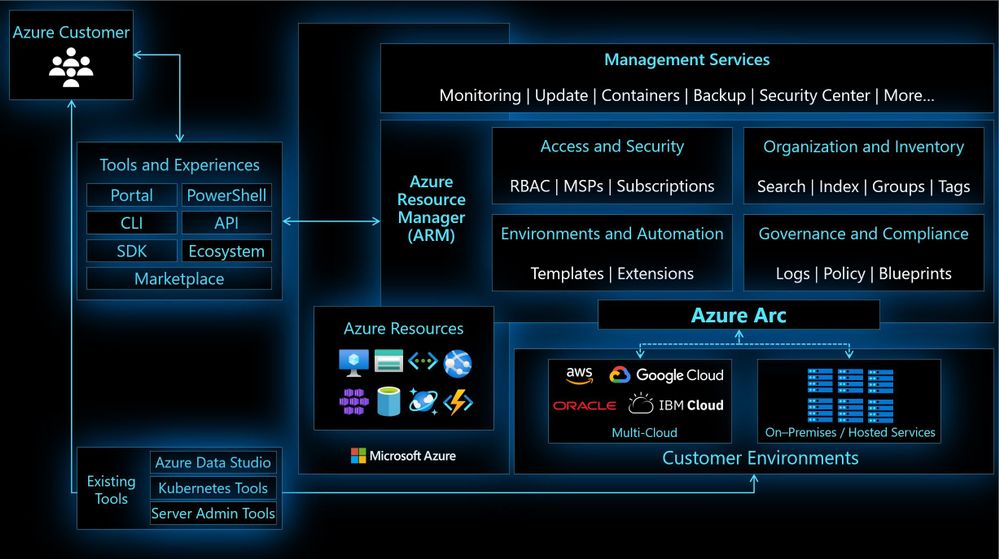

Azure Arc and the Azure control plane enable Cloud Solutions Architects to build hybrid and multicloud architectures. They can take advantage of the Azure control plane to manage infrastructure and to deploy Azure services anywhere. This allows customers to build cloud solutions and application architectures consistently, independent of where the application is running.

Azure Arc Overview


In this blog post, we will have a look at Azure Arc for Cloud Solutions Architects. Azure Arc allows you to extend Azure management and Azure services to anywhere. This means that you can deploy, manage, and govern resources running across hybrid and multicloud environments, and bring services such as Azure SQL Database and Azure PostgreSQL Hyperscale to your on-premises datacenter, edge location, or other cloud providers. Since Azure Arc can help in many different scenarios, this post focuses on the key ones for Cloud Solutions Architects.

Azure Arc

Azure Arc simplifies governance and management by delivering a consistent multi-cloud and on-premises management platform. Azure Arc enables you to manage your entire environment, with a single pane of glass, by projecting your existing resources into Azure Resource Manager. You can now manage virtual machines, Kubernetes clusters, and databases as if they are running in Azure. Regardless of where they live, you can use familiar Azure services and management capabilities. Azure Arc enables you to continue using traditional ITOps, while introducing DevOps practices to support new cloud-native patterns in your environment.

Azure Arc Architecture Single Control Plane


This provides you with a single control plane for your hybrid and multicloud environment.

Azure Arc for Cloud Solutions Architects

Let’s have a look at some key Azure Arc scenarios for Cloud Solutions Architects.

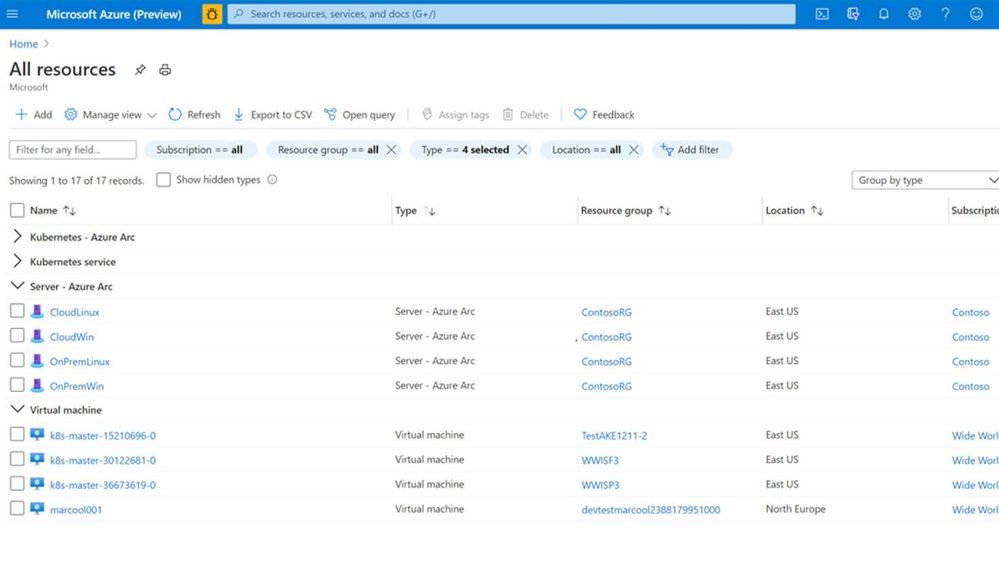

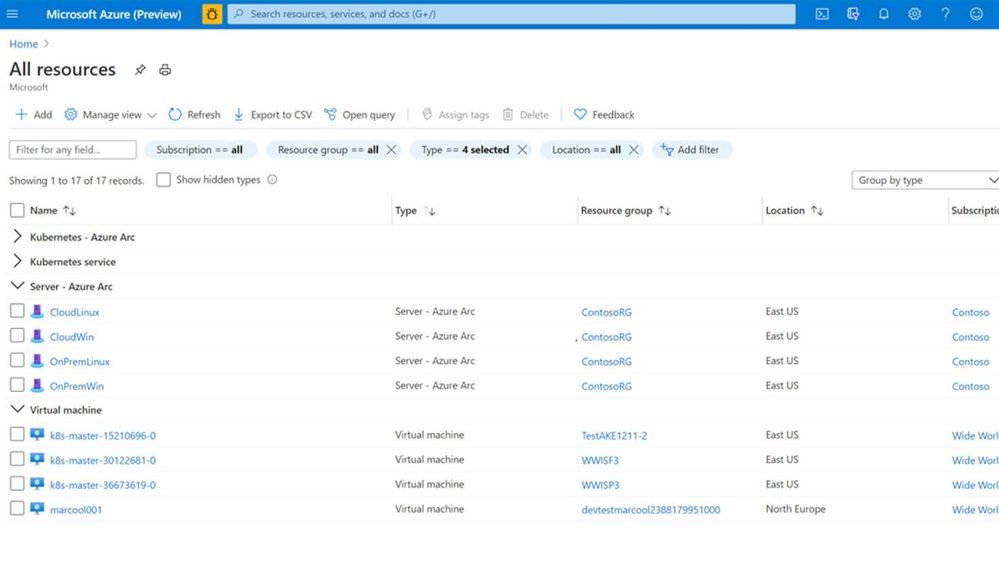

Use the Azure Portal to gain central visibility

In hybrid and multicloud environments, it can be difficult for Cloud Solutions Architects to get a central view of all the resources they need to manage. Some of these resources are running in Azure, some on-premises, in branch offices, or even at other cloud providers. By connecting resources to Azure Resource Manager using Azure Arc, Cloud Solutions Architects can get central visibility of a wide range of resources, including Windows and Linux servers, SQL Server, Kubernetes clusters, and Azure services running in Azure and outside of Azure.

Azure Arc and Azure resources in the Azure Portal


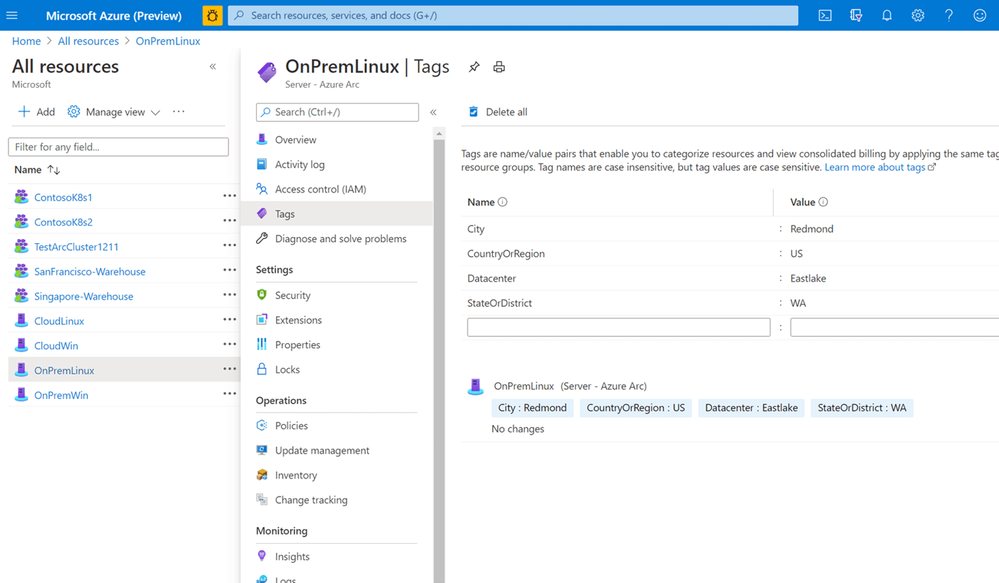

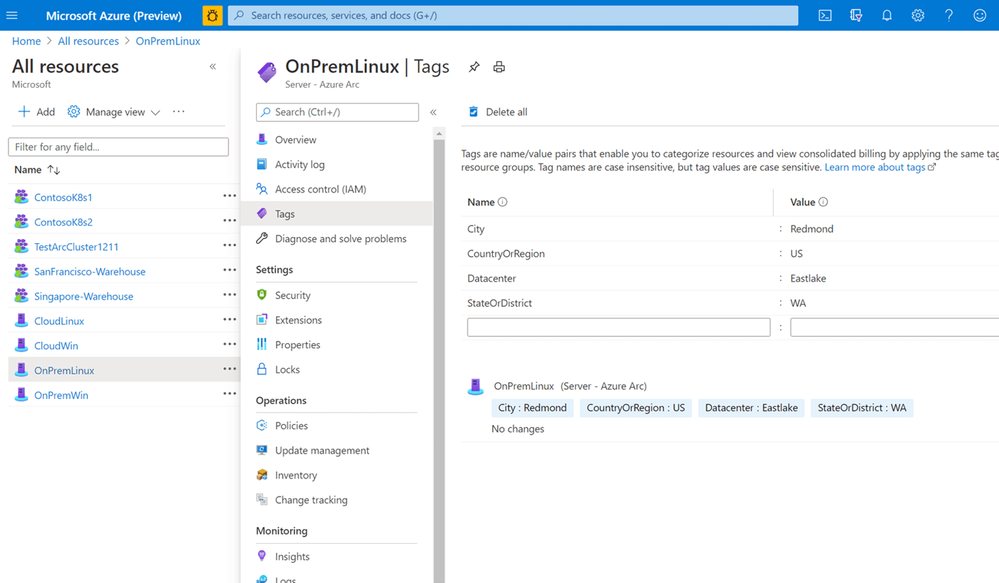

Organization and Inventory

The single control plane using Azure Resource Manager lets you organize and inventory assets through various Azure scopes, such as management groups, subscriptions, resource groups, and tags.

Azure Arc Tagging


Azure Resource Graph

Establish central visibility in the Azure portal and enable multi-environment search with Azure Resource Graph. This allows you to run queries against Azure Resource Graph and get a centralized view of all your resources running in Azure and outside of Azure.

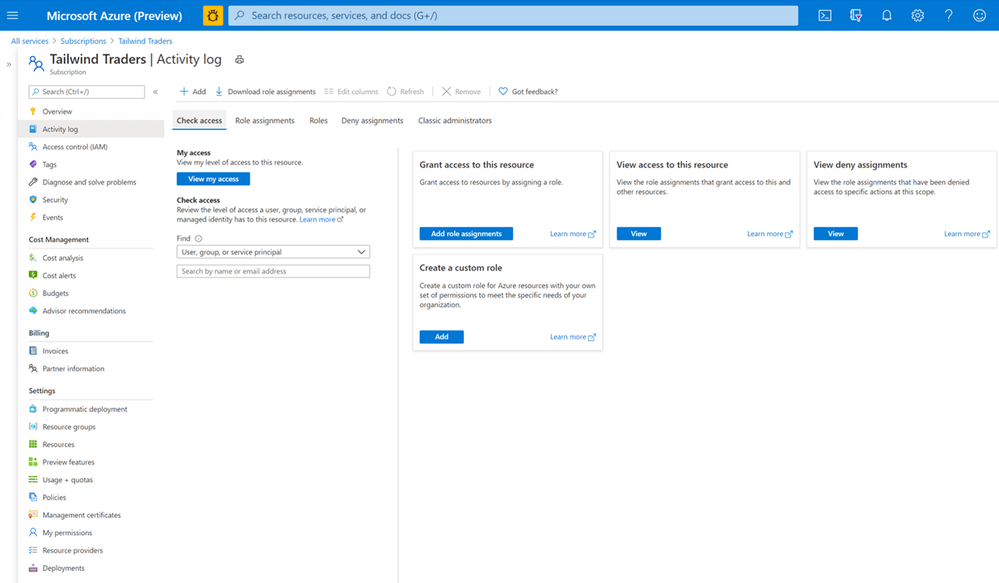

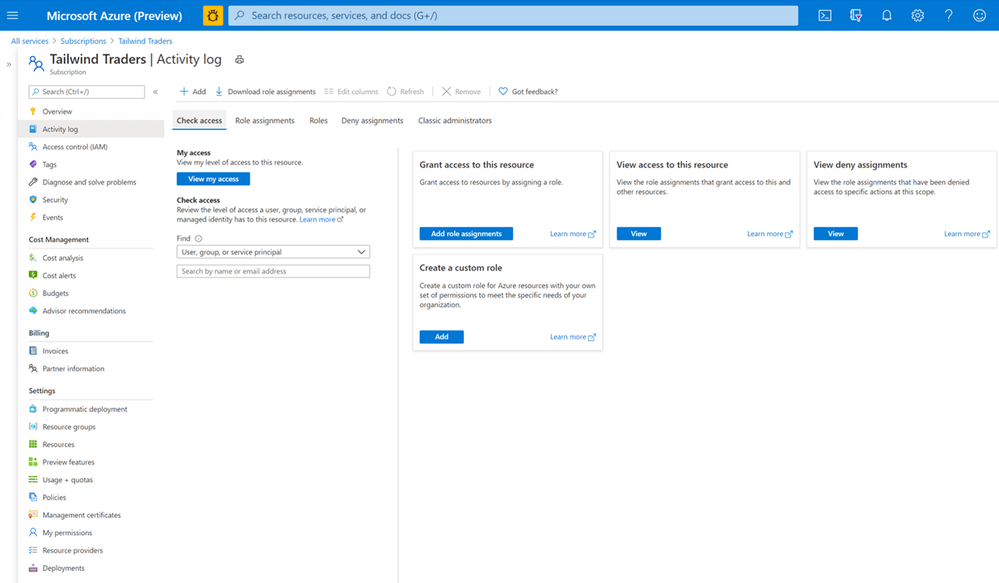

Manage Access

As a Cloud Solutions Architect, you want to make sure that only people who need access can access these systems. You can delegate access and manage security policies for resources using role-based access control (RBAC) in Azure. With Azure Arc enabled servers, we are seeing customers remove local access for administrators and only give them access to the system in the Azure portal using Azure Arc and Azure management services. If you run in multiple environments and tenants, Azure Arc also integrates with Azure Lighthouse, which is especially interesting for managed services providers.

Role-based Access Control


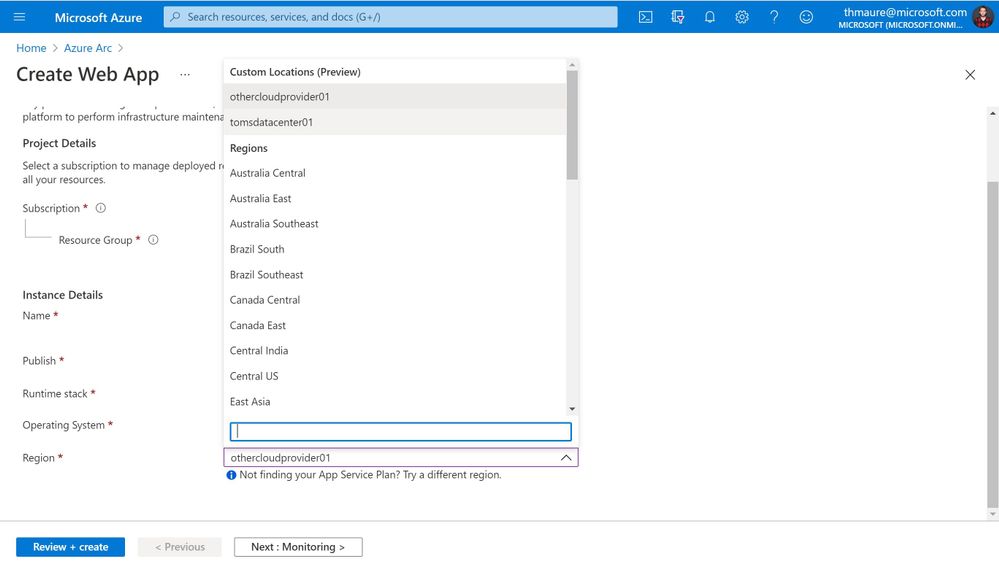

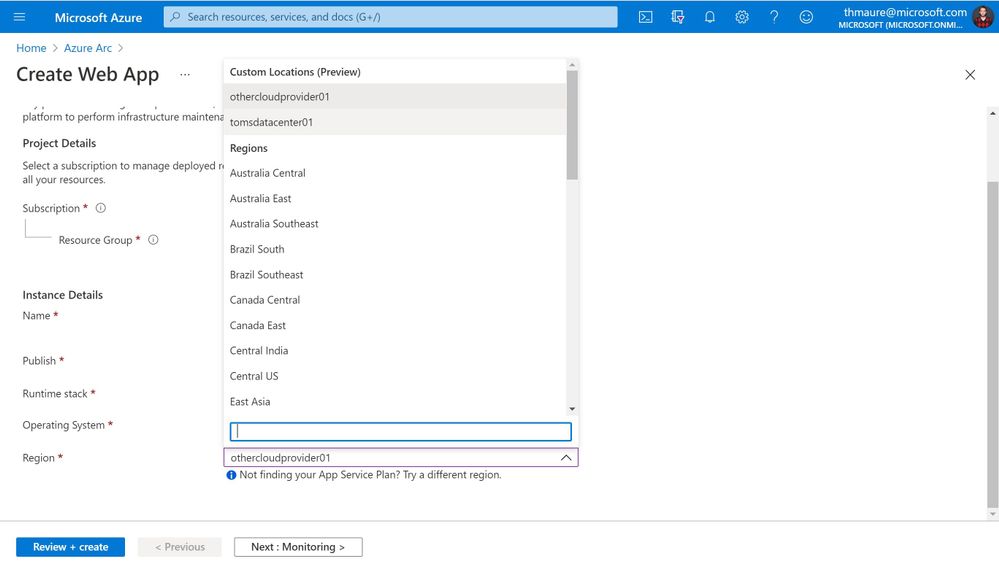

Enable your custom deployment locations

Azure Arc enables you to create custom locations, so you can use the Azure Resource Manager not just to deploy to Azure Regions but also to your own custom locations. You can learn more about custom locations on Microsoft Docs.

Azure Regions and custom locations


Run cloud-native apps on Azure PaaS anywhere

Azure Arc allows you to deploy Azure application services such as Azure App Service, Functions, Logic Apps, Event Grid, and API Management anywhere, on-premises, edge locations, or any other cloud provider. This is great if you are building and running cloud-native applications on Azure PaaS services and want them to run outside of Azure without rearchitecting them.

These are the new Azure Arc-enabled Application services announced at Microsoft Build 2021. These allow you to run Azure PaaS services on-premises and at other cloud providers.

- Azure App Service makes building and managing web applications and APIs easy with a fully managed platform and features like autoscaling, deployment slots, and integrated web authentication.

- Azure Functions makes event-driven programming simple, with state-of-the-art autoscaling, and triggers and bindings to integrate with other Azure services.

- Azure Logic Apps produces automated workflows for integrating apps, data, services, and backend systems with a library of more than 400 connectors.

- Azure Event Grid simplifies event-based applications with a single service for managing the routing of events from any source to any destination.

- Azure API Management provides a unified management experience and full observability across all internal and external APIs.

Create App Service and select a custom location


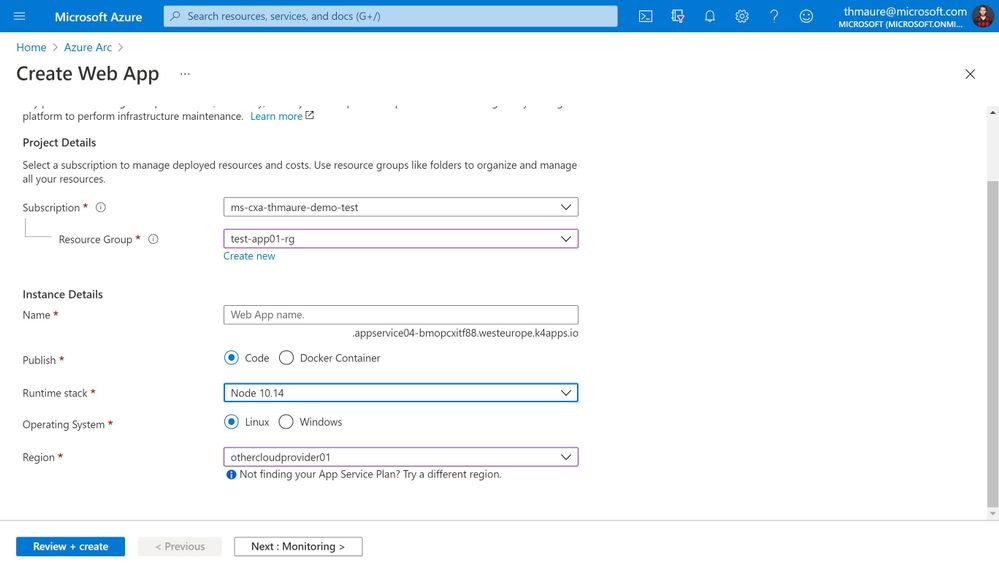

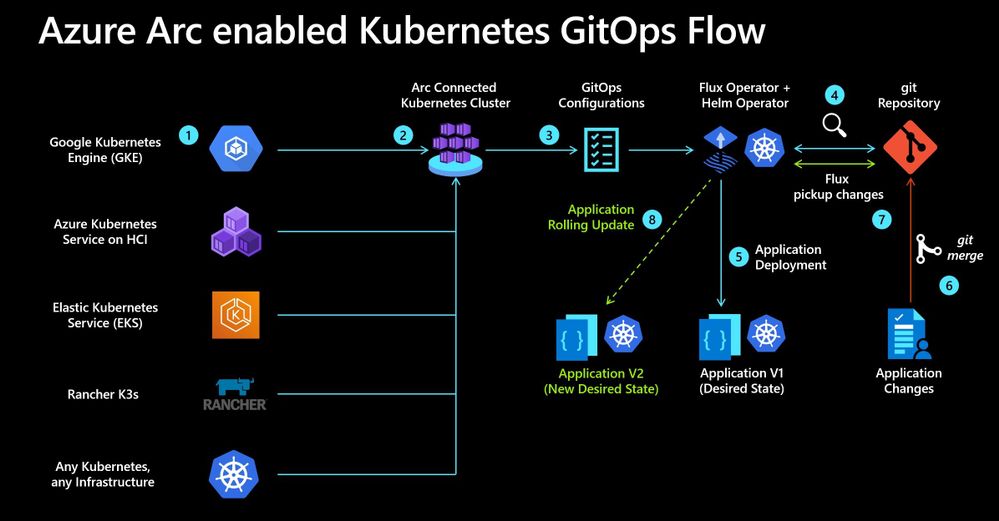

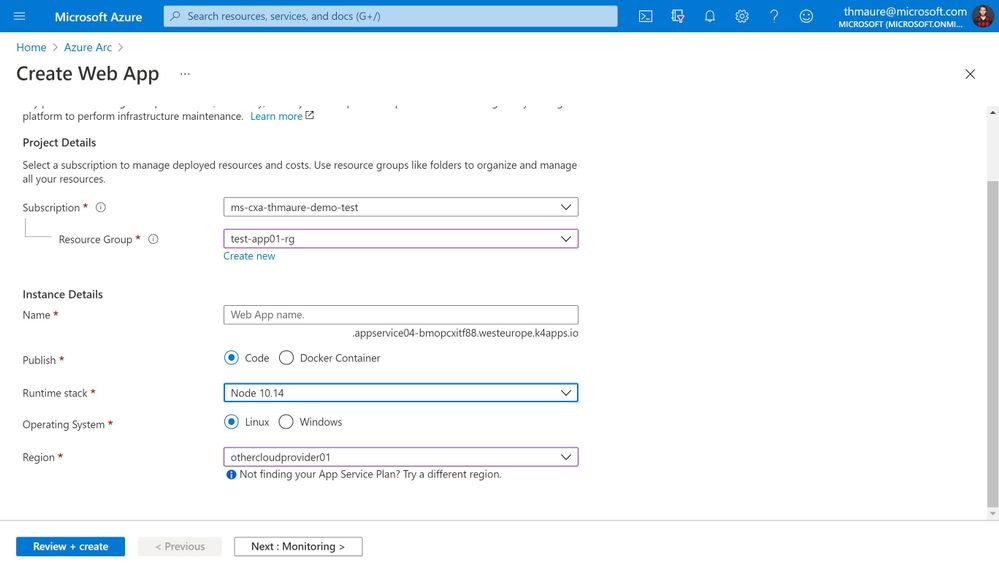

Azure Arc enabled Data Services

Next to Azure Application services to run services like Web Apps and Logic Apps, you also want to leverage data services and databases. With Azure Arc enabled Data services you can run services like Azure SQL Managed Instances anywhere.

The application services can be combined with the Azure Arc enabled Data services, which include:

- Azure Arc enabled Azure SQL Managed Instance – Azure Arc enabled SQL Managed Instance has near 100% compatibility with the latest SQL Server database engine, and enables existing SQL Server customers to lift and shift their applications to Azure Arc data services with minimal application and database changes while maintaining data sovereignty. At the same time, SQL Managed Instance includes built-in management capabilities that drastically reduce management overhead.

- Azure Arc enabled Azure PostgreSQL Hyperscale – This is the hyperscale form factor of the Postgres database engine that is available with Azure Arc enabled data services. It is also powered by the Citus extension that enables the hyperscale experience. In this form factor, our customers provide the infrastructure that hosts the systems and operate them.

Azure Arc enabled data services


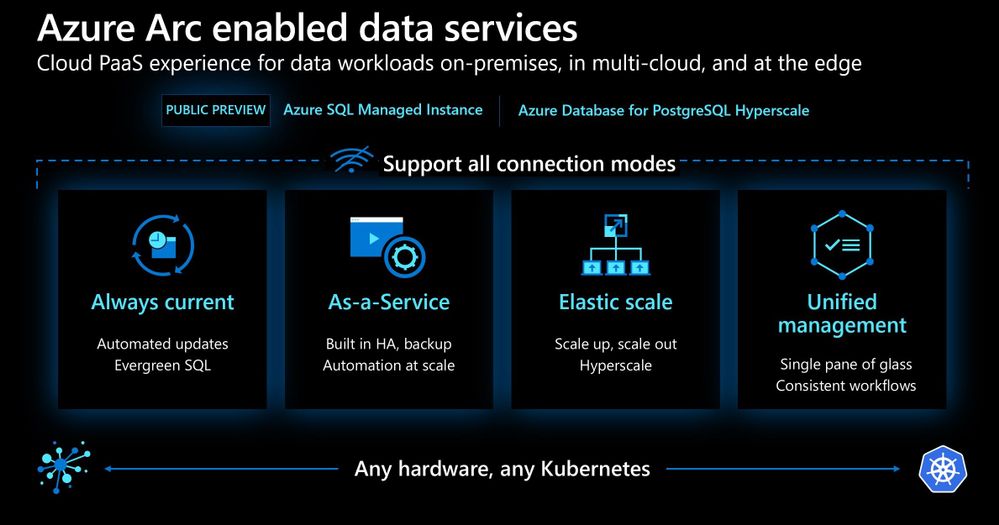

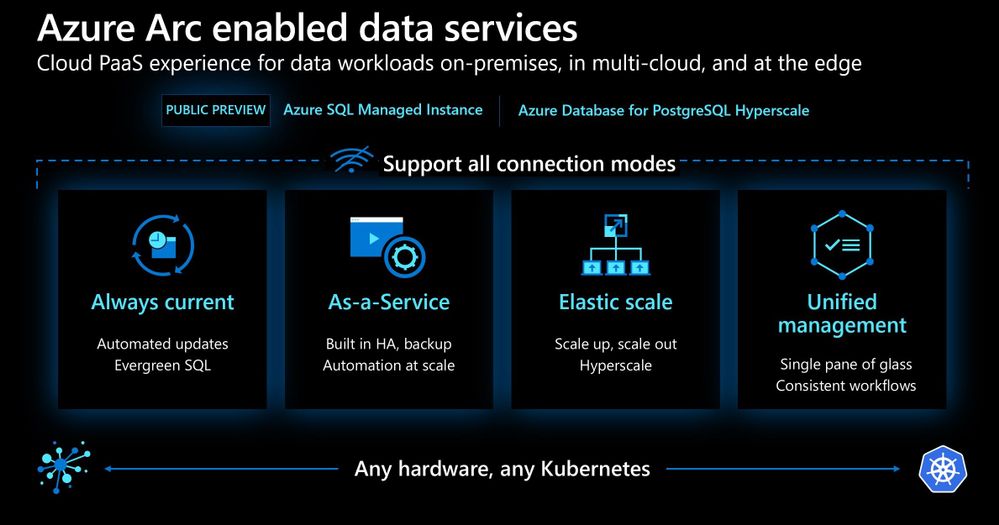

CI/CD workflow using GitOps – Azure Arc enabled Kubernetes

Azure Arc brings DevOps practices anywhere. Modern Kubernetes deployments house multiple applications, clusters, and environments. With GitOps, you can manage these complex setups more easily, tracking the desired state of the Kubernetes environments declaratively with Git. Using common Git tooling to track cluster state, you can increase accountability, facilitate fault investigation, and enable automation to manage environments.

Azure Arc enabled Kubernetes GitOps Flow


Deploy and run Azure Kubernetes Services (AKS) on-premises on Azure Stack HCI

With Azure Arc and Azure Stack HCI, you can run the Azure Kubernetes Services (AKS) on-premises in your own datacenter or edge location on top of Azure Stack HCI. This AKS cluster can be Azure Arc enabled, to allow management and deployment of applications to your Kubernetes clusters. You can learn more on Microsoft Docs.

Run Machine Learning anywhere

Azure Arc enabled machine learning lets you configure and use Azure Arc enabled Kubernetes clusters to train and manage machine learning models in Azure Machine Learning.

Azure Arc enabled machine learning supports the following training scenarios:

- Train models with 2.0 CLI

- Distributed training

- Hyperparameter sweeping

- Train models with Azure Machine Learning Python SDK

- Build and use machine learning pipelines

- Train model on-premise with outbound proxy server

- Train model on-premise with NFS datastore

Learn more on Microsoft Docs.

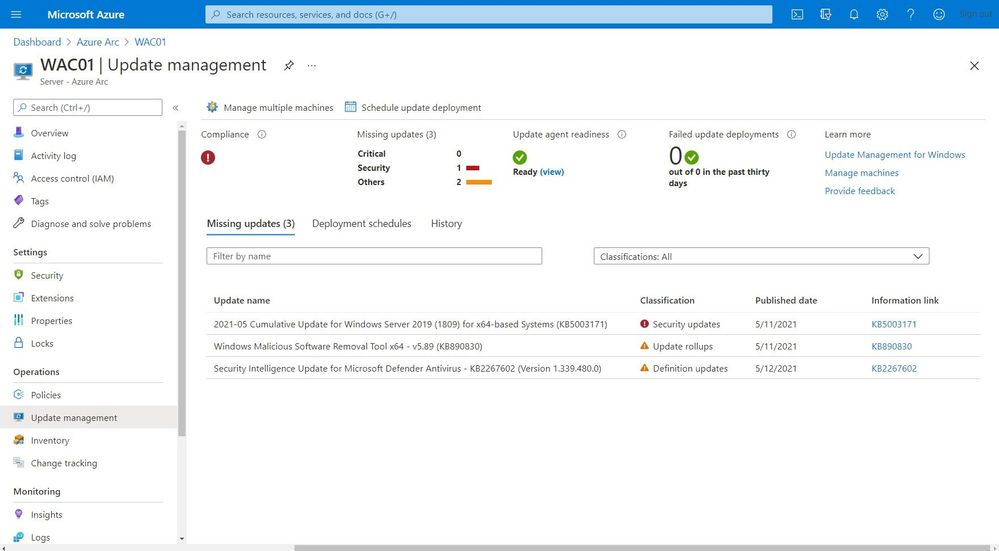

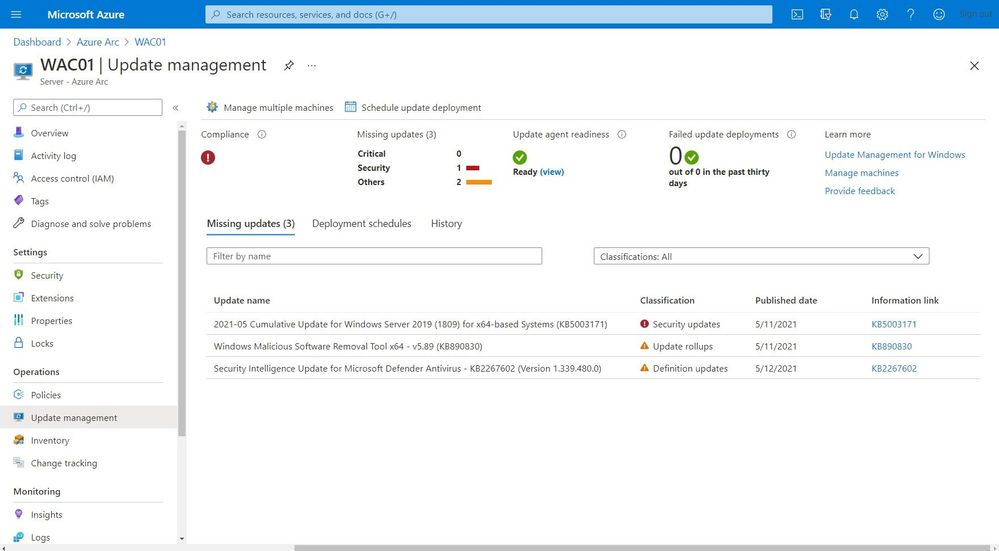

Update Management

As a Cloud Solutions Architect, one of your jobs is to make sure that all the systems have the latest updates and patches installed to protect against vulnerabilities. Customers often spend hours orchestrating or deploying patches, or building automation for their patch management. With Update Management, you can manage operating system updates for your Windows and Linux servers. In addition, it allows you to schedule and automate patching for your servers.

Update Management


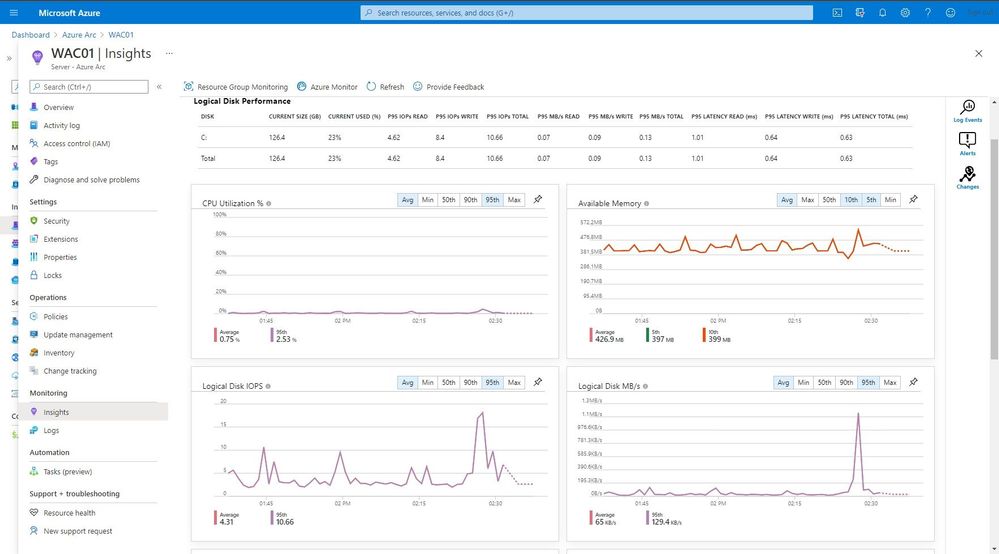

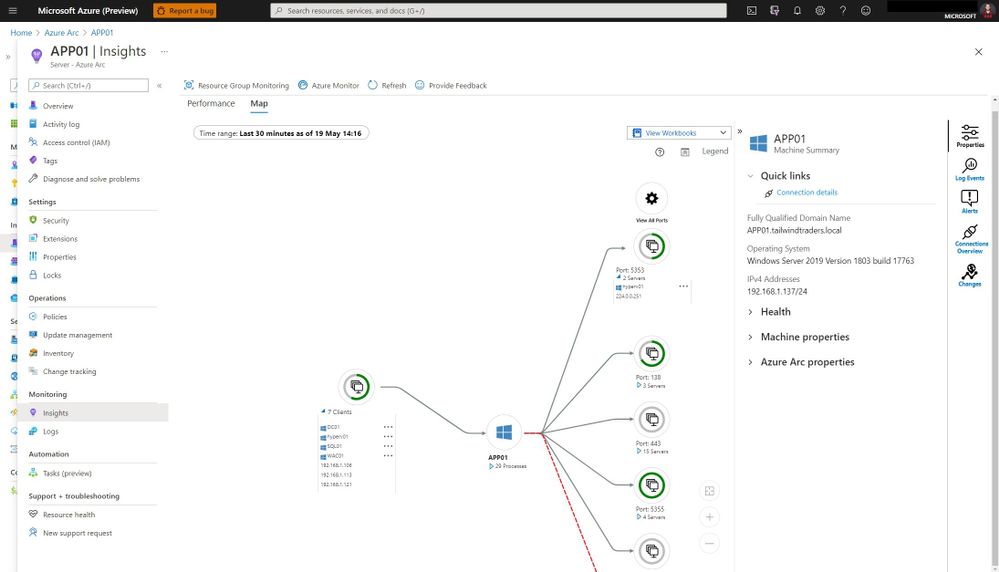

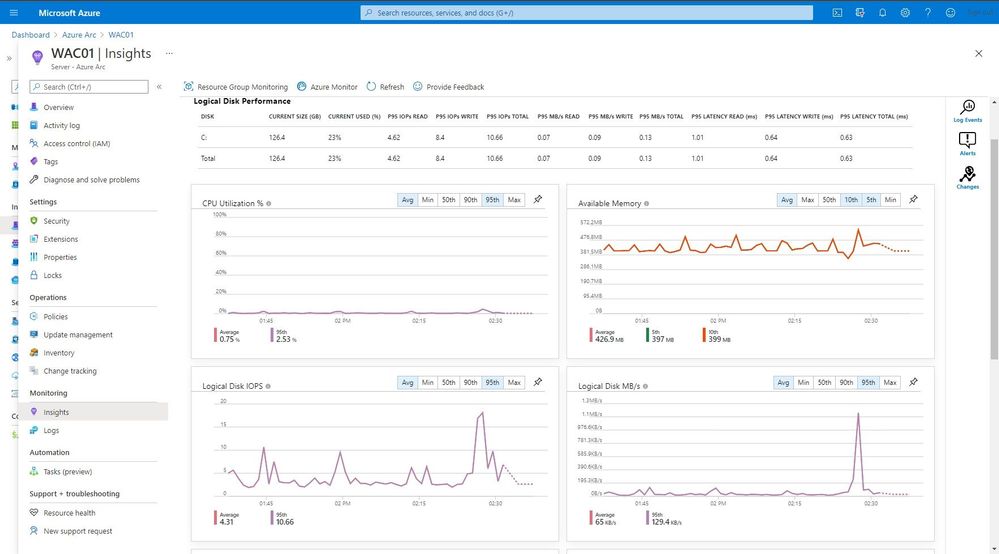

Monitoring

You do not just want to manage your systems; you also want to monitor them and make sure that you get alerted if anything happens that disrupts your environment and applications. You can monitor your Kubernetes clusters and containers, as well as Linux and Windows servers. Using VM insights, Azure Monitor lets you monitor guest operating system performance and discover application components, so you can track their processes and their dependencies on other resources the application communicates with.

Monitoring


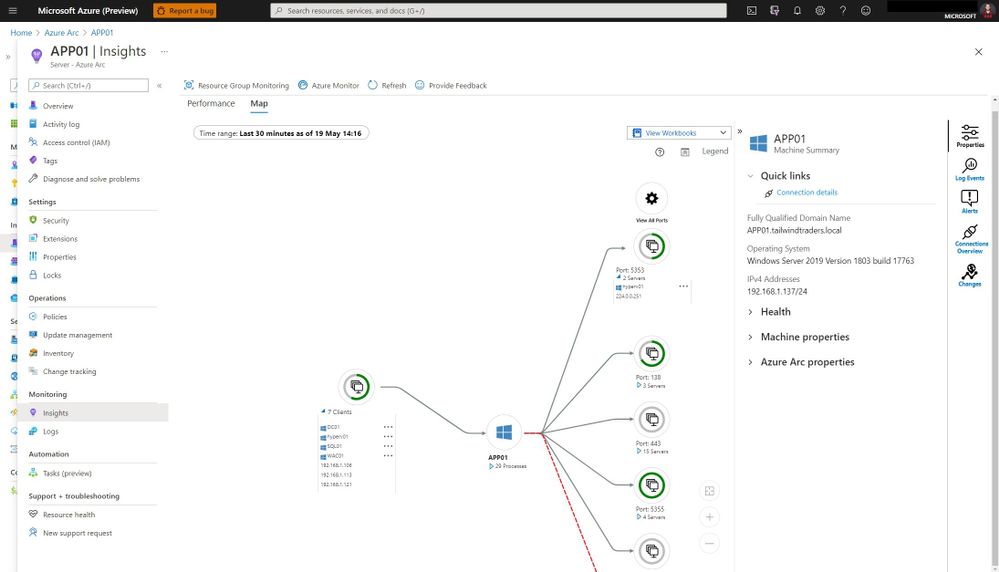

One of the great features in Azure Monitor which can help Cloud Solutions Architects is the Microsoft Dependency agent. This provides you with information about the incoming and outgoing connections to a specific server.

Azure Monitor Map


Log collection and analytics

Log collection and analytics can be very helpful to a Cloud Solutions Architect in many ways. With Azure Log Analytics, you can collect, sort, filter, and analyze your logs centrally. It allows Security Engineers to get a central view of all the security logs of the systems they manage. These logs can also be used for threat hunting using Azure Sentinel.

Microsoft Azure Sentinel is a scalable, cloud-native, security information event management (SIEM) and security orchestration automated response (SOAR) solution. Azure Sentinel delivers intelligent security analytics and threat intelligence across the enterprise, providing a single solution for alert detection, threat visibility, proactive hunting, and threat response.

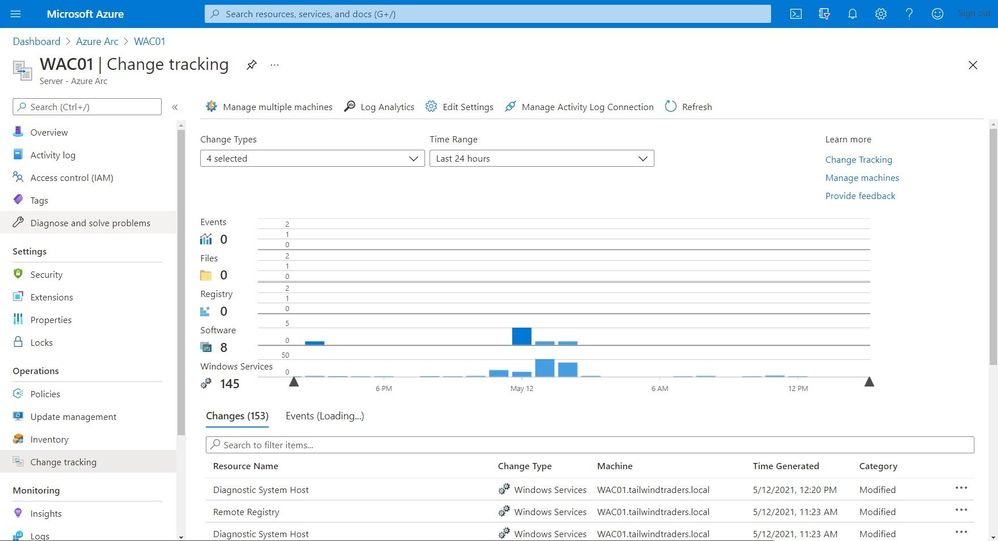

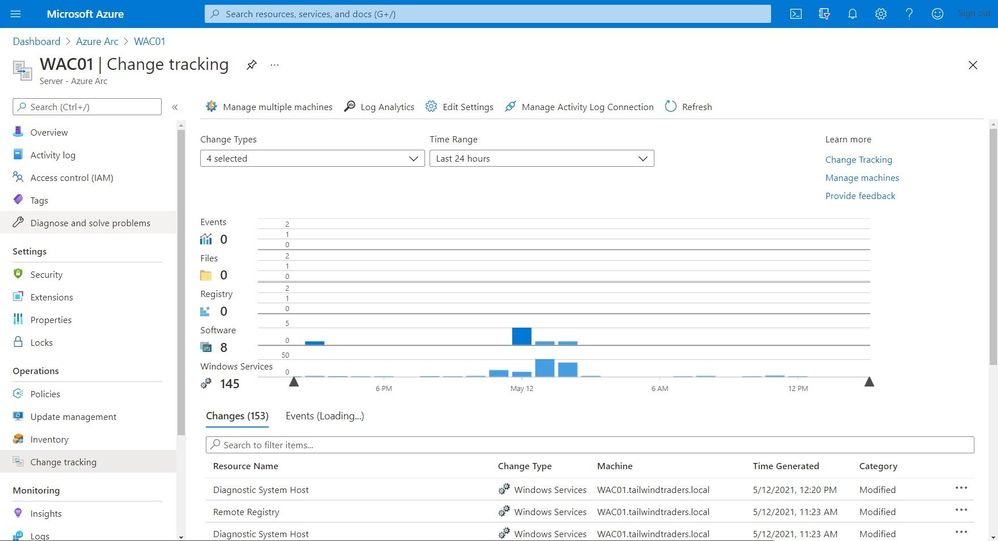

Change Tracking and Inventory

With change tracking and inventory, you can get an overview of the changes happening in your environment and get an inventory of software installed on your Windows and Linux servers.

Change Tracking and Inventory


Certificate Management

You might have managed certificates on your servers using Active Directory and Group Policies for your local environment. In hybrid cloud or multicloud environments, servers are often not even domain joined. That can make managing certificates a challenge. With a combination of the Azure AD Managed Identity assigned by the Azure Arc agent and Azure Key Vault, you can easily and securely deploy and manage certificates on your Windows and Linux servers.

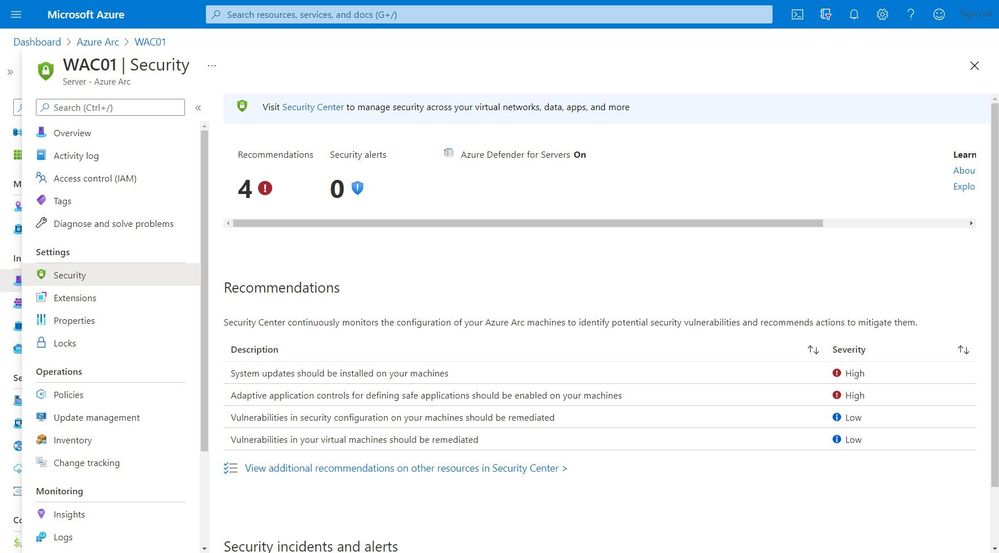

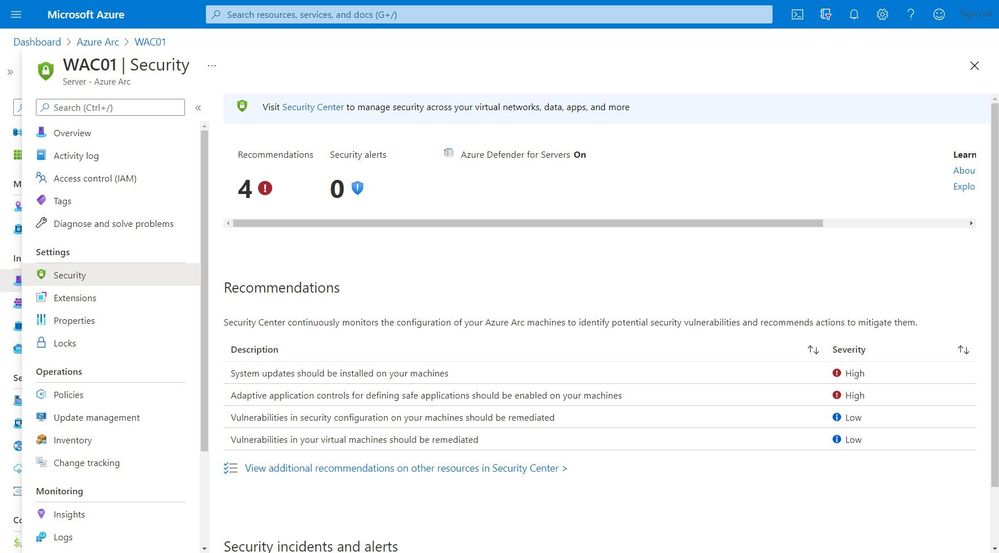

Security Center

Making sure that your servers and Kubernetes clusters are secured is often a challenging task, especially in a hybrid or multicloud environment. With Azure Security Center, you get threat detection and can proactively monitor for potential security threats to your Azure Arc resources. It allows you to deploy Azure Defender for servers and Azure Defender for Kubernetes to your hybrid and multicloud resources.

Security Center


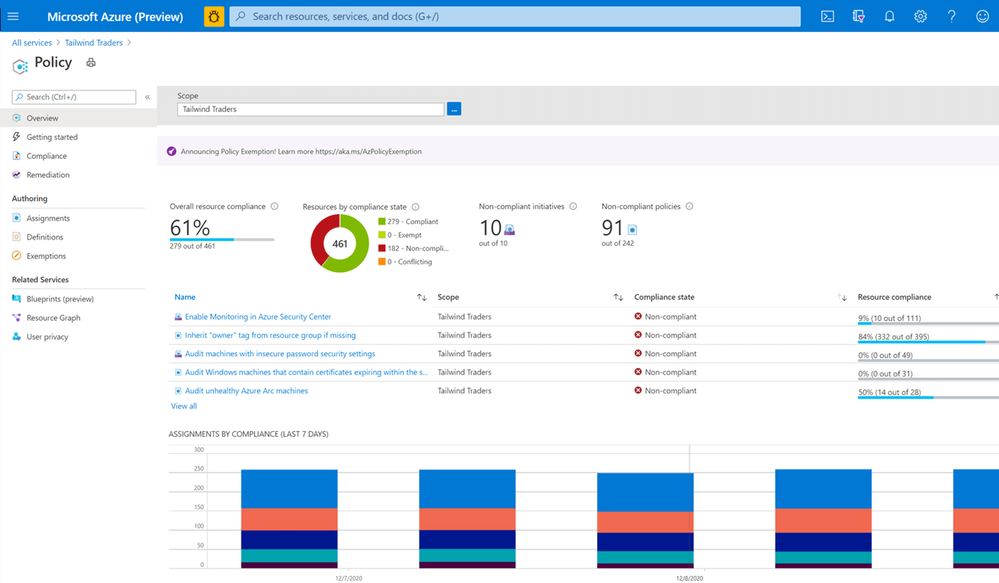

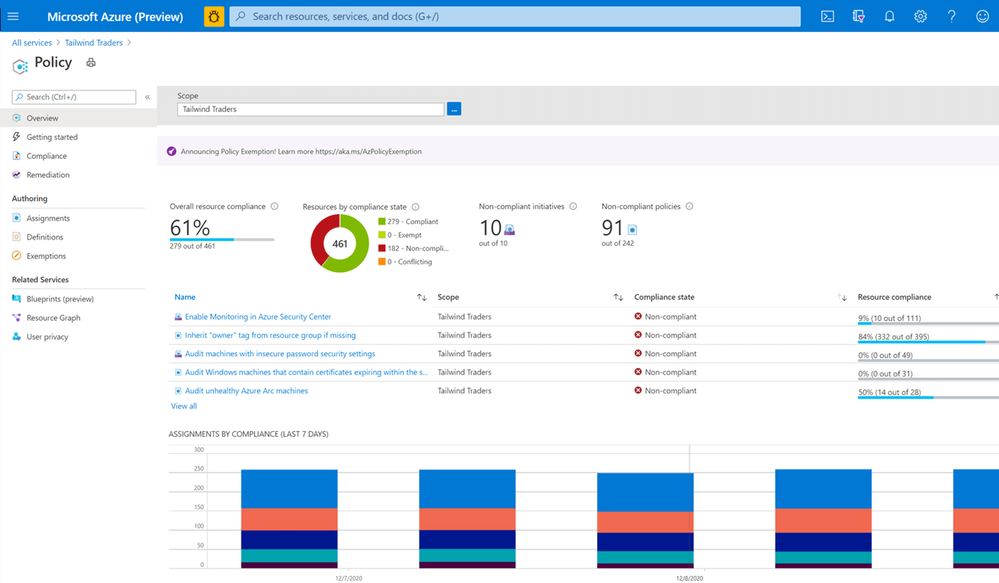

Get compliance state

As a Cloud Solutions Architect, you want to know if your servers or Kubernetes clusters are compliant with company policies. You may even be in charge of making sure that all your systems are configured correctly and securely. This is where Azure Policy Guest Configuration on your Azure Arc enabled servers can help you make sure that everything is compliant.

Azure Policy


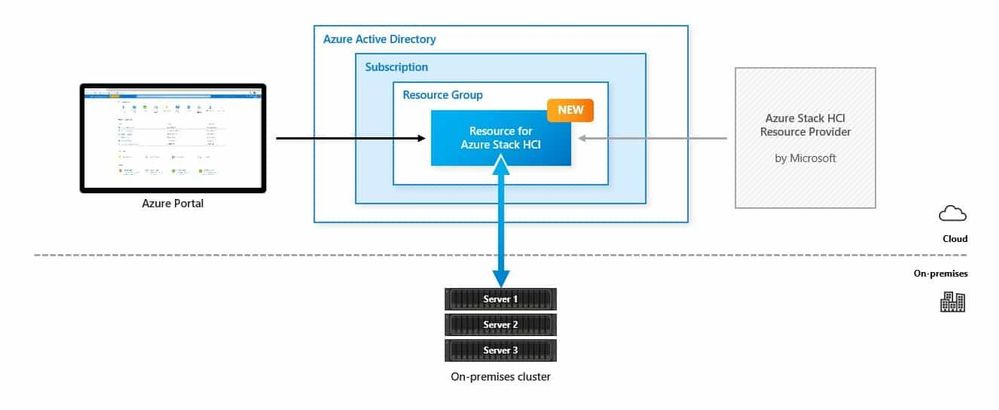

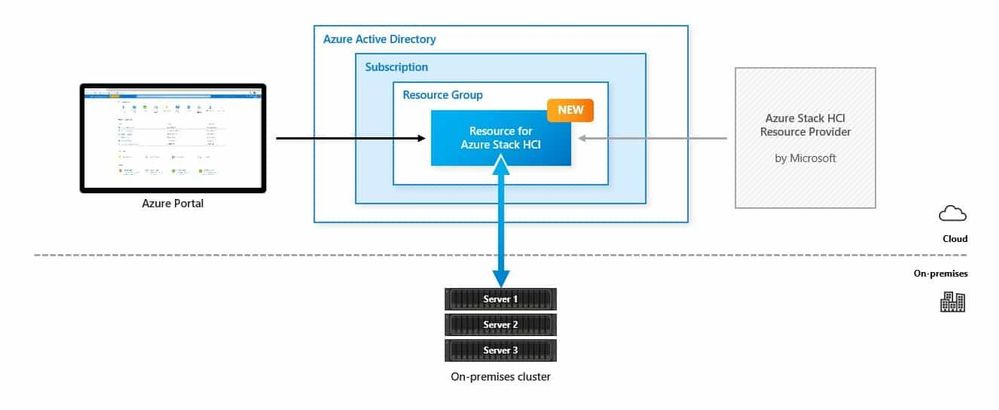

Manage your Azure Stack HCI

Azure Stack HCI is a new hyperconverged infrastructure (HCI) operating system delivered as an Azure service that provides the latest security, performance, and feature updates. Azure Stack HCI has Azure Arc built in and can be managed through the Azure portal.

Azure Stack HCI Native Integration into Microsoft Azure

Next steps

To learn more about Arc enabled servers, see the following overview.

To learn more about Arc enabled Kubernetes, see the following overview.

To learn more about Arc enabled data services, see the following overview.

Experience Arc enabled services with the Jumpstart proof of concept.

Also, check out my video on how to manage your hybrid cloud using Azure Arc on Microsoft Channel 9.

https://channel9.msdn.com/Shows/IT-Ops-Talk/Manage-your-hybrid-cloud-environment-using-Azure-Arc/player?WT.mc_id=modinfra-23500-thmaure

Conclusion

Azure Arc enables Cloud Solutions Architects and others to build hybrid and multicloud solutions and, with the right tooling, to manage and operate hybrid and multicloud resources such as Windows and Linux servers, Kubernetes clusters, and other resources. If you have any questions, feel free to leave a comment below.


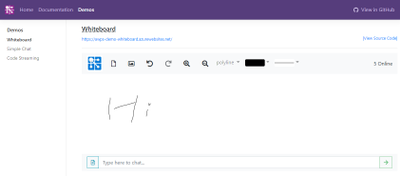

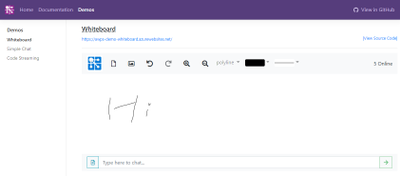

The COVID-19 pandemic has led to unprecedented measures and is also changing the way we work. In addition to restricting travel and canceling large events, a growing number of companies are encouraging employees to work remotely. To keep working efficiently and creating value under these new circumstances, organizations need to adopt applications for different scenarios, such as web conferencing and remote collaboration. A real-time whiteboard is one of the tools that can help you build remote collaboration and bring your teams together, anytime and anywhere: running productive and engaging online meetings and workshops, developing ideas with distributed teams, or explaining complex processes and systems with a visual map or diagram. Azure Web PubSub (AWPS), a fully managed service, can help you build a real-time whiteboard application. Let’s learn how to build a whiteboard demo together.

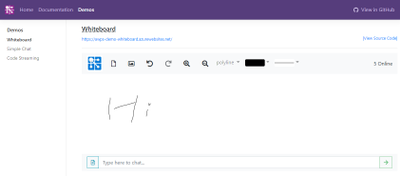

What’s the whiteboard demo?

This whiteboard demo demonstrates how to build a web application for real-time collaboration using Azure and other related technologies. The fundamental feature of this application is that anyone can paint on the whiteboard and have their painting synchronized to others in real time. Users can paint with the basic paint tool, by touch on mobile devices, or by uploading images.

Before we dig into the details, you can first play with this demo online. Open this site and enter your username, then draw anything you like on the whiteboard. Open another browser window and you’ll see your changes synchronized in real time.

You can also find the source code of this demo here.

Synchronize real-time data between client applications

One of the key features of this whiteboard is its ability to synchronize the drawing between multiple client apps in real time. This is implemented with WebSocket, a technology commonly used in web applications for bidirectional communication. If you’re already familiar with WebSocket and look at the implementation in server.js, you’ll notice that it’s very different from a typical WebSocket server. In a typical WebSocket application, the server needs to manage all client connections and handle data transfer between clients. In a whiteboard app, that means the server receives the drawing from one client and broadcasts it to all other clients. This generates a lot of traffic when everyone is drawing, and processing all that data is a big burden on the server.
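To make that cost concrete, here is a minimal sketch of the fan-out loop a self-hosted WebSocket server would have to run. It is not taken from the demo: `broadcast`, `makeFakeClient`, and the client objects with a `send` method are hypothetical stand-ins for real connections.

```javascript
// Hypothetical sketch: the fan-out a self-hosted WebSocket server performs.
// Each "client" only needs a send(text) method, so plain objects work here.
function broadcast(clients, sender, message) {
  const payload = JSON.stringify(message);
  let sent = 0;
  for (const client of clients) {
    if (client === sender) continue; // do not echo back to the author
    client.send(payload);
    sent += 1;
  }
  return sent; // outgoing messages caused by one incoming message
}

// In-memory stand-in for a connection, used only to exercise the sketch.
function makeFakeClient() {
  const received = [];
  return { received, send: (text) => received.push(text) };
}
```

With N connected clients, every incoming update causes N - 1 sends, so the server's outgoing traffic grows quickly when many users draw at the same time.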

If you look at our whiteboard server, you’ll see it’s a standard express.js server application (we don’t go through the details of how to use express.js here; you can refer to its official docs to learn more). Instead of having code to handle WebSocket connections, it creates a WebPubSubEventHandler and uses it as middleware in the express app.

    let handler = new WebPubSubEventHandler(hubName, ['*'], {
      path: '/eventhandler',
      handleConnect: ...,
      onConnected: ...,
      onDisconnected: ...,
      handleUserEvent: ...
    });
    app.use(handler.getMiddleware());

By using this Web PubSub event handler, we’re leveraging the Azure Web PubSub service to manage the client connections for us.

The event handler has callbacks like onConnected and onDisconnected, which are similar to the open and close events in WebSocket. The key difference is that with Azure Web PubSub, the client connects to the service. Your server just gets a notification when this happens and does not need to manage the lifetime of the connection. Managing connections yourself is usually challenging in real scenarios, where you need to handle things like connection routing and load balancing. With Azure Web PubSub, the service takes care of all of that.

You’ll also notice that the server has no code to pass data from one client to another. This is done purely on the client side. If you look at the client, you’ll see code like this:

    this._webSocket.send(JSON.stringify({
      type: 'sendToGroup',
      group: group,
      dataType: 'json',
      data: data
    }));

This code sends a message over the WebSocket connection, but there is no code on the server side to handle it. That is because the message is processed by the service. Azure Web PubSub understands the message from the client (in this case, a request to send the message to a group) and delivers it to the corresponding clients. This is called the publish/subscribe pattern. By offloading WebSocket connections to Azure Web PubSub, you can save a lot of server resources, such as CPU and network bandwidth.
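The client message above can be wrapped in small helper functions. This is only a sketch: the helper names are hypothetical, and the `joinGroup` frame and the optional `ackId` field are assumptions based on the `json.webpubsub.azure.v1` subprotocol rather than something shown in the demo.

```javascript
// Hypothetical helpers that build client-to-service frames as JSON text.
// sendToGroup mirrors the message shown above; joinGroup and ackId are
// assumptions based on the json.webpubsub.azure.v1 subprotocol.
function joinGroupFrame(group, ackId) {
  // JSON.stringify drops ackId when it is undefined.
  return JSON.stringify({ type: 'joinGroup', group, ackId });
}

function sendToGroupFrame(group, data, ackId) {
  return JSON.stringify({
    type: 'sendToGroup',
    group,
    dataType: 'json',
    data,
    ackId,
  });
}
```

A client would typically send `joinGroupFrame('draw')` once after connecting so that it receives what others publish, and then call `sendToGroupFrame('draw', update)` for each drawing update.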

Maintain state at server side

Even though Azure Web PubSub helps us deliver real-time updates between clients, each client still needs to send its drawing data to the server so it can be saved there. That way, when a new user opens the whiteboard, they can see everything others have drawn before.

This is done by sending an event from the client to the server. Events are another communication pattern in Azure Web PubSub (compared to the publish/subscribe pattern we use for real-time updates) that lets clients send data to the server.

In client, there is code like this:

    this._webSocket.send(JSON.stringify({
      type: 'event',
      event: 'message',
      dataType: 'json',
      data: data
    }));

This code sends an event to server and there is corresponding server code to handle it:

    let handler = new WebPubSubEventHandler(hubName, ['*'], {
      path: '/eventhandler',
      ...
      handleUserEvent: async (req, res) => {
        let message = req.data;
        switch (message.name) {
          case 'addShape': ...
          case 'removeShape': ...
          case 'clear': ...
        }
        res.success();
      }
    });

The code above handles three types of events (when a user adds a new shape, removes an existing shape, or clears the whiteboard) and saves the data to a local diagram object. This is for demo purposes; in a real application you should use persistent storage for the diagram.

There is still data communication between client and server. However, compared to the real-time updates between clients (which happen whenever a user moves their mouse on the screen), this only happens when a user finishes drawing a shape, so the amount of data is much smaller.
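As an illustration of what the three elided cases might do, here is a minimal in-memory sketch. It is not the demo's implementation: `createDiagram`, `applyMessage`, and the message fields `data.id` and `data.shape` are assumptions made for this example.

```javascript
// Hypothetical in-memory diagram, standing in for the demo's local object.
// The message fields (name, data.id, data.shape) are assumptions.
function createDiagram() {
  return { shapes: {} };
}

function applyMessage(diagram, message) {
  switch (message.name) {
    case 'addShape':
      diagram.shapes[message.data.id] = message.data.shape;
      break;
    case 'removeShape':
      delete diagram.shapes[message.data.id];
      break;
    case 'clear':
      diagram.shapes = {};
      break;
    default:
      return false; // unknown event: leave the diagram untouched
  }
  return true;
}
```

Keeping the state change in a plain function like this makes it easy to replace the in-memory object with persistent storage later without touching the event handler.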

Besides user events, Azure Web PubSub also supports system events like connected and disconnected, so the server can know the status of client connections. In the server code, they’re used to track the total number of clients online.
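A sketch of such a counter is shown below. It is an assumption about how the tracking could be done, not the demo's code: `createPresence` is a hypothetical helper, and it assumes each notification carries a unique connection ID.

```javascript
// Hypothetical connection tracker driven by connected/disconnected events.
// Assumes every notification carries a unique connection ID.
function createPresence() {
  const connections = new Set();
  return {
    onConnected(connectionId) {
      connections.add(connectionId);
      return connections.size;
    },
    onDisconnected(connectionId) {
      connections.delete(connectionId);
      return connections.size;
    },
    count() {
      return connections.size;
    },
  };
}
```

Storing IDs in a set rather than incrementing a number means a repeated notification for the same connection does not skew the count.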

Use WebSocket API in client

Azure Web PubSub uses the WebSocket API as its client programming interface. As long as your programming language supports WebSocket, you don’t need to install any third-party library. You have already seen how to send messages through WebSocket in the previous sections, but there are a few more things you need to be aware of before using it.

Authenticate with service

Azure Web PubSub doesn’t support anonymous connections, so to connect to the service each client needs to authenticate with a JWT token. The Azure Web PubSub SDK provides an API to generate the token from the connection string. A recommended implementation is to expose a Web API (usually called negotiate) on the server that returns this token (the API itself can be protected by your own authentication mechanism). In the demo app it’s implemented like this:

app.get('/negotiate', async (req, res) => {

let token = await serviceClient.getAuthenticationToken({

roles: ['webpubsub.sendToGroup.draw']

});

res.json({

url: token.url

});

})

In the negotiate API you can also control the client’s permissions (such as which groups it can send messages to).
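
As a sketch of how those permissions might be assembled, the helper below builds a `roles` array per group. The role strings follow the same `webpubsub.<permission>.<group>` pattern as the one in the negotiate code above; the helper itself and its `canJoin` and `canPublish` options are assumptions for this example.

```javascript
// Build the roles array passed when generating a client token.
// `canJoin` and `canPublish` are hypothetical option names for this sketch.
function rolesForGroup(group, { canJoin = false, canPublish = false } = {}) {
  const roles = [];
  if (canJoin) roles.push(`webpubsub.joinLeaveGroup.${group}`);
  if (canPublish) roles.push(`webpubsub.sendToGroup.${group}`);
  return roles;
}

// A client that may only publish to the 'draw' group, as in the demo.
console.log(rolesForGroup('draw', { canPublish: true }));
// A client with no group permissions at all.
console.log(rolesForGroup('draw'));
```

A client without a role for a group cannot publish to it directly, so the negotiate endpoint is a natural place to decide this per user.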

Create connection

Creating a connection is really simple, but you need to specify a subprotocol if you want to send messages directly between clients.

let res = await fetch('/negotiate');

let url = (await res.json()).url;

let ws = new WebSocket(url, 'json.webpubsub.azure.v1');

With the json.webpubsub.azure.v1 subprotocol, you can join and leave groups and publish messages from the client (more details can be found here).

If you don’t specify a subprotocol you can still connect, but all messages you send will be treated as events and sent to the server.
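
With the subprotocol enabled, frames arriving at the client are JSON envelopes too. The sketch below classifies them by their `type` field; the field names reflect my understanding of the subprotocol and should be checked against the protocol reference, and `describeFrame` is a hypothetical helper.

```javascript
// Classify a raw text frame received over the json.webpubsub.azure.v1 subprotocol.
// Field names are assumptions based on the documented envelope format.
function describeFrame(raw) {
  const msg = JSON.parse(raw);
  switch (msg.type) {
    case 'message':
      // `from` distinguishes group publishes from data sent by the server.
      return msg.from === 'group'
        ? `group ${msg.group}: ${JSON.stringify(msg.data)}`
        : `server: ${JSON.stringify(msg.data)}`;
    case 'system':
      // For example the 'connected' and 'disconnected' events.
      return `system event: ${msg.event}`;
    case 'ack':
      // Response to a request that carried an ackId.
      return `ack ${msg.ackId}: ${msg.success ? 'ok' : 'failed'}`;
    default:
      return `unknown frame type: ${msg.type}`;
  }
}

console.log(describeFrame('{"type":"message","from":"group","group":"draw","dataType":"json","data":{"id":"a"}}'));
console.log(describeFrame('{"type":"system","event":"connected","connectionId":"abc"}'));
```

In a browser this would typically be called from the socket's message callback, for example `ws.onmessage = e => console.log(describeFrame(e.data))`.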

Handle reconnect

It’s very common for a WebSocket connection to drop because of network glitches, long periods of inactivity on the client side, and so on. To improve the stability of the client app, you should always consider reconnecting when the connection drops.

In JavaScript, if a WebSocket connection is closed you need to create a new WebSocket object to reconnect, which means every callback you registered on the old object has to be re-registered on the new one. In this demo we created a simple WebSocketClient class that wraps the raw WebSocket object so it automatically reconnects and re-registers all callbacks. Check out the source code to see how it works.
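
To make the idea concrete, here is a minimal sketch of such a wrapper. It is not the demo's WebSocketClient class: the class name, the injected `createSocket` factory, and the `retryDelayMs` and `schedule` options are all assumptions chosen so the logic can be exercised without a network.

```javascript
// Sketch of a reconnecting wrapper. `createSocket` is an injected factory,
// for example () => new WebSocket(url, 'json.webpubsub.azure.v1').
class ReconnectingClient {
  constructor(createSocket, options = {}) {
    this._createSocket = createSocket;
    this._retryDelayMs = options.retryDelayMs ?? 1000;
    // Wrapped in an arrow function so setTimeout is not called with a foreign `this`.
    this._schedule = options.schedule ?? ((fn, ms) => setTimeout(fn, ms));
    this._handlers = { open: [], message: [], close: [] };
    this._closedByUser = false;
    this._connect();
  }

  // Register a callback once; it is kept across reconnects.
  on(event, handler) {
    this._handlers[event].push(handler);
  }

  send(data) {
    this._socket.send(data);
  }

  // Close for good: no reconnect is scheduled after this.
  close() {
    this._closedByUser = true;
    this._socket.close();
  }

  _emit(event, arg) {
    for (const handler of this._handlers[event]) handler(arg);
  }

  _connect() {
    const socket = this._createSocket();
    this._socket = socket;
    // Every new socket forwards to the same handler lists.
    socket.onopen = (e) => this._emit('open', e);
    socket.onmessage = (e) => this._emit('message', e);
    socket.onclose = (e) => {
      this._emit('close', e);
      if (!this._closedByUser) {
        this._schedule(() => this._connect(), this._retryDelayMs);
      }
    };
  }
}
```

A production client would usually add a growing delay between attempts and fetch a fresh URL from negotiate before each reconnect, since the token embedded in the URL can expire.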

Next Steps

We have now gone through the key points of building the real-time whiteboard application with the Azure Web PubSub service. If you are looking for more details about this demo, you can refer to the whiteboard application on GitHub, where the code is hosted along with information and docs on how to deploy and run it yourself.

If you are trying to build your first real-time application with Azure Web PubSub, you can also find more helpful resources in the getting started content. We are looking forward to your feedback and ideas to help us become better via the Azure Feedback Forum!

by Contributed | Jul 7, 2021 | Technology

py-spy is a sampling profiler for Python programs. It lets you visualize what your Python program is spending time on without restarting the program or modifying the code in any way. py-spy is extremely low overhead: it is written in Rust for speed and doesn’t run in the same process as the profiled Python program. This means py-spy is safe to use against production Python code.

py-spy works on Linux, OSX, Windows and FreeBSD, and supports profiling all recent versions of the CPython interpreter (versions 2.3-2.7 and 3.3-3.9).

This article demonstrates how to use py-spy to run a sampling profiler against a Python application running in Linux App Service.

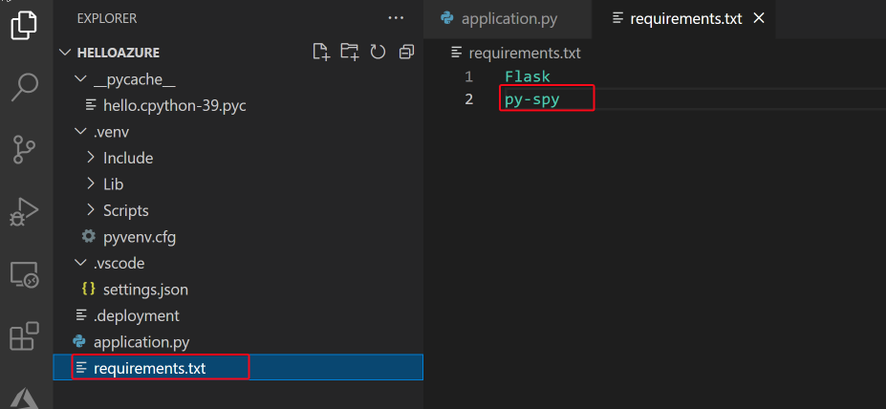

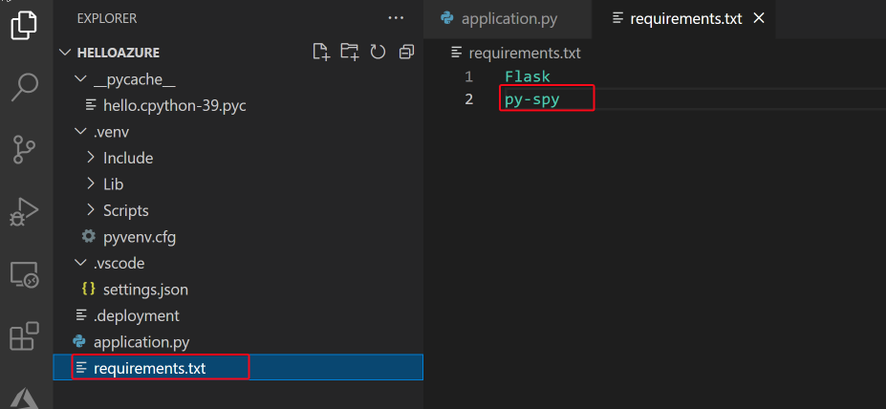

Step 1: Install py-spy for your Python application

In your Python application project, find your requirements.txt file and add “py-spy” to it.

This is the only change we need to make. No other code changes are required.

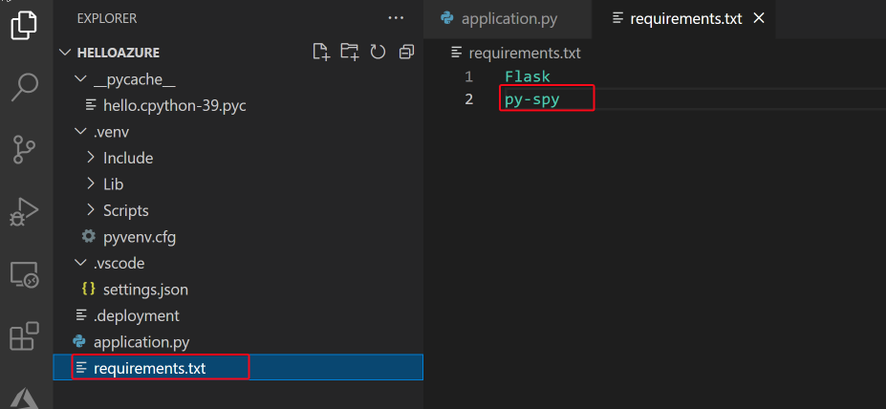

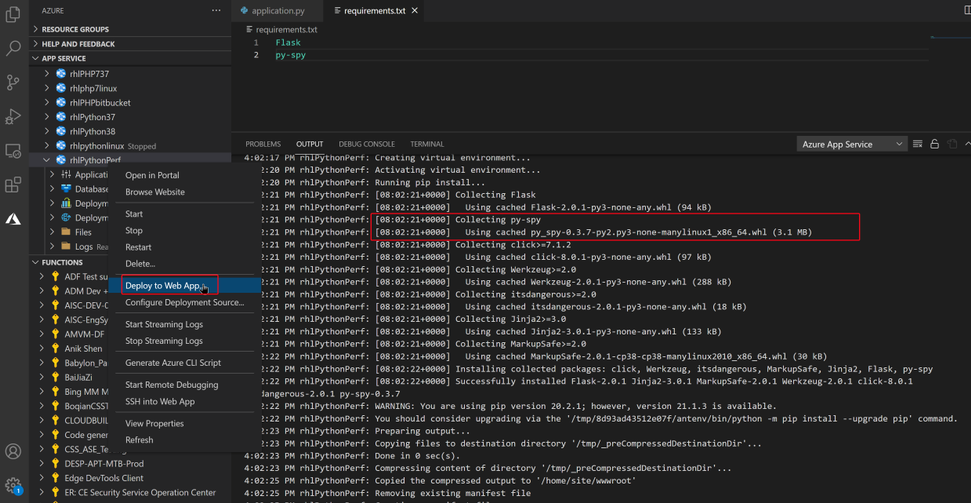

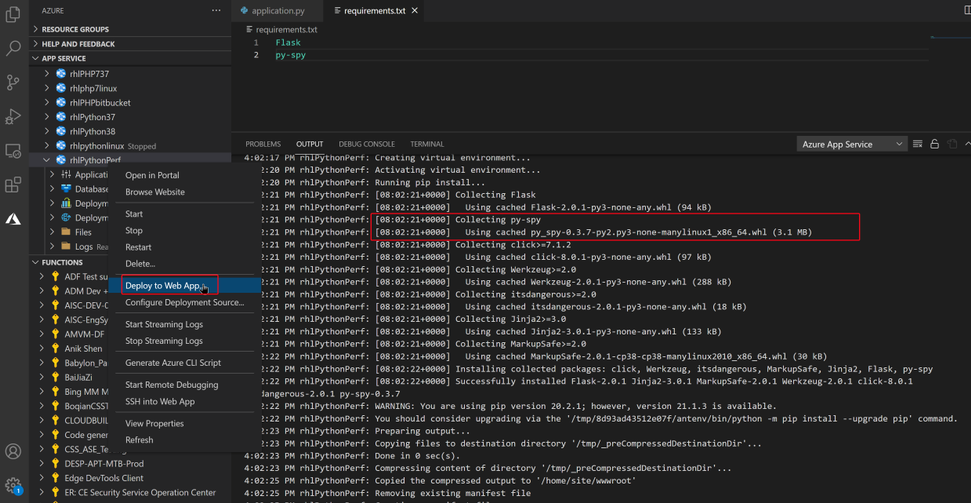

Step 2: Deploy your application to Azure Web App.

When you deploy the code, the Azure Oryx build installs the py-spy package for us.

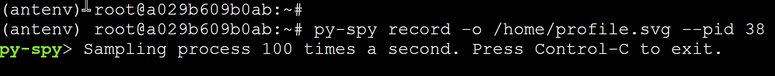

Step 3: Run live performance monitor for your application

py-spy allows us to attach to a running Python process and monitor its performance.

The tool is similar to the Unix top command: it shows a live view of which functions are taking the most time in your Python program. Run py-spy as follows:

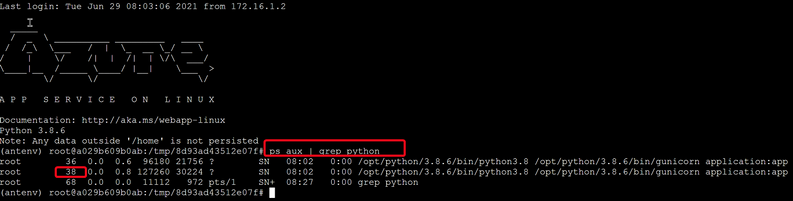

- Log in to your Linux App Service WEBSSH at https://<webapp-name>.scm.azurewebsites.net/

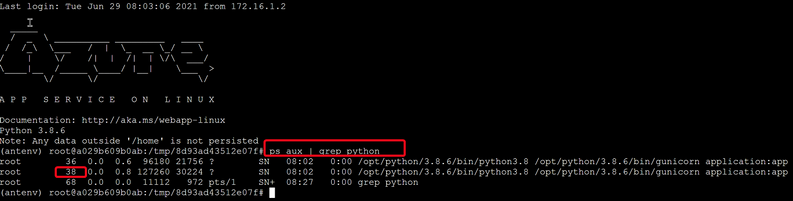

- First, use the “ps aux | grep python” command to find your Python process ID.

- Run “py-spy top --pid <python process id>”

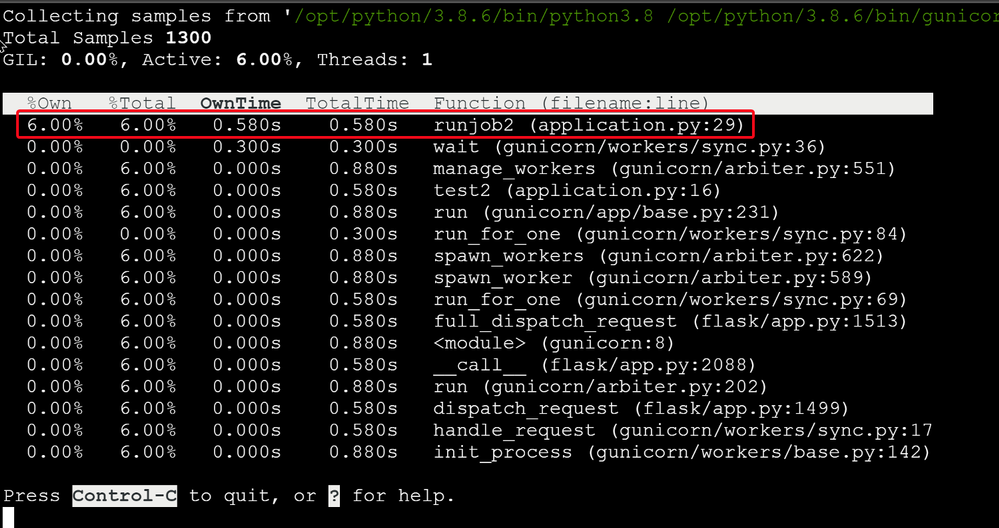

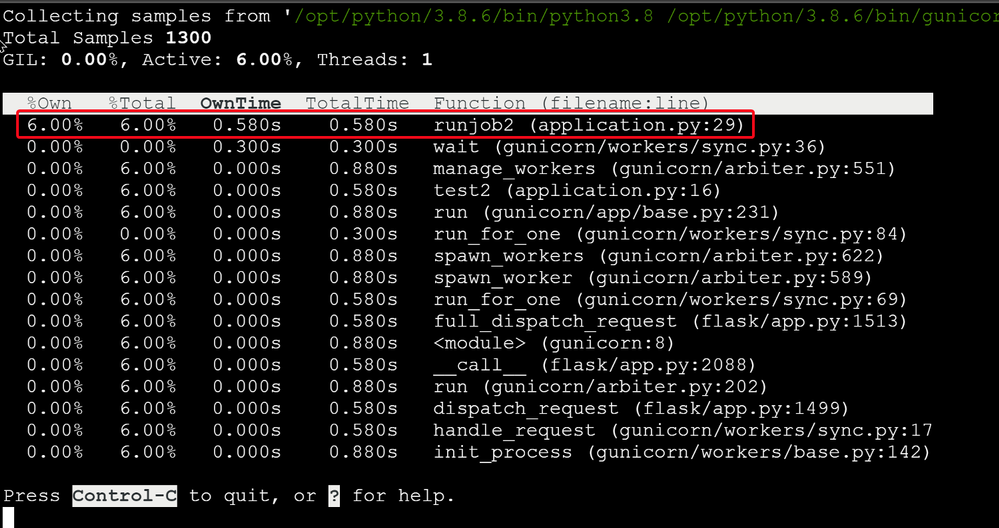

Here is my sample Python code. When I access http://<webapp-name>.azurewebsites.net/test2, I can see a lot of CPU time consumed by my runjob2() function.

import time

from flask import Flask

app = Flask(__name__)

@app.route("/")

def hello():

    runjob()

    return "Hello Azure, from Flask!"

@app.route("/test1")

def test1():

    return "Hello Test1!"

@app.route("/test2")

def test2():

    runjob2()

    return "Hello Test2!"

def runjob():

    counter = []

    for i in range(0,5):

        test()

        counter.append(i)

    print(counter)

def runjob2():

    for i in range(0,10):

        i = i+1

        time.sleep(1)

def test():

    print(">>>>>start test")

    time.sleep(1)

    print("<<<<<<<done")

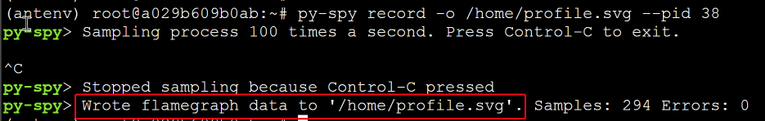

Step 4: Use the record command to record profiles to a flame graph file

by Contributed | Jul 7, 2021 | Technology

Hello readers,

Today I am back with another post to discuss one of the common needs that customers have: retrieving data in a given business interval. The interval could be one or more days, one or more hours, or a combination of both. Let’s see how to do it.

As a first step, let’s identify the scenario in which this filter can help. Say that the IT department must only take care of data (and notifications) that fall inside the following interval:

- Monday to Friday.

- 9 a.m. to 6 p.m.

In this scenario, the IT department needs to retrieve the events that occurred during the last 60 days within the business interval defined above.

Now that we know the scenario and the business interval, what else do we need? Of course, an existing workspace containing data, then knowledge of the data we are going to query, a lookup table and, finally, the query we use to retrieve the data.

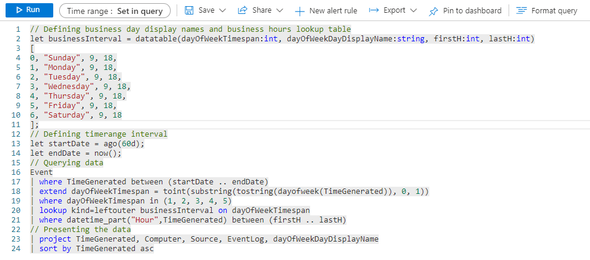

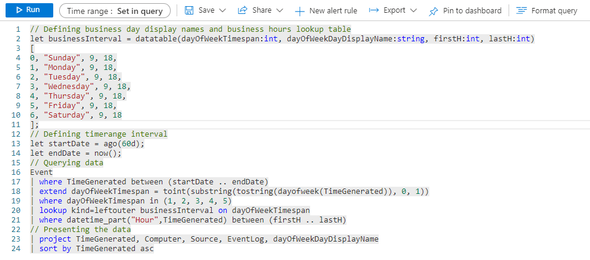

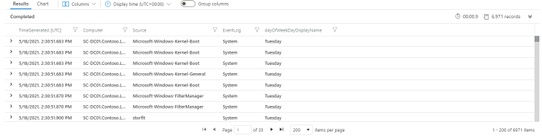

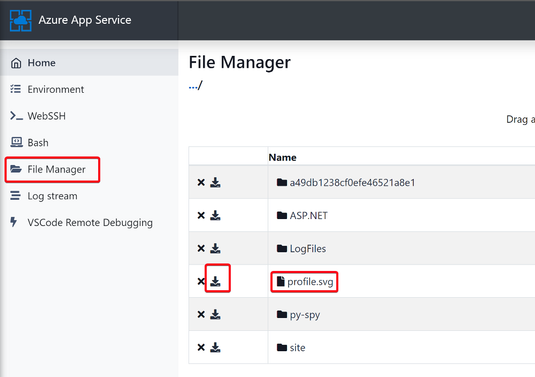

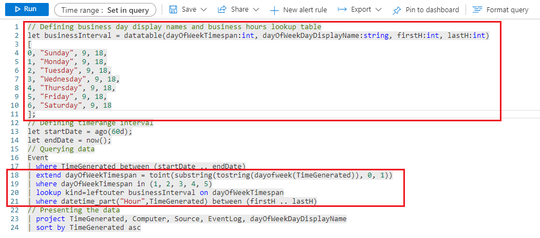

As far as the lookup table goes, we will create it inside the query. It is a simple step. In the Azure portal, go to the relevant workspace, open the query page and paste the code below:

// Defining business day display names and business hours lookup table

let businessInterval = datatable(dayOfWeekTimespan:int, dayOfWeekDayDisplayName:string, firstH:int, lastH:int)

[

0, "Sunday", 9, 18,

1, "Monday", 9, 18,

2, "Tuesday", 9, 18,

3, "Wednesday", 9, 18,

4, "Thursday", 9, 18,

5, "Friday", 9, 18,

6, "Saturday", 9, 18

];

The code above defines a multidimensional array containing 7 rows, with the following schema, which will be used as a dimension table:

- dayOfWeekTimespan:int → the integer value derived from the result of the dayofweek function. This function returns the number of days since the preceding Sunday, as a timespan.

- dayOfWeekDayDisplayName:string → the display name to convert the timespan value to.

- firstH:int → the lower boundary of the business hours.

- lastH:int → the upper boundary of the business hours.

Given the schema, you can also define different business hour intervals for different days.

NOTE: The lookup table does not honor the various locales. For a non-English representation, feel free to change the values in the dayOfWeekDayDisplayName column accordingly. The same concept applies to TimeGenerated, which needs to be adapted to the relevant time zone. Records in Log Analytics are stored only in UTC. You can convert the time during the presentation in the portal or from within the query.

Moving on with the query, let me first explain why I called the multidimensional array a “lookup table”. The reason is in the query code, which uses the lookup operator. In short, the lookup operator performs an operation similar to the join operator, with the following differences:

- The result does not repeat columns from the $right table that are the basis for the join operation.

- Only two kinds of lookup are supported, leftouter and inner, with leftouter being the default.

- In terms of performance, the system by default assumes that the $left table is the larger (facts) table, and the $right table is the smaller (dimensions) table. This is exactly opposite to the assumption used by the join operator.

- The lookup operator automatically broadcasts the $right table to the $left table (essentially, behaves as if hint.broadcast was specified). Note that this limits the size of the $right table.

With the lookup operator clear in mind, let’s assemble the query considering the following:

- The customer needs to query the last 60 days of data.

- The customer is interested in data that occurred inside the business interval.

Right after the lookup table code, we will define 2 variables to set the Time Range interval according to the 60 days requirement expressed by the customer. Paste the following after the last line of the table code:

// Defining timerange interval

let startDate = ago(60d);

let endDate = now();

Now, let’s go ahead and query and present the data using the lookup table for the defined time range. The remaining part of your query, which you can write right after the time range lines above, could be similar to the sample below:

// Querying data

Event

| where TimeGenerated between (startDate .. endDate)

| extend dayOfWeekTimespan = toint(substring(tostring(dayofweek(TimeGenerated)), 0, 1))

| where dayOfWeekTimespan in (1, 2, 3, 4, 5)

| lookup kind=leftouter businessInterval on dayOfWeekTimespan

| where hourofday(TimeGenerated) >= firstH and hourofday(TimeGenerated) < lastH

// Presenting the data

| project TimeGenerated, Computer, Source, EventLog, dayOfWeekDayDisplayName

| sort by TimeGenerated asc

The complete code will look as follows:

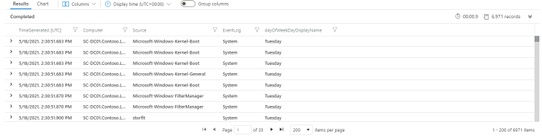

The results will be shown according to the project operator:

As you can understand from the query code, the relevant part for the filtering concept is represented by the lookup table and by the lines from 18 to 21 in the screenshot:

You can use this approach in your workbook, in your alerts and in all your queries. Of course, the charts will show some blank or missing values, but this is in line with the filter.
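
If you want to reason about which timestamps pass the filter before running it against a workspace, the weekday and hour checks can be mirrored in a few lines of JavaScript. This is only a hypothetical model of the query logic, not something Log Analytics runs: the `inBusinessInterval` name is made up, only the Monday to Friday rows are kept, times are read in UTC as stored, and `lastH` is assumed to be an exclusive upper bound (09:00 up to, but not including, 18:00).

```javascript
// Hypothetical JavaScript mirror of the lookup-table filter.
// Keys are UTC weekday numbers (0 = Sunday), matching dayofweek().
const businessInterval = new Map([
  [1, { name: 'Monday', firstH: 9, lastH: 18 }],
  [2, { name: 'Tuesday', firstH: 9, lastH: 18 }],
  [3, { name: 'Wednesday', firstH: 9, lastH: 18 }],
  [4, { name: 'Thursday', firstH: 9, lastH: 18 }],
  [5, { name: 'Friday', firstH: 9, lastH: 18 }]
]);

function inBusinessInterval(date) {
  const row = businessInterval.get(date.getUTCDay());
  if (!row) return false; // weekend: no matching row, so the record is dropped
  const hour = date.getUTCHours();
  // Assumption: lastH is exclusive, so 18:00 and later fall outside the interval.
  return hour >= row.firstH && hour < row.lastH;
}

console.log(inBusinessInterval(new Date('2021-07-07T10:30:00Z'))); // Wednesday morning
console.log(inBusinessInterval(new Date('2021-07-10T10:30:00Z'))); // Saturday morning
```

Changing a single row, for example giving Friday a shorter `lastH`, is the equivalent of defining different business hours per day in the lookup table.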

I hope this helps you handle or analyze data only during the desired business interval.

Thanks for reading

Disclaimer

The sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.
