by Contributed | Mar 26, 2021 | Technology

This article is contributed. See the original author and article here.

Hear about Microsoft & SAP’s expanded partnership and joint investments to support customer journeys to an intelligent enterprise, explore how to build a modern data estate with SAP and Azure, dive into telemetry & monitoring, central data analytics and more!

Microsoft & SAP jointly participated at the SAPInsider event on SAP S/4HANA Data & Analytics, Cloud & Admin Mar 23-25, 2021. In case you missed the event, watch the sessions on-demand now through Apr 25. Click on the links below and use MICROSOFTGUEST to register.

by Scott Muniz | Mar 26, 2021 | Security, Technology

OpenSSL has released a security update to address vulnerabilities affecting versions 1.1.1–1.1.1j. An attacker could exploit these vulnerabilities to cause a denial-of-service condition.

CISA encourages users and administrators to review the OpenSSL Security Advisory and apply the necessary update.

by Contributed | Mar 26, 2021 | Technology

Review the latest governance capabilities in Microsoft’s Power Platform, including more granular data loss prevention controls and enhanced visibility through new tenant-wide built-in analytics.

Power Platform makes it easy for anyone to build rich experiences around their data, apps, and processes, and integrate them with the apps they use every day. While this adds value to the business and developers, governance and productivity must be balanced. Microsoft CVP, Charles Lamanna, joins Jeremy Chapman to give you all the controls to support the shift to low-code development safely.

Visibility: Out-of-the-box analytics allow you to easily discover and monitor flows and apps and how they’re being used.

Connector Action Control: A new feature to create more granular controls over the actions you can allow or deny.

Endpoint Filtering, Tenant Isolation, Block Email Exfiltration: For more control over what’s permitted or blocked.

Out-of-box Analytics: Track adoption, usage, and health across Dataverse, Power Automate, and Power Apps. See which apps are being used across your environments, and quickly spot the best performing apps.

Troubleshooting & Diagnostics: Run your own diagnostics and troubleshooting using data about your app included out-of-the-box.

QUICK LINKS:

03:21 — Visibility

04:35 — Granular controls: Connector Action Control

06:24 — Endpoint filtering

08:04 — Out-of-box analytics

08:56 — Troubleshooting and diagnostics

11:01 — Wrap Up

Link References:

For more about security and governance with the Power Platform, go to https://aka.ms/PowerPlatformGovern

To see best practices our largest customers are using, check out our detailed white paper at https://aka.ms/powerappsadminwhitepaper

Unfamiliar with Microsoft Mechanics?

We are Microsoft’s official video series for IT. You can watch and share valuable content and demos of current and upcoming tech from the people who build it at Microsoft.

Video Transcript:

– Up next, on this special edition of Microsoft Mechanics we’re joined again by Microsoft CVP, Charles Lamanna. With so many organizations now using Microsoft’s Power Platform, we’re going to review the latest governance capabilities, and this includes more granular data loss prevention controls, enhanced visibility through new tenant-wide built-in analytics and more. So Charles, welcome back to Microsoft Mechanics.

– Thank you for having me. It’s always great to be back.

– And thanks so much for joining us from home today. So every time we have you on the show you really expand our understanding of the different types of apps that you can build with the Power Platform. Now we’ve shown how the Power Platform makes it easy for literally anyone with an idea to build rich experiences around their data, their apps, and processes, even integrating them with the apps that they use everyday like Microsoft Teams. Now this is great for citizen developers and pro developers and also business users. But at the same time, I know a lot of the folks that are watching maybe in security and governance, might feel a bit anxious about people developing apps or having access to business critical data and processes.

– Yeah, and I empathize with their concern, wanting to protect your data and your users. The good news though is that we give you all the controls to support the shift to low-code development safely. And these are important tools to use and take advantage of because this shift is very real. Power Automate, for example, is one of the most popular automation platforms on the planet right now, with over 25 billion actions being taken every day. And each month over 4 million users are actively leveraging the Power Platform to develop low-code solutions. So this is something that has huge value to the business and developers because the makers of these flows and apps are thinking of custom experiences around the core business processes that can improve their day-to-day jobs. So we have to balance governance and productivity when we look at the Power Platform, which means that rather than stopping these efforts you can instead establish the right guard rails for innovation to take place safely.

– Right, and it’s really hard to ignore the momentum now. You know, over half a million organizations worldwide now use the Power Platform. So if you’re in IT, it’s more of a question of when your business users will get on board.

– It really is, but you have more control and visibility to establish your governance foundation than you think. So for example, we have a dedicated admin portal which offers a single location to discover and access all these capabilities, spanning environment creation and management with analytics and data loss prevention policies. And we give you multiple layers of security. At the tenant level everything is identity-based with the Azure Active Directory, which provides native integration with the Power Platform. This allows you to define things like conditional access policies for your users. The next level is the environment. Here you can scope permissions by defining environment strategies to govern access to resources like apps, flows and data sources as users create content. You can also even determine who can be the admin or a maker inside of these environments. And the layer beyond that is the data itself. That includes mechanisms to secure data stored in Dataverse, as well as data being accessed at runtime via connectors. For external data you can control access to specific data sources with data loss prevention policies. And for data stored in Dataverse, you can control data access right down to a specific table, field, or even record.

– Okay so what are some of the things that you can now do to establish a governance strategy right now?

– A lot of it starts with visibility. For example we give you out-of-the-box analytics that allow you to easily discover and monitor flows and apps and how they’re being used. So if you see a new killer app start to emerge, and you want to go add more guard rails around it you can do that. You can even move it to a dedicated environment. Then because you have visibility into the apps and flows and your environments, you can start to publish approved apps to your organization’s catalog for even greater discovery. And we have tools to manage all of this application life cycle from creation to deletion.

– Right, and the approach here makes for a really strong partnership as well between the IT department and also business users.

– It does, and what we have seen happen in a lot of organizations is that having a good governance structure goes a long way to encouraging a culture for app innovation. It’s not unusual to see internal maker communities that actively share tips and tricks to help train new users so it’s not all on IT. In fact we have a lot of free training available from Microsoft, built by my team to help you get started and get your first users apps up and running really quickly.

– So the governance building blocks are there, and thanks so much for bringing us up to speed on all the updates of what exists today. But that said, I know that you and the team are making things even better and taking things to the next level.

– We are, and the first thing I want to show you is our focus on bringing you more granular controls for data loss prevention policies. The Power Platform gives you the ability to connect your apps to any data source. We have over 450 available connectors that can take care of integration for you. And as you can see here, currently with data loss prevention policies we allow you to classify connectors as business. These are the connectors with sensitive data and will only work with other data sources defined as business as well. You can also keep them in the non-business category, which means they contain nonsensitive data and are not intended to connect with business data sources. And the last category is blocked, which means the connector can’t be used. And you can scope these policies at the environment level or the tenant level. And that said, we’ve just released the preview of a brand new feature called Connector Action Control. For most connectors, specifically the blockable ones, you can now create more granular controls over the actions that you can allow or deny. In this case, I’ll select Twitter and click on the configure connector and under connector actions it opens up the side panel. This shows you the types of connector actions that you can allow versus block access to. And for example, you might have a Twitter handle associated with an app to create feedback for customers. So with these controls you can do things like block makers from using Power Apps or Power Automate to post a tweet, and block the collection of followers for PII reasons, but still allow searching tweets for marketing teams to gauge customer sentiment. And you simply can block or allow any of these actions using these really easy to use radio buttons. And this level of control is an area that many of our existing customers, like Unilever, are exploring as a way to open up the usage of some of the connectors they had previously blocked for their tenant.

– And that’s really a lot more control than simply blocking at the all up connector level. But what else are you adding then to protect the flow of data?

– So at the endpoint level we now have endpoint filtering for some of our most commonly used connectors. So for example you can see here how you can use this feature for more granular control of your SQL servers. So that you can allow access to some SQL databases and deny access to others. And beyond endpoints you can restrict the flow of data between tenants and block cross-tenant connections with a new tenant capability we call tenant isolation. So based on business requirements you can allow connections to or from a specific list of tenants. And to do this you just have to configure which domains you’d like to allow for inbound or outbound communication when you enable this tenant isolation feature.

– This is super important for organizations that are made up of multiple tenants, like companies that have subsidiaries or departments managed through a multi-tenant architecture.

– Totally, and one more critical data protection capability that we are adding based on high demand is the ability to block email exfiltration. This allows you to configure rules for email messages being sent from Power Apps and Flow. For example, you can do things like block emails from being sent to recipients outside of your organization, or scope the rules to block only emails being sent from specific apps and flows. Because we use consistent email message headers as you can see here, you can easily set up rules that block sending emails from your Power Apps or your Power Automate flows outside of your organization as part of preventing data exfiltration through email.

– That’s a lot more granularity in terms of how you can define what’s permitted or blocked, but in order to really know where to spend your time and act you really need to have visibility into what’s going on in your environment. As you’ve shown we’ve had reporting available for a while now. But what are we doing to make things even better?

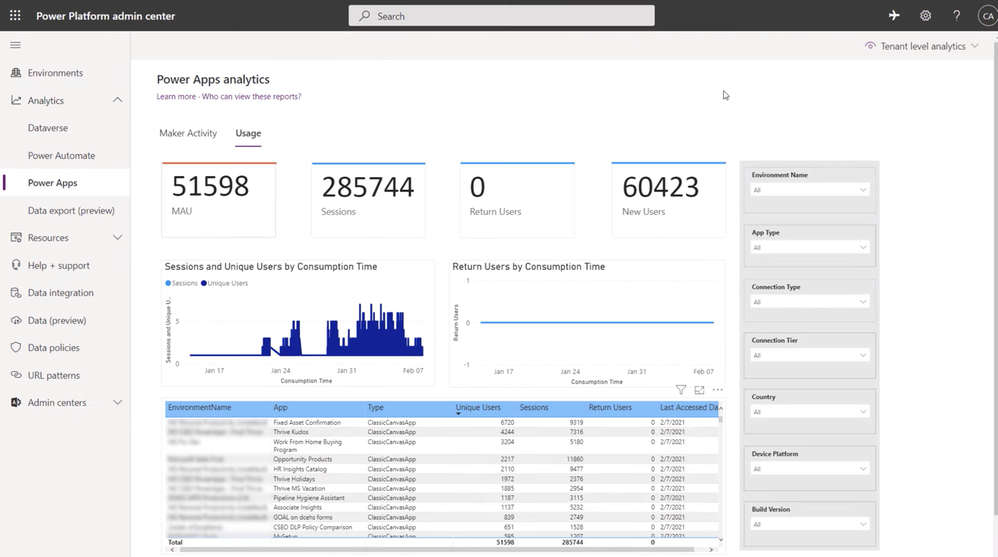

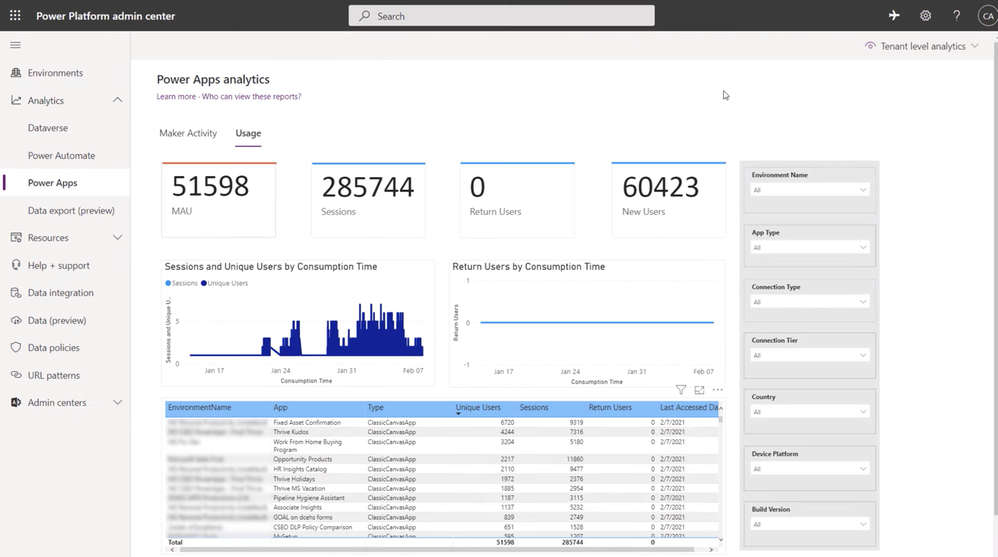

– So analytics is a huge area of focus for us. And one of the things I’m most excited about is the new out-of-the-box analytics. These now make it easier for better inventory, to track adoption, usage and health monitoring across Dataverse, Power Automate, and Power Apps. In fact, I’ll switch these reports to tenant level and that will load reporting across your entire tenant and all of your environments as you can see here. And because we’re using Power BI integration, these can also be exported as Excel files really easily. And I’m showing tenant-level reporting for Power Apps today, and this tenant-level reporting will be coming to the rest of the Power Platform in the future.

– This is pretty significant, because I remember previously we had to install the Center of Excellence starter kit to really get this level of reporting across your environments and across your entire tenant.

– That’s right. So now you can see which apps are being used across all of your environments and quickly spot the best performing apps right out-of-the-box.

– Can you also use the data then for things like troubleshooting or diagnostics?

– You can, and let me show you an example of that with an app that a lot of organizations are using right now as people start to return to the office. This app is a Canvas app template that lets you check out computer accessories, which is useful if you left something at home or maybe you’re at a hot desk and you need to borrow a keyboard or mouse for the day or week. In order to hook this app up to App Insights, I just grabbed the instrumentation key and added it in the app properties right here. And this is a brand new app and you can see I already have three users. So if I scroll down I can see a few more insights, including things like events, performance, location of users and their operating systems to name a few. Then if I click into the sessions, this shows me the number of times the app’s been launched, I can see similar stats per session and events break this down even further. And what I love about this view is that I can see the engagement levels per screen of the app. This chart tracks engagement and user flows, so it lets me see how the app is being used and which screens are the most popular. If I flip back to the Canvas App and click on this button you can see a custom trace event that captures app feedback. And this trace event has custom dimensions to include with the message, things like username, email and the active screen. And if I go back to App Insights into logs I can see the custom trace function with granular details whenever this scenario or issue is hit by my users. And here I can look for messages related to the app feedback, see lots of information. So there’s tons of great data about your apps included out-of-the-box. You can even extend it using these custom trace functions like I did here. And by the way, what I just showed for troubleshooting is pretty much what Microsoft support engineers might do in response to a customer support ticket. So now you can do the same types of diagnostics immediately without having to wait for us.
And of course, there are even more capabilities in App Insights coming in the future. For example we’re going to make it so all of your apps and environment can be connected and configured all at once with App Insights.

– It’s really great to see the focus on reporting and monitoring and diagnostics. Of course these insights can be used to improve your governance as well. But what’s the team working on next?

– So what I’ve shown today is just the beginning. We’re going to continue to expand all your options for visibility and reporting. And one of the things I’m really looking forward to is our integration work with Microsoft Information Protection to scan, classify and protect sensitive data inside of the Power Platform.

– This is such an important topic really, as people do more with the Power Platform and at the same time want to be able to keep their data safe but where should people go then to learn more?

– You can find the guidance you need at aka.ms/PowerPlatformGovern. And if you want to see some of the best practices that our largest customers are using, check out our detailed white paper at aka.ms/powerappsadminwhitepaper.

– Thanks so much again for joining us today Charles and I hope that we were able to answer the many questions that you had on this important topic. And of course if you haven’t already please subscribe to Microsoft Mechanics for the latest tech updates. Thanks for watching we’ll see you next time.

by Contributed | Mar 26, 2021 | Technology

Welcome to our new series highlighting Microsoft Learn Student Ambassadors who achieved the Gold milestone and have recently graduated from university. Each blog will feature a different student and highlight their accomplishments, their experience with the Student Ambassador program, and what they’re up to now.

Today, we’d like to introduce Manbir Singh who graduated last fall from Bharati Vidhyapeeth’s College of Engineering in New Delhi and recently joined Packt, a learning platform for professional developers. As a developer advocate, he manages a global community of more than 3000 influential developers, authors, and technical reviewers and works alongside the Packt product team to provide educational content to aspiring developers.

Responses have been edited for clarity and length.

Q: Hi Manbir. Thanks for taking the time to meet with us. When you joined the Microsoft Learn Student Ambassador program, did you have specific goals you wanted to reach, and did you achieve them? How has the program helped to prepare you for the next chapter in your life?

A: The only goal for me when I joined the program was to keep learning and helping people around me in any capacity possible and to fill the gaps that might exist in a community be it based on any tech stack or in general. The program didn’t just help me achieve my goal; it also helped me figure out the path that I wanted to walk ahead in my life. I’ve always been passionate about technology and communities as a whole, and thanks to Microsoft for igniting the passion for developer relations in me. It helped me improve my tech skills as well as soft skills.

I’ve learned the value of being more empathetic and inclusive while being a part of a community and the value of collaboration. It connected me with growth-oriented peers, not just from India but across the globe, and it surely instilled a long-term passion for people and technology within me. Even though I’ve been into communities for more than four years now, I have never experienced such a vibrant and welcoming environment as the one I experienced being a Microsoft Student Ambassador. It made me realize that even when we’re not traveling, even when we are locale-restricted, we can create an impact globally if only we have the right mindset and a pinch of willingness.

Q: What was the transition like for you going from school into your career?

A: Stepping into developer relations straight from being a student was definitely unconventional because companies really don’t prefer to offer DevRel roles to students, someone who is just graduating from college. So that was really tough for me, but it’s everything that I used to do as a student.

Q: What is the biggest lesson that you’ve learned as you’ve started your career and entered into this field that you’re so passionate about?

A: One thing that I’ve learned is learning never stops. As we progress in our journey in our careers, into life as a whole, we get to learn something new at every point of our life, be it from people, from academics or resources. Learning never stops, and it’s always important to stay curious and keep progressing in life.

Q: In the Student Ambassador program, what was the one accomplishment that you’re the proudest of and why?

A: I have conducted and participated in various workshops, technical talks, and hackathons such as Azure sessions, HackCBS held by Major League Hacking in partnership with Microsoft in Delhi, the Microsoft Student Ambassador Summit where I hosted a GitHub booth as well, and workshops on Open Source where there were over a hundred participants. I’ve impacted thousands of students in a short span of less than a year, I got selected for the Asia Summit among 10 students from India, and I presented a session at Microsoft Ignite last year with Arkodyuti Saha, Program Manager on the Microsoft Developer Relations team.

Q: What advice would you give to new Student Ambassadors?

A: I don’t have 1, but 5 pieces of advice actually.

- First of all, being a student, the most important thing to do is to explore as many opportunities as you can, meet and learn from as many people as possible. That expands your horizons and helps you better understand in which direction you should head towards.

- Second, focus on quality over quantity. One quality project over ten incomplete projects, one productive hackathon over ten events that you just join to hang out at, one hour of dedicated learning over ten hours of sitting in front of the screen waiting for miracles to happen.

- Third, collaborate with people. We learn the best while we’re working together. No matter if you are helping people or seeking help from them, there’s a win-win situation in both cases.

- Fourth, don’t just follow the trends, but be a trend. Think out of the box. That’s when you are the most creative, that’s when your originality comes out and makes you stand out.

- Fifth, give up on the fear of making mistakes. You are not learning enough if you keep living with the fear of failure because those setbacks are really what define you and would help you grow.

Q: If you were to describe the program to a student who is interested in joining, what would you say to them?

A: If you’re passionate about technology, learning, and contributing towards the growth of your peers, then the Microsoft Learn Student Ambassador program is where you can shine. Come join us and be a part of our vibrant community where we learn and thrive together!

At the close of the interview, we asked Manbir for his motto in life; what drives him. He stated that “Technology is just a medium. What I love is to be a part of people’s journey towards success and to help them in any and every possible way that I can in any capacity. Impact is what I truly seek in life.”

Good luck to you, Manbir, in all your future endeavors!

by Contributed | Mar 26, 2021 | Technology

Hi!

SQL Server can be accessed with many different authentication methods. We often recommend Integrated Security with Kerberos, mainly because it allows delegated authentication and is more efficient than alternatives such as NTLM.

In today’s post we will walk through the steps to configure Kerberos on Linux and test it by connecting to a SQL Server instance running on-premises or on a virtual machine.

The steps will be covered in the following sections:

- Joining the Linux Server to the Windows Domain

- Setting Kerberos on Linux

- Testing the connection

- Troubleshooting

1 – Joining the Server to the Windows Domain:

To join a Linux server to a Windows domain, you first need to point its network interface at the right DNS servers.

This can be done by editing the file /etc/network/interfaces on Ubuntu versions < 18 or /etc/netplan/******.yaml on newer Ubuntu versions.

Ubuntu 16: Join SQL Server on Linux to Active Directory – SQL Server | Microsoft Docs

Ubuntu 18: Join SQL Server on Linux to Active Directory – SQL Server | Microsoft Docs

Red Hat 7.x: Join SQL Server on Linux to Active Directory – SQL Server | Microsoft Docs

Suse 12: Join SQL Server on Linux to Active Directory – SQL Server | Microsoft Docs

The final files may vary depending on the distro. As an example, this is the outcome for Ubuntu 18 (available on Azure):

Test environment:

Domain Name: BORBA.LOCAL

Domain Controller (BORBADC.borba.local) IP: 10.0.1.4

File /etc/netplan/50-cloud-init.yaml :

    # This file is generated from information provided by the datasource. Changes
    # to it will not persist across an instance reboot. To disable cloud-init's
    # network configuration capabilities, write a file
    # /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg with the following:
    # network: {config: disabled}
    network:
        ethernets:
            eth0:
                dhcp4: true
                dhcp4-overrides:
                    route-metric: 100
                dhcp6: false
                match:
                    driver: hv_netvsc
                    macaddress: 00:0d:3a:da:4d:53
                set-name: eth0
                nameservers:
                    addresses: [10.0.1.4]
        version: 2

As soon as you change the yaml file under /etc/netplan you will need to apply the change by running the following command:

netplan apply

You will also need to add your DNS to /etc/resolv.conf:

File /etc/resolv.conf:

    # This file is managed by man:systemd-resolved(8). Do not edit.
    #
    # This is a dynamic resolv.conf file for connecting local clients to the
    # internal DNS stub resolver of systemd-resolved. This file lists all
    # configured search domains.
    #
    # Run "systemd-resolve --status" to see details about the uplink DNS servers
    # currently in use.
    #
    # Third party programs must not access this file directly, but only through the
    # symlink at /etc/resolv.conf. To manage man:resolv.conf(5) in a different way,
    # replace this symlink by a static file or a different symlink.
    #
    # See man:systemd-resolved.service(8) for details about the supported modes of
    # operation for /etc/resolv.conf.

    nameserver 10.0.1.4
    options edns0
    search borba.local

Now that your DNS settings are in place, you can test them by running nslookup with the domain controller as a parameter. In my environment the domain controller is called “BORBADC”. Check the output of the nslookup command below:

Testing name resolution with the command “nslookup borbadc” (the short name resolves because of the “search borba.local” entry):

    dineu@LinuxDev:~$ nslookup borbadc
    Server: 10.0.1.4
    Address: 10.0.1.4#53

    Name: borbadc.borba.local
    Address: 10.0.1.4

You’ve just finished the first part!

As we could see, nslookup is correctly resolving the names for the realm BORBA.LOCAL.
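The resolver check above can also be scripted. The following is a minimal sketch rather than part of the original procedure: `has_nameserver` is a hypothetical helper name, and it only inspects a resolv.conf-style file for a matching `nameserver` entry; it does not query DNS itself.

```shell
# Hypothetical helper: succeed if the resolv.conf-style file in $1 lists
# the IP address in $2 as a nameserver.
has_nameserver() {
    file=$1
    ip=$2
    # Only lines of the form "nameserver <ip>" count; comment lines start
    # with "#" and therefore never match.
    awk -v ip="$ip" '$1 == "nameserver" && $2 == ip { found = 1 } END { exit !found }' "$file"
}
```

In the test environment described here, it could be called as `has_nameserver /etc/resolv.conf 10.0.1.4 && echo "domain controller is listed"`.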

2- Setting Kerberos on Linux:

Now that your Linux server is joined to the Windows Active Directory domain, we can continue with the Kerberos settings.

The process goes through two simple steps:

- Installing the required libraries

- Adapting Kerberos Configuration file.

2.1) Installing the required libraries:

As usual, the command lines may vary depending on your Linux distro. I will share two variants, one for Ubuntu and one for Red Hat.

Ubuntu:

sudo apt-get install realmd krb5-user

RedHat:

sudo yum install realmd krb5-workstation

Once the libraries are installed, you can move on to the next step.

2.2) Adapting Kerberos Configuration File

All Kerberos settings are defined in a single file called “krb5.conf”.

It is found at /etc/krb5.conf, and you will need to adapt the following sections:

- [libdefaults]

- [realms]

- [domain_realm]

As an example, please check the final version of my krb5.conf used to configure the settings in the same Test Environment:

    [libdefaults]
        default_realm = BORBA.LOCAL
        dns_lookup_kdc = true
        dns_lookup_realm = true

        # The following krb5.conf variables are only for MIT Kerberos.
        kdc_timesync = 1
        ccache_type = 4
        forwardable = true
        proxiable = true

    [realms]
        BORBA.LOCAL = {
            kdc = BORBADC.BORBA.LOCAL
            admin_server = BORBADC.BORBA.LOCAL
        }

    [domain_realm]
        .borba.local = BORBA.LOCAL
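A quick sanity check of the file can save time later, since a lowercase realm is one of the causes listed in the troubleshooting section. The sketch below is only an illustration under assumptions: `krb5_default_realm` and `realm_is_uppercase` are hypothetical helper names, and the parsing handles only the simple `key = value` layout shown above.

```shell
# Hypothetical helper: print the default_realm value from the
# krb5.conf-style file given in $1.
krb5_default_realm() {
    awk -F= '/^[ \t]*default_realm[ \t]*=/ {
        gsub(/[ \t]/, "", $2)   # strip spaces and tabs around the value
        print $2
        exit
    }' "$1"
}

# Hypothetical helper: succeed if the realm in $1 is non-empty and
# contains no lowercase letters.
realm_is_uppercase() {
    case $1 in
        '') return 1 ;;
        *[a-z]*) return 1 ;;
        *) return 0 ;;
    esac
}
```

For example, `realm_is_uppercase "$(krb5_default_realm /etc/krb5.conf)" || echo "default_realm should be in capital letters"`.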

3- Testing the connection

OK, so you have the server joined to the domain and have finished configuring krb5.conf.

As you know, Kerberos relies on a ticket-granting service, and you will need to obtain a first ticket, the Ticket-Granting Ticket (TGT), before you can connect to any service using your credentials.

The tool responsible for requesting and receiving the TGT is called “kinit”.

Once you have the TGT, other processes (such as your application that connects to SQL Server) can use it to request a service ticket from the Ticket-Granting Service (TGS).

3.1) Get Ticket-Granting Ticket

To test the connection on Linux you will first need to get a TGT (Ticket-Granting Ticket). This is done through the kinit command as follows:

    kinit username@DOMAIN.COMPANY.COM

*** Please note that the realm must be in capital letters

In our example, this was the output:

    dineu@LinuxDev:~$ kinit dineu@BORBA.LOCAL
    Password for dineu@BORBA.LOCAL:
    dineu@LinuxDev:~$
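Because the realm has to be in capital letters, it can help to normalize the principal before passing it to kinit. This is a small sketch under assumptions: `normalize_principal` is a hypothetical helper name, and it expects input of the form user@realm.

```shell
# Hypothetical helper: print user@REALM with the realm part upper-cased.
normalize_principal() {
    case $1 in
        *@*) ;;   # input has a realm separator, carry on
        *) echo "expected user@realm" >&2; return 1 ;;
    esac
    user=${1%@*}     # everything before the "@"
    realm=${1##*@}   # everything after the last "@"
    printf '%s@%s\n' "$user" "$(printf '%s' "$realm" | tr '[:lower:]' '[:upper:]')"
}
```

It could then be used as `kinit "$(normalize_principal dineu@borba.local)"`, which requests a ticket for dineu@BORBA.LOCAL.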

You can see the ticket received by running the command “klist” (see the krbtgt in the output):

    dineu@LinuxDev:~/JavaTest$ klist
    Ticket cache: FILE:/tmp/krb5cc_1000
    Default principal: dineu@BORBA.LOCAL

    Valid starting     Expires            Service principal
    03/26/21 13:02:41  03/26/21 23:02:41  krbtgt/BORBA.LOCAL@BORBA.LOCAL
            renew until 03/27/21 13:02:38
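If you want a script to confirm that the TGT is present before it tries to connect, the klist output can be searched for the krbtgt entry. The helper below is a sketch, with `has_tgt` as a hypothetical name; it only looks at the text it is given and does not check whether the ticket has expired.

```shell
# Hypothetical helper: succeed if the klist output in $1 contains a
# ticket-granting ticket for the realm in $2.
has_tgt() {
    printf '%s\n' "$1" | grep -F -q "krbtgt/$2@$2"
}
```

For example, `has_tgt "$(klist 2>/dev/null)" BORBA.LOCAL || echo "no TGT found, run kinit first"`.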

3.2) Connect to SQL Server

Brilliant!

I see you already have a TGT from the output of your klist. Now it is time to test our connection to SQL Server.

3.2.1) Testing the connection using sqlcmd:

To test the connection using sqlcmd you will first need to install it.

Once the tool is installed, you can connect to one of your SQL Server instances as below:

    dineu@LinuxDev:~$ sqlcmd -S MySQLInstance.borba.local -E
    1> SELECT auth_scheme from sys.dm_exec_connections where session_id = @@SPID;
    2> GO
    auth_scheme
    ----------------------------------------
    KERBEROS

    (1 rows affected)
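The same check can be run non-interactively, for instance from a deployment script. The sketch below assumes sqlcmd is installed and that the query returns a single value; `is_kerberos` is a hypothetical helper name that simply compares the returned text with KERBEROS.

```shell
# Hypothetical helper: succeed only if the text in $1 is KERBEROS,
# ignoring surrounding whitespace and letter case.
is_kerberos() {
    scheme=$(printf '%s' "$1" | tr -d '[:space:]' | tr '[:lower:]' '[:upper:]')
    [ "$scheme" = "KERBEROS" ]
}

# Example use (not executed here); -h -1 suppresses the column header,
# -W trims trailing spaces and -Q runs the query and exits:
#   scheme=$(sqlcmd -S MySQLInstance.borba.local -E -h -1 -W \
#       -Q "SET NOCOUNT ON; SELECT auth_scheme FROM sys.dm_exec_connections WHERE session_id = @@SPID;")
#   is_kerberos "$scheme" || echo "connection is not using Kerberos: $scheme"
```

Any other value, such as NTLM or SQL, means the session was not authenticated with Kerberos.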

3.2.2) Testing the connection using Microsoft JDBC (MS-JDBC):

To test the connection using Microsoft JDBC, please ensure you have the latest JRE and JDK properly installed on your server.

- Please check the steps 1.2 and 1.3 of the following article:

https://sqlchoice.azurewebsites.net/en-us/sql-server/developer-get-started/java/ubuntu/

3.2.2.1) Create and compile your Java file to test the connection:

Connection String used as an example:

jdbc:sqlserver://MySQLInstance.borba.local:1433;databaseName=master;integratedSecurity=true;authenticationScheme=JavaKerberos

And this is our sample script (shipped as sample code with MS-JDBC), adapted with the connection string that ensures Kerberos is used:

File ConnectURL.java:

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.ResultSet;
    import java.sql.SQLException;
    import java.sql.Statement;

    public class ConnectURL {
        public static void main(String[] args) {
            // Create a variable for the connection string.
            String connectionUrl = "jdbc:sqlserver://MySQLInstance.borba.local:1433;databaseName=master;integratedSecurity=true;authenticationScheme=JavaKerberos";

            // try-with-resources closes the connection and statement automatically.
            try (Connection con = DriverManager.getConnection(connectionUrl); Statement stmt = con.createStatement();) {
                // Ask SQL Server which authentication scheme this session used.
                String SQL = "SELECT auth_scheme FROM sys.dm_exec_connections WHERE session_id = @@SPID";
                ResultSet rs = stmt.executeQuery(SQL);

                // Iterate through the data in the result set and display it.
                while (rs.next()) {
                    System.out.println(rs.getString("auth_scheme"));
                }
            }
            // Handle any errors that may have occurred.
            catch (SQLException e) {
                e.printStackTrace();
            }
        }
    }

Compile the script by running the command below:

javac ConnectURL.java

Finally, you can now call java to execute the script (don’t forget to include the path to the MS-JDBC jar file in the classpath):

java -cp .:/home/dineu/JavaTest/mssql-jdbc-8.4.0.jre8.jar ConnectURL

Output: If the settings were properly configured, you will find the following output:

KERBEROS

…and it is done! If you found KERBEROS as the output of the execution, you’ve finished configuring Kerberos on your Linux environment.

4 – Troubleshooting

Okay, we know sometimes things may behave in an unexpected way. I would like to share here some error messages that you may find and also some suggestions on how to collect more information if you get stuck anywhere while configuring all these settings.

4.1) Error Messages

Please find below some error messages that you may find along this journey:

Error #1) kinit: Cannot find KDC for realm “borba.local” while getting initial credentials

– Check if the name of your realm is being correctly resolved:

– You can find the IP Address of your DNS under /etc/resolv.conf

– You can also test it by running the nslookup: nslookup YourDomain or nslookup YourDomainController

Error #2) kinit: KDC reply did not match expectations while getting initial credentials

This message says the kinit failed to get the TGT. Possible reasons are:

– Realm was not in capital letters. Example: (wrong) kinit myuser@domain.com versus (right) kinit myuser@DOMAIN.COM.

– krb5.conf was not properly configured (please review the sections [libdefaults], [realms] and [domain_realm] under /etc/krb5.conf).

Error #3) Cannot generate SSPI context.

Usually the error message “Cannot Generate SSPI Context” comes with some other error messages, just like below:

Testing from SQLCMD:

    dineu@LinuxDev:~/JavaTest$ sqlcmd -S sqlao1 -E
    Sqlcmd: Error: Microsoft ODBC Driver 17 for SQL Server : SSPI Provider: Server not found in Kerberos database.
    Sqlcmd: Error: Microsoft ODBC Driver 17 for SQL Server : Cannot generate SSPI context.

Testing from MS-JDBC:

    com.microsoft.sqlserver.jdbc.SQLServerException: Integrated authentication failed. ClientConnectionId:….
    Caused by: GSSException: No valid credentials provided (Mechanism level: Server not found in Kerberos database (7)
    Caused by: KrbException: Server not found in Kerberos database (7)
    Caused by: KrbException: Identifier doesn’t match expected value (906)

In this case, it is clearly saying that the SPN was not found.

Therefore you can check if the SPNs for SQL Service are properly registered to the SQL Service Account.

More info: Register a Service Principal Name for Kerberos Connections
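When checking the registration, it helps to know which SPN the client will ask for. For a TCP connection, SQL Server SPNs follow the form MSSQLSvc/<FQDN>:<port>. The sketch below only builds that string; `expected_spn` is a hypothetical helper name, and the default port 1433 is an assumption that applies to a default instance.

```shell
# Hypothetical helper: print the SPN a TCP connection to the SQL Server
# host in $1 (fully qualified name) on the port in $2 would look up.
expected_spn() {
    host=$1
    port=${2:-1433}   # assumption: default SQL Server TCP port
    printf 'MSSQLSvc/%s:%s\n' "$host" "$port"
}
```

The printed value, for example `MSSQLSvc/MySQLInstance.borba.local:1433`, can be compared with the list that `setspn -L <service account>` returns on a domain-joined Windows machine.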

4.2) Logs

If you find an unexpected error, the following logs can help to understand what could be causing the problem.

4.2.1) Provider Logs:

You can always collect provider logs from ODBC or MSJDBC.

4.2.2) Network Traces:

You may consider using a network capture tool (such as tcpdump) on Linux and then filtering by the Kerberos messages.

The following command line will start a circular capture on the server. It will create up to 10 files, each with a maximum size of about 2 GB (-C is given in units of 1,000,000 bytes):

sudo tcpdump -i any -w /var/tmp/trace -W 10 -C 2000 -K -n

Once you start capturing the traces, reproduce the issue and then press Ctrl+C to stop capturing them.

The files would be stored in the same folder used in the command line (in this case, /var/tmp).
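If the full capture is too large, the trace can be narrowed at capture time. Kerberos traffic to the KDC normally uses port 88 (TCP and UDP), so a capture filter on that port keeps only those packets. This is a sketch: `kerberos_filter` is a hypothetical helper name and the output file name in the example is an assumption.

```shell
# Hypothetical helper: print a tcpdump capture filter for Kerberos
# traffic, optionally limited to one host such as the domain controller.
kerberos_filter() {
    if [ -n "${1:-}" ]; then
        printf 'port 88 and host %s\n' "$1"
    else
        printf 'port 88\n'
    fi
}

# Example use (not executed here):
#   sudo tcpdump -i any -n -w /var/tmp/kerberos.pcap $(kerberos_filter 10.0.1.4)
```

Leaving the filter out, as in the original command, is still the safer choice when you are not sure which traffic is relevant.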

…and that’s all folks!

I would love to hear your feedback!

Did you find the steps described in this article helpful?

What else would you like to have added to this post?

We hope you have enjoyed reading about this content.

See you in the next post!
