by Contributed | Feb 26, 2021 | Technology

This article is contributed. See the original author and article here.

As cities continue connecting their urban environments, the concept of digital twins—a digital representation of real-world environments brought to life with real time data from sensors and other data sources—has entered the realm of smart cities and promises to enable city administrations and urban planners to make better decisions with the help of data integration and visualization from across the urban space.

Last year, we announced the general availability of the Azure Digital Twins platform which enables developers to model and create digital representations of connected environments like buildings, factories, farms, energy networks, railways, stadiums, and cities, then bring these entities to life with a live execution environment that integrates IoT and other data sources.

Today, we are excited to announce that the open-source GitHub repository of Smart Cities ontology for Azure Digital Twins available to the ecosystem.

Why ontologies

To drive openness and interoperability, Azure Digital Twins comes with an open modeling language, Digital Twins Definition Language (DTDL), which provides flexibility, ease of use, and integration into the rest of the Azure platform. Using DTDL, developers can describe twins in terms of the telemetry they emit, the properties they report or synchronize and the commands they respond to. Most importantly, DTDL also allows describing the relationship between twins.

Common representation of places, infrastructure, and assets will be paramount for interoperability and enabling data sharing between multiple domains. It’s our goal to partner with industry experts and provide DTDL-based ontologies which learn from, build on, and/or use industry standards, meet the needs of developers, and are adopted by the industry. The resulting open-source ontologies provide common ground for modeling connected environments, accelerate developers’ time to results, and enable interoperability between DTDL-based solutions from different solution providers.

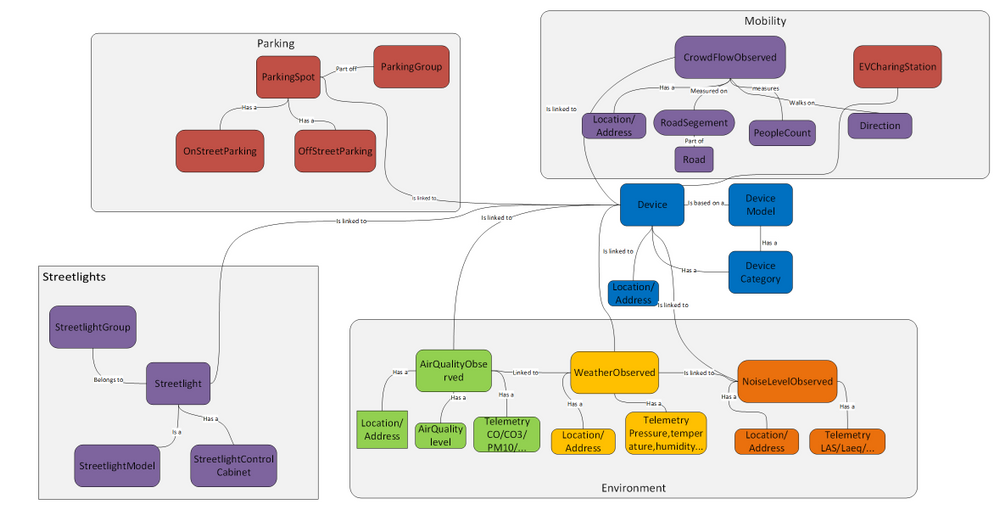

Smart Cities ontology approach and overview

We collaborated with Open Agile Smart Cities (OASC) and Sirus to provide a DTDL-based ontology, starting with ETSI CIM NGSI-LD, and accelerate development of digital twins-based solutions for smart cities.

In addition to ETSI NGSI-LD, we’ve also evaluated Saref4City, CityGML, ISO and others.

The ETSI CIM NGSI-LD specification defines an open framework for context information exchange named NGSI-LD which comes with an information model that defines the meaning of the most needed terms, and a domain-specific extension to model any information. The core meta-model provides a basis for representing property graphs using RDF/RDFS/OWL, and is formed of Entities, their Relationships, and their Properties with values, encoded in JSON-LD. In addition to the core meta-model, NGSI-LD compliant open models for aspects of smart cities have been defined by organizations and projects, including OASC, FIWARE, GSMA and the Synchronicity project. The NGSI-LD models for Smart Cites comprise models in the domains of Mobility, Environment, Waste, Parking, Building, Park, Port, etc.

The property graph nature of NGSI-LD made it quite straightforward to map it to DTDL, and with today’s release, we are making an initial set of DTDL models adapted from the NGSI-LD open models for Smart Cities available to the community.

We’ve focused our initial set on use cases that are increasingly relevant to cities given the availability of IoT devices and sensors, like measuring the air quality in a neighborhood, understanding the noise level in a district, the crowd flow in a road segment, traffic flow in a road segment, monitoring on-street parking in parking spots, availability of EV-Charging, or monitoring streetlights and reducing energy consumption, but as well enabling streetlight infrastructure for additional smart cities services enabling citizen-centric use cases.

In addition to the ETSI NGSI-LD, we’ve also started leveraging ETSI SAREF extension for Smart Cities ontology framework for Topology, Administrative Area and City Object modeling. Using Saref4City ontology constructs represented in DTDL allowed us to model city objects like poles, their containment within an administrative area of a city, and linked to the smart models in the domain of mobility, environmental, parking adapted in DTDL from NGSI-LD models for Smart Cities described above.

Watch this IoT Show episode to learn how we approached the DTDL-based Smart Cities ontology, how we mapped the ETSI NGSI-LD models to DTDL, extending the ontology based on Saref4City, while bringing it all together with an example use case brought to life with Azure Digital Twins Explorer.

Partners

We collaborated with OASC and Sirus to bring the first DTDL ontology for Smart Cities for Azure Digital Twins to the community. We are also working with more partners that are validating and contributing to the open source ontology.

Open & Agile Smart Cities – OASC in short –is an international network of cities and communities, working with local public administrations of all sizes to support their digital transformation journey. Together with its members, partners, and experts, OASC creates sustainable impact for cities with data driven solutions based on a Minimal Interoperability Mechanisms which includes open standards, open APIs, and a recommended catalog of open data models, including NGSI-LD models, to enable interoperability for applications and systems among different cities.

Sirus is a dynamic software integration company that specializes in building IoT and Smart City solutions. Sirus enables cities to build digital solutions and integrate data from a variety of IoT devices and other systems through an open standards-based approach, like ETSI NGSI-LD, and has pioneered multiple smart cities data integration platforms including for the City of Antwerp. Sirus invests heavily in innovation, like smart city digital twins based on Azure and pioneers in open standards implementations. Sirus is also an SME partner of OASC.

Siemens MindSphere City Graph is a solution that offers a new way to optimize city operations. It creates a digital twin of urban spaces allowing cities to model, monitor, and control physical infrastructure, powered by Azure Digital Twins. MindSphere City Graph uses the Digital Twins Definition Language and context information management specifications ETSI NGSI-LD to drive openness for solution providers to integrate and deliver sustainable value for a city while enabling open data for cities through open standard approach. The open source DTDL-based ontologies for Smart Cities based on ETSI NGSI-LD will accelerate the development of digital twins solutions and integration.

ENE.HUB, a portfolio of Brookfield Infrastructure Partners, is a fully integrated smart city infrastructure as a service provider. ENE.HUB’s flagship product, the SMART.NODE™ is a comprehensive and self-contained smart pole solution that integrates a range of smart city services including smart lighting, communication services, energy services, environment services, transport services, safety, and media services. ENE.HUB is collaborating with Microsoft on digital twin’s representation of smart poles based on the open DTDL-based Smart Cities ontology.

We also continue to collaborate with our partners Bentley Systems and Imec, experts in digital twin solutions for smart cities. Both Bentley Systems and Imec are also members of the Digital Twin Consortium along with Microsoft.

Next steps

With this release of smart cities ontology for digital twins, we’ve focused on an initial set of models and we welcome you to contribute to extend the initial set of use cases, as well as improve the existing models.

Explore the open-source GitHub repository, try it out with Azure Digital Twins, and learn how to contribute.

Our goal is to accelerate your development of digital twins solutions for smart cities and enable interoperability and data sharing for cities.

If you are interested in Smart Building solutions, we worked with the RealEstateCore consortium to provide DTDL based ontology for Smart Buildings. Similarly, we are also working on Energy Grid ontology which will be coming soon.

As part of our commitment to openness and interoperability, we also continue to promote best practices and shared digital twin models and use cases through the Digital Twin Consortium.

by Contributed | Feb 26, 2021 | Technology

This article is contributed. See the original author and article here.

Today, I worked on a service request that our customer wants to download a large resultset from Azure SQL Database to OnPremises. The table has several blob columns (XML,Varchar(max),text) and millions of rows. In this situation, I would like to share with you several tests that I did and how to reduce the download time spent.

Initial points

- Try to increase the packet size in your connection string to higher values.

- Instead to use Proxy connection policy use Redirection connection policy to improve the connection.

- About the redirection, remember to use the latest drivers because some old drivers are not able to use redirection.

- As this process is a pure data processing, if possible, try to use Premium or Business Critical to reduce the I/O latency.

- In OnPremises try to distribute the data and log files in different location to improve the IO.

In Azure SQL Database, I created a table and filling the data:

- Basically, I created the following table:

CREATE TABLE [dbo].[Destination](

[ID] [int] IDENTITY(1,1) NOT NULL,

[Name1] [varchar](4000) NULL,

[Name2] [varchar](4000) NULL,

[Name3] [varchar](4000) NULL,

[Name4] [varchar](4000) NULL,

[Name5] [varchar](4000) NULL,

PRIMARY KEY CLUSTERED

(

[ID] ASC

))

- Running multiple times the following query, I got around 7 millions of rows.

INSERT INTO Destination (Name1,Name2,Name3,Name4,Name5) values(Replicate('X',4000),Replicate('X',4000),Replicate('X',4000),Replicate('X',4000),Replicate('X',4000))

INSERT INTO DESTINATION (Name1,Name2,Name3,Name4,Name5) SELECT Name1,Name2,Name3,Name4,Name5 FROM DESTINATION

In OnPremise:

- I developed a small C# aplication that has 3 different process:

- The first process was to read the whole table from Azure SQL Database and using bulkcopy download the data, but the spent time was high. I saw that transfer ratio was about (100-200 mb/s).

private void LoadDataReaderWithoutCompression(C.SqlDataReader newProducts, int lCutOver = 10000, string sDestinationTable = "Destination")

{

using (C.SqlBulkCopy bulkCopy = new C.SqlBulkCopy(GetConnectionStringTarget(0), C.SqlBulkCopyOptions.KeepIdentity | C.SqlBulkCopyOptions.KeepNulls | C.SqlBulkCopyOptions.TableLock))

{

bulkCopy.DestinationTableName = sDestinationTable;

try

{

bulkCopy.BulkCopyTimeout = 6000;

bulkCopy.SqlRowsCopied += new C.SqlRowsCopiedEventHandler(OnSqlRowsCopied);

bulkCopy.NotifyAfter = 2000;

bulkCopy.EnableStreaming = false;

bulkCopy.BatchSize = lCutOver;

bulkCopy.WriteToServer(newProducts);

}

catch (Exception ex)

{

Console.WriteLine(ex.Message);

}

}

}

- The second process was to compress the data using COMPRESS function before downloading the data. Basically, the idea was:

- Create a table with the following structure.

- Execute the query INSERT INTO [_M$_Destination_X] (ID,NAME1,NAME2,NAME3,NAME4,NAME5) SELECT ID,COMPRESS(NAME1) AS NAME1,COMPRESS(NAME2) AS NAME2,COMPRESS(NAME3) AS NAME3, COMPRESS(NAME4) AS NAME4, COMPRESS(NAME5) AS NAME5 FROM Destination

- Download using bulkcopy the compressed data

- Uncompress the data in the destination, running the following TSQ:, INSERT INTO [Destination] (ID,NAME1,NAME2,NAME3,NAME4,NAME5) SELECT ID,DECOMPRESS(NAME1) AS NAME1,DECOMPRESS(NAME2) AS NAME2,DECOMPRESS(NAME3) AS NAME3, DECOMPRESS(NAME4) AS NAME4, DECOMPRESS(NAME5) AS NAME5 FROM [_M$_Destination_X]

CREATE TABLE [dbo].[_M$_Destination_X](

[ID] [int] NOT NULL,

[Name1] [varbinary](max) NULL,

[Name2] [varbinary](max) NULL,

[Name3] [varbinary](max) NULL,

[Name4] [varbinary](max) NULL,

[Name5] [varbinary](max) NULL,

PRIMARY KEY CLUSTERED ( [ID] ASC ))

- The second execution process was very good because I was able to skip the networking issue compressing and de-compressing. But, was only a thread running, what happening if I have millions and millions of rows, well, in this situation, I modified the source using a configurable number of threads (for example, running in parallel 10 threads reading 150000 rows each one).

- Every process read 150000 rows, using the following TSQL: INSERT INTO [_Tmp100] (ID,NAME1,NAME2,NAME3,NAME4,NAME5) SELECT ID,COMPRESS(NAME1) AS NAME1,COMPRESS(NAME2) AS NAME2,COMPRESS(NAME3) AS NAME3, COMPRESS(NAME4) AS NAME4, COMPRESS(NAME5) AS NAME5 FROM Destination ORDER BY ID OFFSET 0 ROWS FETCH NEXT 150000 ROWS ONLY

- Using bulkcopy I transferred the data to the OnPremise service.

- Finally running the query I was able to uncompress the data and save in the destination table, INSERT INTO [Destination] (ID,NAME1,NAME2,NAME3,NAME4,NAME5) SELECT ID,DECOMPRESS(NAME1) AS NAME1,DECOMPRESS(NAME2) AS NAME2,DECOMPRESS(NAME3) AS NAME3, DECOMPRESS(NAME4) AS NAME4, DECOMPRESS(NAME5) AS NAME5 FROM [_Tmp100]

At the end, I was able to reduce the time spent in hours for this process. I got other lessons learned for OnPremises to speed up the process and reduce the PageIOLatch contention, but, this will be for another post.

Enjoy!

by Contributed | Feb 26, 2021 | Technology

This article is contributed. See the original author and article here.

Microsoft partners like Ant Media, Claim Genius, and Uncrowd deliver transact-capable offers, which customers can purchase directly from Azure Marketplace. Learn about these offers below:

|

Ant Media Server Enterprise Edition: Ant Media Server Enterprise Edition is a streaming engine solution that uses WebRTC technology to provide adaptive, ultra-low-latency streaming. Supporting WebRTC, CMAF, HLS, RTMP, RTSP, and more, Ant Media Server is highly scalable horizontally and vertically and can run on-premises or in the cloud.

|

|

GeniusCLAIM: Claim Genius’s GeniusCLAIM platform helps insurance carriers reduce costly processing delays by using artificial intelligence-powered damage estimation. Try GeniusCLAIM for free to see how it can help your firm reduce claim processing times and loss adjustment expenses, increase throughput and profitability, and reduce customer churn.

|

|

Uncrowd FRi – Retail Customer Analytics Platform: Uncrowd’s Friction/Reward Indexing (FRi) analytics platform answers retail’s most fundamental question: “Why do customers choose Retailer X over Retailer Y?” FRi leverages artificial intelligence and machine learning to provide insights into shoppers’ choices, behaviors, and likelihood to buy your products.

|

|

by Contributed | Feb 26, 2021 | Technology

This article is contributed. See the original author and article here.

This is your guide to all things Windows & Devices at Microsoft Ignite, March 2-4!

This past year has underscored the critical importance of Windows – the Windows PC has become more essential than ever, serving as a connector for people and organizations all around the world. And at the center of it all is IT. Our goal at Microsoft Ignite in March is to quickly get you to speed on how you can use the latest capabilities, services, and devices to support your end users and your organization—and prepare you for what’s to come.

From sessions with the top leaders and engineers at Microsoft supporting Windows to an awesome catalog of in-depth videos that you can watch on demand at any time, Microsoft Ignite is your chance to get up to speed on Windows, Surface, and Microsoft Edge. We’ll have Ask the Experts sessions so you can engage directly with those building the capabilities that will support you as IT pros and developers today and in the future—and we’ll extend the time available for you to engage with our experts through Windows Office Hours here on Tech Community.

Now is a great time to be a part of the big, wide world of Windows. If you haven’t already, register at https://ignite.microsoft.com and start building your schedule. Click on the session names below to add them to your schedule (or digital “backpack”)—then bookmark this post as we’ll be updating it each day with links to all our announcements, depth learning on demand, and post-conference activities.

There is a lot happening around Windows at Microsoft Ignite so let’s dive in!

Jump to: Core sessions | Depth on demand | Ask the experts | Office hours | Additional resources

– – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – –

Core sessions

Windows fireside chat with Panos Panay & Roanne Sones

Kicking off our Windows experience at Microsoft Ignite at 11:30 AM PST on Tuesday, March 2nd is none other than Microsoft Chief Product Officer Panos Panay! In a fun, informative chat, Panos and Azure Edge + Platform CVP Roanne Sones will talk about why Windows matters, using examples of recent innovations in security, devices, browsing, and the cloud. We’ll have our product and engineering experts standing by in the chat to answer your questions as well!

Can’t make it at 11:30 AM PST? Not to worry! We’ll replay this session later in the day. Keep an eye on the main channel around 9:30 PM PST.

Engineer to engineer: Let’s talk Windows!

One of our goals for this Microsoft Ignite is to show and tell you how your feedback inspires our innovations! On Wednesday, Windows CVP Aidan Marcuss is sitting down with pivotal members of our Windows (Gabe Frost), security (David Weston), and endpoint management (Ramya Chitrakar) engineering teams for a lively discussion about the features and capabilities we’ve developed over the past year based on your ideas and suggestions—and how we’re taking those further to help prepare your and your organization for the future.

We’ll have live Q&A throughout this session too so pick the time that works best for you and add it to your schedule!

On demand: Microsoft Edge, Surface, and Microsoft Endpoint Manager

After the keynotes, make sure to check out our featured on demand sessions:

– – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – –

Depth on demand

We know you come to Microsoft Ignite to build your technical skills and knowledge. That’s why we’re offering deep dives, demos, and more in the Video Hub on Tech Community—direct from our engineering and product teams!

- What’s new in Windows servicing – Joe Lurie, Namrata Bachwani

- The how-to guide for managing Windows updates – Aria Carley, Kay Toma

- The key to rolling out Windows updates with confidence – Aria Carley, Blair Glennon, Kevin Scharpenberg

- A simple recipe to accelerate Windows 10 patch compliance – David Guyer

- Windows 10 update monitoring and reporting – Charles Inglis

- Delivery Optimization + ConfigMgr = cloud content made easy – Narkis Engler, Carmen Forsmann

- Modernize application validation with Test Base for Microsoft 365 – Maitreyee Agashe Wagh

- 10 tips to make the Windows update experience fast and easy – Steve DiAcetis

- This is Windows security! – Katharine Holdsworth, David Weston, Ron Aquino

- What’s new in MSIX – John Vintzel

- Driver and firmware servicing in the enterprise – Nir Froimovici, Bryan Keller, Thad Martin

- Enterprise development futures: Project Reunion – Thomas Fennel

- Windows 10 in cloud configuration: endpoint management simplified – Ravi Ashok, Joe Lurie

Note: Links to these great sessions will be available after the keynotes conclude on day one.

– – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – –

Ask the experts

Windows & Devices

While we’ll offer live Q&A during the fireside chat and the Let’s Talk Windows! panel session, you’ll have an opportunity to ask additional questions and get answers from a diverse group of engineering, support, and product experts with our Ask the Expert sessions. These sessions are conducted in Teams Live Events so there’s sure to be some lively banter as they answer your questions. Space is limited so click your desired day and time to RSVP!

Microsoft Edge

If you have specific questions around deploying and managing Microsoft Edge, we’ve got a special Microsoft Edge edition of Ask the Experts on Tuesday, March 2 from 11:30-11:59 PM PST.

Microsoft Endpoint Manager

Or, if you are looking for advice on endpoint security, on monitoring and analysis or discovering vulnerabilities and potential compliance issues in real time, check out Ask the Experts: Securing your endpoints with Defender and Microsoft Endpoint Manager on Wednesday, March 3 from 2:30 AM to 3:00 AM PST.

– – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – –

Windows office hours on Tech Community

We want to ensure you have plenty of time to get answers to your questions at Microsoft Ignite. That’s why we are holding four special editions of Windows office hours on Tech Community! Select any and all of the desired times below to save the slot and join us to get answers and tips to help you more easily manage Windows 10 updates and your Windows device estate.

– – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – –

Additional resources

If a prescriptive learning path is what you’re looking for, we’ve got a good one for you. Stay current with Windows 10 and Microsoft 365 Apps is designed to help you integrate a prescriptive, process-based model into the way you deploy Windows and manage updates.

– – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – –

Learn more

Windows is a universe, not an island. Here are some additional guides to the breadth of experiences open to you at Microsoft Ignite 2021: March edition.

Stay informed

Follow us at @MSWindowsITPro for announcements and updates throughout Microsoft Ignite—and to stay up-to-date with the latest resources, tips, and information for IT pros working in the Windows ecosystem.

by Contributed | Feb 26, 2021 | Technology

This article is contributed. See the original author and article here.

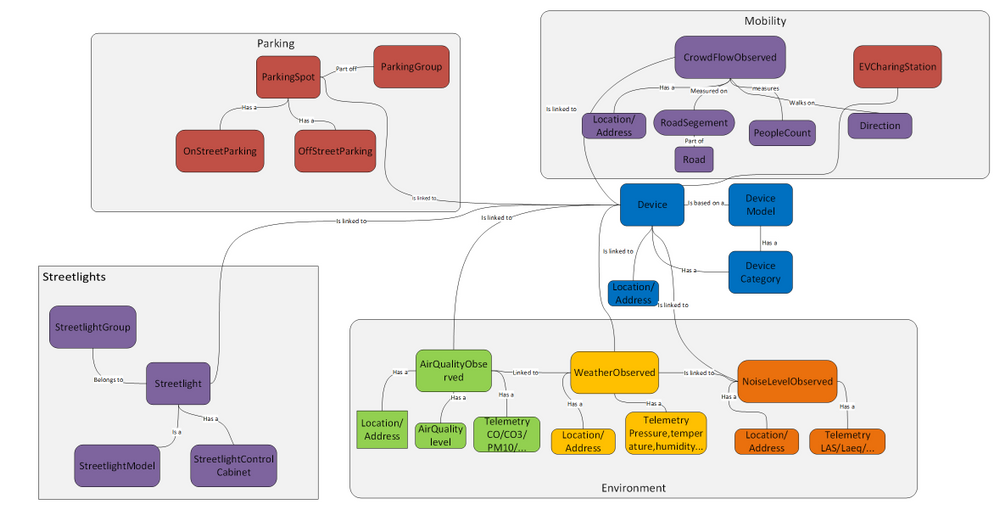

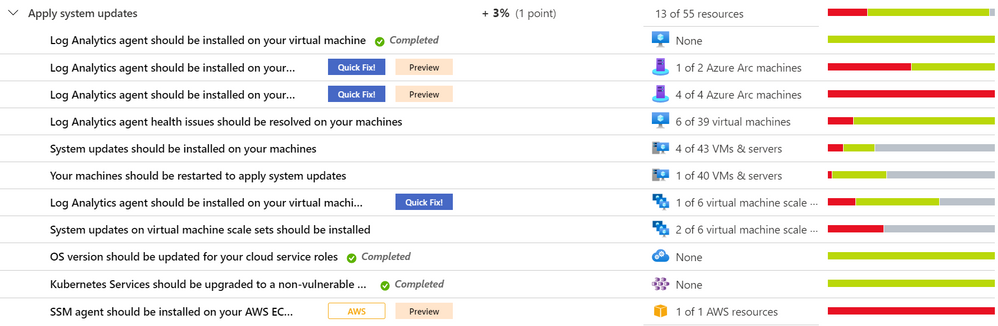

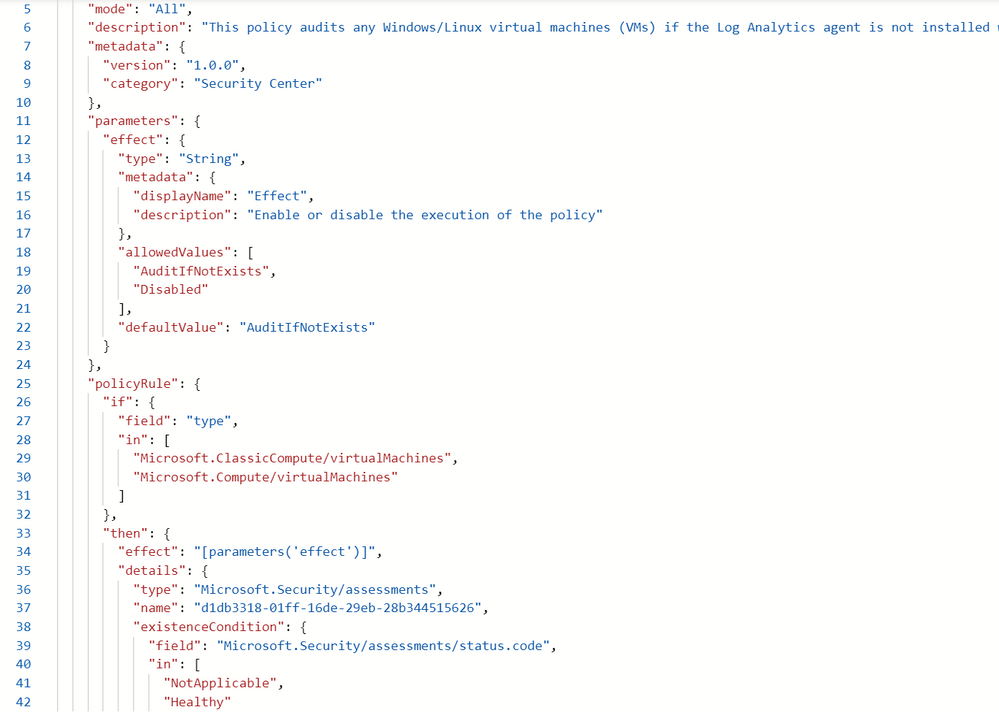

As part of our recent Azure Security Center (ASC) Blog Series, we are diving into the different controls within ASC’s Secure Score. In this post we will be discussing the security control Apply System Updates.

Image 1

Image 1

System updates bring fresh and enhanced features, deliver security fixes, greater compatibility and in general a better user experience that help improving your security posture. Azure Security Center takes this and transforms it in several recommendations – depending on the resource types you have – that have Quick Fixes and easily shows you the big picture in your environment so you can act. Let’s drill into some of the recommendations for this control.

Note

There are two recommendations from this security control that are being deprecated. Learn more about it in this article Important changes coming to Azure Security Center | Microsoft Docs.

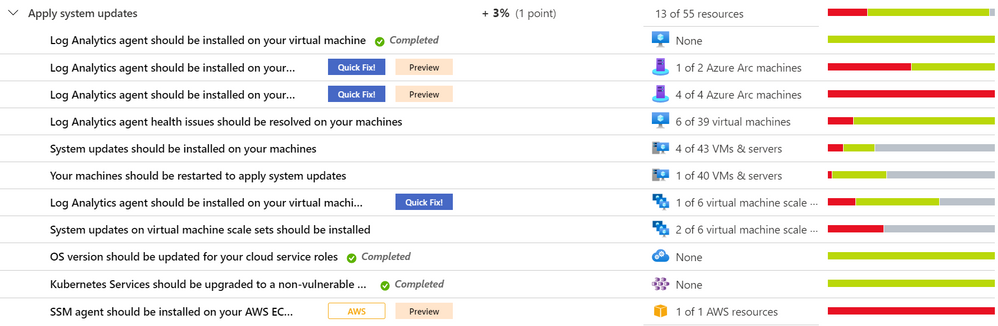

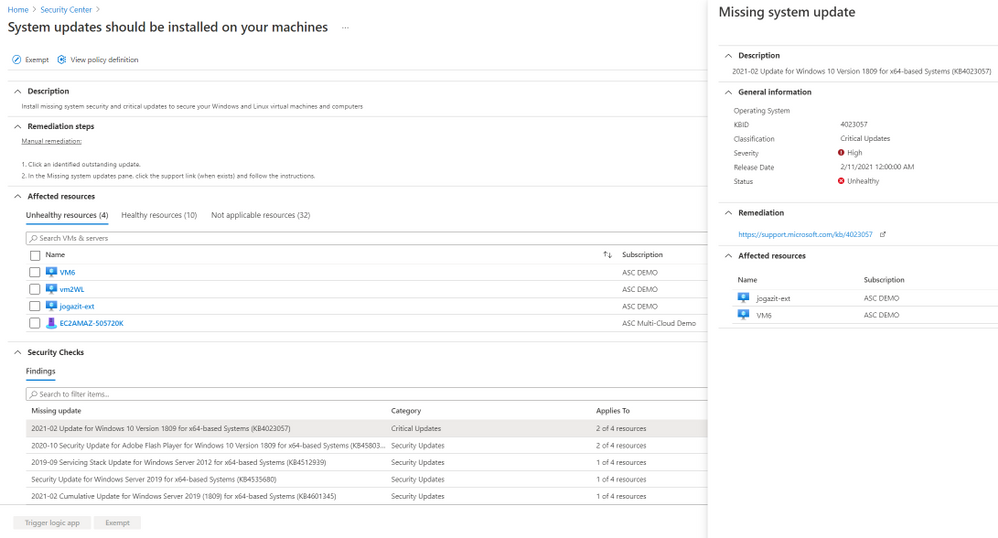

Log Analytics agent should be installed on…

Azure Security Center collects data using the Log Analytics agent (formerly known as Microsoft Monitoring Agent – MMA), which reads security-related configurations and event logs and then sends them to a Log Analytics workspace. Depending on the resource types you have, you may come across this recommendation for your virtual machines, virtual machine scale sets, Windows-based and Linux-based Azure Arc machines (Preview). The mapped policies audits if the Log Analytics agent is not installed.

Image 2

Image 2

This comes with a Quick Fix button that will install the MMAExtension. The workspaceID will be requested once the remediation script is triggered.

"parameters": {

"vmName": {

"value": "resourceName"

},

"location": {

"value": "resourceLocation"

},

"logAnalytics": {

"value": "workspaceId"

}

}

You can also use ARM template or Azure Policies to manage the extension deployment to Arc servers. Learn more about Log Analytics agent for Linux or Log Analytics agent for Windows. For multiple ways to install and configure your Log Analytics agent please see this article.

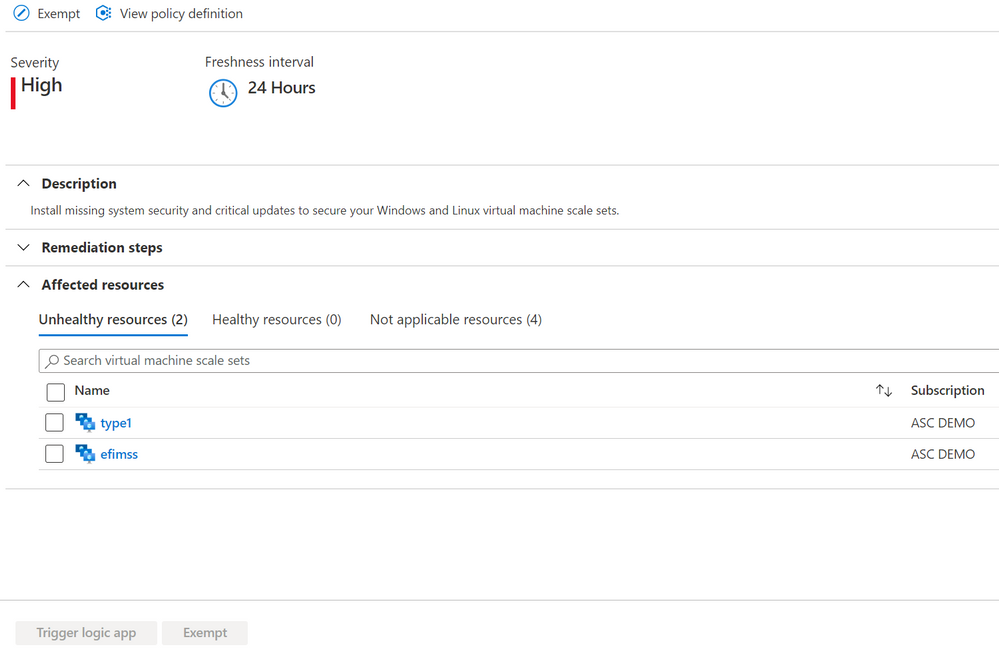

System updates should be installed on your virtual machines

This recommendation doesn’t come with a Quick Fix button, but it does come with the Exempt feature; that way you can set an exemption for specific resources either if you have already mitigated it through a third-party service or accept the risk and give a waiver. From ASC you will be able to see the outstanding updates of the unhealthy resources. The KB ID is provided as well for you to track down specs and the impact it may have.

Image 3

Image 3

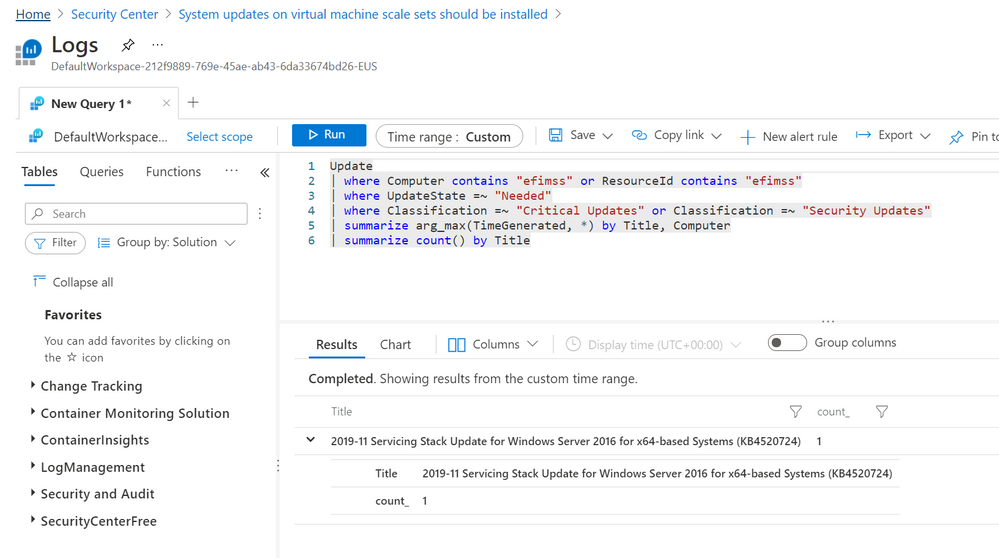

System updates on virtual machine scale sets should be installed

The information brought in this recommendation is like the one of VMs, but there are a few differences (see Image 4). To check the security updates, you will have to click o the VMSS that will take you to its Log Analytics Workspace query dashboard. Automatically, a query is deployed and will display the update and its count, because we are talking about scale sets (see Image 5). At this point, there are only manual remediation steps to follow, and that’s taking into consideration the corresponding Knowledge Base (KB) article ID. Nevertheless, there’s a Trigger Logic App option available in case you want to create an automation to remediate that.

Image 4

Image 4

Image 5

Image 5

OS version should be updated for your cloud service roles

If you happen to have a cloud service role (classic), you might come across this recommendation. The Exempt feature is also available. By default, Azure periodically updates your guest OS to the latest supported image within the OS family that you’ve specified in your service configuration; but choosing a specific OS version disables automatic OS updates, and here is when this comes handy. To learn more about how to solve this follow this article.

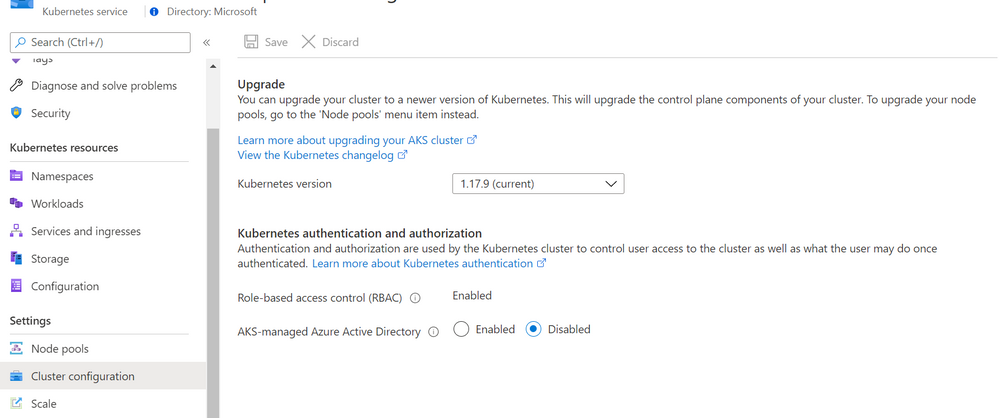

Kubernetes Services should be upgraded to a non-vulnerable Kubernetes version

This recommendation will appear if you must upgrade your Kubernetes service cluster to a later Kubernetes version (at the time this article was written the latest was 1.11.9+, 1.12.7+, 1.13.5+, and 1.14.0+) to protect against known vulnerabilities in your current Kubernetes version. For a tutorial on how to accomplish this, go to this article.

Image 6

Image 6

Next Steps

As with all security controls, you need to make sure to remediate all recommendations within the control that apply to a particular resource to gain a potential score increase for your security posture. Check out our GitHub repo for artifacts that may help you achieve your 100% Secure Score. For more content like this join the Microsoft Security Community at https://aka.ms/SecurityCommunity

Reviewer:

@Yuri Diogenes , Principal Program Manager – CxE ASC

Recent Comments