by Contributed | Feb 26, 2021 | Technology

This article is contributed. See the original author and article here.

My New Year’s resolution for 2021 is to write more. Before I knew it, it was already February, and two weeks ago I even celebrated the Chinese Lunar New Year of 2021. OK, I guess I can restart the clock that way. This year is the Year of the Ox. In Chinese, ox is “牛”. The same Chinese character also means super cool, super awesome. I hope this speaks to the year of Windows containers too.

There has been a lot to share and celebrate in the first two months.

AKS on Azure Stack HCI February Update

The biggest news is the AKS on Azure Stack HCI February Update, released last Friday! Ben Armstrong led the team and delivered lots of new changes and fixes in this release. Nice job! The crown jewel for me is the guide for evaluating AKS-HCI inside an Azure VM, authored by Matt McSpirit. Anyone with an Azure subscription can try out AKS on Azure Stack HCI in an Azure VM and, of course, spin up Windows containers on it, without being constrained by hardware availability. Matt’s team runs the customer engagement program and is actively looking for customers who are interested in enrolling in the Early Access Program (EAP) of AKS-HCI. If you are interested, you can fill out this survey.

Another great improvement is that AKS-HCI now supports strong authentication using Active Directory credentials, fully integrated into Kubernetes authentication and configuration workflows. Sumit Lahiri did an excellent job explaining the architecture and what’s under the hood. The new scenario was delivered by the same team we’ve been partnering with closely on gMSA-related innovations for Windows containers, which make the Lift and Shift experience easier for workloads that need Active Directory. If you are new to gMSA (Group Managed Service Account), it’s a service account that gives Windows containers an identity on the wire so they can authenticate with Active Directory. Our documentation, gMSA for Windows containers, has more details. We’ll share more gMSA-related improvements for Windows containers in the coming months.
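Here is a minimal Python sketch showing where gMSA plugs into Kubernetes. It builds a Pod manifest that runs a Windows container under a gMSA identity.

- **Assumption:** a GMSACredentialSpec resource already exists in the cluster.
- **Hypothetical:** the pod, image and credential spec names are placeholders.
- **Standard field:** `securityContext.windowsOptions.gmsaCredentialSpecName` is the Kubernetes field for referencing such a credential spec.

Check the AKS-HCI and gMSA documentation for the setup steps your environment actually needs.

```python
import json


def windows_pod_with_gmsa(pod_name: str, image: str, credspec_name: str) -> dict:
    """Build a Pod manifest that runs one Windows container under a gMSA identity.

    The pod-level securityContext.windowsOptions.gmsaCredentialSpecName field
    references a GMSACredentialSpec resource by name (assumed to exist already).
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            # Schedule only onto Windows nodes.
            "nodeSelector": {"kubernetes.io/os": "windows"},
            "securityContext": {
                "windowsOptions": {"gmsaCredentialSpecName": credspec_name}
            },
            "containers": [{"name": pod_name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Hypothetical names: replace with your own image and credential spec.
    manifest = windows_pod_with_gmsa(
        "legacy-app", "myregistry.example/legacy-app:ltsc2019", "legacy-app-credspec"
    )
    print(json.dumps(manifest, indent=2))
```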

Docs. Docs. Docs.

The team used the quiet time during the Christmas holiday to improve the Windows container documentation. I want to list the changes so everyone can see and benefit.

- We added a new page, Lift and Shift to containers, under “Get Started”. It covers the high-level benefits of using containers, the applications supported in Windows containers, and a decision tree. This page will help those of you who are just starting to look at moving your Windows applications to containers.

- We added a new section under “Tutorials”, Manage containers with Windows Admin Center, starting with the page “Configure the Container extension on Windows Admin Center”. If you are looking for tooling to help containerize and deploy your apps, this is a great starting point.

- We updated the Base image servicing cycle page under “Reference” to reflect that we have extended support for the Nano Server container SAC1809 release to 1/9/2024, and that the Windows container SAC1809 release is supported until 5/11/2021. There is a bit more to this update, which I’ll cover later in the blog.

- We added the Events page under “Reference”, which compiles related content from major Microsoft and industry conferences over the last two to three years. We spent a lot of time and effort building quality slides and demos for these events, so even though some content may be slightly outdated, it can still be valuable if you are just starting with Windows containers.

Overall, we aim to make this documentation a one-stop shop where you can find all the relevant resources, no matter which stage you are at in using Windows containers to lift and shift and modernize your Windows applications. We welcome your feedback and would love to see you contribute directly to the documentation.

Lifecycle Management Update

We streamlined Server Core container and Nano Server container support and lifecycle management. Some of you may recall that the Nano Server container SAC1809 release was about to reach its end of life (EOL) in Nov 2020. We listened to your feedback, especially from those of you in the Kubernetes community who moved to Nano Server containers for conformance testing in addition to regular workloads. The Nano Server container SAC1809 release now has the same EOL as the Server Core container LTSC2019/1809 release: 1/9/2024.
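The dates above can be turned into a quick support check. This minimal sketch uses only the end-of-support dates quoted in this post, including the Windows container SAC1809 date given earlier.

- **Assumption:** the EOL date itself is treated as the last supported day.
- **Informal labels:** the image names are descriptive labels, not official image tags.

```python
from datetime import date

# End-of-support dates as quoted in this post (month/day/year in the text).
END_OF_SUPPORT = {
    "Server Core LTSC2019/1809": date(2024, 1, 9),
    "Nano Server SAC1809": date(2024, 1, 9),
    "Windows SAC1809": date(2021, 5, 11),
}


def is_supported(image: str, on: date) -> bool:
    """True if `image` is still supported on `on` (EOL day counted as supported; an assumption)."""
    return on <= END_OF_SUPPORT[image]


def days_remaining(image: str, on: date) -> int:
    """Days left until end of support; negative once the release is past EOL."""
    return (END_OF_SUPPORT[image] - on).days


if __name__ == "__main__":
    today = date(2021, 2, 26)  # the publication date of this post
    for image in END_OF_SUPPORT:
        print(f"{image}: supported={is_supported(image, today)}, "
              f"days left={days_remaining(image, today)}")
```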

The Windows container, sometimes also referred to as “the 3rd Windows container”, has been gaining popularity thanks to its broader Windows API support. Recently it was brought to our attention that its SAC1809 release is going to reach its EOL on 5/11/2021. We understand that customers who need to stay on Windows Server 2019 as the container host are concerned. Because of Windows container host and guest version compatibility, those customers can only use containers released in the same wave with the same build number (also referred to as the “major release number”). We are actively looking at options and will share an update when we are ready.
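The host and guest rule described above can be sketched as a check on Windows version strings of the form `major.minor.build.revision`.

- **Scope:** this is a simplified illustration of the rule as stated here (host and container share the same build number), not a full compatibility matrix.
- **Example values:** the version strings are examples. 17763 is the build number of Windows Server 2019 / version 1809.

Microsoft’s Windows container version compatibility documentation covers the complete rules, including differences between isolation modes.

```python
def build_number(version: str) -> int:
    """Extract the build number from a Windows version string such as '10.0.17763.1757'."""
    parts = version.split(".")
    if len(parts) < 3:
        raise ValueError(f"unexpected Windows version string: {version!r}")
    return int(parts[2])


def is_compatible(host_version: str, container_version: str) -> bool:
    """Simplified rule from this post: host and container must share the same build number."""
    return build_number(host_version) == build_number(container_version)


if __name__ == "__main__":
    host = "10.0.17763.1757"  # Windows Server 2019 host; the revision is illustrative
    print(is_compatible(host, "10.0.17763.1697"))  # same build, different revision -> True
    print(is_compatible(host, "10.0.19042.804"))   # different build -> False
```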

New Development from the .NET Team

We work very closely with the .NET Team, and I know that many of you run .NET apps on Windows containers. There are two recent blog posts from Richard Lander of the .NET team that I want to share.

You will notice that this blog post is mainly about Linux, with only a small portion on Windows. I take that as a positive sign for Windows, because Windows mostly just works with our current company-wide security practices. But we stay vigilant and keep innovating. Updates to our docs and blogs related to Windows Server container security are coming.

In the Container section, you will notice this item: “Improve scaling in containers, and better support for Windows process-isolated containers.” This issue in process-isolated containers was reported by a few of our AKS customers. In a nutshell, the .NET runtime does not honor the CPU and memory limits set on process-isolated containers. We are happy to see the .NET Team making improvements that better take advantage of the capabilities of Windows containers.

I also want to call out the update “.NET 5.0 Support for Windows Server Core Containers”, made available last November. Both the .NET team and our team are very curious to hear your feedback on it.

What’s Ahead

Two exciting events are coming up on the horizon.

- Microsoft Spring Ignite 2021 is next week, March 2-4. I am excited that quite a few new things our team has been working on will be showcased.

- Microsoft Global MVP Summit 2021 is also coming on March 29-31. MVPs are our best customers and friends. I am excited to see some old and new friends again.

That reminds me: recently I had a few email exchanges and GitHub discussions with one of our MVPs, Tobias Fenster, CTO of COSMO CONSULT Group, based in Germany. To my pleasant surprise, Tobias has been writing blog posts on Windows containers, like “Building Docker images for multiple Windows Server versions using self hosted containerized Github runners”. Hidden gems! Go check out Tobias’s presentation list.

To close, I really liked what Satya said in the Microsoft Fiscal Year 2021 Second Quarter Earnings Conference Call in January:

“What we are witnessing is the dawn of a second wave of digital transformation sweeping every company and every industry.

Digital capability is key to both resilience and growth.

It’s no longer enough to just adopt technology. Businesses need to build their own technology to compete and grow.”

Borrowing that perspective, it’s no longer just about adopting Windows containers to lift and shift and modernize with AKS and AKS on Azure Stack HCI. It’s about leveraging Windows containers to differentiate your company and grow to new heights.

As always, we’d love to hear from you: how you use Windows containers on AKS, AKS on Azure Stack HCI, or other environments, and what we can do better to make your digital transformation journey easier.

Weijuan

Twitter: @WeijuanLand

Email: win-containers@microsoft.com

by Contributed | Feb 26, 2021 | Technology

Welcome back to the Security Controls in Azure Security Center series! This time we are here to talk about “Protect applications against DDoS attacks”.

Distributed denial-of-service (DDoS) attacks overwhelm resources and render applications unusable.

Use Azure DDoS Protection Standard to defend your organization from the three main types of DDoS attacks:

- Volumetric attacks flood the network layer with a large amount of seemingly legitimate traffic. DDoS Protection Standard mitigates these attacks by absorbing or scrubbing them automatically.

- Protocol attacks render a target inaccessible by exploiting weaknesses in the layer 3 and layer 4 protocol stack. DDoS Protection Standard mitigates these attacks by blocking malicious traffic.

- Resource (application) layer attacks target web application packets. Defend against this type with a web application firewall (WAF) combined with DDoS Protection Standard.

The “Protect applications against DDoS attacks” Security Control is worth two points and includes the recommendations below.

Azure DDoS Protection Standard should be enabled

DDoS attacks are often designed to make an application resource or online service unavailable by overwhelming it with more traffic than it can handle. Once the resource can no longer handle legitimate requests, it might also become vulnerable to code injection. The unavailability of the resource or service is a significant problem because legitimate users also lose access to it, and daily business offerings may be halted as a result. Any endpoint that can be publicly reached through the internet is vulnerable to a DDoS attack. DDoS attacks are also often used to divert attention from larger goals, such as injecting malware into company resources or exfiltrating data.

Like most cyber threats, recovering from a DDoS attack takes time and money. Aside from diverting resources to respond to the attack, your organization could also lose money during the time it takes to get your resources and services back up and running. The best way to be prepared is to have precautions in place that prevent these attacks from succeeding. Azure resources are deployed with Azure DDoS Protection Basic enabled, which provides integrated defense against common network layer threats. Azure DDoS Protection Standard provides enhanced features designed specifically for your Azure resources, including attack analytics and metrics.

Security Center works with Application Gateway, a web traffic load balancer that enables users to manage traffic to their web applications. Application Gateway also uses a Web Application Firewall (WAF) to detect, prevent, and respond to threats against web applications. Application Gateway with WAF is best combined with DDoS Protection to ensure layer 4-7 protection.

Container CPU and memory limits should be enforced

Several types of DDoS attacks, including application-layer attacks, focus on exhausting a server’s resources, such as CPU, so that the server can no longer process legitimate requests. Enforcing container CPU and memory limits helps protect your container workloads from DDoS attacks by preventing any container from using more than its configured resource limits.
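To make this recommendation concrete, here is a minimal Python sketch that scans a Kubernetes Pod manifest (represented as a dict). It lists any container that is missing a CPU or memory limit under `resources.limits`.

- **Scope:** this is a simplified stand-in for what the recommendation checks, not the actual Azure Policy definition.
- **Hypothetical:** the pod and image names are placeholders.

```python
def containers_missing_limits(pod: dict) -> list:
    """Return the names of containers in a Pod manifest that lack a CPU or memory limit."""
    missing = []
    for container in pod.get("spec", {}).get("containers", []):
        limits = container.get("resources", {}).get("limits", {})
        if "cpu" not in limits or "memory" not in limits:
            missing.append(container["name"])
    return missing


if __name__ == "__main__":
    # Hypothetical pod: one container with limits, one without.
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "web"},
        "spec": {
            "containers": [
                {"name": "frontend", "image": "example/frontend:1.0",
                 "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}}},
                {"name": "sidecar", "image": "example/sidecar:1.0"},
            ]
        },
    }
    print(containers_missing_limits(pod))  # ['sidecar']
```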

Azure Policy add-on for Kubernetes should be installed and enabled on your clusters

As discussed in our overviews of the Remediate Security Configurations and Manage Access and Permissions controls, this recommendation helps you safeguard your Kubernetes clusters by managing and reporting on their compliance state.

Next Steps

Thanks for tuning back in to learn about the “Protect applications against DDoS attacks” Security Control within Azure Security Center. To gain credit for taking steps to protect your resources from DDoS attacks, you must remediate all the recommendations within this Security Control. As a reminder, recommendations in preview are not included in your Secure Score calculation until they are generally available (GA). Make sure to also check out our previous blogs and documentation to help you on your Secure Score journey!

- The main blog post for this series (found here)

- The Docs article about Secure Score (which is this one)
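The scoring rule described in Next Steps can be expressed as a small, deliberately simplified all-or-nothing model. The control’s points are earned only when every non-preview recommendation is remediated.

- **Scope:** this is a sketch of the rule as this post states it, not Security Center’s actual Secure Score formula. That formula is documented separately and may award partial credit based on how many of your resources are healthy.
- **Hypothetical:** the healthy and preview flags in the example are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    name: str
    healthy: bool          # True once the recommendation has been remediated
    preview: bool = False  # preview recommendations do not count toward the score


def control_points(max_points: int, recommendations: list) -> int:
    """Simplified all-or-nothing model: full points only if every GA recommendation is healthy."""
    counted = [r for r in recommendations if not r.preview]
    return max_points if all(r.healthy for r in counted) else 0


if __name__ == "__main__":
    # Hypothetical states, not taken from a real subscription.
    recs = [
        Recommendation("Azure DDoS Protection Standard should be enabled", healthy=True),
        Recommendation("Container CPU and memory limits should be enforced", healthy=True),
        Recommendation("Azure Policy add-on for Kubernetes should be installed and enabled "
                       "on your clusters", healthy=False, preview=True),
    ]
    print(control_points(2, recs))  # 2: the only unhealthy item is marked as preview
```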

Reviewers

@Tobi Otolorin, Program Manager 2, CxE Network Security

@Tom Janetscheck, Senior Program Manager, CxE ASC

by Contributed | Feb 26, 2021 | Technology

Did you know that Microsoft Learn Student Ambassadors speak at least 117 languages between them? Or that about 15 percent of them go to school more than 805 kilometers from where they grew up?

To learn more about the big stuff and the small stuff that makes them tick, check out this visual introduction to who they are and what student life looks like for them.

And if you see yourself in this incredible community and wonder what you could achieve with the right opportunity, we’d love to meet you. Learn more at StudentAmbassadors.microsoft.com.

by Contributed | Feb 26, 2021 | Technology

As cities continue to connect their urban environments, the concept of digital twins (digital representations of real-world environments brought to life with real-time data from sensors and other data sources) has entered the realm of smart cities. Digital twins promise to help city administrations and urban planners make better decisions through data integration and visualization across the urban space.

Last year, we announced the general availability of the Azure Digital Twins platform, which enables developers to model and create digital representations of connected environments like buildings, factories, farms, energy networks, railways, stadiums, and cities, then bring these entities to life with a live execution environment that integrates IoT and other data sources.

Today, we are excited to announce that the open-source GitHub repository of the Smart Cities ontology for Azure Digital Twins is now available to the ecosystem.

Why ontologies

To drive openness and interoperability, Azure Digital Twins comes with an open modeling language, Digital Twins Definition Language (DTDL), which provides flexibility, ease of use, and integration into the rest of the Azure platform. Using DTDL, developers can describe twins in terms of the telemetry they emit, the properties they report or synchronize, and the commands they respond to. Most importantly, DTDL also allows describing the relationships between twins.
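To illustrate the four kinds of DTDL content mentioned above, here is a hypothetical DTDL v2 interface for a streetlight. It is written as a Python dict and serialized to JSON.

- **Hypothetical:** the `dtmi:example:smartcity:...` identifiers are made up for illustration.
- **Hypothetical:** the telemetry, property, command and relationship names are also made up.
- **Not from the ontology:** neither the identifiers nor the names are taken from the Smart Cities ontology repository.

```python
import json
from collections import Counter

# Hypothetical DTDL v2 interface; identifiers and names are illustrative only.
STREETLIGHT = {
    "@context": "dtmi:dtdl:context;2",
    "@id": "dtmi:example:smartcity:Streetlight;1",
    "@type": "Interface",
    "displayName": "Streetlight",
    "contents": [
        # Telemetry: a value the twin emits over time.
        {"@type": "Telemetry", "name": "powerConsumption", "schema": "double"},
        # Property: reported state; writable=True allows it to be set (synchronized) from outside.
        {"@type": "Property", "name": "dimmingLevel", "schema": "integer", "writable": True},
        # Command: an operation the twin responds to.
        {"@type": "Command", "name": "switchOff"},
        # Relationship: a link to another twin, here a (hypothetical) pole model.
        {"@type": "Relationship", "name": "mountedOn", "target": "dtmi:example:smartcity:Pole;1"},
    ],
}


def content_kinds(interface: dict) -> Counter:
    """Count the content elements of a DTDL interface by their @type."""
    return Counter(item["@type"] for item in interface["contents"])


if __name__ == "__main__":
    print(json.dumps(STREETLIGHT, indent=2))
    print(content_kinds(STREETLIGHT))
```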

A common representation of places, infrastructure, and assets is paramount for interoperability and for enabling data sharing between multiple domains. It’s our goal to partner with industry experts and provide DTDL-based ontologies that learn from, build on, and/or use industry standards, meet the needs of developers, and are adopted by the industry. The resulting open-source ontologies provide common ground for modeling connected environments, accelerate developers’ time to results, and enable interoperability between DTDL-based solutions from different solution providers.

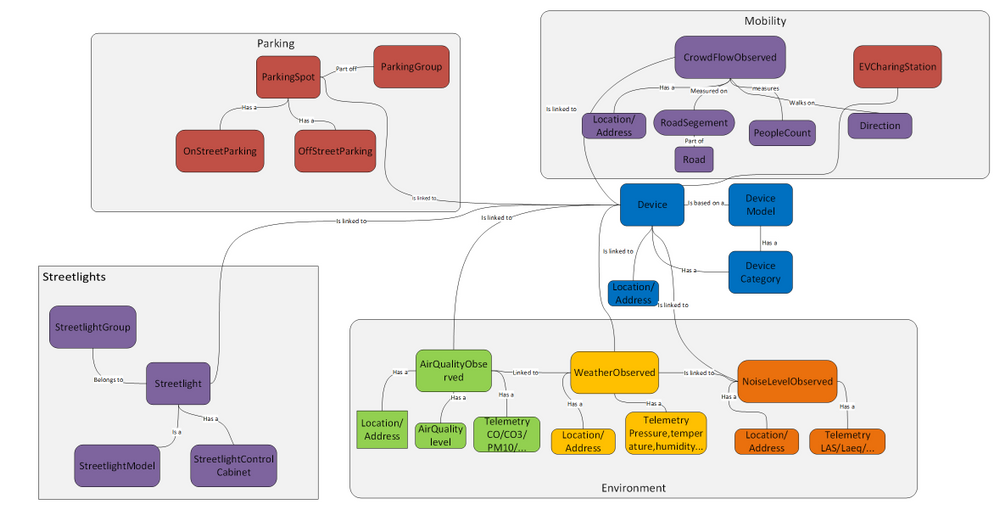

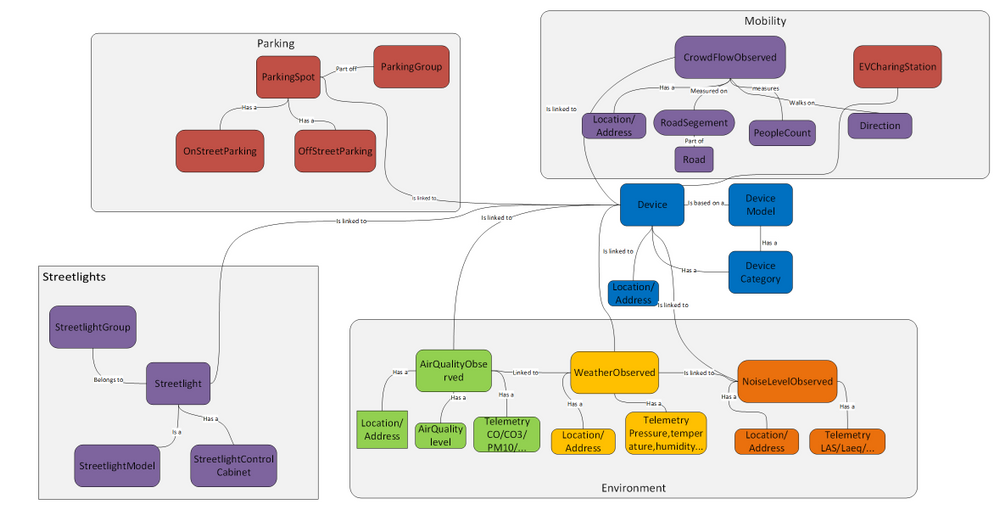

Smart Cities ontology approach and overview

We collaborated with Open & Agile Smart Cities (OASC) and Sirus to provide a DTDL-based ontology, starting with ETSI CIM NGSI-LD, to accelerate development of digital twins-based solutions for smart cities.

In addition to ETSI NGSI-LD, we’ve also evaluated Saref4City, CityGML, ISO and others.

The ETSI CIM NGSI-LD specification defines an open framework for context information exchange named NGSI-LD, which comes with an information model that defines the meaning of the most needed terms, and a domain-specific extension to model any information. The core meta-model provides a basis for representing property graphs using RDF/RDFS/OWL, and is formed of Entities, their Relationships, and their Properties with values, encoded in JSON-LD. In addition to the core meta-model, NGSI-LD compliant open models for aspects of smart cities have been defined by organizations and projects including OASC, FIWARE, GSMA, and the Synchronicity project. The NGSI-LD models for Smart Cities comprise models in the domains of Mobility, Environment, Waste, Parking, Building, Park, Port, etc.
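Here is a minimal sketch of the NGSI-LD core meta-model. The Python below checks that each attribute of an entity is either a Property (carrying a `value`) or a Relationship (carrying an `object`).

- **Hypothetical:** the entity, its identifiers and its values are made up for illustration.
- **Assumption:** the `@context` URL is assumed to be the ETSI-hosted core context. Confirm the exact URL for the NGSI-LD version you target.
- **Not handled:** NGSI-LD also defines other attribute types, such as GeoProperty, which this sketch does not cover.

```python
# Keys that form the entity envelope rather than attributes.
ENVELOPE_KEYS = {"id", "type", "@context"}


def check_entity(entity: dict) -> list:
    """Return structural problems; an empty list means the entity looks well-formed."""
    problems = []
    for key in ("id", "type"):
        if key not in entity:
            problems.append(f"missing '{key}'")
    for name, attr in entity.items():
        if name in ENVELOPE_KEYS:
            continue
        kind = attr.get("type") if isinstance(attr, dict) else None
        if kind == "Property":
            if "value" not in attr:
                problems.append(f"Property '{name}' has no 'value'")
        elif kind == "Relationship":
            if "object" not in attr:
                problems.append(f"Relationship '{name}' has no 'object'")
        else:
            problems.append(f"attribute '{name}' is neither a Property nor a Relationship")
    return problems


if __name__ == "__main__":
    # Hypothetical entity; identifiers and values are made up.
    observation = {
        "id": "urn:ngsi-ld:AirQualityObserved:example-001",
        "type": "AirQualityObserved",
        "no2": {"type": "Property", "value": 22.0},
        "refDevice": {"type": "Relationship", "object": "urn:ngsi-ld:Device:example-sensor-01"},
        "@context": ["https://uri.etsi.org/ngsi-ld/v1/ngsi-ld-core-context.jsonld"],
    }
    print(check_entity(observation))  # []
```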

The property graph nature of NGSI-LD made it quite straightforward to map it to DTDL, and with today’s release, we are making an initial set of DTDL models adapted from the NGSI-LD open models for Smart Cities available to the community.
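The correspondence described above can be sketched in a few lines. Each NGSI-LD Property becomes a DTDL Property with a primitive schema, and each Relationship becomes a DTDL Relationship.

- **Assumption:** the `dtmi:example:smartcity` prefix is hypothetical.
- **Assumption:** attribute values are limited to JSON primitives.
- **Scope:** this is a simplified illustration, not the process used to produce the published models.

```python
import json

# Keys that form the NGSI-LD entity envelope rather than attributes.
ENVELOPE_KEYS = {"id", "type", "@context"}


def dtdl_schema_for(value) -> str:
    """Pick a DTDL primitive schema for a JSON value (bool is checked before int on purpose)."""
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "integer"
    if isinstance(value, float):
        return "double"
    if isinstance(value, str):
        return "string"
    raise TypeError(f"no primitive DTDL schema for {type(value).__name__}")


def ngsi_ld_to_dtdl(entity: dict, dtmi_prefix: str) -> dict:
    """Derive a DTDL v2 interface from one NGSI-LD entity (Property -> Property, Relationship -> Relationship)."""
    contents = []
    for name, attr in entity.items():
        if name in ENVELOPE_KEYS or not isinstance(attr, dict):
            continue
        if attr.get("type") == "Property":
            contents.append({"@type": "Property", "name": name,
                             "schema": dtdl_schema_for(attr["value"])})
        elif attr.get("type") == "Relationship":
            contents.append({"@type": "Relationship", "name": name})
    return {
        "@context": "dtmi:dtdl:context;2",
        "@id": f"{dtmi_prefix}:{entity['type']};1",
        "@type": "Interface",
        "displayName": entity["type"],
        "contents": contents,
    }


if __name__ == "__main__":
    # Hypothetical NGSI-LD entity; identifiers and values are made up.
    entity = {
        "id": "urn:ngsi-ld:AirQualityObserved:example-001",
        "type": "AirQualityObserved",
        "no2": {"type": "Property", "value": 22.0},
        "refDevice": {"type": "Relationship", "object": "urn:ngsi-ld:Device:example-sensor-01"},
    }
    print(json.dumps(ngsi_ld_to_dtdl(entity, "dtmi:example:smartcity"), indent=2))
```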

We’ve focused our initial set on use cases that are increasingly relevant to cities given the availability of IoT devices and sensors. Examples include measuring air quality in a neighborhood, understanding noise levels in a district, crowd flow and traffic flow in a road segment, monitoring on-street parking spots, the availability of EV charging, and monitoring streetlights to reduce energy consumption. They also include using streetlight infrastructure to host additional smart city services for citizen-centric use cases.

In addition to ETSI NGSI-LD, we’ve also started leveraging the ETSI SAREF extension for Smart Cities (Saref4City) ontology framework for topology, administrative area, and city object modeling. Representing Saref4City ontology constructs in DTDL allowed us to model city objects like poles, their containment within an administrative area of a city, and their links to the smart city models in the mobility, environment, and parking domains adapted in DTDL from the NGSI-LD models for Smart Cities described above.
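The containment idea above can be illustrated with a tiny in-memory twin graph. An administrative area contains poles, and poles host devices, so finding every device in an area is a two-hop traversal.

- **Hypothetical:** the twin IDs and model IDs are made up for illustration.
- **Hypothetical:** the `contains` and `hosts` relationship names are not the names used in the ontology.
- **Scope:** in Azure Digital Twins itself, this graph would live in the service and be queried there, rather than held in local Python objects.

```python
from collections import defaultdict


class TwinGraph:
    """Tiny in-memory twin graph: twin id -> model id, plus named directed relationships."""

    def __init__(self):
        self.model_of = {}
        self._edges = defaultdict(list)  # (source id, relationship name) -> [target ids]

    def add_twin(self, twin_id: str, model_id: str) -> None:
        self.model_of[twin_id] = model_id

    def relate(self, source: str, relationship: str, target: str) -> None:
        if source not in self.model_of or target not in self.model_of:
            raise KeyError("both twins must exist before relating them")
        self._edges[(source, relationship)].append(target)

    def targets(self, source: str, relationship: str) -> list:
        # .get() avoids creating empty entries in the defaultdict.
        return list(self._edges.get((source, relationship), []))


def devices_in_area(graph: TwinGraph, area_id: str) -> list:
    """Two hops: area -contains-> pole -hosts-> device."""
    devices = []
    for pole in graph.targets(area_id, "contains"):
        devices.extend(graph.targets(pole, "hosts"))
    return devices


if __name__ == "__main__":
    g = TwinGraph()
    g.add_twin("district-1", "dtmi:example:smartcity:AdministrativeArea;1")
    g.add_twin("pole-17", "dtmi:example:smartcity:Pole;1")
    g.add_twin("aq-sensor-3", "dtmi:example:smartcity:AirQualityObserved;1")
    g.add_twin("streetlight-9", "dtmi:example:smartcity:Streetlight;1")
    g.relate("district-1", "contains", "pole-17")
    g.relate("pole-17", "hosts", "aq-sensor-3")
    g.relate("pole-17", "hosts", "streetlight-9")
    print(devices_in_area(g, "district-1"))  # ['aq-sensor-3', 'streetlight-9']
```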

Watch this IoT Show episode to learn how we approached the DTDL-based Smart Cities ontology, how we mapped the ETSI NGSI-LD models to DTDL and extended the ontology based on Saref4City, and how it all comes together in an example use case brought to life with Azure Digital Twins Explorer.

Partners

We collaborated with OASC and Sirus to bring the first DTDL ontology for Smart Cities for Azure Digital Twins to the community. We are also working with more partners that are validating and contributing to the open source ontology.

Open & Agile Smart Cities (OASC) is an international network of cities and communities, working with local public administrations of all sizes to support their digital transformation journey. Together with its members, partners, and experts, OASC creates sustainable impact for cities with data-driven solutions based on Minimal Interoperability Mechanisms. These include open standards, open APIs, and a recommended catalog of open data models, including NGSI-LD models, to enable interoperability for applications and systems among different cities.

Sirus is a dynamic software integration company that specializes in building IoT and Smart City solutions. Sirus enables cities to build digital solutions and integrate data from a variety of IoT devices and other systems through an open standards-based approach, like ETSI NGSI-LD, and has pioneered multiple smart city data integration platforms, including one for the City of Antwerp. Sirus invests heavily in innovation, such as smart city digital twins based on Azure, and is a pioneer in open standards implementations. Sirus is also an SME partner of OASC.

Siemens MindSphere City Graph is a solution that offers a new way to optimize city operations. Powered by Azure Digital Twins, it creates a digital twin of urban spaces, allowing cities to model, monitor, and control physical infrastructure. MindSphere City Graph uses the Digital Twins Definition Language and the ETSI NGSI-LD context information management specification. This drives openness for solution providers, who can integrate with it and deliver sustainable value for a city, while enabling open data for cities through an open-standards approach. The open-source DTDL-based ontologies for Smart Cities based on ETSI NGSI-LD will accelerate the development and integration of digital twins solutions.

ENE.HUB, a portfolio company of Brookfield Infrastructure Partners, is a fully integrated smart city infrastructure-as-a-service provider. ENE.HUB’s flagship product, the SMART.NODE™, is a comprehensive, self-contained smart pole solution. It integrates a range of smart city services, including smart lighting, communication services, energy services, environment services, transport services, safety, and media services. ENE.HUB is collaborating with Microsoft on digital twin representations of smart poles based on the open DTDL-based Smart Cities ontology.

We also continue to collaborate with our partners Bentley Systems and Imec, experts in digital twin solutions for smart cities. Both Bentley Systems and Imec are also members of the Digital Twin Consortium along with Microsoft.

Next steps

With this release of the Smart Cities ontology for digital twins, we’ve focused on an initial set of models, and we welcome your contributions to extend the initial set of use cases and improve the existing models.

Explore the open-source GitHub repository, try it out with Azure Digital Twins, and learn how to contribute.

Our goal is to accelerate your development of digital twins solutions for smart cities and enable interoperability and data sharing for cities.

If you are interested in Smart Building solutions, we worked with the RealEstateCore consortium to provide a DTDL-based ontology for Smart Buildings. We are also working on an Energy Grid ontology, which is coming soon.

As part of our commitment to openness and interoperability, we also continue to promote best practices and shared digital twin models and use cases through the Digital Twin Consortium.