by Contributed | Jan 16, 2021 | Technology

This article is contributed. See the original author and article here.

SmartScreen has become part of the Windows 10 OS, where it is named Windows Defender SmartScreen. It protects not only the Edge and Internet Explorer browsers but also other applications, such as third-party browsers, email clients, and apps, from malicious web links and malicious web downloads. Here is a quick look at SmartScreen's functionality:

- Anti-phishing and anti-malware support. Microsoft Defender SmartScreen helps to protect users from sites that are reported to host phishing attacks or attempt to distribute malicious software.

- Prevent drive-by attacks. Drive-by attacks are web-based attacks that tend to start on a trusted site, targeting security vulnerabilities in commonly used software. Because drive-by attacks can happen even if the user does not click or download anything on the page, the danger often goes unnoticed.

- Reputation-based URL and app protection. Microsoft Defender SmartScreen evaluates a website’s URLs to determine if they’re known to distribute or host unsafe content. It also provides reputation checks for apps, checking downloaded programs and the digital signature used to sign a file. If a URL, a file, an app, or a certificate has an established reputation, users won’t see any warnings. If, however, there’s no reputation, the item is marked as a higher risk and presents a warning to the user.

- Improved heuristics and diagnostic data. Microsoft Defender SmartScreen is constantly learning and endeavoring to stay up to date, so it can help to protect you against potentially malicious sites and files.

- Blocking URLs associated with potentially unwanted applications. Potentially unwanted applications are software that can cause your machine to run slowly, display unexpected ads, or, at worst, install other software.

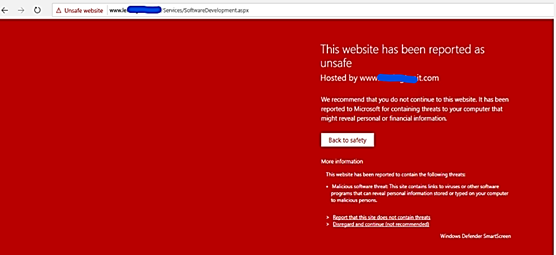

Website blacklist block example

App blocked by Defender SmartScreen example:

From Windows 10 version 1703 through version 2004, you configure SmartScreen directly through the Windows Defender SmartScreen GPO (no longer through the Edge GPO): Administrative Templates\Windows Components\Windows Defender SmartScreen\Microsoft Edge

Submit File for Whitelisting to Microsoft Security Intelligence

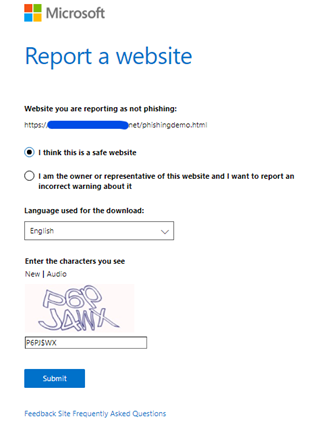

You may experience a false-positive block or warning from Defender SmartScreen when accessing the URL of an in-house web app or website, in which case you need to have those URLs whitelisted.

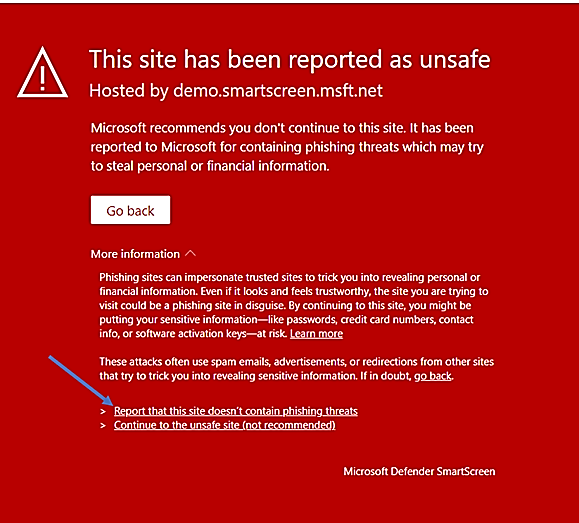

As a general rule, the product's notification usually includes a direct link for reporting errors to Microsoft Security Support. For Defender SmartScreen, the link appears in the notification, as seen here:

If your site is falsely blocked or falsely flagged as an unknown website, provide the information to the Defender SmartScreen support team. The following screenshots show the content of the report:

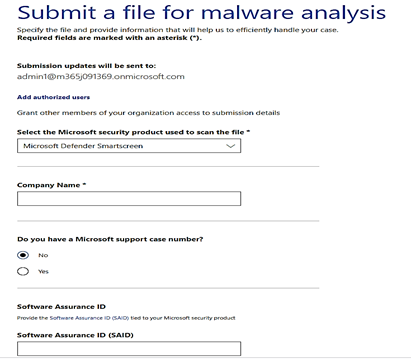

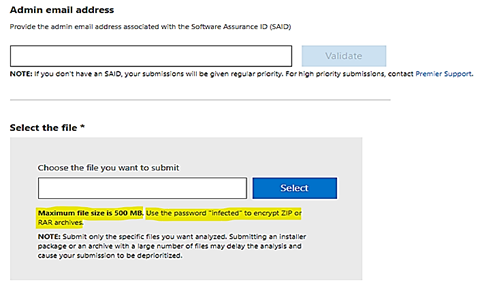

For a false-positive download block or download warning, you can manually submit the sample files on the WDSI website, choosing to submit as an enterprise user or a developer. (You can also submit files as a home user.)

- To upload a sample file larger than 500 MB, you can compress it into a ZIP or RAR archive.

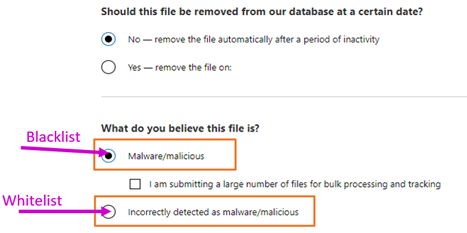

- You can report a file for the blacklist to block access to it, or for the whitelist to allow access.

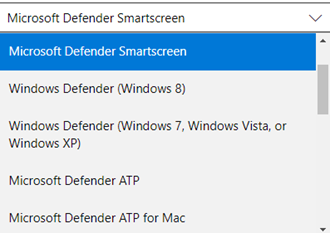

- You can submit a filtering request for almost any Microsoft software or service listed in the drop-down list of the form above:

Microsoft's goal is to minimize false warnings and blocks. For the rare case of a false warning, Microsoft offers a web-based feedback system that helps users and website owners report errors as quickly as possible. The support team verifies these reports and corrects mistakes. Enterprise Premier customers get the highest priority in response time.

Flash Player should be removed from the Sites after December 2020

- Flash will not be disabled by default from Microsoft Edge classic (Edge legacy) or Internet Explorer 11 prior to its removal by December of 2020.

- Group policies are available for enterprise admins and IT pros to change the Flash behavior

Flash will be completely removed from all browsers by December 31, 2020, via Windows Update. Companies reliant on Flash for development and playback of content are encouraged to remove the dependency on Adobe Flash prior to December 2020.

by Contributed | Jan 15, 2021 | Technology

Surface tools that assist IT admins with core security, management, and diagnostic tasks have been updated with support for the latest enterprise devices. Updated tools include:

- Surface Diagnostic Tool for Business. Eases support experience through Surface Diagnostics. This tool provides a full suite of diagnostic tests and software repairs to quickly investigate, troubleshoot, and resolve hardware, software, and firmware issues with Surface devices. Note that Surface Hub Hardware Diagnostics is already built into the Teams OS device for easier troubleshooting and is available in Microsoft Stores for download.

Built in support

In addition, the latest versions of Surface Data Eraser, Surface Brightness Control, and Surface Asset Tag already have built-in support for Surface Pro 7+.

Download

You can download these and other tools from Surface Tools for IT, available on the Microsoft Download Center. Surface Pro 7+ and Surface Hub 2S 85” are available for purchase now.

by Contributed | Jan 15, 2021 | Technology

Overview

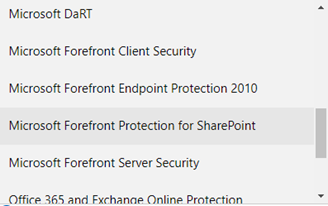

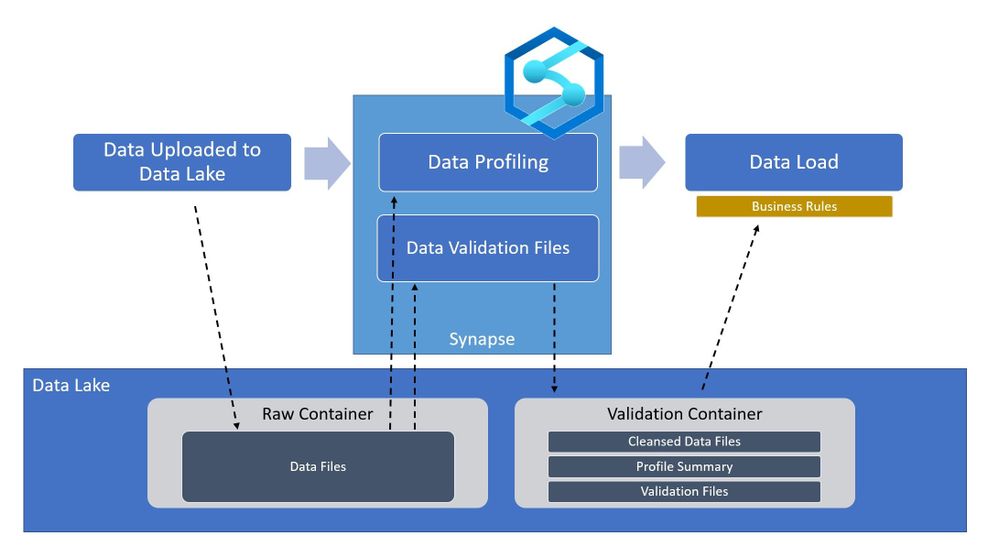

In the world of Artificial Intelligence and Machine Learning, data quality is paramount in ensuring our models and algorithms perform correctly. By leveraging the power of Spark on Azure Synapse, we can perform detailed data validation at a tremendous scale for your data science workloads.

What is Azure Synapse?

Azure Synapse is a data analytics service that provides tools for end-to-end processing of data within Azure. Azure Synapse Studio provides an interface for developing and deploying data extraction, transformation, and loading workflows within your environment. All of these workflows are built on scalable cloud infrastructure and can handle tremendous amounts of data if needed. For data validation within Azure Synapse, we will be using Apache Spark as the processing engine. Apache Spark is an industry-standard tool that has been integrated into Azure Synapse in the form of a SparkPool. This is an on-demand Spark engine that can be used to perform complex processing of your data.

Pre-requisites

Without getting into too much detail, the main requirements for running this code are an Azure Synapse Workspace and a data set, loaded to Azure Storage, on which you would like to perform some validations. The technique shown here provides a starting point for performing these types of data validations within your own use case.

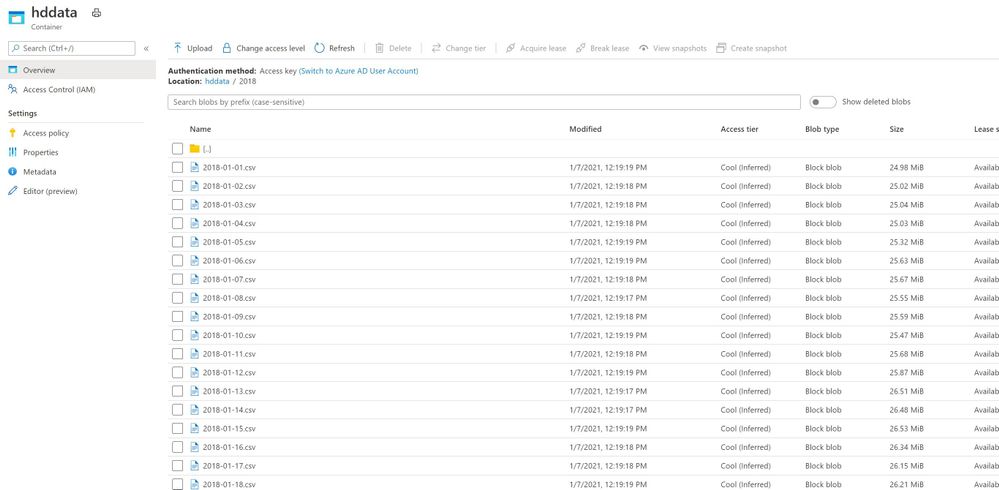

For demonstration purposes, I have loaded a data set of hard drive sensor data (link below) to an Azure Storage account and linked the storage account in Synapse (https://docs.microsoft.com/en-us/azure/synapse-analytics/get-started)

I have also set up a SparkPool for developing in PySpark notebooks within Azure Synapse. I can read the data by connecting to the data source and using some simple PySpark commands:

from pyspark.sql.functions import input_file_name

import os

df = spark.read.load('abfss://[container]@[storage_account].dfs.core.windows.net/[path]/*.csv', format='csv', header=True).withColumn('file', input_file_name())

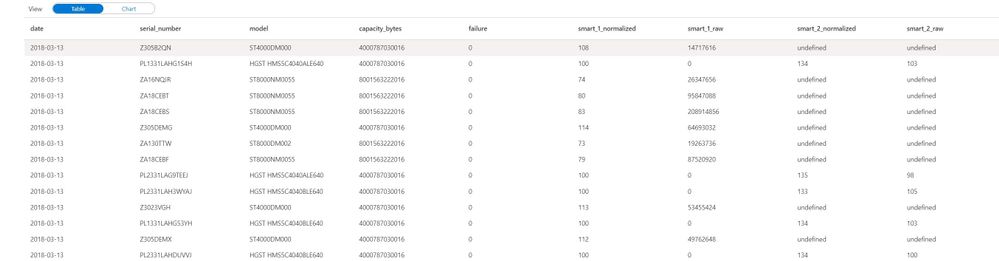

display(df)

One key part to take note of is the command .withColumn('file', input_file_name()), which adds a column named file to your dataset containing the name of the source data file. This is very helpful when trying to find malformed rows.

Let’s start validating

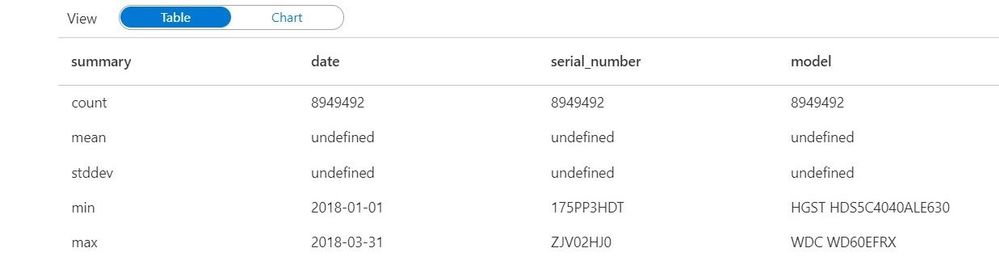

After loading the data you can begin by calculating some high-level statistics for simple validations.

df_desc = df.describe()

display(df_desc)

The describe() function calculates simple statistics (count, mean, standard deviation, min, max) that can be compared across data sets to make sure values are in the expected range. It is a built-in DataFrame function that can be used on any data. You can save these data sets back to your data lake for downstream processes using:

df_desc.write.parquet("[path][file].parquet")
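To make the idea of comparing summary statistics against an expected range concrete, here is a minimal plain-Python sketch. It does not use Spark: it assumes the values of one column have already been collected into an ordinary list, and the helper names, the sample readings, and the bounds are all hypothetical.

```python
import statistics

def summarize(values):
    """Compute the same kind of summary describe() reports for one column."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "stddev": statistics.stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }

def out_of_range(summary, expected):
    """List the statistics that fall outside their (low, high) bounds."""
    return [
        name
        for name, (low, high) in expected.items()
        if not low <= summary[name] <= high
    ]

# Hypothetical readings and bounds, for illustration only.
readings = [30.0, 32.0, 31.0, 29.0, 95.0]
expected = {"mean": (25.0, 40.0), "max": (0.0, 60.0)}

summary = summarize(readings)
violations = out_of_range(summary, expected)
print(violations)
```

A single extreme reading pulls both the mean and the max out of bounds here, which is the kind of signal a comparison of describe() output across data sets is meant to surface.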

But we can get much more complex by using spark queries to further validate the data.

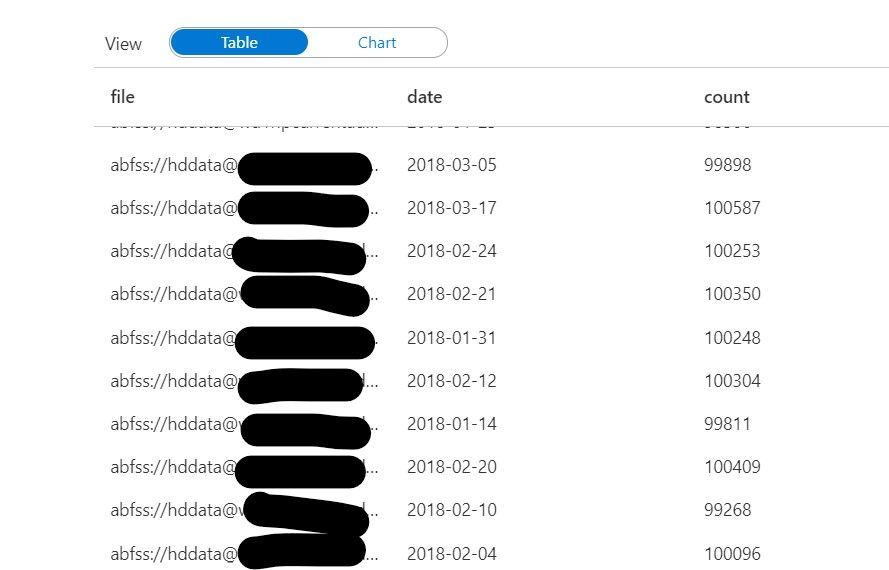

We can count how many rows were contained in each file using this code:

validation_count_by_date = df.groupBy('file','date').count()

This count can be useful in ensuring each file contains a complete dataset. If row counts fall outside the expected range, the file may be either incomplete or contain excess data.
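One possible way to decide what "expected" means is to compare each file against the median row count. The sketch below is plain Python, not Spark, and works on rows shaped like the output of the groupBy above; the function name, the 10% tolerance, and the sample rows are assumptions made for illustration.

```python
import statistics

def flag_outlier_counts(rows, tolerance=0.10):
    """Return rows whose count deviates from the median by more than tolerance."""
    median = statistics.median(count for _, _, count in rows)
    low, high = median * (1 - tolerance), median * (1 + tolerance)
    return [row for row in rows if not low <= row[2] <= high]

# Hypothetical (file, date, count) rows, for illustration only.
rows = [
    ("2020-01-01.csv", "2020-01-01", 1000),
    ("2020-01-02.csv", "2020-01-02", 12),
    ("2020-01-03.csv", "2020-01-03", 1004),
]

suspect = flag_outlier_counts(rows)
print(suspect)
```

The median is used rather than the mean so that one badly truncated file does not shift the baseline it is being compared against.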

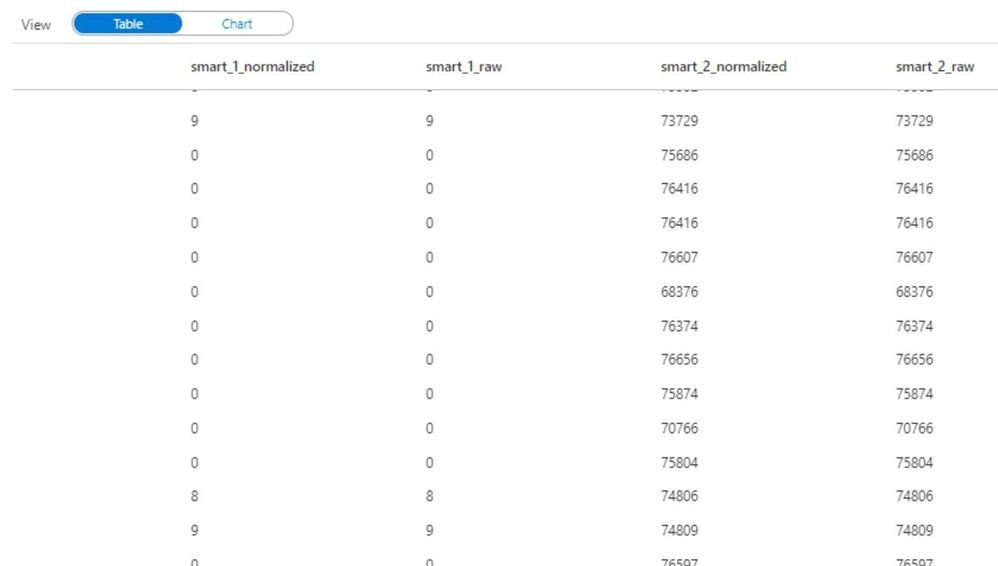

Furthermore, we can look at specific values within the data at row-level granularity. The code below uses the named columns “file” and “date” to group missing values across all files in your data set. Missing values are defined as Null or NaN values in the dataset.

from pyspark.sql.functions import isnull, isnan, when, count, col

cols = [count(when(isnan(c) | col(c).isNull(), c)).alias(c) if c not in ['file', 'date'] else count(col(c)).alias('count_{}'.format(c)) for c in df.columns]

missing_by_file = df.groupBy('file', 'date').agg(*cols)

display(missing_by_file)

Don’t be intimidated by the above code. I am essentially using a Python list comprehension to generate a list of column expressions, cols, and then using the * (star) operator to pass those columns to the agg function. I encourage you to try running these commands on your data to become familiar with the power of Python.
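If the comprehension and the star operator are unfamiliar, the same two Python features can be tried without Spark. In this small sketch, the column list and the collect function are hypothetical stand-ins; only the alias logic mirrors the code above.

```python
def alias_for(name):
    """Mirror the conditional: key columns get a count_ prefix, others keep their name."""
    return name if name not in ["file", "date"] else "count_{}".format(name)

def collect(*args):
    """Accept any number of positional arguments, the way agg() does."""
    return list(args)

# Hypothetical column names, for illustration only.
columns = ["file", "date", "model", "failure"]

# One alias per column, built in a single expression.
aliases = [alias_for(c) for c in columns]
print(aliases)

# The star unpacks the list: collect(*aliases) is collect(aliases[0], aliases[1], ...).
unpacked = collect(*aliases)
print(len(unpacked))
```

This also shows where the count_file column used in later steps comes from: it is the alias given to the count of the grouping column file.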

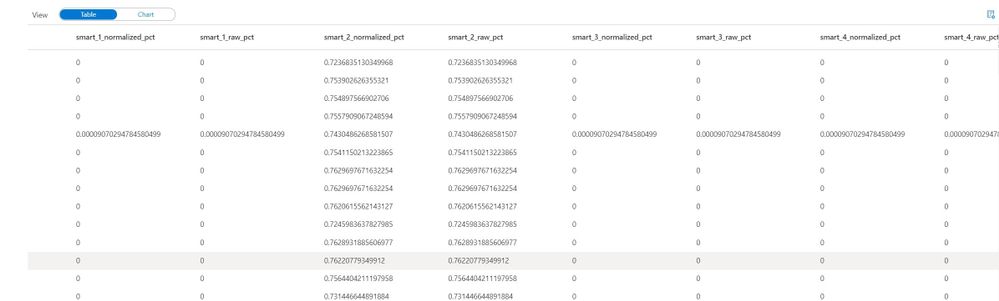

We can go a step further in calculating the ratio of missing values for each column within a file:

missing_by_file_pct = missing_by_file.select(['date', 'file', *[(col(c)/col('count_file')).alias('{}_pct'.format(c)) for c in df.columns if c not in ['file', 'date']]])

display(missing_by_file_pct)

Now, instead of looking at total counts, we can identify incomplete data by looking at the ratio of missing values in each file. Again, this technique, accompanied by business rules, can be very powerful in validating raw data sources and ensuring data quality in a scalable way.
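As an illustration of pairing these ratios with a business rule, the plain-Python sketch below takes one row of missing-value counts, converts it to ratios, and lists the columns that exceed a threshold. The function names, the 5% limit, and the sample row are assumptions, not part of the original notebook.

```python
KEY_COLUMNS = ("file", "date", "count_file", "count_date")

def missing_ratios(row, total_key="count_file"):
    """Divide each missing-value count by the number of rows in the file."""
    total = row[total_key]
    return {
        "{}_pct".format(name): value / total
        for name, value in row.items()
        if name not in KEY_COLUMNS
    }

def failing_columns(ratios, max_missing=0.05):
    """Return the ratio columns that exceed the allowed share of missing values."""
    return sorted(name for name, ratio in ratios.items() if ratio > max_missing)

# Hypothetical row shaped like one record of missing_by_file.
row = {
    "file": "2020-01-01.csv",
    "date": "2020-01-01",
    "count_file": 200,
    "count_date": 200,
    "model": 0,
    "smart_1_raw": 50,
}

ratios = missing_ratios(row)
failing = failing_columns(ratios)
print(failing)
```

In practice the threshold would likely differ per column, since some sensors are expected to be sparsely populated.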

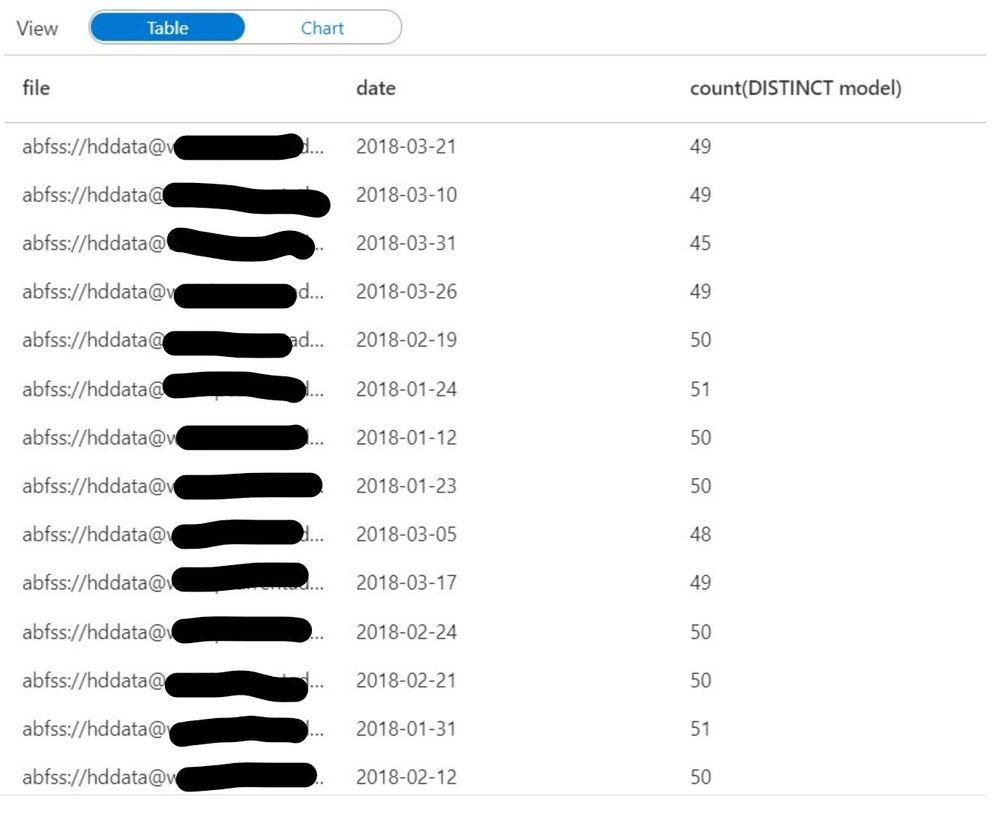

Up until this point, I have only named the columns “file” and “date”. All other columns have been derived from the source file header/schema, so there is no need to maintain column lists as part of the validation rules. If you do need to check specific values within a file, you can easily extend these examples, for instance:

from pyspark.sql.functions import countDistinct

validation_modelcount_by_date = df.groupBy('file','date').agg(countDistinct('model'))

display(validation_modelcount_by_date)

The query above counts the distinct values of the column “model” that are present in each file. As you can see, the count of distinct models varies slightly from file to file, so we can establish an acceptable range fairly easily by looking at this data.

How can I use this in my processes?

Going back to the high-level architecture diagram shown at the beginning of the blog, this technique can be applied during the ingestion of your data to certify whether the raw files are qualified to be in the data lake. By using Synapse Spark we can perform the row-level checks quickly and efficiently, and output the results back into the data lake. Downstream processes such as Machine Learning Models and/or Business Applications can then read the validation data to determine whether or not to use the raw data without having to re-validate it. With Azure Synapse and Spark, you can perform powerful validations on very large data sources with minimal coding effort.
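The hand-off between ingestion and downstream consumers could look roughly like the sketch below. It is plain Python with JSON text standing in for a results file in the data lake (the article itself writes parquet); the record layout, the check names, and the certify helper are hypothetical.

```python
import json

def certify(file_name, checks):
    """Build a validation record; a file is certified only if every check passed."""
    return {"file": file_name, "checks": checks, "certified": all(checks.values())}

# Ingestion side: one record per raw file (hypothetical check results).
records = [
    certify("2020-01-01.csv", {"row_count": True, "missing_ratio": True}),
    certify("2020-01-02.csv", {"row_count": False, "missing_ratio": True}),
]

# Persist the verdicts; this string stands in for a file written to the data lake.
stored = json.dumps(records)

# Downstream side: read the verdicts and keep only certified files,
# without re-running any validation on the raw data.
usable = [r["file"] for r in json.loads(stored) if r["certified"]]
print(usable)
```

Keeping the individual check results alongside the overall verdict lets a consumer apply a stricter or looser rule than the default if it needs to.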

Data reference: Backblaze Hard Drive Stats

Github Link: AzureSynapseDataValidation/Validation-Sample.ipynb

by Scott Muniz | Jan 15, 2021 | Security, Technology

The National Security Agency (NSA) has released an information sheet with guidance on adopting encrypted Domain Name System (DNS) over Hypertext Transfer Protocol over Transport Layer Security (HTTPS), referred to as DNS over HTTPS (DoH). When configured appropriately, strong enterprise DNS controls can help prevent many initial access, command and control, and exfiltration techniques used by threat actors.

CISA encourages enterprise owners and administrators to review the NSA Info Sheet: Adopting Encrypted DNS in Enterprise Environments and consider implementing the recommendations to enhance DNS security.
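For readers unfamiliar with what a DoH request carries, the following sketch builds the GET form described in RFC 8484: a DNS query in wire format, base64url-encoded without padding and placed in a dns query parameter. It makes no network calls. The resolver URL is a hypothetical placeholder, and the helper names are illustrative only.

```python
import base64
import struct

def build_dns_query(name, qtype=1):
    """Encode a DNS query in wire format (RFC 1035); qtype 1 is an A record."""
    # Header: ID 0 (RFC 8484 suggests 0 for cache friendliness),
    # flags with recursion desired, one question, no other records.
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)
    labels = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    )
    question = labels + b"\x00" + struct.pack("!HH", qtype, 1)  # class IN
    return header + question

def doh_get_url(resolver_url, name):
    """Build a DoH GET URL: base64url without padding in the dns parameter."""
    encoded = base64.urlsafe_b64encode(build_dns_query(name)).rstrip(b"=")
    return resolver_url + "?dns=" + encoded.decode("ascii")

# Hypothetical resolver endpoint, for illustration only.
url = doh_get_url("https://doh.example.net/dns-query", "www.example.com")
print(url)
```

Because the query travels inside ordinary HTTPS, it is not visible to network-level DNS monitoring, which is why the guidance focuses on routing it through resolvers the enterprise controls.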
