by Scott Muniz | Jul 20, 2020 | Uncategorized

This article is contributed. See the original author and article here.

> This is a newly released set of tutorials on Node.js. It takes you from the very beginning of your developer journey with Node.js, covering the what and why of Node.js, through to debugging, file management, dependency management, and even web development.

The full path is found here: LEARN module path on Node.js

-1- Introduction to Node.js

This is a great primer if you are considering using Node.js. It explains the why and also shows how you can use it from the command line.

This module teaches you the following:

- Explain what Node.js is

- Describe how Node.js works

- Identify when to use Node.js

- Create and run a Node.js script from the command line

Introduction to Node.js
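To make "create and run a Node.js script from the command line" concrete, here is a minimal sketch. The file name `hello.js` and the `greet` function are assumptions made for illustration; they are not taken from the module itself.

```javascript
// hello.js - a minimal command-line script (the file name is an assumption)

// Build a greeting for the given name, defaulting to "world".
function greet(name = 'world') {
  return `Hello, ${name}!`;
}

// process.argv holds: [node binary, script path, ...user arguments]
const [, , nameArg] = process.argv;
console.log(greet(nameArg));
```

Running `node hello.js Ada` passes `Ada` as the first user argument; running it with no argument falls back to the default name.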

-2- Create a new Node.js project and work with dependencies

Node.js has a rich ecosystem of packages that you can install and use in your app. Once installed, these packages become dependencies that you need to learn how to manage, as they will be with the project for a while. This module therefore teaches dependency management and everything that goes with it, such as how to:

- Initialize Node.js projects

- Understand what the manifest file package.json consists of and use it to your advantage

- Add packages to and remove packages from your Node.js project

- Manage your package dependencies and update them in a predictable way

Manage dependencies
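As a rough illustration of what the manifest file holds, the sketch below parses a hypothetical package.json and lists its dependencies. The manifest content, the package versions, and the `listDependencies` helper are all assumptions made for this example.

```javascript
// A hypothetical package.json, inlined as a string so the sketch is self-contained.
const manifestText = `{
  "name": "demo-app",
  "version": "1.0.0",
  "scripts": { "start": "node index.js" },
  "dependencies": { "express": "^4.17.1" },
  "devDependencies": { "jest": "^26.0.0" }
}`;

// Return "name@range" strings for one dependency section of the manifest.
function listDependencies(manifest, section = 'dependencies') {
  return Object.entries(manifest[section] || {}).map(
    ([name, range]) => `${name}@${range}`
  );
}

const manifest = JSON.parse(manifestText);
console.log(listDependencies(manifest));                    // runtime dependencies
console.log(listDependencies(manifest, 'devDependencies')); // development-only tools
```

In a real project the manifest is typically created with `npm init` and changed with `npm install <package>`, `npm uninstall <package>`, and `npm update` rather than edited by hand.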

-3- Work with files and directories

Working with files and directories is a great skill to have regardless of the programming language used. This module takes you through a lot of things that are good to know when dealing with input and output.

Sample:

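const fs = require('fs').promises;

// await is only valid inside an async function in a CommonJS script
async function main() {
  const items = await fs.readdir("stores");
  console.log(items); // [ '201', '202', 'sales.json', 'totals.txt' ]
}

main().catch(console.error);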

This module teaches you the following:

- Work with directories

- Create and delete files

- Read from files

- Write to files

- Parse data in files

Work with files and directories
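The topics above (create, write, read, parse, delete) can be combined into one small sketch. The file name `sales.json`, the sample numbers, and the `writeAndSumSales` helper are assumptions made for illustration; the code works in a temporary directory so it does not touch real data.

```javascript
const fs = require('fs').promises;
const os = require('os');
const path = require('path');

// Write a JSON file, read it back, parse it, and total the values.
async function writeAndSumSales(dir, sales) {
  const file = path.join(dir, 'sales.json');
  await fs.writeFile(file, JSON.stringify(sales)); // create or overwrite the file
  const text = await fs.readFile(file, 'utf8');    // read it back as a string
  const parsed = JSON.parse(text);                 // parse the JSON content
  return parsed.reduce((sum, n) => sum + n, 0);
}

async function main() {
  // Create a throwaway directory under the OS temp folder.
  const dir = await fs.mkdtemp(path.join(os.tmpdir(), 'stores-'));
  const total = await writeAndSumSales(dir, [10, 20, 12.5]);
  console.log(total);
  // Delete the directory and its contents (fs.rm needs Node.js 14.14 or later).
  await fs.rm(dir, { recursive: true, force: true });
}

main().catch(console.error);
```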

-4- Debugging

Being able to debug efficiently can save you a lot of time. This module takes you through all that you might possibly need to know to be really good at debugging in Node.js. The module covers the following topics:

- Use VS Code debugger with a Node.js program.

- Create breakpoints and run your code step by step to find issues.

- Inspect your program state at any execution step.

- Rewind the call stack to find the source of an exception.

Debugging module
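A small program to practice on can help here. The sketch below is an assumed example (the `cartTotal` function and its data are not from the module): it uses the standard `debugger` statement, which pauses execution only when a debugger such as VS Code or `node inspect` is attached and otherwise does nothing.

```javascript
// Sum the prices in a cart.
function cartTotal(items) {
  let total = 0;
  for (const item of items) {
    debugger; // when a debugger is attached, inspect `item` and `total` here
    total += item.price * item.quantity;
  }
  return total;
}

console.log(cartTotal([
  { name: 'pen', price: 2, quantity: 3 },
  { name: 'notebook', price: 5, quantity: 1 },
]));
```

Running the file with `node inspect <file>` starts the built-in command-line debugger; in VS Code, a breakpoint in the gutter on the same line serves the same purpose without changing the code.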

-5- Use Express to build a Web App

Sample:

const express = require('express');

const app = express();

app.get('/', (req, res) => res.send('Welcome to the app'))

app.listen(8000, () => console.log('Server up and running'))

This module teaches you the following:

- Describe the core concepts of the web framework Express

- Configure middleware to control how a request is handled

- Respond to HTTP requests with different types of content like text and JSON

- Send data to an Express endpoint

Build web apps
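The middleware idea can be sketched without installing anything. The code below is not Express and not how Express is implemented; it is a plain-JavaScript approximation in which `runMiddleware`, `logger`, `requireKey`, and the request and response objects are all hypothetical. It only shows the pattern: each function receives the request, the response, and a `next` callback, and decides whether the chain continues.

```javascript
// Run each middleware in order; a middleware continues the chain by calling next().
function runMiddleware(middlewares, req, res) {
  let index = 0;
  const next = () => {
    const mw = middlewares[index++];
    if (mw) mw(req, res, next);
  };
  next();
  return res;
}

// Middleware 1: record the request, similar in spirit to a logging middleware.
const logger = (req, res, next) => {
  res.log = `${req.method} ${req.url}`;
  next();
};

// Middleware 2: stop the chain early when no API key is present.
const requireKey = (req, res, next) => {
  if (!req.headers['x-api-key']) {
    res.status = 401;
    res.body = { error: 'missing key' };
    return; // next() is not called, so the final handler never runs
  }
  next();
};

// Final handler: respond with JSON-like data.
const handler = (req, res) => {
  res.status = 200;
  res.body = { message: 'Welcome to the app' };
};

const ok = runMiddleware(
  [logger, requireKey, handler],
  { method: 'GET', url: '/', headers: { 'x-api-key': 'abc' } },
  {}
);
console.log(ok.status, ok.body);
```

In Express itself, the equivalent functions would be registered with `app.use(...)` and the handler with `app.get(...)`, as in the sample above.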

by Scott Muniz | Jul 20, 2020 | Uncategorized


We are super excited to share the recent highlights that are now available!

- Advanced eDiscovery and Advanced Audit now rolling out to GCC, GCC High and DOD

- Yammer now fully available for eDiscovery including threaded review

- Improvements in search performance and reliability including:

  - Search reliability improved significantly to 99.95%

  - Search performance achieved an 83% reduction in time to complete large searches

  - Collection throughput increased 5X to 50GB/HR

- Bulk-add custodians to manage custodians in bulk

- Advanced Indexing for non-custodial data sources

- Expanded review set limits 3x (now 300GB per load and 3M docs per set)

- Export improvements, including support for a direct-download model where content is zipped and available via the browser (removing the dependency on Azure Storage Explorer), and export to PST

- Custodian picker performance improved 10X to sub-second response

by Scott Muniz | Jul 20, 2020 | Uncategorized


This post was authored by @Pawel Partyka from the Office 365 ATP Customer Experience Engineering team, and Vipul Pandey from the Office 365 ATP PM team.

1 – Introduction

Office 365 Advanced Threat Protection provides several built-in security alerts to help customers detect and mitigate potential threats. In many large enterprises there is often a need to integrate these alerts with a SIEM platform or other case management tools to enable a Security Operations Center (SOC) team to monitor the alerts. The Office 365 Management Activity API provides these SOC teams the ability to integrate O365 ATP alerts with other platforms.

One of the challenges that organizations often face, particularly large enterprises, is the ever-increasing volume of alerts that the SOC needs to monitor. This at times makes it important to integrate and onboard only specific alerts to the monitoring and case management platforms or SIEM.

Let’s take an integration scenario that we worked on recently. As part of an effort to deal with phishing-related threats, one of our large enterprise customers wanted to fetch and integrate “user-reported phishing alerts”. However, they wanted their SOC to get only those alerts that have already been processed by an Automated Investigation and Response (AIR) playbook, to reduce false positives and focus on real threats.

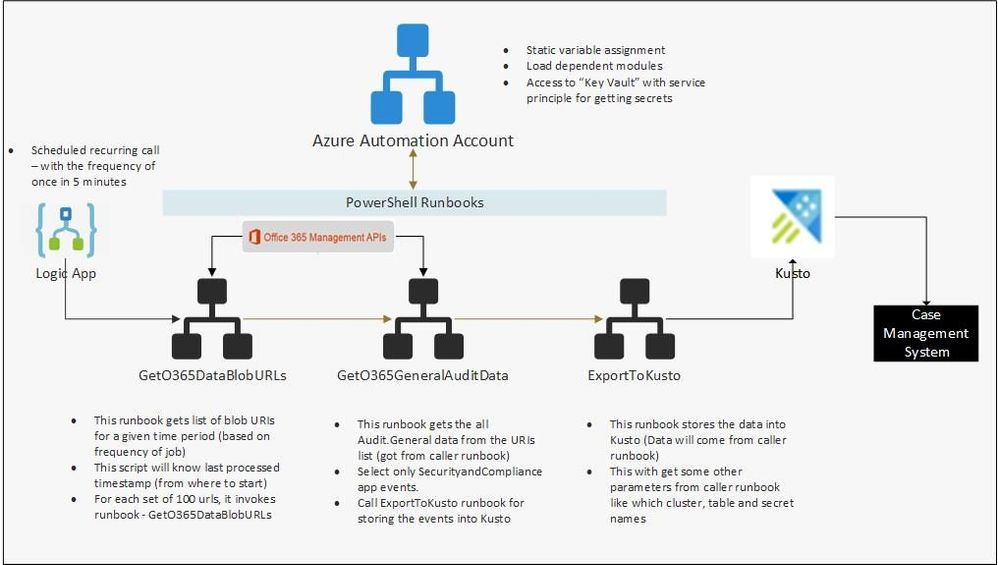

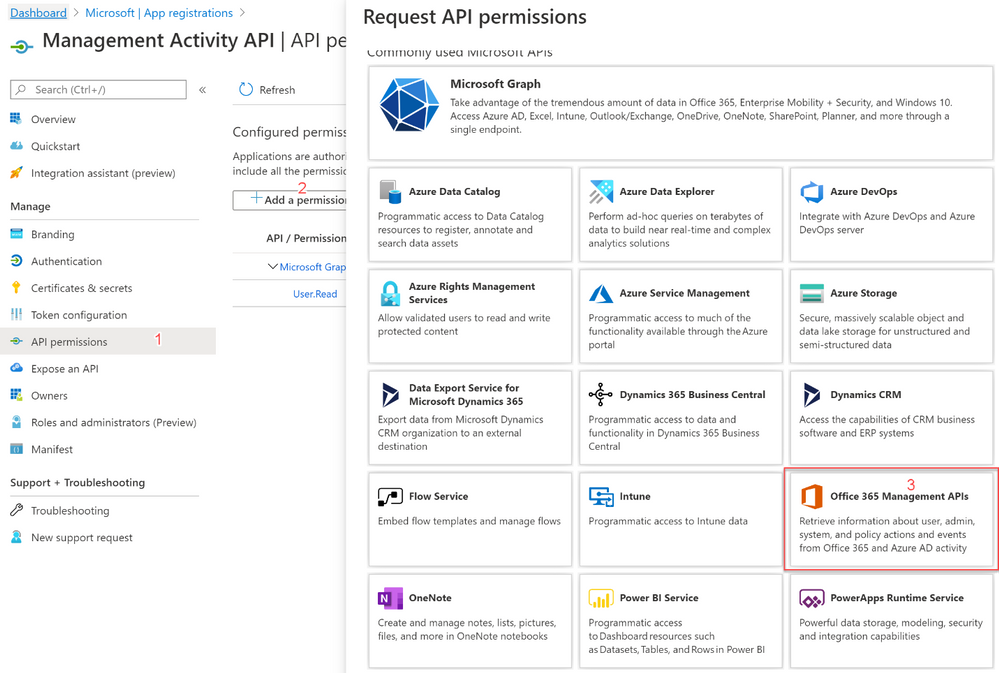

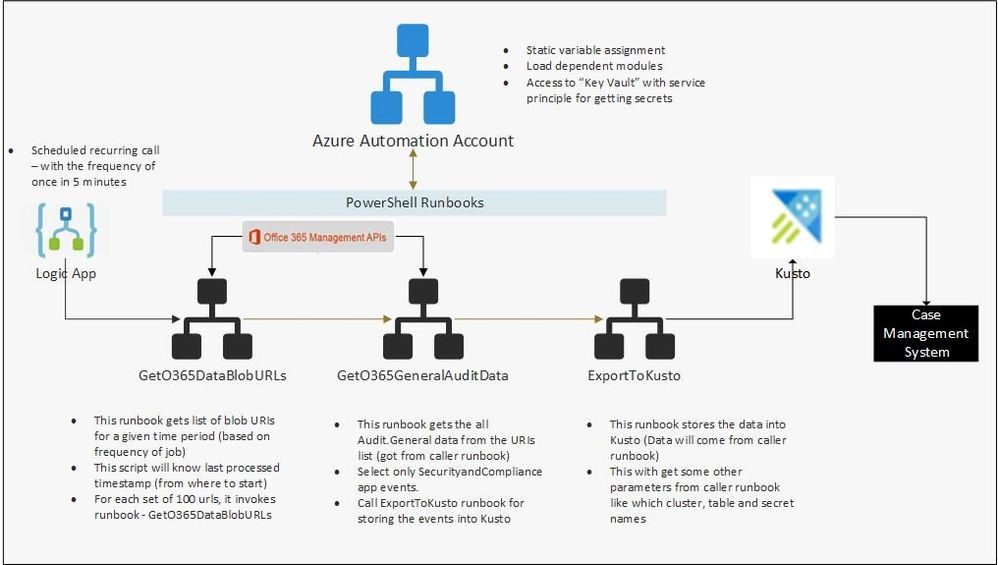

Our engineering team worked on a solution which efficiently fetches only the relevant alerts using the Office 365 Management API and integrates them with the SIEM and case management platform. Below is the solution and the reference architecture. This could potentially be used to fetch and integrate other relevant alerts from Office 365 ATP.
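The filtering idea can be sketched as a simple predicate over audit records. This is an illustration in JavaScript, not the PowerShell used in this solution. The field names are taken from the KQL projection in section 13, while the alert name text, the rule "a record with an InvestigationId has been processed by AIR", and the sample records are assumptions.

```javascript
// ASSUMPTION: illustrative alert name; check the actual value in your tenant's data.
const USER_REPORTED_ALERT = 'Email reported by user as malware or phish';

// Keep only user-reported phishing alerts that already have an AIR investigation.
// ASSUMPTION: a non-empty InvestigationId is treated as "processed by AIR".
function isRelevantAlert(record) {
  const userReported = record.Name === USER_REPORTED_ALERT;
  const processedByAir = Boolean(record.InvestigationId);
  return userReported && processedByAir;
}

// Hypothetical sample records.
const records = [
  { Name: USER_REPORTED_ALERT, InvestigationId: 'urn:inv:1', Severity: 'Low' },
  { Name: USER_REPORTED_ALERT, InvestigationId: '', Severity: 'Low' },
  { Name: 'Some other alert', InvestigationId: 'urn:inv:2', Severity: 'High' },
];

const relevant = records.filter(isRelevantAlert);
console.log(relevant.map((r) => r.InvestigationId));
```

Filtering before the data reaches the SIEM keeps the volume down, which is the point of the scenario described above.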

2 – Azure Components

As mentioned in the introduction, we have used the Azure cloud to set up end-to-end infrastructure for getting O365 audit events and storing the required filtered data for near real-time security monitoring and historical analysis. After evaluating and analyzing various combinations of Azure services, we decided to use the following Azure components.

- Azure Data Explorer (Kusto) for final storage, which gives us real-time querying capabilities for data analysis.

- Azure blob containers – As a staging area and for data archival.

- Azure automation account – For deploying and automating the PowerShell scripts we used to fetch audit data using the Office 365 Management API.

- Azure Logic app – For scheduling and calling the PowerShell runbooks.

- Azure Key Vault – For storing the secrets required for accessing the Management API.

The architecture diagram below depicts the end-to-end setup.

Figure 1: Architecture diagram


3 – Setting up the Azure Active Directory application

To access audit data from the O365 Management API, we’ll configure access through an Azure Active Directory (AAD) application. Create an AAD application using the steps below, and get access to this AAD application from the tenant admin. Once you have access, keep a note of the Client Id and secret key of this application, because we will need these details later.

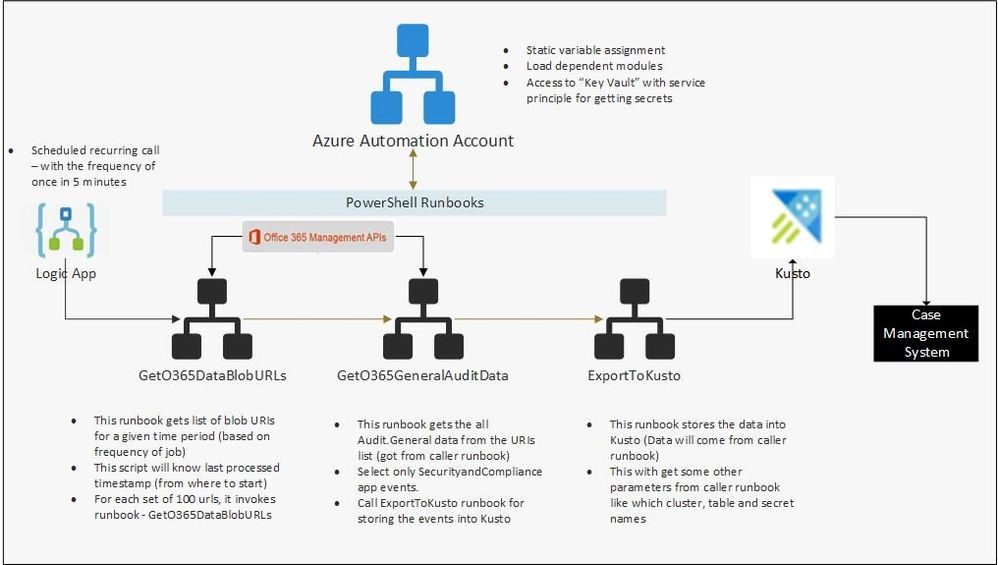

3.1 – Azure AD app registration

- Navigate to the Azure AD admin portal.

- Click “New registration”.

- Enter a name for your app (for example “Management Activity API”). Leave the “Accounts in this organizational directory only” option selected.

- Select “Web” and click “Register”.

Figure 2: Registering an Azure AD application


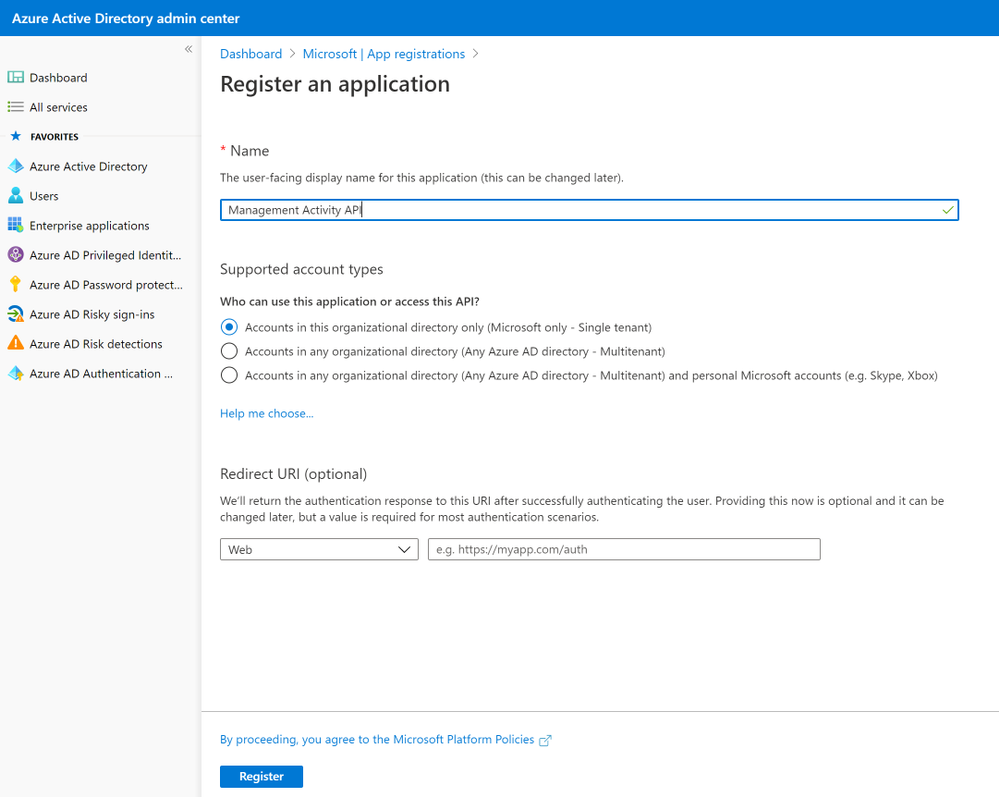

- Click “API permissions” from left navigation menu.

- Click “Add a permission” and then “Office 365 Management APIs”.

Figure 3: Requesting API permissions


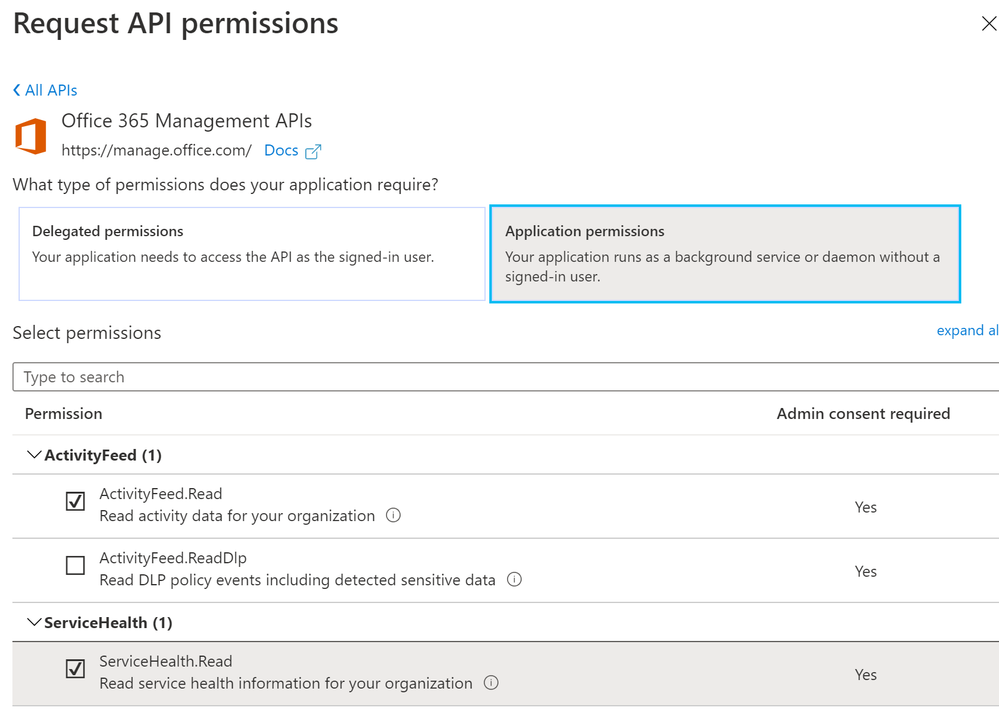

- Click “Application permissions”. Expand “ActivityFeed” and select “ActivityFeed.Read”, expand “ServiceHealth” and select “ServiceHealth.Read”.

Figure 4: Configuration of API permissions


- Click Add permissions.

- Refresh the list of permissions. Click “Grant admin consent for <your organization’s name>”. Click Yes.

- On the App screen click “Overview” and copy “Application (client) ID” to the clipboard. You will need this later when configuring the ClientIDtoAccessO365 Automation Account variable value.

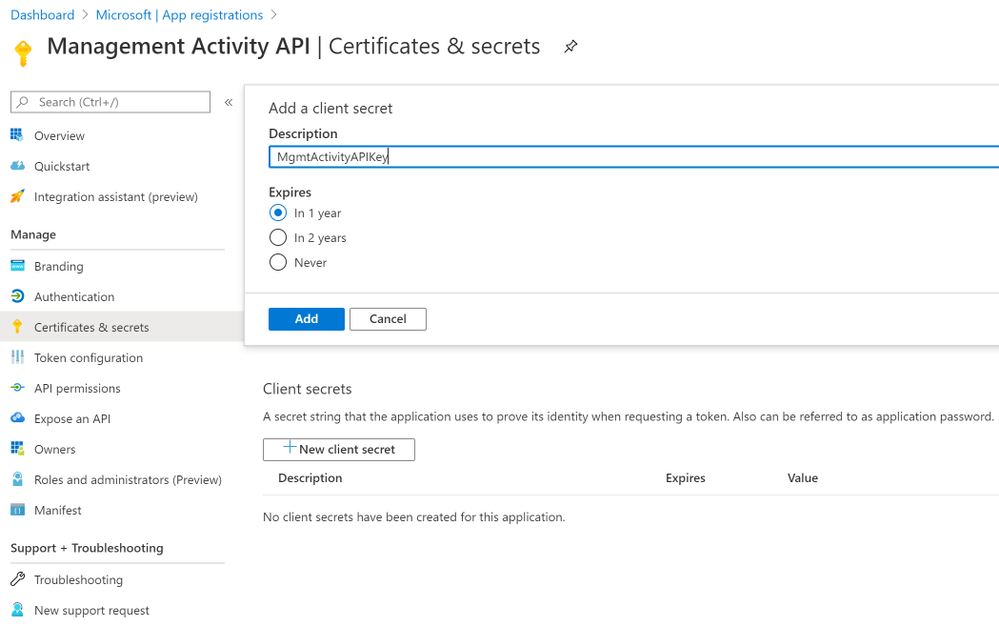

- Click “Certificates & secrets”. Click “New client secret”. Assign a name to the secret. Click Add.

Figure 5: Certificates & secrets


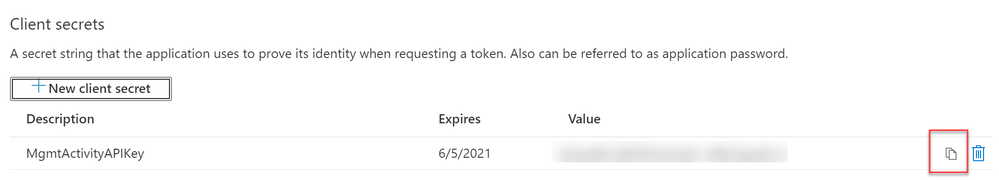

- After the secret is generated, copy the secret to the clipboard by clicking on the “copy” icon next to the secret value. You will need this later when configuring the ClientSecrettoAccessO365 Automation Account variable value.

Figure 6: Client secrets
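With the client ID and secret in hand, an access token can be requested using the OAuth 2.0 client-credentials flow. The sketch below is in JavaScript and is not the PowerShell used by the runbooks. It assumes the Azure AD v2.0 token endpoint, uses the login URL and resource scope that appear later in Table 2 (MicrosoftLoginURL and O365ResourceUrl), and the function names and placeholder values are hypothetical.

```javascript
// Build the OAuth 2.0 client-credentials token request for the AAD application.
// ASSUMPTION: the v2.0 endpoint is used, which matches the ".default" scope suffix.
function buildTokenRequest({ loginUrl, tenantId, clientId, clientSecret, scope }) {
  const url = `${loginUrl}/${tenantId}/oauth2/v2.0/token`;
  const body = new URLSearchParams({
    grant_type: 'client_credentials',
    client_id: clientId,
    client_secret: clientSecret,
    scope,
  });
  return { url, body };
}

// Send the request and return the access token (needs Node.js 18+ for global fetch).
async function getAccessToken(config) {
  const { url, body } = buildTokenRequest(config);
  const response = await fetch(url, { method: 'POST', body });
  if (!response.ok) throw new Error(`Token request failed: ${response.status}`);
  return (await response.json()).access_token;
}

// Placeholder values only; never hard-code a real secret.
const request = buildTokenRequest({
  loginUrl: 'https://login.microsoftonline.com',
  tenantId: '<tenant-guid>',
  clientId: '<application-client-id>',
  clientSecret: '<client-secret>',
  scope: 'https://manage.office.com/.default',
});
console.log(request.url);
```

In this solution the secret is kept in Azure Key Vault or an encrypted Automation variable, so a real implementation would read it from there rather than from source code.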


4 – Set up storage account

We need to set up a storage account for data storage, which we will use as a staging area. We can retain data here for the longer term, to be used for historical data analysis. Below is the step-by-step process for setting up the storage account.

- Note: Use the same “Resource Group Name” for all the resources we are setting up, so that we can track all the resources easily.

- Make a note of the name of the blob storage account and name of the container, because we will need to assign this to a variable in the automation account.

Once we create the container, we can see it as shown below when we navigate to the “Container” section inside the storage account.

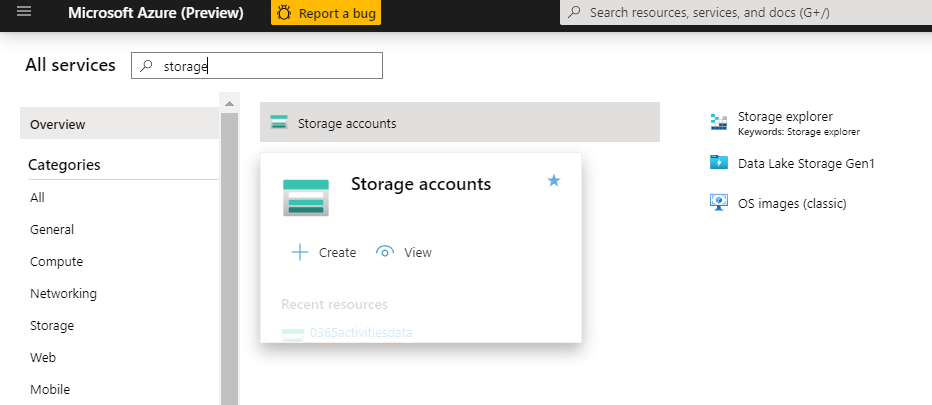

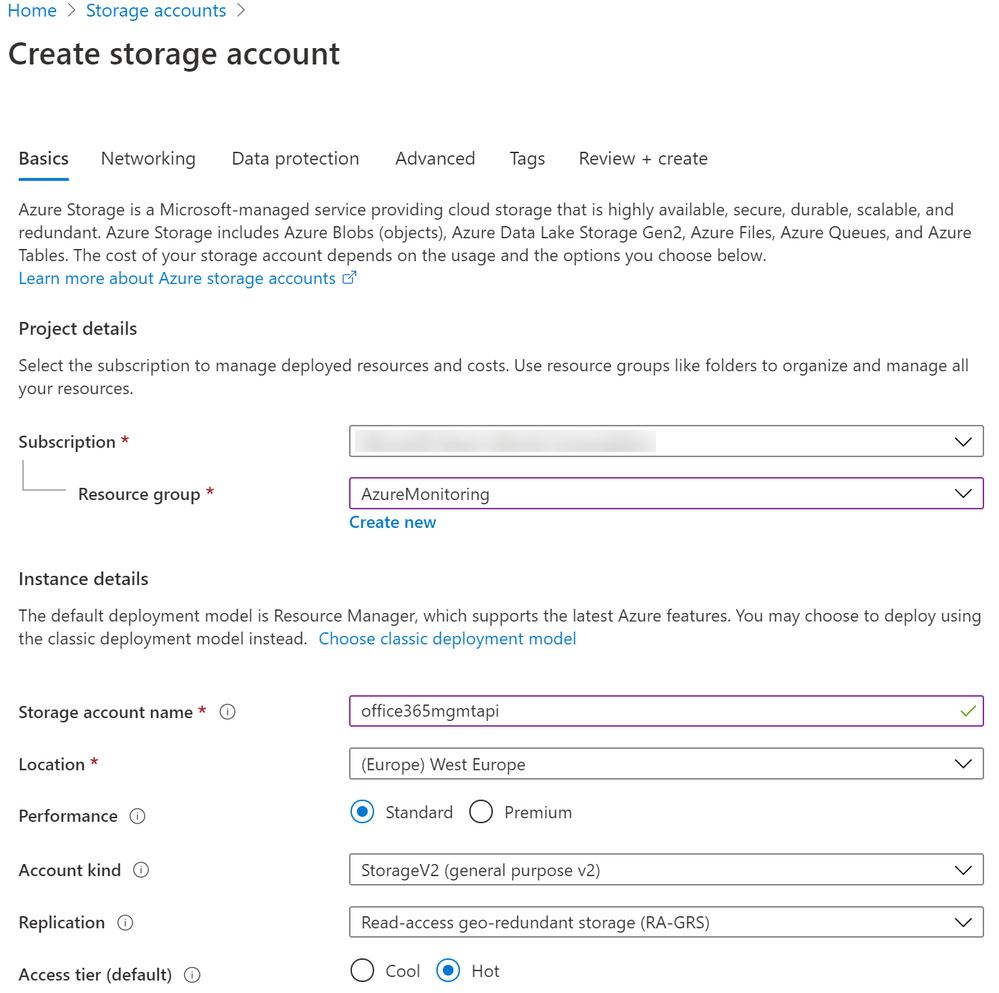

Steps to create Storage Account

- Navigate to “Storage account” service in the Azure portal and select “Add new” to create a new storage account.

Figure 7: Storage accounts


Figure 8: Create storage account


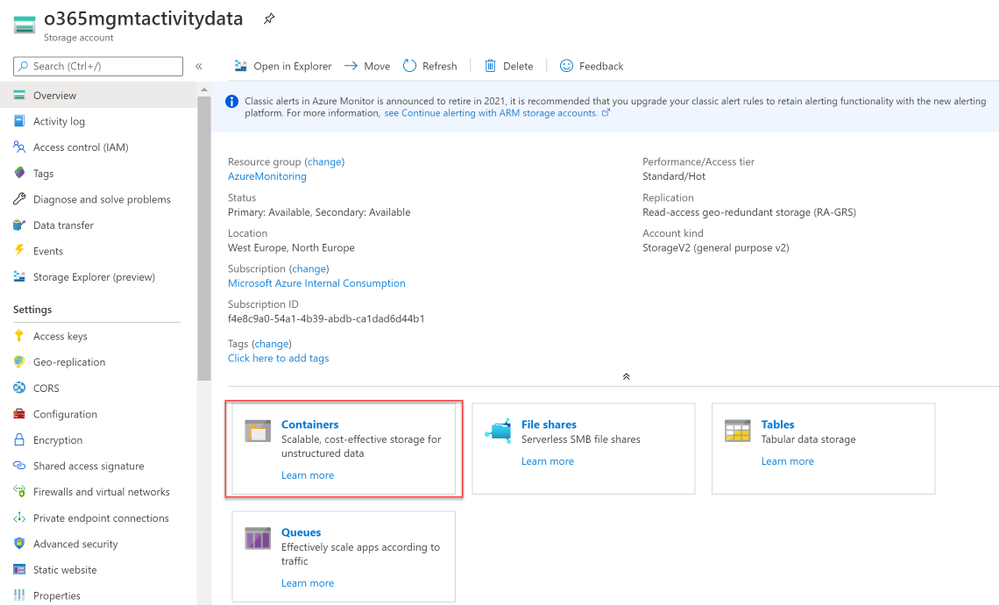

- Once we have a storage account created, click on “Containers” to create one container.

Figure 9: Create a container


- Click “Container” to add a new container.

- Provide a name for the container. Make sure that “Private” access is selected.

- For more details, refer to these steps to create a blob storage account.

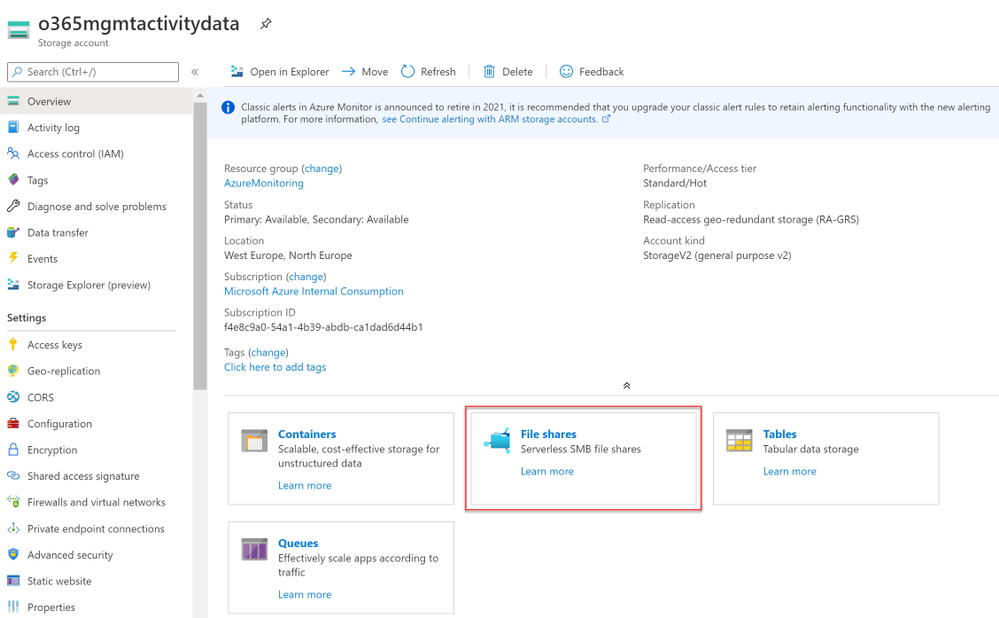

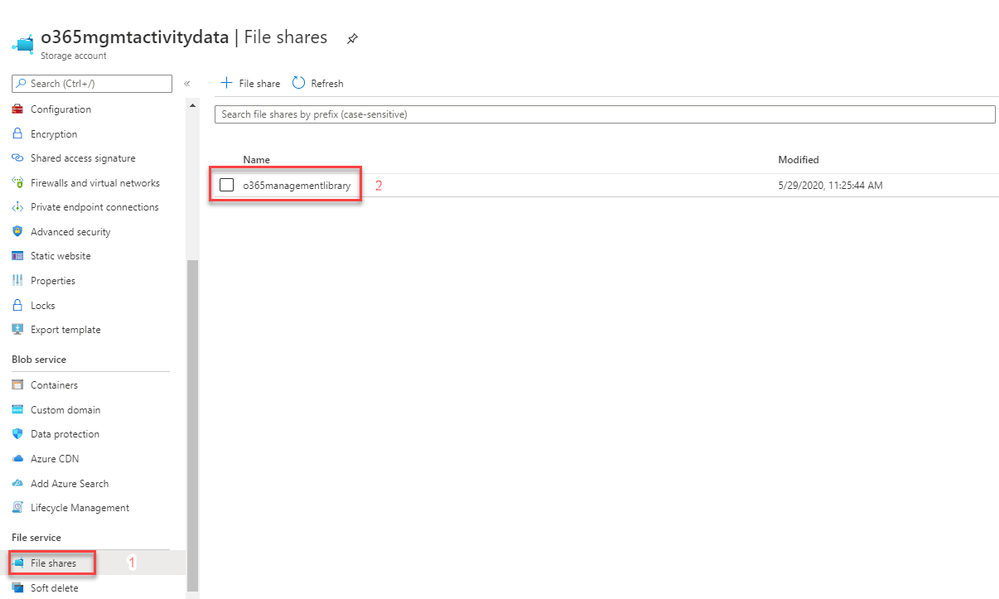

5 – Create a file share in the storage account

We will use an Azure file share to store the .dlls needed by Azure Data Explorer for the ingestion process. Follow the steps below to create an Azure file share. We can create this file share under the same storage account that we created in the previous step.

- Navigate to the storage account we have created above and select “File share”.

Figure 10: File shares


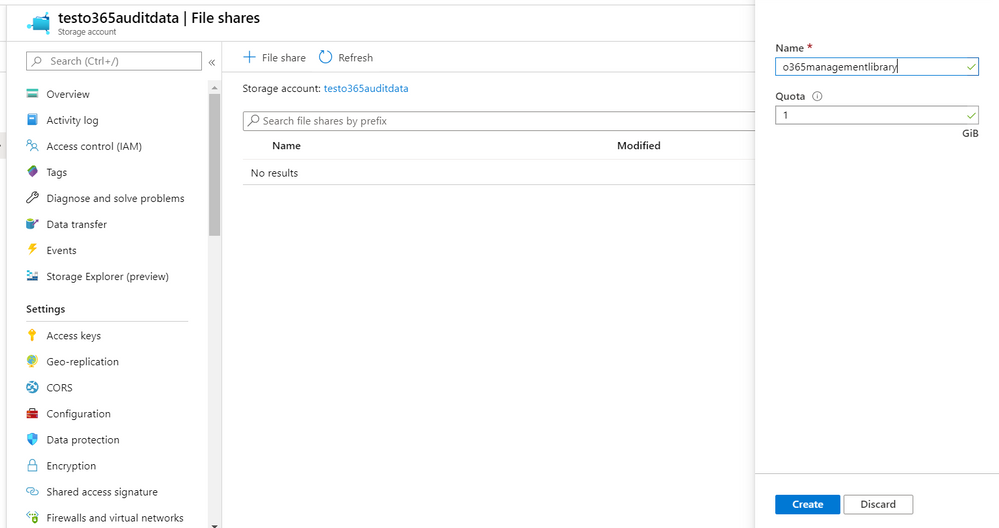

- Click “File share” to create a new file share. Provide a name for the file share and allocate a 1GB quota.

Figure 11: Creating a file share


- Keep a note of the storage account and file share names, because we will need them in the Automation Account.

6 – Setting up Azure Data Explorer (Kusto) Data store

Create an Azure Data Explorer cluster and database by following this step-by-step guide or the steps given below. Once the cluster is created, copy the details of the cluster and database, which we’ll need when entering the Azure Automation Account variables.

For writing data into Azure Data Explorer we will use “Service Principal / AAD application” access (using the service principal secret). We will need “Admin” permissions during the first run of the script to create a table in the Kusto database. After the table is successfully created, permissions can be reduced to “Ingestor” permissions.

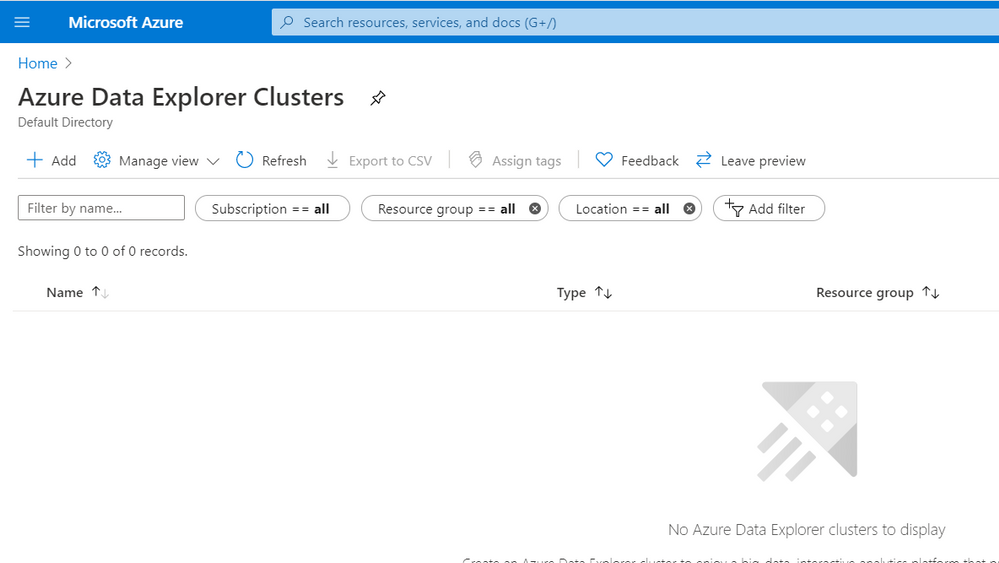

- Log in to the Azure portal. Search for the service “Azure Data Explorer”. Click “Add” to create a new cluster.

Figure 12: Azure Data Explorer Clusters


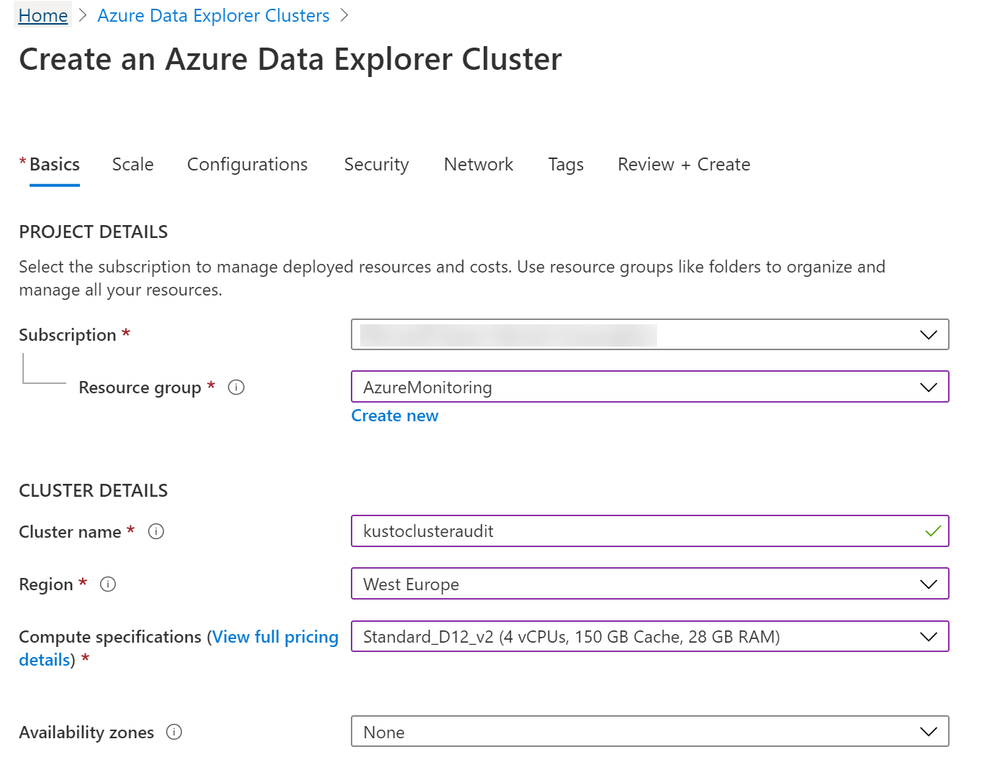

- Provide the required details and create the cluster.

Figure 13: Create an Azure Data Explorer Cluster


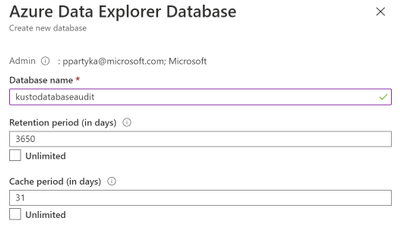

- Once we have the Azure Data Explorer Cluster created, we need to create a database under this cluster. Navigate to the cluster we have created and click “Add database”.

Figure 14: Create new database


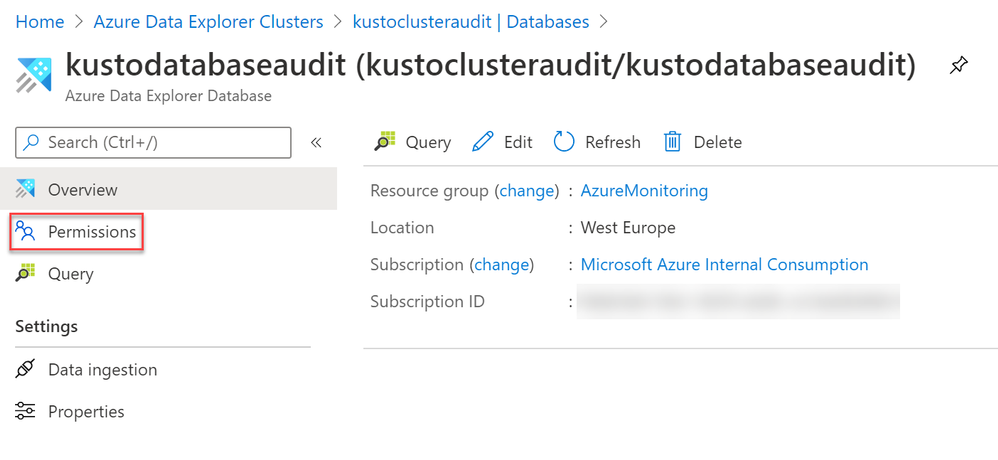

- Navigate to Azure Data Explorer Clusters.

- Open the cluster that you created in the previous step.

- Open the database created under the Kusto cluster. Click “Permissions”.

Figure 15: Database Permissions


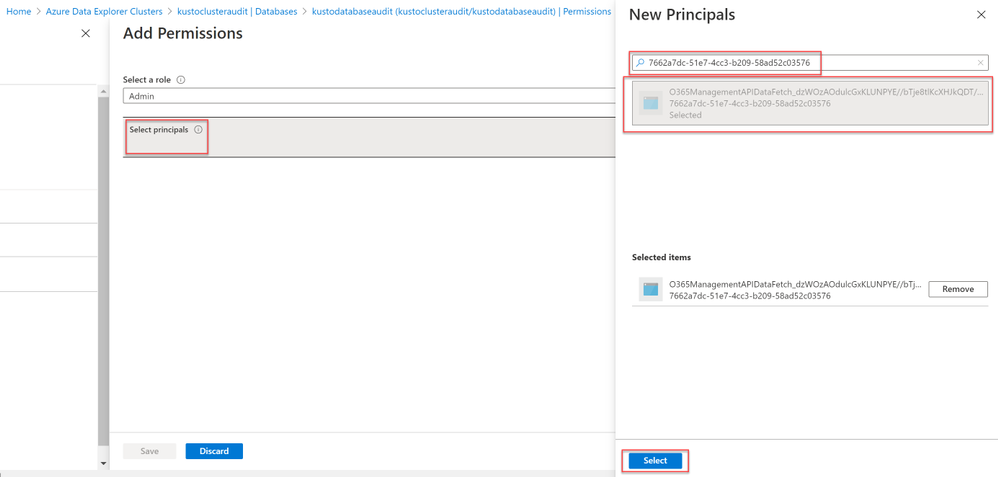

- Click Add. Make sure the “Admin” role is selected. Click “Select principals”. In the New Principals fly-out, paste the Application ID of the Run As Automation account. Select the Application ID entity. Click “Select” and click “Save”.

Figure 16: Add Permissions


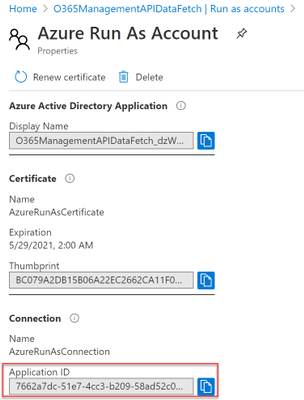

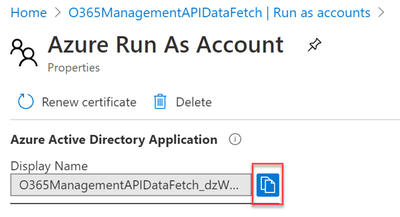

- To find the Azure Run As Account Application ID follow these steps: On the Automation Accounts page, select your Automation account from the list. In the left pane, select Run As Accounts in the account settings section. Click Azure Run As Account. Copy the Application ID.

Figure 17: AzureRunAs account


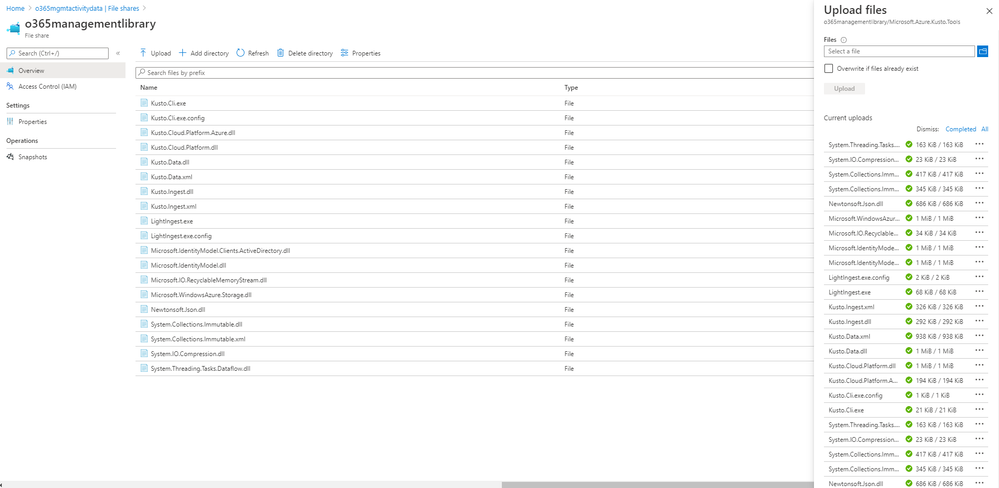

7 – Upload Kusto library to file share

We need to upload Kusto libraries to the Azure Storage Account File Share.

- Download the Microsoft.Azure.Kusto.Tools NuGet package from https://www.nuget.org/packages/Microsoft.Azure.Kusto.Tools/

- The downloaded .nupkg file is just an archive file. Extract the files from the “tools” folder of the NuGet package to any folder on your computer.

- In the web browser navigate to the “File share” created in chapter 5. Open the file share.

Figure 18: File Shares


- Click “Add directory”. Enter a name for the directory: “Microsoft.Azure.Kusto.Tools”

- Open the newly created folder and click “Upload”. Select all the files extracted from the package’s “tools” folder in step 2 and upload them. After the upload completes, you should see the following list of files in the folder:

Figure 19: Uploaded files


8 – Set up Automation Account

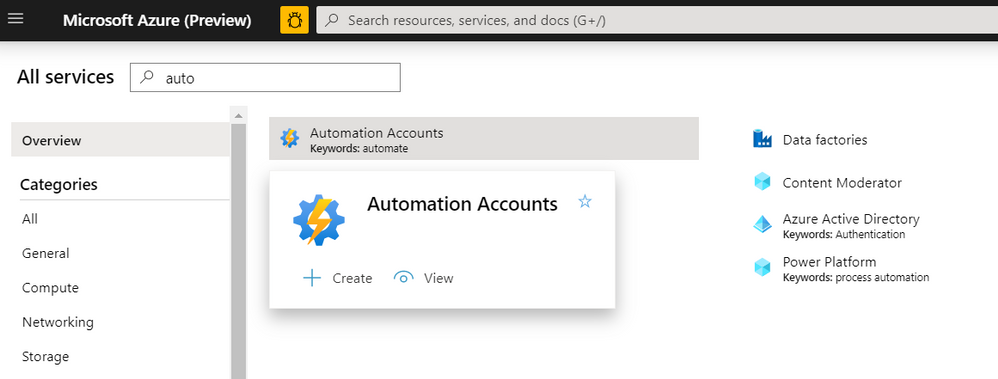

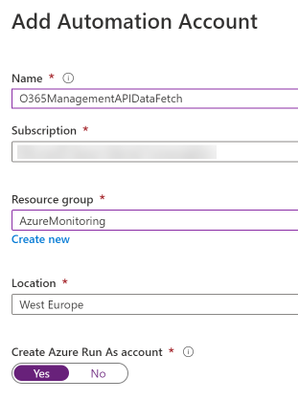

- In the Azure Portal, navigate to the Automation Accounts service and choose to create a new account.

Figure 20: Automation Accounts


- Provide an Automation Account name, Azure subscription, and Resource group.

Figure 21: Add Automation Account


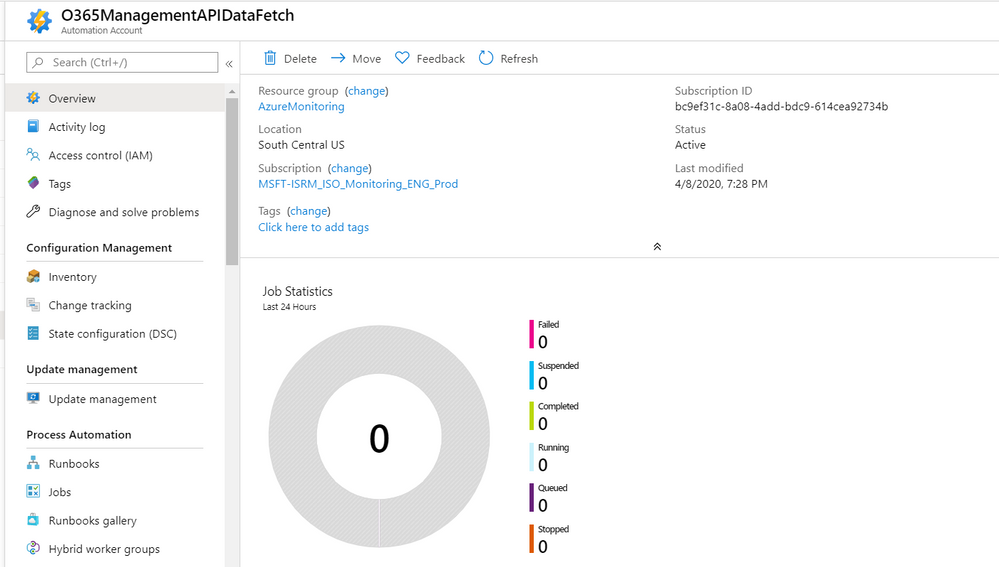

- Once we create the account, we can see the Automation Account in the Azure portal.

Figure 22: Created Automation account


- For more details, refer to this document for creating the automation account.

9 – Set up Azure Key Vault and store secrets

Set up Azure Key Vault and store the required secrets. The key vault name and secret names will be used in the PowerShell runbooks.

- StorageAccountforBlobstorageAccessKey

- StorageAccountforFileShareAccessKey

- ClientSecrettoAccessO365

- KustoAccessAppKey

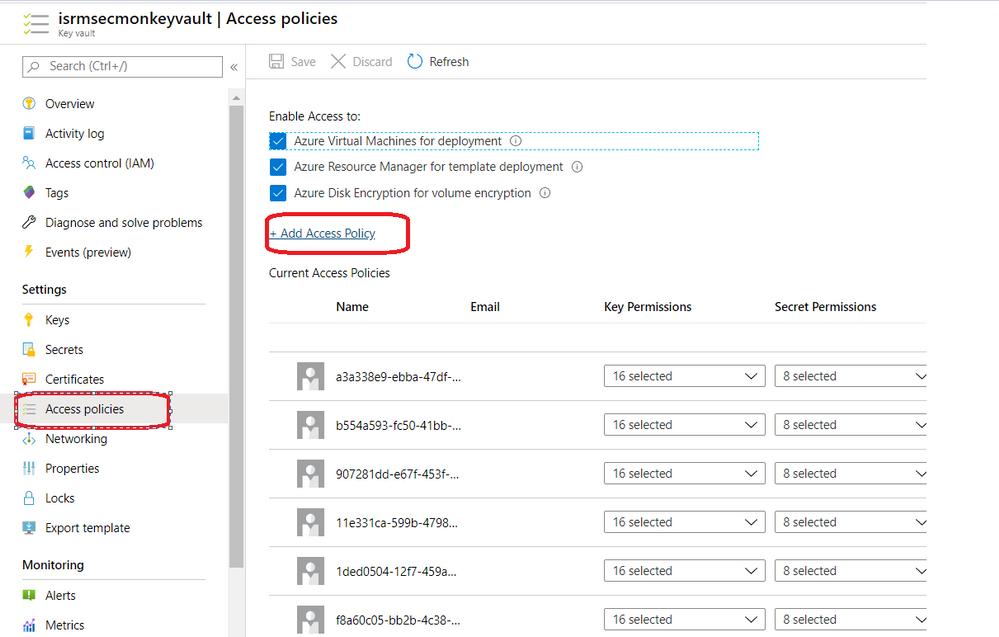

Make sure that the Run As account of the Automation account has access to the Key Vault (Read – Get Keys and Secrets).

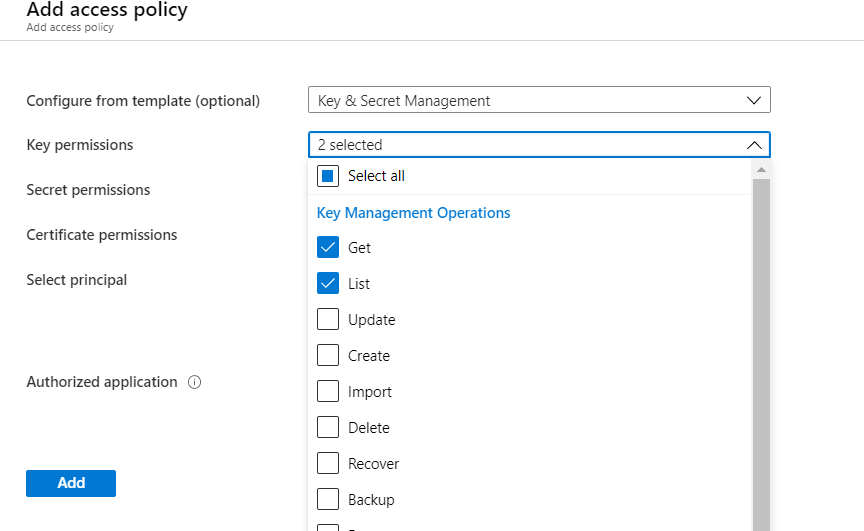

- Navigate to the key vault in the portal, select “Access policies”, and add a policy.

Figure 23: Key Vault Access Policies


- Select “Get” and “List” secret permissions.

Figure 24: Add Access policy


- In the “Select principal” field, enter the name of your Azure Automation Account Run As Account. You can find it by navigating to your Automation Accounts. On the Automation Accounts page, select your Automation account from the list. In the left pane, select Run As Accounts in the account settings section. Click Azure Run As Account.

Figure 25: Azure Run As Account


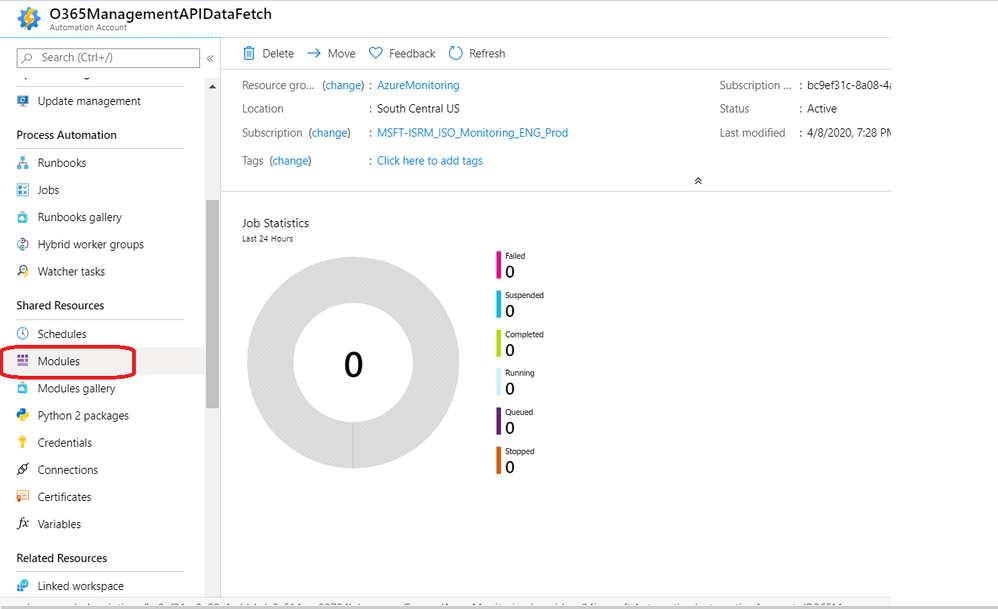

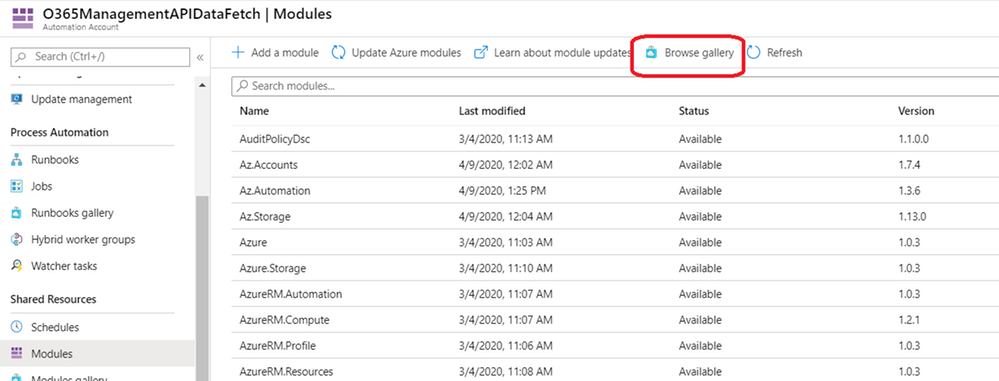

10 – Get dependent modules to Automation accounts

- We require the following modules. If these are not available, we need to import these into the automation account.

- To import a new module, navigate to the automation account and select “Modules” from the menu.

Figure 26: Modules


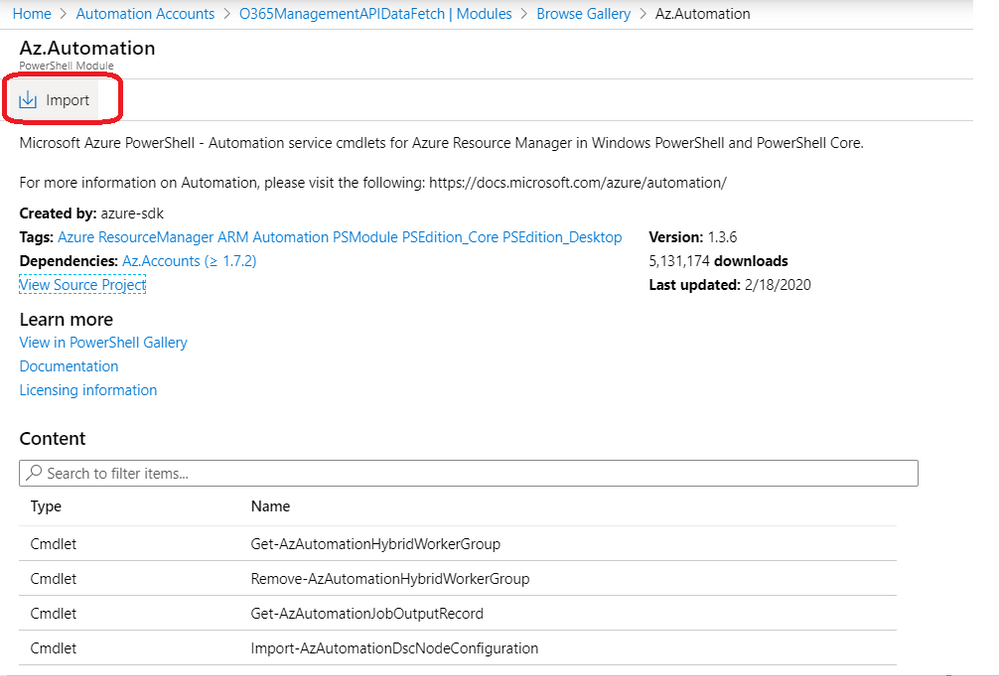

- Select “Browse gallery” and search for the required modules.

Figure 27: Browse Gallery


- Click on Import and select OK to import to the automation account.

Figure 28: Import module


- Repeat the same steps for all required modules.

11 – Adding Runbooks in automation account (PowerShell Runbooks)

We have broken down our PowerShell scripts into 3 different runbooks to achieve the following.

- Segregation of duties

- Parallelism

- Add plug-and-play capability

Deploy 3 runbooks into the automation account by following the steps below.

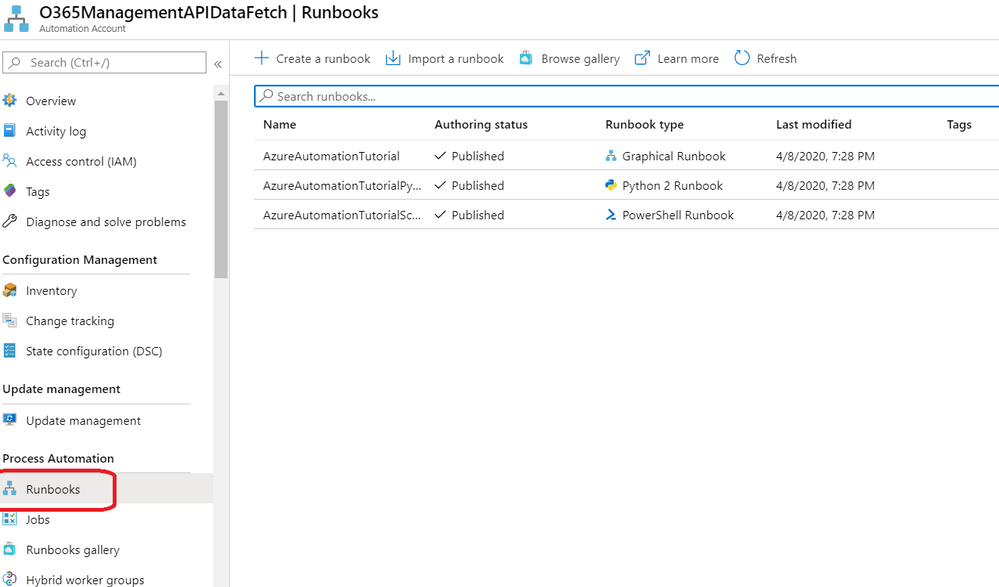

- Navigate to the Automation account we have created above and select “Runbooks”.

Figure 29: Runbooks


- Click on “Import Runbook”, choose the file from your local system, and set the runbook type to “PowerShell”.

- We have the following 3 PowerShell scripts, which need to be imported. Make sure to name the runbooks exactly as specified in the table below; otherwise script execution may fail.

- Download the zip file from here. Once you unzip, you can find the following 3 files.

Table 1: Runbook scripts

| Name of the runbook (Provide the same as below while importing) | Type of Runbook | PowerShell Script to import |
| --- | --- | --- |
| GetO365DataBlobURLs | PowerShell | GetO365DataBlobURLs.ps1 |
| GetO365GeneralAuditData | PowerShell | GetO365GeneralAuditData.ps1 |
| ExporttoKusto_O365AuditGeneralData | PowerShell | ExporttoKusto_O365AuditGeneralData.ps1 |
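The three-runbook split can be pictured as a small pipeline. The sketch below is in JavaScript and is not the content of the PowerShell scripts; the division of work is inferred from the runbook names only. It assumes the Office 365 Management Activity API content-listing endpoint and its `contentUri` response field, and the function names, stub data, and time window are hypothetical.

```javascript
// Stage 1 helper: build the URL that lists available content blobs for a time window.
function buildContentListUrl(tenantId, contentType, start, end) {
  const base = `https://manage.office.com/api/v1.0/${tenantId}/activity/feed/subscriptions/content`;
  const query = new URLSearchParams({
    contentType,
    startTime: start.toISOString().slice(0, 19), // UTC, e.g. 2020-07-20T10:00:00
    endTime: end.toISOString().slice(0, 19),
  });
  return `${base}?${query}`;
}

// The stages are injected as functions so the flow can run without network access.
async function runPipeline({ listBlobs, fetchRecords, exportRecords }) {
  const blobs = await listBlobs(); // stage 1: content blob URLs
  // Stage 2: download each blob's records; blobs are fetched in parallel.
  const batches = await Promise.all(blobs.map((b) => fetchRecords(b.contentUri)));
  // Stage 3: hand the combined records to the export step for ingestion.
  return exportRecords(batches.flat());
}

const url = buildContentListUrl(
  '<tenant-guid>',
  'Audit.General',
  new Date('2020-07-20T10:00:00Z'),
  new Date('2020-07-20T11:00:00Z')
);
console.log(url);
```

Keeping the stages separate is what allows the parallelism and plug-and-play behavior mentioned above: any stage can be replaced or scaled without touching the others.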

12 – Adding variables in the automation account

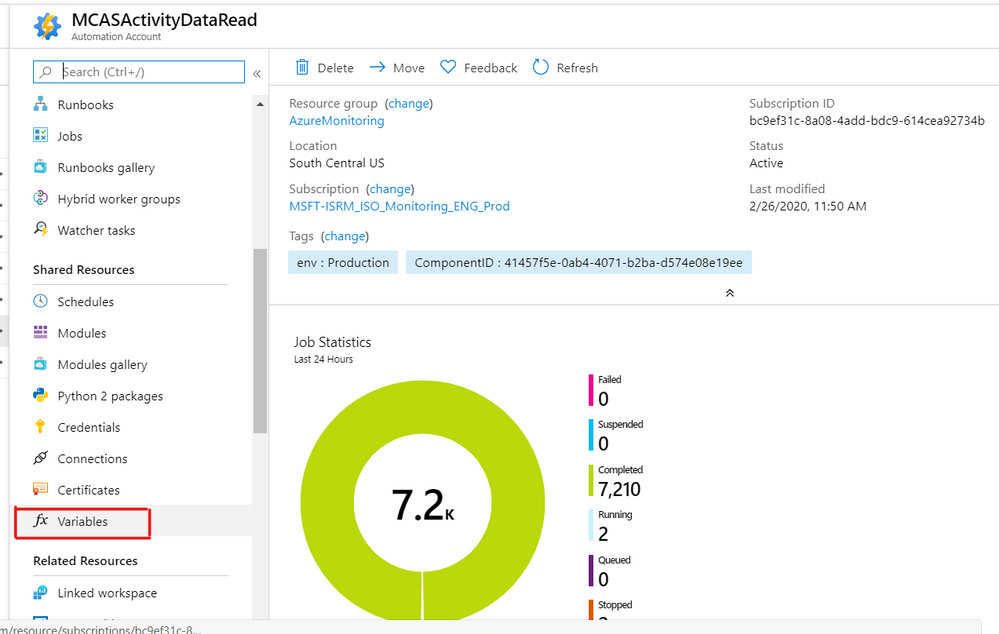

- Navigate to the automation account and select variables from the menu.

Figure 30: Variables


- The following table lists the variables to be added. Add variables by selecting “Add a Variable”. Make sure to select “encryption/hiding” in case of secrets.

Table 2: Variables

| Variable Name | Data Type | Value description |
| --- | --- | --- |
| AutomationAccountName | String | Name of the Automation Account created in chapter 8 |
| AutomationAccountResourceGroupName | String | Name of Resource Group which Automation Account was created in. |
| BlobStorageContainerName | String | Name of Container created in chapter 4 |
| ClientIDtoAccessO365 | String | Azure AD application ID created in chapter 3.1 |
| ClientSecrettoAccessO365 | String (encrypted or from KeyVault) | Azure AD application secret created in chapter 3.1 |
| FileShareNameinStorageAccount | String | Name of the file share created in chapter 5 |
| KeyVaultName | String | Name of the Azure Key Vault created in chapter 9 |
| KustoAccessAppId | String | Azure Run As Account application ID. Steps to find it are described in chapter 12.1 |
| KustoAccessAppKey (optional) | String (encrypted or from KeyVault) | Not required if access key is stored in Azure Key Vault in KustoAccessAppKey |
| KustoClusterName | String | Azure Data Explorer cluster name created in chapter 6 |
| KustoDatabaseName | String | Azure Data Explorer database name created in chapter 6 |
| KustoIngestionURI | String | |
| KustoTableName | String | Name of the Azure Data Explorer table that PowerShell script will create. |
| MicrosoftLoginURL | String | https://login.microsoftonline.com |
| O365ResourceUrl | String | https://manage.office.com/.default |
| O365TenantDomain | String | Default domain name of the tenant |
| O365TenantGUID | String | This is the ID of the Office 365 tenant where alerts and investigation will be pulled out from. Follow this article to locate tenant ID. https://docs.microsoft.com/en-us/onedrive/find-your-office-365-tenant-id |
| PathforKustoExportDlls | String | Name of the folder created in the file share in chapter 5. |
| RunbookNameforExportDatatoKusto | String | ExporttoKusto_O365AuditGeneralData |
| RunbookNameforGetAuditDataBlobURIs | String | GetO365DataBlobURLs |
| RunbookNameforGetAuditDataFromURIs | String | GetO365GeneralAuditData |
| StorageAccountforBlobstorage | String | Name of the storage account created in chapter 4 |
| StorageAccountforBlobstorageAccessKey (optional) | String (encrypted or from KeyVault) | Not required if access key is stored in Azure Key Vault in StorageAccountforBlobstorageAccessKey (default configuration) |
| StorageAccountforFileShare | String | Storage account created in chapter 4 |
| StorageAccountforFileShareAccessKey (optional) | String (encrypted or from KeyVault) | Not required if access key is stored in Azure Key Vault in StorageAccountforFileShareAccessKey (default configuration) |
| TenantIdforKustoAccessApp | String | This is the ID of the Azure AD tenant where Azure Run As Account is provisioned. Follow this article to locate tenant ID. https://docs.microsoft.com/en-us/onedrive/find-your-office-365-tenant-id |

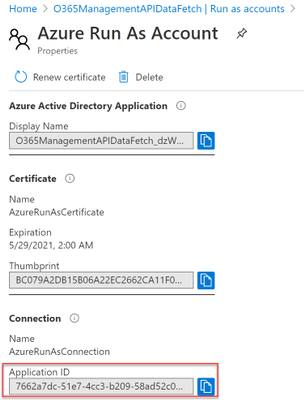

12.1 – Finding the Azure Run As Account application ID.

You can find the KustoAccessAppId by navigating to your Automation Accounts. On the Automation Accounts page, select your Automation account from the list. In the left pane, select Run As Accounts in the account settings section. Click Azure Run As Account. Copy the Application ID and paste it as the KustoAccessAppId variable value.

Figure 31: Copy the Application ID


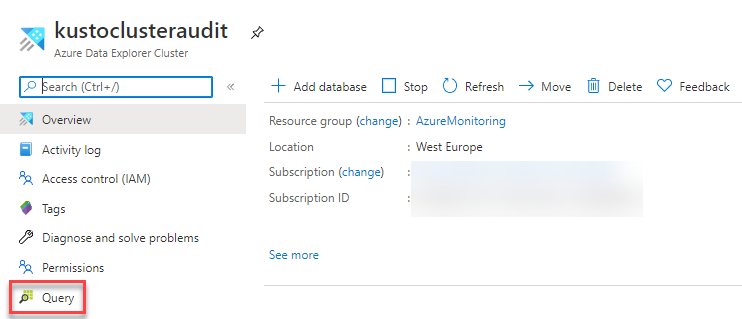

13 – Querying the data

After data is successfully imported by the scripts you can query it using KQL.

In the Azure Portal navigate to Azure Data Explorer Clusters. Click on the cluster name. Click on Query.

Figure 32: Query the data


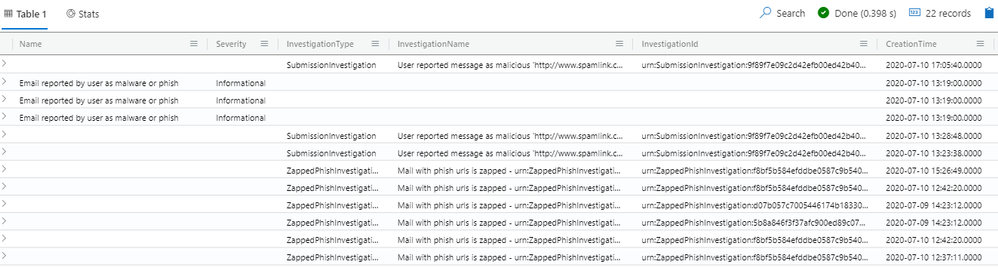

Example query to verify that data is ingested:

KustoAuditTable

| extend IngestionTime=ingestion_time()

| order by IngestionTime desc

| project Name,Severity,InvestigationType,InvestigationName,InvestigationId,CreationTime,StartTimeUtc,LastUpdateTimeUtc,EndTimeUtc,Operation,ResultStatus,UserKey,ObjectId,Data,Actions,Source,Comments,Status

And finally, after all this effort, here is an example of the output:

Figure 33: Data Output


14 – Final Remarks

- Use the following article to create a schedule to run scripts periodically (for example every hour). It is enough to create a schedule for the GetO365DataBlobURLs runbook.

- During the first execution of the PowerShell scripts, a Kusto table will be created with its entire schema. Afterwards, the permissions of the Azure Run As Account can be lowered from “Admin” to “Ingestor”.

- Data ingestion is delayed by approximately 5 minutes. Even after the script successfully completes, data may not show up immediately in the Azure Data Explorer cluster. This is caused by the IngestFromStreamAsync method used to ingest data to the Azure Data Explorer cluster.

- After Alerts and Investigations data is ingested into the Azure Data Explorer cluster, you will notice some empty columns in the table. This is deliberate, to accommodate data coming from other workloads if you wish to ingest them as well.

Special thanks to @Satyajit Dash, Anki Narravula, and Sidhartha Bose for their contributions.

by Scott Muniz | Jul 20, 2020 | Uncategorized


We’re excited to introduce a new blog series that explores the concept of continuous learning across different audiences. We will start by releasing five blogs within the next couple of months that explore the learning journeys of our future generation, customers, partners, and employees. Today, we kick off the series with a peek into how Microsoft is working with universities and colleges around the world to help skill future generations using Microsoft technologies.

In the East Midlands of England, you cannot miss a small, picturesque cathedral town, originally settled in the Roman age. With a population of 100,000, the city of Lincoln features well-known landmarks, such as Lincoln Cathedral, the tallest building in the world for over 200 years, and the 11th-century Lincoln Castle. It is also home to the University of Lincoln, a public research university, recently named number 17 in the prestigious, annual Guardian University League Table 2020. As of May 2020, the school also holds another important distinction: one of the first educational institutions in the world to certify a group of students on Microsoft Azure fundamentals.

Lincoln is one of 16 higher education institutions that joined the global program to help skill future innovators, launched by Microsoft to kick off its ‘Future Skills Initiative.’ This work and the lessons from it informed the recently announced Microsoft Learn for Educators program. The skills initiative has one simple goal, according to Rachel Wortman Morris, Ph.D., Business Program Manager at Microsoft: empower students with Microsoft technology skills.

“We kicked off our initiative in January to test out what it means to skill-up students and certify them to be future-ready on Microsoft technologies through their existing classes and with their own faculty members at colleges and universities around the globe,” said Wortman Morris. “This effort helped us learn how we best support faculty members and their students who want to innovate with hands-on, technical content from Microsoft as a part of their curriculum.”

University of Lincoln: Certification success in times of COVID-19

Lincoln is one of six universities spread out over the United Kingdom (UK) that participated in the program. Despite the challenges posed to professors and students by the restrictions imposed in the country due to the coronavirus pandemic, Lincoln succeeded in skilling more than 100 participating students in Microsoft Azure fundamentals. The cohort used online lectures and workshops, and created a group channel on Microsoft Teams to work together virtually and ask questions.

“Adding certification opportunities for our students on top of their degree is a unique selling point for Lincoln, as not many universities in the UK offer this,” said Lincoln professor Derek Foster, who taught the Azure Fundamentals course for the first time this past semester. “This is extra-curricular study for our students but takes them a step beyond the Microsoft Technology Associate certifications that we already offer. We’re planning to expand this program in the next few years and offer a package with additional certification opportunities in AI, Power Platform and Data fundamentals.”

University of Cincinnati: Student learning to supply tech demand

But Lincoln was not alone in its early certification success. Across the pond, in the United States, another key participant in the program was a much larger institution that has also taken a unique approach to skilling its technology students: the University of Cincinnati (UC). Founded in 1819, UC is the oldest institution of higher education in the city, with an annual enrollment of over 44,000 students and a university IT program that also partnered early with Microsoft to get students certified on Microsoft Azure fundamentals.

“We are taking a holistic approach, viewing this as an integrated supply chain to provide students with more than just a degree,” said David Adams, Chief Innovation Officer at the University of Cincinnati. “We’ve developed relationships with businesses in the region to deeply understand their current, future, and ongoing ‘talent needs’ and are leveraging our university’s experience-based and educational resources to deliver. We essentially see ourselves as a developer of talent and, as with any supply chain, are seeking to do this in the most efficient and agile manner possible to meet the needs of the market.”

Enter the University of Cincinnati partnership with Microsoft to bolster its Cooperative Education (Co-Op) program, which delivers thousands of Co-Op placements each year in over 1,300 companies in 44 states and 22 countries around the globe. The University of Cincinnati founded the Co-Op program, which is ranked #1 in the US for experiential learning, and its students generated in excess of $75M in personal income last year. As the Chief Innovation Officer and architect of the recently announced Cincinnati Innovation District, Adams looks at Co-Op as a foundation to make the region around Cincinnati a global technology hub, much like he has seen in other parts of the country.

The UC program has expanded the offering of Microsoft training curriculum beyond the traditional technology degrees to promote a concept Adams calls ‘digital fluency’ for non-technical career paths. Not only is it one of the first universities to successfully certify 90% of the inaugural class of students on Microsoft Azure fundamentals, UC has also partnered with 30 school districts across the state of Ohio and currently has 1,300 high school students enrolled in classes through the Early College IT program. Students can start classes online or in person as early as their freshman year, earning one year of college credit as they graduate, free of charge, which eliminates the affordability and access barriers to college.

“The partnership with Microsoft helps UC integrate technology certifications for both the high school programs and the Information Technology bachelor program,” adds Bunty Ranu, a cloud solutions architect at Microsoft, who recently returned to his alma mater to teach and augment the Azure Fundamentals course at UC. “Our vision is that Microsoft will partner with UC across programs in all facets of IT, from development to database to networking, and provide better content, while adding more certifications over time.”

Microsoft Learn for Educators

Microsoft’s initial engagements spanned across five continents (Africa, Asia, Europe, North America, South America) with a goal of training 30 professors to educate about 1000 students worldwide. Microsoft Learn for Educators has taken the learnings and feedback from the initial program, expanding global partnerships with additional colleges and universities to create new learning paths with Microsoft fundamentals training content to inform future curriculum.

Microsoft also added a new series of learning paths to inspire and challenge students to build with social impact and responsibility, in addition to the foundational developer paths designed especially for students. The curriculum is designed to make these courses easy for faculty to teach, as the student population in universities becomes more and more diverse as digital transformation forces traditional companies to cross the technology chasm.

“As a mature student coming back to school after a 25-year career in manufacturing engineering, I thought it was a fantastic opportunity,” said Garry Clawson, one of the students who recently was certified in Microsoft Azure fundamentals at the University of Lincoln. “There is a group of mature students in this course and learning new technologies is becoming essential. The idea of getting a degree when you’re 21 and working nonstop is just not going to happen anymore with technology moving so fast. In today’s world you have to constantly change and relearn. It’s a mindset shift for sure.”

by Scott Muniz | Jul 20, 2020 | Uncategorized


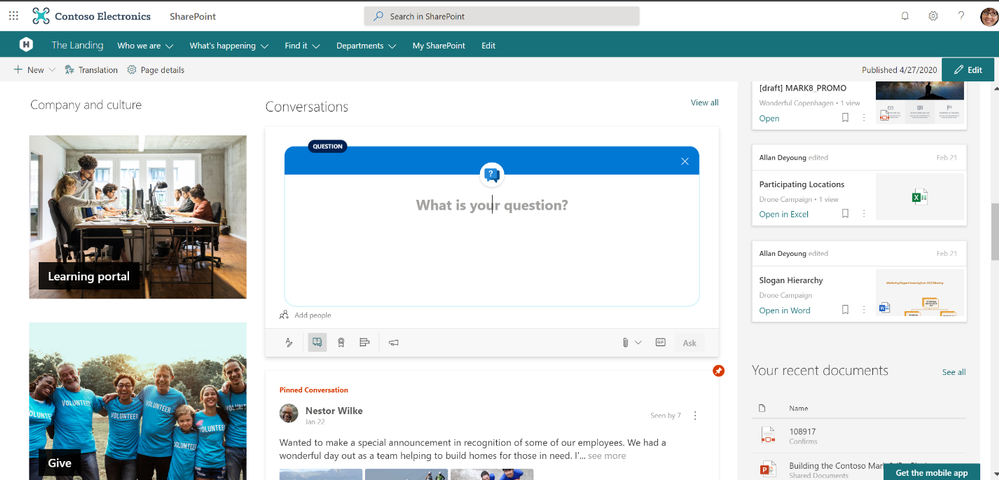

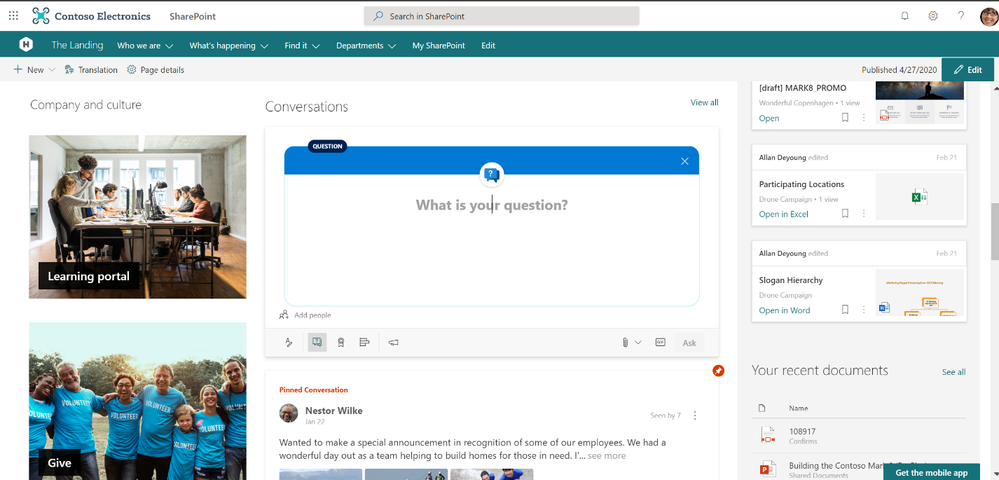

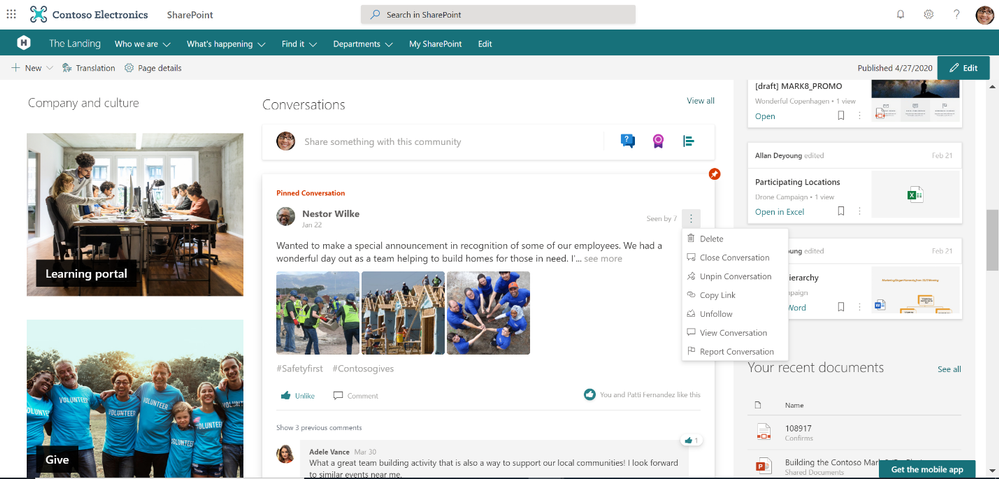

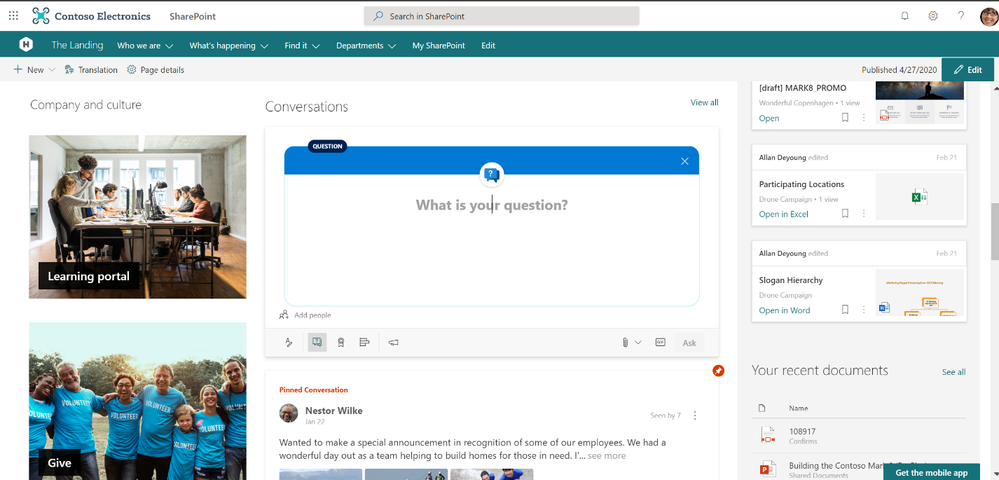

Bring the new Yammer styling and capabilities to your modern intranet. At Microsoft Ignite 2019, we announced that the Yammer Conversations web part for SharePoint Online would be updated with the new Yammer experience. Now, we are excited to announce that it is generally available, meaning that you get all the new Yammer experiences on your SharePoint pages, news articles, and SharePoint sites.

Add the power of community to your sites

The updated Yammer Conversations web part integrates conversations from any Yammer community, topic, user, or home feed, so you can stay connected to the discussions happening across your organization and add social conversations while still in SharePoint. Starting today, it automatically replaces the previous Yammer Conversations web part without any action needed from site admins.

What’s New

- The new Yammer look including rich previews for media and visual treatment for questions, praise, and polls.

- The Yammer publisher with functionality like

  - creation of any type of Yammer post directly from SharePoint – questions, polls, praise, etc.

  - upload of images and files into new conversations and replies directly from SharePoint.

  - usage of rich text on messages created from SharePoint Online.

- Yammer management actions such as Close Conversation, Mark Best Answer to a Question, and Pin a conversation, etc.

- An improved, more relevant Home feed, including the ability to start a conversation in any community when configured to this mode.

- Customize it to view from 2 conversations to 12 conversations.

How to get the new web part

Sites that are already using the Yammer Conversations web part will be updated with the new experience. To add Yammer Conversations to new sites, just visit the web part gallery and select Yammer Conversations and Get Started. Then, you can filter by Community, User, Topic, or Home, and search for your source. Customize the number of conversations to display and select Republish to see and engage with your new Yammer Conversations web part.

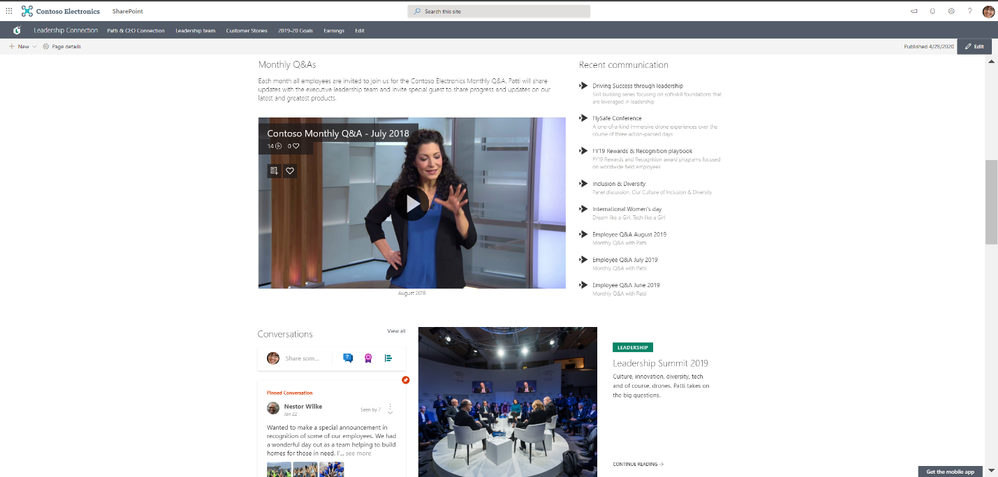

Whether you are looking to bring engaging conversations between employees and leaders to your Leadership sites, or allow employees to ask and resolve questions with key services on Employee Service Sites like IT, HR, Travel, or other Community Sites, the updated web part experience enables you to bring rich, social conversations to all of your SharePoint intranet.

Add Yammer community discussions to your leadership page.


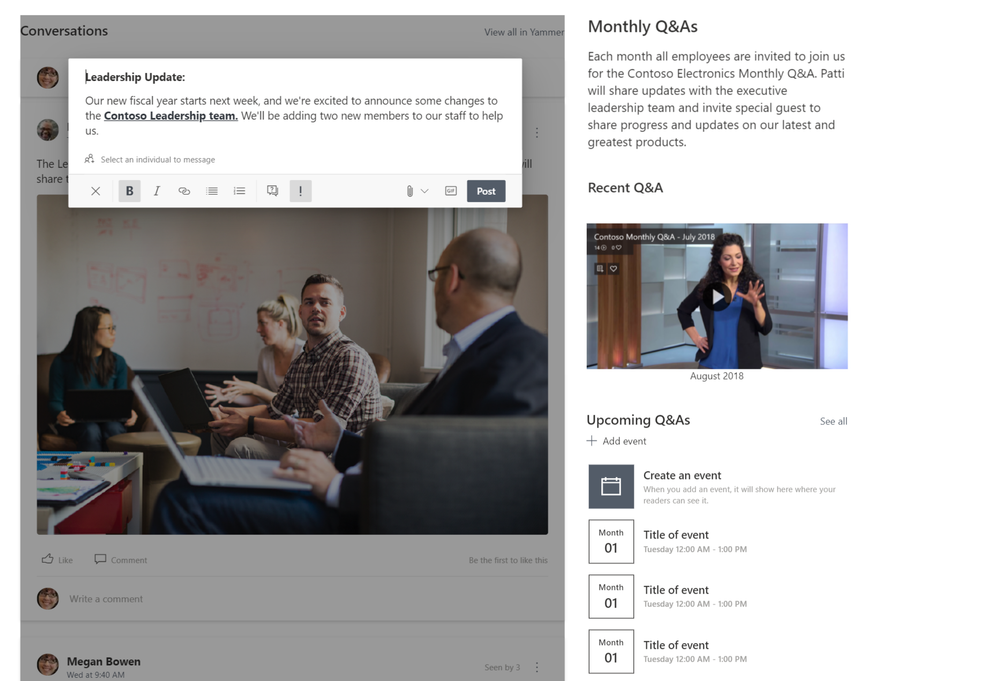

Share news and announcements directly from the web part.


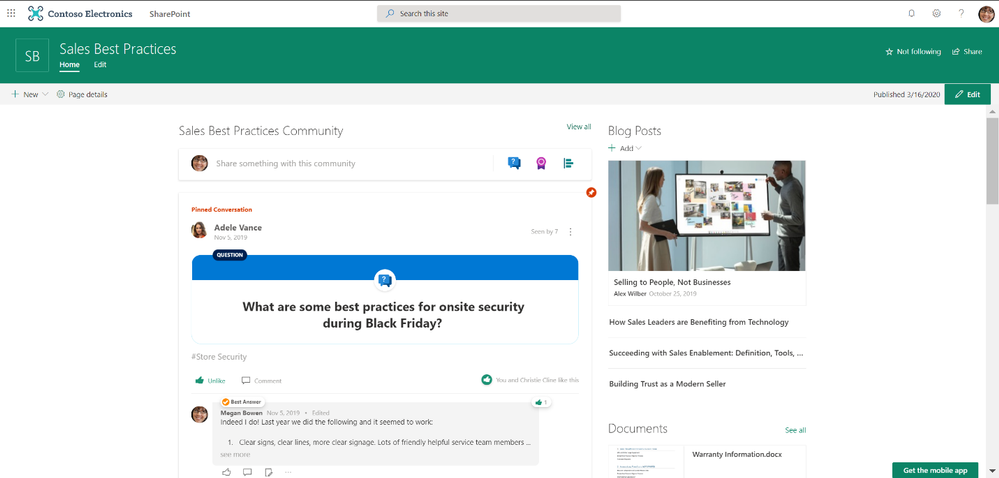

Leverage Yammer communities to share knowledge and best practices alongside helpful resources.


Yammer Everywhere

We’re continuing to build solutions that integrate Yammer communities and conversations into the apps that you use every day. Check out our latest Outlook integration and our Communities app for Microsoft Teams and stay tuned into our public roadmap and blog.
