Using Custom SharePoint Site Properties to Apply Microsoft 365 Retention with Adaptive Policy Scopes

This article is contributed.

Now that our new Microsoft Information Governance feature, adaptive policy scopes, has reached general availability (GA), we thought it would be helpful to dive a little deeper into SharePoint site scopes. One of the most common questions we receive about site scopes is how administrators can use custom properties to include or exclude SharePoint sites. In this post, let’s take a deeper look at what custom properties are, why you may want to use them, and how to configure them.

If you are unfamiliar with adaptive policy scopes, they are an exciting new Information Governance and Records Management feature that provides a great deal of flexibility when applying retention to Microsoft 365 locations. They allow organizations to meet regulatory, legal, or business requirements that demand different retention rules for different departments, locations, and roles.

For more information about adaptive policy scopes, check out the following resources:

- Documentation

- Adaptive Policy Scopes Introduction (Webinar): https://aka.ms/Adaptive-Webinar-EMEA-US

- Building Advanced Queries for Users and Groups with Adaptive Policy Scopes (Webinar): https://aka.ms/AdaptiveScopes-UsersGroups

- Using Adaptive Policy Scopes to Apply M365 Retention to Shared, Resource, and Inactive Mailboxes (Blog)

Additionally, much of the information we’ll discuss was also presented and demonstrated in our January 2022 webinar, “Building Advanced Queries for SharePoint Sites with Adaptive Policy Scopes”: https://aka.ms/AdaptivePolicyScopes-AdvancedSharePoint

Introduction to SharePoint Site Scopes

Out of the box, adaptive policy scopes allow you to include or exclude SharePoint sites based on indexed properties such as the site’s URL or name. However, those properties don’t always fit an organization’s retention requirements, and by default SharePoint sites have few other queryable properties that admins find useful when scoping retention policies. Admins often need more user-centric attributes, such as region or location, to align with regulatory requirements.

For that reason, we designed adaptive policy scopes to take advantage of refinable managed properties, which let administrators add and query whatever custom site-level information they need, enabling complex scoping scenarios. For example, an administrator can create a queryable property that records the location in which the site is used, with a value such as “France”.

The most popular of these refinable managed properties, and the ideal choice for the location example above, is the refinable string. Because it is so commonly used, we added it as a selectable option in the simple query builder of the adaptive policy scope wizard when creating a site scope:

The simple query builder can be used to quickly create queries using the most common indexed site properties.

Other refinable managed properties, such as date and integer, may also be useful to administrators. These aren’t available in the simple query builder, but for maximum flexibility they can be queried using Keyword Query Language (KQL) within the advanced query builder:

The advanced query builder can be used to create more complex queries using Keyword Query Language (KQL)

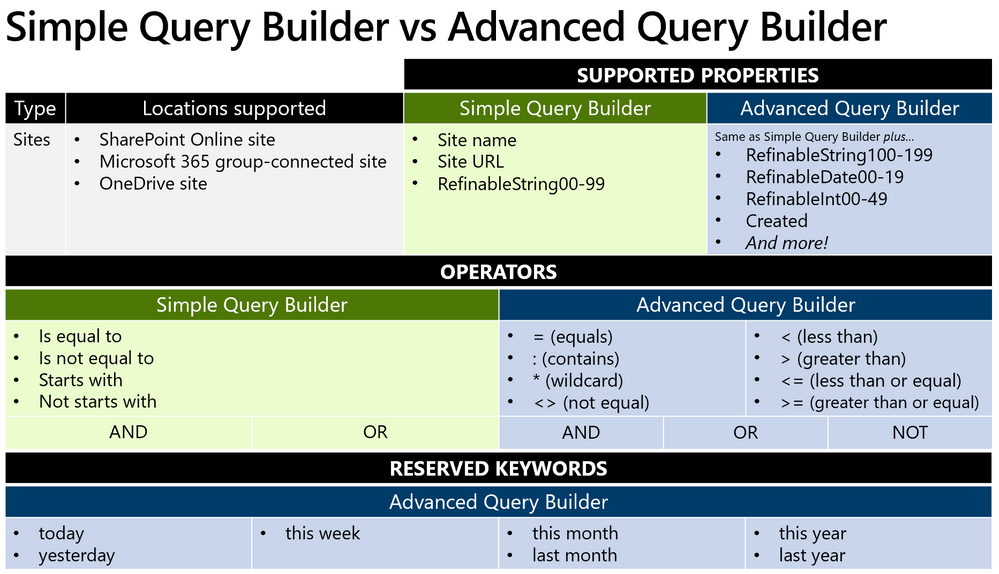

As you can probably guess, deciding whether to use the simple or advanced query builder will depend on the complexity of the scope, the properties which must be queried, and the operators that are required to achieve the intended result. To help understand the differences, refer to the following chart:

The advanced query builder supports more properties, but requires knowledge and experience of KQL.

How custom properties work in SharePoint Online

Before using a custom property with an adaptive policy scope, it’s important to understand how custom properties work. Several components are involved when creating and querying custom properties on SharePoint sites:

- The site property bag: a per-site dictionary of key/value pairs. This is where an admin can add custom properties holding any data they want to query.

- Crawled property: when a new custom property is added to a site, a tenant-level crawled property is automatically generated during the SharePoint search crawl. A crawled property is not directly queryable and therefore cannot be referenced in KQL queries. I like to think of it as unformatted data that has no data type.

- Refinable managed property: a queryable property that can be mapped to the generated crawled property. The mapping defines the data type of the custom property, which can then be used to query the custom information. There are several refinable managed property types; the most common are listed below, along with the operators each type supports:

| Managed Property | Data Type | Supported Operators |
|---|---|---|
| RefinableString00-199 | String | = : <> * |
| RefinableInt00-49 | Integer | = : <> * > >= < <= |
| RefinableDate00-19 | ISO 8601 Date/Time | = : <> * > >= < <= reserved keywords |
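
The table can double as a quick pre-flight check before you write a query. The following minimal Python sketch does not talk to SharePoint at all. It encodes the operator sets and index ranges from the table and reports whether an operator is allowed for a given refinable managed property. The names `SUPPORTED_OPERATORS`, `property_type`, and `is_supported` are hypothetical helpers, and name matching is case-sensitive here as a simplification.

```python
import re

# Operator sets transcribed from the table above. "*" is the wildcard; date
# properties also accept KQL reserved keywords such as "today" as values
# (keywords are not modeled here).
_BASE_OPERATORS = {"=", ":", "<>", "*"}
_RANGE_OPERATORS = {">", ">=", "<", "<="}

SUPPORTED_OPERATORS = {
    "String": _BASE_OPERATORS,
    "Int": _BASE_OPERATORS | _RANGE_OPERATORS,
    "Date": _BASE_OPERATORS | _RANGE_OPERATORS,
}

# Highest index available for each type (RefinableString00-199, etc.).
MAX_INDEX = {"String": 199, "Int": 49, "Date": 19}

_NAME = re.compile(r"^Refinable(String|Int|Date)(\d{2,3})$")


def property_type(managed_property: str) -> str:
    """Return 'String', 'Int', or 'Date' for a valid refinable property name."""
    match = _NAME.match(managed_property)
    if not match:
        raise ValueError(f"not a refinable managed property: {managed_property}")
    kind, digits = match.groups()
    index = int(digits)
    # Names below 100 use two digits (RefinableString05); 100+ use three.
    expected_width = 2 if index < 100 else 3
    if len(digits) != expected_width or index > MAX_INDEX[kind]:
        raise ValueError(f"index out of range: {managed_property}")
    return kind


def is_supported(managed_property: str, operator: str) -> bool:
    """True if the operator can be used with this managed property's type."""
    return operator in SUPPORTED_OPERATORS[property_type(managed_property)]


print(is_supported("RefinableDate00", ">"))    # True
print(is_supported("RefinableString01", ">"))  # False
```

A refinable string, for example, rejects range operators such as `>`. That is why the date scenario later in this post needs a RefinableDate property.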

The following image gives an overview of the process each custom property goes through before it can be queried using KQL from within an adaptive policy scope:

Adding a custom property initiates a crawled property which then must be mapped to a managed property to become queryable.

NOTE: Since a tenant-level crawled property is created automatically the first time a custom property is added to a site, the managed property only needs to be mapped once. After mapping, the custom property can be added to more sites and the same managed property can be used to query them all (after indexing occurs).
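
To make the lifecycle and the "map once" point concrete, here is a toy Python model. It is not SharePoint code, and the class `ToyTenant` and its methods are hypothetical. It assumes crawled properties are named after the property bag key. It also simplifies one step: a new mapping applies to already-indexed data immediately, whereas in SharePoint the site must be crawled again.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTenant:
    """Toy model of the path a custom site property takes to become queryable."""

    property_bags: dict = field(default_factory=dict)  # site URL -> {key: value}
    indexed: dict = field(default_factory=dict)        # site URL -> bag as of last crawl
    crawled: set = field(default_factory=set)          # tenant-level crawled properties
    mappings: dict = field(default_factory=dict)       # crawled property -> managed property

    def set_property(self, site: str, key: str, value: str) -> None:
        self.property_bags.setdefault(site, {})[key] = value

    def crawl(self) -> None:
        # Snapshot every bag. A key seen anywhere in the tenant yields one
        # tenant-level crawled property; seeing it again changes nothing.
        for site, bag in self.property_bags.items():
            self.indexed[site] = dict(bag)
            self.crawled.update(bag)

    def map_property(self, crawled_name: str, managed_name: str) -> None:
        # Mapping is done once per crawled property, not once per site.
        if crawled_name not in self.crawled:
            raise KeyError(f"{crawled_name} has not been crawled yet")
        self.mappings[crawled_name] = managed_name

    def query(self, managed_name: str, value: str) -> list:
        # Unmapped crawled properties are invisible to queries.
        keys = [c for c, m in self.mappings.items() if m == managed_name]
        return sorted(
            site for site, bag in self.indexed.items()
            if any(bag.get(k) == value for k in keys)
        )


WALLABY = "https://contoso.sharepoint.com/sites/wallaby"  # hypothetical URLs
KOALA = "https://contoso.sharepoint.com/sites/koala"

tenant = ToyTenant()
tenant.set_property(WALLABY, "customDepartment", "Marketing")
tenant.crawl()
tenant.map_property("customDepartment", "RefinableString00")

tenant.set_property(KOALA, "customDepartment", "Marketing")  # no new mapping needed
print(tenant.query("RefinableString00", "Marketing"))  # only Wallaby until the next crawl
tenant.crawl()
print(tenant.query("RefinableString00", "Marketing"))  # both sites
```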

Adding a custom property for use in adaptive policy scopes

Now that we have a basic understanding of the components involved under the hood, let’s walk through how to create custom properties that can be queried using KQL from within an adaptive policy scope.

Step 1: Adding the custom property to the site property bag

At this time, there’s no way to add a custom property to a site property bag through the UI. To make the process as easy as possible, we’ve worked with the team behind the open-source PnP.PowerShell module to create cmdlets designed specifically for adding and managing custom properties for use with adaptive policy scopes:

- Set-PnPAdaptiveScopeProperty: Add/modify a custom property

- Remove-PnPAdaptiveScopeProperty: Remove a custom property

To get started, make sure you have the latest version (1.9.0 or later) of the PnP.PowerShell module installed. Refer to the module’s documentation for installation instructions.

The first time you connect to your tenant using PnP.PowerShell, you’ll need to grant administrative consent for the module, which requires authenticating interactively. Choose a SharePoint Online site (we will use Project Wallaby), then connect with the following cmdlet:

Connect-PnPOnline -Url <SPOSiteUrl> -Interactive

You must first connect to PnP Online interactively to consent to required permissions.

Once connected, use Set-PnPAdaptiveScopeProperty to add a custom property to the site’s property bag.

To provide a real-world example, let’s consider the following scenario:

Contoso wants to create a retention policy that applies to all project sites in the marketing department. The policy will apply indefinite retention while the project is active.

Given the above scenario, it would make sense to add three new custom properties to the property bag of all applicable sites. For our first site, we’ll use the marketing department’s Project Wallaby site:

- customDepartment:Marketing

- customSiteType:project

- customProjectEndDate:2023-01-01

NOTE: You don’t need to add ‘custom’ to the property name, but it can help distinguish custom properties from other properties.

Set-PnPAdaptiveScopeProperty -Key customDepartment -Value Marketing

Set-PnPAdaptiveScopeProperty -Key customSiteType -Value project

Set-PnPAdaptiveScopeProperty -Key customProjectEndDate -Value 2023-01-01
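
Because customProjectEndDate will later be mapped to a date property, it is worth catching badly formatted values before running the cmdlets. The following is a minimal Python sketch of such a check. It assumes a hypothetical naming convention where any key ending in "Date" holds an ISO 8601 date, and `validate_properties` is an illustrative helper, not part of PnP.PowerShell. Note that `date.fromisoformat` accepts only YYYY-MM-DD before Python 3.11 and a wider set of ISO 8601 forms from 3.11 on.

```python
from datetime import date


def validate_properties(props: dict) -> list:
    """Return a list of problems found in the key/value pairs (empty if none)."""
    problems = []
    for key, value in props.items():
        if not value.strip():
            problems.append(f"{key}: value is empty")
        elif key.endswith("Date"):
            # Values destined for a RefinableDate property should be ISO 8601.
            try:
                date.fromisoformat(value)
            except ValueError:
                problems.append(f"{key}: {value!r} is not an ISO 8601 date (YYYY-MM-DD)")
    return problems


wallaby = {
    "customDepartment": "Marketing",
    "customSiteType": "project",
    "customProjectEndDate": "2023-01-01",
}
print(validate_properties(wallaby))  # []
print(validate_properties({"customProjectEndDate": "01/01/2023"}))
```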

We can then use Get-PnPPropertyBag to verify the properties were successfully added:

Use Get-PnPPropertyBag to verify the custom properties have been added.
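
Checking the output by eye works for one site. When verifying many sites, a small comparison helps. This minimal Python sketch assumes you have already exported each site’s property bag into a Python dict, for example by saving the Get-PnPPropertyBag output to JSON and loading it. That export step is not shown. The helper `diff_property_bag` is hypothetical, and the comparison is exact, including case.

```python
def diff_property_bag(expected: dict, actual: dict) -> dict:
    """Report custom properties that are missing or hold a different value.

    Extra keys in `actual` (such as built-in system properties) are ignored.
    """
    missing = sorted(k for k in expected if k not in actual)
    mismatched = {
        k: (expected[k], actual[k])
        for k in expected
        if k in actual and actual[k] != expected[k]
    }
    return {"missing": missing, "mismatched": mismatched}


expected = {
    "customDepartment": "Marketing",
    "customSiteType": "project",
    "customProjectEndDate": "2023-01-01",
}
# Hypothetical export from a site where one value was mistyped and one key is missing.
actual = {
    "customDepartment": "Marketing",
    "customSiteType": "projet",
    "exampleSystemKey": "ignored",
}
print(diff_property_bag(expected, actual))
# {'missing': ['customProjectEndDate'], 'mismatched': {'customSiteType': ('project', 'projet')}}
```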

Step 2: Mapping the refinable managed properties

As we described above, once we’ve added the custom properties to the site’s property bag, the SharePoint search crawl process will generate a new tenant-level crawled property (if one doesn’t already exist). This requires the site to be crawled, so it may take some time. Once the crawled property has been generated, it can be viewed within your tenant’s SharePoint search schema:

https://<tenant>-admin.sharepoint.com/_layouts/15/searchadmin/ta_listcrawledproperties.aspx?level=tenant

A tenant-level crawled property is created for each custom property added, but they are not mapped to any managed property.

In the image above, notice that the crawled properties have no mappings yet. We need to map each crawled property to a refinable managed property, which assigns a data type and makes the data queryable based on that type.

To do that, select one of the newly created crawled properties to open its settings. Then, under “Mappings to managed properties”, search for and choose an applicable refinable managed property. You’ll need to do this for each custom property you created but, as mentioned earlier, only once per property.

In order to make a crawled property queryable, you must map it to a managed property which gives it a data type.

It is important to emphasize that the refinable managed property is what gives the crawled property a data type that we can query. So, when deciding which refinable managed property to use, consider how you want to query the value, then choose the type that makes the most sense. For example, a date supports more operators than a string, including range operators such as > and <. Given the three properties and values we created, we can map them to the following managed properties:

| Custom Property | Value | Data Type | Managed Property |
|---|---|---|---|
| customDepartment | Marketing | String | RefinableString00 |
| customSiteType | project | String | RefinableString01 |
| customProjectEndDate | 2023-01-01 | DateTime | RefinableDate00 |
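
When you have more than a handful of custom properties, it can help to plan the mappings before clicking through the search schema. The following Python sketch uses a simple heuristic based on the sample value: whole numbers map to Int, ISO 8601 dates map to Date, and everything else maps to String. It then picks the first unused index of that type. This is an assumption for illustration only. The type should ultimately follow how you intend to query the value, and you should pass any refinable managed properties already in use in your tenant’s search schema as `used`. The function names are hypothetical.

```python
from datetime import date

# Highest index available for each refinable type (e.g. RefinableString00-199).
MAX_INDEX = {"String": 199, "Int": 49, "Date": 19}


def suggest_type(value: str) -> str:
    """Heuristic: whole numbers -> Int, ISO 8601 dates -> Date, else String."""
    try:
        int(value)
        return "Int"
    except ValueError:
        pass
    try:
        date.fromisoformat(value)
        return "Date"
    except ValueError:
        return "String"


def assign_managed_properties(props: dict, used: set) -> dict:
    """Pick the first unused refinable managed property of the suggested type."""
    taken = set(used)
    assignments = {}
    for key, value in props.items():
        kind = suggest_type(value)
        for index in range(MAX_INDEX[kind] + 1):
            name = f"Refinable{kind}{index:02d}"
            if name not in taken:
                break
        else:
            raise RuntimeError(f"no unused Refinable{kind} property left")
        taken.add(name)
        assignments[key] = name
    return assignments


wallaby = {
    "customDepartment": "Marketing",
    "customSiteType": "project",
    "customProjectEndDate": "2023-01-01",
}
print(assign_managed_properties(wallaby, used=set()))
# {'customDepartment': 'RefinableString00', 'customSiteType': 'RefinableString01', 'customProjectEndDate': 'RefinableDate00'}
```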

The mappings can be viewed from the tenant-level crawled property page.

After you create the mappings, the site must be crawled again before the properties become queryable, which may take some time.

Step 3: Create the query

Finally, now that we’ve added the custom properties and mapped them to refinable managed properties so that they can be queried, we can create the query for use in an adaptive scope.

If we had used only refinable strings, the simple query builder would have been sufficient. Because we also used a refinable date, we must write a KQL query for the advanced query builder.

Returning to the example scenario outlined above, and given the custom properties we created, we can query the mapped refinable managed properties using the following KQL query:

RefinableString00=Marketing AND RefinableString01=project AND RefinableDate00>today
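
If you generate scopes for several departments or site types, building the query string programmatically avoids typos. The following is a minimal Python sketch that composes property restrictions joined with AND. It wraps values containing spaces in double quotes, as KQL requires, and rejects values that already contain double quotes rather than trying to escape them. The helper names `kql_condition` and `kql_all` are hypothetical. The sketch does not check that an operator suits the property’s data type.

```python
ALLOWED_OPERATORS = {"=", ":", "<>", ">", ">=", "<", "<="}


def kql_condition(managed_property: str, operator: str, value: str) -> str:
    """Build one property restriction, e.g. RefinableString00=Marketing."""
    if operator not in ALLOWED_OPERATORS:
        raise ValueError(f"unsupported operator: {operator}")
    if '"' in value:
        raise ValueError("values containing double quotes are not handled here")
    # KQL needs double quotes around values that contain spaces.
    if any(ch.isspace() for ch in value):
        value = f'"{value}"'
    return f"{managed_property}{operator}{value}"


def kql_all(*conditions: str) -> str:
    """Combine restrictions so that a site must match every one of them."""
    return " AND ".join(conditions)


query = kql_all(
    kql_condition("RefinableString00", "=", "Marketing"),
    kql_condition("RefinableString01", "=", "project"),
    kql_condition("RefinableDate00", ">", "today"),  # "today" is a KQL date keyword
)
print(query)
# RefinableString00=Marketing AND RefinableString01=project AND RefinableDate00>today
```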

Once an adaptive policy scope is created, it generally takes about 24 to 48 hours to start populating with sites that match the query. Rather than wait that long simply to confirm the query is valid, we can first test it using SharePoint search by navigating to:

https://<tenant-name>.sharepoint.com/Search

SharePoint search can be used to verify/validate KQL queries.

Now that we’ve confirmed the query works, we can confidently create a new adaptive policy scope, entering the same KQL query in the advanced query builder of the adaptive scope wizard:

Creating a SharePoint site scope using KQL

Automating the process

As you can see, this process is very manual and would be extremely time-consuming to perform across a large number of sites.

For existing sites, we have an example script that can export all existing sites and allow you to set a custom property on any number of them: https://aka.ms/BulkPropertyBagScripts
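
As a rough illustration of the bulk idea, and not the linked script, the following Python sketch turns a CSV of `SiteUrl,Key,Value` rows into a PowerShell script. For each site, the script connects and then calls Set-PnPAdaptiveScopeProperty once per key. The CSV layout and the function names `ps_quote` and `build_script` are hypothetical. Values are wrapped in PowerShell single quotes with embedded single quotes doubled. Signing in interactively for every site is impractical at scale, so for many sites you would likely reuse an authenticated connection. This sketch keeps the `-Interactive` form shown earlier for simplicity.

```python
import csv
import io
from collections import defaultdict


def ps_quote(text: str) -> str:
    """Single-quote a string for PowerShell, doubling any embedded single quotes."""
    return "'" + text.replace("'", "''") + "'"


def build_script(csv_text: str) -> str:
    """Turn rows of SiteUrl,Key,Value into Set-PnPAdaptiveScopeProperty calls."""
    by_site = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        by_site[row["SiteUrl"]].append((row["Key"], row["Value"]))

    lines = []
    for site, pairs in by_site.items():
        # One connection per site; the cmdlets that follow act on that site.
        lines.append(f"Connect-PnPOnline -Url {ps_quote(site)} -Interactive")
        for key, value in pairs:
            lines.append(
                f"Set-PnPAdaptiveScopeProperty -Key {ps_quote(key)} -Value {ps_quote(value)}"
            )
    return "\n".join(lines)


csv_text = """SiteUrl,Key,Value
https://contoso.sharepoint.com/sites/wallaby,customDepartment,Marketing
https://contoso.sharepoint.com/sites/wallaby,customSiteType,project
https://contoso.sharepoint.com/sites/koala,customDepartment,Marketing
"""
print(build_script(csv_text))
```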

For future sites, we recommend implementing a site provisioning solution that builds custom properties into your workflow. The PnP provisioning framework is one option: https://aka.ms/PnP-ProvisioningFramework

—

We hope you found this blog post useful. Thank you for reading!
