by Scott Muniz | Mar 8, 2022 | Security, Technology

This article is contributed. See the original author and article here.

Mozilla has released security updates to address vulnerabilities in Firefox, Firefox ESR, and Thunderbird. An attacker could exploit some of these vulnerabilities to take control of an affected system.

CISA encourages users and administrators to review the Mozilla security advisories for Firefox 98, Firefox ESR 91.7, and Thunderbird 91.7 and apply the necessary updates.

by Contributed | Mar 8, 2022 | Technology


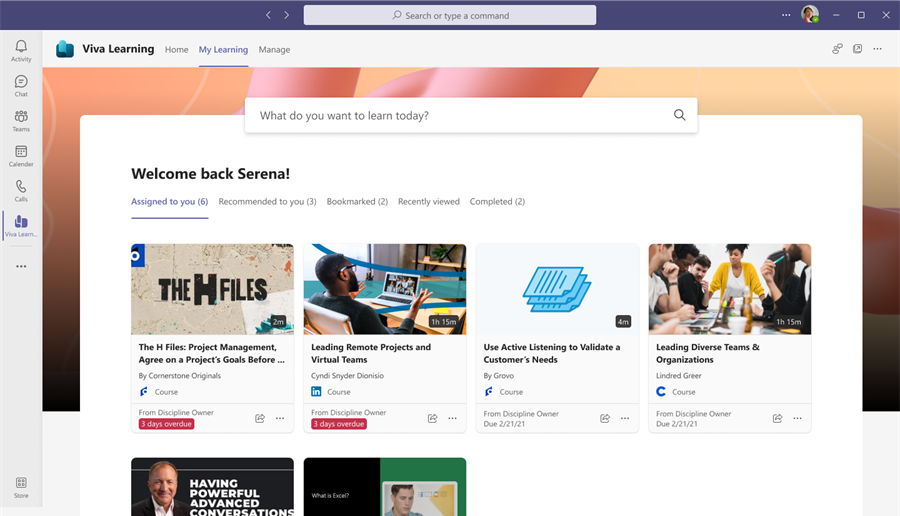

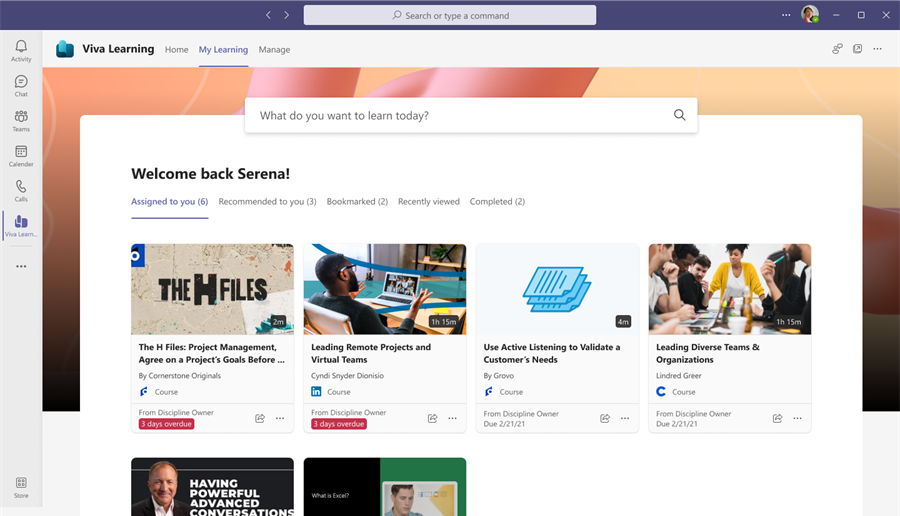

As we celebrate the first year of Microsoft Viva, it’s exciting to see so many companies seeking to foster human connection, align on a sense of mission, ensure employee wellbeing, and retain their best people. These are areas of critical importance for businesses of any size as we collectively navigate the new world of Hybrid Work.

Since Microsoft Viva Learning’s general availability in November 2021, we’ve seen employee upskilling, growth, and learning become a top priority for organizational leaders. If you missed it, make sure to watch our announcement at Ignite.

But as we look back with pride, we also look forward with excitement. And there’s plenty on the horizon to be excited about with Viva Learning! Below we have a set of partner announcements, early adopter evidence, and new resources to share.

Let’s get to the good stuff!

Partner Announcements

In addition to the Viva Learning partner integrations we’ve previously announced, we’re thrilled to add another big name to the list: Workday. With the planned integration of Viva Learning and Workday, we’ll deliver a personalized learning experience right in the flow of work with Viva and Microsoft 365. We expect this integration to be live in the coming months.

“Together, Workday and Microsoft will empower employees with a simpler, more connected learning experience in their natural flow of work for greater engagement and productivity.”

-Stuart Bowness, Vice President, Software Development, Experience Design & Development, Workday

We’re also pleased to announce that our existing Learning Management System (LMS) integrations have reached a new milestone with learner record and assignment syncing. This means assignments and completion records from Cornerstone OnDemand, Saba Cloud, and SAP SuccessFactors now surface directly in Viva Learning. This builds on our existing content catalog integration, which lets users search, discover, recommend, and share content hosted on their LMS right within Teams.

For detailed setup instructions to integrate your LMS with Viva Learning, visit the Viva Learning docs page.

Finally, we’re excited to share that our integrations with Edcast and OpenSesame are live in Viva Learning! This means you can now import content libraries from Edcast and OpenSesame into Viva Learning so users can search for, discover, share, and recommend their content throughout the platform.

In addition to the dedicated integrations mentioned above, we’re also hard at work building our APIs to deliver an open and extensible Employee Experience platform with Viva. Expect more details on Viva Learning APIs in the coming months.

Early adopter spotlight – Music Tribe

Music Tribe is a multinational leader in professional audio products and musical instruments with operations across the globe. Recognizing the importance of learning in attracting and retaining talent, Music Tribe decided to prioritize the growth and development of its Tribers (employees) by seeking a personalized, social, and easy-to-use learning system.

Music Tribe already uses Teams, so bringing learning to their users within their existing platform was a key decision point to deliver an engaging, inclusive, and inspiring learning experience aligned to their core values.

With Viva Learning and Go1 deployed together, Music Tribe employees can now define their own learning journeys in a social and collaborative experience, seamlessly integrated into their flow of work.

“As part of our Vision “We Empower. You Create”, we wanted to empower our Tribers (employees) by means of learning and self-improvement. We looked for tools that would aggregate content from different providers and deliver it to our Tribers in an easily accessible way. The Go1 + Viva Learning solution does exactly that, with Go1 bringing the content together and Viva Learning delivering it as a natural part of the workday. This tremendously helped our Tribers sourcing content while giving more time developing their learning pathways and making the most of their professional development on our Employee Experience platform,”

-Uli Behringer, Founder and CEO, Music Tribe

“We are thrilled to deliver the world’s largest workplace online learning library to Music Tribe employees in the flow of their work, via Microsoft Viva Learning. With Go1 and Viva Learning together, Music Tribe’s employees are able to access relevant learning content from hundreds of different learning providers, all from the Microsoft tools like Teams that they use every day.”

-Andrew Barnes, CEO, Go1

Data from Go1 shows that in less than a month from launch, over 40% of Music Tribe employees engaged with learning content, well above the industry norm for employee engagement with learning content. Read more about Music Tribe’s Viva Learning journey in Go1’s blog.

New Viva Learning resources

For a comprehensive product walkthrough, see our guide at aka.ms/VivaLearningDemo

For setup and admin documentation, see our docs pages at aka.ms/VivaLearningDocs

For the list of 125 free LinkedIn Learning courses included with Viva Learning, see this page

For adoption guidance and best practices, see our new page at aka.ms/VivaLearningAdoption

For an overview, licensing and pricing details, or to start a free trial, see aka.ms/VivaLearning

Please add your questions and thoughts in the comments section and as always we will see you soon – learning in the flow of work!

by Scott Muniz | Mar 8, 2022 | Security, Technology


The Federal Bureau of Investigation (FBI) has released a Flash report detailing indicators of compromise (IOCs) associated with ransomware attacks by RagnarLocker, a group of ransomware actors targeting critical infrastructure sectors.

CISA encourages users and administrators to review the IOCs and technical details in FBI Flash CU-000163-MW and apply the recommended mitigations.

by Scott Muniz | Mar 8, 2022 | Security, Technology


CISA has released an Industrial Control Systems Advisory (ICSA) detailing vulnerabilities in PTC Axeda agent and Axeda Desktop Server. Successful exploitation of these vulnerabilities—collectively known as “Access:7”—could result in full system access, remote code execution, read/change configuration, file system read access, log information access, or a denial-of-service condition.

CISA encourages users and administrators to review ICS Advisory ICSA-22-067-01 PTC Axeda Agent and Axeda Desktop Server for technical details and mitigations and the Food and Drug Administration statement for additional information.

by Contributed | Mar 7, 2022 | Technology


Protecting the backend with Azure API Management

Azure API Management (APIM) is an excellent choice for projects that work with APIs. Centralization, monitoring, management, and documentation are all capabilities that APIM helps you deliver. Learn more.

However, we often forget that our backends also need to be protected from external access. With that in mind, we’ll show a very simple way to protect your backend using a private endpoint, VNets, and subnets. This lets us block public internet calls to your backend while still allowing APIM to reach it simply and transparently.

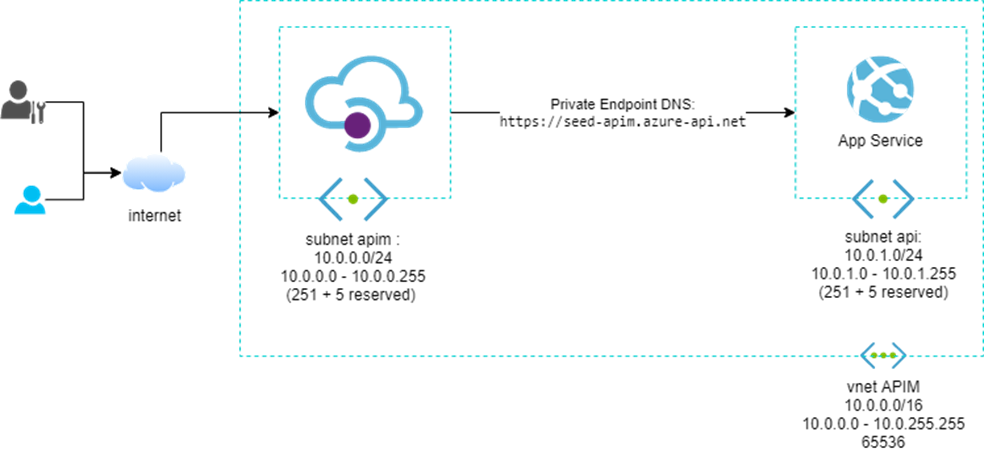

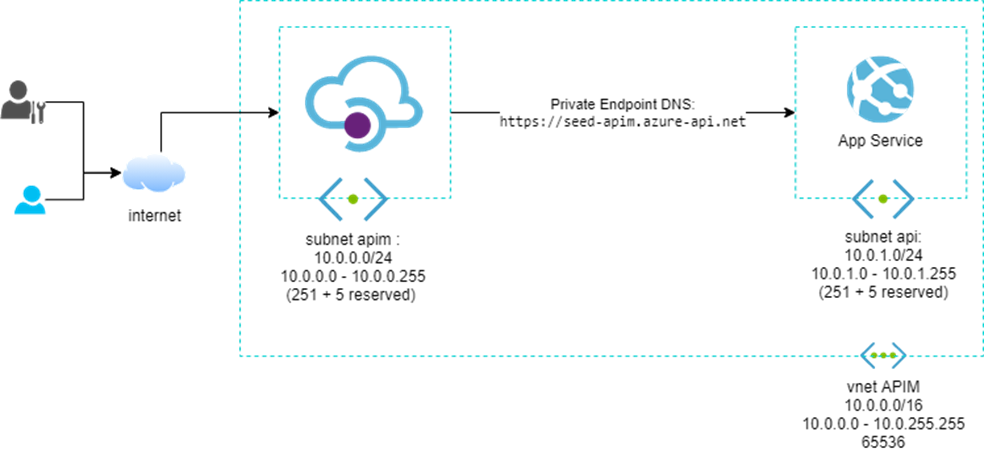

The first step is to understand the VNet, the virtual representation of a computer network. It lets Azure resources communicate securely with each other, with the internet, and with on-premises networks. As with any network, it can be segmented into smaller parts called subnets. This segmentation helps us define how many addresses are available in each subnet, avoiding conflicts between these networks and reducing their traffic. See the diagram below:

Diagram of a VNet with CIDR 10.0.0.0/16 and two subnets with CIDRs 10.0.0.0/24 and 10.0.1.0/24.
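To make the segmentation concrete, here is a small sketch using Python's standard `ipaddress` module. It checks that the two subnets from the diagram fit inside the VNet without overlapping and computes how many addresses each leaves for resources, assuming Azure's documented reservation of five addresses per subnet. The `check_layout` helper is hypothetical and only illustrates the arithmetic; it is not part of any Azure tool.

```python
import ipaddress

# Address space from the diagram: one VNet split into two /24 subnets.
vnet = ipaddress.ip_network("10.0.0.0/16")
subnets = [ipaddress.ip_network("10.0.0.0/24"), ipaddress.ip_network("10.0.1.0/24")]

# Azure reserves 5 addresses in every subnet: the network address, the default
# gateway, two addresses for Azure DNS mapping, and the broadcast address.
AZURE_RESERVED_PER_SUBNET = 5


def check_layout(vnet, subnets):
    """Return usable address counts per subnet, raising if the layout is invalid."""
    for s in subnets:
        if not s.subnet_of(vnet):
            raise ValueError(f"{s} is outside the VNet {vnet}")
    # Every pair of subnets must be disjoint, otherwise addresses would conflict.
    for i, a in enumerate(subnets):
        for b in subnets[i + 1:]:
            if a.overlaps(b):
                raise ValueError(f"{a} overlaps {b}")
    return {str(s): s.num_addresses - AZURE_RESERVED_PER_SUBNET for s in subnets}


print(check_layout(vnet, subnets))  # {'10.0.0.0/24': 251, '10.0.1.0/24': 251}
```

A /16 leaves room for 256 such /24 subnets, so later additions (for example a subnet for a gateway or firewall) can be carved out without renumbering the existing ones.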

It’s important to understand which VNet connection options (modes) APIM offers:

- Off: the default, with no virtual network and with the endpoints open to the internet.

- External: the developer portal and the gateway can be reached from the public internet, and the gateway can access resources both inside the virtual network and on the internet.

- Internal: the developer portal and the gateway can only be reached from the internal network, and the gateway can access resources both inside the virtual network and on the internet.
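The three modes can be summarized as a small lookup table. The sketch below is a hypothetical Python model of the list above (the `VnetMode` class and `modes_for` helper are illustrative names, not an Azure API); it simply answers which mode fits a given pair of requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VnetMode:
    """Simplified summary of one APIM virtual network mode, as listed above."""
    portal_and_gateway_public: bool  # reachable from the public internet?
    gateway_reaches_vnet: bool       # can the gateway call private resources in the VNet?


# Hypothetical table mirroring the list; not an official Azure data structure.
APIM_VNET_MODES = {
    "Off": VnetMode(portal_and_gateway_public=True, gateway_reaches_vnet=False),
    "External": VnetMode(portal_and_gateway_public=True, gateway_reaches_vnet=True),
    "Internal": VnetMode(portal_and_gateway_public=False, gateway_reaches_vnet=True),
}


def modes_for(need_public_gateway: bool, need_private_backend: bool):
    """Return the modes whose exposure matches the need and that can reach the backend."""
    return [
        name
        for name, mode in APIM_VNET_MODES.items()
        if mode.portal_and_gateway_public == need_public_gateway
        and (mode.gateway_reaches_vnet or not need_private_backend)
    ]


print(modes_for(need_public_gateway=True, need_private_backend=True))  # ['External']
```

A public gateway in front of a private backend is exactly the case in this walkthrough, which is why the APIM instance below is configured in External mode.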

In a production environment, this architecture would include an edge firewall such as Application Gateway or Front Door. These services increase the security of the path by providing automatic protection against common attacks such as SQL injection and cross-site scripting (XSS). For simplicity, however, we’ll go without that protection here.

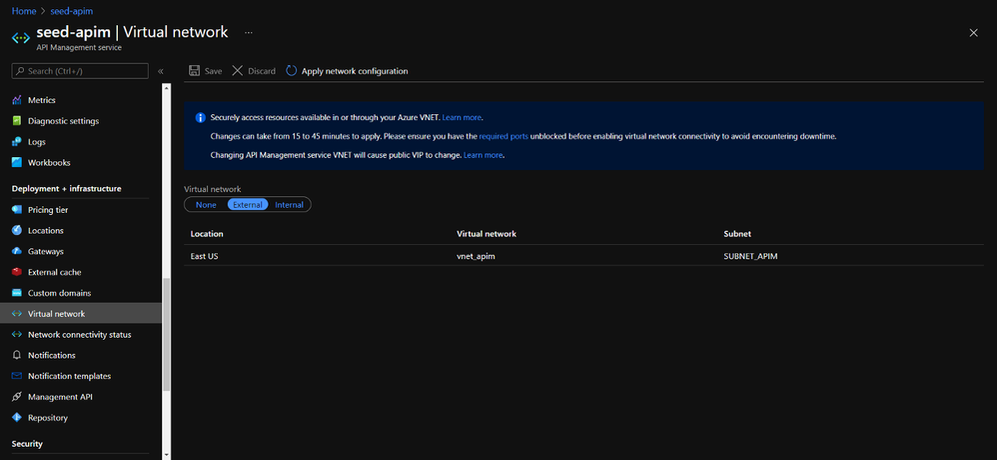

APIM’s network settings are configured from the Virtual Networking item in the side menu of the Azure management portal.

APIM in External mode on VNet vnet_apim and subnet SUBNET_APIM

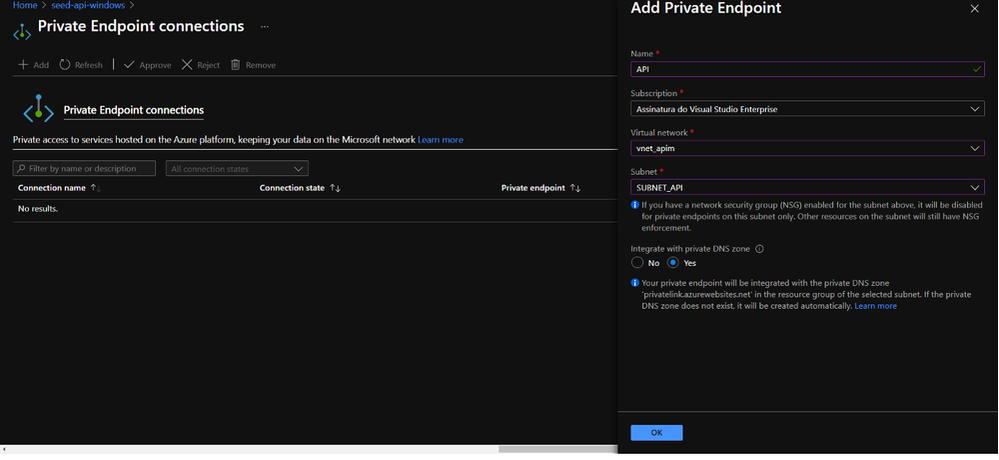

After configuring APIM, we need to configure the App Service. There, we open the Networking menu and set up a private endpoint; this is the resource that associates an App Service with a VNet and a subnet. During this setup, it’s important to select the option to integrate with a private DNS zone, which ensures name resolution inside the private network.

Azure portal, App Service private endpoint configuration

We can now confirm that a private DNS zone was created for the privatelink.azurewebsites.net domain, pointing to the App Service’s private IP. This lets APIM reach the App Service transparently, just as it did before the VNet was introduced.
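The effect of the private DNS zone can be pictured with a simplified resolver model. In this Python sketch, the app name `myapp` and the IP `10.0.1.4` are hypothetical placeholders, and real Azure DNS involves more steps: the public name is aliased (CNAME) to a `privatelink` name, and only clients in a VNet linked to the private zone receive the private A record.

```python
# Hypothetical records: "myapp" and 10.0.1.4 are placeholders, not values from this article.
PUBLIC_CNAMES = {"myapp.azurewebsites.net": "myapp.privatelink.azurewebsites.net"}
PRIVATE_ZONE = {
    "zone": "privatelink.azurewebsites.net",
    "a_records": {"myapp": "10.0.1.4"},  # private IP of the endpoint in 10.0.1.0/24
}


def resolve(hostname: str, vnet_linked_to_zone: bool):
    """Follow the CNAME and, only for VNet-linked clients, answer from the private zone."""
    name = PUBLIC_CNAMES.get(hostname, hostname)
    suffix = "." + PRIVATE_ZONE["zone"]
    if vnet_linked_to_zone and name.endswith(suffix):
        label = name[: -len(suffix)]
        return PRIVATE_ZONE["a_records"].get(label)
    # No private answer: clients outside the linked VNet never see the private IP.
    return None


print(resolve("myapp.azurewebsites.net", vnet_linked_to_zone=True))   # 10.0.1.4
print(resolve("myapp.azurewebsites.net", vnet_linked_to_zone=False))  # None
```

Because APIM keeps calling the same host name, no change to the API’s backend URL is needed; only the answer the resolver gives inside the VNet changes.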

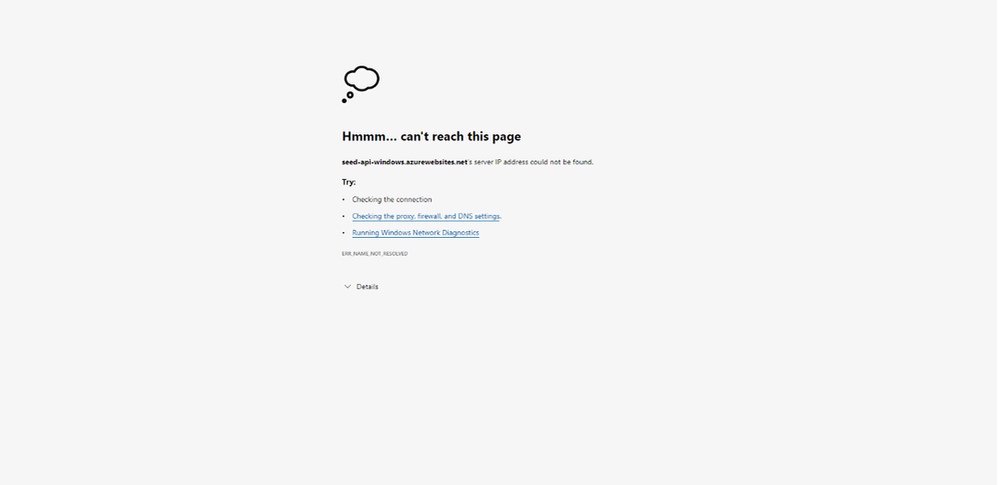

To be sure our App Service is really protected, we can try to open its address in a browser. Something like the image below should happen: the DNS name cannot be resolved.

Opening the App Service URL in the browser and receiving a DNS resolution error.

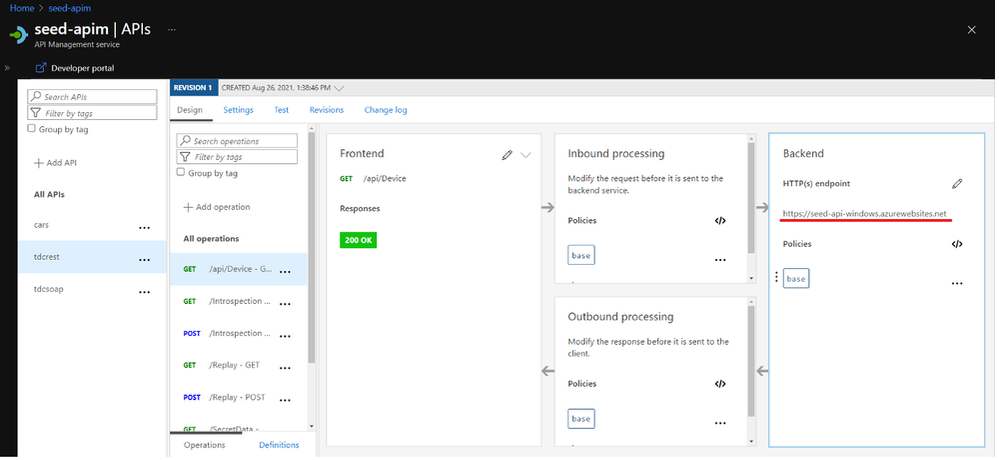

However, the APIs exposed through APIM keep working at the same address, because APIM is in the same VNet as the App Service and the private DNS zone resolves names to addresses in that VNet.

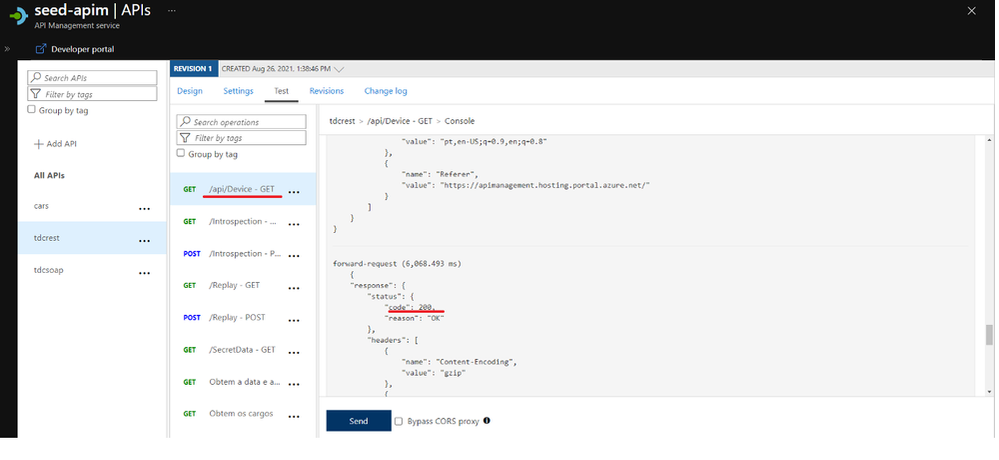

APIM screen with the same backend that cannot be reached from the browser.

Using APIM’s Test tool, we can confirm that APIM can reach the backend.

Exploring APIM’s Test option.
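Outside the portal, a client makes the same check by calling the gateway rather than the backend. The Python sketch below uses only `urllib` from the standard library; the gateway host `my-apim.azure-api.net`, the path, and the key are hypothetical placeholders, and it assumes the API requires a subscription key sent in APIM’s `Ocp-Apim-Subscription-Key` header.

```python
import urllib.request

# Hypothetical gateway URL: replace with your own APIM instance name.
GATEWAY = "https://my-apim.azure-api.net"


def build_gateway_request(path: str, subscription_key: str) -> urllib.request.Request:
    """Build a GET request that goes through the APIM gateway, never to the backend directly."""
    return urllib.request.Request(
        GATEWAY.rstrip("/") + "/" + path.lstrip("/"),
        headers={"Ocp-Apim-Subscription-Key": subscription_key},
        method="GET",
    )


def call(path: str, subscription_key: str) -> int:
    """Send the request and return the HTTP status (requires network access)."""
    # urlopen raises urllib.error.HTTPError for 4xx/5xx, so this returns only on success.
    with urllib.request.urlopen(build_gateway_request(path, subscription_key), timeout=10) as resp:
        return resp.status
```

Calling the backend’s own `azurewebsites.net` address from the same machine should still fail, while `call(...)` against the gateway succeeds, which mirrors what the browser test and the APIM Test tool show.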

Conclusion

Deploying an Azure Virtual Network provides enhanced security and isolation and lets you place your API Management service in a network protected from the internet. All access, such as ports and services, can be controlled by the VNet’s network security group (NSG), so you can ensure your APIs are reached only through the APIM gateway endpoint.
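NSG rules are evaluated in priority order (lower numbers first), and the first matching rule decides whether traffic is allowed. The Python sketch below models that behavior with two hypothetical inbound rules for the backend subnet: allow HTTPS from the APIM subnet `10.0.0.0/24`, deny everything else. It is a simplified illustration only; real NSGs also match on protocol, destination, and service tags, and carry built-in default rules.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    priority: int        # lower number is evaluated first, as in Azure NSGs
    source: str          # CIDR prefix of the callers this rule matches
    port: Optional[int]  # None means any port
    allow: bool


# Hypothetical inbound rules: only the APIM subnet may call the backend on 443.
RULES = [
    Rule(priority=100, source="10.0.0.0/24", port=443, allow=True),
    Rule(priority=4096, source="0.0.0.0/0", port=None, allow=False),
]


def is_allowed(src_ip: str, port: int, rules=RULES) -> bool:
    """Evaluate rules in priority order; the first match decides, like an NSG."""
    ip = ipaddress.ip_address(src_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if ip in ipaddress.ip_network(rule.source) and rule.port in (None, port):
            return rule.allow
    # Simplified: nothing matched. Real NSGs fall through to their default rules.
    return False


print(is_allowed("10.0.0.5", 443))     # True: APIM subnet over HTTPS
print(is_allowed("203.0.113.7", 443))  # False: internet caller
```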

References

- https://docs.microsoft.com/pt-br/azure/api-management/api-management-using-with-vnet

- https://docs.microsoft.com/en-us/azure/api-management/api-management-using-with-internal-vnet

- https://docs.microsoft.com/en-us/azure/virtual-network/virtual-networks-overview
