by Contributed | May 2, 2022 | Business, Microsoft 365, Technology

This article is contributed. See the original author and article here.

Just last month, we released our 2022 Annual Work Trend Index to better understand how work has changed over the past two years. The biggest takeaway is clear: we’re not the same people who went home to work in early 2020.

The post Using research to unlock the potential of hybrid work appeared first on Microsoft 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | May 2, 2022 | Technology

As continuous integration and continuous delivery (CI/CD) culture became popular, it brought the challenge of automating everything, with the aim of making processes easier and more maintainable for everyone.

One of the most valuable aspects of CI/CD is the integration of the Infrastructure as Code (IaC) concept. With IaC we can version our infrastructure, save money, and create new environments in minutes, among many other benefits. I won’t go deeper into IaC, but if you want to learn more, visit: The benefits of Infrastructure as Code

IaC can also bring some challenges when creating the resources needed for a project. This is mostly because writing all the infrastructure scripts is a task usually assigned to infrastructure engineers, and sometimes, for whatever reason, they are not available to help.

As a Data Engineer, I would like to help you understand the CI/CD process with a hands-on example. You’ll learn how to create Azure Databricks through Terraform and Azure DevOps, whether you are creating projects by yourself or supporting your Infrastructure Team.

In this article, you’ll learn how to integrate Azure Databricks with Terraform and Azure DevOps. The main reason for writing it is that I had some difficulty finding information that covers these three technologies together.

First of all, you’ll need some prerequisites:

- Azure Subscription

- Azure Resource Group (you can use an existing one)

- Azure DevOps account

- Azure Storage Account with a container named “tfstate”

- Visual Studio Code (it’s up to you)

So, let’s start and have some fun

Please, go ahead and download or clone this GitHub repository databrick-tf-ado and get demo-start branch.

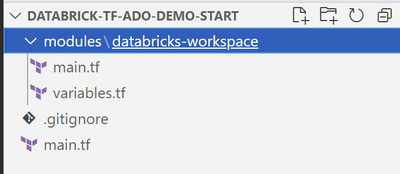

In the folder you’ll see a file named main.tf and 2 more files in the folder modules/databricks-workspace

It should be noted that this example is a basic one, so you can find more information of all the features for databricks in this link: https://registry.terraform.io/providers/databrickslabs/databricks/latest/docs

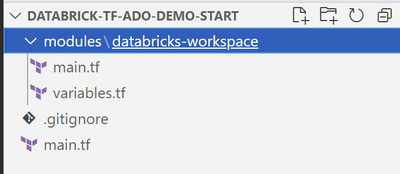

Now, go to the main.tf file in the root folder and find line 8 where the declaration of azurerm starts

backend "azurerm" {

resource_group_name = "demodb-rg"

storage_account_name = "demodbtfstate"

container_name = "tfstate"

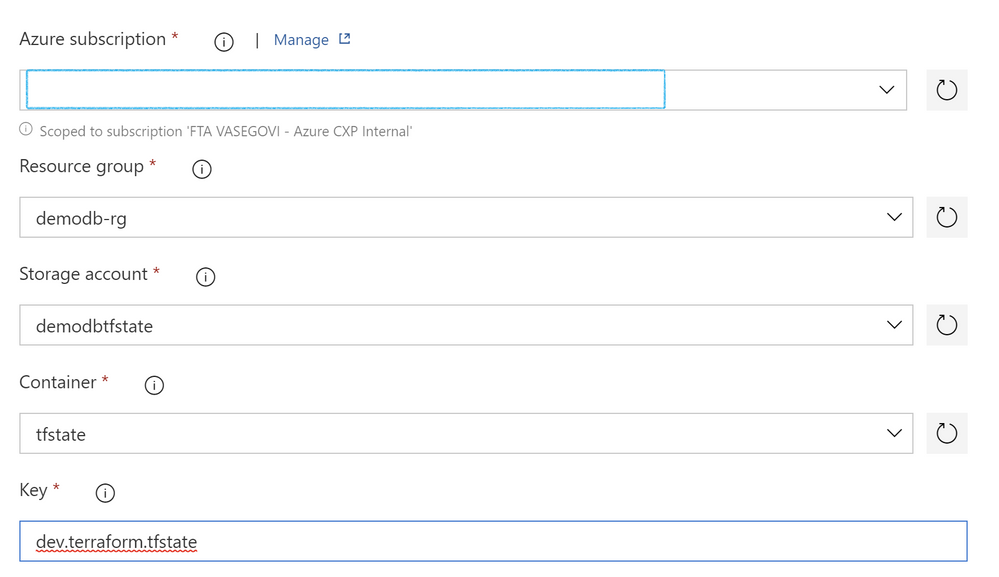

key = "dev.terraform.tfstate"

}

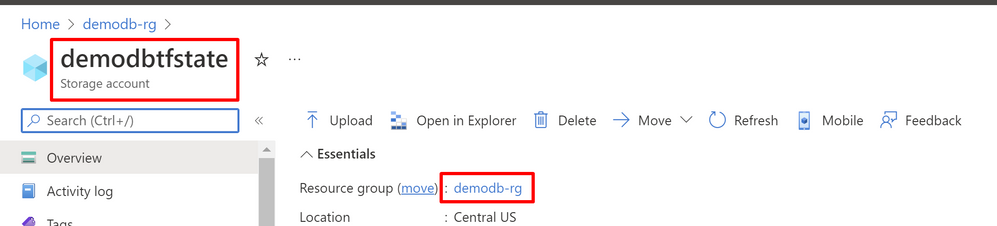

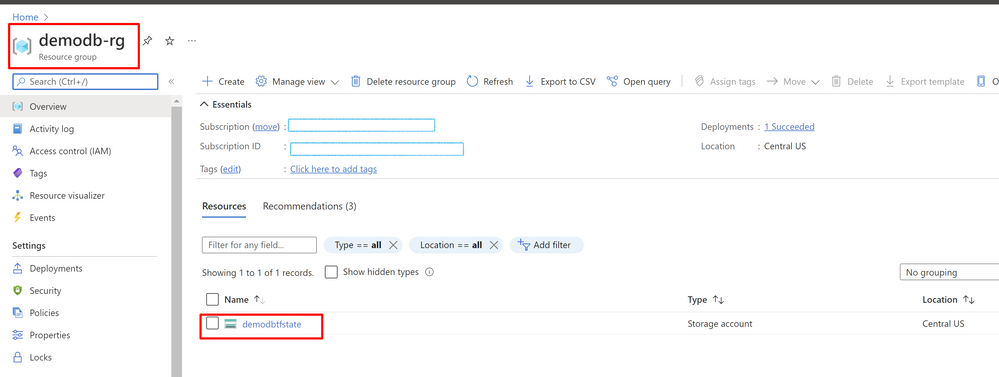

In that block, change the values of resource_group_name and storage_account_name to the values for your subscription. You can find those values in the Azure portal; the resource group and storage account must already exist.

The main.tf file in the root folder references a module called “databricks-workspace”. In that module’s folder you can see two more files: main.tf and variables.tf.

The module’s main.tf contains the definitions to create a Databricks workspace, a cluster, a scope, a secret, and a notebook, in the format that Terraform requires. variables.tf contains the values that could change depending on the environment.

Now that you have changed the values mentioned above, upload the code to a GitHub or Azure DevOps repository. If you need assistance with that, visit these pages: GitHub or DevOps.

At this point we have our GitHub or Azure DevOps repository configured with the names that we require, so let’s create our pipeline to deploy our Databricks environment into our Azure subscription.

First, go to your Azure subscription and check that you don’t already have a Databricks workspace called demodb-workspace.

You’ll need to install an extension so that Azure DevOps can run Terraform commands, so go to Terraform Extension.

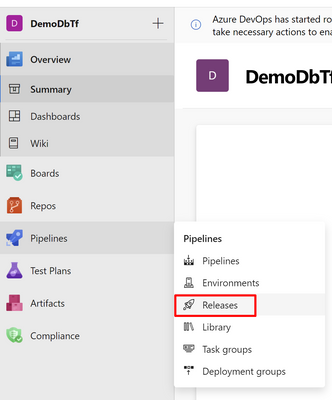

Once the extension is installed, go to your project in Azure DevOps, click Pipelines > Releases, and create a new pipeline. You are offered the option of creating the pipeline with YAML or with the Editor; I’ll choose the Editor so we can see the steps more clearly.

In the Artifacts section of the pipeline, click Add an artifact, select your source type (the provider where you uploaded your repository), fill in all the required information as in the image below, and click “Add”.

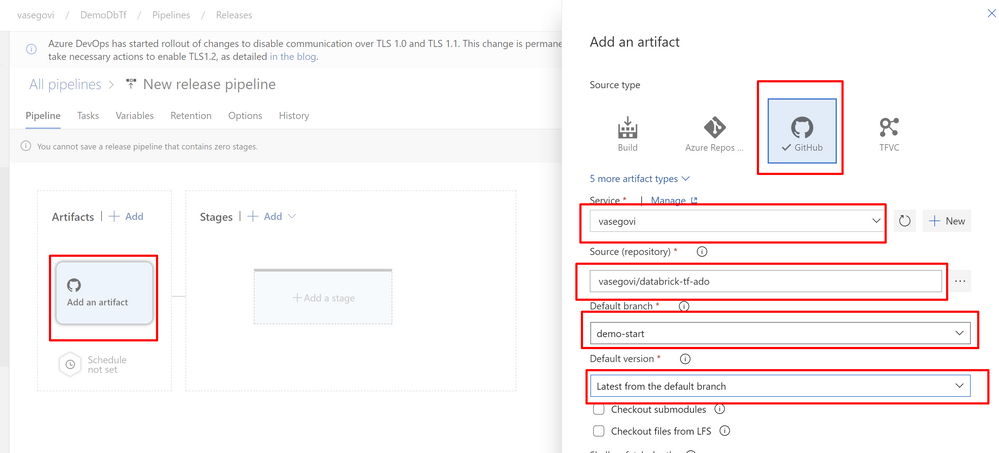

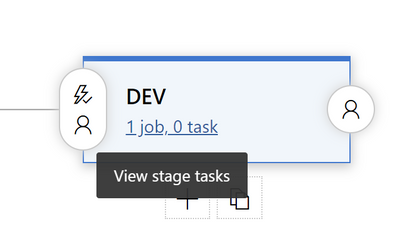

Then click on Add stage in Stages section and choose empty Job and name the stage as “DEV”

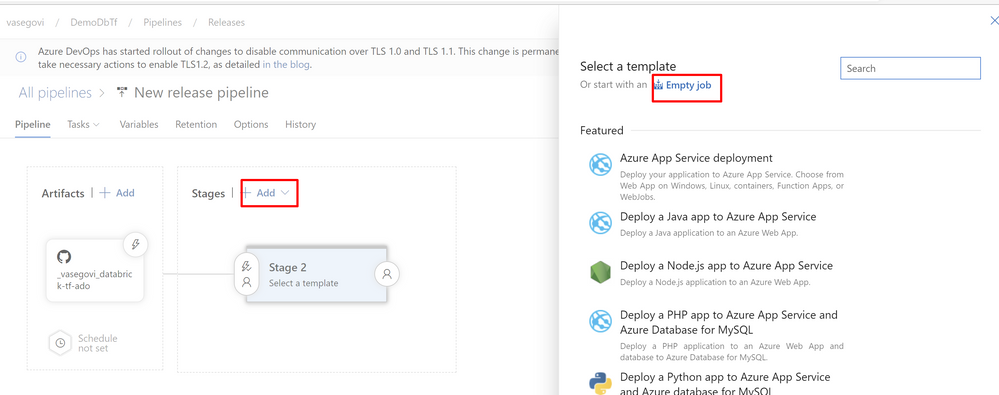

After that click on Jobs below the name of the stage

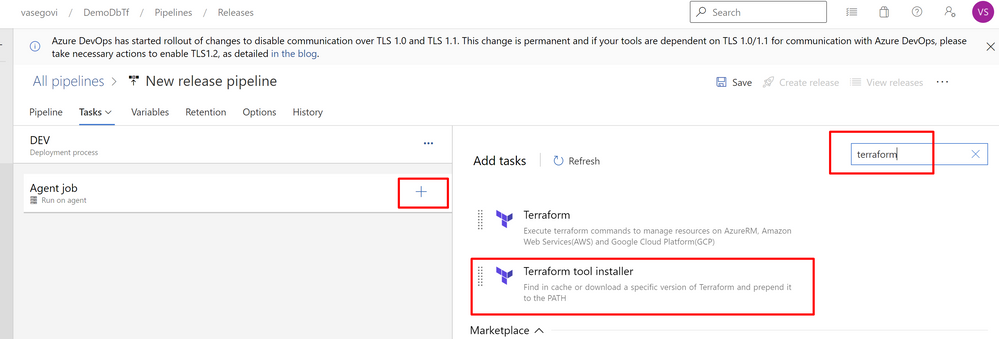

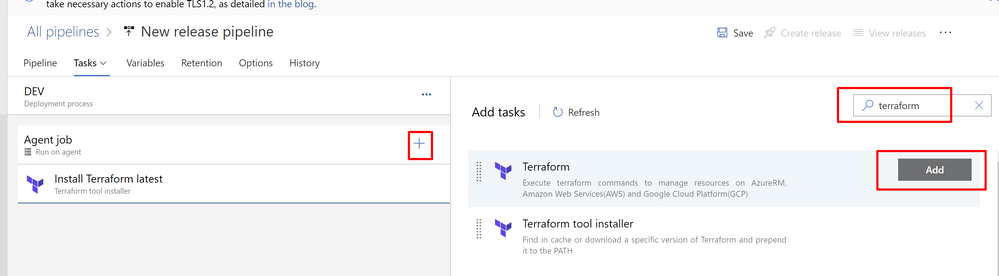

In the Agent job, press the “+” button and search for “terraform” select “Terraform tool installer”

Leave the default information

Then Add another 3 tasks of “Terraform” task

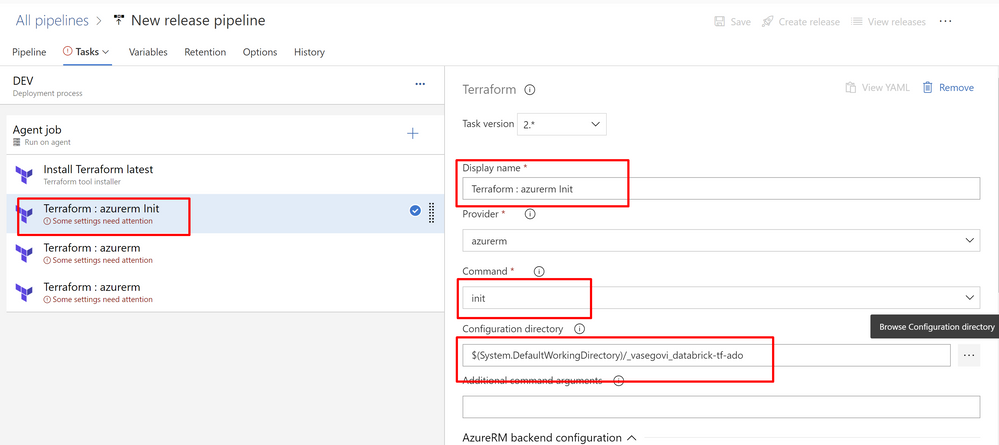

Name the second task after Installer as “Init” and fill the information required like the image:

For all these 3 tasks set the information of your subscription, resource group, storage account and container, and there’s also a value labeled key, there you have to set “dev.terraform.tfstate” is a key that terraform uses to keep tracking of your Infrastructure changes.

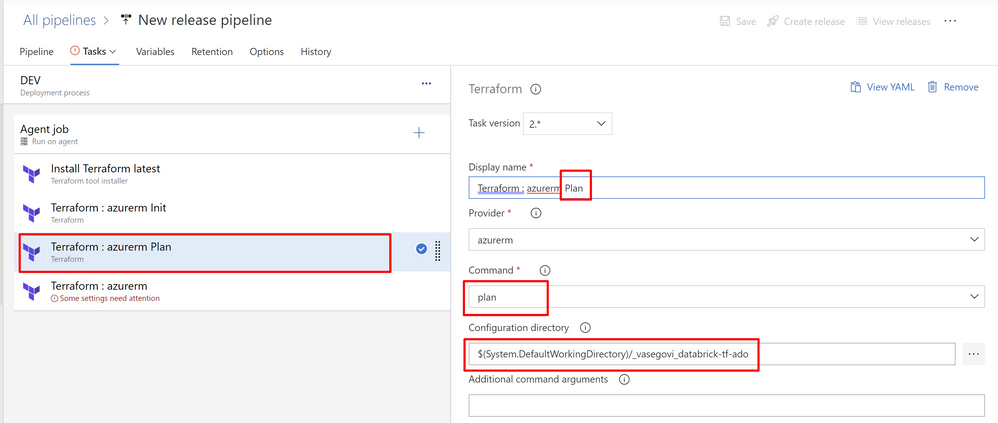

Name the next task “Plan”.

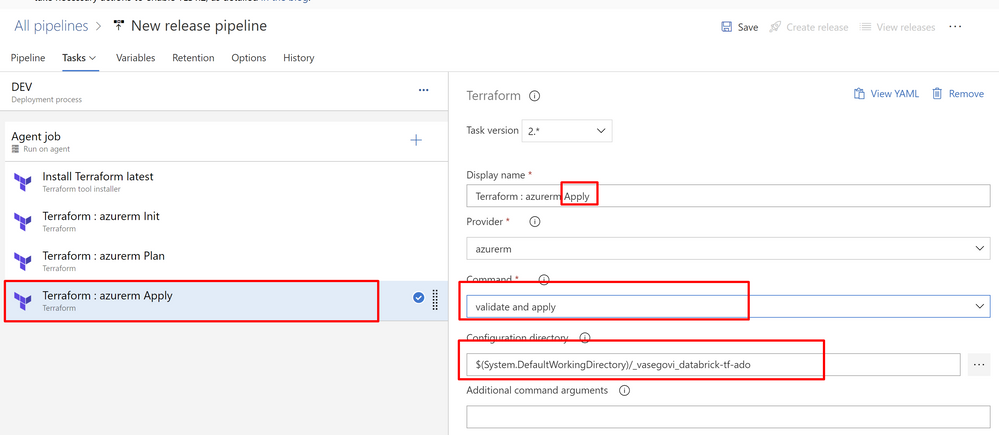

Name the last task “Apply”.

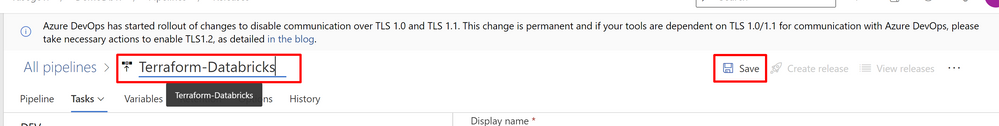
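The Init, Plan, and Apply tasks correspond roughly to three Terraform CLI commands. The following is a minimal Python sketch that only builds those command lines and does not run them. The function name `build_terraform_commands`, the plan file name `tfplan`, and the example values are assumptions for illustration; the Azure DevOps tasks may pass additional arguments.

```python
from typing import Dict, List


def build_terraform_commands(backend: Dict[str, str], plan_file: str = "tfplan") -> List[List[str]]:
    """Return the argument lists for terraform init, plan, and apply.

    `backend` holds the azurerm backend settings (resource group, storage
    account, container, key). Nothing is executed here.
    """
    # Each backend setting becomes one -backend-config=KEY=VALUE argument.
    init = ["terraform", "init", "-input=false"]
    for name, value in backend.items():
        init.append(f"-backend-config={name}={value}")

    # Save the plan to a file so that apply runs exactly what was planned.
    plan = ["terraform", "plan", "-input=false", f"-out={plan_file}"]
    apply = ["terraform", "apply", "-input=false", plan_file]
    return [init, plan, apply]


# Example values taken from the backend block in main.tf.
backend_settings = {
    "resource_group_name": "demodb-rg",
    "storage_account_name": "demodbtfstate",
    "container_name": "tfstate",
    "key": "dev.terraform.tfstate",
}

commands = build_terraform_commands(backend_settings)
for argv in commands:
    print(" ".join(argv))
```

Saving the plan to a file and applying that file is one common way to make sure the Apply step executes exactly what the Plan step showed.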

Now change the name of your pipeline and save it.

Then we only need to create a release to test it.

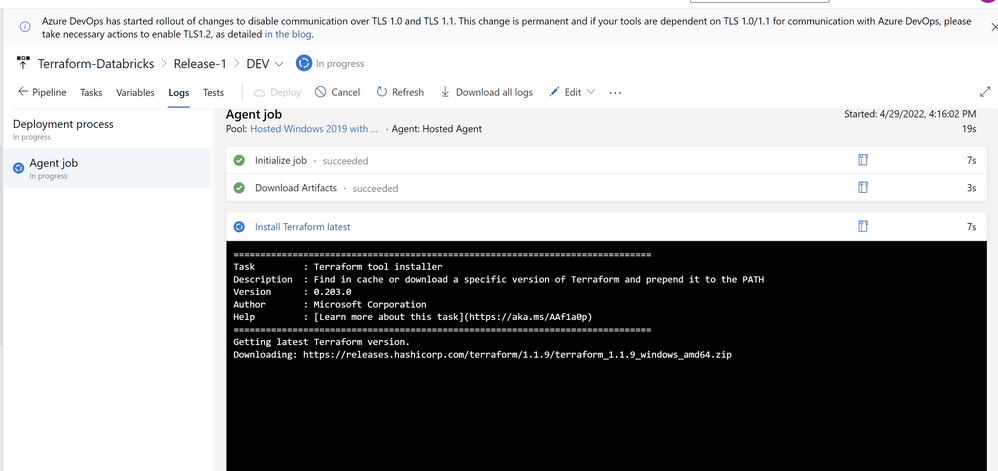

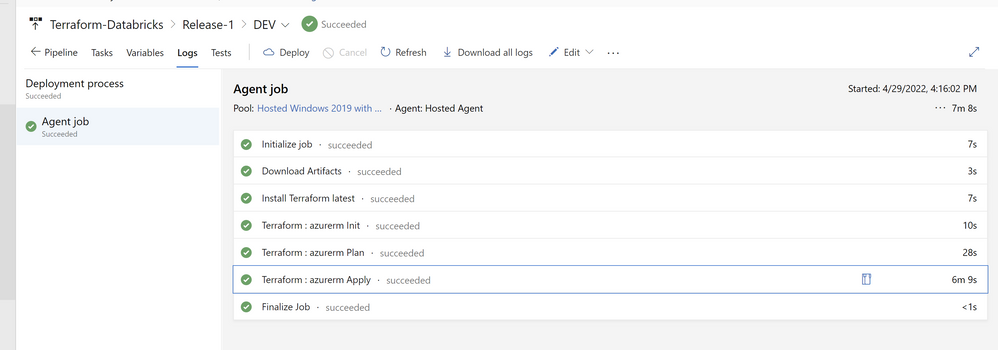

You can monitor the progress of the release.

When it finishes, if everything went well, you’ll see your pipeline marked as successful.

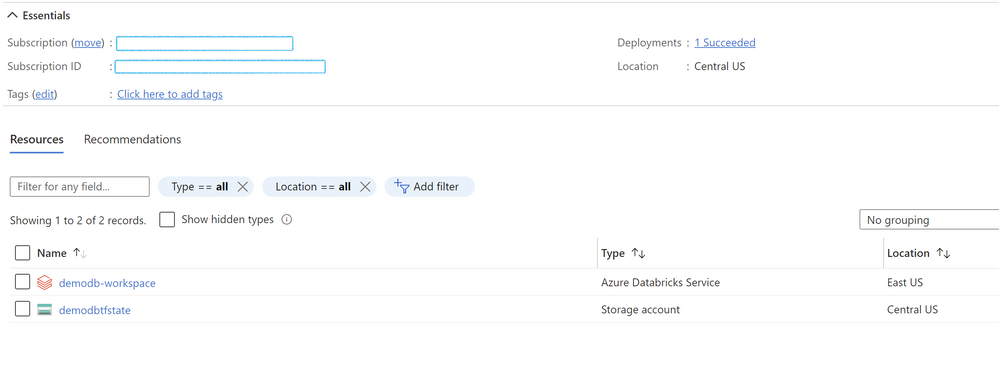

Lastly, let’s confirm in the Azure portal that everything was created correctly.

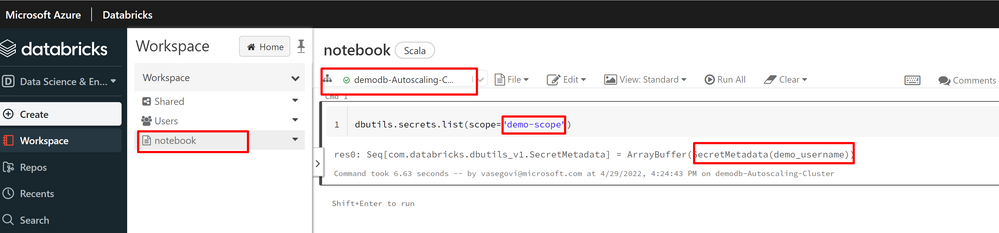

Then log in to your workspace and run the notebook, so you can test that the cluster, the scope, the secret, and the notebook are working correctly.

With that, you can easily keep your environments safe from unintended changes by contributors, because there is only one way for modifications to be accepted into your infrastructure.

Let us know if you have any comments or questions.

by Contributed | Apr 30, 2022 | Technology

Restrict ADF pipeline developers to creating connections using existing linked services

Azure Data Factory has some built-in roles, such as Data Factory Contributor. Once this role is granted to developers, they can create and run pipelines in Azure Data Factory. The role can be granted at the resource group level or above, depending on the assignable scope you want the users or group to have access to.

When there is a requirement that Azure Data Factory pipeline developers should not create or delete the linked services used to connect to the data sources they have access to, the built-in role (Data Factory Contributor) will not restrict them. This calls for the creation of a custom role. However, you need to be aware of the number of role assignments that you can have, which depends on your subscription. You can verify this by choosing your resource group and selecting Role assignments under Access Control (IAM).

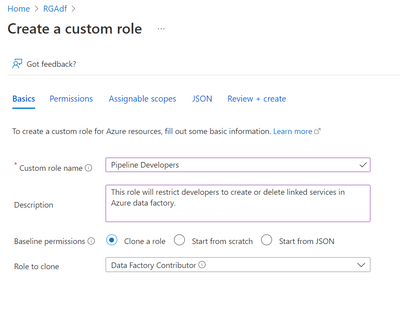

How do we create a custom role that allows Data Factory pipeline developers to create pipelines and use the existing linked services for connections, but does not let them create or delete linked services?

The following steps will help to restrict them:

- In the Azure portal, select the resource group where you have the data factory created.

- Select Access Control (IAM)

- Click + Add

- Select Add custom role

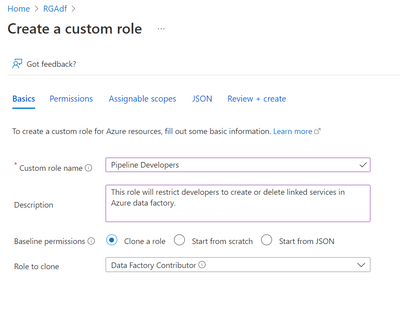

- Under Basics provide a Custom role name. For example: Pipeline Developers

- Provide a description

- Select Clone a role for Baseline permissions

- Select Data Factory Contributor for Role to clone

- Click Next

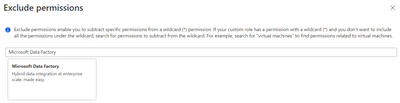

- Under Permissions select + Exclude permissions

- Under Exclude Permissions, type Microsoft Data Factory and select it.

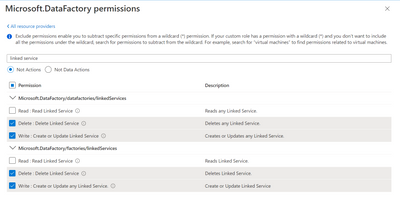

- Under Microsoft.DataFactory permissions, type Linked service

- Select Not Actions

- Select Delete: Delete Linked Service and Write: Create or Update any Linked service

- Click Add

- Click Next

- Under Assignable Scopes, confirm whether the role should be assignable at the resource group or the subscription level. Delete and add assignable scopes accordingly

- Review the JSON tab

- Click Review + create

- Once validated, click Create

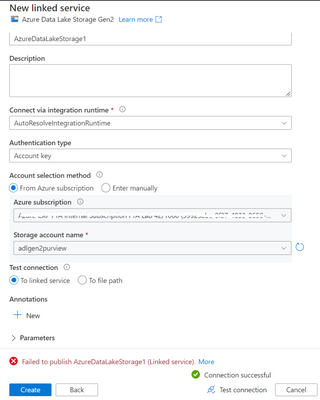
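The effect of the steps above can be sketched in Python: the role keeps the cloned actions and subtracts the two excluded linked service operations through NotActions. This is a simplified illustration, not the exact JSON the portal generates. The scope placeholders, the single wildcard action, and the helper names `matches` and `is_allowed` are assumptions, and the operation names should be checked against what the JSON tab shows for your role.

```python
import json
from fnmatch import fnmatchcase
from typing import Dict, List

# Hypothetical scope; replace the placeholders with your own IDs.
SCOPE = "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"

# Simplified role definition: the real cloned role lists more actions.
custom_role: Dict = {
    "properties": {
        "roleName": "Pipeline Developers",
        "description": "Data Factory Contributor without linked service write/delete",
        "assignableScopes": [SCOPE],
        "permissions": [
            {
                "actions": ["Microsoft.DataFactory/factories/*"],
                "notActions": [
                    "Microsoft.DataFactory/factories/linkedservices/write",
                    "Microsoft.DataFactory/factories/linkedservices/delete",
                ],
                "dataActions": [],
                "notDataActions": [],
            }
        ],
    }
}


def matches(patterns: List[str], operation: str) -> bool:
    """Case-insensitive wildcard match of an operation against patterns."""
    return any(fnmatchcase(operation.lower(), p.lower()) for p in patterns)


def is_allowed(role: Dict, operation: str) -> bool:
    """An operation is allowed if it matches Actions and no NotActions."""
    for permission in role["properties"]["permissions"]:
        if matches(permission["actions"], operation) and not matches(
            permission["notActions"], operation
        ):
            return True
    return False


print(json.dumps(custom_role, indent=2))
print(is_allowed(custom_role, "Microsoft.DataFactory/factories/pipelines/write"))
print(is_allowed(custom_role, "Microsoft.DataFactory/factories/linkedservices/write"))
print(is_allowed(custom_role, "Microsoft.DataFactory/factories/linkedservices/read"))
```

In this sketch, writing pipelines and reading linked services remain allowed, while writing a linked service is blocked, which mirrors the behavior the custom role is meant to produce.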

Note: Once the custom role is created, you can assign a user or group to this role. If you log in to Azure Data Factory as such a user, you will still be able to start creating a linked service, but you will not be able to save or publish it.

by Contributed | Apr 29, 2022 | Technology

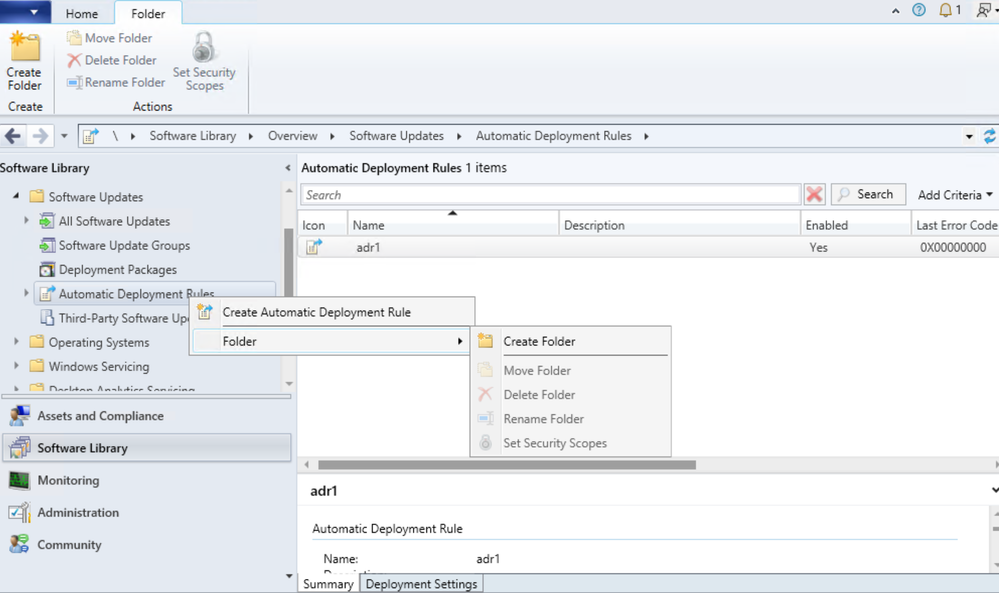

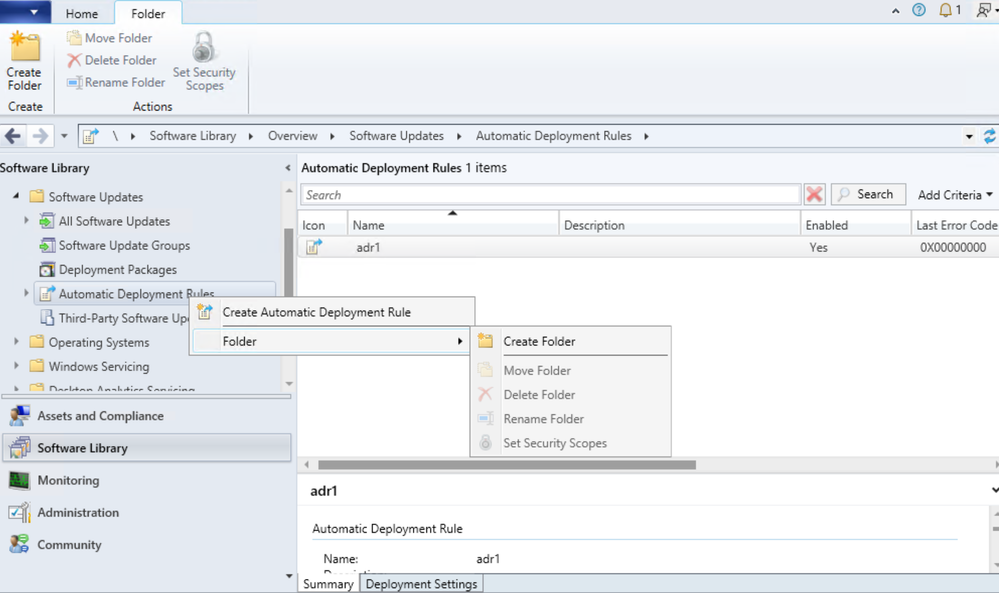

Update 2204 for the Technical Preview Branch of Microsoft Endpoint Configuration Manager has been released. In this release, administrators can now organize automatic deployment rules (ADRs) using folders. This feature enables better categorization and management of ADRs. Folder management is also supported with PowerShell cmdlets.

This preview release also includes:

Administration Service Management option

When configuring Azure Services, a new option called Administration Service Management is now available for enhanced security. Selecting this option allows administrators to segment their admin privileges between the cloud management gateway (CMG) and the administration service. When this option is enabled, access is restricted to administration service endpoints only. Configuration Manager clients will authenticate to the site using Azure Active Directory.

Note:

Currently, the administration service management option can’t be used with CMG.

For more details and to view the full list of new features in this update, check out our Features in Configuration Manager technical preview version 2204 documentation.

Update 2204 for Technical Preview Branch is available in the Microsoft Endpoint Configuration Manager Technical Preview console. For new installations, the 2202 baseline version of Microsoft Endpoint Configuration Manager Technical Preview Branch is available on the Microsoft Evaluation Center. Technical Preview Branch releases give you an opportunity to try out new Configuration Manager features in a test environment before they are made generally available.

We would love to hear your thoughts about the latest Technical Preview! Send us feedback directly from the console.

Thanks,

The Configuration Manager team

Configuration Manager Resources:

Documentation for Configuration Manager Technical Previews

Try the Configuration Manager Technical Preview Branch

Documentation for Configuration Manager

Configuration Manager Forums

Configuration Manager Support

by Contributed | Apr 29, 2022 | Technology

The purpose of this series of articles is to describe some of the details of how High Availability works and how it is implemented in Azure SQL Managed Instance in both Service Tiers – General Purpose and Business Critical.

In this post, we shall introduce some of the high availability concepts and then dive into the details of the General Purpose service tier.

Introduction to High Availability

The goal of a high-availability solution is to mask the effects of a hardware or software failure and to maintain database availability so that the perceived downtime for users is minimized. In other words, high availability is about putting a set of technologies into place before a failure occurs to prevent the failure from affecting the availability of data.

The two main requirements around high availability are commonly known as RTO and RPO.

RTO – stands for Recovery Time Objective and is the maximum allowable downtime when a failure occurs. In other words, it is how long your databases can take to be up and running again.

RPO – stands for Recovery Point Objective and is the maximum allowable data-loss when a failure occurs. Of course, the ideal scenario is not to lose any data, but a more realistic (and also ideal) scenario is to not lose any committed data, also known as Zero Committed Data Loss.

In SQL Managed Instance, the objective of the high availability architecture is to guarantee that your database is up and running 99.99% of the time (financially backed by an SLA), minimizing the impact of maintenance operations (such as patching, upgrades, etc.) and outages (such as underlying hardware, software, or network failures) that might occur.
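To make the 99.99% figure concrete, the short Python sketch below converts an availability target into the maximum downtime it permits. The function name and the 365-day and 30-day periods are assumptions chosen for illustration; the SLA document defines how availability is actually measured.

```python
def allowed_downtime_minutes(availability_percent: float, period_days: float) -> float:
    """Maximum downtime (in minutes) permitted by an availability target."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_percent / 100)


per_year = allowed_downtime_minutes(99.99, 365)
per_month = allowed_downtime_minutes(99.99, 30)
print(f"99.99% over 365 days: {per_year:.2f} minutes")
print(f"99.99% over 30 days:  {per_month:.2f} minutes")
```

Under these assumptions, a 99.99% target leaves roughly 52.56 minutes of downtime per year, or about 4.32 minutes in a 30-day month.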

High Availability in the General Purpose service tier

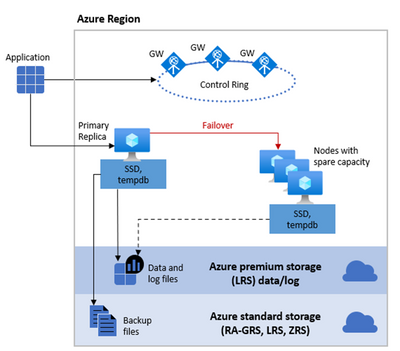

General Purpose service tier uses what is called the Standard Availability model. This architecture model is based on a separation of compute and storage. It relies on the high availability and reliability provided by the remote storage tier. This architecture is more suitable for budget-oriented business applications that can tolerate some performance degradation during maintenance activities.

The Standard Availability model includes two layers:

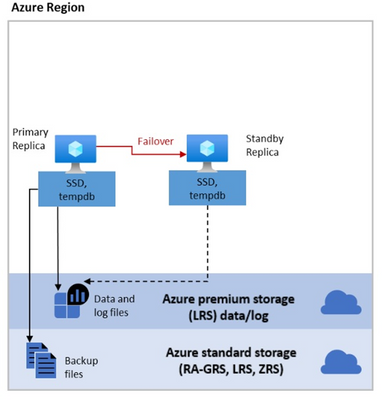

A stateless compute layer that runs the sqlservr.exe process and contains only transient and cached data, such as the tempdb database, which resides on the attached SSD disk, and memory structures such as the plan cache, the buffer pool, and the columnstore pool, which reside in memory.

A stateful data layer where the user database data and log files reside in Azure Blob storage. This type of repository has built-in data availability and redundancy features (Locally Redundant Storage or LRS). It guarantees that every record in the log file or page in the data file will be preserved even if the sqlservr.exe process crashes.

The behavior of this architecture is similar to an SQL Server FCI (SQL Server Failover Cluster Instance) but without all the complexity that we currently have on-premises or in an Azure SQL VM. In that scenario we would need to first create and configure a WSFC (Windows Server Failover Cluster) and then create an SQL Server FCI. All of this is done behind the scenes for you when you provision an Azure SQL Managed Instance, so you don’t need to worry about it. As you can see from the diagram above, we have shared storage functionality (again, like in an SQL Server FCI), in this case in Azure Premium Storage, and we also have a stateless node operated by Azure Service Fabric. The stateless node not only initializes the sqlservr.exe process but also monitors and controls the health of the node and, if necessary, performs the failover to another node from a pool of spare nodes.

All the technical aspects and fine-tuning of a cluster (i.e. quorum, lease, votes, network issues, avoiding split-brain, etc.) are covered & managed transparently by the Azure Service Fabric. The specific details of Azure Service Fabric go beyond the scope of this article, but you can find more information in the article Disaster recovery in Azure Service Fabric.

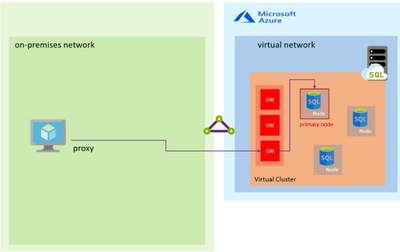

From the point of view of an application connected to an Azure SQL Managed Instance, you don’t have the concept of Listener (like in an Availability Groups implementation) or Virtual Name (like in an SQL Server FCI) – you connect to an endpoint via a Gateway. This is also an additional advantage since the Gateway is in charge of “redirecting” the connection to the Primary Node or a new Node in case of a Failover, so you don’t have to worry about changing the connection string or anything like that. Again, this is the same functionality that the Virtual Name or Listener provides, but more transparently to you. Also, notice in the Diagram above that we have redundancy on the Gateways to provide an additional level of availability.

Below is a diagram of the connection architecture, in this case using the Proxy connection type, which is the default:

In the Proxy connection type, the TCP session is established using the Gateway and all subsequent packets flow through it.

Storage

Regarding storage, we use the same concept of “shared storage” that is used in an FCI, but with additional advantages. In a traditional on-premises FCI, the storage becomes what is known as a “single point of failure”, meaning that if something happens to the storage, your whole cluster solution goes down. One way customers could work around this problem is with the “block replication” technologies of storage (SAN) providers, replicating this shared storage to another storage system (typically over a long distance for DR purposes). In SQL Managed Instance we provide this redundancy by using Azure Premium Storage with Locally Redundant Storage (LRS) for data and log files, and by keeping the backup files separate (following our best practices) in an Azure Standard Storage account that is also made redundant using RA-GRS (Read-Access Geo-Redundant Storage). To learn more about the redundancy of backup files, take a look at the post Configuring backup storage redundancy in Azure SQL.

For performance reasons, the tempdb database is kept locally on an SSD, where we provide 24 GB for each allocated vCore.

The following diagram illustrates this storage architecture:

It is worth mentioning that Locally Redundant Storage (LRS) replicates your data three times within a single data center in the primary region. LRS provides at least 99.999999999% (11 nines) durability of objects over a given year.

To find out more about redundancy in Azure Storage please see the following article in Microsoft documentation – https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy.

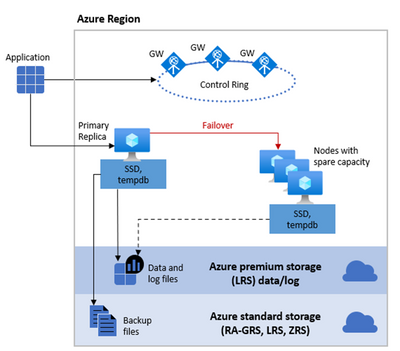

Failover

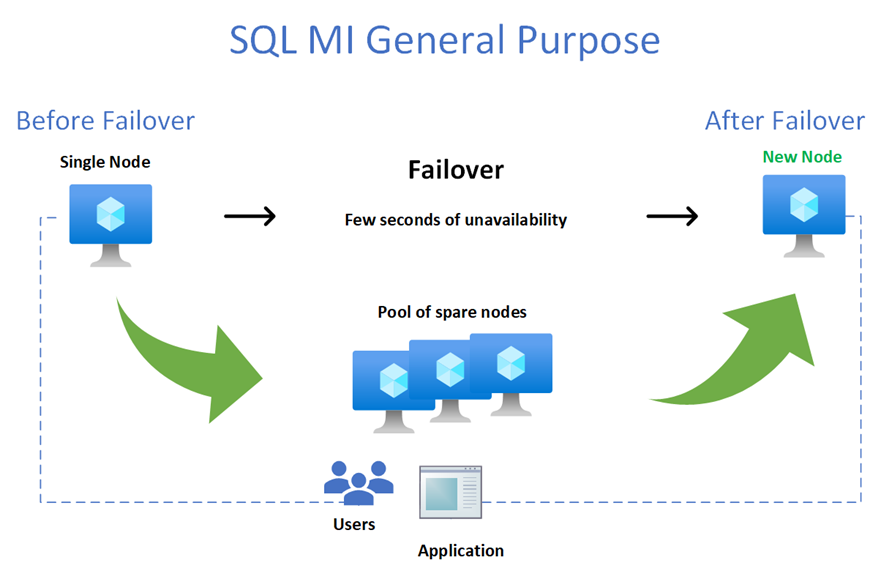

The failover process is straightforward. You can have either a “planned failover”, such as a user-initiated manual failover or a system-initiated failover that takes place because of a database engine or operating system upgrade, or an “unplanned failover” that takes place when a failure is detected (i.e. hardware, software, network failure, etc.).

Regarding an “unplanned” or “unexpected” failover, when there are critical errors in the functioning of Azure SQL Managed Instance, an API call is made to notify Azure Service Fabric that a failover needs to happen. Of course, the same happens when other errors (like a faulty node) are detected. In this case, Azure Service Fabric will move the stateless sqlservr.exe process to another stateless compute node with sufficient free capacity. Data in Azure Blob storage is not affected by the move, and the data and log files are attached to the newly initialized sqlservr.exe process. After that, a recovery process on the databases is initiated. This process guarantees 99.99% availability, but a heavy workload may experience some performance degradation during the transition, since the new sqlservr.exe process starts with a cold cache.

Since a failover can occur unexpectedly, you might need to determine whether such an event took place. You can determine the timestamp of the last failover with T-SQL, as described in the article How-to determine the timestamp of the last SQL MI failover from the SQL MI how-to series.

Also, you can see the failover event listed in the Activity Log in the Azure portal.

Below is a diagram of the failover process:

As you can see from the diagram, the failover process introduces a brief moment of unavailability while a new node from the pool of spare nodes is allocated. To minimize the impact of a failover, you need to incorporate retry logic in your application. This is normally accomplished by detecting the transient errors raised during a failover (4060, 40197, 40501, 40613, 49918, 49919, 49920, 11001) within a try-catch block, waiting a couple of seconds, and then retrying the connection (reconnecting). Alternatively, you could use the Microsoft.Data.SqlClient v3.0 Preview NuGet package in your application, which already incorporates retry logic. To learn more about this driver, see the following article: Introducing Configurable Retry Logic in Microsoft.Data.SqlClient v3.0.0
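The retry pattern described above can be sketched as follows. This is a minimal, driver-independent Python illustration: `SqlError` is a hypothetical stand-in for whatever exception your database driver raises, and `with_retry`, `max_attempts`, and `delay_seconds` are names chosen for this example only. The simulated `connect` function replaces a real connection attempt.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Transient error numbers listed in the article.
TRANSIENT_ERRORS = {4060, 40197, 40501, 40613, 49918, 49919, 49920, 11001}


class SqlError(Exception):
    """Hypothetical stand-in for a driver exception carrying an error number."""

    def __init__(self, number: int) -> None:
        super().__init__(f"SQL error {number}")
        self.number = number


def with_retry(operation: Callable[[], T], max_attempts: int = 5, delay_seconds: float = 2.0) -> T:
    """Run `operation`, retrying only when a transient error is raised."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except SqlError as error:
            # Give up on non-transient errors or when attempts are exhausted.
            if error.number not in TRANSIENT_ERRORS or attempt == max_attempts:
                raise
            time.sleep(delay_seconds)
    raise ValueError("max_attempts must be at least 1")


# Simulated connection that fails twice with error 40613 during a failover.
attempts = {"count": 0}


def connect() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise SqlError(40613)
    return "connected"


result = with_retry(connect, delay_seconds=0.01)
print(result, "after", attempts["count"], "attempts")
```

Retrying only on the listed error numbers keeps genuine failures, such as a login error, from being hidden behind repeated attempts. A fixed delay is used here for simplicity; a growing delay between attempts is a common alternative.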

Note that currently only one failover call is allowed every 15 minutes.

In this article we have introduced the concepts of high availability and explained how it is implemented for the General Purpose service tier. In the second part of this series, we will cover high availability in the Business Critical service tier.
