by Contributed | Aug 26, 2022 | Technology

This article is contributed. See the original author and article here.

by Contributed | Aug 25, 2022 | Business, Hybrid Work, Microsoft 365, Technology, Viva Goals, WorkLab


In today’s shifting macroeconomic climate, Microsoft is focused on helping organizations in every industry use technology to overcome challenges and emerge stronger. From enabling hybrid work to bringing business processes into the flow of work, Microsoft 365 helps organizations deliver on their digital imperative so they can do more with less.

The post From intuitive sharing with OneDrive to driving prioritization with Viva Goals—here’s what’s new in Microsoft 365 appeared first on Microsoft 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Aug 24, 2022 | Technology


The importance of fast database restore operations in any environment cannot be overstated: time spent restoring is time during which the business cannot carry on, because everything is offline.

There is a known DBA saying that “one should not have a backup strategy, but a recovery strategy”. In other words, taking backups isn’t enough: the restore process should also be tested regularly, so that you measure and know how long it takes to restore a production database if and when the need arises.
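As a minimal sketch of that idea, assuming you wrap your own restore procedure in a Python callable, a restore drill can time the restore and compare the result with a target recovery time objective (RTO). `restore_fn`, `run_restore_drill`, and `DrillResult` are hypothetical names for illustration, not part of any Azure API.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class DrillResult:
    duration_s: float  # measured wall-clock restore time, in seconds
    rto_s: float       # target recovery time objective, in seconds
    within_rto: bool   # True if the restore finished inside the RTO


def run_restore_drill(restore_fn: Callable[[], None], rto_s: float) -> DrillResult:
    """Time one test restore and check it against the RTO.

    restore_fn is a hypothetical callable that performs the restore
    (for example, through your own automation) and blocks until it finishes.
    """
    start = time.perf_counter()
    restore_fn()
    duration = time.perf_counter() - start
    return DrillResult(duration_s=duration, rto_s=rto_s, within_rto=duration <= rto_s)


if __name__ == "__main__":
    # Stand-in "restore" that only sleeps for 0.1 s, for demonstration.
    result = run_restore_drill(lambda: time.sleep(0.1), rto_s=1.0)
    print(f"restore took {result.duration_s:.2f} s, within RTO: {result.within_rto}")
```

Recording these results over time gives you the measured restore duration the saying is about, rather than an estimate.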

Azure SQL Managed Instance allows customers to recover their databases not only to the moments when FULL or DIFFERENTIAL backups were taken, but also to any given point in time, through its Point-In-Time Restore (PITR) functionality. Besides restoring data from recent backups, Azure SQL Managed Instance also supports recovering databases from discrete, individual backups kept in long-term retention (LTR) storage, which can be configured for up to 10 years.
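To illustrate how a point-in-time restore combines backup types, here is a simplified sketch of choosing a restore chain for a target time: the latest FULL backup at or before the target, then the latest DIFFERENTIAL taken after that FULL (if any), then every log backup needed to roll forward to the target. The `Backup` record, its fields, and `restore_chain` are hypothetical; Azure SQL Managed Instance performs this selection internally, and real restore logic also tracks LSNs and other details not modeled here.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Backup:
    kind: str           # "FULL", "DIFF", or "LOG" (hypothetical labels)
    finished: datetime  # time the backup completed


def restore_chain(backups: List[Backup], target: datetime) -> List[Backup]:
    """Pick the backups needed to restore to `target` (simplified model).

    Assumes each LOG backup covers the interval since the previous LOG backup,
    so we need every LOG finished after the base backup, up to and including
    the first LOG that finishes at or after `target`.
    """
    ordered = sorted(backups, key=lambda b: b.finished)

    fulls = [b for b in ordered if b.kind == "FULL" and b.finished <= target]
    if not fulls:
        raise ValueError("no FULL backup at or before the target time")
    base = fulls[-1]
    chain = [base]

    # Optional: the latest DIFF taken after that FULL, still before the target.
    diffs = [b for b in ordered
             if b.kind == "DIFF" and base.finished < b.finished <= target]
    if diffs:
        chain.append(diffs[-1])

    # Roll forward with log backups until the target time is covered.
    last_base = chain[-1].finished
    covered = False
    for b in ordered:
        if b.kind == "LOG" and b.finished > last_base:
            chain.append(b)
            if b.finished >= target:
                covered = True
                break
    if not covered and last_base < target:
        raise ValueError("log backups do not yet cover the target time")
    return chain


backups = [
    Backup("FULL", datetime(2022, 8, 21, 0, 0)),
    Backup("LOG", datetime(2022, 8, 21, 0, 10)),
    Backup("DIFF", datetime(2022, 8, 23, 0, 0)),
    Backup("LOG", datetime(2022, 8, 23, 0, 10)),
    Backup("LOG", datetime(2022, 8, 23, 0, 20)),
]
print([b.kind for b in restore_chain(backups, datetime(2022, 8, 23, 0, 15))])
# ['FULL', 'DIFF', 'LOG', 'LOG']
```

The DIFFERENTIAL backup lets the restore skip the log backups taken between the FULL and the DIFF, which is why a longer log chain generally means a longer restore.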

Once the restore process has been initiated, there is nothing a customer can do to speed it up: the process executes asynchronously and cannot be cancelled. Customers can scale up Azure SQL Managed Instance before a restore operation to increase restore speed, but that is only possible ahead of a planned restore, not when there is a sudden and unexpected need. In that case, no matter how fast the scaling operation executes, it would only add to the overall time of the database restore.

Improving restore speed

The SQL MI database restore process has multiple phases which, aside from the usual SQL Server restore operations, include registering databases as Azure assets for visibility and management within the Azure Portal. Additionally, the restore process forces a number of specific internal options and property changes, such as switching the database to the FULL recovery model and setting the PAGE_VERIFY database option to CHECKSUM. Finally, it performs a full backup to start the log chain, which enables point-in-time restore through the combination of full and log database backups.

The restore operation on SQL MI also includes log truncation, and the execution time for the truncation has been vastly improved, which means that customers can expect their entire database restore process to become faster on both service tiers.

Service tiers differences

It is important to understand that “faster” means different speeds on different service tiers, because the Business Critical service tier requires an additional operation. As described in High Availability in Azure SQL MI: Business Critical service tier, the Business Critical service tier runs on a synchronous Availability Group with 4 replicas, meaning the initial backup must be replicated to all the replicas to complete the setup. The current implementation of the restore process on the Business Critical service tier uses direct seeding of the Availability Group to distribute the newly restored database between replicas. As such, the restore operation will not complete until the backup has been restored to every replica, and a full backup can’t be taken until direct seeding to the secondary replicas finishes.

The following diagram shows a conceptual explanation of some of the most time-consuming execution phases of the database restore process on Azure SQL Managed Instance.

Although the boxes are of similar size, they don’t represent the real amount of time spent on each of those specific functions. The real time varies widely with the number of transaction log backups, the data compression ratio, and, of course, the write and log throughput available to the Azure SQL Managed Instance. Another important factor is the set of operations executed against the Azure SQL Managed Instance during the database restore process, whether directly by the user, automatically by the engine (such as the Tuple Mover for Columnstore Indexes), or through automation configured by the customer, such as index maintenance jobs.

Test setup & obtained results

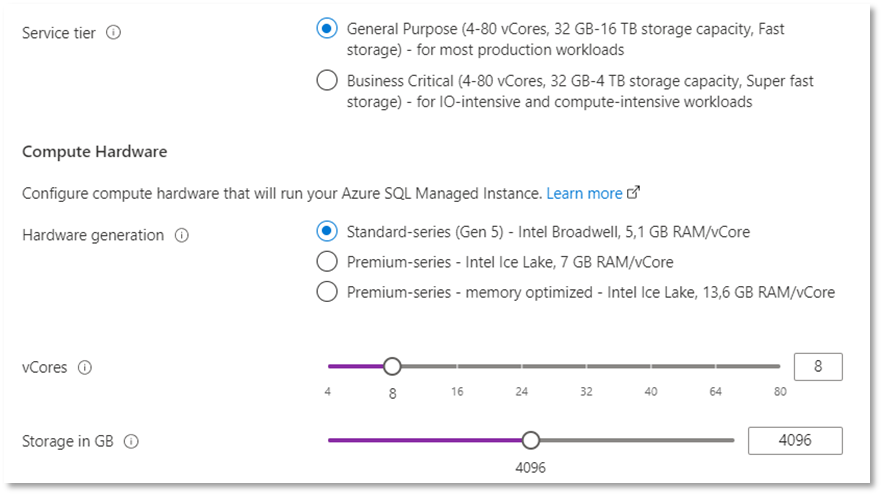

For the performance testing, we created two identical Azure SQL Managed Instances: one with the log truncation improvement included and activated, and one without it. Both instances use the following hardware specifications:

- Service tier: General Purpose

- Hardware generation: Standard Series (Gen 5)

- CPU vCores: 8

- Storage: 4 TB

- Region: East US 2

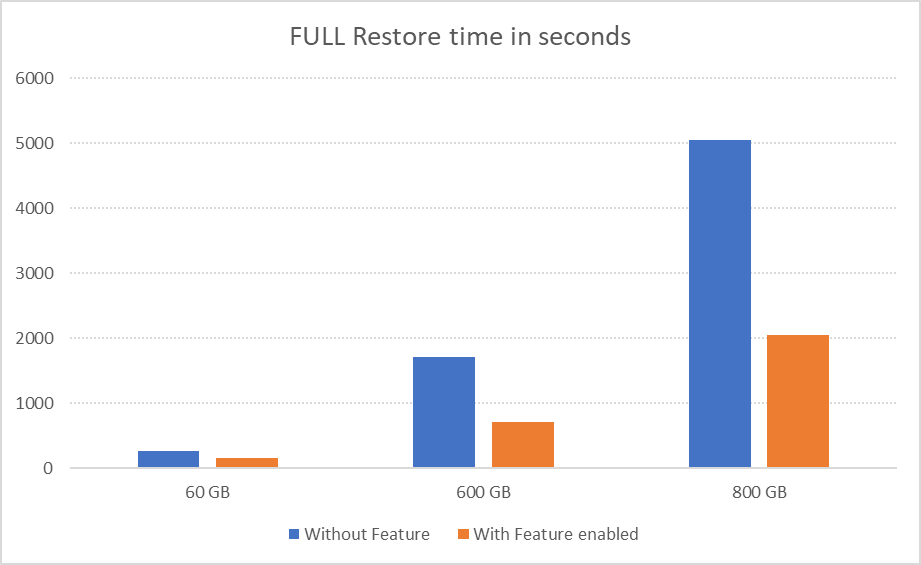

We tested three databases, each with a different size and a different fullness percentage, ranging from 60 GB to 800 GB:

- db1: 800 GB

- db2: 600 GB

- db3: 60 GB

Here are the results, in seconds, of multiple restore tests for each database, on both the default Azure SQL Managed Instance and the Azure SQL Managed Instance with the feature enabled.

| Configuration | 60 GB | 600 GB | 800 GB |
|---|---|---|---|
| Without feature | 266 s | 1718 s | 5048 s |
| With feature enabled | 155 s | 704 s | 2054 s |
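The relative gain can be derived directly from the table above. The short sketch below computes, for each database size, the percentage reduction in restore time and the speedup factor. The numbers are the measured values from this test, not a prediction for other workloads; the helper names are illustrative.

```python
# Measured restore times in seconds (from the table above):
# (without the feature, with the feature enabled)
results = {
    "60 GB": (266, 155),
    "600 GB": (1718, 704),
    "800 GB": (5048, 2054),
}


def reduction_pct(before: float, after: float) -> float:
    """Percentage of restore time saved."""
    return (before - after) / before * 100


def speedup(before: float, after: float) -> float:
    """How many times faster the restore became."""
    return before / after


for size, (without, with_feature) in results.items():
    print(f"{size}: {reduction_pct(without, with_feature):.1f}% less time, "
          f"{speedup(without, with_feature):.2f}x faster")
# 60 GB: 41.7% less time, 1.72x faster
# 600 GB: 59.0% less time, 2.44x faster
# 800 GB: 59.3% less time, 2.46x faster
```

In this test, the 60 GB database gained less in relative terms than the two larger databases.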

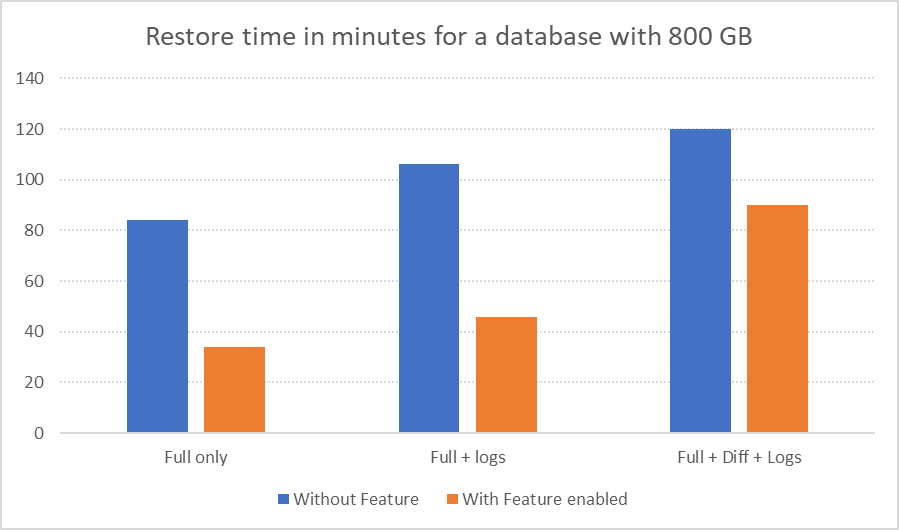

We went a step further with the 800 GB database, testing not just a restore from a fresh FULL backup but also other backup chains: a FULL backup plus a number of transaction log backups, and a FULL backup plus DIFFERENTIAL and transaction log backups. The observed results can be seen in the table below:

| Configuration | Full only | Full + logs | Full + Diff + Logs |
|---|---|---|---|
| Without feature | 84 min | 106 min | 120 min |
| With feature enabled | 34 min | 46 min | 90 min |
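The same arithmetic applied to the backup-chain results for the 800 GB database shows how the gain depends on the chain being restored. This is a small illustrative calculation over the measured values in the table above; `summarize` is a hypothetical helper name.

```python
# Restore times in minutes for the 800 GB database (from the table above):
# (without the feature, with the feature enabled)
timings = {
    "Full only": (84, 34),
    "Full + logs": (106, 46),
    "Full + Diff + Logs": (120, 90),
}


def summarize(timings: dict) -> dict:
    """Return (minutes saved, percent reduction) for each backup chain."""
    summary = {}
    for chain, (without, with_feature) in timings.items():
        saved = without - with_feature
        summary[chain] = (saved, round(saved / without * 100, 1))
    return summary


for chain, (saved, pct) in summarize(timings).items():
    print(f"{chain}: {saved} min saved ({pct}% less time)")
# Full only: 50 min saved (59.5% less time)
# Full + logs: 60 min saved (56.6% less time)
# Full + Diff + Logs: 30 min saved (25.0% less time)
```

In this test, the chain that includes a DIFFERENTIAL backup saw the smallest relative gain.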

Here are those results visualized for better understanding of the impact:

Benefit estimates

Exact benefits are very specific to the configuration and workload of each Azure SQL Managed Instance. On the General Purpose service tier, the size of database data and log files directly impacts the benefit, as it does for any kind of workload. For more details, please consult our documentation article How-to improve data loading performance on SQL Managed Instance with General Purpose service tier. Customers should also be mindful of the geographical region where their backup files are located and of the number of files: a high number of transaction log files significantly impacts restore times, as do the number and size of differential backups.

For databases smaller than 1 TB on the General Purpose service tier, we expect database restore speed to increase by 20% to 70%. For databases larger than 1 TB, expect restore speed improvements of 30% to 70%.

Overall, customers on both service tiers should see faster database backup restores, but the improvement will vary from case to case.

So, what is the catch, you might ask…?

How can you get an Azure SQL Managed Instance with this feature?

How can you enable faster restores?

The best news is that this feature is coming to all of our customers right now, and they won’t have to enable it: their restore operations will simply start working faster.

by Contributed | Aug 23, 2022 | Technology


Before we start, please note that a table of contents for all the sections of this blog and their various Purview topics is available at the following link:

Microsoft Purview- Paint By Numbers Series (Part 0) – Overview – Microsoft Tech Community

Disclaimer

This document is not meant to replace any official documentation, including those found at docs.microsoft.com. Those documents are continually updated and maintained by Microsoft Corporation. If there is a discrepancy between this document and what you find in the Compliance User Interface (UI) or inside of a reference in docs.microsoft.com, you should always defer to that official documentation and contact your Microsoft Account team as needed. Links to the docs.microsoft.com data will be referenced both in the document steps as well as in the appendix.

All of the following steps should be done with test data, and where possible, testing should be performed in a test environment. Testing should never be performed against production data.

Target Audience

The Information Protection section of this blog series is aimed at Security and Compliance officers who need to properly label data and encrypt it where needed.

Document Scope

This document is meant to guide an administrator who is “net new” to Microsoft E5 Compliance through using Compliance Manager to run an assessment.

Out-of-Scope

This document does not cover any other aspect of Microsoft E5 Purview, including:

- Data Classification

- Information Protection

- Data Loss Prevention (DLP) for Exchange, OneDrive, Devices

- Data Lifecycle Management (retention and disposal)

- Records Management (retention and disposal)

- eDiscovery

- Insider Risk Management (IRM)

- Priva

- Advanced Audit

- Microsoft Cloud App Security (MCAS)

- Information Barriers

- Communications Compliance

- Licensing

It is presumed that you have a pre-existing understanding of what Microsoft E5 Compliance does and how to navigate the User Interface (UI).

For details on licensing (i.e. which components and functions of Purview are in E3 vs E5) you will need to contact your Microsoft Security Specialist, Account Manager, or certified partner.

Overview of Document

This document will give a brief explanation of Compliance Manager and walk you through the basic tabs and aspects of the tool:

- Add Assessment

- Step through the assessment sections

  - Overview

  - Progress (tab)

  - Controls (tab)

  - Improvement Actions (tab)

  - Microsoft Actions (tab)

Use Case

An administrator wants to run their first Compliance Manager assessment.

Definitions

- Actions – the things that need to be done to mark a Control as completed.

- Assessments – these help you implement data protection controls specified by compliance, security, privacy, and data protection standards, regulations, and laws. Assessments include actions that have been taken by Microsoft to protect your data, and they’re completed when you take action to implement the controls included in the assessment.

- Assessment Templates – these templates track compliance with over 300 industry and government regulations around the world.

- Compliance Score – Compliance Manager awards you points for completing improvement actions taken to comply with a regulation, standard, or policy, and combines those points into an overall compliance score. Each action has a different impact on your score depending on the potential risks involved. Your compliance score can help prioritize which action to focus on to improve your overall compliance posture. You receive an initial score based on the Microsoft 365 data protection baseline. This baseline is a set of controls that includes key regulations and standards for data protection and general data governance.

- Controls – the various requirements in your tenant that must be satisfied to meet part of an assessment.

- Control Family – a group of Controls.

- Microsoft Actions – These are actions that Microsoft has performed inside of your tenant to help it meet a specific assessment.

- Progress – each assessment has a progress chart to help you visualize the progress you are making toward meeting the requirements of the assessment.

- Your Improvement Actions – These are actions that you and your organization must perform to meet a specific assessment/certification/regulation.
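To make the Compliance Score definition above concrete, here is a minimal sketch that treats a score as the share of available points earned by completed actions. The action names and point values below are hypothetical placeholders, and the "points achieved over points available" model is a simplifying assumption; Compliance Manager assigns its own points to each action based on risk, and this is not its implementation.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Action:
    name: str
    points: int      # hypothetical point value reflecting the action's risk
    completed: bool
    owner: str       # "Microsoft" or "Customer"


def compliance_score(actions: Iterable[Action]) -> float:
    """Percentage of available points earned by completed actions (simplified model)."""
    actions = list(actions)
    available = sum(a.points for a in actions)
    achieved = sum(a.points for a in actions if a.completed)
    return 0.0 if available == 0 else achieved / available * 100


actions = [
    Action("Microsoft action A (hypothetical)", 27, True, "Microsoft"),
    Action("Improvement action B (hypothetical)", 27, False, "Customer"),
    Action("Improvement action C (hypothetical)", 9, True, "Customer"),
    Action("Improvement action D (hypothetical)", 3, False, "Customer"),
]
print(f"{compliance_score(actions):.1f}%")  # 54.5%
```

Under this model, completing action B (27 points) would lift the score to 95.5%, while completing action D (3 points) would only reach 59.1%, which is why higher-impact actions are worth prioritizing.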

Notes

Types of Assessments in Compliance Manager:

This is taken from the official Microsoft documentation (see the link in the Appendix and Links section):

“Compliance Manager can be used to assess different types of products. All templates, except the Microsoft Data Protection Baseline default template, come in two versions:

- A version that applies to a pre-defined product, such as Microsoft 365, and

- A universal version that can be tailored to suit other products.

Assessments from universal templates are more generalized but offer expanded versatility, since they can help you easily track your organization’s compliance across multiple products.

Note that US Government Community (GCC) Moderate, GCC High, and Department of Defense (DoD) customers cannot currently use universal templates.”

Note – for this blog entry, we will not go into any further discussion or comparison of Microsoft and universal assessments.

Pre-requisites

It is recommended you read the official Microsoft documentation on Compliance Manager and the Paint By Numbers – Compliance Manager – Overview (Part 9a) blog.

Add an Assessment

Now that you have a basic understanding of Compliance Manager (See Part 9a – Compliance Manager – Overview blog entry), we will add an assessment.

- Go to Compliance Manager – Assessments

- Click Add assessment.

- Click Select template.

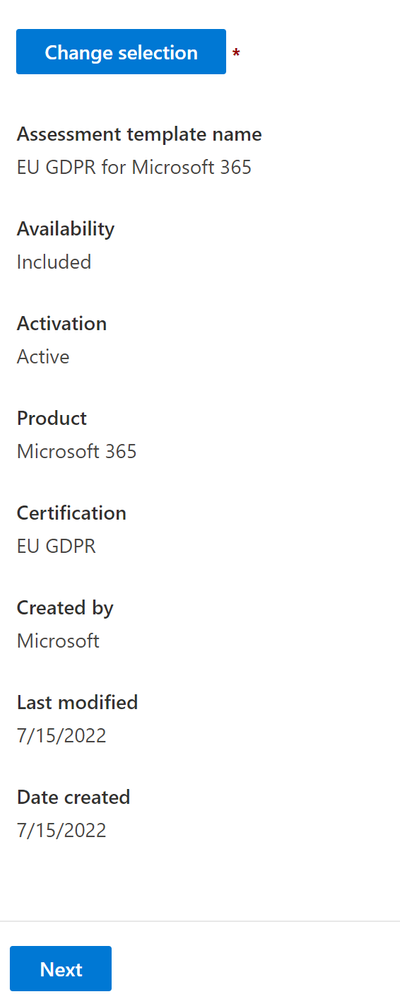

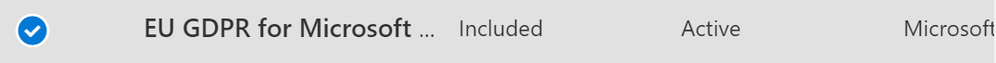

- At the time of writing this blog, there are 700+ templates. Search the list and see which assessment is most applicable for your need, regulation, certification, etc. I have chosen the EU GDPR for Microsoft assessment for this blog entry. Once you have the assessment you want, click Save.

- You will then see your assessment populated with information on the assessment. Click Next.

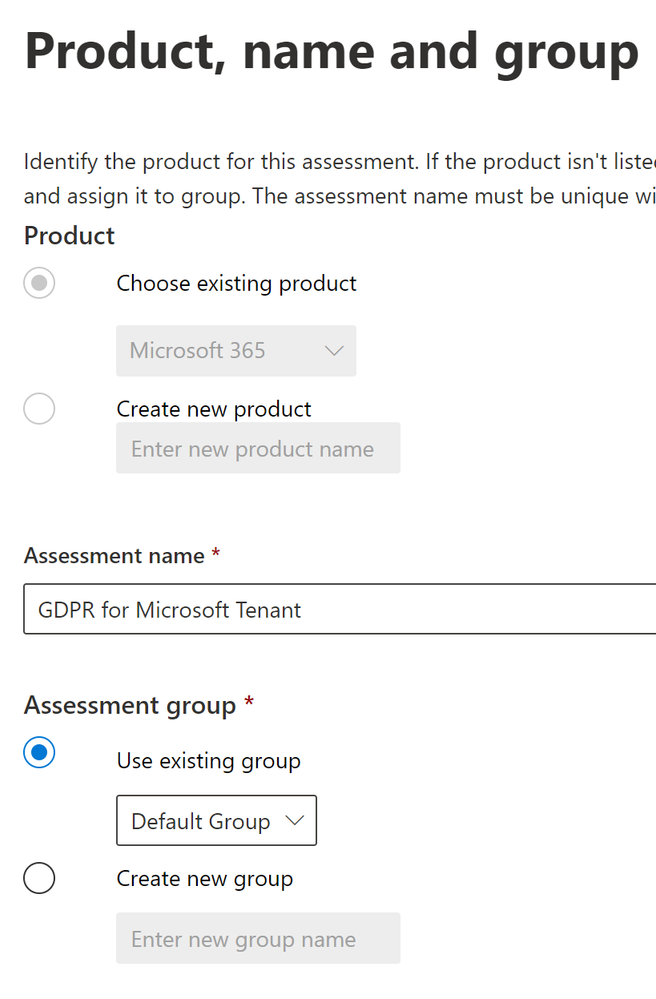

- On the next step of the wizard, the Product will be preselected (because I chose an assessment specific to Microsoft). We will just give it a name and then add an assessment Group.

- For the Assessment group, I selected Default Group. As for what an Assessment Group is, here is the official definition from the Microsoft Documentation:

“When you create an assessment, you’ll need to assign it to a group. Groups are containers that allow you to organize assessments in a way that is logical to you, such as by year or regulation, or based on your organization’s divisions or geographies.”

- When you are satisfied, click Next.

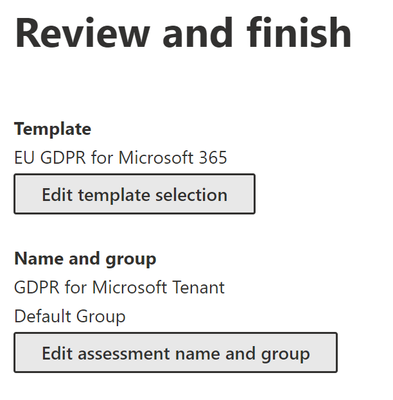

- Review your assessment information and make changes as needed. Then click Create Assessment and then Done.

- You will be automatically taken to your new assessment. Proceed to the next Overview and Tabs section below.

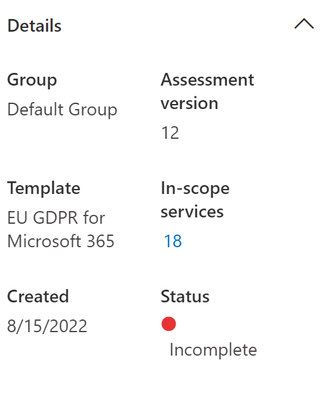

Assessment – Overview (tab)

We will start with the Overview section of the assessment on the left-hand side.

- The Overview tab does exactly what you would expect.

- The Details section will show you the highest-level status of your assessment.

- The About section will give a high-level comment about your particular regulation or certification. The link provided will take you to the official website for that regulation or certification.

- On the right you will see your 4 tabs for the assessment. We will take each of these one at a time, from left to right, beginning with the Progress tab.

- Let us move to the Progress tab on the right. Proceed to the next section.

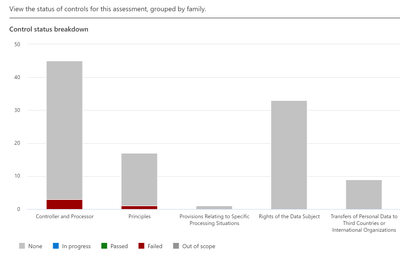

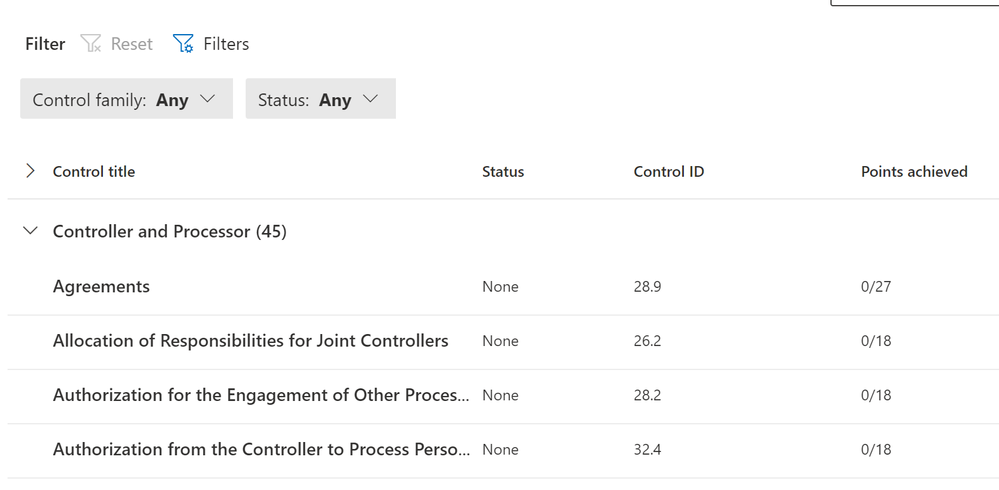

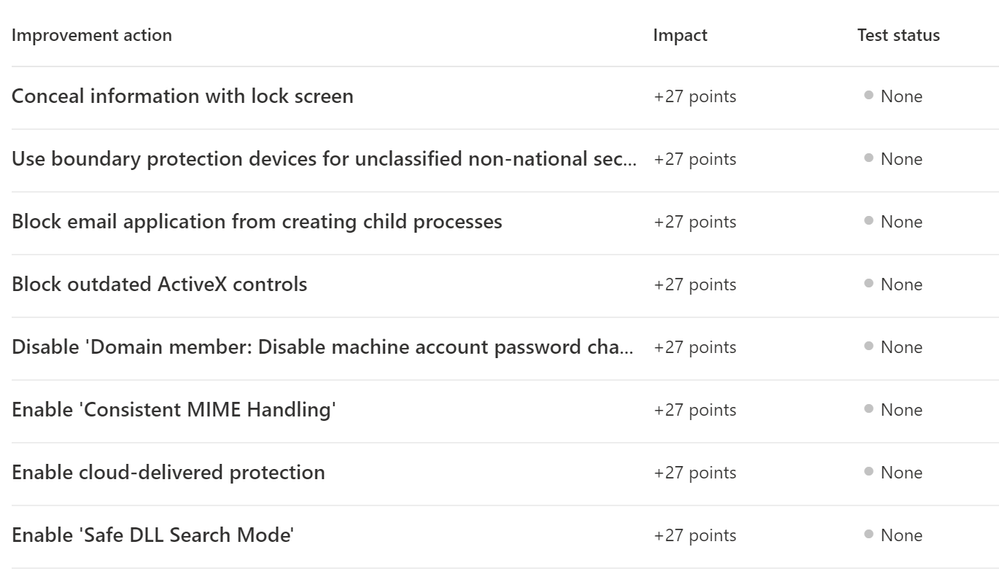

Assessment – Controls (tab)

This tab covers the Controls and Control Families that are needed to meet your regulation/certification. Controls are the various requirements in your tenant that must be satisfied to meet part of an assessment. A Control Family is a grouping of Controls. The Control Families are visible in the top section.

Top section:

In this example, there are 4 Control Families. The color shows whether each one is done (colored) or not done (grey).
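The done/not-done view of Control Families can be thought of as a simple roll-up over their Controls. The sketch below assumes a family counts as done only when all of its Controls are done, and also reports the fraction completed. The family and control names are hypothetical placeholders, and Compliance Manager's own status rules may differ.

```python
from typing import Dict

# Hypothetical example: Control Family -> {Control name: is the control done?}
families: Dict[str, Dict[str, bool]] = {
    "Family 1": {"Control 1.1": True, "Control 1.2": True},
    "Family 2": {"Control 2.1": True, "Control 2.2": False},
    "Family 3": {"Control 3.1": False},
    "Family 4": {"Control 4.1": True},
}


def family_status(controls: Dict[str, bool]) -> str:
    """'done' only if every control in the family is done, else 'not done'."""
    return "done" if all(controls.values()) else "not done"


def completion(controls: Dict[str, bool]) -> float:
    """Fraction of controls in the family that are done."""
    return sum(controls.values()) / len(controls) if controls else 0.0


for name, controls in families.items():
    print(f"{name}: {family_status(controls)} ({completion(controls):.0%})")
# Family 1: done (100%)
# Family 2: not done (50%)
# Family 3: not done (0%)
# Family 4: done (100%)
```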

Bottom section:

In the bottom section, you will be able to see each Control Family and, under each, its Controls.

- If you click on a family and then on a control, you will be taken to a specific Control. I will select Controller and Processor – Agreements.

- This will take you to the Improvement actions (and Microsoft actions). Here you can select a specific improvement action to run to help meet your regulation/certification.

- Note – Because there are multiple paths to a specific Control and the improvements you can make, we will cover how to take action on a Control in the Control flows section below. You can skip to that section now if you wish to see how to run an improvement action. Otherwise, we will take a half step back and look at the Improvement Actions (tab).

- Here you will see the top 10 actions you can do to improve your assessment score. We will cover this in the Your Improvement action section below. If you want more detail on these improvement actions, skip to that section.

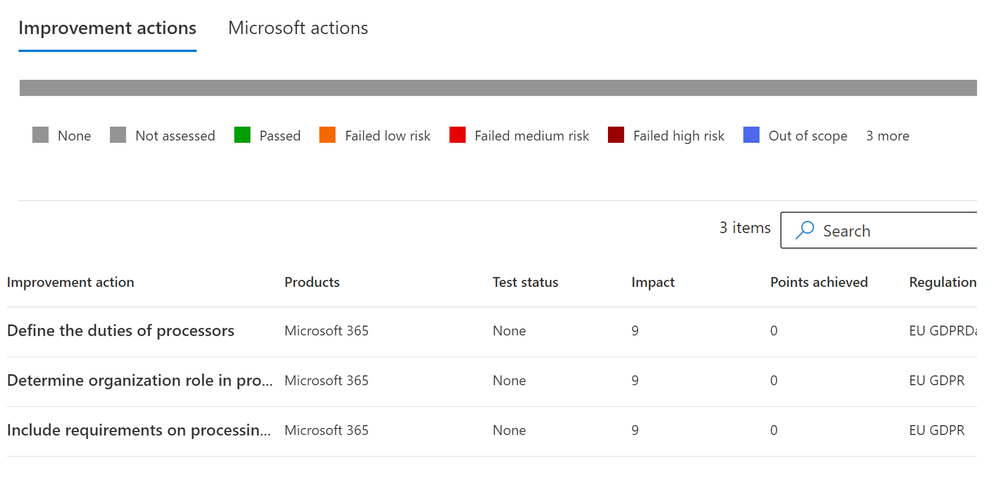
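The source does not say how the top 10 actions are ordered. Assuming they are the open improvement actions that would add the most points to the score, a minimal sketch of such a ranking could look like this; `ImprovementAction`, `top_actions`, and the point values are hypothetical.

```python
import heapq
from typing import List, NamedTuple


class ImprovementAction(NamedTuple):
    name: str
    points: int      # hypothetical points the action contributes when completed
    completed: bool


def top_actions(actions: List[ImprovementAction], n: int = 10) -> List[ImprovementAction]:
    """Return up to n open (not completed) actions with the most points."""
    open_actions = [a for a in actions if not a.completed]
    return heapq.nlargest(n, open_actions, key=lambda a: a.points)


actions = [
    ImprovementAction(f"Action {i}", points, done)
    for i, (points, done) in enumerate(
        [(27, False), (9, False), (27, True), (3, False), (1, False)], start=1
    )
]
print([a.name for a in top_actions(actions, n=3)])  # ['Action 1', 'Action 2', 'Action 4']
```

`heapq.nlargest` avoids sorting the whole list when only the top few items are needed, which matters little here but scales well to hundreds of actions.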

Assessment – Improvement Actions (tab)

This tab will show you ALL the improvements you can make to help meet your regulation/certification needs. Here is a sample screenshot.

If you click on any of these actions, it will take you to the Improvement Action.

- Note – Because there are multiple paths to a specific Control and the improvements you can make, we will cover how to take action on a Control in the Control flows section below. You can skip to that section now if you wish to see how to run an improvement action. Otherwise, we will take a half step back and look at the Microsoft Actions (tab).

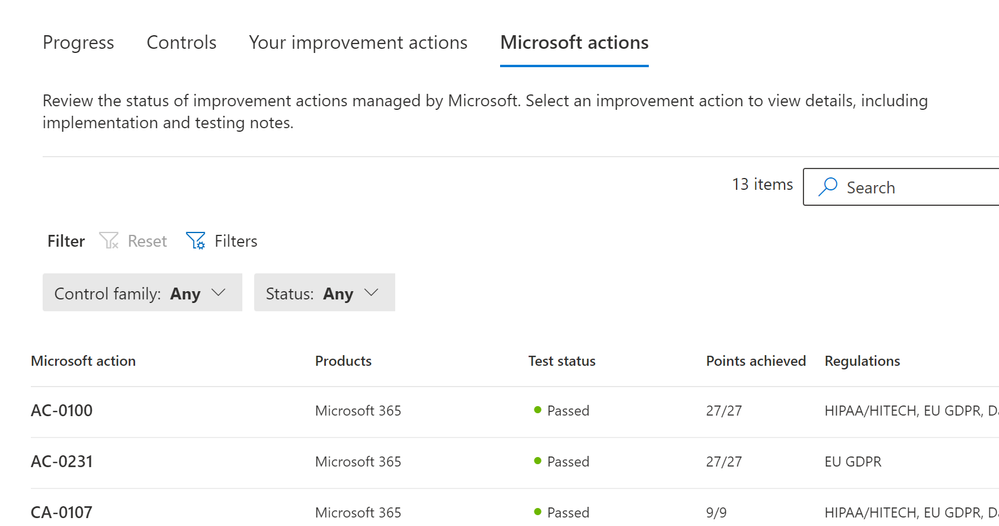

Assessment – Microsoft Actions (tab)

Listed here are all the items Microsoft will make sure are secured in your tenant.

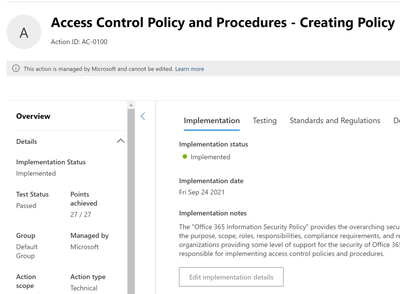

- Click on the first of these Microsoft actions. I’ve clicked on the action AC—0100.

- When you click on an action, it will take you to a pane similar to the one for Improvement Actions, so I will not explain these steps here.

- There is nothing for you to do in this tab.

- You have now reached the end of this part of the blog. To learn how to run improvement actions, please proceed to the next part of this blog series, Microsoft Purview – Paint By Numbers Series (Part 9c) – Compliance Manager – Improvement Actions.

Here is the link Microsoft Purview- Paint By Numbers Series (Part 9c) – Compliance Manager – Improvement Actions – Microsoft Tech Community

Appendix and Links

Microsoft Purview Compliance Manager – Microsoft Purview (compliance) | Microsoft Docs

Build and manage assessments in Microsoft Purview Compliance Manager – Microsoft Purview (compliance) | Microsoft Docs

Learn about assessment templates in Microsoft Purview Compliance Manager – Microsoft Purview (compliance) | Microsoft Docs


Working with improvement actions in Microsoft Purview Compliance Manager – Microsoft Purview (compliance) | Microsoft Docs

Microsoft Purview- Paint By Numbers Series (Part 9c) – Compliance Manager – Improvement Actions – Microsoft Tech Community

Note: This solution is a sample and may be used with Microsoft Compliance tools for dissemination of reference information only. This solution is not intended or made available for use as a replacement for professional and individualized technical advice from Microsoft or a Microsoft certified partner when it comes to the implementation of a compliance and/or advanced eDiscovery solution and no license or right is granted by Microsoft to use this solution for such purposes. This solution is not designed or intended to be a substitute for professional technical advice from Microsoft or a Microsoft certified partner when it comes to the design or implementation of a compliance and/or advanced eDiscovery solution and should not be used as such. Customer bears the sole risk and responsibility for any use. Microsoft does not warrant that the solution or any materials provided in connection therewith will be sufficient for any business purposes or meet the business requirements of any person or organization.
