by Contributed | Sep 2, 2022 | Technology

This article is contributed. See the original author and article here.

The first problem we hear from customers moving to Azure Data Factory (ADF), who have been using SQL Server Integration Services (SSIS) to get their Project Online OData, is that the authentication and authorization is not straightforward. There isn’t a simple choice to login to Project Online, so you have to make a call to get a token which can then be used in the REST calls to OData. The following post steps through the process. I’m not going deep into the details of ADF and won’t cover all the steps of making an App Registration – there are plenty of resources out there, and this concentrates on the authentication then pulls in some Project level data. It gets more complicated obviously when you also want tasks and assignments, but the same approaches used with SSIS will work just as well in ADF.

TL;DR – if you know all about ADF and Project Online and App Registrations and just want the auth piece – jump to the M365Login section – just about halfway down, or just take a look at https://github.com/LunchWithaLens/adf which has definitions for the whole pipeline.

What you will need:

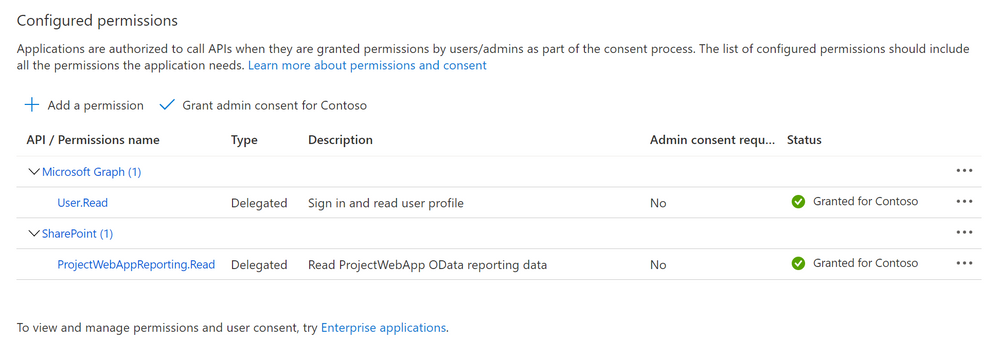

- An App Registration in Azure Active Directory that allows you to read the Project reporting data. You will need your Tenant ID and also the Client ID and registered secret of the App Registration

The require App Registration Settings

The require App Registration Settings

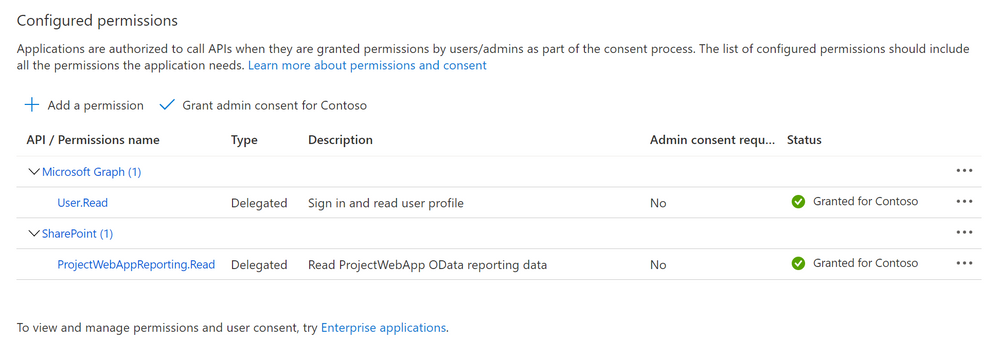

- A user account that just needs Access to Project Server reporting service. You will need the account name and password. The authentication will use the Resource Owner Password Credential (ROPC). This method of authentication is not recommended when other approaches are available (see Microsoft identity platform and OAuth 2.0 Resource Owner Password Credentials ) but as there is no “app-only” authentication options for Project Online this is one such occasion when this is the only way. To ensure this is as secure as possible we will be storing the username and password in Azure Key Vault (AKV).

Minimum user settings for the account (although they don’t need Team Member)

Minimum user settings for the account (although they don’t need Team Member)

In this example they are also a team member, but that is not necessary.

- An Azure Data Factory resource in Azure

- Somewhere to write the data. In this example I cover both saving out as Json to blob storage in Azure, as well as saving to SQL Server (in this case hosted in Azure. You will need connection strings for whatever storage you are using

- If using SQL Server you will need stored procedures that will do the data handling – more details later

Once you have all these pieces in place, we can continue with ADF to:

- Add Linked Services

- Add Datasets

- Build a pipeline

Linked Services

We need 4 linked services

- An Azure Key Vault where we will be storing our account details and App Registration secret

- A REST linked service – basically our OData endpoint

- Azure Blob Storage (not necessary – but I found it useful in debugging before I added it all into SQL Server)

- SQL Server

To keep this blog relatively short, I’m not going into all the details of setting up AKV, just that using a managed identity makes it fairly easy to use in AFD.

The REST linked literally just needs the base URL configured – and this will be the URL for your PWA instance’s OData feed, along with any select options to limit the returned fields. As an example, I used:

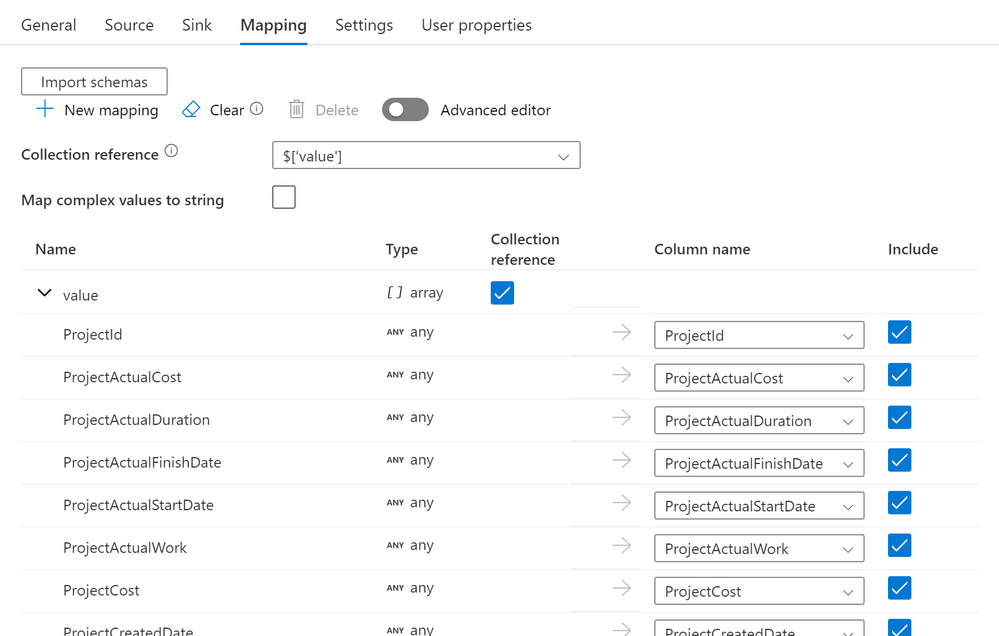

https://<tenantname>.sharepoint.com/sites/pwa/_api/ProjectData/Projects?$select=ProjectId,ProjectActualCost,ProjectActualDuration,ProjectActualFinishDate,ProjectActualStartDate,ProjectActualWork,ProjectCost,ProjectCreatedDate,ProjectCurrency,ProjectDescription,ProjectDuration,ProjectFinishDate,ProjectIdentifier,ProjectLastPublishedDate,ProjectModifiedDate,ProjectName,ProjectPercentCompleted,ProjectPercentWorkCompleted,ProjectStartDate,ProjectStatusDate,ProjectWork

This limited the columns returned to just those I needed. The authentication type was left as anonymous as I was handling this latter with a bearer token.

The Azure Blog storage isn’t a necessity – if you want to use one then easy to configure but I won’t go into the full details here. Ping me in the comments if you can’t find good resources to help.

Finally the SQL Server, and mine was a database I was already using for something else to which I just added a couple of tables and sprocs. In an earlier attempt I’d configured a more expensive SQL Server instance than I’d realised – and blown through my monthly allowance… The SQL Server linked service allows easy connectivity to an AKV to get the connection string – for a secure configuration.

Datasets

The datasets match up to 3 of the linked services. My “RestResource1” to link to my REST, my “ProjectTable” to match up to my SQL database and a specific table, and my “json1” that I use to connect to my blob storage to save a file. Again, configuring these I leave as an exercise for the reader :) , but the GitHub repo has definitions for all of these so you can see how they hang together. The pipeline will help them make more sense too – which comes next.

The Pipeline

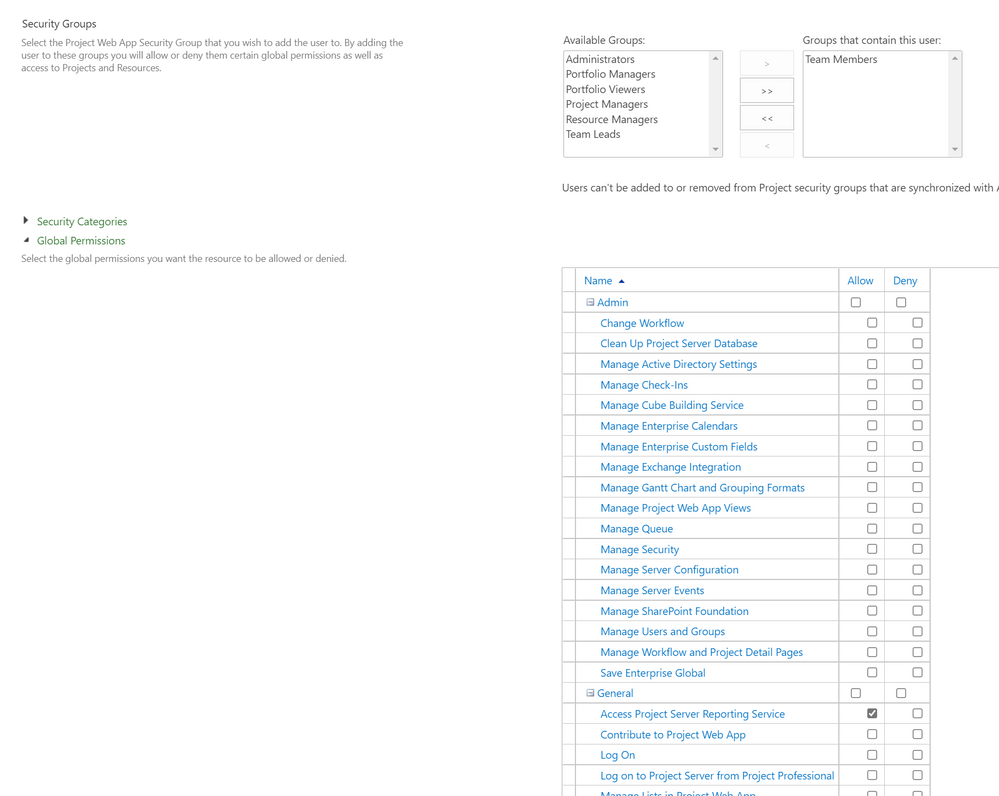

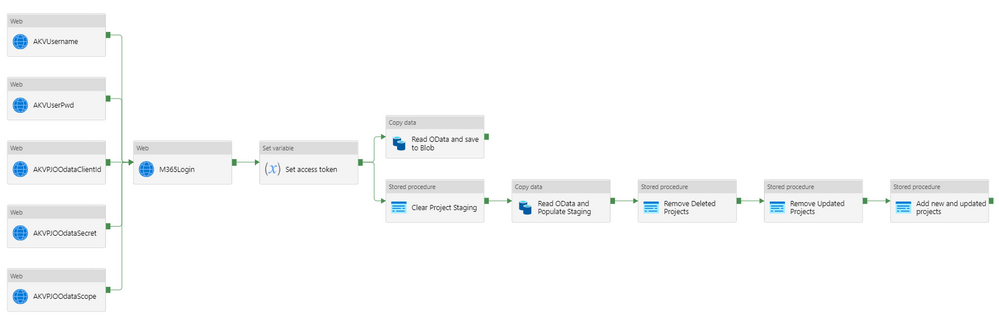

To help visualize where we are headed, first we can look at the final short pipeline:

The full end-to-end pipeline

The full end-to-end pipeline

The first column of activities is reading the required data from AKV. The names should make it obvious what the data is, the username and password, the ClientId and secret for the app registration, then finally the scope for the authentication call. This isn’t strictly a ‘secret’ but I put in in the AKV as it helps when demonstrating (or recording) the solution to be able to show the values. Exposing the scope is no big deal and avoids having to redact stuff in any recording I do.

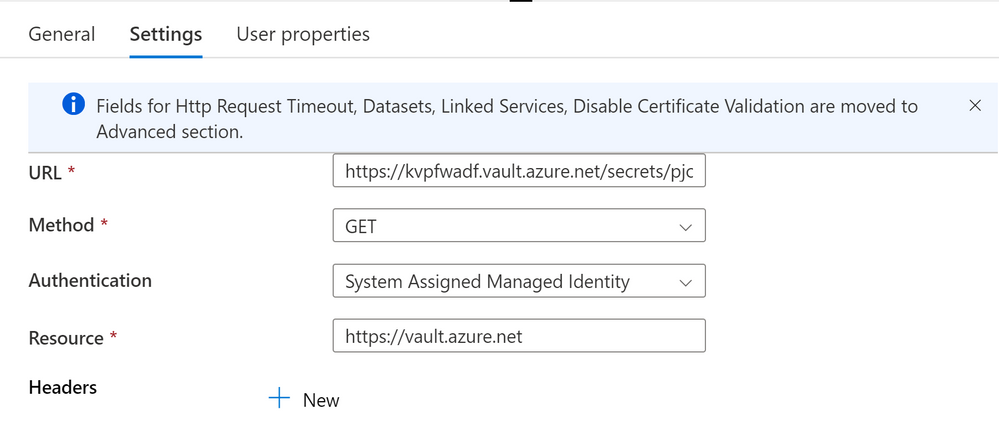

The only part defined for these activities are the settings – and the scope one is a good example:

Example KeyVault settings

Example KeyVault settings

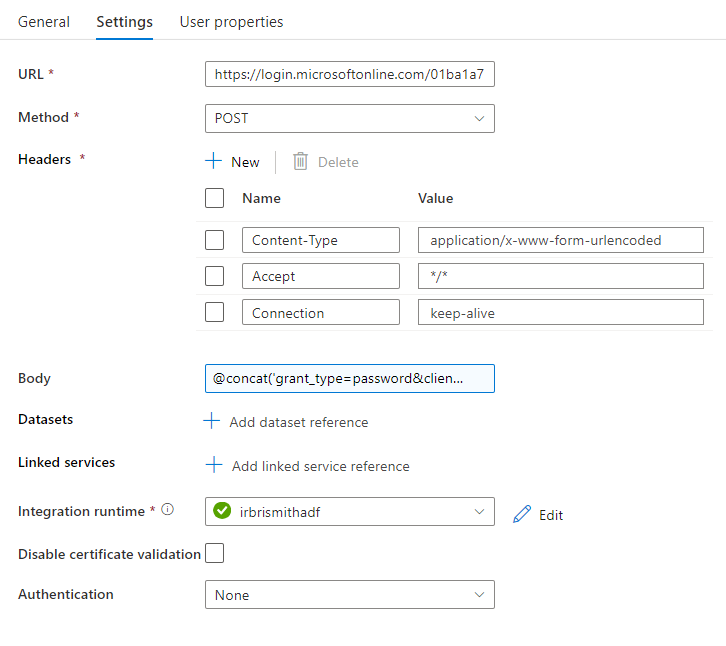

The most interesting step, and maybe the only one you are interested in, is the one I called M365Login – and that is just my name – there isn’t a special activity, it is just a web activity. The settings for this one are as follows:

Web call settings to get token

Web call settings to get token

The URL is of the form https://login.microsoftonline.com/<tenantid>/oauth2/v2.0/token and the method is POST and the headers configured as shown above with Content-Type application/x-www-form-urlencoded, Accept */* and Connection keep-alive. The Body is the key part – and is using the concatenation function and brings in the values from the previous calls to AKV. The full form looks something like the following, where I have used specific names for my AKV activities – yours may vary.

@concat(‘grant_type=password&client_id=’,activity(‘AKVPjoClientId’).output.value,‘&client_secret=’,activity(‘AKVPjoODataSecret’).output.value,‘&scope=’,activity(‘AKVPjoScope’).output.value,‘&username=’,activity(‘AKVUserName’).output.value,‘&password=’,activity(‘AKVUserPassword’).output.value)

Basically it is using the output.value property of the previous steps to complete the “grant_type” body needed for an ROPC call.

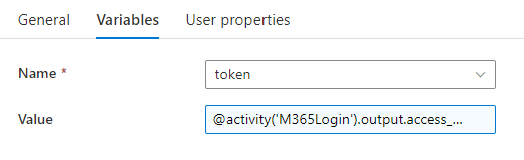

I then use a Set variable action to take the response and keep the token for later use.

Variable setting for token

Variable setting for tokenThe full string used in the Value is @activity(‘M365Login’).output.access_token

Now I have my token I can use that to make my REST call to Project Online’s OData endpoint using a Copy data activity. First I use a Stored procedure activity to clear out my staging table. Take a look at the GitHub for more details, but it is just a ‘delete from’ call.

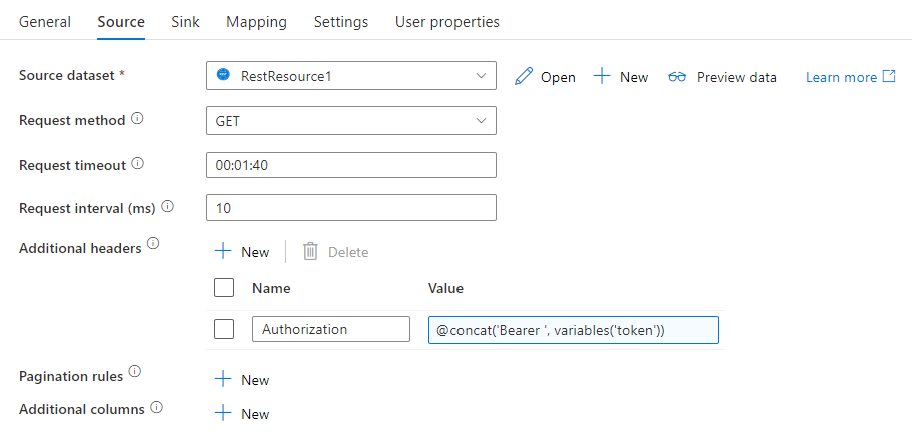

The copy data activity has a source and sink (destination) and I use one to read and then write to blob storage, then another to read and write to SQL. I’ll concentrate on the second, which has Source settings configured like this:

Source data settings

Source data settingsThe source dataset is my REST dataset, I add the header Authorization with a Value of

@concat(‘Bearer ‘,variables(‘token’))

which gets the token from my variable called token, and I have also set the Pagination rulesRFC5988 with a Value True (although that isn’t in the above screenshot.

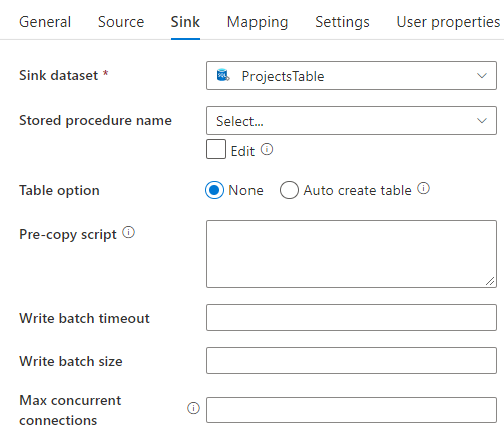

The Sink settings are as follows:

Sink data settings

Sink data settingswith the sink dataset as my SQL dataset ‘ProjectsTable’. The magic happens on the Mappings tab – and I had created a table that matched the columns I was returning from REST – so just a 1:1 mapping. You can get more adventurous here if you need to do anything fancy:

Data mapping from OData to my SQL table

Data mapping from OData to my SQL table

Once that is complete, we have a populated Project staging table with the current projects read from OData. The final steps are then just 3 stored procedure steps that remove deleted projects from the live project table (by deleting if they do not now exist in staging). also deleting any projects that have been updated (the modified date is newer in the staging table) and then finally copying in the updated and new plans from staging to the live table.

As mentioned, this is just the basics and only looks at Projects – but the main focus here was the authentication steps of getting the token with ROPC, then using the token in the REST call.

I appreciate I have glossed over a lot of the detail here so happy to fill in some of the gaps if required in the comments section or another blog if needed. However, if you know ADF and already use SSIS – the authentication piece was probably all you came for.

by Contributed | Sep 2, 2022 | Technology

This article is contributed. See the original author and article here.

Hey there, MTC! We’re dancin’ in September, so let’s boogie on down to this week’s recap!

MTC Moments of the Week

Our first MTC Member of the Week for the new month goes to @Harun24HR! Thank you for your contributions to help other MTC’ers in the Excel community!

We didn’t have any new community events this week, *but* September 1st did mark the start of the Azure Quantum Summer 2022 Hackathon in collaboration with IEEE QCE22! If you’re interested in quantum computing, check out this virtual hackathon happening through Wednesday, September 7, and get the chance to win a Surface Go 3 and other cool prizes!

And over on the blogs, you can read all about the roll out of To Do in Outlook for Windows in @avijityadav‘s new post. Check it out and let us know what you think in the comments!

Unanswered Questions – Can you help them out?

Every week, users come to the MTC seeking guidance or technical support for their Microsoft solutions, and we want to help highlight a few of these each week in the hopes of getting these questions answered by our amazing community!

In the OneDrive for Business community, @Julian12 is seeking guidance for a user who’s seeing previously-removed SharePoint libraries reappear and start syncing.

Meanwhile, in the Windows 11 community, @CindyAMO is asking for help resolving an error with the gdi32full.dll file that’s preventing Windows from updating.

Next Week in Events – Mark Your Calendars!

September 6 – Game Creation on Azure with Teradici

September 8 – Unpacking endpoint management: Managing Windows 365 Cloud PCs with Endpoint Manager

And for today’s fun fact… in the month of August, MTC members spent a combined total of 1,060,750 minutes online. That’s almost 2 years!!

And with that, have a great weekend, everyone!

by Contributed | Sep 1, 2022 | Business, Microsoft 365, Technology

This article is contributed. See the original author and article here.

As more sophisticated cyber criminals take aim at hybrid and remote workers, Microsoft is working to raise awareness among Exchange Online customers that one of the most important security steps they can take is to move away from outdated, less secure protocols, like Basic Authentication. As previously announced, we are turning off Basic Authentication in Exchange Online for all tenants starting October 1, 2022.

The post Microsoft retires Basic Authentication in Exchange Online appeared first on Microsoft 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Aug 31, 2022 | Technology

This article is contributed. See the original author and article here.

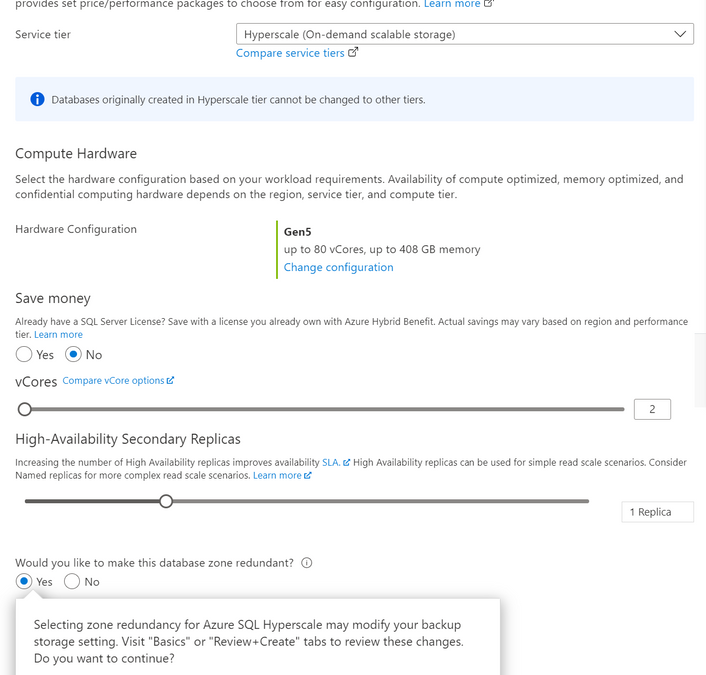

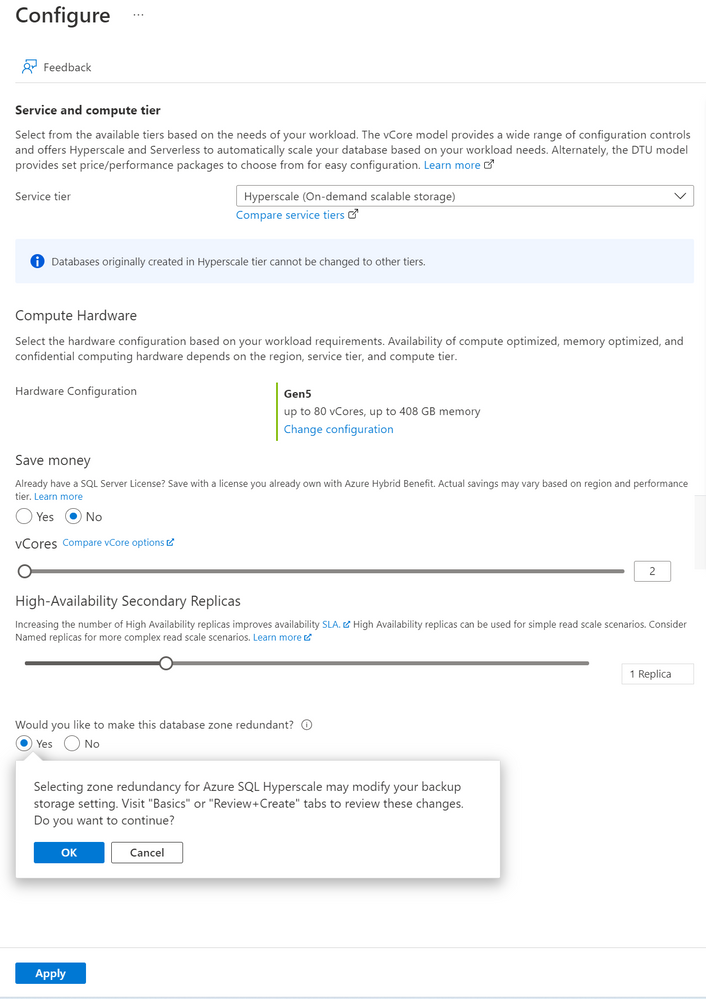

We are excited to announce the General Availability (GA) of enabling Zone Redundancy for Azure SQL Hyperscale databases. The zone redundant configuration utilizes Azure Availability Zones to replicate databases across multiple physical locations within an Azure region. By selecting zone redundancy, you can make all layers of your Hyperscale databases resilient to a much larger set of failures, including catastrophic datacenter outages, without any changes of the application logic. For more information see Hyperscale zone redundant availability.

Creating a zone redundant Hyperscale Database

A zone redundant Hyperscale database can be created with Portal, Azure CLI, PowerShell, or REST API. Zone redundancy for Hyperscale service tier can only be specified at database creation. This setting cannot be modified once the resource is provisioned. Database copy, point-in-time restore, or creating a geo-replica can be used to update the zone redundant configuration for an existing Hyperscale database.

Portal

The following image illustrates how to use Azure portal to configure a new Hyperscale database to be zone redundant. This can be configured in the Configure database blade when creating a new database, creating a geo replica, creating a copy database, doing a point in time restore or doing a geo restore. Zone-redundant or Geo-zone-redundant backup storage and at least 1 High-Availability Secondary Replica must be specified.

CLI

The following CLI commands can be used to create a zone redundant Hyperscale database using the –zone-redundant {false, true} parameter.

The Hyperscale database must have at least 1 high availability replica and zone-redundant or geo-zone-redundant backup storage. Below is an example CLI command for creating a new zone redundant Hyperscale database.

az sql db create -g mygroup -s myserver -n mydb -e Hyperscale -f Gen5 –ha-replicas 1 –-zone-redundant -–backup-storage-redundancy Zone

PowerShell

The following PowerShell commands can be used to create a zone redundant Hyperscale database using the -ZoneRedundant parameter.

The Hyperscale database must have at least 1 high availability replica and zone-redundant or geo-zone-redundant backup storage must be specified. Below is an example PowerShell command for creating a new zone redundant Hyperscale database.

New-AzSqlDatabase -ResourceGroupName "ResourceGroup01" -ServerName "Server01" -DatabaseName "Database01" -Edition “Hyperscale” -HighAvailabilityReplicaCount 1 -ZoneRedundant -BackupStorageRedundancy Zone

Current Limitations

- Zone redundant configuration can only be specified during database creation. This setting cannot be modified once the resource is provisioned. Database copy, point-in-time restore, or creating a geo-replica can be used to update the zone redundant configuration for an existing Hyperscale database. When using one of these update options, if the target database is in a different region than the source or if the database backup storage redundancy from the target differs from the source database, the copy operation will be a size of data operation.

- Named replicas are not currently supported.

- Only Gen5 compute is supported.

- Zone redundancy cannot currently be specified when migrating an existing database from another Azure SQL Database service tier to Hyperscale.

Regions where this is available

All Azure regions that have Availability Zones support zone redundant Hyperscale database.

The require App Registration Settings

Minimum user settings for the account (although they don’t need Team Member)

The full end-to-end pipeline

Example KeyVault settings

Web call settings to get token

Recent Comments