by Contributed | Nov 6, 2022 | Technology

This article is contributed. See the original author and article here.

In Part 1, I walked you through how to azdev-ify a simple Python app. In this post, we will:

- add the Azure resources to enable the observability features in azd

- add manual instrumentation code in the app

- create a launch.json file to run the app locally and make sure we can send data to Application Insights

- deploy the app to Azure

Previously…

We azdev-ified a simple Python app, TheCatSaidNo, and deployed it to Azure. Don’t worry if you have already deleted everything. I have updated the code for part 1 because of the Bicep module improvements we shipped in the azure-dev-cli_0.4.0-beta.1 release. You don’t need to update your code; just start from my GitHub repository (branch: part1):

- Make sure you have the prerequisites installed.

- In a new empty directory, run:

```
azd up -t https://github.com/puicchan/theCatSaidNo -b part1
```

If you run `azd monitor --overview` at this point, you will get an error: “Error: application does not contain an Application Insights dashboard.” That’s because we didn’t create any Azure Monitor resources in part 1.

Step 1 – add Application Insights

The Azure Developer CLI (azd) provides a monitor command to help you get insight into how your applications are performing so that you can proactively identify issues. We need to first add the Azure resources to the resource group created in part 1.

- Refer to a sample, e.g., ToDo Python Mongo, and copy its /infra/core/monitor directory to the same location (/infra/core/monitor) in your project.

- In main.bicep, add the following parameters. If you want to override the default azd naming convention, provide your own values here. This is new since version 0.4.0-beta.1.

```bicep
param applicationInsightsDashboardName string = ''
param applicationInsightsName string = ''
param logAnalyticsName string = ''
```

- Add the call to monitoring.bicep in /core/monitor:

```bicep
// Monitor application with Azure Monitor
module monitoring './core/monitor/monitoring.bicep' = {
  name: 'monitoring'
  scope: rg
  params: {
    location: location
    tags: tags
    logAnalyticsName: !empty(logAnalyticsName) ? logAnalyticsName : '${abbrs.operationalInsightsWorkspaces}${resourceToken}'
    applicationInsightsName: !empty(applicationInsightsName) ? applicationInsightsName : '${abbrs.insightsComponents}${resourceToken}'
    applicationInsightsDashboardName: !empty(applicationInsightsDashboardName) ? applicationInsightsDashboardName : '${abbrs.portalDashboards}${resourceToken}'
  }
}
```

- Pass the Application Insights name as a parameter to appservice.bicep in the web module:

```bicep
applicationInsightsName: monitoring.outputs.applicationInsightsName
```

- Add an output for the Application Insights connection string so that azd stores it in the .env file:

```bicep
output APPLICATIONINSIGHTS_CONNECTION_STRING string = monitoring.outputs.applicationInsightsConnectionString
```

- Here’s the complete main.bicep:

```bicep
targetScope = 'subscription'

@minLength(1)
@maxLength(64)
@description('Name of the environment which is used to generate a short unique hash used in all resources.')
param environmentName string

@minLength(1)
@description('Primary location for all resources')
param location string

// Optional parameters to override the default azd resource naming conventions. Update the main.parameters.json file to provide values. e.g.,:
// "resourceGroupName": {
//   "value": "myGroupName"
// }
param appServicePlanName string = ''
param resourceGroupName string = ''
param webServiceName string = ''
param applicationInsightsDashboardName string = ''
param applicationInsightsName string = ''
param logAnalyticsName string = ''

// serviceName is used as value for the tag (azd-service-name) azd uses to identify
param serviceName string = 'web'

@description('Id of the user or app to assign application roles')
param principalId string = ''

var abbrs = loadJsonContent('./abbreviations.json')
var resourceToken = toLower(uniqueString(subscription().id, environmentName, location))
var tags = { 'azd-env-name': environmentName }

// Organize resources in a resource group
resource rg 'Microsoft.Resources/resourceGroups@2021-04-01' = {
  name: !empty(resourceGroupName) ? resourceGroupName : '${abbrs.resourcesResourceGroups}${environmentName}'
  location: location
  tags: tags
}

// The application frontend
module web './core/host/appservice.bicep' = {
  name: serviceName
  scope: rg
  params: {
    name: !empty(webServiceName) ? webServiceName : '${abbrs.webSitesAppService}web-${resourceToken}'
    location: location
    tags: union(tags, { 'azd-service-name': serviceName })
    applicationInsightsName: monitoring.outputs.applicationInsightsName
    appServicePlanId: appServicePlan.outputs.id
    runtimeName: 'python'
    runtimeVersion: '3.8'
    scmDoBuildDuringDeployment: true
  }
}

// Create an App Service Plan to group applications under the same payment plan and SKU
module appServicePlan './core/host/appserviceplan.bicep' = {
  name: 'appserviceplan'
  scope: rg
  params: {
    name: !empty(appServicePlanName) ? appServicePlanName : '${abbrs.webServerFarms}${resourceToken}'
    location: location
    tags: tags
    sku: {
      name: 'B1'
    }
  }
}

// Monitor application with Azure Monitor
module monitoring './core/monitor/monitoring.bicep' = {
  name: 'monitoring'
  scope: rg
  params: {
    location: location
    tags: tags
    logAnalyticsName: !empty(logAnalyticsName) ? logAnalyticsName : '${abbrs.operationalInsightsWorkspaces}${resourceToken}'
    applicationInsightsName: !empty(applicationInsightsName) ? applicationInsightsName : '${abbrs.insightsComponents}${resourceToken}'
    applicationInsightsDashboardName: !empty(applicationInsightsDashboardName) ? applicationInsightsDashboardName : '${abbrs.portalDashboards}${resourceToken}'
  }
}

// App outputs
output AZURE_LOCATION string = location
output AZURE_TENANT_ID string = tenant().tenantId
output REACT_APP_WEB_BASE_URL string = web.outputs.uri
output APPLICATIONINSIGHTS_CONNECTION_STRING string = monitoring.outputs.applicationInsightsConnectionString
```

- Run `azd provision` to provision the additional Azure resources.

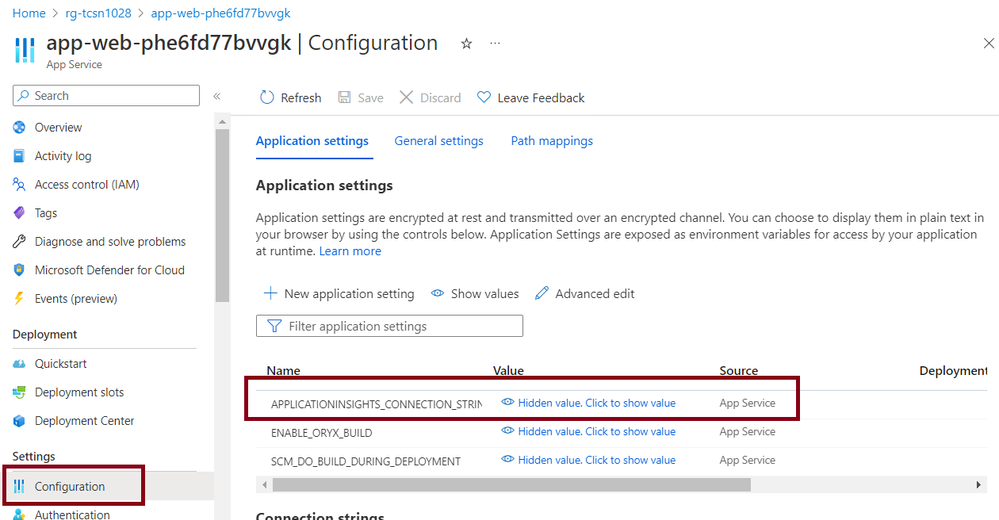

- Once provisioning is complete, run `azd monitor --overview` to open the Application Insights dashboard in the browser.

The dashboard is not that exciting yet, because auto-instrumentation is not yet available for Python apps. However, notice that:

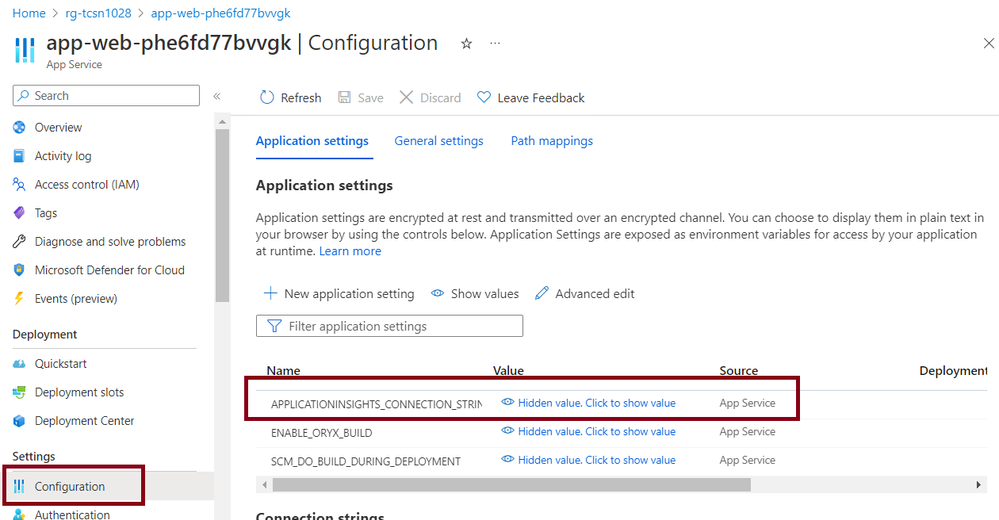

- APPLICATIONINSIGHTS_CONNECTION_STRING is added to the .env file for your current azd environment.

- The same connection string is added to the application settings in your web app’s configuration in the Azure portal.
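You can check the local side without opening the file by hand. The stdlib-only Python sketch below reads the value back and splits it into its parts. It assumes azd’s usual layout: `.azure/config.json` names the default environment under a `defaultEnvironment` key, and each environment’s values live in `.azure/<environment-name>/.env`. The helpers `current_env_file`, `read_dotenv`, and `parse_connection_string` are hypothetical names for illustration; they are not part of azd or python-dotenv.

```python
import json
from pathlib import Path
from typing import Dict


def current_env_file(project_root: Path) -> Path:
    """Return the .env path of the default azd environment (assumed .azure/ layout)."""
    config_path = project_root / ".azure" / "config.json"
    config = json.loads(config_path.read_text(encoding="utf-8"))
    return project_root / ".azure" / config["defaultEnvironment"] / ".env"


def read_dotenv(path: Path) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and comments.

    Only strips optional surrounding double quotes; this is not a full
    dotenv parser (no escape sequences, no multi-line values).
    """
    values: Dict[str, str] = {}
    for raw_line in path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] == '"':
            value = value[1:-1]
        values[key.strip()] = value
    return values


def parse_connection_string(conn: str) -> Dict[str, str]:
    """Split a 'Key=Value;Key=Value' connection string into a dict."""
    parts: Dict[str, str] = {}
    for segment in conn.split(";"):
        if "=" in segment:
            key, value = segment.split("=", 1)
            parts[key.strip()] = value.strip()
    return parts
```

For example, call `parse_connection_string(read_dotenv(current_env_file(Path(".")))["APPLICATIONINSIGHTS_CONNECTION_STRING"])` from the project root. Once provisioning has succeeded, it should return a dict with keys such as `InstrumentationKey`.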

Step 2 – manually instrumenting your app

Let’s use OpenCensus Python to instrument your application with the Flask middleware so that incoming requests sent to your app are tracked. (To learn more about what Azure Monitor supports, refer to setting up Azure Monitor for your Python app.)
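Conceptually, request-tracking middleware wraps the web app and times each incoming request. It records the request’s method, path, and status, and a sampler decides which requests to keep (`rate=1.0` keeps all of them). The stdlib-only sketch below illustrates that idea as a plain WSGI wrapper. It is a simplified stand-in, not how `FlaskMiddleware` or `ProbabilitySampler` are actually implemented. The `RequestTracker` class and its in-memory `records` list are hypothetical, and nothing is sent to Application Insights.

```python
import random
import time
from typing import Any, Callable, Dict, Iterable, List, Optional


class RequestTracker:
    """Hypothetical WSGI middleware that records one entry per sampled request."""

    def __init__(self, app: Callable, rate: float = 1.0, rng: Optional[random.Random] = None):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rate must be between 0.0 and 1.0")
        self.app = app
        self.rate = rate
        self.rng = rng if rng is not None else random.Random()
        self.records: List[Dict[str, Any]] = []

    def __call__(self, environ: Dict[str, Any], start_response: Callable) -> Iterable[bytes]:
        # Probability sampling: random() returns a value in [0.0, 1.0),
        # so rate=1.0 keeps every request and rate=0.0 keeps none.
        sampled = self.rng.random() < self.rate
        captured: Dict[str, str] = {}

        def capturing_start_response(status, headers, exc_info=None):
            captured["status"] = status  # remember the status line, e.g. "200 OK"
            return start_response(status, headers, exc_info)

        start = time.perf_counter()
        result = self.app(environ, capturing_start_response)
        try:
            # Buffer the body so the measured duration includes generating it
            # (acceptable for a sketch, not for streaming responses).
            body = list(result)
        finally:
            if hasattr(result, "close"):
                result.close()
        duration_ms = (time.perf_counter() - start) * 1000

        if sampled:
            self.records.append({
                "method": environ.get("REQUEST_METHOD", "GET"),
                "path": environ.get("PATH_INFO", "/"),
                "status": captured.get("status", ""),
                "duration_ms": duration_ms,
            })
        return body
```

In a Flask app, such a wrapper could be attached with `app.wsgi_app = RequestTracker(app.wsgi_app)`. The OpenCensus middleware plays the same role but exports spans through the configured exporter.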

For this step, I recommend using Visual Studio Code and the following extensions:

The Get Started Tutorial for Python in Visual Studio Code is a good reference if you are not familiar with Visual Studio Code.

- Add to requirements.txt:

```
python-dotenv
opencensus-ext-azure >= 1.0.2
opencensus-ext-flask >= 0.7.3
opencensus-ext-requests >= 0.7.3
```

- Modify app.py to:

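```python
import os

from dotenv import load_dotenv
from flask import Flask, render_template, send_from_directory
from opencensus.ext.azure.trace_exporter import AzureExporter
from opencensus.ext.flask.flask_middleware import FlaskMiddleware
from opencensus.trace.samplers import ProbabilitySampler

# Load variables from a .env file if one is found; variables already set in the
# environment (e.g. via the launch.json envFile) are not overridden.
load_dotenv()

# Despite its name, this holds the full Application Insights connection string.
INSTRUMENTATION_KEY = os.environ.get("APPLICATIONINSIGHTS_CONNECTION_STRING")

app = Flask(__name__)

# Track incoming requests and export them to Application Insights;
# a sampling rate of 1.0 records every request.
middleware = FlaskMiddleware(
    app,
    exporter=AzureExporter(connection_string=INSTRUMENTATION_KEY),
    sampler=ProbabilitySampler(rate=1.0),
)


@app.route("/favicon.ico")
def favicon():
    # Serve the favicon from the static folder.
    return send_from_directory(
        os.path.join(app.root_path, "static"),
        "favicon.ico",
        mimetype="image/vnd.microsoft.icon",
    )


@app.route("/")
def home():
    return render_template("home.html")


if __name__ == "__main__":
    app.run(debug=True)
```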

- To run locally, the app needs the values from the .env file of the current azd environment. The easiest way to provide them is to customize Run and Debug in Visual Studio Code by creating a launch.json file:

- Press Ctrl+Shift+D or click “Run and Debug” in the sidebar

- Click “create a launch.json file” to customize a launch.json file

- Select “Flask Launch and debug a Flask web application”

- Modify the generated file to:

```json
{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Python: Flask",
            "type": "python",
            "request": "launch",
            "module": "flask",
            "env": {
                "FLASK_APP": "app.py",
                "FLASK_DEBUG": "1"
            },
            "args": [
                "run",
                "--no-debugger",
                "--no-reload"
            ],
            "jinja": true,
            "justMyCode": true,
            "envFile": "${input:dotEnvFilePath}"
        }
    ],
    "inputs": [
        {
            "id": "dotEnvFilePath",
            "type": "command",
            "command": "azure-dev.commands.getDotEnvFilePath"
        }
    ]
}
```

- Create and activate a new virtual environment, then install the dependencies. I am using Windows, so:

```
py -m venv .venv
.venv\Scripts\activate
pip3 install -r ./requirements.txt
```

- Open the Run and Debug view in the sidebar and click the play button for Python: Flask.

- Browse to http://localhost:5000 to launch the app.

- Click the button a few times and/or reload the page to generate some traffic.

Take a break; perhaps play with your cat or dog for real. The data will take a short while to show up in Application Insights.

- Run `azd monitor --overview` to open the dashboard and notice the change.

- Run `azd deploy` to deploy your app to Azure and start monitoring your app!

Get the code for this blog post here. Next, we will explore how you can use `azd pipeline config` to set up a GitHub Actions workflow that deploys updates whenever you check in code.

Feel free to run `azd down` to clean up all the Azure resources. As you saw, it’s easy to get things up and running again. Just `azd up`!

We love your feedback! If you have any comments or ideas, feel free to add a comment or submit an issue to the Azure Developer CLI Repo.

by Contributed | Nov 4, 2022 | Technology


SWIFT message processing using Azure Logic Apps

We are very excited to announce the Public Preview of the SWIFT MT encoder and decoder for Azure Logic Apps. This release enables customers to process SWIFT-based payment transactions with Logic Apps Standard and build cloud-native applications with full security, isolation, and VNET integration.

What is SWIFT

The Society for Worldwide Interbank Financial Telecommunication (SWIFT) is a global, member-owned cooperative. It provides a secure network that enables financial institutions worldwide to send and receive financial transactions in a safe, standardized, and reliable environment. The SWIFT group develops several message standards to support business transactions in the financial market. SWIFT MT is one of the longest-established and most widely used formats in the financial community, and it is used by SWIFT’s proprietary FIN messaging service.

The SWIFT network is used globally by more than 11,000 financial institutions in 200 countries and regions. These institutions pay SWIFT annual fees as well as fees based on the financial transactions they process. Processing failures on the SWIFT network cause delays and result in penalties. This is where Logic Apps helps: customers can send and receive these transactions according to the standard and proactively address these issues.

Azure Logic Apps enables you to easily create SWIFT workloads that automate their processing, thereby reducing errors and costs. With Logic Apps Standard, these workloads can run in the cloud or in isolated environments within a VNET. Across built-in and Azure connectors, we offer 600+ connectors to a variety of applications, on-premises or in the cloud. Logic Apps is also a gateway to Azure: with Azure’s rich AI and ML capabilities, customers can go further and create insights that help their business.

SWIFT capabilities in Azure Logic Apps

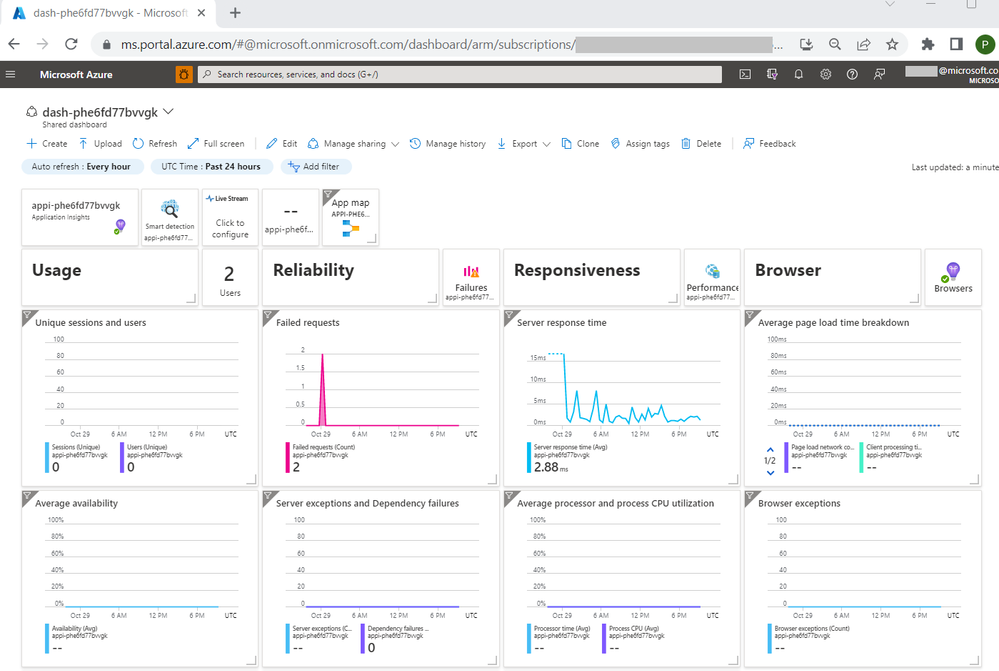

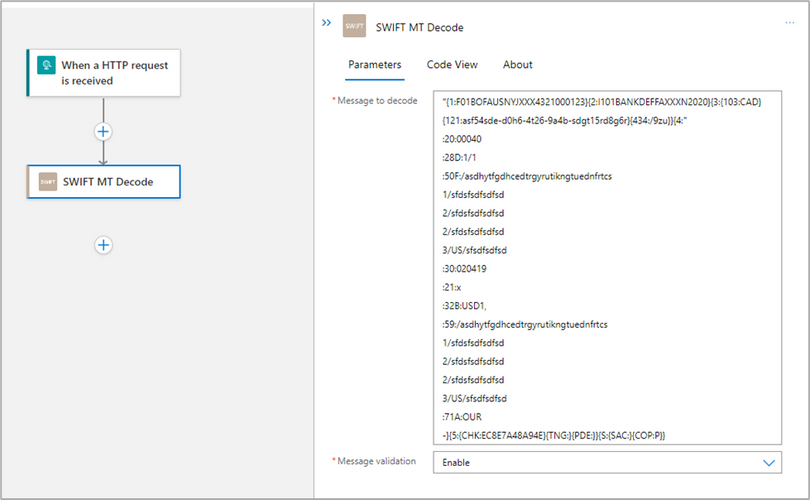

The SWIFT connector has two actions for MT messages: Encode and Decode. The connector has two key capabilities. First, it transforms messages from flat file to XML and vice versa. Second, it validates messages against the SWIFT guidelines described in the SWIFT Release Guide (SRG). The SWIFT MT actions support processing of all categories of MT messages.
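An MT flat file is a sequence of brace-delimited blocks. The payload fields sit in block 4, written as `:TAG:value` lines and terminated by `-}`. The toy Python converter below makes the flat-file-to-XML transformation concrete under simplifying assumptions:

- It handles only blocks 1, 2 and 4 and ignores the nested user-header and trailer blocks (3 and 5).
- It performs no validation.
- It emits a made-up XML layout (`Message`, `Block4`, `Field`), not the schema the Logic Apps SWIFT actions produce.

The function names are hypothetical.

```python
import re
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Simplified patterns: header blocks 1 and 2 are single-line; block 4 ends with "-}".
HEADER_BLOCK = re.compile(r"\{([12]):([^{}]*)\}")
TEXT_BLOCK = re.compile(r"\{4:\r?\n(.*?)\r?\n-\}", re.DOTALL)
FIELD_START = re.compile(r":(\d{2}[A-Z]?):(.*)")


def parse_text_block(text: str) -> List[Tuple[str, str]]:
    """Split block 4 into (tag, value) pairs.

    A line starting with :TAG: opens a new field; any other line
    continues the previous field's value.
    """
    fields: List[Tuple[str, str]] = []
    for line in text.splitlines():
        match = FIELD_START.fullmatch(line)
        if match:
            fields.append((match.group(1), match.group(2)))
        elif fields:
            tag, value = fields[-1]
            fields[-1] = (tag, value + "\n" + line)
    return fields


def mt_to_xml(message: str) -> str:
    """Convert a flat-file MT message to an illustrative XML layout."""
    root = ET.Element("Message")
    for block_id, content in HEADER_BLOCK.findall(message):
        ET.SubElement(root, "Block" + block_id).text = content
    text_match = TEXT_BLOCK.search(message)
    if text_match is None:
        raise ValueError("no text block ({4: ... -}) found")
    block4 = ET.SubElement(root, "Block4")
    for tag, value in parse_text_block(text_match.group(1)):
        ET.SubElement(block4, "Field", {"tag": tag}).text = value
    return ET.tostring(root, encoding="unicode")
```

Decoding to XML in this sense is mostly about splitting fields reliably. Multi-line fields such as free-text narratives are why continuation lines are appended to the previous tag rather than dropped.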

How to use SWIFT in Logic Apps

In this example, we list the steps to receive an MT flat-file message, decode it to MT XML format, and then send it to a downstream application.

- SWIFT support is only available in the ‘Standard’ SKU of Azure Logic Apps, so create a Standard logic app.

- Add a new workflow. You can choose stateful or stateless workflow.

- Create the first step of your workflow, which is also the trigger, based on the source of your MT message. We are using a Request-based trigger.

- Choose the SWIFT connector under the Built-in tab and add the ‘SWIFT Encode’ action as the next step. This step transforms the MT XML message (sample is attached) to MT flat-file format.

By default, the action validates the message against the SWIFT Release Guide specification. You can disable this validation via the Message validation drop-down.

- For scenarios where you receive a SWIFT MT message as a flat file (sample is attached) from the SWIFT network, you can use the SWIFT Decode action to validate the message and transform it to MT XML format.
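The validation these actions perform by default is driven by the SWIFT Release Guide. The sketch below gives a rough idea of what field-level checks look like by hand-coding two rules commonly documented for MT fields:

- Field 20 is 1 to 16 characters and must not start or end with `/` or contain `//`.
- Field 32A follows the `6!n3!a15d` layout: a YYMMDD date, a three-letter currency code, and an amount of at most 15 characters with a mandatory decimal comma.

This is a hypothetical and deliberately incomplete checker, not the connector’s validation logic. The SRG remains the authority for the actual rules.

```python
import re
from datetime import datetime
from typing import Callable, Dict, List, Tuple


def validate_field_20(value: str) -> List[str]:
    """Field 20 (reference): 1-16 characters, no leading/trailing '/', no '//'."""
    errors = []
    if not 1 <= len(value) <= 16:
        errors.append("20: length must be 1-16 characters")
    if value.startswith("/") or value.endswith("/") or "//" in value:
        errors.append("20: must not start or end with '/' or contain '//'")
    return errors


def validate_field_32a(value: str) -> List[str]:
    """Field 32A, layout 6!n3!a15d: YYMMDD date, currency code, amount."""
    date, currency, amount = value[:6], value[6:9], value[9:]
    errors = []
    if not re.fullmatch(r"\d{6}", date):
        errors.append("32A: date must be six digits (YYMMDD)")
    else:
        try:
            datetime.strptime(date, "%y%m%d")
        except ValueError:
            errors.append("32A: date is not a valid calendar date")
    if not re.fullmatch(r"[A-Z]{3}", currency):
        errors.append("32A: currency must be three upper-case letters")
    # At most 15 characters, at least one integer digit, and a mandatory decimal comma.
    if len(amount) > 15 or not re.fullmatch(r"\d+,\d*", amount):
        errors.append("32A: amount must be up to 15 characters with a decimal comma")
    return errors


# Tag -> validator; tags without an entry are not checked in this sketch.
VALIDATORS: Dict[str, Callable[[str], List[str]]] = {
    "20": validate_field_20,
    "32A": validate_field_32a,
}


def validate_fields(fields: List[Tuple[str, str]]) -> List[str]:
    """Run the known validators over (tag, value) pairs and collect all errors."""
    errors: List[str] = []
    for tag, value in fields:
        check = VALIDATORS.get(tag)
        if check is not None:
            errors.extend(check(value))
    return errors
```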

Advanced Scenarios

For now, you need to contact us if you have either of the scenarios described below. We plan to document them soon, so this friction is short-term.

- SWIFT processing within VNET

- To perform message validation, the Logic Apps runtime uses artifacts that are hosted on a public endpoint. If you want to limit calls to the internet and do all the processing within your VNET, you need to override the location of those artifacts with an endpoint within your VNET. Please reach out to us and we can share instructions.

- BIC (Bank Identifier Code) validation

- By default, BIC validation is disabled. If you would like to enable BIC validation, please reach out to us and we can share instructions.
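BIC validation is off by default, so it helps to know what a BIC looks like structurally. The sketch below checks only the classic ISO 9362 layout, assuming the traditional letters-only institution code:

- a four-letter institution code
- a two-letter country code
- a two-character location code
- an optional three-character branch code

It cannot tell whether a BIC is actually registered, which real BIC validation against SWIFT data would need. The `parse_bic` helper is hypothetical and unrelated to the Logic Apps implementation.

```python
import re
from typing import Dict, Optional

# Classic BIC layout: institution (4 letters), country (2 letters),
# location (2 alphanumerics), optional branch (3 alphanumerics).
BIC_PATTERN = re.compile(
    r"(?P<institution>[A-Z]{4})"
    r"(?P<country>[A-Z]{2})"
    r"(?P<location>[A-Z0-9]{2})"
    r"(?P<branch>[A-Z0-9]{3})?"
)


def parse_bic(bic: str) -> Optional[Dict[str, str]]:
    """Return the BIC's components if it has a valid 8- or 11-character layout, else None.

    Input is trimmed and upper-cased first; only the structure is checked.
    """
    match = BIC_PATTERN.fullmatch(bic.strip().upper())
    if match is None:
        return None
    parts = match.groupdict()
    # An 8-character BIC denotes the primary office, conventionally branch "XXX".
    if parts["branch"] is None:
        parts["branch"] = "XXX"
    return parts
```

Normalizing case before matching is a design choice for the sketch. A stricter checker might instead reject lower-case input outright.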

by Contributed | Nov 4, 2022 | Technology


Summary

Azure Sphere is updating keys used in image signing, following best practises for security. The only impact on production devices is that they will experience two reboots instead of one during the 22.11 release cycle (or when they next connect to the Internet if they are offline). For certain manufacturing, development, or field servicing scenarios where the Azure Sphere OS is not up to date, you may need to take extra steps to ensure that newly signed images are trusted by the device; read on to learn more.

What is an image signing key used for, and why update it?

Azure Sphere devices only trust signed images, and the signature is verified every time software is loaded. Every production software image on the device – including the bootloader, the Linux kernel, the OS, and customer applications, as well as any capability file used to unlock development on or field servicing of devices – is signed by the Azure Sphere Security Service (AS3), based on image signing keys held by Microsoft.

As with any modern public/private key system, the keys are rotated periodically; the image signing keys are valid for 2 years. Note that once an image is signed, it generally remains trusted by the device. A separate mechanism, based on one-time programmable fuses, revokes older OS software with known vulnerabilities such as DirtyPipe and prevents rollback attacks; we used this most recently in the 22.09 OS release.

When is this happening?

The next update to the image signing certificate will occur at the same time as 22.11 OS is broadly released in early December. When that happens, all uses of AS3 to generate new production-signed application images or capabilities will result in images signed using the new key.

Ahead of that, we will update the trusted key-store (TKS) of Azure Sphere devices, so that the TKS incorporates all existing keys and the new keys. This update will be automatically applied to every connected device over-the-air. Note that device TKS updates happen ahead of any pending updates to OS or application images. In other words, if a device comes online that is due to receive a new-key-signed application or OS, it will first update the TKS so that it trusts that application or OS.

We will update the TKS at the same time as our 22.11 retail-evaluation release, which is targeted at 10 November. The next time that each Azure Sphere device checks for updates (or up to 24 hours later if using the update deferral feature), the device will apply the TKS update and reboot. The TKS update is independent of an OS update, and it will apply to devices using both the retail and retail-eval feeds.
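The order of updates matters because a device only trusts an image whose signing key is in its TKS. The toy Python model below illustrates that rule. It also shows why the manufacturing, development, and field-servicing cases described in the next section need the new TKS before any newly signed image. It is a simplification, not Azure Sphere’s implementation: HMAC stands in for the real public-key signatures, so the "keys" here are shared secrets, and the key IDs and class names are made up.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SignedImage:
    payload: bytes
    key_id: str      # which signing key produced the signature
    signature: bytes


def sign(payload: bytes, key_id: str, secret: bytes) -> SignedImage:
    # HMAC-SHA256 stands in for the real asymmetric signature scheme.
    return SignedImage(payload, key_id, hmac.new(secret, payload, hashlib.sha256).digest())


@dataclass
class Device:
    # The device's trusted key store (TKS): key ID -> verification key.
    trusted_keys: Dict[str, bytes] = field(default_factory=dict)

    def update_tks(self, new_keys: Dict[str, bytes]) -> None:
        # A TKS update adds keys; previously trusted keys stay trusted.
        self.trusted_keys.update(new_keys)

    def accepts(self, image: SignedImage) -> bool:
        key = self.trusted_keys.get(image.key_id)
        if key is None:
            return False  # signed with a key this device does not know yet
        expected = hmac.new(key, image.payload, hashlib.sha256).digest()
        return hmac.compare_digest(expected, image.signature)
```

In this model, a device that has been offline keeps accepting images signed with the old key. It rejects new-key images until `update_tks` runs, which mirrors why sideloading the new TKS comes first.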

Do I need to take any action?

No action is required for production-deployed devices. There are three non-production scenarios where you may need to take extra steps to ensure that newly signed images are trusted by the device.

The first is for manufacturing. If you update and re-sign the application image you use in manufacturing, but you are using an old OS image with an old TKS, then that OS will not trust the application. Follow these instructions to sideload the new TKS as part of manufacturing.

The second is during development. If you have a dev board that you are sideloading either a production-signed image or a capability to, and it has an old TKS, then it will not trust that capability or image. This may make the “enable-development” command fail with an error such as “The device did not accept the device capability configuration.” This can be remedied by connecting the device to a network and checking that the device is up-to-date. Another method is to recover the device – the recovery images always include the latest TKS.

The third is for field servicing. During field servicing you need to apply a capability to the device as it has been locked down after manufacturing using the DeviceComplete state. However, if that capability is signed using the new image signing key and the device has been offline – so it has not updated its TKS – then the OS will not trust the capability. Follow these instructions to sideload the new TKS before applying the field servicing capability.

Thanks for reading this blog post. I hope it has helped explain how Azure Sphere uses signed images, and best practises such as key rotation, to keep devices secure throughout their lifetime.

by Contributed | Nov 3, 2022 | Technology


Microsoft Defender for Office 365 is pleased to announce a partnership with SANS Institute to deliver a new series of computer-based training (CBT) modules in the Attack Simulation Training service. The modules will focus on IT system and network administrators. Microsoft is excited to collaborate with a recognized market leader in cyber security training to help our customers address a critical challenge in the modern threat landscape: educating and upskilling security professionals.

“We salute Microsoft for recognizing the requirement to direct security awareness training towards IT System and Network Administrators since our experience tells us that it is precisely these users who are more frequently targeted because of their privileged access.”

Carl Marrelli, Director of Business Development at the SANS Institute

We chose SANS Institute for its long track record of success in technical education and for its focus on an audience that Defender for Office 365 wants to support. Technical education is hard, and cyber security education is even harder to deliver effectively. SANS Institute’s approach was best-in-class, and we think our customers will find this content very valuable for upskilling their organizations.

Today, Attack Simulation Training provides a robust catalog of end-user training experiences, soon to expand beyond social engineering topics. This partnership with SANS will help us expand our offerings to cover an important and challenging topic area. IT system administrators and network administrators must acquire and apply a broad and deep body of complex cyber security knowledge to protect their organizations successfully. Good training can be difficult to find, and Microsoft believes that this new set of training modules will help organizations, large and small, upskill their administrative staff. These new courses will be self-paced, short-form, and easily digestible.

These new courses will be made available in the coming months through the Attack Simulation Training platform. They will ship alongside the rest of our catalog and can then be assigned through our training campaign workflows. Attack Simulation Training is available to organizations through Microsoft 365 E5 Security or Microsoft Defender for Office 365 Plan 2. The courses will meet all of Microsoft’s standards for accessibility, diversity, and inclusivity.

The SANS Institute was established in 1989 as a cooperative research and education organization. Today, SANS is the most trusted and, by far, the largest provider of cybersecurity training and certification to professionals in government and commercial institutions worldwide. Renowned SANS instructors teach more than 60 courses at in-person and virtual cybersecurity events and on demand. GIAC, an affiliate of the SANS Institute, validates practitioner skills through more than 35 hands-on technical certifications in cybersecurity. SANS Security Awareness, a division of SANS, provides organizations with a complete and comprehensive security awareness solution, enabling them to manage their “human” cybersecurity risk easily and effectively. At the heart of SANS are the many security practitioners, representing varied global organizations from corporations to universities, working together to support and educate the global information security community.

Want to learn more about Attack Simulation Training?

Get started with the available documentation today and check out the blogs for Setting up a New Phish Simulation Program-Part One and Part Two. In addition to these, you can read more details about new features in Attack Simulation Training.

by Contributed | Nov 1, 2022 | Technology


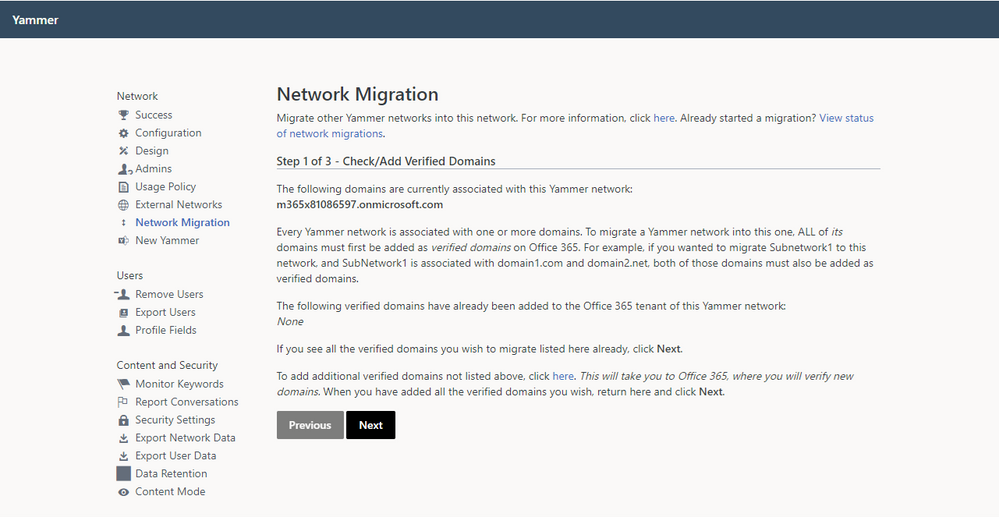

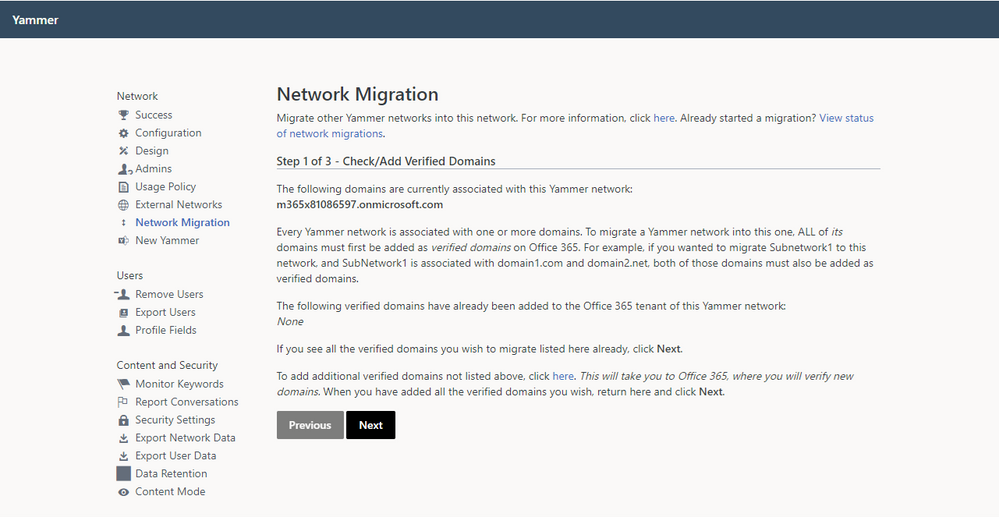

Yammer is committed to strengthening its alignment with Microsoft 365. This means we will no longer support tenants that have more than one home Yammer network. This change will ensure that Yammer networks have the same organizational boundaries as their corresponding M365 tenants. Integrating Yammer more fully into the Microsoft 365 ecosystem has been a multi-year effort. New networks created since October 2018 have not permitted this 1:Many configuration, and new Yammer networks provisioned after January 2020 have been created in Native Mode. In September 2022, the enforcement of Native Mode was announced. Automated network consolidation is an important prerequisite to reaching Native Mode and gaining access to broader safety, security, and compliance features. Automated consolidation will start on 5/1/2023 and continue through 5/1/2024.

Start the consolidation process

If you have secondary Yammer networks, you will lose access to all your secondary Yammer networks once you consolidate. Users from your secondary Yammer networks will be migrated to your primary network, but groups (communities) and data will be lost. In advance of this change, we strongly recommend that customers perform a full data export of their networks. You can read more about exporting data from your network here.

We strongly advise customers to perform this network consolidation themselves. This lets you choose when the consolidation happens, and lets your administrator choose which primary domain will be associated with the remaining network.

Customers are encouraged to initiate network consolidation any time before or after 5/1/2023.

If you would like to self-initiate your network consolidation:

- There is a guide to network consolidation available to walk you through the process. A network administrator can run the network migration tool from within Yammer.

If you would like Microsoft to initiate your network consolidation:

- You are not required to take any action. Microsoft will notify you via the M365 Message Center of your scheduled consolidation date and default primary network and domain. The network with the highest total message count will be the default primary network.

- If you would like a different default primary network, contact Microsoft 365 support to log an exception.

If you need to postpone or schedule network consolidation around blackout dates:

- Contact Microsoft 365 support to log an exception.

What happens next?

After your networks are consolidated into 1:1 alignment with Microsoft 365, they will be upgraded to Native Mode. This provides access to the latest features in Yammer and ensures that customers are covered by the Microsoft 365 compliance features.

You can read more about our plans to ensure alignment to Native Mode here.

Need more help?

There are Microsoft partners that can help you migrate data from your secondary networks. Please reach out to your Microsoft Account Team or contact a partner for more information.

FAQ

Q: We know which network we want to be our primary network. How can we make sure that our networks are automatically consolidated to that specific network?

A: Please contact support to specify your desired primary network and domain.

Q: We do not want our networks consolidated. What can we do?

A: Please contact support to request an exception, which will include sharing business justifications, when the consolidation can proceed, and your plan to unblock it. This is not a permanent exception.

Q: We consolidated our networks, but we want to reverse the process so we can have a different primary network. Is that possible?

A: No. Network consolidation is an irreversible process.

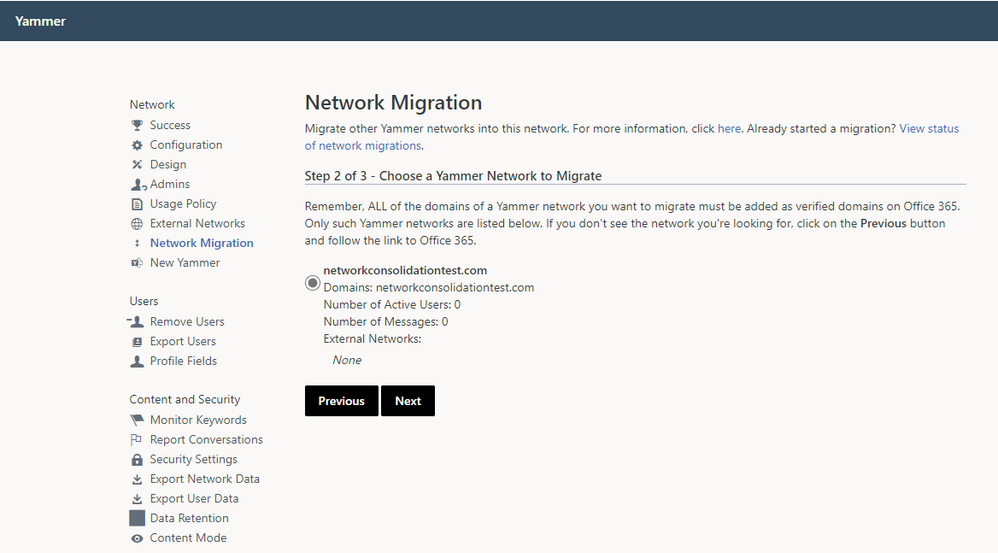

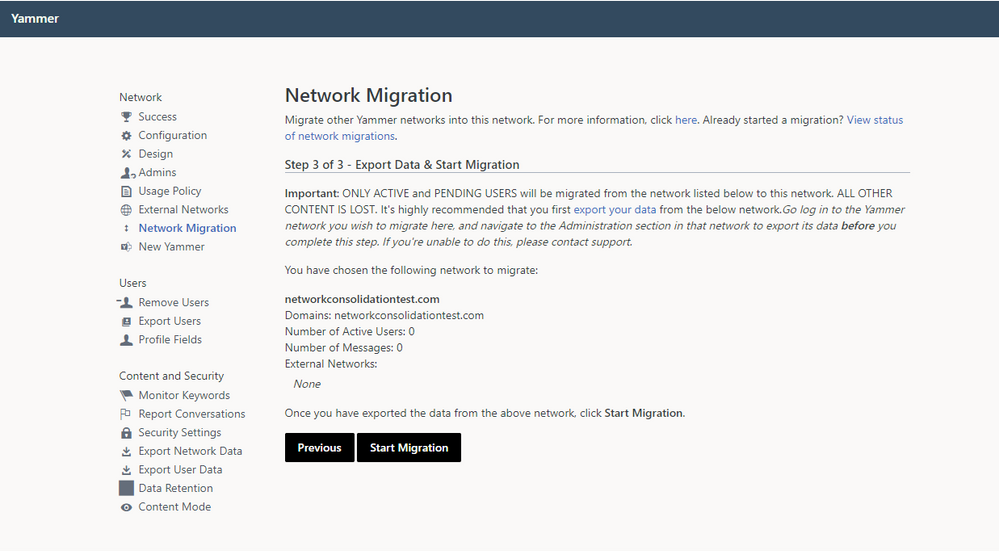

Step-by-step instructions for network consolidation

1. As a network admin, visit the Network Migration page within the Yammer network admin settings of the primary network you want to keep. Make sure that all of the domains you wish to consolidate are listed. If they are not, you can follow the link to add and verify additional domains.

2. Choose the network you wish to consolidate, and click Next.

3. Validate that you are ready to consolidate the selected network and have performed any desired data export prior to consolidation.

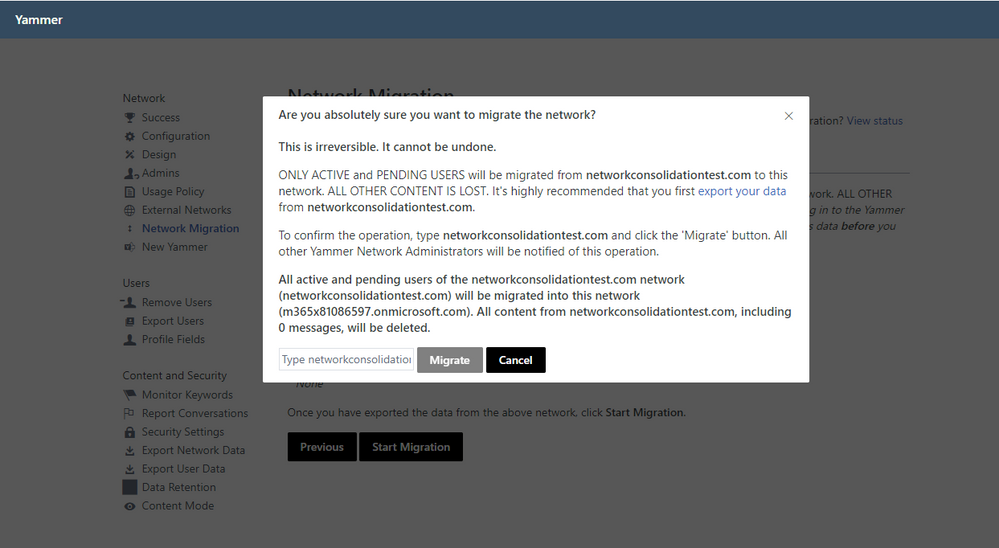

4. Follow the instructions to initiate network consolidation.

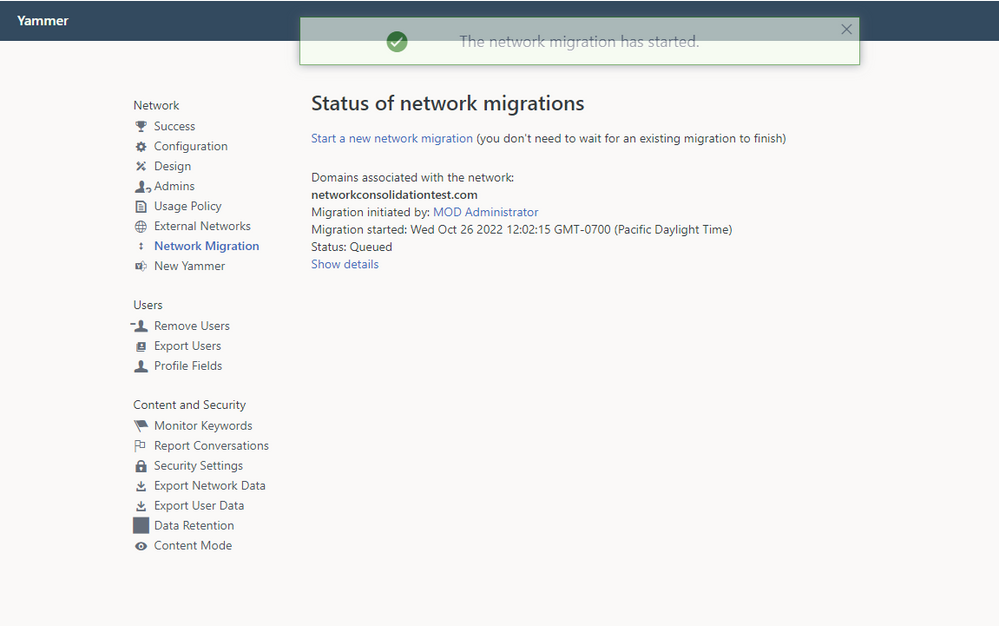

5. You can view the status of your network consolidation on the Network Migration page.

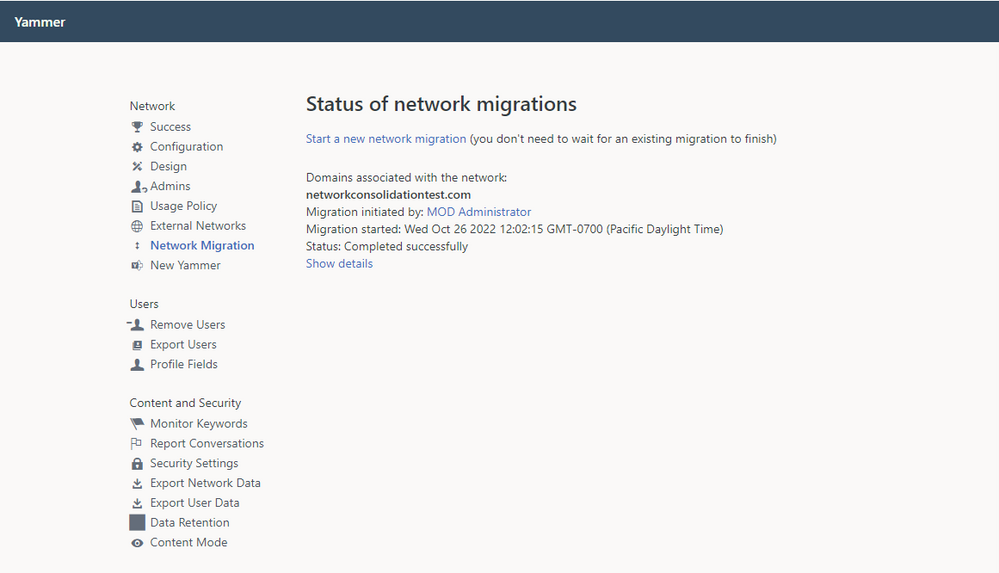

6. When the consolidation is complete, the status will update.

7. Repeat this process until you only have one network associated with your tenant. Congratulations! You are now ready to migrate to Native Mode.
