by Contributed | Dec 1, 2022 | Technology

This article is contributed. See the original author and article here.

We’ve been working diligently to address user feedback about desktop files not opening in the Visio web editor. Today, we’re excited to announce that we’ve made a number of improvements towards a more seamless user experience between the Visio web and desktop apps.

What’s new

Visio users can now open and edit files containing shapes with the following properties in Visio for the web:

- Rectangle gradients

- More fill and line patterns

- OLE objects

- Data graphics

- Shadow text

- Vertical text

- Double underlines

- Shadow effects

- Reflection effects

- Glow effects

- Soft edges

- Bevel effects

- 3-D rotations

- Perspectives and 3-D rotation perspectives

- Compound line patterns

- 1-D and 2-D protection

Note: Some interactions have been optimized to ensure they work in Visio for the web.

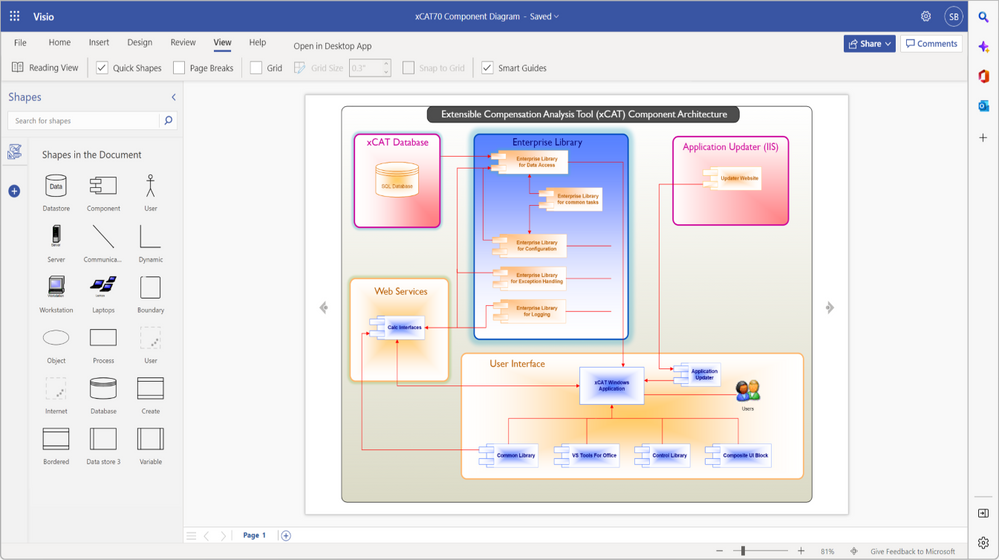

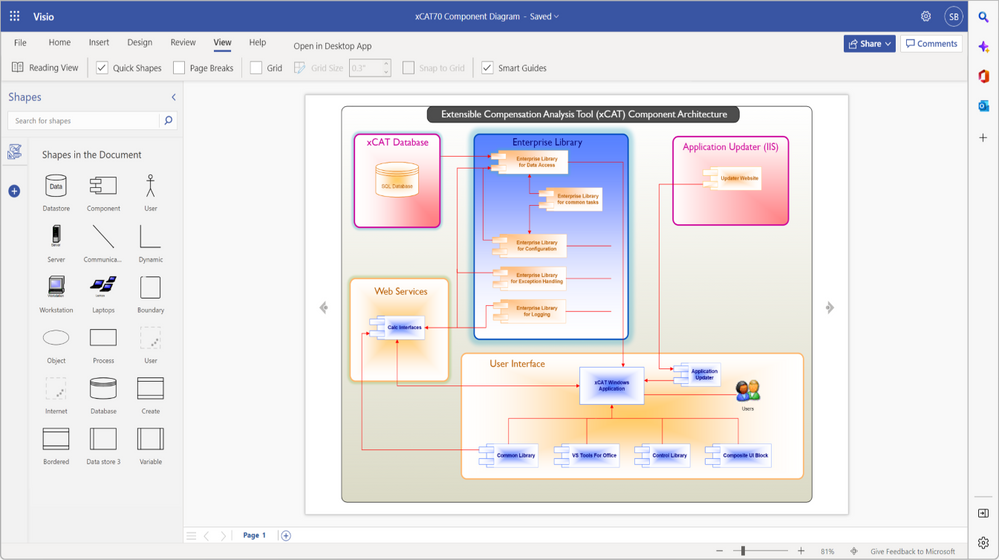

An image of a component architecture diagram demonstrating how shapes with gradients appear in Visio for the web.

Looking ahead

Our goal is to always make it easier for you to think and collaborate on ideas visually—whether improving processes, developing new reporting structures, or brainstorming concepts. With this new update, we hope you will find it easier to work with colleagues, from whichever app you prefer.

We are continuing to make improvements on this front and look forward to introducing support for, among other things, diagrams containing shapes with layers and diagrams with more than 1,000 shapes. To learn more about the properties that can still cause compatibility issues when editing files in Visio for the web, see “Why can’t I open a diagram for editing in Visio for the web?”

Watch our on-demand webinar

Watch the event recording here: Editable for all: How to create diagrams that work virtually anywhere and across Visio versions. During this session, we show you how to create Visio files that can be accessed by all stakeholders regardless of which Visio version (or app) you use. We also discuss how to avoid or quickly address any web alerts that prevent in-browser edits, so you can jump right back into your workflow.

We are listening!

We look forward to hearing your feedback and learning more about how we can ensure a more seamless user experience between Visio apps. Please tell us what you think in the comment section below. You can also send feedback via the Visio Feedback Portal or directly in the Visio web app using “Give Feedback to Microsoft” in the bottom right corner.

Did you know? The Microsoft 365 Roadmap is where you can get the latest updates on productivity apps and intelligent cloud services. Check out which features are in development and coming soon on the Microsoft 365 Roadmap homepage, or learn more about the roadmap item “Improved interoperability between the Visio web and desktop apps.”

by Contributed | Nov 30, 2022 | Business, Hybrid Work, Microsoft 365, Technology

We are honored to announce that Gartner® has recognized Microsoft as a Leader in the 2022 Gartner® Unified Communications as a Service (UCaaS) Magic Quadrant™ report.

The post Microsoft named a Leader in 2022 Gartner® Magic Quadrant™ for Unified Communications as a Service, Worldwide appeared first on Microsoft 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Nov 30, 2022 | Technology

We continue to expand the Azure Marketplace ecosystem. For this volume, 94 new offers successfully met the onboarding criteria and went live. See details of the new offers below:

Get it now in our marketplace

Cloud Native Stack Virtual Machine Image: NVIDIA’s Cloud Native Stack virtual machine image (VMI) is GPU-accelerated and enables advanced functionality for developers, including better GPU performance, utilization, and telemetry.

Demography: TensorGo’s Demography API detects faces in videos and returns gender, ethnicity, and estimated age in real time by using advanced machine learning models.

Face Detection: TensorGo’s Face Detection API uses computer vision to detect faces in an image or video and return a bounding box around individual faces.

Facial Landmarks: TensorGo’s Facial Landmarks API localizes a human face in an image or video and tracks the facial points to predict and track behaviors over time.

FTP Server Basic on Windows Server 2019: This preconfigured image from Tidal Media includes a minimal FTP server on Windows Server 2019. FTP (File Transfer Protocol) lets you transfer computer files between a server and a client.

Heart Rate Estimation: TensorGo’s Heart Rate Estimation API uses non-invasive computer vision techniques to estimate the heart rate of a subject in a video.

Hyper-V on Windows Server 2022: Cloud Infrastructure Services’ Hyper-V Server lets you deploy nested virtualization on Microsoft Azure to host multiple operating systems and containerized virtual machines.

Jenkins on Debian 10 with Support: This virtual machine from ATH provides Jenkins 2.361 on Debian 10. Jenkins is an open-source automation server that runs in servlet containers and supports version control tools for CI/CD tasks.

Jenkins on Debian 11 with Support: This virtual machine from ATH provides Jenkins 2.361 on Debian 11. Jenkins is an open-source automation server that runs in servlet containers and supports version control tools for CI/CD tasks.

Jenkins on Ubuntu 18.04 LTS with Support: This virtual machine from ATH provides Jenkins 2.361 on Ubuntu 18.04 LTS. Jenkins is an open-source automation server that runs in servlet containers and supports version control tools for CI/CD tasks.

Jenkins on Ubuntu 20.04 LTS with Support: This virtual machine from ATH provides Jenkins 2.361 on Ubuntu 20.04 LTS. Jenkins is an open-source automation server that runs in servlet containers and supports version control tools for CI/CD tasks.

License Plate Recognition: TensorGo’s License Plate Recognition API uses optical character recognition to identify vehicles in traffic and extract their license plate numbers.

Low Light and Infrared Face Detection: TensorGo’s Low Light IR Face Detection API overcomes environmental conditions to identify faces and people from video captured in low lighting or by an infrared (IR) camera.

LTAPPS Timesheet: LTAPPS Timesheet is a time-tracking app for Microsoft Teams and SharePoint, on desktop or mobile, that lets employees and managers track hours, overtime, and absences.

People Detection: TensorGo’s People Detection API lets you detect people moving in videos, regardless of which direction they are facing, the light levels, and their clothing.

People Segmentation: TensorGo’s People Segmentation API provides real-time detection and segmentation of people in an image or video, allowing you to home in on individuals in crowds.

Pose Estimation: TensorGo’s Pose Estimate API can track the pose and orientation of a person or object from an image or video to determine whether the person is standing, sitting, or performing activities.

Rocky Linux 8 – Generation 2 Virtual Machine: ProComputers has preconfigured this minimal, ready-to-use generation 2 virtual machine containing Rocky Linux 8, cloud-init, and the Microsoft Azure Linux Agent.

Rocky Linux 9 Minimal: Ntegral has preconfigured this minimal virtual machine containing Rocky Linux 9 for use on Azure. Rocky Linux is used in enterprise cloud environments for Node.js, web, and database applications.

Ruby on Rails: Data Science Dojo has packaged Ruby on Rails on Ubuntu 20.04 to enable students, developers, and organizations focused on web-based products to easily create Ruby-based data science apps.

Salesken – AI Assistance: Salesken is a real-time, Azure-based sales assistant that provides AI-driven conversation insights from contextual clues and talking points, enabling you to navigate sales discussions with ease.

SmartDA: SmartDA is an app for Microsoft Teams that provides a versatile and personalized internal assistant using Intumit’s conversational AI to answer user questions about Microsoft 365.

Traffic Analyzer: TensorGo’s Traffic Analyzer API detects vehicles, pedestrians, and road signs in images or videos, identifying the location and number of vehicles and people.

Ubuntu 20.04.5 LTS: Ntegral provides this preconfigured image of Ubuntu 20.04.5 LTS for use on Microsoft Azure. Ubuntu is a popular Linux distribution used to serve enterprise workloads such as Node.js, web, and databases.

Veritas InfoScale for the Cloud (Ireland): Veritas’s InfoScale is a comprehensive availability and storage management solution delivering a common platform across physical, virtual, and multi-cloud infrastructure.

Veritas InfoScale for the Cloud (Singapore): Veritas’s InfoScale is a comprehensive availability and storage management solution delivering a common platform across physical, virtual, and multi-cloud infrastructure.

Veritas InfoScale for the Cloud (United States): Veritas’s InfoScale is a comprehensive availability and storage management solution delivering a common platform across physical, virtual, and multi-cloud infrastructure.

VSM for Microsoft Teams: Virsae’s VSM Everywhere is a centralized network management and optimization platform to ensure that your Microsoft Teams and contact center environments operate at peak performance.

Go further with workshops, proofs of concept, and implementations

Azure ARC and Azure Stack HCI: 1-Day Workshop: Available only in German, Bechtle’s practical workshop presents an overview of Microsoft Azure Arc and Azure Stack HCI, as well as use cases for managing your infrastructure uniformly.

Azure DevOps Best Practices: 1-Day Workshop: Learn from PetaBytz about best practices, tips, tricks, and working demos for Microsoft Azure DevOps to get the most out of your Agile software development stack.

Business-driven Web Analytics Solution: 5-Week Implementation: Polestar will deliver dashboards built on Microsoft Power BI to help you track web statistics across your analytics stores by using Microsoft Azure Data Factory and Azure Services.

Cloud Deployment, Migration, and Modernization Services: ISM’s Cloud Deployment, Migration, and Modernization services aim to optimize costs and line-of-business delivery for your enterprise IT landscape through greenfield implementations on Microsoft Azure.

Cloud End-to-End Managed Services: ISM offers complete management of part or all of your Microsoft Azure environment, whether standalone or hybrid, in alignment with ITIL and the Microsoft Cloud Adoption Framework.

Cloud Migration Service: 3-Week Implementation: NCS will accelerate your migration to and adoption of Microsoft Azure through this low-risk, cost-optimized service that includes strategic planning, technical design, delivery validation, and more.

DevSecOps Automated Pipeline Process: Implementation: Vismaya India will analyze your traditional automated pipelines and manual development processes, then implement an automation solution built on Microsoft Azure DevOps.

Hybrid Cloud Sync Services: AlgoSystems’ Hybrid Cloud Sync Services provide a modern and reliable file solution built on Microsoft Azure File Sync and Azure Backup to support your business and users.

Infrastructure as Code on Azure DevOps: 1-Week Implementation: Drawing on expert experience, PetaBytz will use industry best-practice blueprints to drive your adoption of Microsoft Azure DevOps and optimize delivery of business products and services.

Innovation Jumpstart: 6-Week Proof of Concept: Quisitive’s Innovation Jumpstart helps organizations build a ready-to-execute app roadmap and rapidly prototype a solution built on Microsoft Azure.

Network Management on Azure for Multi-cloud: 2-Week Proof of Concept: Metanext will implement Aviatrix for Microsoft Azure to improve your management of networks and security in your multi-cloud environment. This offer is available only in French.

Quickstart Service for Prisma Cloud: 6-Month Implementation: Palo Alto Networks’ Quickstart Service for Prisma Cloud CSPM delivers visibility, compliance, and governance for threat detection and behavior analytics on Microsoft Azure.

Contact our partners

- Alfresco Consultation: 2-Hour Briefing

- Analytics Solution: 4-Week Assessment

- Angles for SAP

- Anomaly Detection

- Application Modernization on Azure: 4-Week Assessment

- ARGOS Cloud Security for Microsoft Sentinel

- Azure Database Migration: 2-Hour Briefing

- Azure Migrate: 1-Hour Briefing

- Beak Vulnerability Detection & Response

- Canopy Remote Device Management Software

- Cisco Cyber Vision

- Clobotics REA

- Cloud Discovery, Assessment, and Advisory Services

- Cloud Readiness: 8-Day Assessment

- Data Discovery for Manufacturing and Construction: 3-Week Assessment

- Data Governance: 8-Week Assessment

- DevOps (CI/CD) for z/OS Mainframes

- DevOps Consulting

- DrAid AI Cognitive Service

- Enable Customer Success for Cloud Adoption Framework: 4-Hour Briefing

- FONS Galen

- Forcepoint Email Security V8.5.5

- Hf.flow Application

- Holistic Business Case Solution for Microsoft 365: 4-Week Assessment

- Honeywell Forge Performance+ Industrial Asset Performance

- IBM Cloud Pak for Data on ARO – BYOL

- Info-Tech Mobile Attendance App

- Managed GitHub Enterprise Server

- mirro.ai Mood Analyzer (SaaS)

- Panoptica Cloud Native Application Security For Developers

- Percy Intranet

- Prime Unified Messaging Platform

- Process Runner for Microsoft 365

- Proximus Identity Governance: Assessment

- Quorum Cyber Managed eXtended Detection & Response (XDR)

- ReversingLabs File Enrichment

- SAP & Microsoft Analytics Combined: 5-Day Assessment

- Seavus Application Modernization: 4-Week Assessment

- Spark Unified Media Platform

- Spicy Managed Microsoft Sentinel Service

- SPiDER TM (Japan)

- SPiDER TM 5.5 (South Korea)

- Spirent for Azure Public Multi-access Edge Compute

- Stipra

- SUSE Linux Enterprise Server 15 SP4 – Hardened BYOS (x86_64)

- Sustainability Data Insights

- SymphonyAI Sensa – SensaAML (AI-based Anti-Money Laundering)

- US Exports Bill of Lading

- US Imports Bill of Lading

- US Imports Bill of Lading Commodity Details

- Veridis Carbon Management

- WeTrack

- Windows 365 Cloud PC

- Yanomaly Asset Health Monitoring

by Contributed | Nov 30, 2022 | Business, Hybrid Work, Microsoft 365, Technology, Work Trend Index

Empowering today’s digitally connected, distributed workforce requires the right culture powered by the right technology. This month in Microsoft 365, we’re highlighting new capabilities to boost productivity and inclusion.

The post From sign language capabilities to gaming in Teams—here’s what’s new in Microsoft 365 appeared first on Microsoft 365 Blog.

by Contributed | Nov 29, 2022 | Technology

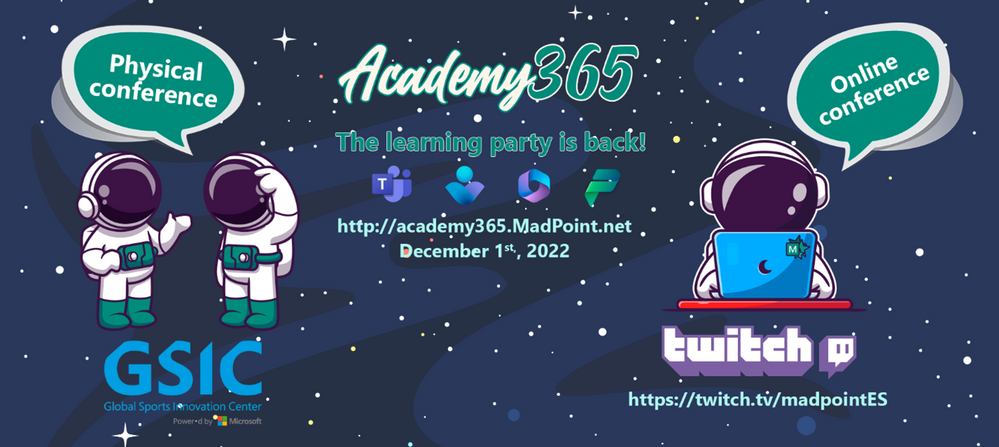

The technology sector is growing rapidly across Europe and needs many trained, qualified professionals to help large, medium, and small companies meet the global challenges ahead. A report by DigitalES estimates that there are currently more than 120,000 unfilled jobs in Spain related to software development, communications, security, cloud, big data, AI, and AR/VR.

In the Spanish Microsoft technical communities, we have always tried to bring technology to everyone. That’s why we designed a new type of event focused on learning, aimed at training professionals who are starting to work with the Microsoft 365 ecosystem and need to get the most out of its services.

In 2021, we designed Academy365

This new type of event launched last year as Academy365. It was organized by several Microsoft Most Valuable Professionals (MVPs) and coordinators of MadPoint, the Microsoft 365 technical community of Madrid. Because the goal of the event was to train attendees, we built an agenda of level 100 and 200 sessions and enlisted several Microsoft Certified Trainers (MCTs) to teach attendees about Microsoft Teams, SharePoint, Planner, Power Automate, Intune, security, and other technologies.

Last year, Academy365 was broadcast as a virtual event on Twitch. It was a success in Spain, averaging 300 attendees per training session and receiving an extraordinary reception. Attendees finished the event with a diploma certifying that they had received quality training from our MCTs and MVPs.

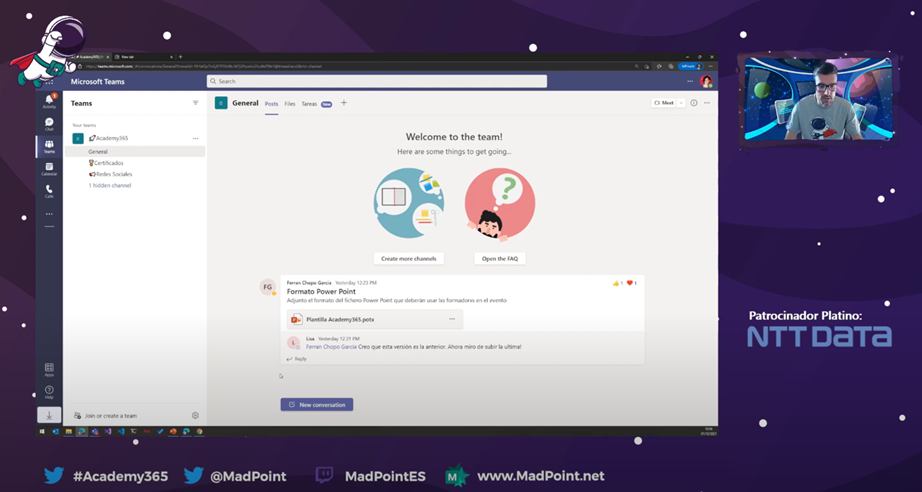

One of our sessions in 2021

MadPoint coordinators produced a professional-quality broadcast of the event using Microsoft Teams’ NDI capabilities, which let us extract the video and audio signals of each trainer in a Teams meeting and bring those signals into a professional production environment such as OBS Studio.

You can watch last year’s recording, which shows how Microsoft Teams helped us deliver a fantastic production, here: YouTube – Academy365

Academy365 is also a charity event. Last year we collaborated with the Food Bank Foundation of Madrid, a non-profit organization that helps the neediest families by providing them with food and other support, especially around Christmas. All funds received from sponsors and individuals went to helping these families.

And this year?

This year we want to bring back in-person events, so we have designed a second, hybrid edition. Attendees can join online on Twitch or come in person to the Global Sports Innovation Center in Madrid. Powered by Microsoft, the center is a non-profit association that fosters collaboration and provides its members with value-added services that help sports organizations and companies grow with support from experienced professionals in the sports tech ecosystem.

In-person attendees will receive the training live from our speakers and enjoy some additional surprises: we are preparing an innovation tour of the center, a Metaverse Corner, and an additional activity thanks to our friends at Microsoft Education.

For online attendees, we are keeping our Twitch broadcast, which will combine on-site speakers and speakers joining through Microsoft Teams in a single production.

This year’s agenda is very interesting, with Microsoft Teams and Microsoft Viva as the main protagonists. Sessions will also cover security, Mixed Reality, Power Apps, migrations, development, accessibility, and Windows 365.

If you live in Spain and are interested in the event, visit our website for all the details and to register.

Here are the most important links:

Thank you very much, and we wish you a Merry Christmas from our community.
