by Contributed | Dec 5, 2022 | Technology

This article is contributed. See the original author and article here.

Scientific computing has long relied on HPC systems to accelerate scientific discovery. What constitutes an HPC system has continued to evolve. Access to computing keeps getting democratized, and HPC is no longer limited to multi-billion-dollar government laboratories and industries that can afford the infrastructure. Anyone with access to the Internet can now easily leverage the ubiquitous cloud for their computing task du jour! Azure natively supports HPC by providing hardware suited to high performance computing needs, together with software infrastructure that makes it easy to harness these resources. In this post, we focus on one such Azure infrastructure component, Azure Batch, and see how it can be used to support a common use case: a data browser with interactive 3D visualization support.

Use-Case: the problem statement

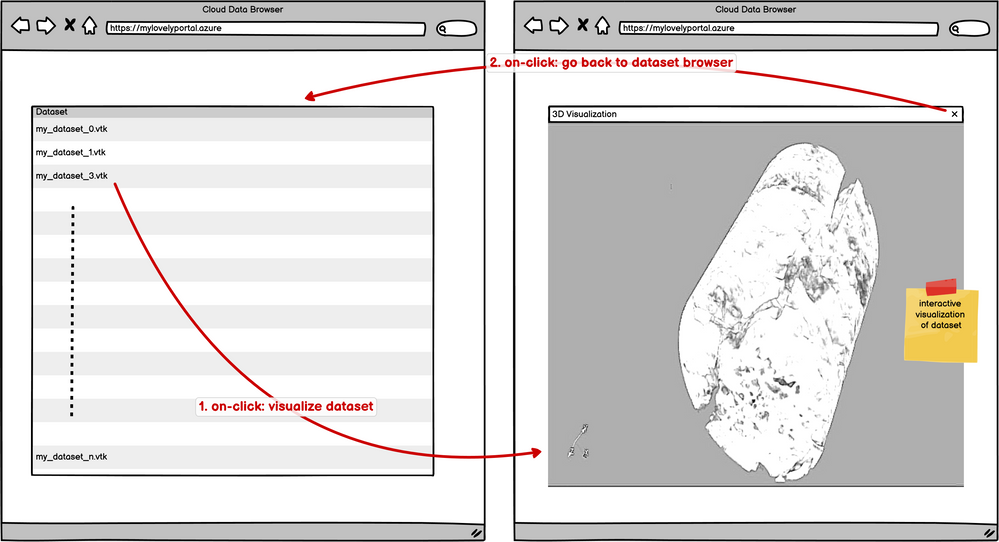

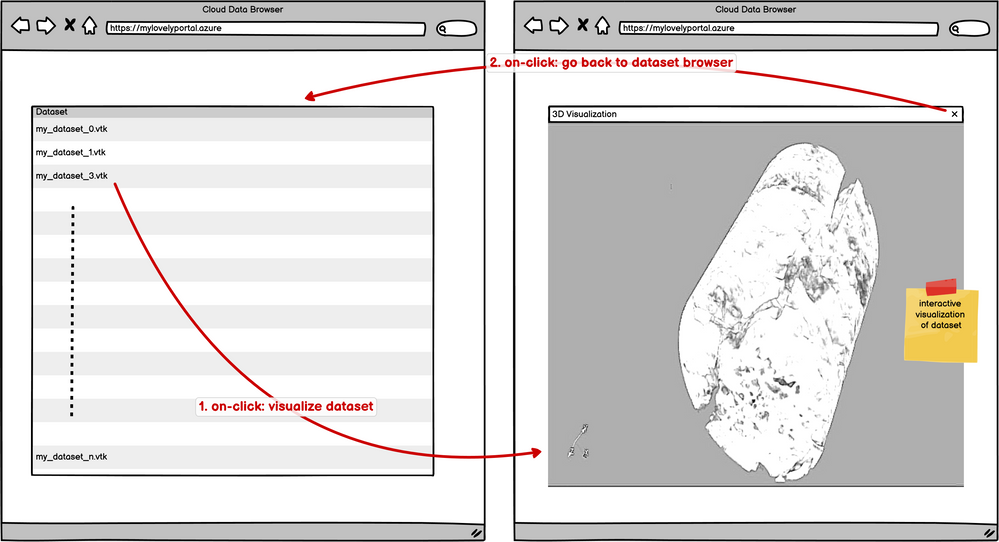

Recently, a customer came to us with an interesting use case. They wanted to provide their users with an interactive data browser. The datasets are HPC simulation and analysis results that can easily be several gigabytes in size. They wanted to present their users with a web app where users can browse the datasets and then select any dataset to visualize it interactively with a set of predefined ("canned") visualizations.

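Variations of this use case are a very common request in the scientific computing world, so let’s generalize (and perhaps simplify) the problem. We want to develop a web application that lets users browse a collection of datasets stored in the cloud and select any dataset to explore it interactively with a set of predefined 3D visualizations.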

Design Considerations

A few things to qualify the problem and help guide our design choices.

- We want a scalable solution. Of course, we could set all of this up on a workstation and expose it to the World Wide Web, but not only is that risky from a security standpoint, it also doesn’t scale. We want this to scale no matter how many users are accessing the portal at the same time.

- The datasets are large and require processing before they can be visualized. Hence, we want a system capable of remote rendering, where rendering happens on remote compute resources rather than in the browser itself.

These requirements help us make the following design choices:

- Azure Batch provides us with the ability to allocate (and free up) compute resources as and when needed. We can set up the web app to submit jobs to Azure Batch for visualizing datasets, and Batch then allocates those jobs to nodes in a node pool that can be configured to auto-scale as needed using autoscale formulas. This frees us from having to manage the nodes in the pool, such as setting them up and ensuring they have access to the appropriate storage to read the datasets. Batch takes care of that, in addition to providing tools for monitoring, debugging and diagnosing issues.

- For visualization and data processing, we use ParaView. Together with trame, ParaView makes it easy for us to develop remote-rendering-capable custom web applications that offer all the sophistication and flexibility available in the desktop app. Thus we can easily develop complex data analysis pipelines to satisfy specific user requirements. trame lets us access the visualization viewport from a web browser over WebSockets.

Deploying the resources

One of the first steps when dealing with cloud computing is deploying the necessary resources in the cloud. Infrastructure as Code (IaC) refers to the practice of deploying and configuring the needed resources programmatically. There are many ways to build our HPC environment in the Azure cloud. We can use the Azure Portal to set up the system interactively, we can use the Azure CLI to script the setup, or we can use tools like Terraform or Bicep to define and deploy the infrastructure declaratively. For this post, we use Bicep, a domain-specific language for declaratively defining Azure resources. For deploying the Bicep specifications and for other operations like populating datasets, we use the Azure CLI.

All the resources needed for this demo can be deployed using the Bicep code available in this GitHub repository. The readme goes over the prerequisites and the detailed steps to deploy all necessary resources. The project includes several different applications; the demo we cover in this post is referred to as trame. Ensure you pass enableTrame=true to the `az deployment sub create ….` command to deploy the web application.
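As a hedged illustration of the deployment step, the following Python sketch assembles the `az deployment sub create` call with the `enableTrame=true` parameter as an argument list. The region and the template path used in the example (`eastus`, `infrastructure/main.bicep`) are placeholders, not values taken from the repository; check its readme for the actual entry-point Bicep file and any other required parameters.

```python
import shlex
import subprocess


def build_deploy_command(location: str, template_file: str, enable_trame: bool = True) -> list[str]:
    """Assemble a subscription-scope Bicep deployment as an argument list.

    The template path is a placeholder; use the entry-point file named in
    the repository's readme.
    """
    return [
        "az", "deployment", "sub", "create",
        "--location", location,            # required for subscription-scope deployments
        "--template-file", template_file,
        # Bicep parameters are passed as name=value pairs.
        "--parameters", f"enableTrame={'true' if enable_trame else 'false'}",
    ]


def deploy(location: str, template_file: str) -> None:
    """Run the deployment; requires the Azure CLI and a prior `az login`."""
    subprocess.run(build_deploy_command(location, template_file), check=True)


if __name__ == "__main__":
    # Print the command instead of running it.
    print(shlex.join(build_deploy_command("eastus", "infrastructure/main.bicep")))
```

Building an argument list rather than a single shell string avoids quoting problems if the template path contains spaces.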

Demo in action

Once the deployment is successful, follow the steps described here to upload datasets to the deployed storage account. Finally, you should be able to browse to the URL of your deployed web app and start visualizing your datasets! Here’s a short video of the demo in action:

Demo: Cloud Dataset Viewer in action


Architecture

Let’s dive into the details of how this is put together. Of course, there’s no single way to do this, but discussing the resources and their configuration should help anyone trying to adapt a similar solution to their specific requirements.

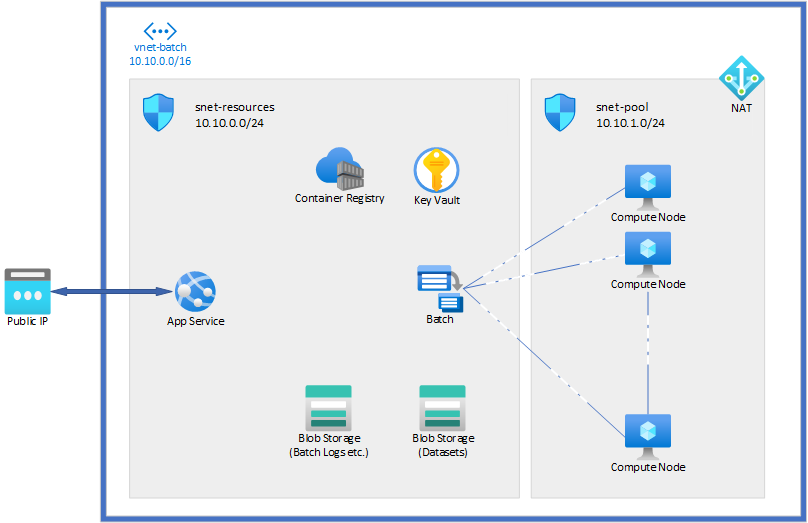

Here’s a schematic of the main Azure resources deployed in this demo.

App Service: This is the Azure resource that hosts our main web application. As described in the initial sections, we want our web app to let the user browse datasets and then visualize them. Thus, the web application has two major roles: listing datasets, and starting/stopping visualization jobs. For the first role, the web app needs to talk to the storage account where all the datasets are stored to get the list of available datasets. For the second, the web app needs to communicate with the Batch service to submit and stop jobs. In this demo, we decided to write this web app using Node.js. The source code is available here. The app uses the Azure SDK for JavaScript to communicate with the storage account and the Batch service. The web app also has a third, less obvious role: it acts as a proxy to the visualization web servers running on the compute nodes in the Batch pool. This will become clear when we discuss the Batch resource.
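To make the job-submission role more concrete, here is a minimal Python sketch (the demo’s web app is Node.js, so this is not its code) of what the web app might compute before asking Batch to start a visualization task: a task ID that satisfies Batch’s ID rules, and a command line that starts vizer on the dataset through the storage mount Batch sets up on each node. It assumes a hypothetical relative mount path `datasets`, that vizer is on the node’s PATH and takes the dataset path as its first argument plus `--host`/`--port` options (all hypothetical), and it whitelists dataset names because they arrive from the browser.

```python
import re

# Batch job/task IDs: alphanumerics, hyphens and underscores, at most 64 chars.
_INVALID_ID_CHARS = re.compile(r"[^A-Za-z0-9_-]")
# Conservative whitelist for dataset paths supplied by the browser.
_SAFE_DATASET = re.compile(r"[A-Za-z0-9._/-]+")


def make_task_id(session: str, dataset: str) -> str:
    """Derive a Batch-safe task ID from a session token and a dataset name."""
    return _INVALID_ID_CHARS.sub("-", f"viz-{session}-{dataset}")[:64]


def make_task_command(dataset: str, mount_name: str = "datasets", port: int = 8080) -> str:
    """Return a task command line that starts (hypothetical) vizer on a mounted dataset."""
    if not _SAFE_DATASET.fullmatch(dataset) or ".." in dataset.split("/"):
        raise ValueError(f"unsafe dataset name: {dataset!r}")
    # Task command lines are not run in a shell, so invoke bash explicitly
    # to have $AZ_BATCH_NODE_MOUNTS_DIR expanded on the node.
    data_path = f"$AZ_BATCH_NODE_MOUNTS_DIR/{mount_name}/{dataset}"
    return f'/bin/bash -c "vizer {data_path} --host 0.0.0.0 --port {port}"'
```

Wrapping the command in `/bin/bash -c` matters because Batch does not run task command lines in a shell, so environment variables such as `AZ_BATCH_NODE_MOUNTS_DIR` would otherwise not be expanded.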

Batch: This is the Azure Batch resource that orchestrates the compute node pools, job submission, etc. Batch manages all the compute nodes available for handling visualization requests. When the user “clicks” on a dataset, the web app uses the Azure Batch JavaScript API to ask the Batch service to start a job that visualizes the corresponding dataset. Batch takes care of mounting the storage account on all compute nodes in the pool when they are initialized, so any process running on the compute nodes can access the datasets. The visualization job, in our case, is a simple Python application that uses ParaView/trame APIs to visualize the data. The application, named vizer, is available in this GitHub repository. When launched with a dataset filename passed on the command line, vizer starts a Python web server that one can connect to in order to access the visualization. vizer runs on one of the compute nodes in the pool, and the compute nodes in the pool are not accessible from the outside network. Thus, there’s no direct way for the user to connect to this internal visualization web server. This is why the web app deployed in our outward-facing App Service also needs to act as a proxy. When a visualization web server is ready, the main web app creates an iframe that proxies to this internal visualization web server, making the visualization accessible to the user. Since trame uses WebSockets, the proxy must support WebSocket proxying as well. Luckily, Node.js makes this very easy. Look at the web app source code for details on how this can be done. For simplicity, the demo doesn’t add any additional authorization for the proxying. For production, one should consider adding authorization logic to prevent users from accessing other users’ visualization results.
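The proxying, together with the authorization check recommended for production, can be sketched as a routing table. This Python sketch is hypothetical (the demo implements its proxy in Node.js): it maps public paths of the form `/viz/<session-id>/...` to the private address of the node running vizer, rejects requests from anyone but the user who launched the session, and switches the upstream scheme to `ws://` for WebSocket upgrade requests. The `/viz/` path layout, the `VizSession` fields and the way the user identity is obtained are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VizSession:
    owner: str    # ID of the user who launched the visualization job
    node_ip: str  # private IP of the pool node running vizer
    port: int     # port vizer listens on


class ProxyTable:
    """Maps public /viz/<session>/... paths to internal vizer servers."""

    def __init__(self) -> None:
        self._sessions: dict[str, VizSession] = {}

    def register(self, session_id: str, session: VizSession) -> None:
        self._sessions[session_id] = session

    def resolve(self, path: str, user: str, headers: dict[str, str]) -> Optional[str]:
        """Return the upstream URL for a request, or None if it must be rejected.

        Header names are assumed to be lower-cased, as Node.js does.
        """
        parts = path.split("/", 3)  # ["", "viz", "<session>", "<rest>"]
        if len(parts) < 3 or parts[1] != "viz":
            return None
        session = self._sessions.get(parts[2])
        # Authorization: only the user who started the session may reach it.
        if session is None or session.owner != user:
            return None
        rest = parts[3] if len(parts) == 4 else ""
        # trame uses WebSockets; upgrade requests need a ws:// upstream.
        is_ws = headers.get("upgrade", "").lower() == "websocket"
        scheme = "ws" if is_ws else "http"
        return f"{scheme}://{session.node_ip}:{session.port}/{rest}"
```

Keying sessions by an unguessable ID and still checking the owner gives two layers of protection: knowing another user’s session ID is not enough to view their results.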

Container Registry: Azure Container Registry is used to store container images. In this demo, we containerize both the main web app and the visualization application, vizer. It’s not necessary to use containers, of course; both App Service and Batch can work without them. Containers just make it easier to set up the runtime environments for our demo.

Key Vault: Key Vault is generally used to store secrets and other private information. In this demo, the Batch resource needs a Key Vault: Batch uses it to store certificates and other items it needs for setting up the compute nodes in the pools.

Wrapping up

As we can see, it’s fairly straightforward to set up an interactive visualization portal using Azure and ParaView. For this demo, we tried to keep things simple while still following best practices for public access to resources in the cloud. Of course, for a production deployment one would want to add authentication to the web app, autoscaling for the Batch pool, and logic for resource cleanup and fault tolerance in the web application. One thing we have not covered in this post is how to use Azure’s HPC SKUs, GPUs, and ParaView’s distributed rendering capabilities to process massive datasets. We will explore that and more in subsequent posts.

by Contributed | Dec 5, 2022 | Business, Hybrid Work, Microsoft 365, Technology, Viva Insights, Viva Learning, Work Trend Index


A new Total Economic Impact™ Of Microsoft Viva study by Forrester Consulting, commissioned by Microsoft, details five ways Microsoft Viva can help organizations save time and money while improving business outcomes.

The post 5 ways Microsoft Viva helps businesses save time and money appeared first on Microsoft 365 Blog.


by Contributed | Dec 2, 2022 | Technology


Happy Friday, and welcome back to your MTC Weekly Roundup!

We’ve recovered from the food coma of last week’s holiday and we’re back in the swing of things, so let’s see what’s happening in the Community this week!

MTC Moments of the Week

This week’s MTC Member of the Week spotlight is on @SnowMan55. Like our previous MoW, Glenn is another fresh face in the MTC but has already become a Frequent Contributor in the Excel forum with over a dozen best responses under their belt. We truly appreciate all your help!

Over on the blogs this week, @ShirleyseHaley wrote up a 2-minute recap of everything new with Microsoft Security, Compliance, and Identity, including Microsoft Security Virtual Training Days, which are free and in-depth virtual training events to help professionals of all levels grow their technical skills and gain confidence to navigate what’s ahead.

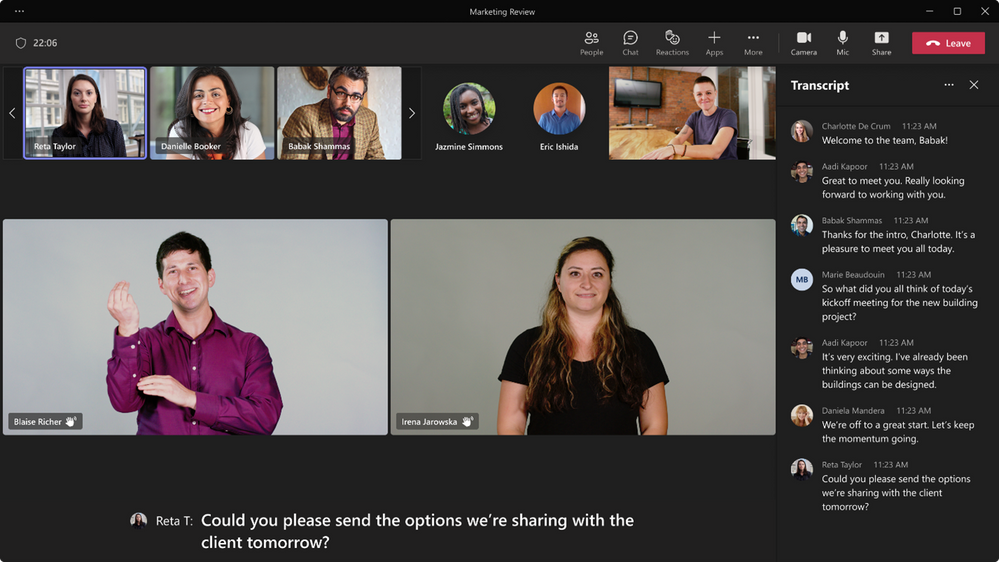

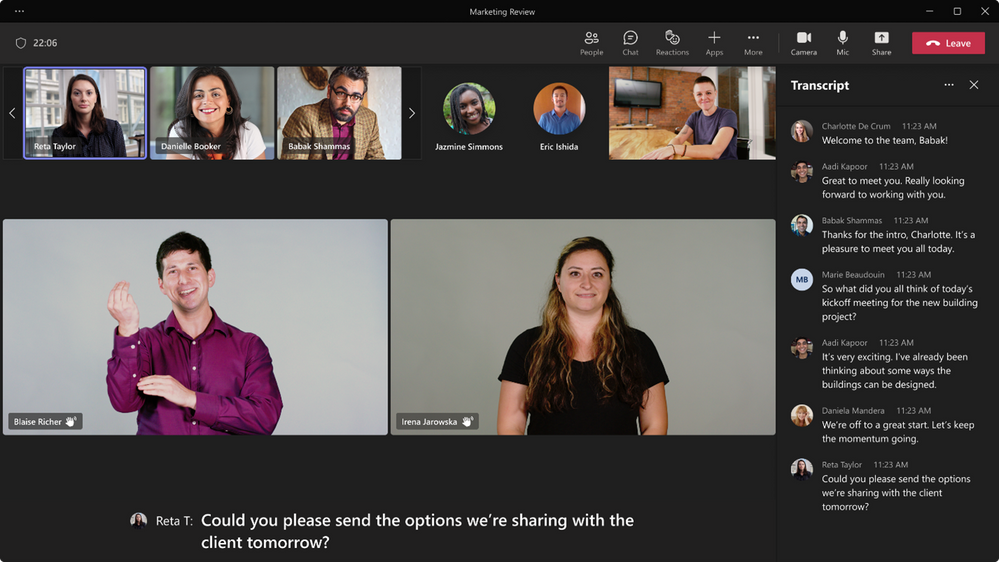

We are on a short break from Community events but mark your calendars for our next Ask Microsoft Anything (AMA) on Tuesday, December 13 at 09:00 am PST, when we’ll have experts from the Microsoft Teams team on hand to answer questions about the new Sign Language View in Microsoft Teams Meetings!

Unanswered Questions – Can you help them out?

Every week, users come to the MTC seeking guidance or technical support for their Microsoft solutions, and we want to help highlight a few of these each week in the hopes of getting these questions answered by our amazing community!

In the Teams forum, @adi_km is facing an issue in the Teams desktop application (not the web version) where users’ messages get stuck in the sending state and are never delivered.

Meanwhile, in the SharePoint forum, @MattS1978 is looking for help using VLOOKUP between an Excel spreadsheet that already contains a VLOOKUP and a Microsoft List for their retail stores.

————————-

For this week’s fun fact: on this day in 1983, the iconic music video epic for Michael Jackson’s “Thriller” premiered on MTV with a run time of almost 14 minutes – a game changer!

And with that, I hope you all have a wonderful weekend!

by Contributed | Dec 2, 2022 | Technology


Welcome to Azure Data Factory’s November monthly update! Here we’ll share the latest updates on What’s New in Azure Data Factory. You can find all our updates here.

We’ll also be hosting our monthly livestream next week on December 15th at 9:00am PST/ 12:00pm EST! Join us to see some live demos and to ask us your ADF questions!

Join the livestream here.

Table of Contents

Continuous integration and continuous delivery (CI/CD)

Data flow

Developer Productivity

Continuous integration and continuous delivery (CI/CD)

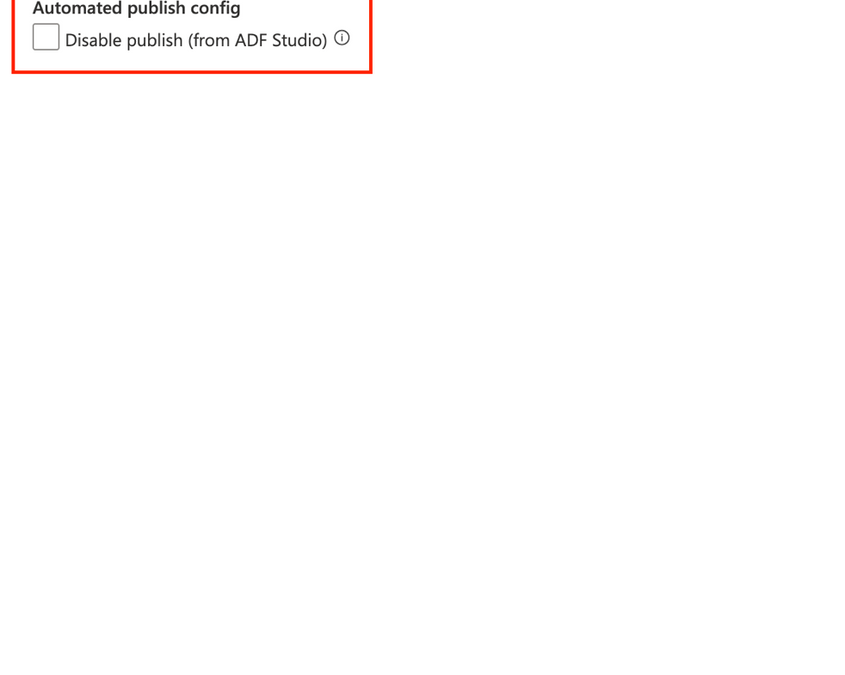

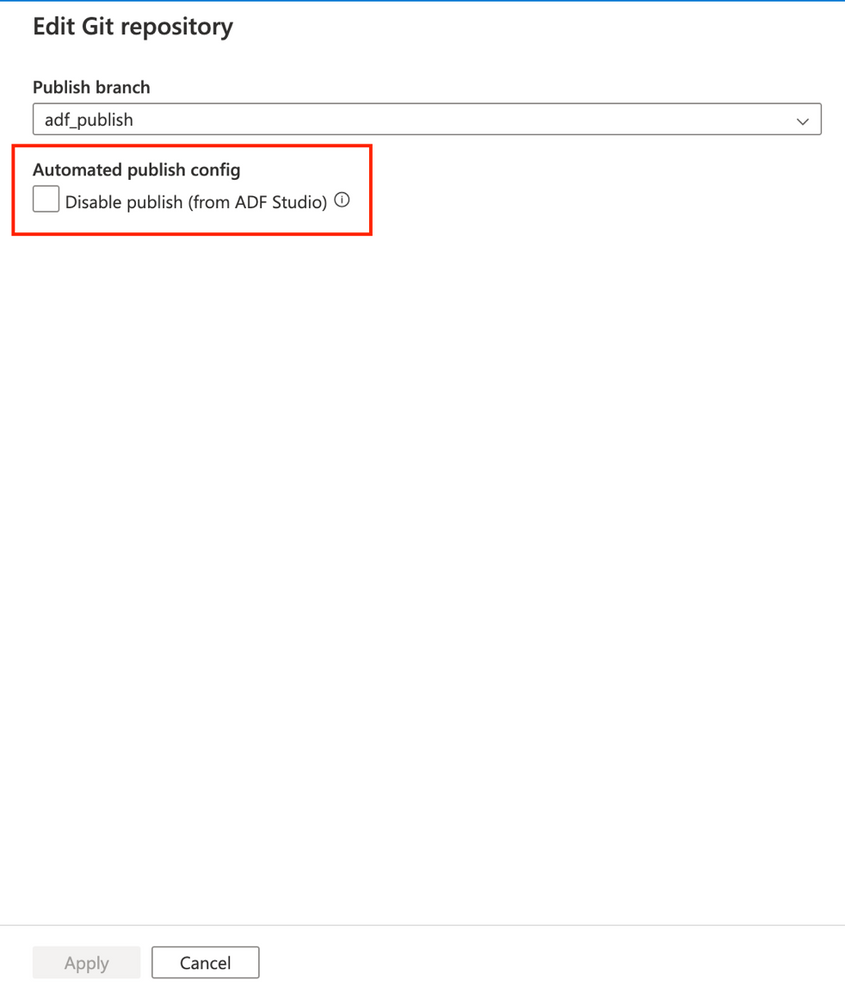

Disable publish button in ADF studio now available

We have added the ability to disable the Publish button in ADF Studio when source control is enabled. Once this setting is enabled, the Publish button is grayed out in ADF Studio, which helps avoid overwriting the last automated publish deployment.

To learn more about this update, read Source control – Azure Data Factory | Microsoft Learn.

Data flow

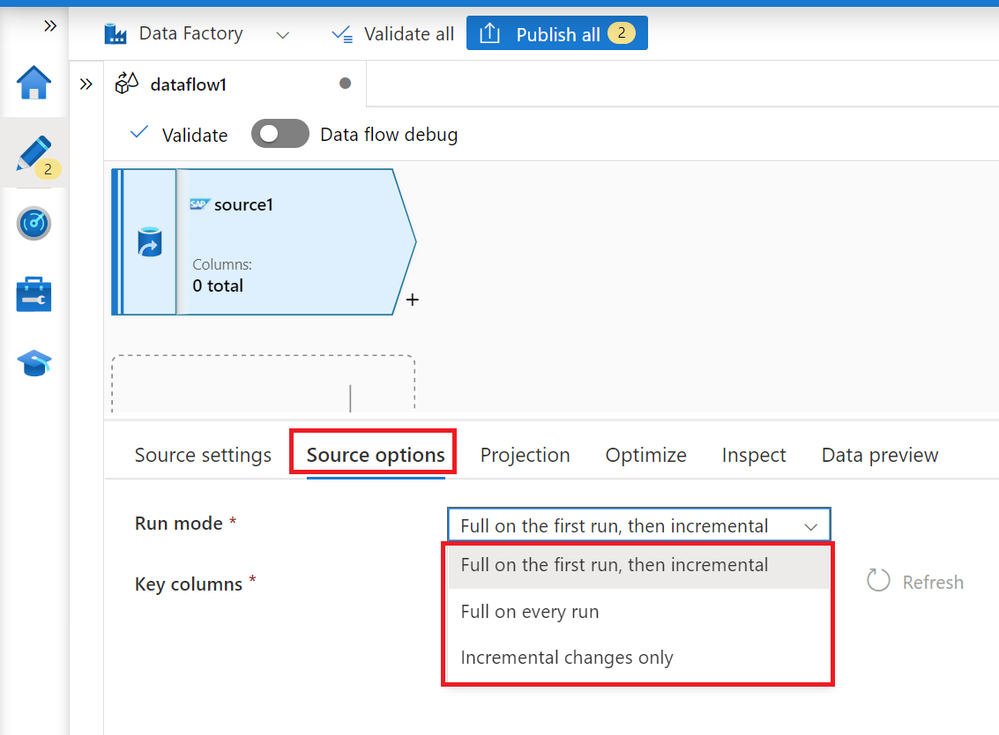

New improvements to SAP Change Data Capture (CDC)

We’ve made several updates to the SAP CDC connector in mapping data flows, adding new capabilities:

Incremental only is now available

You can now get only the changes from an SAP system, without an initial full load. This gives you the flexibility to focus on incremental data processing without worrying about moving a large volume of data in an initial full load.

Performance improvements have been made – source partitions in initial full data load

With a simple radio button, you can now connect to your SAP system with multiple sessions in parallel to extract data, which greatly reduces the time needed to perform an initial full data load from your SAP system.

Pipeline template added to Template Gallery

There is a new pipeline template in the Template Gallery to help you load large numbers of SAP objects faster, using a parameterized pipeline with SAP CDC enabled in ADF. You can get more information on the template here.

To learn more about this, read Transform data from an SAP ODP source with the SAP CDC connector in Azure Data Factory or Azure Synapse Analytics – Azure Data Factory & Azure Synapse | Microsoft Learn.

Developer Productivity

Pipeline designer enhancements added to ADF Studio preview experience

We have added three new UI updates to the ADF Studio preview experience:

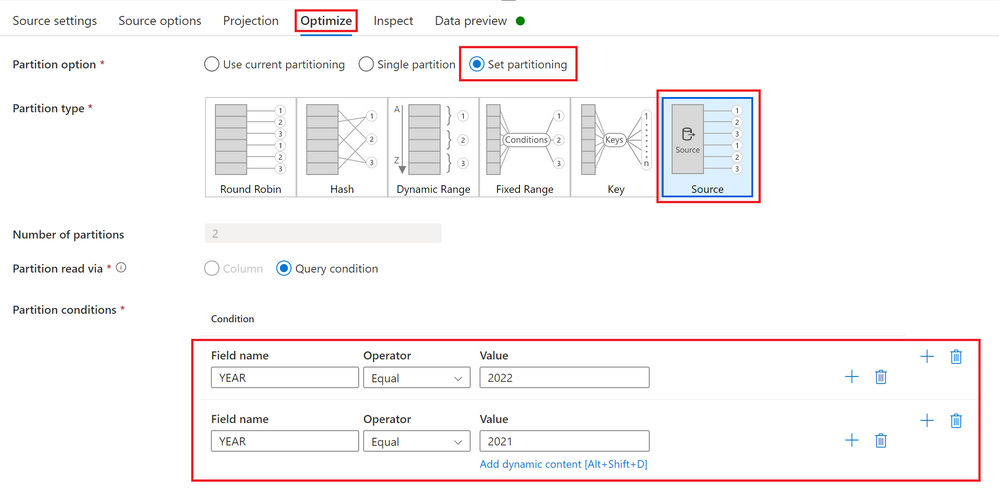

Dynamic Content Flyout

A new dynamic content flyout has been added to make it easier to set dynamic content in your pipeline activities without having to use the expression builder.

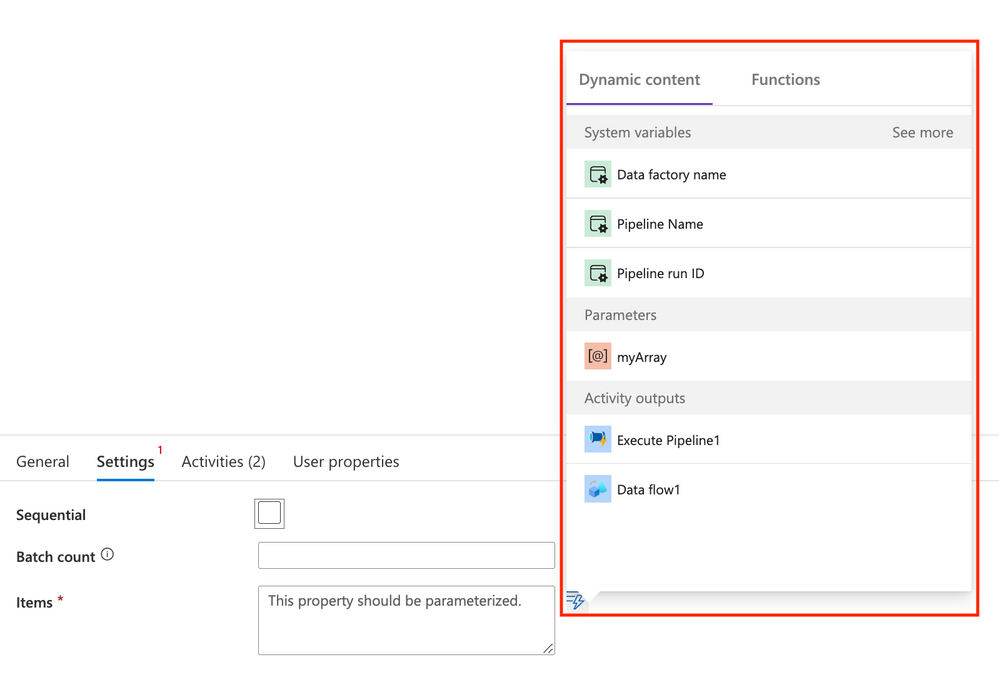

Error Messaging

Error messages have been relocated to the Status column in Debug and Pipeline monitoring. This will make it easier to view errors whenever a pipeline run fails.

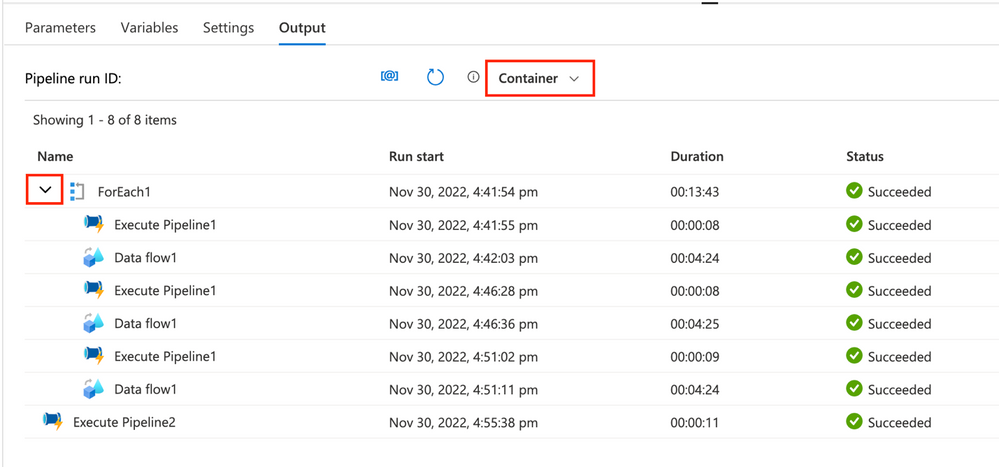

Container view for monitoring

A new container view is available in the monitoring experience. This view provides a more consolidated view of the activities that were run. The biggest change you will see is that nested activities are now grouped under their parent activity. To see more activities on one page, you can choose to hide the list of nested activities under the parent activity.

To learn more about these enhancements, read Managing Azure Data Factory studio preview experience – Azure Data Factory | Microsoft Learn.

We hope that you found this helpful! Let us know in the comments if there’s anything else you’d like to see in our blogs or livestreams. We love hearing your feedback!

by Contributed | Dec 2, 2022 | Technology


Purpose

The purpose of this post is to walk through the process of creating an event driven alerting mechanism for Azure Policy compliance.

Assumptions

General knowledge of Azure, PowerShell, and resource creation, both in the portal and with command-line interfaces.

Challenge

Many organizations use Azure Policy to track, measure, maintain, and enforce regulatory policy compliance. These regulatory compliance initiatives could be assigned standard baseline initiatives, or custom initiatives created just for that particular organization. Regardless of the initiative type, organizations care not only about being compliant with an initiative but also about knowing when a policy state changes. A common question we hear is “How can I be alerted when my policy compliance state changes?”. For organizations that would rather automate this than check manually, this article describes an alerting mechanism that notifies you which policy changed, when it changed, and through the notification method of your choice.

In Azure, there are often multiple ways to accomplish the same objective, and Azure Policy is no different. These methods fall into two categories: time driven and event driven. Time-driven methods query or retrieve data from a source on a schedule and then evaluate the results to decide whether to trigger a response. While this approach works, it is complex and inefficient, and it introduces a delay between the time a policy event occurs and the time you get an alert. Event-driven methods, in contrast, trigger a response to a policy event as soon as it happens. This event-driven approach is the focus of this post.

Querying the Azure Activity log has been one way that administrators have retrieved Azure Policy events. However, the Activity log does not provide the level of detail required for Azure Policy, especially with regard to regulatory compliance. Admins want to know when a regulatory compliance policy is no longer compliant, but because the Activity log covers all Azure activity, it does not provide specifics about Azure Policy event changes. This event-driven solution walks you through capturing rich Azure Policy event details, such as regulatory compliance changes.

Solution

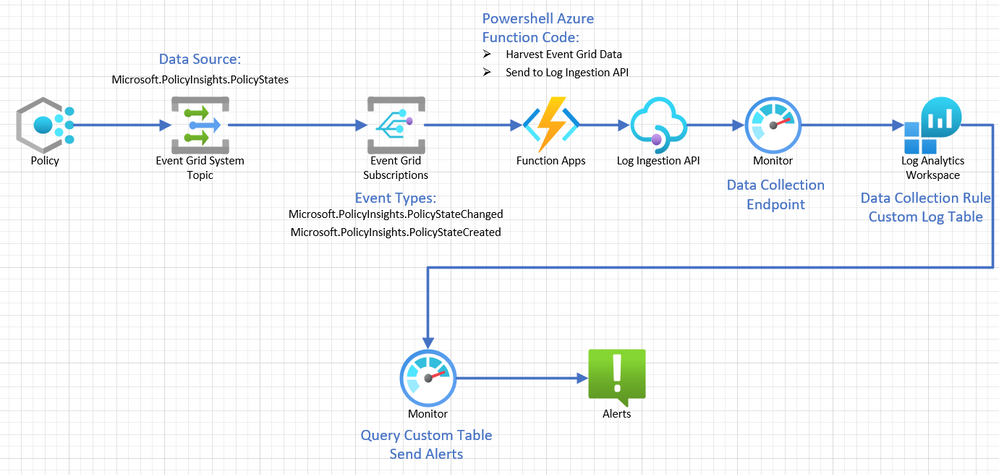

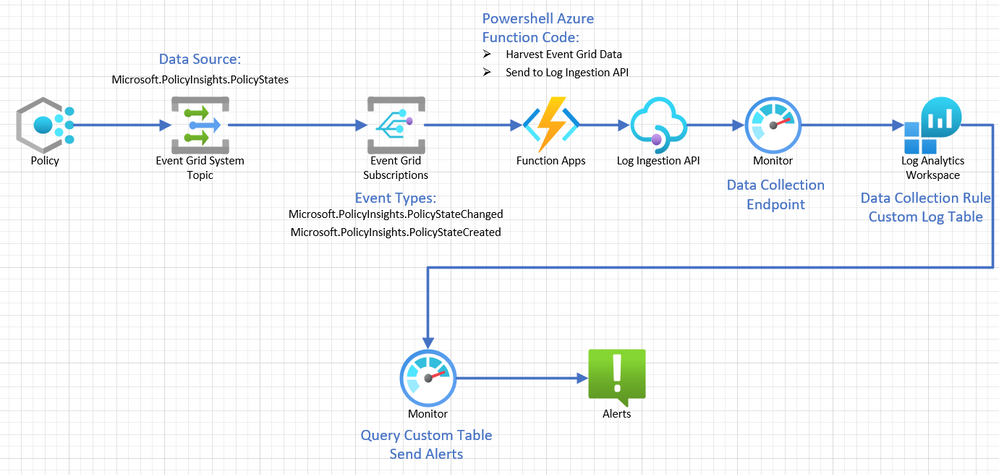

The first step is to determine which data source to capture for Azure Policy regulatory compliance details. The preferred data source for policy-specific events is PolicyInsights event data, which comes in three event types: Policy Compliance State Created, Policy Compliance State Changed, and Policy Compliance State Deleted. The next question is: how do I capture this PolicyInsights event data and then create alerts so that I am notified when certain policy events happen, such as a compliance change? The following architecture answers that question.
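For reference, here is a hedged Python sketch of how one PolicyInsights event, delivered in the Event Grid schema, can be flattened into a log record. The event type names and the `data` fields (`complianceState`, `policyAssignmentId`, `policyDefinitionId`, `subscriptionId`) follow the Event Grid schema for Azure Policy events as we understand it; the output column names (`EventTime`, `ResourceId`, and so on) are hypothetical and must match whatever schema you define for your custom table. The article’s function performs this step in PowerShell.

```python
# Full Event Grid event type names for PolicyInsights, mapped to short labels.
POLICY_EVENT_TYPES = {
    "Microsoft.PolicyInsights.PolicyStateCreated": "Created",
    "Microsoft.PolicyInsights.PolicyStateChanged": "Changed",
    "Microsoft.PolicyInsights.PolicyStateDeleted": "Deleted",
}


def to_record(event: dict) -> dict:
    """Flatten one Event Grid-schema PolicyInsights event into a log record.

    Output column names are hypothetical; align them with your table schema.
    """
    kind = POLICY_EVENT_TYPES.get(event.get("eventType", ""))
    if kind is None:
        raise ValueError(f"not a PolicyInsights event: {event.get('eventType')!r}")
    data = event.get("data", {})
    return {
        "EventTime": event["eventTime"],
        "EventType": kind,
        "ResourceId": event["subject"],  # the evaluated resource's ID
        "ComplianceState": data.get("complianceState"),
        "PolicyAssignmentId": data.get("policyAssignmentId"),
        "PolicyDefinitionId": data.get("policyDefinitionId"),
        "SubscriptionId": data.get("subscriptionId"),
    }
```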

- Azure Policy: The first step in this process is Azure Policy. Policies are assigned and those policies have compliance states.

- Event Grid: When an Azure Policy compliance state changes, the Event Grid will pick it up because it is “listening” for PolicyInsights events.

- Event Grid Subscription: An Event Grid Subscription will be configured to send those captured events to an Azure Function.

- Azure Function: An Azure Function will be using PowerShell code to harvest the incoming policy event data and use the Log Ingestion API to send it to the Log Analytics Workspace through a Data Collection Endpoint and Data Collection Rule.

- Log Ingestion API: The Log Ingestion API will be used to send this data through the Data Collection Endpoint and Data Collection Rule to the Log Analytics Workspace.

- Log Analytics Workspace: A Log Analytics Workspace will be configured with a Custom Table created to receive the data coming from the Data Collection Endpoint.

- Monitor: Azure Monitor will be used to run queries on the Custom Table to indicate when an alert should be triggered.

- Alert: An alert will be configured to be triggered when the Custom Table query indicates something is out of compliance.
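The alert itself is a log query in Azure Monitor. As a language-neutral illustration of the logic such a query typically expresses, this Python sketch keeps the latest compliance state per resource and policy assignment within a lookback window and reports the pairs that are currently NonCompliant. The record fields are hypothetical and assume one record per policy event.

```python
from datetime import datetime, timedelta, timezone


def noncompliant_resources(records: list[dict], now: datetime,
                           window: timedelta) -> set[tuple[str, str]]:
    """Return (resource, assignment) pairs whose latest state in the window is NonCompliant."""
    latest: dict[tuple[str, str], tuple[datetime, str]] = {}
    for rec in records:
        ts = datetime.fromisoformat(rec["EventTime"].replace("Z", "+00:00"))
        if now - ts > window:
            continue  # outside the alert rule's lookback window
        key = (rec["ResourceId"], rec["PolicyAssignmentId"])
        # Keep only the most recent state for each resource/assignment pair.
        if key not in latest or ts > latest[key][0]:
            latest[key] = (ts, rec["ComplianceState"])
    return {key for key, (_, state) in latest.items() if state == "NonCompliant"}


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    print(noncompliant_resources([], now, timedelta(hours=1)))
```

Looking at the latest state, rather than alerting on every NonCompliant event, avoids firing for a resource that was remediated within the same window.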

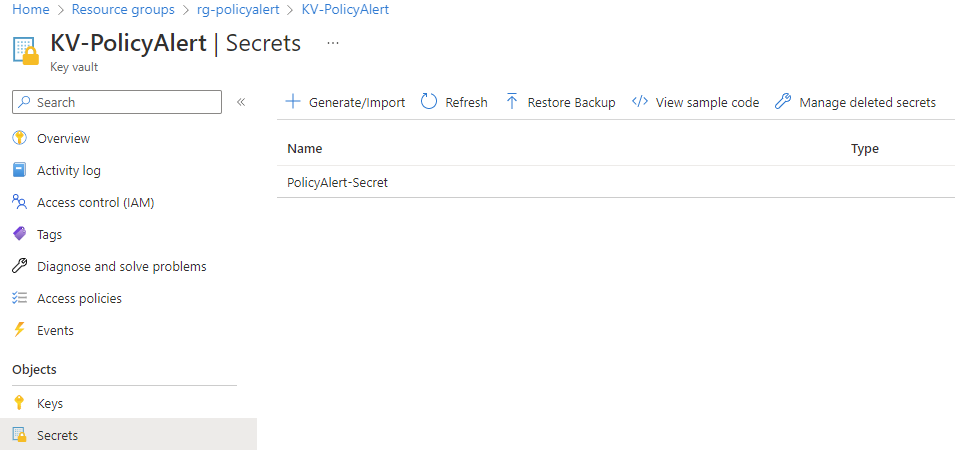

Key Vault

In this reference architecture, we will use a Key Vault to store a secret value that gets dynamically retrieved by the PowerShell code in the Azure Function. The purpose of this process is to maintain proper security posture and provide a secure way to store and retrieve this sensitive data. An existing Key Vault may be used, or you may elect to create a new one for this purpose. Rather than walk through the creation of a new Key Vault, we will just be covering the specific configuration items that are needed. Key Vault deployment docs can be found here ( https://learn.microsoft.com/en-us/azure/key-vault/general/quick-create-portal).

- Secrets: The Key Vault will be used to store the client secret of the AAD App Registration (created in the AAD App Registration section below). This is the secret value you saved in your reminders notepad. Go to your Key Vault and click “Secrets”.

- Now click “Generate/Import” on the top menu bar to create a new secret.

- Set Upload options to Manual. Assign a “Name” and “Secret value”. We used “PolicyAlert-Secret” as the name and entered the AAD App Registration secret as the value. Leave it enabled, then click “Create”.

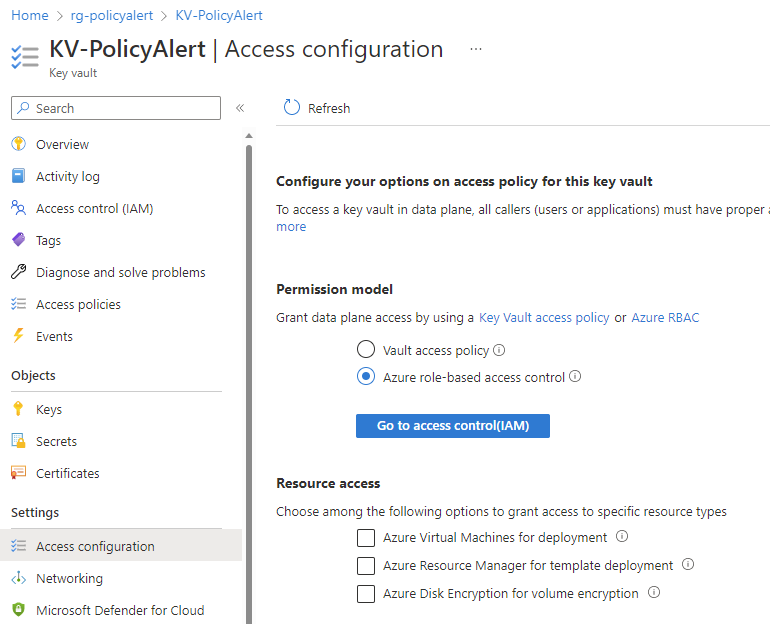

- Access Configuration: The Key Vault will need to have the Access Configuration set to Azure role-based access control. Click to apply the Azure role-based access control permission model. This model is required so that you can set up specific access for the Azure Function managed identity in a later step.

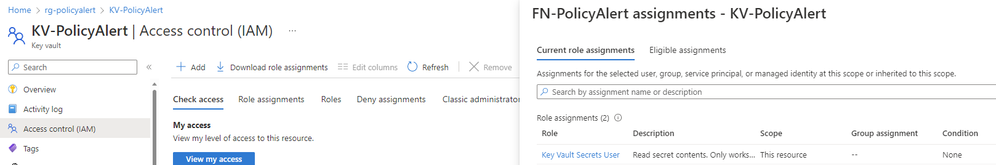

- Access Control: You will need to assign the “Key Vault Secrets User” role to the Azure Function managed identity. If you have not yet created that managed identity, you can come back later to do it or do it in the Azure Function section of the portal.
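The article’s function reads this secret from PowerShell. As a hedged sketch of the underlying REST calls, here is the same flow in Python using only the standard library: request a token for `https://vault.azure.net` from the managed identity endpoint that App Service and Functions expose through the `IDENTITY_ENDPOINT` and `IDENTITY_HEADER` environment variables, then call the Key Vault get-secret API. The API versions shown (`2019-08-01` and `7.4`) and the vault name in the usage are assumptions; verify them against current documentation.

```python
import json
import os
import urllib.parse
import urllib.request

IDENTITY_API_VERSION = "2019-08-01"  # assumed managed identity endpoint version
KEYVAULT_API_VERSION = "7.4"         # assumed Key Vault REST API version


def token_request(resource: str = "https://vault.azure.net") -> urllib.request.Request:
    """Build the managed identity token request available inside App Service/Functions."""
    query = urllib.parse.urlencode({"resource": resource, "api-version": IDENTITY_API_VERSION})
    return urllib.request.Request(
        f"{os.environ['IDENTITY_ENDPOINT']}?{query}",
        headers={"X-IDENTITY-HEADER": os.environ["IDENTITY_HEADER"]},
    )


def secret_request(vault_name: str, secret_name: str, token: str) -> urllib.request.Request:
    """Build a Key Vault 'get secret' request for the latest version of a secret."""
    url = (f"https://{vault_name}.vault.azure.net/secrets/{urllib.parse.quote(secret_name)}"
           f"?api-version={KEYVAULT_API_VERSION}")
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def get_secret(vault_name: str, secret_name: str) -> str:
    """Fetch a secret using the Function App's system-assigned managed identity."""
    with urllib.request.urlopen(token_request()) as resp:
        token = json.load(resp)["access_token"]
    with urllib.request.urlopen(secret_request(vault_name, secret_name, token)) as resp:
        return json.load(resp)["value"]
```

Usage inside the function would look like `get_secret("<your-vault-name>", "PolicyAlert-Secret")`; the call succeeds only after the managed identity has the Key Vault Secrets User role.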

Event Grid System Topic

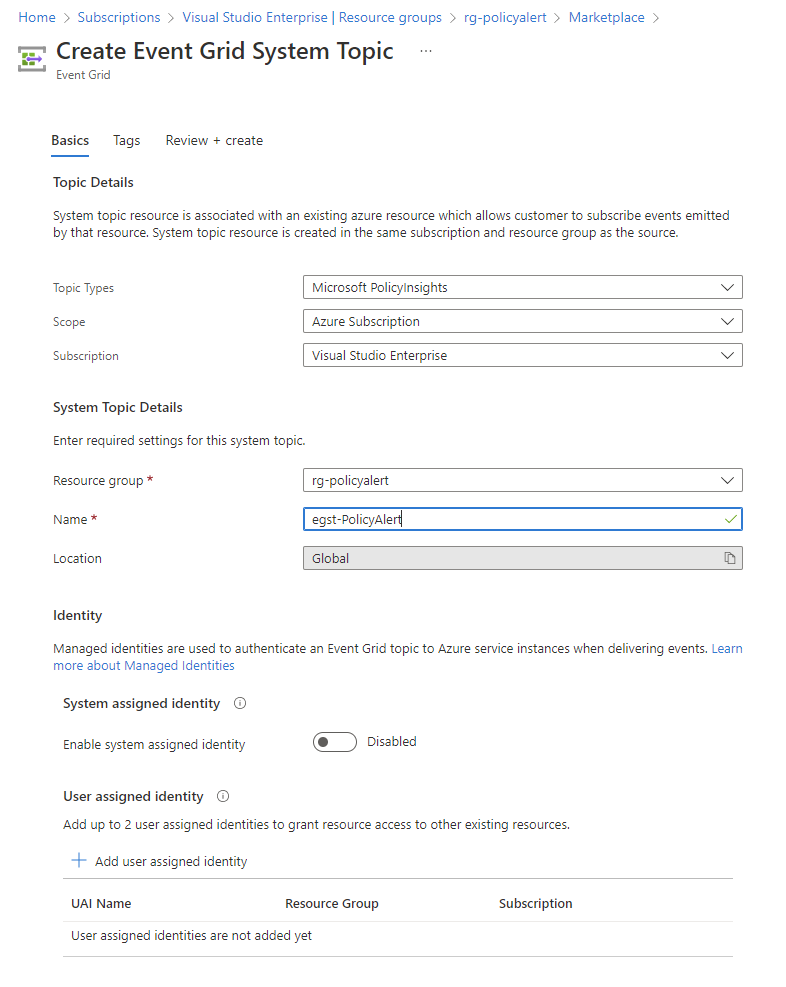

The Event Grid System Topic will capture the PolicyInsights data so it can be sent to a Log Analytics Workspace.

- Type Event Grid in the Global Azure search and select it.

- Under Azure service events, select System topics.

- Create New and use these Basic Settings:

- Change the Topic Types to Microsoft PolicyInsights

- Ensure that the scope is set to Azure Subscription

- Ensure that the appropriate Subscription name has been selected.

- Select the appropriate Resource Group

- Give the SystemTopic an appropriate name such as egst-PolicyAlert

- Tags as needed

- Review and create.

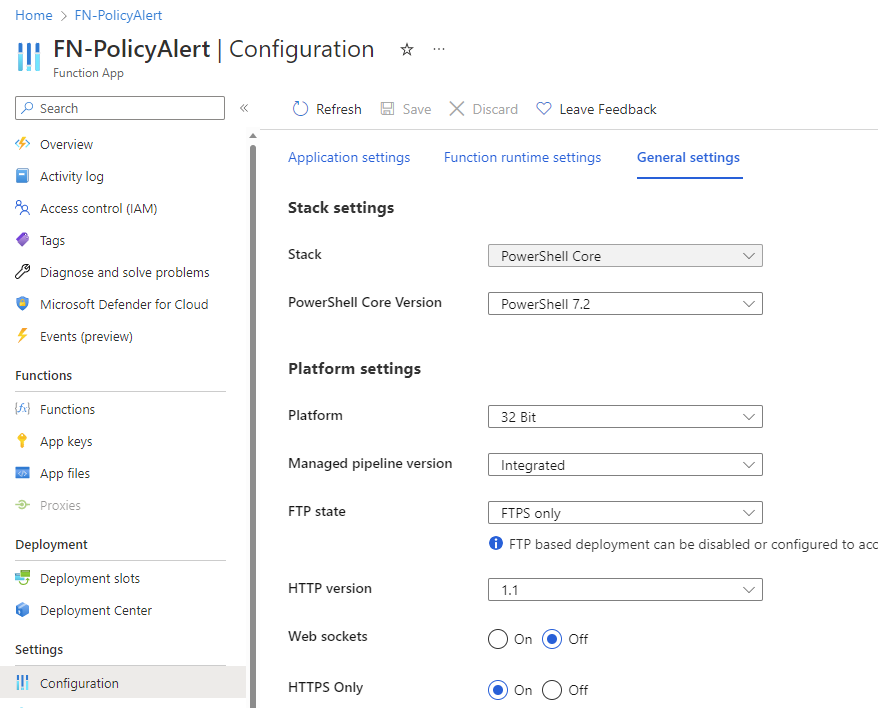

Function App

The Function App will be used to harvest the PolicyInsights data from Event Grid and then write it to a Log Analytics Workspace.

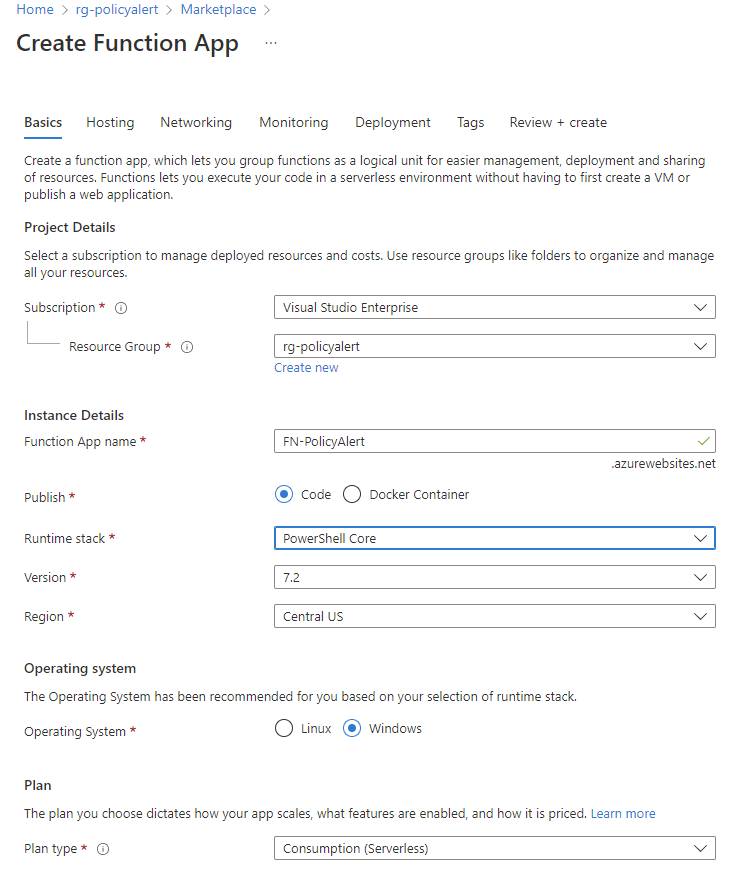

- Basics:

- Go to Azure Global Search and type/select Function App

- Click Create

- Select Resource Group where Event Grid resides

- Give the Function App a name that follows your naming convention and makes sense, for example, FN-PolicyAlert or FA-PolicyAlert

- Publish: Code

- Runtime Stack: PowerShell Core

- Version: 7.2 (newest available)

- Region: Select the region where you are working and where the Event Grid resource is located

- Operating System: Windows

- Plan type: Consumption (Serverless)

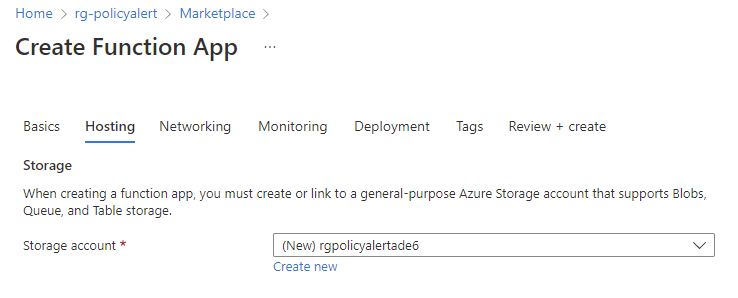

- Hosting: Select a storage account that you already have or accept the storage account that is created automatically.

- Networking: Leave network injection off

- Monitoring: Enable Application Insights; accept the newly created Application Insights resource.

- Deployment: Accept the defaults.

- Tags: As needed

- Review and Create

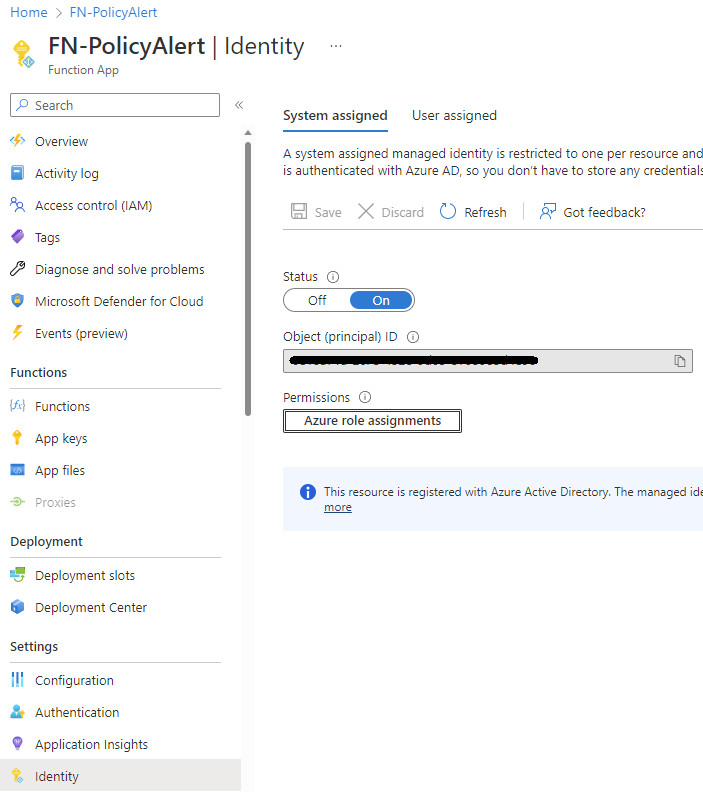

- Identity: Once the Function App is created, you need to configure the Managed Identity.

- After updating the slider to enable system assigned managed identity, the following pop up will occur. Click Yes to enable.

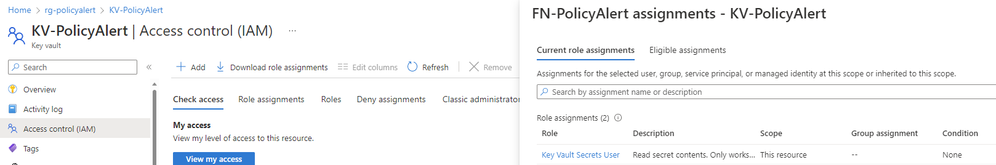

- As outlined earlier, assign the “Key Vault Secrets User” role to the Azure Function managed identity. Click Azure role assignments, then + Add role assignment (preview). Set the scope to Key Vault, select the subscription you are working in, select the Key Vault to which you want to grant the managed identity access as the resource, and choose Key Vault Secrets User as the role.

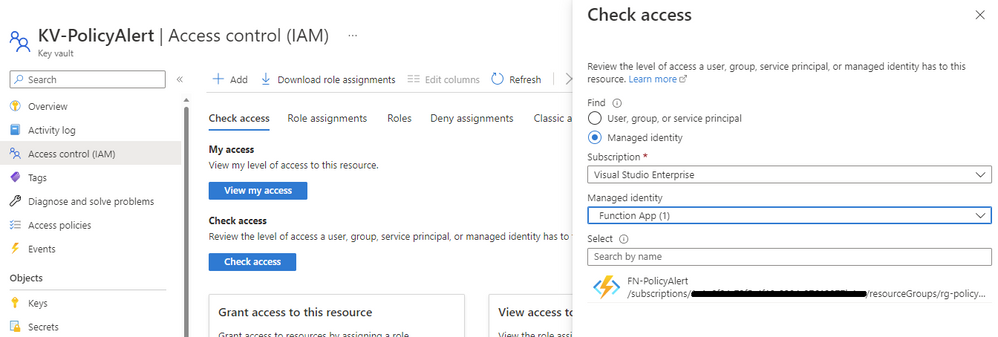

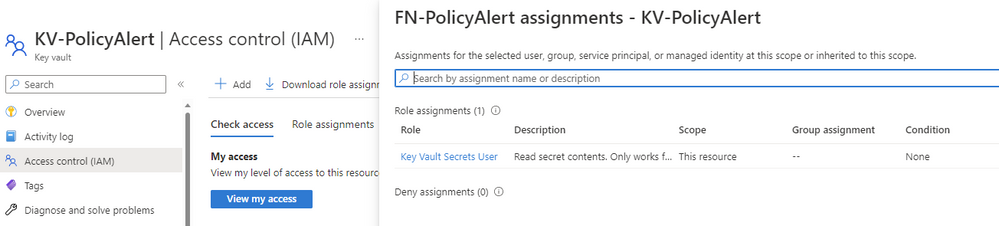

- Check permissions on the Key Vault. Go to the Key Vault, select Access Control (IAM), and click Check Access. For Find, select Managed Identity; make sure the correct subscription is selected, choose Function App as the managed identity type, and then select the Function App you created for this solution.

- You should see the role of Key Vault Secrets User

Function

The Function inside of the Function App will run code to format the Event Grid data and write it to Log Analytics.

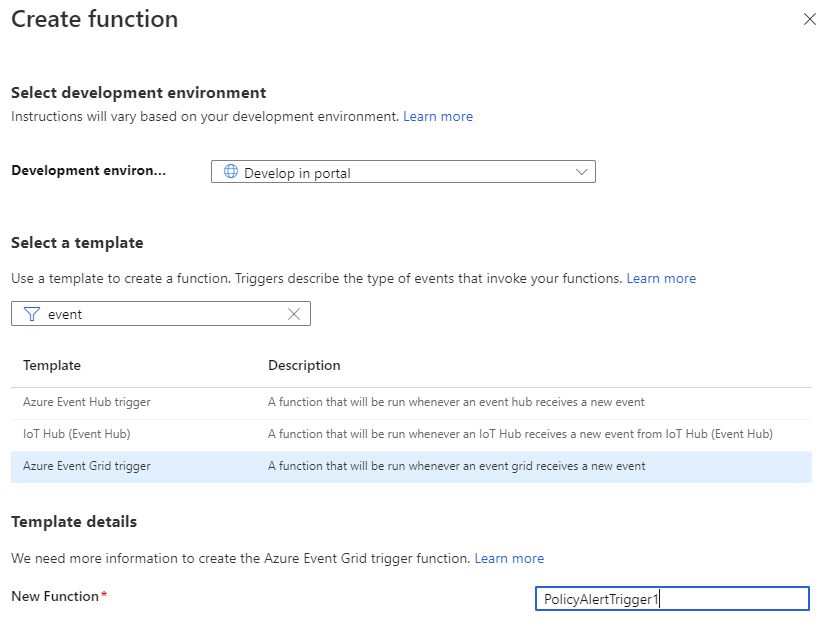

- Create the Function (inside of the Function App).

- Go to the Function App that you just created.

- From the left hand menu, in the Functions sub menu, click on Functions.

- Click Create. A flyout menu will pop up on the right hand side of the screen.

- Leave the development environment as Develop in portal.

- The next section is Select a Template. In the search bar, type “Azure Event Grid Trigger” and select it. You can accept the default name or change it to something that fits the solution and your naming convention; in this case, we used “PolicyAlertTrigger1”.

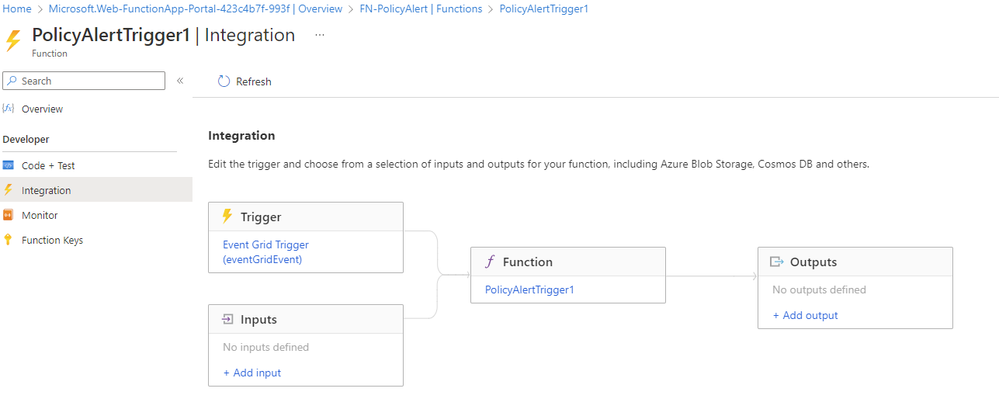

- Once the Function is created, select Integration to view the contents.

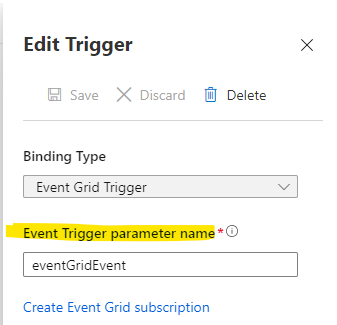

- Click to open the “Trigger” (eventGridEvent in this example).

- Please note the “Event Trigger Parameter Name“. This can be customized but it must match in the PowerShell code for the function. In this example, we will use the default value of “eventGridEvent“.

- Next, click “Create Event Grid Subscription“.

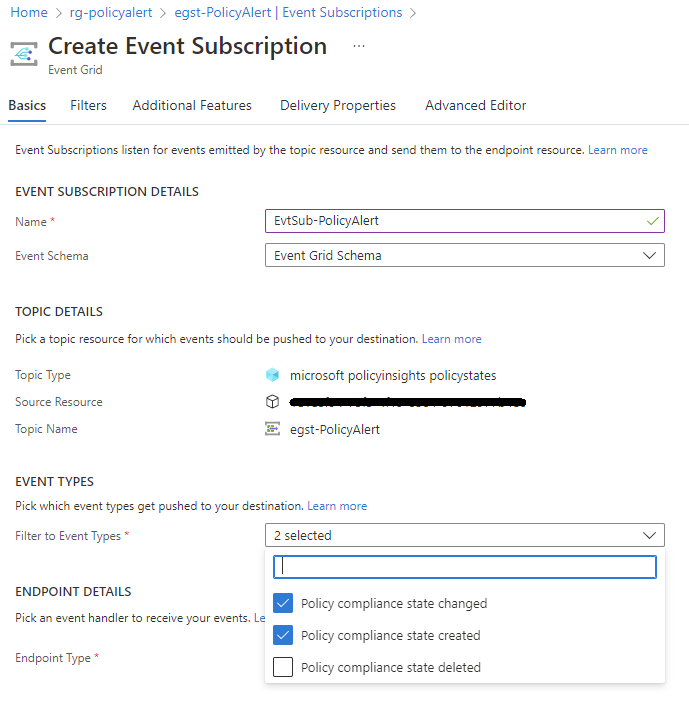

- On the “Create Event Subscription” part of the portal, use the following options/values.

- Name: EvtSub-PolicyAlert (this is customizable but should follow your naming conventions)

- Event Schema: Event Grid Schema

- Topic Types: Search for “policy” and select Microsoft PolicyInsights.

- Source Resource: Azure Subscription *(might be autofilled)

- System Topic Name: egst-PolicyAlert (or the name you created) *(might be autofilled)

- Event Types: Policy Compliance State Created, Policy Compliance State Changed

- Endpoint Type: Azure Function * (might be autofilled)

- Endpoint: PolicyAlertTrigger1 (Your Azure Function created in the previous step) * (might be autofilled)
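Because the Event Trigger Parameter Name must match the PowerShell code, a small consistency check can catch renames early. This hypothetical Python helper reads the function’s `function.json` and the `param(...)` block of its `run.ps1`, and confirms that the `eventGridTrigger` binding name is declared as a script parameter. It assumes the default layout of a PowerShell function created in the portal and a simple `param(...)` block without attributes that contain parentheses.

```python
import json
import re

# Matches a simple param(...) block; attributes like [Parameter(...)] are not handled.
_PARAM_BLOCK = re.compile(r"\bparam\s*\(([^)]*)\)", re.IGNORECASE)


def trigger_binding_name(function_json: str) -> str:
    """Return the `name` of the eventGridTrigger binding in function.json."""
    for binding in json.loads(function_json).get("bindings", []):
        if binding.get("type") == "eventGridTrigger":
            return binding["name"]
    raise ValueError("no eventGridTrigger binding found")


def script_params(run_ps1: str) -> list[str]:
    """Return the parameter names declared in the script's param(...) block."""
    match = _PARAM_BLOCK.search(run_ps1)
    return re.findall(r"\$(\w+)", match.group(1)) if match else []


def binding_matches(function_json: str, run_ps1: str) -> bool:
    """True if the trigger binding name is declared as a parameter in run.ps1."""
    name = trigger_binding_name(function_json).lower()
    # PowerShell variable names are case-insensitive.
    return name in (p.lower() for p in script_params(run_ps1))
```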

AAD App Registration

An AAD App registration is needed for the Log Ingestion API, which is used to write the data to Log Analytics.

For additional information about log ingestion, see https://learn.microsoft.com/en-us/azure/azure-monitor/logs/tutorial-logs-ingestion-portal.

- In the Azure global search, type Azure Active Directory.

- Once in your AAD tenant, under the Manage sub menu, click “App Registrations“.

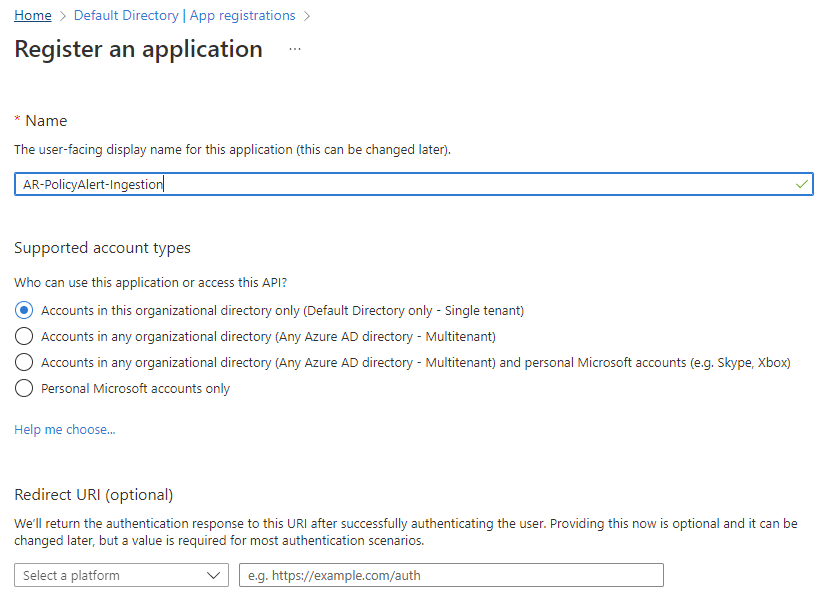

- Click +New Registration and use the following settings:

- Name: AR-PolicyAlert-Ingestion

- Supported account types: Accounts in this organizational directory only

- Click Register

- Once you create the new registration, click to open it. Be sure to save the Tenant ID and Application (client) ID to your “reminders” text document (like Notepad) so you can copy and paste them in a later step. In the screenshot below, the values are blanked out, but the required IDs are highlighted.

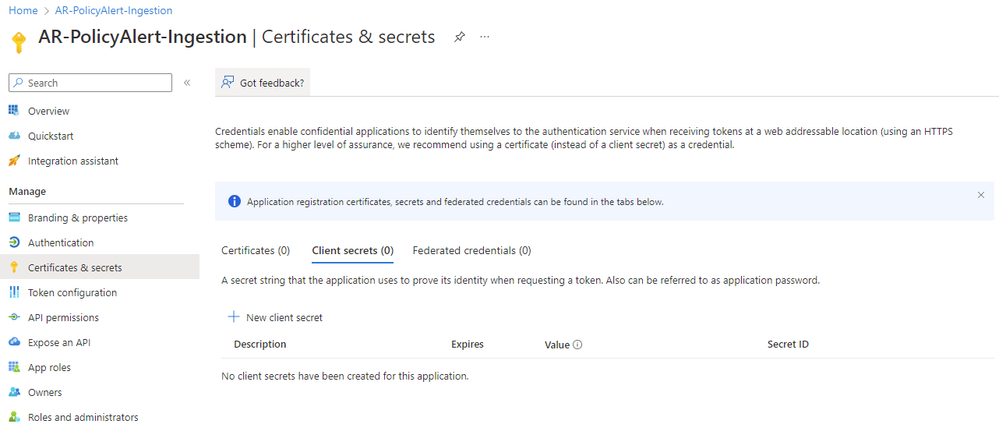

- When inside of your new registration, click “Certificates and Secrets” and then go to “Client Secrets“.

- Create a new client secret. Put the name you want to use in the “Description” and add this to your reminders notepad. Ensure that you have the correct expiration for the secret according to your organization. Click Add.

- Once the new secret is created, immediately copy the secret value and add it to the reminders notepad. The value is displayed only right after creation; once you leave the page, you cannot retrieve it again.
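The Tenant ID, App ID and client secret saved above are what the function uses to obtain a token for the Log Ingestion API. As a hedged Python sketch of that exchange (the article does it in PowerShell), this builds a standard OAuth 2.0 client-credentials request to the Microsoft identity platform with the `https://monitor.azure.com//.default` scope used by the Logs Ingestion API. The helper names are hypothetical; in the solution, the secret would be read from Key Vault rather than hard-coded.

```python
import json
import urllib.parse
import urllib.request

# Scope for the Logs Ingestion API; the double slash is intentional.
MONITOR_SCOPE = "https://monitor.azure.com//.default"


def ingestion_token_request(tenant_id: str, client_id: str,
                            client_secret: str) -> urllib.request.Request:
    """Build a client-credentials token request for the app registration."""
    body = urllib.parse.urlencode({
        "client_id": client_id,
        "client_secret": client_secret,
        "grant_type": "client_credentials",
        "scope": MONITOR_SCOPE,
    }).encode()
    return urllib.request.Request(
        f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token",
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def fetch_token(req: urllib.request.Request) -> str:
    """Send the token request and return the bearer token."""
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["access_token"]
```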

Data Collection Endpoint

The Data Collection Endpoint is part of the Log Ingestion setup for writing the PolicyInsights data to Log Analytics.

- In the Azure global search, type Monitor. Select Azure Monitor to open it in the portal.

- On the left menu bar, under the Settings submenu, click “Data Collection Endpoints“.

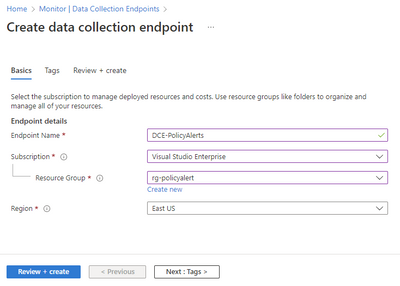

- Click + Create to create a new Data Collection Endpoint (DCE)

- Name the DCE, in this case, DCE-PolicyAlerts

- Ensure that the correct subscription is selected

- Ensure that the correct resource group is selected

- Ensure that the correct region is selected

- Add any appropriate tags.

- Review and Create
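Once the endpoint exists, the function sends records to it. The following Python sketch builds a Logs Ingestion API upload: a POST of a JSON array to `<DCE endpoint>/dataCollectionRules/<DCR immutable ID>/streams/<stream name>`. The DCR immutable ID comes from the Data Collection Rule created in a later step, and the stream name for a custom table is typically `Custom-` followed by the table name (for example, `Custom-PolicyAlert_CL`). The API version and the endpoint values in the usage are assumptions.

```python
import json
import urllib.parse
import urllib.request

LOGS_INGESTION_API_VERSION = "2023-01-01"  # assumed; earlier tutorials used a preview version


def ingestion_request(dce_endpoint: str, dcr_immutable_id: str, stream: str,
                      records: list[dict], token: str) -> urllib.request.Request:
    """Build a Logs Ingestion API upload for a batch of records."""
    url = (f"{dce_endpoint.rstrip('/')}/dataCollectionRules/{dcr_immutable_id}"
           f"/streams/{urllib.parse.quote(stream)}?api-version={LOGS_INGESTION_API_VERSION}")
    return urllib.request.Request(
        url,
        data=json.dumps(records).encode("utf-8"),  # the body is a JSON array of records
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Sending records as a batch in one array, rather than one call per event, keeps the number of requests down when many policy states change at once.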

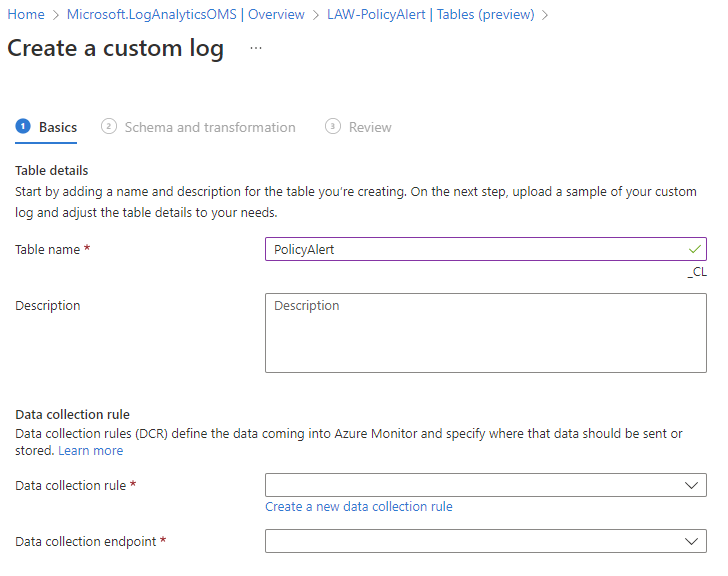

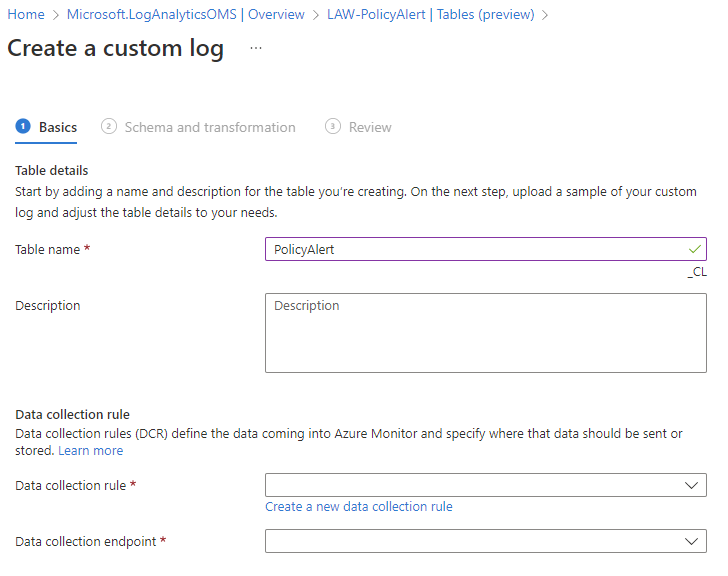

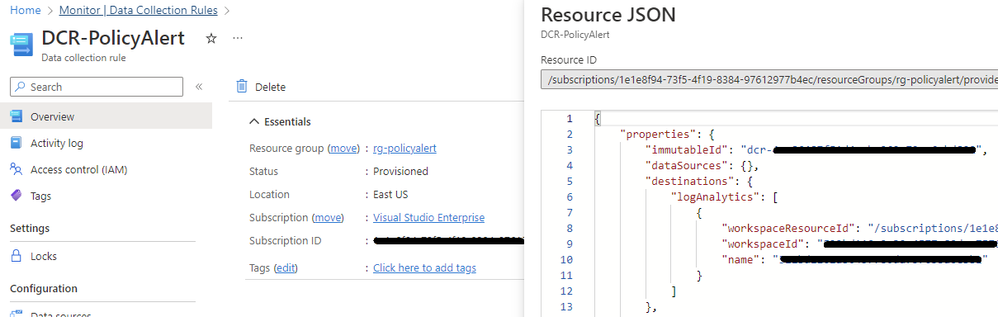

Log Analytics Workspace Custom Table and Data Collection Rule

These steps show how to create a custom data table in the Log Analytics Workspace and create a Data Collection Rule.

- Go to the Azure global search bar and type in Log Analytics Workspaces (LAW)

- If you do not have a Log Analytics workspace yet, please create one before moving on to the next step.

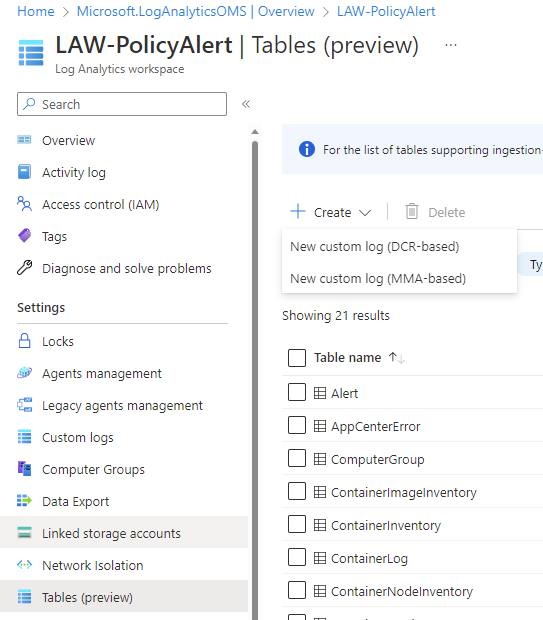

- Open the LAW, and from the left-hand menu, under Settings, select Tables.

- Select +Create, and then select New custom log (DCR-based)

- On the Create page, name the table; in this case, the table is called PolicyAlert

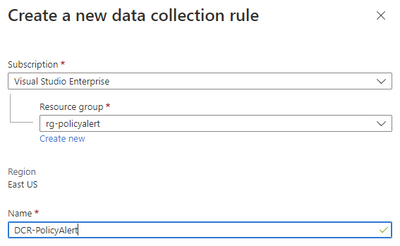

- Next, click “Create Data Collection Rule” blue hyperlink. (This Table which we just named, will be where you will be sending your policy data.)

- Give the new DCR a name, in this case, it is named DCR-PolicyAlert. Click Done.

- When back at the “Create custom log” page, select the Data Collection Rule, DCR-PolicyAlert, you just created if it did not auto populate.

- Select the Data Collection Endpoint (DCE) that you created earlier in Azure Monitor. In this case the DCE is DCE-PolicyAlerts

- Click Next to continue

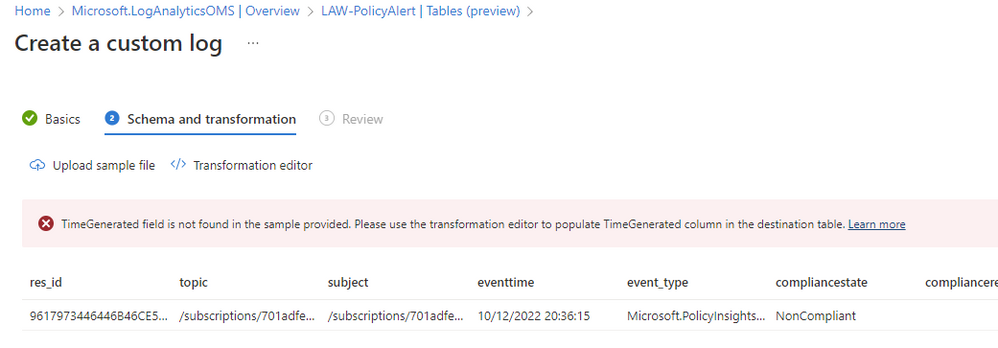

- Setting up the data format/schema is the next step, which can be a little confusing. In this step, you upload or paste in a sample JSON file that represents the data you will be sending. If you would like to use the exact same data that we are using in this article, HERE is a sample file you can use for this upload. This sample data file defines the data structure of your LAW table, so it must match the records produced by the PowerShell code you complete in a later step.

- When you upload the sample file (or any other), you will likely get an error about the “TimeGenerated” field. This error is expected: the field is required in custom tables, so you need to “transform” a date/time field in the sample data into TimeGenerated. If you get this error, click the “Transformation Editor” in the previous image. The following image shows the query you can use. Type in the following KQL, run the query, and then click Apply.

- You should now be able to click Next to continue.

- Click Create. *Please note that the table name is appended with _CL. In this case, PolicyAlert_CL is the name of the custom table.
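In the portal flow above, TimeGenerated is derived by the KQL transformation. An alternative worth considering is to populate TimeGenerated in the function before sending, so the transformation can stay trivial. The Python sketch below does that, assuming each record carries its event time in a field named `EventTime` (a hypothetical column name; use whichever field your sample JSON defines). It normalizes fractional seconds because Event Grid timestamps may carry more digits than older Python versions accept.

```python
import re
from datetime import datetime, timezone

_FRACTION = re.compile(r"\.(\d+)")


def parse_event_time(raw: str) -> datetime:
    """Parse an ISO-8601 UTC timestamp such as an Event Grid eventTime."""
    text = raw.replace("Z", "+00:00")
    # Normalize fractional seconds to exactly 6 digits so older Pythons accept them.
    text = _FRACTION.sub(lambda m: "." + (m.group(1) + "000000")[:6], text, count=1)
    ts = datetime.fromisoformat(text)
    return ts if ts.tzinfo else ts.replace(tzinfo=timezone.utc)


def with_time_generated(record: dict, source_field: str = "EventTime") -> dict:
    """Return a copy of the record with the TimeGenerated column custom tables require."""
    raw = record.get(source_field)
    # Fall back to the current time if the record has no event time.
    ts = parse_event_time(raw) if raw else datetime.now(timezone.utc)
    out = dict(record)
    out["TimeGenerated"] = ts.astimezone(timezone.utc).isoformat().replace("+00:00", "Z")
    return out
```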

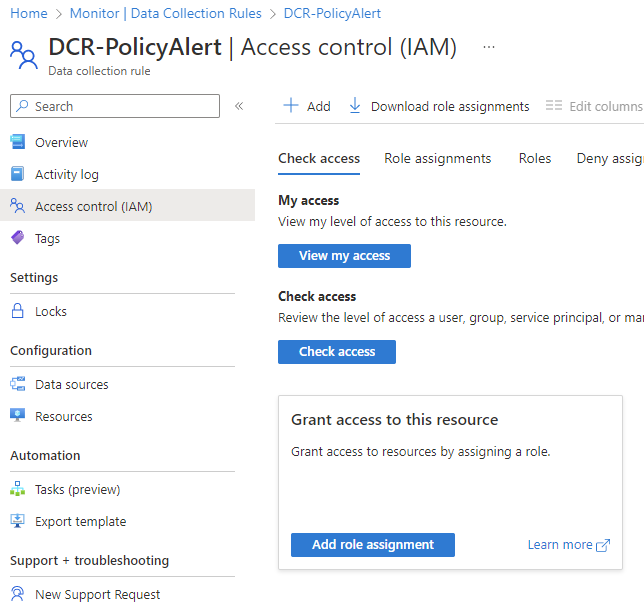

Data Collection Rule Access

This process sets up access to the Data Collection Rule so that the app registration can write data to Log Analytics via the Log Ingestion API.

- Go to Azure global search. Type Monitor and select it.

- Within Azure Monitor, go to Settings, and click “Data Collection Rules” on the left menu.

- Find the DCR created in the previous step and click on it.

- Once on the DCR you created, click “Access Control (IAM)” from the left hand menu.

- Find Grant access to this resource, click “Add Role Assignment“.

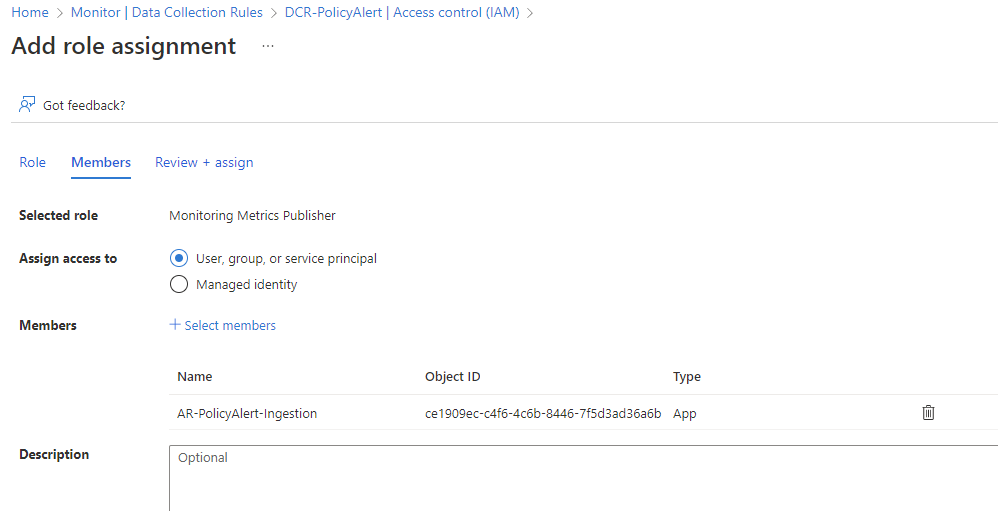

- Add the role “Monitoring Metrics Publisher” to your previously created AAD App Registration. In our example, we named ours “AR-PolicyAlert-Ingestion”. Submit the role assignment when completed.

- For Role, search for Monitoring Metrics Publisher. Select it. Click Next.

- For Members, select the name of the app registration from earlier in the solution. In this case, AR-PolicyAlert-Ingestion.

- Click Next.

- Click Review + assign.
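This role assignment matters because the Logs Ingestion API authorizes callers against the DCR. The caller here is the app registration, which authenticates with a client-credentials token for the Azure Monitor scope. The article's own code for this is PowerShell (downloaded later from HERE). The following is only a minimal Python sketch of the token request it needs. It builds the request without sending it. The tenant ID, client ID, and secret are hypothetical placeholders.

```python
from urllib.parse import urlencode

# Scope documented for the Azure Monitor Logs Ingestion API (note the double slash).
MONITOR_SCOPE = "https://monitor.azure.com//.default"


def build_token_request(tenant_id: str, client_id: str, client_secret: str):
    """Return (url, headers, body) for a Microsoft identity platform
    client-credentials token request. Nothing is sent over the network here."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    body = urlencode(
        {
            "client_id": client_id,
            "client_secret": client_secret,
            "scope": MONITOR_SCOPE,
            "grant_type": "client_credentials",
        }
    )
    return url, headers, body


# Hypothetical values; in this solution they would belong to the
# AR-PolicyAlert-Ingestion app registration and the secret kept in Key Vault.
url, headers, body = build_token_request("my-tenant-id", "my-client-id", "my-secret")
```

The access token in the response is what the ingestion call presents. Without the Monitoring Metrics Publisher role on the DCR, that call is rejected even if the token itself is valid.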

Set Up Function App Managed Identity to Access Key Vault

Here you will give the Function App's managed identity access to Key Vault so that it can read the secret you stored there.

- Access to your Key Vault secrets for the Function App's managed identity can be granted from the Key Vault or from the Function App Identity page. In this article, we describe how to do it from the Function App Identity page. This access control allows your Function App to query Key Vault for the secret that you stored in the previous step.

- In the global Azure search bar, type Function App.

- Go to the Function App that you created for this solution.

- From the Settings menu on the left, click Identity.

- You should see your managed identity there from the previous step. Click “Azure role assignments”.

- Click “Add role assignment”.

- As shown in the following image, choose Scope = Key Vault, Resource = (your Key Vault), Role = “Key Vault Secrets User”.

- Assign the role and submit the change.

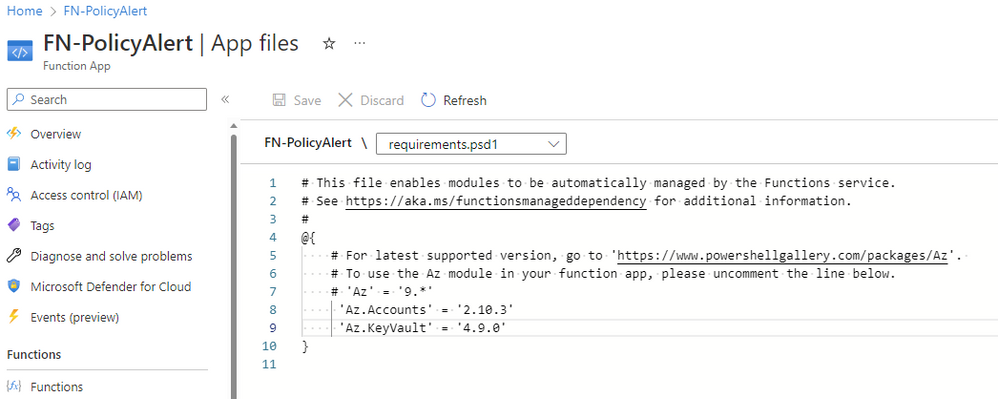
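Reading the secret involves two requests. First, a managed identity in App Service or Azure Functions gets a token from a local endpoint that the platform exposes through the `IDENTITY_ENDPOINT` and `IDENTITY_HEADER` environment variables. Second, that token is sent as a Bearer token to Key Vault's Get Secret REST operation. The following is a minimal Python sketch that only *builds* those two requests and makes no network calls. The vault name, secret name, and fake environment values are hypothetical.

```python
import os
from typing import Mapping, Optional
from urllib.parse import quote, urlencode

KEY_VAULT_RESOURCE = "https://vault.azure.net"


def managed_identity_token_request(
    env: Optional[Mapping[str, str]] = None, resource: str = KEY_VAULT_RESOURCE
):
    """Build the local token request used by an App Service / Functions managed
    identity. The platform sets IDENTITY_ENDPOINT and IDENTITY_HEADER."""
    env = os.environ if env is None else env
    query = urlencode({"resource": resource, "api-version": "2019-08-01"})
    url = f"{env['IDENTITY_ENDPOINT']}?{query}"
    headers = {"X-IDENTITY-HEADER": env["IDENTITY_HEADER"]}
    return url, headers


def key_vault_secret_url(vault_name: str, secret_name: str, api_version: str = "7.4") -> str:
    """URL for the Key Vault Get Secret operation (latest version of the secret)."""
    return f"https://{vault_name}.vault.azure.net/secrets/{quote(secret_name)}?api-version={api_version}"


# Hypothetical environment and names, for illustration only.
fake_env = {"IDENTITY_ENDPOINT": "http://localhost:8081/msi/token", "IDENTITY_HEADER": "abc123"}
token_url, token_headers = managed_identity_token_request(fake_env)
secret_url = key_vault_secret_url("kv-policyalert", "ingestion-client-secret")
```

The “Key Vault Secrets User” role is what authorizes the second request when the vault uses the Azure RBAC permission model.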

Set Up PowerShell Operating Environment in Function App

This process prepares the PowerShell environment of your Azure Function.

- Go to the Function App that you created in a previous step.

- Under Functions, click “App Files” on the left side.

- There will be a drop-down menu near the top middle of your screen. Choose the “requirements.psd1” option.

- Edit the text in the file so that it has entries for “Az.Accounts” and “Az.KeyVault”, as indicated in the graphic, and then click “Save”. This tells your function to install the specified PowerShell modules automatically the next time the app restarts.

- With the module entries in place, you now need to restart the function app.

- To do this, click “Configuration” in the left bar, under Settings.

- Navigate to the “General Settings” tab at the top.

- Find the “PowerShell Core Version” setting, change it to a different value, and click Save. Acknowledge the change and wait until it completes. Then change the setting back to its original value and click Save again. In my example, I went from “PowerShell 7.2” to “PowerShell 7.0” and back to “PowerShell 7.2”. This simply forces Azure to restart the Function App so that the Az modules load.

- Loading the PowerShell modules can take a few minutes, so do not expect them to be available immediately after the restart completes.

- After that, when PowerShell runs in your function app, the specified Az modules should be loaded automatically.

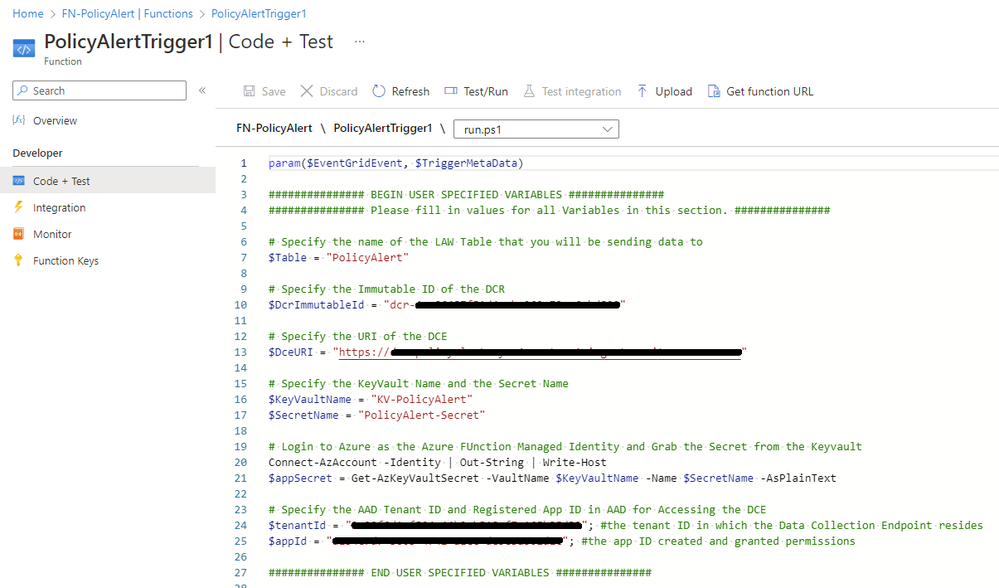
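The graphic with the exact file contents is not reproduced here. requirements.psd1 is a PowerShell hashtable that maps module names to version specifications. The following is a small Python sketch that renders that shape so you can see what the two entries look like. The major-version pins `2.*` and `4.*` are assumptions; check which versions your Function App should use. Managed dependencies only take effect when `host.json` has `managedDependency.enabled` set to `true`.

```python
from typing import Dict


def render_requirements_psd1(modules: Dict[str, str]) -> str:
    """Render a PowerShell hashtable mapping module names to version specs,
    the shape used by requirements.psd1 for Functions managed dependencies."""
    lines = ["@{"]
    for name, version in modules.items():
        # PowerShell single-quoted strings escape an embedded ' by doubling it.
        safe_name = name.replace("'", "''")
        safe_version = version.replace("'", "''")
        lines.append(f"    '{safe_name}' = '{safe_version}'")
    lines.append("}")
    return "\n".join(lines) + "\n"


# Assumed major-version pins, for illustration only.
psd1_text = render_requirements_psd1({"Az.Accounts": "2.*", "Az.KeyVault": "4.*"})
print(psd1_text)
```

The printed text is what you would place in requirements.psd1. It has one line per module inside `@{ ... }`.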

Set Up PowerShell in Function

Here you will set up the PowerShell code within the Azure Function.

- In this section, we set up the PowerShell code and operating environment in the Function created in the previous step. For the reference architecture in this article, you can download the PowerShell source code from HERE.

- Go to the Function App, FN-PolicyAlert, or whatever you named the Function App for this solution.

- From the left-hand menu, under Functions, select Functions.

- On the right-hand side, you should see the function created earlier in this solution, “PolictAlertTrigger1”. Click on it to open it.

- In the left menu, under Developer, click “Code + Test”.

- This opens the code editor. Copy all of the code from HERE and paste it into the editor. Make sure that all of the PowerShell variables at the top of the code are filled in with your own values. Many of these values come from the “reminders” notepad text file mentioned in earlier steps.

- For the DCE logs ingestion URI, go to the DCE in Azure Monitor and copy its logs ingestion URI.

- For the DCR immutable ID, go to the DCR and click JSON View. The value is in the immutableId property.
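The DCE logs ingestion URI and the DCR immutable ID are the two values that form the Logs Ingestion API upload URL. The following is a minimal Python sketch of how they fit together. It is not the article's PowerShell code, and it builds the request without sending it. The URI, ID, and token are hypothetical. The stream name `Custom-PolicyAlert_CL` is an assumption, so confirm it under `streamDeclarations` in the DCR's JSON view. The sketch defaults to api-version `2023-01-01`. Preview-era tutorials used `2021-11-01-preview`, so check the current docs for the version to use.

```python
import json
from typing import List, Tuple


def build_ingestion_request(
    dce_ingestion_uri: str,
    dcr_immutable_id: str,
    stream_name: str,
    records: List[dict],
    access_token: str,
    api_version: str = "2023-01-01",
) -> Tuple[str, dict, str]:
    """Return (url, headers, body) for a Logs Ingestion API upload.
    Nothing is sent; pass the result to an HTTP client of your choice."""
    url = (
        f"{dce_ingestion_uri.rstrip('/')}/dataCollectionRules/{dcr_immutable_id}"
        f"/streams/{stream_name}?api-version={api_version}"
    )
    headers = {
        "Authorization": f"Bearer {access_token}",
        "Content-Type": "application/json",
    }
    # The API expects a JSON array whose fields match the DCR's stream declaration.
    body = json.dumps(records)
    return url, headers, body


# Hypothetical values copied from the DCE and the DCR JSON view.
url, headers, body = build_ingestion_request(
    "https://dce-policyalerts-abcd.eastus-1.ingest.monitor.azure.com/",
    "dcr-00000000000000000000000000000000",
    "Custom-PolicyAlert_CL",
    [{"timestamp": "2022-12-01T12:00:00Z", "compliancestate": "NonCompliant"}],
    access_token="<token>",
)
```

The token comes from the client-credentials request for the app registration that holds the Monitoring Metrics Publisher role on this DCR.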

Set Up Alerting

Here you will setup the alerting mechanism within Azure Monitor.

- Go to the Log Analytics Workspace (LAW) where you have sent the Custom Logs that you created earlier which in the previous steps in this example was called, LAW-. In this example, the custom log table is called PolicyAlert_CL.

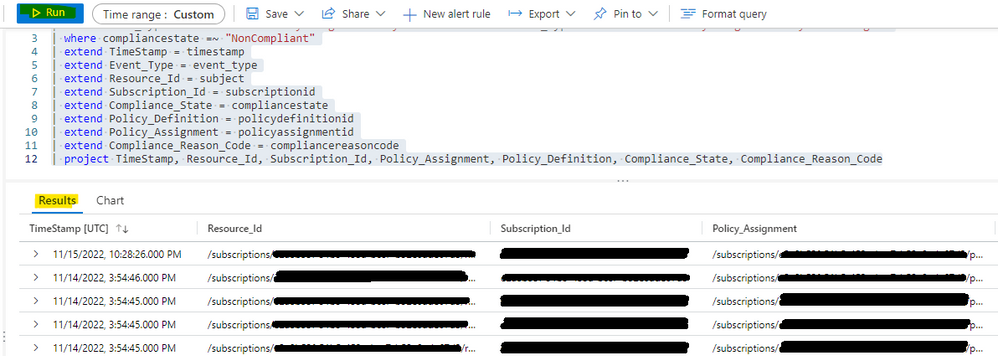

- In a blank query space, please type or paste in the following KQL query to query the custom log table for the policy compliance event changes

```kusto
PolicyAlert_CL
| where event_type =~ "Microsoft.PolicyInsights.PolicyStateCreated" or event_type =~ "Microsoft.PolicyInsights.PolicyStateChanged"
| where compliancestate =~ "NonCompliant"
| extend TimeStamp = timestamp
| extend Event_Type = event_type
| extend Resource_Id = subject
| extend Subscription_Id = subscriptionid
| extend Compliance_State = compliancestate
| extend Policy_Definition = policydefinitionid
| extend Policy_Assignment = policyassignmentid
| extend Compliance_Reason_Code = compliancereasoncode
| project TimeStamp, Resource_Id, Subscription_Id, Policy_Assignment, Policy_Definition, Compliance_State, Compliance_Reason_Code
```

- Run the query by clicking the Run button to make sure it works properly.

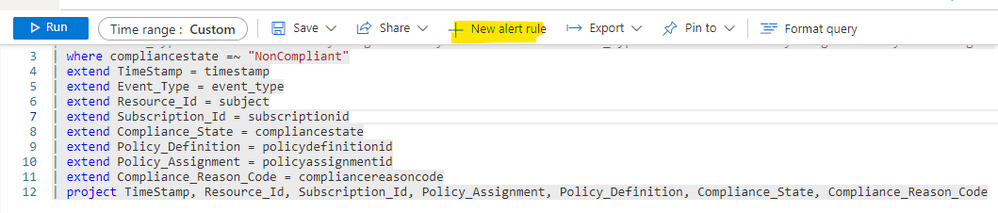
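The query only filters records and renames columns. To check your own sample records against it outside the portal, you can use the following Python sketch. It applies the same filter and projection to a list of dicts. This is an approximation for reasoning about the query, not how Log Analytics executes KQL. For example, KQL's `=~` (case-insensitive equality) is approximated with `str.casefold()`. The sample records are hypothetical.

```python
from typing import Iterable, List

WANTED_EVENTS = {
    "microsoft.policyinsights.policystatecreated",
    "microsoft.policyinsights.policystatechanged",
}

# (output column, source column) pairs, in the same order as the query's project step.
PROJECTION = [
    ("TimeStamp", "timestamp"),
    ("Resource_Id", "subject"),
    ("Subscription_Id", "subscriptionid"),
    ("Policy_Assignment", "policyassignmentid"),
    ("Policy_Definition", "policydefinitionid"),
    ("Compliance_State", "compliancestate"),
    ("Compliance_Reason_Code", "compliancereasoncode"),
]


def policy_alert_rows(records: Iterable[dict]) -> List[dict]:
    """Keep NonCompliant create/change events and rename columns like the query."""
    rows = []
    for rec in records:
        if str(rec.get("event_type", "")).casefold() not in WANTED_EVENTS:
            continue
        if str(rec.get("compliancestate", "")).casefold() != "noncompliant":
            continue
        # Missing source columns come through as None (empty in Log Analytics).
        rows.append({out: rec.get(src) for out, src in PROJECTION})
    return rows


sample = [
    {
        "event_type": "Microsoft.PolicyInsights.PolicyStateChanged",
        "compliancestate": "NonCompliant",
        "timestamp": "2022-12-01T12:00:00Z",
        "subject": "/subscriptions/000/resourceGroups/rg-demo",
    },
    {"event_type": "microsoft.policyinsights.policystatecreated", "compliancestate": "Compliant"},
    {"event_type": "Microsoft.PolicyInsights.PolicyStateDeleted", "compliancestate": "NonCompliant"},
]
rows = policy_alert_rows(sample)
```

Only the first record survives. The second is compliant, and the third is a delete event, which the query does not select.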

- Click New alert rule to create an alert for policy compliance changes.

- The alert rule that is being created will be based on the current log query. The Scope will already be set to the current resource.

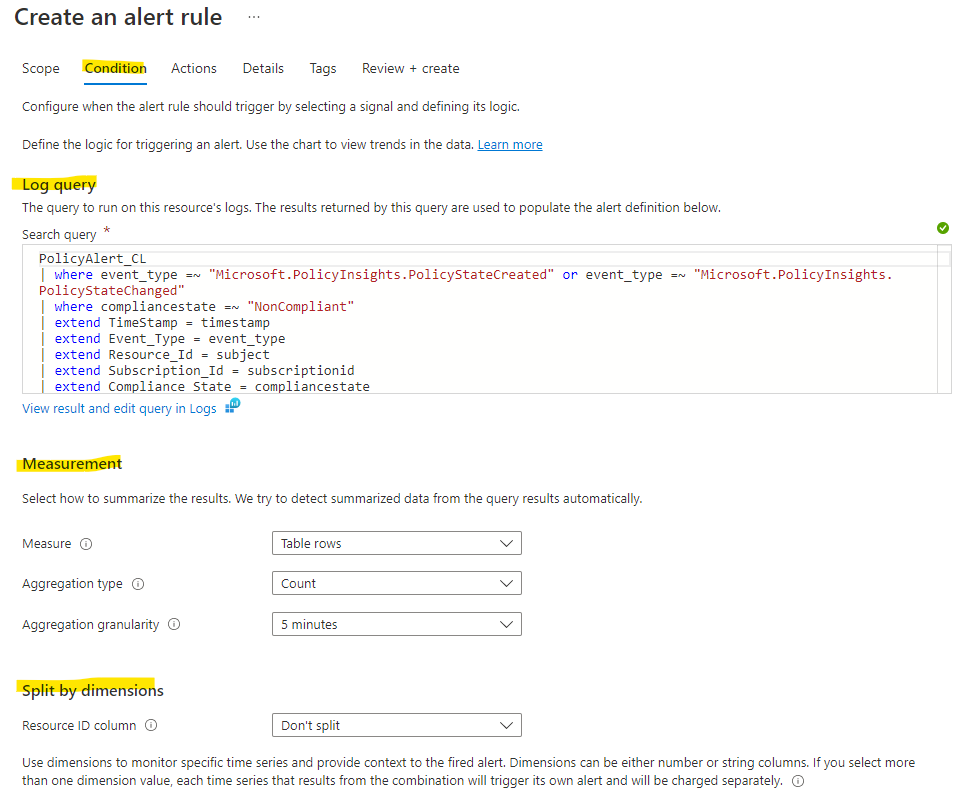

- Next, on the Condition tab, the Log query will already be populated with the KQL query that we entered.

- On the Condition tab, the Measurement section defines how the records returned by the log query are measured. Because this query returns individual records rather than summarized values, leave Table rows selected as the measure so that the alert counts the rows returned. Aggregation granularity is the time interval over which the collected values are aggregated. By default, it is set to 5 minutes.

For this example, leave it set to 5 minutes.

- On the Condition tab, the Configure dimensions section allows you to separate alerts for different resources. In this example, we are only measuring one resource so there is no need to configure.

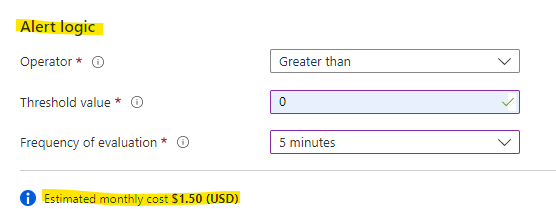

- On the Condition tab, the alert logic section is where you configure the Operator and Threshold value that the measured value is compared against. In this case, select the operator Greater than and set the threshold value to 0. We want to be alerted whenever there is a policy compliance change. With these settings, the alert fires whenever the query returns more than 0 rows, which means every time a change occurs.

- Next, select a frequency of evaluation, which is how often the log query runs and is evaluated. Keep in mind that the more often the rule is evaluated, the more it costs. A rule that runs every minute costs more than one that runs every 10 minutes. The portal shows an estimated cost as a tip. In this example, select 5 minutes.

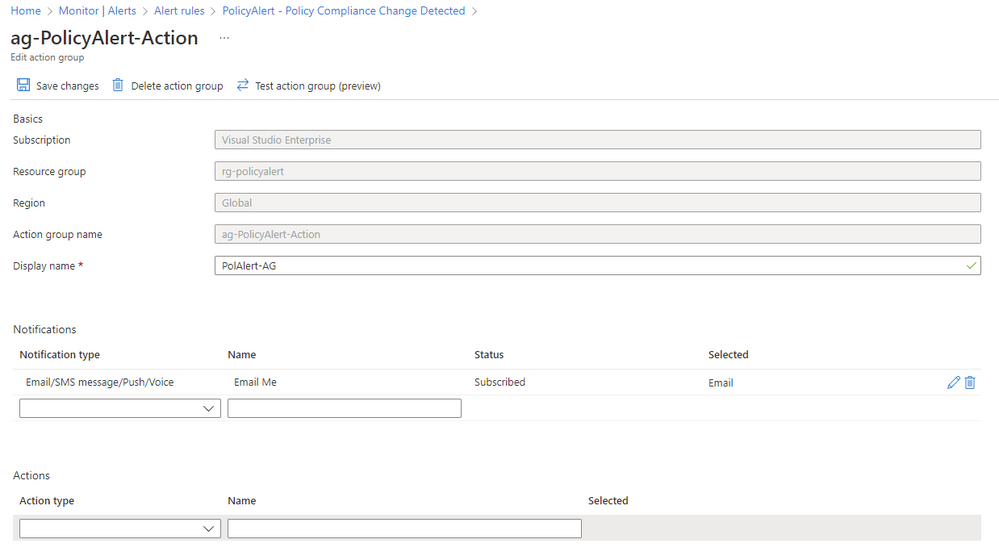
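Together, the Table rows measure, the Greater than 0 threshold, and the 5-minute window and frequency behave roughly like the simplified Python model below. On each evaluation, the model counts the rows that fall inside the look-back window and fires if the count exceeds the threshold. This is a simplification under stated assumptions: the real service also accounts for ingestion latency and has its own window-boundary rules. The `evaluations_per_day` helper is plain arithmetic that shows why shorter intervals cost more, because the rule simply runs more often. No prices are implied.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable, Tuple

MINUTES_PER_DAY = 24 * 60


def evaluate_table_rows_alert(
    row_times: Iterable[datetime],
    evaluated_at: datetime,
    window: timedelta = timedelta(minutes=5),
    threshold: int = 0,
) -> Tuple[bool, int]:
    """Simplified model: count rows whose time falls in (evaluated_at - window,
    evaluated_at] and fire when the count is greater than the threshold."""
    start = evaluated_at - window
    count = sum(1 for t in row_times if start < t <= evaluated_at)
    return count > threshold, count


def evaluations_per_day(frequency_minutes: int) -> int:
    """How many times per day a rule runs at the given evaluation frequency."""
    return MINUTES_PER_DAY // frequency_minutes


now = datetime(2022, 12, 1, 12, 0, tzinfo=timezone.utc)
fired, count = evaluate_table_rows_alert([now - timedelta(minutes=2)], now)
quiet, _ = evaluate_table_rows_alert([now - timedelta(minutes=30)], now)
```

A change 2 minutes before the evaluation fires the alert, while one from 30 minutes earlier does not. At a 1-minute frequency the rule runs 1,440 times a day, at 5 minutes 288 times, and at 10 minutes 144 times.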

- The remaining steps cover how you want to be notified, using Action Groups. The procedures to create Action Groups are well documented HERE. For our example, this image shows a basic Action Group that just sends an email. The Action Group is named ag-PolicyAlert-Action, and it contains the email address of the group or individual to notify when a policy compliance change occurs. Keep these items in mind when designing your Action Group.

Wrap-Up

Now that this setup is complete, you should receive an email notification whenever an alert is generated by an Azure Policy compliance status change. There are several other possible approaches and ways to expand the functionality of this architecture, so stay tuned for future posts that build on this approach.

References

Azure Key Vault: Quickstart – Create an Azure Key Vault with the Azure portal | Microsoft Learn

Log Ingestion API: Logs Ingestion API in Azure Monitor (preview) – Azure Monitor | Microsoft Learn

Log Ingestion API Tutorial: Tutorial – Send data to Azure Monitor Logs using REST API (Azure portal) – Azure Monitor | Microsoft Learn

Send Custom Events to Azure Function: Quickstart: Send custom events to Azure Function – Event Grid – Azure Event Grid | Microsoft Learn

Azure PolicyInsights Data: Get policy compliance data – Azure Policy | Microsoft Learn

Azure Policy State Change Events: Reacting to Azure Policy state change events – Azure Policy | Microsoft Learn

Azure Function PowerShell Dev: PowerShell developer reference for Azure Functions | Microsoft Learn

Azure Action Groups: Manage action groups in the Azure portal – Azure Monitor | Microsoft Learn

Demo: Cloud Dataset Viewer in action
