by Contributed | Mar 25, 2023 | Technology

This article is contributed. See the original author and article here.

Join us for PyDay


2 May, 2023 | 5:30 PM UTC

Are you a student or faculty member interested in learning more about web development using Python? Join us for an exciting online event led by experienced developer and educator Pamela Fox, where you’ll learn how to build, test, and deploy HTTP APIs and web applications using three of the most popular Python frameworks: FastAPI, Django, and Flask. This event is perfect for anyone looking to expand their knowledge and skills in backend web development using Python.

No web application experience is required, but some previous Python experience is encouraged. If you’re completely new to Python, head over to https://aka.ms/trypython to kickstart your learning!

PyDay Schedule:

Session 1: Build, Test, and Deploy HTTP APIs with FastAPI @ 9:30 AM PST

In this session, you’ll learn how to build, test, and deploy HTTP APIs using FastAPI, a lightweight Python framework. You’ll start with a bare-bones FastAPI app and gradually add routes and frontends. You’ll also learn how to test your code and deploy it to Azure App Service.

Session 2: Cloud Databases for Web Apps with Django @ 11:10 AM PST

In this session, you’ll discover the power of Django for building web apps with a database backend. We’ll walk through building a Django app, using the SQLTools VS Code extension to interact with a local PostgreSQL database, and deploying it using infrastructure-as-code to Azure App Service with Azure PostgreSQL Flexible Server.

Session 3: Containerizing Python Web Apps with Docker @ 1:50 PM PST

In this session, you’ll learn about Docker containers, the industry standard for packaging applications. We’ll containerize a Python Flask web app using Docker, run the container locally, and deploy it to Azure Container Apps with the help of Azure Container Registry.

Register here: https://aka.ms/PyDay

This event is an excellent opportunity for students and faculty members to expand their knowledge and skills in web development using Python. You’ll learn how to use three of the most popular Python frameworks for web development, and by the end of the event, you’ll have the knowledge you need to build, test, and deploy web applications.

So, if you’re interested in learning more about web development using Python, register now and join us for this exciting online event! We look forward to seeing you there!

by Contributed | Mar 24, 2023 | Technology


Sometimes success in life depends on little things that seem easy. So easy that they are often overlooked or underestimated for some reason. This also applies to life in IT. For example, just think about this simple question: “Do you have a tested and documented Active Directory disaster recovery plan?”

This is a question we, as Microsoft Global Compromise Recovery Security Practice, ask our customers whenever we engage in a Compromise Recovery project. The aim of these projects is to evict the attacker from compromised environments by revoking their access, thereby restoring confidence in these environments for our customers. More information can be found here: CRSP: The emergency team fighting cyber attacks beside customers – Microsoft Security Blog

Nine out of ten times the customer replies: “Sure, we have a backup of our Active Directory!”, but when we dig a little deeper, we often find that while Active Directory is backed up daily, an up-to-date, documented, and regularly tested recovery procedure does not exist. Sometimes people answer: “Well, Microsoft provides instructions on how to restore Active Directory somewhere on docs.microsoft.com, so if anything happens that breaks our entire directory, we can always refer to that article and work our way through. Easy!” To this we say: an Active Directory recovery can be painful and time-consuming, and it is often not easy.

You might think that the likelihood of needing a full Active Directory recovery is small. Today, however, the risk of a cyberattack against your Active Directory is higher than ever, hence the chances of you needing to restore it have increased. We now even see ransomware encrypting Domain Controllers, the servers that Active Directory runs on. All this means that you must ensure readiness for this event.

Readiness can be achieved by testing your recovery process in an isolated network on a regular basis, just to make sure everything works as expected, while allowing your team to practice and verify all the steps required to perform a full Active Directory recovery.

Consider the security aspects of the backup itself, as it is crucial to store backups safely, preferably encrypted, restricting access to only trusted administrative accounts and no one else!

You must have a secure, reliable, and fast restoration procedure, ready to use when you most need it.

Azure Recovery Services Vault can be an absolute game changer for meeting all these requirements, and we often use it during our Compromise Recovery projects, which is why we are sharing it with you here. Note that the intention here is not to write up a full Business Continuity Plan. Our aim is to help you get started and to show you how you can leverage the power of Azure.

The process described here can also be used to produce a lab containing an isolated clone of your Active Directory. In Compromise Recovery projects, we often use the techniques described here, not only to verify the recovery process but also to give ourselves a cloned Active Directory lab for testing all kinds of hardening measures that are the aim of a Compromise Recovery.

What is needed

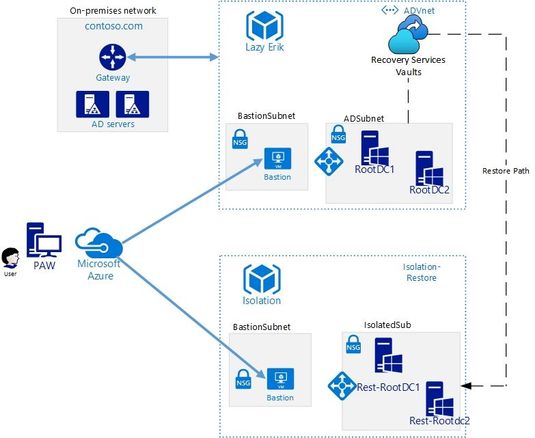

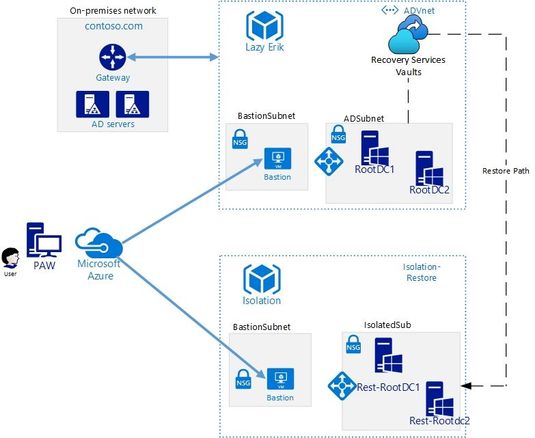

This high-level schema shows you all the components that are required:

At least one production DC per domain in Azure

We do assume that you have at least one Domain Controller per domain running on a VM in Azure, which nowadays many of our customers do. This unlocks the features of Azure Recovery Services Vault to speed up your Active Directory recovery.

Note that backing up two Domain Controllers per domain improves redundancy, as you will have multiple backups to choose from when recovering. This is another point in our scenario where the power of Azure Recovery Services Vault comes through, as it allows you to easily manage multiple backups in a single console, covered by common policies.

Azure Recovery Services Vault

We need to create the Azure Recovery Services Vault and to be more precise, a dedicated Recovery Services Vault for all “Tier 0” assets in a dedicated Resource Group (Tier 0 assets are sensitive, highest-level administrative assets, including accounts, groups and servers, control of which would lead to control of your entire environment).

This Vault should reside in the same region as your “Tier 0” servers, and we need a full backup of at least one Domain Controller per domain.

Once you have this Vault, you can include the Domain Controller virtual machine in your Azure Backup.

Recovery Services vaults are based on the Azure Resource Manager model of Azure, which provides features such as:

- Enhanced capabilities to help secure backup data: With Recovery Services Vaults, Azure Backup provides security capabilities to protect cloud backups. This includes the encryption of backups that we mention above.

- Central monitoring for your hybrid IT environment: With Recovery Services Vaults, you can monitor not only your Azure IaaS virtual machines but also your on-premises assets from a central portal.

- Azure role-based access control (Azure RBAC): Azure RBAC provides fine-grained access management control in Azure. Azure Backup has three built-in RBAC roles to manage recovery points, which allows us to restrict backup and restore access to the defined set of user roles.

- Soft Delete: With soft delete the backup data is retained for 14 additional days after deletion, which means that even if you accidentally remove the backup, or if this is done by a malicious actor, you can recover it. These additional 14 days of retention for backup data in the “soft delete” state don’t incur any cost to you.

Find more information on the benefits in the following article: What is Azure Backup? – Azure Backup | Microsoft Docs
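To make the soft delete window concrete, here is a minimal sketch of the date arithmetic. It is not an Azure API: the function names are hypothetical, and treating the 14th day as still recoverable is an assumption made for illustration. Check the Azure Backup documentation for the exact cutoff.

```python
from datetime import date, timedelta

# Retention period for soft-deleted backup data, as stated in the article.
SOFT_DELETE_RETENTION_DAYS = 14


def soft_delete_deadline(deleted_on: date) -> date:
    """Return the last day on which a soft-deleted backup is assumed recoverable."""
    return deleted_on + timedelta(days=SOFT_DELETE_RETENTION_DAYS)


def is_recoverable(deleted_on: date, today: date) -> bool:
    """True while 'today' falls inside the soft delete retention window.

    Assumption: the final (14th) day is counted as inside the window.
    """
    return deleted_on <= today <= soft_delete_deadline(deleted_on)
```

A recovery runbook could use a check like this to flag how much time remains to undo an accidental or malicious backup deletion.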

Isolated Restore Virtual Network

Another thing we need is an isolated network portion (the “isolatedSub” in the drawing) to which we restore the DC. This isolated network portion should be in a separate Resource Group from your production resources, along with the newly created Recovery Services Vault.

Isolation means no network connectivity whatsoever to your production networks! If you inadvertently allow a restored Domain Controller, the target of your forest recovery Active Directory cleanup actions, to replicate with your running production Active Directory, this will have a serious impact on your entire IT Infrastructure. Isolation can be achieved by not implementing any peering, and of course by avoiding any other connectivity solutions such as VPN Gateways. Involve your networking team to ensure that this point is correctly covered.

Bastion Host in Isolated Virtual Network

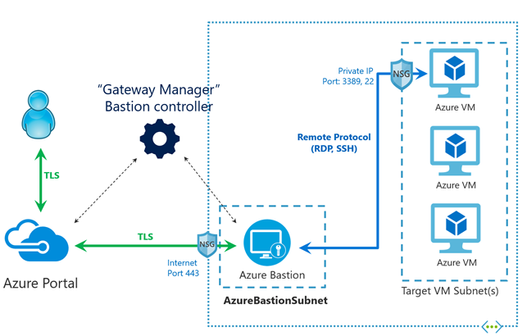

The last thing we need is the ability to use a secure remote connection to the restored virtual machine that is the first domain controller of the restored Active Directory. To get around the isolation of the restoration VNET, we are going to use a Bastion Host for accessing this machine.

Azure Bastion is a fully managed Platform as a Service offering that provides secure and seamless RDP and SSH access to your virtual machines directly through the Azure Portal. It avoids public Internet exposure by connecting over private IP addresses only.

Azure Bastion | Microsoft Docs

The Process

Before Azure Recovery Services Vault existed, the first steps of an Active Directory recovery were the most painful part of the process: one had to worry about provisioning a correctly sized and configured recovery machine, transporting the WindowsImageBackup folder to a disk on this machine, and booting from the right Operating System ISO to perform a machine recovery. Now we can bypass all these pain points with just a few clicks:

Perform the Virtual Machine Backup

Creating a backup of your virtual machine in the Recovery Vault involves including it in a Backup Policy. This is described here:

Azure Instant Restore Capability – Azure Backup | Microsoft Docs

Restore the Virtual Machine to your isolated Virtual Network

To restore your virtual machine, you use the Restore option in Backup Center, with the option to create a new virtual machine. This is described here:

Restore VMs by using the Azure portal – Azure Backup | Microsoft Docs

Active Directory Recovery Process

Once you have performed the restoration of your Domain Controller virtual machine to the isolated Virtual Network, you can log on to this machine using the Bastion Host, which allows you to start performing the Active Directory recovery as per our classic guidance.

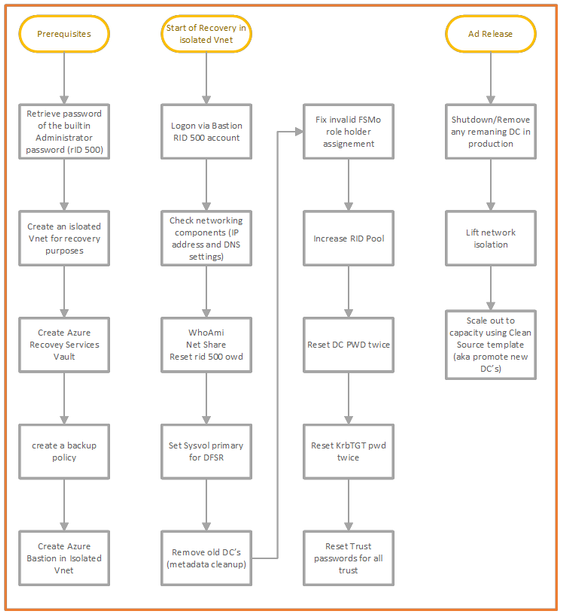

You log in using the built-in administrator account and then follow the steps outlined in the drawing under “Start of Recovery in isolated VNet”:

All the detailed steps can be found here: Active Directory Forest Recovery Guide | Microsoft Docs. Note that the above process may need to be tailored for your organization.

Studying the chart above, you will see that there are some dependencies that apply. Just think about seemingly trivial stuff such as the Administrator password that is needed during recovery, the one that you use to log on to the Bastion.

- Who has access to this password?

- Did you store the password in a Vault that is dependent on a running AD service?

- Do you have any other services running on your domain controllers, such as any file services (please note that we do not recommend this)?

- Is DNS running on Domain controllers or is there a DNS dependency on another product such as Infoblox?

These are things to consider in advance, to ensure you are ready for recovery of your AD.

Tips and Tricks

In order to manage a VM in Azure two things come in handy:

- Serial console: this feature in the Azure portal provides access to a text-based console for Windows virtual machines. This console session provides access to the Virtual Machine independent of the network or operating system state. The serial console can only be accessed by using the Azure portal and is allowed only for those users who have an access role of Contributor or higher to the VM or virtual machine scale set. This feature comes in handy when you need to troubleshoot Remote Desktop connection failures, for example when you need to disable the host-based firewall or change IP configuration settings. More information can be found here: Azure Serial Console for Windows – Virtual Machines | Microsoft Docs

- Run Command: this feature uses the virtual machine agent to run PowerShell scripts within an Azure Windows VM. You can use these scripts for general machine or application management. They can help you to quickly diagnose and remediate Virtual Machine access and network issues and get the Virtual Machine back to a good state. More information can be found here: Run scripts in a Windows VM in Azure using action Run Commands – Azure Virtual Machines | Microsoft Docs

Security

We remind you that a Domain Controller is a sensitive, highest-level administrative asset, a “Tier 0” asset, no matter where it is stored (for an overview of our enterprise access model, see: Securing privileged access Enterprise access model | Microsoft Docs). Whether it runs as a virtual machine on VMware, on Hyper-V, or in Azure as an IaaS virtual machine, that fact does not change. This means you will have to protect these Domain Controllers and their backups using the maximum level of security restrictions you have at your disposal in Azure. Role-based access control is one of the features that can help here by restricting which accounts have access.

Conclusion

A poorly designed disaster recovery plan, lack of documentation, and a team that lacks mastery of the process will delay your recovery, thereby increasing the burden on your administrators when a disaster happens. In turn, this will exacerbate the disastrous impact that cyberattacks can have on your business.

In this article, we gave you a global overview of how the power of Azure Recovery Services Vault can simplify and speed up your Active Directory Recovery process: how easy it is to use, how fast you can recover a machine into an isolated VNET in Azure, and how you can connect to it safely using Bastion to start performing your Active Directory Recovery on a restored Domain Controller.

Finally, ask yourself this question: “Am I able to recover my entire Active Directory in the event of a disaster?” If you cannot answer this question with a resounding “yes”, then it is time to act and make sure that you can.

Authors: Erik Thie & Simone Oor, Compromise Recovery Team

To learn more about Microsoft Security solutions visit our website. Bookmark the Security blog to keep up with our expert coverage on security matters. Also, follow us at @MSFTSecurity for the latest news and updates on cybersecurity.

by Contributed | Mar 23, 2023 | Technology


As we continue to enhance the security of our cloud, we are going to address the problem of email sent to Exchange Online from unsupported and unpatched Exchange servers. There are many risks associated with running unsupported or unpatched software, but by far the biggest risk is security. Once a version of Exchange Server is no longer supported, it no longer receives security updates; thus, any vulnerabilities discovered after support has ended don’t get fixed. There are similar risks associated with running software that is not patched for known vulnerabilities. Once a security update is released, malicious actors will reverse-engineer the update to get a better understanding of how to exploit the vulnerability on unpatched servers.

Microsoft uses the Zero Trust security model for its cloud services, which requires connecting devices and servers to be provably healthy and managed. Servers that are unsupported or remain unpatched are persistently vulnerable and cannot be trusted, and therefore email messages sent from them cannot be trusted. Persistently vulnerable servers significantly increase the risk of security breaches, malware, hacking, data exfiltration, and other attacks.

We’ve said many times that it is critical for customers to protect their Exchange servers by staying current with updates and by taking other actions to further strengthen the security of their environment. Many customers have taken action to protect their environment, but there are still many Exchange servers that are out of support or significantly behind on updates.

Transport-based Enforcement System

To address this problem, we are enabling a transport-based enforcement system in Exchange Online that has three primary functions: reporting, throttling, and blocking. The system is designed to alert an admin about unsupported or unpatched Exchange servers in their on-premises environment that need remediation (upgrading or patching). The system also has throttling and blocking capabilities, so if a server is not remediated, mail flow from that server will be throttled (delayed) and eventually blocked.

We don’t want to delay or block legitimate email, but we do want to reduce the risk of malicious email entering Exchange Online by putting in place safeguards and standards for email entering our cloud service. We also want to get the attention of customers who have unsupported or unpatched Exchange servers and encourage them to secure their on-premises environments.

Reporting

For years, Exchange Server admins have had the Exchange Server Health Checker, which detects common configuration and performance issues, and collects useful information, including which servers are unsupported or unpatched. Health Checker can even create color-coded HTML reports to help you prioritize server remediation.

We are adding a new mail flow report to the Exchange admin center (EAC) in Exchange Online that is separate from and complementary to Health Checker. It provides details to a tenant admin about any unsupported or out-of-date Exchange servers in their environment that connect to Exchange Online to send email.

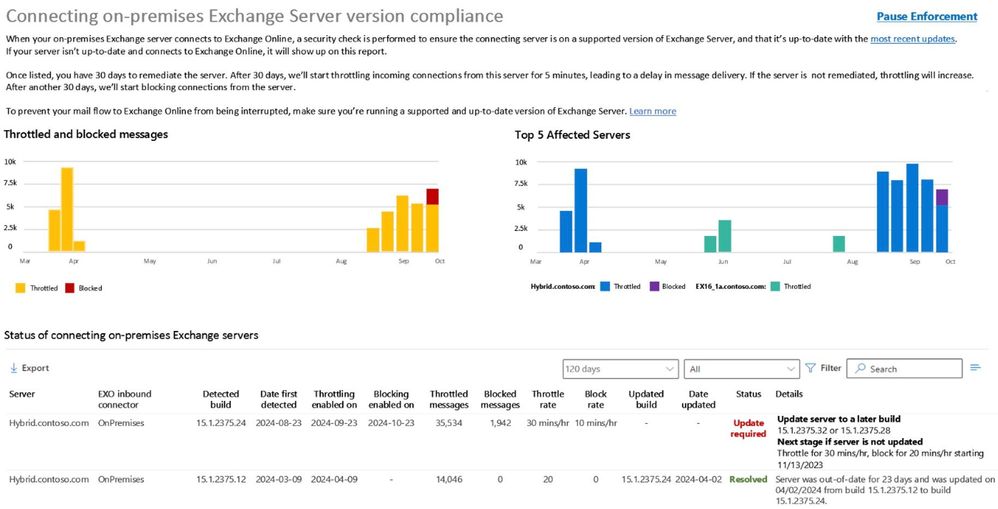

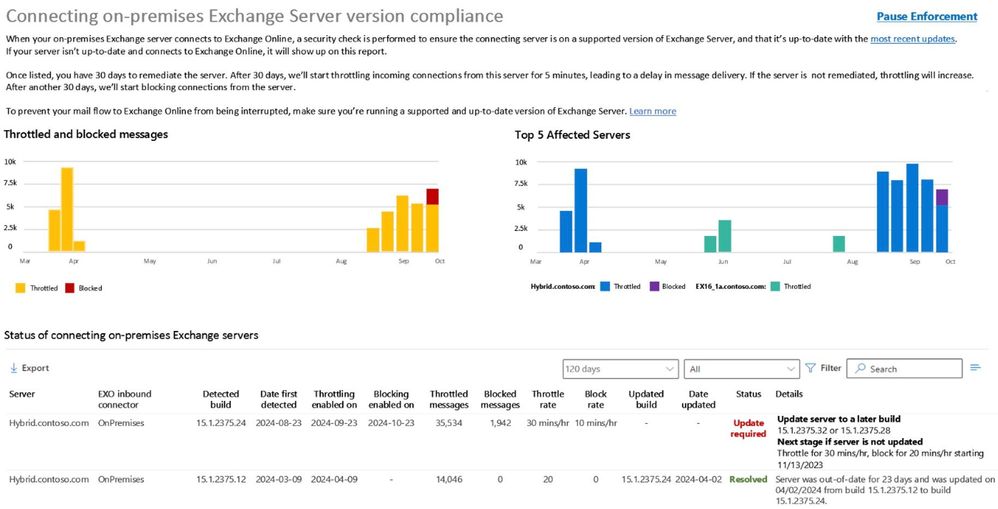

Figure 1 below shows a mockup of what the new report may look like when released:

The new mail flow report provides details on any throttling or blocking of messages, along with information about what happens next if no action is taken to remediate the server. Admins can use this report to prioritize updates (for servers that can be updated) and upgrades or migrations (for servers that can’t be updated).

Throttling

If a server is not remediated after a period of time (see below), Exchange Online will begin to throttle messages from it. In this case, Exchange Online will issue a retriable SMTP 450 error to the sending server, which will cause the sending server to queue the message and automatically retry it later, resulting in delayed delivery. An example of the SMTP 450 error is below:

450 4.7.230 Connecting Exchange server version is out-of-date; connection to Exchange Online throttled for 5 mins/hr. For more information see https://aka.ms/BlockUnsafeExchange.

The throttling duration will increase progressively over time. Progressive throttling over multiple days is designed to drive admin awareness and give them time to remediate the server. However, if the admin does not remediate the server within 30 days after throttling begins, enforcement will progress to the point where email will be blocked.

Blocking

If throttling does not cause an admin to remediate the server, then after a period of time (see below), email from that server will be blocked. Exchange Online will issue a permanent SMTP 550 error to the sender, which triggers a non-delivery report (NDR) to the sender. In this case, the sender will need to re-send the message. An example of the SMTP 550 error is below:

550 5.7.230 Connecting Exchange server version is out-of-date; connection to Exchange Online blocked for 10 mins/hr. For more information see https://aka.ms/BlockUnsafeExchange.
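For admins who watch their send logs for these responses, the following is a minimal sketch of how the two example replies could be told apart. It relies on the general SMTP convention that 4yz reply codes are transient (retriable) and 5yz codes are permanent. The `SmtpReply` type and `parse_reply` function are hypothetical names for illustration, and the "N mins/hr" pattern is taken only from the two sample messages above, so the real wording may differ.

```python
import re
from typing import NamedTuple, Optional


class SmtpReply(NamedTuple):
    code: int                        # basic three-digit reply code, e.g. 450
    enhanced: str                    # enhanced status code, e.g. "4.7.230"
    retriable: bool                  # True only for 4yz (transient) replies
    minutes_per_hour: Optional[int]  # enforcement window quoted in the text, if any


# "<code> <enhanced status> <free text>", as in the sample messages.
_REPLY = re.compile(r"^(\d{3}) (\d\.\d{1,3}\.\d{1,3}) (.*)$", re.DOTALL)
# Assumption: the window is always written as "<N> mins/hr".
_WINDOW = re.compile(r"(\d+) mins/hr")


def parse_reply(line: str) -> SmtpReply:
    """Split a single-line SMTP reply into its code, status, and window."""
    match = _REPLY.match(line.strip())
    if match is None:
        raise ValueError(f"not an SMTP reply with an enhanced status code: {line!r}")
    code = int(match.group(1))
    window = _WINDOW.search(match.group(3))
    return SmtpReply(
        code=code,
        enhanced=match.group(2),
        retriable=400 <= code < 500,
        minutes_per_hour=int(window.group(1)) if window else None,
    )
```

A monitoring script could alert on `enhanced == "4.7.230"` while mail is only delayed, before enforcement progresses to the permanent `5.7.230` reply.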

Enforcement Stages

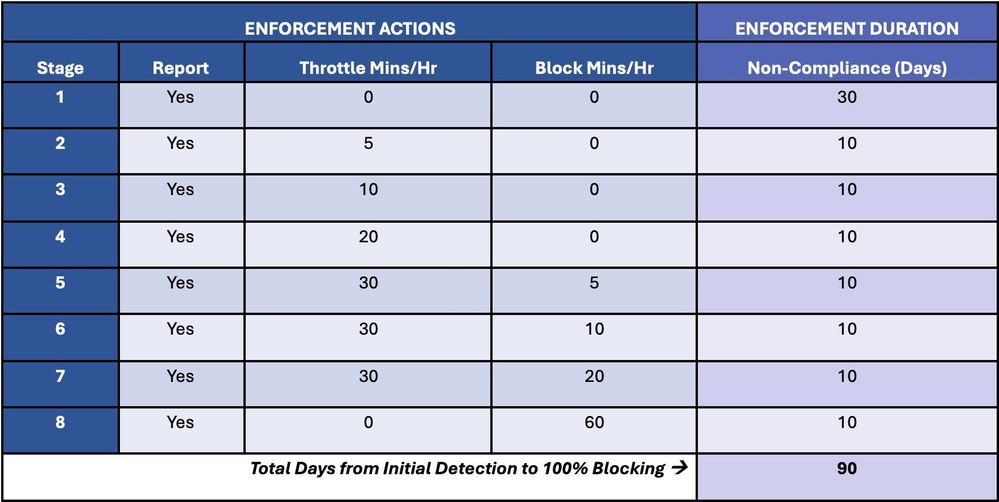

We’re intentionally taking a progressive enforcement approach which gradually increases throttling over time, and then introduces blocking in gradually increasing stages culminating in blocking 100% of all non-compliant traffic.

Enforcement actions will escalate over time (e.g., increase throttling, add blocking, increase blocking, full blocking) until the server is remediated: either removed from service (for versions beyond end of life), or updated (for supported versions with available updates).

Table 1 below details the stages of progressive enforcement over time:

Stage 1 is report-only mode, and it begins when a non-compliant server is first detected. Once detected, the server will appear in the out-of-date report mentioned earlier and an admin will have 30 days to remediate the server.

If the server is not remediated within 30 days, throttling will begin, and will increase every 10 days over the next 30 days in Stages 2-4.

If the server is not remediated within 60 days from detection, then throttling and blocking will begin, and blocking will increase every 10 days over the next 30 days in Stages 5-7.

If, after 90 days from detection, the server has not been remediated, it reaches Stage 8, and Exchange Online will no longer accept any messages from the server. If the server is patched after it is permanently blocked, then Exchange Online will again accept messages from the server, as long as the server remains in compliance. If a server cannot be patched, it must be permanently removed from service.
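The timeline described above can be sketched as a small function that maps days since detection to an enforcement stage. This is an illustration of the schedule as described in this post, not Microsoft's implementation: the function names are hypothetical, and the exact boundary days (for example, treating day 30 as the first day of Stage 2) are an interpretation of the text.

```python
def enforcement_stage(days_since_detection: int) -> int:
    """Map days since a non-compliant server was detected to Stages 1-8."""
    if days_since_detection < 0:
        raise ValueError("days_since_detection must be non-negative")
    if days_since_detection < 30:
        return 1  # report-only mode
    if days_since_detection >= 90:
        return 8  # no messages accepted
    # Days 30-89 cover Stages 2-7, advancing one stage every 10 days.
    return 2 + (days_since_detection - 30) // 10


def enforcement_action(stage: int) -> str:
    """Describe the enforcement applied at a given stage."""
    if stage == 1:
        return "report only"
    if 2 <= stage <= 4:
        return "throttling"
    if 5 <= stage <= 7:
        return "throttling and blocking"
    if stage == 8:
        return "full blocking"
    raise ValueError("stage must be between 1 and 8")
```

For example, a server detected 45 days ago would fall in Stage 3 under this reading, with throttling in effect but no blocking yet.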

Enforcement Pause

Each tenant can pause throttling and blocking for up to 90 days per year. The new mail flow report in the EAC allows an admin to request a temporary enforcement pause. This pauses all throttling and blocking and puts the server in report-only mode for the duration specified by the admin (up to 90 days per year).

Pausing enforcement works like a pre-paid debit card, where you can use up to 90 days per year when and how you want. Maybe you need 5 days in Q1 to remediate a server, or maybe you need 15 days. And then maybe another 15 days in Q2, and so forth, up to 90 days per calendar year.
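The pre-paid debit card analogy can be sketched as a simple per-year budget. The `PauseBudget` class below is a hypothetical model for planning purposes only. It is not how the EAC tracks pauses, and it assumes that a request exceeding the remaining balance is rejected outright rather than partially granted.

```python
class PauseBudget:
    """Track enforcement pause days used per calendar year."""

    ANNUAL_LIMIT_DAYS = 90  # stated limit: up to 90 days per calendar year

    def __init__(self) -> None:
        self._used = {}  # calendar year -> pause days already used

    def remaining(self, year: int) -> int:
        """Return the pause days still available in the given year."""
        return self.ANNUAL_LIMIT_DAYS - self._used.get(year, 0)

    def request(self, year: int, days: int) -> bool:
        """Try to spend 'days' of pause in 'year'; return True if granted."""
        if days <= 0:
            raise ValueError("days must be positive")
        if days > self.remaining(year):
            return False  # assumption: over-budget requests are refused
        self._used[year] = self._used.get(year, 0) + days
        return True
```

With this model, using 5 days in Q1 and 15 days in Q2 leaves 70 days for the rest of that calendar year, and the balance starts again at 90 in the next year.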

Initial Scope

The enforcement system will eventually apply to all versions of Exchange Server and all email coming into Exchange Online, but we are starting with a very small subset of outdated servers: Exchange 2007 servers that connect to Exchange Online over an inbound connector type of OnPremises.

We have specifically chosen to start with Exchange 2007 because it is the oldest version of Exchange from which you can migrate in a hybrid configuration to Exchange Online, and because these servers are managed by customers we can identify and with whom we have an existing relationship.

Following this initial deployment, we will incrementally bring other Exchange Server versions into the scope of the enforcement system. Eventually, we will expand our scope to include all versions of Exchange Server, regardless of how they send mail to Exchange Online.

We will also send Message Center posts to notify customers. Today, we are sending a Message Center post to all Exchange Server customers directing them to this blog post. We will also send targeted Message Center posts to customers 30 days before their version of Exchange Server is included in the enforcement system. In addition, 30 days before we expand beyond mail coming in over OnPremises connectors, we’ll notify customers via the Message Center.

Feedback and Upcoming AMA

As always, we want and welcome your feedback. Leave a comment on this post if you have any questions or feedback you’d like to share.

On May 10, 2023 at 9am PST, we are hosting an “Ask Microsoft Anything” (AMA) about these changes on the Microsoft Tech Community. This AMA will be a live text-based online event with no audio or video, and it gives you the opportunity to connect with us, ask questions, and provide feedback. You can register for this AMA here.

FAQs

Which cloud instances of Exchange Online have the transport-based enforcement system?

All cloud instances, including our WW deployment, our government clouds (e.g., GCC, GCCH, and DoD), and all sovereign clouds.

Which versions of Exchange Server are affected by the enforcement system?

Initially, only servers running Exchange Server 2007 that send mail to Exchange Online over an inbound connector type of OnPremises will be affected. Eventually, all versions of Exchange Server will be affected by the enforcement system, regardless of how they connect to Exchange Online.

How can I tell if my organization uses an inbound connector type of OnPremises?

You can use Get-InboundConnector to determine the type of inbound connector in use. For example, Get-InboundConnector | ft Name,ConnectorType will display the type of inbound connector(s) in use.

What is a persistently vulnerable Exchange server?

Any Exchange server that has reached end of life (e.g., Exchange 2007, Exchange 2010, and soon, Exchange 2013), or remains unpatched for known vulnerabilities. For example, Exchange 2016 and Exchange 2019 servers that are significantly behind on security updates are considered persistently vulnerable.

Is Microsoft blocking email from on-premises Exchange servers to get customers to move to the cloud?

No. Our goal is to help customers secure their environment, wherever they choose to run Exchange. The enforcement system is designed to alert admins about security risks in their environment, and to protect Exchange Online recipients from potentially malicious messages sent from persistently vulnerable Exchange servers.

Why is Microsoft only taking this action against its own customers; customers who have paid for Exchange Server and Windows Server licenses?

We are always looking for ways to improve the security of our cloud and to help our on-premises customers stay protected. This effort helps protect our on-premises customers by alerting them to potentially significant security risks in their environment. We are initially focusing on email servers we can readily identify as being persistently vulnerable, but we will block all potentially malicious mail flow that we can.

Will Microsoft enable the transport-based enforcement system for other servers and applications that send email to Exchange Online?

We are always looking for ways to improve the security of our cloud and to help our on-premises customers stay protected. We are initially focusing on email servers we can readily identify as being persistently vulnerable, but we will block all potentially malicious mail flow that we can.

If my Exchange Server build is current, but the underlying Windows operating system is out of date, will my server be affected by the enforcement system?

No. The enforcement system looks only at Exchange Server version information. But it is just as important to keep Windows and all other applications up-to-date, and we recommend customers do that.

Delaying and possibly blocking emails sent to Exchange Online seems harsh and could negatively affect my business. Can’t Microsoft take a different approach to this?

Microsoft is taking this action because of the urgent and increasing security risks to customers that choose to run unsupported or unpatched software. Over the last few years, we have seen a significant increase in the frequency of attacks against Exchange servers. We have done (and will continue to do) everything we can to protect Exchange servers but unfortunately, there are a significant number of organizations that don’t install updates or are far behind on updates, and are therefore putting themselves, their data, as well as the organizations that receive email from them, at risk. We can’t reach out directly to admins that run vulnerable Exchange servers, so we are using activity from their servers to try to get their attention. Our goal is to raise the security profile of the Exchange ecosystem.

Why are you starting only with Exchange 2007 servers, when Exchange 2010 is also beyond end of life and Exchange 2013 will be beyond end of life when the enforcement system is enabled?

Starting with this narrow scope of Exchange servers lets us safely exercise, test, and tune the enforcement system before we expand its use to a broader set of servers. Additionally, as Exchange 2007 is the most out-of-date hybrid version, it doesn’t include many of the core security features and enhancements in later versions. Restricting the most potentially vulnerable and unsafe server version first makes sense.

Does this mean that my Exchange Online organization might not receive email sent by a 3rd party company that runs an old or unpatched version of Exchange Server?

Possibly. The transport-based enforcement system initially applies only to email sent from Exchange 2007 servers to Exchange Online over an inbound connector type of OnPremises. The system does not yet apply to email sent to your organization by companies that do not use an OnPremises type of connector. Our goals are to reduce the risk of malicious email entering Exchange Online by putting in place safeguards and standards for email entering the service and to notify on-premises admins that the Exchange server their organization uses needs remediating.

How does Microsoft know what version of Exchange I am running? Does Microsoft have access to my servers?

No, Microsoft does not have any access to your on-premises servers. The enforcement system is based on email activity (e.g., when the on-premises Exchange Server connects to Exchange Online to deliver email).

The Exchange Team
