by Scott Muniz | Sep 2, 2020 | Azure, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

One of the challenges of large-scale data analysis is getting value from data with the least effort. Doing that often involves multiple stages: provisioning infrastructure, accessing or moving data, transforming or filtering data, analyzing and learning from data, automating the data pipelines, connecting with other services that provide input or consume the output data, and more. There are quite a few tools available for each of these stages, but it’s usually difficult to have them all in one place and easily connected.

If this article was helpful or interesting to you, follow @lenadroid on Twitter.

Introduction

This is the first article in a series that covers what Azure Synapse is and how to start using it with the Azure CLI. Make sure your Azure CLI is installed and up to date, and add the synapse extension if necessary:

$ az extension add --name synapse

What is Azure Synapse?

In Azure, we have the Synapse Analytics service, which aims to provide managed support for distributed data analysis workloads with less friction. If you’re coming from a GCP or AWS background, the closest alternatives to Azure Synapse in those clouds are BigQuery and Redshift. Azure Synapse is currently in public preview.

Serverless and provisioned capacity

In the world of large-scale data processing and analytics, autoscaling clusters and pay-for-what-you-use pricing have become must-haves. In Azure Synapse, you can choose between serverless and provisioned capacity, depending on whether you need to be flexible and adjust to bursts, or have a predictable resource load.

Native Apache Spark support

Apache Spark has demonstrated its power in data processing for both batch and real-time streaming models. It offers strong Python and Scala/Java support for data operations at large scale. Azure Synapse provides built-in support for data analytics using Apache Spark: you can create an Apache Spark pool, upload Spark jobs, or create Spark notebooks for experimenting with the data.

SQL support

In addition to Apache Spark support, Azure Synapse has excellent support for data analytics with SQL.

Other features

Azure Synapse integrates with Azure Machine Learning and Spark ML. It supports data ingestion and export through Azure Data Factory, which connects to many Azure and third-party data sources and destinations. Data can be visualized with Power BI.

At Microsoft Build 2020, Satya Nadella announced Synapse Link, a capability that helps you get insights from real-time transactional data stored in operational databases (e.g., Cosmos DB) with a single click, without having to manage data movement.

Get started with Azure Synapse Workspaces using Azure CLI

Prepare the necessary environment variables:

$ StorageAccountName='<come up with a name for your storage account>'
$ ResourceGroup='<come up with a name for your resource group>'
$ Region='<come up with a name of the region, e.g. eastus>'
$ FileShareName='<come up with a name of the storage file share>'
$ SynapseWorkspaceName='<come up with a name for Synapse Workspace>'
$ SqlUser='<come up with a username>'
$ SqlPassword='<come up with a secure password>'
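A typo in one of these values usually only surfaces as an error from a later `az` command. As a minimal sketch, the hypothetical helpers below pre-check some of the values against Azure naming rules as I understand them: storage account names are 3 to 24 lowercase letters and digits (and must also be globally unique, which no local check can confirm), and file share names are 3 to 63 lowercase letters, digits and single hyphens, starting and ending with a letter or digit. The password rule (at least 8 characters from at least three of four character categories) is an assumption modeled on Azure SQL’s complexity requirements; confirm all of these in the Azure documentation.

```python
import re

# Hypothetical helpers for pre-checking the values above before running any az command.
# Naming rules are paraphrased from Azure's documentation; verify them there.

# Storage account: 3-24 characters, lowercase letters and digits only.
STORAGE_ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")

# File share: 3-63 characters of lowercase letters, digits, and hyphens;
# starts and ends with a letter or digit; no consecutive hyphens.
FILE_SHARE_RE = re.compile(r"(?=.{3,63}\Z)[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_storage_account_name(name: str) -> bool:
    return STORAGE_ACCOUNT_RE.fullmatch(name) is not None


def is_valid_file_share_name(name: str) -> bool:
    return FILE_SHARE_RE.fullmatch(name) is not None


def meets_sql_password_complexity(password: str) -> bool:
    """Assumed rule: at least 8 characters, drawn from at least 3 of 4 categories."""
    categories = [
        any(c.isupper() for c in password),
        any(c.islower() for c in password),
        any(c.isdigit() for c in password),
        any(not c.isalnum() for c in password),
    ]
    return len(password) >= 8 and sum(categories) >= 3


if __name__ == "__main__":
    print(is_valid_storage_account_name("synapsedemo01"))
    print(is_valid_file_share_name("synapse-files"))
    print(meets_sql_password_complexity("Sup3rSecret!"))
```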

Create a resource group as a container for your resources:

$ az group create --name $ResourceGroup --location $Region

Create a Data Lake storage account:

$ az storage account create \
    --name $StorageAccountName \
    --resource-group $ResourceGroup \
    --location $Region \
    --sku Standard_GRS \
    --kind StorageV2

The output of this command will be similar to:

{
  "accessTier": "Hot",
  "creationTime": "2020-05-19T01:32:42.434045+00:00",
  "customDomain": null,
  "enableAzureFilesAadIntegration": null,
  "enableHttpsTrafficOnly": false,
  "encryption": {
    "keySource": "Microsoft.Storage",
    "keyVaultProperties": null,
    "services": {
      "blob": {
        "enabled": true,
        "lastEnabledTime": "2020-05-19T01:32:42.496550+00:00"
      },
      "file": {
        "enabled": true,
        "lastEnabledTime": "2020-05-19T01:32:42.496550+00:00"
      },
      "queue": null,
      "table": null
    }
  },
  "failoverInProgress": null,
  "geoReplicationStats": null,
  "id": "/subscriptions/<subscription-id>/resourceGroups/Synapse-test/providers/Microsoft.Storage/storageAccounts/<storage-account-name>",
  "identity": null,
  "isHnsEnabled": null,
  "kind": "StorageV2",
  "lastGeoFailoverTime": null,
  "location": "eastus",
  "name": "<storage-account-name>",
  "networkRuleSet": {
    "bypass": "AzureServices",
    "defaultAction": "Allow",
    "ipRules": [],
    "virtualNetworkRules": []
  },
  "primaryEndpoints": {
    "blob": "https://<storage-account-name>.blob.core.windows.net/",
    "dfs": "https://<storage-account-name>.dfs.core.windows.net/",
    "file": "https://<storage-account-name>.file.core.windows.net/",
    "queue": "https://<storage-account-name>.queue.core.windows.net/",
    "table": "https://<storage-account-name>.table.core.windows.net/",
    "web": "https://<storage-account-name>.z13.web.core.windows.net/"
  },
  "primaryLocation": "eastus",
  "provisioningState": "Succeeded",
  "resourceGroup": "<resource-group-name>",
  "secondaryEndpoints": null,
  "secondaryLocation": "westus",
  "sku": {
    "capabilities": null,
    "kind": null,
    "locations": null,
    "name": "Standard_GRS",
    "resourceType": null,
    "restrictions": null,
    "tier": "Standard"
  },
  "statusOfPrimary": "available",
  "statusOfSecondary": "available",
  "tags": {},
  "type": "Microsoft.Storage/storageAccounts"
}

Retrieve the storage account key:

$ StorageAccountKey=$(az storage account keys list \
    --account-name $StorageAccountName \
    | jq -r '.[0] | .value')

Retrieve the storage endpoint URL:

$ StorageEndpointUrl=$(az storage account show \
    --name $StorageAccountName \
    --resource-group $ResourceGroup \
    | jq -r '.primaryEndpoints | .dfs')

If you’d like, you can check your storage account key and endpoint by printing them:

$ echo "Storage Account Key: $StorageAccountKey"

$ echo "Storage Endpoint URL: $StorageEndpointUrl"
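If jq isn’t installed on your machine, the two filters used above are easy to reproduce. The Python sketch below does what `jq -r '.[0] | .value'` and `jq -r '.primaryEndpoints | .dfs'` do: take the `value` of the first entry in the key list, and the `dfs` entry of `primaryEndpoints` from the account details. The function names and sample JSON are hypothetical, and the samples are trimmed to the fields the filters read.

```python
import json

# Python equivalents of the two jq filters above, for machines without jq.


def first_key_value(keys_json: str) -> str:
    """Same as: jq -r '.[0] | .value' (first key from `az storage account keys list`)."""
    return json.loads(keys_json)[0]["value"]


def dfs_endpoint(account_json: str) -> str:
    """Same as: jq -r '.primaryEndpoints | .dfs' (from `az storage account show`)."""
    return json.loads(account_json)["primaryEndpoints"]["dfs"]


# Hypothetical, trimmed sample output; real output has more fields.
SAMPLE_KEYS = '[{"keyName": "key1", "value": "abc123=="}, {"keyName": "key2", "value": "def456=="}]'
SAMPLE_ACCOUNT = (
    '{"name": "mystorage", "primaryEndpoints": '
    '{"blob": "https://mystorage.blob.core.windows.net/", '
    '"dfs": "https://mystorage.dfs.core.windows.net/"}}'
)

if __name__ == "__main__":
    print(first_key_value(SAMPLE_KEYS))
    print(dfs_endpoint(SAMPLE_ACCOUNT))
```

To use it on real output, pass the piped `az` output (for example `sys.stdin.read()`) to the matching function instead of the sample strings. The Azure CLI’s built-in `--query` option with `-o tsv`, e.g. `--query "[0].value" -o tsv`, is another way to avoid the jq dependency.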

Create a file share:

$ az storage share create \
    --account-name $StorageAccountName \
    --account-key $StorageAccountKey \
    --name $FileShareName

Create a Synapse Workspace:

$ az synapse workspace create \
    --name $SynapseWorkspaceName \
    --resource-group $ResourceGroup \
    --storage-account $StorageAccountName \
    --file-system $FileShareName \
    --sql-admin-login-user $SqlUser \
    --sql-admin-login-password $SqlPassword \
    --location $Region

The output of the command should show the successful creation:

{
  "connectivityEndpoints": {
    "dev": "https://<synapse-workspace-name>.dev.azuresynapse.net",
    "sql": "<synapse-workspace-name>.sql.azuresynapse.net",
    "sqlOnDemand": "<synapse-workspace-name>-ondemand.sql.azuresynapse.net",
    "web": "https://web.azuresynapse.net?workspace=%2fsubscriptions%2f<subscription-id>%2fresourceGroups%2f<resource-group-name>%2fproviders%2fMicrosoft.Synapse%2fworkspaces%2f<synapse-workspace-name>"
  },
  "defaultDataLakeStorage": {
    "accountUrl": "https://<storage-account-name>.dfs.core.windows.net",
    "filesystem": "<file-share-name>"
  },
  "id": "/subscriptions/<subscription-id>/resourceGroups/<resource-group-name>/providers/Microsoft.Synapse/workspaces/<synapse-workspace-name>",
  "identity": {
    "principalId": "<principal-id>",
    "tenantId": "<tenant-id>",
    "type": "SystemAssigned"
  },
  "location": "eastus",
  "managedResourceGroupName": "<managed-resource-group-id>",
  "name": "<synapse-workspace-name>",
  "provisioningState": "Succeeded",
  "resourceGroup": "<resource-group-name>",
  "sqlAdministratorLogin": "<admin-login>",
  "sqlAdministratorLoginPassword": "<admin-password>",
  "tags": null,
  "type": "Microsoft.Synapse/workspaces",
  "virtualNetworkProfile": null
}
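The `web` entry in `connectivityEndpoints` is the browser link to the workspace, and its `workspace` query parameter is simply the URL-encoded workspace resource ID, the same value as the `id` field. A small Python sketch that recovers the ID from the link; the function name and sample values are hypothetical stand-ins for the placeholders above.

```python
from urllib.parse import parse_qs, urlsplit


def workspace_id_from_web_url(web_url: str) -> str:
    """Return the decoded `workspace` query parameter of a Synapse web endpoint."""
    query = parse_qs(urlsplit(web_url).query)  # parse_qs also percent-decodes %2f -> /
    return query["workspace"][0]


# Hypothetical values mirroring the placeholder output above.
WEB_URL = (
    "https://web.azuresynapse.net?workspace=%2fsubscriptions%2fsub-123"
    "%2fresourceGroups%2fsynapse-rg%2fproviders%2fMicrosoft.Synapse"
    "%2fworkspaces%2fmyworkspace"
)
WORKSPACE_ID = (
    "/subscriptions/sub-123/resourceGroups/synapse-rg"
    "/providers/Microsoft.Synapse/workspaces/myworkspace"
)

if __name__ == "__main__":
    decoded = workspace_id_from_web_url(WEB_URL)
    print(decoded)
    print(decoded == WORKSPACE_ID)
```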

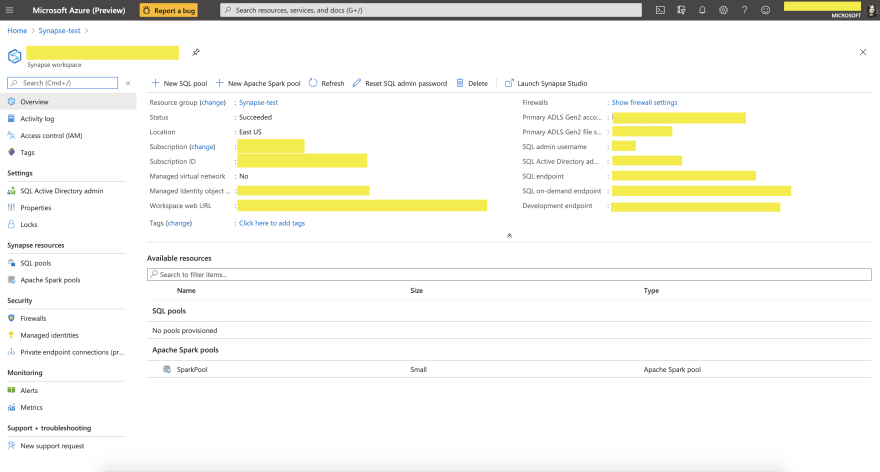

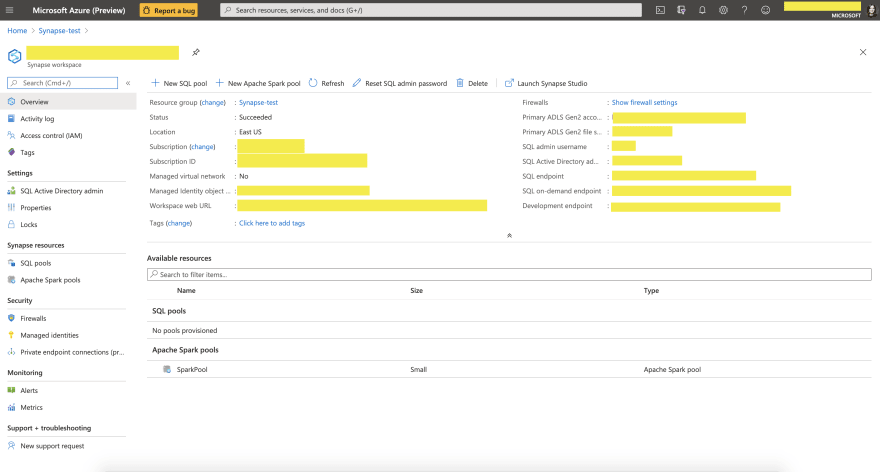

After you successfully created these resources, you should be able to go to Azure Portal, and navigate to the resource called $SynapseWorkspaceName within $ResourceGroup resource group. You should see a similar page:

What’s next?

You can now load data and experiment with it in Synapse Data Studio, create Spark or SQL pools and run analytics queries, connect to Power BI and visualize your data, and much more.

Stay tuned for the next articles to learn more! Thanks for reading!

If this article was interesting to you, follow @lenadroid on Twitter.



One of the most common concerns for public sector cloud adoption is secure isolation among tenants when multiple customer applications and data are stored on the same physical hardware, as described in our recent blog post on secure isolation. To provide customers with more detailed information about isolation in a multi-tenant cloud, Microsoft has published Azure guidance for secure isolation, which provides technical guidance to address common security and isolation concerns pertinent to cloud adoption. It also explores design principles and technologies available in Azure and Azure Government to help customers achieve their secure isolation objectives. The approach relies on isolation enforcement across compute, storage, and networking, as well as built-in user access control via Azure Active Directory and Microsoft’s internal use of security assurance processes and practices to correctly develop logically isolated cloud services. Read more on our Azure Gov blog here.

About the Author

As Principal Program Manager with Azure Government Engineering, @StevanVidich is focused on Azure security and compliance. He publishes and maintains Azure Government documentation and works on expanding Azure compliance coverage.



The other week, I was asked to make a short demo video about creating a custom image for deployment in Microsoft Azure, to support some new content going into Microsoft Learn. Since this involves making an on-prem virtual machine first and then preparing it for upload into Azure, I figured I would make a new Windows Server 2019 Core image (the default installation option) instead of a full desktop one. I always found it strange that you didn’t have an option to deploy a Core installation of Windows Server from the Azure Marketplace.

Time to fix that.

Why Windows Server Core? Windows Server Core implementations are:

- Smaller in disk footprint, so potentially cheaper to run in an Azure VM

- Smaller in attack surface, since fewer binaries are installed and fewer services run inside the VM

- Less demanding on resources when running workloads, so potentially cheaper to run in an Azure VM

- More “remote friendly” than earlier implementations, with management tools, remote PowerShell, and remote RDP

- Able to run most workloads you might want to run on-prem or in Azure.

I thought this was going to be a simple process documented in a single doc. Little did I know that the info I needed was spread across three different official docs, plus some good old trial and error. To save you time, I’ve pulled the main steps together here and included links back to the source documents in case you want more detailed information.

The TL;DR of this process is the following:

- Build a Hyper-V VM image of Windows Server 2019 with a Core interface.

- Configure specific settings unique to uploading a Custom VM Image for Azure

- Generalize your local VM image and shut it down

- Upload VHD into a new Azure Managed Disk in your Azure Subscription

- Create a VM Image for deployment using the Azure Managed Disk

- Deploy a new VM using the uploaded image

From there, you can make new VMs from that custom uploaded image. Management-wise, the deployed VMs are compatible with Azure Bastion, Just-In-Time remote access, remote PowerShell, and PowerShell commands run via the Azure portal.

Let’s get started!

Build a Hyper-V VM image of Windows Server 2019 with a Core interface

This should be self-explanatory. You have a server running Hyper-V, and you make a new Windows Server 2019 VM using the Windows Server 2019 install media (ISO file). The default install experience is a Core install (i.e., no desktop experience), and you create a new password for the local administrator account to further configure the system. To keep things simple, I initially created a Generation 1 VM for the install and, for the most part, kept the defaults for the base creation process.

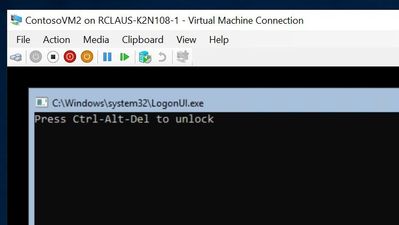

I don’t know what it is, but I really like the simple logon screen of a Windows Server Core box, if I have to log on to the console at all. I need to do some tasks from the Hyper-V host before customizing the local VM, so I’ll shut it down for now.

Configure specific settings unique to uploading a Custom VM Image for Azure

For this example, I am taking this base image as is and making the recommended configuration changes from the “Prepare a Windows VHD or VHDX to upload to Azure” document. These include:

- If you made your VM with the Hyper-V Create VM Wizard, you probably have a Generation 1 VM with a dynamically expanding VHDX file. You NEED to convert this to a VHD file and change it from a dynamically expanding disk to a FIXED size disk. Keep things simple and use the GUI console to do this, or follow the instructions in the document referenced above to go the PowerShell route.

- With the VM shut down, edit the VM settings and select the hard disk. Choose the Edit button to manage the disk.

- Select Convert to convert the disk. Select VHD for a max size of 2 TB, but we’re going to go smaller here.

- Select Fixed Size and choose the appropriate size (I went with 126 GB)

- Create a new name for the VHD as it makes a COPY of the disk.

- Because converting the disk from dynamic to fixed creates a new disk, you need to edit the VM settings to reference THIS new fixed-size disk before proceeding. Once this is updated, boot the machine and log on as the local administrator account.

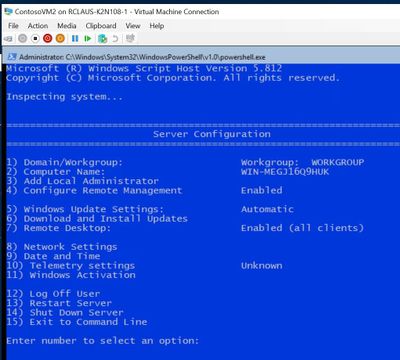

- From the command prompt, start a PowerShell prompt to continue prepping this VM

- Run the System File Checker utility

Sfc.exe /scannow

- Run and install all Windows updates. I find it’s easiest to use SCONFIG to set Windows Update to Automatic and check for updates.

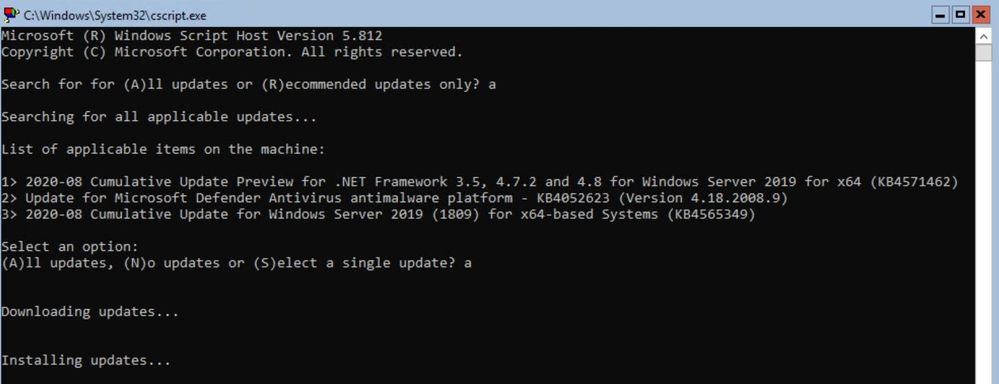

- I can force an update check with option 6. In this case, I had three downloads/updates to process, which included a reboot.

At this point, the document goes through an extensive list of checks and settings you should review and implement in your base image to ensure a smooth deployment. I am not going to list them all here; instead, I refer you to the “Prepare a Windows VHD or VHDX to upload to Azure” document.

Note: You will get some errors depending on whether your image is domain joined or has group policies in place. I got a number of red error dumps from PowerShell commands, but they were expected since my VM is not domain joined.

OK – we’re ready to go, no turning back now.

Generalize your local VM image and shut it down

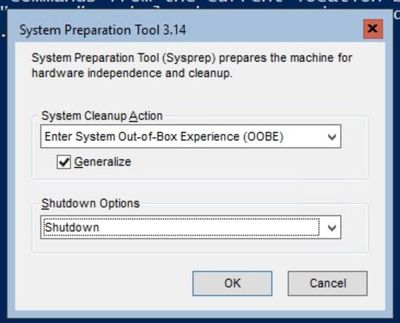

You have prepared your machine, set it up for optimal Azure compatibility, and tested it for remote connectivity. Time to generalize it with good old sysprep.exe. Log on to the box and change to the C:\Windows folder. You can save a bit of space (or a lot of space if this image was an upgrade) by deleting the C:\Windows\Panther directory. Once that’s done, change into the C:\Windows\System32\Sysprep folder and run sysprep.exe.

Make sure you check the Generalize checkbox and choose to Shutdown instead of Reboot.

OK – you are all set for an UPLOAD to Azure now.
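Before you upload, it’s worth confirming that the file you’re about to send really is the fixed-size VHD from the conversion step, since that step is easy to get wrong. A VHD ends with a 512-byte footer whose layout is defined in Microsoft’s VHD image format specification: an 8-byte `conectix` cookie at offset 0, the current (virtual) size at offset 48, and the disk type at offset 60 (2 = fixed, 3 = dynamic, 4 = differencing). The Python sketch below decodes those fields. The function names are hypothetical, and `make_footer` only builds a synthetic footer for the demo; it does not produce a usable disk.

```python
import os
import struct

FOOTER_SIZE = 512
# Disk type codes from the VHD specification.
DISK_TYPES = {2: "fixed", 3: "dynamic", 4: "differencing"}


def parse_vhd_footer(footer: bytes) -> dict:
    """Decode the VHD footer fields relevant to an Azure upload.

    Offsets per the VHD spec: cookie at 0 (8 bytes), current (virtual) size
    at 48 (8-byte big-endian integer), disk type at 60 (4-byte big-endian integer).
    """
    if len(footer) != FOOTER_SIZE:
        raise ValueError("a VHD footer is exactly 512 bytes")
    (virtual_size,) = struct.unpack(">Q", footer[48:56])
    (disk_type,) = struct.unpack(">I", footer[60:64])
    return {
        "is_vhd": footer[0:8] == b"conectix",
        "disk_type": DISK_TYPES.get(disk_type, f"unknown ({disk_type})"),
        "virtual_size": virtual_size,
    }


def read_vhd_footer(path: str) -> dict:
    """Read and decode the last 512 bytes of a .vhd file."""
    with open(path, "rb") as f:
        f.seek(-FOOTER_SIZE, os.SEEK_END)
        return parse_vhd_footer(f.read(FOOTER_SIZE))


def make_footer(virtual_size: int, disk_type: int) -> bytes:
    """Hypothetical demo helper: a synthetic footer (checksum and other fields left zero)."""
    footer = bytearray(FOOTER_SIZE)
    footer[0:8] = b"conectix"
    footer[48:56] = struct.pack(">Q", virtual_size)
    footer[60:64] = struct.pack(">I", disk_type)
    return bytes(footer)


if __name__ == "__main__":
    print(parse_vhd_footer(make_footer(126 * 1024**3, 2)))
    # On the real disk, something like: print(read_vhd_footer("ContosoVM2.vhd"))
```

A VHDX file uses a different layout, so pointing `read_vhd_footer` at one should report `is_vhd` as False, a quick hint that the conversion to VHD didn’t happen.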

Upload VHD into a new Azure Managed Disk in your Azure Subscription

NOTE: I only ever use Managed Disks for my virtual machines now, since it saves me from having to architect a strategy around how many VM disks can be in each storage account before maxing out my throughput OR having issues with storage cluster failures… Just keep it simple and promise me you will always use Azure Managed Disks for your VMs.

You will need an existing resource group in Azure to store these VM images in, and you will want its location to be the same region where you will be using this image. I assume you are working on the same system where the VHD is located, OR that you have copied the VHD to your admin workstation before uploading it.

On this system, you will need to ensure you have the latest version of AzCopy v10 and the Azure PowerShell modules installed. We’re following the procedures outlined in the “Upload a VHD to Azure” document.

To upload the image, you first have to create an empty Standard HDD managed disk in your pre-created resource group that is the same size as your soon-to-be-uploaded VHD. These example commands get your VHD file size and set the configuration parameters required for making the disk. For this to work, replace <fullVHDFilePath>, <yourdiskname>, <yourresourcegroupname>, and <yourregion> in the example below with your own information.

$vhdSizeBytes = (Get-Item "<fullVHDFilePath>").length

$diskconfig = New-AzDiskConfig -SkuName 'Standard_LRS' -OsType 'Windows' -UploadSizeInBytes $vhdSizeBytes -Location '<yourregion>' -CreateOption 'Upload'

New-AzDisk -ResourceGroupName '<yourresourcegroupname>' -DiskName '<yourdiskname>' -Disk $diskconfig

In my example, the complete commands were:

$vhdSizeBytes = (Get-Item "C:\vms\ContosoVM2.vhd").length

$diskconfig = New-AzDiskConfig -SkuName 'Standard_LRS' -OsType 'Windows' -UploadSizeInBytes $vhdSizeBytes -Location 'eastus' -CreateOption 'Upload'

New-AzDisk -ResourceGroupName ContosoResourceGroup -DiskName ContosoVM2 -Disk $diskconfig
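`-UploadSizeInBytes` is the exact length of the VHD file, which is why the commands read it with `Get-Item`. For a fixed VHD, that length is the disk’s virtual size plus the 512-byte footer, and Azure expects the virtual size to be a whole number of MiB. As a minimal sketch, here is the same size lookup in Python with that alignment check added; the function names are hypothetical, and the 1 MiB rule is taken from the VHD preparation guidance as I understand it.

```python
import os

MIB = 1024 * 1024
FOOTER_SIZE = 512  # a fixed VHD ends with a 512-byte footer


def is_aligned_upload_size(file_size: int) -> bool:
    """True if the virtual size (file size minus footer) is a positive multiple of 1 MiB."""
    virtual_size = file_size - FOOTER_SIZE
    return virtual_size > 0 and virtual_size % MIB == 0


def upload_size_bytes(vhd_path: str) -> int:
    """Return the value for -UploadSizeInBytes, refusing misaligned files."""
    size = os.path.getsize(vhd_path)  # same number as (Get-Item ...).length
    if not is_aligned_upload_size(size):
        raise ValueError(f"{vhd_path}: {size} bytes is not 1 MiB-aligned plus a 512-byte footer")
    return size


if __name__ == "__main__":
    # A 126 GiB fixed VHD is 126 GiB of disk data plus the footer.
    print(is_aligned_upload_size(126 * 1024**3 + FOOTER_SIZE))
    print(is_aligned_upload_size(126 * 1024**3))
```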

Next, you need to grant SAS (shared access signature) write access to the empty disk:

$diskSas = Grant-AzDiskAccess -ResourceGroupName ContosoResourceGroup -DiskName ContosoVM2 -DurationInSecond 86400 -Access 'Write'

$disk = Get-AzDisk -ResourceGroupName ContosoResourceGroup -DiskName ContosoVM2

Now upload the local VHD file to the Azure managed disk. Don’t forget to replace <fullVHDFilePath> with your local VHD file path:

AzCopy.exe copy "<fullVHDFilePath>" $diskSas.AccessSAS --blob-type PageBlob

Once the AzCopy command completes, you need to revoke the SAS access to change the state of the managed disk and enable it to function as an image for deployment.

Revoke-AzDiskAccess -ResourceGroupName ContosoResourceGroup -DiskName ContosoVM2

Create a VM Image for deployment using the Azure Managed Disk

OK, final stretch. You’ve made a Windows Server 2019 Core image locally, prepared it for use in Azure, generalized it, and uploaded it into your Azure subscription as a managed disk. Now you have to identify that managed disk as a VM image that can be deployed. We’re following our third document on this, “Upload a generalized VHD and use it to create new VMs in Azure”.

- You need to get the information about the managed disk you just created. In my case, it’s in ContosoResourceGroup and is named ContosoVM2. The command to run and build the variable is:

$disk = Get-AzDisk -ResourceGroupName ContosoResourceGroup -DiskName ContosoVM2

- Set some more variables, including the location where you will use the image, the image name, and the resource group it will reside in. In my case, I used the following:

$location = 'East US'

$imageName = 'ContosoVM2Image'

$rgName = 'ContosoResourceGroup'

- Now create the image configuration:

$imageConfig = New-AzImageConfig -Location $location

$imageConfig = Set-AzImageOsDisk -Image $imageConfig -OsState Generalized -OsType Windows -ManagedDiskId $disk.Id

- FINALLY, create the image object in your subscription for deployment from the portal, PowerShell, Azure CLI, or an Azure Resource Manager template:

$image = New-AzImage -ImageName $imageName -ResourceGroupName $rgName -Image $imageConfig

And with that – we are finally DONE.

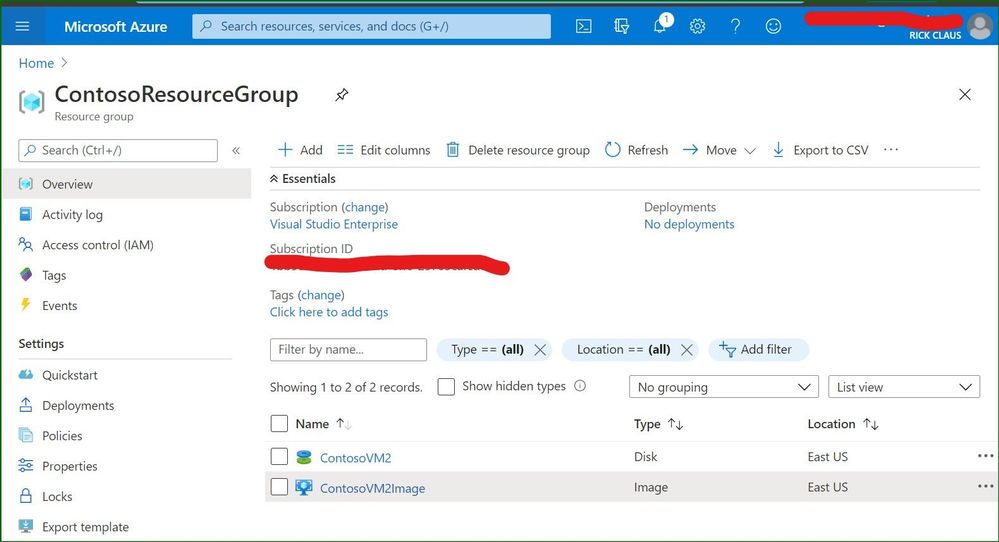

If you open the Azure portal and explore the resource group where you uploaded the VHD, you should see something similar to this portal screenshot: the uploaded disk and an image definition that you can use to deploy new VMs.

In this blog post, the custom local VM was a Windows Server 2019 Core install that was customized, generalized, uploaded, and converted into a custom image for use in Azure. Because I took the time to build my own custom image and upload it into my Azure subscription, I can now deploy as many Windows Server 2019 Core boxes as I need for my projects.
