
A quick post about notebooks and Spark. A customer was running some Spark jobs on Synapse and hit the error "Livy is dead".

That is sad, and the customer was not sure why it was dead.

So we started by going through the logs available in this section of Synapse Studio:

https://docs.microsoft.com/en-us/azure/synapse-analytics/monitoring/apache-spark-applications

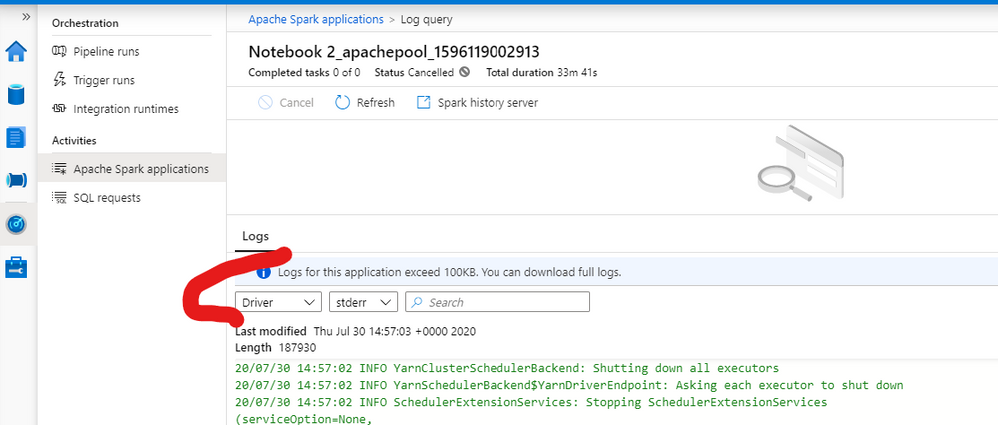

We checked the driver logs, as shown in Fig 1:

Fig 1: Driver Logs

We spotted this error (note that the Livy logs are also available in this same section):

java.lang.OutOfMemoryError
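If you download the driver log as text, a quick scan can save some scrolling. The snippet below is only a minimal sketch: the helper name `find_oom_lines` and the sample log lines are made up for illustration, and the real log layout in Synapse Studio may differ.

```python
import re

# Matches the exception class plus the optional detail after the colon,
# e.g. "java.lang.OutOfMemoryError: Java heap space".
OOM_PATTERN = re.compile(r"java\.lang\.OutOfMemoryError(?::\s*(?P<detail>.+))?")


def find_oom_lines(log_text):
    """Return (line_number, detail) for every OutOfMemoryError in the log text."""
    hits = []
    for number, line in enumerate(log_text.splitlines(), start=1):
        match = OOM_PATTERN.search(line)
        if match:
            detail = (match.group("detail") or "no detail").strip()
            hits.append((number, detail))
    return hits


# Made-up sample lines (not from the customer's logs), only to show the output shape.
sample_log = """\
21/03/01 10:00:01 INFO SparkContext: Running Spark
21/03/01 10:05:42 ERROR Executor: Exception in task 0.0
java.lang.OutOfMemoryError: Java heap space
21/03/01 10:05:43 ERROR Utils: Uncaught exception
java.lang.OutOfMemoryError
"""

for number, detail in find_oom_lines(sample_log):
    print(f"line {number}: {detail}")
```

The detail after the colon, when present, is a hint about which kind of memory ran out, which helps when deciding how much more room the pool needs.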

The customer was running the job on a pool with the Small node size, which has 4 vCPUs and 32 GB of memory. So we changed the pool to give the customer more room in terms of memory.

You can adjust this configuration on the blade shown in Fig 2:

Fig 2: Pool Settings

The node size is defined when the pool is created, as you can see here: https://docs.microsoft.com/en-us/azure/synapse-analytics/quickstart-create-apache-spark-pool-portal

But you can adjust it afterward.
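To make the "more room" step concrete, here is a small sketch of picking the next node size up. Only the Small entry (4 vCPUs, 32 GB) comes from this post; the other sizes in the table are assumptions based on the Synapse documentation and should be checked against what the portal offers. The helper `next_node_size` is hypothetical and does not call any Azure API.

```python
# Node sizes as (name, vCores, memory in GB).
# Small is the size mentioned in this post; the rest are ASSUMPTIONS
# that should be verified against the pool settings blade.
NODE_SIZES = [
    ("Small", 4, 32),
    ("Medium", 8, 64),
    ("Large", 16, 128),
    ("XLarge", 32, 256),
]


def next_node_size(current_name):
    """Return the next larger entry in NODE_SIZES, or None if already at the top."""
    names = [name for name, _, _ in NODE_SIZES]
    index = names.index(current_name)  # raises ValueError for unknown names
    if index + 1 < len(NODE_SIZES):
        return NODE_SIZES[index + 1]
    return None


print(next_node_size("Small"))
```

Going up one size at a time and rerunning the job is usually enough to tell whether memory was the real problem.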

That is it!

Liliam

UK Engineer

