
Microsoft Rocket, an open-source project from Microsoft Research, provides cascaded video pipelines that, combined with Live Video Analytics from Azure Media Services, make it easy and affordable for developers to build video analytics applications in their IoT solutions. Unprecedented advances in computer vision and machine learning have opened opportunities for video analytics applications that are of widespread interest to society, science, and business. While computer vision models have become more accurate and capable, they have also become resource-hungry and expensive to run for 24/7 analysis of video. As a result, live video analytics across multiple cameras requires a large on-premises computational footprint built from expensive edge compute hardware (CPU, GPU, etc.).

Total cost of ownership (TCO) for video analytics is an important consideration and pain point for our customers. With that in mind, we integrated Live Video Analytics from Azure Media Services and Microsoft Rocket (from Microsoft Research) to enable an order-of-magnitude improvement in throughput per edge core (frames per second analyzed per CPU/GPU core), while maintaining the accuracy of the video analytics insights.

In a previous blog, we introduced Azure Live Video Analytics (LVA), a state-of-the-art platform to capture, record, and analyze live videos and publish the results (video and/or video analytics) to Azure services (in the cloud and/or on the edge). With Live Video Analytics’ flexible live video workflows, developers are now empowered to analyze video with a specialized AI model of their choice, and build truly hybrid (cloud + edge) applications that can analyze live video in the customer’s environment and combine video analytics on their camera streams with data from other IoT sensors and/or business data to build enterprise-grade solutions.

What is Microsoft Rocket?

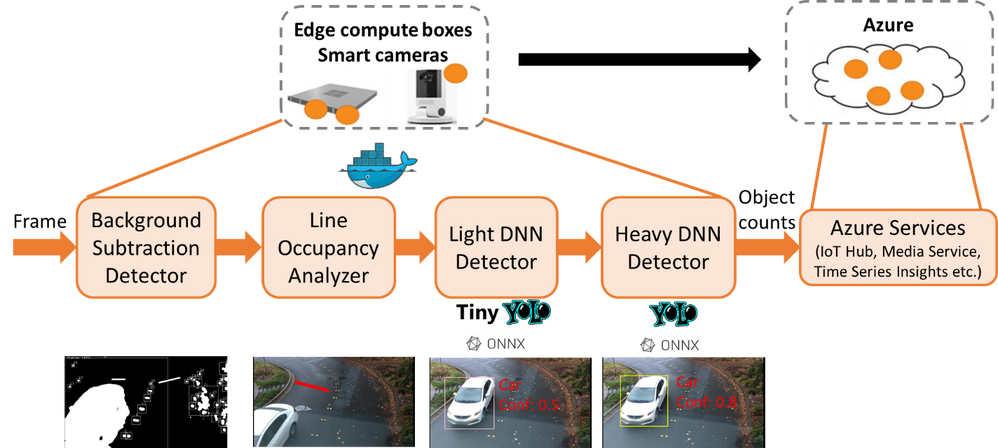

Microsoft Rocket is a video analytics system that makes it easy and affordable for anyone with a video stream to obtain intelligent and actionable outputs from video analytics. Microsoft Rocket provides cascaded video pipelines for efficiently processing live video streams. In the cascaded video pipeline, a decoded frame is first analyzed with a relatively inexpensive light Deep Neural Network (DNN) such as Tiny YOLO. A heavy DNN such as YOLOv3 is invoked only when the light DNN is not sufficiently confident, which keeps GPU usage efficient. You can plug any ONNX, TensorFlow, or Darknet DNN model into Microsoft Rocket. You can also augment the cascaded pipeline with a simpler CPU-based motion filter based on OpenCV background subtraction, as shown in the figure.

In the cascaded video analytics pipeline in the above figure, decoded video frames are first filtered using background subtraction focused on a line of interest in the frame, before even the low-resource light DNN detector is called. Frames that require further processing are then passed to a heavy DNN detector.
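The control flow of such a cascade can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Rocket's implementation: the names `has_motion`, `run_cascade`, and `confidence_threshold` are hypothetical, the motion check is a crude pixel-difference stand-in for OpenCV background subtraction, the line-of-interest logic is omitted, and the two detectors are passed in as plain callables that return a `(label, confidence)` pair.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple

# A grayscale frame as rows of pixel intensities (hypothetical representation).
Frame = Sequence[Sequence[int]]
# A detector takes a frame and returns (label, confidence). Hypothetical interface.
Detector = Callable[[Frame], Tuple[str, float]]


@dataclass
class Detection:
    label: str
    confidence: float
    stage: str  # "light" or "heavy": which DNN produced the result


def has_motion(frame: Frame, background: Frame,
               pixel_delta: int = 25, min_changed: float = 0.01) -> bool:
    """Crude stand-in for background subtraction: report motion when enough
    pixels differ from a reference background frame."""
    total = 0
    changed = 0
    for row, bg_row in zip(frame, background):
        for pixel, bg_pixel in zip(row, bg_row):
            total += 1
            if abs(pixel - bg_pixel) > pixel_delta:
                changed += 1
    return total > 0 and changed / total >= min_changed


def run_cascade(frame: Frame, background: Frame,
                light_dnn: Detector, heavy_dnn: Detector,
                confidence_threshold: float = 0.8) -> Optional[Detection]:
    # Stage 1: cheap CPU-only filter. Frames without motion never reach a DNN.
    if not has_motion(frame, background):
        return None
    # Stage 2: the light DNN handles the frame if it is confident enough.
    label, confidence = light_dnn(frame)
    if confidence >= confidence_threshold:
        return Detection(label, confidence, "light")
    # Stage 3: only uncertain frames pay the cost of the heavy DNN.
    label, confidence = heavy_dnn(frame)
    return Detection(label, confidence, "heavy")
```

In this sketch the savings come from ordering: each stage is more expensive than the one before it, so the heavy detector runs only on the frames that the cheaper stages could not resolve. The threshold value of 0.8 is an arbitrary placeholder and would need tuning per video.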

Rocket plus Live Video Analytics’ cascaded video analytics pipelines lead to considerable efficiency when processing video streams (as we will quantify shortly). By filtering out frames with limited relevant information and being judicious about invoking resource-intensive operations on the GPU, the cascade allows the analytics not only to keep up with the frame rates of the incoming video stream, but also to pack more video analytics pipelines onto a single edge compute box.

Up to 17X improvement in efficiency!

We benchmarked our performance and compared it against naïve video analytics pipelines that execute the YOLOv3 DNN on each frame of the video. As shown in the graph below, the Rocket plus Live Video Analytics cascaded pipeline is up to nearly 17X more efficient in its processing, bumping up the video analytics pipeline’s processing rate from 10 frames/second with the YOLOv3 DNN to over 200 frames/second. Benchmark results across the NVIDIA T4 (which is available in Azure Stack Edge), K80, and Tesla P100 GPUs show that the gains in efficiency hold across the different GPU classes. Further, by carefully tuning simple knobs for downsizing and sampling frames, the video analytics rate goes up to nearly 700 frames/second in our benchmarks with little loss in accuracy (as shown in the tables below).
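To make the two knobs concrete, here is a rough Python sketch of what frame sampling and frame downsizing do to the input before any detector runs. The function names and parameters (`sample_frames`, `every_nth`, `downsize`, `factor`) are hypothetical and are not Rocket's configuration options; the downsizing here simply drops rows and columns, whereas a real pipeline would more likely use a proper image resize.

```python
from typing import Iterable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def sample_frames(frames: Iterable[T], every_nth: int) -> Iterator[T]:
    """Frame sampling: keep one frame out of every `every_nth`, drop the rest."""
    if every_nth < 1:
        raise ValueError("every_nth must be at least 1")
    for index, frame in enumerate(frames):
        if index % every_nth == 0:
            yield frame


def downsize(frame: Sequence[Sequence[int]], factor: int) -> List[List[int]]:
    """Frame downsizing: keep every `factor`-th row and column, which shrinks
    the pixel count by roughly factor squared."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    return [list(row[::factor]) for row in frame[::factor]]
```

Sampling reduces how many frames are analyzed, while downsizing reduces the work per analyzed frame, so the two effects multiply. How far either can be pushed before accuracy suffers depends on the video.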

As a result of these efficiency improvements, an edge compute box that can process only three video streams in parallel when the YOLOv3 object detection model is executed on each frame can process 17 video streams in parallel with Rocket plus Live Video Analytics’ cascaded pipelines. This assumes each stream must be processed at a rate of at least 10 frames/second for acceptable accuracy, a requirement we have seen in our prior engagements on traffic video analytics.
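The stream count follows from dividing the box's aggregate processing rate by the per-stream requirement. A back-of-the-envelope sketch, assuming throughput is shared evenly across streams (the function name is hypothetical, and the aggregate rates in the usage note are illustrative values chosen to match the stream counts above, not benchmark measurements):

```python
def max_parallel_streams(box_frames_per_second: float,
                         required_fps_per_stream: float = 10.0) -> int:
    """Number of streams a box can serve if every stream needs at least
    `required_fps_per_stream` and throughput is split evenly."""
    if required_fps_per_stream <= 0:
        raise ValueError("required_fps_per_stream must be positive")
    return int(box_frames_per_second // required_fps_per_stream)
```

For example, a box sustaining an aggregate 30 frames/second supports `max_parallel_streams(30)` = 3 streams at the 10 frames/second floor, while one sustaining 170 frames/second supports 17.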

Benchmark results of Live Video Analytics and Rocket containers, with improvement factors marked alongside each bar. Live Video Analytics plus Rocket achieves ~17X higher processing rates (measured in frames/second) compared to naïve solutions that run the YOLOv3 DNN on each frame of the video. We measure the performance of two cascaded modes of Rocket after background subtraction (BGS): “Full Cascade” (BGS → Light DNN → Heavy DNN) and “Heavy Cascade” (BGS → Heavy DNN). In fact, when counting cars on a freeway, BGS alone can suffice, since the freeway lanes are unlikely to contain any other objects and so no confirmation from the DNNs that the objects are cars is required. The choice of pipeline and parameters is video-specific.

Impact of varying the frame resolution and frame sampling on the processing rates. Note that for all the experiments in the table above, there was no drop in the accuracy of the video analytics.

Check out the code and take it for a spin!

You can now take advantage of the open-source reference app with extensive instructions to build and deploy Live Video Analytics and Rocket containers for highly efficient live video analytics applications on the edge (as shown in the architecture figure below). The project repository contains helpful Jupyter notebooks as well as a sample video file for easy testing of an object counter for various object categories crossing a line of interest in the video.

The above figure shows the architecture where the Azure Live Video Analytics container works in tandem with Microsoft Rocket’s container (with cascaded video analytics).

