This article is contributed. See the original author and article here.

Synapse Serverless SQL Pool is a serverless query engine platform that allows you to run SQL queries on files or folders placed in Azure storage without duplicating or physically storing the data.

There are broadly three ways to access ADLS using synapse serverless.

- Azure Active Directory

- Managed Identity

- SAS Token

The user would need to be assigned to one of the RBAC role : Azure storage blob data ownercontributorreader role. However, there might be scenario that you would or could not provide access to the ADLS account or container and provide access to granular level directories and folder levels and not complete storage container or blob.

Scenario

You have a data lake that contains employee and social feed data. You have data residing in an employee folder that is used by HR team members and twitter for live social feeds that is usually used by marketing folks. If you use SAS token or RBAC, you cannot control to the folder level.

How do you allow users to perform data exploration using synapse serverless with fine grain control on underlying storage.

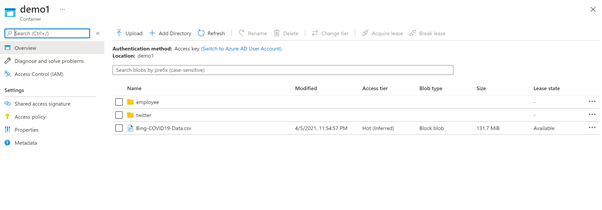

Fig1. A storage account with container demo contains two folder employee and twitter.

Solution

To solve this challenge, you can use directory scoped SAS token along with database scope credentials in synapse serverless.

Directory scoped SAS provides constrained access to a single directory when using ADLS Gen2. This can be used to provide access to a directory and the files it contains. Previously a SAS could be used to provide access to a filesystem or a file but not a directory. This added flexibility allows more granular and easier access privilege assignment.

| Storage Account | Container | Folder | File |

AAD | YES (RBAC on Account) | YES (RBAC on Container) | YES (via POSIX ACLs) | YES (via POSIX ACLs) |

Managed Identity (same as AAD) | YES (RBAC on Account) | YES (RBAC on Container) | YES (via POSIX ACLs) | YES (via POSIX ACLs) |

SAS Token | YES (Scope – Account) | YES(Scope – Container) | YES (Scope – Directory and Files) | YES (Scope – Files) |

For

How to create Directory based SAS token

You can do via SDK or portal. To create a SAS token via portal.

a. Navigate to the folder that you would like to provide access and right click on the folder and select generate SAS token.

Fig 2 : Directory scope selection for employee folder

b. Select permissions Read, list and execute to read and load all the files in the folder. Provide the expiration date and click generate SAS token and URL. Copy blob SAS token.

Fig 3 : Generate SAS token.

The step b. can be similar to create storage SAS token . Earlier, it used to apply to storage account, now you can reduce the surface area to directory and files as well.

Use Serverless with directory SAS token

Once the storage account access has been configured using SAS token, the next to access the data using synapse serverless engine.

On Azure synapse Studio, go to develop and SQL Script.

a. Create a master key, if it is not there.

— create master key that will protect the credentials:

CREATE MASTER KEY ENCRYPTION BY PASSWORD = <enter very strong password here>

b. Create a database scope credential using the sas token. You would like to access HR data. So use the blog storage sas token generated for Employee directory.

CREATE DATABASE SCOPED CREDENTIAL mysastokenemployee

WITH IDENTITY = ‘SHARED ACCESS SIGNATURE‘,

SECRET = ‘<blob sas token>‘

c. Create external data source till the container path demo1 and use credential mysastokenemployee

CREATE EXTERNAL DATA SOURCE myemployee

WITH ( LOCATION = ‘https:// <storageaccountname>.dfs.core.windows.net/<filesystemname>‘,

CREDENTIAL = mysastokenemployee

)

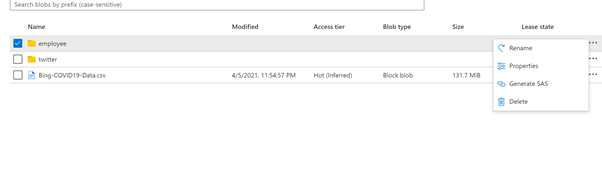

d. Once the data is created, lets read the data using OPENROWSET BULK in serverless

SELECT * FROM OPENROWSET(

BULK ‘/employee/*.csv’,

DATA_SOURCE = ‘myemployee’,

FORMAT =’CSV’,

parser_version = ‘2.0’,

HEADER_ROW = TRUE

) AS Data

Fig 4 : Openrowset bulk output

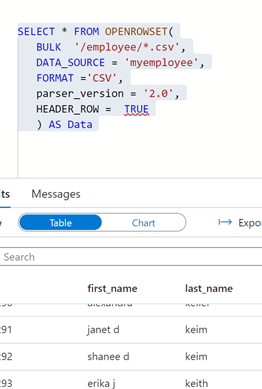

e. Now to confirm whether the scope of the SAS token is only restricted to employee folder, lets use the same data source and database credential to access file in twitter folder.

SELECT * FROM OPENROWSET(

BULK ‘/twitter/StarterKitTerms.csv’,

DATA_SOURCE = ‘myemployee’,

FORMAT =’CSV’,

parser_version = ‘2.0’,

HEADER_ROW = TRUE

) AS Data

Fig 5: Bulk openrowset access failure

f. You will encounter an error because the scope of the SAS token was restricted to employee folder.

Now, to access twitter folder for the marketing representative, create a database scoped credential using a sas token for twitter folder. Repeat the steps “How to create Directory based SAS token” for twitter folder.

CREATE DATABASE SCOPED CREDENTIAL mysastokentwitter

WITH IDENTITY = ‘SHARED ACCESS SIGNATURE‘,

SECRET = ‘<blob sas token>‘

g. Create an external data source using the scope credential created for twitter directory.

CREATE EXTERNAL DATA SOURCE mytwitter

WITH ( LOCATION = ‘https://<storageaccountname>.dfs.core.windows.net/<filesystemname>/‘,

CREDENTIAL = mysastokentwitter

)

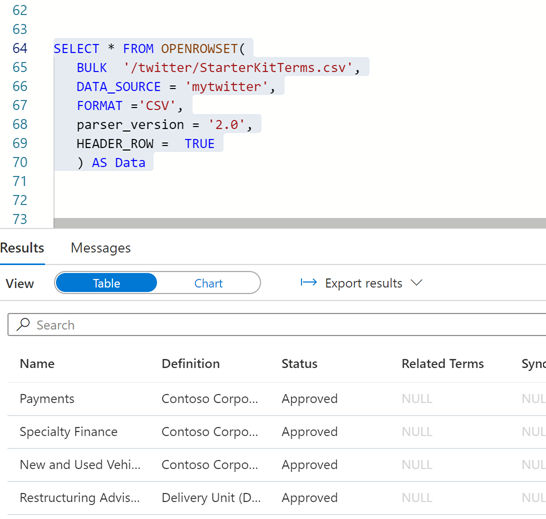

h. Once the data source is created , you can query the twitter data using newly created data source.

SELECT * FROM OPENROWSET(

BULK ‘/twitter/StarterKitTerms.csv’,

DATA_SOURCE = ‘mytwitter’,

FORMAT =’CSV’,

parser_version = ‘2.0’,

HEADER_ROW = TRUE

) AS Data

Fig 6 : Twitter data accessed using the directory sas token

Summary

- In a central data lake environment or any file store , directory sas token is a great way of reducing the access surface area without providing access at storage root or account level.

- Separation of duties and roles can be easily achieved as data access is controlled at storage level and synapse serverless

- Create sas token with read, list and execute to minimize the impact of accidental deletion etc. sharing the sas token should be done in a secured manner.

- Expire sas token, regenerate new token and recreate the scope credentials frequently.

- Serverless is great way of data exploration without spinning any additional SQL resources. You would be charged based on data processed by each query.

- Managing too many sas tokens will be challenge. So, use a hybrid approach of breaking the large data lake to smaller pools or mesh and grant RBAC access control and blend with SAS token for regulated users is best way of scaling the serverless capability.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

Recent Comments