by Scott Muniz | Sep 8, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Documentation says:

“SQL on-demand is serverless, hence there is no infrastructure to setup or clusters to maintain. A default endpoint for this service is provided within every Azure Synapse workspace, so you can start querying data as soon as the workspace is created. There is no charge for resources reserved, you are only being charged for the data scanned by queries you run, hence this model is a true pay-per-use model.

If you use Apache Spark for Azure Synapse in your data pipeline, for data preparation, cleansing or enrichment, you can query external Spark tables you’ve created in the process, directly from SQL on-demand.” https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/on-demand-workspace-overview

About Spark: https://docs.microsoft.com/en-us/azure/synapse-analytics/spark/apache-spark-overview

“Apache Spark provides primitives for in-memory cluster computing. A Spark job can load and cache data into memory and query it repeatedly. In-memory computing is much faster than disk-based applications. Spark also integrates with multiple programming languages to let you manipulate distributed data sets like local collections. There’s no need to structure everything as map and reduce operations.”

This lab uses Synapse to load data into a Spark table and then query it from SQL on-demand (SQL OD).

This was an option for a customer who wanted to build some reports by querying from SQL OD.

You need:

1) A Synapse workspace (SQL OD will be there after the workspace is created)

2) Spark added to the workspace

You do not need:

1) A SQL pool.

Step by Step

Launch Synapse Studio and create a new notebook. Add the following code (Python):

%%pyspark

from pyspark.sql.functions import col, when

df = spark.read.load('abfss://<container>@<storageAccount>.dfs.core.windows.net/folder/file.snappy.parquet', format='parquet')

df.createOrReplaceTempView("pysparkdftemptable")

Add some magic by including another cell and running Scala in it:

%%spark

spark.sql("CREATE DATABASE IF NOT EXISTS SeverlessDB")

val scala_df = spark.sqlContext.sql ("select * from pysparkdftemptable")

scala_df.write.mode("overwrite").saveAsTable("SeverlessDB.Parquet_file")

Run.

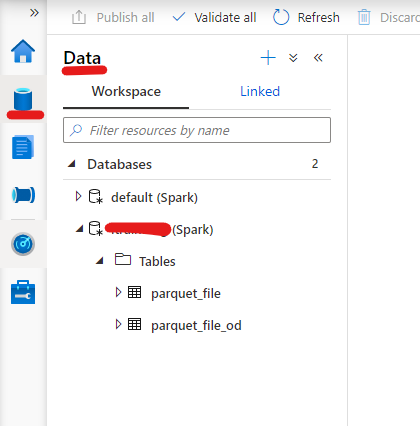

If everything ran successfully, you should be able to see your new database and table under the Data option:

Now for the easy part. Query the table (right-click -> New SQL Script -> Select):

Super quick and easy.
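A query along these lines can also be written by hand. The sketch below builds the T-SQL text in Python, under the assumption that Spark tables show up in SQL on-demand under the `dbo` schema with lower-cased database and table names; that is worth verifying in your own workspace. `quote_ident` and `build_select_top` are hypothetical helpers for illustration, not part of any Synapse SDK.

```python
def quote_ident(name: str) -> str:
    """Wrap a T-SQL identifier in brackets, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def build_select_top(database: str, table: str, top: int = 100) -> str:
    """Build a three-part-name SELECT for a Spark table queried from SQL on-demand."""
    if top <= 0:
        raise ValueError("top must be positive")
    # Assumption: names are lower-cased on the Spark side and the table
    # is exposed under the dbo schema on the SQL side.
    parts = [database.lower(), "dbo", table.lower()]
    qualified = ".".join(quote_ident(part) for part in parts)
    return f"SELECT TOP {top} * FROM {qualified};"


query = build_select_top("SeverlessDB", "Parquet_file")
print(query)
```

The resulting string can be pasted into a SQL script connected to the SQL on-demand endpoint.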

That is it!

Liliam C Leme

UK Engineer

by Scott Muniz | Sep 8, 2020 | Azure, Technology, Uncategorized


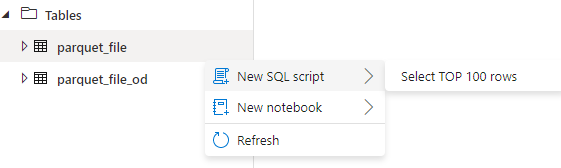

What do you do when you receive an alert message in Azure Security Center?

You can find details about Advanced Threat Protection alerts in the following reference document:

https://docs.microsoft.com/en-us/azure/security-center/threat-protection

The following is the list of alerts:

https://docs.microsoft.com/en-us/azure/security-center/alerts-reference#alerts-sql-db-and-warehouse

Background

Azure threat detection is a feature that monitors for and detects anomalous activities, such as unusual successful logins, and warns if an unknown or new client IP address is used. A login warning generates an email and appears on the DW instance in the portal. The unfamiliar-login feature uses a two-month sliding window to look for unknown IPs. When a new IP is found, the warning email and the portal alert are generated. The minimum learning period on a new instance before the first alert is 14 days.
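To make the windowing behaviour concrete, here is a minimal sketch of how a two-month sliding window and a 14-day learning period could interact. This is an illustration only and not the actual detection logic used by Azure: the 60-day approximation of "two months", the function name `unfamiliar_logins`, and the sample IP addresses are all assumptions.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(days=60)           # "two-month" window, approximated as 60 days
LEARNING_PERIOD = timedelta(days=14)  # no alerts before the instance is this old


def unfamiliar_logins(logins, instance_created):
    """Return the (timestamp, ip) logins that would be flagged as unfamiliar.

    logins must be an iterable of (timestamp, ip) tuples sorted by timestamp.
    """
    last_seen = {}  # ip -> timestamp of the most recent login from that ip
    flagged = []
    for ts, ip in logins:
        previous = last_seen.get(ip)
        known = previous is not None and ts - previous <= WINDOW
        learning = ts - instance_created < LEARNING_PERIOD
        if not known and not learning:
            flagged.append((ts, ip))
        last_seen[ip] = ts
    return flagged


created = datetime(2020, 1, 1)
logins = [
    (datetime(2020, 1, 5), "203.0.113.10"),  # inside the learning period
    (datetime(2020, 2, 1), "203.0.113.10"),  # seen 27 days earlier: known
    (datetime(2020, 2, 2), "198.51.100.7"),  # never seen: flagged
    (datetime(2020, 5, 1), "203.0.113.10"),  # last seen 90 days earlier: flagged
]
flagged = unfamiliar_logins(logins, created)
print(flagged)
```

The last login shows why a previously familiar address can trigger a new warning: once it falls out of the window, it counts as unknown again.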

- For alerts such as Log on by an unfamiliar principal, Log on from an unusual Azure Data Center, Log on from an unusual location, or Potential SQL Brute Force attempt:

The following mitigation steps help you investigate the access and block it if it is unauthorized.

- You can take immediate action by changing the account password or blocking the IP via the DW server’s firewall rules. However, this may not be ideal if the IP address belongs to an Azure service or was recently configured, because blocking it may block the service. Azure IP addresses change frequently for security reasons. You can get the current ranges from the following URL.

https://www.microsoft.com/en-us/download/details.aspx?id=41653

- If you don’t recognize the IP address, check which ISP owns it using any tool that your organization allows. For example, you can get the information as follows.

- If the IP address is still unknown, you can enable Audit Logging to see details about the queries that the IP is submitting. Reference document: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-auditing-overview

- If a threat is found, changing the password is required, in addition to adding more restrictive firewall rules.
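Before blocking an address, it can help to check whether it falls inside a known range, for example one taken from the Azure IP range download linked above. The sketch below uses Python's standard `ipaddress` module; the CIDR ranges are documentation placeholders rather than real Azure ranges, and `matching_ranges` is a hypothetical helper name.

```python
import ipaddress


def matching_ranges(ip, cidr_ranges):
    """Return the CIDR ranges from cidr_ranges that contain the given IP."""
    addr = ipaddress.ip_address(ip)
    return [cidr for cidr in cidr_ranges if addr in ipaddress.ip_network(cidr)]


# Placeholder ranges for illustration only; load the real list from the
# published download in practice.
known_ranges = ["203.0.113.0/24", "198.51.100.0/25"]

print(matching_ranges("203.0.113.45", known_ranges))
print(matching_ranges("192.0.2.1", known_ranges))
```

An empty result means the address is outside every range you supplied, which is a reason to continue with the ISP lookup and audit-log steps rather than proof of an attack.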

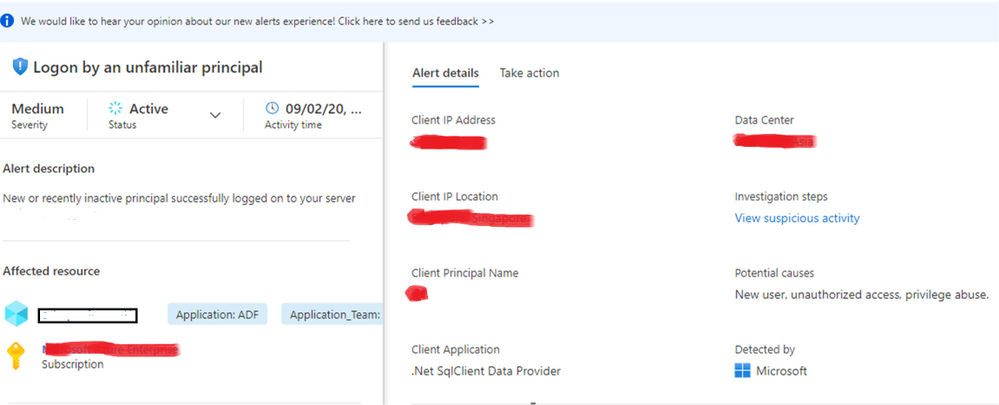

- For alerts such as A possible vulnerability to SQL Injection or Potential SQL injection:

The following investigation steps will help you mitigate the alert.

- Review the auditing logs to understand which query was executed from that IP.

- Check the queries that were executed close to the time of the alert and whose query text appears as a parse error.

- The application name is displayed in the alert; review the code that could allow SQL injection.
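When reviewing application code, the usual thing to look for is SQL text assembled by string concatenation. The sketch below contrasts that pattern with a parameterized query. It uses Python's built-in `sqlite3` as a stand-in database so that it runs anywhere; the table, the function names, and the payload are made up for illustration, and the same idea applies to whichever driver your application uses against the data warehouse.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "alice"), (2, "bob")])


def find_unsafe(conn, name):
    # Vulnerable: user input is concatenated straight into the SQL text.
    sql = "SELECT id FROM customers WHERE name = '" + name + "' ORDER BY id"
    return conn.execute(sql).fetchall()


def find_safe(conn, name):
    # Parameterized: the value is passed separately from the SQL text.
    sql = "SELECT id FROM customers WHERE name = ? ORDER BY id"
    return conn.execute(sql, (name,)).fetchall()


payload = "x' OR '1'='1"
print(find_unsafe(conn, payload))  # the injected OR clause matches every row
print(find_safe(conn, payload))    # the payload is treated as a plain string
```

Malformed payloads sent to the concatenating version tend to surface as parse errors in the audit log, which is why those entries are worth checking around the time of the alert.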

by Scott Muniz | Sep 7, 2020 | Uncategorized


The Cloud Adoption Framework is proven guidance that’s designed to help you create and implement the business and technology strategies necessary for your organization to succeed in the cloud. It provides best practices, documentation, and tools that cloud architects, IT professionals, and business decision makers need to successfully achieve their short- and long-term objectives. https://docs.microsoft.com/en-us/azure/cloud-adoption-framework/overview

Also check “Azure Cloud Adoption Framework landing zones for Terraform” https://github.com/Azure/caf-terraform-landingzones

by Scott Muniz | Sep 7, 2020 | Uncategorized


Hello everyone, here is part 18 of a series focusing on Application Deployment in Configuration Manager. This series is recorded by @Steve Rachui, a Microsoft principal premier field engineer.

This session focuses on the new Task Sequence Deployment Type introduced in Configuration Manager current branch 2002. It demonstrates how to use the task sequence in various scenarios, starting with a basic task sequence example.

Posts in the series

Go straight to the playlist

by Scott Muniz | Sep 7, 2020 | Uncategorized


Initial Update: Monday, 07 September 2020 17:59 UTC

We are aware of issues within Application Insights and Log Analytics and are actively investigating. Some customers may experience data access issues, alerting failures or missed alerts, and UI loading issues when querying from the portal.

- Next Update: Before 09/07 22:00 UTC

We are working hard to resolve this issue and apologize for any inconvenience.

-Jayadev
