by Scott Muniz | Sep 1, 2020 | Azure, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

Introduction

With an ever-expanding ocean of data, more and more organizations need to perform advanced and fast analytics over their business data, logs, and telemetry while seeking to reduce costs. Many of them are shifting towards Azure Data Explorer (ADX) and taking advantage of the significant benefits it offers to analyze billions of records quickly and cost-effectively.

But sometimes they are already using other tools. One common scenario is that organizations are already using Elasticsearch, Logstash, and Kibana (The ELK Stack). Migration between big data platforms sounds like a long and complicated process. But that’s not always true. Switching from ELK to Azure Data Explorer offers the opportunity to significantly boost performance, reduce costs and improve the quality of insights by offering advanced query capabilities; all this without entering a long and complex migration, thanks to the tools described below.

This blog post covers the following topics:

- Why organizations are moving to Azure Data Explorer

- How you can use Azure Data Explorer with Kibana

- What additional tools for data exploration, visualizations, and dashboards are available

- How you can send data to Azure Data Explorer through Logstash (or other tools)

- How to use Logstash to migrate historical data from Elasticsearch to Azure Data Explorer

- Appendix: Step by step example – using Logstash to migrate historical data from Elasticsearch to Azure Data Explorer

1. Why organizations are moving to Azure Data Explorer

Azure Data Explorer is a highly scalable and fully managed data analytics service on the Microsoft Azure Cloud. ADX enables real-time analysis of large volumes of heterogeneous data and allows rapid iterations of data exploration to discover relevant insights. In short, the advantages of ADX can be summed up using the three Ps: Power, Performance, Price.

Power

Azure Data Explorer has a unique column-store inverted-index design. With the intuitive Kusto Query Language (KQL) you can query an enormous amount of data and perform ad-hoc analytics in seconds.


Users state that they find it easier to find value and new insights from their data, using KQL. They are more engaged and understand the data better, since they can efficiently explore the data and run ad-hoc text parsing, create run-time calculated columns, aggregations, use joins, and plenty of other capabilities.

These capabilities are natively supported without the need to modify the data. You don’t have to pre-organize the data, pre-define scripted fields, or de-normalize the data. There is no need to manage and take care of the hierarchy of Indices, Types, and IDs.

Azure Data Explorer’s machine-learning capabilities can identify patterns that are not obvious and detect differences in data sets. With capabilities like time series analysis, anomaly detection, and forecasting, you can uncover hidden insights and easily point out issues or unusual relationships you may not even be aware of. You can also run inline Python and R as part of the queries.

Also, Azure Data Explorer supports many communication APIs and client libraries, all of which make programmatic access easy.

Performance

As a fully managed service, Azure Data Explorer gives you best-in-class performance. You can get results from one billion records in less than a second without modifying the data or metadata. ADX can accept hundreds of billions of records a day and store this data reliably for months. ADX is specifically built to handle complex analytics queries on the data and return answers in a matter of seconds, even when the data is a combination of structured, semi-structured (JSON-like nested types), and unstructured (free text).


Price

Azure Data Explorer is a managed Platform as a Service (PaaS), so you can reduce the management and operations overhead and save the cost of purchasing and maintaining hardware. Auto-scale capabilities are available for better cost control. Due to the efficient data ingestion process and querying engine, compute resources are consumed wisely and economically. The column-store inverted-index design enables ADX to have a superb data compression ratio, which reduces the amount of required storage compared to other services. You can run as many queries as you need, thanks to the optimized cost structure, without incurring additional costs. In addition, ADX provides a granular cache policy that customers can set to differentiate between hot data cache and cold data cache, and get even more control over the costs.


You can configure and estimate the costs with our cost estimator.

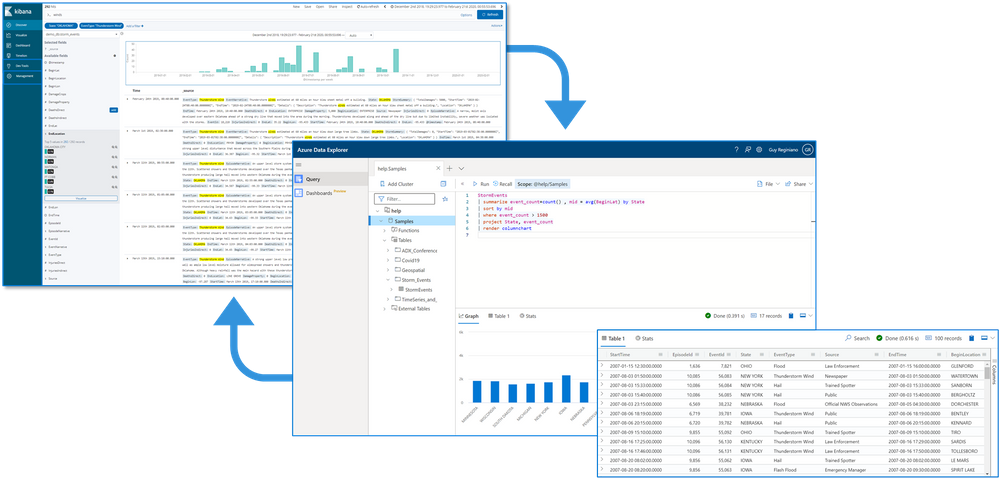

2. How you can use Azure Data Explorer with Kibana

As announced in a separate blog post, we developed K2Bridge (Kibana-Kusto Bridge), an open-source project that enables you to connect the familiar Kibana Discover tab to Azure Data Explorer. Starting with Kibana 6.8, you can store your data in Azure Data Explorer on the back end and use K2Bridge to connect to Kibana. This way, your end-users can keep using Kibana’s Discover tab as their data exploration tool.

Connect Kibana to Azure Data Explorer with K2Bridge

3. What additional tools for data exploration, visualizations, and dashboards are available

Azure Data Explorer offers various other exploration and visualization capabilities that take advantage of the rich, built-in analysis options of KQL, including:

- Azure Data Explorer Web UI/Desktop application – to run queries, analyze and explore the data using powerful KQL queries.

- The KQL render operator offers various out-of-the-box visualizations such as tables, pie charts, anomaly charts, and bar charts to depict query results. Query visualizations are helpful in anomaly detection, forecasting, machine-learning scenarios, and more.

As described in the first chapter, you can efficiently run ad-hoc text parsing, create calculated columns, use joins, and use plenty of other capabilities, without any modification or pre-organization of the data.

- Azure Data Explorer dashboards – a web UI that enables you to run queries, build dashboards, and share them across your organization.

- Integrations with other dashboard services like Power BI and Grafana.

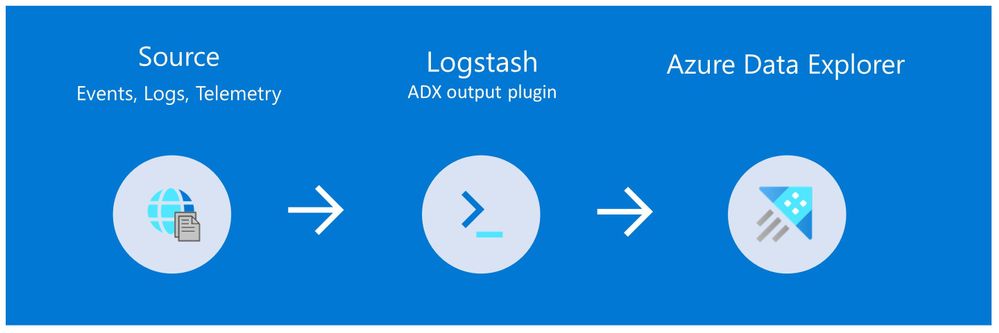

4. How you can send data to Azure Data Explorer through Logstash (or other tools)

Are you already using Logstash as the data pipeline? If so, redirecting the data to ADX is easy! You can use the open-source Logstash Output Plugin for Azure Data Explorer (a detailed example is described in the next chapter), and keep using the Logstash input plugin according to your specific source of the ongoing event stream, as you use it today.

There are many other ways to ingest data into Azure Data Explorer, including:

- Ingestion using managed pipelines – using Azure Event Grid, Azure Data Factory (ADF), IoT Hub and Event Hub (Event Hub can receive data from several publishers, including Logstash and Filebeat, through Kafka).

- Ingestion using connectors and plugins – Logstash plugin, Kafka connector, Power Automate (Flow), Apache Spark connector

- Programmatic ingestion using SDKs

- Tools – LightIngest or One-click ingestion (a detailed example is described in the next chapter)

- KQL ingest control commands

For more information, please refer to the data ingestion overview.

5. How to use Logstash to migrate historical data from Elasticsearch to Azure Data Explorer

Choose the data you care about

When you decide to migrate historical data, it is a great opportunity to validate your data and needs. There is a good chance you can remove old, irrelevant, or unwanted data, and only move the data you care about. By migrating your freshest and latest data only, you can reduce costs and improve querying performance.

Usually, when organizations migrate from Elasticsearch to Azure Data Explorer, they do not migrate historical data at all. The approach is a “side-by-side” migration: they “fork” their current data pipeline and ingest the ongoing live data into Azure Data Explorer (by using Logstash/Kafka/Event Hub connectors, for example). Nevertheless, we show how you can migrate your historical data using Logstash. For efficiency, the Elasticsearch input plugin section in the examples that follow contains a ‘query’ option in which you specify the data you care about and would like to export from Elasticsearch.

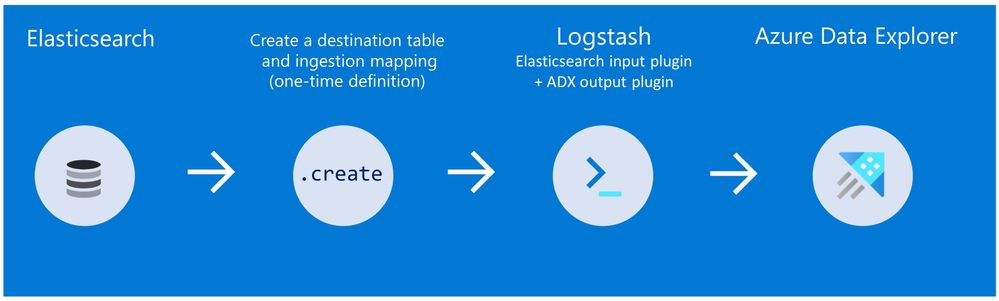

Data migration from Elasticsearch to Azure Data Explorer

Here we describe two methods to migrate historical data from Elasticsearch using Logstash. See the appendix for a step-by-step tutorial.

Method 1: Logstash and One-click Ingestion/LightIngest

Use Logstash to export the data from Elasticsearch into CSV or JSON file(s), and then use Azure Data Explorer’s One-Click Ingestion feature to ingest the data.

- This is an easy way to quickly ramp-up and migrate data because One-Click Ingestion automatically generates the destination table and the ingestion mapping based on the structure of the data source (of course, you can edit the table schema if you want to).

- One-Click Ingestion supports ingesting up to 1 GB at a time. To ingest larger amounts of data, you can:

  - Slice your data into multiple files and ingest them separately.

  - Use LightIngest – a command-line utility for ad-hoc data ingestion. The utility can pull source data from a local folder (or from an Azure blob storage container).

  - Use the second method described below.
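Slicing an export into several files can be scripted. The following is a minimal Python sketch, assuming the export is a JSON lines file (one record per line, as produced by the `json_lines` codec); the function name, file naming scheme, and size limit are illustrative assumptions, not part of any Azure Data Explorer tool.

```python
import os


def split_json_lines(src_path, out_dir, max_bytes):
    """Split a JSON-lines file into numbered parts of at most max_bytes each.

    Records are never cut in the middle. A single record larger than
    max_bytes is written to a part of its own, which then exceeds the limit.
    """
    os.makedirs(out_dir, exist_ok=True)
    part_paths = []
    out = None
    written = 0
    with open(src_path, "rb") as src:
        for line in src:
            # Start a new part when adding this record would exceed the limit.
            if out is None or (written > 0 and written + len(line) > max_bytes):
                if out is not None:
                    out.close()
                part_path = os.path.join(out_dir, f"part-{len(part_paths):05d}.json")
                part_paths.append(part_path)
                out = open(part_path, "wb")
                written = 0
            out.write(line)
            written += len(line)
    if out is not None:
        out.close()
    return part_paths
```

For example, `split_json_lines("output_file.json", "parts", 1024 ** 3)` would produce parts of at most 1 GiB each; choosing a limit somewhat below the 1 GB ceiling leaves some headroom.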

Method 2: Using Logstash only (with the output plugin for Azure Data Explorer)

Use Logstash as a pipeline for both exporting data from Elasticsearch and ingesting it into Azure Data Explorer. When you use this method, you should manually create the Azure Data Explorer destination table and define the ingestion mapping. (You can first generate the destination table and the table mapping automatically by using One-Click Ingestion with sample data, as described in method 1, and then use method 2 for the rest of the data.)

In this blog post, we talked about the advantages of Azure Data Explorer, went over several visualization options, including the open-source Kibana-Azure Data Explorer connector, and introduced a variety of ways you can ingest your ongoing data into Azure Data Explorer. Then, we presented two ways to migrate historical data from Elasticsearch to Azure Data Explorer.

In the appendix, you can find two step-by-step sample scenarios for historical data migration.

Please do not hesitate to contact our team or leave a comment if you have any questions or concerns.

Appendix: Step-by-step example of historical data migration

Method 1: Logstash and One-Click Ingestion

- Use Logstash to export the relevant data to migrate from Elasticsearch into a CSV or a JSON file. Define a Logstash configuration file that uses the Elasticsearch input plugin to receive events from Elasticsearch. The output will be a CSV or a JSON file.

- To export your data to a CSV file: use the CSV output plugin. For this example, the config file should look like this:

      # Sample Logstash configuration: Elasticsearch -> CSV file
      input {
        # Read documents from Elasticsearch matching the given query
        elasticsearch {
          hosts => ["http://localhost:9200"]
          index => "storm_events"
          query => '{ "query": { "range" : { "StartTime" : { "gte": "2000-08-01 01:00:00.0000000", "lte": "now" }}}}'
        }
      }

      filter {
        ruby {
          # Runs once at Logstash startup: write the header row if the CSV file is missing or empty
          init => "
            require 'csv'
            begin
              @@csv_file = 'data-csv-export.csv'
              @@csv_headers = ['StartTime','EndTime','EpisodeId','EventId','State','EventType']
              if File.zero?(@@csv_file) || !File.exist?(@@csv_file)
                CSV.open(@@csv_file, 'w') do |csv|
                  csv << @@csv_headers
                end
              end
            end
          "
          # Runs for every event: expose the file name and headers as event metadata
          code => "
            begin
              event.get('@metadata')['csv_file'] = @@csv_file
              event.get('@metadata')['csv_headers'] = @@csv_headers
            end
          "
        }
      }

      output {
        csv {
          # Elasticsearch field names to export, in column order
          fields => ["StartTime","EndTime","EpisodeId","EventId","State","EventType"]
          # Path of the output file
          path => "./data-csv-export.csv"
        }
      }

This config file specifies that the ‘input’ for this process is the Elasticsearch cluster, and the ‘output’ is the CSV file.

- Implementation note: The filter plugin adds a header with the field names to the CSV file’s first line. This way, the destination table will be built automatically with these column names. The plugin uses the ‘init’ option of the Ruby plugin to add the header at Logstash startup time.

- Alternatively, you can export your data to a JSON file, using the file output format.

This is what our Logstash config file looks like:

    # Sample Logstash configuration: Elasticsearch -> JSON file
    input {
      # Read documents from Elasticsearch matching the given query
      elasticsearch {
        hosts => ["http://localhost:9200"]
        index => "storm_events"
        query => '{ "query": { "range" : { "StartTime" : { "gte": "2000-08-01 01:00:00.0000000", "lte": "now" }}}}'
      }
    }

    output {
      file {
        path => "./output_file.json"
        codec => json_lines
      }
    }

- The advantage of using JSON over CSV is that later, with One-Click Ingestion, the Azure Data Explorer ‘create table’ and ‘create json mapping’ commands will be auto-generated for you. This saves you from having to create the JSON table mapping manually if you later want to ingest your ongoing data with Logstash, because the Logstash output plugin uses a JSON mapping.

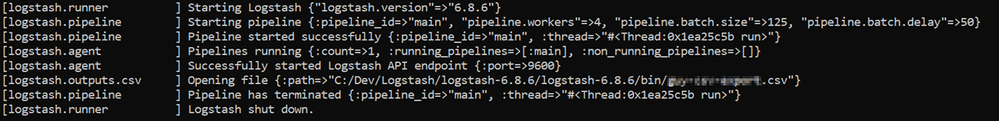

- Start Logstash with the following command, from Logstash’s bin folder:

logstash -f pipeline.conf

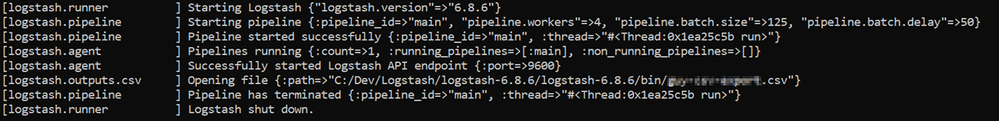

- If your pipeline is working correctly, you should see a series of events like the following written to the console:

- The CSV/JSON file should be created at the destination you specified in the config file.

- Ingest your data into Azure Data Explorer with One-Click Ingestion:

- Open the Azure Data Explorer web UI. If this is the first time you are creating an Azure Data Explorer cluster and database, see this doc.

- Right-click the database name and select Ingest new Data.

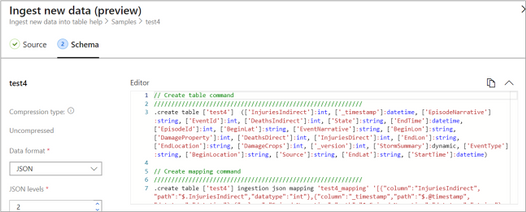

- In the Ingest new data page, use the Create new option to set the table name.

- Select Ingestion type from a file and browse your CSV/JSON file.

- Select Edit schema. You will be redirected to the schema of the table that will be created.

- Optionally, on the schema page, click the column headers to change the data type or rename a column. You can also double-click the new column name to edit it.

For more information about this page, see the doc.

- Select Start Ingestion to ingest the data into Azure Data Explorer.

- After a few minutes, depending on the size of the data set, your data will be stored in Azure Data Explorer and ready for querying.
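To sanity-check the ingestion, one option is to count the records in the exported file and compare that number with the row count Azure Data Explorer reports for the new table. The sketch below is a minimal Python example, assuming the JSON lines export from the sample configuration above (`./output_file.json`, one record per line); the function name is illustrative.

```python
def count_json_lines(path):
    """Count the records in a JSON-lines export, ignoring blank lines."""
    with open(path, "r", encoding="utf-8") as f:
        return sum(1 for line in f if line.strip())
```

Calling `count_json_lines("./output_file.json")` gives the number of exported records. For a CSV export, the header row would have to be subtracted from the line count, and this simple approach would miscount if any field contained an embedded line break.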

Method 2: Using Logstash only

- Create an Azure Data Explorer cluster and database.

Note: If you have already created your Azure Data Explorer cluster and database, you can skip this step.

More information on creating an Azure Data Explorer cluster and database can be found here.

- Create the destination table.

Note: If you have already created your table with One-Click Ingestion, or in other ways, skip this step.

Tip: The One-Click Ingestion tool auto-generates the table creation and the table mapping commands, based on the structure of sample JSON data you provide. If you use One-Click Ingestion with a JSON file, as described above, you can use the auto-generated commands, from the Editor section.

Auto-generate the table and its mapping using One-Click Ingestion

In the Azure portal, under your cluster page, on the left menu, select Query (or use Azure Data Explorer Web UI/Desktop application) and run the following command. This command creates a table with the name ‘MyStormEvents’, with columns according to the schema of the data.

.create table MyStormEvents (StartTime:datetime, EndTime:datetime, EpisodeId:int, EventId:int, State:string, EventType:string)

- Create ingestion mapping.

Note: If you used One-Click Ingestion with a JSON file, you can skip this step. This mapping is used at ingestion time to map incoming data to columns inside the ADX target table.

The following command creates a new mapping, named ‘mymapping’, according to the data’s schema. It extracts properties from the incoming temporary JSON files, which will be generated automatically later, as noted by the path, and outputs them to the relevant columns.

.create table MyStormEvents ingestion json mapping 'mymapping' '[{"column":"StartTime","path":"$.StartTime"},{"column":"EndTime","path":"$.EndTime"},{"column":"EpisodeId","path":"$.EpisodeId"},{"column":"EventId","path":"$.EventId"},{"column":"State","path":"$.State"},{"column":"EventType","path":"$.EventType"}]'
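For tables with many columns, writing the mapping by hand is error-prone (a stray space inside a path is easy to miss). As a minimal sketch, the Python helper below builds a command of the same shape from a list of column names. It assumes that every column maps to a top-level JSON property with the same name and that names contain no single quotes; the function name is illustrative and not part of any Azure SDK.

```python
import json


def build_json_mapping_command(table, mapping_name, columns):
    """Return a '.create table ... ingestion json mapping' command string."""
    # One entry per column, each reading the same-named top-level property.
    mapping = [{"column": name, "path": f"$.{name}"} for name in columns]
    # Compact separators keep the whole mapping on a single line.
    mapping_json = json.dumps(mapping, separators=(",", ":"))
    return (
        f".create table {table} ingestion json mapping "
        f"'{mapping_name}' '{mapping_json}'"
    )


columns = ["StartTime", "EndTime", "EpisodeId", "EventId", "State", "EventType"]
print(build_json_mapping_command("MyStormEvents", "mymapping", columns))
```

The printed command can then be pasted into the query window and run like any other control command.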

- Your table is ready to be ingested with data from your existing Elasticsearch index. To ingest the historical data from Elasticsearch, you can use the Elasticsearch input plugin to receive data from Elasticsearch, and the Azure Data Explorer (Kusto) output plugin to ingest the data to ADX.

- If you have not used Logstash before, install it first.

- Install the Logstash output plugin for Azure Data Explorer, which sends the data to Azure Data Explorer, by running:

bin/logstash-plugin install logstash-output-kusto

- Define a Logstash configuration pipeline file in your home Logstash directory.

In the input plugin, you can specify a query to filter your data according to a specific time range or any other search criteria. This way, you can migrate only the data you care about.

In this example, the config file looks as follows:

    input {
      # Read the documents in your Elasticsearch index "your_index_name" that match the query
      elasticsearch {
        hosts => ["http://localhost:9200"]
        index => "your_index_name"
        query => '{ "query": { "range" : { "StartTime" : { "gte": "2020-01-01 01:00:00.0000000", "lte": "now" }}}}'
      }
    }

    output {
      kusto {
        # Local path for the temporary files that are generated before ingestion
        path => "/tmp/kusto/%{+YYYY-MM-dd-HH-mm}.txt"
        ingest_url => "https://<your cluster name>.<your cluster region>.kusto.windows.net"
        app_id => "<Your app id>"
        app_key => "<Your app key>"
        app_tenant => "<Your app tenant>"
        database => "<Your Azure Data Explorer DB name>"
        table => "<Your table name>"
        mapping => "<Your mapping name>"
      }
    }
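The value of the `query` option is an Elasticsearch query body written as JSON text, and mismatched braces are an easy mistake when editing it by hand. One way to avoid that is to generate the string. The following is a minimal Python sketch; the helper name is an assumption for illustration, and the field name and dates are the ones used in the example above.

```python
import json


def build_range_query(field, gte, lte="now"):
    """Return the JSON text of an Elasticsearch range query on one field."""
    body = {"query": {"range": {field: {"gte": gte, "lte": lte}}}}
    return json.dumps(body)


query = build_range_query("StartTime", "2020-01-01 01:00:00.0000000")
# Print a line that can be pasted into the elasticsearch input section.
print(f"query => '{query}'")
```

Because the JSON text uses only double quotes, it can be wrapped in single quotes in the Logstash configuration, as in the sample file.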

- Edit your configuration pipeline file according to your Azure Data Explorer cluster details and start Logstash with the following command, from Logstash’s bin folder:

logstash -f pipeline.conf

- If your pipeline is working correctly, you should see a series of events like the following written to the console:

- After a few minutes, run the following Azure Data Explorer query to see the records in the table you defined:

MyStormEvents | count

The result is the number of records that were ingested into the table. Depending on the size of the data set, it might take several minutes to ingest all of it, so the count can keep growing until ingestion completes. Once it does, your data is stored in Azure Data Explorer and is ready for querying!

by Scott Muniz | Sep 1, 2020 | Azure, Microsoft, Technology, Uncategorized


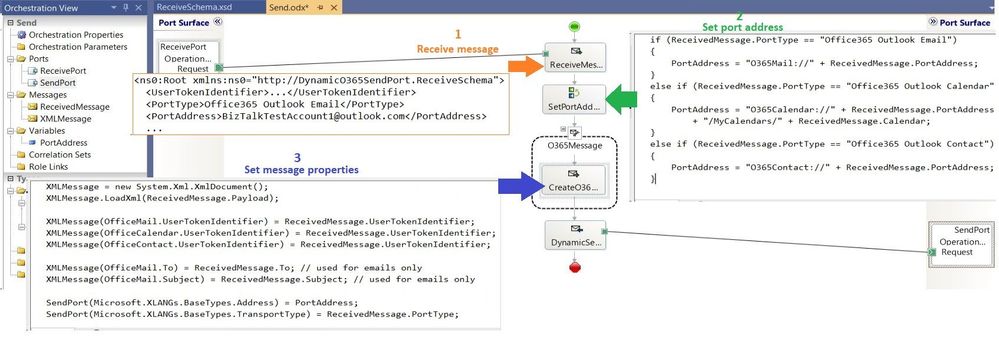

With this article, which is part of our recent Azure Security Center (ASC): Secure Score Blog Post Series, we are diving into the Enable Endpoint Protection security control. This security control contains up to 11 recommendations, depending on the resources you have deployed within your environment, and it is worth a maximum of 2 points that count towards your Secure Score. More information about Secure Score can be found here.

Why enable endpoint protection?

After making sure that your VMs’ management ports are protected, as Kerinne explained in her article, you have already made a huge step towards securing your infrastructure from unauthorized access. However, malware can be downloaded or installed not only by unauthorized actors, but also inadvertently by legitimate users who are supposed to access your machines. Once a machine is compromised, it could start downloading malware, sending spam, running crypto-mining activities, and so on. So, protecting network endpoints is an important step towards enhancing your security posture, but it is not the only security feature that needs to be implemented. With an endpoint protection solution, you increase the likelihood that malicious code on a machine will be detected.

You can find more details about supported endpoint protection solutions here.

Recommendations within the Enable Endpoint Protection security control

The Enable Endpoint Protection security control contains the following 11 recommendations, depending on your environment and agent status:

- Install endpoint protection solution on virtual machines

- Install endpoint protection solution on your machines

- Endpoint protection solution should be installed on virtual machine scale sets

- Endpoint protection health failures should be remediated on virtual machine scale sets

- Endpoint protection health issues should be resolved on your machines

- Monitoring agent should be installed on virtual machine scale sets

- Monitoring agent should be installed on your machines

- Monitoring agent should be installed on your virtual machines

- Log Analytics agent should be installed on your Windows-based Azure Arc machines (Preview)

- Log Analytics agent should be installed on your Linux-based Azure Arc machines (Preview)

- Monitoring agent health issues should be resolved on your machines

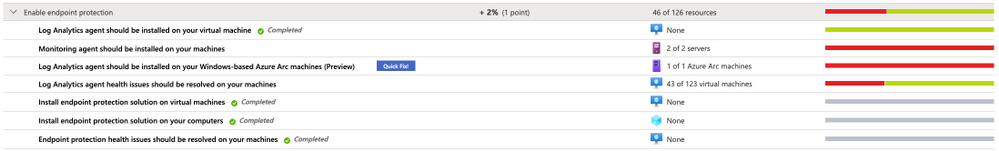

The screenshot below shows an environment in which only 7 of those 11 recommendations are within the scope of the Enable endpoint protection security control.

Figure 1 – Recommendations within the Enable Endpoint Protection security control


This is because recommendations which do not apply to any resource within your environment do not appear. Basically, the recommendations that can be part of the control can be reduced to four main categories:

Category #1: Install the Log Analytics agent

Azure Security Center relies on the Log Analytics agent to gain information about a server’s current operating system status, events, and log files. The agent is also used to check whether a supported endpoint protection solution has been installed on a machine. So, having the monitoring agent installed is mandatory for making sure that your machines are fully monitored and for taking advantage of the Azure Security Center Threat Protection for VMs. For the installation, you can use different approaches, depending on what best fits your needs.

The following recommendations belong to this category:

- Monitoring agent should be installed on your virtual machines applies to VMs running in Azure.

- Monitoring agent should be installed on your machines applies to servers which are not running in Azure.

- Monitoring agent should be installed on virtual machine scale sets applies to VM scale sets in Azure.

- Log Analytics agent should be installed on your Windows-based Azure Arc machines (Preview) applies to Windows-based Azure Arc machines.

- Log Analytics agent should be installed on your Linux-based Azure Arc machines (Preview) applies to Linux-based Azure Arc machines.

Category #2: Install one of the supported endpoint protection solutions

Besides having the Log Analytics agent installed, of course you want to make sure to have one of the supported endpoint protection solutions installed on your machines. Once you have installed the solution, the particular recommendation that applies to your machine will no longer appear. Only the Microsoft Azure Antimalware solution can be installed directly from the ASC portal. The others need to be installed manually, but then are discovered by Azure Security Center. The following recommendations are part of this category:

- Install endpoint protection solution on virtual machines applies to VMs running in Azure.

- Install endpoint protection solution on your machines applies to machines outside of Azure

- Endpoint protection solution should be installed on virtual machine scale sets applies to VM Scale Sets in Azure.

Category #3: Make sure to resolve Log Analytics Agent health issues

This category is aimed at showing you that the Log Analytics agent on one of your machines is no longer sending data to Azure. The cause could be an issue with the agent itself, for example its process is no longer running or cannot be (re)started, or the machine might no longer be working as expected or might be switched off. This category is made up of only one recommendation:

- Monitoring agent health issues should be resolved on your machines

We have published more information on possible Log Analytics agent health issues and how to troubleshoot them in the Azure Security Center Troubleshooting Guide.

Category #4: Make sure to resolve health issues with the endpoint protection solution

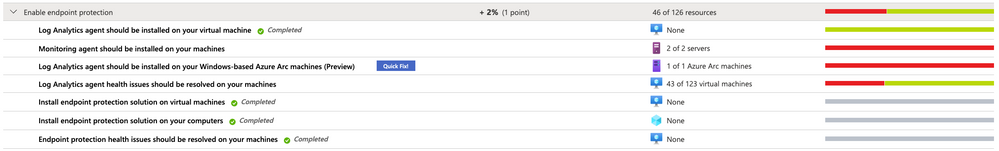

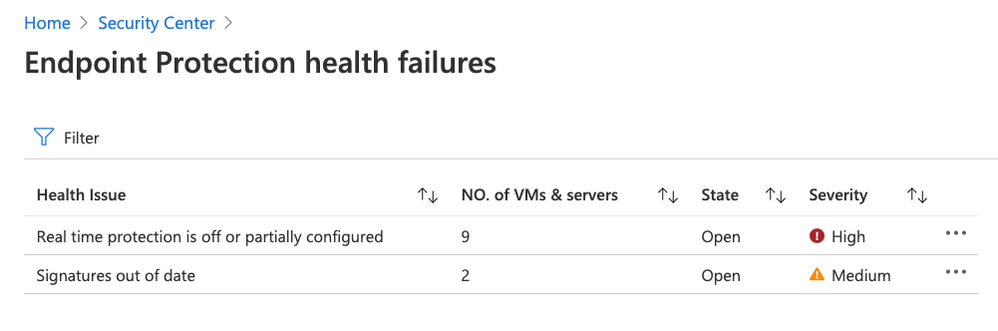

Besides health issues with the Log Analytics agent, Azure Security Center will also report health issues with the endpoint protection solution itself. Health issues include (but are not limited to) out-of-date signatures and misconfigured real-time protection, as shown in the following screenshot:

Figure 2 – Remediate endpoint protection health issues


The following recommendations belong to this final category:

- Endpoint protection health failures should be remediated on virtual machine scale sets

- Endpoint protection health issues should be resolved on your machines

Next steps

As with all security controls, you need to remediate all recommendations within the control that apply to a particular resource in order to gain credit towards your Secure Score. That means that both the Log Analytics agent and the endpoint protection solution you want to use must be installed, running, and securely configured. Now, go ahead and remediate the Enable endpoint protection security control in your environment!

Reviewer

Thank you to @Yuri Diogenes, Principal Program Manager in the CxE ASC Team for reviewing this article.

by Scott Muniz | Sep 1, 2020 | Azure, Microsoft, Technology, Uncategorized


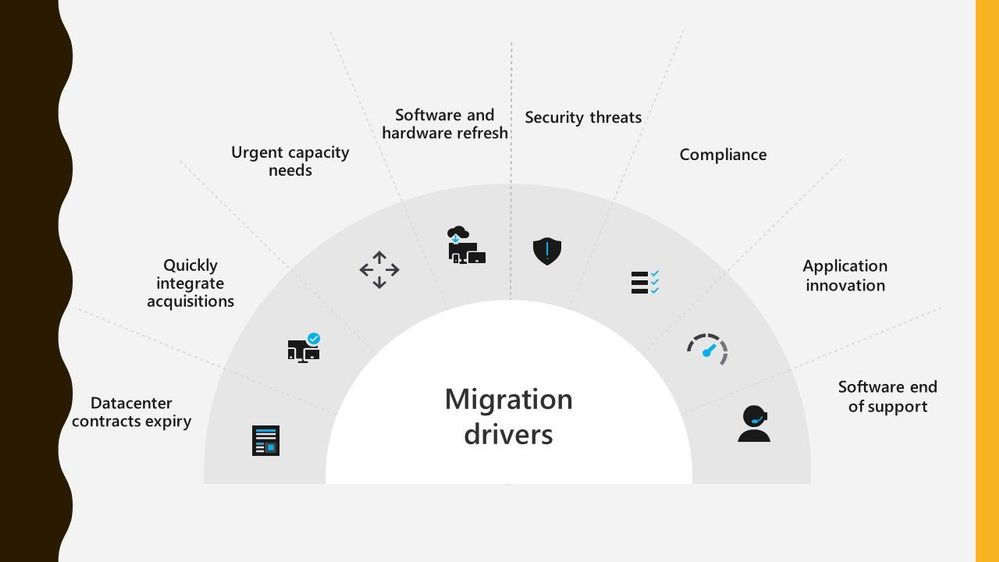

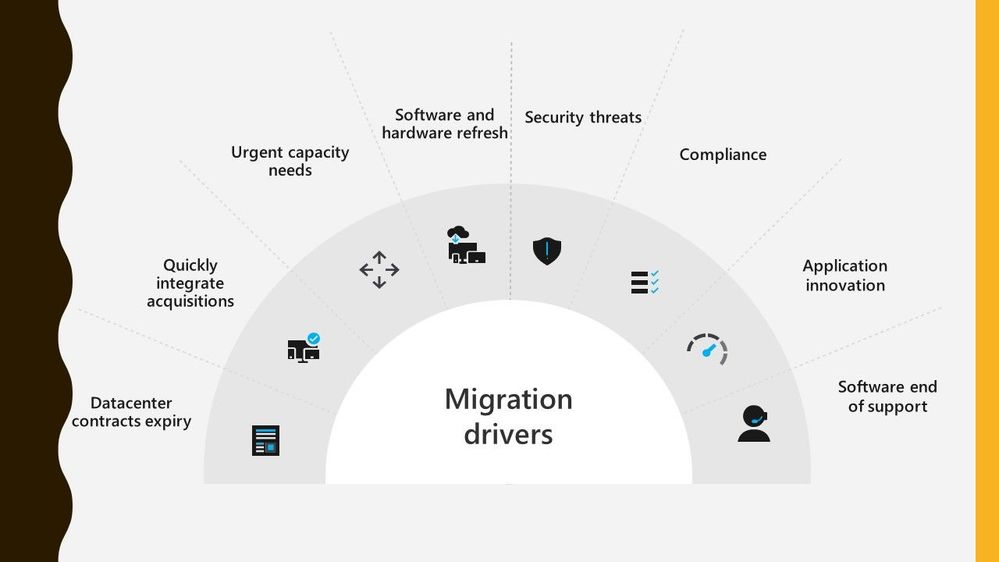

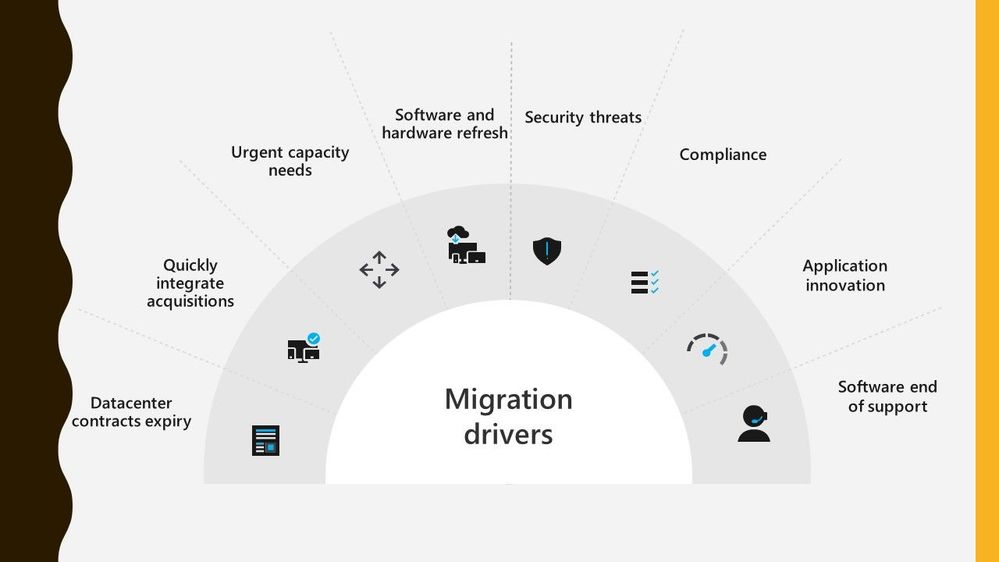

Migrating workloads from on-premises datacentres to Azure is something a lot of organisations are thinking about. There are lots of drivers making customers think about this, whether it be physical capacity issues or software refresh timelines.

Migration Drivers


Migrating workloads is a big project and any tooling or guidance that can help make that project easier is often welcomed. And this is where Azure Migrate can assist. Azure Migrate is billed as the hub for datacentre migrations, able to assist with discovery, assessment, and migration of many key migration scenarios such as servers, databases, virtual desktops and data.

Azure Migrate is a free service, and people often ask how true that is, so let’s tackle that subject.

Discovery and Assessment Costs

I’m a big advocate of organisations carrying out a full discovery and assessment of their environment as the first stage of their migration project, and I talk more about it in my blog post looking at the information you should be looking to discover during that discovery. Azure Migrate is a great tool for doing that initial discovery of your environment; it can collect basic information like server name, operating system version and resource configuration.

The other discovery piece of Azure Migrate: Server Assessment is being able to map how your servers all interconnect, showing you the ports and services that connect. Let’s face it, your servers don’t work independently from each other; they are all part of a larger puzzle.

For this dependency visualization Azure Migrate: Server Assessment leverages the power and technology from Log Analytics. The dependency visualization is free for the first 180 days from the day of associating a Log Analytics workspace with the Server Assessment tool. After 180 days, standard Log Analytics charges will apply. And if you use any other solution within that Log Analytics workspace it is not free, and you’ll incur standard Log Analytics charges.

My recommendation is to leave the tool gathering the dependency visualization data for at least 30 days as that should capture a good picture of what happens within your environment and show any specific workload spikes.

Migration Costs

When you come to migrating your servers into Azure as Infrastructure as a Service (IaaS) virtual machines, the Azure Migrate: Server Migration tool can help you with that.

When you start to replicate machines from your own environment up into Azure, you will start to incur charges for Azure Storage, storage transactions and data transfer during replication. However, the license charges for Server Migration are waived for the first 180 days.

So the Azure Migrate: Server Migration part is free for the first 180 days, and I’d like to think that most companies are looking to complete the cutover and run their workload live in Azure well within that 180-day period.

Conclusion

So, I think it is fair to say that you can utilise Azure Migrate for your migration for free. Some surrounding services may cost you, but there are mechanisms in place that can help you avoid incurring too many charges. I’d love to hear if you’ve used Azure Migrate during your migration project and how it helped keep your migration costs down. :grinning_face:

by Scott Muniz | Aug 31, 2020 | Uncategorized


In the dazzling array of services among the Microsoft cloud offerings, the rollout of TLS 1.0/1.1 deprecations is not being done all at once. This has led to some confusion and questions around which endpoints are dropping the older TLS support and when.

Here I want to provide some dates and times of the endpoints, along with some .NET code guidance on how to use the newer TLS protocol (1.2), with some more information on TLS across the Microsoft Cloud.

So to begin, here are some of the endpoints that we know of.

| Service | End date | Release |
| --- | --- | --- |
| Office 365 (Exchange/SharePoint/etc) | | |
| Office 365 Dod/GCC | 1/1/2020 | |
| Office 365 consumer | 10/15/2020 | |
| Graph | | |
| Graph Government | 8/5/2020 | |
| Graph Consumer | 10/15/2020 | |
| Azure | | |
| Azure Guest OS images | 1/1/2019 | Family 6 release |
| Azure Application Proxy | 1/31/2019 | |
| Azure intra-service traffic | 1/1/2020 | |
| Azure SQL DB managed instance (pre SQL 2016) | 1/1/2020 | |
| Azure Cosmos DB | 7/29/2020 | |
| Azure File Sync | 8/1/2020 | |
| Azure AD registration service in all sovereign clouds (GCC High, DoD etc.) | 8/31/2020 | |
| Azure Automation | 9/1/2020 | |
| Azure AD registration service in all commercial clouds | 10/30/2020 | |
| Azure App Services (Web apps/functions/etc.) no announced timeline, can be set by admin still. | ?? | |

If you are not sure about a particular endpoint, you can use this PowerShell script to test the endpoint and see which versions of TLS it supports:

<#
Created by: whall
Date Created: 3/25/2020
Product Area Tags: Connectivity
Technology Tags: SSL TLS
Use Case:
Shows which version(s) of TLS is supported for a URL
Description:
When you run this, it checks each TLS type connection to see if it is supported.
Parameters:
-url this is the URL of the site you are testing against
Keywords: sockets secure https
Code Example Disclaimer:
Sample Code is provided for the purpose of illustration only and is not intended to be used in a production environment. THIS SAMPLE CODE AND ANY RELATED INFORMATION ARE PROVIDED 'AS IS'
-This is intended as a sample of how code might be written for a similar purpose and you will need to make changes to fit to your requirements.
-This code has not been tested. This code is also not to be considered best practices or prescriptive guidance.
-No debugging or error handling has been implemented.
-It is highly recommended that you FULLY understand what this code is doing and use this code at your own risk.
#>

#TLS check
param([Parameter(Mandatory=$true)][string]$url)

function TLSAvailable([string]$url){
    Write-Host =======================
    Write-Host $url
    Write-Host =======================

    [System.Net.ServicePointManager]::SecurityProtocol = "Tls"
    try{
        $resp1 = Invoke-WebRequest -uri $url -Method GET -DisableKeepAlive
        if($resp1.StatusCode -eq 200){
            Write-Host "TLS/SSL 1.0 supported" -ForegroundColor green
        }
    }catch {
        Write-Host "TLS/SSL 1.0 not supported" -ForegroundColor Red
        #$_.Exception
    }

    [System.Net.ServicePointManager]::SecurityProtocol = "Tls11"
    try{
        $resp2 = Invoke-WebRequest -uri $url -Method GET -DisableKeepAlive
        if($resp2.StatusCode -eq 200){
            Write-Host "TLS/SSL 1.1 supported" -ForegroundColor green
        }
    }catch {
        Write-Host "TLS/SSL 1.1 not supported" -ForegroundColor Red
        #$_.Exception
    }

    [System.Net.ServicePointManager]::SecurityProtocol = "Tls12"
    try{
        $resp3 = Invoke-WebRequest -uri $url -Method GET -DisableKeepAlive
        if($resp3.StatusCode -eq 200){
            Write-Host "TLS/SSL 1.2 supported" -ForegroundColor green
        }
    }catch{
        Write-Host "TLS/SSL 1.2 not supported" -ForegroundColor Red
        #$_.Exception
    }

    Write-Host =======================
}

TLSAvailable -url $url
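The same probing idea can be sketched outside PowerShell. The Python version below is only an illustration and is not part of the original script: the helper names `pinned_context` and `probe` are made up for this example, and it tests the TLS handshake directly instead of looking for an HTTP 200 response. Keep in mind that the local OpenSSL build may itself refuse TLS 1.0/1.1, in which case a negative result says more about the client than about the server.

```python
import socket
import ssl
import warnings


def pinned_context(version: ssl.TLSVersion) -> ssl.SSLContext:
    """Build a client context that can only negotiate one TLS version."""
    ctx = ssl.create_default_context()
    with warnings.catch_warnings():
        # Python 3.10+ warns when TLS 1.0/1.1 are selected; expected here.
        warnings.simplefilter("ignore", DeprecationWarning)
        ctx.minimum_version = version  # move the floor first ...
        ctx.maximum_version = version  # ... then cap at the same version
    return ctx


def probe(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Return {version name: True/False} for TLS 1.0, 1.1 and 1.2 handshakes."""
    results = {}
    versions = (
        ssl.TLSVersion.TLSv1,
        ssl.TLSVersion.TLSv1_1,
        ssl.TLSVersion.TLSv1_2,
    )
    for version in versions:
        try:
            ctx = pinned_context(version)
            with socket.create_connection((host, port), timeout=timeout) as sock:
                # The handshake happens inside wrap_socket; success means
                # the server accepted this protocol version.
                with ctx.wrap_socket(sock, server_hostname=host):
                    results[version.name] = True
        except (OSError, ValueError):
            # Handshake refused, connection failed, or the local OpenSSL
            # build cannot offer this version at all.
            results[version.name] = False
    return results
```

Calling `probe("example.com")` (a placeholder host) returns a dictionary keyed by `TLSv1`, `TLSv1_1`, and `TLSv1_2`. Unlike the PowerShell script, a server that answers with a non-200 status still counts as supporting the protocol, because only the handshake is checked.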

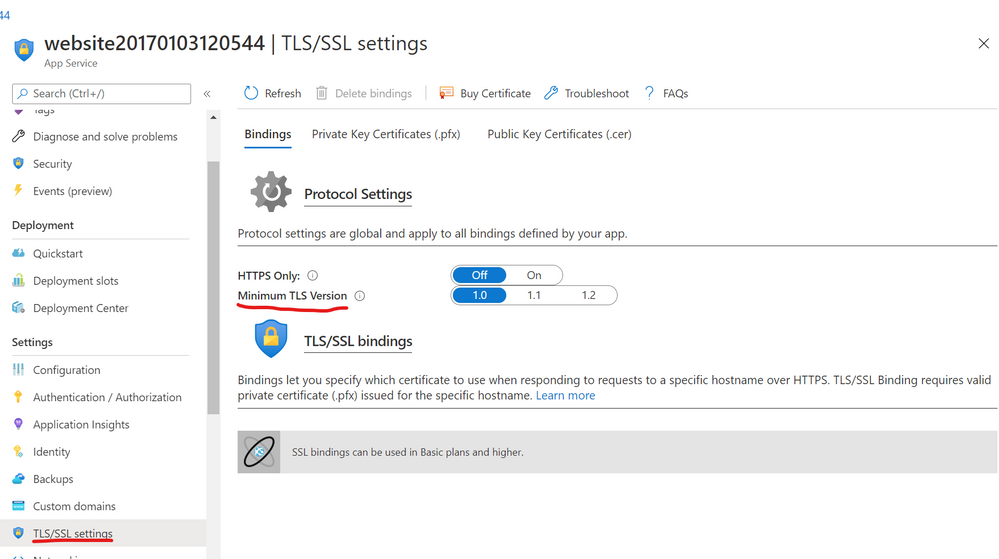

Azure Web Application Services

If you are running a .NET web application in the Azure web application services, you can set the TLS level under the application settings as below-

.NET Framework Code

If you are compiling your code for .NET Framework 4.7 (4.7.1 for WCF apps) or later, it will use the default TLS version for the OS.

If you compiled against an earlier .NET Framework version, it will use older versions of TLS unless you apply the right patch and use one of the following methods:

- Set a registry setting to force all .NET code to use strong cryptography

- Set a config setting for the app context overrides to use the strong cryptography

- Add a line of code to change the TLS version used for HTTPS calls

Method 1 (System wide registry change)-

This enables something called strong cryptography which makes .NET use the strongest cryptography available currently. This affects all .NET applications with one registry change (per CLR version).

Enable strong cryptography for .NET CLR 4 versions (64 bit)-

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\.NETFramework\v4.0.30319]
"SchUseStrongCrypto"=dword:00000001

[HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\.NETFramework\v4.0.30319]
"SchUseStrongCrypto"=dword:00000001

Enable strong cryptography for .NET CLR 2 versions (64 bit)-

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\.NETFramework\v2.0.50727]
"SchUseStrongCrypto"=dword:00000001

[HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\.NETFramework\v2.0.50727]
"SchUseStrongCrypto"=dword:00000001

Method 2 (Config file change)-

Add the following to your .NET config file

<runtime>
  <AppContextSwitchOverrides value="Switch.System.Net.DontEnableSchUseStrongCrypto=false" />
</runtime>

Method 3 (Hardcoded in the application)-

Use this line of C# code in your application during the initialization so that all web calls will use the newer TLS 1.2 protocol-

System.Net.ServicePointManager.SecurityProtocol = SecurityProtocolType.Tls12;

If you are using PowerShell you can use the same object with this-

[System.Net.ServicePointManager]::SecurityProtocol = "Tls12"

More on these here-

https://docs.microsoft.com/en-us/dotnet/framework/network-programming/tls

Browser Support (Edge/Chrome/Edge legacy/IE/Firefox/Safari)

The following clients are known to be unable to use TLS 1.2. Update these clients to ensure uninterrupted access to the service.

- Android 4.3 and earlier versions

- Firefox version 5.0 and earlier versions

- Internet Explorer 8-10 on Windows 7 and earlier versions

- Internet Explorer 10 on Windows Phone 8

- Safari 6.0.4/OS X10.8.4 and earlier versions

Chromium-based Edge disabled TLS 1.0 and 1.1 around July 2020 (version 84).

For all supported versions of Internet Explorer 11 and Microsoft Edge Legacy (EdgeHTML-based), TLS 1.0 and TLS 1.1 will be disabled by default as of September 8, 2020.

TLS 1.3

The next version of TLS is already implemented in some browsers and is just around the corner, but it should not be causing issues yet, since TLS 1.2 is only now becoming the lowest mandatory version.

More information

For more information on the patches for various products and more details on some of the .NET settings related to TLS, please see the following articles.

Azure

https://azure.microsoft.com/en-us/updates/azuretls12/

https://azure.microsoft.com/en-us/updates/?query=TLS

Windows/.NET/SQL/SharePoint (on-Prem)

SQL-

https://support.microsoft.com/en-us/help/3135244/tls-1-2-support-for-microsoft-sql-server

SharePoint (this covers .NET/windows/SQL/browsers as well)-

https://docs.microsoft.com/en-us/sharepoint/security-for-sharepoint-server/enable-tls-1-1-and-tls-1-2-support-in-sharepoint-server-2019

.NET 4.5-

https://docs.microsoft.com/en-us/sharepoint/security-for-sharepoint-server/enable-tls-1-1-and-tls-1-2-support-in-sharepoint-server-2019#34---enable-strong-cryptography-in-net-framework-45-or-higher

.NET 3.5 update for TLS 1.1/1.2 support-

https://docs.microsoft.com/en-us/sharepoint/security-for-sharepoint-server/enable-tls-1-1-and-tls-1-2-support-in-sharepoint-server-2019#35---install-net-framework-35-update-for-tls-11-and-tls-12-support

.NET programming guidance-

https://docs.microsoft.com/en-us/dotnet/framework/network-programming/tls

![[Guest Blog] My Microsoft Ignite Experience as a Humans of IT Student Ambassador](https://www.drware.com/wp-content/uploads/2020/08/large-1304-999x675.)

by Scott Muniz | Aug 31, 2020 | Uncategorized


This article was written by Microsoft Ignite 2020 Humans of IT student advisor Kiana Alikahedemi, a Computer Science PhD candidate at the University of Florida. Kiana was previously a Humans of IT student ambassador herself at Microsoft Ignite 2019 and is returning this year as a mentor to the incoming batch of new student ambassadors from five HBCUs. Kiana shares her experience as a student ambassador and her excitement about this year’s Microsoft Ignite Humans of IT track. This is the third article in a series featuring this year’s Humans of IT student ambassadors and advisors.

Although I have been living and studying in the US for many years now, my childhood was spent growing up in the Middle East where there is a dire lack of diversity in the engineering workforce. Many women in my community do not earn degrees, falling victim to the belief that they can’t. I vividly remember how from a young age, I decided not to let this be my story. I was always interested in problem-solving and science concepts in school. My passion led me to choose Computer Science as a major. During my undergrad days, I consciously looked for mentors who were similarly passionate about tech and had experience in helping others build their careers. It didn’t stop at my bachelor’s degree. Shortly after, I was admitted into graduate school, which brought me closer to my dream job in the tech industry and academia.

A lack of diversity continues to be a prevalent issue in most regions – I, too, experienced a lot of bias and discrimination firsthand while in graduate school. Last year, my friend heard about the Humans of IT Student Ambassador program and nominated me as she knew my passion for diversity and equity. I decided to take the challenge and join the other students to promote inclusion in tech and adopt a “human-first” approach when it comes to technology. That’s how I first got involved with the Microsoft Humans of IT Community, which turned out to be a valuable experience for me to connect with diverse and wonderful people in tech from all around the world, and celebrate our common humanity.

This is the team that helped me shape one of the best experiences I’ve ever had in tech:

During Microsoft Ignite, I participated in insightful and amazing talks. I had the pleasure to meet truly amazing and inspiring people such as Haben Girma, a Disability Rights Lawyer, Author, and Speaker, who I’ve always praised for her courage and persistence. I even got to talk to her in person during the conference, and received a signed copy of her book. It was truly the highlight of my entire week!

It was incredible to meet a diverse set of people who, like me, had experienced similar discrimination or bias in school, at work, or within their communities and simply wanted to belong in tech. Furthermore, it was also rewarding to meet people like Microsoft’s Bryce Johnson, who mindfully considers diversity, accessibility and user needs in every stage of his work (if you don’t know him, just search his name and you will be amazed. Hint: one of the biggest technologies he helped develop – the Xbox adaptive controller – is in the next picture!).

The Microsoft Humans of IT community has taught me to be resilient and confident. All of the conversations and encounters made me feel like I was a part of a broader community with the vision to solve diversity issues and help empower all humans out there through technology. As a community, we will pave the way for others to realize that they are incredible humans who have earned their seat at the table and have valuable skills to offer. We pledge to use our technical skills for good, and to help solve challenging world issues.

I took all the inspiration from Microsoft Ignite 2019 with me and applied it to every decision and experience I have had since. Naturally, when I heard that it was time for Microsoft Ignite 2020 (coming up in just 22 days!), I immediately put my hand up to be a student ambassador again. Not only did the Humans of IT Community welcome me back as a student ambassador, I even got the chance to become a student advisor to this year’s inspiring batch of students from Historically Black Colleges and Universities (HBCUs) to help mentor the next generation of technologists!

Amidst a global pandemic, this is an amazing opportunity to show up, be present and demonstrate how we can better understand the importance of community. I cannot wait to share lessons, challenges, and obstacles I encountered in these hard times with my Microsoft Ignite community. Together, we will overcome challenges and become #StrongerTogether.

#HumansofIT

#StudentAmbassador

#MicrosoftIgnite2020

by Scott Muniz | Aug 31, 2020 | Uncategorized


BizTalk 2020 CU1 introduced support for dynamic send ports in the Office 365 Outlook adapters. In this post, we go over this improvement in more detail.

Pre-requisites

Messages sent from a dynamic send port with transport type set to one of the Office 365 Outlook adapters require the UserTokenIdentifier context property. This identifier is created when the user signs in to Office 365, which is done in the transport properties of the Office 365 Outlook adapters as documented in Office 365 Outlook adapters in BizTalk.

Once a user is signed in, the UserTokenIdentifier is retrieved from the bindings, as shown below, in the case of an exported Mail send port (placeholder nnnnnnnn-nnnn-nnnn-nnnn-nnnnnnnnnnnn):

<SendPort Name="MailSendPort2" IsStatic="true" IsTwoWay="false" BindingOption="0" AnalyticsEnabled="false">
  <Description xsi:nil="true" />
  <TransmitPipeline
    Name="Microsoft.BizTalk.DefaultPipelines.PassThruTransmit"
    FullyQualifiedName="Microsoft.BizTalk.DefaultPipelines.PassThruTransmit, Microsoft.BizTalk.DefaultPipelines, Version=3.0.1.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" Type="2" TrackingOption="None" Description="" />
  <PrimaryTransport>
    <Address>O365Mail://BizTalkTestAccount1@outlook.com</Address>
    <TransportType Name="Office365 Outlook Email" Capabilities="11" ConfigurationClsid="48b96e09-bd96-4f46-95ef-57accc55f23d" />
    <TransportTypeData><CustomProps><DefaultCC vt="8" /><UserTokenIdentifier vt="8">nnnnnnnn-nnnn-nnnn-nnnn-nnnnnnnnnnnn</UserTokenIdentifier><FileAttachments vt="8" /><DefaultTo vt="8">BizTalkTestAccount2@outlook.com</DefaultTo><EmailAddress vt="8">BizTalkTestAccount1@outlook.com</EmailAddress><AttachBizTalkMessageParts vt="11">0</AttachBizTalkMessageParts><DefaultSubject vt="8">Hi from BizTalkTestAccount1</DefaultSubject><DefaultImportance vt="8">Normal</DefaultImportance></CustomProps></TransportTypeData>

Note that the UserTokenIdentifier is tied to a signed-in account and a transport type. A static send port needs to be created for each Office 365 transport type in order to get an identifier for each. For a given sign-in, the value of the UserTokenIdentifier may be the same for all Office 365 transport types, but static send ports need to be created for each transport type nonetheless.

Other important points to be aware of:

- Static send ports can be deleted afterwards. They are not needed once the UserTokenIdentifiers are known.

- UserTokenIdentifiers remain valid regardless of whether a dynamic or static send port exists or not, and after port deletions.

- The same UserTokenIdentifier can be used by multiple ports.

Scenario

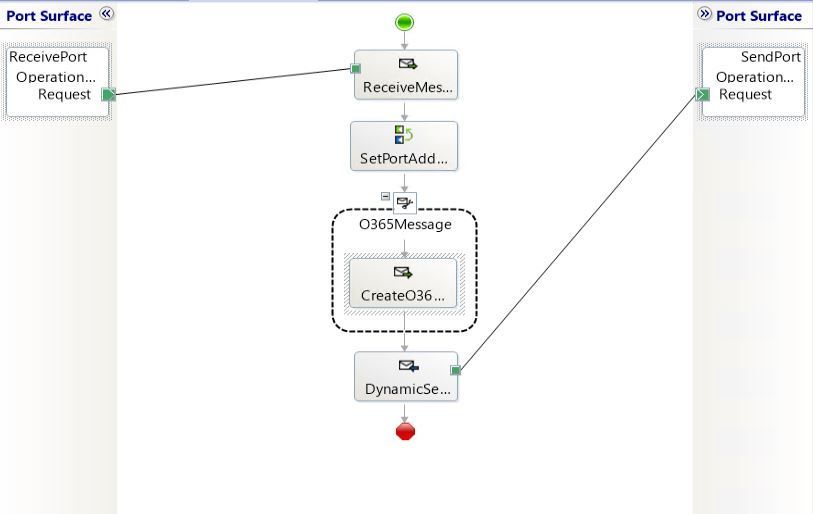

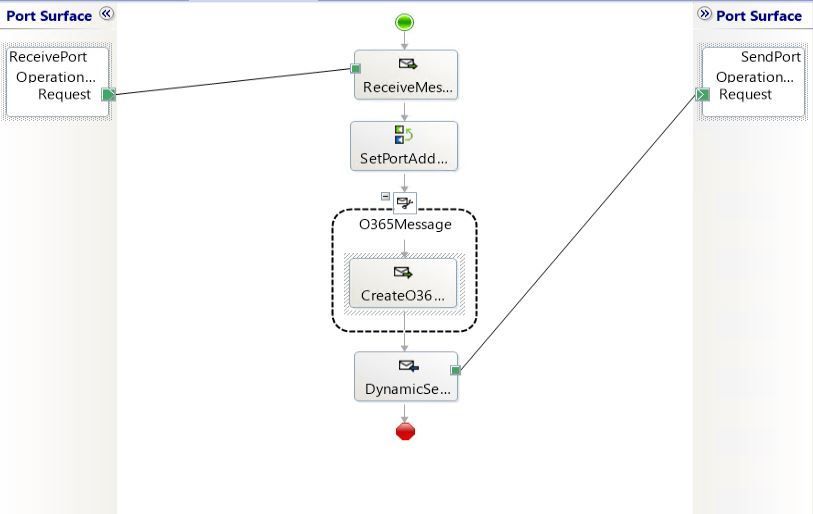

The demo scenario is based on a simple orchestration:

Received messages follow the schema:

| Element | Description |
| --- | --- |
| UserTokenIdentifier | Value of the UserTokenIdentifier acquired ahead of time by exporting the static ports. The UserTokenIdentifier is provided by received messages. In the general case, the UserTokenIdentifier can come from any source. For instance, one could keep a mapping of email addresses to UserTokenIdentifiers and make this mapping available for lookup at runtime. |
| PortType | Name of the adapter transport type: Office365 Outlook Email, Office365 Outlook Calendar, or Office365 Outlook Contact. |
| PortAddress | Any unique identifier for the dynamic send port. Typically it is a user-friendly string. Since we’re using an Office 365 account, we used an email address (e.g., BizTalkAccount1@outlook.com). |
| To | (only for O365 Email) Email address to send mails to, if the transport type is Office 365 Outlook Email. |
| Subject | (only for O365 Email) Email subject. |
| Calendar | (only for O365 Calendar) Calendar where the calendar items are created, when using Office 365 Outlook Calendar transport type. |
| Payload | <![CDATA[content]]> where content is one of: the email body; a calendar item in XML according to the Office365OutlookCalendarSend.xsd provided in the BizTalk installation folder under SDK\Schemas; or a contact item in XML following the Office365OutlookContactSend.xsd in the same location. CDATA is used to make the XML payloads (calendar and contact) opaque to XML parsers on the receive side. The payloads are used on the send side. |

In the case of calendar and contact items, the payloads are based on the schemas provided in the BizTalk installation folder under C:\Program Files (x86)\Microsoft BizTalk Server\SDK\Schemas (more info in Office 365 Outlook Adapters in Action).

Examples

Email

<ns0:Root xmlns:ns0="http://DynamicO365SendPort.ReceiveSchema">
  <UserTokenIdentifier>nnnnnnnn-nnnn-nnnn-nnnn-nnnnnnnnnnnn</UserTokenIdentifier>
  <PortType>Office365 Outlook Email</PortType>
  <PortAddress>BizTalkAccount1@outlook.com</PortAddress>
  <To>BizTalkAccount2@outlook.com</To>
  <Subject>Hello</Subject>
  <Payload><![CDATA[<?xml version="1.0" encoding="utf-8"?><EmailBody>body</EmailBody>]]></Payload>
</ns0:Root>

Calendar

<ns0:Root xmlns:ns0="http://DynamicO365SendPort.ReceiveSchema">
  <UserTokenIdentifier>nnnnnnnn-nnnn-nnnn-nnnn-nnnnnnnnnnnn</UserTokenIdentifier>
  <PortType>Office365 Outlook Calendar</PortType>
  <PortAddress>BizTalkAccount1@outlook.com</PortAddress>
  <To>BizTalkAccount2@outlook.com</To>
  <Subject>Hello</Subject>
  <Calendar>Calendar</Calendar>
  <Payload>
    <![CDATA[
    <ns0:Event xmlns:ns0="http://schemas.microsoft.com/BizTalk/Office365OutlookCalendar/Send">
      <subject>Let's meet</subject>
      <body><content>Info for the upcoming meeting</content></body>
      <start><dateTime>2020-06-25</dateTime><timeZone>Pacific Standard Time</timeZone></start>
      <end><dateTime>2020-06-25</dateTime><timeZone>Pacific Standard Time</timeZone></end>
      <attendees>
        <emailAddress><address>BizTalkAccount2@outlook.com</address><name>BizTalkAccount2</name></emailAddress>
      </attendees>
    </ns0:Event>
    ]]>
  </Payload>
</ns0:Root>

Contact

<ns0:Root xmlns:ns0="http://DynamicO365SendPort.ReceiveSchema">
  <UserTokenIdentifier>nnnnnnnn-nnnn-nnnn-nnnn-nnnnnnnnnnnn</UserTokenIdentifier>
  <PortType>Office365 Outlook Contact</PortType>
  <PortAddress>BizTalkAccount1@outlook.com</PortAddress>
  <To>BizTalkAccount2@outlook.com</To>
  <Subject>Hello</Subject>
  <Payload>
    <![CDATA[
    <ns0:Contact xmlns:ns0="http://schemas.microsoft.com/BizTalk/Office365OutlookContacts/Send">
      <displayName>displayName_3</displayName>
      more contact fields
    </ns0:Contact>
    ]]>
  </Payload>
</ns0:Root>
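To make the role of the CDATA section concrete, here is a minimal sketch in Python (not BizTalk code) of how a consumer could read one of these messages: the outer document is parsed first, and the payload is parsed separately as its own document. The function name `read_message` and the variable names are assumptions made for this illustration, and the sample is a copy of the Email example with its placeholder identifier.

```python
import xml.etree.ElementTree as ET

# Copy of the Email example; the identifier is the same placeholder.
SAMPLE = """<ns0:Root xmlns:ns0="http://DynamicO365SendPort.ReceiveSchema">
  <UserTokenIdentifier>nnnnnnnn-nnnn-nnnn-nnnn-nnnnnnnnnnnn</UserTokenIdentifier>
  <PortType>Office365 Outlook Email</PortType>
  <PortAddress>BizTalkAccount1@outlook.com</PortAddress>
  <To>BizTalkAccount2@outlook.com</To>
  <Subject>Hello</Subject>
  <Payload><![CDATA[<?xml version="1.0" encoding="utf-8"?><EmailBody>body</EmailBody>]]></Payload>
</ns0:Root>"""


def read_message(xml_text: str) -> dict:
    """Return the child elements of Root as a flat {name: text} dictionary."""
    root = ET.fromstring(xml_text)
    # Child elements carry no namespace prefix in the examples, so their
    # tags can be used directly as dictionary keys. CDATA content arrives
    # as plain text; strip() drops the whitespace around multi-line payloads.
    return {child.tag: (child.text or "").strip() for child in root}


fields = read_message(SAMPLE)

# The payload is opaque to the outer parse, so it is parsed on its own.
payload = ET.fromstring(fields["Payload"].encode("utf-8"))
```

After this runs, `fields` holds the routing values (`UserTokenIdentifier`, `PortType`, `PortAddress`, and so on) as strings, and `payload` is the root element of the inner document. The calendar and contact examples work the same way, with the inner root being the `Event` or `Contact` element.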

Dynamic Send Port Configuration

To keep things simple, an XML document is created on the fly and properties are added. The message creation expression is:

XMLMessage = new System.Xml.XmlDocument();

// Payload from the CDATA section of received messages
XMLMessage.LoadXml(ReceivedMessage.Payload);

// Token identifier (required)
XMLMessage(OfficeMail.UserTokenIdentifier) = ReceivedMessage.UserTokenIdentifier;
XMLMessage(OfficeCalendar.UserTokenIdentifier) = ReceivedMessage.UserTokenIdentifier;
XMLMessage(OfficeContact.UserTokenIdentifier) = ReceivedMessage.UserTokenIdentifier;

// Transport type (required)
SendPort(Microsoft.XLANGs.BaseTypes.TransportType) = ReceivedMessage.PortType;

// Port address
SendPort(Microsoft.XLANGs.BaseTypes.Address) = PortAddress; // Generated in separate expression.

// Additional fields for Mail transport type
XMLMessage(OfficeMail.To) = ReceivedMessage.To;
XMLMessage(OfficeMail.Subject) = ReceivedMessage.Subject;

Note: Additional promoted properties are available for the Mail transport type, such as CC, importance, and attached files (see Office 365 Outlook Email Adapter).

The port address is generated from received messages by using the following expression:

if (ReceivedMessage.PortType == "Office365 Outlook Email")
{
    PortAddress = "O365Mail://" + ReceivedMessage.PortAddress;
}
else if (ReceivedMessage.PortType == "Office365 Outlook Calendar")
{
    PortAddress = "O365Calendar://" + ReceivedMessage.PortAddress + "/MyCalendars/" + ReceivedMessage.Calendar;
}
else if (ReceivedMessage.PortType == "Office365 Outlook Contact")
{
    PortAddress = "O365Contact://" + ReceivedMessage.PortAddress;
}
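The address rule in this expression is easy to check in isolation. The sketch below restates it in Python purely as an illustration; `build_port_address` and `SCHEMES` are hypothetical names, and raising an error for an unknown transport type is a choice made for this sketch (the orchestration expression simply leaves PortAddress unset in that case).

```python
# URI schemes taken from the orchestration expression above.
SCHEMES = {
    "Office365 Outlook Email": "O365Mail://",
    "Office365 Outlook Calendar": "O365Calendar://",
    "Office365 Outlook Contact": "O365Contact://",
}


def build_port_address(port_type: str, address: str, calendar: str = "") -> str:
    """Mirror the if/else chain: prefix the address with the adapter scheme."""
    if port_type not in SCHEMES:
        # Assumption of this sketch, not documented adapter behavior.
        raise ValueError(f"unknown transport type: {port_type!r}")
    result = SCHEMES[port_type] + address
    if port_type == "Office365 Outlook Calendar":
        # Calendar addresses also name the target calendar.
        result += "/MyCalendars/" + calendar
    return result
```

With the values from the Calendar example, `build_port_address("Office365 Outlook Calendar", "BizTalkAccount1@outlook.com", "Calendar")` yields `O365Calendar://BizTalkAccount1@outlook.com/MyCalendars/Calendar`.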

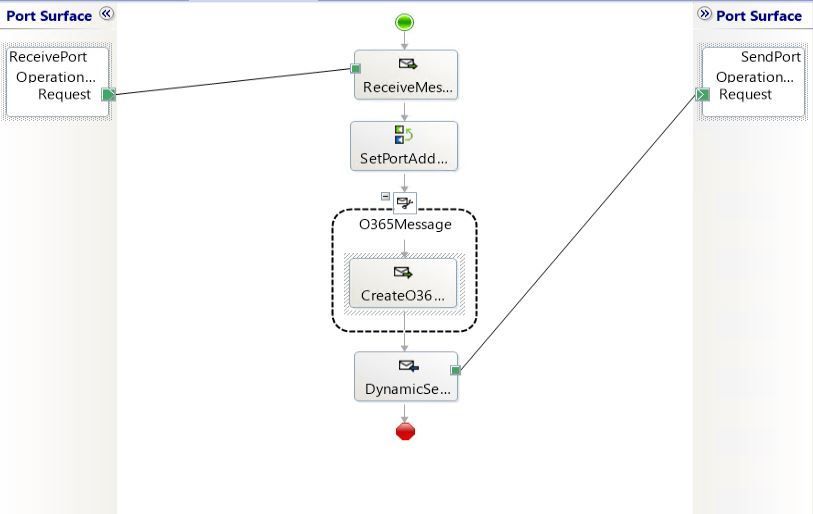

Putting it all together

The annotated view of the demo orchestration below illustrates the data flow. Received messages provide UserTokenIdentifiers and transport type to be used for the messages sent by the dynamic send port.

BizTalk code is attached to this post for reference.

As a fully managed service, Azure Data Explorer gives you best-in-class performance. You can get results from one billion records in less than a second without modifying the data or metadata. ADX can accept hundreds of billions of records a day and store this data reliably for months. ADX is specifically built to handle complex analytics queries on the data and return answers in a matter of seconds, even when the data is a combination of structured, semi-structured (JSON-like nested types), and unstructured (free text).

Azure Data Explorer is a managed Platform as a Service (PaaS), so you can reduce the management and operations overhead and save the cost of purchasing and maintaining hardware. Auto-scale capabilities are available for better cost control. Due to the efficient data ingestion process and querying engine, compute resources are consumed wisely and economically. The inverted-index column-store design enables ADX to have a superb data compression ratio, which reduces the amount of required storage compared to other services. You can run as many queries as you need, thanks to the optimized cost structure, without incurring additional costs. In addition, ADX provides a granular cache policy that customers can set to differentiate between hot data cache and cold data cache, and get even more control over the costs.

Connect Kibana to Azure Data Explorer with K2Bridge

Auto-generate the table and its mapping using One-Click Ingestion

In the Azure portal, under your cluster page, on the left menu, select Query (or use Azure Data Explorer Web UI/Desktop application) and run the following command. This command creates a table with the name ‘MyStormEvents’, with columns according to the schema of the data.
