by Contributed | Aug 16, 2023 | Technology

This article is contributed. See the original author and article here.

Introduction

In the realm of high-performance computing (HPC) and AI workloads, the need for agile and powerful storage solutions cannot be overstated. Azure Managed Lustre (AMLFS) has emerged as a game-changing solution, providing managed, pay-as-you-go file systems optimized for these data-intensive tasks. Building upon the success of its General Availability (GA) launch last month and in direct response to customer feedback that we received during our Preview period, we’re excited to unveil two new performance tiers for AMLFS, designed to cater to the diverse array of customer needs. This blog post explores the specifics of these new tiers and how they embody a customer-centric approach to innovation.

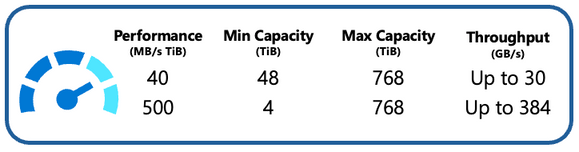

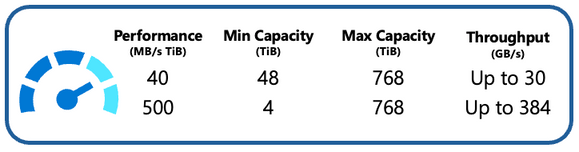

40 MB/s per TiB Option: Optimizing Cost and Capacity

The 40 MB/s per TiB performance tier represents a significant milestone for Azure Managed Lustre users. It directly addresses the needs of customers dealing with larger datasets, providing a lower-cost option without compromising on performance. Built on the exceptional speed, reliability, and low-latency characteristics of Azure Managed SSDs, the 40 MB/s per TiB configuration ensures that organizations can unlock the power of HPC and AI without incurring exorbitant costs. This tier offers a default maximum file system capacity of 768 TiB. With this option, Azure Managed Lustre becomes an even more accessible solution for enterprises seeking scalable and cost-effective storage.

500 MB/s per TiB Option: Tailored Performance for Massive Throughput Requirements

Azure recognizes that not all workloads require massive storage capacities. The introduction of the 500 MB/s per TiB performance tier specifically speaks to the needs of customers dealing with smaller datasets. Constructed on top of Azure Managed SSDs, this tier strikes a balance between performance and capacity, ensuring that users can access a storage solution that aligns precisely with their requirements. With a minimum file system size of 4 TiB, this option lets organizations avoid over-provisioning capacity just to meet their performance requirements, so they can manage their resources efficiently. This granular approach to performance tiers demonstrates Azure’s commitment to catering to a wide spectrum of customer needs.

Pricing

The introduction of these performance tiers underscores Azure’s dedication to listening to its customers and iterating its offerings to match real-world demands. While pricing details for these new options are set to be published next month, we want to be completely transparent. Below is a glimpse of the anticipated pricing across different regions for the 40 MB/s per TiB and 500 MB/s per TiB performance tiers:

| Region | 40 MB/s per TiB (per GB per month) | 500 MB/s per TiB (per GB per month) |
| --- | --- | --- |
| Australia East | $0.090 | $0.396 |
| Brazil South | $0.145 | $0.680 |
| Canada Central | $0.090 | $0.365 |
| Central India | $0.090 | $0.403 |
| Central US | $0.102 | $0.418 |
| East US | $0.083 | $0.340 |
| East US2 | $0.083 | $0.340 |
| North Europe | $0.083 | $0.374 |
| SouthCentral US | $0.100 | $0.408 |
| Southeast Asia | $0.090 | $0.421 |
| Sweden Central | $0.088 | $0.444 |
| UK South | $0.086 | $0.467 |
| West Europe | $0.088 | $0.444 |
| West US2 | $0.083 | $0.340 |
| West US3 | $0.083 | $0.340 |

[Note: Actual pricing details will be found on the official Azure pricing page next month (https://azure.microsoft.com/en-us/pricing/details/managed-lustre/)]
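To make the trade-off between the two tiers concrete, here is a minimal Python sketch that sizes a file system for a target aggregate throughput and estimates its monthly cost from the anticipated East US prices above. It rests on several assumptions that are not stated in this post: that the "per GB" price is applied per GiB (1 TiB = 1,024 billable units), that capacity can be provisioned in whole TiB, and that tier-specific minimum, maximum, and increment rules can be ignored. The function names are illustrative only and are not part of any Azure SDK.

```python
import math

# Anticipated East US prices from the pricing table (USD per GB per month).
EAST_US_PRICE_PER_GB = {40: 0.083, 500: 0.340}

# Assumption: the "per GB" price is applied per GiB, so 1 TiB = 1024 billable units.
GB_PER_TIB = 1024


def capacity_for_throughput(target_mb_s: float, tier_mb_s_per_tib: int) -> int:
    """Smallest whole-TiB capacity whose aggregate throughput meets the target."""
    return math.ceil(target_mb_s / tier_mb_s_per_tib)


def monthly_cost(capacity_tib: int, price_per_gb: float) -> float:
    """Estimated monthly storage cost in USD for a file system of the given size."""
    return round(capacity_tib * GB_PER_TIB * price_per_gb, 2)


def compare_tiers(target_mb_s: float) -> dict:
    """Return {tier: (capacity_tib, monthly_cost_usd)} for each performance tier."""
    result = {}
    for tier, price in EAST_US_PRICE_PER_GB.items():
        capacity = capacity_for_throughput(target_mb_s, tier)
        result[tier] = (capacity, monthly_cost(capacity, price))
    return result


if __name__ == "__main__":
    for tier, (capacity, cost) in compare_tiers(2000).items():
        print(f"{tier} MB/s per TiB: {capacity} TiB, about ${cost:,.2f} per month")
```

Under these assumptions, a 2,000 MB/s target needs 50 TiB on the 40 MB/s per TiB tier (about $4,249.60 per month) but only 4 TiB on the 500 MB/s per TiB tier (about $1,392.64 per month), which illustrates how the higher tier avoids over-provisioning capacity for throughput-bound workloads with small datasets.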

The introduction of the 40 MB/s per TiB and 500 MB/s per TiB performance tiers for Azure Managed Lustre marks a significant step forward in the realm of HPC and AI storage solutions. These options cater to a diverse range of workloads, from large-scale datasets to smaller, performance-intensive tasks. Azure’s responsiveness to customer feedback and its focus on aligning its services with actual user needs further solidify its position as a leading cloud service provider. As the industry continues to evolve, Azure Managed Lustre remains at the forefront of delivering innovative solutions that empower organizations to thrive in the digital age.

Learn more about using Azure Managed Lustre for your HPC and AI solutions

#AzureHPC #AzureHPCAI

by Contributed | Aug 15, 2023 | Technology

With AI deeply embedded, Teams is the smart place to work. But what does it really mean to be smart? For us, it means that when AI is present, it is there to help you level up your work in a way that does not replace you or take away from your agency. We believe that AI should augment and amplify your potential, abilities, and productivity. With Copilot in Teams, you’ll experience a whole new way to work and be able to do things you’ve never been able to do before.

When Microsoft 365 Copilot was first announced in March, it was the start of Teams adding intelligence in ways that unlock new possibilities across communication and collaboration. This blog highlights what’s been announced for Copilot in Teams and some examples of ways you can engage with Copilot to get the most value, whether or not you are participating in the Early Access Program (EAP). Let’s take a look at where things started and where we are today.

Our first milestone was announcing Microsoft 365 Copilot and Copilot in Teams meetings. The Microsoft 365 Copilot chat experience, available in Teams and in the browser, is an entirely new experience that works alongside you. It uses the power of Microsoft Graph to bring together data from across the internet, your documents, presentations, email, files, meetings, chats, and 3rd party applications. It has the potential to save you a lot of time and effort throughout your work day. By using your own words to ask Copilot a question or selecting a suggested prompt, right in Teams, you will be able to quickly find what you need to move your work forward. For example, rather than taking time to search through your recent emails, chats, meeting notes, presentations, and other documents to prepare for a meeting, you can just ask Copilot. Copilot will find and summarize everything related to the project your meeting is about so that you don’t have to. In addition, you can get updated on the latest news related to a specific topic, summarize outstanding project deliverables with potential risks, and so much more.

Copilot in Teams meetings makes your meetings even more effective by becoming a powerful tool that helps you complete common meeting tasks. You can get up to speed quickly on anything you’ve missed when you join late, capture unresolved issues before the meeting ends, list all the questions that were left unresolved, identify the right people for specific follow-ups, or even create a table of the pros and cons for a decision that was being discussed. One of my personal favorites to use following an active discussion is to create a table of all the questions asked and their answers. It makes it easy to share back with the team in case anyone else had similar questions, or to use as a starting point for a Frequently Asked Questions document. Just by using your own words or a suggested prompt, you can get the information you need without disrupting the discussion during a live meeting, or afterwards with Intelligent Recap.

Most recently, at Inspire, we announced the next wave of Copilot in Teams with Copilot in Teams Phone and Copilot in Teams Chat, bringing the same great functionality from meetings to impromptu chats and calls. With Copilot in Teams Phone, you can make and receive calls from your Teams app on any device and get real-time summarization and insights. You can ask Copilot to draft notes for you and highlight key points, such as names, dates, numbers, and tasks during your call, for both VoIP and PSTN calls. Imagine needing to kick off a new project with a partner outside of your organization. You give the partner a call to provide an outline of the project and discuss the tasks that they will need to collaborate with you on. As your conversation unfolds, Copilot is summarizing the call and capturing the partner’s questions on timing, their feedback, and next steps. After the call, you can use this information to quickly send a follow-up note to confirm the project plan based on your conversation.

Copilot in Teams chat will help you stay on top of your conversations by quickly getting up to speed, summarizing or recapping your chats, and synthesizing key information across your Teams chat threads. The best part? You’ll be able to do all this without interrupting your conversation flow or endlessly scrolling through chats. To fully understand the potential of Copilot in Teams chat, think about all the times that you have been away from work, whether in back-to-back meetings for a day or out on vacation for 3 days. While you were away, the conversations didn’t stop. Imagine that your team is working on a new marketing campaign. While you were away, your team was using chat to discuss potential ideas. Rather than endlessly scrolling through the chat conversation, you can just ask Copilot questions to get a quick summary of what you missed over the past 3 days, the top ideas that were discussed, and a list of action items to follow up on. You can even ask Copilot to create a table of the final ideas along with the pros and cons discussed. This way you get back in the loop quickly without having to interrupt the team, so everyone can continue to focus on next steps for the new campaign. Catching me up after being away from active chats and asking for documents I need to review are a few things I find myself regularly asking Copilot to do in Teams chat.

If you’re interested in going even deeper, check out this episode of Inside Microsoft Teams, where we explore how Copilot in Teams Meetings, Phone, and Chat work with the lead product managers responsible for building each experience. We are excited to continue to innovate and transform the way work happens, together. Stay tuned as we roll out more updates in the coming months.

by Contributed | Aug 14, 2023 | Technology

Contributors: Eliran Azulai and Yuval Pery

Monitoring, management, and innovation are core pillars of Azure Firewall. With this in mind, we are delighted to share the following new capabilities:

- Resource Health is now in public preview

- Embedded Firewall Workbooks are now in public preview

- Latency Probe Metric is now in general availability

When you monitor the firewall, it’s the end-to-end experience that we continuously strive to improve. Our aim is to empower you to make informed decisions quickly and meet your organization’s security demands. Because visibility into your network is so important, this release focuses on making it easier for you to monitor, manage, and troubleshoot your firewalls.

Azure Firewall is a cloud-native firewall as a service offering that enables customers to centrally govern and log all their traffic flows using a DevOps approach. The service supports both application and network-level filtering rules and is integrated with the Microsoft Threat Intelligence feed to filter known malicious IP addresses and domains. Azure Firewall is highly available with built-in auto-scaling.

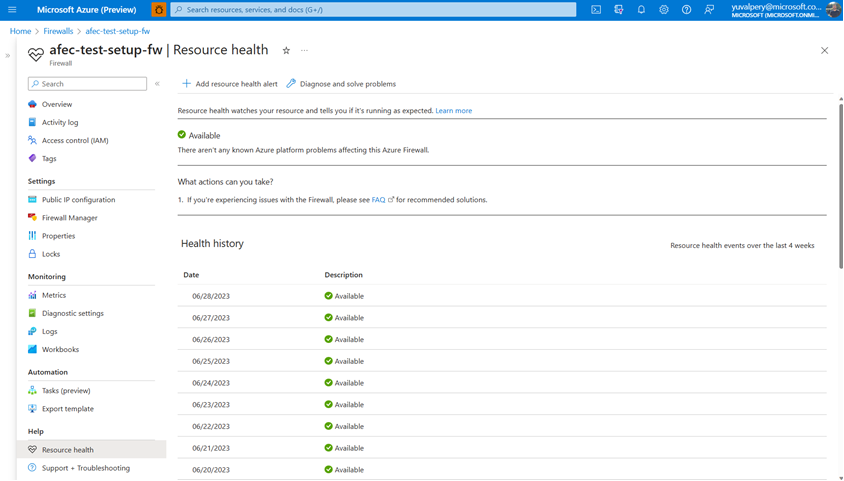

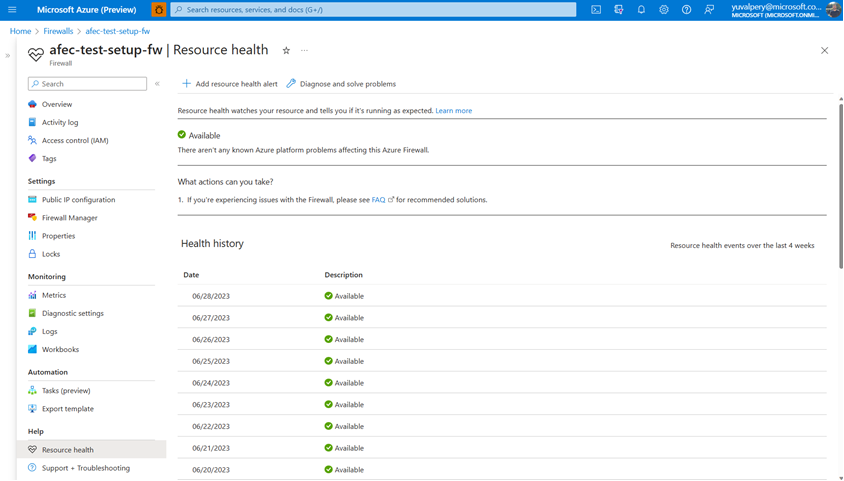

Resource Health is now in public preview

With the Azure Firewall Resource Health check, you can now view the health status of your Azure Firewall and address service problems that may affect your Azure Firewall resource. Resource Health allows IT teams to receive proactive notifications regarding potential health degradations and recommended mitigation actions for each health event type. For instance, you can determine if the firewall is running as expected with an “Available” status or if there was downtime due to platform events with an “Unavailable” status.

This preview is automatically enabled on all firewalls and no action is required to enable this functionality. For more information, see Azure Resource Health overview – Azure Service Health | Microsoft Learn

Easily view the resource health status and history of your firewall

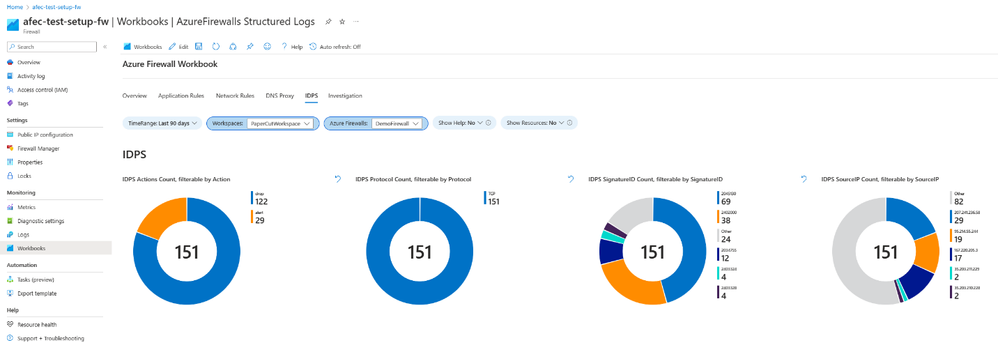

Embedded Firewall Workbooks are now in public preview

The Azure Firewall Workbook presents a dynamic platform for analyzing Azure Firewall data. Within the Azure portal, you can utilize it to generate visually engaging reports. By accessing multiple Azure Firewalls deployed throughout your Azure infrastructure, you can integrate them to create cohesive and interactive experiences.

With the Azure Firewall Workbook, you can extract valuable insights from Azure Firewall events, delve into your application and network rules, and examine statistics regarding firewall activities across URLs, ports, and addresses. It enables you to filter your firewalls and resource groups, and effortlessly narrow down data sets based on specific categories when investigating issues in your logs. The filtered results are presented in a user-friendly format, making it easier to comprehend and analyze.

Now, Azure Firewall predefined workbooks are two clicks away and fully available from the Monitor section in the Azure Firewall Portal UI:

View valuable insights in a dashboard view using Azure Firewall Embedded Workbooks

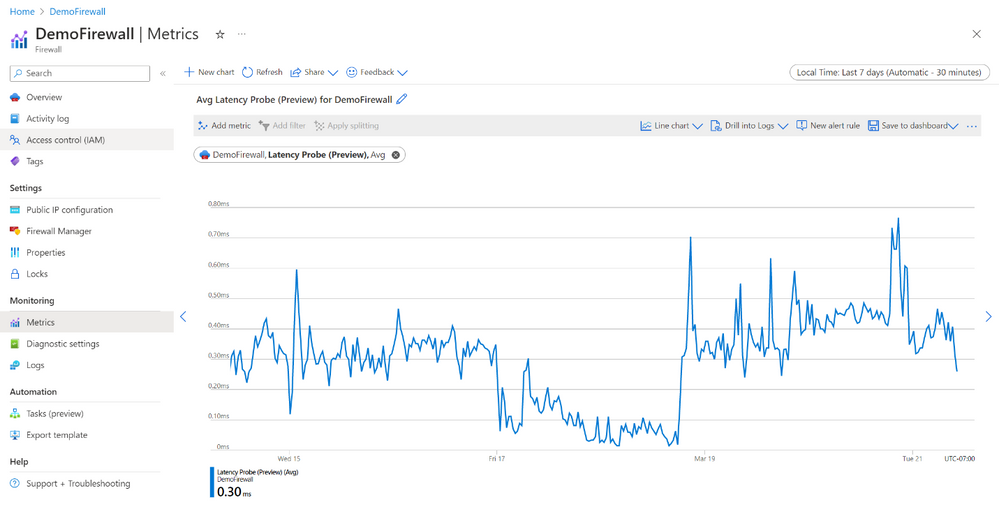

Latency Probe metric is now generally available

The Latency Probe metric is designed to measure the overall latency of Azure Firewall and provide insight into the health of the service. IT administrators can use the metric to monitor and alert on observable latency, and to diagnose whether Azure Firewall is the cause of latency in a network. This troubleshooting metric helps you proactively address potential issues affecting traffic or services in your infrastructure.

Azure Firewall latency can be caused by various reasons, such as high CPU utilization, throughput, or networking issues. As an important note, this tool is powered by Ping Mesh technology, which means that it measures the average latency of the ping packets to the firewall itself. The metric does not measure end-to-end latency or the latency of individual packets.
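As a rough illustration of the monitoring and alerting use case, the following Python sketch flags periods where the average of consecutive latency samples stays above a threshold. It does not call Azure Monitor: the sample values, the 5 ms threshold, and the three-sample window are all hypothetical, and in practice the samples would come from the Latency Probe metric and the alert rule would be defined in Azure Monitor itself.

```python
from statistics import mean


def sustained_breaches(samples_ms, threshold_ms, window):
    """Return the start indexes of windows whose average latency exceeds the threshold.

    Averaging over a window (rather than alerting on single samples) avoids
    reacting to one-off spikes in the probe latency.
    """
    breaches = []
    for start in range(len(samples_ms) - window + 1):
        if mean(samples_ms[start:start + window]) > threshold_ms:
            breaches.append(start)
    return breaches


if __name__ == "__main__":
    # Hypothetical latency probe samples in milliseconds, one per interval.
    samples = [2.1, 2.3, 2.0, 9.5, 11.2, 10.8, 2.2]
    print(sustained_breaches(samples, threshold_ms=5.0, window=3))
```

Because the metric reports average ping latency to the firewall rather than end-to-end latency, a check like this can indicate that the firewall itself is slow, but a clean result does not rule out latency elsewhere in the path.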

View the overall latency of the Azure Firewall using the Latency Probe metric

Learn more

When you’re ready to try these new capabilities, just navigate to Azure Firewall Monitoring in the Azure Portal, and select Logs, Metrics, or Workbooks to use these new features. If you do not have logs, navigate to Azure Firewall Diagnostic settings to get started. And continue to provide us with feedback! To give us feedback, just select the feedback icon in the Azure Portal. Your feedback is invaluable in crafting an improved experience that caters to your specific needs.

Learn more in the following support articles:

Latency Probe metric – Microsoft Learn

Resource Health – Microsoft Learn

Azure Firewall Workbook – Microsoft Learn

Azure Firewall – Microsoft Learn

Azure Firewall Manager – Microsoft Learn

About the author

Suren Jamiyanaa is a Product Manager in Azure Network Security. She joined the team in 2019, where she focuses on innovating the Azure Firewall product for customers as part of a modern cloud network strategy.

by Contributed | Aug 13, 2023 | Technology

by Contributed | Aug 11, 2023 | Technology

Disclaimer

This document is not meant to replace any official documentation, including those found at docs.microsoft.com. Those documents are continually updated and maintained by Microsoft Corporation. If there is a discrepancy between this document and what you find in the Compliance User Interface (UI) or inside of a reference in docs.microsoft.com, you should always defer to that official documentation and contact your Microsoft Account team as needed. Links to the docs.microsoft.com data will be referenced both in the document steps as well as in the appendix.

All the following steps should be done with test data, and where possible, testing should be performed in a test environment. Testing should never be performed against production data.

Target Audience

Microsoft customers who want to better understand Microsoft Purview.

Document Scope

The purpose of this document (and series) is to provide insights into various use cases, announcements, customer-driven questions, etc. It is not meant as the final answer to all Purview-related questions.

Topics for this blog entry

Here are the topics covered in this issue of the blog:

- Topic – Purview related eDiscovery and Office Message Encrypted (OME) emails

- Use Case #1 – legal or HR review of Office Message Encrypted (OME) emails within Purview eDiscovery

- Use Case #2 – legal or HR review of OME emails that have been exported from Purview to a PST and/or Exchange Mailbox and then opened within an Outlook thick client.

Out-of-Scope

This blog series and entry is only meant to provide information, but for your specific use cases or needs, it is recommended that you contact your Microsoft Account Team to find other possible solutions to your needs.

OME and eDiscovery

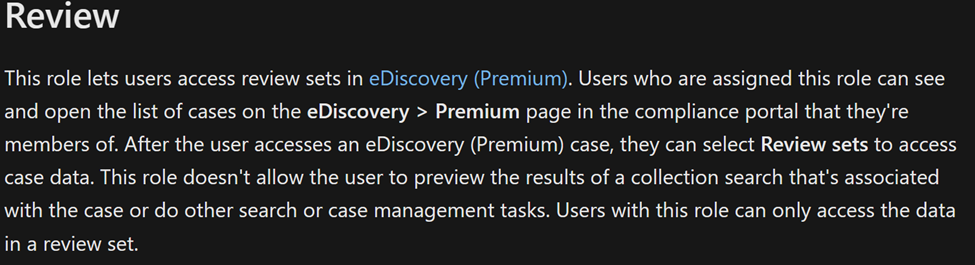

1 – Roles Based Access Control (RBAC) for Purview

If you want to leverage Purview RBAC roles to access and view emails/files, you will need to open the Purview eDiscovery console. The Purview RBAC roles are not “usable” within Outlook thick or thin clients.

Here is a link to the RBAC information and a screenshot related specifically to the Review role within that RBAC:

Assign eDiscovery permissions in the Microsoft Purview compliance portal | Microsoft Learn

2 – Accessing emails that have been encrypted via OME inside of Purview eDiscovery

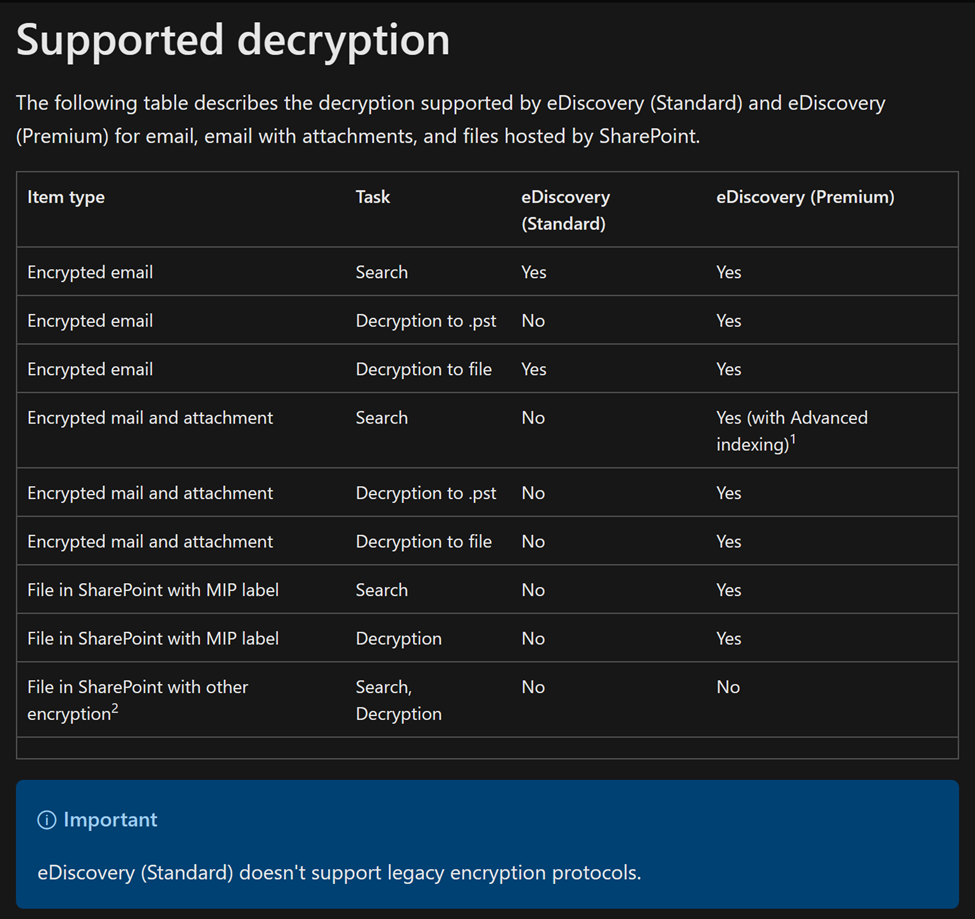

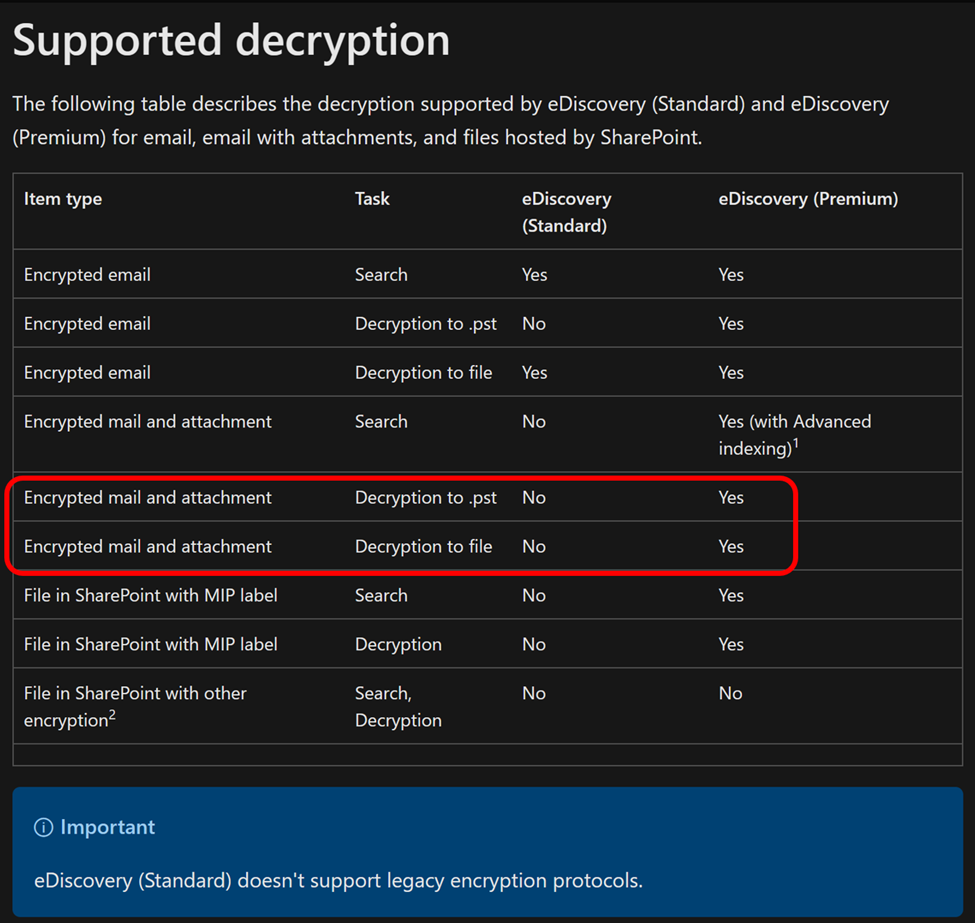

- Let us first understand how Purview deals with encrypting and decrypting data as it relates to eDiscovery. The following chart from Microsoft documentation sheds more light on what is decrypted in the Standard and Premium versions of Purview eDiscovery.

Decryption in Microsoft Purview eDiscovery tools | Microsoft Learn

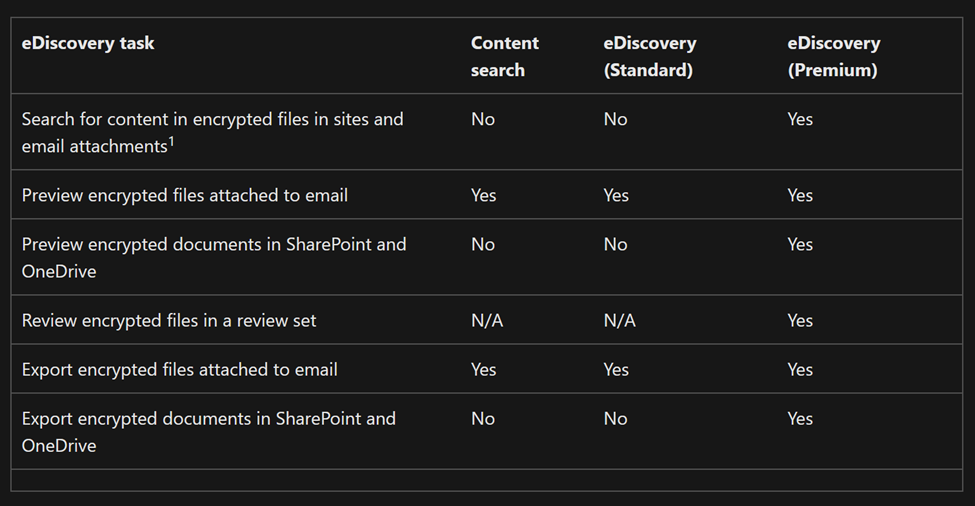

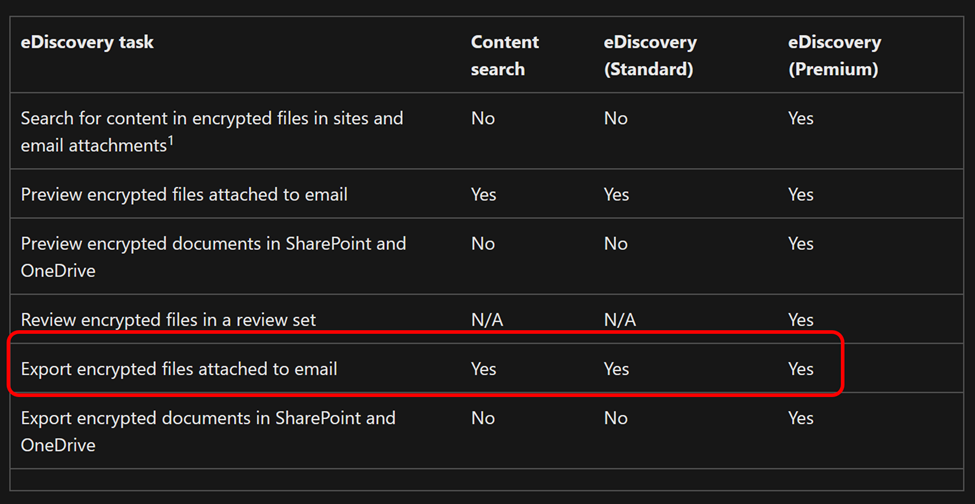

- The following is the link and screenshot to the Microsoft documentation that tells you what Purview eDiscovery tasks can be run on encrypted data.

Decryption in Microsoft Purview eDiscovery tools | Microsoft Learn

- In conclusion, if you have the proper version of Purview eDiscovery (i.e., Premium) and the proper RBAC role, you can view emails that have been encrypted using OME.

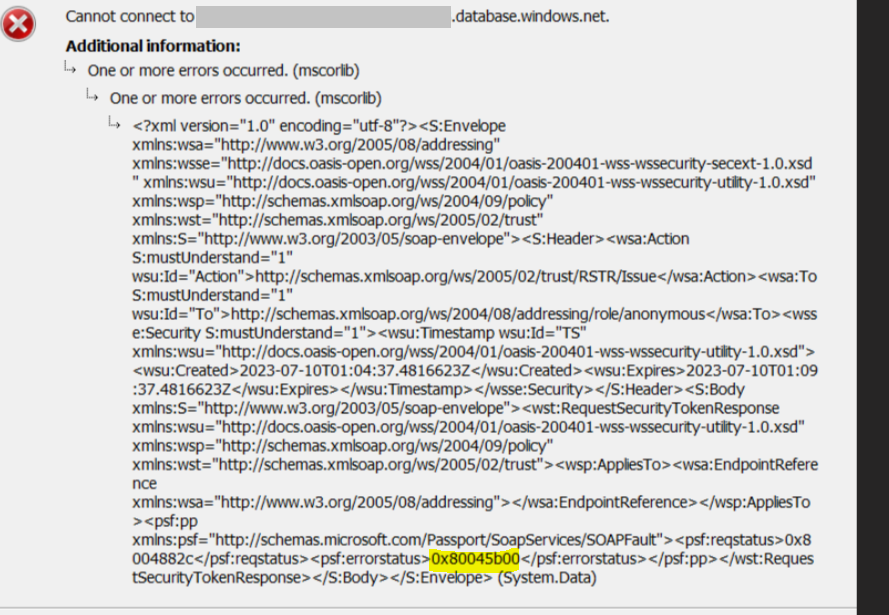

3 – Accessing emails that have been encrypted via OME and then exported to a PST and/or Exchange mailbox

Before we start this section, please note that review of eDiscovery related data from within Outlook is not a Microsoft best practice. We recommend you perform your reviews from within Purview eDiscovery or another eDiscovery solution designed for legal and HR investigations.

With that being stated, let us look at what options are available if you do decide to review OME-encrypted email that has been exported from Purview eDiscovery.

- First, let us return to the supported decryption chart from above, where we can see which versions of Purview support decryption of data when exporting to PST files.

Decryption in Microsoft Purview eDiscovery tools | Microsoft Learn

- Next, let us again return to one of the charts above. Notice that you can export encrypted data (to email/PST). This applies to the export of encrypted data but DOES NOT decrypt the data as part of the export process.

Decryption in Microsoft Purview eDiscovery tools | Microsoft Learn

- So, this raises the following question:

- Question – if my data is exported and still encrypted with OME, how can I read OME emails from the exported PST file?

- Answer – The official answer is you need additional rights tied back to RMS, in particular the RMS Decrypt role. Please note the information in the following link and screenshot for specifics.

Decryption in Microsoft Purview eDiscovery tools | Microsoft Learn

From the link and screenshot above, there are 2 items listed:

- You need to assign the RMS Decrypt role to your user performing the review. This is separate from the Reviewer role specific to Purview eDiscovery.

- It is recommended that you run the ScanPST.exe tool on the exported PST. This tool does not decrypt data; it only verifies and repairs PST files that might have become corrupted.

Important Note

For a deeper understanding of what rights are needed and what workflow you should follow (if you are pursuing this email review process), you should contact your Microsoft Account Manager or a certified Microsoft Partner.

Appendix and Links

by Contributed | Aug 11, 2023 | Technology

Learn how you can save money with Azure savings plan recommendations, with your host Thomas Maurer and Azure savings plan expert Obinna Nwokolo.

Azure savings plan feature recap.

Azure savings plan for compute is an easy and flexible way to save money on compute services spend compared to pay-as-you-go (PAYG) prices. You commit to an hourly amount to spend over a one-year or three-year term, and in exchange you get significant discounts over the on-demand prices.

Purchase savings plans in the Azure portal.

You can purchase an Azure savings plan in the Azure Portal.

- Log in to the Azure Portal.

- In the search box, search for “Savings plan” and select it.

- You’ll be brought to the Savings plan blade where you can click “Add” and then you can go through the purchasing process just by filling out the necessary information.

How are savings plan recommendations generated?

Thomas mentioned that when he talks to customers using the savings plan, he gets a few common questions, such as “How much commitment should I make?” or “How do I select the right savings plan?”, because the answer varies from case to case. One other question he gets asked is “How are Azure savings plan recommendations generated?”

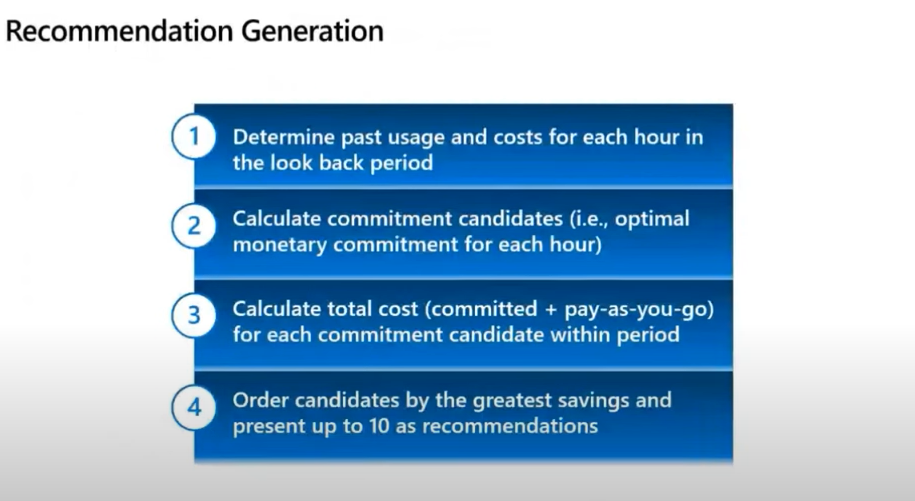

From Obinna’s experience, it is very difficult to figure out exactly how much to commit, so Microsoft tries to do that work for you. Microsoft looks at your hourly usage: what you’ve spent on savings plan eligible resources over the last 7, 30, and 60 days. Then Microsoft does some calculations to determine what would have been the optimal savings plan amount for each hour within the 7, 30, or 60 day timeframe. Next, Microsoft takes each optimal savings plan amount and simulates what would have happened, and what you would have saved, had you made that savings plan purchase. After all those simulations have been done, Microsoft takes the top ten simulations that resulted in positive savings and presents those to you as recommendations. Your recommendations are based on what you’re actually spending.

Demo of Azure savings plan and explanation of calculations.

The video below includes a demo segment. For example, let’s talk about a customer that would be a good fit for Azure savings plan. Our fictional customer Contoso runs a helpdesk service, and because they serve customers globally, it’s important for them to leverage compute services in multiple regions over the course of the day.

This makes them a really good candidate for Azure savings plans. In this example, Microsoft generates the recommendation in several steps.

- Microsoft figures out what you spent in each hour and derives the “commitment candidates”: the amounts that would have been the optimal savings plan amount for each hour.

- Then some simulations are run where Microsoft figures out what you would have saved if you had that commitment.

- After simulating all 720 commitment candidates, Microsoft selects the top 10 and presents them to you as a customer.

To recap, Microsoft starts by looking at your usage. In this scenario, Microsoft looks at Contoso’s usage over 720 hours, going through hour one, hour two, all the way down to the last hour of the period, and asks, “What was the usage, and what did they actually spend?”

You can see how this is calculated in this example: a little over $7.00, so $7.32 and fractions of that. Then Microsoft says, “Let’s figure out that same answer for all of the remaining hours within that 30-day look-back period,” which in this case is 720 distinct hours (24 hours x 30 days = 720 hours). Now that Microsoft knows what they were spending for every single hour, it wants to understand the optimal savings plan amount for each of those hours.

Microsoft then applies the Azure savings plan discount for each one of those hours and calculates the resulting optimal savings plan commitment amount. In this example, the on-demand cost of their usage was $7.32 for hour one. When the savings plan discounts are applied, we find that the right amount for them, from a savings plan perspective, is a little more than $3.30.

So, we now know this is the optimal amount for hour number one. Let’s take hour number one’s value and apply it to hour number two, hour number three, and all the remaining hours in that 720-hour window. We follow the benefit application rules that exist for savings plan: look at the meter that has the greatest discount, apply the savings plan to that first, and then work our way down. As we go through that process in this particular example, we find that a $3.30 savings plan isn’t actually enough to fully cover that first meter, so there’s going to be some overage. We fully consume the savings plan and then incur an on-demand cost of $1.57. Then we move on to the second meter and, because the savings plan is gone, charge that one at the full on-demand rate. The net of this is a total simulated cost of $6.87. That’s still better than the on-demand cost that the customer had coming in, which was $7.32. So this $3.30 savings plan for hour number two resulted in a net savings of $0.44, which is progress. As we go through each one of those hours, if a candidate results in positive savings, we keep it because it has potential; if it doesn’t, we discard it. We run this analysis for hour number two, hour number three, all the way to hour 720. When we finish every individual hour and every individual simulation, we compare all of them.
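The benefit application step for a single hour can be sketched in Python as follows. This is a simplified model under stated assumptions, not Microsoft’s implementation: each meter is represented as a hypothetical `(on_demand_cost, discount)` pair, the commitment is consumed at savings plan prices starting with the most heavily discounted meter, and whatever usage the commitment cannot cover is charged at the on-demand rate. The figures in the demo are made up and are not the Contoso numbers from the video.

```python
def simulate_hour(commitment, meters):
    """Simulated cost of one hour under an hourly savings plan commitment.

    meters: list of (on_demand_cost, discount) pairs, with discount in [0, 1).
    Returns the commitment itself (paid whether or not it is fully used)
    plus any usage that is left to be charged at on-demand rates.
    """
    remaining = commitment
    overage = 0.0
    # Benefit application rule from the text: greatest discount first.
    for on_demand, discount in sorted(meters, key=lambda m: m[1], reverse=True):
        plan_price = on_demand * (1 - discount)  # this meter at savings plan rates
        if plan_price <= remaining:
            remaining -= plan_price  # meter fully covered by the plan
        else:
            covered_fraction = remaining / plan_price
            overage += on_demand * (1 - covered_fraction)  # rest billed on demand
            remaining = 0.0
    return commitment + overage


if __name__ == "__main__":
    # Hypothetical hour: two meters with 50% and 25% savings plan discounts.
    meters = [(6.00, 0.50), (2.00, 0.25)]
    on_demand_total = sum(cost for cost, _ in meters)
    simulated = simulate_hour(2.40, meters)
    print(f"on-demand ${on_demand_total:.2f}, simulated ${simulated:.2f}, "
          f"savings ${on_demand_total - simulated:.2f}")
```

In this made-up hour, a $2.40 commitment covers only 80% of the first meter, so $1.20 of that meter and all $2.00 of the second meter are billed on demand. The simulated cost is $5.60 against $8.00 on demand, a saving of $2.40, which mirrors the shape of the example described above.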

In this case, again, we have 720 candidates, and we simulate each one of those against every single hour. The result is a little over 500,000 calculations in this period (720 candidates x 720 hours = 518,400). When we finish this whole process, we select up to the top 10 candidates that actually resulted in savings, and that’s what you end up seeing within the Azure portal UI when you click through billing into the hourly commitment.
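The overall candidate search can be sketched the same way. The Python below is an illustrative model only, assuming each hour in the look-back window is a list of hypothetical `(on_demand_cost, discount)` meters and that an hour’s commitment candidate is simply that hour’s usage priced at savings plan rates. The function names and the synthetic usage data are invented for the example.

```python
def hour_cost(commitment, meters):
    """Simulated cost of one hour: the commitment plus any on-demand overage."""
    remaining, overage = commitment, 0.0
    for on_demand, discount in sorted(meters, key=lambda m: m[1], reverse=True):
        plan_price = on_demand * (1 - discount)
        if plan_price <= remaining:
            remaining -= plan_price
        else:
            overage += on_demand * (1 - remaining / plan_price)
            remaining = 0.0
    return commitment + overage


def recommend(hours, top_n=10):
    """Return (recommendations, simulations_run).

    hours: one list of (on_demand_cost, discount) meters per hour in the
    look-back window. Each recommendation is (hourly_commitment, total_savings).
    """
    on_demand_total = sum(cost for meters in hours for cost, _ in meters)
    # One commitment candidate per hour: that hour's usage at savings plan rates.
    candidates = [sum(c * (1 - d) for c, d in meters) for meters in hours]
    results, simulations = [], 0
    for candidate in candidates:
        total = 0.0
        for meters in hours:
            total += hour_cost(candidate, meters)
            simulations += 1
        savings = on_demand_total - total
        if savings > 0:  # discard candidates that would not have saved money
            results.append((candidate, savings))
    results.sort(key=lambda r: r[1], reverse=True)
    return results[:top_n], simulations


if __name__ == "__main__":
    # Synthetic 30-day window (720 hours); all figures are made up.
    window = [[(4.0 + (h % 24) * 0.25, 0.5), (2.0, 0.2)] for h in range(24 * 30)]
    top, runs = recommend(window)
    print(f"{runs:,} hour simulations, {len(top)} recommendations")
    for commitment, savings in top:
        print(f"  ${commitment:.2f}/hour -> ${savings:,.2f} saved over the window")
```

With a 720-hour window the nested loop runs 720 x 720 = 518,400 hour simulations, which lines up with the figure quoted above. Keeping one candidate per hour, rather than de-duplicating equal amounts, follows the description in the text; a real implementation may well differ.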

You’ll see in this example that the recommendations presented range from $1.43 all the way to $1.43 and a little bit more change. Along with the commitment amount, we provide additional information: your expected savings percentage as well as the expected coverage, which is the coverage that this savings plan and any other reservations and/or savings plans you’ve previously purchased would have provided for you.

We think that’s really good information to help you make the right choice, but you still have the ability to enter a custom amount if you want to go a little lower. We wouldn’t recommend going over, because as you go over you have additional waste, and again this is focused on making sure we provide you with the greatest cost savings.

Resources to help you learn more about Azure savings plan.

Microsoft has lots of resources to help you figure this out. Learn more at the Azure savings plan for compute https://aka.ms/savingsplan-compute to understand broadly how savings plan works. You can also read about Azure savings plan for compute at Microsoft Learn https://aka.ms/savingplans/doc and about Cost Management APIs at Microsoft Learn https://aka.ms/CostManagement/API.

Recommended Next Steps:

If you’d like to learn more about the general principles prescribed by Microsoft, we recommend Microsoft Cloud Adoption Framework for platform and environment-level guidance and Azure Well-Architected Framework. You can also register for an upcoming workshop led by Azure partners on cloud migration and adoption topics; these workshops incorporate click-through labs to ensure effective, pragmatic training.

You can view the whole video below.
