by Scott Muniz | Jul 31, 2020 | Alerts, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

This week some important Windows Virtual Desktop features became generally available (hello audio and video redirection!), the Android Remote Desktop client now also supports WVD, and Azure AD App Proxy now supports the Remote Desktop Services web client. Azure Blob storage announced preview support for connecting over the Network File System 3.0 protocol. And the Azure IoT Device Provisioning Service now supports locking down ingress access to devices connecting through a specified virtual network.

Windows Virtual Desktop and Remote Desktop

New Windows Virtual Desktop capabilities now generally available

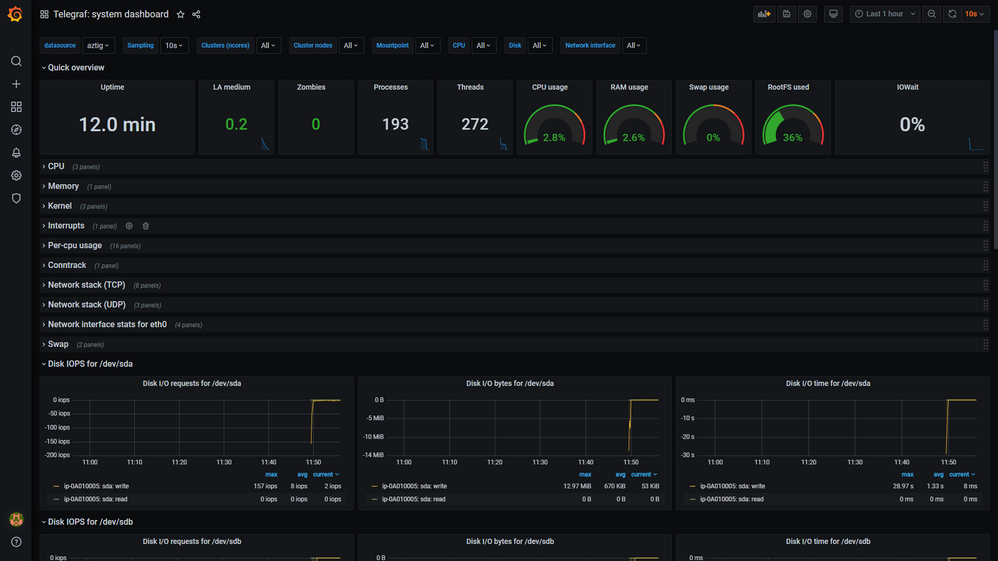

New Windows Virtual Desktop capabilities are now generally available (GA), including Azure portal integration for deployment and management, and new audio/video redirection capabilities that provide a seamless meeting and collaboration experience in Microsoft Teams.

Windows Virtual Desktop blade in the Azure Portal


Remote Desktop client for Android now supports Windows Virtual Desktop connections

The new Remote Desktop client for Android now supports Windows Virtual Desktop connections. This new client (version 10.0.7 or later) features refreshed UI flows for an improved user experience.

The app also integrates with Microsoft Authenticator on the device to enable conditional access when subscribing to Windows Virtual Desktop workspaces. View the announcement here.

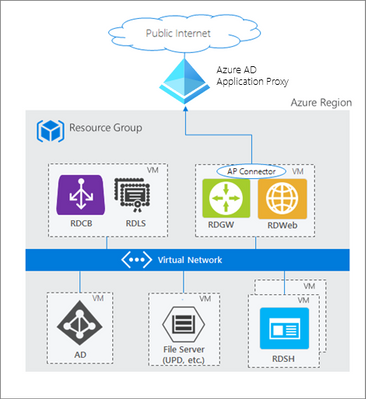

Azure AD Application Proxy now supports the Remote Desktop Services web client

You can now use the RDS web client even when App Proxy provides secure remote access to RDS. The web client works on any HTML5-capable browser such as Microsoft Edge, Internet Explorer 11, Google Chrome, Safari, or Mozilla Firefox (v55.0 and later). You can push full desktops or remote apps to the Remote Desktop web client. The remote apps are hosted on the virtualized machine but appear as if they’re running on the user’s desktop like local applications. The apps also have their own taskbar entry and can be resized and moved across monitors.

How Azure AD App Proxy works in an RDS deployment


Learn about the requirements for updating your App Proxy connectors and configuring RDS to work with App Proxy.

NFS 3.0 support for Azure Blob storage is now in preview

Azure Blob storage is the only storage platform that supports the Network File System (NFS) 3.0 protocol over object storage natively (no gateway or data copying required), while retaining the economics of object storage. This is great news if you need to preserve your legacy data access methods but want to migrate the underlying storage to Azure Blob storage. It also lets you reuse code from on-premises solutions to access files. Learn more, including how to mount a blob container using NFS 3.0.
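As a rough illustration of the mount step, here is a minimal bash sketch. It assumes the storage account has already been enabled for NFS 3.0 as described in the preview documentation, that the client runs in a network allowed to reach the account, and that the mount options (sec=sys,vers=3,nolock,proto=tcp) match the ones in the preview mount instructions; check them against the current docs. The function name mount_blob_nfs and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: mount a Blob container over NFS 3.0 (preview).
# Assumes the storage account is already configured for NFS 3.0 access.
# Set DRY_RUN=1 to print the mount command instead of running it.
mount_blob_nfs() {
  local account="$1" container="$2" mountpoint="$3"
  # The export path has the form /<storage-account-name>/<container-name>.
  local -a cmd=(mount -o sec=sys,vers=3,nolock,proto=tcp
    "${account}.blob.core.windows.net:/${account}/${container}" "$mountpoint")
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "${cmd[*]}"
  else
    sudo mkdir -p "$mountpoint" && sudo "${cmd[@]}"
  fi
}

# Example (placeholder names): DRY_RUN=1 mount_blob_nfs mystorageacct mycontainer /mnt/blob
```

Running it without DRY_RUN performs the actual mount, which also requires an NFS client package on the machine (for example nfs-utils on CentOS or nfs-common on Ubuntu).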

Azure IoT Device Provisioning Service VNET ingress support is now available

The Azure IoT Device Provisioning Service VNET ingress support feature enables users to lock down DPS ingress access to devices connecting through a specific VNET. DPS egress to IoT Hub uses an internal service-to-service mechanism and does not currently operate over a dedicated VNET.

This new core capability improves connectivity security and is especially significant for customers in the industrial and enterprise sectors with stringent network and compliance requirements. For details, see the documentation Azure IoT Hub Device Provisioning Service (DPS) support for virtual networks.

MS Learn Module of the Week

This week we couldn’t decide, so it’s TWO modules of the week, both about Azure Resource Manager templates.

- Deploy to multiple Azure environments by using ARM templates

Now that you understand how ARM templates work, in this module you make your ARM template reusable across environments by adding variables and expressions with Resource Manager functions. You also improve the tracking and organization of your deployed resources by using tags. Finally, you make your deployments more flexible by using parameter files.

Those were our highlights this week – tell us about yours! Was something else on the Azure announcements blog relevant to you? Will these announcements help your organization this week? Let us know in the comments.

by Scott Muniz | Jul 30, 2020 | Alerts, Microsoft, Technology, Uncategorized


What is the issue?

We discovered an issue that affects verification of tenant certificates, and we are resolving it by renewing the tenant CA certificates for all impacted tenants. As described in the blog post Azure Sphere tenant CA certificate management: certificate rotation, the Azure Sphere tenant certificate authority (CA) certificates that were issued two years ago are being renewed automatically. The Azure Sphere 20.07 SDK, released on July 29, 2020, includes features that let you download the renewed certificates for your tenants. For certificates created between June 16, 2020 21:00 UTC and July 28, 2020 00:15 UTC, verification using OpenSSL may fail. The failure is caused by a mismatched signature algorithm identifier in the certificate; it does not compromise the security of these certificates.

Who is impacted?

If the tenant CA certificate issuance date is after June 16, 2020 and before July 28, 2020, the tenant CA certificate may fail to verify with OpenSSL. The Azure Sphere Security Service will renew and activate all impacted certificates as a corrective measure.
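To check whether a certificate's issue date falls in the window above, a minimal bash sketch follows. It assumes GNU date (standard on most Linux distributions) and treats the window as starting at June 16, 2020 21:00 UTC (inclusive) and ending at July 28, 2020 00:15 UTC (exclusive); the boundary handling and the function name in_impacted_window are assumptions, and the helper is not part of the Azure Sphere SDK.

```shell
#!/usr/bin/env bash
# Hypothetical helper: does a certificate's issue date fall in the impacted window?
# Requires GNU date (date -d). Window: start inclusive, end exclusive (an assumption).
IMPACT_START="2020-06-16 21:00:00 UTC"
IMPACT_END="2020-07-28 00:15:00 UTC"

in_impacted_window() {
  local issued start end
  # $1 is a date string such as OpenSSL's notBefore value, e.g. "Jul  1 12:00:00 2020 GMT".
  issued=$(date -u -d "$1" +%s 2>/dev/null) || return 2
  start=$(date -u -d "$IMPACT_START" +%s)
  end=$(date -u -d "$IMPACT_END" +%s)
  [ "$issued" -ge "$start" ] && [ "$issued" -lt "$end" ]
}

# Example: read the issue date from a downloaded certificate (file name is hypothetical).
# notbefore=$(openssl x509 -in tenant-ca.cer -noout -startdate | cut -d= -f2)
# in_impacted_window "$notbefore" && echo "Certificate was issued in the impacted window"
```

If the downloaded file is DER-encoded rather than PEM, add -inform DER to the openssl command.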

What actions should you take?

| Condition | Instructions |
| --- | --- |
| You have not downloaded the tenant CA certificate or tenant CA certificate chain that was issued between June 16, 2020 and July 28, 2020. (If you run ‘azsphere ca list’ in your Azure Sphere Development command prompt, you will see this issue date listed as “Start date”.) | You don’t have any actions to take; these instructions don’t apply to you. |
| You have downloaded the tenant CA certificate or tenant CA certificate chain that was issued between June 16, 2020 and July 28, 2020. | Between August 5, 2020 and August 18, 2020, follow these steps to ensure that there is no break in service: (1) Run ‘azsphere ca list’ in your Azure Sphere Development command prompt. (2) Use the most recent certificate to register with Azure IoT Hub/Central or other third-party resources, following the instructions here. |

For tenants that are impacted by this issue, the new and valid tenant CA certificates will be created by August 5, 2020. The new certificates will be activated after August 18, 2020. If you have any additional questions, please reach out to Microsoft Support.

Documentation Resources:

by Scott Muniz | Jul 30, 2020 | Alerts, Microsoft, Technology, Uncategorized


Now, for the first time, with the Azure Sphere OS 20.07 release, Microsoft has licensed and exposed a subset of wolfSSL for use on Azure Sphere devices, allowing software developers to create client TLS connections directly using the Azure Sphere SDK. Software developers no longer need to package their own TLS library for this purpose. Using the wolfSSL support in Azure Sphere can save device memory space and programming effort, freeing developers to build new IoT solutions.

Microsoft Azure Sphere and wolfSSL have been long-time partners, striving for the very best in security. The Azure Sphere OS has long used wolfSSL for TLS connections to Microsoft Azure services. Azure Sphere also uses wolfSSL technology to enable secure interactions from developer apps to customer-owned services.

Partnerships with leaders like wolfSSL play an important role in Azure Sphere’s mission to empower every organization to connect, create, and deploy highly secured IoT devices. The unique Azure Sphere approach to security is based on years of vulnerability research, summarized in the seminal paper “Seven Properties of Highly Secure Devices.” These seven properties are the minimum requirement for any connected device to be considered highly secured. Azure Sphere implements all seven properties, providing a robust foundation for IoT devices.

Azure Sphere can be used with any customer cloud service, not just Microsoft’s own Azure. By providing a highly secured ecosystem, Microsoft and wolfSSL make security features more accessible and easier to use and can extend unmatched security to the frontiers in IoT where security has historically been sparse.

For information on how to use the wolfSSL API on Azure Sphere, see Use wolfSSL for TLS connections in the online documentation. We plan to publish a related sample application at a later date. Check back here; we will update this post with the link to the sample once it is available.

If you have any questions, contact Microsoft at AzCommunity@microsoft.com or wolfSSL at facts@wolfSSL.com.

by Scott Muniz | Jul 30, 2020 | Alerts, Microsoft, Technology, Uncategorized


In the second part of this two-part series, Shreya Verma takes a deeper dive into backup options with demos of point-in-time restore, long-term retention, and geo-restore. To learn about the different backup options Azure SQL provides and how to manage them effectively, watch part one.

Watch on Data Exposed

Additional Resources:

Overview of Business Continuity

Automated Backups

Backup Cost Management

PiTR and Geo-Restore

Long-Term Retention

Recovery Drills

Azure SQL Blogs

View/share our latest episodes on Channel 9 and YouTube!

by Scott Muniz | Jul 30, 2020 | Alerts, Microsoft, Technology, Uncategorized


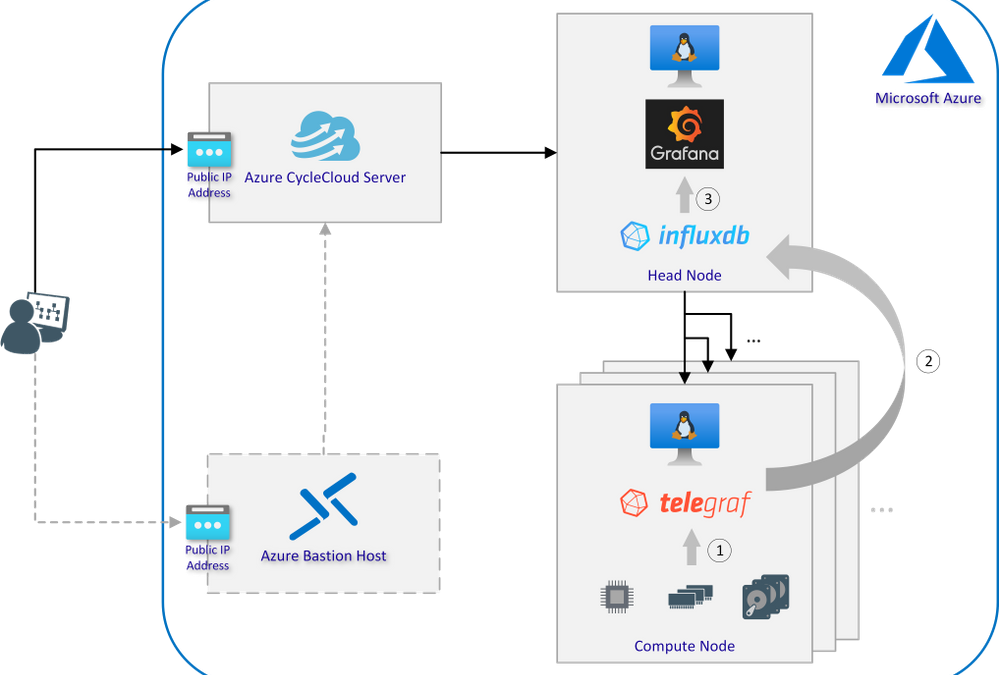

Setting up Telegraf, InfluxDB and Grafana using Azure CycleCloud

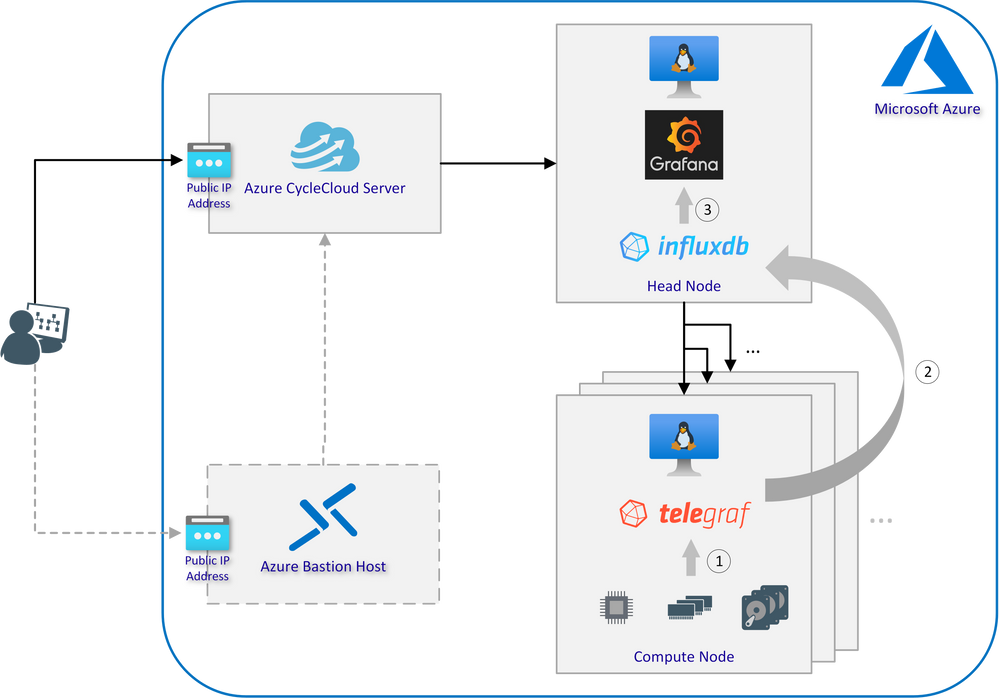

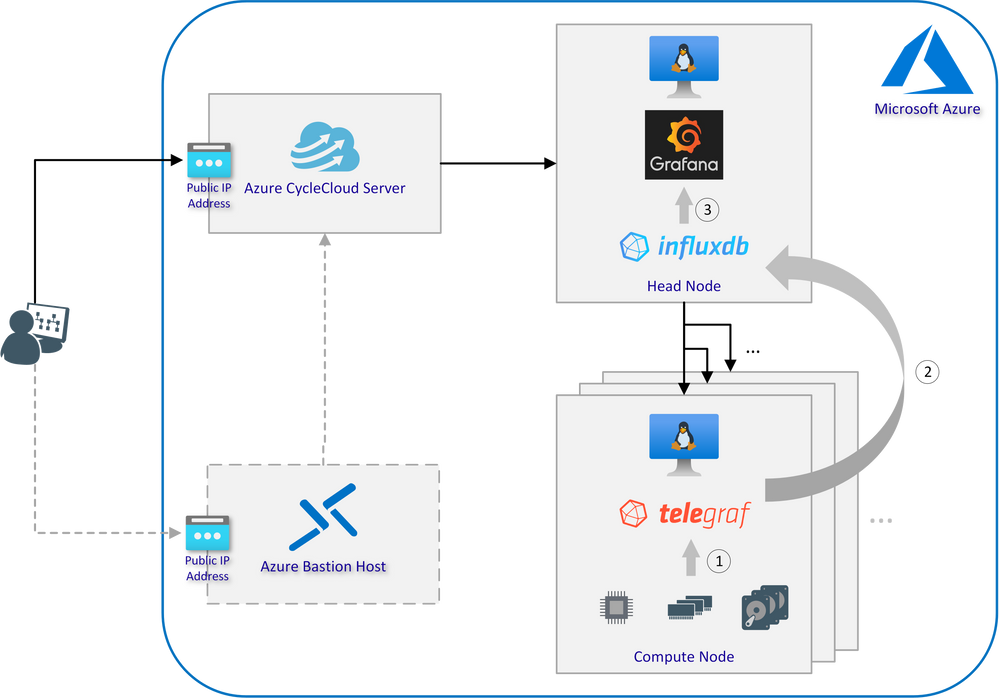

Architecture Overview

Interaction of Telegraf, InfluxDB and Grafana:

- Telegraf is a plugin-driven server agent for collecting and reporting system metrics and events

- InfluxDB is an open source time series database designed to handle high write and query loads; it stores the data that Telegraf collects from all compute nodes

- Grafana turns the metrics into graphs based on the time-series data stored in InfluxDB
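To make the Telegraf-to-InfluxDB hop more concrete: Telegraf's InfluxDB output writes metrics in InfluxDB line protocol, one point per line in the form measurement,tags fields timestamp. The bash sketch below formats such a line. It is illustrative only, does no escaping of spaces or commas in tag values, and the helper name is hypothetical; the curl line in the comments assumes InfluxDB 1.x and a database named telegraf, which may differ from what the aztig scripts actually create.

```shell
#!/usr/bin/env bash
# Hypothetical helper: format one point in InfluxDB line protocol:
#   <measurement>[,<tag_set>] <field_set> [<timestamp_ns>]
# No escaping is done, so tag/field values must not contain spaces or commas.
to_line_protocol() {
  local measurement="$1" tags="$2" fields="$3" ts="${4:-}"
  local line="$measurement"
  if [ -n "$tags" ]; then line+=",$tags"; fi
  line+=" $fields"
  if [ -n "$ts" ]; then line+=" $ts"; fi
  printf '%s\n' "$line"
}

# Manual test write against InfluxDB 1.x (placeholders and database name are assumptions):
# curl -u admin:<INSERTPW> -XPOST "http://<PRIVATE-HEAD-NODE-IP>:8086/write?db=telegraf" \
#   --data-binary "$(to_line_protocol cpu host=node1 usage_idle=97.5)"
```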

Prerequisites

- Azure account with an active subscription

- Azure CycleCloud instance which can be set up as described here

- Working CentOS or Ubuntu base image to deploy clusters with Azure CycleCloud

- Optional: Azure Bastion host configured to access the subnet in which the cluster will be deployed

Step-by-Step Installation Guide

- Connect to your Azure CycleCloud server via SSH (if necessary through the Bastion host)

- Use git to clone the aztig GitHub repository or download it from the website and extract it in a folder of your choice:

```shell
sudo yum install -y git
git clone https://github.com/andygeorgi/aztig.git
```

- Create a new CycleCloud project using the CycleCloud CLI:

```shell
cyclecloud project init cc-aztig
```

You will be prompted to enter the name of a locker. Press Enter to display a list of all valid lockers and select one:

```text
Project 'cc-aztig' initialized in …
Default locker:
Valid lockers: MS Azure-storage
Default locker: MS Azure-storage
```

- Link or copy the init scripts from the cloned GitHub repository to the project folder:

```shell
ln -s "$(pwd)/aztig/specs/master" cc-aztig/specs/master
ln -s "$(pwd)/aztig/specs/execute" cc-aztig/specs/execute
```

- Edit the configuration files for both node types and add a password for InfluxDB:

```shell
$ cat cc-aztig/specs/master/cluster-init/files/config/aztig.conf
INFLUXDB_USER="admin"
INFLUXDB_PWD="<INSERTPW>"
GRAFANA_SHARED=/mnt/exports/shared/scratch

$ cat cc-aztig/specs/execute/cluster-init/files/config/aztig.conf
INFLUXDB_USER="admin"
INFLUXDB_PWD="<INSERTPW>"
GRAFANA_SHARED=/mnt/exports/shared/scratch
```

Make sure that the parameters in both files are exactly the same!
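Because the two aztig.conf files must match exactly, a small script can set the password in both and then compare them. This is a minimal bash sketch; the helper names are hypothetical, it assumes GNU sed (for sed -i), and since the files appear to use shell-style assignments, it is safest to avoid double quotes, $ and backticks in the password.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the configuration step: write one InfluxDB password into
# both aztig.conf files and confirm the files are identical afterwards.
# Requires GNU sed (for in-place editing with -i).

set_influx_pwd() {
  local conf="$1" password="$2" escaped
  # Escape characters that are special in a sed replacement: \ & and the | delimiter.
  escaped=$(printf '%s' "$password" | sed -e 's/[\\&|]/\\&/g')
  sed -i -e "s|^INFLUXDB_PWD=.*|INFLUXDB_PWD=\"${escaped}\"|" "$conf"
}

configs_match() {
  # cmp -s is silent and returns 0 only when both files are byte-for-byte equal.
  cmp -s "$1" "$2"
}

# Usage (paths from the steps above; the password is a placeholder):
# for f in cc-aztig/specs/{master,execute}/cluster-init/files/config/aztig.conf; do
#   set_influx_pwd "$f" 'my-password'
# done
# configs_match cc-aztig/specs/master/cluster-init/files/config/aztig.conf \
#   cc-aztig/specs/execute/cluster-init/files/config/aztig.conf || echo "Files differ!"
```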

- Switch to the CycleCloud project folder and upload it to the specified locker:

```shell
$ cd cc-aztig/
$ cyclecloud project upload
Uploading to az://rgdemogpv2/cyclecloud/projects/cc-aztig/1.0.0 (100%)
Uploading to az://rgdemogpv2/cyclecloud/projects/cc-aztig/blobs (100%)
Upload complete!
```

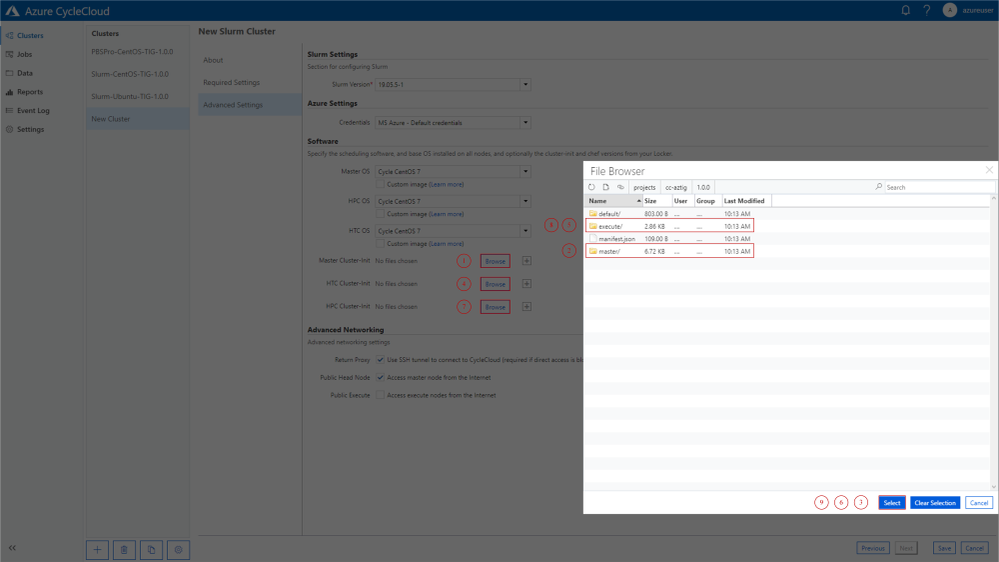

- Navigate to the CycleCloud web portal and create a new cluster (see “Software Versions Tested” for tested and working templates)

- In the advanced settings select the master folder for the head node and the execute folder for all nodes to be monitored:

- Start the cluster and use SSH port forwarding to access Grafana on the head node without exposing the ports to the public Internet:

```shell
ssh -A -l azureuser -L 8080:<PRIVATE-HEAD-NODE-IP>:3000 -N <PUBLIC-CC-IP>
```

Replace <PRIVATE-HEAD-NODE-IP> with the private IP of your head node and <PUBLIC-CC-IP> with the IP of the jump host (e.g. the CycleCloud server or Bastion host).

- Log in to Grafana by opening http://localhost:8080 and follow the first-login steps in the Grafana documentation

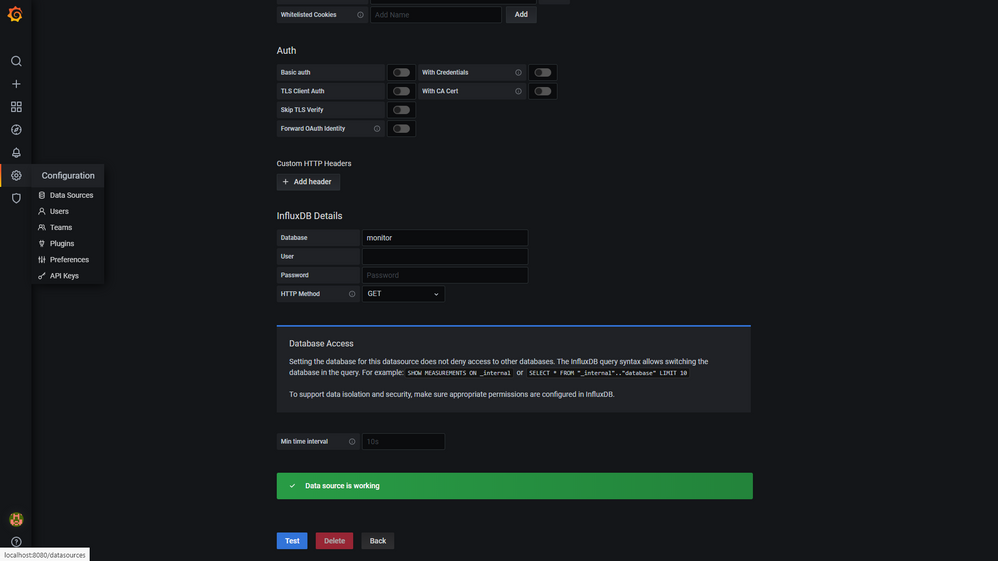

- After setting your password, verify that the aztig data-source is working correctly:


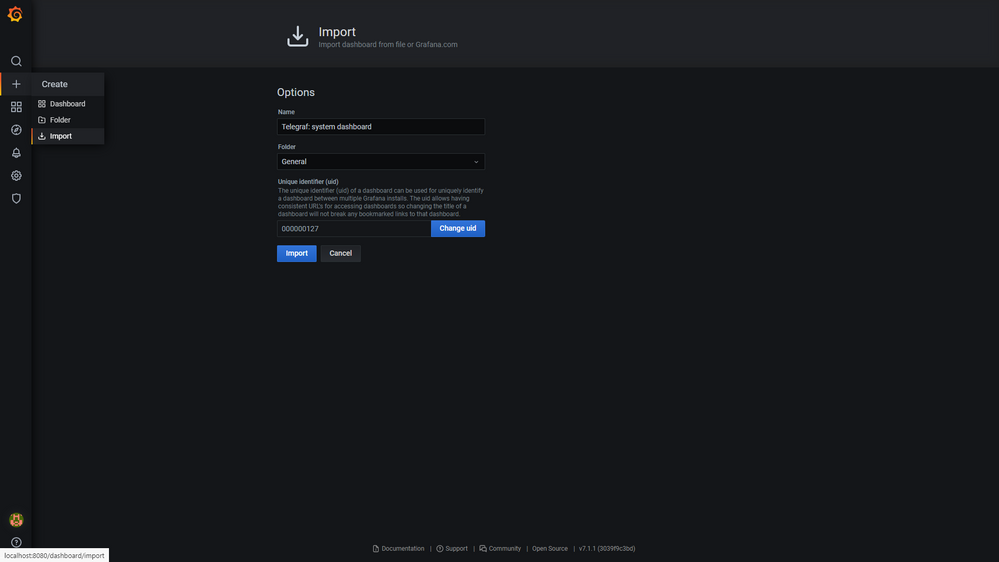

- Finally, import the Telegraf system dashboard, which is included in the GitHub repository:

- After successful import you should be redirected to the dashboard, where all collected metrics are displayed:

Note that an error is displayed while InfluxDB does not contain any data yet. It disappears as soon as the first data comes in.

Customisation, Debugging and Optimisation

- By default, the head node is monitored as well. To remove it from the list of monitored nodes, delete the client init script from the master folder:

cc-aztig/specs/master/cluster-init/scripts/011-aztig-client.sh

- Data collection can add significant overhead, depending on how many metrics and nodes are monitored. It is therefore highly recommended to adapt the Telegraf configuration to your specific needs:

cc-aztig/specs/execute/cluster-init/files/config/telegraf.conf
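One simple way to reduce collection overhead is to raise Telegraf's global collection interval in the [agent] section of telegraf.conf. The bash/awk sketch below rewrites only the interval key inside [agent], leaving per-plugin interval settings and keys such as flush_interval untouched. The helper name and the 60s value are illustrative assumptions, not recommendations from the aztig repository; after editing, the project presumably has to be uploaded again with cyclecloud project upload before new nodes pick up the change.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the global collection interval in Telegraf's [agent] section.
# Only the "interval" key inside [agent] is changed; plugin-level interval settings
# and keys such as flush_interval or round_interval are left as they are.
set_agent_interval() {
  local conf="$1" interval="$2" tmp
  tmp=$(mktemp) || return 1
  awk -v iv="$interval" '
    /^[ \t]*\[/ { in_agent = ($0 ~ /^[ \t]*\[agent\][ \t]*$/) }
    in_agent && /^[ \t]*interval[ \t]*=/ { sub(/=.*/, "= \"" iv "\"") }
    { print }
  ' "$conf" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back with cat so the original file's owner and permissions are kept.
  cat "$tmp" > "$conf" && rm -f "$tmp"
}

# Example: set_agent_interval cc-aztig/specs/execute/cluster-init/files/config/telegraf.conf 60s
# On a node, "telegraf --config <file> --test" gathers metrics once and prints them.
```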

- If Telegraf cannot connect to InfluxDB, check the firewall settings. By default, InfluxDB listens on port 8086. Some example rules are already included in the master init script and can be commented out or adapted if necessary:

cc-aztig/specs/master/cluster-init/scripts/010-aztig-server.sh
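A quick way to narrow down such connection problems is to test from a compute node whether the head node's InfluxDB port accepts TCP connections at all. The bash sketch below uses bash's built-in /dev/tcp redirection and GNU timeout; the helper name is hypothetical. A successful connect only shows the port is open; InfluxDB 1.x also answers GET /ping with HTTP 204, which curl can confirm.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check from a compute node whether InfluxDB's port is reachable.
# Uses bash's built-in /dev/tcp redirection, so no extra tools are required;
# "timeout" (GNU coreutils) stops the attempt after 3 seconds if packets are dropped.
influxdb_reachable() {
  local host="$1" port="${2:-8086}"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Usage (replace the placeholder with the head node's private IP):
# influxdb_reachable <PRIVATE-HEAD-NODE-IP> || echo "Port 8086 not reachable: check the firewall rules"
```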

- Instead of manually selecting the init scripts in the GUI, CycleCloud also offers the ability to create customised cluster templates that include the scripts by default. Follow the instructions in the CycleCloud documentation to set the parameters accordingly.
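For the template route, CycleCloud cluster templates attach project specs to nodes through [[[cluster-init PROJECT:SPEC:VERSION]]] sections. The bash sketch below prints such a fragment for the cc-aztig project (version 1.0.0, as in the upload output earlier). The node and node array names depend on the template you start from and are placeholders here, the function name is hypothetical, and the fragment still has to be merged into a complete template before it is imported with the CycleCloud CLI.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the cluster-init sections that attach the aztig specs.
# $1 = head node name, $2 = node array to monitor, $3 = project version (default 1.0.0).
# Node names vary between templates, so pass the ones your template uses.
aztig_cluster_init_fragment() {
  local head="$1" array="$2" version="${3:-1.0.0}"
  cat <<EOF
[[node ${head}]]
    [[[cluster-init cc-aztig:master:${version}]]]

[[nodearray ${array}]]
    [[[cluster-init cc-aztig:execute:${version}]]]
EOF
}

# Example: aztig_cluster_init_fragment scheduler hpc
```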

Software Versions Tested

by Scott Muniz | Jul 29, 2020 | Alerts, Microsoft, Technology, Uncategorized


In recent years, Azure Stack Hub has led the way for Microsoft’s hybrid cloud offerings, and partners have joined us to enhance our customers’ hybrid cloud journey. These partners and customers have built solutions that use Azure Stack Hub as part of their hybrid cloud strategy.

In some cases, these are actual products that work in hybrid environments and take advantage of the consistency offered by Azure Stack Hub for the parts of the solution that “need to be on-premises”. In others, we’ve seen Service Providers offer fully managed solutions to their customers, which look just like a SaaS application the customers can consume directly, without worrying about all the parts of the solution that need to work together. These partners and customers usually fall into the following groups:

- Service Providers
  - They are typically Cloud Solution Providers (CSPs), Managed Service Providers (MSPs), or System Integrators (SIs); some Service Providers are part of the Preferred and Advanced partners
  - They either host an Azure Stack Hub or manage one on behalf of the end customer
  - They partner with ISVs or build their own internal applications intended for their customers
- Enterprise customers
  - Usually larger companies that have certain requirements (regulatory, compliance, security, etc.) for running Azure services on-premises
  - Typically, they have an Azure presence and want to keep a consistent operational approach for their on-premises workloads
  - Although they are internal to the company, they have their own internal customers in other departments
  - They need to provide value for their internal customers and help them meet certain requirements

All of these partners create value for their customers, either internal or external to their company, by leveraging Azure Stack Hub as a platform and as part of the overall solution.

Today, we are starting a journey to explore the ways our customers and partners use, deploy, manage, and build solutions on the Azure Stack Hub platform.

Join Thomas Maurer (@ThomasMaurer) and me (@rctibi) in this series as we meet customers that deploy Azure Stack Hub for their own internal departments, partners that run managed services on behalf of their customers, and a wide range of organizations in between, and look at how our partners use Azure Stack Hub to bring the power of the cloud on-premises.

We are starting this journey with three videos:

More partner videos will follow through the month of August; we will update this thread and announce them on our Twitter feeds (#AzStackPartners). Follow us on this journey as we explore the partner solutions built on Azure Stack Hub!
