by Scott Muniz | Aug 12, 2020 | Azure, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

By Jaya Mathew, Richin Jain and Pritish Devurkar

In our previous blog, we outlined that supervised machine learning (ML) models need labeled data, but the majority of data collected in raw format lacks labels. So, the first step before building an ML model is to get the raw data labeled by domain experts. To that end, we described how Doccano is an easy tool for collaborative text annotation. However, not all collected data is text; often we end up with a collection of images, while the end goal is still to build a supervised ML model. As stated previously, the first step is to tag these images with specific labels. Image tagging, as well as building and even deploying either a multi-class or a multi-label classifier, can be done in a few simple steps using Azure Custom Vision.

What is Azure Custom Vision?

Azure Custom Vision is a cognitive service that enables the user to specify labels for images and to build, deploy, and improve image classifiers. The tool lets the user label the images at the time of upload. The algorithm then trains on these images and calculates the model performance metrics. The Custom Vision service is optimized to quickly recognize major differences between images, so the user can start prototyping the model with a small amount of data (50 images per label is generally considered a good start). Once the algorithm is trained, the user can test, retrain, and eventually use the newly trained model to classify new images according to the needs of their end application. The user can also export the trained model for offline use.

Getting started:

To get started, the user first needs to create an Azure account and then create a new project as shown below. During the initial setup, the user names the project, selects a resource group, and determines whether the project is a Classification or an Object Detection scenario. In this sample, we will get started with a ‘Classification’ project of type ‘Multiclass (single tag per image)’. Since our images are generic, we pick ‘General’ as the domain.

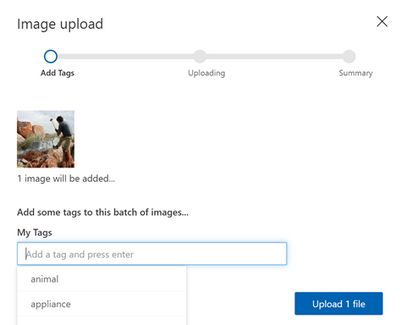

Once the project is created, the user can define the tags upfront or as they upload the images. Images can be uploaded using the ‘Add Images’ option, which prompts the user to navigate to the location of the image and lets the user tag and upload it. Bulk import of images is also an option. After the model has been trained, the Smart Labeler option can also be used to suggest tags.

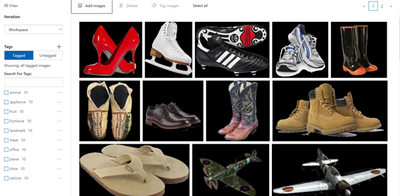

Continue to upload all the images available for classification and then tag all the images as shown below:

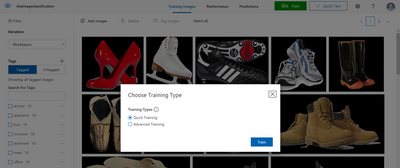

Once the user has successfully uploaded and tagged their images, the next step is to train the classifier. The user can opt for either quick or advanced training, depending on cost and time constraints. The quality of the classifier depends on the amount, quality, and variety of the labeled data provided, as well as on how balanced the overall dataset is.
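Before training, it can help to check how many images each tag has and how balanced the dataset is. The snippet below is a minimal sketch, not part of Custom Vision itself: the function name `summarize_tags`, the example tag list, and the use of 50 as a threshold (taken from the "good start" figure mentioned earlier) are all assumptions for illustration.

```python
from collections import Counter

# Starting point mentioned in this article; an assumption, not a hard limit.
MIN_IMAGES_PER_TAG = 50


def summarize_tags(labels, min_per_tag=MIN_IMAGES_PER_TAG):
    """Count images per tag and report under-represented tags and imbalance."""
    counts = Counter(labels)
    too_few = sorted(tag for tag, n in counts.items() if n < min_per_tag)
    # Ratio of the largest class to the smallest; 1.0 means perfectly balanced.
    imbalance = max(counts.values()) / min(counts.values()) if counts else 0.0
    return {"counts": dict(counts), "too_few": too_few, "imbalance": imbalance}


# Hypothetical tag list, one entry per uploaded image.
example_labels = ["cat"] * 60 + ["dog"] * 55 + ["bird"] * 20
report = summarize_tags(example_labels)
print(report["too_few"])    # ['bird']
print(report["imbalance"])  # 3.0
```

A tag that shows up in `too_few`, or a large imbalance ratio, suggests adding more images for that tag before retraining.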

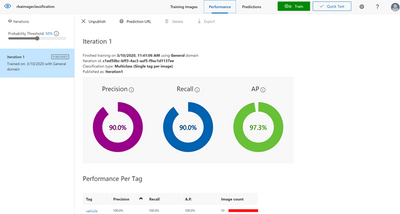

After the training is complete, review the training results. The user can benchmark their model using the quick training option and then use more advanced training if the results are not satisfactory.

If the model performance is not satisfactory, the user can also add some more images per class and then retrain the model.

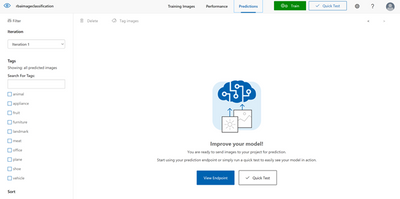

Once the model performance is deemed to be satisfactory, the user can test some more images using this option:

Now that the classifier is built, the user can use the Custom Vision service for image classification in production-ready systems. To do so, the user first needs to publish the model, which creates an endpoint for use in those systems. Overall, based on our experience, Azure Custom Vision reduces the complexity involved in building and deploying a custom vision model.
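Once a model is published, a client can send image bytes to the prediction endpoint and read back the tag probabilities. The following is a hedged sketch: the URL shape and the `Prediction-Key` header are assumptions based on the v3.0 Prediction REST API, and the endpoint, project ID, iteration name, and key are placeholders. Check them against the Prediction URL dialog of your own project before use. The request is only built here, not sent.

```python
import urllib.request


def build_prediction_request(endpoint, project_id, published_name, prediction_key, image_bytes):
    """Build (but do not send) a POST request that classifies one image."""
    url = (
        f"{endpoint.rstrip('/')}/customvision/v3.0/Prediction/"
        f"{project_id}/classify/iterations/{published_name}/image"
    )
    headers = {
        "Prediction-Key": prediction_key,
        "Content-Type": "application/octet-stream",
    }
    return urllib.request.Request(url, data=image_bytes, headers=headers, method="POST")


def top_prediction(response_json):
    """Return (tag, probability) for the most likely tag in a parsed response."""
    best = max(response_json["predictions"], key=lambda p: p["probability"])
    return best["tagName"], best["probability"]


# Placeholder values; substitute the ones shown for your published iteration.
request = build_prediction_request(
    "https://example.cognitiveservices.azure.com",
    "00000000-0000-0000-0000-000000000000",
    "Iteration1",
    "<prediction-key>",
    b"fake image bytes",
)

# Hand-written sample in the assumed response shape, not real service output.
sample_response = {"predictions": [
    {"tagName": "cat", "probability": 0.91},
    {"tagName": "dog", "probability": 0.09},
]}
print(request.full_url)
print(top_prediction(sample_response))
```

To actually call the service, pass the request to `urllib.request.urlopen` and parse the body with `json.load`.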


by Scott Muniz | Aug 12, 2020 | Azure, Microsoft, Technology, Uncategorized


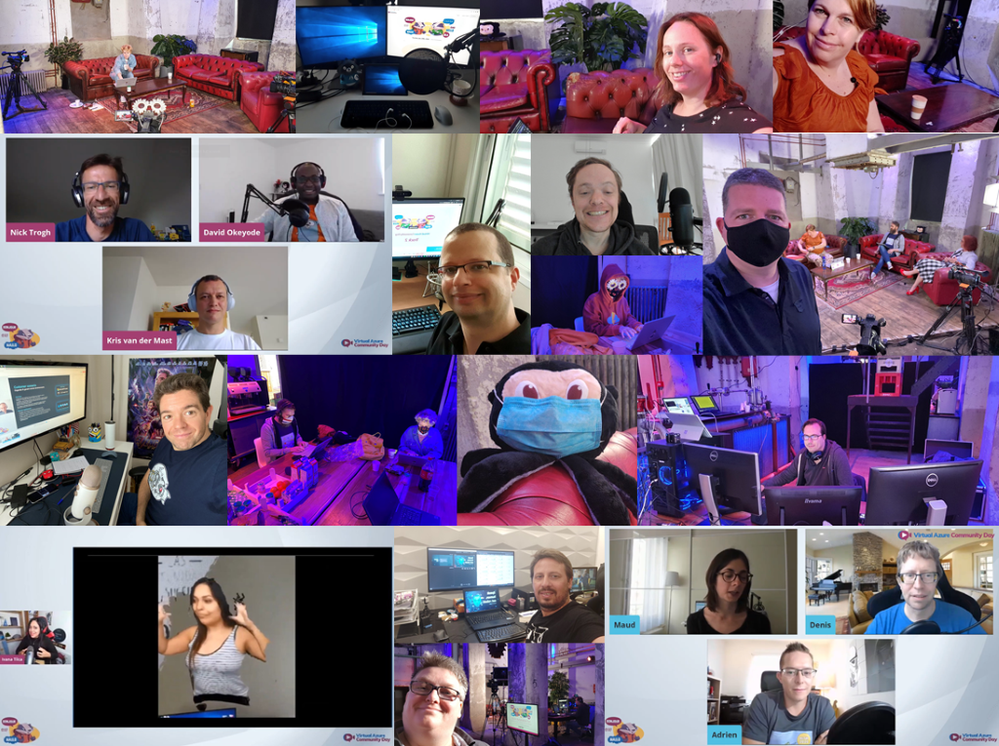

It is harder than ever to bring multiple people from multiple countries together in one place right now.

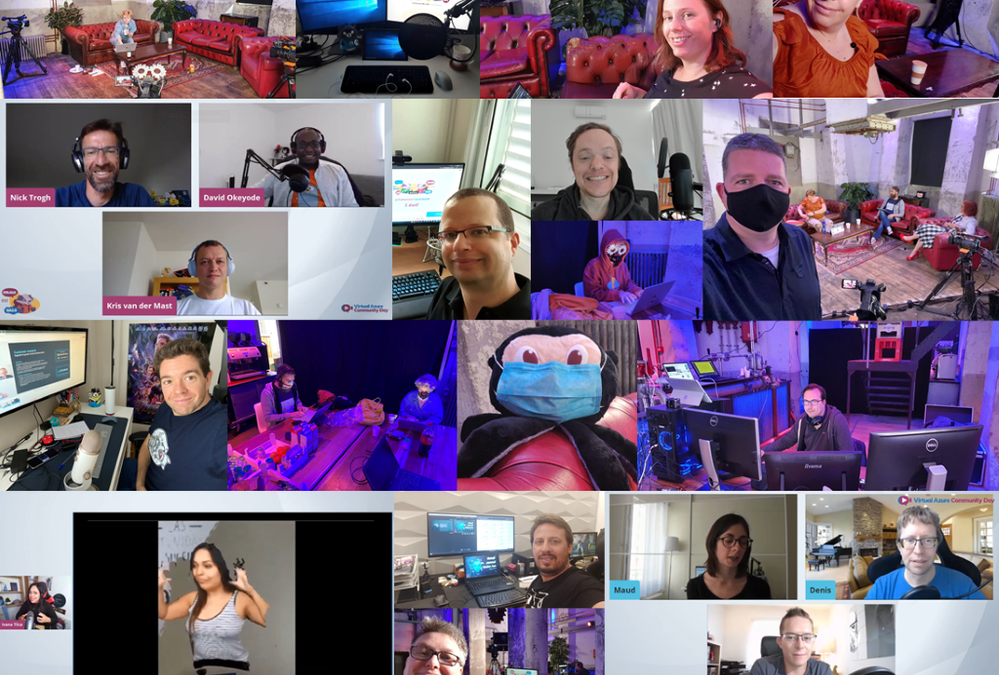

However, thanks to some “technical geekery” and organizing superpowers, this is exactly what took place when Azure lovers from around the world gathered online for 24 hours at the end of July.

The Virtual Azure Community Day is a multi-track, multi-cultural, and multi-discipline marathon which reunites Azure enthusiasts in the virtual world. The July 28 event produced almost 80 hours of content in six languages on the Azure Day platform. There, users could seamlessly switch between four tracks and interact in live chats. Moreover, 14 sessions were streamed live to Learn TV, 12 streams received interpretation into International Sign, and all streams were reproduced on YouTube and Twitch.

The effort to be as inclusive as possible paid off, with the content reach exceeding 4 million. But beyond the numbers, the organizing team of Virtual Azure Community Day was most proud of the diverse line-up of speakers and emcees who shared their passion for Azure.

For example, African MVPs Oluwawumiju Oluwaseyi and Ayodeji Folarin say the event enabled them to present on their Azure experiences and learn from other international use cases. “We were very excited to work with fellow MVPs and Microsoft across the world because it exposes us to divergence in the knowledge and the different scenarios of Azure,” they say. “This keeps us on our toes to improve and optimize our current usage.”

International representation was also an important element to the Chinese representatives. Chinese Microsoft Azure and AI MVP Hao Hu says events like Virtual Azure Community Day help the nation’s Microsoft community overcome any social media obstacles to be seen and heard. “Global events like this or Azure Bootcamp and AI Bootcamp are a great chance to let global communities see us,” he says.

Meanwhile, this year was supposed to mark the debut international speeches of Nepalese Azure MVP Ravi Mandal. While the coronavirus impeded those plans, Ravi says he was excited to take his technical talks online at the marathon. “I immediately realized that this was for me because this would be the first talk I would give for an audience outside Nepal. Though online, it marks my first talk for international audiences!”

Similarly, multiple MVPs relished the chance to connect with other Azure fans regardless of location or language. “I love to work with these people, it’s always nice to rely on someone when you get stuck,” says Danish AI MVP Eva Pardi. Likewise, Portuguese OAS MVP Nuno Arias Silva says, “[At Microsoft] we all have different experiences and sharing is the most beautiful thing that we can do.”

“The world is smaller and smaller, and the walls between us are getting thinner and thinner,” Romanian Azure MVP Radu Vunvulea says. “The experience that I had with people from the Microsoft ecosystem was amazing.” This point is reinforced by Netherlands Azure MVP Sjoukje Zaal, who believes there was a major benefit to creating this event as one which was not bound by timezone restrictions.

“With the current state of affairs, I think having an easy way to share all of this content is the best support we could ask for,” says South African Windows Development MVP Gergana Young. This point is driven home by Japanese Microsoft Azure MVP Yuto Takei, who says: “It’s really amazing to see presentations in different languages taking place at the same time and lasting 24 hours!”

“Honestly, since I began my career I have been seeking for likeminded people – newbies, experts, gurus – so that I can exchange ideas, learn, practice, or simply discuss!” says Bulgarian AI MVP Ekaterina Marinova. “The MVPs, Microsoft employees, everybody within this mini world has the spirit and it is contagious.” Canadian Dev MVP Hamida Rebai agrees the event successfully integrated Azure users of all experience levels to enable the sharing of knowledge.

“For more than 10 years I have been part of different communities inside Microsoft,” says Argentine Ivana Tilca. “First I was a Microsoft student partner, then I worked at Microsoft, and now I have the opportunity to be an MVP – and it always felt like being at home,” she says.

The Virtual Azure Community Day organizing team – comprised of Henk Boelman, Suzanne Daniels, Nick Trogh, Olivier Leplus, Floor Drees, Lucie Simeckova, and Maud Levy – thank all who contributed on the day to make it possible, with special thanks to AI MVP Willem Meints.

Check out all the action from the event at the YouTube channel.

by Scott Muniz | Aug 11, 2020 | Azure, Microsoft, Technology, Uncategorized


by @Edwin Hernandez

In this article, I will describe the implementation of a logger for UI Test automation and its integration with test execution from an Azure DevOps Release pipeline. This article and the demo solution are standalone; however, I do build on concepts we reviewed in previous articles, specifically:

You may want to read through those articles before starting with this one. Having said that, let us get started describing what the logger does.

Purpose

UI Test automation and Regression are time-consuming, expensive tasks because of their initial time investment and their maintenance effort. Even if a test suite is well maintained, once Automated Regression is executed, you still need a QA Automation Engineer to analyze the results and investigate any errors.

The purpose of this logger is to provide a detailed log of the flow of the UI script, including test case steps and screenshots. The QA Engineer or a Business Analyst can use the information from the logger to follow the business logic of the UI test and more easily validate if the intended work was completed or the reason for failure if an error occurred. A descriptive log can be more useful than even an actual video recording of the test.

Logger Features

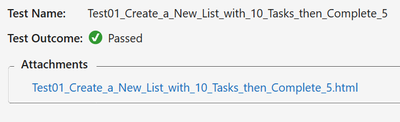

- The logger creates a single HTML file that contains all messages and embedded images.

- This class is meant to be used in a Page Object Model project. When the LogMessage method is called, it uses reflection to get the calling method and class, and it uses this information to write a message into the HTML log.

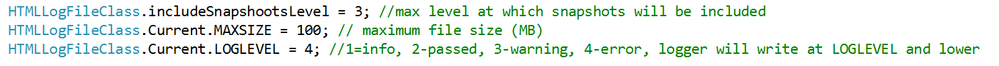

- There are 4 log levels (error, warning, passed, info) to help filter how much information you want to log. This and other configurations are exposed as global variables. You can also specify the level up to which you want screenshots to be taken.

- Screenshots are saved as binary strings and embedded into the HTML file so that you end up with a single file. This greatly helps the integration with Azure DevOps.

- This logger is adaptable since it is a standalone class. You can plug it into several types of UI Test projects. I have personally used it with WinAppDriver, CodedUI, Selenium WebDriver, and Xamarin.UITests projects with minimal changes. Pretty much all that needs to be changed is the type of session object that is passed as an argument and the screenshot method.
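The single-file idea from the list above can be sketched as follows. This is a Python analogue for illustration, not the author's C# class: it assumes the "binary strings" are Base64 data URIs (a common way to inline images in HTML), and the function names `image_to_img_tag` and `log_entry_html` are hypothetical.

```python
import base64
import html


def image_to_img_tag(png_bytes, alt_text="screenshot"):
    """Inline PNG bytes as a Base64 data URI so no separate image file is needed."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    safe_alt = html.escape(alt_text, quote=True)
    return f'<img alt="{safe_alt}" src="data:image/png;base64,{encoded}">'


def log_entry_html(message, png_bytes=None):
    """Render one log entry; the screenshot is optional."""
    parts = [f"<p>{html.escape(message)}</p>"]
    if png_bytes is not None:
        parts.append(image_to_img_tag(png_bytes))
    return "<div>" + "".join(parts) + "</div>"


# Only the PNG signature bytes, standing in for a real screenshot.
fake_png = b"\x89PNG\r\n\x1a\n"
entry = log_entry_html("Clicked <Save>", fake_png)
print(entry)
```

Because the image travels inside the markup, the resulting HTML file can be attached to a test run as one artifact.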

Logger Requirements

- Given the frameworks I just mentioned, you can guess that this Logger class was written in C# and is meant to be used on Visual Studio for UI Test projects running over MSTest or NUnit projects. You could adapt it to other types of frameworks, but it would require more work.

- As I said, this logger is meant to be used as part of a Page Object Model (POM). You could use it with a straight top-to-bottom test, but you would get more out of it in a POM project.

Other than that, the log method needs to be specifically called every time something needs to be logged. I explored the option of using the constructor or interfaces, but it would require the class to be more coupled with the test solution and I wanted it to be more portable.

Configuration Variables

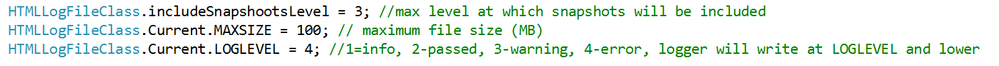

The logger configuration variables should be set, for example, in the Class Initialize method:

A log level of 4 would log all messages, while a log level of 3 would log only messages of levels 1 to 3. You can change the labels of the levels in the HTMLLogFile class itself.
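The level filtering can be sketched as below. This is a Python analogue under stated assumptions, not the C# implementation: the numeric mapping (error = 1 through info = 4) is inferred from the order in which the levels are listed and from the "level 4 logs everything" rule, and `LoggerConfig` with its method names is hypothetical.

```python
from enum import IntEnum


class Level(IntEnum):
    # Assumed numbering, inferred from the order in which the article lists the levels.
    ERROR = 1
    WARNING = 2
    PASSED = 3
    INFO = 4


class LoggerConfig:
    """Holds the configured thresholds for messages and for screenshots."""

    def __init__(self, log_level=Level.INFO, screenshot_level=Level.ERROR):
        self.log_level = log_level
        self.screenshot_level = screenshot_level

    def should_log(self, level):
        # A message is written when its level is at or below the configured level.
        return level <= self.log_level

    def should_screenshot(self, level):
        # Screenshots are taken only for logged messages up to the screenshot level.
        return self.should_log(level) and level <= self.screenshot_level


config = LoggerConfig(log_level=Level.PASSED, screenshot_level=Level.WARNING)
logged = [lvl.name for lvl in Level if config.should_log(lvl)]
with_screenshot = [lvl.name for lvl in Level if config.should_screenshot(lvl)]
print(logged)           # ['ERROR', 'WARNING', 'PASSED']
print(with_screenshot)  # ['ERROR', 'WARNING']
```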

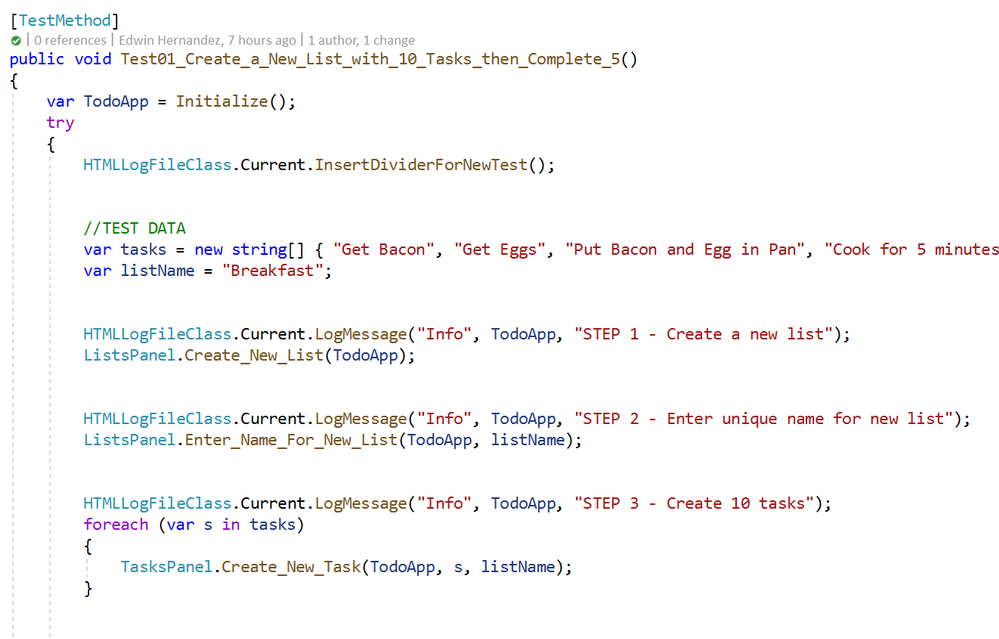

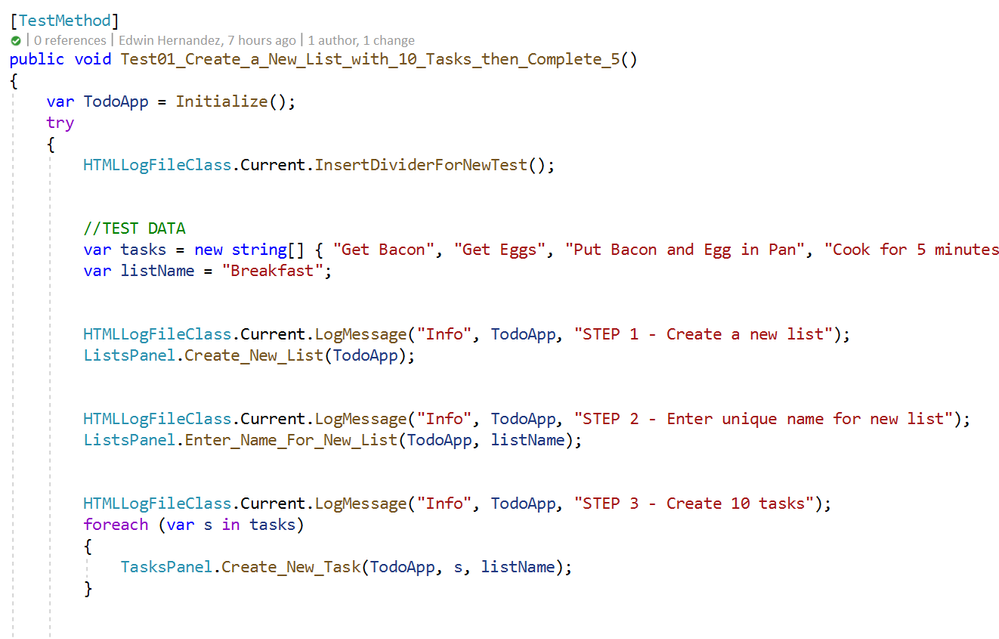

Logging Messages from the Test Method

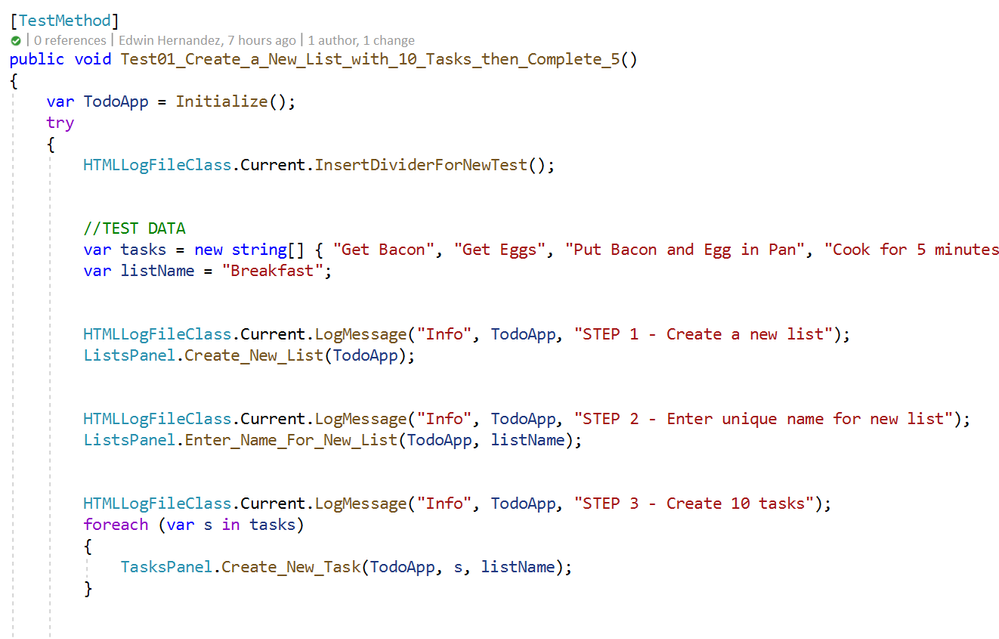

As I mentioned earlier, the LogMessage method needs to be called every time something is to be logged into the file. I recommend that only “Info” level messages should be logged from the Test Method, mostly to log comments and business step details that would make the HTML report easier to read, for example:

The InsertDividerForNewTest creates a header for the report, then every “Info” step is logged with or without screenshots depending on the configured level.

Logging Messages from the Page Class Methods

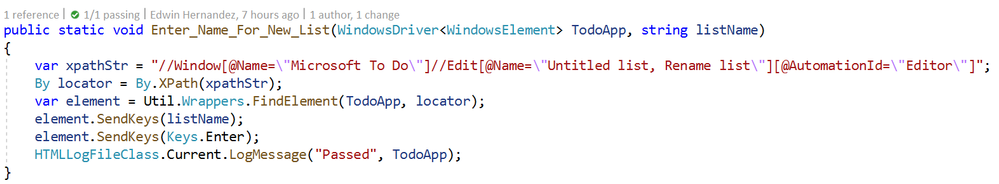

This may be the most interesting part. Every time you use LogMessage from the Page Class Methods, the HTMLLogFile class uses reflection to get the calling method and class information and includes it in the HTML report. Take the following for example:

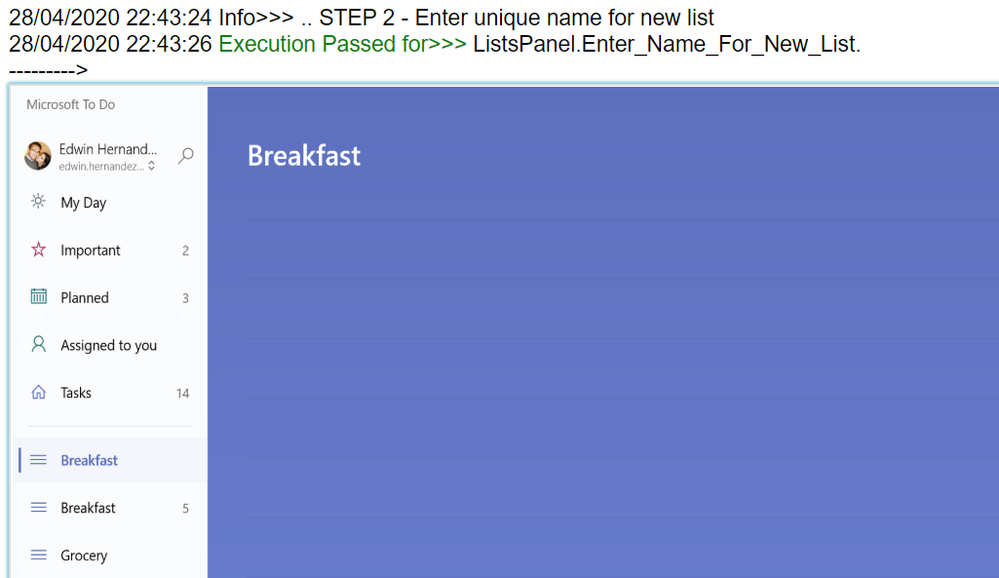

By including LogMessage “Passed” at the end, the HTML log will print the following:

Thus, by having well-constructed Page Object Classes, the log would build itself with very few additional comments.
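The caller lookup described in this section can be illustrated with a short Python analogue. It is a sketch only: the C# class relies on .NET reflection, while this version uses Python's `inspect` module, and `log_message`, `LoginPage`, and `enter_credentials` are hypothetical names.

```python
import inspect


def log_message(level, text, log):
    """Append a log line prefixed with the class and method that called us."""
    caller = inspect.currentframe().f_back
    method = caller.f_code.co_name
    # Inside an instance method, the caller's locals contain `self`.
    instance = caller.f_locals.get("self")
    owner = type(instance).__name__ if instance is not None else "<module>"
    log.append(f"[{level}] {owner}.{method}: {text}")


class LoginPage:
    """Hypothetical page object; a real one would drive the UI session."""

    def __init__(self, log):
        self.log = log

    def enter_credentials(self, user):
        # ... UI interaction would go here ...
        log_message("Passed", f"entered credentials for {user}", self.log)


entries = []
LoginPage(entries).enter_credentials("demo")
print(entries[0])  # [Passed] LoginPage.enter_credentials: entered credentials for demo
```

The page method never passes its own name; the logger discovers it, which is why well-named page methods produce a readable log with little extra commentary.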

Finding the Log for Local Test Executions

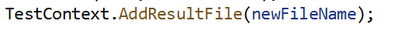

To have this HTML file uploaded to Azure DevOps, it must be added to the Test Context. In this demo, this is done in the Class Cleanup method:

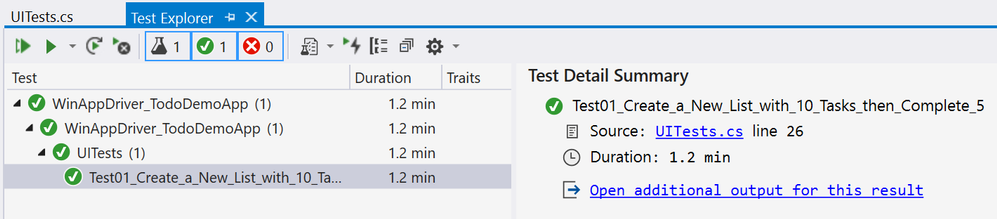

If the UI test is executed from Visual Studio (make sure you have WinAppDriver installed; see my other post about this, mentioned at the top), the Test Detail Summary should show additional output already attached to the Test Context, and inside it you can see the HTML file:

If you open that, the HTML log should have a header, then every Log Message. Please note that screenshots are expandable on hover.

Integration with Azure DevOps Release Pipeline

Now for the next part, I will only show what the result would look like. Setting up a Release Pipeline in Azure DevOps for UI Test Regression is not in the scope of this article. I intend to cover that in a future article, since there are several ways to accomplish it depending on what you need to do.

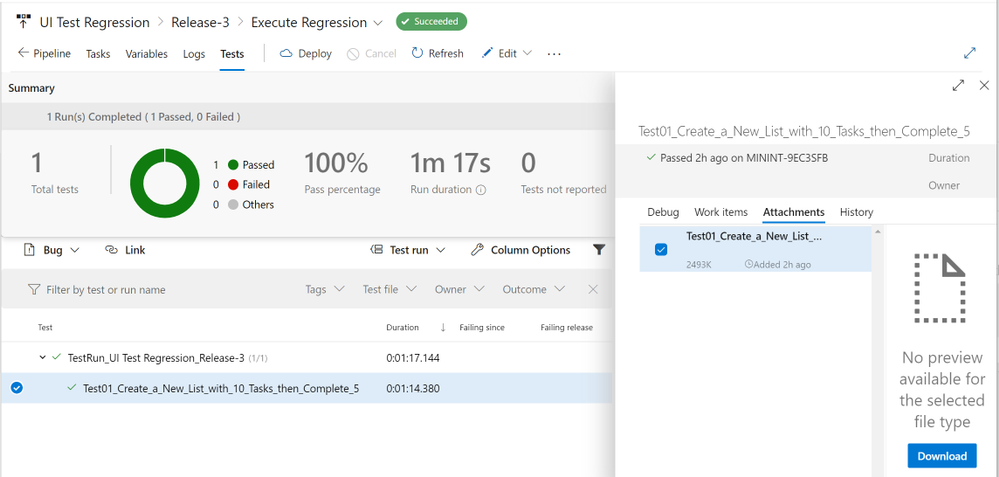

For now, the screenshot below shows a simple Release Pipeline that takes the Test Assemblies as an artifact and executes the tests on a Private (Self-Hosted) Build Agent set to interactive mode.

MSTest automatically uploads to the Release Pipeline test results information (# of test passed, pass %, duration, etc.) and if you expand a specific test and navigate to the Attachments panel, the HTML Log file should be there already associated with that test. Every test will get a unique HTML file:

The benefits of running Regression from a Release Pipeline are traceability against a Build/Environment, and even against user stories and test cases, as well as support for deployment approval and historical records, since test results are saved in the cloud instead of in local files.

Download Demo Project and HTMLLogFile class

At the bottom of this post, you can find a file containing a Visual Studio solution that has a demo POM library project, a test project, and the HTMLLogFile class, as well as a demo HTML report file as a reference.

If you have any comments/concerns, please reach out or comment below.

by Scott Muniz | Aug 11, 2020 | Azure, Microsoft, Technology, Uncategorized


This installment is part of a broader series to keep you up to date with the latest enhancements to the Azure Sentinel Devops template.

This blog is a collaboration between @Cristhofer Munoz & @Matt_Lowe.

Introduction

Threats are evolving just as quickly as data-volume growth, with bad actors exploiting every possibility and technique to gain access to the corporate network. At the same time, the risk surface has widened as companies shift to hybrid-cloud environments, adopt DevOps and Internet of Things (IoT) technologies, and expand their remote workforces.

Amid this landscape, organizations require a bird’s-eye view of security posture across the enterprise, hence a security information and event management (SIEM) system is a critical element.

Frankly, deploying a SIEM is not a trivial task. Organizations struggle with the number of tasks to adopt a SIEM due to the lack of an agile methodology to plan, execute, and validate its initial success and deploy into production.

To help alleviate this challenge, we’ve developed an Azure Sentinel DevOps Board Template which serves as a blueprint to understand the tasks and activities to deploy Azure Sentinel following recommended practices. By leveraging the Azure Sentinel DevOps Board, you can quickly start tracking user stories, backlog items, tasks, features, and bugs associated with your Azure Sentinel deployment. The Azure Sentinel DevOps Board is not a static template; it can be modified to reflect your distinctive needs. You can quickly add and update the status of work using the Kanban board. You can also assign work to team members and tag it with labels to support queries and filtering.

For additional information on Azure Boards, please refer to the public documentation.

In this template we provide prescriptive guidance for the following Azure Sentinel use cases:

- Define Use Cases

- Get Started with Azure Sentinel | Tutorials

- Onboard Azure Sentinel | Prerequisites

- Azure Sentinel Architecture

- Setup Azure Sentinel

- Data Collection

- Visualize your security data with Workbooks

- Enabling Analytics

- Respond to threats

- Proactive threat hunting

- Advanced Topics

Getting Access | Azure DevOps Generator

The purpose of this initiative is to simplify the process and provide tactical guidance to deploy Azure Sentinel by providing an Azure Sentinel DevOps board template that provides the prescriptive guidance you need to get going with your deployment. To populate the Azure Sentinel board, we utilized the Azure DevOps Demo generator service to create pre-populated content.

To get started:

1. Browse to the Azure DevOps Demo Generator site by selecting the link, or copy https://azuredevopsdemogenerator.azurewebsites.net/ into your browser’s URL field.

2. Click Sign In and provide the Microsoft or Azure AD account credentials associated with an organization in Azure DevOps Services. If you don’t have an organization, click on Get Started for Free to create one and then log in with your credentials.

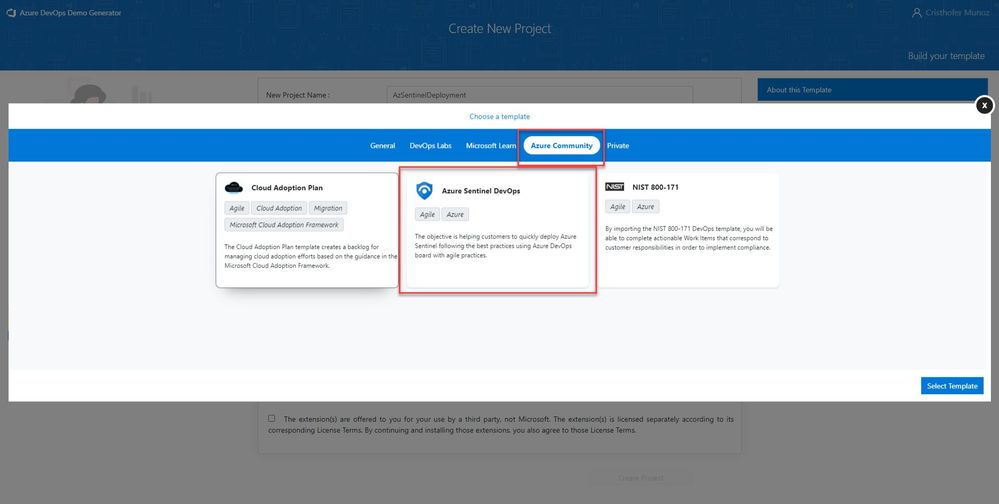

3. After signing in, you will arrive at the “Create New Project” page.

4. Provide a name for your project (such as “AzSentinelDeployment”) that you and other contributors can use to identify the project.

5. Next, select the organization you will use to host the project created by the Azure DevOps Demo Generator. (You may be a member of multiple organizations associated with your login, so choose carefully.)

6. Lastly, select the demo project template you want to provision by clicking the … (Choose Template) button.

7. A new pane will open where you can select a pre-populated template. Click on the Azure Community tab; there you will find the Azure Sentinel Devops template.

8. Select the Azure Sentinel Devops template and create the project. The Demo Generator may take a couple of minutes to provision your project. When it completes, you will be provided with a link to the demo project.

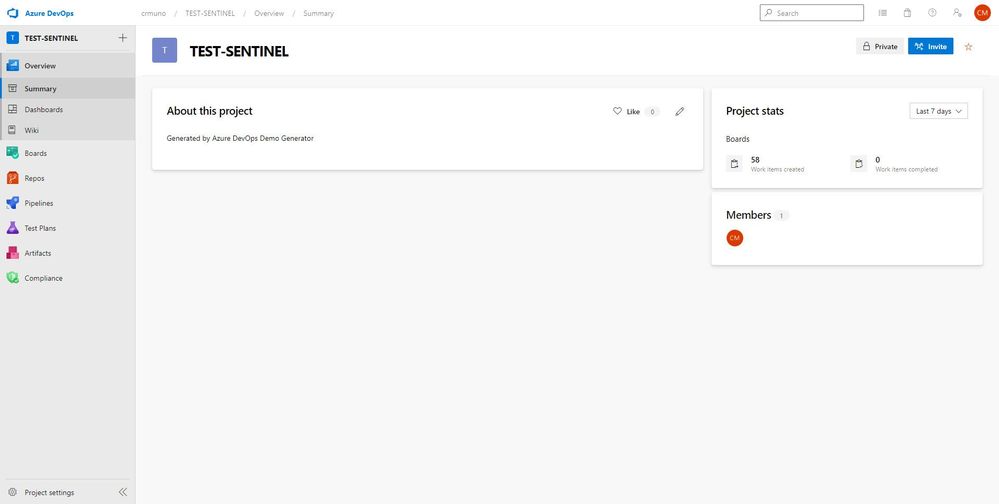

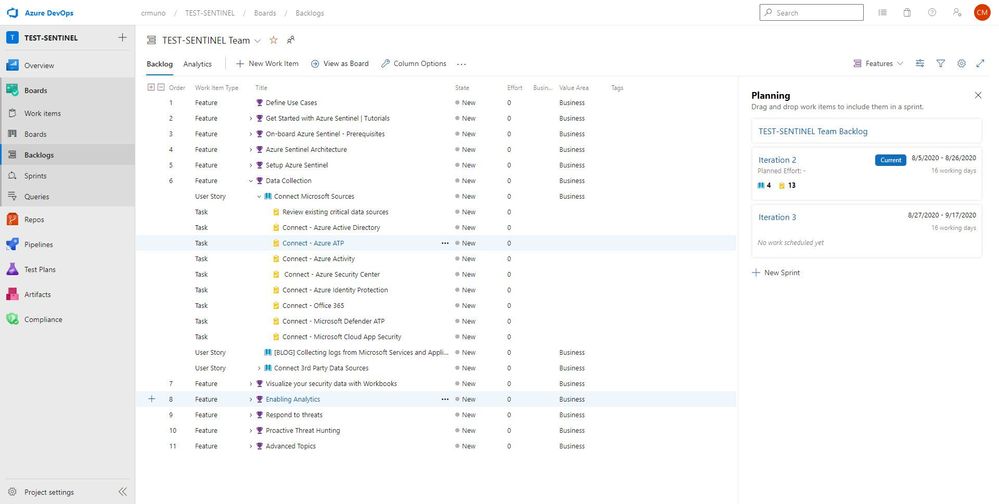

9. Select the link to go to the new demo Azure DevOps Services project and confirm it was successfully provisioned. You should arrive at the following page:

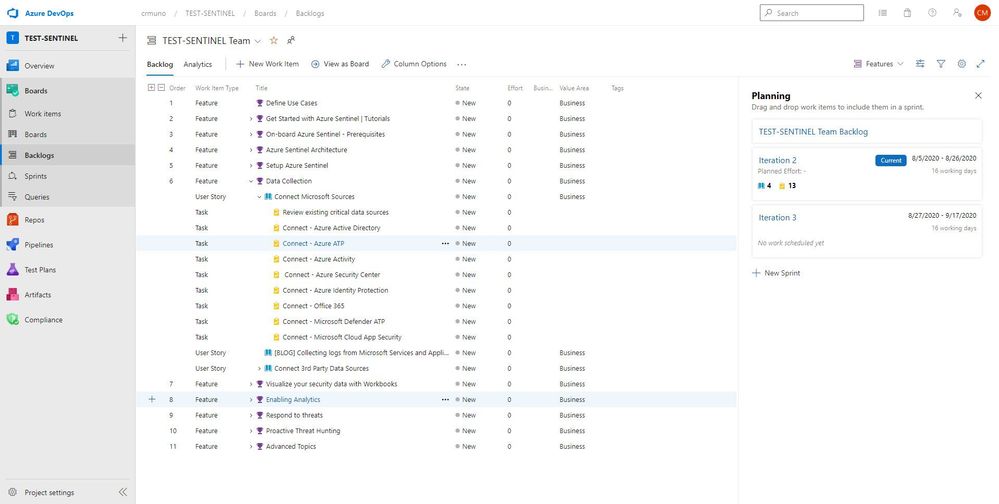

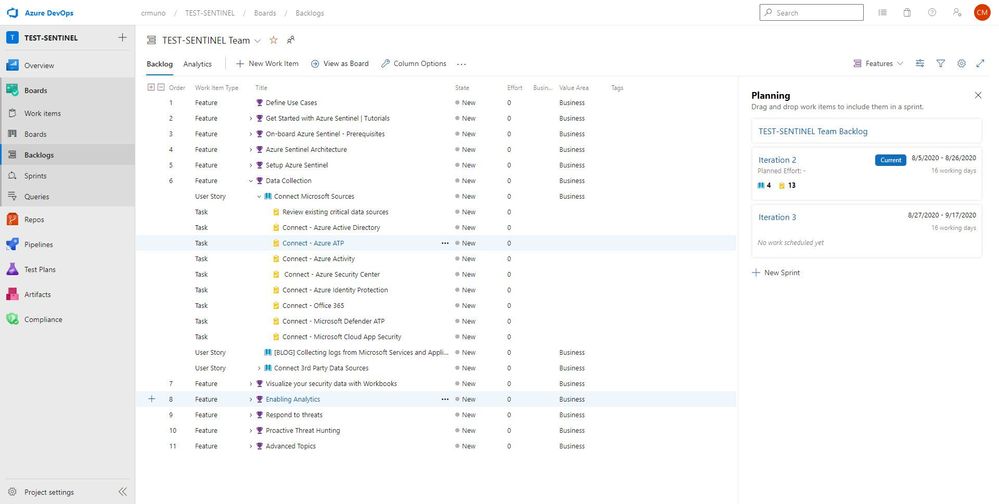

10. To access the Azure Sentinel backlog where you will find the features, user stories, and tasks to deploy Azure Sentinel, hover over Boards, and select Backlogs. Make sure that you are viewing the Features hierarchy. The backlog page will be the main page you will visit to consume the recommended practices and detailed steps to deploy Azure Sentinel.


Adding Team Members

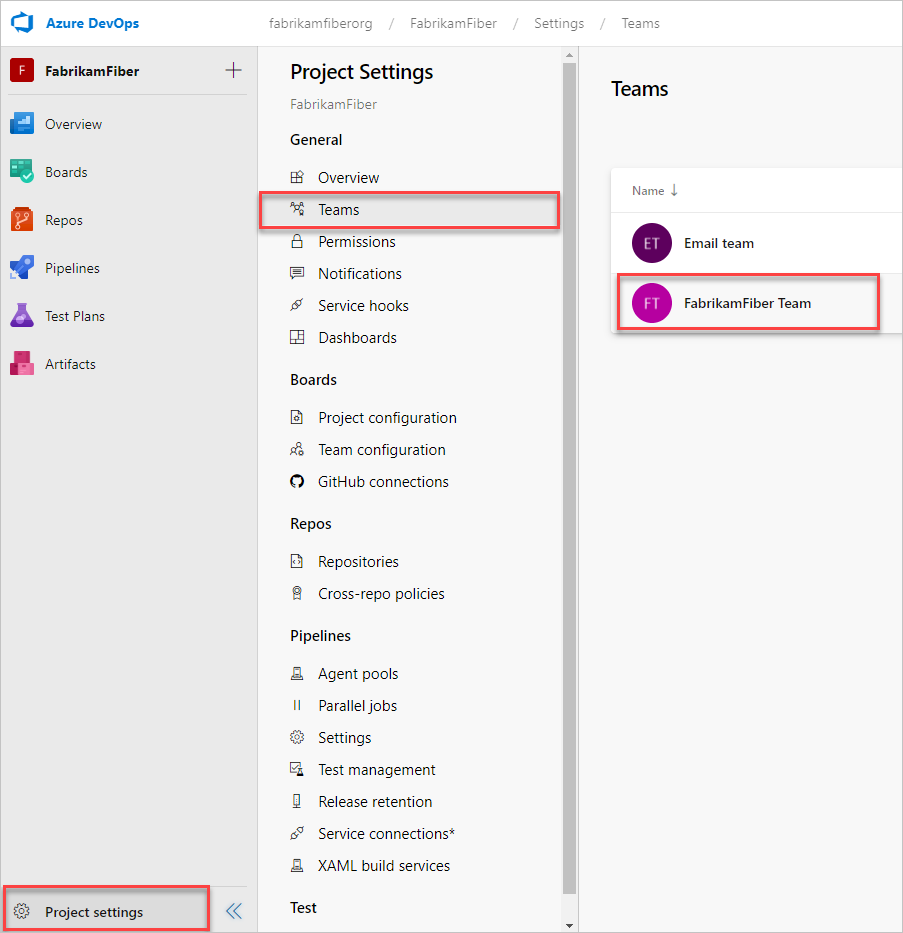

1. Open your project, and then select Project settings > Teams. Then, select your project.

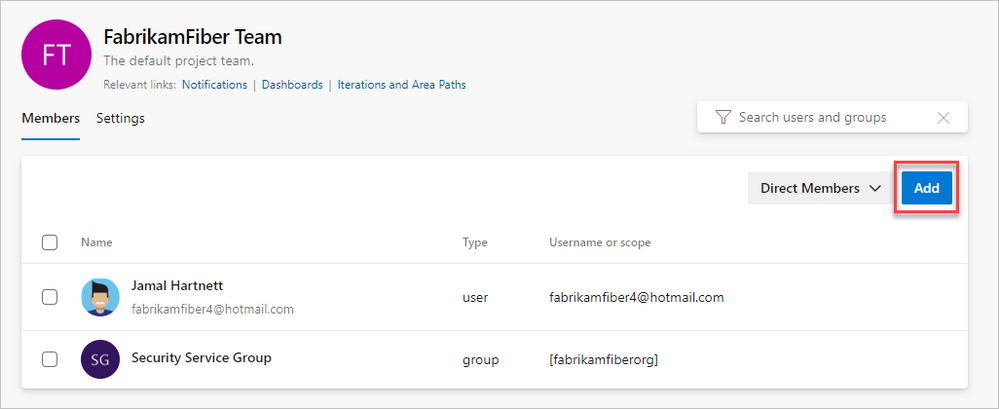

2. Select Add to invite members to your project.

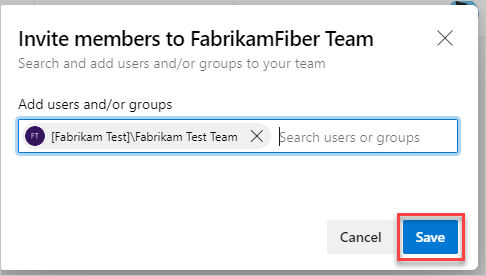

3. Add users or groups, and then choose Save.

Enter the email addresses of the new users, separated by semicolons, or enter the display names of existing users. Add them one at a time or all at once.

How to Use

The template is comprised of features, user stories, and tasks providing guidance and recommended practices for your Azure Sentinel deployment. The template should help your team to discuss, agree on acceptance criteria, delegate ownership, create iterations, track the progress and efficiently deploy Azure Sentinel.

Note: Please remember that the template is not static; it can be modified to reflect your distinctive needs. You can add your own features, user stories, and tasks to reflect your custom use cases.

In this template we provide prescriptive guidance for the following Azure Sentinel use cases:

- Define Use Cases

- Get Started with Azure Sentinel | Tutorials

- Onboard Azure Sentinel | Prerequisites

- Azure Sentinel Architecture

- Setup Azure Sentinel

- Data Collection

- Visualize your security data with Workbooks

- Enabling Analytics

- Respond to threats

- Proactive threat hunting

- Advanced Topics

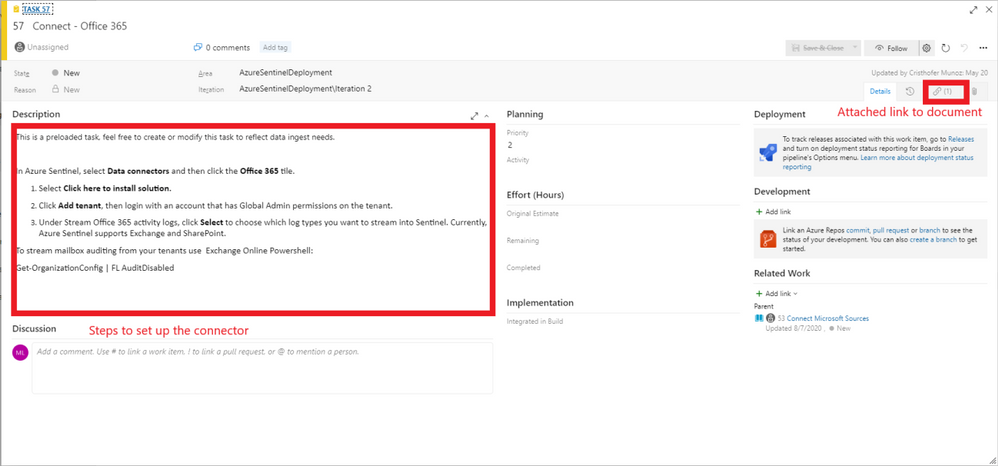

The use cases above are listed as Features, each comprised of user stories and tasks that provide detailed steps to satisfy the use case. The user stories and tasks are nested within each feature. Each task under the user stories includes important information such as links to public documentation, blogs, and webinars that provide the information you need to complete the task.

In total, there are 11 features that have been listed above. Features 1 through 4 cover any initial steps and pre-requisites for preparing your Azure Sentinel deployment. Features 5 through 11 cover the actual steps for setting up and exploring features with Azure Sentinel.

Feature 1: Define Use Cases

Defining use cases is the most important step for this entire process. There must be a need and use when pursuing the deployment of a product. To provide some ideas or guidance, Gartner has created an article that covers how to determine and build great use cases when deploying a SIEM.

Feature 2: Get Started with Azure Sentinel | Tutorials

To help introduce and prepare you for the deployment of Azure Sentinel, this feature includes the well put together Azure Sentinel Ninja Training with additional Kusto training to assist. This training is to help introduce the concepts and features of the product with materials to help educate and prepare your users for day to day usage of Azure Sentinel.

Feature 3: Onboard Azure Sentinel | Prerequisites

It is important to identify and understand the prerequisites for deploying and using Azure Sentinel. To assist with this, this feature in the template provides a list of prerequisites as well as associated documents with additional information that will help with addressing them.

Feature 4: Azure Sentinel Architecture

The design of a SIEM is as important as the SIEM itself. When deploying, it is essential to anticipate design, architecture, and best practices. To provide some guidance and advice, a blog that covers the best practices for implementing Azure Sentinel and Azure Security Center is included.

Along with the best practices for implementing Azure Sentinel, it is essential to understand the costs associated with using the product. Azure Sentinel as a service is mostly free but it is important to understand where the costs are coming from and how you can project costs when reviewing data ingestion options and volume. The Azure Calculator is an invaluable tool that assists with this process and can provide insight into how much it will cost to ingest data that is not free.

Feature 5: Setup Azure Sentinel

The use cases have been determined. The learning material has been reviewed. The prerequisites are understood. The architecture is set and the costs are projected. It is time to take action and deploy the resources to set up Azure Sentinel. As covered in the Ninja training, Azure Sentinel is built on top of the Azure Log Analytics service. This service will serve as the main point for ingestion and log retention. Azure Log Analytics is where you will collect, process, and store data at cloud scale. For reference, documentation for creating a new workspace is listed in this feature. Once the workspace is ready to go, it is time to onboard it to Azure Sentinel. The documentation for onboarding the workspace is also included in the feature.

Once the service is set up, it is time to determine the permissions that are needed for the users that will be using it. Azure Sentinel has 3 different roles backed by Azure role-based access control (Azure RBAC) to provide built-in roles that can be assigned to users, groups, and services in Azure. The document with the roles is listed in the feature. Additionally, permissions can be assigned on the table level for data in order to prevent users from seeing certain data types if desired.

Feature 6: Data Collection

Data ingestion is the oxygen of Azure Sentinel. Azure Sentinel improves the ability to collect and use data from a variety of sources to unblock customer deployments and unlock full SIEM value.

Setting up data collection starts not only the data ingestion, but also the machine learning capabilities of Azure Sentinel. When exploring the dozens of connectors that are available out of the box, we recommend enabling the Microsoft security data connectors first. Once the first-party connectors are chosen, it is time to explore the third-party connectors. Each connector listed in this feature includes a description and a reference to the associated document.

Feature 7: Visualize your security data with Workbooks

Once data begins to be ingested, it is time to visualize the data to monitor trends, identify anomalies, and present useful information within Azure Workbooks. Out of the box, there are dozens of built-in Workbooks to choose from as well as several from the Azure Sentinel GitHub community page. Within the feature for Workbooks are a few sample Workbooks to consider. Not every data source or connector will have a Workbook but there are quite a few that can be useful.

Feature 8: Enabling Analytics

One of the main features of Azure Sentinel is its ability to detect malicious or suspicious behaviors based on the MITRE ATT&CK framework. Out of the box, there are over 100 different built-in detections that were made by Microsoft security professionals. These are simple to deploy, and the feature in the template provides documentation for deploying the template detection rules, as well as the document for creating your own custom detection rules.

Feature 9: Respond to threats

To complement its SIEM capabilities, Azure Sentinel also has SOAR capabilities. This feature contains helpful documents for setting up Playbooks for automated response, deploying Playbooks from the GitHub repository, and integrating ticket management services via Playbooks.

Feature 10: Proactive threat hunting

To go along with the reactive features, Azure Sentinel also lets you proactively search for, review, and respond to undetected or potentially malicious activities that may indicate intrusion or compromise. Azure Sentinel offers dozens of out-of-the-box hunting queries that identify potentially exploitable or exploited areas and activities within your environment. This feature within the template provides links and information to ignite your proactive threat hunting journey with out-of-the-box hunting queries, bookmarks, Azure Notebooks, and livestream.

Feature 11: Advanced Topics

If desired, Azure Sentinel can be deployed and managed as code. To help provide context and guidance, this feature within the template includes a blog post that covers how to deploy and manage Azure Sentinel as code.

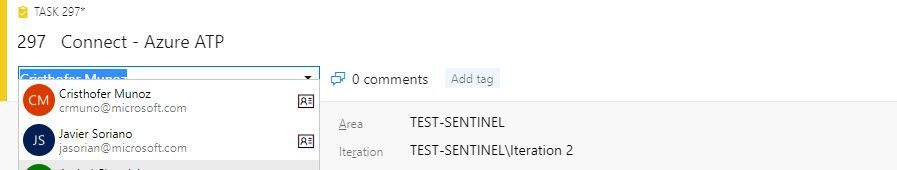

Assign work items to a team member

You can only assign a work item to one person at a time. The Assigned To field is a person-name field designed to hold a user identity recognizable by the system. Within the work item form, choose the Assigned To field to select a project member. Or, you can begin typing the name of a project member to quickly narrow your search to a select few.
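Assignment can also be scripted instead of done through the work item form. The sketch below builds, without sending, a request in the shape used by the Azure DevOps work item update REST API, which takes a JSON Patch document. The organization, project, work item ID, assignee, and `api-version` value are placeholders or assumptions, and authentication (for example a personal access token) is deliberately left out.

```python
import json
import urllib.parse
import urllib.request


def build_assign_request(organization, project, work_item_id, assignee, api_version="6.0"):
    """Build (but do not send) a PATCH request that sets System.AssignedTo."""
    url = (
        f"https://dev.azure.com/{urllib.parse.quote(organization)}/"
        f"{urllib.parse.quote(project)}/_apis/wit/workitems/{work_item_id}"
        f"?api-version={api_version}"
    )
    # A work item holds a single assignee, so one "add" operation replaces the value.
    patch = [{"op": "add", "path": "/fields/System.AssignedTo", "value": assignee}]
    return urllib.request.Request(
        url,
        data=json.dumps(patch).encode("utf-8"),
        headers={"Content-Type": "application/json-patch+json"},
        method="PATCH",
    )


# Placeholder organization, project, ID, and user.
assign_request = build_assign_request("contoso", "AzSentinelDeployment", 42, "user@example.com")
print(assign_request.full_url)
print(assign_request.data.decode("utf-8"))
```

Sending the request requires adding an authorization header first; the portal form described above remains the simplest route for occasional changes.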

Tracking Progress with Boards

Azure DevOps uses a progress tracking approach that is similar to Agile project management. Boards list each task, its state of progress, and the individuals assigned to it. As the tasks are worked on, they move across the Board until they are closed. The tasks can also be clicked and dragged around the Board as desired. This gives you a blueprint for understanding the completed and outstanding tasks for your Azure Sentinel deployment.

What’s Next

The current version of the Azure Devops Board template provides you the blueprint to understand the tasks and recommended practices to onboard to Azure Sentinel. The next iteration will incorporate a CI/CD pipeline that will enhance and automate the tasks/phases covered in the Azure Devops Board template. The CI/CD pipeline will automate your Azure Sentinel deployment so you spend less time with the nuts and bolts and more time securing your environment.

Get started today!

Supercharge your cloud SIEM today!

We encourage you to leverage the Azure Sentinel Devops Board template to accelerate your Azure Sentinel deployment following recommended practices.

Try it out, and let us know what you think!
