by Contributed | Sep 30, 2020 | Azure, Technology, Uncategorized

This article is contributed. See the original author and article here.

Many customers need to audit what happens in their SOC environment to meet both internal and external compliance requirements. It is important to understand who did what, and when, within your Azure Sentinel instance. In this blog, we will explore how you can audit your organization’s SOC if you are using Azure Sentinel, and how to get visibility into the activities being performed within your Sentinel environment. The accompanying Workbook for this blog can be found here.

There are two tables we can use for auditing Sentinel activities:

- LAQueryLogs

- AzureActivity

In the following sections we will show you how to set up these tables and provide examples of the types of queries that you could run with this audit data.

LAQueryLogs table

The LAQueryLogs table contains audit logs for log queries run in Log Analytics, the underlying query engine of Sentinel. For each query, it records when the query was run, who ran it, which tool was used, the query text, and performance statistics describing the query’s execution.
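To see how those fields look in practice, the following is a minimal sketch that lists recent queries along with who ran them, the client tool, and basic performance statistics. It assumes the table has already been enabled in your workspace's diagnostic settings. The columns RequestClientApp, ResponseDurationMs, and ResponseRowCount are not used elsewhere in this article; they are taken from the LAQueryLogs schema reference, so confirm them against the full column list. The 1-day window and the 50-row limit are arbitrary choices.

```kql
// Sketch: the most recent log queries with who/what/when and basic performance stats.
// Column names follow the LAQueryLogs schema; confirm them in your own workspace.
LAQueryLogs
| where TimeGenerated > ago(1d)
| project TimeGenerated,
          User = AADEmail,               // who ran the query
          ClientApp = RequestClientApp,  // which tool submitted it
          QueryText,
          DurationMs = ResponseDurationMs,
          CPUTimeMs = StatsCPUTimeMs,
          RowsReturned = ResponseRowCount
| order by TimeGenerated desc
| take 50
```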

Because this table isn’t enabled by default in your Log Analytics workspace, you need to enable it in the workspace's Diagnostic settings. Click here for more information on how to do this if you’re unfamiliar with the process. @Evgeny Ternovsky has also written a blog post on this process that you can find here.

A full list of the audit data contained in this table's columns can be found here. Here are a few examples of queries you could run on this table:

How many queries have run in the last week, on a per day basis:

LAQueryLogs
| where TimeGenerated > ago(7d)
| summarize events_count=count() by bin(TimeGenerated, 1d)

Number of queries that returned an HTTP response code other than 200 (OK), i.e. queries that failed:

LAQueryLogs
| where ResponseCode != 200
| count
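A single total does not show who is hitting failures or why. As a sketch, the same ResponseCode filter can be grouped by user and response code instead. The 7-day window is an assumption; adjust it to your reporting period.

```kql
// Sketch: failed queries over the last 7 days, grouped by user and HTTP response code.
LAQueryLogs
| where TimeGenerated > ago(7d)
| where ResponseCode != 200
| summarize FailedQueries = count() by AADEmail, ResponseCode
| order by FailedQueries desc
```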

Show which users ran the most CPU-intensive queries, listing each user's most expensive query by CPU time together with its total duration:

LAQueryLogs
| summarize arg_max(StatsCPUTimeMs, *) by AADEmail
| project User = AADEmail, CPUTimeMs = StatsCPUTimeMs, DurationMs = ResponseDurationMs, QueryText
| order by CPUTimeMs desc

Summarize who ran the most queries in the past week:

LAQueryLogs
| where TimeGenerated > ago(7d)
| summarize Queries = count() by UserPrincipalName = AADEmail
| join kind=leftouter (
    SigninLogs
    | summarize arg_max(TimeGenerated, UserDisplayName) by UserPrincipalName
    ) on UserPrincipalName
| project UserDisplayName, UserPrincipalName, Queries
| sort by Queries desc

AzureActivity table

As in other parts of Azure, you can use the AzureActivity table in Log Analytics to query actions taken on your Sentinel workspace. To list all Sentinel-related Azure Activity logs from the last 24 hours, use this query:

AzureActivity
| where OperationNameValue contains "SecurityInsights"
| where TimeGenerated > ago(1d)

This lists all Sentinel-specific activities within the time frame. However, this is far too broad to be useful on its own, so we can start to narrow it down. The next query returns all the actions taken by a specific Azure AD user in the last 24 hours (remember, every user with access to Azure Sentinel has an Azure AD account):

AzureActivity
| where OperationNameValue contains "SecurityInsights"
| where Caller == "[AzureAD username]"
| where TimeGenerated > ago(1d)

As a final example, this query shows all successful delete operations in your Sentinel workspace:

AzureActivity
| where OperationNameValue contains "SecurityInsights"
| where OperationName contains "Delete"
| where ActivityStatusValue contains "Succeeded"
| project TimeGenerated, Caller, OperationName

You can combine these filters and add more parameters when searching the AzureActivity table, depending on what your organization needs to report on. Below is a selection of the actions you can search for in this table:

- Update Incidents/Alert Rules/Incident Comments/Cases/Data Connectors/Threat Intelligence/Bookmarks

- Create Case Comments/Incident Comments/Watchlists/Alert Rules

- Delete Bookmarks/Alert Rules/Threat Intelligence/Data Connectors/Incidents/Settings/Watchlists

- Check user authorization and license
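Instead of guessing exact operation names, you can enumerate the Sentinel operations that actually appear in your workspace. The following is a minimal sketch using the same "SecurityInsights" filter as the queries above. The 30-day window is an assumption, and the operations you see will depend on what has been done in your environment.

```kql
// Sketch: which Sentinel operations were recorded, how often, and by how many distinct callers.
AzureActivity
| where TimeGenerated > ago(30d)
| where OperationNameValue contains "SecurityInsights"
| summarize Count = count(), DistinctCallers = dcount(Caller) by OperationNameValue, OperationName
| order by Count desc
```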

Alerting on Sentinel activities

You may want to take this one step further and use Sentinel audit logs for proactive alerts in your environment. For example, if you have sensitive tables in your workspace that should not typically be queried, you could set up a detection to alert you to this:

LAQueryLogs
| where TimeGenerated > ago(1d)
| where QueryText contains "[Name of sensitive table]"
| project User = AADEmail, Query = QueryText
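The same approach works with AzureActivity, for example to flag when a detection is removed. The following is a sketch only. The operation value "Microsoft.SecurityInsights/alertRules/delete" is an assumption about how analytics-rule deletions are recorded, so confirm the exact value in your own AzureActivity data before basing a detection on it. Status is projected rather than filtered, because status values can differ between AzureActivity schema versions.

```kql
// Sketch: alert when a Sentinel analytics (alert) rule is deleted.
// ASSUMPTION: the operation value below; verify it against your own AzureActivity records.
AzureActivity
| where TimeGenerated > ago(1d)
| where OperationNameValue =~ "Microsoft.SecurityInsights/alertRules/delete"
| project TimeGenerated, Caller, CallerIpAddress, OperationNameValue, ActivityStatusValue
```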

Sentinel audit activities Workbook

We have created a Workbook to assist you in monitoring activities in Sentinel. Please check it out here, and if you have any improvements or have made your own version you’d like to share, please submit a PR to our GitHub repo!

With thanks to @Jeremy Tan, @Javier Soriano, @Matt_Lowe and @Nicholas DiCola (SECURITY JEDI) for their feedback and inputs to this article.

by Contributed | Sep 29, 2020 | Azure, Technology, Uncategorized


Additional certificate updates for Azure Sphere

Microsoft is updating Azure services, including Azure Sphere, to use intermediate TLS certificates from a different set of Certificate Authorities (CAs). These updates are being phased in gradually, starting in August 2020 and completing by October 26, 2020. This change is being made because existing intermediate certificates do not comply with one of the CA/Browser Forum Baseline requirements. See Azure TLS Certificate Changes for a description of upcoming certificate changes across Azure products. Azure IoT TLS: Changes are coming! (…and why you should care) provides details about the reasons for the certificate changes and how they affect the use of Azure IoT.

How does this affect Azure Sphere?

On October 13, 2020 we will update the Azure Sphere Security Service SSL certificates. Please read on to determine whether this update will require any action on your part.

What customer actions are required for the SSL certificate updates?

On October 13, 2020 the SSL certificate for the Azure Sphere Public API will be updated to a new leaf certificate that links to the new DigiCert Global Root G2 certificate. This change will affect only the use of the Public API. It does not affect Azure Sphere applications that run on the device.

For most customers, no action is necessary in response to this change because Windows and Linux systems include the DigiCert Global Root G2 certificate in their system certificate stores. The new SSL certificate will automatically migrate to use the DigiCert Global Root G2 certificate.

However, if you “pin” any intermediate certificates or require a specific subject, name, or issuer (“SNI pinning”), you will need to update your validation process. To avoid losing connectivity to the Azure Sphere Public API, you must make this change before we update the certificate on October 13, 2020.

What about Azure Sphere apps that use IoT and other Azure services?

Additional certificate changes will occur soon that affect Azure IoT and other Azure services. The update to the SSL certificates for the Azure Sphere Public API is separate from those changes.

Azure IoT TLS: Changes are coming! (…and why you should care) describes the upcoming changes that will affect IoT Hub, IoT Central, DPS, and Azure Storage Services. These services are not changing their Trusted Root CAs; they are only changing their intermediate certificates. Azure Sphere on-device applications that use only the Azure IoT and Azure Sphere application libraries should not require any modifications. When future certificate changes are required, we will update the IoT C SDK in the Azure Sphere OS and thus make the updated certificates available to your apps.

If your Azure Sphere on-device applications communicate with other Azure services, however, and pin or supply certificates for those services, you might need to update your image package to include updated certificates. See Azure TLS Certificate Changes for information about which certificates are changing and what changes you need to make.

We continue to test common Azure Sphere scenarios as other teams at Microsoft perform certificate updates and will provide detailed information if additional customer action is required.


If you encounter problems

For self-help technical inquiries, please visit Microsoft Q&A or Stack Overflow. If you require technical support and have a support plan, please submit a support ticket in Microsoft Azure Support or work with your Microsoft Technical Account Manager/Technical Specialist. If you would like to purchase a support plan, please explore the Azure support plans.

by Contributed | Sep 29, 2020 | Azure, Technology, Uncategorized


As we continue to drive expansion of support for Department of Defense Security Requirements Guide (DoD SRG) Impact Level 5 (IL5) to all Azure Government regions, we recently announced the addition of 18 new services for a total of 97 services authorized for IL5 workloads in Azure Government – more than any other cloud provider.

These services include a broad range of IaaS and PaaS capabilities to enable mission owners to move further, faster. Mission owners can choose from multiple regions across the country and benefit from decreased latency, expanded geo-redundancy, and a range of options for backup, recovery, and cost optimization.

When supporting IL5 workloads on Azure Government, the isolation requirements can be met in different ways. The Isolation guidelines for IL5 workloads documentation page addresses the configurations and settings needed for the isolation required to support IL5 data, with service-specific instructions.

To learn more about the new Azure Government services authorized for IL5, read the Azure Gov blog.

by Contributed | Sep 29, 2020 | Azure, Technology, Uncategorized


Final Update: Tuesday, 29 September 2020 18:38 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 09/29, 18:20 UTC. Our logs show the incident started on 09/28, 21:45 UTC and took 20 hours and 35 minutes to resolve.

- Root Cause: AAD outages
- Incident Timeline: 20 Hours & 35 minutes – 09/28, 21:45 UTC through 09/29, 18:20 UTC

We understand that customers rely on Application Insights as a critical service and apologize for any impact this incident caused.

-Vincent

Initial Update: Tuesday, 29 September 2020 18:00 UTC

We are aware of issues within Application Insights and are actively investigating. Some customers may experience issues retrieving availability results in WUS Application Insights components.

- Work Around: none
- Next Update: Before 09/29 20:30 UTC

We are working hard to resolve this issue and apologize for any inconvenience.

-Vincent

by Contributed | Sep 29, 2020 | Azure, Technology, Uncategorized


In the first part of this two-part series with Hamish Watson, we will look at the various methods available to deploy an Azure SQL database, including PowerShell, Azure CLI, and Terraform. Creating resources has never been easier or more standardized than it is now.

Watch on Data Exposed

View/share our latest episodes on Channel 9 and YouTube!

by Contributed | Sep 29, 2020 | Azure, Technology, Uncategorized


As many of you know, all versions of the AzureRM PowerShell module are outdated, but not out of support (yet). The Az PowerShell module is now the recommended PowerShell module for interacting with Azure.

We asked our customers what was keeping them from migrating from AzureRM to the Az PowerShell module. Most of the responses mentioned the amount of work invested in scripts based on AzureRM that would need to be overhauled. Many of these are non-trivial scripts to create things like entire environments in Azure.

We listened and we’re happy to announce the Az.Tools.Migration PowerShell module. The Az.Tools.Migration PowerShell module can automatically upgrade your PowerShell scripts and script modules from AzureRM to the Az PowerShell module.

Preparing your environment

First, you’ll need to update your existing PowerShell scripts to the latest version of the AzureRM PowerShell module (6.13.1) if you haven’t already.

Install the Az.Tools.Migration PowerShell module from the PowerShell Gallery using the following command.

Install-Module -Name Az.Tools.Migration

Generate an Upgrade Plan

You use the New-AzUpgradeModulePlan cmdlet to generate an upgrade plan for migrating your scripts and modules to the Az PowerShell module. This cmdlet doesn’t make any changes to your existing scripts. Use the FilePath parameter for targeting a specific script or the DirectoryPath parameter for targeting all scripts in a specific folder.

The following example generates a plan for all the scripts in the C:\Scripts folder. The OutVariable parameter is specified so the results are returned and simultaneously stored in a variable named Plan.

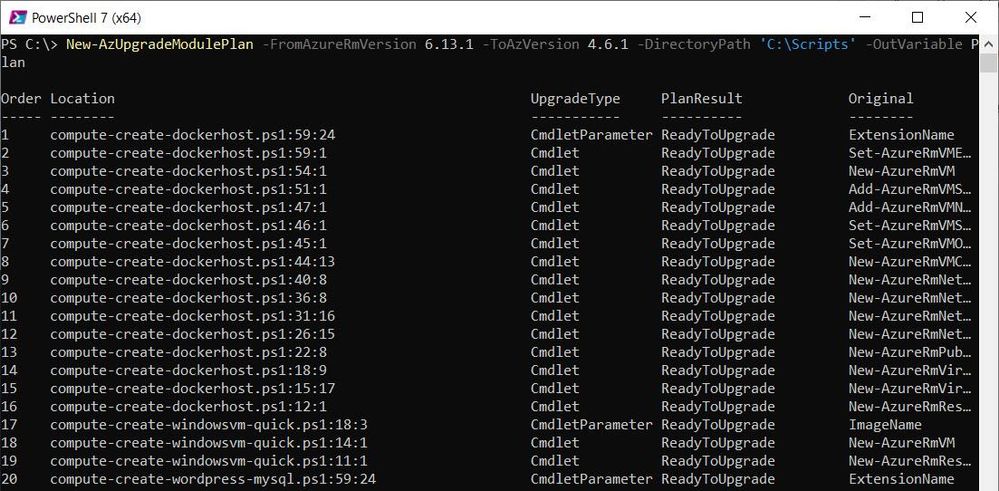

New-AzUpgradeModulePlan -FromAzureRmVersion 6.13.1 -ToAzVersion 4.6.1 -DirectoryPath 'C:\Scripts' -OutVariable Plan

Before performing the upgrade, review the results of the plan for any problems. The following example returns a list of scripts, and the items in those scripts, that will prevent them from being upgraded automatically.

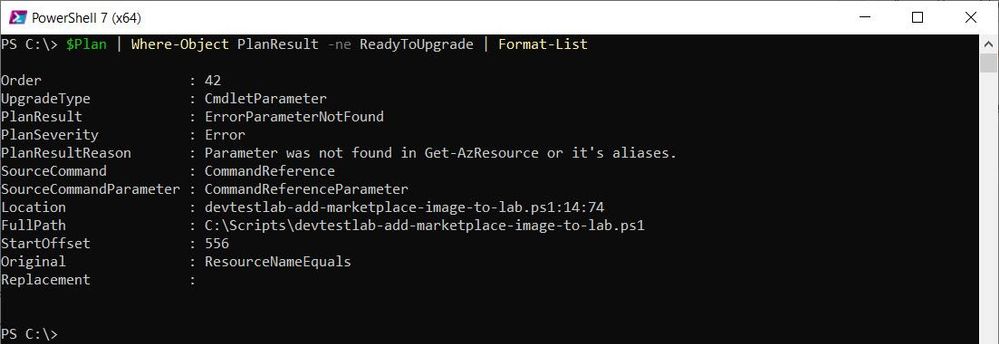

$Plan | Where-Object PlanResult -ne ReadyToUpgrade | Format-List

These items won't be upgraded automatically until you correct the issues manually. Known issues that can’t be upgraded automatically include any commands that use splatting.

Perform the Upgrade

Once you’re satisfied with the results, perform the upgrade with the Invoke-AzUpgradeModulePlan cmdlet. When run with -FileEditMode SaveChangesToNewFiles, as in the example below, this cmdlet doesn’t modify your original scripts either. Instead, it creates a copy of each targeted script with “_az_upgraded” appended to the file name.

The following example upgrades all of the scripts from the previously generated plan.

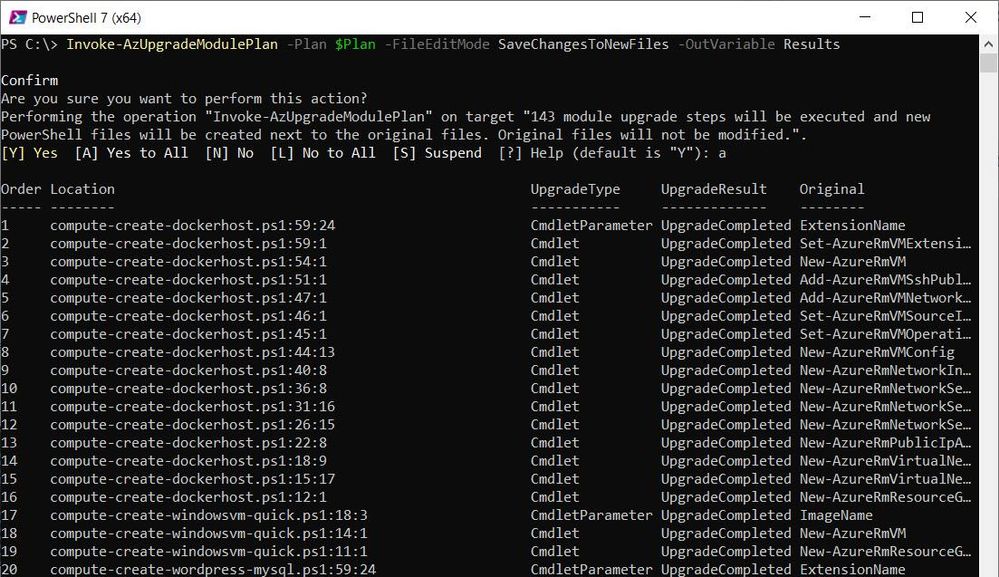

Invoke-AzUpgradeModulePlan -Plan $Plan -FileEditMode SaveChangesToNewFiles -OutVariable Results

Feedback

Issues and/or suggestions can be logged via an issue in the azure-powershell-migration GitHub repository.
