by Contributed | Oct 13, 2020 | Azure, Technology

This article is contributed. See the original author and article here.

Cloud governance is an iterative process: as your cloud estate changes over time, so do your cloud governance processes and policies. Cloud governance spans five disciplines:

- Cost Management – Evaluate & Monitor costs, limit IT spend, scale to meet need, create cost accountability

- Security Baseline – Ensure compliance with IT Security requirements by applying a security baseline to all adoption efforts

- Resource Consistency – Ensure consistency in resource configuration. Enforce practices for onboarding, recovery, and discoverability

- Identity Baseline – Ensure the baseline for identity and access is enforced by consistently applying role definitions and assignments

- Deployment Acceleration – Accelerate deployment through centralization, consistency, and standardization across deployment templates

The following systematic approach can help you put together an initial governance foundation:

- Methodology: Establishes a basic understanding of the methodology that drives cloud governance in the Cloud Adoption Framework, so you can begin thinking through the end state solution.

- Benchmark: Evaluation and assessment of your AS-IS and TO-BE state to establish a vision for applying the framework.

- Governance MVP: Provides an initial foundation for your governance journey with a small, easily implemented set of governance tools.

- Extend and Improve your Governance MVP: Iteratively enhance your Governance MVP by adding governance controls to address tangible risks as you progress toward the end state.

Reference – https://aka.ms/CAF/Gov


In the second part of this two-part series, Hamish Watson shows us how to use infrastructure as code to deploy an Azure Kubernetes Service cluster. To learn about the many ways to deploy an Azure SQL database, watch part one.

Watch on Data Exposed

View/share our latest episodes on Channel 9 and YouTube!


My customer has a large on-premises file share environment based on Windows Server file shares with petabytes of data. Maintaining and operating those servers sounds like a simple task, but doing so in a large and complex infrastructure can be challenging. If the file shares are run by multiple teams, the overall SLA can be heavily impacted, and the run costs are very high.

Azure has viable alternatives for hosting file shares. In this post, I want to compare Azure Files (AZF) and Azure NetApp Files (ANF) so that we can make the right choice when we migrate to Azure.

I discussed the scenario with Sebastian Brack – thanks a lot for providing the tables below and providing lots of insights!

Features

| Feature | Azure NetApp Files | Azure Files Premium |
| --- | --- | --- |
| Native Azure Service, fully managed | Yes | Yes |
| Protocol Compatibility | SMB 2.1/3.0/3.1.1, NFS 3/4.1; Multiprotocol: SMB+NFSv3 | FileREST, SMB 2.1/3.0, NFS 4.1 (Preview) |
| Min Size | 4 TiB | 100 GiB |
| Max Volume Size | 100 TiB | 100 TiB |
| Max File Size | 16 TiB | 4 TiB |
| Service Levels / Tiering | Standard 0.124354€/GiB; Premium 0.248091€/GiB; Ultra 0.331198€/GiB | Premium 0.162€/GiB + 0.1375€/GiB Snapshots |
| Shape Capacity/Performance independently | Yes (Manual-QoS) | No |
| On-Prem Access (Hybrid) | Yes (ExpressRoute, VPN) | Yes (ExpressRoute, VPN, Internet); Private Link required (pricing) for VPN/ExpressRoute (Private Peering): €0.009 per GB In-/Outbound Data Processing; or ExpressRoute (Microsoft Peering) |
| Regional Availability | 22+ regions | 32+ regions |
| Regional Redundancy | LRS equivalent (99.99% SLA) | LRS (99.9% SLA); ZRS* (Asia Southeast, Australia East, Europe North, Europe West, US East, US East 2, US West 2) |
| Geo Redundancy | Yes, Cross-Region Replication (Preview) | No |
| Storage at-rest encryption | Yes (AES 256) | Yes (AES 256) |
| Backup | Incremental Snapshots (4k block), Cross-Region Replication, 3rd party | Incremental Snapshots (file), Azure Backup Integration |
| Snapshot Integration into SMB Client | Yes (Previous Versions + ~snapshot) | Yes (Previous Versions) |
| Snapshot Integration into NFS Client | Yes (.snapshot) | No |
| Snapshot Restore via Portal | Restore to new volume | No |
| Integrated Snapshot Scheduling | Yes (Snapshot Policies) | No |
| Identity-based authentication and authorization | Azure Active Directory Domain Services (Azure AD DS), On-premises Active Directory Domain Services (AD DS) | Azure Active Directory (Azure AD); Azure Active Directory Domain Services (Azure AD DS); On-premises Active Directory Domain Services (AD DS) via AD Connect |

Please note: the prices are taken from the Azure West Europe region for comparison. They may vary depending on the service and region.

The features table looks quite similar – but the details make this more interesting:

Protocol compatibility is a strength of ANF – more protocols and SMB combined with NFSv3: Some applications require both protocols, especially in an integration scenario. As of writing this, NFS is in Preview for Azure Files.

As of now, you must start with at least 4 TiB for ANF, for AZF it is only 100 GiB – if you only have a small scenario, then AZF scores here.
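To make the effect of the minimum sizes concrete, here is a minimal Python sketch that estimates the storage cost of a small share on both services. It uses the per-GiB prices from the features table; treating them as monthly prices is my assumption, snapshot, Private Link and data processing charges are ignored, and the function names are purely illustrative.

```python
# Per-GiB prices copied from the features table (West Europe).
# Assumption: they are billed per provisioned GiB per month.
ANF_PRICE_PER_GIB = {"standard": 0.124354, "premium": 0.248091, "ultra": 0.331198}
AZF_PREMIUM_PRICE_PER_GIB = 0.162

GIB_PER_TIB = 1024
ANF_MIN_GIB = 4 * GIB_PER_TIB  # ANF minimum size: 4 TiB
AZF_MIN_GIB = 100              # AZF Premium minimum size: 100 GiB


def anf_cost(required_gib: float, level: str = "standard") -> float:
    """Estimated ANF cost in EUR, never provisioning less than the minimum."""
    provisioned = max(required_gib, ANF_MIN_GIB)
    return provisioned * ANF_PRICE_PER_GIB[level]


def azf_cost(required_gib: float) -> float:
    """Estimated Azure Files Premium cost in EUR, respecting the minimum size."""
    provisioned = max(required_gib, AZF_MIN_GIB)
    return provisioned * AZF_PREMIUM_PRICE_PER_GIB


if __name__ == "__main__":
    for size_gib in (500, 4096, 20480):
        print(f"{size_gib:>6} GiB  ANF Standard: {anf_cost(size_gib):10.2f} EUR"
              f"  AZF Premium: {azf_cost(size_gib):10.2f} EUR")
```

For a 500 GiB requirement, ANF is still billed for the full 4 TiB minimum, which is why AZF scores for small scenarios.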

Hybrid connectivity is another important point for my customer. ANF is fully private, with no way to expose it to the internet. AZF is accessible via the internet, privately via Private Link (additional cost!), or via ExpressRoute Microsoft Peering.

Performance, Throughput

| Feature | Azure NetApp Files | Azure Files Premium |
| --- | --- | --- |
| Transaction & data transfer prices | Included | Included |
| Throughput (single volume/share) | Ultra: 128 MiB/s per provisioned TiB (auto); Premium: 64 MiB/s per provisioned TiB (auto); Standard: 16 MiB/s per provisioned TiB (auto) | Egress: 60 MiB/s + 61.44 MiB/s per provisioned TiB; Ingress: 40 MiB/s + 40.96 MiB/s per provisioned TiB |
| Shape capacity & performance independently | Yes, Manual-QoS (preview) | No |
| IOPS (single volume/share) | Not limited explicitly, dependent on throughput & IO size (benchmark ~460.000); Example: 1 IOPS @ 64kb per provisioned GiB (Premium), 16 IOPS @ 4k per provisioned GiB (Premium) | Baseline: 1 IOPS per provisioned GiB up to 100.000; Burst: 3 IOPS per provisioned GiB up to 100.000 |
| File level throughput limit | Unlimited (volume throughput limit) | Egress 300 MiB/s, Ingress 200 MiB/s |
| File level IOPS limit | Unlimited (volume throughput limit) | 5000 IOPS |
| Volume/Share Size adjustable | Yes | Yes, cooldown for decrease @ 24h |
| Service Level changeable | Yes, cooldown for decrease @ 7 days (Preview) | No |
| NFS nconnect | Yes (NFSv3) | No |
| SMB Multichannel | Yes | No |

Please note: Features and performance may have changed since publishing this post – please verify! For ANF there is a “What’s new page”, for AZF you can check Azure Update.

Now let us look at the service levels. ANF is more flexible: file shares can be placed in one of three performance tiers, while AZF has two tiers. If you provision a large 100 TiB volume with ANF, you get 1600 MiB/s of throughput on the Standard tier (100 TiB × 16 MiB/s per TiB), even for single files, because file-level throughput depends only on the volume size or manual quota. This flexibility on the ANF side is a big benefit.
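The throughput formulas from the table are easy to put into a few lines of Python. The sketch below simply applies the per-TiB rates listed above, assuming automatic QoS for ANF; the function names are my own, and the file-level limits of AZF are not modelled.

```python
# MiB/s per provisioned TiB for each ANF service level (auto QoS), from the table.
ANF_MIBPS_PER_TIB = {"standard": 16, "premium": 64, "ultra": 128}


def anf_throughput_mibps(provisioned_tib: float, level: str) -> float:
    """Volume throughput limit of an ANF volume with automatic QoS."""
    return provisioned_tib * ANF_MIBPS_PER_TIB[level]


def azf_premium_throughput_mibps(provisioned_tib: float) -> tuple[float, float]:
    """(egress, ingress) share throughput of Azure Files Premium."""
    egress = 60 + 61.44 * provisioned_tib
    ingress = 40 + 40.96 * provisioned_tib
    return egress, ingress


if __name__ == "__main__":
    for tib in (4, 20, 100):
        egress, ingress = azf_premium_throughput_mibps(tib)
        print(f"{tib:>3} TiB  ANF Standard: {anf_throughput_mibps(tib, 'standard'):7.0f} MiB/s"
              f"  AZF egress/ingress: {egress:7.1f}/{ingress:7.1f} MiB/s")
```

Keep in mind that on AZF a single file is additionally capped at 300 MiB/s egress and 200 MiB/s ingress, whereas on ANF a single file can use the full volume throughput.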

Changing the service level on ANF can be done, but please be aware of the cooldown period. Doing the same for AZF is possible, but it’s not as easy as with ANF.

The last two rows are very important regarding performance: both nconnect and SMB Multichannel allow multiple connections to the same ANF volume, drastically improving the bandwidth. Great stuff.

Hybrid Connectivity & Encryption

| Feature | Azure NetApp Files | Azure Files Premium |
| --- | --- | --- |
| SMB signing | Yes | |
| SMB in-flight encryption | No | Yes |
| NFS in-flight encryption | Yes | No |
| Active Directory Integration | Yes | Yes |
| Azure Active Directory Independent | Yes | No (AD-Connect required) |
| AD Kerberos Authentication | Yes (AES 256, AES128, DES) | Yes (AES 256) |
| AD LDAP Signing | Yes | |

Comparing the identity aspects, both services integrate with an on-premises Active Directory. AZF requires the identities to be synced to Azure Active Directory (AAD), whereas ANF integrates directly with Active Directory.

Encryption-wise, AZF supports SMB encryption – ANF does not have this yet.

Hopefully, this comparison helps you to make decisions.

Hope it helps,

Max


In the more than two years since the general availability of Azure Database for MySQL, we’ve listened to and learned a lot from those of you who use our managed MySQL database service on Azure. As a developer, you appreciate the ease of provisioning, built-in high availability, and manageability of a fully managed service. But for some of you, moving to a managed service can feel like a loss of database-level control and flexibility when it comes to configuring your MySQL servers, which has prevented you from taking advantage of the benefits of a managed service. Hopefully, not anymore.

We in the Azure OSS Database engineering team are extremely excited about our big announcement at Microsoft Ignite, where we introduced the preview release of our new Flexible server deployment option for Azure Database for MySQL. We started on this journey more than a year ago to re-imagine and design a service architecture that strikes a better balance between control and flexibility for a managed service.

Now in preview: Introducing Azure Database for MySQL – Flexible Server

We designed the new Flexible server deployment option for MySQL with these goals in mind:

- Simplify developer experiences – Make it easier for you to quickly onboard, connect, and get started.

- Maximize Database Controls – Provide maximum control over your server configurations to provide experiences on par with running your own MySQL deployments.

- More Cost Optimization Controls – Provide more options for you to optimize and save costs.

- Enable Zone Resilient & Aware Applications – Allow you to build highly available, zone resilient and performant applications, with your MySQL database co-located in the same zone, so you can tolerate zone level failures.

Let us now dive into what you can expect from the new Flexible server deployment option on Azure Database for MySQL—as well as a bit about what your experience will be like.

Create a Flexible server with a single Azure CLI command

As a developer, you are probably familiar with Azure CLI commands in Azure Cloud Shell. Now, you can create a new Flexible server for MySQL using a single Azure CLI command, as shown below:

az mysql flexible-server create -l location

Create a secure flexible server in virtual network using a single Azure CLI command


The output of the command shows the connection string, which you can use in the mysql CLI client to get started.

As of today, the Flexible Server offering for Azure Database for MySQL is live in the following Azure regions:

- East US 2

- West US 2

- North Europe

- Southeast Asia

We plan to release the Flexible Server deployment option in 8 new regions in the coming weeks. You can check our documentation for the most up-to-date information.

Use familiar tools to connect to your server & it just works!

With the Flexible Server deployment option for MySQL, you can use familiar tools like MySQL Workbench and your existing drivers to connect, and it just works!

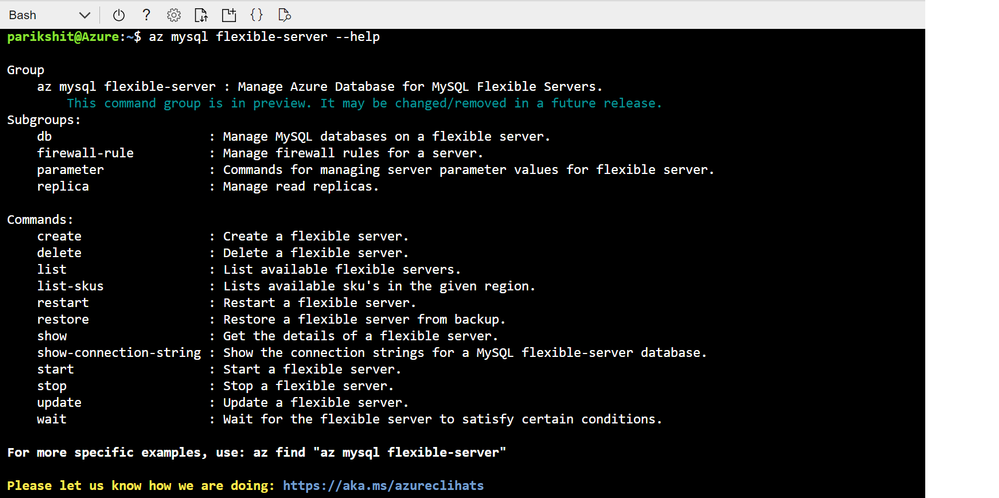

If you would like to get a guided quick start, I recommend you start here. Here is the detailed list of commands you can expect.

Screenshot showing output of the --help parameter in Azure Cloud Shell, listing all the commands supported by the az mysql flexible-server CLI

You can expect the same level of simplicity and ease of use while provisioning the server using Azure Portal, ARM or Terraform.

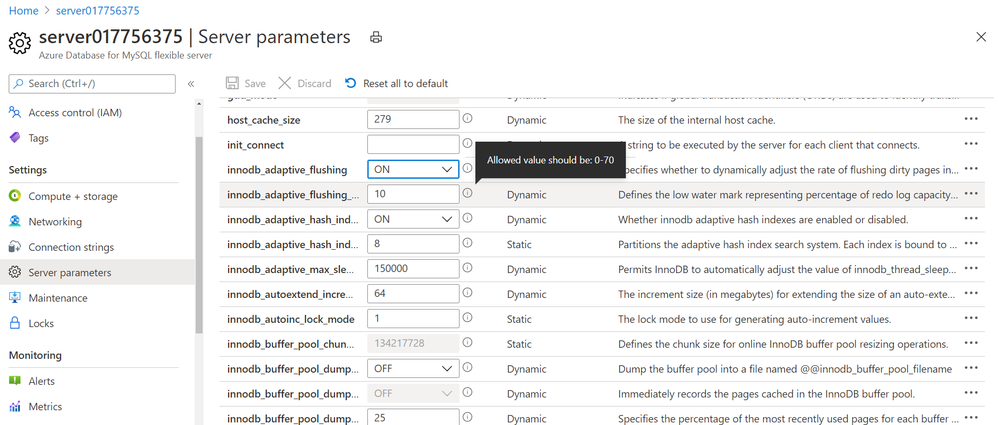

More Server Parameter Control with Flexible Server

With Flexible Server, we have exposed 30% more parameters compared to Single server which you can now modify and customize based on the needs and dependencies of your application.

Screenshot showing Server parameter blade in Azure portal for Azure Database for MySQL – Flexible Server


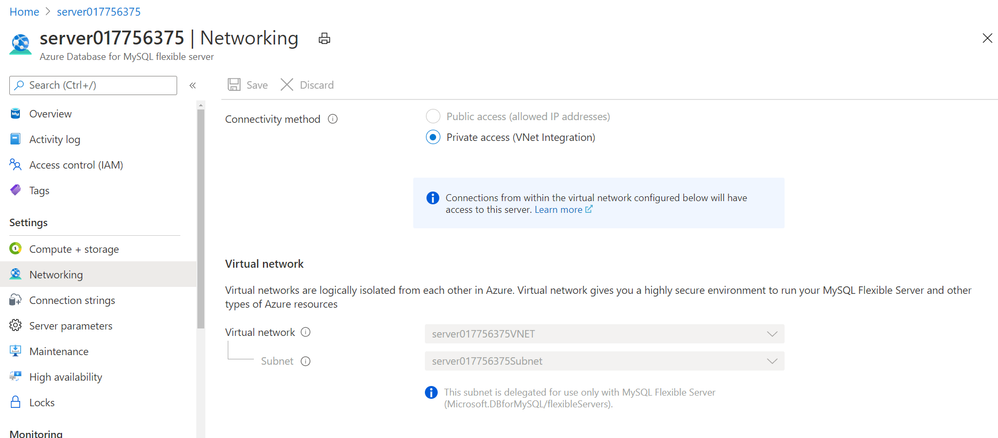

Network Isolation Control

With Flexible Server on Azure Database for MySQL, you can choose to run your server either in public access mode or secured in private access mode.

With private access, you can deploy your Flexible server into your Azure Virtual Network. Azure virtual networks provide private and secure network communication. Resources in a virtual network can communicate through private IP addresses only. A Flexible server in private access mode has no public endpoints and cannot be reached from outside the virtual network. In addition, you can create a Flexible server in a virtual network using the single command shown below. The subnet should not have any other resource deployed in it, and it will be delegated to Microsoft.DBforMySQL/flexibleServers if it is not already delegated. See Networking concepts for more details.

az mysql flexible-server create --subnet /subscriptions/{SubID}/resourceGroups/{ResourceGroup}/providers/Microsoft.Network/virtualNetworks/{VNetName}/subnets/{SubnetName}
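If you script your deployments, the subnet resource ID in the command above can be assembled from its four parts. The following Python sketch only builds the ID and the argument list for the command shown above; the helper names and the sample values are hypothetical, and any further parameters your deployment needs are not covered here.

```python
def subnet_resource_id(subscription_id: str, resource_group: str,
                       vnet_name: str, subnet_name: str) -> str:
    """Build the subnet resource ID in the format used by the command above."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Network/virtualNetworks/{vnet_name}"
        f"/subnets/{subnet_name}"
    )


def create_command(subnet_id: str) -> list[str]:
    """Argument list for the create command with the --subnet parameter."""
    return ["az", "mysql", "flexible-server", "create", "--subnet", subnet_id]


if __name__ == "__main__":
    # Sample values are placeholders, not real resources.
    subnet_id = subnet_resource_id(
        "00000000-0000-0000-0000-000000000000", "my-rg", "my-vnet", "mysql-subnet"
    )
    print(" ".join(create_command(subnet_id)))
```

Passing the arguments as a list (for example to `subprocess.run`) avoids quoting problems with the long resource ID.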

By default, SSL is enabled with TLS 1.2 encryption enforced. At this point, you have no control over the SSL/TLS configuration, and you cannot change it yourself in the portal. We had a lot of debate over this within the product team, and in the end we decided that, as your trusted cloud provider, we would like to enforce the right behavior when it comes to security. However, we welcome your feedback on this. If you have concerns or a requirement for us to support disabling SSL or TLS versions lower than 1.2, I would encourage you to open or vote on this feedback item for Azure Database for MySQL on the Azure Feedback forum.

Screenshot showing Networking blade in Azure portal for Azure Database for MySQL – Flexible Server


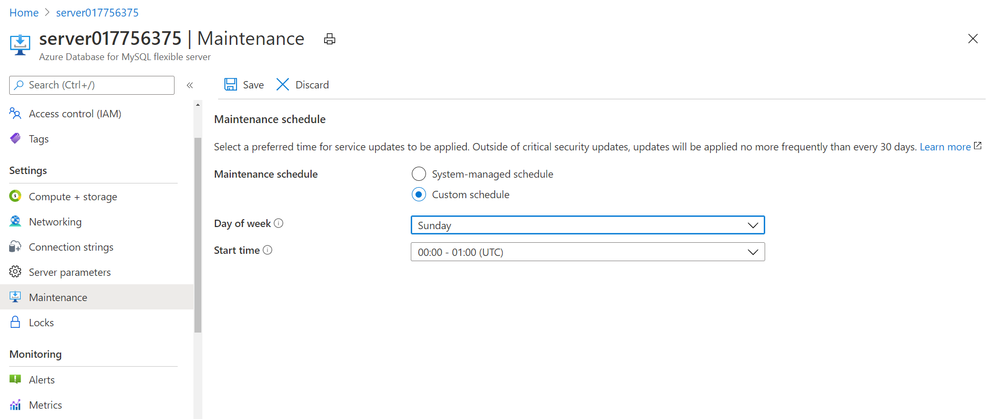

Control your Planned Maintenance schedule

The service performs automated patching of the underlying hardware, OS, and database engine. The patching includes security and software updates. For the MySQL engine, minor version upgrades are also included as part of the planned maintenance release. When you run mission-critical business applications, it is critical to be able to control the maintenance schedule, as it directly impacts the availability of the database server and the application for your business. You may also want to test the impact of a patch on your application behavior and performance. In that case, you can apply the patch to pre-production and test environments as soon as the service releases it, and plan the production rollout for a later schedule. With the new Flexible Server option for Azure Database for MySQL, you can now schedule your maintenance at a time that works best for you. From the Maintenance blade in the Azure portal, you can specify the day of the week and a 1-hour time window in a month for server patching, which may involve restarts. For more details, refer to Scheduled Maintenance concepts.

Screenshot showing Maintenance blade in Azure portal for Azure Database for MySQL – Flexible Server to schedule planned maintenance


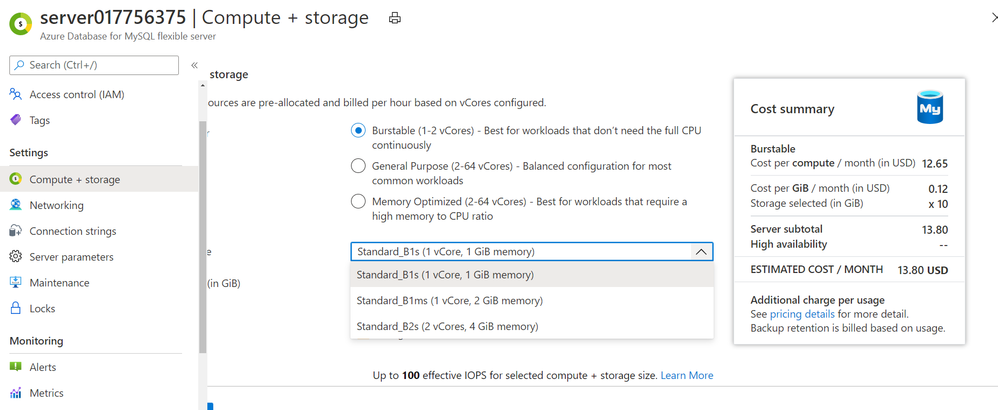

Start with burstable SKUs starting at $13 per month

This has been one of the long standing asks from many of you looking to use MySQL server for personal projects or development purposes. With Flexible Server on Azure Database for MySQL, you can now start with a burstable SKU if your workload doesn’t need 100% of CPU time all the time. Burstable SKUs are generally preferred for dev/test scenarios. The lowest available burstable compute tier B1S starts at $13 per month. See Compute and Storage sizes in documentation for more details.

Burstable SKU choices available in Flexible Server on Azure Database for MySQL server


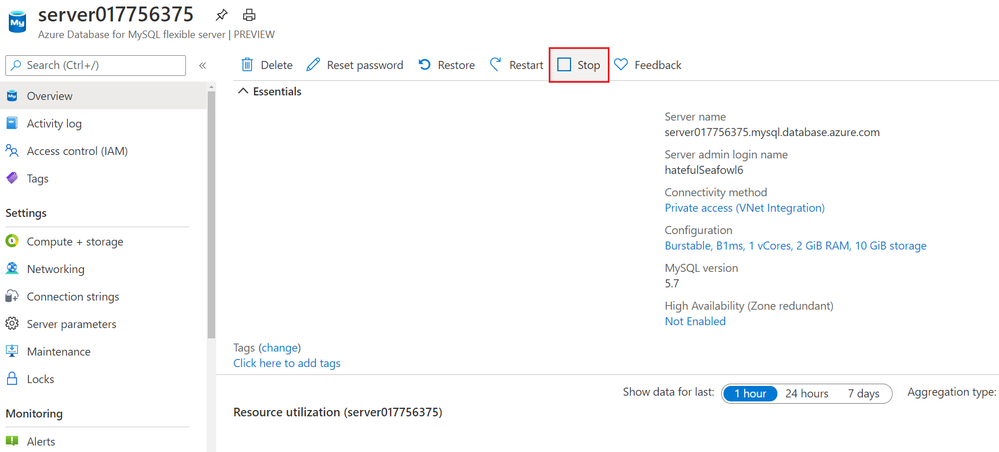

Stop your server when not in use to save cost!

This is another of the most requested features from many of you who are looking to save compute cost by simply stopping the server when it is not in use. See Server concepts for more details.

Stop your Flexible Server from the Overview blade in Azure portal


Build Zone resilient applications with Flexible Server

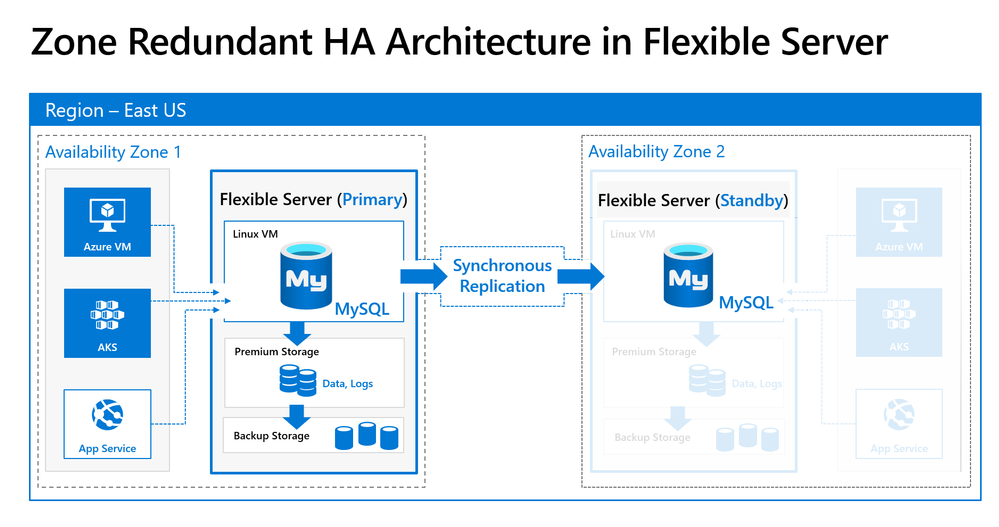

With Azure Kubernetes Service (AKS) or Virtual Machine Scale Sets, you can build and deploy zone-resilient applications that can tolerate zonal failures. With Flexible server on Azure Database for MySQL, you can now enable zone redundancy for your MySQL database server as well.

When you enable zone-redundant high availability for your MySQL server with Flexible server, the service provisions a hot standby server in the secondary availability zone with synchronous replication of data. In case of a zonal failure, the MySQL database server automatically fails over and brings the standby server in the secondary availability zone online, so that your applications and database remain highly available and fault tolerant to availability-zone-level failures. See high availability concepts for more details.

Zone Redundant HA using synchronous replication for data durability and high availability


Getting Started

You can quickly get started by creating your first server using the quickstarts in our documentation on docs.microsoft.com:

To learn more, you can read our Flexible server documentation for MySQL.

For any questions or suggestions you might have about working with Azure Database for MySQL, you can send an email to Ask Azure DB for MySQL. To provide feedback or request new features, we would appreciate it if you could make an entry via UserVoice, which helps us to prioritize.

Flexible server is available in preview on Azure Database for MySQL with no SLAs, and hence is not meant for production deployments yet. The Single Server deployment option continues to be our enterprise-ready platform, supporting mission-critical applications and services, as I shared in my last service update.

To help you compare Single server and Flexible server for Azure Database for MySQL so you can figure out which deployment option is right for you, we’ve created a handy feature comparison matrix for you in our documentation.


Abstract

A common misunderstanding about Azure services is that they can be used only with native Azure offerings. This is not true, and it is surprising how easily people arrive at this perception. Azure CDN is no exception. In fact, Azure CDN can be used with Azure services, with other cloud vendors, and even with on-premises workloads.

The common question I get is, “If I have Azure VM hosted website, how can I use Azure CDN on top of it? I am not using/ don’t want to use Azure blob storage. Can I still use CDN for files sources from web application? If yes, how?”

In this article, we will explore how you can use Azure CDN with a sample website hosted in IIS on an Azure VM, and then check the performance of the website using the Vegeta Attack load test approach I recently wrote about.

Let’s cache!

Origin for Azure CDN

We should be clear about what the origin for the CDN deployment is going to be. The origin is simply the source location from which the CDN picks up publicly available static files and content and caches them for faster access. In our case, the origin is going to be an Azure VM with one static image that we will access through the CDN. By default, Azure CDN can use the following native Azure services as an origin –

- Azure Storage

- Azure App Services – web app

- Azure Cloud Service – Web role deployments

Anything beyond the above list must be a “Custom Origin”. A sample list of custom origins is as follows –

Public/ Internet facing content from –

- On-premises hosted applications

- Azure VM hosted application

- AWS hosted applications.

- GCP hosted applications

And so on.

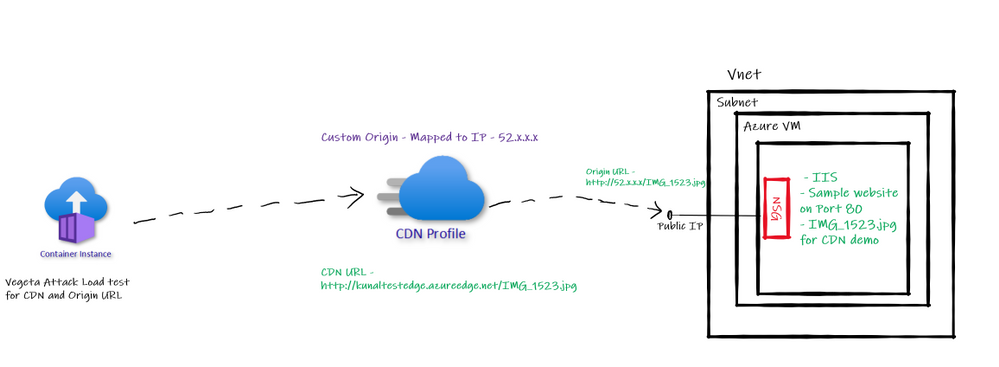

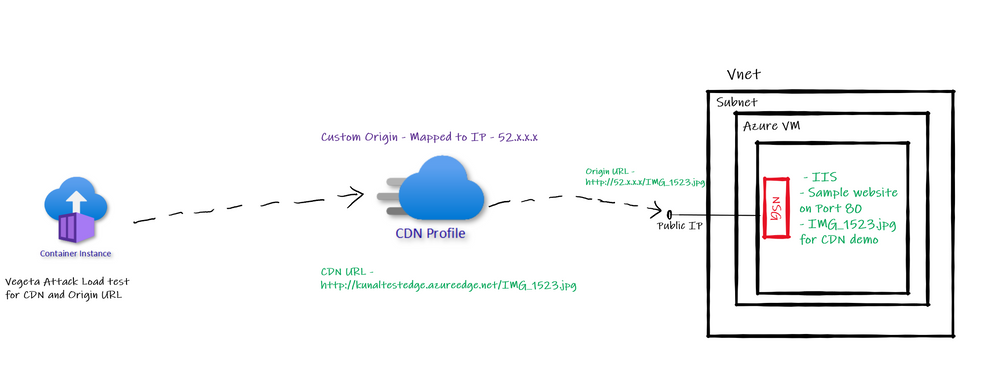

Architecture of implementation

With above understanding of custom origin for Azure CDN let us have a look at the architecture we are trying to implement as a part of this article.

Custom Origin Architecture


So, we have one Azure VM with the IIS default website running on port 80. We will configure the public IP of this Azure VM as the “Custom Origin” in Azure CDN. Then we will run a Vegeta Attack load test against the origin-based URL and the CDN-based URL to see the difference in performance in terms of latency and so on.

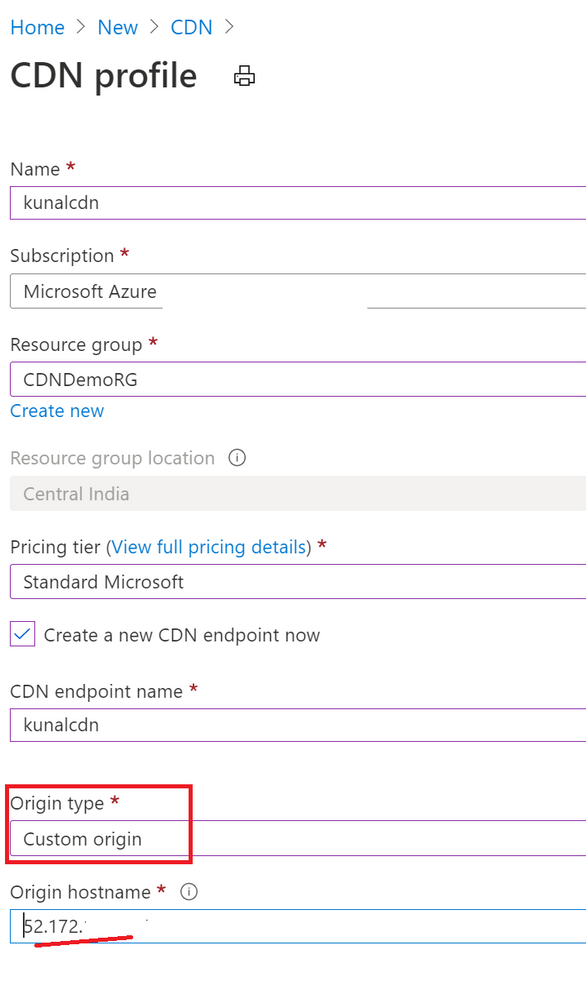

Creating Azure CDN with Custom Origin

I have already created the Azure VM, subnet, and VNET, configured the IIS default website along with a public IP, and added the sample image we plan to access through the CDN. While creating Azure CDN, make sure you select the “Custom Origin” option and provide the public IP of the website, which we use for accessing it over the internet. Refer below –

Notice the screenshot below. I have not added an “Origin Path” value and kept it empty. Similarly, the host header is empty. When I had added an “Origin Path” value, the Azure CDN URL was returning a 404 error. I removed it and it worked.

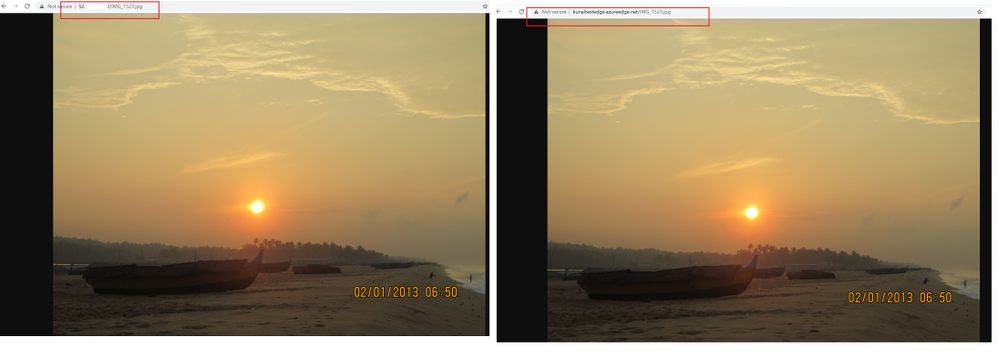

After this configuration, if I access the CDN URL, I see the image being loaded. Similarly, if I access the origin URL, the same image is loaded. Refer below –

Vegeta Attack on Azure CDN and Origin URL

I am using Vegeta Attack with Azure Container Instances to generate load against both URLs – CDN and Origin URL and below are the results –

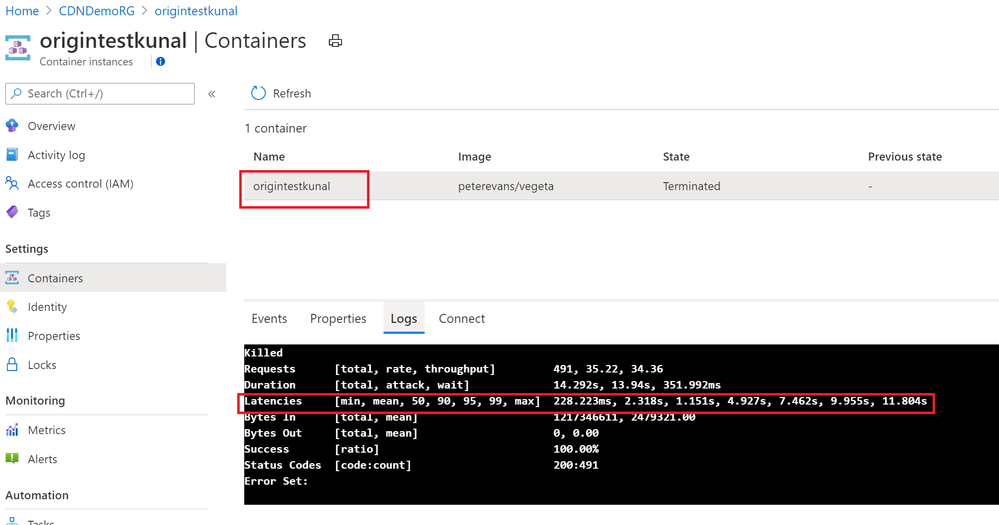

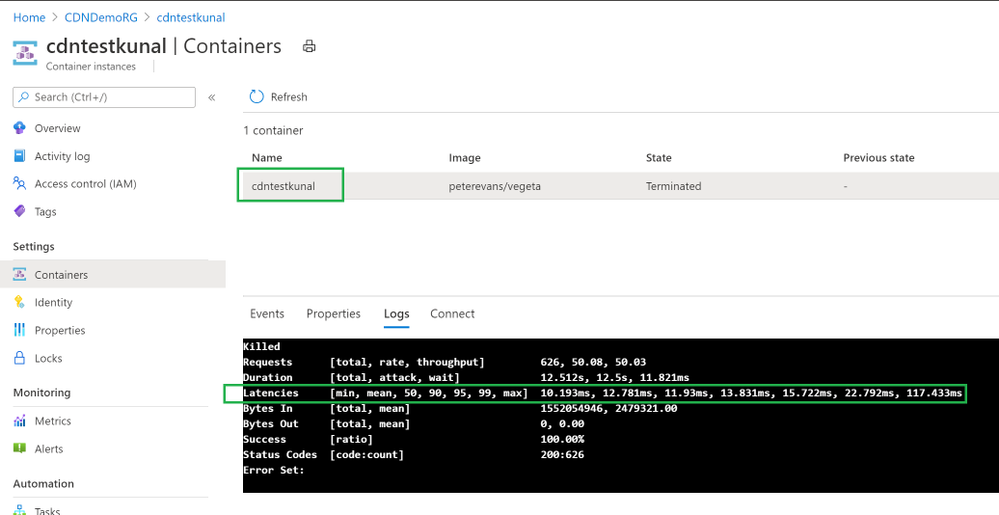

Origin Test –

CDN Test –

Clearly, the CDN-based responses are the winner! CDN-based responses do not even cross 1 second, whereas the origin URL sometimes goes up to 11 seconds.

Azure CDN with Azure File Storage

This is another common question I get for Azure CDN.

“Can I use Azure Files for Azure CDN”?

Answer is: Yes, you can.

However, there is a catch. There is always a catch.

Azure Files is an SMB file share service that can’t be accessed directly over the internet. It requires “Authentication”. If you access the Azure Files base URL, there is no way to perform the “authentication” needed to access the content hosted in Azure Files.

So, when you want to access Azure Files content from Azure CDN, you need to take care of authentication while calling from Azure CDN. Therefore, unlike a public Azure Blob container, which Azure CDN can use directly, Azure Files can’t be accessed directly.

To cater to the authentication requirement of Azure Files, you need to use a web application. So, create a web application, use Azure Files authentication inside it to allow access to the Azure file share contents, and then refer to those contents in Azure CDN. Of course, you will have to use a “Custom Origin” in this case, mapped to the web application domain or IP address.

Testing Hops

Back in 2011 I wrote two articles on CDN basics – here and here.

One of the articles talks about Hops used for reaching to destination and this test is still valid to learn performance improvement from CDN while using Custom Origin. This is also a very good option to showcase CDN performance boost.

Conclusion

I hope this article gave you a good understanding of how to use Azure CDN with other backends using the “Custom Origin” feature.


Azure Security Center has a built-in export capability called continuous export, which helps you export security alerts and recommendations to Azure Log Analytics and/or Azure Event Hubs as soon as they are generated. Unfortunately, today there is no built-in capability to continuously export resource exemptions as well. Resource exemptions are a great capability to granularly tune the set of recommendations that apply to your environment without having to completely disable the underlying security policy. But with great power comes great responsibility, and so customers have been asking for a way to be notified once a new resource exemption is created. This is where our latest automation artifact comes into play, which offers the following benefits:

- Logic App integration with Office 365 enables you to send notification emails

- Data can be exported to any service, including Log Analytics, EventHub, Service Now, and others

- The playbook is triggered just in time, instead of using a regular cadence

Notify about new resource exemptions

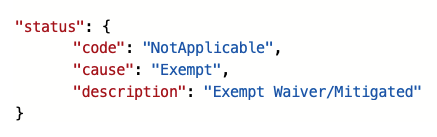

In my previous article, you learned that once a new resource exemption is created, the status of the underlying security assessment is changed, and it will contain the following properties.status values:

Figure 1 – status code and cause


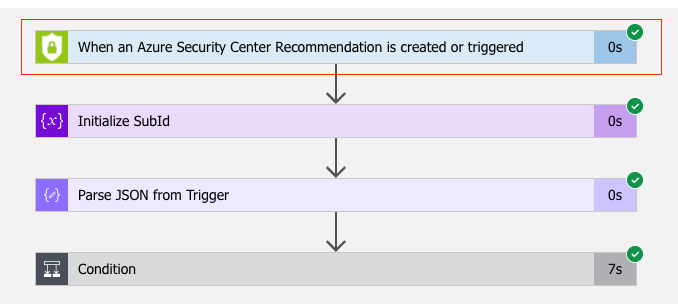

There is another capability in Azure Security Center called workflow automation, which allows you to automatically respond to alerts and recommendations. With workflow automation there comes one Logic App trigger type called When an Azure Security Center Recommendation is created or triggered. With this trigger type, your Logic App will run every time there is a new recommendation, or when the status of an assessment changes. That makes it a perfect fit for our purpose. Once the Logic App is triggered, I calculate the subscription ID from the assessment ID that is passed from workflow automation for further use later in the automation.

Figure 2 – Workflow Automation Trigger


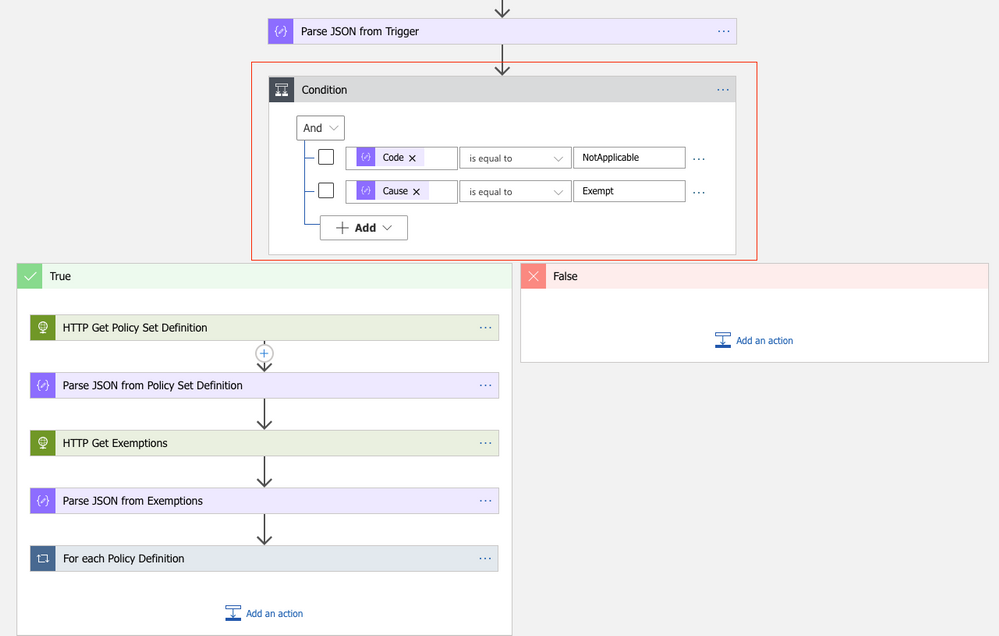
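In the playbook itself, the subscription ID is calculated with a Logic App expression. The same idea can be sketched in Python as follows; the function name and the sample assessment ID are made up for illustration and are not taken from the playbook.

```python
def subscription_id_from_resource_id(resource_id: str) -> str:
    """Return the segment that follows 'subscriptions' in an Azure resource ID."""
    parts = [p for p in resource_id.split("/") if p]
    for index, part in enumerate(parts[:-1]):
        # Azure resource IDs are treated as case-insensitive here.
        if part.lower() == "subscriptions":
            return parts[index + 1]
    raise ValueError(f"No subscription segment found in: {resource_id}")


if __name__ == "__main__":
    # Illustrative assessment ID with placeholder values.
    assessment_id = (
        "/subscriptions/00000000-0000-0000-0000-000000000000"
        "/resourceGroups/my-rg/providers/Microsoft.Compute/virtualMachines/my-vm"
        "/providers/Microsoft.Security/assessments/11111111-1111-1111-1111-111111111111"
    )
    print(subscription_id_from_resource_id(assessment_id))
```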

Since the Logic App will run every time a new recommendation is created, or the status of an existing recommendation changes, we need to filter for only these cases when an actual resource exemption has been created. For this, I defined a condition (if…then) in which I make sure that only when properties.status.code is “NotApplicable” and properties.status.cause is “Exempt”, the actual logic is started.

Figure 3 – define a condition


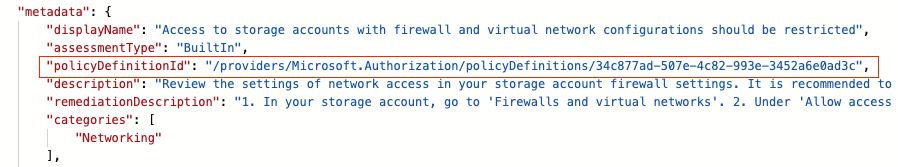
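Expressed outside of the Logic App designer, the condition is a simple check on the two status properties. Here is a minimal Python sketch, assuming the assessment arrives as a dictionary with the `properties.status` structure described above; the function name is hypothetical.

```python
def is_new_exemption(assessment: dict) -> bool:
    """True only when the assessment status indicates a resource exemption."""
    status = assessment.get("properties", {}).get("status", {})
    return status.get("code") == "NotApplicable" and status.get("cause") == "Exempt"


if __name__ == "__main__":
    exempted = {"properties": {"status": {"code": "NotApplicable", "cause": "Exempt"}}}
    unhealthy = {"properties": {"status": {"code": "Unhealthy"}}}
    print(is_new_exemption(exempted), is_new_exemption(unhealthy))
```

Every other status change, such as a recommendation turning unhealthy, falls through this check, so the rest of the workflow runs only for exemptions.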

What follows are two GET requests to the Microsoft.Authorization/policySetDefinitions and Microsoft.Authorization/policyExemptions APIs. The first call gets all information from the built-in Azure Security Center default initiative (PolicySetDefinition ID 1f3afdf9-d0c9-4c3d-847f-89da613e70a8), and the second gets all policy exemptions that have been created for the subscription with the ID calculated before. As explained in my previous article, resource exemption in Azure Security Center leverages the policy exemption feature. With workflow automation, we can react to assessment changes, but there’s no direct way to pass further information about the exemption itself. What we get is the resource ID the assessment applies to, as well as the policy definition ID in the metadata section:

Figure 4 – Policy definition ID the ASC assessment relies on


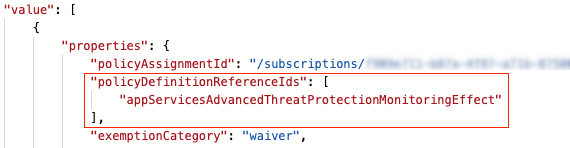
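For reference, the two GET requests go to Azure Resource Manager endpoints under the Microsoft.Authorization resource provider. The Python sketch below only builds the two URLs. The function names are mine, and the `api_version` value is deliberately left to the caller, because the version used by the playbook is not stated in this article.

```python
ARM_ENDPOINT = "https://management.azure.com"
# ID of the built-in Azure Security Center default initiative mentioned above.
ASC_DEFAULT_INITIATIVE = "1f3afdf9-d0c9-4c3d-847f-89da613e70a8"


def policy_set_definition_url(api_version: str,
                              name: str = ASC_DEFAULT_INITIATIVE) -> str:
    """URL of a built-in policy set definition (initiative)."""
    return (
        f"{ARM_ENDPOINT}/providers/Microsoft.Authorization"
        f"/policySetDefinitions/{name}?api-version={api_version}"
    )


def policy_exemptions_url(subscription_id: str, api_version: str) -> str:
    """URL that lists the policy exemptions of one subscription."""
    return (
        f"{ARM_ENDPOINT}/subscriptions/{subscription_id}"
        f"/providers/Microsoft.Authorization/policyExemptions"
        f"?api-version={api_version}"
    )


if __name__ == "__main__":
    # "<api-version>" is a placeholder; look up a supported version before calling.
    print(policy_set_definition_url("<api-version>"))
    print(policy_exemptions_url("00000000-0000-0000-0000-000000000000", "<api-version>"))
```

Both calls must be authenticated, which in the playbook is handled by the Logic App’s managed identity.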

Policy exemptions unfortunately do not contain the policy definition ID. That is because they do not apply to a policy definition, but to assignments. Good news is that a policy exemption contains a list of Policy Definition Reference IDs instead:

Figure 5 – Policy definition reference IDs in policy exemption


This is where the policy set definitions (aka initiative definitions) API comes into play. A policy set definition contains both policy definition IDs and policy definition reference IDs. So now, we can figure out which policy exemption has been created from ASC and, even better, who created it and when.

Following the workflow, the next step is to compare all policy definition IDs in the policy set definition with the policy definition ID that has been passed from ASC. If both IDs match, we take the corresponding policy definition reference ID and compare it to the IDs in all policy exemptions. If the IDs match, and if also the exemption ID begins with the resource ID that comes from the trigger, we know that we’ve found the policy exemption that has been created using the resource exemption feature in ASC.
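The matching logic described above can be sketched in Python as follows. This is not the Logic App definition itself. It assumes the two API responses have been parsed into dictionaries, with the initiative listing its policies under `properties.policyDefinitions` and each exemption listing its references under `properties.policyDefinitionReferenceIds`. The function name is hypothetical, and comparing IDs case-insensitively is my own choice.

```python
def find_matching_exemptions(policy_set: dict, exemptions: list,
                             policy_definition_id: str, resource_id: str) -> list:
    """Return the exemptions that belong to the given assessment.

    1. Map the policy definition ID from ASC to its reference ID(s) in the initiative.
    2. Keep exemptions that contain one of those reference IDs and whose own ID
       begins with the resource ID passed by the trigger.
    """
    wanted = policy_definition_id.lower()
    reference_ids = {
        entry["policyDefinitionReferenceId"].lower()
        for entry in policy_set["properties"]["policyDefinitions"]
        if entry["policyDefinitionId"].lower() == wanted
    }

    matches = []
    for exemption in exemptions:
        exemption_refs = {
            ref.lower()
            for ref in exemption["properties"].get("policyDefinitionReferenceIds", [])
        }
        on_resource = exemption["id"].lower().startswith(resource_id.lower())
        if exemption_refs & reference_ids and on_resource:
            matches.append(exemption)
    return matches
```

The second check matters because the same reference ID can appear in exemptions created for other resources in the subscription.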

What follows is an export of the policy exemption details to a Log Analytics workspace, as well as a notification email that is sent.

How to deploy the automation playbook

You can find an ARM template that will deploy the Logic App Playbook and all necessary API connections in the Azure Security Center GitHub repository, but you can also directly deploy all resources by clicking here. Once you have deployed the ARM template, you will have some manual steps to take before the tool will work as expected.

Make sure to authorize the Office 365 API connection

This API connection is used to send emails once a new resource exemption is created. To authorize the API connection:

- Go to the Resource Group you have used to deploy the template resources.

- Select the Office365 API connection and press ‘Edit API connection’.

- Press the ‘Authorize’ button.

- Make sure to authenticate against Azure AD.

- Press ‘save’.

Authorize the Logic App’s managed identity

The Logic App is using a system assigned managed identity to query information from the resource exemption and policy set definitions APIs. For this purpose, you need to grant it the reader RBAC role on the scope you want it to be used at. It is recommended to give it reader access on the tenant root management group, so it is able to query information for all subscriptions once relevant. To grant the managed identity reader access, you need to:

- Make sure you have User Access Administrator or Owner permissions for this scope.

- Go to the subscription/management group page.

- Press ‘Access Control (IAM)’ on the navigation bar.

- Press ‘+Add’ and ‘Add role assignment’.

- Choose ‘Reader’ role.

- Assign access to Logic App.

- Choose the subscription where the logic app was deployed.

- Choose the Logic App you have just deployed.

- Press ‘save’.

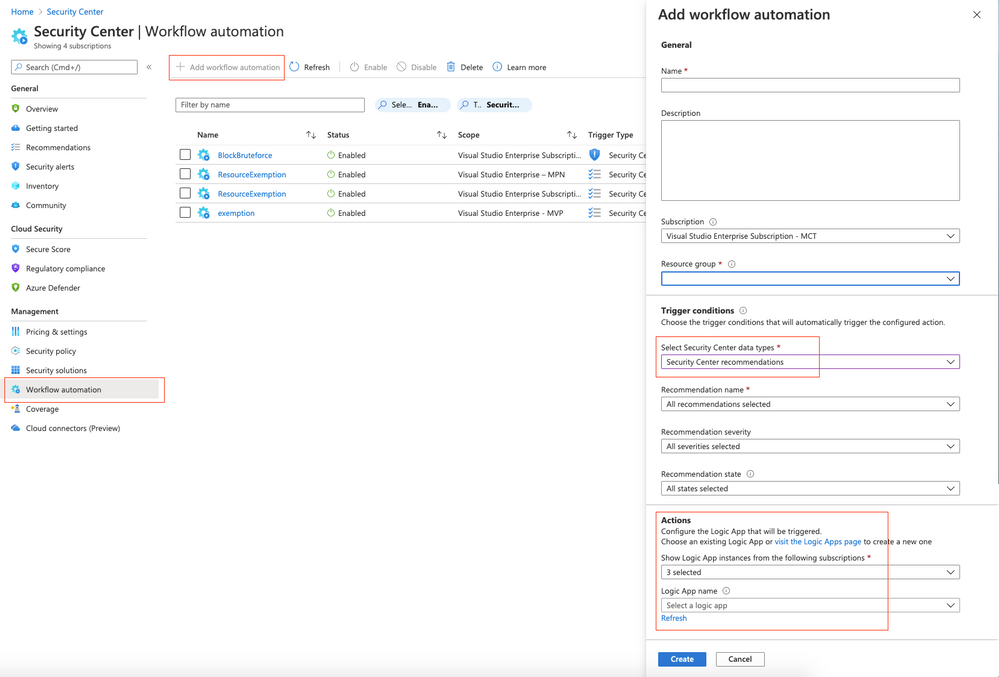

Create a new workflow within Azure Security Center

For the Logic App to be triggered automatically once an assessment is changed, you need to configure a new workflow within Azure Security Center. In Azure Security Center, you just need to navigate to the Workflow Automation control, select + Add workflow automation, and as a trigger condition, you select Security Center recommendations. In the Actions, you select the Logic App that you’ve just deployed.

Figure 6 – Add workflow in Azure Security Center


What’s next

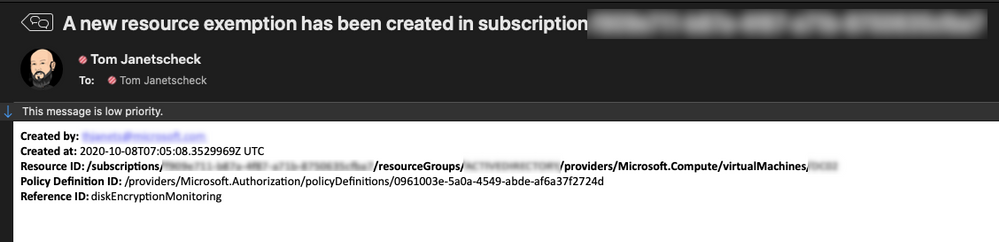

If you create a new resource exemption, the exemption information is now exported to the Log Analytics workspace you defined during your deployment. In addition, the recipient address(es) you defined during the deployment will receive a notification email with the following information:

- Created by

- Created at

- Resource ID

- Policy Definition ID

- Policy Definition Reference ID

Figure 7 – example email generated from the playbook


Now, go ahead, deploy the workflow in your environment, and let us know what you think by commenting on this article.

Acknowledgements

Thanks to Miri Landau, Senior Program Manager in the Azure Security Center engineering team for reviewing this article.
