
Guest blog by the Computer Vision Recipes creators – Patrick Buehler (Principal Data Scientist at Microsoft; PhD in Computer Vision / Machine Learning with Prof. Andrew Zisserman at Oxford), JS (Software Engineer at Microsoft), and Young Park (Software Engineer at Microsoft).

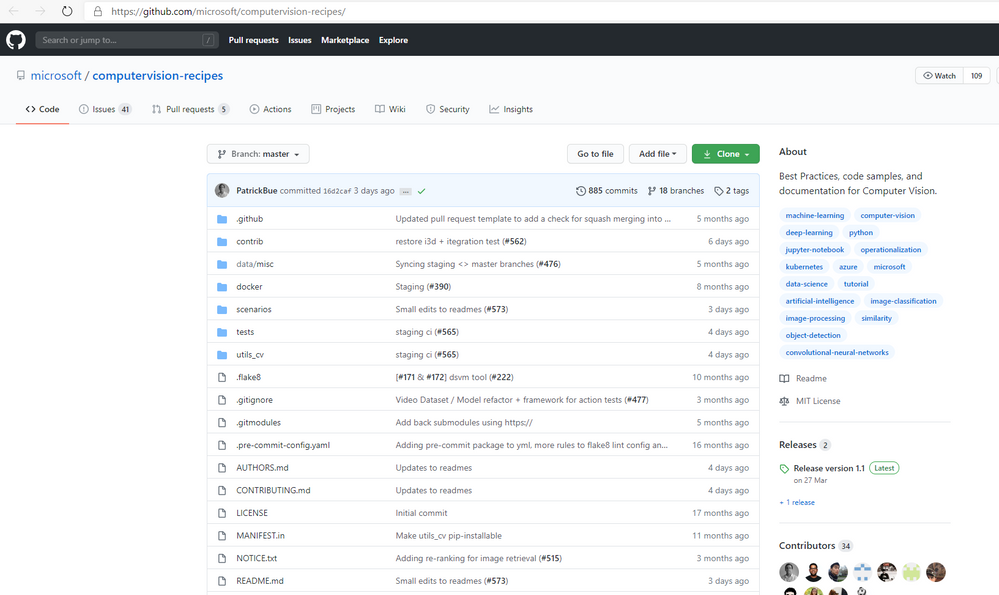

The open-source Computer Vision Recipes repository.

The repository supports various scenarios, including classification, retrieval, segmentation, detection, tracking, and action recognition as shown in the figure below.

Introducing the Computer Vision Recipes repository

Creating a computer vision model can be daunting and time-consuming for beginners and experts alike. Popular libraries such as Torchvision or OpenCV provide implementations for various models and can be great resources for seasoned researchers. However, for software engineers or data scientists who are less experienced in computer vision, building a high-performing model can still be extremely challenging, even with these libraries.

To help jumpstart people on their computer vision tasks, we’ve created the Computer Vision Recipes repository:

http://github.com/microsoft/computervision-recipes

Our goal for the project is to provide ‘recipes’ for different scenarios based on popular libraries or research implementations so that high-performing models can be trained and evaluated with just a few lines of code. In addition, we guide users to avoid common pitfalls when training their models, propose tried-and-tested parameters that we found to work well for many datasets, and help users train, improve, visualize, and deploy their own custom models.

Using this repository, expert users can quickly build high-performing baseline models for a wide variety of computer vision solutions.

Computer vision novices will be able to build state-of-the-art models on their own datasets without getting bogged down by implementation details.

The library builds on top of PyTorch, is easy to install, uses a single conda environment for all scenarios, is covered by daily unit and integration tests, and includes code examples with documentation in the form of Jupyter notebooks.

The repository currently supports the following scenarios:

- Object detection

- Image classification

- Image similarity

- Image segmentation

- Object tracking

- Action recognition

Supported scenarios of the Computer Vision Recipes repository

Recognizing actions in video example

Step-by-step tutorial on object detection

We will now walk through how to build, train, and evaluate an object detection model in just a few lines of code using the open-source Computer Vision Recipes repository.

All supported scenarios in the repository follow implementation steps similar to this example. Under the hood, the object detection model uses Torchvision’s excellent implementation of the Mask R-CNN model. All code examples are taken from the 01_training_introduction.ipynb notebook, which introduces model training and evaluation. Other notebooks, which cover more advanced topics such as model deployment or hard-negative mining, can be found in the repository.

Step 0. Installing the repository

Installation is as easy as downloading the repository and creating a new conda environment using the commands below. For more information see the setup instructions.

git clone https://github.com/Microsoft/computervision-recipes

cd computervision-recipes

conda env create -f environment.yml

conda activate cv

python -m ipykernel install --user --name cv --display-name "cv"

Step 1. Loading images

For object detection, CV recipes comes with a DetectionDataset class which accepts any data in the PASCAL VOC format, i.e. each image has an associated XML annotation file following this format:

# Images and annotation XML files in separate folders:

/data

+-- images

| +-- image1.jpg

| +-- image2.jpg

| +-- ...

+-- annotations

| +-- image1.xml

| +-- image2.xml

| +-- ...
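To make the annotation format more concrete, here is a minimal sketch that reads one PASCAL VOC-style XML file using only Python's standard library. The sample XML, the label names, and the `parse_voc_boxes` helper are illustrative assumptions and not part of the repository; `DetectionDataset` takes care of this parsing for you.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation for image1.jpg, following the PASCAL VOC layout.
SAMPLE_XML = """
<annotation>
  <filename>image1.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>can</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>110</xmax><ymax>220</ymax></bndbox>
  </object>
  <object>
    <name>carton</name>
    <bndbox><xmin>300</xmin><ymin>50</ymin><xmax>400</xmax><ymax>250</ymax></bndbox>
  </object>
</annotation>
"""


def parse_voc_boxes(xml_text):
    """Return (filename, [(label, xmin, ymin, xmax, ymax), ...]) for one annotation."""
    root = ET.fromstring(xml_text.strip())
    filename = root.findtext("filename")
    boxes = []
    for obj in root.iter("object"):
        label = obj.findtext("name")
        bndbox = obj.find("bndbox")
        # Box corners are stored as pixel coordinates in four child elements.
        coords = tuple(
            int(bndbox.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((label,) + coords)
    return filename, boxes


filename, boxes = parse_voc_boxes(SAMPLE_XML)
print(filename, boxes)
```

Each `object` element contributes one labeled box, so an image with several objects simply has several `object` entries in its XML file.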

We create an instance of the DetectionDataset class by passing it the path to our data and annotations.

from pathlib import Path

from utils_cv.detection.dataset import DetectionDataset

my_datapath = Path("path/to/my/images")

data = DetectionDataset(my_datapath, train_pct=0.75)
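The `train_pct` argument presumably controls what fraction of the images goes into the training set, with the rest held out for testing. The sketch below illustrates that idea with a hypothetical `split_train_test` helper; it is not the repository's implementation, and the seed and rounding behavior are assumptions made for the example.

```python
import random


def split_train_test(items, train_pct=0.75, seed=0):
    """Shuffle items reproducibly and split them into train and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_pct)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical image names, for illustration only.
image_names = [f"image{i}.jpg" for i in range(1, 9)]
train, test = split_train_test(image_names, train_pct=0.75)
print(len(train), len(test))  # 6 2
```

Fixing the seed keeps the split reproducible, which makes evaluation results comparable between runs.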

Once it’s loaded, we can simply call show_ims() to check that the dataset was loaded correctly.

data.show_ims()

In addition, we can inspect how many ground-truth annotations the dataset contains and what their sizes are, using the command:

data.plot_boxes_stats()
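As a rough illustration of what such statistics capture, the sketch below summarizes the count and sizes of a few ground-truth boxes. The box values and the `box_size_stats` helper are hypothetical and only stand in for the plots produced by the repository.

```python
from statistics import mean

# Hypothetical ground-truth boxes as (xmin, ymin, xmax, ymax) in pixels.
SAMPLE_BOXES = [(10, 20, 110, 220), (300, 50, 400, 250), (0, 0, 50, 40)]


def box_size_stats(boxes):
    """Summarize how many boxes there are and how large they are."""
    widths = [xmax - xmin for xmin, _, xmax, _ in boxes]
    heights = [ymax - ymin for _, ymin, _, ymax in boxes]
    areas = [w * h for w, h in zip(widths, heights)]
    return {
        "count": len(boxes),
        "mean_width": mean(widths),
        "mean_height": mean(heights),
        "min_area": min(areas),
        "max_area": max(areas),
    }


print(box_size_stats(SAMPLE_BOXES))
```

Numbers like these help spot problems early, for example boxes that are too small to be detected at the chosen image size.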

Step 2. Training your model

Training a new model can be done using the fit() function of the DetectionLearner class. Because finding good parameters to train a deep neural network can be a challenge, we’ve provided good default parameters, which we found by testing our implementation on multiple datasets. Below we’ll show you how you can specify the image size, the number of epochs to train for, and the learning rate.

from utils_cv.detection.model import DetectionLearner

detector = DetectionLearner(data, im_size=500)

detector.fit(10, lr=0.001)

During training, the loss should decrease with each epoch while the accuracy increases.

Step 3. Evaluating your model

Next, we’ll want to evaluate the model we just fine-tuned to see how it performs on our test dataset. We do this using a precision-recall curve, and we also compute the average precision (not shown).

from utils_cv.detection.plot import plot_pr_curves

e = detector.evaluate()

plot_pr_curves(e)
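For readers new to these metrics, here is a minimal sketch of how precision, recall, and average precision can be computed from scored detections on a single image. It is not the repository's evaluator: the helper names, the 0.5 IoU threshold, the simplified greedy matching, and the sample boxes are all assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def precision_recall(detections, ground_truth, iou_threshold=0.5):
    """Return (precision, recall) after each detection, highest score first.

    detections: list of (score, box); ground_truth: non-empty list of boxes.
    Each ground-truth box can be matched by at most one detection.
    """
    matched, tp, fp, points = set(), 0, 0, []
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        # Find the best-overlapping ground-truth box that is still unmatched.
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            overlap = iou(box, gt)
            if idx not in matched and overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            tp += 1
        else:
            fp += 1
        points.append((tp / (tp + fp), tp / len(ground_truth)))
    return points


def average_precision(points):
    """Area under the precision-recall curve, using the precision envelope."""
    ap, prev_recall = 0.0, 0.0
    for i, (_, recall) in enumerate(points):
        best_precision = max(p for p, _ in points[i:])
        ap += (recall - prev_recall) * best_precision
        prev_recall = recall
    return ap


# Hypothetical ground truth and detections for one image.
ground_truth = [(0, 0, 100, 100), (200, 200, 300, 300)]
detections = [
    (0.9, (0, 0, 100, 100)),      # exact match with the first box
    (0.8, (500, 500, 600, 600)),  # overlaps nothing: false positive
    (0.7, (210, 210, 300, 300)),  # IoU 0.81 with the second box
]
points = precision_recall(detections, ground_truth)
print(points)
print(average_precision(points))
```

Lowering the score cut-off moves along the curve: recall can only go up, while precision drops whenever a false positive is admitted.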

Step 4. Predicting using your model

The last step is to make a prediction on a new image. Using the DetectionLearner, that’s as simple as calling predict().

from utils_cv.detection.plot import plot_detections

detections = detector.predict("new/image/to/predict.jpg")

plot_detections(detections)

Step 5. Saving your model

Once you’re happy with the results, you can save your model to a .pth file and load it again later:

detector.save("my_drink_detector")

Conclusion

In this article, we have introduced the Computer Vision Recipes repository and shown how easy it is to use. Using an object detection tutorial, we demonstrated the steps that a wide variety of computer vision solutions in the repository follow. Feel free to visit our repository, where we provide plenty of Jupyter notebooks to walk you through each of the scenarios.
