Microsoft Copilot brings generative help and guidance into Dynamics 365 Supply Chain Management


Introduction:

In the ever-evolving landscape of business operations, Dynamics 365 Supply Chain Management has been a trusted companion for organizations, offering a robust suite of features to streamline processes. However, such rich functionality comes with a learning curve, and users often need guidance to harness the full potential of the software. Enter the innovative in-app help and guidance powered by Microsoft Copilot, which is set to transform the user experience.

Unleashing the Power of Dynamics 365 Supply Chain Management:

Dynamics 365 Supply Chain Management provides a wide array of tools to support diverse business processes. Yet, mastering this complexity often necessitates training and hands-on experience. Traditionally, users relied on experts, partners, or Microsoft support for guidance. But now, a revolutionary solution is at hand.

Introducing Copilot’s capability for In-App Help Guidance:

Copilot brings AI-driven capabilities into Dynamics 365 Supply Chain Management. One powerful capability delivers contextual help and guidance right into the application. This capability has arrived in public preview.

Here’s a closer look at how it functions:

Harnessing Public Documentation

The Copilot capability for help and guidance is grounded in the extensive repository of public documentation on Dynamics 365 Supply Chain Management features. It uses generative AI to craft precise responses to user queries based on its understanding of that documentation.

Contextual Assistance

Users can engage with Copilot conversationally without leaving the application. They can ask questions related to their current context, and Copilot, drawing on the documentation, provides tailored, context-aware guidance.

In the future, it will facilitate direct navigation to specific pages within the application and suggest relevant actions to complete users’ tasks.

Real-World Applications:

Copilot’s in-app help and guidance is valuable in a variety of scenarios. For instance:

- Onboarding New Users: As new business users join, they often require assistance in understanding the application’s functionality and workflows. Copilot can answer questions like, “How do I navigate the procurement process?” or “What steps are involved in inventory management?”

- Immediate Problem Solving: Users can seek instant solutions to challenges they encounter while using Dynamics 365 Supply Chain Management. Whether it’s configuring settings or troubleshooting issues, Copilot is there to help.

- Reducing Training Costs: Small and medium-sized enterprises often invest heavily in training their workforce on new ERP systems. The in-app help guidance feature minimizes training expenses by enabling users to rapidly familiarize themselves with the software.

Step-by-Step Experience:

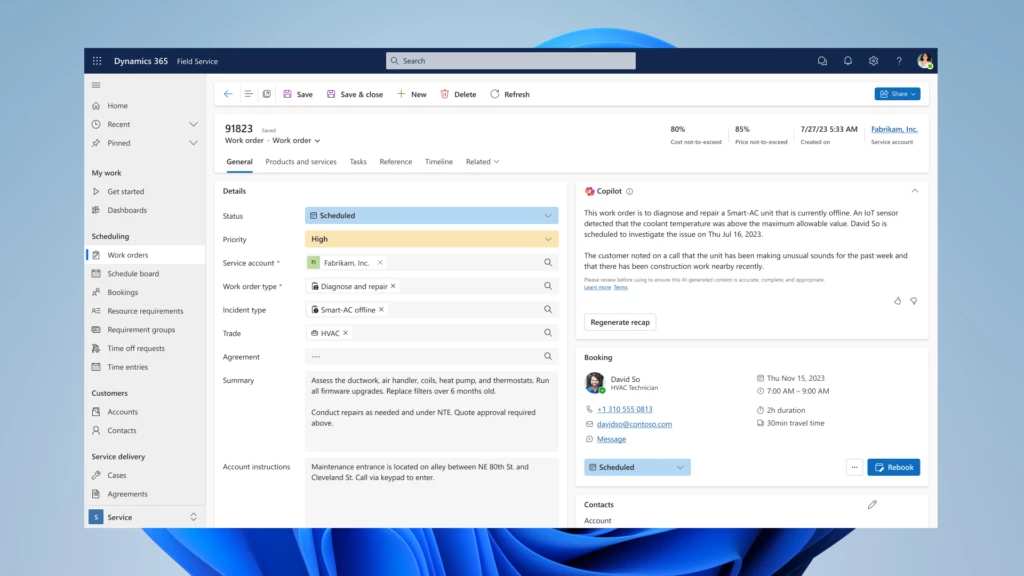

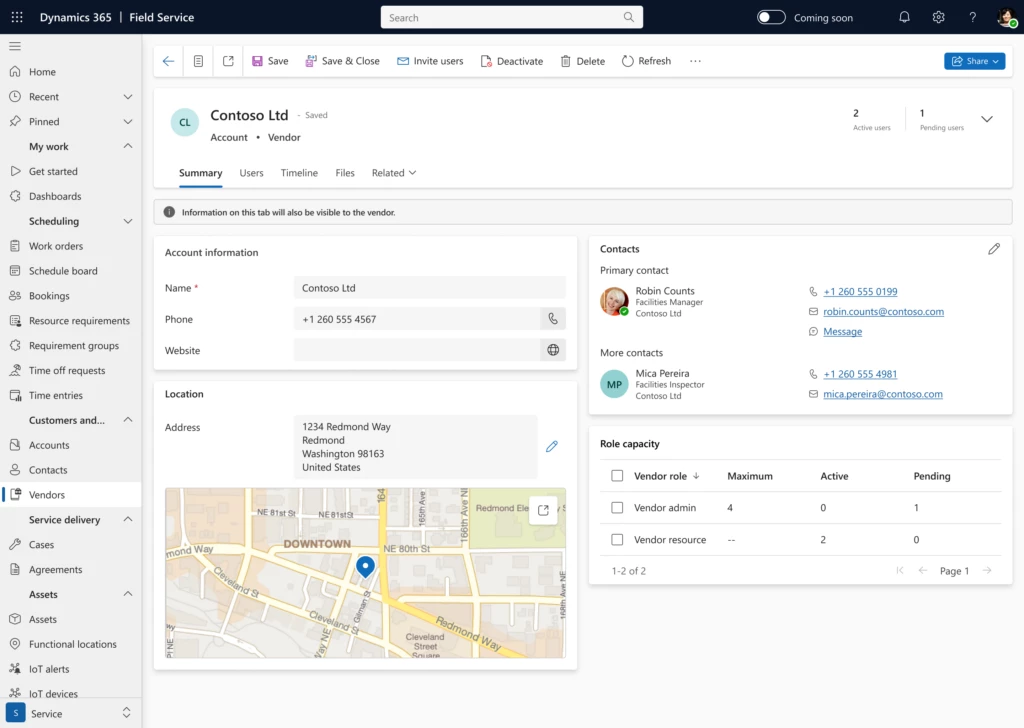

Let’s take a journey with Alice, a Purchaser who is new to Dynamics 365 Supply Chain Management. Alice is eager to initiate the creation of a purchase agreement with a vendor but is feeling uncertain about the process. Fortunately, Copilot is here to assist her every step of the way, thanks to its generative help feature.

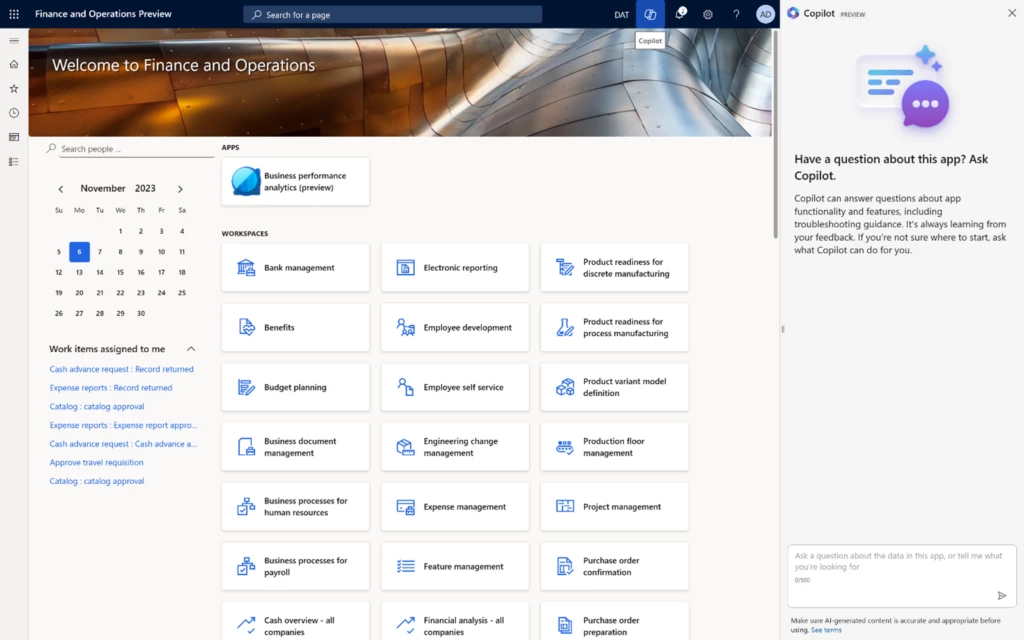

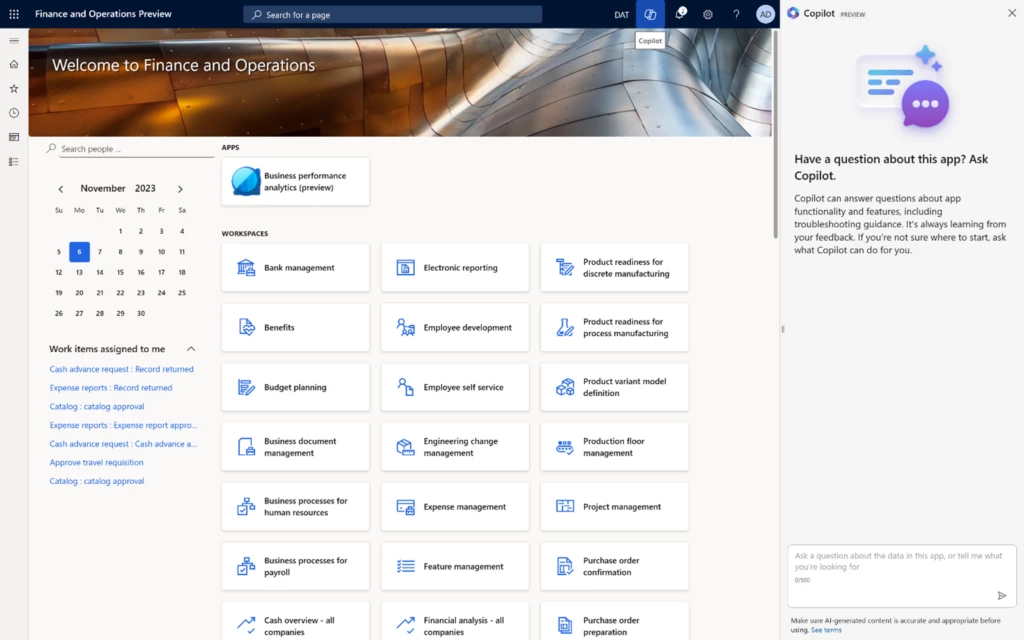

Step 1: Access Copilot

To begin her journey, Alice simply looks for the Copilot icon at the top of the screen. Clicking it opens the conversational sidecar experience.

Copilot introduces itself warmly and encourages users, like Alice, to ask any questions they may have.

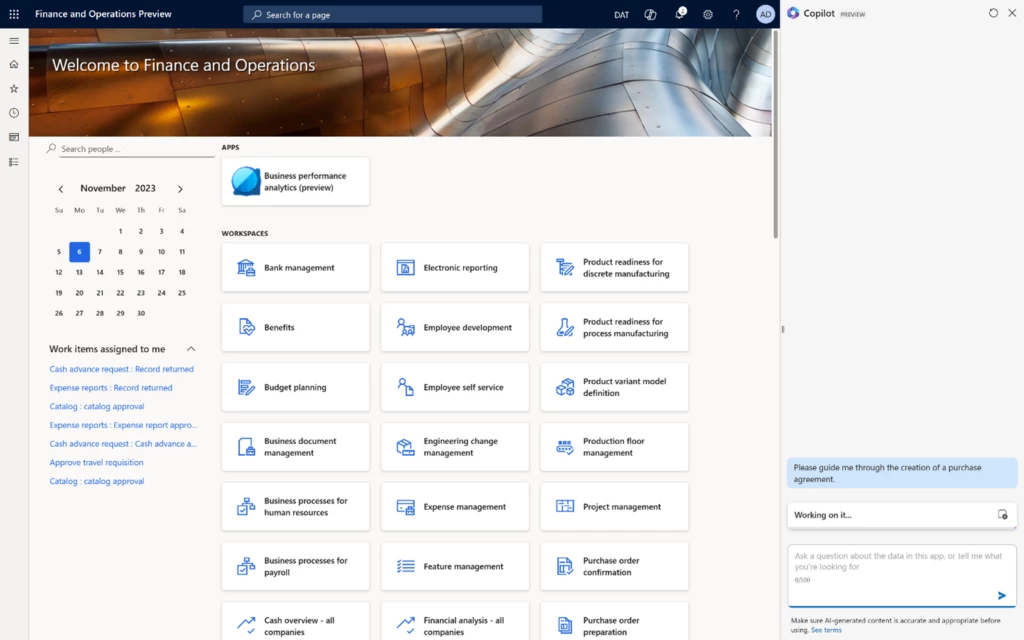

Step 2: Pose Your Question

Because creating a purchase agreement is an unfamiliar task for her, Alice simply types her question into the Copilot chat experience.

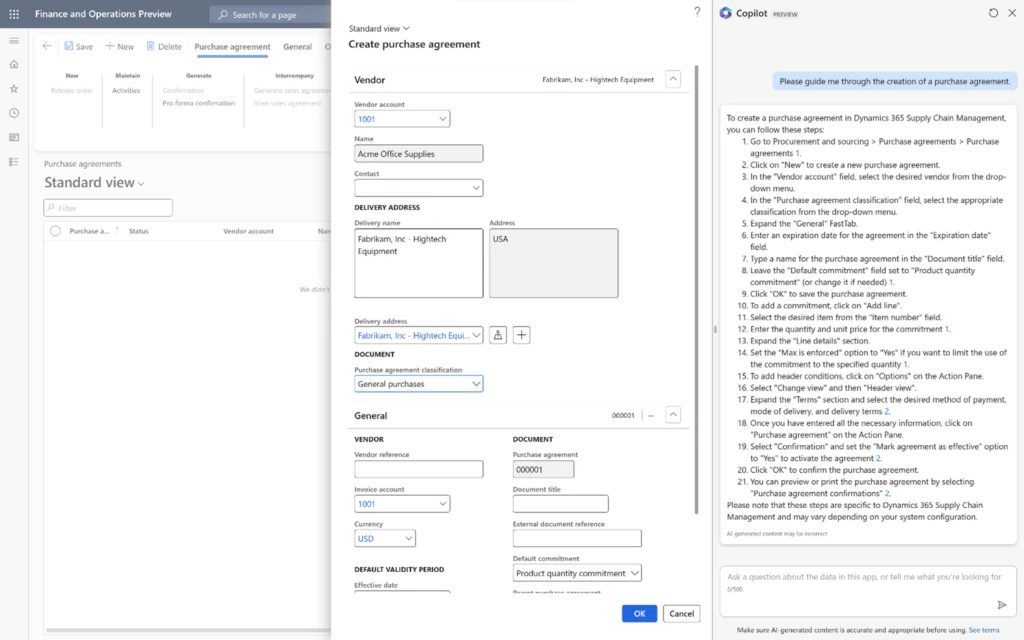

Step 3: Instant Guidance

Like a trusted companion, Copilot responds promptly, providing Alice with clear, step-by-step guidance. With Copilot’s help, Alice navigates through the process seamlessly, ensuring she doesn’t miss a single detail.

Watch the demonstration videos below to see Copilot in action:

In the first animation, Alice, a novice user, uses Copilot’s in-app help and guidance to get started with the Dynamics 365 Supply Chain Management application.

In the second example, a more experienced user relies on Copilot to tackle complex tasks efficiently, with confidence and ease.

The Benefits Unveiled

The advantages of Copilot’s in-app help guidance are numerous:

- Cost-Efficiency: By reducing the need for extensive training, organizations can save significantly on training costs.

- Enhanced User Experience: Users gain immediate access to accurate information, enhancing their overall experience with Dynamics 365 Supply Chain Management.

- Improved Productivity: With real-time answers to questions, users can work more efficiently and effectively.

Responsible AI

We are committed to responsible AI practices. Here’s how Copilot ensures responsible AI usage:

- Data Privacy: Copilot follows strict data encryption and secure storage protocols to protect user data.

- Content Moderation: Messages generated by Copilot undergo a series of checks to ensure relevance and prevent harmful content.

- Responsible AI Principles: Our work is guided by principles such as fairness, reliability, privacy, transparency, and accountability.

The future of Copilot in Dynamics 365 Supply Chain Management

This section provides a glimpse into the expanding capabilities of Copilot, where it becomes a valuable partner for users, offering guidance, insights, and seamless navigation within the application.

- Copilot will go beyond providing answers; it will help users navigate within our product, making it an indispensable tool for efficiency.

- Users can inquire about data, processes, and status, and even act directly from the Copilot conversation.

- As part of generative help and guidance, Copilot will suggest the next best action, that is, what users are most likely to do next.

As we look into the future, we are excited to share a demo video that offers a firsthand look at Copilot’s evolving capabilities in Dynamics 365 Supply Chain Management.

Conclusion:

In conclusion, Copilot with its in-app help and guidance in Dynamics 365 Supply Chain Management represents a significant step in enhancing the user experience. It bridges the gap between complexity and ease of use, enabling users to unlock the full potential of our product. While we acknowledge that we are in the early stages of this journey with basic capabilities, we are committed to continuous improvement. As organizations embrace this feature, they can begin to reduce training costs and empower their workforce. Copilot in-app help and guidance simplifies tasks and provides instant assistance, helping users make the most of Dynamics 365 Supply Chain Management. We are dedicated to enhancing these capabilities further so that users can navigate our product more efficiently.

Learn More

Interested in learning more about Copilot’s in-app help guidance? Here are your next steps:

Read the Copilot Product Documentation:

For comprehensive and detailed information about Copilot’s capabilities and functionality, be sure to check out our product documentation. You’ll find in-depth insights into how Copilot can enhance your experience with Dynamics 365 Supply Chain Management.

Read the Responsible AI FAQ for Copilot and its generative help and guidance capability.

Experience Copilot in action (For Existing Dynamics 365 Supply Chain Management Customers):

If you’re already using Dynamics 365 Supply Chain Management, you can enable and experience Copilot’s capabilities to streamline your operations. Here’s how:

- Step 1: Enable Copilot Feature: Follow our documentation for existing customers to learn how to enable this feature. Once enabled, you’ll have access to Copilot’s powerful in-app help guidance within Dynamics 365 Supply Chain Management.

- Step 2: Access Copilot: Locate the Copilot icon at the top of your screen within Dynamics 365 Supply Chain Management, then click it to open the conversational sidecar experience. Copilot will introduce itself and encourage you to ask questions.

- Step 3: Pose Your Question: Whenever you face an unfamiliar or challenging task in the application, ask Copilot for guidance. For better results, especially when seeking documentation-related in-app help, consider starting your questions with “How.”

- Step 4: Instant Guidance: Copilot will provide you with step-by-step guidance; all responses are grounded in our public documentation.

Please note that Copilot’s capabilities are exclusively available to existing Dynamics 365 Supply Chain Management customers. If you’re one of them, don’t miss out on the opportunity to enhance your user experience and streamline your operations with Copilot.

Join Copilot for finance and operations apps – Yammer

Stay informed about the most recent Copilot updates by becoming a member of our Copilot for finance and operations Yammer group. Share your feedback and be the first to know about the latest enhancements.

The post Microsoft Copilot brings generative help and guidance into Dynamics 365 Supply Chain Management appeared first on Microsoft Dynamics 365 Blog.
