by Contributed | Apr 16, 2021 | Technology

This article is contributed. See the original author and article here.

Surface tools that assist IT admins with core security, management, and diagnostic tasks are now updated with support for the latest enterprise devices. These IT tools include:

- Surface Enterprise Management Mode. Allows you to secure and manage firmware settings within your organization. Notably, customers can now use Surface Enterprise Management Mode to enable and disable hardware components and UEFI on Surface Laptop 4. This release also adds support for simultaneous multithreading and improved MSI signing across all devices.

- Surface Diagnostic Tool for Business. Eases the support experience through Surface Diagnostics. Provides a full suite of diagnostic tests and software repairs to quickly investigate, troubleshoot, and resolve hardware, software, and firmware issues with Surface devices. This latest update lets you quickly determine whether the original in-box SSD has been replaced.

- Surface Data Eraser. With the all-new and improved Surface Data Eraser, we re-engineered how the tool works to provide a seamless experience that helps you secure your devices through data erasure.

- Surface Deployment Accelerator. Support for building your own Surface images for Surface Laptop 4 will be available soon.

Built-in support

In addition, the latest versions of Surface Brightness Control and Surface Asset Tag already have built-in support for Surface Laptop 4.

Download

You can download these and other tools from Surface Tools for IT, available on the Microsoft Download Center. Surface Laptop 4 and Surface Headphones 2+ are available for purchase now.

by Contributed | Apr 15, 2021 | Technology

Azure Sphere OS version 21.04 is now available for evaluation in the Retail Eval feed. The retail evaluation period provides 14 days for backwards compatibility testing. During this time, please verify that your applications and devices operate properly with this release before it is deployed broadly via the Retail feed. The Retail feed will continue to deliver OS version 21.03 until we publish 21.04 in two weeks. For more information on retail evaluation see our blog post, The most important testing you’ll do: Azure Sphere Retail Evaluation.

This evaluation release includes an OS update only; it does not include an updated SDK.

Notes about this release

- Areas of special focus for compatibility testing with 21.04 should include apps and functionality that use:

  - Any wolfSSL calls, as wolfSSL has been upgraded to version 4.7.

  - Wi-Fi connectivity, as the background scanning process has been upgraded.

- 21.04 includes updates to mitigate the following CVEs:

  - CVE-2021-28460

  - CVE-2021-22876

  - CVE-2021-22890

- RF tools version 21.01 is compatible with OS version 21.04.

For more information on Azure Sphere OS feeds and setting up an evaluation device group, see Azure Sphere OS feeds and Set up devices for OS evaluation.

For self-help technical inquiries, please visit Microsoft Q&A or Stack Overflow. If you require technical support and have a support plan, please submit a support ticket in Microsoft Azure Support or work with your Microsoft Technical Account Manager. If you would like to purchase a support plan, please explore the Azure support plans.

by Contributed | Apr 15, 2021 | Technology

We are experiencing issues with the Microsoft Tech Community Language Translation system, so translations are currently unavailable for all users. Our engineers are investigating the cause, and we will resume normal service as soon as possible.

Please comment or subscribe to this post to receive updates via email.

Please accept our apologies for any inconvenience.

Allen Smith

Technical Lead

by Contributed | Apr 15, 2021 | Technology

Yanelis Lopez, Security Software Engineer, Cloud & AI Security Green Team

Password-based attacks are a common tactic we observe adversaries using against customers who run Linux virtual machines (VMs) in Azure. This article explains how to help protect Linux VMs in Azure from these attacks at every step of the deployment pipeline.

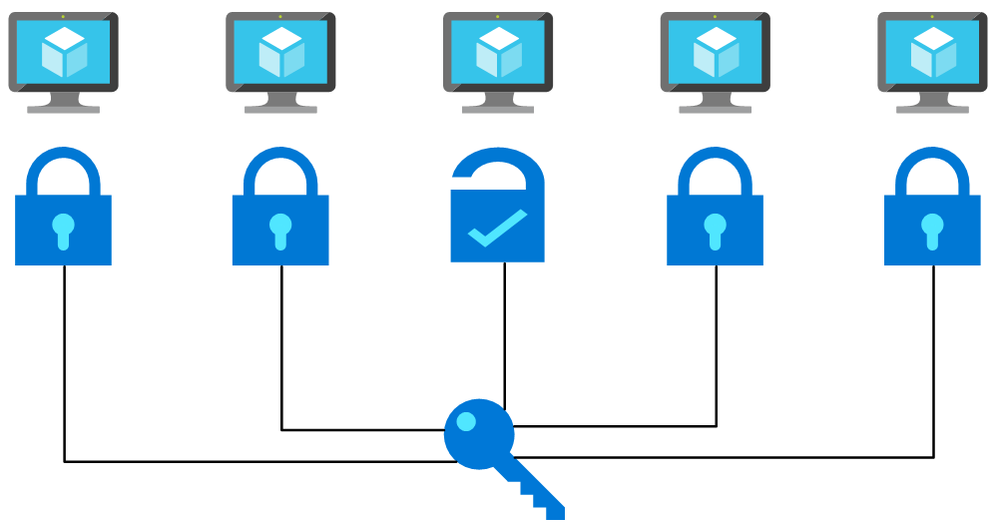

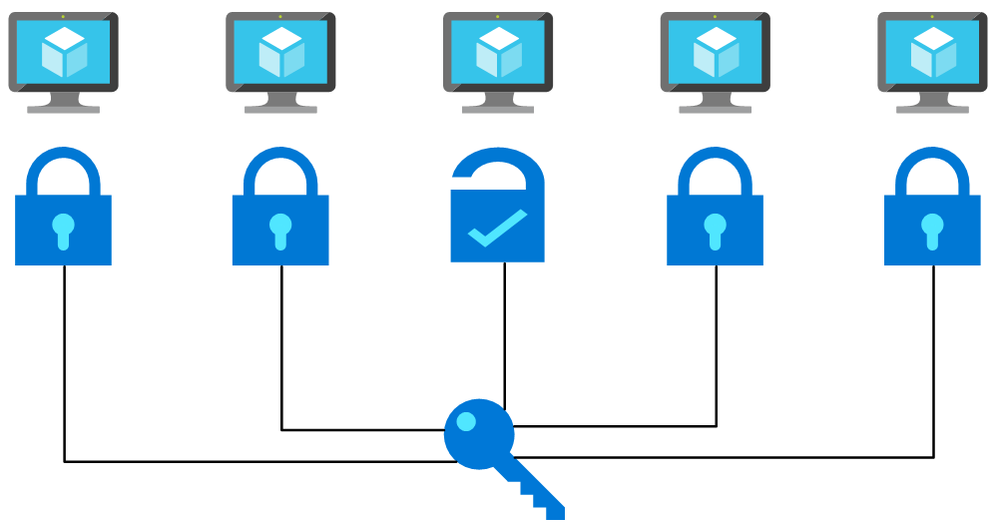

Flow chart picturing a password being used on multiple machines and working with one. This is a simplified depiction of a password spray attack.

Many tools are commonly used to brute-force user account passwords over SSH, including tools seen in Linux brute-force compromises in Azure. One specific example is the password spray attack, where an attacker tries one or a few passwords against multiple well-known user accounts in an environment. These attacks typically try common passwords, hoping to compromise one or more accounts across multiple virtual machines. Attackers limit the number of password guesses per account to avoid account lockout and detection by defenders.
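To make the difference between spraying and classic brute force concrete, here is a minimal Python sketch that labels the sources of failed SSH logins by their attempt pattern. The event format, the function name `classify_sources`, and both thresholds are hypothetical and chosen for illustration; this is not a production detection and not how any Azure service detects these attacks.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical event format: (source_ip, username) for each failed SSH login.
FailedLogin = Tuple[str, str]


def classify_sources(events: Iterable[FailedLogin],
                     min_accounts: int = 5,
                     max_tries_per_account: int = 3) -> Dict[str, str]:
    """Label each source IP as 'spray', 'brute_force', or 'other'.

    Spray: many accounts, few tries on each (stays under lockout thresholds).
    Brute force: many tries against at least one single account.
    """
    tries: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for source, user in events:
        tries[source][user] += 1

    labels = {}
    for source, per_user in tries.items():
        worst = max(per_user.values())  # most attempts against any one account
        if len(per_user) >= min_accounts and worst <= max_tries_per_account:
            labels[source] = "spray"
        elif worst > max_tries_per_account:
            labels[source] = "brute_force"
        else:
            labels[source] = "other"
    return labels


if __name__ == "__main__":
    spray = [("10.0.0.5", u) for u in ["root", "admin", "azureuser", "ubuntu", "test"]]
    brute = [("10.0.0.9", "root")] * 20
    print(classify_sources(spray + brute))
    # {'10.0.0.5': 'spray', '10.0.0.9': 'brute_force'}
```

The spray source never exceeds the per-account threshold, which is exactly why lockout policies alone do not stop it.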

Although mitigating controls such as password complexity, routine password rotations, disallowing common passwords, etc. help, using password-less authentication is the safer approach.

On Linux, SSH authentication can be password-less by using SSH key-based authentication instead. SSH keys are machine-generated, inherently unique, and significantly harder to brute-force or guess.

Below, we will show you how to use SSH key-based authentication pre-deployment, enforce it during deployment, and detect non-compliance post-deployment.

Pre-Deployment – Secure from the start: Creating Linux virtual machines

In Azure, there are several methods for creating Linux VMs: the Azure portal, Azure CLI, PowerShell, or Azure Resource Manager (ARM) templates. All of them can deploy a Linux VM with an SSH key as the default authentication option, and each documents how. To update an ARM template that uses password authentication to use SSH keys instead, follow the steps below.

How to transform your ARM template to use SSH key

First, replace the ‘adminPassword’ parameter with the ‘adminSSHKey’ parameter:

```json
"parameters": {
  ...
  "adminUsername": {
    "type": "string",
    "metadata": {
      "description": "Username for the Virtual Machine."
    }
  },
  "adminSSHKey": {
    "type": "securestring",
    "metadata": {
      "description": "SSH Key for the Virtual Machine."
    }
  }
  ...
}
```

Next, add the ‘linuxConfiguration’ property to the variables of the template:

```json
"variables": {
  ...
  "linuxConfiguration": {
    "disablePasswordAuthentication": true,
    "ssh": {
      "publicKeys": [
        {
          "path": "[concat('/home/', parameters('adminUsername'), '/.ssh/authorized_keys')]",
          "keyData": "[parameters('adminSSHKey')]"
        }
      ]
    }
  },
  ...
}
```

Finally, reference the ‘linuxConfiguration’ variable from the VM resource in the template. In the ‘osProfile’ property, remove the ‘adminPassword’ property and add the following ‘linuxConfiguration’ property:

```json
"properties": {
  ...
  "osProfile": {
    ...
    "linuxConfiguration": "[variables('linuxConfiguration')]"
  }
  ...
}
```
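If you have many templates to convert, the three steps above can be scripted. The following Python sketch applies them to a template loaded as a dictionary. The function name `to_ssh_key_template` is hypothetical, and the sketch assumes the VM resources sit directly in the top-level `resources` array; nested deployments, copy loops, and VM scale sets (whose OS profile lives under `virtualMachineProfile`) are not handled.

```python
import copy

VM_TYPE = "Microsoft.Compute/virtualMachines"


def to_ssh_key_template(template: dict) -> dict:
    """Return a copy of an ARM template switched from password to SSH key auth."""
    result = copy.deepcopy(template)  # leave the caller's template untouched

    # Step 1: replace the adminPassword parameter with adminSSHKey.
    params = result.setdefault("parameters", {})
    params.pop("adminPassword", None)
    params["adminSSHKey"] = {
        "type": "securestring",
        "metadata": {"description": "SSH Key for the Virtual Machine."},
    }

    # Step 2: add the linuxConfiguration variable.
    result.setdefault("variables", {})["linuxConfiguration"] = {
        "disablePasswordAuthentication": True,
        "ssh": {
            "publicKeys": [{
                "path": "[concat('/home/', parameters('adminUsername'), '/.ssh/authorized_keys')]",
                "keyData": "[parameters('adminSSHKey')]",
            }]
        },
    }

    # Step 3: in each top-level VM, drop adminPassword and reference the variable.
    for resource in result.get("resources", []):
        if resource.get("type", "").lower() == VM_TYPE.lower():
            os_profile = resource.setdefault("properties", {}).setdefault("osProfile", {})
            os_profile.pop("adminPassword", None)
            os_profile["linuxConfiguration"] = "[variables('linuxConfiguration')]"
    return result
```

A possible usage, assuming a hypothetical `azuredeploy.json`: load it with `json.load`, pass the result to `to_ssh_key_template`, and write it back with `json.dump(..., indent=2)`. Review the diff before committing, since the sketch does not check whether other resources or outputs still reference `parameters('adminPassword')`.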

Now that the template is updated to use SSH key authentication, generate an SSH key with the following command:

```shell
ssh-keygen -m PEM -t rsa -b 4096
```

Check out this documentation for more information about generating SSH keys on Windows.

Pass the public key (the contents of the generated .pub file, ~/.ssh/id_rsa.pub by default) as the value of the ‘adminSSHKey’ parameter described above. All VMs deployed from these transformed ARM templates will then be safer from password-based attacks.
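As an illustration of passing the key, the following Python sketch writes an ARM deployment parameters file whose `adminSSHKey` value is read from the public key generated above. The helper names and file names are hypothetical, and the sketch assumes the template also takes the `adminUsername` parameter shown in the earlier snippet.

```python
import json
from pathlib import Path

PARAMS_SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#"


def build_parameters(admin_username: str, public_key: str) -> dict:
    """Return an ARM parameters document that passes the public key as adminSSHKey."""
    key = public_key.strip()
    # The .pub file from the ssh-keygen command above starts with "ssh-rsa ".
    # Refuse anything else so a private key is never pasted in by mistake.
    if not key.startswith("ssh-rsa "):
        raise ValueError("expected an OpenSSH RSA public key (contents of the .pub file)")
    return {
        "$schema": PARAMS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {
            "adminUsername": {"value": admin_username},
            "adminSSHKey": {"value": key},
        },
    }


def write_parameters(out_path: str, admin_username: str,
                     pub_key_path: str = "~/.ssh/id_rsa.pub") -> None:
    """Read the public key from disk and write the parameters file as JSON."""
    key_text = Path(pub_key_path).expanduser().read_text()
    document = build_parameters(admin_username, key_text)
    Path(out_path).write_text(json.dumps(document, indent=2))
```

For example, `write_parameters("azuredeploy.parameters.json", "azureuser")` followed by `az deployment group create --resource-group <your-rg> --template-file azuredeploy.json --parameters @azuredeploy.parameters.json` would deploy the transformed template with the key. Only the public half of the key pair should ever appear in this file.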

You can find many examples of ARM templates that deploy VMs with SSH key-based authentication online. When searching for ARM template examples for Linux VMs, one of the top results is the Azure Quickstart GitHub Repo. Many people use these templates as starting points for their deployments. Almost 200 of the templates in this 800-template repo deploy a VM or VMSS and previously had SSH authentication configured as password only, which made VMs deployed with these templates vulnerable to password-based attacks. We updated these sample templates to disable password-based authentication by default, adding SSH key as the default option.

At Deployment – SSH key enforcer: Using Azure Policy

As part of Microsoft’s quest to get rid of passwords, we have published an Azure Policy that helps ensure Azure Linux VMs use SSH key authentication instead of passwords. You can deploy this policy to your subscription or management group to prevent creation of Linux VMs with password as the SSH authentication type. As the rule below shows, this policy blocks the deployment if the ‘disablePasswordAuthentication’ property of a VM is not defined or is set to ‘false’ when the VM image publisher and offer are well-known Linux offerings. Once this policy is deployed, any new deployment that includes a Linux VM with password-based authentication will fail.

```json
"policyRule": {
  "if": {
    "allOf": [
      {
        "field": "type",
        "equals": "Microsoft.Compute/virtualMachines"
      },
      {
        "anyOf": [
          {
            "field": "Microsoft.Compute/virtualMachines/osProfile.linuxConfiguration.disablePasswordAuthentication",
            "exists": "False"
          },
          {
            "field": "Microsoft.Compute/virtualMachines/osProfile.linuxConfiguration.disablePasswordAuthentication",
            "equals": "false"
          }
        ]
      },
      {
        "anyOf": [
          {
            "allOf": [
              { "field": "Microsoft.Compute/imagePublisher", "equals": "Canonical" },
              { "field": "Microsoft.Compute/imageOffer", "in": ["UbuntuServer", "Ubuntu_Core"] }
            ]
          },
          ...
          {
            "allOf": [
              { "field": "Microsoft.Compute/imagePublisher", "equals": "RedHat" },
              {
                "field": "Microsoft.Compute/imageOffer",
                "in": [
                  "osa",
                  "RHEL",
                  "rhel-byos",
                  "rhel-ocp-marketplace",
                  "RHEL-SAP",
                  "RHEL-SAP-APPS",
                  "RHEL-SAP-HANA"
                ]
              }
            ]
          }
        ]
      }
    ]
  },
  "then": {
    "effect": "deny"
  }
}
```
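To check a VM definition against this rule before deploying, the matching logic can be mirrored in a short Python sketch. It is an approximation, not Azure Policy's evaluator: it assumes the aliases resolve to `properties.osProfile.linuxConfiguration.disablePasswordAuthentication` and `properties.storageProfile.imageReference.publisher`/`offer` on the resolved resource, it includes only the Canonical and RedHat entries shown above (the elided publishers are deliberately left out), and it compares strings case-insensitively, as the policy's `equals` and `in` conditions do. The function name `policy_effect` is hypothetical.

```python
from typing import Optional

# Publisher -> offers, copied only from the two entries shown in the rule above
# (lowercased). The "..." in the rule hides other publishers; they are not guessed.
WELL_KNOWN_LINUX_IMAGES = {
    "canonical": {"ubuntuserver", "ubuntu_core"},
    "redhat": {"osa", "rhel", "rhel-byos", "rhel-ocp-marketplace",
               "rhel-sap", "rhel-sap-apps", "rhel-sap-hana"},
}
VM_TYPE = "microsoft.compute/virtualmachines"


def _lower(value) -> str:
    # Normalize for case-insensitive comparison; False becomes "false".
    return str(value).lower() if value is not None else ""


def policy_effect(resource: dict) -> Optional[str]:
    """Return 'deny' if the rule above would match this resolved VM resource, else None."""
    if _lower(resource.get("type")) != VM_TYPE:
        return None
    props = resource.get("properties", {})
    linux = props.get("osProfile", {}).get("linuxConfiguration", {})
    flag = linux.get("disablePasswordAuthentication")
    # anyOf: the property does not exist, or it equals false.
    password_allowed = flag is None or _lower(flag) == "false"

    image = props.get("storageProfile", {}).get("imageReference", {})
    offers = WELL_KNOWN_LINUX_IMAGES.get(_lower(image.get("publisher")), set())
    well_known = _lower(image.get("offer")) in offers

    return "deny" if password_allowed and well_known else None
```

The real policy only sees the resolved request at deployment time, so template expressions such as `[variables('linuxConfiguration')]` must already be expanded before a check like this is meaningful.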

Post-Deployment – Staying Secure: Azure Security Center Recommendation

We have discussed solutions for new deployments, but what if you already have VMs deployed that use password-based SSH authentication? A new Azure Security Center (ASC) recommendation, “Authentication to Linux machines should require SSH keys”, checks whether Linux VMs require SSH key authentication after they have been deployed. This recommendation is part of the ASC Secure Score* and follows Azure Security Benchmark best practices. The recommendation is a built-in Guest Configuration policy that monitors the SSH password authentication setting on the machine itself: if a VM was originally deployed with SSH key-based authentication and password-based authentication is later enabled in the SSH settings, this recommendation reports the VM as non-compliant.

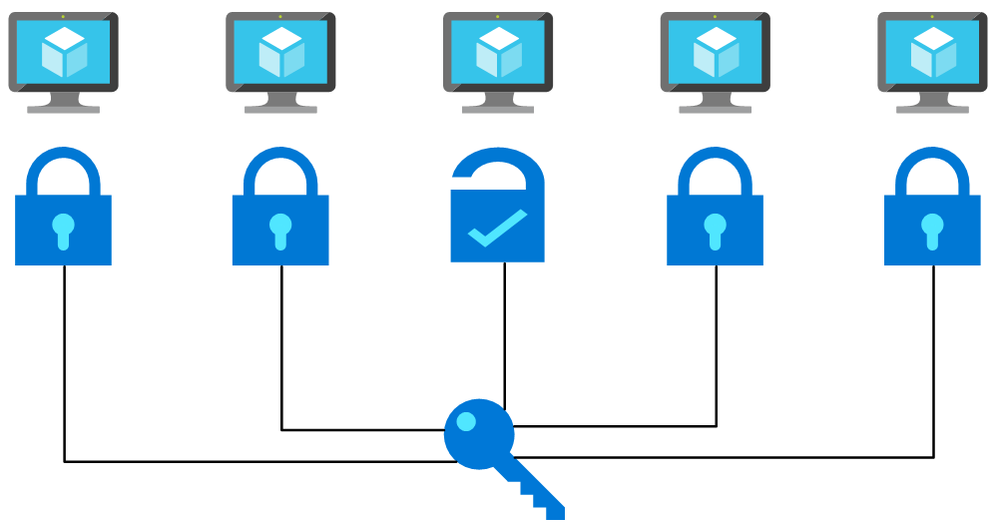

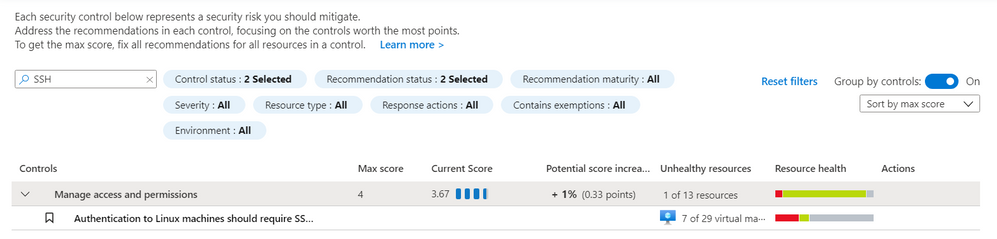

For the recommendation to run, the Guest Configuration extension must be installed. You can follow this documentation to install the prerequisite Guest Configuration extension. ASC also provides a recommendation named “Guest Configuration extension should be installed on your machines”, which includes a Quick Fix capability for installing the extension. It can be found under the “Manage access and permissions” control, as can the Linux SSH policy recommendation. The figure below shows this Linux SSH policy recommendation:

ASC Linux SSH Recommendation – Screenshot of the recommendation in the Azure Security Center blade in the Azure Portal. You can find the recommendation by searching for “ssh” in the search bar.

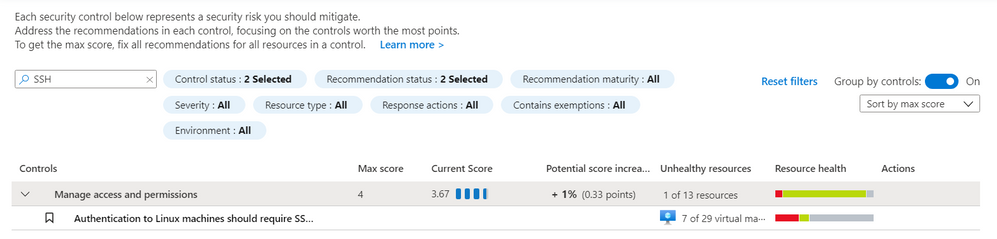

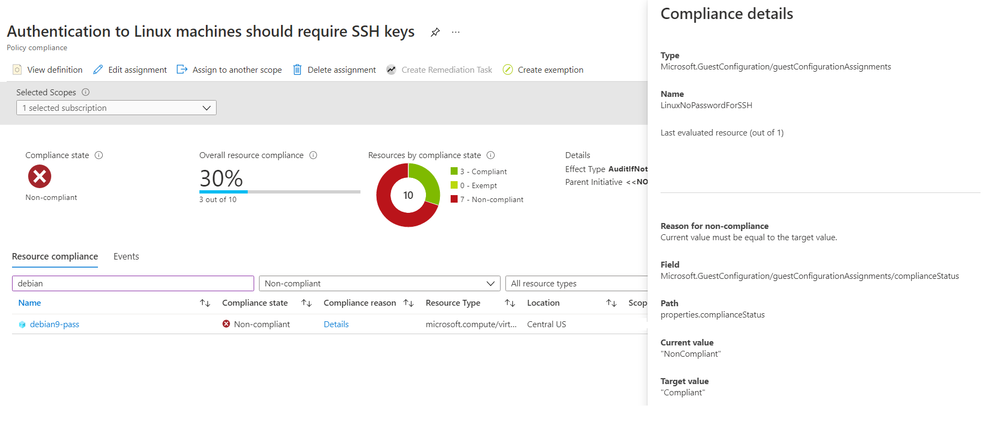

The policy can also be found in the virtual machine resource under the Policies blade. This can be useful for understanding the Compliance state, Compliance reason, and Last evaluated time. The figure below shows a Non-compliant machine using password authentication:

ASC Linux SSH Policy – Screenshot of the Azure Portal showing the Compliance information for the ASC recommendation. This example shows a VM as non-compliant for this recommendation.

To remediate this recommendation, add an SSH key to the non-compliant VM and disable password authentication by following these steps:

- SSH into the existing VM

- Copy the SSH public key from your host into ~/.ssh/authorized_keys

- Edit /etc/ssh/sshd_config (with sudo) and update the value of “PasswordAuthentication” to “no”.

- Restart the SSH service on the VM
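The sshd_config edit in the third step can be sketched in Python as a text transformation. This is a minimal sketch under stated assumptions: it rewrites every active or commented-out `PasswordAuthentication` line (including ones inside `Match` blocks) to `no`, and otherwise adds the directive at the top of the file, because sshd uses the first value it obtains for each keyword. It does not follow `Include` directives, so files such as those under `/etc/ssh/sshd_config.d/` on newer distributions still need checking by hand. The function name is hypothetical.

```python
import re

# Matches an active or commented-out PasswordAuthentication line, keeping its indentation.
_DIRECTIVE = re.compile(
    r"^([ \t]*)#?[ \t]*PasswordAuthentication\b.*$",
    re.IGNORECASE | re.MULTILINE,
)


def disable_password_auth(config_text: str) -> str:
    """Return sshd_config text with PasswordAuthentication forced to 'no'."""
    updated, count = _DIRECTIVE.subn(r"\1PasswordAuthentication no", config_text)
    if count == 0:
        # sshd keeps the first value it reads, so put the directive first,
        # ahead of any Match block or later override.
        updated = "PasswordAuthentication no\n" + config_text
    return updated
```

After writing the result back with sudo, run `sudo sshd -t` to validate the configuration before restarting the service (`ssh` on Ubuntu and Debian, `sshd` on RHEL-based images), and keep your current session open until a key-based login succeeds.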

Azure Active Directory (Azure AD) alternative

An alternative to SSH keys is using Azure Active Directory (Azure AD) authentication. This allows for the use of Azure AD credentials to log in to Azure Linux VMs; this feature is currently in public preview.

Conclusion

Password-based attacks on Azure Linux VMs are an active problem, but solutions are available to help protect against them. We’ve shown how to update ARM templates to use SSH keys instead of passwords, how to deploy a security gate with Azure Policy that disallows Linux VMs using passwords, and how to detect and remediate existing VMs that have password authentication enabled. Together, these recommendations provide a solid defense against password-based attacks on Azure Linux VMs. Take action and do all you can to be as secure as possible!

Footnotes:

*The policy does not impact the ASC Secure Score during public preview. Secure Score will only be impacted when the policy becomes Generally Available (GA).

Reviewers

Johnathon Mohr, Security Software Engineer, Cloud & AI Security Green Team

by Contributed | Apr 15, 2021 | Dynamics 365, Microsoft 365, Technology

In any business operations software, the ability to visualize, inspect, and print data in an appropriate layout is paramount. In Dynamics 365 Business Central and in most other software, reports serve these purposes.

Our partner community is familiar with customizing built-in reports, either as suppliers of a vertical solution that adds capabilities to underlying data which must be reflected in the relevant reports, or as resellers who tailor reports to meet individual customer requirements.

In the past, you had to modify existing reports through code customization by directly editing the report object.

In Business Central online, direct customization is not possible. Instead, you add new objects or modifications in a controlled manner through extensions to the base product. The extensions make it easier to deploy, maintain, and upgrade the software.

However, until now you weren’t able to extend report objects, so partners had to copy and branch any report that required modification. These steps were a pain point that incurred costs and a maintenance burden even for the simplest changes. As a result, closing this gap has been the highest voted idea on the Business Central Ideas site year after year.

2021 release wave 1 brings support for report extensions in Dynamics 365 Business Central. You can now extend both visual document-type reports and processing reports. You no longer need to branch reports when you add capabilities, and multiple solutions can now add to the same base report dataset. Plus, as a reseller who adds customer-specific requirements and custom layouts, you have access to all of the resulting report datasets.

With the introduction of report extensions, there are two approaches to customizing reports:

- Use the event-based substitution of a report, which is useful for taking over reports without changing any actions in the user interface.

- Use the new report extension for making additive changes.

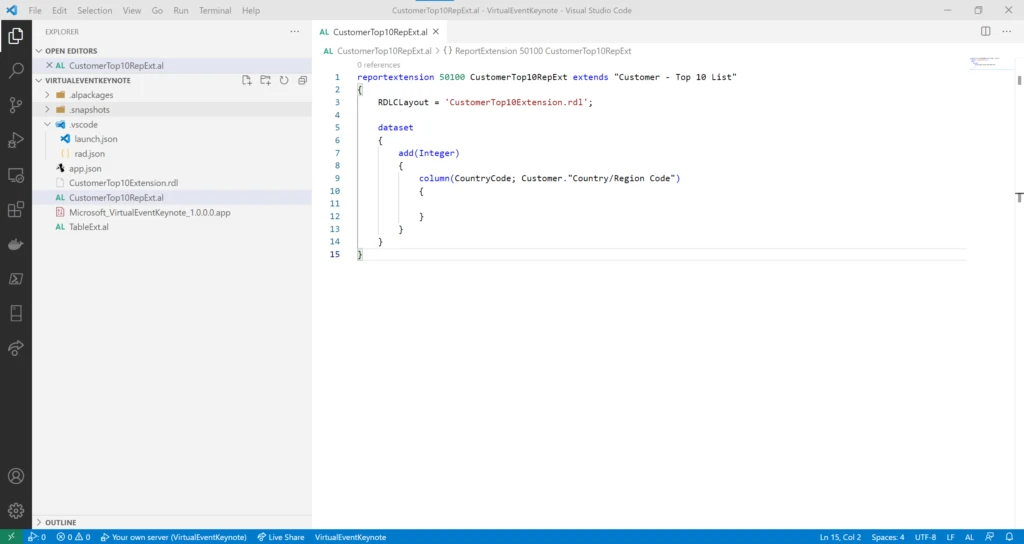

New report extension AL object

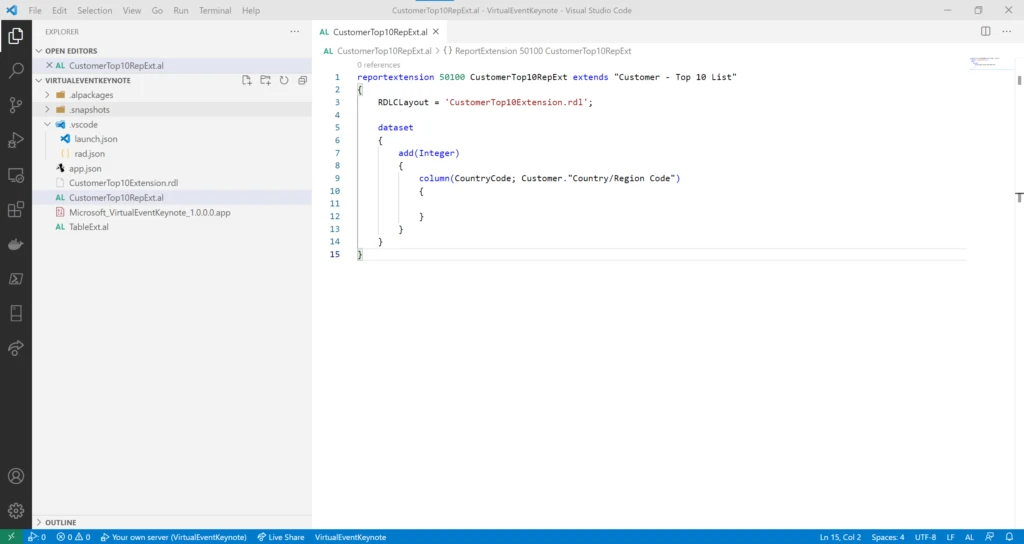

Report extensions are based on a new object type in AL, the programming language used for manipulating data and controlling the execution of the various application objects, such as pages, reports, or codeunits. With this object type, you can extend an existing report in several different ways, including the following:

- Add new columns to existing data items.

- Add new nested data items.

- Add columns for fields in a source table, a table extension, related tables, variables, procedures, or expressions.

- Add a new RDLC layout or Word layout.

- Add or modify the request page.

Note that there is no support for extending or modifying an existing layout.

In an upcoming service update, we will add support for labels, as well as some limited abilities to modify existing data items, such as adding to request fields, calc fields, or triggers.

Use report extensions to make additive changes to existing reports, such as adding a country column to the existing customer top 10 list.

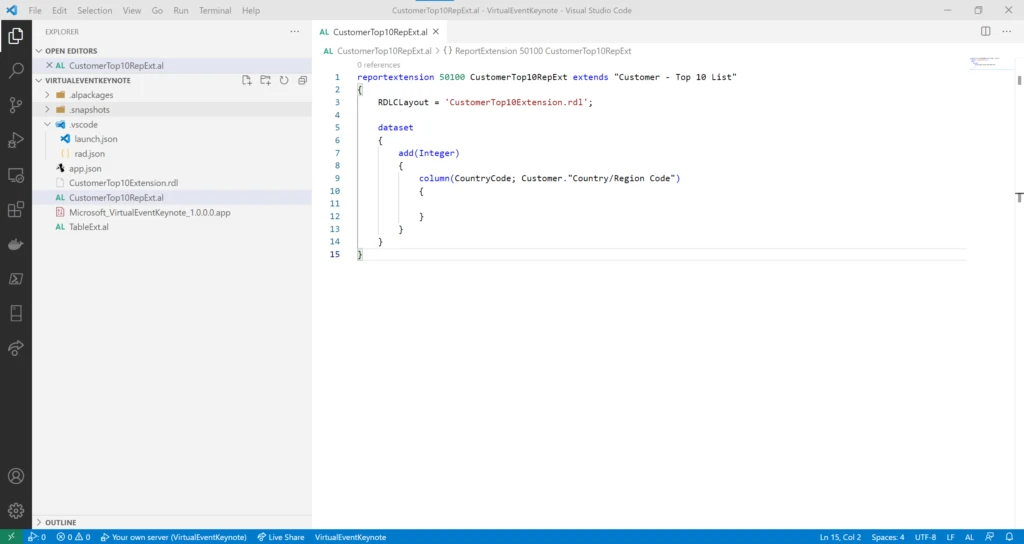

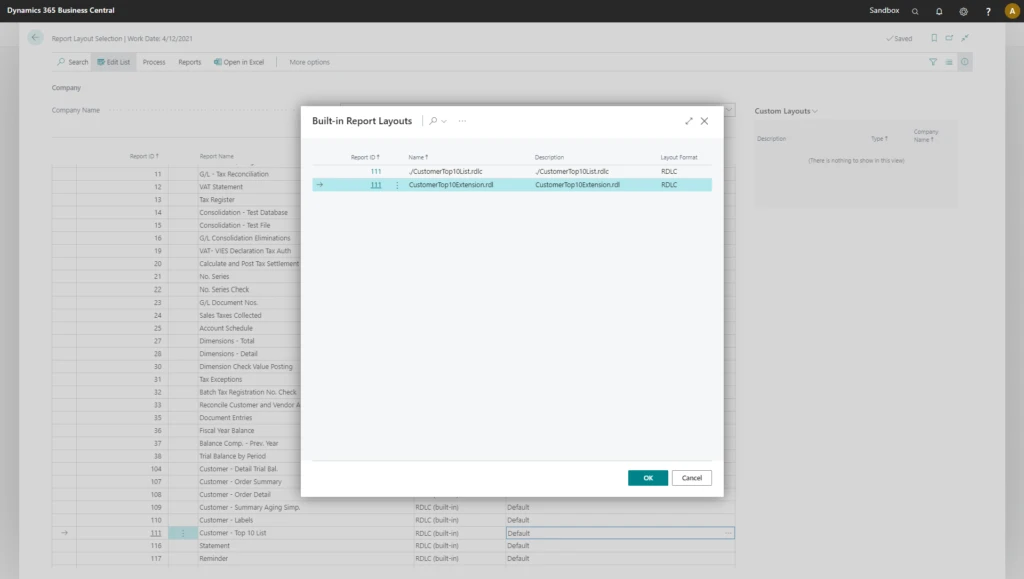

Choose layouts from report extensions

When an extension deploys to a customer environment, either as an AppSource app or as a per-tenant extension (PTE), any report layouts in the extension also become available in the environment. However, if you want to apply a report extension layout to a specific report, you need to add installation and upgrade code in the extension. Users will be able to choose a layout from a report extension in the Report Layout Selection page that lists all available layouts for a given report.

The Report Layout Selection page includes any layouts from report extensions so that the user can choose between all available layouts.

Next steps

Learn more about report extensibility in the release plans, the documentation, or the virtual launch event session LE21-08 What’s new in Visual Studio Code and AL, which covers report extensions in detail. The virtual launch event site has many other valuable sessions on new features in the Dynamics 365 Business Central 2021 release wave 1.

We encourage you to explore the new report extension feature and provide feedback on our AL GitHub repo. You can also submit any suggestions for additional coverage or improvements on the Business Central Ideas site.

The post Extending reports in Business Central appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Apr 15, 2021 | Technology

Are you bewildered by the possibilities of the Microsoft Cloud when it comes to SharePoint? You’re not alone.

The Microsoft Cloud brings a huge range of opportunities for how users can leverage Azure with SharePoint – and one father-daughter duo is preparing to educate and elevate other developers on how Azure fits into their Office 365 and SharePoint world.

Office Apps & Services MVP David Patrick is set to present with daughter Sarah Patrick at this week’s Global Azure 2021. Over three days from April 15-17, communities around the world are organizing localized live streams for anyone and everyone to learn about Azure from best-in-class community leaders.

David says he is excited to share the virtual stage with his daughter, a student at the University of Maryland, and share all the tips and tricks the pair has learned. “[My learning journey started] in Visual Basic a long time ago,” David says. “Then I moved on to C# when .NET came out and now I’m learning, along with my daughter, all the good cloud technologies like SharePoint Online and Power Apps and how to integrate everything using Flows in Power Automate.”

“All along the way, Microsoft’s great community support has always enabled Sarah and me to quickly learn new technologies without a lot of financial investment,” David adds. “Tools like the Learning Paths, Quick Starts, Tutorials and free Azure Sandboxes available from Microsoft Learn are extremely helpful in getting up to speed on things like Azure SQL and Web Apps really quickly.”

David says his daughter is very active in learning new technologies and sharing that knowledge with others. For example, Sarah has taken part in internships with the Project Management Institute and the Smithsonian Institution and regularly instructs at conferences like SharePoint Saturdays and Teams Day Online.

“Sarah started young, she gave her first talk at age 16 to a group of middle school girls during a workshop called TechGirlz,” David says. “She was ‘bitten’ by the speaking bug because she continues to lead these workshops today five years later!”

Going forward, David suggests other MVPs can help spread the word about all things tech by directly working with and inspiring young people. “They can volunteer at colleges, like a bunch of MVPs did when we organized a ‘College Tour’ in 2018 for Towson University, or they can partner with an organization like TechGirlz, which always needs volunteers to help lead and run their workshops,” David says.

“Sarah and I have been doing these for the last five years and they continue to be a source of energy and enthusiasm.”

In their session titled Intro to Azure for the SharePoint Developer, the duo is set to offer an overview of how cloud computing relates to Azure and Office 365, give tips on deploying SharePoint on-premises to Azure, and demonstrate how to quickly stand up multiple versions of SharePoint in Azure using Azure templates.

For more, check out Sarah’s Twitter @sarahepatrick or David’s Twitter @DavidEPatrick

by Contributed | Apr 15, 2021 | Technology

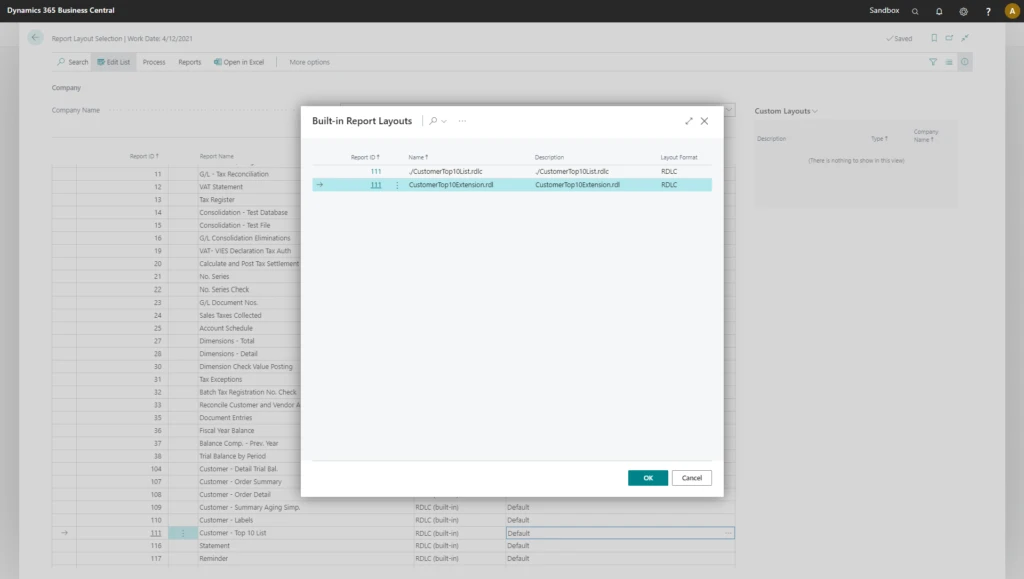

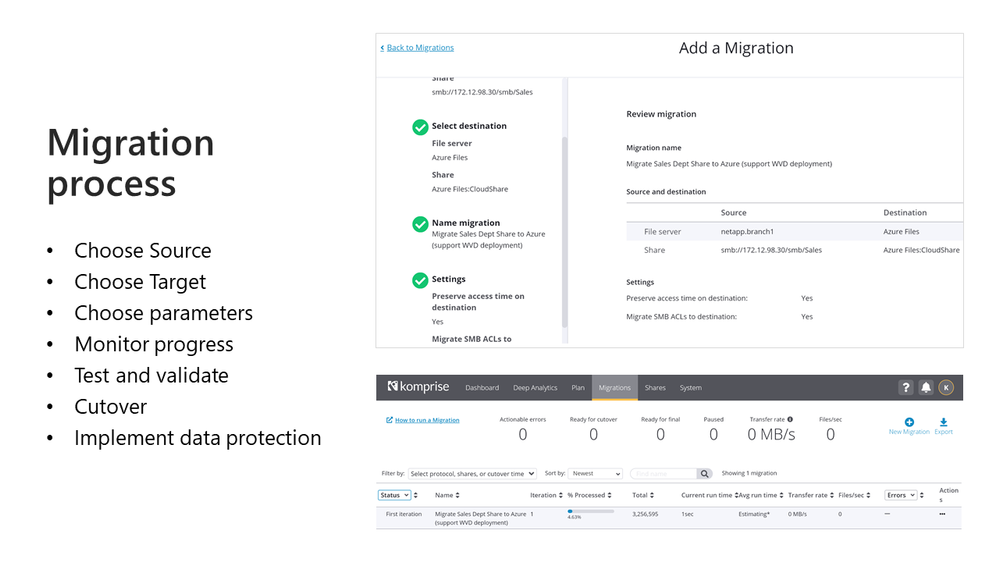

Throughout my career as both an IT Pro and supporting customers as a vendor, my most challenging projects (and causes of sleep deprivation) were always tied to migration. I have been personally responsible for migrating applications and data – and data centers – from one physical location to another. Between homogeneous and heterogeneous platforms. Physical and virtual. SAN and NAS. On-premises to Cloud. Anything and everything. Anywhere and everywhere.

The Azure Migrate team has done a tremendous job of building an extensible service platform that gives our customers and partners the ability to migrate the vast majority of servers, applications, and databases to Azure quickly, reliably, and safely. Within the Azure Storage team we have invested time and resources in developing solutions like Azure Data Box, AzCopy, and Azure File Sync to help our customers migrate file data to Azure from on-premises and Cloud-based Windows File Servers, NAS devices, and object storage platforms.

As I know personally, there are cases where additional tools are necessary when you migrate between heterogeneous storage platforms. This can be due to your business uptime requirements, the technical details of the source and target systems, complexity requiring expertise from specialists, or risk management and mitigation.

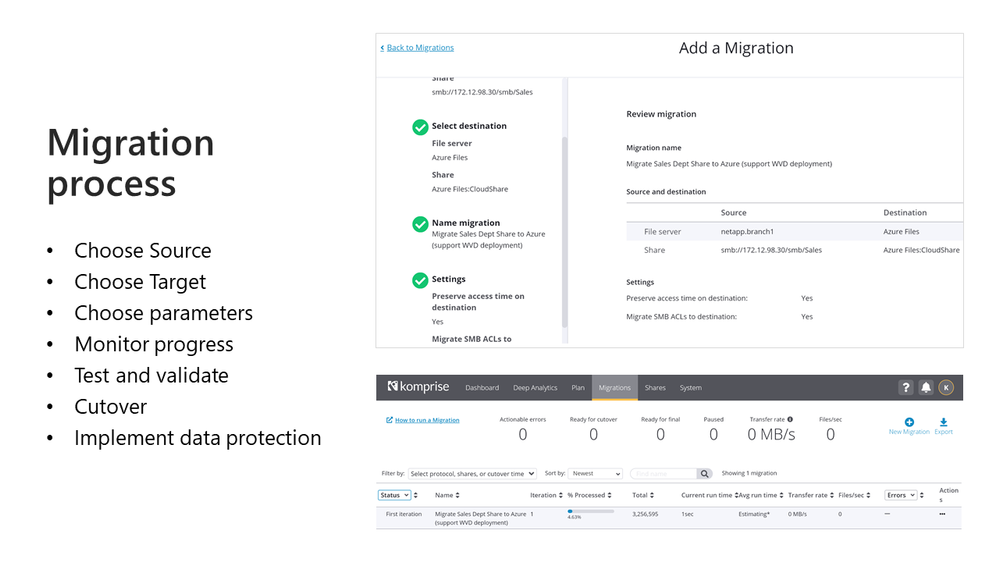

Migration Session during Azure Storage Day

If you are considering, planning, or executing a migration to Azure, I would encourage you to attend our upcoming Azure Storage Day on April 29th. I will be delivering a session covering migration best practices, an overview of validated partner solutions, and a few demos that provide a complete solution for migrating all your current data sets. We will be following that session with a series on data migration process and solution best practices right here on Tech Community!

Want to get started building your foundation prior to the 29th?

Start with the excellent new overview document from my friend and colleague Niko Dukic. What types of solutions will you see and hear about as we kick off a deep dive on data migration during Azure Storage Day? You can get a sneak preview by taking a look at our list of verified storage migration partners and viewing demo videos from these partners and more on the Storage Bytes channel in the Azure Video Resource Center.

Partners are key and our partner ecosystem can help you to address data sets that are not covered with our native tools.

Here are some examples:

- Assess your current storage and backup environments to get ready for the migration to Azure and set your company up for success with Datavoss

- Migrate Files and Objects to Azure Blob Storage with Scality Zenko

- Move your big data held in HDFS to Azure Data Lake Storage with WANDisco LiveData Migrator for Azure

- Transfer multipath attached LUNs (and a LOT more from SANs) to Azure disks or Azure SAN Partners like Pure Storage with Cirrus Data Galaxy

- Move enterprise NAS volumes (NFS/SMB/Multiprotocol) to Azure Files, Azure NetApp Files, or Azure NAS Partners with Datadobi, Data Dynamics, or Komprise

- Niko also compiled the comparison of file migration solutions represented during Storage Day that you can view here.

But wait, there’s more!

I know, you are dying for a tech deep dive covering these migration solutions and migration methodology. Watch this space for more following the Azure Storage Day event including interviews with industry experts, tech deep dives, and longer demos than I will have time for on the 29th!

More coming soon!

Karl

by Contributed | Apr 15, 2021 | Technology

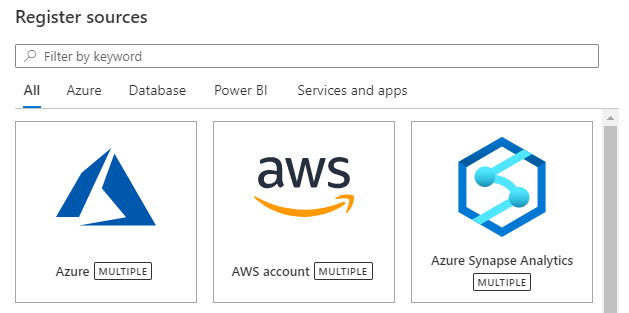

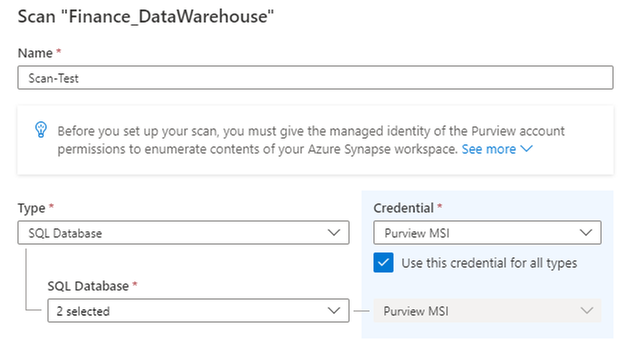

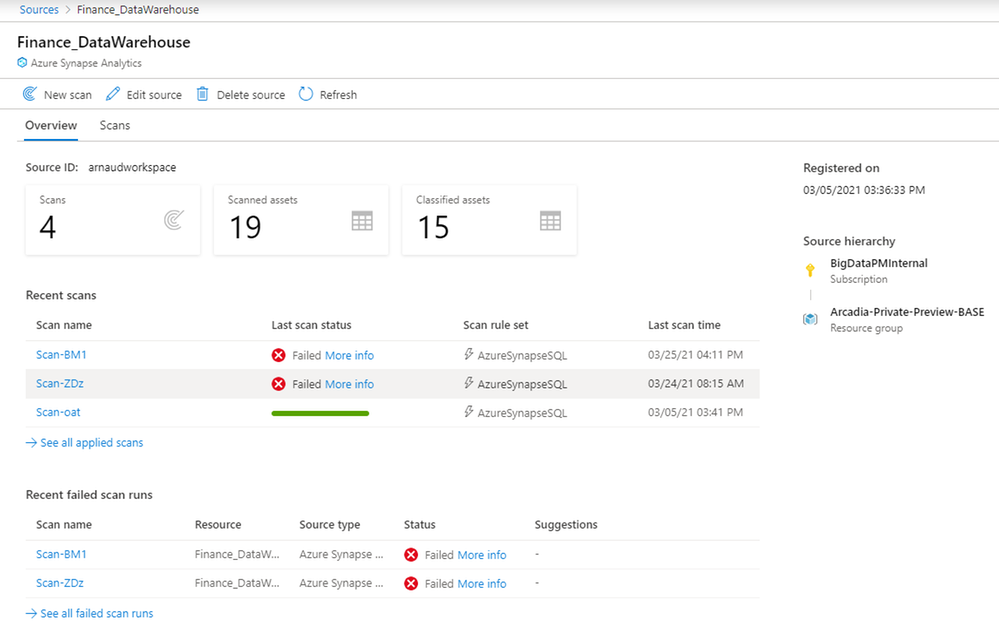

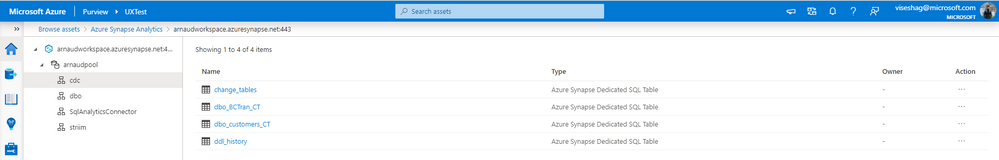

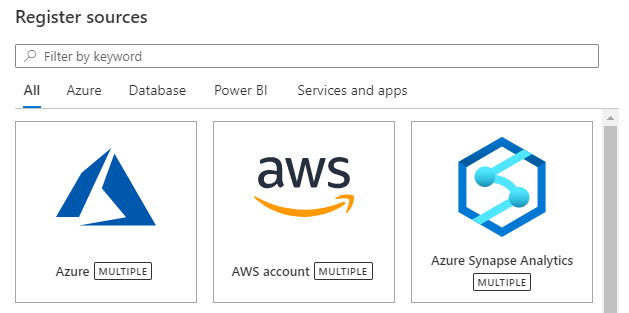

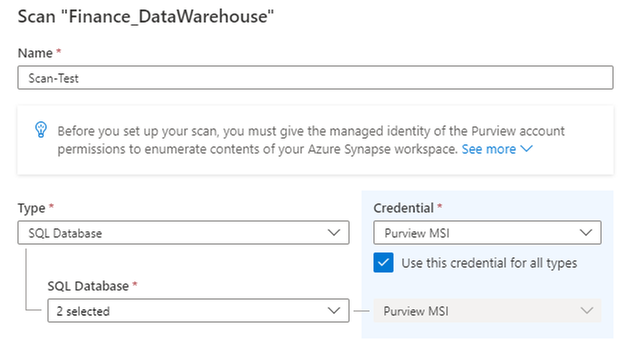

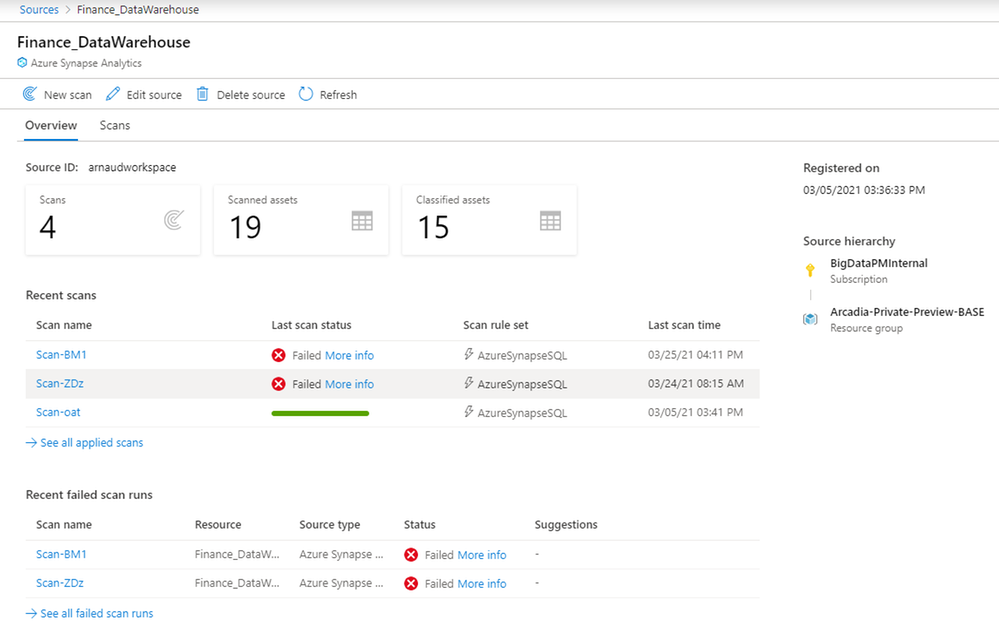

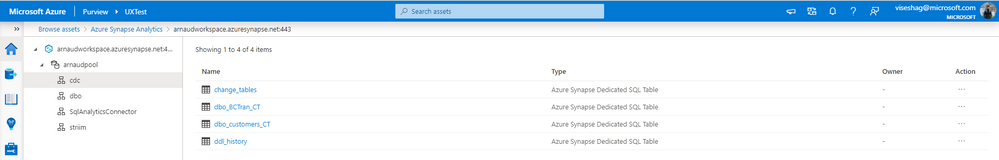

Azure Purview now supports registering your Azure Synapse workspace as a data source. You can scan all the Dedicated and Serverless SQL databases within your workspace in just a few clicks. You can also choose to scan your Synapse workspace under a subscription or resource group data source that you have already registered.

Setting up authentication to enumerate resources within your Synapse workspace

In the Azure portal, navigate to the resource group or subscription that contains the Synapse workspace and select Access control (IAM) from the left navigation menu. You must be an Owner or User Access Administrator to add a role assignment on the resource group or subscription. Select +Add, choose the Reader role, and enter your Azure Purview account name (which represents its MSI) in the Select input box. Repeat these steps to also assign the Storage Blob Data Reader role to the Azure Purview MSI on the resource group or subscription that contains the Synapse workspace.

Now, navigate to your Synapse workspace, and under the Data section, select one of your Serverless SQL databases. Select the ellipsis icon and start a new SQL script. Add the Azure Purview account MSI (represented by the account name) as sysadmin on the serverless SQL databases by running the following commands in your SQL script:

```sql
CREATE LOGIN [PurviewAccountName] FROM EXTERNAL PROVIDER;
ALTER SERVER ROLE sysadmin ADD MEMBER [PurviewAccountName];
```
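When you need to grant access on several serverless SQL pools, generating the statements can be less error-prone than editing them by hand. This hypothetical Python helper fills in the account name and quotes it as a T-SQL bracketed identifier, doubling any `]` so the name cannot break out of the brackets; `contoso-purview` is a placeholder account name.

```python
def quote_identifier(name: str) -> str:
    """Quote a T-SQL identifier with brackets, doubling any closing bracket."""
    if not name or len(name) > 128:  # sysname limit for SQL Server identifiers
        raise ValueError("identifier must be 1 to 128 characters")
    return "[" + name.replace("]", "]]") + "]"


def purview_grant_statements(purview_account_name: str) -> str:
    """Build the CREATE LOGIN / ALTER SERVER ROLE statements for one Purview account."""
    login = quote_identifier(purview_account_name)
    return (
        f"CREATE LOGIN {login} FROM EXTERNAL PROVIDER;\n"
        f"ALTER SERVER ROLE sysadmin ADD MEMBER {login};\n"
    )


if __name__ == "__main__":
    print(purview_grant_statements("contoso-purview"))
```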

You must also set up authentication on your Dedicated and Serverless databases to run scans on them. To learn how, read our full documentation here.

Register and scan your Azure Synapse data source

You can register multiple Azure Synapse Analytics workspaces.

You can set up scans on your Synapse workspace using our secure credential mechanism for authentication.

You can also select a scan rule set and set up a schedule for your scans.

Once your scan completes successfully, you can navigate to source details to view all relevant information pertaining to that source and its scans.

Through the Purview catalog, you can then discover the assets these scans find in your Synapse workspace, along with their metadata, schema, and classifications.

Get started today!

Read our full documentation here today!

by Contributed | Apr 15, 2021 | Technology

By Mark Hopper – Program Manager II | Microsoft Endpoint Manager – Intune

Microsoft Intune has the capability to integrate and connect with numerous external services. These connectors can include Microsoft services such as Microsoft Defender for Endpoint, third-party services such as Apple Business Manager, on-premises integrations such as the Certificate Connector for Intune, and many more.

Monitoring the health of an Intune environment is a common focus for Microsoft Endpoint Manager customers. Today, admins can check their Intune tenant’s connector health on the Tenant Status page in the Microsoft Endpoint Manager admin center. However, many customers have expressed interest in options to proactively notify their teams when an Intune connector is determined to be unhealthy.

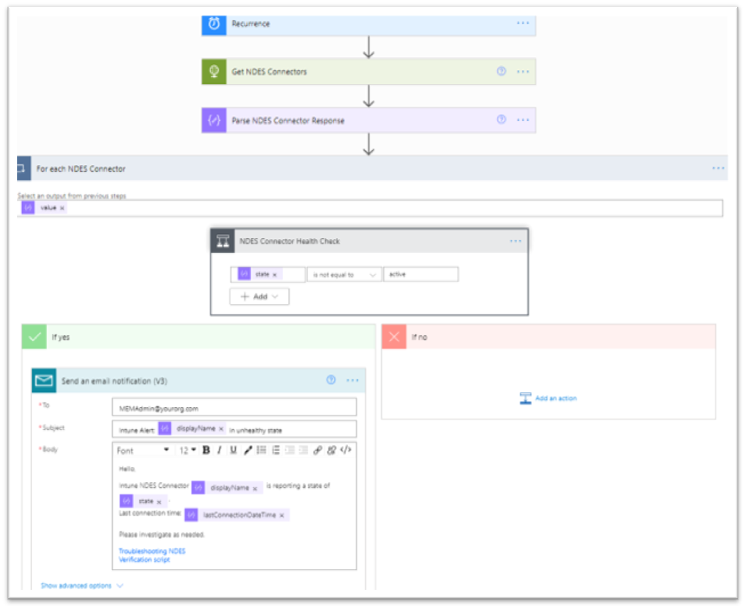

This blog will walk through the configuration steps to create an automated cloud flow using Power Automate that will notify a team or an individual when an Intune connector is unhealthy. The walkthrough will use the NDES Certificate Connector as an example, but this same flow logic can be leveraged across all Intune connectors in your environment. If you are not familiar with Power Automate, it’s a “low-code” Microsoft service that can be used to automate repetitive tasks to improve efficiencies for organizations. These automated tasks are called flows.

While the flow outlined in this blog uses email as the example notification method, keep in mind that flexibility and customization are key here. You can implement alternative notification methods that best align with your organization’s workflows, such as mobile push notifications to the Power Automate app, Microsoft Teams channel posts, or even generating a ticket in your helpdesk system if it integrates with Power Automate. You can find a list of services that have published Power Automate connectors here.

Requirements

- Azure Active Directory

- Microsoft Intune

- Microsoft Power Automate

Note: The example flow in this blog leverages the HTTP action, which is a premium connection action. For more information on Power Automate licensing, see the page

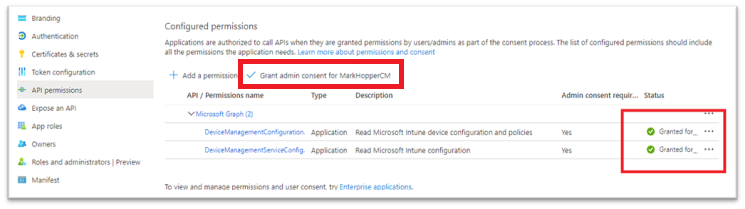

| Permission | Graph Endpoints | Intune Connector |
|---|---|---|
| DeviceManagementConfiguration.Read.All | ndesConnector, androidManagedStoreAccountEnterpriseSettings | NDES, Managed Google Play |
| DeviceManagementServiceConfig.Read.All | applePushNotificationCertificate, vppToken, depOnboardingSettings, windowsAutopilotSettings, mobileThreatDefenseConnector | APNS Certificate, VPP Tokens, DEP Tokens, Autopilot, MTD |

- Perform an HTTP GET request to each connector’s Microsoft Graph REST API endpoint.

- Parse the JSON response returned from Graph API.

- If there can be multiple connectors for a given Graph endpoint, use an Apply to each step. For example, only one APNS cert can be configured per Intune tenant, so an Apply to each would not be required. However, there can be numerous VPP tokens or NDES Connectors in a given tenant, so this step will loop through each connector returned in the response.

- Evaluate each connector’s health state.

- If determined to be unhealthy, send an email notification to a specified email address to notify the relevant admin or team.
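The evaluation and notification steps can be sketched outside Power Automate to make the logic explicit. This Python sketch assumes the NDES response has the usual Graph shape of a `value` array, and that each connector's `state` is `active` when healthy (the beta `ndesConnector` resource documents values such as `none`, `active`, and `inactive`). The function names are hypothetical, and `notify` stands in for whatever action you choose (email, Teams, or a helpdesk ticket). Other connector types report health through different properties, so their conditions would differ.

```python
from typing import Callable, Iterable, List

HEALTHY_STATES = {"active"}  # assumption: 'state' values of the beta ndesConnector


def unhealthy_connectors(graph_response: dict) -> List[dict]:
    """Loop over every connector in the 'value' array and keep the unhealthy ones."""
    connectors = graph_response.get("value", [])
    return [c for c in connectors if str(c.get("state", "")).lower() not in HEALTHY_STATES]


def build_notification(connectors: Iterable[dict]) -> str:
    """Format one line per unhealthy connector for the message body."""
    lines = [
        f"- {c.get('displayName', c.get('id', '<unknown>'))}: state={c.get('state')}, "
        f"last connected {c.get('lastConnectionDateTime', 'unknown')}"
        for c in connectors
    ]
    return "Unhealthy Intune connectors:\n" + "\n".join(lines)


def run_check(fetch: Callable[[], dict], notify: Callable[[str], None]) -> int:
    """Fetch, evaluate, and notify only when something is unhealthy; returns the count."""
    bad = unhealthy_connectors(fetch())
    if bad:
        notify(build_notification(bad))
    return len(bad)
```

Keeping `fetch` and `notify` as parameters mirrors the flow's structure: the HTTP action supplies the data, and the notification action is swappable without touching the health condition.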

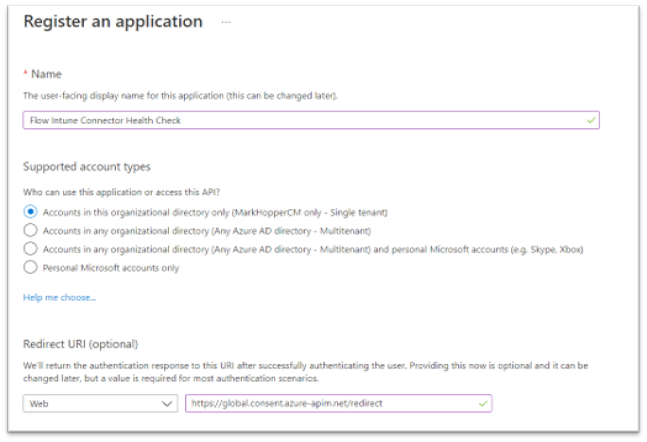

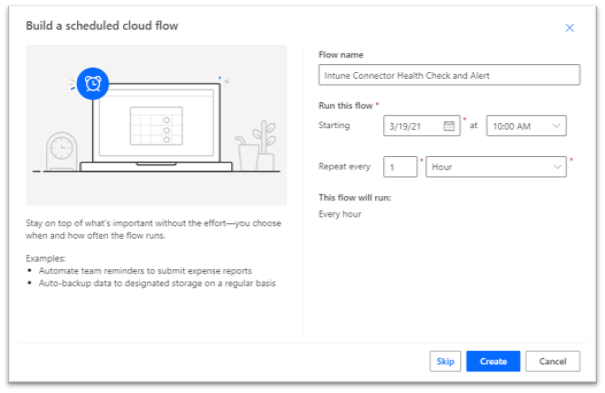

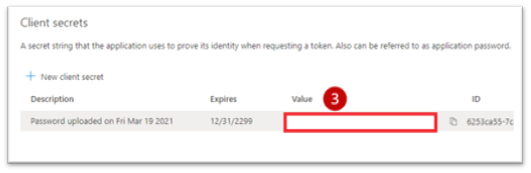

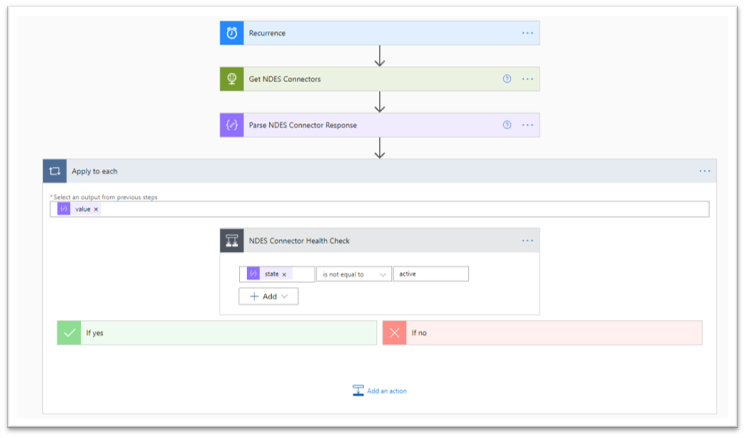

Create a Power Automate flow to evaluate Intune Connector health

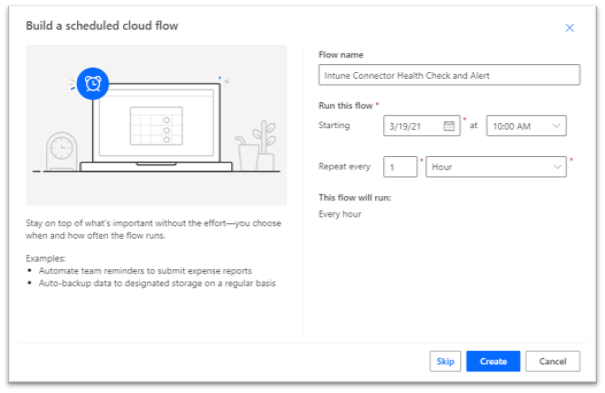

- To begin, open the Power Automate admin console and create a new scheduled cloud flow. For this example, the flow is configured to run once an hour.

Creating a new Power Automate flow in the Power Automate admin console.


Note: To reduce the risk of throttling, avoid running this flow on an overly aggressive schedule. Graph API and Intune service-specific throttling limits can be found here: Microsoft Graph throttling guidance. Power Platform request limits and allocations can be found here: Requests limits and allocations.
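If you run the same Graph queries from a script rather than a flow, you also need to handle throttled responses yourself. The Power Automate HTTP action has its own configurable retry policy. Microsoft Graph signals throttling with HTTP 429 and a Retry-After header. The Python sketch below honors that header and otherwise falls back to capped exponential backoff. The `send` callable, the backoff constants and the attempt limit are illustrative assumptions.

```python
import time
from typing import Callable, Mapping, Tuple

Response = Tuple[int, Mapping[str, str], str]  # (status, headers, body)

def retry_delay_seconds(headers: Mapping[str, str], attempt: int,
                        base: float = 2.0, cap: float = 60.0) -> float:
    """Delay before retrying a throttled request.

    Uses the Retry-After header (in seconds) when present; otherwise falls
    back to capped exponential backoff: min(cap, base ** attempt).
    """
    retry_after = headers.get("Retry-After", "")
    if retry_after.isdigit():
        return float(retry_after)
    return min(cap, base ** attempt)

def send_with_retry(send: Callable[[], Response], max_attempts: int = 4,
                    sleep: Callable[[float], None] = time.sleep) -> Response:
    """Call `send` until it returns a non-429 status or attempts run out."""
    response: Response = send()
    for attempt in range(1, max_attempts):
        if response[0] != 429:
            break
        sleep(retry_delay_seconds(response[1], attempt))
        response = send()
    return response
```

Injecting `sleep` keeps the retry logic testable without real delays.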

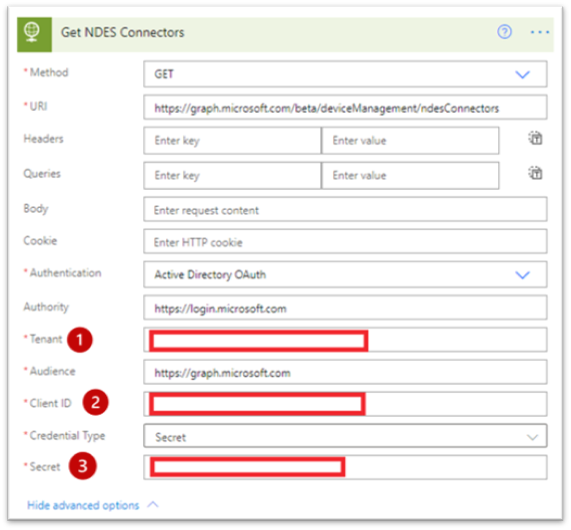

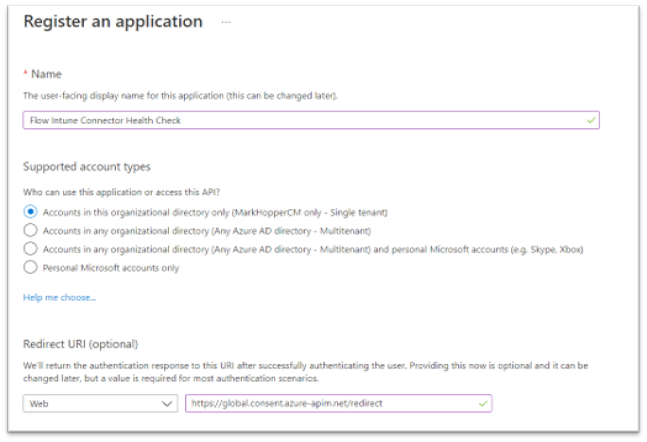

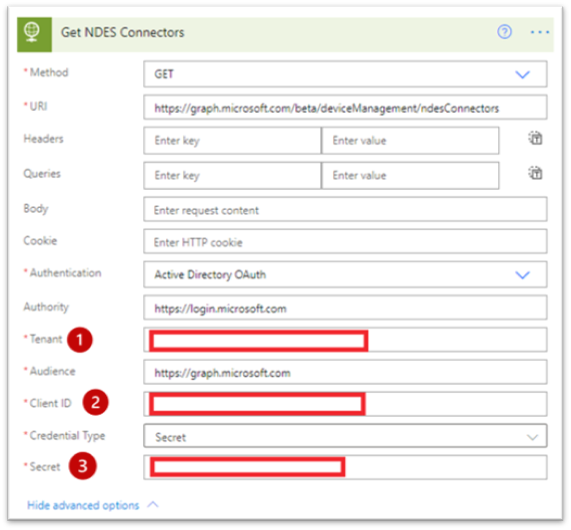

- Create a new HTTP action under the recurrence trigger, using Active Directory OAuth as the authentication method. This action retrieves the NDES Connectors by querying the https://graph.microsoft.com/beta/deviceManagement/ndesConnectors endpoint. In this example, the step is named “Get NDES Connectors”.

HTTP action properties for the ndesConnectors flow.


Method: GET

URI: https://graph.microsoft.com/beta/deviceManagement/ndesConnectors

Authentication: Active Directory OAuth

Authority: https://login.microsoft.com

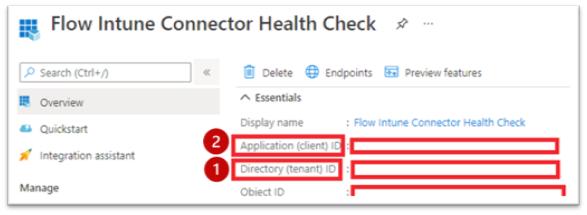

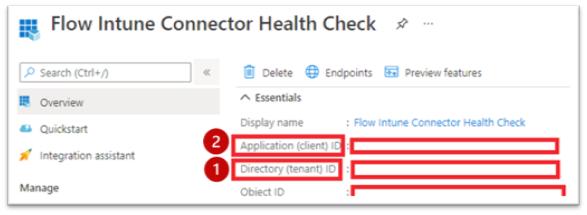

Tenant: Directory (tenant) ID from the Overview blade of your Azure AD app registration

Audience: https://graph.microsoft.com

Client ID: Application (client) ID from the Overview blade of your Azure AD app registration

Credential type: Secret

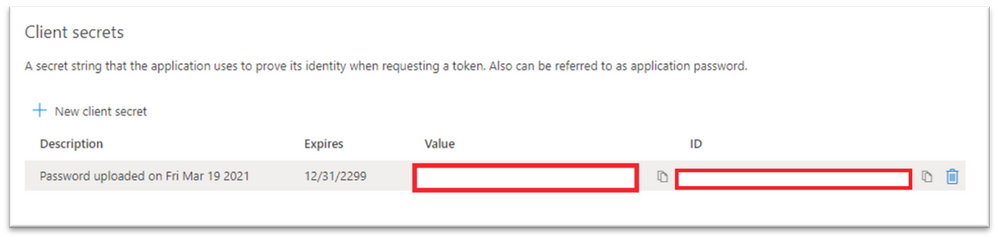

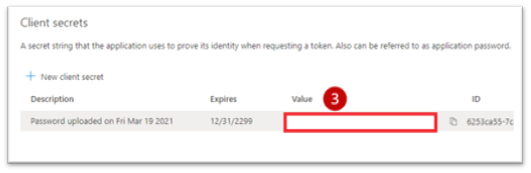

Secret: Secret key value generated while configuring the Azure AD App Registration.
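With Active Directory OAuth selected, the HTTP action acquires the token for you. The Python sketch below shows roughly the equivalent OAuth 2.0 client credentials exchange followed by the Graph GET, to make the moving parts explicit. It assumes the v2.0 token endpoint on login.microsoftonline.com and the `https://graph.microsoft.com/.default` scope. The function names are hypothetical. The IDs and secret are placeholders that should come from a secure store, not source code.

```python
import json
import urllib.parse
import urllib.request

NDES_URI = "https://graph.microsoft.com/beta/deviceManagement/ndesConnectors"

def token_request(tenant_id: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (without sending) an OAuth 2.0 client credentials token request."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = urllib.parse.urlencode({
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": "https://graph.microsoft.com/.default",  # app permissions granted to the registration
        "grant_type": "client_credentials",
    }).encode("utf-8")
    return urllib.request.Request(
        url, data=form, method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )

def graph_get_request(uri: str, access_token: str) -> urllib.request.Request:
    """Build the GET request that the HTTP action sends to a Graph endpoint."""
    return urllib.request.Request(
        uri, method="GET", headers={"Authorization": f"Bearer {access_token}"}
    )

def fetch_json(request: urllib.request.Request) -> dict:
    """Send a request and decode its JSON body (requires network access)."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))

# Usage (not executed here; the IDs and secret are placeholders):
# token = fetch_json(token_request(TENANT_ID, CLIENT_ID, CLIENT_SECRET))["access_token"]
# ndes = fetch_json(graph_get_request(NDES_URI, token))
```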

Overview of the Flow Intune Connector Health Check.


- Create a new step to parse the JSON response returned from the GET request using the Parse JSON action. This will allow the flow to use values returned from our HTTP request for our connector health evaluation, as well as our notification message.

Content: Use the ‘Body’ dynamic content value generated from the previous step.

Schema: To generate the JSON schema, run a test GET request in Graph Explorer and use the response as a sample. For example, run the following query in Graph Explorer: https://graph.microsoft.com/beta/deviceManagement/ndesConnectors.

Example GET request in Graph Explorer for the “ndesConnectors” query.


This returns a JSON response. Copy the response and paste it into Generate from sample in your Parse JSON step. The generated schema lets later steps use values from the response, such as state and lastConnectionDateTime, as dynamic content when checking whether the connector is healthy. The schema generated from the response should look like this:

```json
{
    "type": "object",
    "properties": {
        "@@odata.context": {
            "type": "string"
        },
        "value": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "id": {
                        "type": "string"
                    },
                    "lastConnectionDateTime": {
                        "type": "string"
                    },
                    "state": {
                        "type": "string"
                    },
                    "displayName": {
                        "type": "string"
                    }
                },
                "required": [
                    "id",
                    "lastConnectionDateTime",
                    "state",
                    "displayName"
                ]
            }
        }
    }
}
```
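Outside a flow, the Parse JSON step amounts to decoding the body and reading the same four fields. The Python sketch below treats the schema's `required` list as mandatory and raises an error if a connector lacks one of those fields. This roughly mirrors how Parse JSON fails validation when a required property is missing. The `NdesConnector` dataclass and the error message are illustrative assumptions.

```python
import json
from dataclasses import dataclass
from typing import List

# Mirrors the "required" list in the NDES connector schema.
REQUIRED_FIELDS = ("id", "lastConnectionDateTime", "state", "displayName")

@dataclass
class NdesConnector:
    id: str
    last_connection_date_time: str
    state: str
    display_name: str

def parse_ndes_response(body: str) -> List[NdesConnector]:
    """Decode an ndesConnectors response and validate each item's required fields."""
    data = json.loads(body)
    connectors: List[NdesConnector] = []
    for item in data.get("value", []):
        missing = [field for field in REQUIRED_FIELDS if field not in item]
        if missing:
            raise ValueError(f"NDES connector is missing required fields: {missing}")
        connectors.append(NdesConnector(
            id=item["id"],
            last_connection_date_time=item["lastConnectionDateTime"],
            state=item["state"],
            display_name=item["displayName"],
        ))
    return connectors
```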

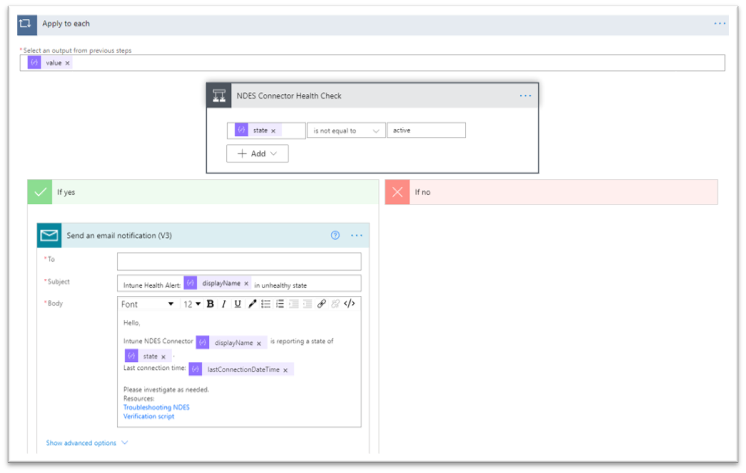

- Create a Condition step to check the NDES Connector health. For this step, the only condition needed is state is not equal to active. Your health check should look similar to this:

Condition step to check the NDES Connector health.


Note: When you set up the Condition step, the flow automatically creates an Apply to each step (think of it as a for-each loop). This happens because the “Parse NDES Connector Response” step returns an array that could contain multiple NDES connectors. The Apply to each step ensures that every NDES Connector in the response is run through the health check.

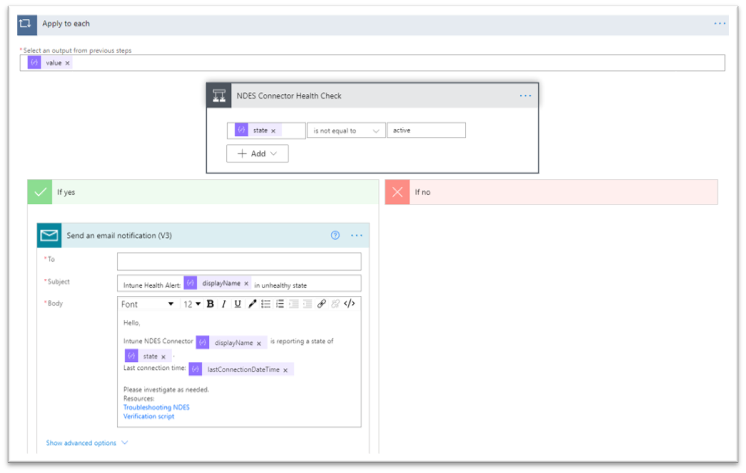

- Next, in the If yes branch of the condition, add the Send an email notification (V3) action so that an email is sent to your specified address when the connector is determined to be unhealthy. In this example, the email body is customized to include details such as the display name of the unhealthy connector, its last connection time, and additional troubleshooting resources.

Email notification check to send a customized email notification.
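The email body described above can be sketched as a small Python function that builds the message from the parsed connector. It uses the `displayName`, `state` and `lastConnectionDateTime` fields from the schema. The subject wording and the runbook placeholder line are hypothetical. Replace them with your team's own template and troubleshooting links.

```python
from typing import Dict, Tuple

def build_ndes_alert(connector: Dict[str, str]) -> Tuple[str, str]:
    """Compose a (subject, body) pair for an unhealthy NDES connector.

    The wording and the runbook placeholder are hypothetical; adapt them to
    your team's notification template.
    """
    name = connector.get("displayName", "<unknown connector>")
    subject = f"Intune NDES Connector unhealthy: {name}"
    body = "\n".join([
        f"Connector: {name}",
        f"State: {connector.get('state', '<unknown>')}",
        f"Last connection: {connector.get('lastConnectionDateTime', '<unknown>')}",
        "Troubleshooting: <link to your team's NDES runbook>",
    ])
    return subject, body
```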


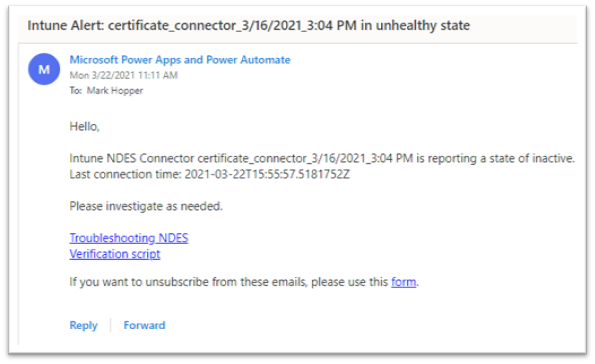

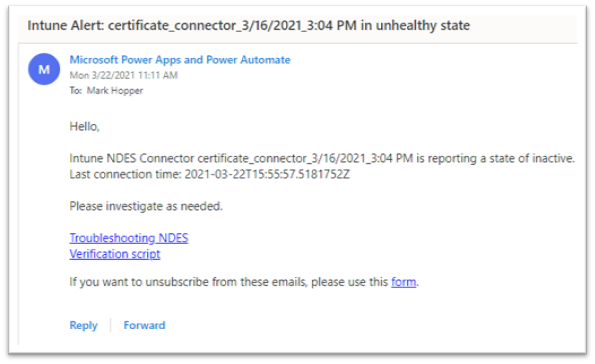

- Save and test the flow. If your NDES connector is in an unhealthy state, the specified email addresses should receive a message similar to this:

Example screenshot of an email notification sent to an admin.


Note: If your connector is currently active and healthy but you want to test the email notification, temporarily change the health check condition so that it returns “Yes” for a healthy connector, for example, state is equal to active. Make sure to switch it back once you have confirmed the notification is sent as expected.

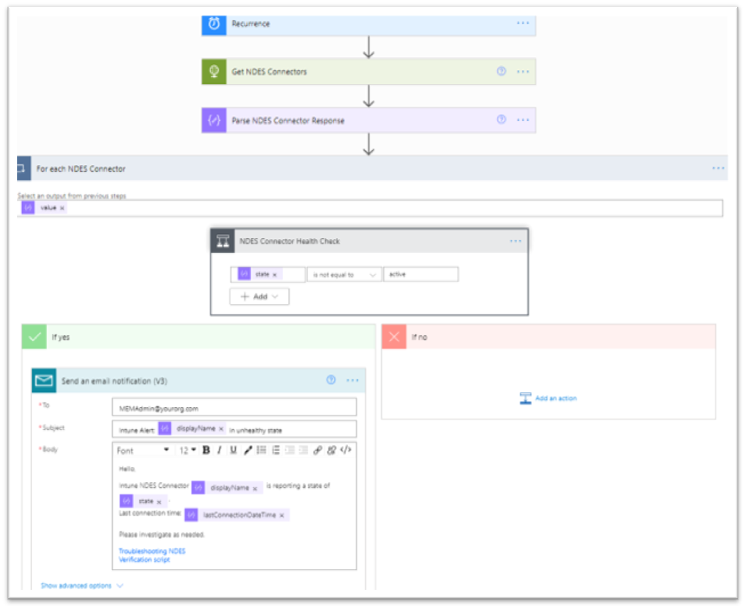

You should now have a working scheduled cloud flow that runs hourly, queries Graph for NDES connector details, checks each connector’s health, and sends an email notification if a connector is determined to be unhealthy. The completed flow should look like this:

Overview of a working automated cloud flow that scans Graph for NDES connector details.


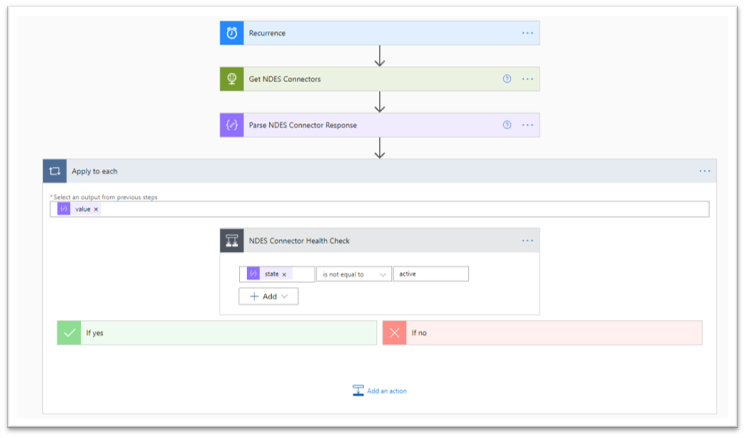

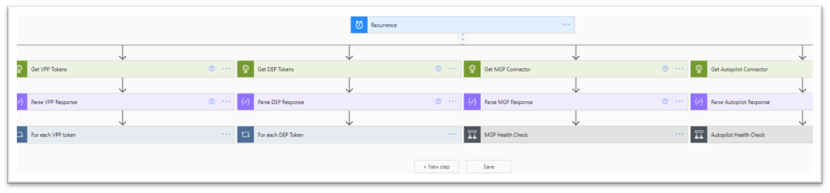

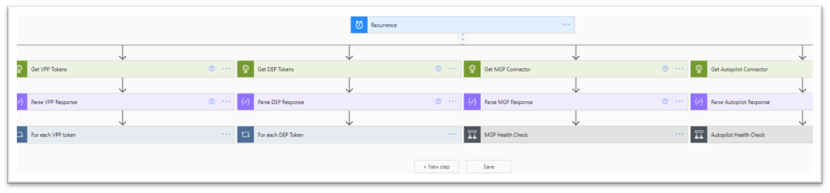

- You can now apply the same set of steps to the remaining Intune connectors in your environment, either in separate flows or as parallel branches under the same recurrence trigger.

Additional Intune connector resources you could add in your environment.
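Parallel branches under one recurrence trigger run independently. The Python sketch below mimics that pattern with a thread pool: each named check runs concurrently and reports whether its connector is healthy. Treating a check that raises an exception as unhealthy is a design assumption made here, so that one broken branch still produces a result instead of hiding the others. The function name and the check callables are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

def run_parallel_checks(checks: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run independent connector checks concurrently, like parallel branches.

    Each check returns True when its connector is healthy. A check that raises
    is reported as unhealthy (an assumption) instead of aborting the others.
    """
    def guarded(check: Callable[[], bool]) -> bool:
        try:
            return check()
        except Exception:
            return False

    with ThreadPoolExecutor(max_workers=max(1, len(checks))) as pool:
        futures = {name: pool.submit(guarded, check) for name, check in checks.items()}
        return {name: future.result() for name, future in futures.items()}
```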


Connector Health Check Examples

Properties that can be used to determine each connector’s health status can be found in Microsoft’s Graph API documentation for Intune.

For commonly used Intune connectors, here are some health check examples that can be used or built on for the health check Condition step, as well as their Graph URI endpoints for the HTTP step:

Apple Push Notification Certificate

URI: https://graph.microsoft.com/beta/deviceManagement/applePushNotificationCertificate

expirationDateTime is less than addToTime(utcNow(), 61, 'day')
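The APNS condition can be expressed in Python as a date comparison. The sketch below assumes Graph returns UTC timestamps ending in `Z`. It drops fractional seconds, because Graph may return more digits than older Python versions parse. Comparing parsed datetimes avoids depending on both values sharing one string format. The helper names and the `now` parameter (added for testing) are illustrative.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

def parse_graph_datetime(value: str) -> datetime:
    """Parse a UTC Graph timestamp like '2021-06-01T00:00:00Z'.

    Fractional seconds are dropped; the value is assumed to be UTC.
    """
    whole_seconds = value.rstrip("Z").split(".")[0]
    return datetime.fromisoformat(whole_seconds).replace(tzinfo=timezone.utc)

def apns_expiring_soon(cert: dict, days: int = 61,
                       now: Optional[datetime] = None) -> bool:
    """expirationDateTime is less than addToTime(utcNow(), days, 'day')."""
    now = now or datetime.now(timezone.utc)
    return parse_graph_datetime(cert["expirationDateTime"]) < now + timedelta(days=days)
```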

Apple VPP Tokens

URI: https://graph.microsoft.com/beta/deviceAppManagement/vppTokens

lastSyncStatus is equal to failed

or

state is not equal to valid
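vppTokens is a collection endpoint, so the VPP condition runs once per token inside an Apply to each. The Python sketch below applies the two comparisons shown above, joined with Or, to every token in the `value` array. The function names and the sample tokens used in testing are hypothetical.

```python
from typing import Dict, List

def vpp_token_unhealthy(token: Dict) -> bool:
    """lastSyncStatus is equal to failed, or state is not equal to valid."""
    return token.get("lastSyncStatus") == "failed" or token.get("state") != "valid"

def unhealthy_vpp_tokens(response: Dict) -> List[Dict]:
    # Check every token in the "value" array, like an Apply to each loop.
    return [t for t in response.get("value", []) if vpp_token_unhealthy(t)]
```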

Apple DEP Tokens

URI: https://graph.microsoft.com/beta/deviceManagement/depOnboardingSettings

lastSyncErrorCode is not equal to 0

or

tokenExpirationDateTime is less than addToTime(utcNow(), 61, 'day')
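The DEP check combines a sync-error test with an expiration window. The Python sketch below assumes depOnboardingSettings returns a collection, so each token is evaluated separately. It also assumes timestamps are UTC with a trailing `Z`. The `days` threshold defaults to the 61 used above and can be tuned. The helper names are illustrative.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

def parse_graph_datetime(value: str) -> datetime:
    # Drop fractional seconds and treat the timestamp as UTC (assumption).
    return datetime.fromisoformat(value.rstrip("Z").split(".")[0]).replace(tzinfo=timezone.utc)

def dep_token_unhealthy(setting: dict, days: int = 61,
                        now: Optional[datetime] = None) -> bool:
    """lastSyncErrorCode is not equal to 0, or the token expires within `days`."""
    now = now or datetime.now(timezone.utc)
    expires = parse_graph_datetime(setting["tokenExpirationDateTime"])
    return setting.get("lastSyncErrorCode", 0) != 0 or expires < now + timedelta(days=days)

def unhealthy_dep_tokens(response: dict, **kwargs) -> list:
    # Evaluate every DEP token in the "value" array (Apply to each).
    return [s for s in response.get("value", []) if dep_token_unhealthy(s, **kwargs)]
```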

Managed Google Play

URI: https://graph.microsoft.com/beta/deviceManagement/androidManagedStoreAccountEnterpriseSettings

bindStatus is not equal to boundAndValidated

or

lastAppSyncStatus is not equal to success
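The Managed Google Play settings endpoint returns a single object, so no Apply to each is needed. A minimal Python version of the condition is sketched below. The function name is hypothetical.

```python
def managed_google_play_unhealthy(settings: dict) -> bool:
    """bindStatus is not equal to boundAndValidated, or lastAppSyncStatus is not equal to success."""
    return (settings.get("bindStatus") != "boundAndValidated"
            or settings.get("lastAppSyncStatus") != "success")
```

If a tenant has never bound Managed Google Play, `bindStatus` will not be `boundAndValidated` and this check flags it on every run. It is probably best enabled only where the binding is in use.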

Autopilot

URI: https://graph.microsoft.com/beta/deviceManagement/windowsAutopilotSettings

syncStatus is not equal to completed

and

syncStatus is not equal to inProgress
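For Autopilot, the two not-equal comparisons need to be combined with And. If they are joined with Or, at least one of them is always true, so every run would raise an alert. The Python sketch below expresses the intended check as set membership and, for contrast, shows the always-true Or form. Both function names are hypothetical.

```python
HEALTHY_SYNC_STATUSES = {"completed", "inProgress"}

def autopilot_sync_unhealthy(settings: dict) -> bool:
    """syncStatus is not equal to completed AND not equal to inProgress."""
    return settings.get("syncStatus") not in HEALTHY_SYNC_STATUSES

def or_condition(status: str) -> bool:
    # No status can equal both values, so this is True for every input.
    return status != "completed" or status != "inProgress"
```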

Mobile Threat Defense Connectors

URI: https://graph.microsoft.com/beta/deviceManagement/mobileThreatDefenseConnectors

partnerState is not equal to enabled

and

partnerState is not equal to available
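A tenant can have several Mobile Threat Defense connectors, and only the enabled and available states count as healthy in the check above. The Python sketch below evaluates each connector in the `value` array on that basis. The function name and test data are illustrative.

```python
HEALTHY_PARTNER_STATES = {"enabled", "available"}

def unhealthy_mtd_connectors(response: dict) -> list:
    """partnerState is not equal to enabled AND not equal to available, per connector."""
    return [c for c in response.get("value", [])
            if c.get("partnerState") not in HEALTHY_PARTNER_STATES]
```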

Considerations

You can create a flow for each individual Intune connector, or you can create parallel branches under your recurrence trigger that check multiple connectors’ health in the same flow.

For example, you may not want to check your Managed Google Play connector in the same flow as your NDES connector, or you may want to check your DEP token expiration on a different cadence than your DEP token sync status. Flexibility is key here: use what works best for your organization!

In this blog, we executed Graph API requests using the HTTP action without creating a Graph API custom connector. However, an alternative method is to create a custom connector for Graph and configure the HTTP requests as custom actions that read the Graph Intune connector endpoints. Here are some considerations when deciding which method may work best for your organization:

| Without custom connector | With custom connector |
| --- | --- |
| Leverages Azure AD application permissions. | Leverages Azure AD delegated permissions. |
| HTTP requests run without administrative user credentials. They continue to operate as long as the Azure AD Enterprise App secret key being used is valid. | HTTP requests run using an administrator account that has the permissions needed to check the respective Intune connector health. The connector may fail to run and require reauthentication if the account used for the connection has a password change, has its access tokens revoked, or needs to satisfy an MFA requirement. |

More information can be found here: 30DaysMSGraph – Day 12 – Authentication and authorization scenarios.

- Not all connectors can have multiple instances. For example, an Apply to each step is not necessary for the APNS certificate health check. Because only one APNS certificate can be configured in a tenant at a time, the JSON response does not contain an array.

- For health checks that evaluate connector or token expirations, customize the threshold to your organization’s needs. For example, the Microsoft Endpoint Manager admin center starts flagging a DEP token or APNS certificate as nearing expiration when the expiration date is 60 days away. However, rather than sending a notification every hour for 60 days until the token is renewed, you may prefer to start notifying a few weeks or a month in advance.

- Consider leveraging secure inputs and outputs for steps in the flow that handle your Azure AD app’s secret key. By default, in Power Automate, you can see inputs and outputs in the run history for a flow. When you enable secure inputs and outputs, you can protect this data when someone tries to view the inputs and outputs and instead display the message “Content not shown due to security configuration.”

- In addition to secure inputs and outputs, consider using Azure Key Vault and the Azure Key Vault Power Automate connector to store and retrieve your Azure AD app’s secret key. Keep in mind that actions for this connector run using an administrator account that has the permissions needed to access the respective Key Vault. The connector may fail to run and require reauthentication if the account used for the connection has a password change, has its access tokens revoked, or needs to satisfy an MFA requirement.

You should now have an understanding of how you can leverage Power Automate and Graph API to proactively notify your team when an Intune connector is in an unhealthy state. Please let us know if you have any additional questions by replying to this post or by reaching out to @IntuneSuppTeam on Twitter.

Additional Resources

For further resources on Graph API, Power Automate, and Intune connectors, please see the links below.

by Contributed | Apr 15, 2021 | Technology

This article is contributed. See the original author and article here.

As we continue to evolve the Universal Print Microsoft Graph API, we’ve changed and replaced some API endpoints and data models to refine the way apps and services interact with the Universal Print platform.

Additionally, all Universal Print APIs now require permission scopes. The documentation for each API endpoint lists permission scopes that grant access.

For a full list of available permissions, see the Universal Print permissions section of the Microsoft Graph permissions reference. Delegated permissions grant capabilities on behalf of the user currently logged in, and application permissions grant permissions that can access the data of all users in a tenant.

What you need to know

These changes may break applications and services that rely on the Beta version of Microsoft Graph. If your application is running in production and relies on the Universal Print Microsoft Graph API, we recommend using v1.0, which will never have breaking changes.

Time to take action

Please review the API usage of your applications and ensure that you are not relying on any of the deprecated endpoints. Removal of deprecated endpoints will begin on July 1, 2021.

To see the list of deprecated and changed APIs, see the Microsoft Graph Changelog. Use the filtering controls to select the following options:

- Versions: beta

- Change type: “Change” and “Deletion”

- Services: Devices and apps > Cloud printing

Learn more

To learn more about Universal Print, visit the Universal Print site.
