by Contributed | Apr 19, 2021 | Technology

This article is contributed. See the original author and article here.

Initial Update: Monday, 19 April 2021 23:25 UTC

We are aware of issues within Log Analytics and are actively investigating. Some customers may experience data access issues and delayed or missed Log Search Alerts in the West Europe region.

- Work Around: None

- Next Update: Before 04/20 02:30 UTC

We are working hard to resolve this issue and apologize for any inconvenience.

-Saika

![[Guest Blog] My Journey from McDonald's to Microsoft](https://www.drware.com/wp-content/uploads/2021/04/fb_image-148.jpeg)

by Contributed | Apr 19, 2021 | Technology

This blog is written by Taiki Yoshida, a Microsoft employee who specializes in Power Apps and low-code development. He shares his non-traditional career journey, from an entry-level job at McDonald’s to landing his dream job in Microsoft’s product engineering team. He hopes to empower individuals to do more through low-code at Microsoft.

I still remember the day I first touched a computer – a Windows 95 machine that my mother had set up for work. I was 5 years old, but I remember the spark of excitement that was in me. Today, after 25 years, I have that same spark inside me.

I was born and raised in Japan by my mother. As a single parent, she did her best to give me opportunities to learn. One of these was attending a boarding school in the United Kingdom from the age of 11. I studied hard, choosing Math, Further Math, and Physics for my A-Levels (university entry qualifications in the UK), and it was natural for me to apply for a master’s degree in computer science. I received offers from the University of London and Imperial College London. It was all going well.

When everything changed

After I graduated from high school in the summer of 2009, my mother fell ill. I could no longer afford to go to university. I had to take care of her and had no choice but to return to Japan, giving up my university offers. Upon arriving back in Japan, I became the single source of income. My mother had debts to pay off and I needed to quickly find a job. There was nothing that I could apply for in IT with no degree or work experience. I couldn’t apply for university in Japan as I had not met the Japanese educational requirements since I studied abroad.

After many job rejections, the only remaining place I could apply to was McDonald’s. I felt devastated at the time, but looking back now, it was a great experience to have. With people from the most diverse set of backgrounds (high school students, university students, pensioners, moms, dads), this was the first place where I learned about “community”.

At McDonald’s store

My first IT job

After several months working full-time at McDonald’s, I became a “swing manager” (temp-store manager), but my passion to work in IT remained deep. I continuously applied for jobs in IT. Seven months in, I finally started my IT career at a mat manufacturing company, Kleen-Tex, where they were looking for a temporary IT helpdesk worker at the Japan subsidiary. Although I had no IT work experience, they gave me a chance. After 3 months, they offered me full-time work. Initially, my work focused on answering and solving problems from employees, but then came my first big opportunity. The company decided to implement Microsoft Dynamics NAV (an ERP system now known as Dynamics 365 Business Central). As you may have noticed by now, I had no knowledge or experience around that. The only thing I could do was to web search my way through. Being able to experience an ERP implementation was a huge game changer for me. From Finance, Inventory, and Sales to Purchase, Logistics, and Manufacturing, I got firsthand experience with it all and actually saw it in action at the company. The project eventually expanded to a subsidiary in Thailand, where I acted as project manager, drawing on my experience in the Japan subsidiary.

ERP Project team at Kleen-Tex

Moving from an End-User to Consulting company

By the time I finished implementing Dynamics NAV, I was 21. I was fully in love with the product and how it had the power to capture and assist all aspects of the business. I wanted to scale this experience. I wanted to do the same as I had at Kleen-Tex, but for more companies. I tried applying to many IT companies, but almost all of them declined because I didn’t have a university degree, with one exception. Ryo, the director at the Microsoft partner Pacific Business Consulting who had helped me at my first company, reached out to me because he had noticed my work and was not prejudiced about my education. Once I joined the team, I was immediately assigned to work as an application consultant, mainly on global and cross-continent projects, as I could speak both English and Japanese. After two years there, and five years in total with Dynamics NAV, I didn’t want to focus only on becoming an ERP specialist, so I decided to move on to another partner, Japan Business Systems, which focused on Dynamics CRM (a.k.a. Dynamics 365). My boss, Masa, was coincidentally a Microsoft MVP.

A community newbie to a Microsoft MVP

I didn’t really know what a Microsoft MVP was, but Masa introduced me to how great Microsoft communities are. I started visiting the Office 365 User Group, then the Japan Azure User Group, and so on. I reached a point where I was going to at least one community event each week to learn about new things! I found it absolutely amazing that these user groups and communities were being held for free. Until I knew about the community, I was always alone, searching for knowledge, but at these events, you gain so much more than that. That was when I realized I wanted to present too. I wanted to share what I had learned from others so that I could “Pay it forward.” Presenting and writing blog posts also helped me, as it gave me better knowledge and a deeper understanding of things. Through these contributions, in 2016, I became a Microsoft MVP in the Business Solutions category.

Microsoft MVP

Joining Microsoft

2016 was a very special year. Becoming a Microsoft MVP was definitely one reason, but the other was that I was introduced to Power Apps (a low-code/no-code tool for application development). I was given access to a private preview and could immediately see what it would become. With PowerApps, people no longer had to be pro-developers or IT gurus to build apps. Although I had blog posts about Dynamics 365 and other topics, I had already fallen in love with this product, and it became the thing I was most passionate about on my blog and at community events.

After 1 year as an MVP, I was working at EY and in late autumn 2017 a Microsoft recruiter reached out to me via LinkedIn. I remember it very clearly as I picked up the phone and the lady said “I have an interesting role for you, it is a Global Black Belt role for a product you’ve probably never heard of… It’s called PowerApps.” I was only just 7 months into the role at EY, but there was no way I was going to let that opportunity go. In fact, I wanted that job so badly, I presented my resume in PowerApps (I still keep a copy of it in my personal Office tenant).

Microsoft Redmond Campus

Scaling my Power Apps love

With a big company like Microsoft, I imagined a designated team focusing on Power Apps in Japan, but in reality, I was the first person in Japan hired for this. I had to quickly think of a way to let people know about Power Apps, even though I was the only person doing so. In April 2018, I started the Japan Power Apps user group (JPAUG). I felt that the best way to share my passion was through the community. I wanted to create a user group that was not just for pro-devs and IT admins, but a group where all you had to believe in was paying it forward. That was all I asked for. I would spend every day on Twitter and Facebook posts, replying to each and every Japanese tweet. Today, the group has grown to over 1700 users, with great MVPs based not just in Tokyo, but widely spread across all of Japan.

Japan Power Platform User Group – Summer 2019

Senior Program Manager in Power Platform engineering team

I was a technical salesperson at the time, but my passion for the product went beyond what was written in the job description and sales targets. I wanted to empower everyone to be able to create apps.

I was naturally in tears when I first saw the story of Samit Saini from London Heathrow at Microsoft Ignite 2018.

Samit’s story was just one of many success stories to follow, and I saw the great stories and experiences published by the Power Apps Customer Advisory Team (a.k.a. Power CAT), a small team within product engineering that focuses on Power Apps, Power Automate and Power Virtual Agents. Working as a Global Black Belt was definitely an awesome experience, but I wanted to go beyond just selling the product and see customers use it in action, to make sure that all customers are as successful as Samit was, and to scale that success to others. So I reached out to the manager, Saurabh, to apply for a position in the UK. It was only a five-person team. Unfortunately, having no degree also stopped me from relocating to the UK due to visa restrictions, but Saurabh was kind enough to open a position for me in Japan (this really doesn’t happen often!!) and even supported me in exploring options to work abroad, as that was one of my dreams.

Since then, I have helped customers in Japan, Singapore, Australia, Prague, the Netherlands, Qatar, and the United States, and published content through blog posts and Microsoft Docs, which lets me work on something that I’m truly passionate about 24/7. And now, after nearly two years with this awesome Power CAT team, I will be relocating to Redmond in May 2021 to continue being a Power CAT. I hope to further empower people through low-code with bigger impacts than ever before!

Power CAT – Sept 2019

Lastly…

I just would like to send kudos to the awesome MVPs who’ve helped my Power Apps journey – Taichi, Hiro, Ryota, Makoto, Teruchika, Noriko, Jun, Kazuya, Yoshio, Takeshi, Yugo, Ai, Masayuki, Hirofumi and Ryo, my two awesome managers, Marc and Saurabh for supporting my dreams, my wife who has always been fully supportive of my passion, and lastly, to Microsoft for providing me with this opportunity to become who I am today.

by Contributed | Apr 19, 2021 | Technology

Unreal Engine allows developers to create industry-leading visuals for a wide array of real-time experiences. Compared to other platforms, mixed reality provides some new visual challenges and opportunities. MRTK-Unreal’s Graphics Tools empowers developers to make the most of these visual opportunities.

Graphics Tools is an Unreal Engine plugin with code, blueprints, and example assets created to help improve the visual fidelity of mixed reality applications while staying within performance budgets.

When talking about performance in mixed reality, questions normally arise around “what is visually possible on HoloLens 2?” The device is a self-contained computer that sits on your head and renders to a stereo display. The mobile graphics processing unit (GPU) on the HoloLens 2 supports a wide gamut of features, but it is important to play to the strengths and avoid the weaknesses of the GPU.

On HoloLens 2 the target framerate is 60 frames per second (or roughly 16.67 milliseconds for your application to present a new frame). Applications that do not hit this frame rate can deliver a deteriorated user experience, such as worsened hologram stabilization, hand tracking, and world tracking.
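The frame budget follows directly from the target framerate. As a minimal sketch of that arithmetic (written in Python for illustration only; the function names are hypothetical and not part of Graphics Tools or Unreal Engine):

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def misses_budget(frame_time_ms: float, target_fps: float = 60.0) -> bool:
    """True when a measured frame time exceeds the per-frame budget."""
    return frame_time_ms > frame_budget_ms(target_fps)


if __name__ == "__main__":
    print(f"Budget at 60 fps: {frame_budget_ms(60.0):.2f} ms")
    # Hypothetical measured frame times, in milliseconds.
    for measured in (14.0, 20.0):
        print(measured, "ms misses budget:", misses_budget(measured))
```

Any frame whose combined CPU and GPU work takes longer than this budget will miss the 60 frames per second target.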

One common bottleneck in “what is possible” is how to achieve efficient real-time lighting techniques in mixed reality. Let’s outline how Graphics Tools solves this problem below.

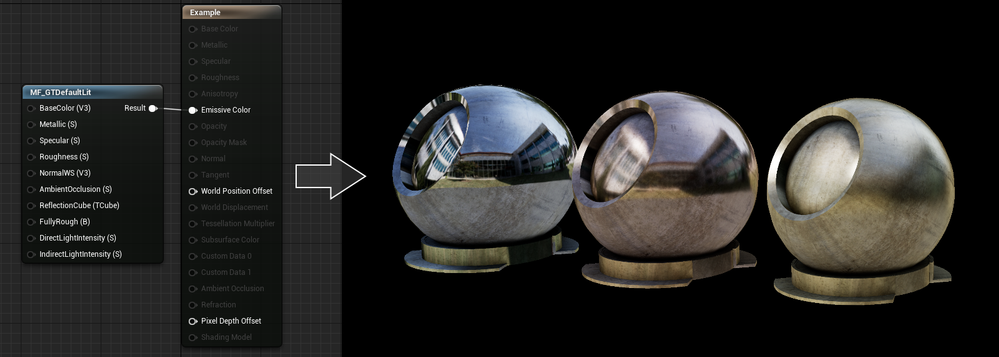

Lighting, simplified

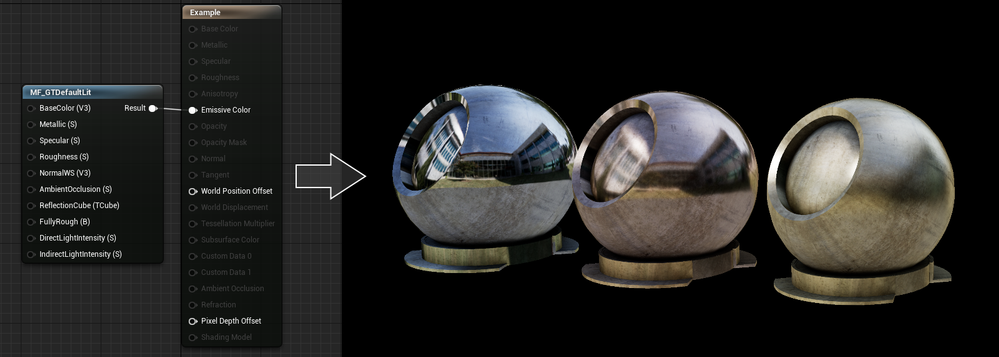

By default, Unreal uses the mobile lighting rendering path for HoloLens 2. This lighting path is well suited for mobile phones and handhelds, but is often too costly for HoloLens 2. To ensure developers have access to a lighting path that is performant, Graphics Tools includes a simplified physically based lighting system accessible via the MF_GTDefaultLit material function. This lighting model restricts the number of dynamic lights and ensures that some graphics features are disabled.

If you are familiar with Unreal’s default lighting material setup, the inputs to the MF_GTDefaultLit function should look very similar. For example, changing the values of the Metallic and Roughness inputs can provide convincing-looking metal surfaces as seen below.

If you are interested in learning more about the lighting model it is best to take a peek at the HLSL shader code that lives with the Graphics Tools plugin and read the documentation.

What is slow?

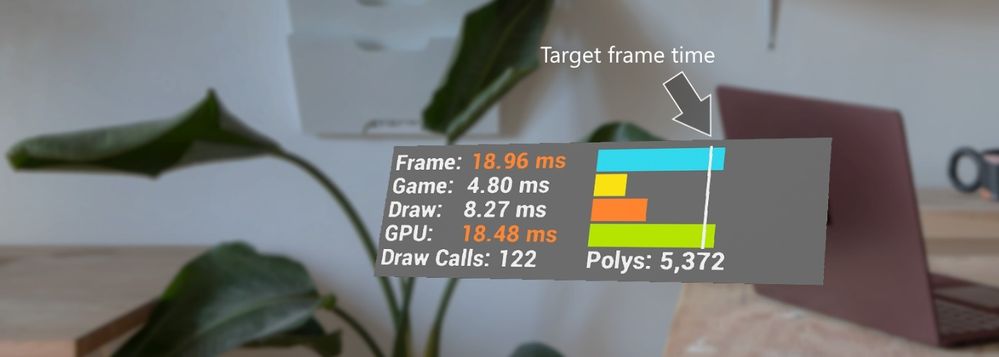

More often than not, you are going to get to a point in your application development where your app isn’t hitting your target framerate and you need to figure out why. This is where profiling comes into the picture. Unreal Engine has tons of great resources for profiling (one of our favorites is Unreal Insights which works on HoloLens 2 as of Unreal Engine 4.26).

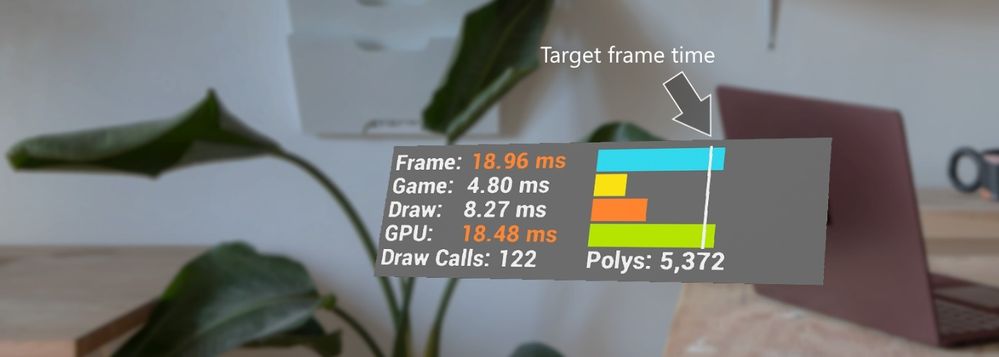

Most of the aforementioned tools require connecting your HoloLens 2 to a development PC, which is great for fine-grained profiling, but often you just need a high-level overview of performance within the headset. Graphics Tools provides the GTVisualProfiler actor, which gives real-time information about the current frame times (in milliseconds), draw call count, and visible polygon count in a stereo-friendly view. A snapshot of the GTVisualProfiler is demonstrated below.

In the above image a developer can, at a glance, see their application is limited by GPU time. It is highly recommended to always show a framerate visual while running & debugging an application to continuously track performance.

Performance can be an ambiguous and constantly changing challenge for mixed reality developers, and the spectrum of knowledge to rationalize performance is vast. There are some general recommendations for understanding how to approach performance for an application in the Graphics Tools profiling documentation.

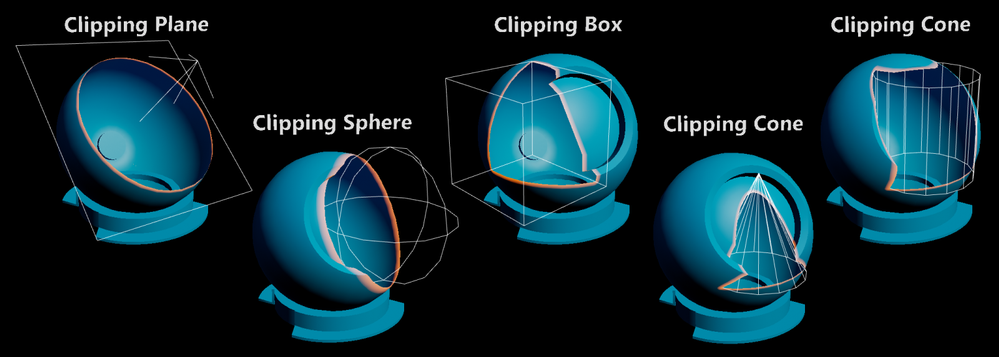

Peering inside holograms

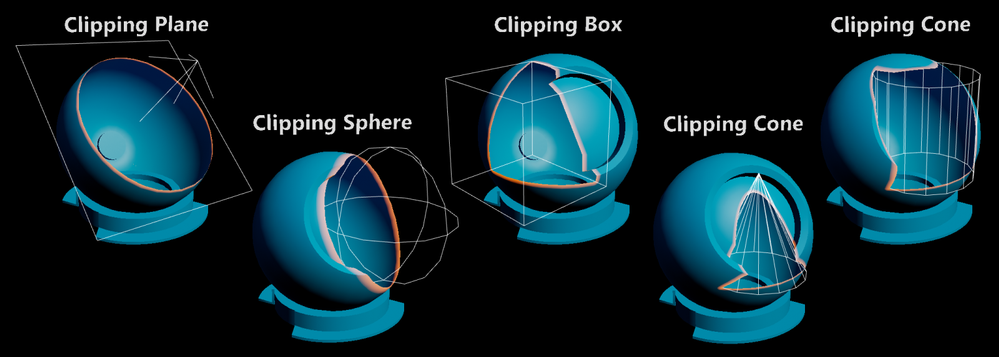

Many developers ask for tools to “peer inside” a hologram. When reviewing complex assemblies, it’s helpful to cut away portions of a model to see parts which are normally occluded. To address this scenario, Graphics Tools has a feature called clipping primitives.

A clipping primitive represents an analytic shape that passes its state and transformation data into a material. Material functions can then take this primitive data and perform calculations, such as returning the signed distance from the shape’s surface. Included with Graphics Tools are the following clipping primitive shapes.

Note, the clipping cone can be adjusted to also represent a capped cylinder. Clipping primitives can be configured to clip pixels within their shape or outside of their shape. Some other use cases for clipping primitives are as a 3D stencil test, or as a mechanism to get the distance from an analytical surface. Being able to calculate the distance from a surface within a shader allows one to do effects like the orange border glow in the above image.
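To make the signed-distance idea concrete, here is a small sketch for a sphere. It is written in Python purely for illustration and is not the Graphics Tools HLSL or material function code; the function names and the linear glow falloff are assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sphere_signed_distance(point: Vec3, center: Vec3, radius: float) -> float:
    """Negative inside the sphere, zero on the surface, positive outside."""
    return math.dist(point, center) - radius


def is_clipped(distance: float, clip_inside: bool) -> bool:
    """Decide whether a pixel at this signed distance should be discarded."""
    return distance < 0.0 if clip_inside else distance > 0.0


def border_glow(distance: float, width: float) -> float:
    """Glow intensity in [0, 1]: strongest at the surface, zero at `width` away."""
    return max(0.0, 1.0 - abs(distance) / width)


if __name__ == "__main__":
    d = sphere_signed_distance((0.0, 0.0, 0.5), (0.0, 0.0, 0.0), 1.0)
    print("signed distance:", d)
    print("clipped when clipping inside:", is_clipped(d, clip_inside=True))
    print("glow at surface:", border_glow(0.0, width=0.1))
```

The same distance value drives both decisions: its sign selects which side of the shape is discarded, and its magnitude near zero can be mapped to a border effect.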

To learn more about clipping primitives please see the associated documentation.

Making things “look good”

The world is our oyster when it comes to creating visual effects for HoloLens 2. Unreal Engine has a powerful material editor that allows people without shader experience to create and explore. To bootstrap developers, Graphics Tools contains a few out-of-the-box effects.

Effects include:

- Proximity based lighting (docs)

- Procedural mesh texturing & lighting (docs)

- Simulated iridescence, rim lighting, + more (docs)

To see all of these effects, plus everything described above in action, Graphics Tools includes an example plugin which can be cloned directly from GitHub or downloaded from the releases page. If you have a HoloLens 2, you can also download and sideload a pre-built version of the example app from the releases page.

Questions?

We are always eager to hear more from the community for ways to improve the toolkit. Please feel free to share your experiences and suggestions below, or on our newly created GitHub discussions page.

by Contributed | Apr 19, 2021 | Technology

Since we announced in 2019 that we would be retiring Basic Authentication for legacy protocols we have been encouraging our customers to switch to Modern Authentication. Modern Authentication, based on OAuth2, has a lot of advantages and benefits as we have covered before, and we’ve yet to meet a customer who doesn’t think it is a good thing. But the ‘getting there’ part might be the hard part, and that’s what this blog post is about.

This post is specifically about enabling Modern Authentication for Outlook for Windows. This is the client most widely used by many of our customers, and the client that huge numbers of people spend their day in. Any change that might impact those users is never to be taken lightly.

As an admin, you know you need to get those users switched from Basic to Modern Auth, and you know all it takes is one PowerShell command. You took a look at our docs, found the article called Enable or disable Modern Authentication for Outlook in Exchange Online | Microsoft Docs and saw that all you need to do is read the article (which it says will take just 2 minutes) and then run:

Set-OrganizationConfig -OAuth2ClientProfileEnabled $true

That sounds easy enough. So why didn’t you do it already?

Is it because it all sounds too easy? Or because there is a fear of the unknown? Or spiders? (We’re all scared of spiders, it’s ok.)

We asked some experts at Microsoft who have been through this with some of our biggest customers for their advice. And here it comes!

Expert advice and things to know

“Once Exchange Online Modern Authentication is enabled for Outlook for Windows, wait a few minutes.”

That was the first response we got. It was certainly encouraging, but we realized it wasn’t exactly a lot of information, so we dug in some more, and here’s what we found.

One thing you need to remember is that enabling Modern Authentication for Exchange Online using the Set-OrganizationConfig parameter only impacts Outlook for Windows. Outlook on the Web, Exchange ActiveSync, Outlook Mobile, Outlook for Mac, and so on will continue to authenticate as they do today and will not be impacted by this change.

Once Modern Authentication is turned on in Exchange Online, a version of Outlook for Windows that supports Modern Authentication will start using it after Outlook is restarted. Users will get a browser-based pop-up asking for their UPN and password or, if SSO is set up and they are already logged in to other services, the switch should be seamless.

If the login domain is set up as Federated, the user will be redirected to log in to the identity provider (ADFS, Ping, Okta, etc.) that was set up. If the domain is managed by Azure or set up for Pass Through Authentication, the user won’t be redirected but will authenticate with Azure directly or with Azure on behalf of your Active Directory Domain Service respectively.

Take a look at your Multi-Factor Authentication (MFA)/Conditional Access (CA) settings. If MFA has been enabled for the user and/or Conditional Access requiring MFA has been set up for the user account for Exchange Online (or other workloads that have a dependency on Exchange Online), then the user/computer will be evaluated against the Conditional Access Policy.

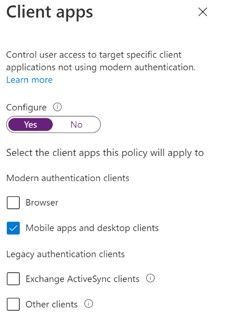

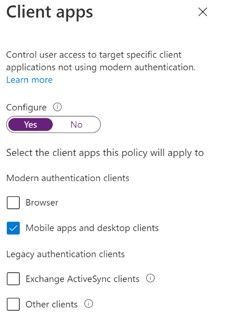

- Here is an example of a CA policy with Condition of Client App “Mobile apps and desktop clients”. This will impact Outlook for Windows with Modern Authentication whereas “Other Clients” would impact Outlook for Windows using Basic Authentication, for example.

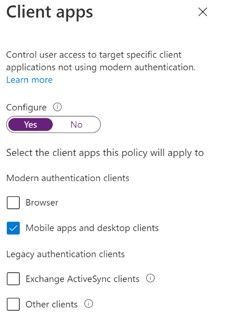

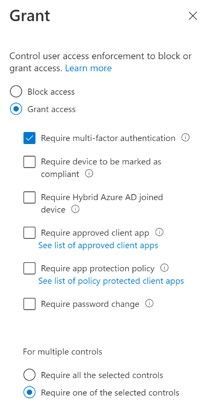

Next is Access Control Grant in CA requiring MFA. If Outlook for Windows was using Basic Authentication, this would not apply since MFA depends on Modern Authentication. But once you enable Modern Authentication, users in the scope of this CA policy would be required to use MFA to access Exchange Online.

The Modern Authentication setting for Exchange Online is tenant-wide. It’s not possible to enable it per-user, group or any such structure. For this reason, we recommend turning this on during a maintenance period, testing, and if necessary, rolling back by changing the setting back to False. A restart of Outlook is required to switch from Basic to Modern Auth and vice versa if roll back is required.

It may take 30 minutes or longer for the change to be replicated to all servers in Exchange Online, so don’t panic if your clients don’t switch immediately. It’s a very big infrastructure.

Be aware of other apps that authenticate with Exchange Online using Modern Authentication like Skype for Business. Our recommendation is to enable Modern Authentication for both Exchange and Skype for Business.

Here is something rare, but we have seen it… After you enable Modern Authentication in an Office 365 tenant, Outlook for Windows cannot connect to a mailbox if the user’s primary Windows account is a Microsoft 365 account that does not match the account they use to log in to the mailbox. The mailbox shows “Disconnected” in the status bar.

This is due to a known issue in Office which creates a miscommunication between Office and Windows that causes Windows to provide the default credential instead of the appropriate account credential that is required to access the mailbox.

This issue most commonly occurs if more than one mailbox is added to the Outlook profile, and at least one of these mailboxes uses a login account that is not the same as the user’s Windows login.

The most effective solution to this issue is to re-create your Outlook profile. The fix was shipped in the following builds:

- For Monthly Channel Office 365 subscribers, the fix to prevent this issue from occurring is available in builds 16.0.11901.20216 and later.

- For Semi-Annual Channel customers, the fix is included in builds 16.0.11328.20392 (Version 1907) and later.
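If you need to check a list of installed builds against these minimums, the comparison should be numeric per component rather than a plain string comparison. A minimal sketch in Python follows; the channel keys and function names are hypothetical, and it assumes that “and later” means a simple component-by-component ordering of the build number.

```python
from typing import Tuple

# Minimum builds that include the fix, as listed above.
FIXED_BUILDS = {
    "monthly": "16.0.11901.20216",
    "semi-annual": "16.0.11328.20392",
}


def parse_build(build: str) -> Tuple[int, ...]:
    """Turn '16.0.11901.20216' into (16, 0, 11901, 20216) for numeric comparison."""
    return tuple(int(part) for part in build.split("."))


def has_fix(installed_build: str, channel: str) -> bool:
    """True when the installed build is at or above the channel's fixed build."""
    return parse_build(installed_build) >= parse_build(FIXED_BUILDS[channel])


if __name__ == "__main__":
    # Hypothetical installed builds, for illustration only.
    print(has_fix("16.0.11901.20216", "monthly"))
    print(has_fix("16.0.10730.20348", "semi-annual"))
```

Comparing integer tuples avoids the trap where a string comparison ranks a four-digit build component above a five-digit one.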

You can find more info on this issue here and here.

That’s a list of issues we got from the experts. Many customers have made the switch with little or no impact.

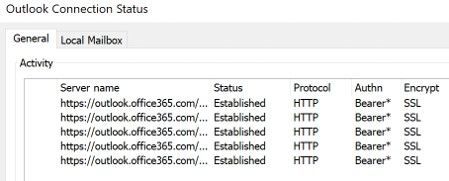

How do you know Outlook for Windows is now using Modern Auth?

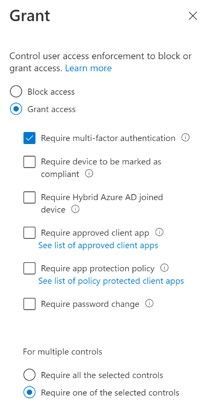

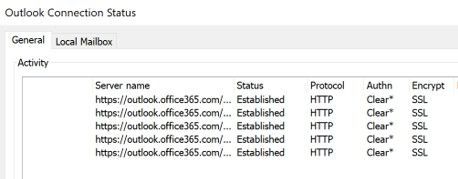

When using Basic Auth, the “Authn” column in the Outlook Connection Status shows “Clear*”.

Once you switch to Modern Auth, the “Authn” column in the Outlook Connection Status shows “Bearer*”.

And that’s it!

The most important things to check before making the change are your CA/MFA settings, to make sure nothing will block access, and that your users know there will be a change that might require them to re-authenticate.

Now you know what to expect, there is no need to be afraid of enabling Modern Auth. (Spiders, on the other hand… are still terrifying, but that’s not something we can do much about.)

Huge thanks to Smart Kamolratanapiboon, Rob Whaley and Denis Vilaca Signorelli for the effort it took to put this information into a somewhat readable form.

If you are aware of some other issues that might be preventing you from turning this setting on, let us know in comments below!

The Exchange Team

by Contributed | Apr 19, 2021 | Technology

The Microsoft 365 compliance center provides easy access to solutions to manage your organization’s compliance needs and delivers a modern user experience that conforms to the latest accessibility standards (WCAG 2.1). From the compliance center, you can access popular solutions such as Compliance Manager, Information Protection, Information Governance, Records Management, Insider Risk Management, Advanced eDiscovery, and Advanced Audit.

Over the coming months, we will begin automatically redirecting users from the Office 365 Security & Compliance Center (SCC) to the Microsoft 365 compliance center for the following solutions: Audit, Data Loss Prevention, Information Governance, Records Management, and Supervision (now Communication Compliance). This is a continuation of our migration to the Microsoft 365 compliance center, which began in September 2020 with the redirection of the Advanced eDiscovery solution.

We are continuing to innovate and add value to solutions in the Microsoft 365 compliance center, with the goal of enabling users to view all compliance solutions within one portal. While redirection is enabled by default, should you need additional transition time, Global admins and Compliance admins can enable or disable redirection in the Microsoft 365 compliance center by navigating to Settings > Compliance Center and using the Automatic redirection toggle switch under Portal redirection.

We will eventually retire the Security & Compliance Center experience, so we encourage you to explore and transition to the new Microsoft 365 compliance center experience. Learn more about the Microsoft 365 compliance center.

by Contributed | Apr 19, 2021 | Technology

Azure SQL Database offers an easy way to scale database instances in a few clicks when more resources are needed. This is one of the strengths of PaaS: you pay only for what you use, and if you need more or less, it’s easy to make the change. A current limitation, however, is that the scaling operation is a manual one. The service doesn’t support auto-scaling as some of us would expect.

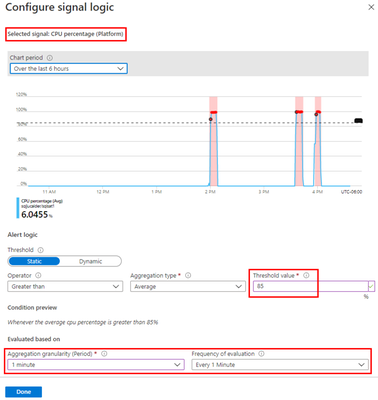

Having said that, using the power of Azure we can set up a workflow that auto-scales an Azure SQL Database instance to the next immediate tier when a specific condition is met. For example: what if you could auto-scale the database as soon as it goes over 85% CPU usage for a sustained period of 5 minutes? Using this tutorial we will achieve just that.
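The trigger condition can be pictured as a check over recent metric samples. The sketch below, in Python for illustration, assumes one CPU sample per minute and treats “sustained” as every sample in the window exceeding the threshold. In this tutorial the real evaluation is done by the alert rule, not by code like this, and the function name is hypothetical.

```python
from typing import Sequence


def sustained_above(samples: Sequence[float],
                    threshold: float = 85.0,
                    window: int = 5) -> bool:
    """True when the most recent `window` samples all exceed `threshold`."""
    if window <= 0:
        raise ValueError("window must be positive")
    if len(samples) < window:
        return False  # not enough history to call it sustained
    return all(value > threshold for value in samples[-window:])


if __name__ == "__main__":
    # Hypothetical per-minute CPU percentages.
    print(sustained_above([50.0, 90.0, 91.0, 92.0, 93.0, 94.0]))
    print(sustained_above([90.0, 91.0, 92.0, 80.0, 95.0]))
```

A single dip below the threshold inside the window resets the condition, which keeps short spikes from triggering a scale operation.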

Supported SKUs: because there is no automatic way to get the list of available tiers at script runtime, these must be hard-coded into it. For this reason, the script below only supports DTU and vCore (provisioned compute) databases. Hyperscale, Serverless, Fsv2, DC and M series are not supported. Having said that, the logic is the same no matter the tier, so feel free to modify the script to suit your particular SKU needs.
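The “next immediate tier” logic amounts to a lookup in an ordered, hard-coded list. Here is a minimal sketch in Python (the runbook itself is PowerShell). The tier list mirrors the DTU list hard-coded in the runbook, while the function name and the choice to return None at the top tier are assumptions made for illustration.

```python
from typing import Optional, Sequence

# DTU service objectives in ascending order, mirroring the runbook's hard-coded list.
DTU_TIERS = ("Basic", "S0", "S1", "S2", "S3", "S4", "S6", "S7", "S9", "S12",
             "P1", "P2", "P4", "P6", "P11", "P15")


def next_tier(current: str, tiers: Sequence[str] = DTU_TIERS) -> Optional[str]:
    """Return the tier directly above `current`, or None when already at the top.

    Raises ValueError when `current` is not in the supported list.
    """
    index = list(tiers).index(current)
    if index == len(tiers) - 1:
        return None
    return tiers[index + 1]


if __name__ == "__main__":
    print(next_tier("S6"))
    print(next_tier("P15"))
```

Supporting another SKU family then means supplying a different ordered list rather than changing the lookup.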

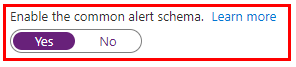

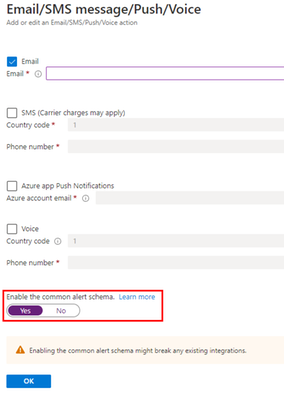

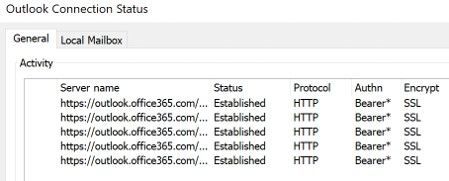

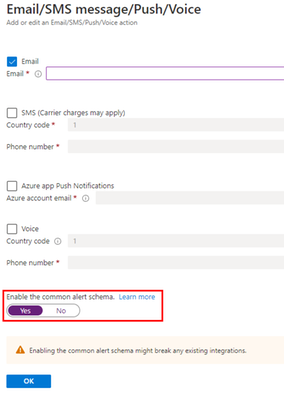

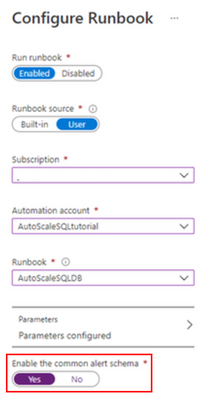

Important: every time any part of the setup asks if the Common Alert Schema (CAS) should be enabled, select Yes. The script used in this tutorial assumes the CAS will be used for the alerts triggering it.

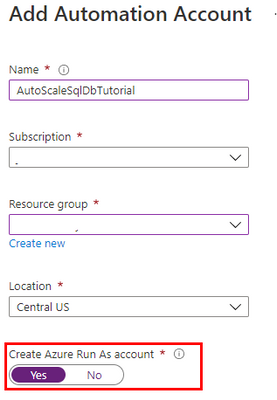

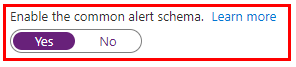

Step #1: deploy Azure Automation account and update its modules

The scale operation will be executed by a PowerShell runbook inside of an Azure Automation account. Search Automation in the Azure Portal search bar and create a new Automation account. Make sure to create a Run As Account while doing this:

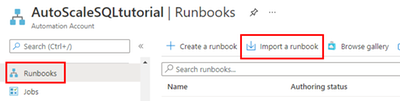

Once the Automation account has been created, we need to update the PowerShell modules in it. The runbook we will use relies on PowerShell cmdlets, but a newly provisioned Automation account ships with old versions of these by default. To update the modules:

- Save the PowerShell script here to your computer with the name Update-AutomationAzureModulesForAccountManual.ps1. The word Manual is added to the file name so as not to overwrite the default internal runbook the account uses to update other modules once it gets imported.

- Import a new runbook and select the file you saved in the previous step:

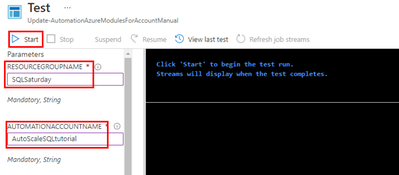

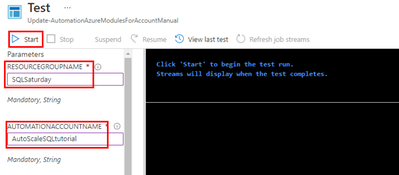

- When the runbook has been imported, click Test Pane, fill in the details for the Resource Group and the Azure Automation account name we are using and click Start:

- When it finishes, the cmdlets will be fully updated. This benefits not only the SQL cmdlets used below but any other cmdlets any other runbook may use on this same Automation account.

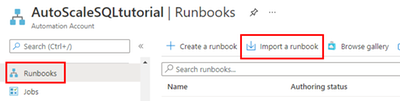

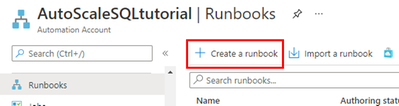

Step #2: create scaling runbook

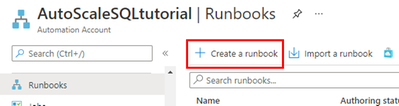

With our Automation account deployed and updated, we are now ready to create the script. Create a new runbook and copy the code below:

The script below uses Webhook data passed from the alert. This data contains useful information about the resource the alert gets triggered from, which means the script can auto-scale any database and no parameters are needed; it only needs to be called from an alert using the Common Alert Schema on an Azure SQL database.
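To show which parts of the payload matter, here is a separate Python sketch of the same parsing idea. It is an illustration only and not part of the runbook; the sample payload is trimmed to the fields the script reads, and its values (subscription ID, resource names) are made up.

```python
import json
from typing import Dict


def parse_alert(request_body: str) -> Dict[str, str]:
    """Extract the database identity and alert status from a Common Alert Schema body."""
    body = json.loads(request_body)
    if body.get("schemaId") != "azureMonitorCommonAlertSchema":
        raise ValueError(f"Unsupported alert schema: {body.get('schemaId')}")
    essentials = body["data"]["essentials"]
    # Only the first target is used, matching the script's behaviour.
    parts = essentials["alertTargetIds"][0].split("/")
    return {
        "subscription_id": parts[2],
        "resource_group": parts[4],
        "resource_type": f"{parts[6]}/{parts[7]}",
        "server": parts[8],
        "database": parts[-1],
        "status": essentials["monitorCondition"],
    }


# Made-up sample payload, reduced to the fields used above.
sample = json.dumps({
    "schemaId": "azureMonitorCommonAlertSchema",
    "data": {"essentials": {
        "alertTargetIds": [
            "/subscriptions/00000000-0000-0000-0000-000000000000"
            "/resourcegroups/demo-rg/providers/microsoft.sql"
            "/servers/demo-server/databases/demo-db"
        ],
        "monitorCondition": "Fired",
    }},
})

if __name__ == "__main__":
    print(parse_alert(sample))
```

Because everything needed is derived from the alert's target resource ID, the same runbook can serve any database that has such an alert attached. The PowerShell runbook itself follows.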

param

(

[Parameter (Mandatory=$false)]

[object] $WebhookData

)

# If there is webhook data coming from an Azure Alert, go into the workflow.

if ($WebhookData){

# Get the data object from WebhookData

$WebhookBody = (ConvertFrom-Json -InputObject $WebhookData.RequestBody)

# Get the info needed to identify the SQL database (depends on the payload schema)

$schemaId = $WebhookBody.schemaId

Write-Verbose "schemaId: $schemaId" -Verbose

if ($schemaId -eq "azureMonitorCommonAlertSchema") {

# This is the common Metric Alert schema (released March 2019)

$Essentials = [object] ($WebhookBody.data).essentials

Write-Output $Essentials

# Get the first target only as this script doesn't handle multiple

$alertTargetIdArray = (($Essentials.alertTargetIds)[0]).Split("/")

$SubId = ($alertTargetIdArray)[2]

$ResourceGroupName = ($alertTargetIdArray)[4]

$ResourceType = ($alertTargetIdArray)[6] + "/" + ($alertTargetIdArray)[7]

$ServerName = ($alertTargetIdArray)[8]

$DatabaseName = ($alertTargetIdArray)[-1]

$status = $Essentials.monitorCondition

}

else{

# Schema not supported

Write-Error "The alert data schema - $schemaId - is not supported."

}

# If the alert that triggered the runbook is Activated or Fired, it means we want to autoscale the database.

# When the alert gets resolved, the runbook will be triggered again but because the status will be Resolved, no autoscaling will happen.

if (($status -eq "Activated") -or ($status -eq "Fired"))

{

Write-Output "resourceType: $ResourceType"

Write-Output "resourceName: $DatabaseName"

Write-Output "serverName: $ServerName"

Write-Output "resourceGroupName: $ResourceGroupName"

Write-Output "subscriptionId: $SubId"

# Because Azure SQL tiers cannot be obtained programmatically, we need to hardcode them as below.

# The 3 arrays below make this runbook support the DTU tier and the provisioned compute tiers, on Generation 4 and 5 and

# for both General Purpose and Business Critical tiers.

$DtuTiers = @('Basic','S0','S1','S2','S3','S4','S6','S7','S9','S12','P1','P2','P4','P6','P11','P15')

$Gen4Cores = @('1','2','3','4','5','6','7','8','9','10','16','24')

$Gen5Cores = @('2','4','6','8','10','12','14','16','18','20','24','32','40','80')

# Here, we sign in to Azure with the Automation Run As account we provisioned when creating the Automation account.

$connectionName = "AzureRunAsConnection"

try

{

# Get the connection "AzureRunAsConnection"

$servicePrincipalConnection=Get-AutomationConnection -Name $connectionName

"Logging in to Azure..."

Add-AzureRmAccount `

-ServicePrincipal `

-TenantId $servicePrincipalConnection.TenantId `

-ApplicationId $servicePrincipalConnection.ApplicationId `

-CertificateThumbprint $servicePrincipalConnection.CertificateThumbprint

}

catch {

if (!$servicePrincipalConnection)

{

$ErrorMessage = "Connection $connectionName not found."

throw $ErrorMessage

} else{

Write-Error -Message $_.Exception

throw $_.Exception

}

}

# Gets the current database details, from where we'll capture the Edition and the current service objective.

# With this information, the below if/else will determine the next tier that the database should be scaled to.

# Example: if DTU database is S6, this script will scale it to S7. This ensures the script continues to scale up the DB in case CPU keeps pegging at 100%.

$currentDatabaseDetails = Get-AzureRmSqlDatabase -ResourceGroupName $ResourceGroupName -DatabaseName $DatabaseName -ServerName $ServerName

if (($currentDatabaseDetails.Edition -eq "Basic") -Or ($currentDatabaseDetails.Edition -eq "Standard") -Or ($currentDatabaseDetails.Edition -eq "Premium"))

{

Write-Output "Database is DTU model."

if ($currentDatabaseDetails.CurrentServiceObjectiveName -eq "P15") {

Write-Output "DTU database is already at highest tier (P15). Suggestion is to move to Business Critical vCore model with 32+ vCores."

} else {

for ($i=0; $i -lt $DtuTiers.length; $i++) {

if ($DtuTiers[$i].equals($currentDatabaseDetails.CurrentServiceObjectiveName)) {

Set-AzureRmSqlDatabase -ResourceGroupName $ResourceGroupName -DatabaseName $DatabaseName -ServerName $ServerName -RequestedServiceObjectiveName $DtuTiers[$i+1]

break

}

}

}

} else {

Write-Output "Database is vCore model."

$currentVcores = ""

$currentTier = $currentDatabaseDetails.CurrentServiceObjectiveName.SubString(0,8)

$currentGeneration = $currentDatabaseDetails.CurrentServiceObjectiveName.SubString(6,1)

$coresArrayToBeUsed = ""

try {

$currentVcores = $currentDatabaseDetails.CurrentServiceObjectiveName.SubString(8,2)

} catch {

$currentVcores = $currentDatabaseDetails.CurrentServiceObjectiveName.SubString(8,1)

}

Write-Output $currentGeneration

if ($currentGeneration -eq "5") {

$coresArrayToBeUsed = $Gen5Cores

} else {

$coresArrayToBeUsed = $Gen4Cores

}

if ($currentVcores -eq $coresArrayToBeUsed[-1]) {

Write-Output "vCore database is already at highest number of cores. Suggestion is to optimize workload."

} else {

for ($i=0; $i -lt $coresArrayToBeUsed.length; $i++) {

if ($coresArrayToBeUsed[$i] -eq $currentVcores) {

$newvCoreCount = $coresArrayToBeUsed[$i+1]

Set-AzureRmSqlDatabase -ResourceGroupName $ResourceGroupName -DatabaseName $DatabaseName -ServerName $ServerName -RequestedServiceObjectiveName "$currentTier$newvCoreCount"

break

}

}

}

}

}

}
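The tier-stepping logic in the runbook can be isolated into a small function and tried locally, with no Azure connection. The sketch below is not part of the original script: `Get-NextTier` is a hypothetical helper name, and returning `$null` at the top of the list (or for an unknown tier) is an assumed convention. It reuses the same tier arrays as the runbook.

```powershell
# Hypothetical helper (not in the original runbook): returns the entry that
# follows $Current in an ordered tier list, or $null when $Current is the
# last entry or does not appear in the list.
function Get-NextTier {
    param(
        [Parameter(Mandatory = $true)] [string[]] $Tiers,
        [Parameter(Mandatory = $true)] [string]   $Current
    )

    $index = [Array]::IndexOf($Tiers, $Current)

    # IndexOf returns -1 for an unknown tier; the last index has no successor.
    if ($index -lt 0 -or $index -ge ($Tiers.Length - 1)) {
        return $null
    }

    return $Tiers[$index + 1]
}

# Same tier lists as the runbook.
$DtuTiers  = @('Basic','S0','S1','S2','S3','S4','S6','S7','S9','S12','P1','P2','P4','P6','P11','P15')
$Gen5Cores = @('2','4','6','8','10','12','14','16','18','20','24','32','40','80')

$nextDtu   = Get-NextTier -Tiers $DtuTiers  -Current 'S6'
$topDtu    = Get-NextTier -Tiers $DtuTiers  -Current 'P15'
$nextCores = Get-NextTier -Tiers $Gen5Cores -Current '24'

Write-Output "Next DTU tier after S6: $nextDtu"
Write-Output "Next Gen5 vCore count after 24: $nextCores"
if ($null -eq $topDtu) {
    Write-Output "P15 is the highest DTU tier; nothing to scale to."
}
```

Checking the index against the last valid position before reading `$Tiers[$index + 1]` avoids indexing past the end of the array, which in PowerShell silently yields `$null` rather than raising an error.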

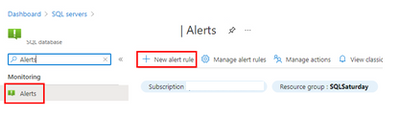

Step #3: create Azure Monitor Alert to trigger the Automation runbook

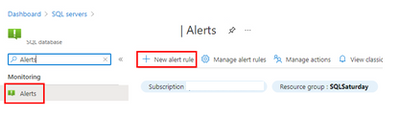

On your Azure SQL Database, create a new alert rule:

The next blade will require several different setups:

- Scope of the alert: this will be auto-populated if +New Alert Rule was clicked from within the database itself.

- Condition: when the alert should be triggered, defined by selecting a signal and setting its logic.

- Actions: when the alert gets triggered, what will happen?

Condition

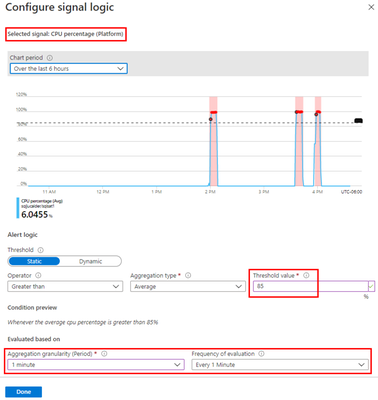

For this example, the alert will monitor the CPU consumption every 1 minute. When the average goes over 85%, the alert will be triggered:

Actions

After the signal logic is created, we need to tell the alert what to do when it gets fired. We will do this with an action group. When creating a new action group, two tabs will help us configure sending an email and triggering the runbook:

Notifications

Actions

After saving the action group, add the remaining details to the alert.
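For readers who prefer scripting over the portal, the same rule could be sketched with the Az.Monitor module. This is an illustration under assumptions, not the author's method: it assumes an authenticated Az session, an existing action group that triggers the runbook with the Common Alert Schema enabled, and placeholder names and IDs throughout. The 5-minute evaluation window and the severity are also assumptions; the article specifies only the 1-minute frequency and the 85% average CPU threshold.

```powershell
# Assumes the Az.Monitor module and a signed-in session (Connect-AzAccount).
# Every name and ID below is a placeholder to be replaced.
$databaseId    = '/subscriptions/<subscription-id>/resourceGroups/<resource-group>/providers/Microsoft.Sql/servers/<server>/databases/<database>'
$actionGroupId = '/subscriptions/<subscription-id>/resourceGroups/<resource-group>/providers/microsoft.insights/actionGroups/<action-group>'

# Condition: average CPU percentage greater than 85.
$criteria = New-AzMetricAlertRuleV2Criteria `
    -MetricName 'cpu_percent' `
    -TimeAggregation Average `
    -Operator GreaterThan `
    -Threshold 85

# Evaluate every minute over an assumed 5-minute window and call the action group.
Add-AzMetricAlertRuleV2 `
    -Name 'sql-db-high-cpu' `
    -ResourceGroupName '<resource-group>' `
    -TargetResourceId $databaseId `
    -Condition $criteria `
    -WindowSize (New-TimeSpan -Minutes 5) `
    -Frequency (New-TimeSpan -Minutes 1) `
    -Severity 2 `
    -ActionGroupId $actionGroupId
```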

That’s it! The alert is now enabled and will auto-scale the database when fired. The runbook will be executed twice per alert: once when the alert fires and once when it is resolved, but it performs a scale operation only when the alert fires.
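The fired-versus-resolved behavior can be checked locally before relying on a real alert. The sketch below builds two trimmed-down payloads in the shape of the Common Alert Schema and applies the same parsing and status check as the runbook. The function names `New-SampleAlertBody` and `Get-ScaleDecision` are hypothetical, and the subscription, resource group, server, and database values are placeholders; a real payload carries many more fields.

```powershell
# Hypothetical sample payload: only the fields the runbook reads are included.
function New-SampleAlertBody {
    param([string] $MonitorCondition)

    @{
        schemaId = 'azureMonitorCommonAlertSchema'
        data     = @{
            essentials = @{
                monitorCondition = $MonitorCondition
                alertTargetIDs   = @(
                    '/subscriptions/00000000-0000-0000-0000-000000000000/resourcegroups/demo-rg/providers/microsoft.sql/servers/demo-server/databases/demo-db'
                )
            }
        }
    } | ConvertTo-Json -Depth 5
}

# Mirrors the runbook: split the first target ID on "/" and scale only
# when the alert status is Activated or Fired.
function Get-ScaleDecision {
    param([string] $RequestBody)

    $body       = ConvertFrom-Json -InputObject $RequestBody
    $essentials = $body.data.essentials
    $idParts    = ($essentials.alertTargetIDs)[0].Split('/')

    [pscustomobject]@{
        ResourceGroupName = $idParts[4]
        ServerName        = $idParts[8]
        DatabaseName      = $idParts[-1]
        Status            = $essentials.monitorCondition
        ShouldScale       = ($essentials.monitorCondition -in @('Activated', 'Fired'))
    }
}

$fired    = Get-ScaleDecision -RequestBody (New-SampleAlertBody -MonitorCondition 'Fired')
$resolved = Get-ScaleDecision -RequestBody (New-SampleAlertBody -MonitorCondition 'Resolved')

Write-Output "Fired alert on $($fired.ServerName)/$($fired.DatabaseName): scale = $($fired.ShouldScale)"
Write-Output "Resolved alert on $($resolved.ServerName)/$($resolved.DatabaseName): scale = $($resolved.ShouldScale)"
```

Because the leading `/` in the resource ID produces an empty first element, the resource group lands at index 4 and the server name at index 8, matching the indices used in the runbook.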

by Contributed | Apr 19, 2021 | Dynamics 365, Microsoft 365, Technology

Over the last several months, we have continued to see the agility and resiliency shown by businesses across the globe in response to the COVID-19 pandemic. Throughout the holiday trading period, we saw businesses adapt to new customer needs across digital channels, along with enhancing and streamlining in-person buying experiences. As vaccines become available, many retail businesses are looking at the future shopping needs of their customers and what the return to in-store buying will mean for customer experiences. Along with this, business-to-business (B2B) buying has also been transitioning from in-person to more digital and self-service buying options for accounts and partners.

Building atop the great features released in Microsoft Dynamics 365 Commerce 2020 release wave 2, we are bringing additional capabilities to Dynamics 365 Commerce 2021 release wave 1, grounded in intelligence to help businesses meet the needs of their customers across consumer-facing and business-to-business segments. We are delivering innovation across the following key investment areas:

- New digital commerce capabilities to further enhance customer experience and business outcomes: We continue to build on our foundational e-commerce capabilities, including the general availability of B2B e-commerce capabilities on a single connected platform.

- Enhancements through ingrained intelligence to drive growth and efficiencies: Utilize enhancements with AI and machine learning to further improve insights into business operations and deliver differentiation.

- Expand on omnichannel capabilities to improve business performance: We continue to invest in core capabilities that support unified commerce, along with enhancements that work with broader Microsoft and Microsoft Dynamics 365 solutions.

Let’s take a closer look at some of the new capabilities and highlights we are bringing to our customers within the Dynamics 365 Commerce 2021 release wave 1.

Build rich, relevant, and intuitive digital commerce experiences for every customer

With the acceleration of digital commerce channels amidst COVID-19, we continue to invest in building the best e-commerce platform available on the market. With the addition of business-to-business e-commerce capabilities for Dynamics 365 Commerce, we are bringing intelligent and user-friendly features available in business-to-consumer (B2C) e-commerce to business partners.

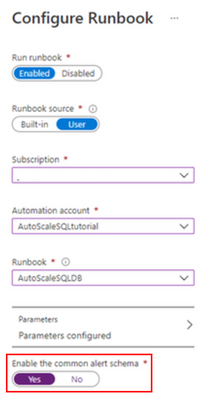

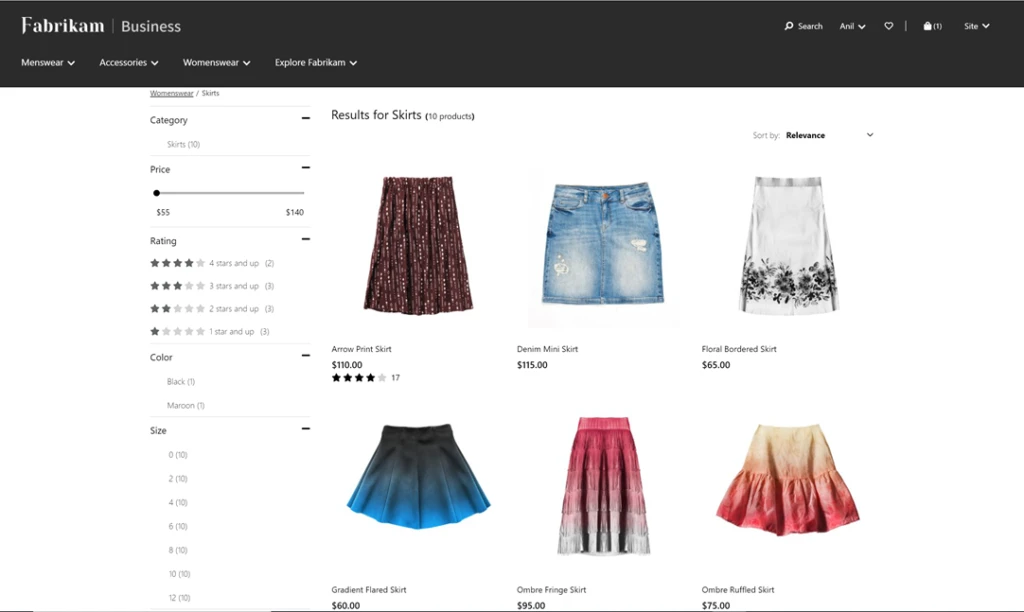

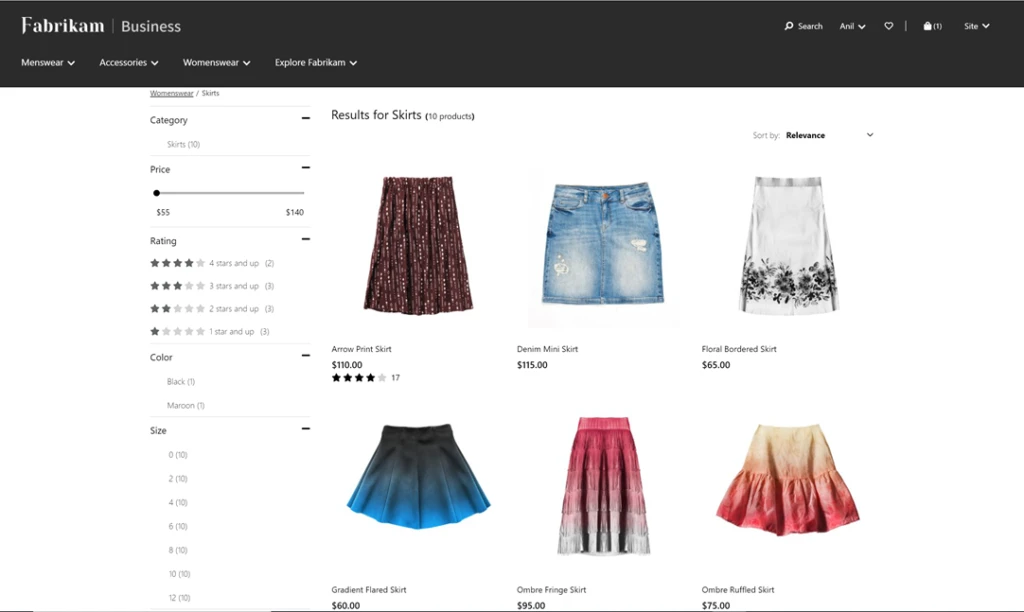

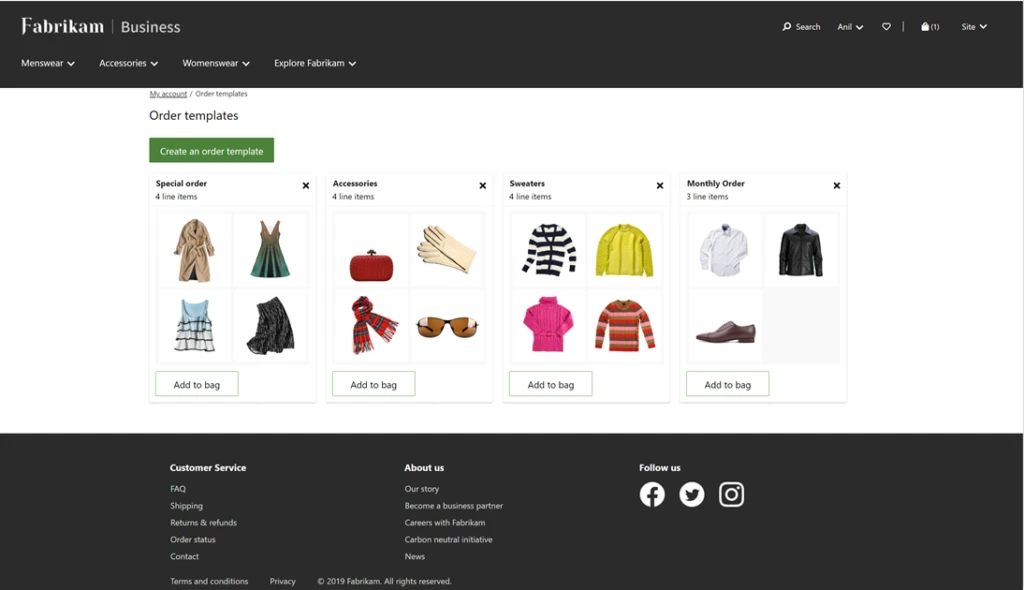

B2B e-commerce capabilities now available: B2B e-commerce capabilities for Dynamics 365 Commerce enable organizations in varied industry segments to digitize their B2B transactions with personalized, user-friendly, self-service experiences like those in B2C e-commerce, while still providing the unique capabilities a B2B user needs to be productive in their job function.

As e-business teams look for solutions in the market, not only are they benchmarking their future-state online buying experience against B2B peers, but also against B2C leaders. They need solutions with a best-in-class foundation of B2C features, such as robust marketing and merchandising and experience management tools. With this release, we are bringing a range of B2B e-commerce capabilities to market including partner onboarding, order templates, quick order entry, account statement and invoicing management, and more. In addition, we are delivering native integration to Dynamics 365 Sales and Dynamics 365 Customer Service for unified customer engagement across touchpoints. Unique B2B capabilities such as contract pricing, quotes pricing lists, e-procurement, product configuration and customization, guided selling, bulk order entry, and account, contract, and budget management can also be layered on top of our rich e-commerce capabilities to deliver a great buying experience for every partner.

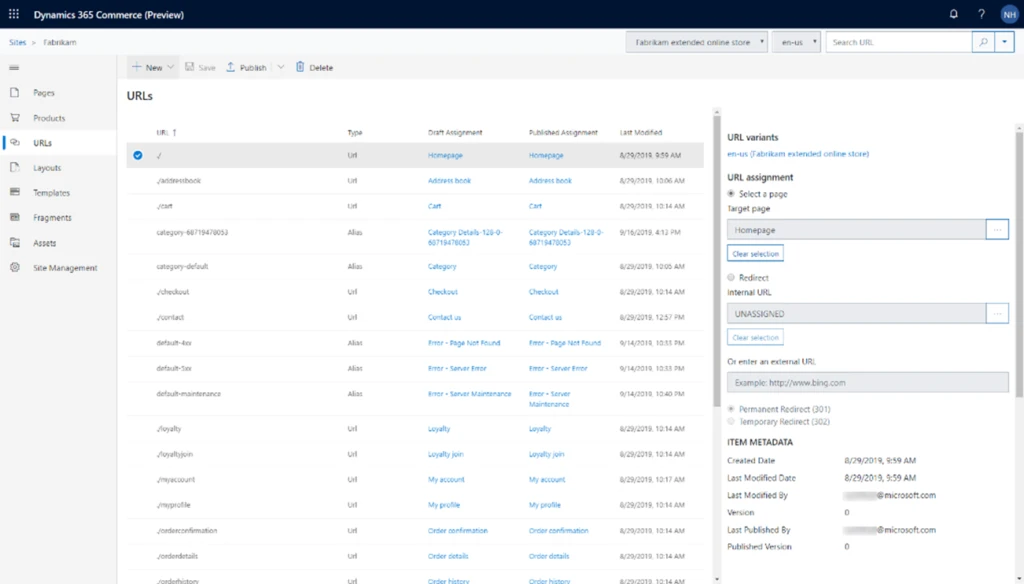

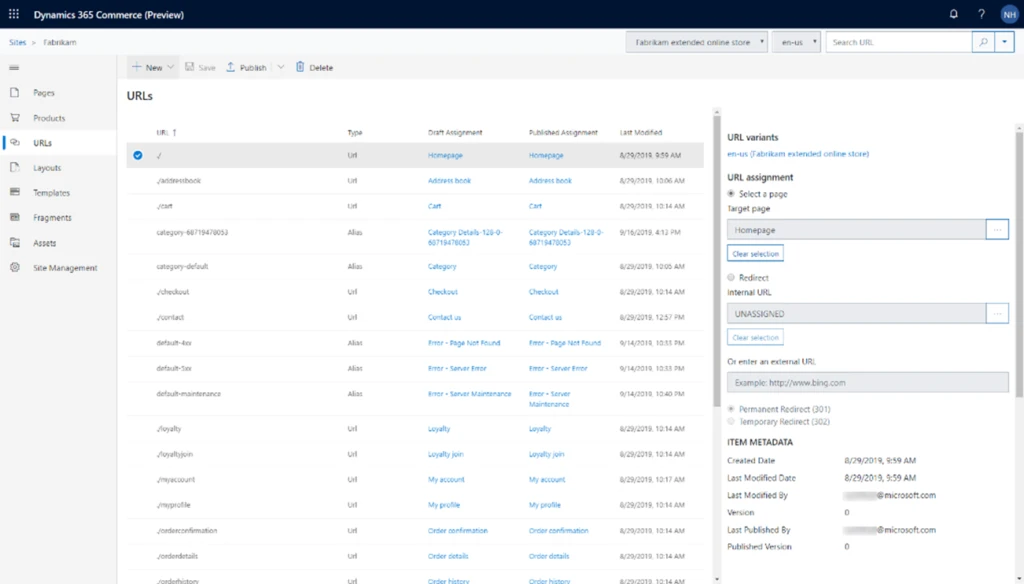

Enhanced search engine results: Improve SEO rankings for product landing and display pages to enable quicker page discovery through native support for schema.org/product metadata. Product pages can utilize existing Dynamics 365 Commerce headquarters product data to simplify and streamline merchandising workflows.

Enhancements through ingrained intelligence to drive growth and efficiencies

Unlock immersive AI-powered intelligent shopping features that enable more personal and relevant shopping experiences for every customer.

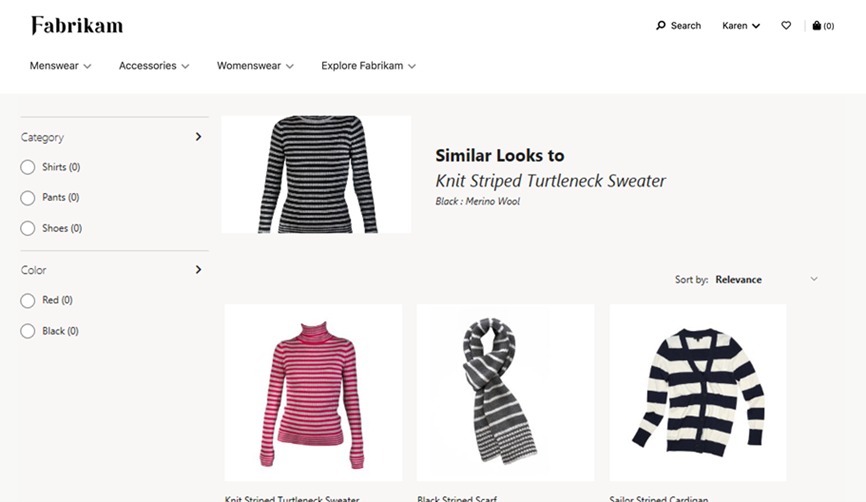

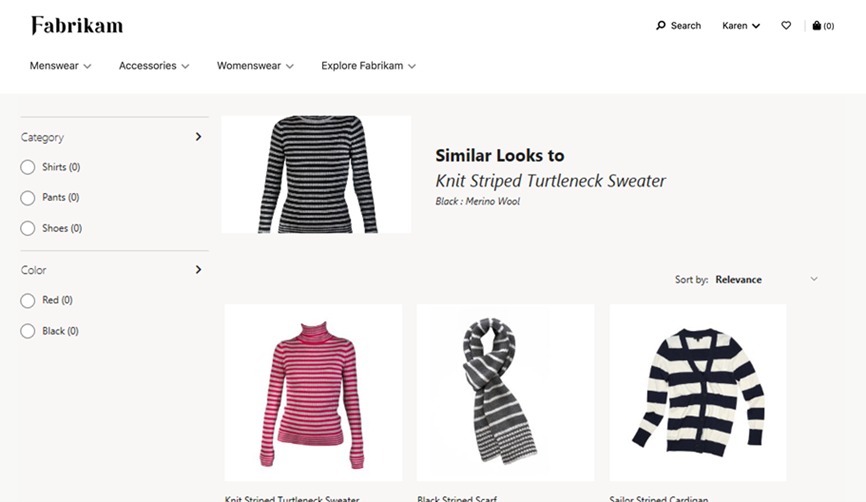

Shop similar looks: Bring fresh and appealing choices to the forefront of the shopping experience using the power of AI and machine learning. The effect can be transformative and can create additional selling power as shoppers find more of the things they want in an easy-to-use visual experience.

Shop by similar description: This recommendations service enables customers to easily and quickly find the products they need and want (relevant), find more than they had originally intended (cross-sell, upsell), all the while having an experience that serves them well (satisfaction). For retailers, this functionality helps drive conversion opportunities across all customers and products, resulting in more all-up sales revenue and customer satisfaction.

System monitoring and diagnostics across in-store and e-commerce: Access to system diagnostic logs allows better visibility for IT administrators and enables improved time to detection, time to mitigation, and time to resolution of live-site incidents. IT administrators are able to determine key contributing factors of incidents, which allows for targeted engagement with Microsoft support teams, or with implementation partners, ISVs, or other stakeholders.

Cloud-powered customer search (preview): Customer data is the lifeblood for businesses, and yet almost all businesses run into the problem of duplicate customer records because of inefficient customer search algorithms. If cashiers are not able to easily find a customer, they end up creating new customers. With this enhancement, businesses will be able to easily switch their current customer search experience from SQL-based search to cloud-powered search to gain performant, relevant, and scalable search capabilities.

Expand on omnichannel capabilities to improve business performance

We continue to see the rapid evolution of technologies supporting business, employee, and customer shopping experiences. With this comes the need to deliver tools that span channels to streamline purchasing experiences and empower staff to perform at their best. In this release, we are continuing to invest in making Dynamics 365 Commerce the best solution for omnichannel shopping and bringing new capabilities to customers and frontline workers alike.

Better together with Microsoft Teams: Administrators can now provision Microsoft Teams from Dynamics 365 Commerce, create teams for stores, add members from a store’s worker book, and more, and then synchronize later changes. They can also notify frontline workers on mobile devices and synchronize task management between Dynamics 365 Commerce and Microsoft Teams to improve productivity.

Additional features and improvements to Curbside Pickup

In today’s world, it is important that organizations can offer safe and flexible fulfillment options to meet their customers’ ever-changing needs. Building on Dynamics 365 Commerce’s existing capabilities that enable buy online, pick up in-store (BOPIS), Dynamics 365 Commerce now offers additional features to optimize convenient and COVID-safe curbside pickup. These capabilities will make it easier for businesses to deploy mobile POS devices and allow their store associates to efficiently manage orders that need to be fulfilled and picked up. With this release, we have added the following features and improvements to Dynamics 365 Commerce:

- Ability for shoppers to set their preferred pickup location on the e-commerce channel.

- Ability for businesses to offer multiple pickup and delivery options for shoppers to choose from.

- Flexible configuration of pickup time slots per day by location, allowing shoppers to choose a pickup time slot on the e-commerce channel.

- Improved email receipts and notifications, with the ability for businesses to customize email receipts, configure them by delivery mode, and more.

- Enhanced order fulfillment visibility for store associates, with a live tile and notifications to monitor and address orders that need to be fulfilled.

- Streamlined order pickup flows, including support for QR codes.

Enhancements to inventory visibility, movement, and adjustments

Inventory management and visibility are key to business success: they give customers clear insights into where stock is located, reduce out-of-stock situations, and empower our sellers, managers, and merchandisers to make better decisions. With this update, we have made a range of improvements touching inventory and stock management, including enhancements to the e-commerce inventory availability lookup API, inventory-aware product discovery for e-commerce customers, improvements to inventory lookup operations via in-store point of sale, and comprehensive in-store inventory management capabilities.

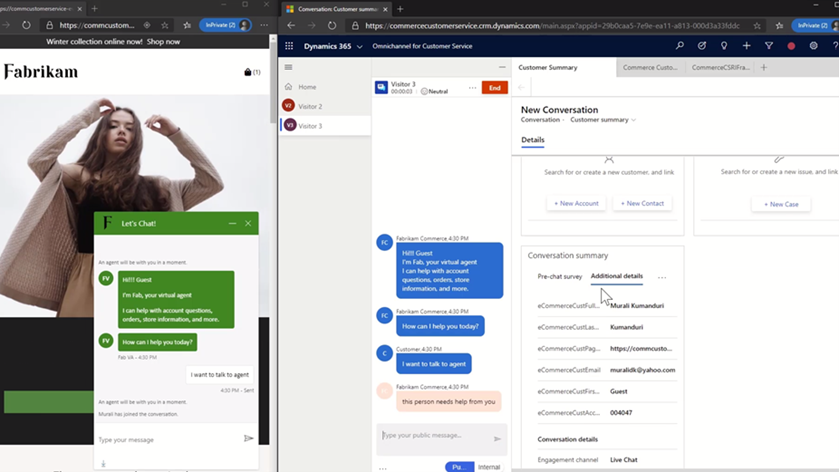

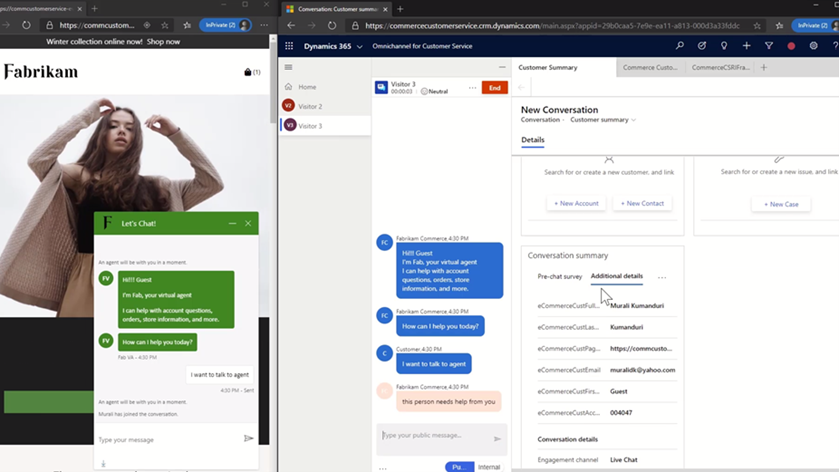

Chat in Dynamics 365 Commerce with Power Virtual Agents and Dynamics 365 Customer Service (Preview)

Customer service functionality will be added to Dynamics 365 Commerce by leveraging the capabilities in Microsoft Dynamics 365 Customer Service and Microsoft Power Virtual Agents. Site administrators will be able to configure the chat widget on the e-commerce site with proactive notification capability based on different criteria.

This will be supported via native integrations with Dynamics 365 Customer Service chat or virtual agents into Dynamics 365 Commerce. With this, contact center agents will be able to look up customer information using Dynamics 365 Commerce Call Center and act on customer’s details and orders as required.

Learn more about Dynamics 365 Commerce

Please share your thoughts with us about the 2021 release wave 1 updates for Dynamics 365 Commerce. We will continue to bring new capabilities and enhancements to Dynamics 365 Commerce and look forward to sharing more in the future.

The post Continued innovation in Dynamics 365 Commerce 2021 release wave 1 appeared first on Microsoft Dynamics 365 Blog.

by Contributed | Apr 19, 2021 | Technology

We are pleased to announce an update to the Azure HPC Cache service!

HPC Cache helps customers enable High Performance Computing workloads in Azure Compute by providing low-latency, high-throughput access to Network Attached Storage (NAS) environments. HPC Cache runs in Azure Compute, close to customer compute, but has the ability to access data located in Azure as well as in customer datacenters.

Preview Support for Blob NFS 3.0

The Azure Blob team introduced preview support for the NFS 3.0 protocol this past fall. This change enables the use of both NFS 3.0 and REST access to storage accounts, moving cloud storage further along the path to a multi-tiered, multi-protocol storage platform. It empowers customers to run their file-dependent workloads directly against blob containers using the NFS 3.0 protocol.

There are certain situations where caching NFS data makes good sense. For example, your workload might run across many virtual machines and require lower latency than the NFS endpoint provides. Adding the HPC Cache in front of the container will provide sub-millisecond latencies and improved client scalability. This makes the joint NFS 3.0 endpoint and HPC Cache solution ideal for scale-out, read-heavy workloads such as genomic secondary analysis and media rendering.

Also, certain applications might require NLM interoperability, which is unsupported for NFS-enabled blob storage. HPC Cache responds to client NLM traffic and manages lock requests as the NLM service. This capability further enables file-based applications to go all-in to the cloud.

Using HPC Cache’s Aggregated Namespace, you can build a file system that incorporates your NFS 3.0-enabled containers into a single directory structure – even if you have multiple storage accounts and containers that you want to operate against. And you can also add your on-premises NAS exports into the namespace, for a truly hybrid file system!

HPC Cache support for NFS 3.0 is in preview. To use it, configure a Storage Target of the type “ADLS-NFS” and point it at your NFS 3.0-enabled container.

Customer-Managed Key Support

HPC Cache has had support for CMK-enabled cache disks since mid-2020, but it was limited to specific regions. As of now, you can use CMK-enabled cache disks in all regions where CMK is supported.

Zone-Redundant Storage (ZRS) Blob Containers Support for Blob-As-POSIX

Blob-as-POSIX is a 100% POSIX-compliant file system overlaid on a container. Using Blob-as-POSIX, HPC Cache can provide NAS support for all POSIX file system behaviors, including hard links. As of April 2nd, you can use both ZRS and LRS container types.

Custom DNS and NTP Server Support

Typically, HPC Cache will use the built-in Azure DNS and NTP services. When using HPC Cache and your on-premises NAS environment, there are some situations where you might want to use your own DNS and NTP servers. This special configuration is now supported in HPC Cache. Note that using your own servers in this case requires additional network configuration and you should consult with your Azure technical partners for further information. You can find more documentation here.

Client Access Policies

Traditional NAS environments support export policies that restrict access to an export based on networks or host information. Further, they typically allow the remapping of root to another UID, known as root squash. HPC Cache now offers the ability to configure such policies, called client access policies, on the junction path of your namespace. Further, you will be able to squash root to both a unique UID and GID value.

Extended Groups Support

HPC Cache now supports the use of NFS auxiliary groups, which are additional GIDs that might be configured for a given UID. Any group count above 16 falls into the auxiliary, or extended, group definition. HPC Cache now supports the use of such group integration with your existing directory mechanisms (such as Active Directory or LDAP, or even a recurring file upload of these definitions). Using HPC Cache in combination with Azure NetApp Files, for example, allows you to leverage your extended groups.

Get Started

To create a storage cache in your Azure environment, start here to learn more about HPC Cache. You can also explore the documentation to see how it may work for you.

Tell Us About It!

Building features in HPC Cache that help support hybrid HPC architectures in Azure is what we are all about! Try HPC Cache, use it, and tell us about your experience and ideas. You can post them on our feedback forum.

by Contributed | Apr 19, 2021 | Technology

MVPs know better than most that education is not something that starts and ends with a formal experience like college. Instead, education is a life-long process and requires continual upskilling.

Four MVPs were recently featured in two separate sessions at MS Ignite about the importance of skilling and certification in their careers. In the first session, Azure MVP Tiago Costa and Office Apps & Services MVP Chris Hoard shared the digital stage with tech trainers and managers on the value of Microsoft Certifications.

Tiago, from Portugal, says he has personally used Microsoft Certification to progress into new roles and climb the corporate ladder. Tiago advises tech enthusiasts of all experience levels to experiment with MS Learn, a resource that is “free and of amazing quality and accuracy.”

“If you have the willingness to learn, it doesn’t matter what level of expertise you have,” Tiago says. “I have helped people with literally zero – I will repeat, zero – experience in IT and today they are tech leaders with their field.”

UK-based Chris agrees: “Authenticity, validation, knowledge, closing skill gaps, finding a new passion, the prospects of better wages or even a better job – these are all good reasons why learning and certification are important.”

“My advice is that it is never too late to start. Set yourself a modest goal and once you have started, don’t stop – keep learning and unlearning,” Chris says.

Later at the conference, Business Apps MVPs Amey Holden and Lisa Crosbie shared their learning journeys as part of the Australian tech community.

Amey says that she was thrown into the deep end when a respected practice lead sold her to a project as an expert in Dynamics 365 when “actually I was a clueless graduate with some impressive Excel formulae skills.”

Thus, Amey’s Power Platform journey began with a three-day crash course in Dynamics 365 Sales and “piles of PDFs with labs and content to learn everything (back in the days before MS Learn!)” Now, however, Amey is a big fan of the platform as it “has given me the tools to understand all new features and functionality.”

“Being officially recognized by Microsoft for your knowledge and achievements helped to boost my confidence earlier in my career when the impostor syndrome kicked in or I genuinely had no idea what I’m doing,” Amey says.

“It has helped me attain knowledge that I never knew I would have needed until I find myself calling on it during client conversations. This has helped me to more easily become a trusted client advisor who can have a positive and valuable impact.”

Lisa similarly uses MS Learn to illuminate new tech knowledge. Lisa made a career change from book publishing to tech in 2016, and says MS Learn “is an awesome revision tool for a number of certifications in my main area of Power Platform and Dynamics 365, as well as using it to upskill in new areas and pass certification exams in M365 and Azure AI.”

“It is a good discipline to make sure I stay up to date with new features and review the things I use less often. I also feel it gives credibility to the advice I give to customers and gives me confidence in my knowledge,” Lisa says.

“I always have more collections bookmarked and not enough hours in the day!”

For more, check out Amey’s and Lisa’s session at MS Ignite, as well as Tiago’s and Chris’ session.

by Contributed | Apr 19, 2021 | Technology

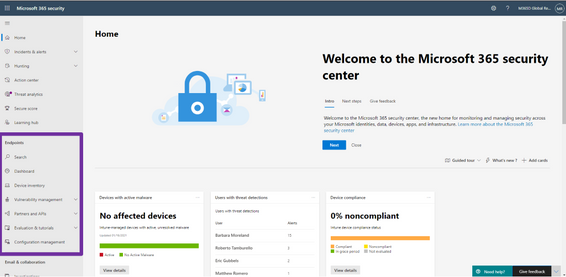

We’re excited to announce that we have reached a new milestone in our XDR journey: the integration of our endpoint and email and collaboration capabilities into Microsoft 365 Defender is now generally available. Security teams can manage all endpoint, email, and cross-product investigations, configuration, and remediation within a single unified portal.

Register for the Microsoft 365 Defender’s Unified Experience for XDR webinar to learn how your security teams can leverage the unified portal and check out our video to learn more about these new capabilities.

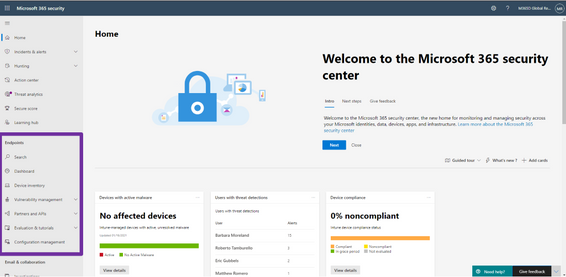

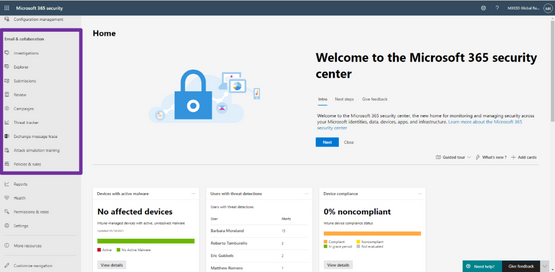

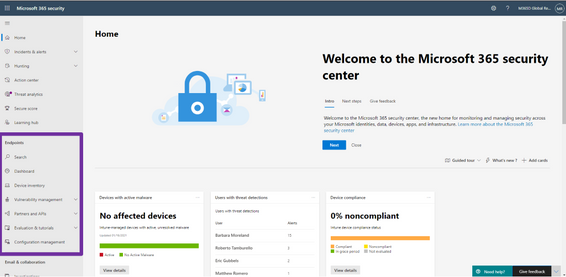

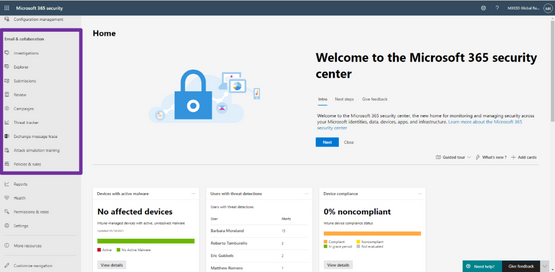

This release delivers the rich set of capabilities we announced in public preview, including unified pages for alerts, users, and automated investigations, a new email entity page offering a 360-degree view of an email, threat analytics, a brand-new Learning hub, and more – all available exclusively in the Microsoft 365 Defender portal at security.microsoft.com.

Now is the time to start moving your users to the unified experience, using the automatic URL redirection for Microsoft Defender for Endpoint and the automatic URL redirection for Microsoft Defender for Office 365, as the previously distinct portals will eventually be phased out.

Figure 1: Endpoint features integrated into Microsoft 365 Defender.

Figure 2: Email and collaboration features integrated into Microsoft 365 Defender.

We’re excited to be bringing these additional capabilities into Microsoft 365 Defender and look forward to hearing about your experiences and your feedback as you explore and transition to the unified portal.

To read more about the unified portal experience, check out:
