by Contributed | May 5, 2021 | Technology

This article is contributed. See the original author and article here.

Last year we released the Reply-all Storm Protection feature to help protect your organization from unwanted reply-all storms. This feature uses global settings applicable to all Microsoft 365 customers for reply-all storm detection and for how long the feature will block subsequent reply-alls once a storm has been detected. Today we’re pleased to announce you’ll now have the ability to make these settings specific to your Microsoft 365 organization; email admins will have the flexibility to enable/disable the feature, and set customized detection thresholds and block duration time. This not only makes it more flexible to tailor for your organization, but it also allows more Microsoft 365 customers to take advantage of the Reply-all Storm Protection feature since the minimum number of reply-all recipients for detection can now be as low as 1000 when previously it was hard-coded at 5000.

The current Reply-all Storm Protection settings for Microsoft 365 are as follows:

| Setting | Default |
| --- | --- |
| Enabled/disabled | Enabled |
| Minimum number of recipients | 5000 |
| Minimum number of reply-alls | 10 |
| Detection time sliding window | 60 minutes |
| Block duration (once detected) | 4 hours |

Based on our telemetry and customer feedback we’re also taking this opportunity to update a few of the default settings. Once this change has rolled out, the default settings for each Microsoft 365 organization will be the following:

| Setting | Default |
| --- | --- |
| Enabled/disabled | Enabled |
| Minimum number of recipients | 2500 (previously 5000) |
| Minimum number of reply-alls | 10 |
| Detection time sliding window | 60 minutes |
| Block duration (once detected) | 6 hours (previously 4 hours) |

The customizations possible for each setting will be as follows:

| Setting | Customizable options |
| --- | --- |
| Enabled/disabled | Enabled or Disabled |
| Minimum number of recipients | 1000 to 5000 |
| Minimum number of reply-alls | 5 to 20 |
| Detection time sliding window | 60 minutes (not customizable) |
| Block duration (once detected) | 1 to 12 hours |
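To see how the four settings interact, here is a rough Python sketch. This post does not describe the service's actual detection logic, so everything below is an assumption made for illustration: the `ReplyAllStormDetector` class is hypothetical, it tracks a single conversation, and it treats the reply-all that reaches the threshold as delivered and blocks only the ones that follow. The default values are the new defaults listed above.

```python
from collections import deque
from datetime import datetime, timedelta


class ReplyAllStormDetector:
    """Hypothetical model of one conversation; not the service's real logic."""

    def __init__(self, min_recipients=2500, min_replies=10,
                 window=timedelta(minutes=60), block=timedelta(hours=6)):
        self.min_recipients = min_recipients
        self.min_replies = min_replies
        self.window = window
        self.block = block
        self._recent = deque()        # timestamps of recent large reply-alls
        self._blocked_until = None

    def allow(self, now, recipient_count):
        """Return True if a reply-all sent at `now` would be delivered."""
        if self._blocked_until is not None and now < self._blocked_until:
            return False
        if recipient_count < self.min_recipients:
            return True               # too small to count toward a storm
        # Drop reply-alls that have slid out of the detection window.
        while self._recent and now - self._recent[0] > self.window:
            self._recent.popleft()
        self._recent.append(now)
        if len(self._recent) >= self.min_replies:
            # Storm detected: block subsequent reply-alls for the block duration.
            self._blocked_until = now + self.block
            self._recent.clear()
        return True


if __name__ == "__main__":
    detector = ReplyAllStormDetector(min_replies=5)
    start = datetime(2021, 5, 5, 9, 0)
    for minute in range(7):
        print(minute, detector.allow(start + timedelta(minutes=minute), 3000))
```

Lowering the minimum number of reply-alls makes the block start sooner, while lowering the minimum number of recipients makes smaller distribution lists eligible for detection at all.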

Admins will be able to use the Set-TransportConfig Remote PowerShell cmdlet to update the settings for their organization:

| Setting | Cmdlet |
| --- | --- |
| Enabled/disabled | Set-TransportConfig -ReplyAllStormProtectionEnabled [$True:$False] |
| Number of recipients threshold | Set-TransportConfig -ReplyAllStormDetectionMinimumRecipients [1000 – 5000] |
| Number of reply-alls threshold | Set-TransportConfig -ReplyAllStormDetectionMinimumReplies [5 – 20] |
| Block duration | Set-TransportConfig -ReplyAllStormBlockDuration [1 – 12] |
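If you script these changes, it can help to validate the values before they reach PowerShell. The sketch below is a small, hypothetical Python helper (`build_set_transport_config` is not part of any Microsoft tooling) that checks each value against the ranges listed above and returns the command line as text. The parameter names are copied from the table in this post; verify them against the current Set-TransportConfig documentation before running the result.

```python
# Allowed ranges taken from the customization table in this post.
RANGES = {
    "ReplyAllStormDetectionMinimumRecipients": (1000, 5000),
    "ReplyAllStormDetectionMinimumReplies": (5, 20),
    "ReplyAllStormBlockDuration": (1, 12),  # hours
}


def build_set_transport_config(enabled=None, **settings):
    """Return a Set-TransportConfig command line, or raise ValueError."""
    parts = ["Set-TransportConfig"]
    if enabled is not None:
        # PowerShell booleans are written as $True / $False.
        parts.append(f"-ReplyAllStormProtectionEnabled ${bool(enabled)}")
    for name, value in settings.items():
        if name not in RANGES:
            raise ValueError(f"unknown setting: {name}")
        low, high = RANGES[name]
        if not low <= value <= high:
            raise ValueError(f"{name} must be between {low} and {high}, got {value}")
        parts.append(f"-{name} {value}")
    return " ".join(parts)


if __name__ == "__main__":
    print(build_set_transport_config(
        enabled=True,
        ReplyAllStormDetectionMinimumRecipients=1000,
        ReplyAllStormBlockDuration=8,
    ))
```

The helper only builds a string; running it against your organization still requires a Remote PowerShell session with the appropriate admin rights.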

These updates are rolling out now and should be fully available to all Microsoft 365 customers by mid-June. While this should come as a welcome update for customers wanting to better take advantage of the Reply-all Storm Protection feature, we are not done yet! In future updates we plan to provide an insight, report, and optional notifications for the feature as well. And if there’s enough customer feedback for it, we’ll consider also exposing the ability to customize these settings in the Exchange Admin Center. Let us know what you think!

The Exchange Transport Team

by Contributed | May 5, 2021 | Technology

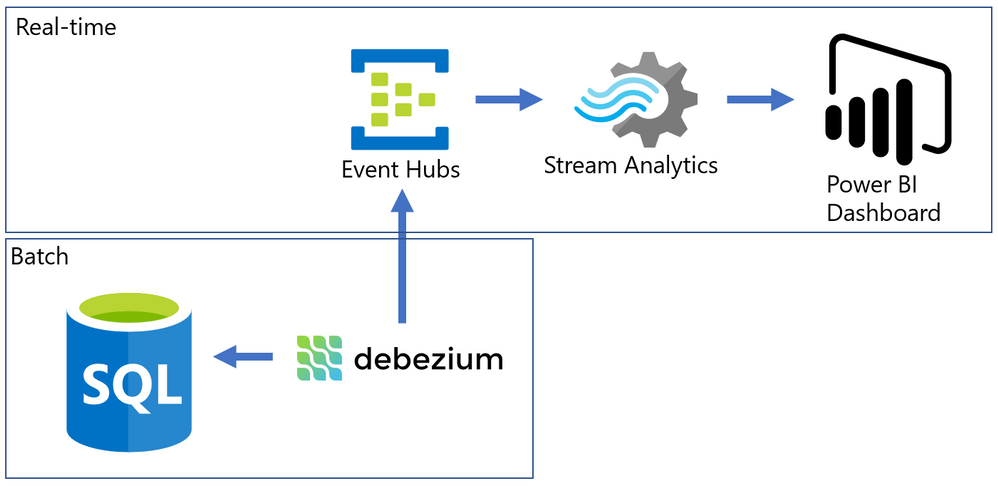

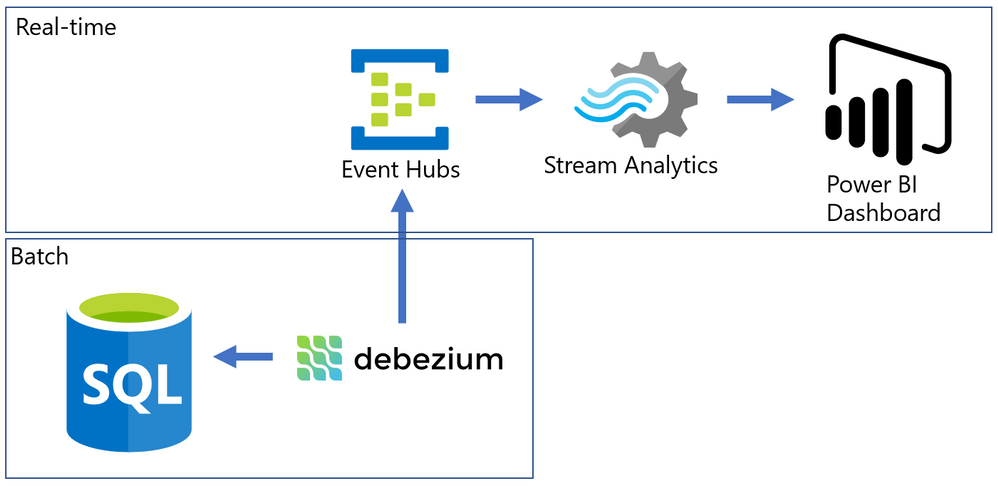

Almost every modern data warehouse project touches the topic of real-time data analytics. In many cases, the source systems use a traditional database, such as SQL Server, and do not support event-based interfaces.

Common solutions for this problem often require a lot of coding, but I will present an alternative that can integrate the data from SQL Server Change Data Capture into a Power BI streaming dataset with the help of an open-source tool named Debezium.

The Problem

SQL Server is a batch-oriented service, just like any DBMS. This means that a program must query it in order to get a result, so to have real-time analytics we would have to change this batch behavior into a streaming/event/push behavior.

On the other side, we have Azure Event Hubs, Stream Analytics and Streaming datasets on Power BI. They work pretty well together if the data source is a stream producing events (something we can have with a custom code application or some Open Source solution like Kafka).

The challenge here was to find something to make the bridge between SQL Server and Event Hubs.

After some time looking for solutions, I found this Docs page (Integrate Apache Kafka Connect on Azure Event Hubs with Debezium for Change Data Capture – Azure Event Hubs | Microsoft Docs) with an approach to bring CDC data from Postgres to Event Hubs.

The solution presented on Docs was more complex than I needed, so I simplified it by using a container deployment and by removing unnecessary dependencies. I then wrote this post, where I hope to present it in a simpler way.

The solution looks like this: on one side, we have SQL Server with CDC enabled on a few tables; on the other, we have Azure ready to process events that arrive at Event Hubs. To bridge the two, we have Debezium, which creates one event per row found in the CDC tables.

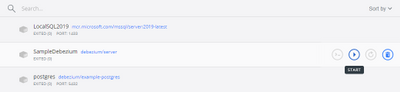

Have you ever used Docker?

For my development environment, I decided to go for Docker Desktop. The new WSL2 backend makes it easy to run Linux containers (such as those needed by Debezium). It works well on Windows 10 and on recent builds of Windows Server (Semi-Annual Channel). If you have not tried WSL2 yet, I highly recommend it. (See: Install Docker Desktop on Windows | Docker Documentation)

After a few steps, I had installed the WSL2 feature, chosen the Ubuntu 20.04 distro (there are other distros available in the Windows Store) and finished the setup of Docker Desktop on my Windows 10 Surface Laptop.

Docker Desktop works well for your development environment. For production, if your company doesn’t have a container orchestration environment, you can try Azure Kubernetes Service (AKS) | Microsoft Azure.

How to install a Debezium container?

Debezium has a Docker image available on hub.docker.com, named “debezium/server”.

Debezium Server is a lightweight version that does NOT have Kafka installed. The image already has the connector you need for SQL Server and can output the events directly to Event Hubs.

To install and configure the container, I ran only this single line on PowerShell.

docker run -d -it --name SampleDebezium -v $PWD/conf:/debezium/conf -v $PWD/data:/debezium/data debezium/server

This will download the docker image “debezium/server” and start a container named “SampleDebezium”

We are mounting 2 folders from the host machine to the container:

/conf – Holds the configuration file.

/data – Will store the status of Debezium. This avoids missing or duplicate data once the container is recreated or restarted.

In my lab, I used the configuration file below (place it in the /conf folder and name it application.properties). (Don’t worry about the keys here, I changed them already.)

You will have to change the SQL Server and Event Hubs connections to match your environment.

Sample application.properties file:

debezium.sink.type=eventhubs

debezium.sink.eventhubs.connectionstring=Endpoint=sb://er-testforthisblogpost.servicebus.windows.net/;SharedAccessKeyName=TestPolicy;SharedAccessKey=O*&HBi8gbBO7NHn7N&In7ih/KgONHN=

debezium.sink.eventhubs.hubname=hubtestcdc

debezium.sink.eventhubs.maxbatchsize=1048576

debezium.source.connector.class=io.debezium.connector.sqlserver.SqlServerConnector

debezium.source.offset.storage.file.filename=data/offsets.dat

debezium.source.offset.flush.interval.ms=0

debezium.source.database.hostname=sqlserverhostname.westeurope.cloudapp.azure.com

debezium.source.database.port=1433

debezium.source.database.user=UserDemoCDC

debezium.source.database.password=demo@123

debezium.source.database.dbname=TestCDC

debezium.source.database.server.name=SQL2019

debezium.source.table.include.list=dbo.SampleCDC

debezium.source.snapshot.mode=schema_only

debezium.source.max.queue.size=8192

debezium.source.max.batch.size=2048

debezium.source.snapshot.fetch.size=2000

debezium.source.query.fetch.size=1000

debezium.source.poll.interval.ms=1000

debezium.source.database.history=io.debezium.relational.history.FileDatabaseHistory

debezium.source.database.history.file.filename=data/FileDatabaseHistory.dat
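Before starting the container, a quick sanity check of application.properties can save a restart cycle. The Python sketch below is only an illustration: `parse_properties`, `missing_keys` and the `REQUIRED_KEYS` list are hypothetical helpers, and the list is simply the set of keys from the sample above that have to be adapted per environment, not an official list of mandatory Debezium settings. The parser is deliberately simplified (it handles `key=value` lines and comments, not every feature of the Java properties format).

```python
REQUIRED_KEYS = [
    "debezium.sink.type",
    "debezium.sink.eventhubs.connectionstring",
    "debezium.sink.eventhubs.hubname",
    "debezium.source.connector.class",
    "debezium.source.offset.storage.file.filename",
    "debezium.source.database.hostname",
    "debezium.source.database.user",
    "debezium.source.database.password",
    "debezium.source.database.dbname",
    "debezium.source.table.include.list",
]


def parse_properties(text):
    """Parse simple key=value lines; blank lines and comments are skipped."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        # Split on the first "=" only: connection strings contain more of them.
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def missing_keys(props):
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not props.get(key)]


if __name__ == "__main__":
    with open("conf/application.properties", encoding="utf-8") as handle:
        problems = missing_keys(parse_properties(handle.read()))
    print("Missing keys:", problems or "none")
```

Run it from the folder that contains the conf directory you mount into the container.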

Setting up the Change Data Capture

My SQL Server is hosted on Azure (but this is not a requirement). To create a lab environment, I created a single table and enabled CDC on it by using this script:

-- Create sample database

CREATE DATABASE TestCDC

GO

USE TestCDC

GO

-- Enable the database for CDC

EXEC sys.sp_cdc_enable_db

GO

-- Create a sample table

CREATE TABLE SampleCDC (

ID int identity (1,1) PRIMARY KEY ,

SampleName nvarchar(255)

)

-- Role with privileges to read CDC data

CREATE ROLE CDC_Reader

-- =========

-- Enable a Table

-- =========

EXEC sys.sp_cdc_enable_table

@source_schema = N'dbo',

@source_name = N'SampleCDC',

@role_name = N'CDC_Reader', -- The user must be part of this role to access the CDC data

--@filegroup_name = N'MyDB_CT', -- A filegroup can be specified to store the CDC data

@supports_net_changes = 0 -- Debezium does not use net changes, so it is not relevant

-- List the tables with CDC enabled

EXEC sys.sp_cdc_help_change_data_capture

GO

-- Insert some sample data

INSERT INTO dbo.SampleCDC VALUES ('Insert your value here')

-- The table now contains the inserted row

select * from SampleCDC

-- And CDC recorded the change

select * from [cdc].[dbo_SampleCDC_CT]

/*

-- Disable CDC on the table

--EXEC sys.sp_cdc_disable_table

--@source_schema = N'dbo',

--@source_name = N'SampleCDC',

--@capture_instance = N'dbo_SampleCDC'

*/

Debezium will query the latest changed rows on CDC based on its configuration file and create the events on Event Hub.
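Each event that lands in the Event Hub is a JSON document in Debezium's change-event envelope, with `before`, `after` and `op` fields (nested under `payload` when schemas are included in the message). As a minimal sketch of how a consumer might read one, here is a Python function; the helper name `summarize_change_event` and the sample event are made up for illustration, with columns taken from the SampleCDC table.

```python
import json

# Debezium operation codes and a readable name for each.
OPERATIONS = {"c": "insert", "u": "update", "d": "delete", "r": "snapshot read"}


def summarize_change_event(raw):
    """Return (operation, row) for one Debezium change event given as JSON text."""
    event = json.loads(raw)
    # With schemas included, the change data sits under "payload";
    # otherwise the envelope fields are at the top level.
    payload = event.get("payload", event)
    op = payload["op"]
    # A delete carries the old row in "before"; other operations use "after".
    row = payload["before"] if op == "d" else payload["after"]
    return OPERATIONS.get(op, op), row


if __name__ == "__main__":
    # Hypothetical event for an INSERT into dbo.SampleCDC.
    sample = json.dumps({
        "payload": {
            "before": None,
            "after": {"ID": 1, "SampleName": "Insert your value here"},
            "op": "c",
        }
    })
    print(summarize_change_event(sample))
```

Real events also carry `source` metadata and timestamps, which this sketch ignores.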

Event Hub and Stream Analytics

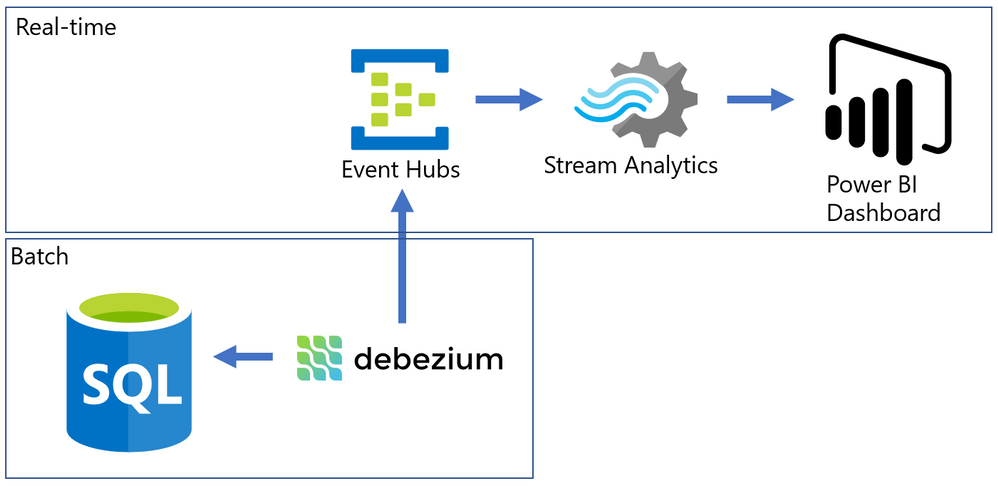

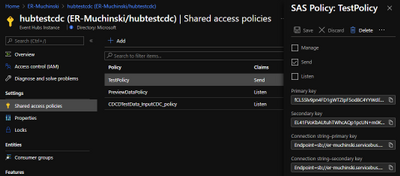

I created an Event Hubs namespace with a single Event Hub to hold this experiment. There is no special requirement for the Event Hub. The size will depend only on the volume of events your application will send to it.

Once it is done, we have to create a Shared Access Policy. The connection string is what you need to add to the Debezium application.properties file.

To consume the events and create the Power BI streaming dataset, I used Azure Stream Analytics.

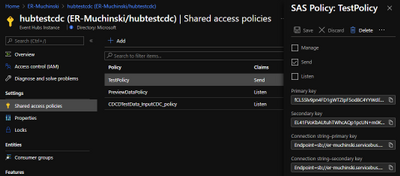

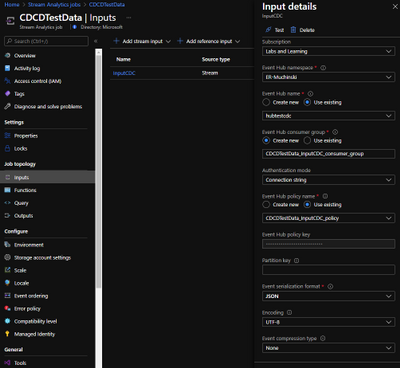

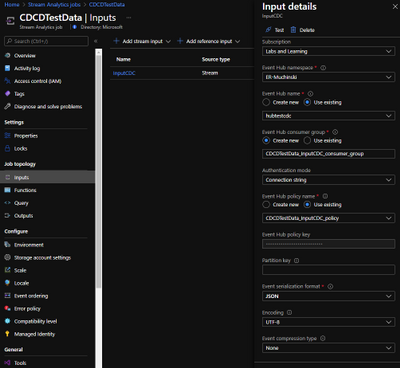

Once the Stream Analytics job is created, we have to configure 3 things: Inputs, Outputs and Query.

Inputs

Here is where you tell Stream Analytics what to listen to. Just create an input for your Event Hub with the default options. Debezium will generate uncompressed JSON events encoded in UTF-8.

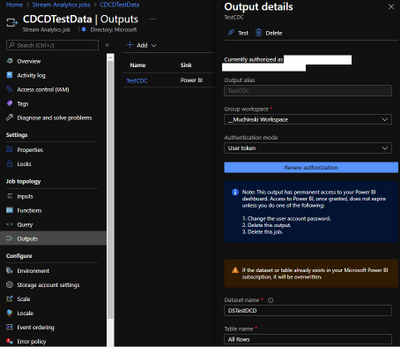

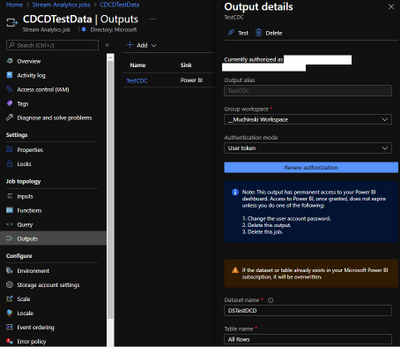

Outputs

Here is where we will configure the Power BI streaming dataset. But you first need to know which Power BI workspace it will live in.

On the left menu, click on Outputs and then Add -> Power BI.

The options “Dataset name” and “Table name” are what will be visible to Power BI.

For a test, setting the “Authentication mode” to “User Token” is fine, but for production it is better to use “Managed Identity”.

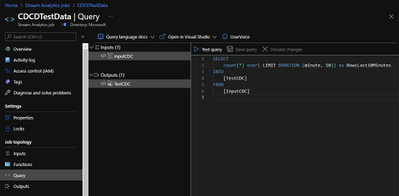

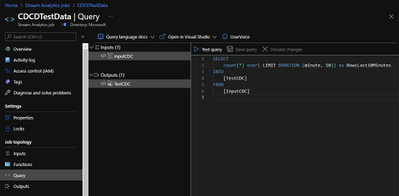

Query

Stream Analytics uses a query language very similar to T-SQL to handle the data that comes in from a stream input.

To learn more about it, see Stream Analytics Query Language Reference – Stream Analytics Query | Microsoft Docs.

In the example, I’m just counting how many rows (events) were generated in the last 10 minutes FROM an input INTO an output. The names in the query must match the ones you defined in the prior steps.

Here it is in the text version:

SELECT

count(*) over( LIMIT DURATION (minute, 10)) as RowsLast10Minutes

INTO

[TestCDC]

FROM

[InputCDC]
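To make the windowing concrete, the following Python sketch mimics what the query computes: for every incoming event, the number of events seen in the trailing 10 minutes. It is an approximation for illustration only. The function name is hypothetical, timestamps are plain seconds in ascending order, and how an event exactly 10 minutes old is treated is an assumption here (it is excluded).

```python
from collections import deque


def rows_last_10_minutes(event_times, window_seconds=600):
    """Yield, for each event time, how many events fall in the trailing window."""
    window = deque()
    for timestamp in event_times:
        window.append(timestamp)
        # Evict events that are window_seconds old or older.
        while window[0] <= timestamp - window_seconds:
            window.popleft()
        yield len(window)


if __name__ == "__main__":
    # Three inserts one minute apart, then one more much later.
    print(list(rows_last_10_minutes([0, 60, 120, 700])))
```

Note that a value is produced only when an event arrives, which is why the dashboard tile keeps showing the last count while the table is idle.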

Make it Run

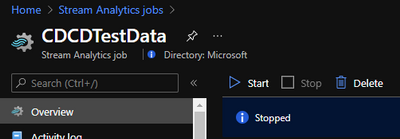

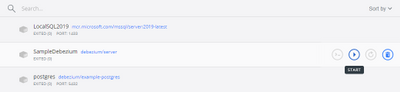

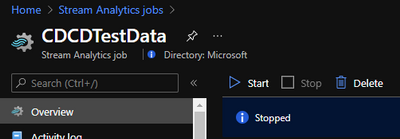

If everything is correctly configured, we will be able to start our Stream Analytics and our Container.

Stream Analytics:

Docker Desktop:

Power BI

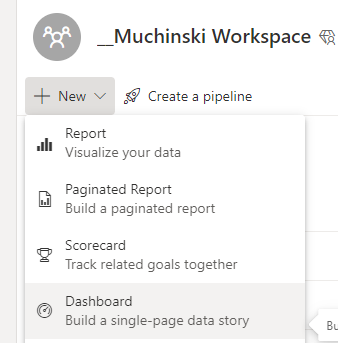

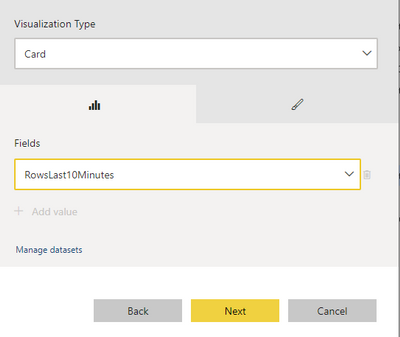

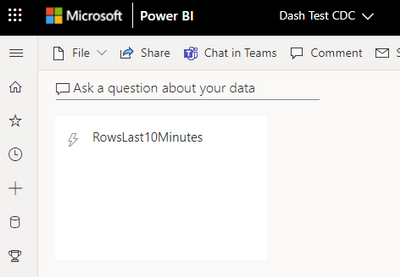

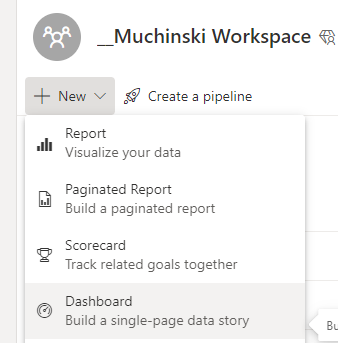

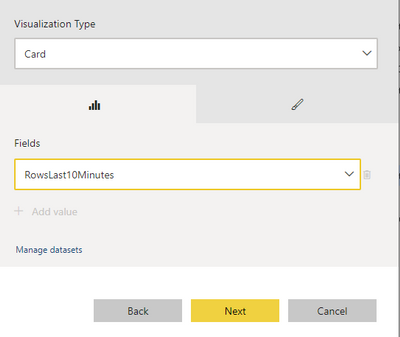

Once the Stream Analytics is started, we will go to the Power BI workspace and create a tile based on the streaming dataset.

If you don’t have a Power BI Dashboard on your workspace, just create a new one.

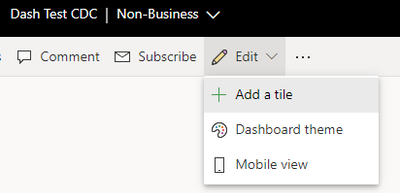

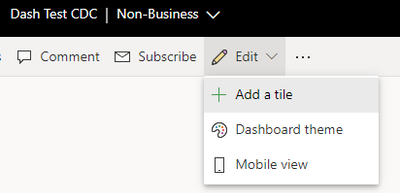

On the Dashboard, add a Tile.

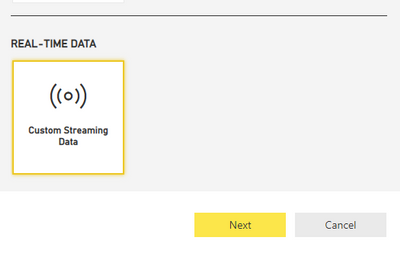

Click on Real-time data and Next.

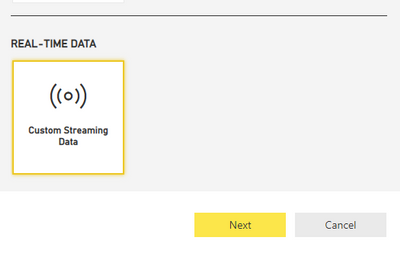

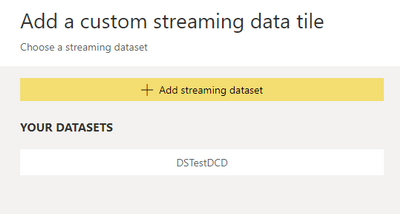

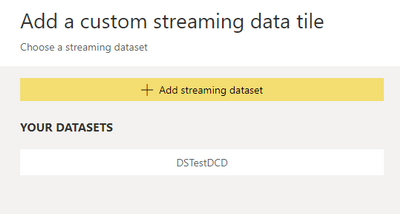

The dataset with the name you chose on Stream Analytics should be visible here.

Select the Card visual and the column RowsLast10Minutes, click Next, and Apply.

It should be something like this if there is no data being inserted on the table.

Now comes the fun part. If everything is configured correctly, we just have to insert data into our sample table and see it flowing to the dashboard!

Known problems

It seems that when a big transaction happens (like an update on 200k rows), Debezium stops, reporting that the message size was bigger than the maximum size allowed by Event Hubs. Maybe there is a way to break it into smaller messages, or maybe this is how it works by design. If the base (CDC) tables are OLTP oriented (small, single-row operations), the solution seems to run fine.
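For readers wondering what "breaking it into smaller messages" could look like, here is a generic Python sketch of size-bounded batching. It is not how Debezium Server works internally and not a fix for the problem above; `split_into_batches` is a hypothetical helper, the default limit simply reuses the `maxbatchsize` value from the sample configuration, and real Event Hubs batches add per-event overhead that this sketch ignores.

```python
def split_into_batches(messages, max_batch_bytes=1048576):
    """Group byte strings into batches whose total size stays within the limit."""
    batches, current, current_size = [], [], 0
    for message in messages:
        size = len(message)
        if size > max_batch_bytes:
            raise ValueError("a single message exceeds the batch limit")
        # Start a new batch when adding this message would overflow the current one.
        if current and current_size + size > max_batch_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(message)
        current_size += size
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    events = [b"x" * 400_000 for _ in range(5)]
    print([len(batch) for batch in split_into_batches(events)])
```

A single event larger than the limit cannot be helped by batching at all, which is why the sketch raises an error in that case.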

References

Enable and Disable Change Data Capture (SQL Server)

Debezium Server Architecture

Docker image with example

SQL Server connector

Azure Event hubs connection

by Contributed | May 5, 2021 | Technology

Accessibility is about making our products accessible and inclusive to everyone, including the 1 billion+ people with disabilities around the world. It is a core Excel and Microsoft priority, and an area where we continuously strive to improve. For more information about Microsoft’s commitment to accessibility, visit microsoft.com/accessibility.

Excel’s approach

Making Excel more accessible is a journey, and we will always have room for improvement as we strive to make spreadsheets work for everyone. We have three overarching objectives to guide us:

- Work seamlessly with assistive technology. Our partners around the globe and within Microsoft create amazing technology to support people with disabilities. A few examples of assistive technology include screen readers, dictation software, magnifiers, and physical devices. Our priority is to collaborate with these partners so that everyone can use Excel in the way that works best for them, with tools that are already familiar.

- Build inclusive and delightful experiences inspired by people with disabilities. Beyond “just working,” Excel should be efficient and delightful to use. We are always looking for opportunities to simplify your workflow, summarize your content, or suggest information. By learning from and being inspired by the experiences of people with disabilities, we can make Excel better for all.

- Support authors to create accessible content. Making Excel as an application accessible is only half the battle; the other half is making sure workbooks created in Excel are accessible. For that, we rely heavily on you, the author…but that doesn’t mean we can’t help! We look for opportunities to automatically create accessible content on your behalf, support you while you create accessible content, let you know when something is inaccessible, and help you fix accessibility issues before sharing your workbook with others.

Feature Spotlight: Accessibility ribbon

As a part of our goal to help you create accessible content, there is a new contextual ribbon called “Accessibility” coming to Excel. The Accessibility ribbon will be available when you open the Check Accessibility pane on the Review tab. On the Accessibility ribbon you will find a collection of the most common tools you need to make your workbook accessible. The ribbon is available today to those in the Office Insiders program.

Contextual Accessibility ribbon in Excel shows when the Check Accessibility pane is open.

To learn more about the Accessibility ribbon, view our announcement on the Office Insiders blog. If you are interested in learning more about how to create accessible workbooks, check out our support article Make your Excel documents accessible to people with disabilities.

Next steps

Please let us know what you think! Your feedback and suggestions shape our approach to accessibility and inclusive design. To get in touch, contact the Disability Answer Desk or use Help > Feedback. We look forward to hearing from you!

This is the first in a series of blogs about our accessibility work in Excel. We hope you will join us over the next several months as we discuss exciting new improvements to Excel through an inclusive lens.

Subscribe to our Excel Blog and join our Excel Community to stay connected with us and other Excel fans around the world.

by Contributed | May 5, 2021 | Technology

The Azure Sentinel: Zero Trust (TIC3.0) Workbook provides an automated visualization of Zero Trust principles crosswalked to the Trusted Internet Connections framework. Compliance isn’t just an annual requirement; organizations must monitor their configurations continuously and build that discipline over time, like a muscle. This workbook leverages the full breadth of Microsoft security offerings across Azure, Office 365, Teams, Intune, Windows Virtual Desktop, and many more. This workbook enables Implementers, SecOps Analysts, Assessors, Security & Compliance Decision Makers, and MSSPs to gain situational awareness for cloud workloads’ security posture. The workbook features 76+ control cards aligned to the TIC 3.0 security capabilities with selectable GUI buttons for navigation. This workbook is designed to augment staffing through automation, artificial intelligence, machine learning, query/alerting generation, visualizations, tailored recommendations, and respective documentation references.

Azure Sentinel: Zero Trust (TIC3.0) Workbook

Mapping technology to Zero Trust frameworks is a challenge in the federal sector. We need to change our thinking in security assessment as the cloud evolves at the speed of innovation and growth, which often challenges our security requirements. We need a method to map Zero Trust approaches to technology while measuring change over time like a muscle.

What Are the Use Cases?

There are numerous use cases for this workbook including role alignment, mappings, visualizations, time-bound measurement, and time-saving features:

Roles

- Implementers: Build/Design

- SecOps: Alert/Automation Building

- Assessors: Audit, Compliance, Assessment

- Security & Compliance Decision Makers: Situational Awareness

- MSSP: Consultants, Managed Service

Mappings

- Framework to Requirement to Microsoft Technology

Visualization

- Hundreds of Visualizations, Recommendations, Queries

Time-Bound

- Measure Posture Over Time for Maturity

Time-Saving

- Aggregation & Analysis

- Capabilities Assessment

- Navigation

- Documentation

- Compliance Mapping

- Query/Alert Generation

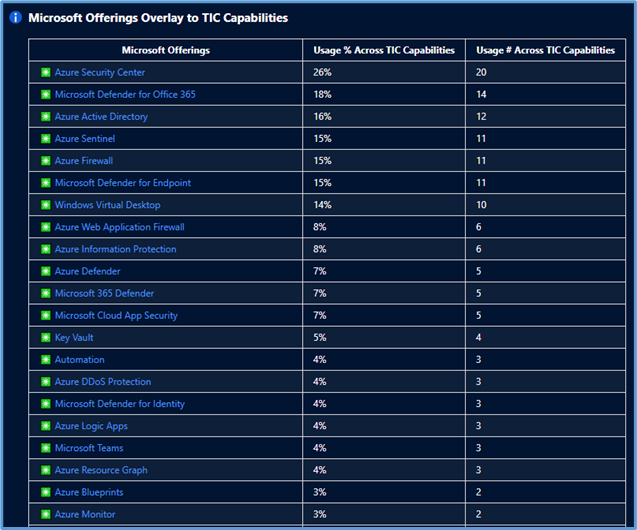

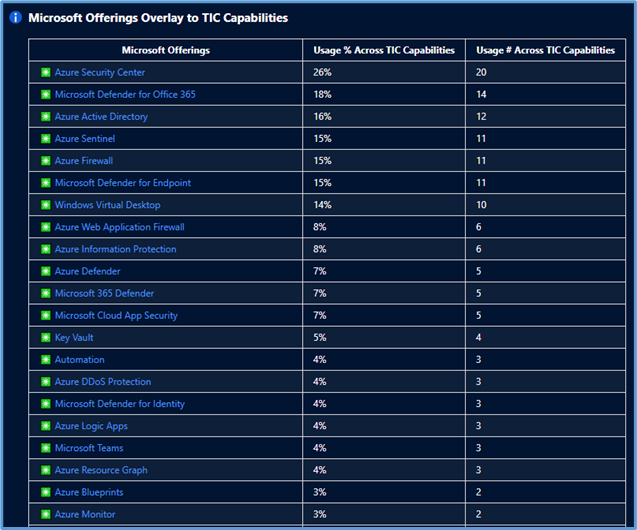

Microsoft Offerings Overlay to TIC Capabilities

What is Zero Trust?

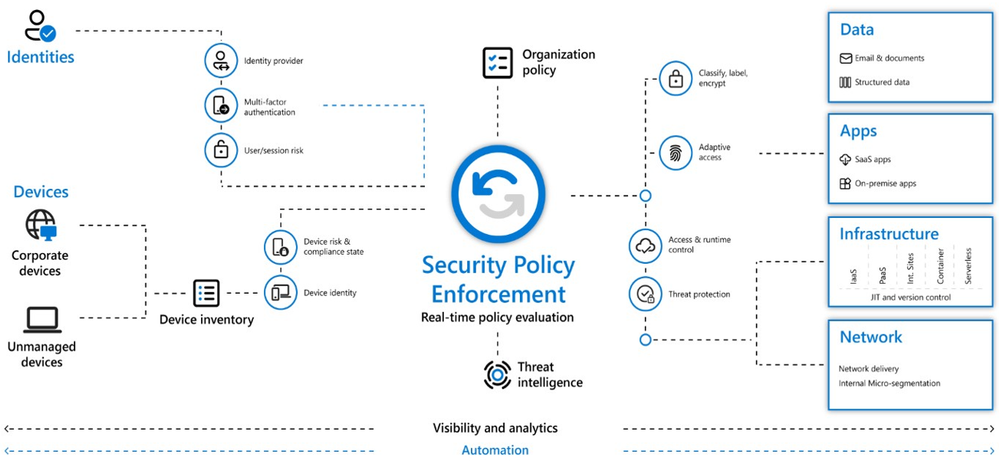

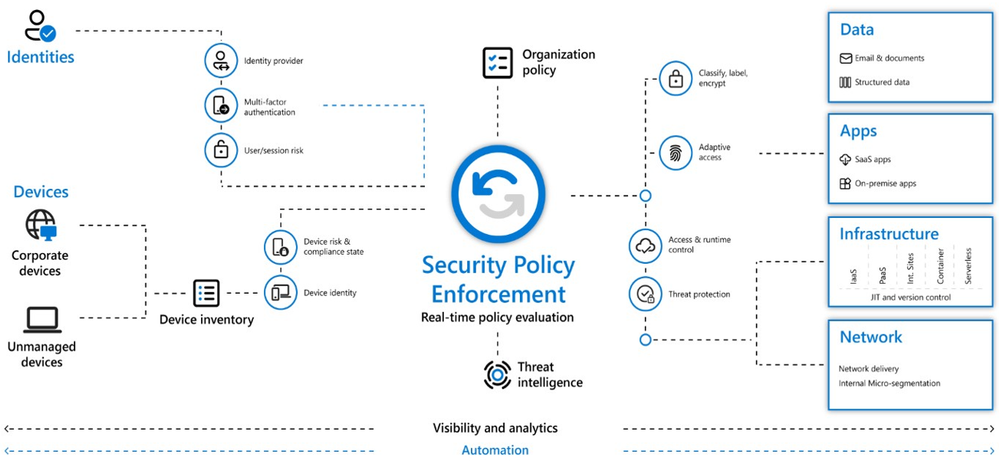

Zero Trust is a security architecture model that institutes a deny until verified approach to access resources from both inside and outside the network. This approach addresses the challenges associated with a shifting security perimeter in a cloud-centric and mobile workforce era. The core principle of Zero Trust is maintaining strict access control. This concept is critical to prevent attackers from pivoting laterally and elevating access within an environment.

At Microsoft, we define Zero Trust around universal principles.

- Verify Explicitly: Always authenticate and authorize based on all available data points, including user identity, location, device health, service or workload, data classification, and anomalies.

- Use Least Privileged Access: Limit user access with just-in-time and just-enough-access (JIT/JEA), risk-based adaptive policies, and data protection to secure both data and productivity.

- Assume Breach: Minimize blast radius for breaches and prevent lateral movement by segmenting access by the network, user, devices, and app awareness. Verify all sessions are encrypted end to end. Use analytics to get visibility, drive threat detection, and improve defenses.

These principles are technology-agnostic and aligned to six Zero Trust pillars.

Zero Trust Defined

- Identity: Identities, whether they represent people, services, or IoT devices, define the Zero Trust control plane. When an identity attempts to access a resource, verify that identity with strong authentication, and ensure access is compliant and typical.

- Endpoints: Once an identity accesses a resource, data can flow to different endpoints—from IoT devices to smartphones, BYOD to partner-managed devices, and on-premises workloads to cloud-hosted servers. This diversity creates a massive attack surface area. Monitor and enforce device health and compliance for secure access.

- Data: Ultimately, security teams are protecting data. Where possible, data should remain safe even if it leaves the devices, apps, infrastructure, and networks the organization controls. Classify, label, and encrypt data, and restrict access based on those attributes.

- Apps: Applications and APIs provide an interface for data consumption. They may be legacy on-premises, lifted-and-shifted to cloud workloads, or modern SaaS applications. Apply controls and technologies to discover shadow IT, ensure appropriate in-app permissions, gate access based on real-time analytics, monitor for abnormal behavior, control user actions, and validate secure configuration options.

- Infrastructure: Infrastructure—whether on-premises servers, cloud-based VMs, containers, or micro-services—represents a critical threat vector. Assess for version, configuration, and JIT access to harden defense. Use telemetry to detect attacks and anomalies, and automatically block and flag risky behavior and take protective actions.

- Network: All data transits over network infrastructure. Networking controls can provide critical controls to enhance visibility and prevent attackers from moving laterally across the network. Segment networks and deploy real-time threat protection, end-to-end encryption, monitoring, and analytics.

What is Trusted Internet Connections (TIC3.0)?

Trusted Internet Connections (TIC) is a federal cybersecurity initiative to enhance network and perimeter security across the United States federal government. The TIC initiative is a collaborative effort between the Office of Management and Budget (OMB), the Department of Homeland Security (DHS), Cybersecurity and Infrastructure Security Agency (CISA), and the General Services Administration (GSA). The TIC 3.0: Volume 3 Security Capabilities Handbook provides various security controls, applications, and best practices for risk management in federal information systems.

Is Zero Trust Equivalent to TIC 3.0?

No, Zero Trust is a best practice model and TIC 3.0 is a security initiative. Zero Trust is widely defined around core principles whereas TIC 3.0 has specific capabilities and requirements. This workbook demonstrates the overlap of Zero Trust Principles with TIC 3.0 Capabilities. The Azure Sentinel Zero Trust (TIC 3.0) Workbook demonstrates best practice guidance, but Microsoft does not guarantee nor imply compliance. All TIC requirements, validations, and controls are governed by the Cybersecurity & Infrastructure Security Agency. This workbook provides visibility and situational awareness for security capabilities delivered with Microsoft technologies in predominantly cloud-based environments. Customer experience will vary by user and some panels may require additional configurations for operation. Recommendations do not imply coverage of respective controls as they are often one of several courses of action for approaching requirements which is unique to each customer. Recommendations should be considered a starting point for planning full or partial coverage of respective requirements.

Deploying the Workbook

It is recommended that you have the log sources listed above to get the full benefit of the Zero Trust (TIC3.0) Workbook, but the workbook will deploy regardless of your available log sources. Follow the steps below to enable the workbook:

Requirements: Azure Sentinel Workspace and Security Reader rights.

1) From the Azure portal, navigate to Azure Sentinel

2) Select Workbooks > Templates

3) Search Zero Trust and select Save to add to My Workbooks

Navigating the Workbook

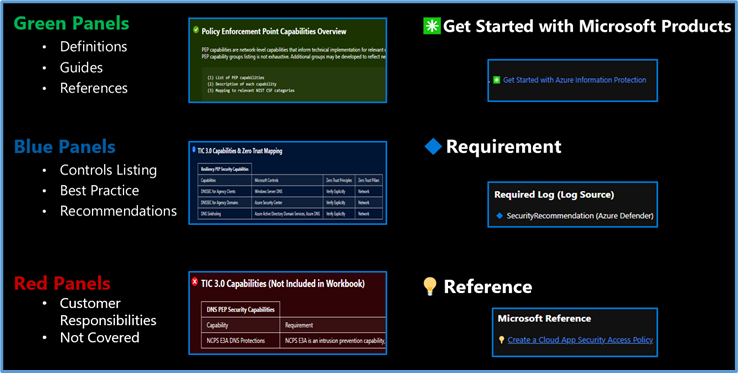

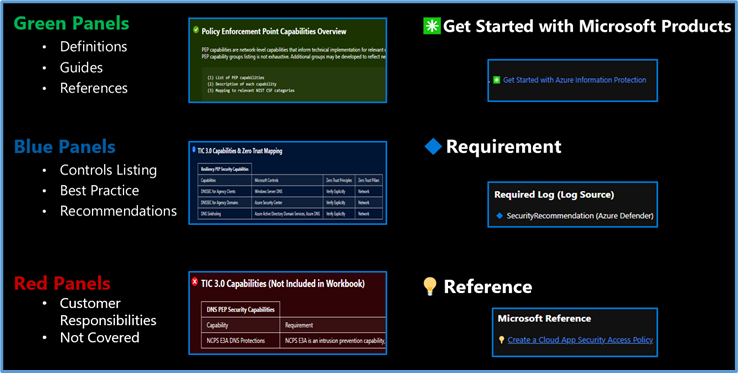

The Legend Panel provides a helpful reference for navigating the workbook with respective colors, features, and reference indicators.

Workbook Navigation

The Guide Toggle is available in the top left of the workbook. This toggle allows you to view panels such as recommendations and guides, which will help you first access the workbook but can be hidden once you’ve grasped respective concepts.

Guide Toggle

The Resource Parameter Options provide configuration options to sort control cards by Subscription, Workspace, and Time Range. The Parameter Options are beneficial for Managed Security Service Providers (MSSP) or large enterprises that leverage Azure Lighthouse for visibility into multiple workspaces. It facilitates assessment from both the aggregate and individual workspace perspectives. Time range parameters allow options for daily, monthly, quarterly, and even custom time range visibility.

Resource Parameter Options

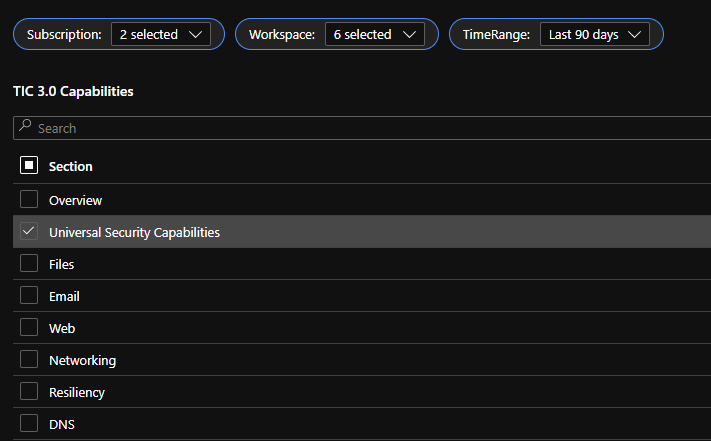

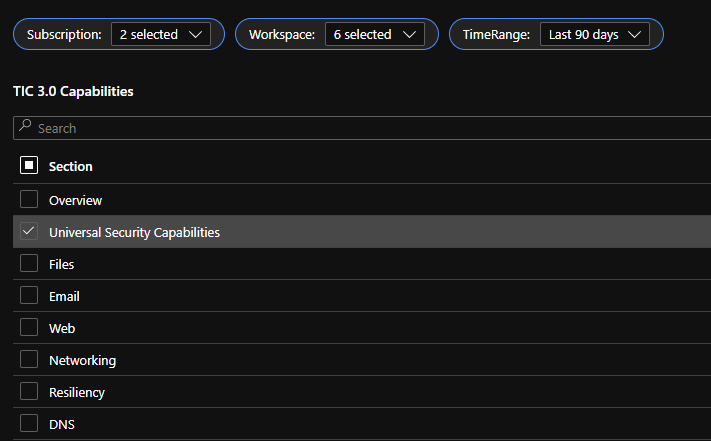

The Capabilities Ribbon provides a mechanism for navigating the desired security capabilities sections highlighted in the TIC3.0 framework. Selecting a capability tab will display Control Cards in the respective area. An Overview tab provides more granular detail of the overlaps between the Microsoft Zero Trust model and the TIC3.0 framework.

Capabilities Selector

This workbook leverages automation to visualize your Zero Trust security architecture. Is Zero Trust the same as TIC 3.0? No, they’re not the same, but they share numerous common themes which provide a powerful story. The workbook offers detailed crosswalks of Microsoft’s Zero Trust model with the Trusted Internet Connections (TIC3.0) framework to better understand the overlaps.

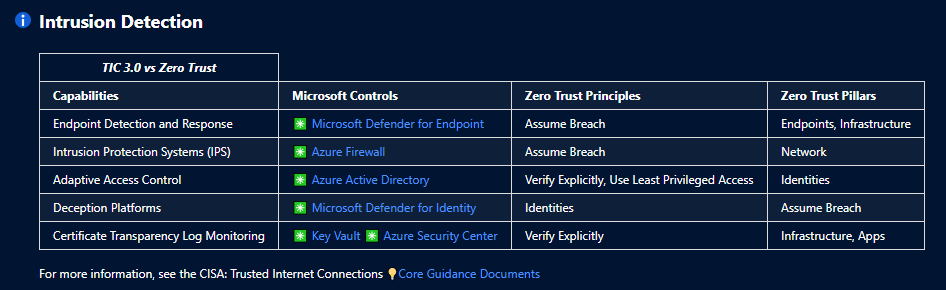

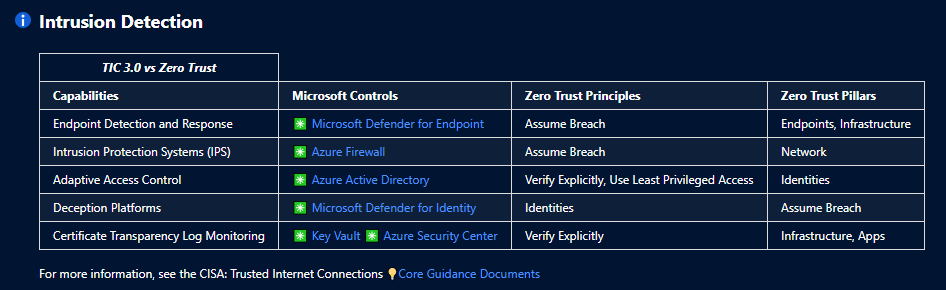

TIC 3.0 Overlay to Microsoft Offerings and Zero Trust Principles

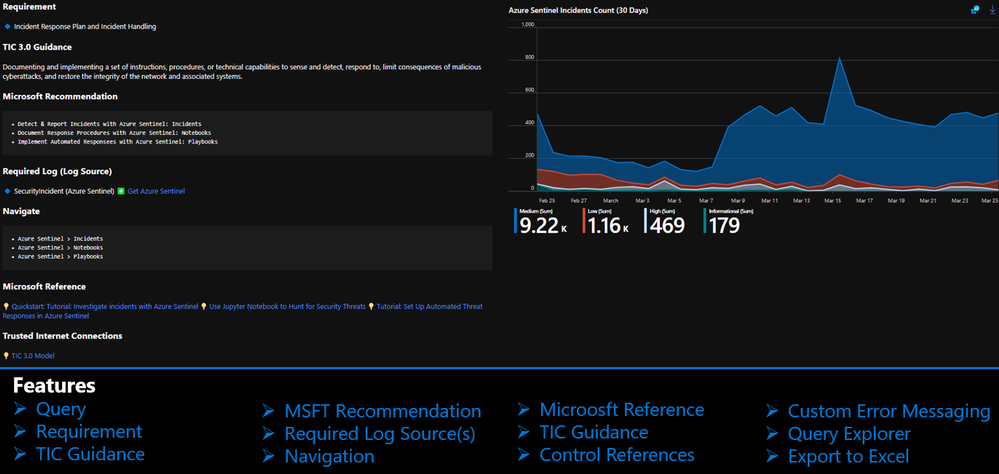

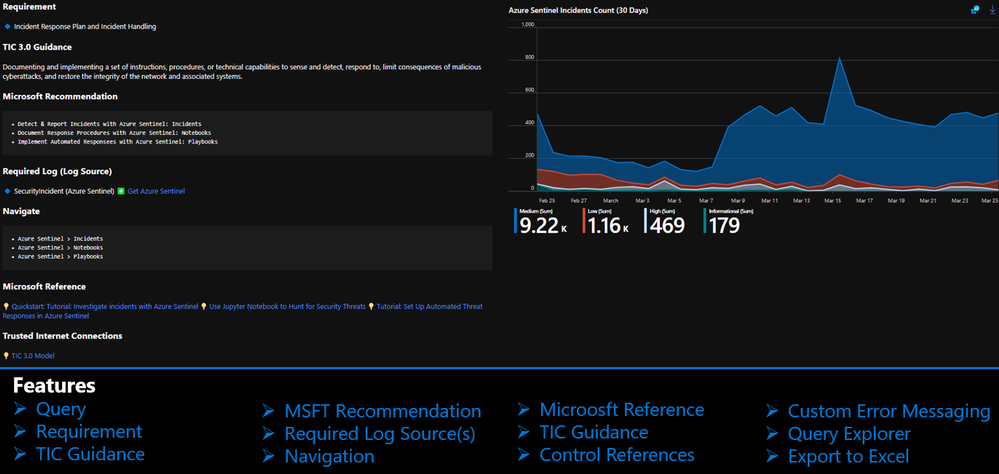

The Azure Sentinel Zero Trust (TIC3.0) Workbook displays each control in a Capability Card. The Capability Card provides respective control details to understand requirements, view your data, adjust SIEM queries, export artifacts, onboard Microsoft controls, navigate configuration blades, access reference materials, and view correlated compliance frameworks.

Capability Card

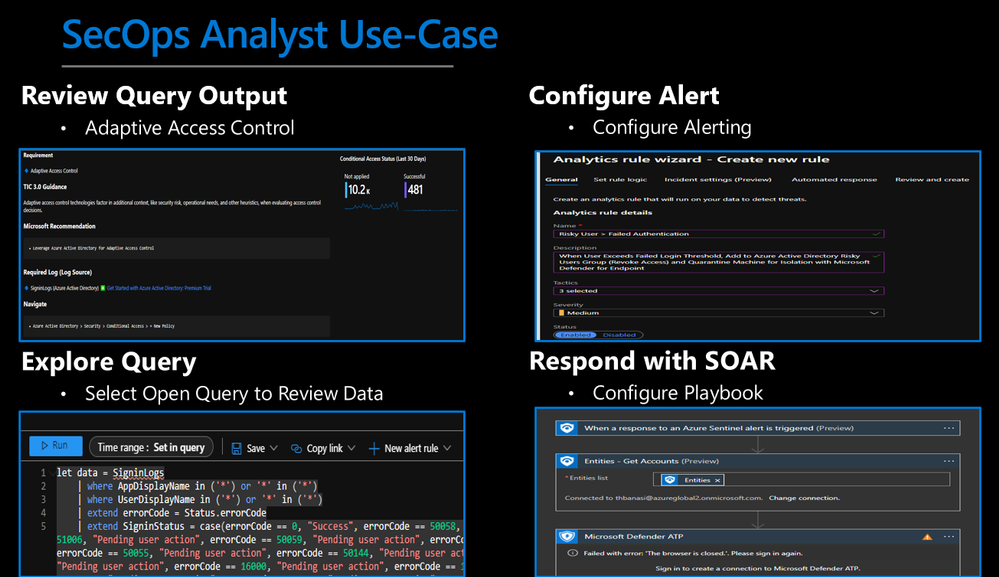

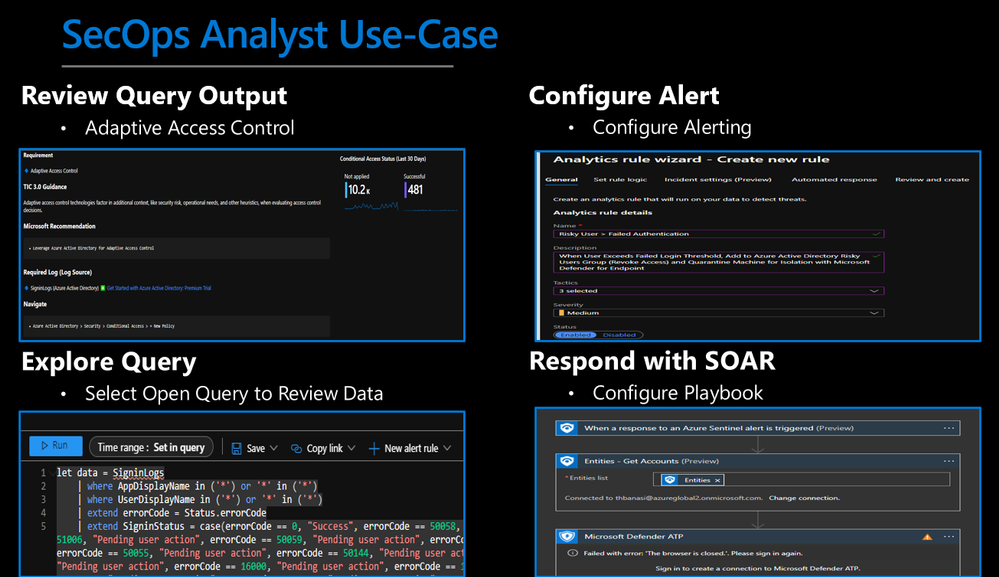

How to Use It?

There are several use cases for the Azure Sentinel Zero Trust (TIC 3.0) Workbook depending on user roles and requirements. The graphic below shows how a SecOps analyst can leverage the workbook to review requirements, explore queries, configure alerts, and implement automation. There are also several additional use cases where this workbook will be helpful:

- Security Architect: Build/design a cloud security architecture to compliance requirements.

- Managed Security Services Provider: Leverage the workbook for Zero Trust (TIC3.0) Assessments.

- SecOps Analyst: Review activity in query, configure alerts, deploy SOAR automation.

- IT Pro: Identify performance issues, investigate issues, set alerts for remediation monitoring.

- Security Engineer: Assess security controls, review alerting thresholds, adjust configurations.

- Security Manager: Review requirements, analyze reporting, evaluate capabilities, adjust accordingly.

SecOps Analyst Use-Case

Configurations & Troubleshooting

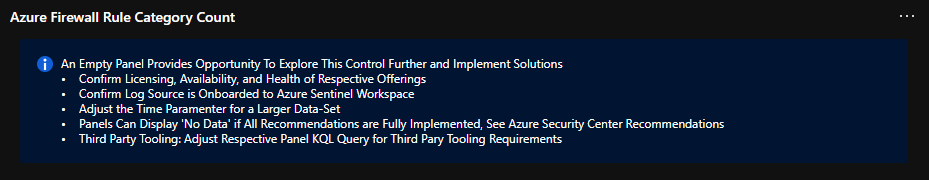

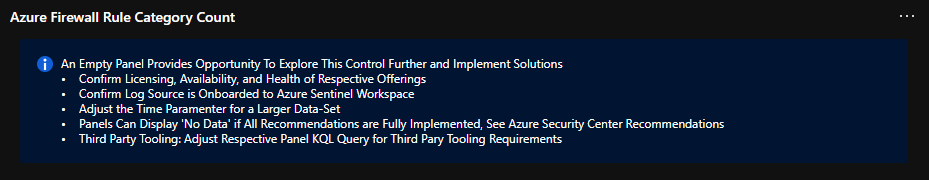

It’s important to note that this workbook provides visibility and situational awareness for control requirements delivered with Microsoft technologies in predominantly cloud-based environments. Customer experience will vary by user, and some panels may require additional configurations and query modification for operation. It’s unlikely that all 76+ panels will populate data, but this is expected as panels without data highlight respective areas for evaluation in maturing cybersecurity capabilities. Capability Cards without data will display the custom error message below. Most issues are resolved by confirming the log source’s licensing/availability/health, ensuring the log source is connected to the Sentinel workspace, and adjusting time thresholds for larger data sets. Ultimately this workbook is customer-controlled content, so panels are configurable per customer requirements. You can edit/adjust Control Card queries as follows:

- Zero Trust (TIC3.0) Workbook > Edit > Edit Panel > Adjust Panel KQL Query > Save

Custom Error Messaging

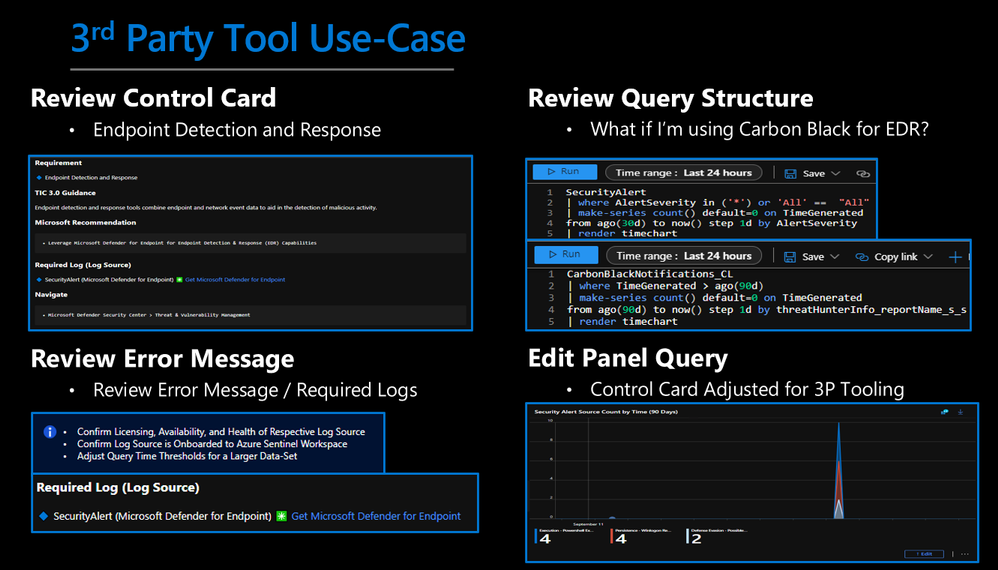

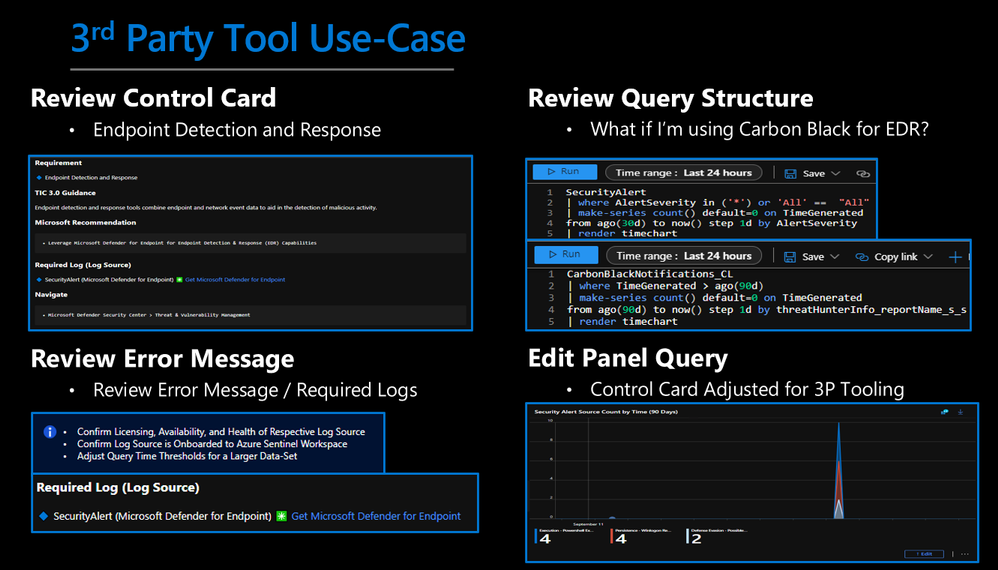

While using Microsoft offerings for the Zero Trust (TIC3.0) Workbook is recommended, it’s not a set requirement as customers often rely on many security providers and solutions. Below is a use-case example for adjusting a Control Card to include third-party tooling. The default KQL query provides a framework for target data, and it is readily adjusted with the desired customer controls/solutions.

3rd Party Tool Use-Case

Get Started with Azure Sentinel and Learn More About Zero Trust with Microsoft

Below are additional resources for learning more about Zero Trust (TIC3.0) with Microsoft. Bookmark the Security blog to keep up with our expert coverage on security matters and follow us at @MSFTSecurity or visit our website for the latest news and cybersecurity updates.

Disclaimer

The Azure Sentinel Zero Trust (TIC 3.0) Workbook demonstrates best practice guidance, but Microsoft does not guarantee nor imply compliance. All TIC requirements, validations, and controls are governed by the Cybersecurity & Infrastructure Security Agency. This workbook provides visibility and situational awareness for control requirements delivered with Microsoft technologies in predominantly cloud-based environments. Customer experience will vary by user, and some panels may require additional configurations and query modification for operation. Recommendations do not imply coverage of respective controls as they are often one of several courses of action for approaching requirements which is unique to each customer. Recommendations should be considered a starting point for planning full or partial coverage of respective control requirements.

by Contributed | May 5, 2021 | Technology

This week we are announcing preview support for Microsoft Azure SQL Edge on Red Hat Enterprise Linux (RHEL). Developers can now easily extend their existing SQL Server 2019 offerings for RHEL and Red Hat OpenShift to IoT edge gateways and devices for consistent edge-to-cloud data management.

Azure SQL Edge is a small-footprint, edge-optimized database delivered as an Open Container Initiative (OCI) compatible container for connected, disconnected, or hybrid environments. It supports the latest generation of the Podman container management tools on RHEL 8. Features include built-in data streaming and time series, in-database machine learning, and graph features for low-latency analytics, along with capabilities for machine learning at the edge to optimize bandwidth, reaction time, and cost. The availability of Azure SQL Edge on RHEL expands the existing ecosystem of tools, applications, frameworks, and libraries for building and running containers on the edge.

Because Azure SQL Edge is packaged as a container, it is easy to deploy and update on RHEL. You can pull the latest image down from an internal repository directly to your edge devices, from the official Microsoft container repository, or manage everything directly from Azure. A basic installation for an Azure SQL Edge developer edition on RHEL is as simple as running:

$ podman run --cap-add SYS_PTRACE -e 'ACCEPT_EULA=1' -e 'MSSQL_SA_PASSWORD=StrongPassword' -p 1433:1433 --name azuresqledge -d mcr.microsoft.com/azure-sql-edge

That’s it! The Azure SQL Edge database is up and running and you are ready to start developing your RHEL-based project on the edge.
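Before pointing a client at the new container, it can help to confirm that the published port is accepting connections. The following is a minimal Python sketch and is not part of the original announcement: the helper name `port_is_open` is hypothetical, and the host and port values are assumptions that mirror the `-p 1433:1433` mapping in the command above. It checks TCP reachability only; it does not confirm that the database engine has finished starting or that the SA login works.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts
        return False


if __name__ == "__main__":
    # Assumed values: local host and the port published by the podman command
    print(port_is_open("127.0.0.1", 1433))
```

If the check returns False shortly after starting the container, waiting a few seconds and retrying is a reasonable first step, since the port may be published before the engine is ready.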

Note that in the connected deployment scenario, Azure SQL Edge is deployed as a module of Azure IoT Edge. Azure IoT Edge’s platform support documentation lists RHEL as a Tier 2 system. This means RHEL is compatible with Azure IoT Edge but is not actively tested or maintained by Microsoft.

To learn more about Azure SQL Edge, visit the product page or watch the latest customer evidence stories.

by Contributed | May 5, 2021 | Technology

Microsoft Defender for Identity is removing non-secure cipher suites to provide best-in-class encryption and to make the service more secure by default. As of version 2.149 (expected to be deployed in the week commencing 23rd May), Microsoft Defender for Identity will no longer support the cipher suites listed below. From that date forward, any connection using these cipher suites will no longer work as expected, and no support will be provided.

Non-secure cipher suites:

- TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA

- TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA

- TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA

- TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA

Support will continue for the following suites:

- TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384

- TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256

- TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384

- TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

- TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA384

- TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256

- TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA384

- TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256
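If you keep an inventory of the cipher suites your proxies or inspection devices negotiate, the two lists above can be turned into a quick lookup. The sketch below is illustrative only and is not a Microsoft tool: the names `REMOVED_SUITES`, `SUPPORTED_SUITES`, `classify_suite`, and `audit` are hypothetical, and how you collect the suite names from your environment is outside its scope. The suite names themselves are copied from the lists in this post.

```python
# Suites the announcement lists as no longer supported from version 2.149
REMOVED_SUITES = frozenset({
    "TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA",
    "TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA",
    "TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA",
    "TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA",
})

# Suites the announcement lists as still supported
SUPPORTED_SUITES = frozenset({
    "TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384",
    "TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256",
    "TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384",
    "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",
    "TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA384",
    "TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256",
    "TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA384",
    "TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256",
})


def classify_suite(name: str) -> str:
    """Label a suite name as 'removed', 'supported', or 'not listed'."""
    normalized = name.strip().upper()
    if normalized in REMOVED_SUITES:
        return "removed"
    if normalized in SUPPORTED_SUITES:
        return "supported"
    # The announcement says nothing about suites outside the two lists
    return "not listed"


def audit(names):
    """Map each supplied suite name to its classification."""
    return {name: classify_suite(name) for name in names}


if __name__ == "__main__":
    # Hypothetical inventory, for illustration only
    for suite, status in audit([
        "TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA",
        "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",
    ]).items():
        print(f"{suite}: {status}")
```

A result of "not listed" only means the suite does not appear in either list in this post; it says nothing about whether the service accepts it.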

What do I need to do to prepare for this change?

Nothing – this change will be automatic and we don’t anticipate it affecting customer environments.

For additional inquiries please contact support.

– Microsoft Defender for Identity team.

by Contributed | May 5, 2021 | Technology

As schools begin to reopen across the world, concern about student wellbeing is at the forefront of many discussions. Educators want to help students recognize and navigate their emotions by providing regular opportunities to share and be heard.

This need inspired us to develop Reflect in Microsoft Teams for Education.

Reflect can help broaden learners’ emotional vocabulary and deepen empathy for their peers while also providing valuable feedback to educators for a healthy classroom community.

Whatever your role in the educational community (educator, school leader, or part of the wellbeing team), this blog post will show you how to support student wellbeing by encouraging reflective conversations in your school and making emotional check-ins a part of your routine.

Decades of research on the science of learning, and on the important role emotions play in how we humans learn and process information, have finally caught up with what most educators have always known: students need more than academics to successfully navigate their way through this complex world they have inherited. Social Emotional Learning (SEL) is about treating a student as a whole person, emotions and all, and cultivating the skills we all need to become engaged citizens leading happy, healthy, successful lives.

CASEL (Collaborative for Academic, Social, and Emotional Learning) defines SEL as:

The process through which children and adults acquire and effectively apply the knowledge, attitudes, and skills necessary to understand and manage emotions, set and achieve positive goals, feel and show empathy for others, establish and maintain positive relationships, and make responsible decisions.

Once we understand SEL, we can implement it throughout the school community. One tool that can help you is the new Reflect app in Microsoft Teams, and here are some tips on how to start.

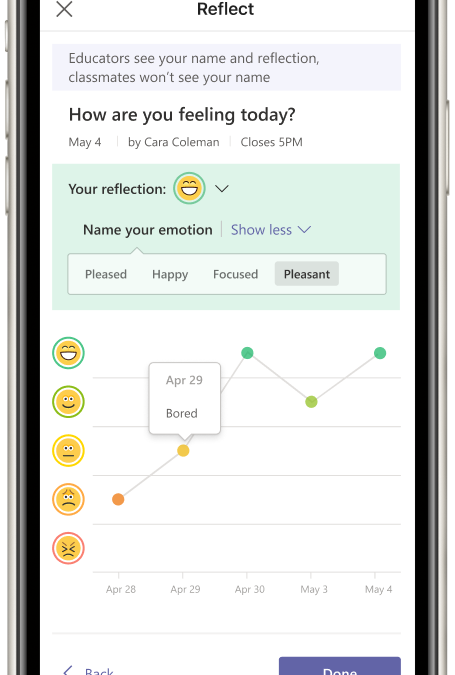

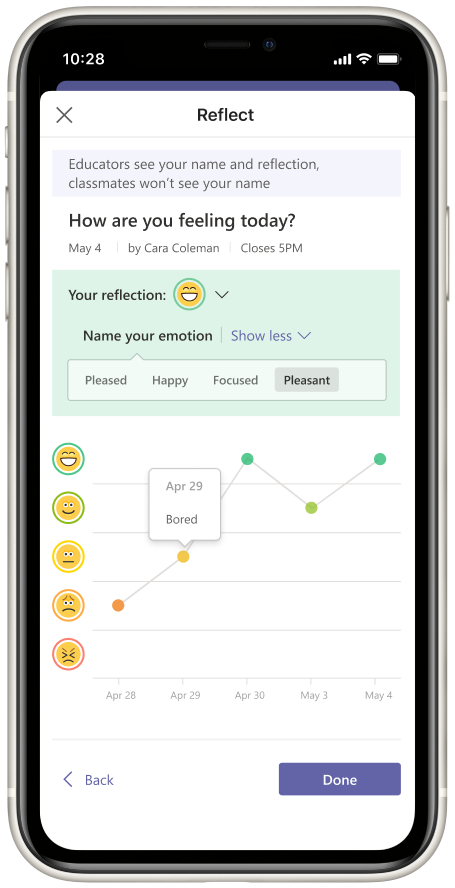

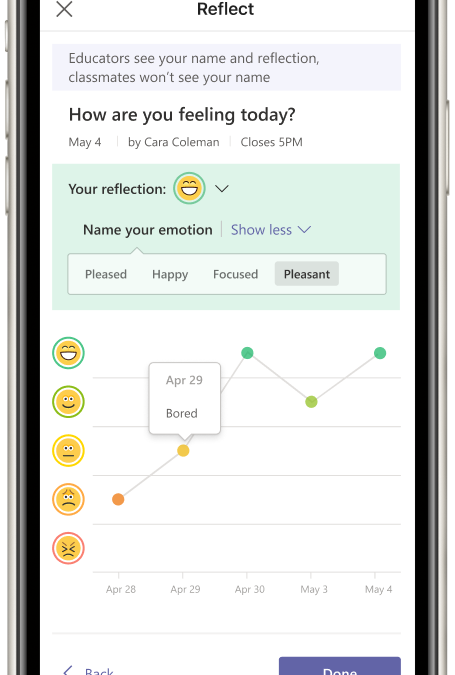

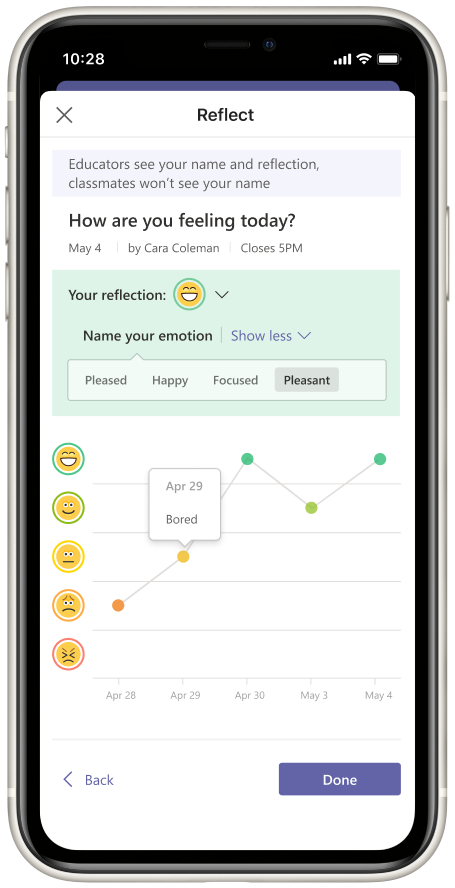

Student journal in Reflect

What is Reflect, and why it’s used

We want to build a school community that recognizes the whole student. It is important that we support them emotionally, understand when they are going through a rough time, and ensure that the classroom environment stays positive and conducive to learning.

Reflect is an emotional check-in app that helps educators support their students and the class as a whole. Reflect helps students recognize and navigate their emotions by providing regular opportunities to share and be heard. Reflect can help broaden a student’s emotional vocabulary and deepen empathy for their peers while also providing valuable feedback to educators for a healthy classroom community.

This check-in app uses emojis and research-backed emotional granularity to support educators in adding social and emotional learning and support into their routine.

Schools are reopening, Reflect is as valuable as ever

The future is unpredictable. Preparing youth for the challenges of tomorrow in the ambiguity of today is a hurdle that educators have taken in their stride. Social-emotional skills help us better navigate complexity and uncertainty while minimizing the adverse effects of disruption and sustaining the relationships that support the students. The past year’s changes have highlighted the importance of personal connection, accelerated the integration of technology in the classroom, and amplified the role of teachers. Adjusting back to in-person learning is a disruption in itself. Students will need support in re-establishing their bonds, noticing social cues, and communicating their needs to their peers and educators. A regular SEL routine like Reflect can provide an entry point for educators to host classroom conversations and a safe space for students to voice their concerns.

Privacy and Security

Reflect follows the same privacy and security standards as Education Insights to protect students’ sensitive information.

The information collected and shown through Reflect meets more than 90 regulatory and industry standards, including GDPR and the Family Educational Rights and Privacy Act (FERPA) for the security of students and children, as well as other similar privacy-oriented regulations.

Students never see the names of other students, only how they responded. While they can see the distribution of responses, they cannot see the student names associated with each reflection.

Steps to successful Reflect adoption

1. Enable Reflect

You can locate the Reflect app through the Teams apps gallery or using this link: https://aka.ms/getReflect.

If you don’t find the Reflect app in the gallery or if the link is not accessible, it may be that your IT Admin has limited the app in your tenant. In such a case, please refer your IT Admin to the following document: IT Admin Guide to Reflect in Microsoft Teams.

Only when Reflect is allowed can educators see and use the app in their classes.

Guest users (students or educators) cannot use Reflect.

2. Work with educators, so they understand the importance of regular check-ins and emotional granularity

Reflect helps educators easily check how their students feel in general or about a specific topic, such as learning from home, an assignment, current events, or a change within their community.

Emotional granularity is the ability to differentiate between emotions and articulate the specific emotion experienced. Developing language to talk about feelings is foundational to social and emotional learning. Accurately defining our emotions can help identify the source of the feeling and plan to meet our needs.

Introduce your educators to the app and show them how it can support a positive emotional climate in the classroom. Reflect provides an emotional safe space to grow student-teacher connections and help students develop their emotional vocabulary. We worked with SEL experts to select 50 emotion words that students can identify with as they reflect.

3. Ask educators to try Reflect and share their feedback

The first step is always the hardest. Reflect provides a variety of questions educators can ask their class for some common scenarios. The questions are a closed list, developed with the help of experts, and designed to provide Insights when associated with other data. One way to customize a Reflect check-in is to publish it and then “reply” to the message with specific guidelines.

Ask the educators to share their experiences using Reflect so that you can support them and their specific needs.

Choose a check-in question in Reflect

We have created a resource page for educators that includes instructions, short videos, and a free course on getting started.

Listen to their feedback, and work with them to find what works best for their classes.

* The ability to schedule repeat check-ins and access detailed data for Reflect in Insights will be available in summer 2021.

4. Ask educators to use Reflect as part of their routine

Encouraging communication about emotions and supporting students in voicing their needs can go a long way toward preventing feelings of isolation, frustration, and disengagement. Once educators understand how Reflect supports them, suggest to them that they use it as part of their routine.

Here are some suggestions for using Reflect in the classroom.

- Implement a daily check-in to provide students with opportunities to practice evaluating and naming their emotions and ensure educators have a touchpoint with every student every day.

- Jumpstart conversations with students after a difficult day by asking What does the mood in our classroom feel like? This can be an excellent opportunity to model self-reflection and set goals together as a class.

- To support learning, assess student confidence about a concept or assignment, and plan accordingly.

- Celebrate wins with a Reflect check-in after a successful activity. Remember, mistakes can be framed as successes too!

- Identify dynamics between students by asking How do your friendships feel today? This can be paired with conversations or stories about sharing, bullying, and healthy relationships.

5. Empower the educator

The educator has complete control of when (or even if) to use Reflect. Only they should decide whether to post a new Reflect check-in, when to post it, and what type it should be. If an educator feels it is not suitable for their classroom, or that they cannot support the students that way, they will not publish a new check-in, and you need to support them in that decision.

6. Don’t set goals for students

There is no right or wrong answer when dealing with emotions. All feelings are valid, and we want students to provide an honest assessment from their perspective and feel safe when describing their emotions. The goal is to develop a deeper understanding of student needs and work together to create a more positive climate and foster greater well-being in class.

We want to make the classroom a place where student emotions are valued, and everyone feels safe and heard.

7. Use Insights reports

Through Education Insights, educators can see how students respond to check-ins over time, making it easier to identify and address student needs.

The digital activity report now includes Reflect check-in data, with additional Reflect insights coming soon.

Education Insights – Reflect check-ins in the digital activity report

Educators can also track the adoption of the tool amongst their students to make sure they feel comfortable sharing their emotions. If there is low adoption, you may want to provide a framework to help establish the classroom as a safe space to share and support them in developing the emotional vocabulary needed to feel confident in sharing.

If you have Education Insights Premium (currently in preview), you can also see adoption across classes, grade level, school, etc., in your organizational view, and provide support and assistance for those who either don’t use Reflect at all or stopped using it after the initial adoption.

We’re always looking for ways to make Education Insights and Reflect better. Have questions, comments, or ideas? Let me know! Add your idea here, share your comment below, and find me on Twitter (@grelad).

Elad Graiver

Senior Program Manager, Education Insights and Reflect

by Contributed | May 5, 2021 | Technology

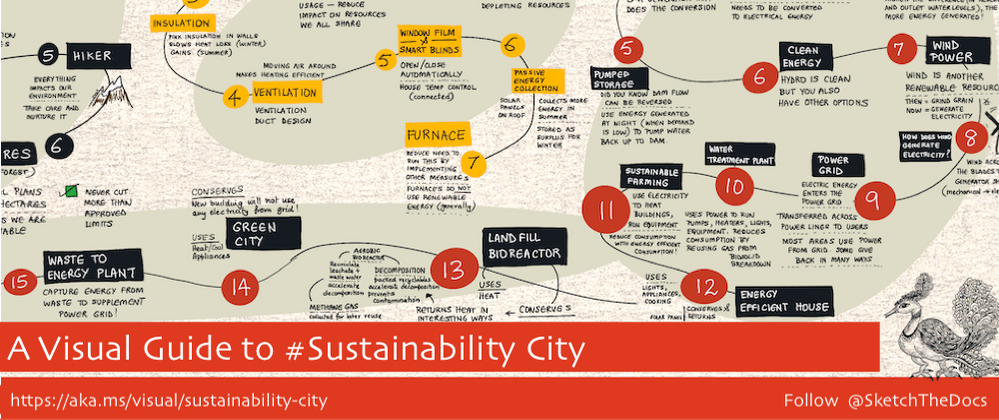

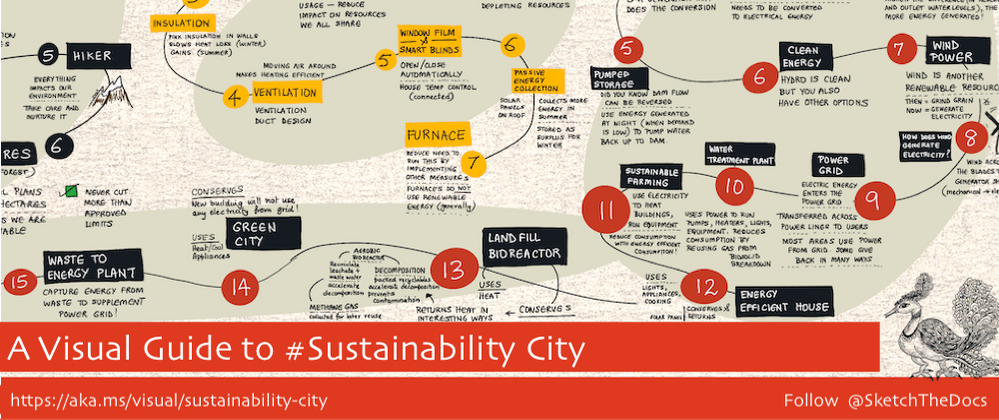

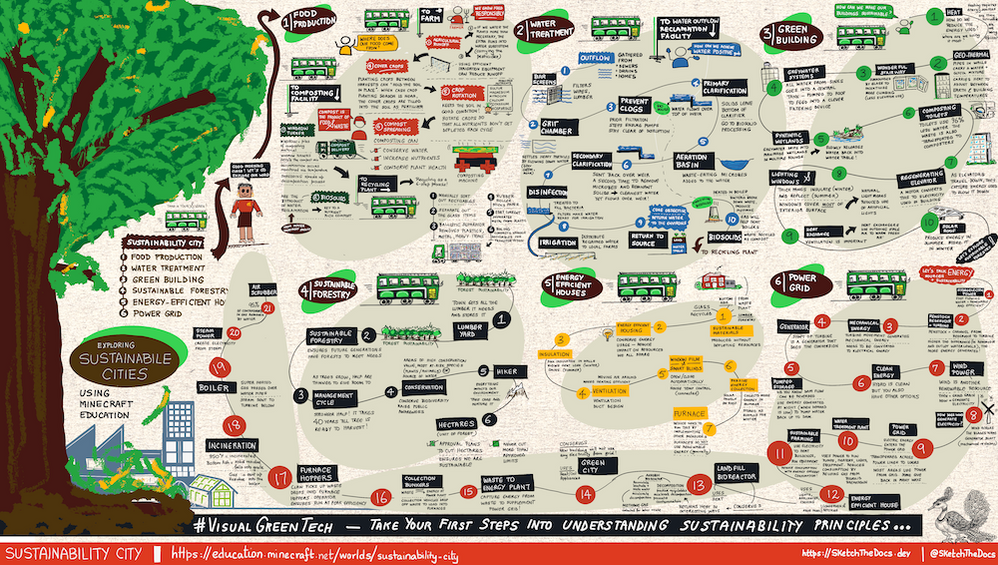

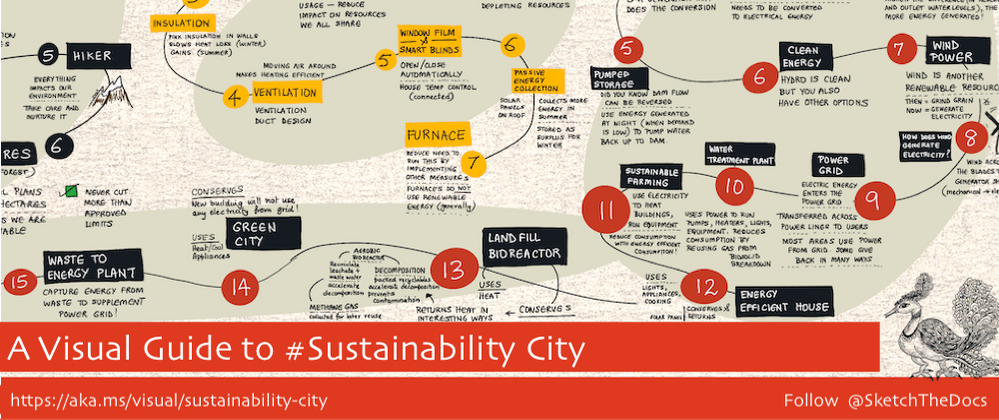

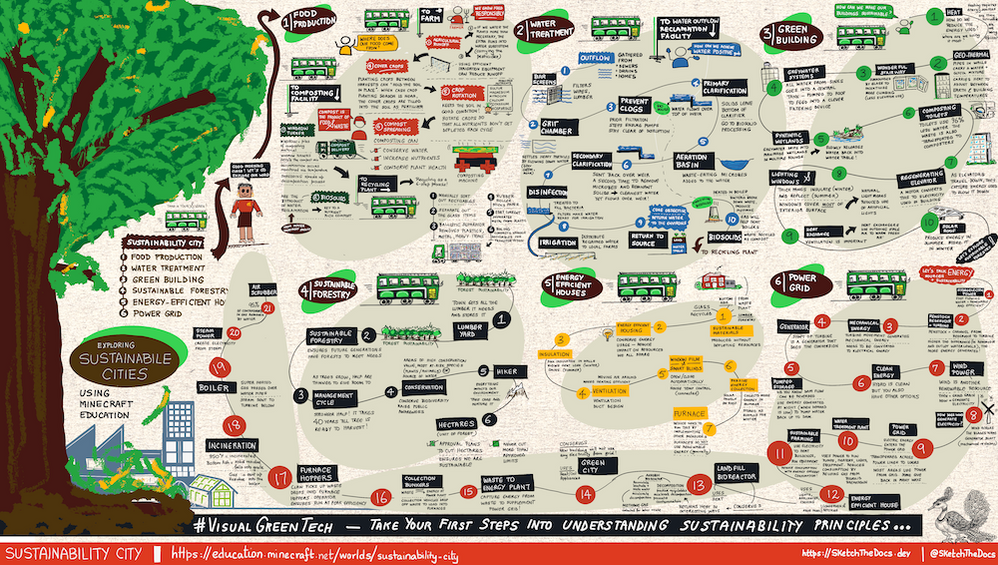

A Visual Guide To #Sustainability City

Welcome to the second in my series of visual guides focused on the topic of Sustainability and Green Tech. The first one focused on a Visual Guide To Sustainable Software Engineering, visualizing the core philosophies and eight principles of sustainable software engineering, as defined by this fantastic Microsoft Learn Module.

Putting Philosophy Into Practice

In fact, it was that first philosophy (“Everyone has a part to play in the climate solution”) that inspired me to work with the Microsoft Green Cloud Advocacy team on the #VisualGreenTech challenge for EarthDay and co-host a special Earth Day themed episode of #HelloWorld featuring Green Tech experts from Microsoft.

The challenge itself featured 24 prompts, three of which explored Sustainability interactively using Minecraft Education Edition resources for Earth Day. The first of these prompts focused on Sustainability City – a Minecraft world where you can take green buses around a bustling city, visiting various facilities to learn about sustainable practices targeting water treatment, food production, sustainable forestry, green buildings, energy-efficient homes, and the power grid. This community-created video does a great job of navigating the world in under eight minutes.

It was there that my personal journey into putting that philosophy to practice began!

Using Minecraft To Motivate Sustainability Education

Minecraft is an amazing resource for educating K-12 students on sustainability in actionable ways. Minecraft Education Edition provides downloadable worlds that students can navigate, and it has detailed lesson plans with activities and discussion guides to help students go from awareness to engagement and action.

In my case, I took advantage of our Microsoft employee access to Minecraft Education Edition to begin a Sustainability City journey with my 12-year-old. We took every bus, talked to every character, and had interesting follow-up conversations like: Where does our water come from? How can we be more sustainable at home during the pandemic? And my favorite: Should we create our own vegetable garden so we know where our food comes from?

If you are a parent, I strongly advocate for doing this exploration with kids and using the visual guide below to have a conversation once you leave the world. If you are an educator with access to this edition of Minecraft, I hope you find this visual guide a good resource for classroom conversations or continued awareness of what they learned, once they have completed that lesson.

Visual Guide & Navigation

Here is the visual guide to Sustainability City. You can find a hi-res downloadable version of this visual guide here – warning: this is a large file (13MB) so make sure you have the data/bandwidth to download it. See this tweet for a behind-the-scenes time-lapse replay of how it was created.

The visual guide has six sections, each mapping to one of the regions of Sustainability City. Start from the top left and work your way clockwise to the last one. Here is what you’ll learn. Start with Food Production to explore sustainable farming practices including water reclamation and composting. Next, travel to the Water Outflow Reclamation Facility to learn how water from sewers and drains is “cleaned” and used for irrigation or returned to source (water positive) – the removed biosolids become fodder for composting.

Then, explore sustainable practices in the construction of large buildings (making them self-sustaining in energy needs) and explore the sustainable forestry practices required to support our lumber needs. Finally, we look at energy-efficient housing and explore the power grid in some detail. As we know, electricity is a proxy for carbon, and understanding the various ways we generate, transport, and use energy is critical to sustainability education.

I hope you found the guide useful. Making visual guides takes time but is infinitely rewarding. Have comments or feedback? Do leave them below.

by Contributed | May 5, 2021 | Technology

Today and every Wednesday, Data Exposed goes live at 9AM PT on LearnTV. Every 4 weeks, we’ll do a News Update. We’ll include product updates, videos, blogs, etc., as well as upcoming events and things to look out for. We’ve included an iCal file, so you can add a reminder to tune in live to your calendar. If you missed an episode, you can find them all at https://aka.ms/AzureSQLYT.

You can read this blog to get all the updates and references mentioned in the show. Here’s the May 2021 update:

Product updates

The two main updates for this month are for Azure SQL Managed Instance and Azure SQL Database Hyperscale.

Azure SQL Managed Instance announced the general availability of service-aided subnet configurations. Service-aided subnet configuration allows users to remain in full control of TDS traffic, and Azure SQL Managed Instance takes responsibility to ensure uninterrupted flow of management traffic in order to meet SLAs. This configuration builds on top of the virtual network subnet delegation feature to make it easier for customers, by providing automatic network configuration management and enabling service endpoints.

These service endpoints or ‘service tags’ provide a way to reference essentially all IP addresses related to a given service in a specific region. For example, if you want to configure access to storage accounts in the West US region to keep backups and audit logs, you could use the tag Storage.WestUs. Then, you can create a user-defined route (UDR) as the mechanism that allows traffic to leave the SQL MI virtual network and reach your storage account.

The other major announcement is a public preview for geo-replication in the Azure SQL Database Hyperscale service tier. This capability has been available in other Azure SQL Database tiers for a while, and it enables cross-region business continuity and disaster recovery by allowing you to create one or more replicas in the same or different regions. This announcement brings Azure SQL Hyperscale one step closer to full feature parity with Azure SQL Database. Learn more about the preview here.

From a tools perspective, special guest Alexandra Ciortea came on to talk about SQL Server Migration Assistant (SSMA) and the latest release. She mentioned several great references for how to stay up to date, which you can find below:

Videos

We continued to release new and exciting Azure SQL episodes this month. Here is the list, or you can just see the playlist we created with all the episodes!

- Kate Smith: Understanding the Benefits of Intelligent Query Processing

- [MVP Edition] Argenis Fernandez: Storage 101 for Azure SQL and SQL Server Engineers

- Aaron Nelson: How to Parameterize Notebooks for Automation in Azure Data Studio

- Amit Khandelwal: Use Helm Charts from Windows Client Machine to Deploy SQL Server 2019 Containers on Kubernetes

- Manoj Raheja: Get Started with Azure Data Explorer using Apache Spark for Azure Synapse Analytics

- Mohamed Kabiruddin: Get Started with the New Database Migration Guides to Migrate your Databases to Azure

We’ve also had some great Data Exposed Live sessions. Subscribe to our YouTube channel to see them all and get notified when we stream. Here are some of the recent live streams.

- Something Old, Something New: Do Kangaroos Prefer Cake or Frosting?

- Azure SQL Security Series: Understanding Access and Authentication

- Ask the Experts: SQL Edge to Cloud

Blogs

As always, our team is busy writing blogs to share with you all. Blogs contain announcements, tips and tricks, deep dives, and more. Here’s the list I have of SQL-related topics you might want to check out.

- Azure Blog, data-related

- SQL Server Tech Community

- Azure SQL Tech Community

- Azure SQL Devs’ Corner

- Azure Database Support (SQL-related posts)

Special Segment: SQL in a Minute, Microsoft Docs

We introduced a new segment this month, SQL in a Minute, led by Cheryl Adams. Cheryl and Jason Roth came on to talk about the latest updates and how to contribute to Microsoft Docs. You can find the documentation at https://aka.ms/sqldocs and the contributors guide at https://aka.ms/editsqldocs.

Special Segment: Citus and Open Source Updates

Claire Giordano, Principal PM Manager in Azure Data, came on the show to share some resources and updates around Citus and open source databases. Here are some references if you want to learn more:

Upcoming events

As always, there are lots of events coming up this month. Here are a few to put on your calendar and register for:

May 10: SQLDay 2021

Azure SQL Workshop, Bob Ward and Anna Hoffman

Keynote, Bob Ward

SQL Day Q&A, Bob Ward and Buck Woody

Inside the Memory of SQL, Bob Ward

Presentation Skills for the Data Profession, Buck Woody

May 11: Azure Webinar Series: Debugging Web Apps on Azure App Service and Azure SQL

May 14: PyCon 2021

What we learned from Papermill to operationalize notebooks, Alan Yu and Vasu Bhog

May 15: Data Weekender

Keynote – Into the Dataverse, Buck Woody

May 15: Data Saturday Southwest US

Keynote, Buck Woody

May 18: Redgate Azure SQL Managed Instance Webinar

Inside Azure SQL Managed Instance, Bob Ward

May 18: Techorama

From Oops to Ops: Incident Response with Notebooks, Julie Koesmarno and Shafiq Rahman

May 20: Azure Webinar Series: Implementing DevOps Best Practices on Azure SQL

May 25-27: Microsoft Build

Keynote, Rohan Kumar and Jason Anderson

Learn Live: Build full stack applications with Azure Static Web Apps and Azure SQL Database, Anna Hoffman and Davide Mauri

Roundtable: Develop Apps with SQL DB, Anna Hoffman, Davide Mauri, Sanjay Mishra

Roundtable: Mission Critical workloads on Azure SQL DB, Anna Hoffman, Abdul Sathar Sait, Sanjay Mishra, Roberto Bustos, Emily Lisa

In addition to these upcoming events, here’s the schedule for Data Exposed Live:

May 12: Azure SQL Virtual Machine Reimaged Series with David Pless and Pam Lahoud

May 19: Deep Dive: Azure SQL Insights with Alain Dormehl

Plus find new, on-demand Data Exposed episodes released every Thursday, 9AM PT at aka.ms/DataExposedyt

Featured Microsoft Learn Module

Learn with us! This month I highlighted the module Deploy highly available solutions by using Azure SQL. Check it out!

By the way, did you miss Learn Live: Azure SQL Fundamentals? On March 15th, Bob Ward and I started delivering one module per week from the Azure SQL Fundamentals learning path (https://aka.ms/azuresqlfundamentals ). Head over to our YouTube channel https://aka.ms/azuresqlyt to watch the on-demand episodes!

Anna’s Pick of the Month

This month I am highlighting the Azure SQL Digital Event that happened on May 4th. This was an amazing event led by Azure Data CVP Rohan Kumar. Rohan spoke about the current and future innovations in Azure SQL, and the event was hosted by Bob Ward with a cameo from Scott Guthrie. I got to demo a few cool things, along with several other Microsoft Product Group members and Microsoft MVPs. You don’t want to miss the on-demand! Register at https://aka.ms/AzureSQLDigitalEvent to get the recordings.

Until next time…

That’s it for now! Be sure to check back next month for the latest updates, and tune in to Data Exposed Live every Wednesday at 9AM PT on LearnTV. We also release new episodes on Thursdays at 9AM PT and new #MVPTuesday episodes on the last Tuesday of every month at 9AM PT at aka.ms/DataExposedyt.

Having trouble keeping up? Be sure to follow us on Twitter, @AzureSQL, to get the latest updates on everything. You can also download the iCal file with a recurring invite!

We hope to see you next time, on Data Exposed :)

–Anna and Marisa

by Contributed | May 5, 2021 | Technology

We are pleased to share a set of new resources on storytelling for Champions!

Our overall goal in delivering these new resources is to enable you and your organization to achieve greater results from your adoption and change management programs. Stories are an amazing way to connect with people through shared experiences, to inspire others to act, and to communicate with influence. Storytelling can play a key role in any change journey as a powerful way to showcase examples of the new behavior in action.

I have worked with enterprise customers at Microsoft for nearly two decades and have seen the impact of great adoption success stories when they are shared by champion business users who have realized the positive impact of change. This has helped them grow and enhance executive sponsorship, lowered resistance to change among later adopters, inspired new ideas, and reinforced the value of the change program.

The resources include:

- A new storytelling guide that includes:

- A framework for finding and growing good success stories: Empower, Capture, Amplify, Learn;

- A sample structure for capturing compelling stories: Situation, Complication, Resolution, The Point;

- And additional learning resources.

- A storytelling course on LinkedIn Learning where you can also earn a certificate for completion.

We encourage you to take a look and use these resources with your teams. We also welcome your feedback here on how we can improve this and other resources on the site to keep inspiring your communities to achieve more!

Access the content at storytelling for Champions.
