by Contributed | May 12, 2021 | Technology

This article is contributed. See the original author and article here.

Do you already have a solution in place to deal with insider risks? When it comes to remediating insider risks, many organizations either deploy a simple transactional, rules-based solution such as data loss prevention (DLP) or they deploy a much more complex and resource-intensive solution such as user and entity behavior analytics (UEBA). From our own experience and what we’ve learned from our Microsoft 365 customers, neither of these two approaches is effective when it comes to addressing insider risks.

While identifying insider risks can be complex, implementing a holistic solution that looks end-to-end will allow you to reduce the complexity and zero in on the relevant trends that lead to heightened risk. With privacy built-in, pseudonymization by default, and strong role-based access controls, Insider Risk Management is used by companies worldwide to identify insider risks and take action with integrated collaboration workflows.

To help organizations quickly get started in identifying and accelerating time to action on insider risks, we released a number of capabilities at Ignite. Today we are excited to announce the public preview of additional new features that further broaden the analytics and investigation capabilities already in the solution, making it easier to investigate and act on insider risks.

Enabling a richer and more efficient investigation experience

Machine learning technology is amazing in that it can reason over and correlate millions of disparate signals to identify hidden risks. This is why we have several machine learning-based policy templates focused on specific risks, such as IP theft by departing employees and confidential data leakage by disgruntled employees, built into the Insider Risk Management solution.

However, what happens if you become aware of a potential insider risk through a tip? How do you efficiently investigate this tip without having to resort to manually trying to piece together various activities?

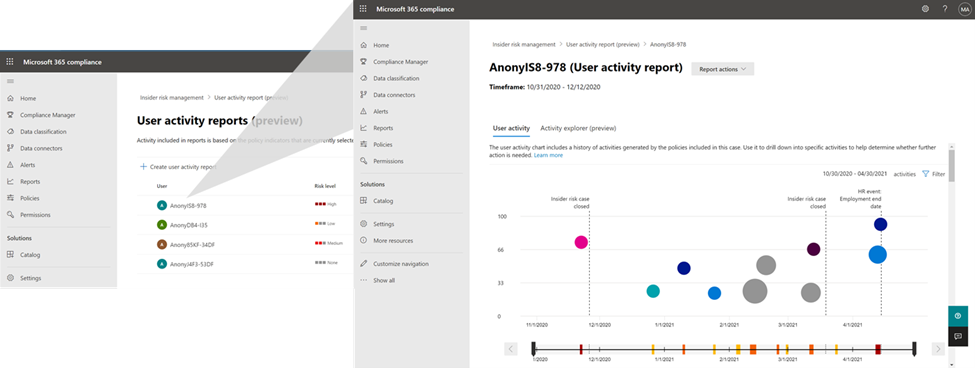

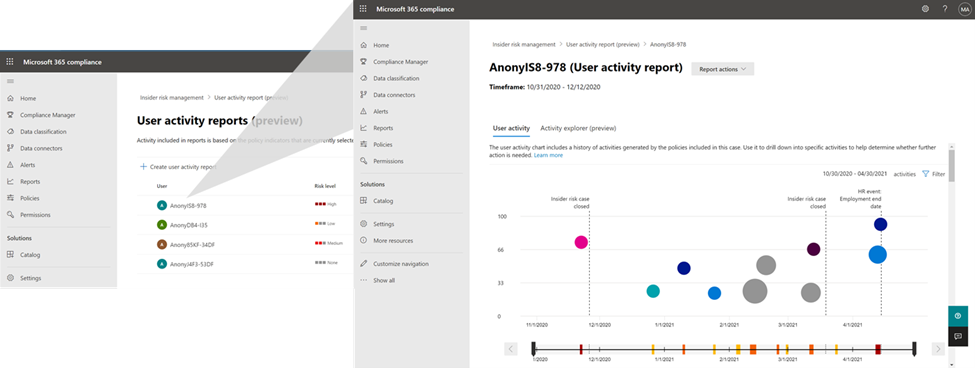

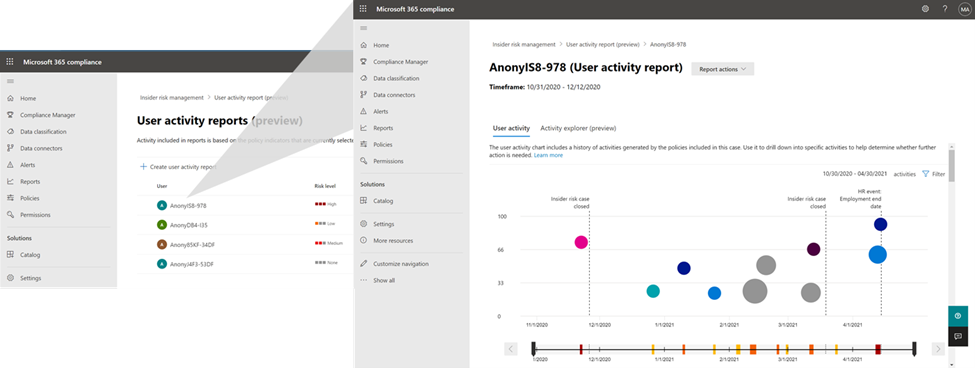

This is where the new User Activity report is valuable. This new capability lets the Investigator in Insider Risk Management generate a report of the relevant activities of the user named in a tip, and quickly investigate those activities to understand the risk.

User Activity report


To make the alert review process more efficient, we have now enabled persistent filters. With this improved experience, selected filters on the alerts list, such as filters for policy, alert severity, alert status, and date range will persist throughout your alert review process. There is no need to reset filters to see your desired set of focused alerts as you move on to select the next alert to review. This provides a frictionless and efficient experience for analysts to quickly make progress through their queue of alerts.

Priority Content limits have also now been increased in policy from 15 to 50. This means you can select up to 50 SharePoint sites, 50 Microsoft Information Protection Labels, and 50 Sensitive Information Types to prioritize in your policy. This allows you to broaden the activity and content that you want to prioritize for risk scoring and also investigate the potential impact when risks are identified.
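As a small illustration of the new limit, the sketch below checks a priority content selection against the limit of 50 items per category. The `validate_priority_content` helper, the category keys, and the sample values are all hypothetical and are not part of any Insider Risk Management API.

```python
PRIORITY_CONTENT_LIMIT = 50  # per category, as stated above (previously 15)


def validate_priority_content(policy):
    """Return a list of problems; an empty list means the selection fits the limit."""
    problems = []
    for category, items in policy.items():
        if len(items) > PRIORITY_CONTENT_LIMIT:
            problems.append(
                f"{category}: {len(items)} selected, limit is {PRIORITY_CONTENT_LIMIT}"
            )
    return problems


# Hypothetical selection: names and URLs are placeholders.
policy = {
    "sharepoint_sites": [f"https://contoso.example/sites/site{i}" for i in range(20)],
    "sensitivity_labels": ["Confidential", "Highly Confidential"],
    "sensitive_info_types": [f"custom-sit-{i}" for i in range(51)],
}

print(validate_priority_content(policy))
```

Here only the third category exceeds the limit, so it is the only one reported.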

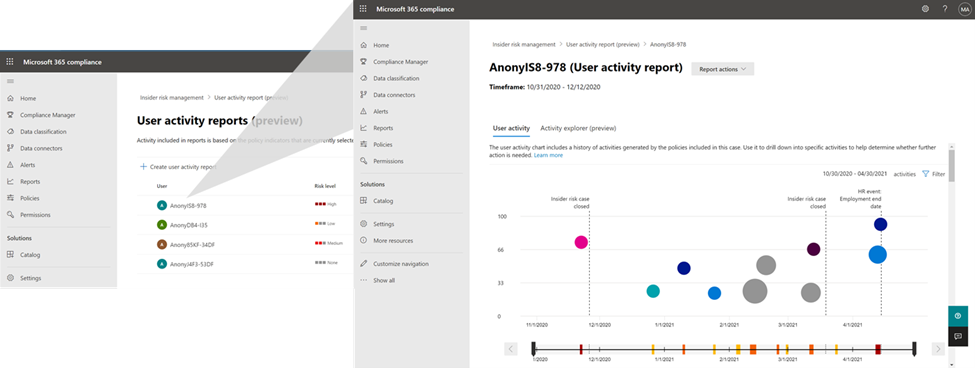

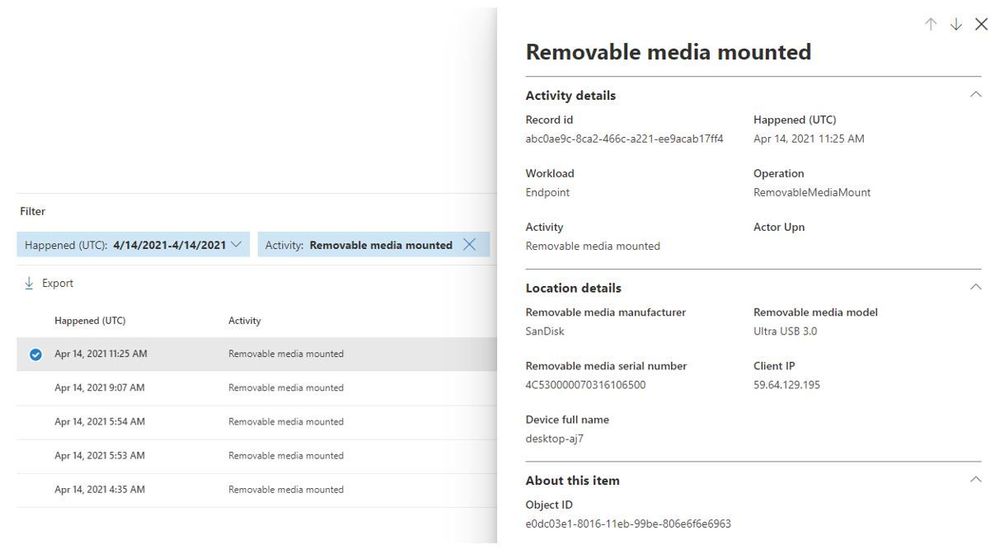

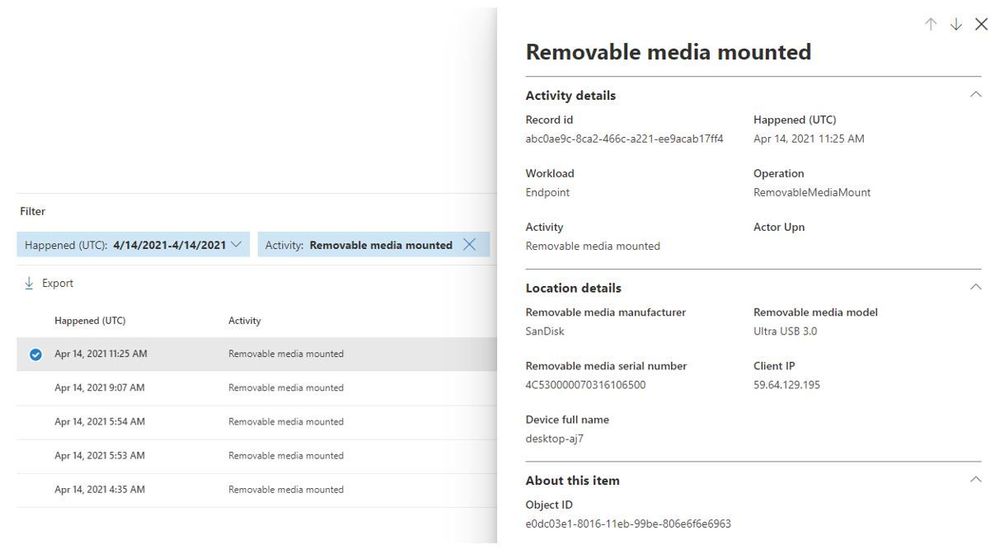

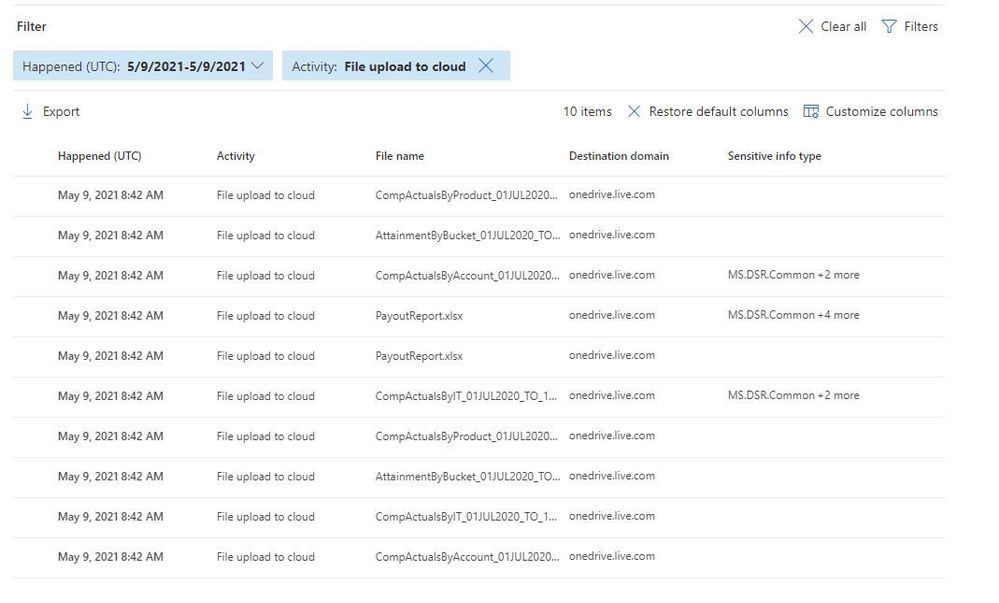

The Activity Explorer in Insider Risk Management has been very well received by customers as it provides comprehensive analytics and detailed information about alerts. With this release, we are making Activity Explorer even more efficient for insider risk investigations. Now, when activities are filtered to show only specific types of activities or workloads, the columns associated with the activity or workload dynamically update to show only the most relevant information.


Removable media mounted


File upload to cloud
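The idea of columns that adapt to the active filter can be sketched roughly as follows. This is not how Activity Explorer is implemented; the record fields and the `filter_by_activity` and `relevant_columns` helpers are hypothetical and only illustrate the behavior.

```python
# Hypothetical activity records; every field name here is illustrative only.
ACTIVITIES = [
    {"activity": "Removable media mounted", "user": "user-a",
     "device": "LAPTOP-01", "file": None, "target_domain": None},
    {"activity": "File upload to cloud", "user": "user-a",
     "device": None, "file": "plan.docx", "target_domain": "example.com"},
    {"activity": "File upload to cloud", "user": "user-b",
     "device": None, "file": "q3.xlsx", "target_domain": "example.org"},
]


def filter_by_activity(rows, activity):
    """Keep only the rows for one activity type."""
    return [row for row in rows if row["activity"] == activity]


def relevant_columns(rows):
    """Return the columns that hold a value in at least one row, in first-seen order."""
    columns = []
    for row in rows:
        for key, value in row.items():
            if value is not None and key not in columns:
                columns.append(key)
    return columns


uploads = filter_by_activity(ACTIVITIES, "File upload to cloud")
print(relevant_columns(uploads))
```

With the upload filter applied, the `device` column drops out because no upload record carries a value for it.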

Finally, we continue to enrich our policy templates by improving our Sensitive Information Type (SIT) classifications. The solution uses SIT to provide higher-fidelity matches for sensitive information within documents. In the past, to leverage SIT to detect whether someone was trying to exfiltrate sensitive information such as credit card numbers in email, you needed to have an associated DLP policy set up. With this release we are removing that requirement: all you have to do is opt in to the Exchange Online indicator in the policy and the solution will automatically detect SIT matches, with no additional configuration or DLP policy needed.

Get started today

We have new videos showcasing how the new features in Insider Risk Management can help customers identify and remediate insider risks. We also have a new interactive guide to help you become familiar with the various capabilities in the solution.

The new features announced today will start rolling out to customers’ tenants in the coming days and weeks. Insider Risk Management is one of several products in Microsoft 365 E5, including Communication Compliance, Information Barriers, and Privileged Access Management, that help organizations mitigate insider risks and policy violations. You can sign up for a trial of Microsoft 365 E5 or navigate to the Microsoft 365 compliance center to get started.

Learn more about Insider Risk Management, how to get started, and configure policies in your tenant in this supporting documentation. Keep a lookout for updates to the documentation with information on the new features over the coming weeks.

Finally, if you haven’t listened to our podcast “Uncovering Hidden Risks”, we encourage you to tune in to learn about the technologies used to detect insider risks and what is required to build and maintain an effective insider risk management program.

We are excited about all the new innovations coming out with this new release and look forward to hearing your feedback.

Thank you,

Talhah Mir, Principal Program Manager, Microsoft 365 Security and Compliance Engineering


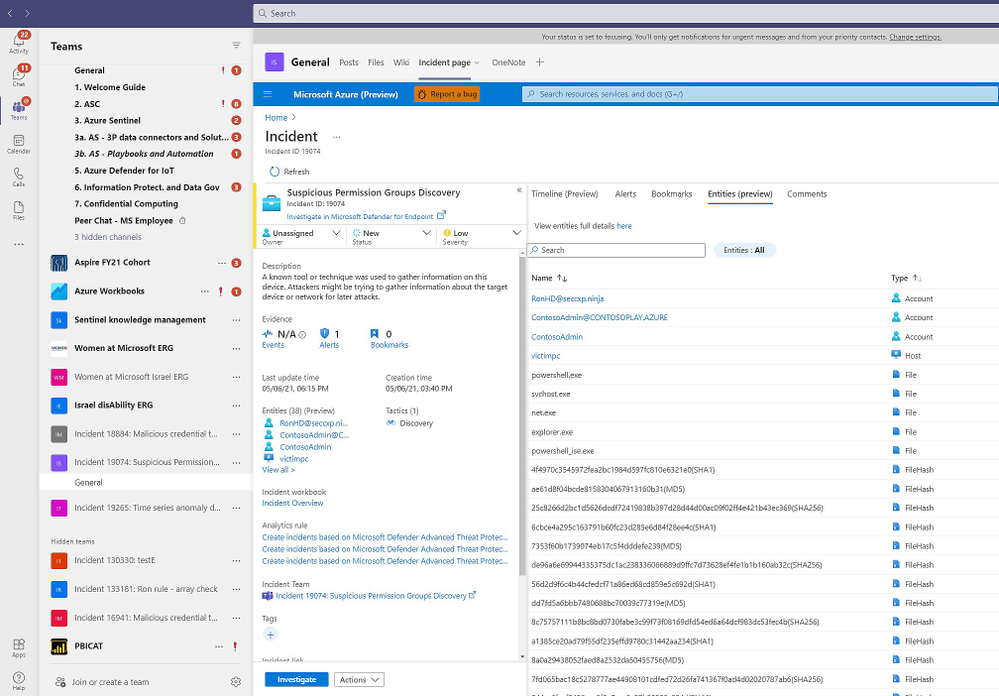

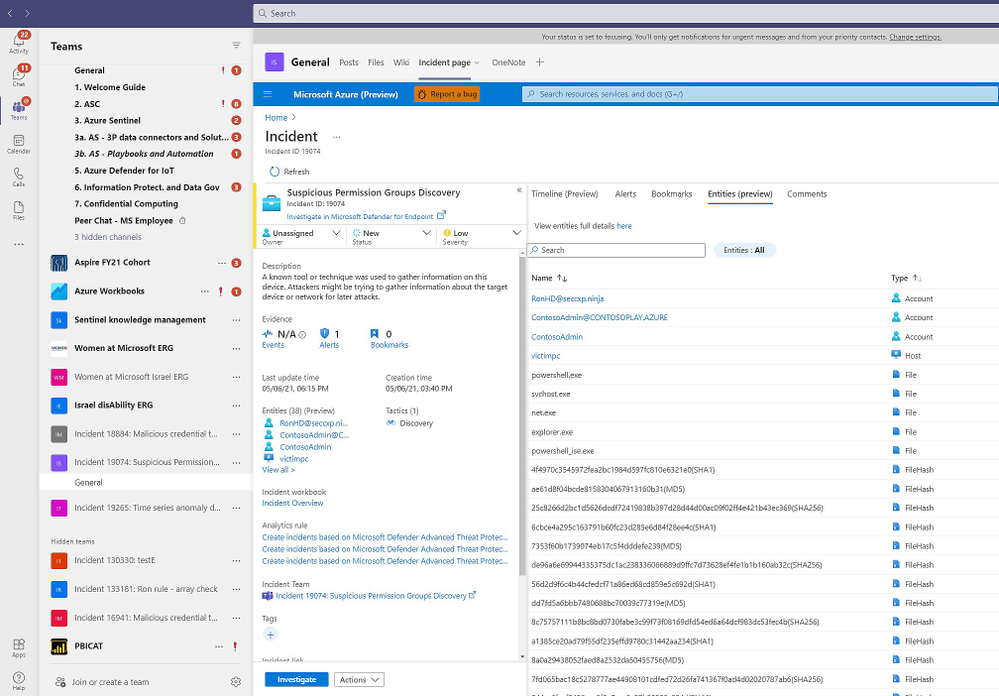

This past year has put unprecedented strain on security teams, and many are preparing to “return to normal” with a new view of what it will take to protect their organizations. Environments are still increasingly distributed, threats are more difficult to catch through overwhelming noise, and security analysts need to efficiently work across remote teams.

Supporting security teams through these challenges was top of mind for the Azure Sentinel team as we continued to deliver new innovation in the product. Our latest releases for the RSA Conference 2021 are all about improving quality of life for security operations teams.

Today, we are announcing the launch of a solutions marketplace in Azure Sentinel, providing customers with an easy way to unlock new use cases. One of the most exciting new solutions is designed to help you monitor and respond to threats in your SAP environments, often home to some of your most business-critical data and applications. We’re also releasing new features to help you harness machine learning to efficiently detect threats through the noise. Plus, we’re making it easier for SecOps teams to work together with native Microsoft Teams collaboration integration.

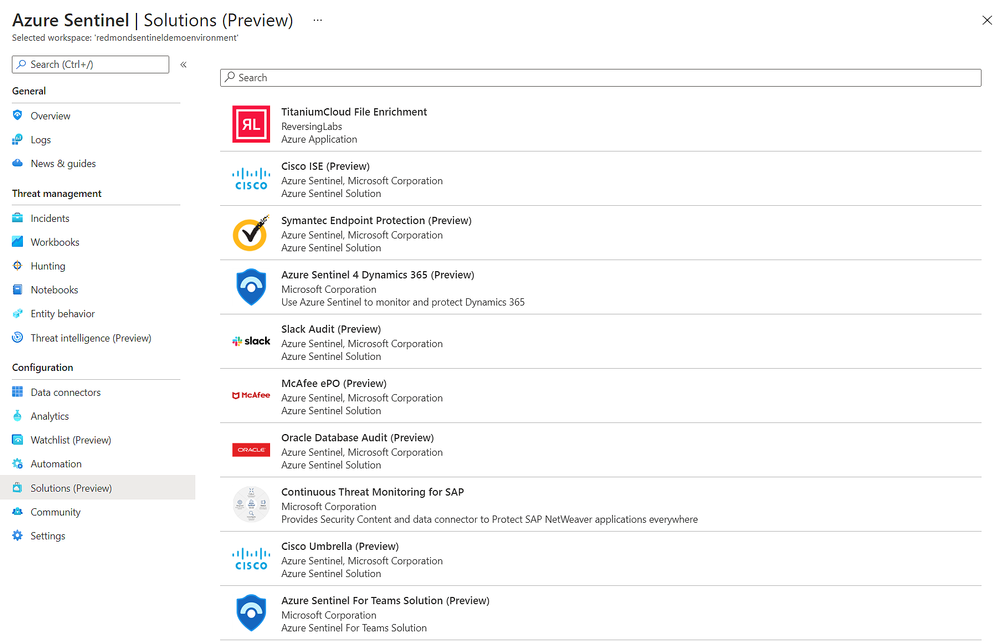

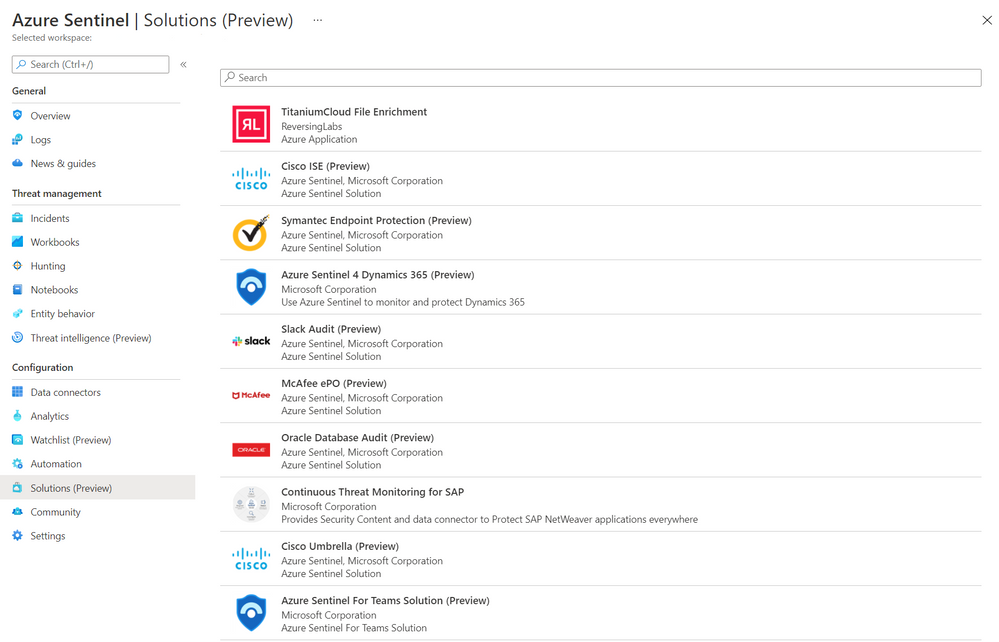

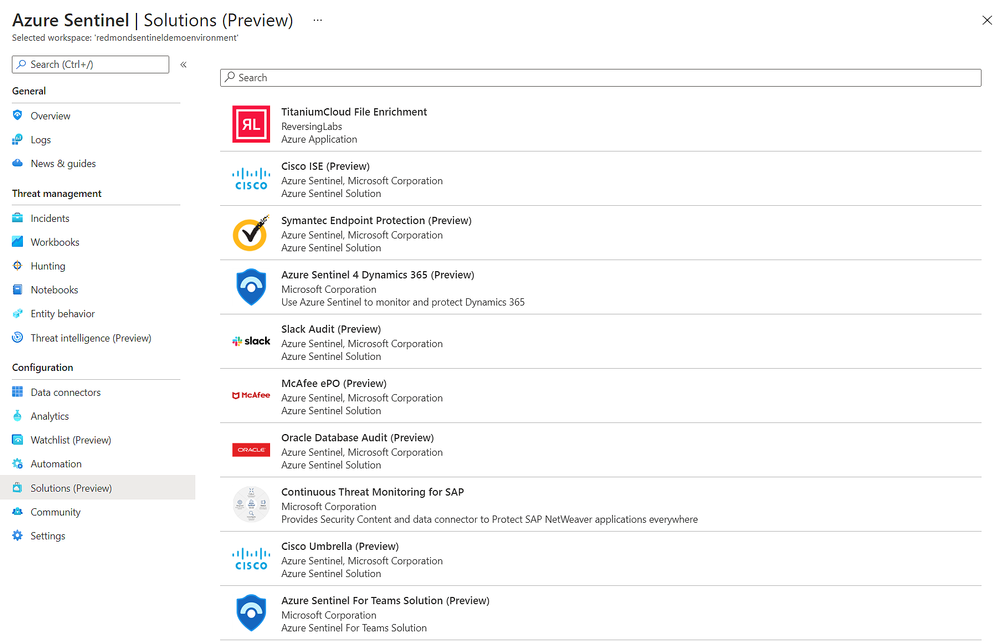

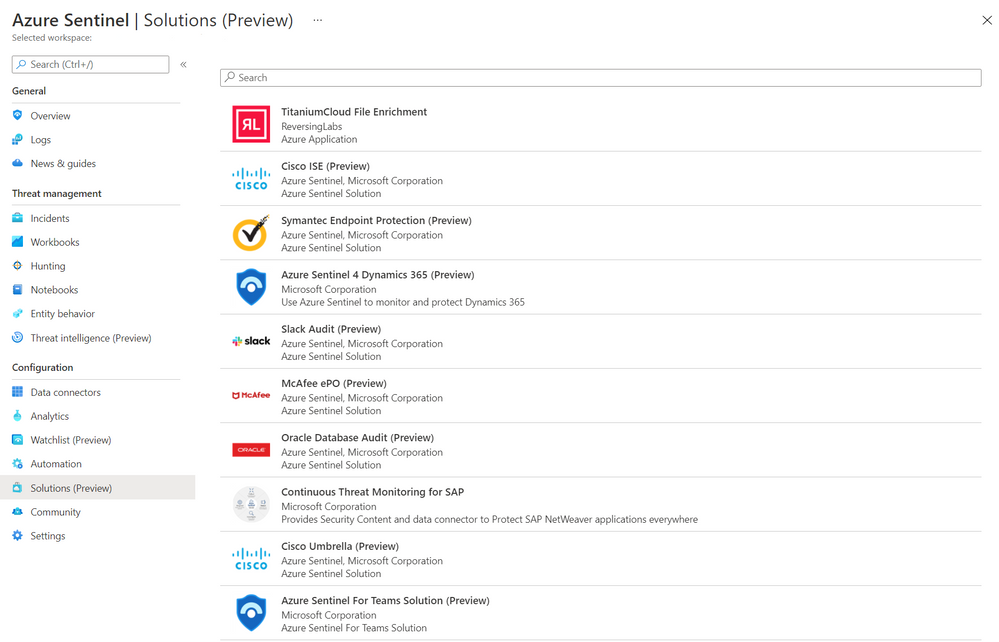

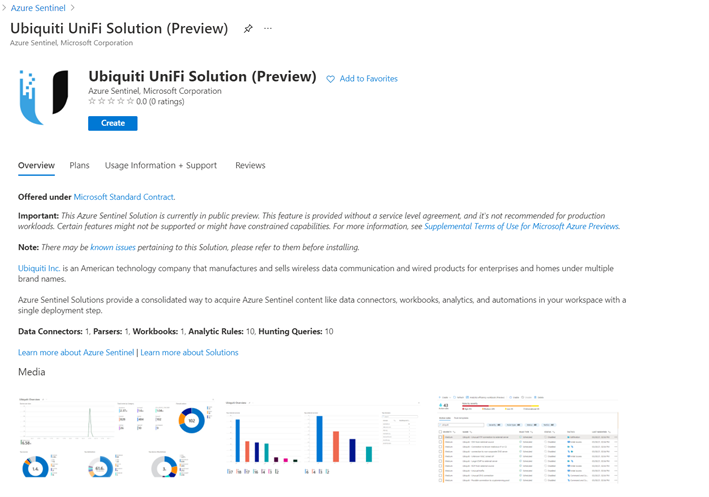

Easily discover and deploy Solutions for Azure Sentinel

With the release of Azure Sentinel Solutions, now in public preview, we’re providing a new, easier way to discover and deploy use cases for Azure Sentinel. Solutions consolidate related connectors and content, and can include data connectors, analytics rules to power detections, interactive workbooks, automation playbooks, and more so you can easily enable new use cases for integrations, end-to-end, from a single package.

The Solutions marketplace features 32 solutions, including:

- Palo Alto Prisma and Cloudflare solutions to give you visibility into your cloud workloads.

- Threat intelligence solutions from RiskIQ and ReversingLabs to enrich your threat detection, hunting, and response capabilities.

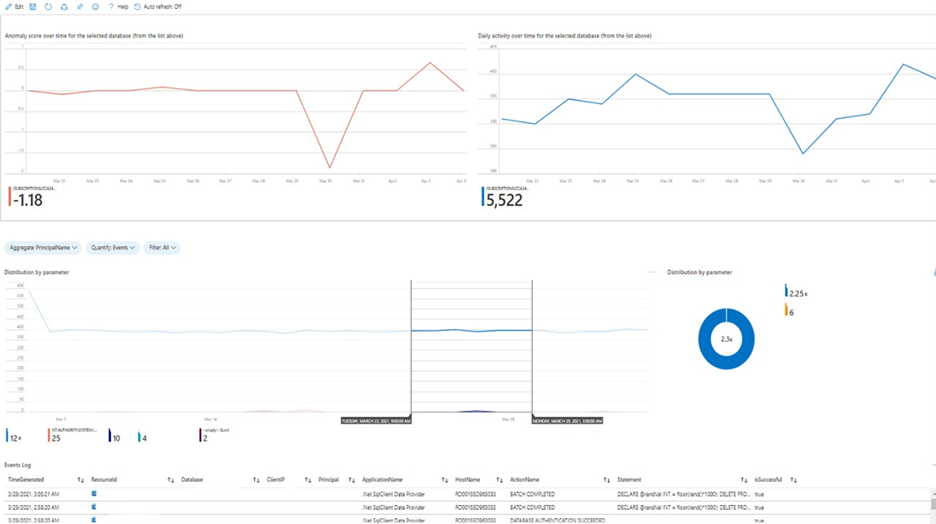

- Azure SQL and Oracle database audit solutions to monitor your database anomalies.

- And many more, like PingFederate for monitoring identity and access, the Cisco Umbrella solution for threat protection, the McAfee ePO solution for endpoint protection, and the Microsoft Teams solution for productivity workloads.

Visit the Solutions gallery in Azure Sentinel to see all available solutions. Partners and community members can build and contribute new solutions by following the guidelines on the Azure Sentinel GitHub.

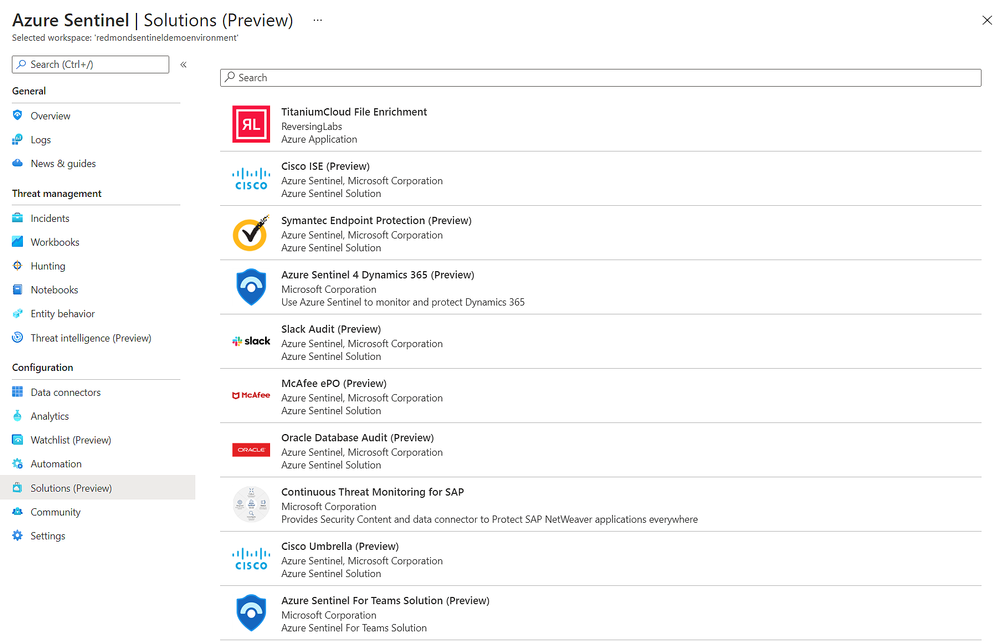

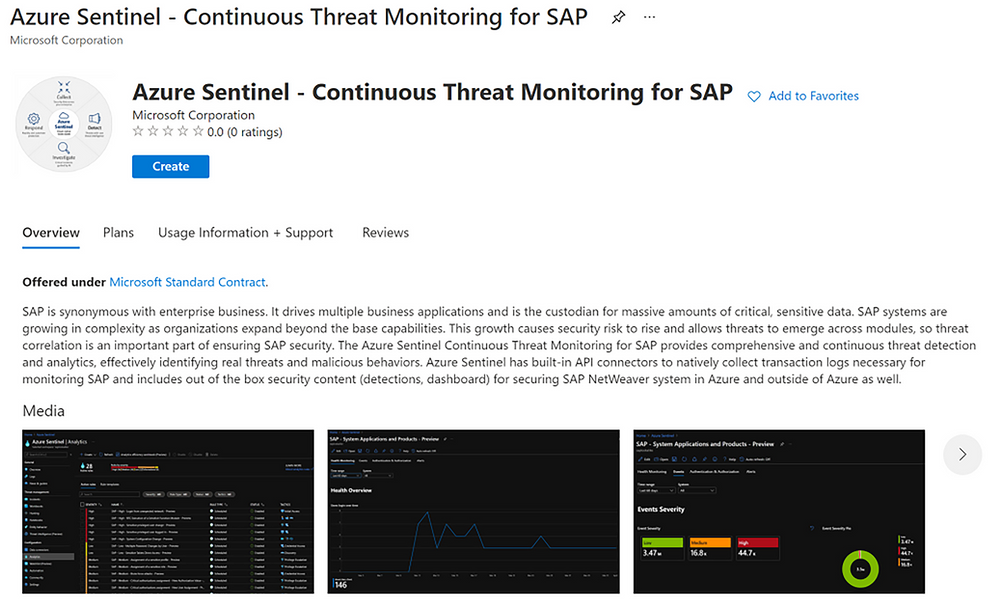

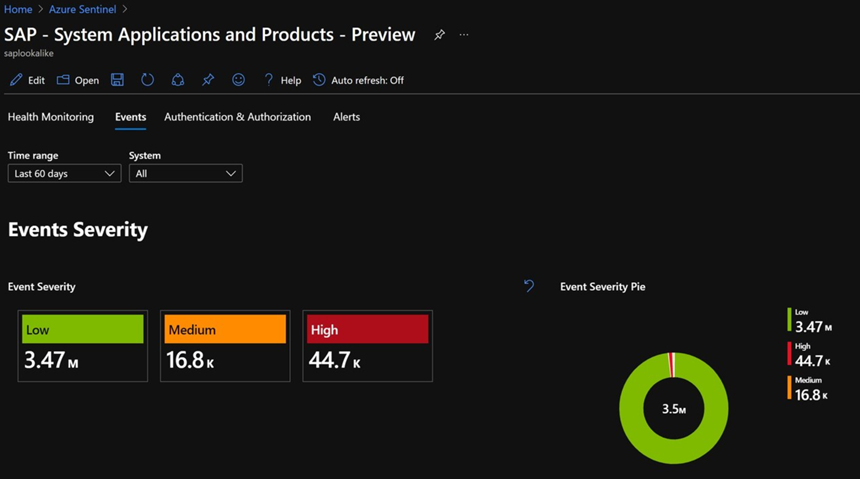

Monitor your SAP applications and respond quickly to threats

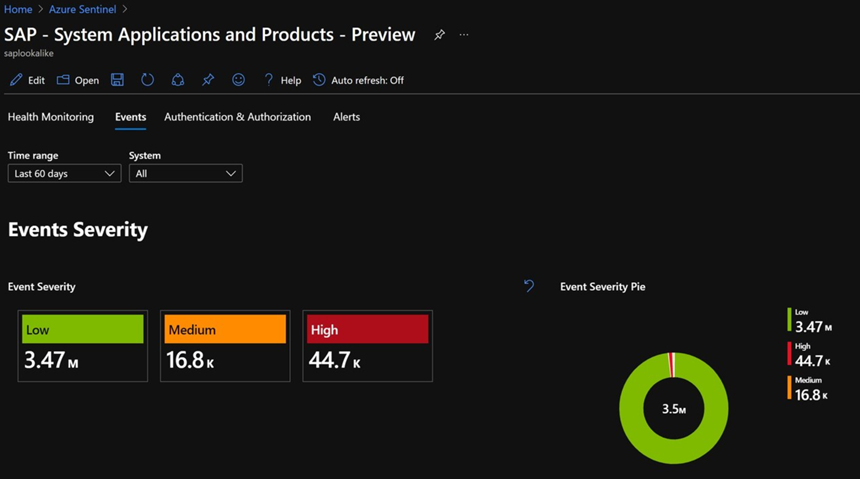

One of the most highly anticipated solutions for Azure Sentinel is our SAP threat monitoring solution. Now, you can use Azure Sentinel to monitor your SAP applications across Azure, other clouds, and on-premises.

SAP handles massive amounts of extremely sensitive data. These increasingly complex systems are business critical, and a security breach affecting them could be catastrophic. With the release of the Azure Sentinel continuous threat monitoring solution for SAP, now in Public Preview, Azure Sentinel provides continuous threat detection and analytics to identify real threats and malicious behaviors. The SAP threat monitoring solution provides connectors, analytics rules to power detections, interactive workbooks, and more to help organizations detect threats to their SAP environments and respond quickly.

Learn more in documentation for the SAP threat monitoring solution.

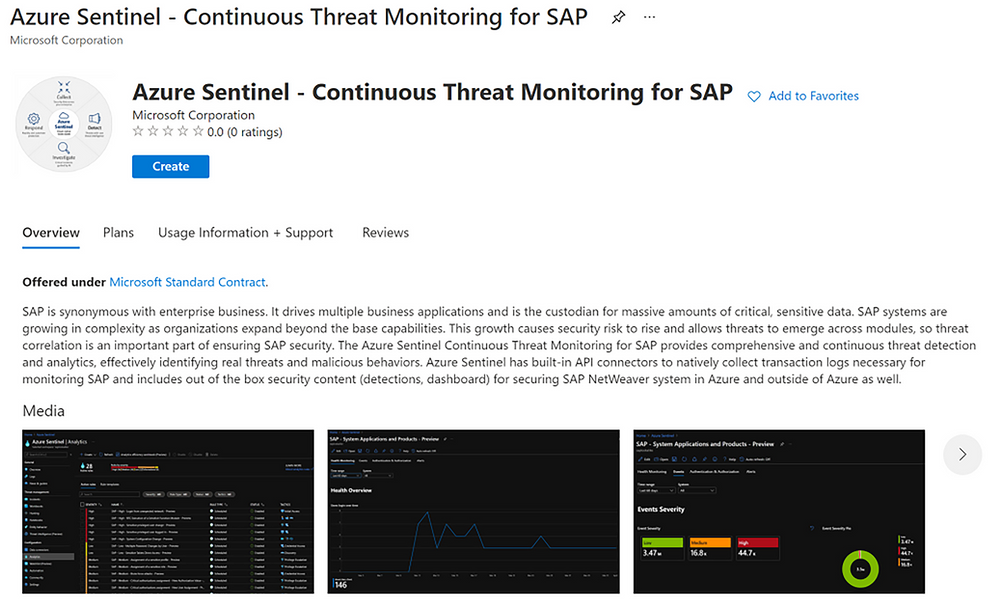

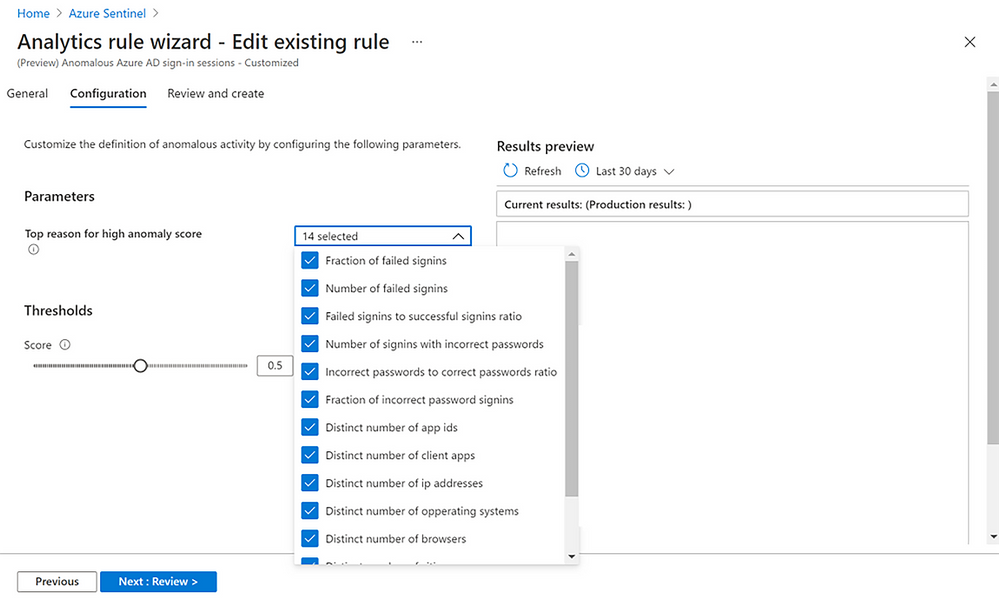

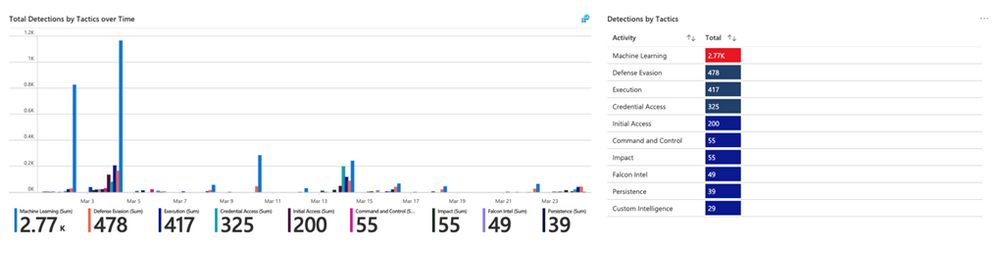

Tap into the power of ML with new easy-to-tune anomaly detections

With the release of customizable ML anomalies, now in Public Preview, we’re introducing a code-free experience to help security analysts get the most out of machine learning. These anomalies span the attack chain, today covering 11 of the 14 total MITRE ATT&CK tactics.

Security analysts can customize the parameters used in the ML model to tune anomalies to their specific needs, cutting down noise and ensuring that anomalies detect what’s relevant to their organization. For example, you can add or remove file extensions to prioritize or exclude document types when detecting mass downgrades of AIP document sensitivity labels, such as from Highly Confidential to Public, or adjust the number of documents that is considered a mass downgrade. This customization is especially useful given that different organizations often have different file sensitivity processes. In another example, you can customize fourteen different parameters that affect the ML score of anomalous Azure Active Directory sign-in sessions, as well as the threshold for triggering these anomalies, so you can tailor the ML models to your organization’s processes, priorities, and user behavior.
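To make the two tuning knobs in the first example concrete, here is a minimal sketch that uses a plain counting rule rather than an ML model. It is not the Azure Sentinel anomaly logic; `MassDowngradeConfig`, `flag_mass_downgrades`, and the sample events are hypothetical and only show how a file extension list and a threshold change what gets flagged.

```python
import os
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class MassDowngradeConfig:
    # Hypothetical tunable parameters mirroring the two knobs described above.
    extensions: set = field(default_factory=lambda: {".docx", ".xlsx", ".pdf"})
    threshold: int = 3  # downgraded documents per user that count as "mass"


# Made-up label downgrade events: (user, file name).
DOWNGRADES = [
    ("alice", "plan.docx"),
    ("alice", "budget.xlsx"),
    ("alice", "notes.txt"),
    ("alice", "deck.pdf"),
    ("bob", "memo.docx"),
]


def flag_mass_downgrades(events, config):
    """Return users whose count of in-scope downgraded files meets the threshold."""
    counts = Counter()
    for user, file_name in events:
        extension = os.path.splitext(file_name)[1].lower()
        if extension in config.extensions:
            counts[user] += 1
    return sorted(user for user, n in counts.items() if n >= config.threshold)


print(flag_mass_downgrades(DOWNGRADES, MassDowngradeConfig()))
print(flag_mass_downgrades(DOWNGRADES, MassDowngradeConfig(threshold=4)))
```

With the default settings only `alice` is flagged; raising the threshold to 4 silences the alert because her `.txt` file is out of scope, which is the kind of noise reduction the tuning is meant to enable.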

Learn more about customizable ML anomalies here.

Collaborate more efficiently with native Microsoft Teams integration

Many of our customers use Microsoft Teams to collaborate across security operations teams during investigations. Our new integration with Microsoft Teams, now in Public Preview, will make that easier than ever.

Azure Sentinel now integrates with Microsoft Teams so that, with a single click, you can create a Team for a particular incident. Use this Team as your central hub for investigation across members of the security team, all with easy access to the incident in a tab within it. When the incident is closed in Azure Sentinel, the Team is automatically archived, providing a record of the investigation should you need to reference it in the future.

Learn more about the Microsoft Teams collaboration integration here.

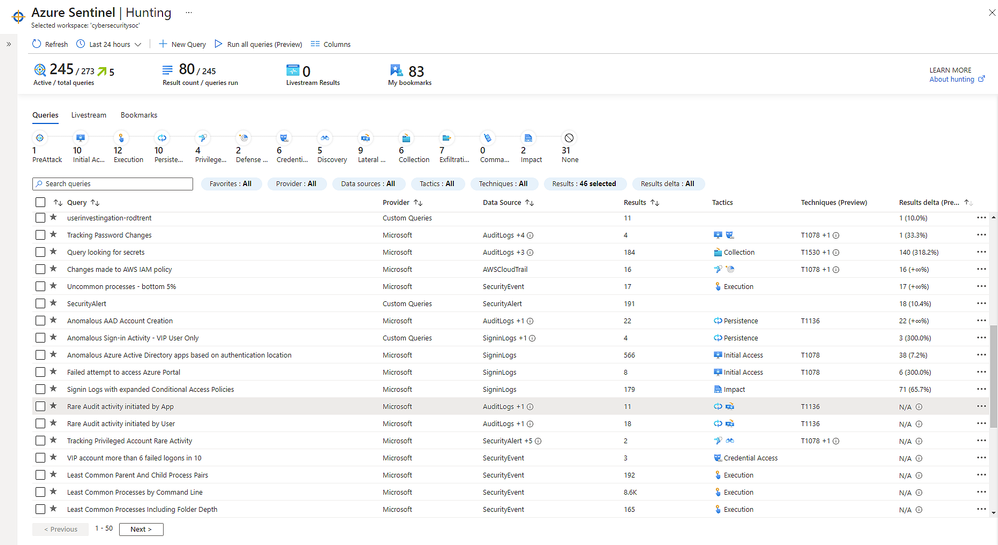

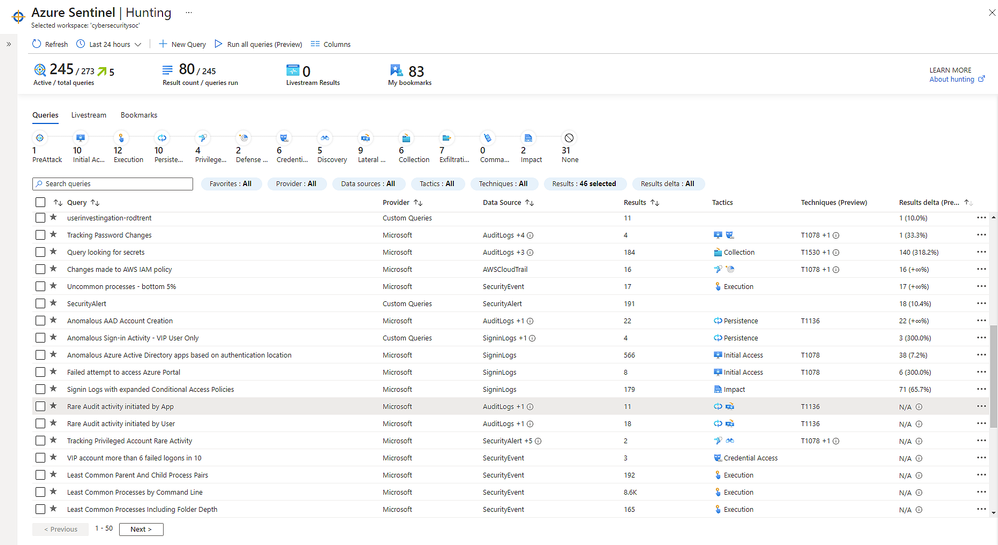

Improve SOC hunting visibility with a refreshed hunting dashboard

Now in public preview, a refreshed hunting query experience helps you find undetected threats more quickly and identify which hunting results are most relevant to your environment.

You can now run all your hunting queries, or a selected subset, in a single click. To contextualize your results, you can search for or filter results based on specific MITRE ATT&CK techniques. You can also identify spikes of activity with new “result deltas” to see which results have changed the most in the last 24 hours. As you filter through your queries and results, the new MITRE ATT&CK tactic bar dynamically updates to show which MITRE ATT&CK tactics apply.
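The post does not say how result deltas are computed. As a rough sketch, assume a delta is the change in a query's result count between two consecutive 24-hour windows; the query names, counts, and helper functions below are made up for illustration.

```python
def result_deltas(current, previous):
    """Map each query name to (current count, change versus the previous window)."""
    return {
        name: (count, count - previous.get(name, 0))
        for name, count in current.items()
    }


def rank_by_change(deltas):
    """Order query names by the size of their change, largest first."""
    return sorted(deltas, key=lambda name: abs(deltas[name][1]), reverse=True)


# Hypothetical result counts for two consecutive 24-hour windows.
previous_window = {"Rare process": 4, "Failed sign-ins": 120}
current_window = {"Rare process": 40, "Failed sign-ins": 118, "New admin role": 5}

deltas = result_deltas(current_window, previous_window)
print(rank_by_change(deltas))
```

Ranking by absolute change surfaces the query whose results spiked, even when another query returns more results overall.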

Learn more about new hunting dashboard features here.

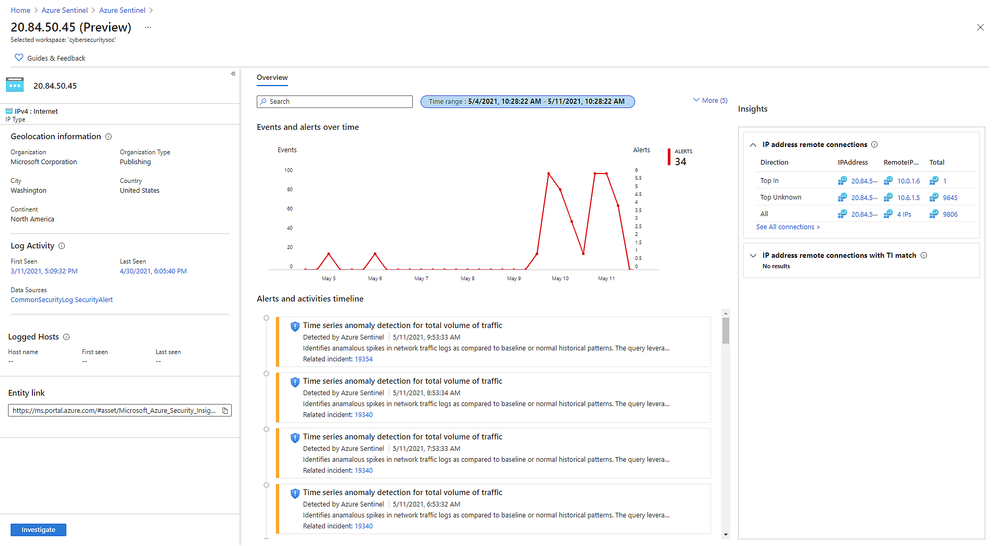

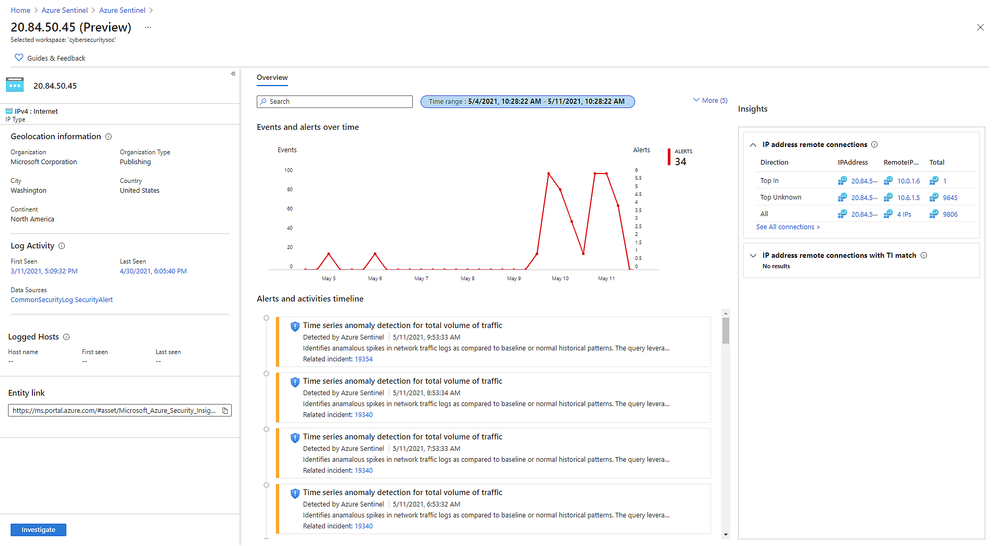

New IP entity page accelerates triage and investigation

Also in public preview, the IP entity page is the latest addition to Azure Sentinel’s User and Entity Behavior Analytics capabilities. Like the host and account pages, the IP page helps analysts quickly triage and investigate security incidents. The IP page aggregates information from multiple Microsoft and 3rd party data sources and includes insights like threat indicator data, network session data, host IP mappings, and first/last seen information – which is retained even if the earliest records age out of the retention period.
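One way first/last seen values can outlive the raw records is to keep a small summary per IP that is updated on every observation and never pruned. The sketch below shows that pattern under that assumption; it is not a description of how Azure Sentinel stores entity data, and `IpSeenTracker` is a hypothetical name.

```python
from datetime import datetime, timedelta


class IpSeenTracker:
    """Keeps raw records under a retention period and a long-lived first/last seen summary."""

    def __init__(self, retention):
        self.retention = retention
        self.records = []   # raw (ip, timestamp) records, subject to retention
        self.summary = {}   # ip -> (first_seen, last_seen), never pruned

    def observe(self, ip, timestamp):
        self.records.append((ip, timestamp))
        first, last = self.summary.get(ip, (timestamp, timestamp))
        self.summary[ip] = (min(first, timestamp), max(last, timestamp))

    def prune(self, now):
        """Drop raw records older than the retention period; the summary is untouched."""
        cutoff = now - self.retention
        self.records = [(ip, ts) for ip, ts in self.records if ts >= cutoff]


start = datetime(2021, 1, 1)
tracker = IpSeenTracker(retention=timedelta(days=90))
tracker.observe("203.0.113.7", start)
tracker.observe("203.0.113.7", start + timedelta(days=100))
tracker.prune(now=start + timedelta(days=100))

print(len(tracker.records), tracker.summary["203.0.113.7"][0])
```

After pruning, only the recent raw record remains, but the summary still reports the original first-seen date.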

A key insight presented in IP entity pages is geolocation information, which is often used to assess the security relevance of an IP address. We provide geolocation enrichment data from the Microsoft Threat Intelligence service. This service combines data from Microsoft solutions with 3rd party vendors and partners. It will soon be available via REST API for security investigation scenarios to Azure Sentinel customers.

Learn more about the IP entity page here.

Detect advanced multistage attacks with new scheduled analytics rules in Fusion

Azure Sentinel leverages a machine learning technology called Fusion to automatically detect multistage attacks by identifying combinations of anomalous behaviors and suspicious activities that are observed at various stages of the kill chain. Fusion currently detects 90 multistage attack scenarios from medium- and low-severity alerts from Microsoft threat protection services and third-party sources, like Palo Alto Networks.

To help you discover threats tailored to your environment, we are releasing to public preview multistage attack scenarios that leverage a set of custom scheduled analytics rules. With these rules, Fusion can detect 32 new scenarios by combining alerts from the scheduled analytics rules, which detect specific events or sets of events across your environment, with alerts from Microsoft Cloud App Security or Azure Active Directory Identity Protection.
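Fusion itself is a machine learning technology and its internals are not described here. Purely to illustrate what "combining alerts" from two sources can mean, the sketch below pairs a product alert with a later scheduled-rule alert on the same entity inside a time window. This is a simple rule-based join, not Fusion; the `Alert` type, `correlate` function, alert names, and the one-hour window are assumptions.

```python
from datetime import datetime, timedelta
from typing import NamedTuple


class Alert(NamedTuple):
    source: str
    entity: str
    name: str
    time: datetime


def correlate(product_alerts, scheduled_alerts, window):
    """Pair each product alert with later scheduled-rule alerts on the same entity within `window`."""
    pairs = []
    for product in product_alerts:
        for scheduled in scheduled_alerts:
            gap = scheduled.time - product.time
            if scheduled.entity == product.entity and timedelta(0) <= gap <= window:
                pairs.append((product, scheduled))
    return pairs


# Made-up alerts for illustration.
product_alerts = [
    Alert("Azure AD Identity Protection", "user1",
          "Sign-in from unfamiliar location", datetime(2021, 5, 12, 9, 0)),
]
scheduled_alerts = [
    Alert("Scheduled rule", "user1", "Mass file download", datetime(2021, 5, 12, 9, 40)),
    Alert("Scheduled rule", "user2", "Mass file download", datetime(2021, 5, 12, 9, 45)),
    Alert("Scheduled rule", "user1", "Mass file download", datetime(2021, 5, 12, 15, 0)),
]

matches = correlate(product_alerts, scheduled_alerts, window=timedelta(hours=1))
for product, scheduled in matches:
    print(product.entity, "|", product.name, "->", scheduled.name)
```

Only the 9:40 alert for `user1` is paired: the other two are either for a different entity or outside the window.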

Learn more about the supported scenarios and how to configure your scheduled analytics rules here.

Next Steps

Learn more about these new innovations and see them in action in our upcoming webinar on May 26 at 8 AM Pacific. Register here.

For RSA, Microsoft released a number of innovations across security, compliance, and identity solutions. Learn more in the blog by CVP Security, Compliance, and Identity Vasu Jakkal. Join us for a live webinar on May 27 at 8 AM Pacific to learn more about new innovations across Microsoft security – register here.


Today, we are announcing Azure Sentinel Solutions in public preview, featuring a vibrant gallery of 32 solutions for Microsoft and other products. Azure Sentinel solutions provide easier in-product discovery and single-step deployment of end-to-end product, domain, and industry vertical scenarios in Azure Sentinel. Solutions also enable Microsoft partners to deliver combined value for their integrations and productize their investments in Azure Sentinel. This experience is powered by Azure Marketplace for solutions’ discovery and deployment, and by Microsoft Partner Center for solutions’ authoring and publishing.

Azure Sentinel solutions currently include integrations as packaged content with a combination of one or many Azure Sentinel data connectors, workbooks, analytics, hunting queries, playbooks, and parsers (Kusto Functions) for delivering end-to-end product value or domain value or industry vertical value for your SOC requirements. All these solutions are available for you to use at no additional cost (regular data ingest or Azure Logic Apps cost may apply depending on usage of content in Azure Sentinel).
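For readers who think in data structures, a solution can be pictured as a named bundle of the content types listed above. The sketch below is only a mental model: `SolutionPackage` and its field names are hypothetical and do not reflect the actual package format used by Azure Sentinel or Azure Marketplace.

```python
from dataclasses import dataclass, field, fields


@dataclass
class SolutionPackage:
    """Hypothetical model of a solution: a name plus one list per content type."""
    name: str
    data_connectors: list = field(default_factory=list)
    workbooks: list = field(default_factory=list)
    analytics_rules: list = field(default_factory=list)
    hunting_queries: list = field(default_factory=list)
    playbooks: list = field(default_factory=list)
    parsers: list = field(default_factory=list)

    def content_summary(self):
        """Count items per content type, skipping types the package does not include."""
        return {
            f.name: len(getattr(self, f.name))
            for f in fields(self)
            if f.name != "name" and getattr(self, f.name)
        }


# Placeholder content names for an imaginary product.
example = SolutionPackage(
    name="Example product",
    data_connectors=["Example connector"],
    workbooks=["Overview"],
    analytics_rules=["Rule A", "Rule B"],
)

print(example.content_summary())
```

A package does not need every content type; the summary lists only what is actually bundled.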

A few use cases of Azure Sentinel solutions are outlined below.

- On-demand out-of-the-box content: Solutions unlock the capability of getting rich Azure Sentinel content out-of-the-box for complete scenarios as per your needs via centralized discovery in Solutions gallery and single step deployment capability. Feel free to customize this content per your needs post deploy!

- Unlock complete product value: Discover and deploy a solution not only to onboard the data for a certain product, but also to monitor the data via workbooks, generate custom alerts via analytics in the solution package, use the queries to hunt for threats in that data source, and run necessary automations as applicable for that product.

- Unlock domain value: Discover and deploy solutions for specific Threat Intelligence automation scenarios or zero-day vulnerability hunting, analytics, and response scenarios.

- Unlock industry vertical value: Get solutions for ERP scenarios or Healthcare or finance compliance needs in a single step.

- And more to unlock complete SIEM and SOAR capabilities in Azure Sentinel.

Get Started

Select from the rich set of 30+ Solutions to start working with the specific content set in Azure Sentinel immediately. The steps to discover and deploy Solutions are outlined below. Refer to the Azure Sentinel solutions documentation for further details.

Discover Solutions

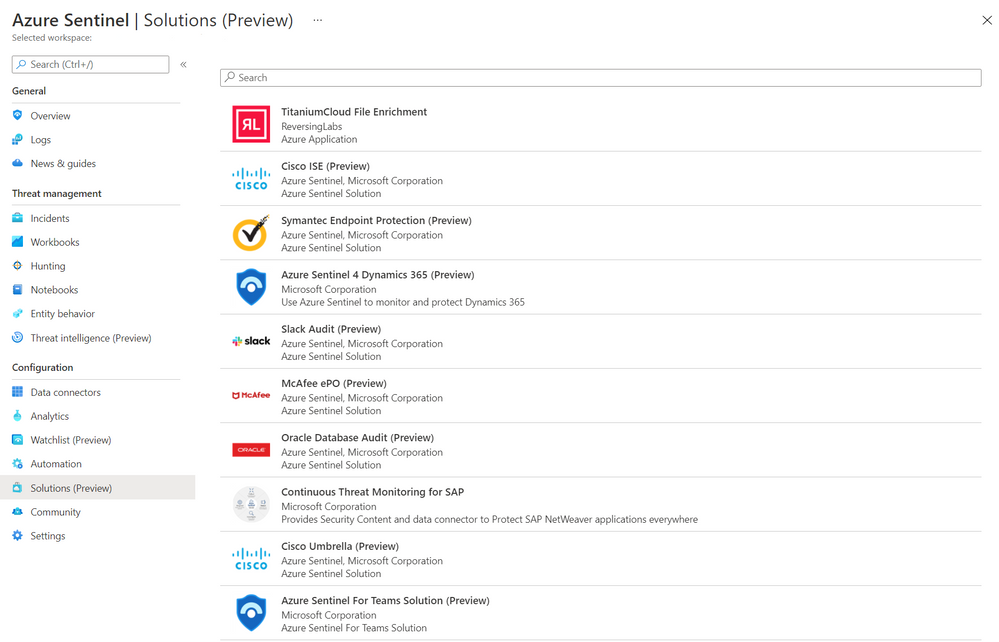

- Select Solutions (Preview) from the Azure Sentinel navigation menu.

- This displays a searchable list of solutions for you to select from.

Azure Sentinel Solutions Blade


- Click Load more at the bottom of the page to see more solutions.

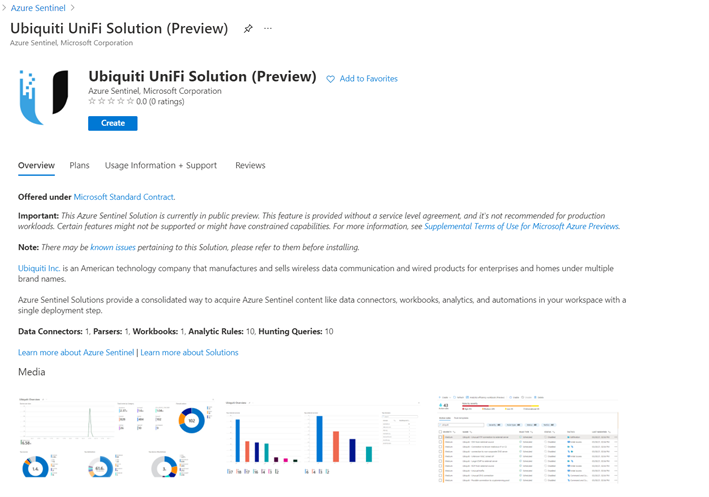

- Select the solution of your choice to display the solution details view.

- You can now view the Overview tab that includes important details of the solution and the content included in the solution package as illustrated in the diagram below.

Solution details


- The Plans tab covers information about the license terms. All the solutions included in the Solutions gallery are available at no additional cost to install.

- The Usage Information + Support tab includes information about the publisher details for each solution and also a direct link to the support contact for the respective solution.

Deploy Solutions

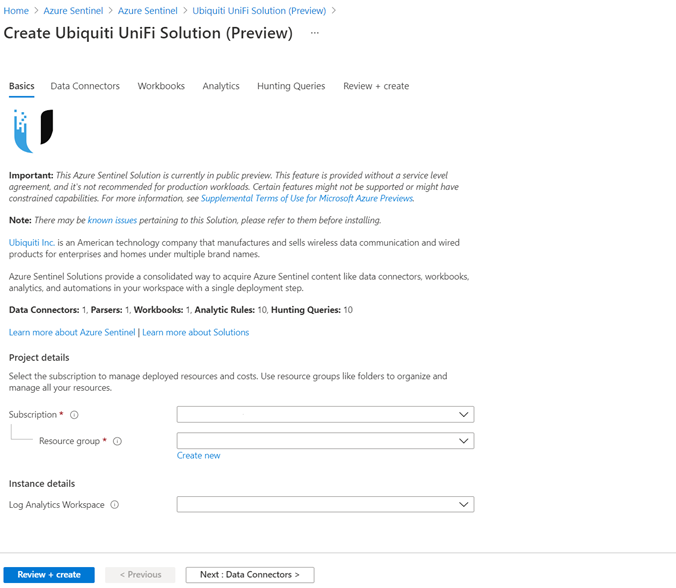

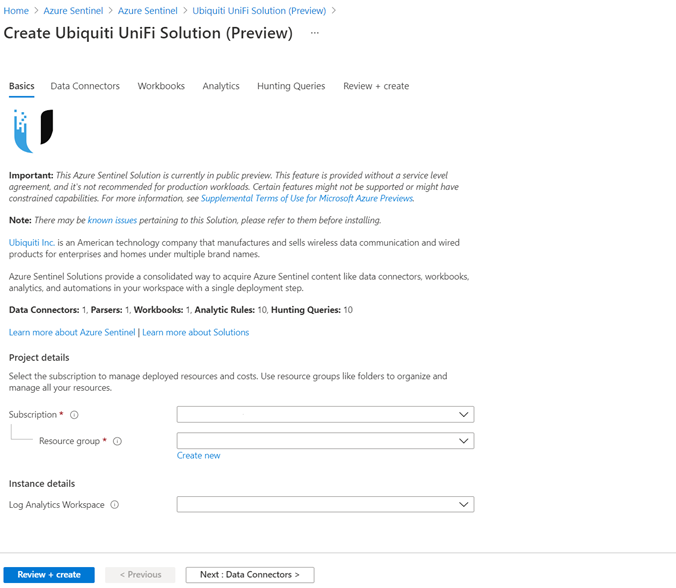

- Select the Create button in the solutions detail page to deploy the solution.

- You can now enter information in each tab of the solutions deployment flow and move to the next tab to enable deployment of this solution as illustrated in the following diagram.

Solution deploy


Finally, select Review and create to trigger the validation process and, upon successful validation, select Create to run the solution deployment.

Visit the respective feature galleries to customize (as needed), configure, and enable the relevant content included in the Solution package. For example, if the Solution deploys a data connector, you’ll find the new data connector in the Data connector blade of Azure Sentinel, where you can follow the steps to configure and activate it.

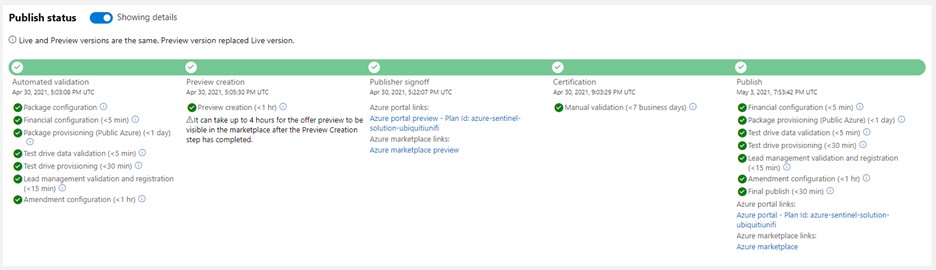

Partner Scenario: Deliver Solutions

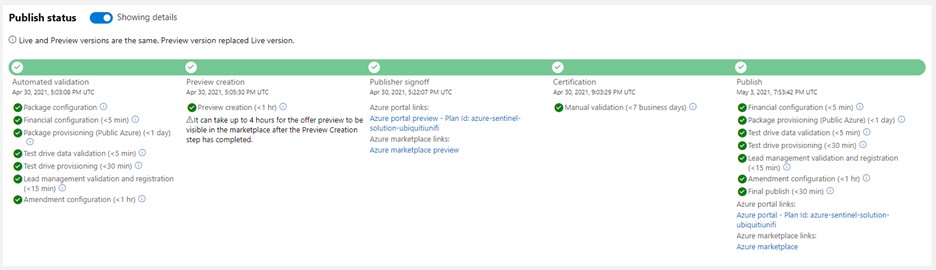

Microsoft partners like ISVs, Managed Service Providers, System Integrators, etc. can follow the 3-step process outlined below to author and publish a solution to deliver product, domain, or vertical value for their products and offerings in Azure Sentinel. Refer to the guidance on Azure Sentinel GitHub for further details on each step.

Step 1. Create Azure Sentinel content for your product / domain / industry vertical scenarios and validate the content.

Step 2. Package content created in the step above. Use the new packaging tool that creates the package and also runs validations on it.

Step 3. Publish your Azure Sentinel solution by creating an offer in Microsoft Partner Center, uploading the package generated in the step above, and submitting the offer for certification and final publishing. Partners can track progress on their offer in the Partner Center dashboard view as shown in the diagram below.

Solution build

New Azure Sentinel Solutions

The Azure Sentinel Solutions gallery showcases 32 new solutions covering the depth and breadth of various product, domain, and industry vertical capabilities. These out-of-the-box content packages enable you to get enhanced threat detection, hunting, and response capabilities for cloud workloads, identity, threat protection, endpoint protection, email, communication systems, databases, file hosting, ERP systems, and threat intelligence solutions for a plethora of Microsoft and other products and services.

SAP Continuous Threat Monitoring

Use the SAP continuous threat monitoring solution to monitor your SAP applications across Azure, other clouds, and on-premises. This solution package includes a data connector to ingest data, a workbook to monitor threats, and a rich set of 25+ analytic rules to protect your applications.

SAP Solution

Cisco Solutions

There are two Cisco solutions: one for Cisco Umbrella and one for Cisco Identity Services Engine (ISE). The Cisco Umbrella solution provides multiple security functions to enable protection of devices, users, and distributed locations everywhere. The Cisco ISE solution includes a data connector, parser, analytics, and hunting queries to streamline security policy management and see users and devices controlling access across wired, wireless, and VPN connections to the corporate network.

PingFederate

The PingFederate solution includes data connectors, analytics, and hunting queries to enable monitoring of user identities and access in your enterprise.

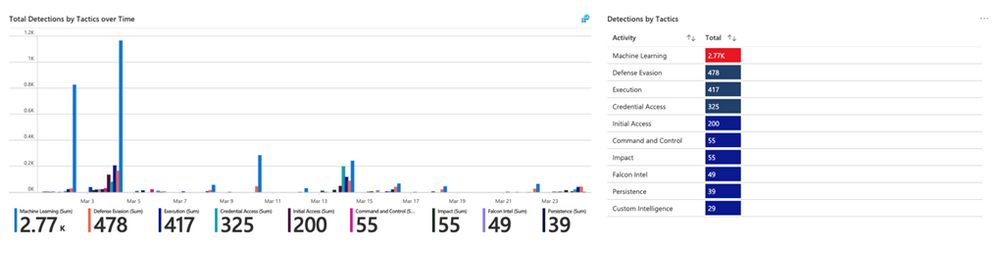

CrowdStrike Falcon Protection Platform

The CrowdStrike solution includes two data connectors to ingest Falcon detections, incidents, audit events and rich Falcon event stream telemetry logs into Azure Sentinel. It also includes workbooks to monitor CrowdStrike detections, as well as analytics and playbooks for automated detection and response scenarios in Azure Sentinel.

CrowdStrike Solution

McAfee ePolicy Orchestrator

McAfee ePolicy Orchestrator monitors and manages your network, detecting threats and protecting endpoints against them. The solution uses the data connector to ingest McAfee ePO logs and the analytics to alert on threats.

Palo Alto Prisma

The Palo Alto Prisma solution includes a data connector to ingest Palo Alto Cloud logs into Azure Sentinel. Leverage the analytics and hunting queries for out-of-the-box detections and threat hunting scenarios, and the workbooks for monitoring Palo Alto Prisma data in Azure Sentinel.

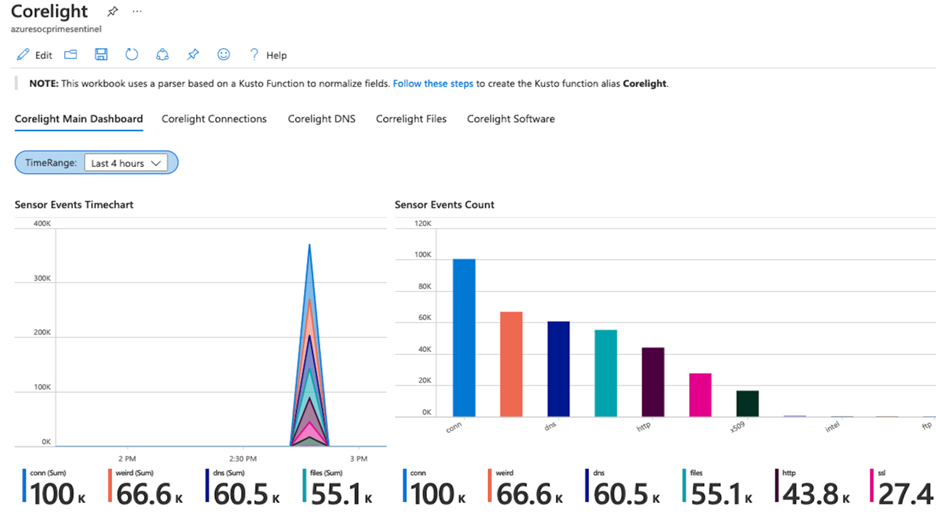

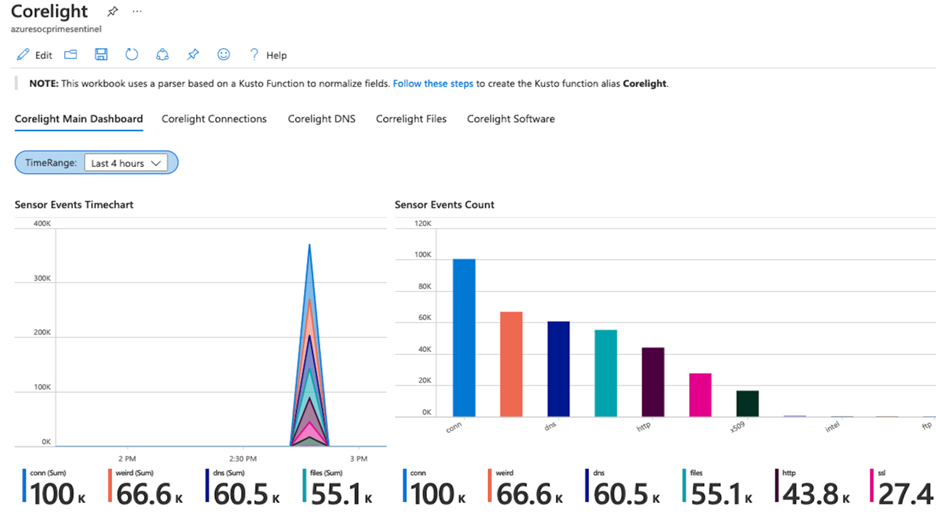

Corelight

Corelight provides a network detection and response (NDR) solution based on best-of-breed open-source technologies, Zeek and Suricata, that enables network defenders to get broad visibility into their environments. The data connector enables ingestion of events from Zeek and Suricata via Corelight Sensors into Azure Sentinel. Corelight for Azure Sentinel also includes workbooks and dashboards, hunting queries, and analytic rules to help organizations drive efficient investigations and incident response with the combination of Corelight and Azure Sentinel.

Corelight Solution

Infoblox Cloud

BloxOne DDI enables you to centrally manage and automate DDI (DNS, DHCP and IPAM) from the cloud to any and all locations. BloxOne Threat Defense maximizes brand protection to protect your network and automatically extend security to your digital imperatives, including SD-WAN, IoT and the cloud. This Azure Sentinel solution powers security orchestration, automation, and response (SOAR) capabilities, and reduces the time to investigate and remediate cyberthreats.

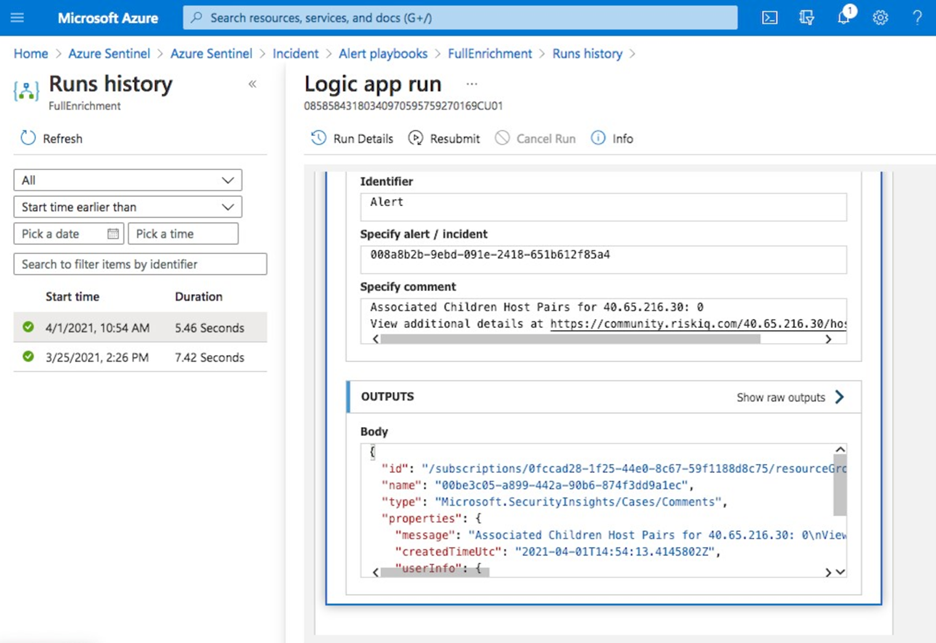

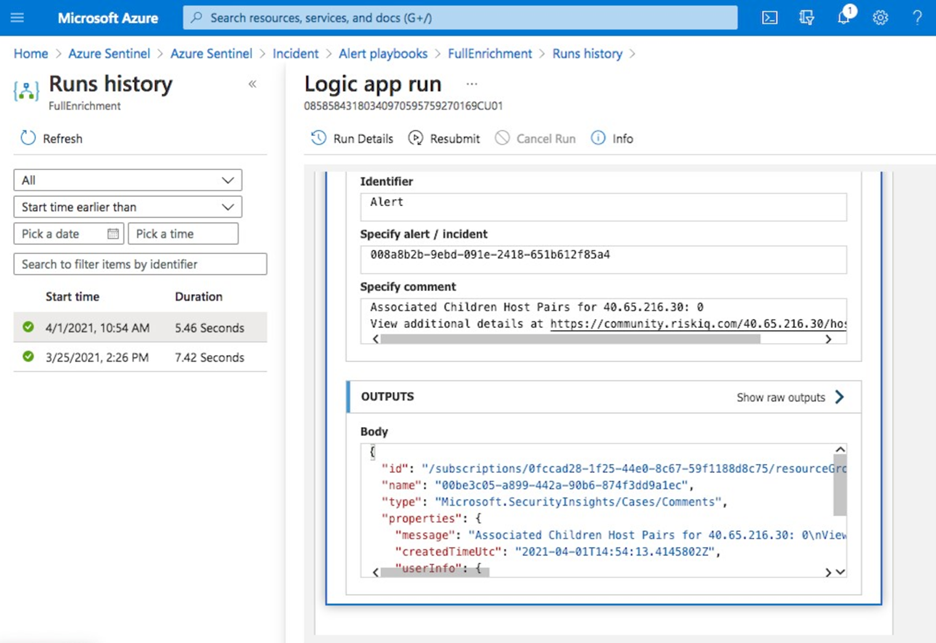

RiskIQ Illuminate Security Intelligence

RiskIQ has created several Azure Sentinel playbooks that pre-package functionality in order to enrich, add context to and automatically action incidents based on RiskIQ Internet observations within the Azure Sentinel platform. These playbooks can be configured to run automatically on created incidents in order to speed up the triage process. When an incident contains a known indicator such as a domain or IP address, RiskIQ will enrich that value with what else it’s connected to on the Internet and if it may pose a threat. If a threat is identified, RiskIQ can action the incident including elevating its status and tagging with additional metadata for analysts to review.

RiskIQ Solution

vArmour Application Controller

Application Controller is an easy-to-deploy solution that delivers comprehensive real-time visibility and control of application relationships and dependencies, to improve operational decision-making, strengthen security posture, and reduce business risk across multi-cloud deployments. This solution includes a data connector to ingest vArmour data and a workbook to monitor application dependency and relationship mapping information along with user access and entitlement monitoring.

VMware Carbon Black

Use this solution to monitor Carbon Black events, audit logs, and notifications in Azure Sentinel, and use the analytic rules on critical threats and malware detections to get started immediately.

Symantec Solutions

There are two solutions from Symantec. The Symantec Endpoint Protection solution makes Symantec’s anti-malware, intrusion prevention, and firewall features available in Azure Sentinel, helping prevent unapproved programs from running, and supports response actions that apply firewall policies to block or allow network traffic.

The Symantec Proxy SG solution enables organizations to effectively monitor, control, and secure traffic to ensure a safe web and cloud experience by monitoring proxy traffic.

Microsoft Teams

Teams serves a central role in both communication and data sharing in the Microsoft 365 Cloud. Since the Teams service touches on so many underlying technologies in the Cloud, it can benefit from human and automated analysis not only when it comes to hunting in logs, but also in real-time monitoring of meetings in Azure Sentinel. The solution includes analytics rules, hunting queries, and playbooks.

Slack Audit

The Slack Audit solution provides the ability to get Slack events, which helps you examine potential security risks, analyze your organization’s use of collaboration, diagnose configuration problems, and more. This solution includes a data connector, workbooks, analytic rules, and hunting queries to connect Slack with Azure Sentinel.

Azure Firewall

Azure Firewall is a managed, cloud-based network security service that protects your Azure Virtual Network resources. It’s a fully stateful firewall as a service with built-in high availability and unrestricted cloud scalability. This Azure Firewall solution in Azure Sentinel provides built-in customizable threat detection on top of Azure Sentinel. The solution contains a workbook, detections, hunting queries and playbooks.

Sophos XG Firewall

Monitor the network traffic and firewall status using this solution for Sophos XG Firewall. Furthermore, enable the port scans and excessive denied connections analytic rules to create custom alerts and track as incidents for the ingested data.

Qualys VM

Monitor and detect vulnerabilities reported by Qualys in Azure Sentinel by leveraging the new solution for Qualys VM.

Microsoft Dynamics 365

The Dynamics 365 continuous threat monitoring with Azure Sentinel solution provides you with the ability to collect Dynamics 365 logs, gain visibility into activities within Dynamics 365, and analyze them to detect threats and malicious activities. The solution includes a data connector, workbooks, analytics rules, and hunting queries.

Cloudflare

This solution combines the value of Cloudflare in Azure Sentinel by providing information about the reliability of your external-facing resources such as websites, APIs, and applications. Use the detections and hunting queries to protect your internal resources such as behind-the-firewall applications, teams, and devices.

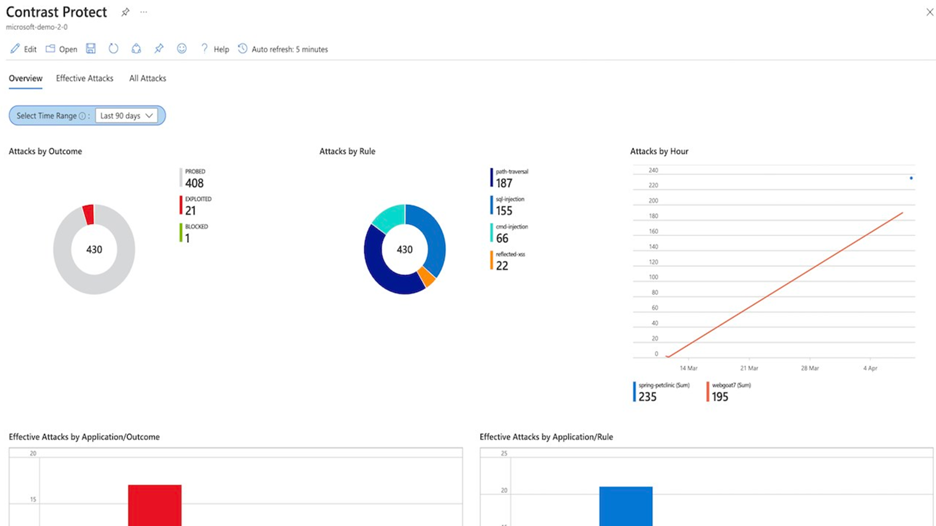

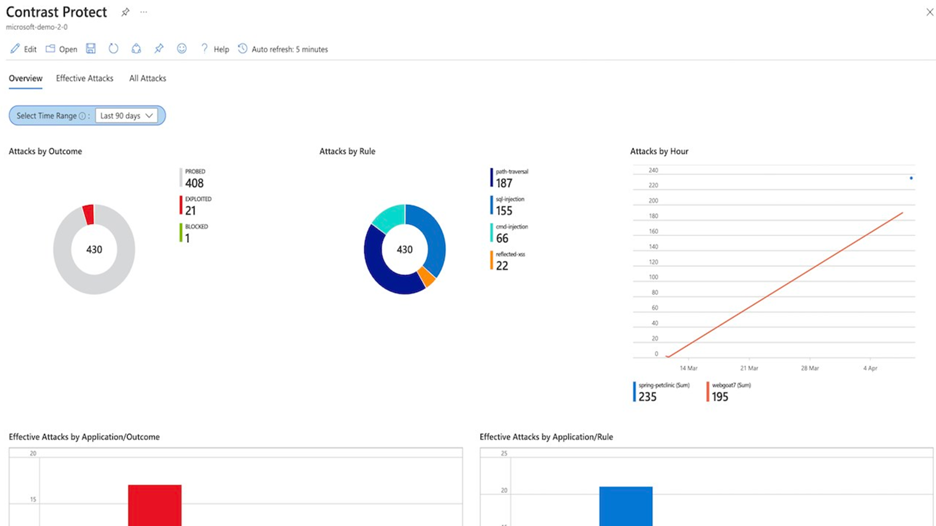

Contrast Protect

Contrast Protect empowers teams to defend their applications anywhere they run, by embedding an automated and accurate runtime protection capability within the application to continuously monitor and block attacks. Contrast Protect seamlessly integrates into Azure Sentinel so you can gain additional security risk visibility into the application layer.

Contrast Protect Solution

Check Point CloudGuard

This solution includes an Azure Logic App custom connector and playbooks for Check Point to offer enhanced integration with SOAR capabilities of Azure Sentinel. Enterprises can correlate and visualize these events on Azure Sentinel and configure SOAR playbooks to automatically trigger CloudGuard to remediate threats.

Senserva

Senserva, a Cloud Security Posture Management (CSPM) solution for Azure Sentinel, simplifies the management of Azure Active Directory security risks before they become problems by continually producing priority-based risk assessments. Senserva information includes a detailed security ranking for all the Azure objects Senserva manages, enabling customers to perform optimal discovery and remediation by fixing the most critical issues with the highest impact items first. All Senserva’s enriched information is sent to Azure Sentinel for processing by analytics, workbooks, and playbooks in this solution.

HYAS Insight

HYAS Insight is a threat and fraud investigation solution using exclusive data sources and non-traditional mechanisms that improves visibility and triples productivity for analysts and investigators while increasing accuracy. HYAS Insight connects attack instances and campaigns to billions of indicators of compromise to understand and counter adversary infrastructure and includes playbooks to enrich and add context to incidents within the Azure Sentinel platform.

Titanium Cloud File Enrichment from ReversingLabs

TitaniumCloud is a threat intelligence solution providing up-to-date file reputation services, threat classification and rich context on over 10 billion goodware and malware files. Files are processed using ReversingLabs File Decomposition Technology. A powerful set of REST API query and feed functions deliver targeted file and malware intelligence for threat identification, analysis, intelligence development, and threat hunting services in Azure Sentinel.

Proofpoint Solutions

Two solutions for Proofpoint bring email protection capabilities into Azure Sentinel. Proofpoint OnDemand Email security (POD) classifies various types of email, while detecting and blocking threats that don’t involve malicious payload. The Proofpoint Targeted Attack Protection (TAP) solution helps detect, mitigate and block advanced threats that target people through email in Azure Sentinel. This includes attacks that use malicious attachments and URLs to install malware or trick users into sharing passwords and sensitive information.

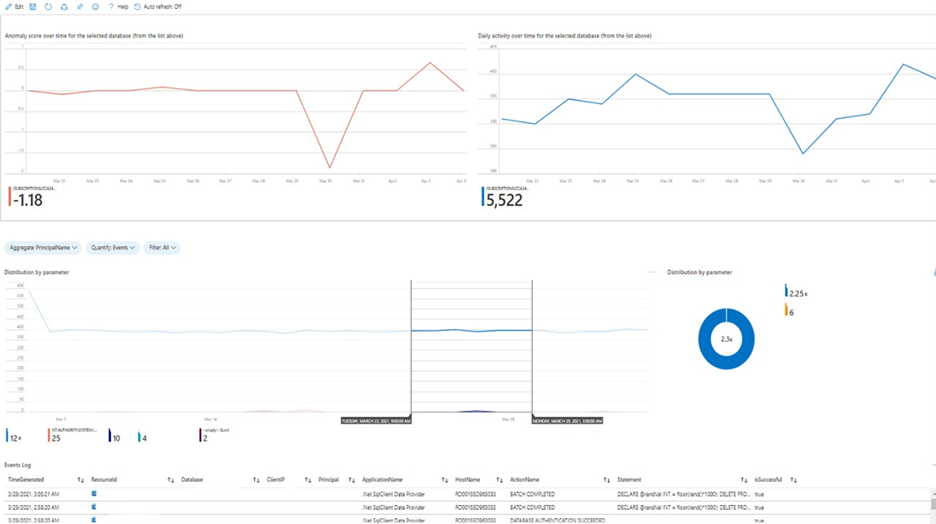

Azure SQL database

This solution provides built-in customizable threat detection for Azure SQL PaaS services in Azure Sentinel, based on SQL Audit log and with seamless integration to alerts from Azure Defender for SQL. This solution includes a guided investigation workbook with incorporated Azure Defender alerts. Furthermore, it includes analytics to detect SQL DB anomalies, audit evasion and threats based on the SQL Audit log, hunting queries to proactively hunt for threats in SQL DBs and a playbook to auto-turn SQL DB audit on.

Azure SQL Solution

Oracle Database

Oracle Database Unified Auditing enables selective and effective auditing inside the Oracle database using policies and conditions, and this solution brings these database audit capabilities into Azure Sentinel. It comes with a data connector to get the audit logs, a workbook for monitoring, and a rich set of analytics and hunting queries to help detect database anomalies and enable threat hunting in Azure Sentinel.

Ubiquiti UniFi

This solution includes a data connector to ingest wireless and wired data communication logs into Azure Sentinel, and lets you monitor firewall and other anomalies via the workbook and a set of analytics and hunting queries.

Box

Box is a single, secure, easy-to-use platform built for the entire content lifecycle, from file creation and sharing, to co-editing, signature, classification, and retention. This solution delivers capabilities to monitor file and user activities for Box and integrates with data collection, workbook, analytics and hunting capabilities in Azure Sentinel.

Closing

Azure Sentinel Solutions is just one of several exciting announcements we’ve made for the RSA Conference 2021. Learn more about other new Azure Sentinel innovations in our announcements blog.

Discover and deploy solutions to get out-of-the-box and end-to-end value for your scenarios in Azure Sentinel. Let us know your feedback using any of the channels listed in the Resources.

We also invite partners to build and publish new solutions for Azure Sentinel. Get started now by joining the Azure Sentinel Threat Hunters GitHub community and following the solutions build guidance.

by Contributed | May 12, 2021 | Technology


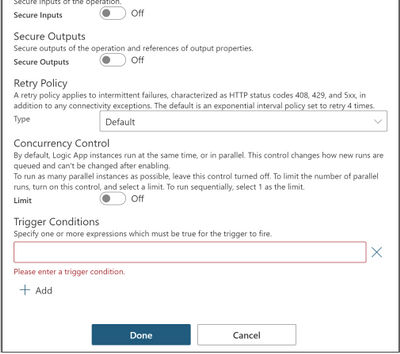

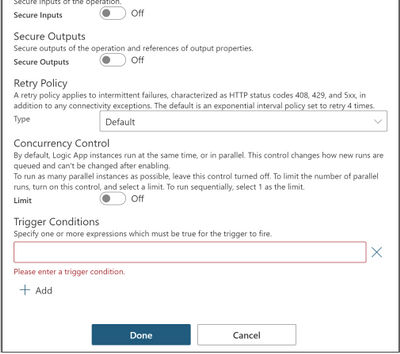

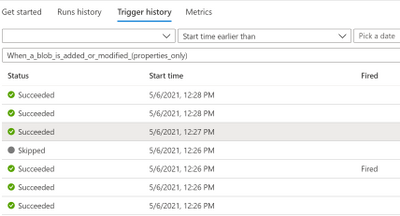

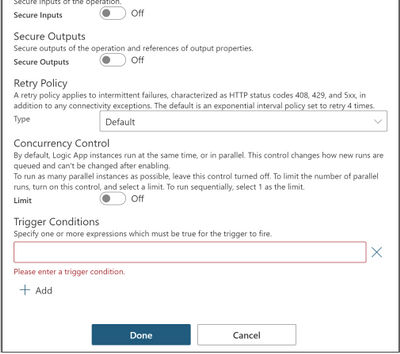

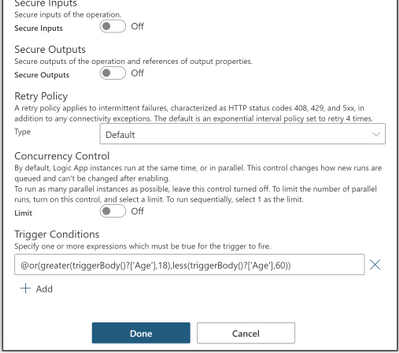

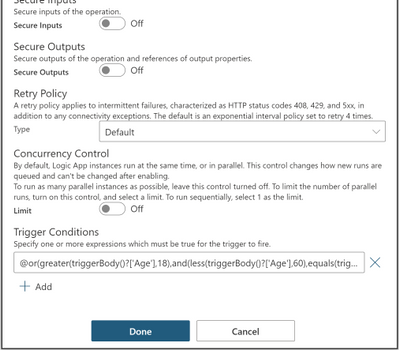

A trigger condition is a trigger setting used to specify one or more conditional expressions that must be true for the trigger to fire.

Trigger conditions can be set using logic app designer or manually using code view; for schema reference for trigger conditions, check the following link: Schema reference for trigger and action types – Azure Logic Apps | Microsoft Docs

To set trigger conditions using logic app designer, follow the steps below:

1- Go to your logic app.

2- On the trigger, click on the three dots (…) at the top right corner.

3- Select Settings.

4- Scroll down to Trigger Conditions.

5- Specify your conditional expression.

6- Click Done.

You can set single or multiple conditions as follows:

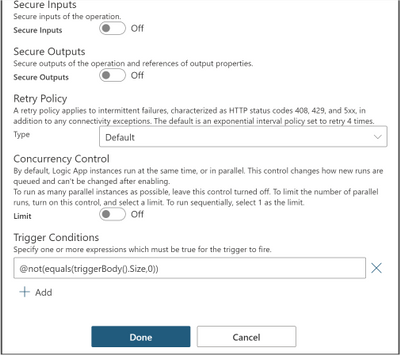

Single Condition

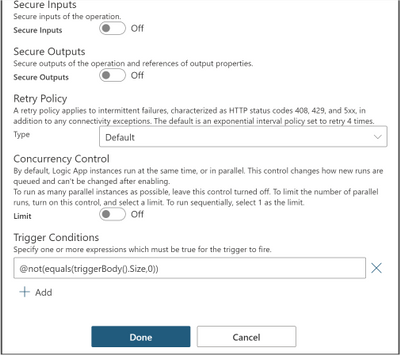

In this example, we apply trigger filter @not(equals(triggerBody().Size,0)) on the When a blob is added or modified (properties only) trigger settings under Trigger Conditions, so that the logic app is not triggered with empty files.

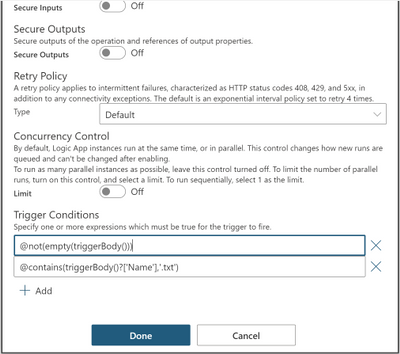

Multiple conditions

You can define multiple conditions but the logic app will only fire if all conditions are met.

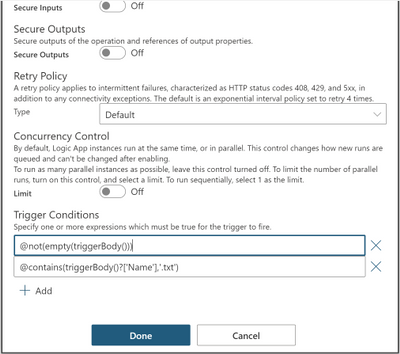

Two or more AND conditions

@not(empty(triggerBody())) and @contains(triggerBody()?['Name'],'.txt')

This trigger will only fire if the trigger body is not empty and the file is a text file, which is checked by testing whether the Name property in the trigger body contains the '.txt' extension.

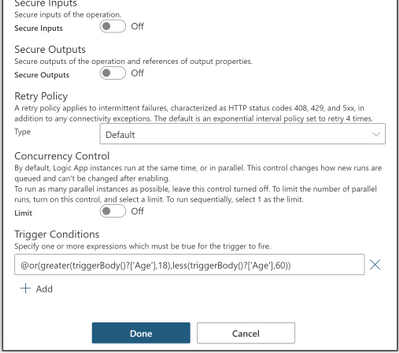

Two or more OR conditions

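@or(greater(triggerBody()?['Age'],18),less(triggerBody()?['Age'],60))

This trigger will only fire if the age is greater than 18 or less than 60, which is checked against the Age property in the trigger body. Note that, as written, every numeric age satisfies one of the two comparisons; to require an age between 18 and 60, combine the comparisons with and() instead of or().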

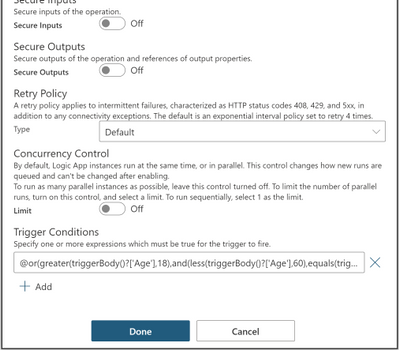

OR and AND Conditions together

@or(greater(triggerBody()?['Age'],18),and(less(triggerBody()?['Age'],60),equals(triggerBody()?['Fit'],true)))

This trigger will only fire if the age is greater than 18, or if the age is less than 60 and Fit is true. Both the Age and Fit properties are read from the trigger body.
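To make the grouping in the expressions above easier to follow, here is a minimal Python sketch that mirrors their Boolean structure. It is only an illustration: the function names and sample payloads are hypothetical, and Logic Apps evaluates trigger conditions itself rather than running anything like this.

```python
def or_condition(body):
    """Mirrors @or(greater(Age,18), less(Age,60))."""
    age = body["Age"]
    return age > 18 or age < 60


def or_and_condition(body):
    """Mirrors @or(greater(Age,18), and(less(Age,60), equals(Fit,true)))."""
    age = body["Age"]
    return age > 18 or (age < 60 and body["Fit"] is True)


def between_condition(body):
    """Mirrors @and(greater(Age,18), less(Age,60)), a range check."""
    age = body["Age"]
    return age > 18 and age < 60


if __name__ == "__main__":
    # Hypothetical trigger bodies used only for this illustration.
    for sample in ({"Age": 10, "Fit": False}, {"Age": 10, "Fit": True}, {"Age": 70, "Fit": False}):
        print(sample, or_condition(sample), or_and_condition(sample), between_condition(sample))
```

The and() group is evaluated as a unit inside or(), which is why a 10-year-old only passes the combined condition when Fit is true.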

For reference guide for logical comparison functions that can be used in conditional expressions, check the following link: Reference guide for functions in expressions – Azure Logic Apps | Microsoft Docs

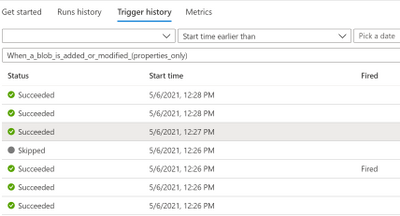

Split On Behavior

If you apply trigger conditions to a trigger with Split On enabled, the conditions are evaluated after the splitting, which guarantees that runs are fired only for the array elements that match the conditions.

In this case, you will see one trigger event for each element in the trigger history. Triggers that did not fire because of trigger conditions can also be found in Trigger History.
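The order of operations (split first, then evaluate the condition per element) can be sketched in a few lines of Python. This is a conceptual model only; `split_and_filter`, `not_empty`, and the sample blobs are hypothetical names chosen for the illustration and are not part of Logic Apps.

```python
def split_and_filter(trigger_items, condition):
    """Model Split On followed by trigger conditions.

    Each array element becomes its own trigger event, and the condition
    is evaluated once per element.
    """
    fired, skipped = [], []
    for item in trigger_items:
        if condition(item):
            fired.append(item)    # a run is started for this element
        else:
            skipped.append(item)  # trigger event recorded, but no run
    return fired, skipped


def not_empty(blob):
    """Mirrors @not(equals(triggerBody().Size,0))."""
    return blob["Size"] != 0


if __name__ == "__main__":
    blobs = [{"Name": "a.txt", "Size": 10}, {"Name": "b.txt", "Size": 0}]
    fired, skipped = split_and_filter(blobs, not_empty)
    print("fired:", [b["Name"] for b in fired])
    print("skipped:", [b["Name"] for b in skipped])
```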

Pros

- Simpler logic app with fewer steps, because the logic that decides whether the logic app should run moves from inside the workflow into the trigger itself.

- Clean workflow run history that reduces operational burden by eliminating unwanted runs.

- Reduced costs by reducing the action calls made for checking the conditions within logic app.

Cons

- Trigger conditions are not visible in the designer, which makes them hard to troubleshoot. It is recommended to rename the trigger to indicate that trigger conditions are set.

- Adding trigger conditions does not support IntelliSense, so it is recommended to draft your expression within an Initialize variable or Compose action first. Note that in these actions an @ is not needed at the beginning of the expression, but within trigger conditions it is needed.

- Runs that did not meet the trigger conditions will not show in the runs history.

by Contributed | May 12, 2021 | Technology


Today, I worked on a very interesting situation. Our customer wants to test connections not from a single application but from many instances of the same application running at once, to simulate a workload.

To test this situation, I developed a small PowerShell script that opens a connection and executes the “SELECT 1” command around 10,000 times, using PowerShell background jobs to run the same script in multiple instances in parallel.

Basic points:

- 10 processes running in parallel from a single PC.

- Every process runs the PowerShell script DoneThreadIndividual.ps1, which executes SELECT 1 1,000 times.

- For every execution, the script records the time spent on the connection (with or without connection pooling) and on the query execution, and saves the information in a log file.

- Everything is customizable, in terms of numbers, number of connections and query to execute.
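The same pattern (a fixed number of parallel workers, each timing a repeated action) can be sketched in Python as follows. This is only an illustration of the idea, not a port of the PowerShell scripts in this post: it uses threads instead of separate processes, and `run_workload`, `run_parallel`, and the placeholder `action` are hypothetical names. In a real test, `action` would open a connection and run the query.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_workload(worker_id, executions, action):
    """Run `action` repeatedly and record the elapsed time of each call in ms."""
    timings = []
    for _ in range(executions):
        start = time.perf_counter()
        action()
        timings.append((time.perf_counter() - start) * 1000.0)
    return worker_id, timings


def run_parallel(workers, executions, action):
    """Run `workers` workloads at the same time, never more than `workers` at once."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(run_workload, w, executions, action) for w in range(workers)]
        return dict(f.result() for f in futures)


if __name__ == "__main__":
    # Placeholder action; replace with a real connection test.
    results = run_parallel(workers=10, executions=5, action=lambda: time.sleep(0.001))
    for worker_id, timings in sorted(results.items()):
        print(worker_id, round(sum(timings) / len(timings), 2), "ms on average")
```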

Script to execute a defined number of processes

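# Returns $true while fewer than $lMax background jobs are running.
Function lGiveID([Parameter(Mandatory=$false)] [int] $lMax)
{
    $Jobs = Get-Job
    [int]$lPending = 0
    Foreach ($di in $Jobs)
    {
        if($di.State -eq "Running") { $lPending = $lPending + 1 }
    }
    if($lPending -lt $lMax) { return $true }
    return $false
}

try
{
    for ($i=1; $i -lt 2000; $i++)
    {
        if((lGiveID 10) -eq $true)
        {
            # Launch another instance of the test script as a background job.
            Start-Job -FilePath "C:\Test\DoneThreadIndividual.ps1"
            Write-Output "Starting Up---"
        }
        else
        {
            Write-Output "Limit reached..Waiting to have more resources.."
            Start-Sleep -Seconds 20
        }
    }
}
catch
{
    # logMsg is defined only in DoneThreadIndividual.ps1, so report errors directly here.
    Write-Host -ForegroundColor White -BackgroundColor Red "You're WRONG"
    Write-Host -ForegroundColor White -BackgroundColor Red $Error[0].Exception
}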

Script for testing – DoneThreadIndividual.ps1

$DatabaseServer = "servername.database.windows.net"

$Database = "dbname"

$Username = "username"

$Password = "password"

$Pooling = $true

$NumberExecutions =1000

$FolderV = "C:\Test\"

function GiveMeSeparator

{

Param([Parameter(Mandatory=$true)]

[System.String]$Text,

[Parameter(Mandatory=$true)]

[System.String]$Separator)

try

{

[hashtable]$return=@{}

$Pos = $Text.IndexOf($Separator)

$return.Text= $Text.substring(0, $Pos)

$return.Remaining = $Text.substring( $Pos+1 )

return $Return

}

catch

{

$return.Text= $Text

$return.Remaining = ""

return $Return

}

}

#--------------------------------------------------------------

#Create a folder

#--------------------------------------------------------------

Function CreateFolder

{

Param( [Parameter(Mandatory)]$Folder )

try

{

$FileExists = Test-Path $Folder

if($FileExists -eq $False)

{

$result = New-Item $Folder -type directory

if($result -eq $null)

{

logMsg("Impossible to create the folder " + $Folder) (2)

return $false

}

}

return $true

}

catch

{

return $false

}

}

#--------------------------------

#Validate Param

#--------------------------------

function TestEmpty($s)

{

if ([string]::IsNullOrWhitespace($s))

{

return $true;

}

else

{

return $false;

}

}

Function GiveMeConnectionSource()

{

for ($i=1; $i -lt 10; $i++)

{

try

{

logMsg( "Connecting to the database...Attempt #" + $i) (1)

$SQLConnection = New-Object System.Data.SqlClient.SqlConnection

$SQLConnection.ConnectionString = "Server="+$DatabaseServer+";Database="+$Database+";User ID="+$username+";Password="+$password+";Connection Timeout=15"

if( $Pooling -eq $true )

{

$SQLConnection.ConnectionString = $SQLConnection.ConnectionString + ";Pooling=True"

}

else

{

$SQLConnection.ConnectionString = $SQLConnection.ConnectionString + ";Pooling=False"

}

$SQLConnection.Open()

logMsg("Connected to the database...") (1)

return $SQLConnection

break;

}

catch

{

logMsg("Not able to connect - Retrying the connection..." + $Error[0].Exception) (2)

Start-Sleep -s 5

}

}

}

#--------------------------------

#Log the operations

#--------------------------------

function logMsg

{

Param

(

[Parameter(Mandatory=$true, Position=0)]

[string] $msg,

[Parameter(Mandatory=$false, Position=1)]

[int] $Color

)

try

{

$Fecha = Get-Date -format "yyyy-MM-dd HH:mm:ss"

$msg = $Fecha + " " + $msg

Write-Output $msg | Out-File -FilePath $LogFile -Append

$Colores="White"

If($Color -eq 1 )

{

$Colores ="Cyan"

}

If($Color -eq 3 )

{

$Colores ="Yellow"

}

if($Color -eq 2)

{

Write-Host -ForegroundColor White -BackgroundColor Red $msg

}

else

{

Write-Host -ForegroundColor $Colores $msg

}

}

catch

{

Write-Host $msg

}

}

cls

$sw = [diagnostics.stopwatch]::StartNew()

logMsg("Creating the folder " + $FolderV) (1)

$result = CreateFolder($FolderV)

If( $result -eq $false)

{

logMsg("Was not possible to create the folder") (2)

exit;

}

logMsg("Created the folder " + $FolderV) (1)

$LogFile = Join-Path $FolderV "Results.Log" #Logging the operations.

$query = @("SELECT 1")

for ($i=0; $i -lt $NumberExecutions; $i++)

{

try

{

$SQLConnectionSource = GiveMeConnectionSource #Connecting to the database.

if($SQLConnectionSource -eq $null)

{

logMsg("It is not possible to connect to the database") (2)

}

else

{

$SQLConnectionSource.StatisticsEnabled = 1

$command = New-Object -TypeName System.Data.SqlClient.SqlCommand

$command.CommandTimeout = 60

$command.Connection=$SQLConnectionSource

for ($iQuery=0; $iQuery -lt $query.Count; $iQuery++)

{

$start = get-date

$command.CommandText = $query[$iQuery]

$command.ExecuteNonQuery() | Out-Null

$end = get-date

$data = $SQLConnectionSource.RetrieveStatistics()

logMsg("-------------------------")

logMsg("Query : " + $query[$iQuery])

logMsg("Iteration : " +$i)

logMsg("Time required (ms) : " +(New-TimeSpan -Start $start -End $end).TotalMilliseconds)

logMsg("NetworkServerTime (ms): " +$data.NetworkServerTime)

logMsg("Execution Time (ms) : " +$data.ExecutionTime)

logMsg("Connection Time : " +$data.ConnectionTime)

logMsg("ServerRoundTrips : " +$data.ServerRoundtrips)

logMsg("-------------------------")

}

$SQLConnectionSource.Close()

}

}

catch

{

logMsg( "You're WRONG") (2)

logMsg($Error[0].Exception) (2)

}

}

logMsg("Time spent (ms) Process : " +$sw.Elapsed.TotalMilliseconds) (2)

logMsg("Review: https://docs.microsoft.com/en-us/dotnet/framework/data/adonet/sql/provider-statistics-for-sql-server") (2)

Enjoy!

by Contributed | May 12, 2021 | Technology


The SharePoint community monthly call is our general monthly review of the latest SharePoint news (tools, extensions, features, capabilities, content and training), engineering priorities and community recognition for Developers, IT Pros and Makers. This monthly community call happens on the second Tuesday of each month. You can download the recurrent invite from https://aka.ms/sp-call.

Call Summary:

SPFx v1.12.1 with Node v14 and Gulp4 support is generally available. Don’t miss the SharePoint sample gallery. Preview the new Microsoft 365 Extensibility look book gallery. Visit the new Microsoft 365 PnP Community hub at Microsoft Tech Communities! Sign up and attend one of a growing list of Sharing is Caring events. The Microsoft 365 Update – Community (PnP) | May 2021 is now available. In this call, we quickly addressed developer and non-developer entries in UserVoice. We are in the process of moving from UserVoice to a 1st party solution for customer feedback and feature requests.

A huge thank you to the record number of contributors and organizations actively participating in this PnP Community during April. Month over month, you continue to amaze. The host of this call was Vesa Juvonen (Microsoft) @vesajuvonen. Q&A took place in the chat throughout the call.

Featured Topic:

SharePoint Syntex: Product overview and latest feature updates – SharePoint Syntex is a new add-on that builds on the content services capabilities already provided in SharePoint, with an infusion of AI to automate and augment the classification of content: understanding, processing, compliance. The call included demos on building and publishing a document understanding model using the UI, and on downloading a sample model, then publishing it and processing content using PowerShell cmdlets or APIs.

Actions:

- Register for Sharing is Caring Events:

- First Time Contributor Session – May 24th (EMEA, APAC & US friendly times available)

- Community Docs Session – May

- PnP – SPFx Developer Workstation Setup – May 13th

- PnP SPFx Samples – Solving SPFx version differences using Node Version Manager – May 20th

- AMA (Ask Me Anything) – Microsoft Graph & MGT – June

- First Time Presenter – May 25th

- More than Code with VSCode – May 27th

- Maturity Model Practitioners – May 18th

- PnP Office Hours – 1:1 session – Register

- Download the recurrent invite for this call – https://aka.ms/sp-call.

You can check the latest updates in the monthly summary and at aka.ms/spdev-blog.

This call was delivered on Tuesday, May 11, 2021. The call agenda is reflected below with direct links to specific sections. You can jump directly to a specific topic by clicking on the topic’s timestamp which will redirect your browser to that topic in the recording published on the Microsoft 365 Community YouTube Channel.

Call Agenda:

- UserVoice status for non-dev focused SharePoint entries – 8:04

- UserVoice status for dev focused SharePoint Framework entries – 9:04

- SharePoint community update with latest news and roadmap – 10:35

- Community contributors and companies which have been involved in the past month – 11:56

- Topic: SharePoint Syntex: Product overview and latest feature updates – Sean Squires (Microsoft) | @iamseansquires – 15:28

- Demo: How to build and publish a document understanding model – James Eccles (Microsoft) | @jimdeccles – 24:52

- Demo: SharePoint Syntex integration and automation options – Bert Jansen (Microsoft) | @o365bert – 39:36

The full recording of this session is available from Microsoft 365 & SharePoint Community YouTube channel – http://aka.ms/m365pnp-videos.

- Presentation slides used in this community call are found at OneDrive.

Resources:

Additional resources on covered topics and discussions.

Additional Resources:

Upcoming calls | Recurrent invites:

“Too many links, can’t remember” – not a problem… just one URL is enough for all Microsoft 365 community topics – http://aka.ms/m365pnp.

“Sharing is caring”

SharePoint Team, Microsoft – 12th of May 2021

by Contributed | May 12, 2021 | Technology


Overview

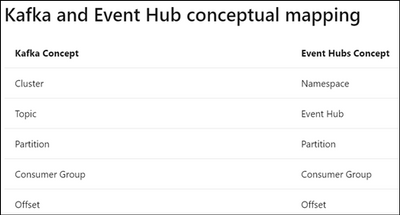

Azure Event Hub is compatible with Apache Kafka. If you are familiar with Apache Kafka, you may have experience with consumer rebalancing in your Kafka cluster. Rebalancing is the process that decides which consumer in the consumer group should be responsible for which partition in Apache Kafka. Rebalancing may occur when a consumer joins or leaves a consumer group. When you use Azure Event Hub with Apache Kafka, consumer rebalancing may occur in your Azure Event Hub namespace too.

Consumer rebalancing is a normal process for Apache Kafka. However, if the REBALANCE_IN_PROGRESS error occurs continuously and frequently in your consumer group, you may need to change your configuration to reduce how often consumer rebalancing happens. In this post, I would like to share consumer configurations that may cause the REBALANCE_IN_PROGRESS error.

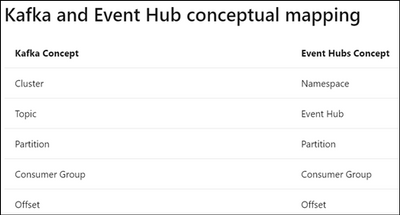

Azure Event Hub with Kafka

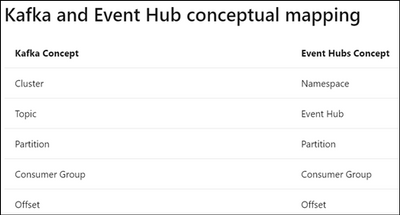

The concepts in Azure Event Hub and Kafka may differ. Please refer to the document Use event hub from Apache Kafka app – Azure Event Hubs | Microsoft Docs for the conceptual mapping between Kafka and Event Hub.

REBALANCE_IN_PROGRESS error

Reference : Apache Kafka

REBALANCE_IN_PROGRESS is a consumer group related error. It is affected by the consumer’s configuration and behavior. Normally, the REBALANCE_IN_PROGRESS error might occur if your session timeout value is set too low or if the application takes too long to process records in the consumer. During rebalancing, your consumer is not able to read any records. In this case, you will find that your consumer is not reading any records and is constantly seeing REBALANCE_IN_PROGRESS. Below are common examples of when rebalancing will happen:

- Scenario 1

The records read back are empty and a heartbeat expired error occurs at the same time.

- Scenario 2

The consumer is processing large records with a long processing time and a timeout error occurs.

Recommendations for REBALANCE_IN_PROGRESS error

- Recommendation 1 : Increase session.timeout.ms in consumer configuration

Reference : azure-event-hubs-for-kafka/CONFIGURATION.md at master · Azure/azure-event-hubs-for-kafka · GitHub

A heartbeat expired error is usually due to an application code crash, a long processing time, or a temporary network issue. If session.timeout.ms is too small and the heartbeat is not sent before the timeout, you will see heartbeat expired errors, which then cause rebalancing.

- Recommendation 2 : Decrease batch size for each poll()

If the time spent on processing records is too long, try to poll fewer records at a time.

- Recommendation 3 : Increase max.poll.interval.ms in consumer configuration and decrease the time spent on processing the read back records

Reference : azure-event-hubs-for-kafka/CONFIGURATION.md at master · Azure/azure-event-hubs-for-kafka · GitHub

You may need to add logs to track how long the code takes to process records; please refer to the example below. If processing these records takes longer than max.poll.interval.ms, it may cause rebalancing.

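// Requires java.time.Duration, java.util.Arrays and org.apache.kafka.clients.consumer.*
Consumer<String, String> consumer = new KafkaConsumer<>(props);
consumer.subscribe(Arrays.asList(topic));
try {
    while (true) {
        ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
        long start = System.currentTimeMillis();
        log.info("Message begin processing");
        for (ConsumerRecord<String, String> record : records) {
            // do something
        }
        // Compare the elapsed time with max.poll.interval.ms
        log.info("Message finish processing, elapsed ms: " + (System.currentTimeMillis() - start));
    }
} finally {
    // Always release the consumer so that it leaves the group cleanly
    consumer.close();
}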
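As a rough way to reason about recommendations 2 and 3 together, the sketch below estimates whether one poll() batch can be processed within max.poll.interval.ms. It is back-of-the-envelope arithmetic, not a Kafka client: the function names and the 50% safety margin are assumptions made for the illustration, while the defaults of 300000 ms for max.poll.interval.ms and 500 for max.poll.records are the standard Kafka consumer defaults.

```python
def batch_exceeds_poll_interval(records_per_poll, ms_per_record, max_poll_interval_ms=300_000):
    """Return True if processing one batch would take longer than max.poll.interval.ms."""
    return records_per_poll * ms_per_record > max_poll_interval_ms


def max_safe_records(ms_per_record, max_poll_interval_ms=300_000, safety=0.5):
    """Largest batch size that uses at most `safety` of the poll interval (assumed margin)."""
    return max(1, int(max_poll_interval_ms * safety // ms_per_record))


if __name__ == "__main__":
    # Hypothetical measurement: each record takes about one second to process.
    print(batch_exceeds_poll_interval(records_per_poll=500, ms_per_record=1000))
    print(max_safe_records(ms_per_record=1000))
```

If the first check returns True for your measured processing time, either lower max.poll.records or raise max.poll.interval.ms.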

by Contributed | May 12, 2021 | Technology


Welcome back to our second post in the “Microsoft Cloud App Security: The hunt” series!

If you haven’t read the first post by Sebastien Molendijk, head over to Microsoft Cloud App Security: The hunt in a multi-stage incident – Microsoft Tech Community to see how you can leverage advanced hunting to investigate a multi-stage incident.

As stated previously, this series will be used to address the alerts and scenarios we have seen most frequently from customers and apply simple but effective queries that can be used in everyday investigations.

The use case below describes one way to determine whether an insider is posing a risk to an organization. One of the key things to understand about insider risk is that it concerns inadvertent or intentional risks posed by employees or other members of the organization. Investigating it often requires the ability to understand the context of the user and to quickly identify and manage risks. The methods we describe are one common way to assess the risk to an organization from an insider who is planning to exit the company.

Every step of this investigation should be done in coordination with your organization’s HR and Legal departments, adhering to appropriate privacy, security and compliance policies as set out by your organization. In addition, there may be training of analysts to handle this kind of investigation with specific and careful steps in accordance with your organization’s commitment to its employees.

Use case

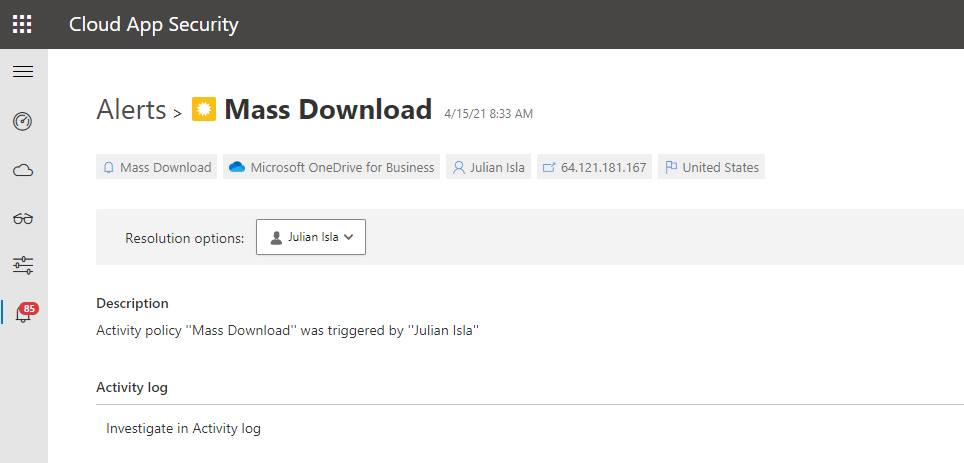

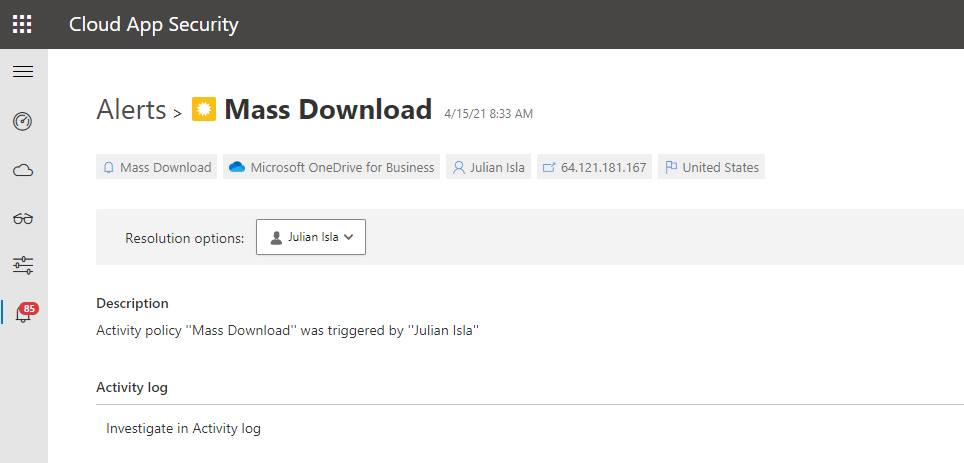

Contoso implemented Microsoft 365 Defender and is monitoring alerts using Microsoft’s security solutions. While reviewing the new alerts, our security analyst noticed a mass download alert that included a user named Julian Isla.

Julian is currently working on a highly confidential initiative called Project Hurricane. Knowing this, the analyst wants to conduct a thorough analysis in this investigation.

Our analyst can immediately see that Cloud App Security provides many key details in the alert, including the user, IP address, application and the location.

The first step for the analyst may be to gather details such as the device, the type of information downloaded, the user’s typical behavior and other possible activities that could mean data was exfiltrated.

Using the available details in the MCAS alert, and the initial questions and concerns of the investigation, we will showcase how to answer each step through an advanced hunting query and that the results of each query shape the follow-on query, allowing the investigator to piece together the full story from the activities logged.

Question 1:

|

Query Used:

|

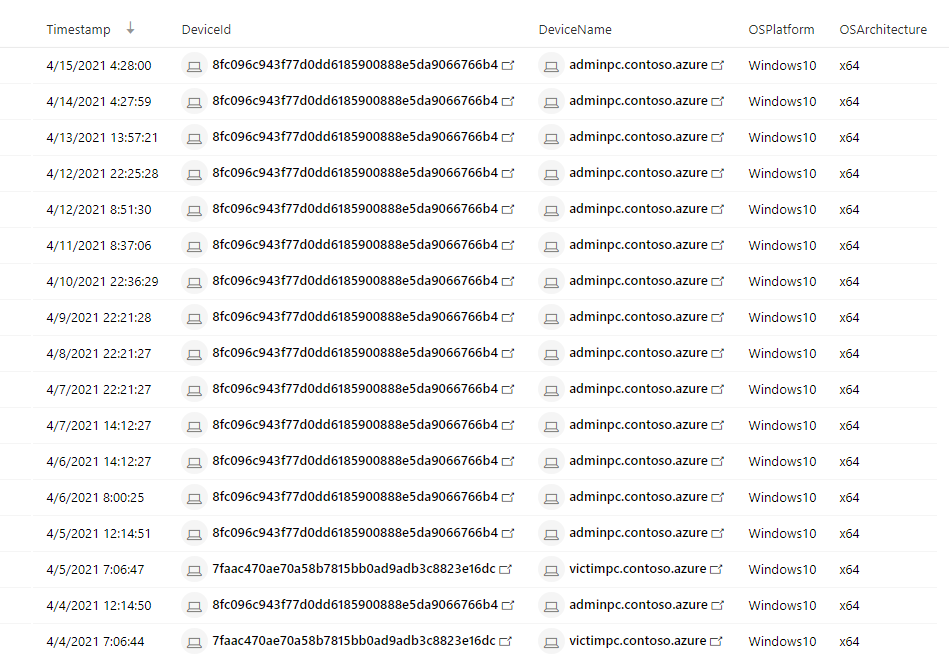

What managed devices has this user logged in to?

|

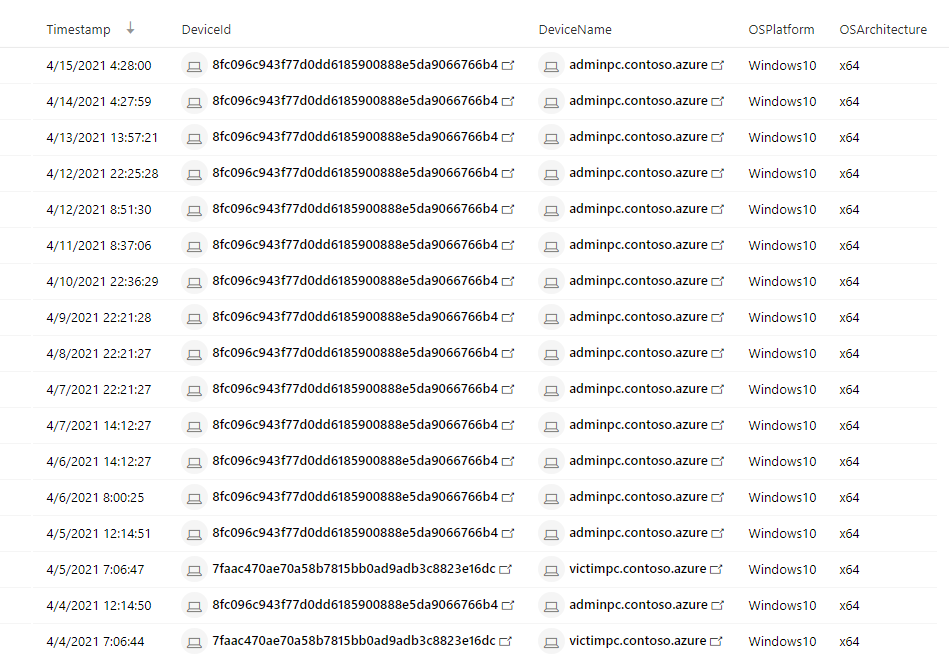

DeviceInfo

| where LoggedOnUsers has "juliani" and isnotempty(OSPlatform)

| distinct Timestamp, DeviceId, DeviceName, OSPlatform, OSArchitecture

|

NOTE: The analyst was able to extract the Security Account Manager (SAM) account name. This can be done by using Cloud App Security’s entity page.

NOTE: If the analyst wanted to display the entire LoggedOnUsers column, the value would look like this:

[{"UserName":"JulianI","DomainName":"CONTOSO","Sid":"S-1-5-21-1661583231-2311428937-3957907789-1103"}]

Result:

Using this query that surfaces Microsoft Defender for Endpoint (MDE) data, the analyst found that Julian used two devices today, adminpc.contoso.azure and victimpc.contoso.azure. More importantly, the analyst can see that Julian was on the adminpc device on the same day as the alert for a mass download was triggered.

Question 2:

|

Query Used:

|

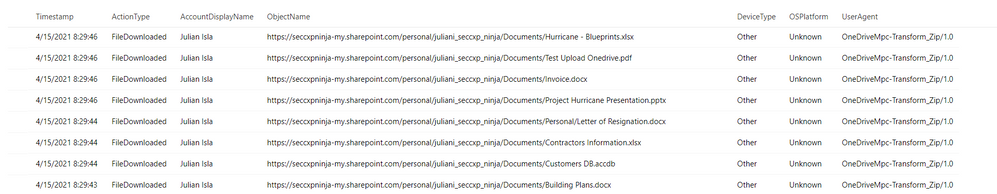

Were the files downloaded to a non-managed device?

|

let AlertTimestamp = datetime(2021-04-15T23:45:00.0000000Z);

CloudAppEvents

| where Timestamp between ((AlertTimestamp - 24h) .. (AlertTimestamp + 24h))

| where AccountDisplayName == "Julian Isla"

| where ActionType == "FileDownloaded"

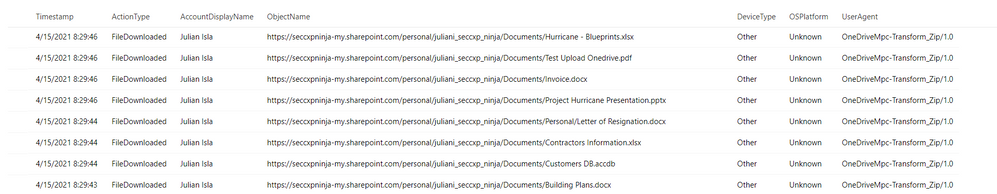

| project Timestamp, ActionType, AccountDisplayName, ObjectName, DeviceType, OSPlatform, UserAgent

|

Result:

By using the CloudAppEvents table, the analyst can now view the file names and the number of files and devices Julian used to complete these downloads. They can determine by the names of the files and the device details that Julian has downloaded important proprietary company data for Project Hurricane, a high-profile initiative for a new application that includes sensitive customer data and source code.

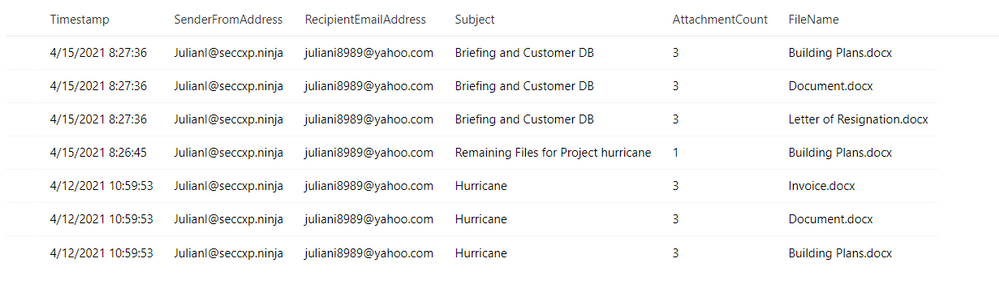

Question 3:

|

Query Used:

|

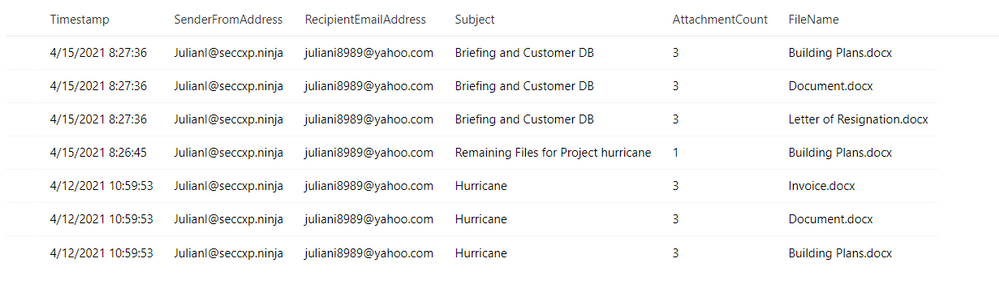

Has this user leveraged personal email in the past?

|

EmailEvents

| where SenderMailFromAddress == "JulianI@seccxp.ninja"

| where RecipientEmailAddress has "@gmail.com" or RecipientEmailAddress has "@yahoo.com" or RecipientEmailAddress has "@hotmail"

| project Timestamp, SenderFromAddress, RecipientEmailAddress, Subject, AttachmentCount, NetworkMessageId

| join EmailAttachmentInfo on NetworkMessageId, RecipientEmailAddress

| project Timestamp, SenderFromAddress, RecipientEmailAddress, Subject, AttachmentCount, FileName

|

Result:

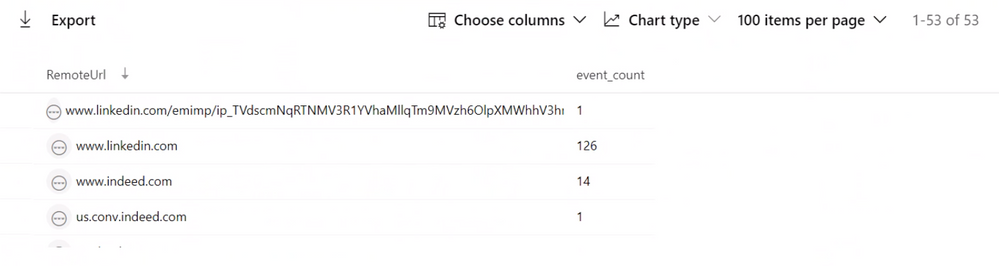

Question 4:

|

Query Used:

|

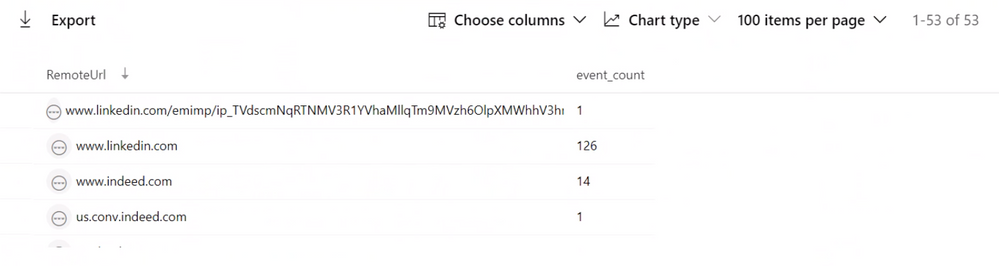

Has this user been actively job searching?

|

DeviceNetworkEvents

| where Timestamp > ago(30d)

| where DeviceName in ("adminpc.contoso.azure”, “victimpc.contoso.azure ")

| where InitiatingProcessAccountName == "juliani"

| where RemoteUrl has "linkedin" or RemoteUrl has "indeed" or RemoteUrl has "glassdoor"

| summarize event_count = count() by RemoteUrl

|

Result:

By investigating the DeviceNetworkEvents table to find out whether this user may have a motive for these types of activities, the analyst can see that the user is actively browsing job sites and may have plans to leave their current role at Contoso.

Question 5:

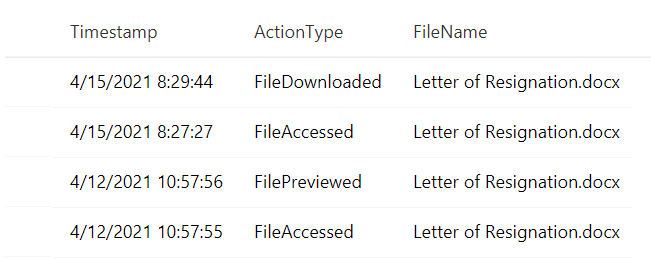

|

Query Used:

|

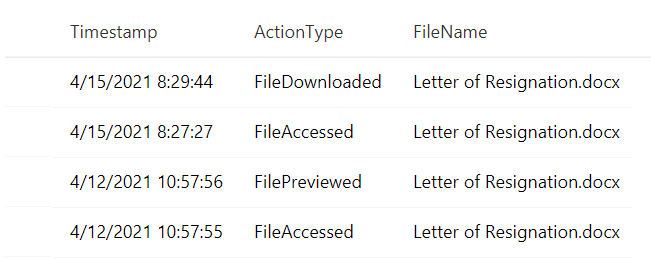

Does this user have a Letter of Resignation or Resume Saved to their local PC?

Does this user have a Letter of Resignation or Resume Saved to their personal OneDrive?

|

DeviceFileEvents

| where Timestamp > ago(30d)

| where InitiatingProcessAccountName == "juliani"

| where DeviceName in ("adminpc.contoso.azure”, “victimpc.contoso.azure ")

| where FileName has "resume" or FileName has "resignation"

| project Timestamp, InitiatingProcessAccountName, ActionType, FileName

CloudAppEvents

| where Timestamp > ago(30d)

| where AccountDisplayName == "Julian Isla"

| where Application == "Microsoft OneDrive for Business"

| extend FileName = tostring(RawEventData.SourceFileName)

| where FileName has "resume" or FileName has "resignation"

| project Timestamp, ActionType, FileName

|

Result:

The analyst is attempting to establish the user’s planned trajectory of actions and sees that they currently have a letter of resignation saved to their desktop and have recently accessed and downloaded it.

Question 6:

Have any removable media or external devices been used on the PCs we discovered?

Query Used:

let DeviceNameToSearch = "adminpc.contoso.azure";
let TimespanInSeconds = 900; // Period of time between device insertion and file copy
let Connections =
DeviceEvents
| where (isempty(DeviceNameToSearch) or DeviceName =~ DeviceNameToSearch) and ActionType == "PnpDeviceConnected"
| extend parsed = parse_json(AdditionalFields)
| project DeviceId,ConnectionTime = Timestamp, DriveClass = tostring(parsed.ClassName), UsbDeviceId = tostring(parsed.DeviceId), ClassId = tostring(parsed.DeviceId), DeviceDescription = tostring(parsed.DeviceDescription), VendorIds = tostring(parsed.VendorIds)
| where DriveClass == 'USB' and DeviceDescription == 'USB Mass Storage Device';
DeviceFileEvents
| where (isempty(DeviceNameToSearch) or DeviceName =~ DeviceNameToSearch) and FolderPath !startswith "c" and FolderPath !startswith @"\"
| join kind=inner Connections on DeviceId
| where datetime_diff('second',Timestamp,ConnectionTime) <= TimespanInSeconds

Result:

Luckily, the analyst can determine that files were not exfiltrated because there is no record of a removable media device data transfer from the user’s most recently used device.

Throughout the investigation, the analyst had many avenues to pursue and potential ways to mitigate and prevent further exfiltration of data. For example, using Cloud App Security’s user resolutions, the analyst could have suspended the user. Additionally, using Microsoft Defender for Endpoint integration, the analyst could have isolated the managed device, preventing it from having any non-related network communication.

In conclusion, in this test scenario, the Contoso employee “Julian” had been violating company policy and exfiltrating proprietary data for Project Hurricane to his personal laptop and email account for some time. The analysts also found that the user had been actively job searching and had a recently edited version of a letter of resignation saved to their desktop. Using the initial MCAS alert, as well as logs across Microsoft Defender for Endpoint and Microsoft Defender for Office 365, the analysts discovered the activity and prevented further data loss for the company by this user.

This completes our second blog. Please stay tuned for other common use cases that can be easily and thoroughly investigated with Microsoft Cloud App Security and Microsoft 365 Defender!

Resources:

For more information about the features discussed in this article, please read:

Feedback

We welcome your feedback or relevant use cases and requirements for this pillar of Cloud App Security. Email CASFeedback@microsoft.com and mention the area or pillar in Cloud App Security you wish to discuss.

Learn more

For further information on how your organization can benefit from Microsoft Cloud App Security, connect with us at the links below:

Follow us on LinkedIn as #CloudAppSecurity. To learn more about Microsoft Security solutions visit our website. Bookmark the Security blog to keep up with our expert coverage on security matters. Also, follow us at @MSFTSecurity on Twitter, and Microsoft Security on LinkedIn for the latest news and updates on cybersecurity.

Happy Hunting!

by Contributed | May 11, 2021 | Technology


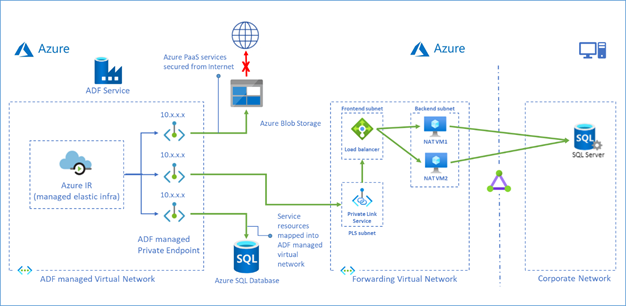

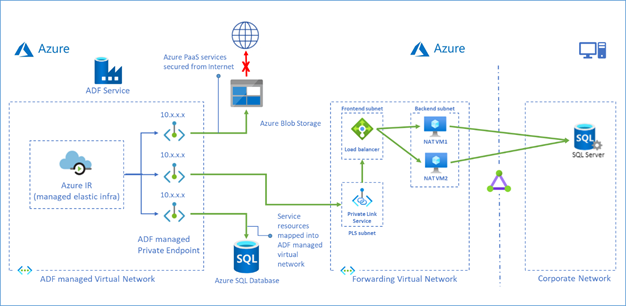

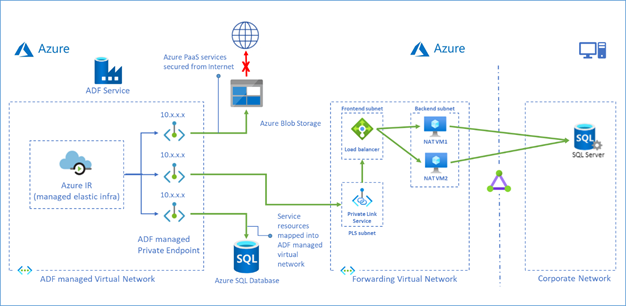

Azure Data Factory managed virtual network is designed to allow you to securely connect Azure Integration Runtime to your data stores via Private Endpoint. Your data traffic between Azure Data Factory Managed Virtual Network and data stores goes through Azure Private Link, which provides secured connectivity and eliminates your data exposure to the public internet.

Now we have a solution which leverages Private Link Service and Load Balancer to access on premises data stores or data stores in another virtual network from ADF managed virtual network.

To learn more about this solution, visit Tutorial – access on premises SQL Server.

You can also use this approach to access Azure SQL Database Managed Instance. See more in Tutorial – access Azure SQL Database Managed Instance.

by Contributed | May 11, 2021 | Technology


Improved network performance over the Internet is essential for edge devices connecting to the cloud. Last mile performance impacts user perceived latencies and is an area of focus for our online services like M365, SharePoint, and Bing. Although the next generation transport QUIC is on the horizon, TCP is the dominant transport protocol today. Improvements made to TCP’s performance directly improve response times and download/upload speeds.

The Internet last mile and wide area networks (WAN) are characterized by high latency and a long tail of networks which suffer from packet loss and reordering. Higher latency, packet loss, jitter, and reordering, all impact TCP’s performance. Over the past few years, we have invested heavily in improving TCP WAN performance and engaged with the IETF standards community to help advance the state of the art. In this blog we will walk through our journey and show how we made big strides in improving performance between Windows Server 2016 and the upcoming Windows Server 2022.

Introduction

There are two important building blocks of TCP which govern its performance over the Internet: Congestion Control and Loss Recovery. The goal of congestion control is to determine the amount of data that can be safely injected into the network to maintain good performance and minimize congestion. Slow Start is the initial stage of congestion control where TCP ramps up its speed quickly until a congestion signal (packet loss, ECN, etc.) occurs. The steady state Congestion Avoidance stage follows Slow Start where different TCP congestion control algorithms use different approaches to adjust the amount of data in-flight.
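
The two stages can be illustrated with a minimal sketch of classic Reno-style window growth as described in RFC 5681. This is not the Windows implementation; the function name `on_ack` and the 1460-byte `MSS` are assumptions chosen for illustration.

```python
MSS = 1460  # assumed maximum segment size in bytes


def on_ack(cwnd: int, ssthresh: int, bytes_acked: int) -> int:
    """Return the new congestion window (bytes) after one ACK, Reno-style."""
    if cwnd < ssthresh:
        # Slow Start: grow by up to one MSS per ACK, which roughly
        # doubles the window every round trip.
        return cwnd + min(bytes_acked, MSS)
    # Congestion Avoidance: grow by about one MSS per round trip in total.
    return cwnd + max(1, MSS * MSS // cwnd)


# Below ssthresh the window grows by a full segment per ACK;
# above it, the same ACK adds only a small fraction of a segment.
slow_start_cwnd = on_ack(10 * MSS, 100 * MSS, MSS)
avoidance_cwnd = on_ack(100 * MSS, 50 * MSS, MSS)
```

Other congestion control algorithms replace the Congestion Avoidance branch with their own growth function; the Slow Start branch is the part that HyStart-style algorithms try to exit at the right time.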

Loss Recovery is the process of detecting and recovering from packet loss during transmission. TCP can infer that a segment is lost by looking at the ACK feedback from the receiver, and it retransmits any segments inferred lost. When loss recovery fails, TCP uses the retransmission timeout (RTO, usually 300ms in WAN scenarios) as the last resort to retransmit the lost segments. When the RTO timer fires, TCP returns to Slow Start from the first unacknowledged segment. This long wait period and the subsequent congestion response significantly impact performance, so optimizing Loss Recovery algorithms enhances throughput and reduces latency.
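
To show why an RTO is so costly, here is a small sketch of the standard congestion response to a timeout from RFC 5681. The function name `on_rto` and the `MSS` value are assumptions, and Windows may differ in detail.

```python
from typing import Tuple

MSS = 1460  # assumed maximum segment size in bytes


def on_rto(flight_size: int) -> Tuple[int, int]:
    """Return (cwnd, ssthresh) in bytes after the retransmission timer fires."""
    # Remember half of the data that was in flight as the new Slow Start target.
    ssthresh = max(flight_size // 2, 2 * MSS)
    # Restart from a window of a single segment, i.e. re-enter Slow Start.
    cwnd = MSS
    return cwnd, ssthresh


cwnd, ssthresh = on_rto(flight_size=100 * MSS)
```

A sender that had 100 segments in flight waits out the timer and then restarts from a single segment, which is why avoiding RTOs matters so much on high-latency paths.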

Improving Slow Start: HyStart++

We determined that the traditional Slow Start algorithm overshoots the optimum rate and is likely to hit an RTO during Slow Start due to massive packet loss. We explored the use of an algorithm called HyStart to mitigate this problem. HyStart triggers an exit from Slow Start when the connection latency is observed to increase. However, we found that false positives sometimes cause a premature exit from Slow Start, limiting performance. We developed a variant of HyStart to mitigate premature Slow Start exit in networks with delay jitter: when HyStart is triggered, rather than going to the Congestion Avoidance stage we use LSS (Limited Slow Start), an increase algorithm that is less aggressive than Slow Start but more aggressive than Congestion Avoidance. We have published our ongoing work on the HyStart algorithm as an IETF draft adopted by the TCPM working group: HyStart++: Modified Slow Start for TCP (ietf.org).
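
The delay-based exit and the gentler LSS growth can be sketched roughly as follows. The constants (a threshold of one eighth of the RTT clamped to 4 to 16 ms, and a divisor of 0.25) and all function names are assumptions loosely based on the IETF draft, not a description of the shipped Windows code.

```python
MSS = 1460            # assumed maximum segment size in bytes
MIN_ETA_MS = 4.0      # assumed lower clamp for the delay threshold
MAX_ETA_MS = 16.0     # assumed upper clamp for the delay threshold
LSS_DIVISOR = 0.25    # assumed LSS scaling constant


def delay_threshold_ms(last_round_min_rtt_ms: float) -> float:
    """RTT increase that counts as a congestion signal for this round."""
    return min(MAX_ETA_MS, max(MIN_ETA_MS, last_round_min_rtt_ms / 8))


def should_exit_slow_start(last_round_min_rtt_ms: float,
                           current_round_min_rtt_ms: float) -> bool:
    """True when the minimum RTT of this round rose by at least the threshold."""
    eta = delay_threshold_ms(last_round_min_rtt_ms)
    return current_round_min_rtt_ms >= last_round_min_rtt_ms + eta


def lss_increase(cwnd: int, ssthresh: int) -> int:
    """Grow cwnd per ACK more slowly than Slow Start once the exit has triggered."""
    k = cwnd / (LSS_DIVISOR * ssthresh)
    return cwnd + int(MSS / k)
```

With these assumed constants, a window equal to `ssthresh` grows by a quarter of a segment per ACK: slower than Slow Start's full segment, but faster than Congestion Avoidance at the same window size. If the delay signal was a false positive caused by jitter, the connection still ramps up reasonably quickly.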

Loss recovery performance: Proportional Rate Reduction

HyStart helps prevent the overshoot problem so that we enter loss recovery in Slow Start with fewer packet losses. However, loss recovery itself can cause additional packet loss if we retransmit in large bursts. Proportional Rate Reduction (PRR) is a loss recovery algorithm that adjusts the number of bytes in flight throughout the entire loss recovery period so that, at the end of recovery, it is as close as possible to the congestion window. We enabled PRR by default in Windows 10 May 2019 Update (19H1).
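
As an illustration, the per-ACK send quota from RFC 6937 (the variant with the slow start reduction bound) can be written as below. Variable names follow the RFC; the function name `prr_sndcnt` is an assumption, and this is a sketch of the published algorithm rather than of the Windows code.

```python
def prr_sndcnt(prr_delivered: int, prr_out: int, recover_fs: int,
               ssthresh: int, pipe: int, delivered_data: int,
               mss: int = 1460) -> int:
    """Bytes the sender may transmit in response to one ACK during recovery.

    prr_delivered: bytes delivered to the receiver since recovery started
    prr_out:       bytes sent since recovery started
    recover_fs:    bytes in flight when recovery started
    pipe:          current estimate of bytes in flight
    """
    if pipe > ssthresh:
        # Proportional part: send ssthresh/recover_fs bytes per byte delivered.
        # Integer ceiling division avoids floating-point rounding.
        target = -(-(prr_delivered * ssthresh) // recover_fs)
        sndcnt = target - prr_out
    else:
        # Reduction bound: catch up towards ssthresh without sending a burst.
        limit = max(prr_delivered - prr_out, delivered_data) + mss
        sndcnt = min(ssthresh - pipe, limit)
    return max(sndcnt, 0)
```

Because the quota is recomputed on every ACK, retransmissions are paced by incoming ACKs instead of being released in one burst when recovery starts.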

Re-implementing TCP RACK: Time-based loss recovery

After implementing PRR and HyStart, we still noticed that we tend to consistently hit an RTO during loss recovery if many packets are lost in one congestion window. After looking at the traces, we figured out that it’s lost retransmits that cause TCP to time out. The RACK implementation shipped in Server 2016 is unable to recover lost retransmits. A fully RFC-compliant RACK implementation (which can recover lost retransmits) requires per-segment state tracking but in Server 2016, per-segment state is not stored.

In Server 2016, we built a simple circular-array based data structure to track the send time of blocks of data in one congestion window. The RACK implementation we had with this data structure has many limitations, including being unable to recover lost retransmits. During the development of Windows 10 May 2020 Update, we built per-segment state tracking for TCP and in Server 2022, we shipped a new RACK implementation which can recover lost retransmits.
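
A minimal sketch of why per-segment state matters, assuming the time-based rule from the RACK-TLP RFC: a segment is deemed lost once a segment sent after it has been delivered and its own send time plus RTT plus a reordering window has passed. The `Segment` class and `detect_losses` function are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    seq: int
    send_time_ms: float          # time of the most recent (re)transmission
    retransmitted: bool = False
    acked: bool = False


def detect_losses(segments: List[Segment], rack_send_time_ms: float,
                  now_ms: float, rtt_ms: float, reo_wnd_ms: float) -> List[int]:
    """Return sequence numbers deemed lost by the time-based rule.

    rack_send_time_ms is the send time of the most recently sent segment
    that is known to have been delivered.
    """
    lost = []
    for seg in segments:
        if seg.acked:
            continue
        sent_before_delivered = seg.send_time_ms < rack_send_time_ms
        deadline_passed = now_ms >= seg.send_time_ms + rtt_ms + reo_wnd_ms
        if sent_before_delivered and deadline_passed:
            lost.append(seg.seq)
    return lost


# Segment 1 was retransmitted at t=100 ms; segment 2, sent at t=110 ms,
# has since been acknowledged, so the retransmission itself can be marked lost.
segs = [Segment(1, 100.0, retransmitted=True), Segment(2, 110.0, acked=True)]
lost_now = detect_losses(segs, rack_send_time_ms=110.0, now_ms=165.0,
                         rtt_ms=50.0, reo_wnd_ms=5.0)
```

Because each segment records the time of its latest transmission, the same rule applies to retransmissions, which is what allows a lost retransmit to be recovered without waiting for an RTO.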

(Note that Tail Loss Probe (TLP) which is part of RACK/TLP RFC and helps recover faster from tail losses is also implemented and enabled by default since Windows Server 2016.)

Improving resilience to network reordering

Last year, Dropbox and Samsung reported to us that Windows TCP had poor upload performance in their networks due to network reordering. We bumped up the priority of reordering resilience, and in the Windows version currently under development we have completed our RACK implementation, which is now fully compliant with the RFC. Dropbox and Samsung confirmed that they no longer observed upload performance problems with this new implementation. You can find how we collaborated with the Dropbox engineers here. In our automated WAN performance tests, we also found that the throughput in reordering test cases improved more than 10x.

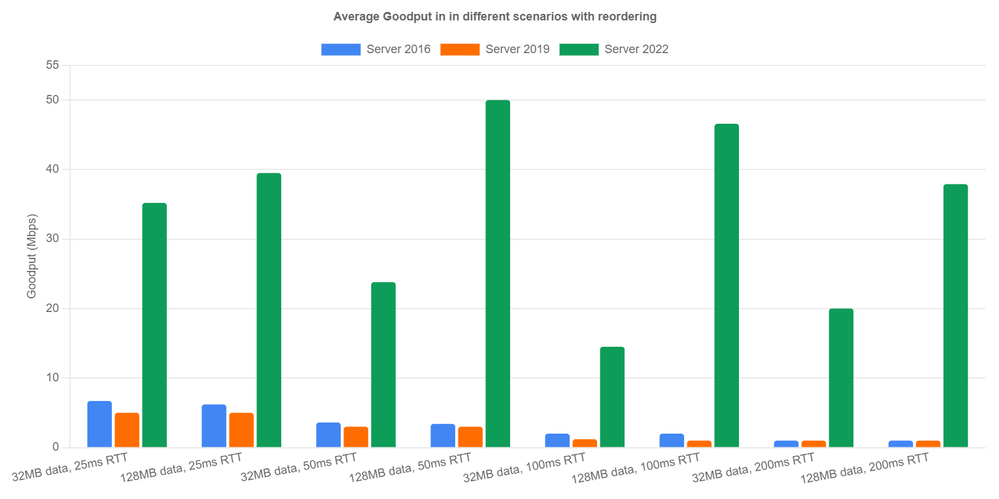

Benchmarks

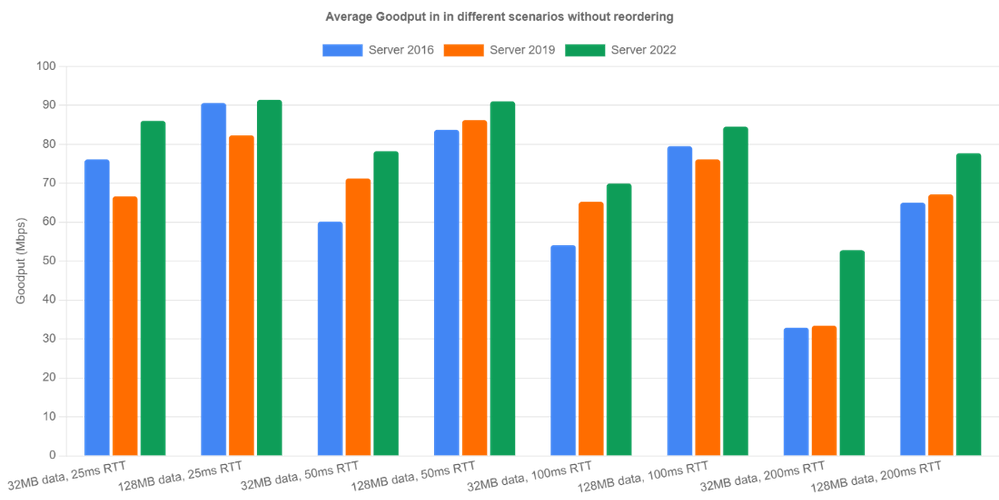

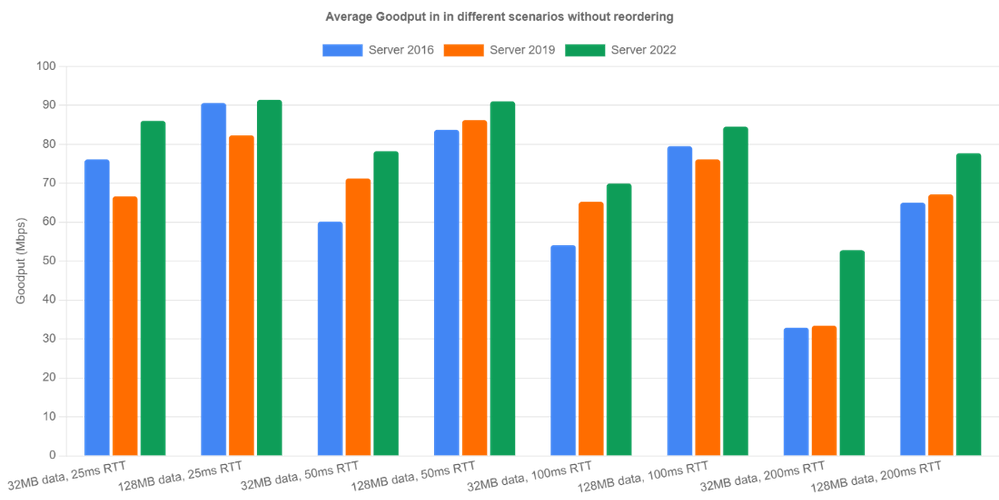

To measure the performance improvements, we set up a WAN environment by creating two NICs on a machine and connecting the two NICs with an emulated link where bandwidth, round trip time, random loss, reordering and jitter can be emulated. We did performance benchmarks on this testbed for Server 2016, Server 2019 and Server 2022 using an A/B testing framework we previously built where you can easily automate testing and data analysis. We used the current Windows build 21359 for Server 2022 in the benchmarks since we plan to backport all TCP perf improvement changes to Server 2022 soon.

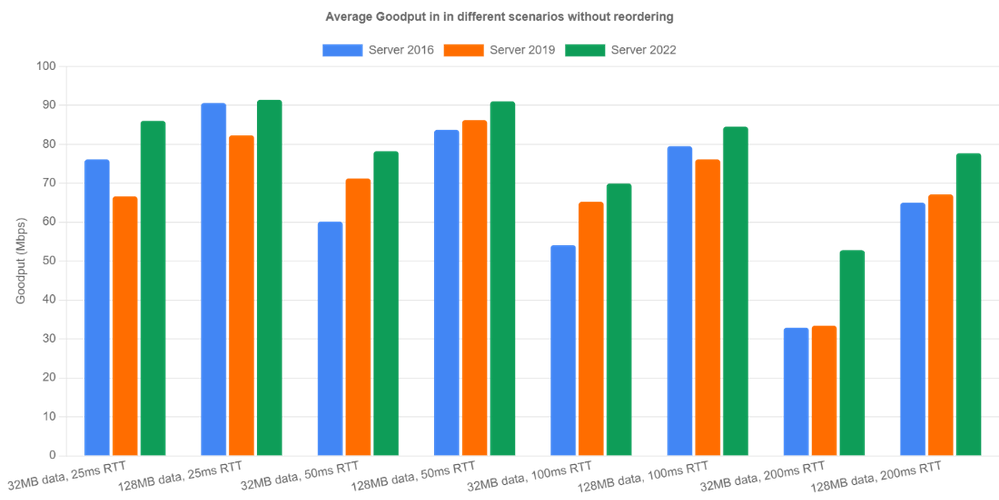

Let’s look at non-reordering scenarios first. We emulated 100Mbps bandwidth and tested the three OS versions under four different round trip times (25ms, 50ms, 100ms, 200ms) and two different flow sizes (32MB, 128MB). The bottleneck buffer size was set to 1 BDP. The results are averaged over 10 iterations.
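
For reference, the bottleneck buffer size of 1 BDP (bandwidth-delay product) for each tested round trip time can be worked out as follows. The helper name `bdp_bytes` is an assumption, and 1 Mbps is taken to mean 10^6 bits per second.

```python
def bdp_bytes(bandwidth_mbps: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes for a link speed and round trip time."""
    bits_per_second = bandwidth_mbps * 1_000_000
    # bits/s * ms / 1000 gives bits in flight; divide by 8 for bytes.
    return bits_per_second * rtt_ms // (8 * 1000)


# Buffer sizes for the four emulated round trip times at 100Mbps.
buffers = {rtt: bdp_bytes(100, rtt) for rtt in (25, 50, 100, 200)}
# buffers == {25: 312500, 50: 625000, 100: 1250000, 200: 2500000}
```

So the emulated bottleneck buffer ranges from roughly 0.3 MB at 25ms to 2.5 MB at 200ms.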

Server 2022 is the clear winner in all categories because RACK significantly reduces RTOs occurring during loss recovery. Goodput is improved by up to 60% (200ms case). Server 2019 did well in relatively high latency cases (>= 50ms). However, for 25ms RTT, Server 2016 outperformed Server 2019. After digging into the traces, we noticed that the Server 2016 receive window tuning algorithm is more conservative than the one in Server 2019 and it happened to throttle the sender, indirectly preventing the overshoot problem.

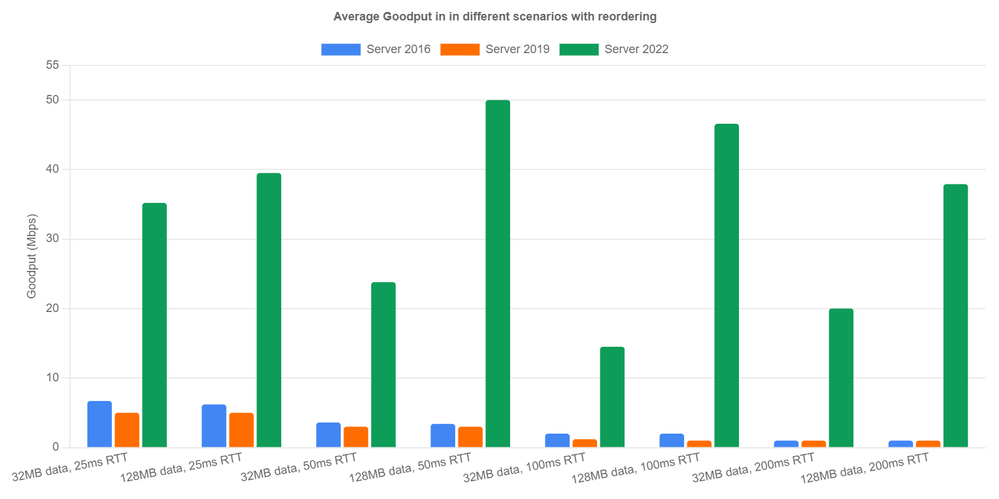

Now let’s look at reordering scenarios. Here’s how we emulate network reordering: we set a probability of reordering per packet. Once a packet is chosen to be reordered, it’s delayed by a specified amount of time instead of the configured RTT. We tested 1% reordering rate and 5ms reordering delay. Server 2016 and Server 2019 achieved extremely low goodput due to lack of reordering resilience. In Server 2022, the new RACK implementation avoided most unnecessary loss recoveries and achieved reasonable performance. We can see goodput is up over 40x in the 128MB with 200ms RTT case. In the other cases, we are seeing at least 5x goodput improvement.
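
The reordering emulation described above can be approximated with a small simulation. This sketch assumes that a packet selected for reordering experiences the reordering delay in place of the normal link delay; the function name, the fixed send gap, and the seeded random generator are all illustrative assumptions, not the actual test harness.

```python
import random
from typing import List


def emulate_reordering(num_packets: int, link_delay_ms: float,
                       reorder_prob: float, reorder_delay_ms: float,
                       send_gap_ms: float = 1.0, seed: int = 0) -> List[int]:
    """Return packet sequence numbers in the order they arrive."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    arrivals = []
    for seq in range(num_packets):
        # Each packet is independently chosen for reordering.
        chosen = rng.random() < reorder_prob
        delay = reorder_delay_ms if chosen else link_delay_ms
        arrivals.append((seq * send_gap_ms + delay, seq))
    arrivals.sort()  # order by arrival time, ties broken by sequence number
    return [seq for _, seq in arrivals]


arrival_order = emulate_reordering(num_packets=1000, link_delay_ms=25.0,
                                   reorder_prob=0.01, reorder_delay_ms=5.0)
```

Even a 1% reordering rate produces out-of-order arrivals that a sender without reordering resilience can mistake for loss, triggering unnecessary loss recovery.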

Next Steps

We have come a long way in improving Windows TCP performance on the Internet. However, there are still several issues that we will need to solve in future releases.

- We are unable to measure specific performance improvements from PRR in the A/B tests. This needs more investigation.

- We have found issues with HyStart++ in networks with jitter. So we are working on making the algorithm more resilient to jitter.

- The reassembly queue limit (the maximum number of discontiguous data blocks allowed in the receive queue) turns out to be another factor that affects our WAN performance. After this limit is reached, the receiver discards any subsequent out-of-order data segments until in-order data fills the gaps. When these segments are discarded, the receiver can only send back SACKs that carry no new information, which makes the sender stall.
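
The effect of the reassembly queue limit in the last item can be sketched with a toy receiver that numbers segments 0, 1, 2, ... and counts runs of consecutive buffered segments as blocks. The names `count_blocks` and `receive` are hypothetical, and the real receive path is considerably more involved.

```python
from typing import List, Set, Tuple


def count_blocks(buffered: Set[int]) -> int:
    """Number of discontiguous runs of consecutive sequence numbers."""
    return sum(1 for s in buffered if s - 1 not in buffered)


def receive(arrivals: List[int], max_blocks: int) -> Tuple[int, List[int], List[int]]:
    """Return (next expected seq, buffered out-of-order seqs, discarded seqs)."""
    next_expected = 0
    buffered: Set[int] = set()
    discarded: List[int] = []
    for seq in arrivals:
        if seq < next_expected or seq in buffered:
            continue  # duplicate, nothing to do
        if seq == next_expected:
            next_expected += 1
            # In-order data fills a gap: drain any buffered data that follows.
            while next_expected in buffered:
                buffered.remove(next_expected)
                next_expected += 1
        elif count_blocks(buffered | {seq}) <= max_blocks:
            buffered.add(seq)
        else:
            # Limit reached: drop the segment; the sender must resend it later.
            discarded.append(seq)
    return next_expected, sorted(buffered), discarded


result = receive([2, 4, 6, 8, 0, 1], max_blocks=2)
# result == (3, [4], [6, 8])
```

In this run, segments 6 and 8 are dropped because two blocks are already queued, so the sender has to retransmit data that actually reached the receiver.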

— Windows TCP Dev Team (Matt Olson, Praveen Balasubramanian, Yi Huang)
