by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

Microsoft engineering teams have been busy acting on your feedback, resulting in a plethora of updates. Microsoft service updates in the news include webinar capabilities in Microsoft Teams, Azure IoT Central feature updates, enabling Azure Site Recovery (ASR) while creating Azure Virtual Machines, Attribute-based Access Control (ABAC) in Azure Storage, and the Bicep-focused Microsoft Learn Module of the Week.

Full-featured webinars for Microsoft Teams

This week, Microsoft announced that Webinars and PowerPoint Live are beginning to roll out in Microsoft Teams, and Presenter mode will begin to roll out later in May. With these capabilities, you have new ways to deliver polished, professional presentations in meetings of all sizes, from small internal meetings to large customer-facing webinars and events, all from a single application.

Learn more about how to use these new capabilities in Microsoft Teams via the following guide: Introduction to Webinars in Microsoft Teams

Azure IoT Central new and updated features for April 2021

Microsoft has announced that the IoT Central API service is now generally available and can be accessed through the production v1.0 endpoint. These APIs and the breadth of the IoT Central extensibility surface can now be used to develop production-ready solutions.

Following customer feedback, Microsoft iterated on its API surface and invested in new capabilities, including the ability to:

- Manage API tokens.

- Create and manage DTDLv2 device templates.

- Create, onboard, and manage devices.

- List the user roles in your application.

- Add, update, and remove users.
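As a rough illustration of calling the now-GA v1.0 endpoint, here is a minimal Java 11+ sketch that lists devices with java.net.http. It assumes the URL shape https://<subdomain>.azureiotcentral.com/api/devices?api-version=1.0 and that an API token generated in your IoT Central application is sent as the Authorization header. The subdomain, token, and environment variable names are placeholders, so check the details against the IoT Central REST API reference before relying on them.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class IotCentralDevices {
    // Builds a GET request for the device list on the v1.0 (GA) endpoint.
    // appSubdomain is the "<subdomain>" part of <subdomain>.azureiotcentral.com.
    static HttpRequest listDevicesRequest(String appSubdomain, String apiToken) {
        URI uri = URI.create("https://" + appSubdomain + ".azureiotcentral.com/api/devices?api-version=1.0");
        return HttpRequest.newBuilder(uri)
                .header("Authorization", apiToken) // API token generated in the IoT Central app
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical environment variable names; use whatever secret storage you prefer.
        String subdomain = System.getenv("IOTC_APP_SUBDOMAIN");
        String token = System.getenv("IOTC_API_TOKEN");
        if (subdomain == null || token == null) {
            System.err.println("Set IOTC_APP_SUBDOMAIN and IOTC_API_TOKEN first.");
            return;
        }
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(listDevicesRequest(subdomain, token), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body()); // JSON payload describing the devices
    }
}
```

Keeping request construction in its own method makes it easy to reuse the same pattern for the other operations listed above, such as managing users or device templates.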

This update also adds the ability to create a link containing a device ID parameter that populates a device dashboard, support for components in Edge modules, and improved diagnosis of device health and connectivity via device connectivity events.

Further details surrounding the IoT Central April 2021 update can be found here: General availability: Azure IoT Central new and updated features—April 2021

Enable Azure Site Recovery (ASR) while creating Azure Virtual Machines

Microsoft recently announced a new capability in Azure Site Recovery (ASR) to further improve the Business Continuity and Disaster Recovery (BCDR) posture of Azure VMs – in-line enablement of ASR at the time of VM creation. This capability helps you avoid the hassle of separately configuring DR across regions (or zones) after the creation of VMs.

Please note that this offering is currently limited to Windows VMs and to CentOS, Oracle Linux, and Red Hat Linux VMs. Microsoft also does not currently support zone-to-zone (in-region) disaster recovery through the Create VM workflow. Support for the remaining Linux distributions and for zone-to-zone disaster recovery is coming soon.

Learn more about how to create a Windows VM or a Linux VM via the portal.

Azure Storage: Attribute-based Access Control (ABAC) now in public preview

Attribute-based access control (ABAC) is an authorization strategy that defines access levels based on attributes associated with security principals, resources, requests, and the environment. Azure ABAC builds on role-based access control (RBAC) by adding conditions to Azure role assignments in the existing identity and access management (IAM) system. This preview includes support for role assignment conditions on Blobs and ADLS Gen2, and enables you to author conditions based on resource and request attributes.

Role-assignment conditions enable finer-grained access control for storage resources. They can also be used to simplify hundreds of role assignments for a storage resource. The preview enables you to author conditions for storage DataActions, and can be used with built-in or custom roles.
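To make "conditions on top of role assignments" concrete, here is a toy Java model, not Azure's evaluation engine or condition syntax. It assumes a role assignment grants a set of DataActions, and a condition targets some of those actions and checks request or resource attributes (for example, a blob index tag). The class names and the "Project" tag are hypothetical; only the DataAction strings mirror real Azure Storage actions.

```java
import java.util.Map;
import java.util.Set;
import java.util.function.Predicate;

// Toy model only: it mimics the idea of a role assignment condition, not Azure's evaluation engine.
final class ToyAbac {
    // A data-plane request: which DataAction is attempted, plus attributes such as blob index tags.
    static final class Request {
        final String dataAction;
        final Map<String, String> attributes;

        Request(String dataAction, Map<String, String> attributes) {
            this.dataAction = dataAction;
            this.attributes = attributes;
        }
    }

    // A role assignment: the DataActions the role grants, the subset a condition targets, and the condition.
    static final class RoleAssignment {
        final Set<String> grantedActions;
        final Set<String> conditionedActions;
        final Predicate<Request> condition;

        RoleAssignment(Set<String> grantedActions, Set<String> conditionedActions, Predicate<Request> condition) {
            this.grantedActions = grantedActions;
            this.conditionedActions = conditionedActions;
            this.condition = condition;
        }

        boolean permits(Request request) {
            // RBAC: the role must grant the action in the first place.
            if (!grantedActions.contains(request.dataAction)) return false;
            // Actions the condition does not target are allowed just as plain RBAC would allow them.
            if (!conditionedActions.contains(request.dataAction)) return true;
            // ABAC: targeted actions are allowed only when the attribute check passes.
            return condition.test(request);
        }
    }
}
```

A single assignment with a condition such as "read only blobs tagged Project=Cascade" can stand in for many narrowly scoped assignments. This is the simplification described above.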

Learn more about testing the Attribute-based Access Control (ABAC) preview here: Authorize access to blobs using Azure role assignment conditions (preview)

Community Events

MS Learn Module of the Week

Introduction to infrastructure as code using Bicep

This module will enable you to describe the benefits of using infrastructure as code, Azure Resource Manager, and Bicep to quickly and confidently scale your cloud deployments, and to determine the types of deployments for which Bicep is a good declarative deployment tool.

Learning objectives

After completing this module, you’ll be able to:

- Describe the benefits of infrastructure as code.

- Describe the difference between declarative and imperative infrastructure as code.

- Explain what Bicep is, and how it fits into an infrastructure as code approach.
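The declarative-versus-imperative distinction can be sketched in a few lines of Java. This is a toy model under simplifying assumptions, not how Azure Resource Manager or Bicep is implemented. It only models whether a resource exists, while a real deployment also reconciles resource properties. The resource names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Toy model contrasting the two styles; not how Azure Resource Manager is implemented.
final class IacStyles {
    // Imperative: the script lists every command, and rerunning it repeats every command.
    static List<String> imperativeScript() {
        return List.of("create storageAccount", "create webApp");
    }

    // Declarative: you describe the desired resources; the tool compares them with what
    // already exists and plans only the missing work, so rerunning it is a no-op.
    static List<String> declarativePlan(Set<String> desired, Set<String> existing) {
        List<String> steps = new ArrayList<>();
        for (String resource : new TreeSet<>(desired)) { // sorted for a stable order
            if (!existing.contains(resource)) steps.add("create " + resource);
        }
        return steps;
    }
}
```

Running the declarative plan a second time against the resources it just created yields an empty plan. That repeatability is the main benefit a declarative tool such as Bicep offers over a hand-written sequence of commands.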

Learn more here: Introduction to infrastructure as code using Bicep

Let us know in the comments below if there are any news items you would like to see covered in the next show. Be sure to catch the next AzUpdate episode and join us in the live chat.

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

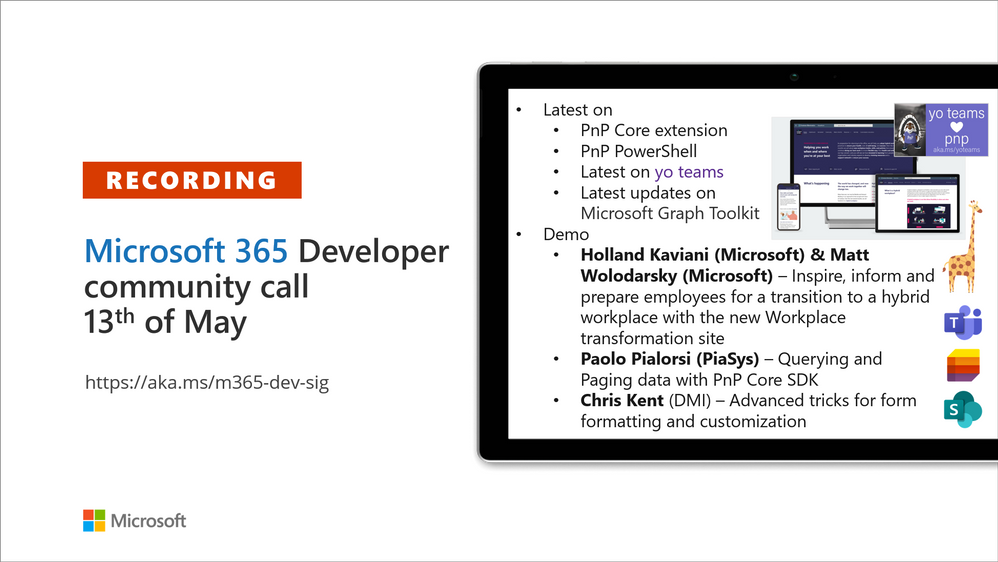

Recording of the Microsoft 365 – General M365 development Special Interest Group (SIG) community call from May 13, 2021.

Call Summary

Latest news from Microsoft 365 engineering and updates on open-source projects: PnP .NET libraries, PnP PowerShell, modernization tooling, yo Teams, Microsoft Graph Toolkit, and Microsoft Teams Samples.

Check out the new Microsoft 365 Extensibility look book gallery, visit the Microsoft Teams samples gallery to get started with Microsoft Teams development, and register now for the May Sharing is Caring trainings. Open-source project activity is focused on preparing for May releases in the Microsoft Build time frame.

Open-source project status:

| Project | Current Version | Release/Status |
|---|---|---|
| PnP .NET Libraries – PnP Framework | v1.4.0 | Bug fixes, prepping for v1.5.0 (May) |
| PnP .NET Libraries – PnP Core SDK | v1.1.0 | Bug fixes, prepping for v1.2.0 (May) |
| PnP PowerShell | v1.5.0 (just added cmdlets for Viva Connections and Syntex) | Prepping for v1.6.0 (May) |
| Yo teams – generator-teams | v3.0.3 GA, v3.1.0 Preview | Preview with Viva Connections support |
| Yo teams – yoteams-build-core | v1.1.0 | |
| Yo teams – msteams-react-base-component | v3.1.0 | |
| Microsoft Graph Toolkit (MGT) | v2.1.0 GA, v2.2.0 Preview | v2.2.0 planned Build release |

Additionally, one new Teams sample was delivered. The host of this call was David Warner II (Catapult Systems) | @DavidWarnerII. Q&A takes place in chat throughout the call.

Actions:

- Register for Sharing is Caring Events:

- First Time Contributor Session – May 24th (EMEA, APAC & US friendly times available)

- Community Docs Session – May

- PnP – SPFx Developer Workstation Setup – June

- PnP SPFx Samples – Solving SPFx version differences using Node Version Manager – May 20th

- AMA (Ask Me Anything) – Microsoft Graph & MGT – June

- AMA (Ask Me Anything) – Microsoft Teams Dev – June

- First Time Presenter – May 25th

- More than Code with VSCode – May 27th

- Maturity Model Practitioners – May 18th

- PnP Office Hours – 1:1 session – Register

- Download the recurrent invite for this call – http://aka.ms/m365-dev-sig

- Call attention to your great work by using the #PnPWeekly on Twitter.

Microsoft Teams Development Samples: (https://aka.ms/TeamsSampleBrowser)

Great to see all the faces in the community. Hopefully soon we will get to see each other in person.

Demos delivered in this session

Inspire, inform and prepare employees transition to the new hybrid Workplace transformation site – learn about the customizable SharePoint Hybrid Workplace site template (in the look book) and new end-user training (available on support.microsoft.com and in Microsoft 365 Learning Pathways playlists) to help customers and colleagues transition to a new way of working. The articles offer insights based on customer conversations and research by Microsoft. The training categories (playlists) are meetings & collaboration, wellness and productivity, and security & data protection.

Querying and Paging data with PnP Core SDK – after downloading the PnP.Core.Auth package and installing and configuring the needed services, obtain a PnPContext from the PnPContextFactory and start using the library. There are multiple options for querying data. The PnP Core SDK query model offers the Load* methods, the Get* methods, LINQ, and nested/hierarchical queries. The paging model offers implicit asynchronous paging (preferred), full load of data/synchronous implicit paging, and manual paging with Take/Skip.
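PnP Core SDK is a .NET library, so the Java below does not use its API. It is only a language-neutral analog of the two paging styles mentioned in the demo. The first style is manual paging, where the caller asks for a page with skip/take. The second is implicit paging, where the caller simply iterates and pages are fetched lazily behind the scenes. The PagingToy class and its fetchPage callback are hypothetical.

```java
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.function.BiFunction;

final class PagingToy {
    // Manual paging: the caller requests one page at a time using skip/take.
    static <T> List<T> page(List<T> all, int skip, int take) {
        int from = Math.min(skip, all.size());
        int to = Math.min(from + take, all.size());
        return all.subList(from, to);
    }

    // Implicit paging: the caller just iterates; pages are fetched on demand.
    // fetchPage receives (skip, take) and returns at most `take` items.
    static <T> Iterable<T> implicitlyPaged(BiFunction<Integer, Integer, List<T>> fetchPage, int pageSize) {
        return () -> new Iterator<T>() {
            private List<T> current = List.of();
            private int indexInPage = 0;
            private int skip = 0;
            private boolean exhausted = false;

            @Override
            public boolean hasNext() {
                if (indexInPage < current.size()) return true;
                if (exhausted) return false;
                current = fetchPage.apply(skip, pageSize); // fetch the next page lazily
                skip += current.size();
                indexInPage = 0;
                if (current.size() < pageSize) exhausted = true; // a short page means no more data
                return !current.isEmpty();
            }

            @Override
            public T next() {
                if (!hasNext()) throw new NoSuchElementException();
                return current.get(indexInPage++);
            }
        };
    }
}
```

The implicit style keeps calling code as simple as a plain loop, which is presumably why the demo marks the asynchronous implicit variant as preferred. Manual skip/take is useful when the UI itself is page-based.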

Advanced tricks for form formatting and customization – some items in a list should not show up on a form. You can delete, hide, or conditionally show values (based on entries in other fields) on a form. Column formatting and form formatting are vastly different. Learn ways to detect which values are in the list versus which values are on the form, and also how to create read-only sections on a form.

Thank you for your work. Samples are often showcased in Demos.

Topics covered in this call

- PnP .NET library updates – Paolo Pialorsi (PiaSys.com) @paolopia – 4:45

- PnP PowerShell updates – Paolo Pialorsi (PiaSys.com) @paolopia – 6:35

- yo Teams updates – Paolo Pialorsi (PiaSys.com) @paolopia – 7:24

- Microsoft Graph Toolkit updates – Beth Pan (Microsoft) | @beth_panx – 8:34

- Microsoft Teams Samples – Bob German (Microsoft) @Bob1German – 9:46

Demo: Inspire, inform and prepare employees transition to the new hybrid Workplace transformation site – Holland Kaviani (Microsoft) & Matt Wolodarsky (Microsoft) | @mwolodarsky – 12:34

Demo: Querying and Paging data with PnP Core SDK – Paolo Pialorsi (PiaSys) | @PaoloPia – 25:21

Demo: Advanced tricks for form formatting and customization – Chris Kent (DMI) | @theChrisKent – 43:22

Resources:

Additional resources around the covered topics and links from the slides.

General resources:

Upcoming Calls | Recurrent Invites:

General Microsoft 365 Dev Special Interest Group bi-weekly calls are targeted at anyone who’s interested in general Microsoft 365 development topics, including Microsoft Teams, bots, Microsoft Graph, CSOM, REST, site provisioning, PnP PowerShell, PnP Sites Core, Site Designs, Microsoft Flow, PowerApps, column formatting, list formatting, and more. More details on the Microsoft 365 community are available at http://aka.ms/m365pnp. We also welcome community demos, so let us know if you are interested in doing a live demo in these calls!

You can download the recurrent invite from http://aka.ms/m365-dev-sig. Welcome, and join in the discussion. If you have any questions, comments, or feedback, feel free to provide your input as comments to this post as well. More details on the Microsoft 365 community and options to get involved are available from http://aka.ms/m365pnp.

“Sharing is caring”

Microsoft 365 PnP team, Microsoft – 14th of May 2021

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

One of the major limitations of multi-tenant Logic Apps was their inability to integrate with private resources (resources that are behind a firewall and/or deny public connections). An integration service environment (ISE) was one way to achieve this, but using an ISE comes with certain limitations of its own, which is a topic for a different discussion.

Let’s see how to integrate a single-tenant logic app with private resources.

- Create a single-tenant logic app and a workflow. I am using an HTTP trigger to start the workflow.

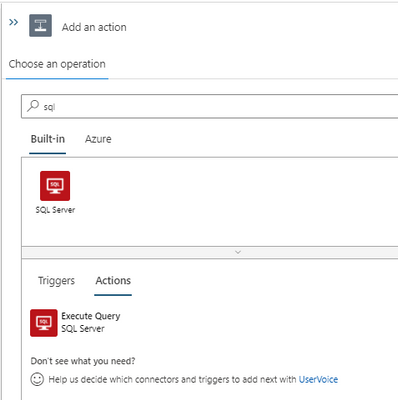

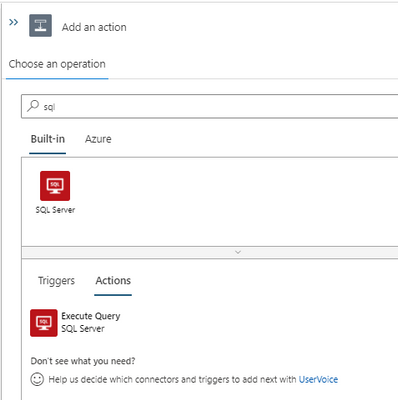

Add an action and search for SQL. We can see two connector options: Built-in and Azure.

A private connection is possible only with the built-in actions. If we use the Azure action, we have to allow the connector’s (public) outbound IP ranges through the destination system’s firewall.

At the moment, there is only one built-in SQL action: ‘Execute SQL query’. More built-in actions are expected to be added in the future.

- To create a built-in SQL connection, we can use a connection string. We can obtain the connection string from the SQL database and add the password to create the connection. For this example, I use a SELECT query against one of the tables in the SQL database. I then add a Response action to complete the workflow design, using the result of the SQL action in the response via Dynamic content.

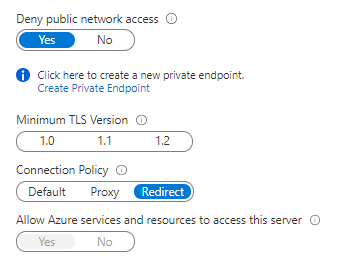

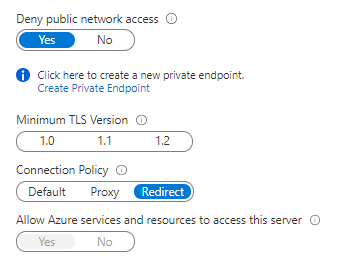

If the SQL server is currently not behind a firewall and the ‘Allow Azure services and resources to access this server’ flag is set to Yes, we can test the logic app and make sure it is working.

Let’s trigger the logic app from an API testing tool (like Postman) using the HTTP URL that is generated when the workflow is saved. I tested it and got a response containing the SQL query results.

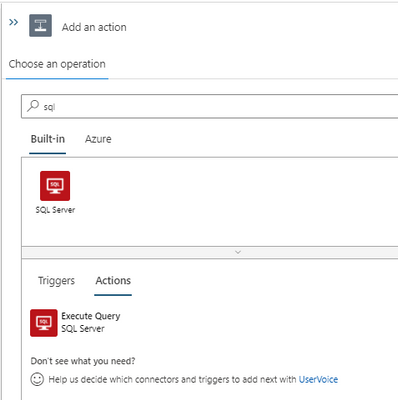

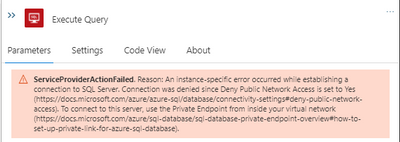
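If you prefer to trigger the workflow from code rather than Postman, a minimal Java 11+ sketch might look like the following. It assumes the trigger accepts a POST with a JSON body (the usual default for the HTTP request trigger) and that you pass the full callback URL, including its signature query string, as the first argument. The class name and the empty "{}" body are illustrative.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class TriggerWorkflow {
    // Builds a POST to the workflow's HTTP trigger URL (copied from the designer after saving).
    static HttpRequest triggerRequest(String callbackUrl, String jsonBody) {
        return HttpRequest.newBuilder(URI.create(callbackUrl))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.err.println("usage: TriggerWorkflow <callback-url>");
            return;
        }
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(triggerRequest(args[0], "{}"), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode()); // status set by the Response action
        System.out.println(response.body());       // body set by the Response action (the SQL results here)
    }
}
```

Treat the callback URL as a secret, since its signature is what authorizes the call.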

If the SQL server is already behind a firewall and/or denies public connections, the workflow will not be able to connect as things stand. We need to create a private endpoint for the Azure SQL server that the logic app workflow connects to.

Without a private endpoint, we get the following error in the workflow:

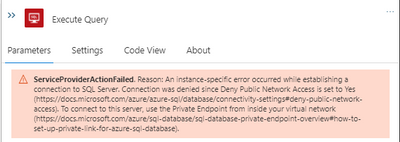

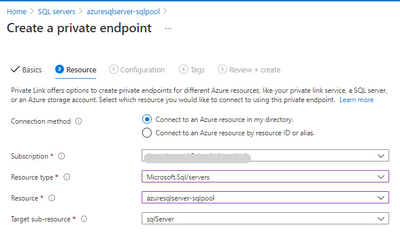

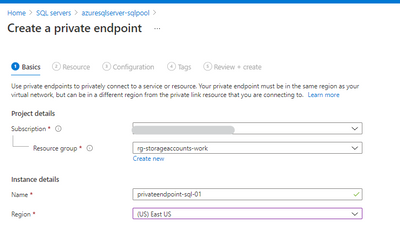

- Let’s create a private endpoint in the SQL server.

A private endpoint enables connectivity between consumers in the same VNet, regionally peered VNets, globally peered VNets, and on-premises networks (using VPN or ExpressRoute) and services powered by Private Link.

Reference: https://docs.microsoft.com/en-us/azure/private-link/private-endpoint-overview

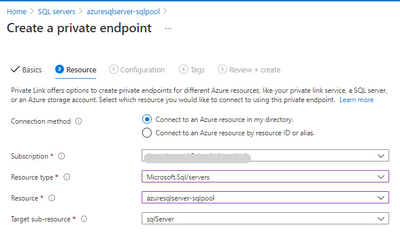

Select the resource.

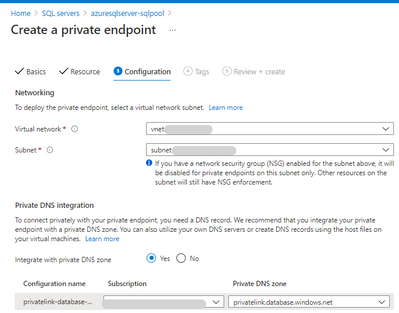

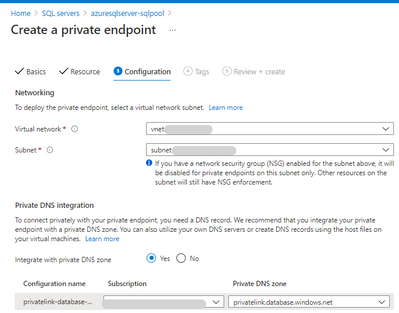

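Next, choose a virtual network and subnet for the private endpoint. We need to integrate the private endpoint with a private DNS zone so that the SQL server’s host name resolves to the endpoint’s private IP address. The logic app also has to be able to reach that virtual network (for example, through VNet integration on the single-tenant logic app) so that its outbound calls use the private endpoint.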
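One way to confirm the private DNS zone is working is to check what the SQL server’s host name resolves to from inside the virtual network (for example, from a VM in the same VNet). The Java sketch below is a hypothetical helper, not part of the original walkthrough. It resolves a name and reports whether every address falls in a private (RFC 1918) range, which is what you would expect once the name maps to the private endpoint. The server name is a placeholder.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

final class PrivateEndpointDnsCheck {
    // True if the name resolves and every address is in 10/8, 172.16/12 or 192.168/16
    // (InetAddress.isSiteLocalAddress covers exactly those IPv4 ranges).
    static boolean allPrivate(String host) {
        try {
            InetAddress[] addresses = InetAddress.getAllByName(host);
            for (InetAddress a : addresses) {
                if (!a.isSiteLocalAddress()) return false;
            }
            return addresses.length > 0;
        } catch (UnknownHostException e) {
            return false; // the name did not resolve at all
        }
    }

    public static void main(String[] args) {
        // Placeholder server name; pass your own as the first argument.
        String host = args.length > 0 ? args[0] : "yourserver.database.windows.net";
        System.out.println(host + (allPrivate(host) ? " resolves to private addresses" : " does not resolve privately"));
    }
}
```

Run from outside the VNet, the same name normally still resolves to the public address, which is expected.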

- After the private endpoint is created, let’s deny the public network access.

Test the logic app again. The workflow can now reach the private SQL server through the private endpoint. Please let me know your questions or thoughts via the comments below.

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

One of my favorite features of Azure Static Web Apps (SWA), now generally available, is that in the Standard tier you can provide a custom OpenID Connect (OIDC) provider. This gives you a lot more control over who can and can’t access your app.

In this post, I want to look at how we can use Okta as an OIDC provider for Static Web Apps.

For this, you’ll need an Okta account, so if you don’t already have one go sign up and maybe have a read of their docs, just so you’re across everything.

Creating a Static Web App

For this demo, we’ll use the React template, but what we’re covering isn’t specific to React, it’ll be applicable anywhere.

Once you’ve created your app, we’re going to need to set up a configuration file, so add staticwebapp.config.json to the repo root.

This config file is used for controlling a lot of things within our SWA, but the most important part for us is going to be the auth section. Let’s flesh out the skeleton for it:

```json
{
  "auth": {
    "identityProviders": {
      "customOpenIdConnectProviders": {}
    }
  }
}
```

Great! Now it’s time to set up Okta.

Creating an Okta application

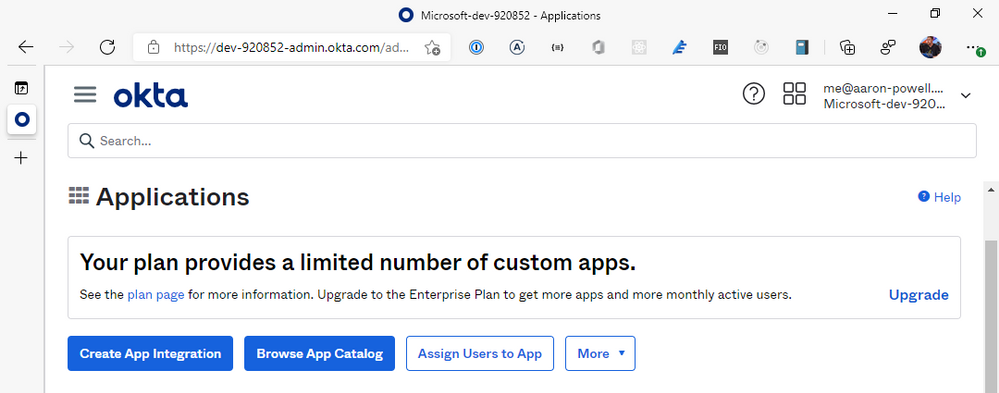

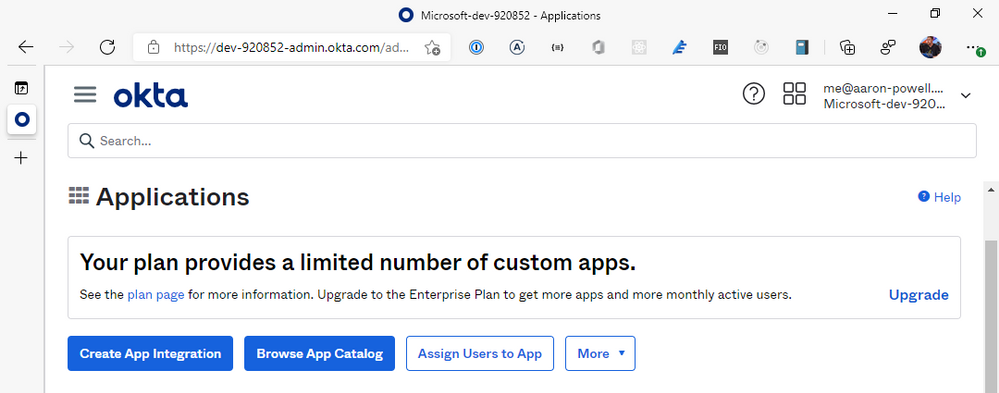

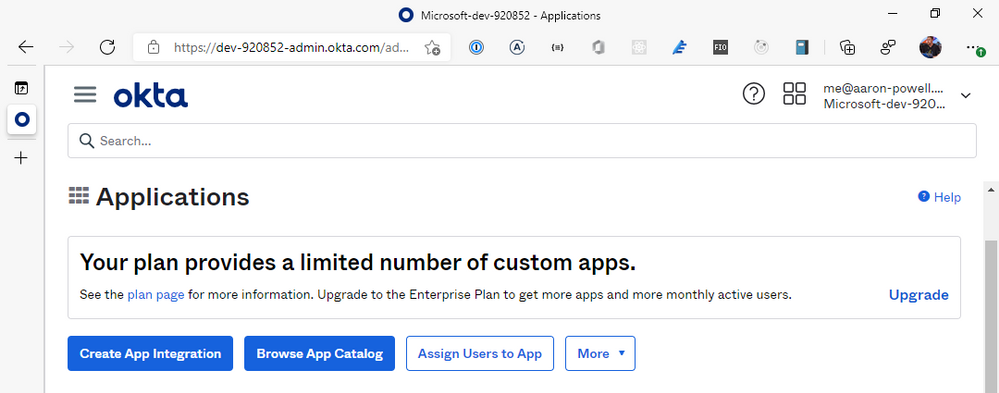

Log into the Okta dashboard and navigate through to the Applications section of the portal:

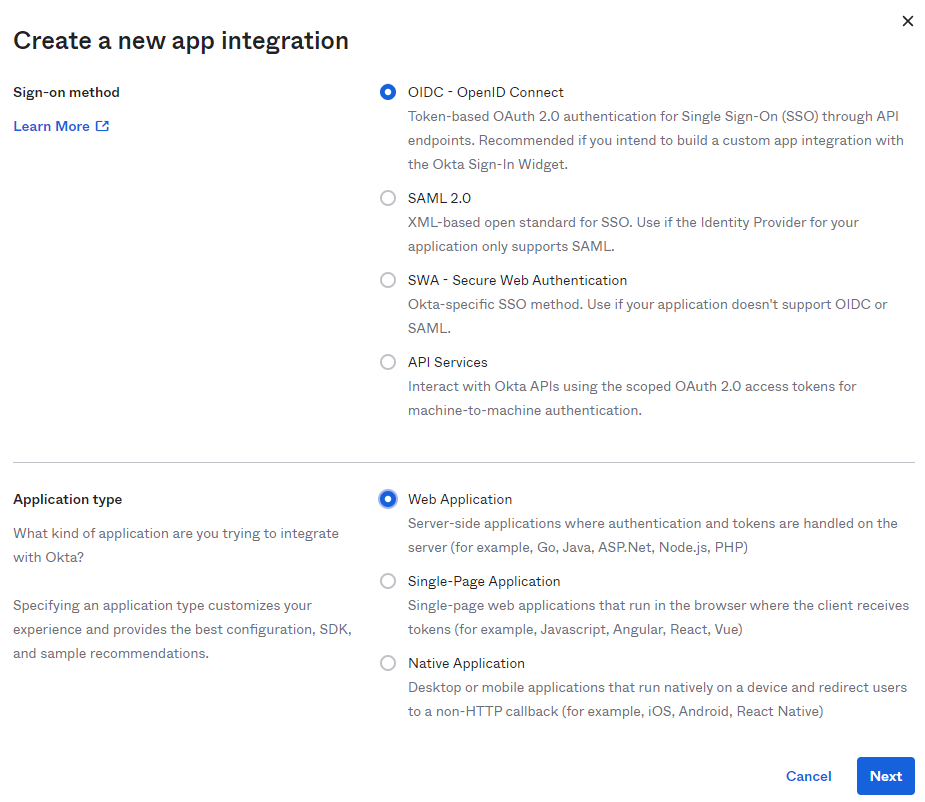

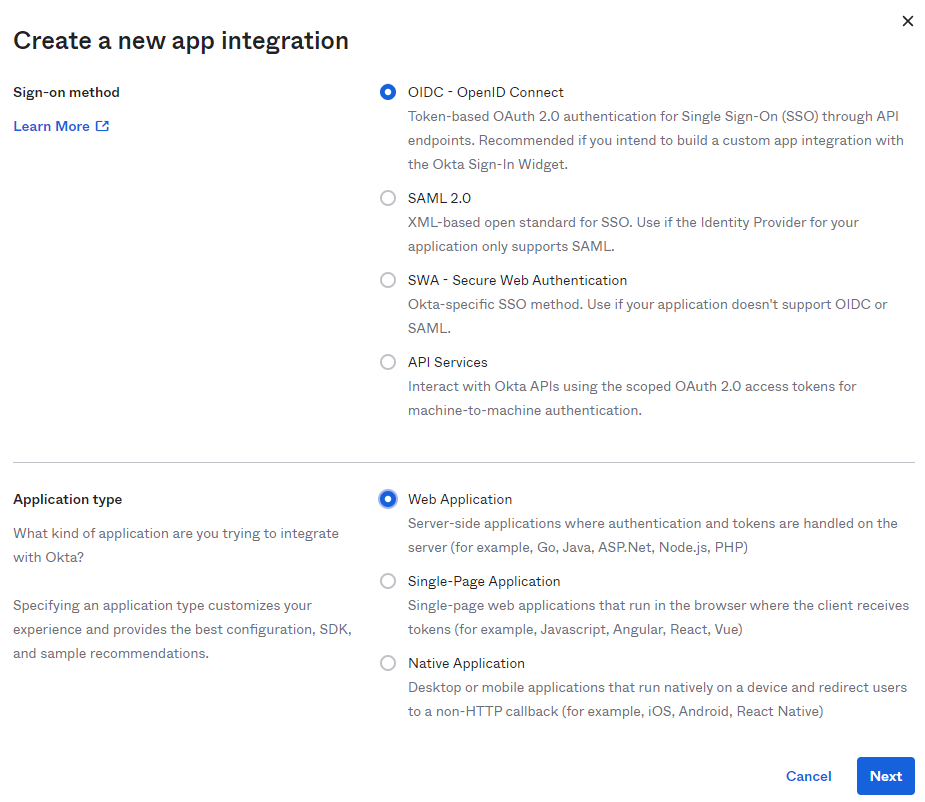

From here, we’re going to select Create App Integration and select OIDC – OpenID Connect for the Sign-on method and Web Application as the Application type. You might be tempted to select the SPA option, given that we’re creating a JavaScript web application, but we don’t use it because SWA’s auth isn’t handled by your application itself; it’s handled by the underlying Azure service, which is a “web application” that then exposes the information you need.

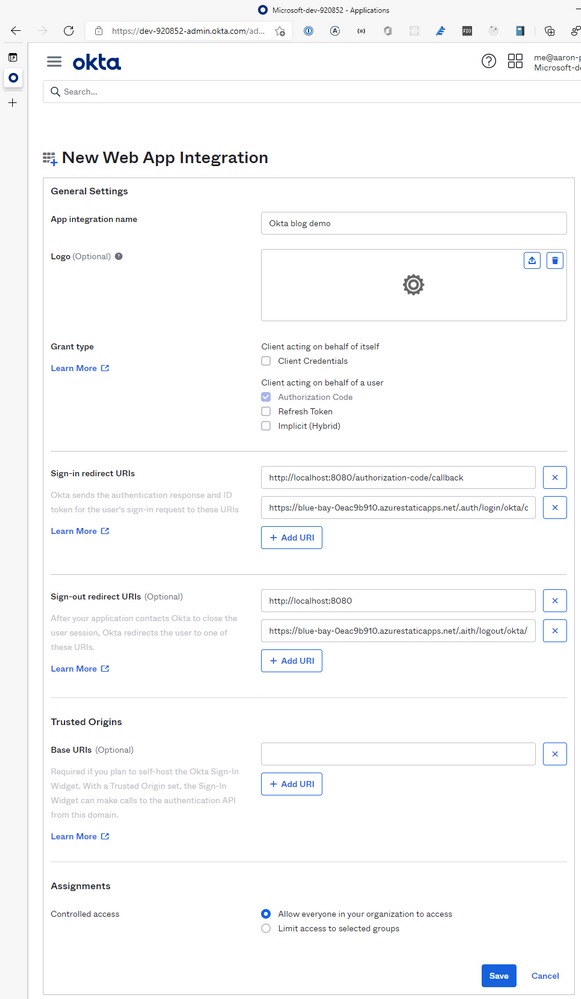

With your application created, it’s time to configure it. Give it a name (something that’ll make sense when you see it in the list of Okta applications) and a logo if you desire, but leave the Grant type information alone; the defaults work just fine for us.

We are going to need to provide the application with some redirect options for login/logout, so that SWA will know you’ve logged in and can unpack the basic user information.

For the Sign-in redirect URIs you will need to add https://<hostname>/.auth/login/okta/callback, and for Sign-out redirect URIs add https://<hostname>/.auth/logout/okta/callback. If you haven’t yet deployed to Azure, don’t worry about this step yet; we’ll do it once the SWA is created.

Quick note – the okta value here is going to be how we name the provider in the staticwebapp.config.json, so it can be anything you want, I just like to use the provider name so the config is easy to read.

Click Save, and it’s time to finish off our SWA config file.

Completing our settings

With our Okta application set up, it’s time to complete our config file so it can use it. We’ll add a new configuration under customOpenIdConnectProviders for Okta, and it’ll contain two core pieces of information: how to register the OIDC provider, and login information on how to talk to the provider.

Inside registration, we’ll add a clientIdSettingName field, which will point to an entry in the app settings that the SWA has. Next, we’ll need a clientCredential object with a clientSecretSettingName that points to the app settings entry for the OIDC client secret. Lastly, we’ll provide the openIdConnectConfiguration with a wellKnownOpenIdConfiguration endpoint, which is https://<your_okta_domain>/.well-known/openid-configuration.

The config should now look like this:

```json
{
  "auth": {
    "identityProviders": {
      "customOpenIdConnectProviders": {
        "okta": {
          "registration": {
            "clientIdSettingName": "OKTA_ID",
            "clientCredential": {
              "clientSecretSettingName": "OKTA_SECRET"
            },
            "openIdConnectConfiguration": {
              "wellKnownOpenIdConfiguration": "https://dev-920852.okta.com/.well-known/openid-configuration"
            }
          }
        }
      }
    }
  }
}
```

I use OKTA_ID and OKTA_SECRET as the names of the items I’ll be putting into app settings.
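A quick way to catch a typo in wellKnownOpenIdConfiguration (such as a doubled slash) before deploying is to build the URL from your Okta domain and fetch it. The Java 11+ sketch below is a hypothetical helper. It assumes the org-level discovery document lives at https://<domain>/.well-known/openid-configuration, as in the config above. A 200 response with a JSON document (issuer, authorization_endpoint, and so on) suggests the URL is right.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class WellKnownUrl {
    // Builds https://<domain>/.well-known/openid-configuration, tolerating a scheme or trailing slash in the input.
    static String forDomain(String domain) {
        String host = domain.replaceFirst("^https?://", "").replaceAll("/+$", "");
        return "https://" + host + "/.well-known/openid-configuration";
    }

    public static void main(String[] args) throws Exception {
        String url = forDomain(args.length > 0 ? args[0] : "dev-920852.okta.com");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(HttpRequest.newBuilder(URI.create(url)).GET().build(), HttpResponse.BodyHandlers.ofString());
        System.out.println(url + " -> " + response.statusCode());
        System.out.println(response.body()); // the provider's discovery document, if the URL is correct
    }
}
```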

All this information will tell SWA how to issue a request against the right application in Okta, but we still need to tell it how to make the request and handle the response. That’s what we use the login config for. With the login config, we provide a nameClaimType, which is a fully-qualified path to the claim that we want SWA to use as the userDetails field of the user info. Generally speaking, you’ll want this to be http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name, but if there’s a custom field in your response claims you want to use, make sure you provide that. The other bit of config we need here is what scopes to request from Okta. For SWA, you only need openid and profile as the scopes, unless you’re wanting to use a nameClaimType other than standard.

Let’s finish off our SWA config:

```json
{
  "auth": {
    "identityProviders": {
      "customOpenIdConnectProviders": {
        "okta": {
          "registration": {
            "clientIdSettingName": "OKTA_ID",
            "clientCredential": {
              "clientSecretSettingName": "OKTA_SECRET"
            },
            "openIdConnectConfiguration": {
              "wellKnownOpenIdConfiguration": "https://dev-920852.okta.com/.well-known/openid-configuration"
            }
          },
          "login": {
            "nameClaimType": "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name",
            "scopes": ["openid", "profile"]
          }
        }
      }
    }
  }
}
```

With the config ready you can create the SWA in Azure and kick off a deployment (don’t forget to update the Okta app with the login/logout callbacks). When the resource is created in Azure, copy the Client ID and Client secret from Okta and create app settings in Azure using the names in your config and the values from Okta.

Using the provider

Once the provider is registered in the config file, it is usable just like the other providers SWA offers, with the login URL being /.auth/login/<provider_name>; in this case, the provider_name is okta. The user information will then be exposed in the standard way to both the web and API components.

If you’re building a React application, check out my React auth helper and for the API there is a companion.
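On the API side, SWA forwards the signed-in user in the x-ms-client-principal request header as base64-encoded JSON with fields such as identityProvider, userId, userDetails (filled from nameClaimType) and userRoles. For a Java API, a minimal decoding sketch might look like this. The class name is hypothetical. The regex-based field lookup is a deliberate shortcut to stay dependency-free, and a real API should use a proper JSON library.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class ClientPrincipal {
    // Header in which SWA passes the authenticated user to the API.
    static final String HEADER = "x-ms-client-principal";

    // Decodes the base64 header value into its JSON text.
    static String decode(String headerValue) {
        return new String(Base64.getDecoder().decode(headerValue), StandardCharsets.UTF_8);
    }

    // Naive lookup of a top-level string field; fine for a sketch, not a general JSON parser.
    static Optional<String> stringField(String json, String field) {
        Matcher m = Pattern.compile("\"" + Pattern.quote(field) + "\"\\s*:\\s*\"([^\"]*)\"").matcher(json);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }
}
```

With the config above, identityProvider should read okta, and userDetails should carry the value of the claim named in nameClaimType.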

Conclusion

I really like that with the GA of Static Web Apps we are now able to use custom OIDC providers with the platform. This makes it a lot easier to have controlled user access and integration with a more complex auth story when needed. Setting this up with Okta only takes a few lines of config.

You can check out a full code sample on my GitHub and a live demo here (but I’m not giving you my Okta credentials 😝).

by Contributed | May 14, 2021 | Technology

This article is contributed. See the original author and article here.

One of my favorite features of Azure Static Web Apps (SWA), now generally available, is that in the Standard tier you can provide a custom OpenID Connect (OIDC) provider. This gives you a lot more control over who can and can’t access your app.

In this post, I want to look at how we can use Auth0 as an OIDC provider for Static Web Apps.

For this, you’ll need an Auth0 account, so if you don’t already have one go sign up and maybe have a read of their docs, just so you’re across everything.

Creating a Static Web App

For this demo, we’ll use the React template, but what we’re covering isn’t specific to React, it’ll be applicable anywhere.

Once you’ve created your app, we’re going to need to set up a configuration file, so add staticwebapp.config.json to the repo root.

This config file is used for controlling a lot of things within our SWA, but the most important part for us is going to be the auth section. Let’s flesh out the skeleton for it:

```json
{
  "auth": {
    "identityProviders": {
      "customOpenIdConnectProviders": {}
    }
  }
}
```

Great! Now it’s time to set up Auth0.

Creating an Auth0 application

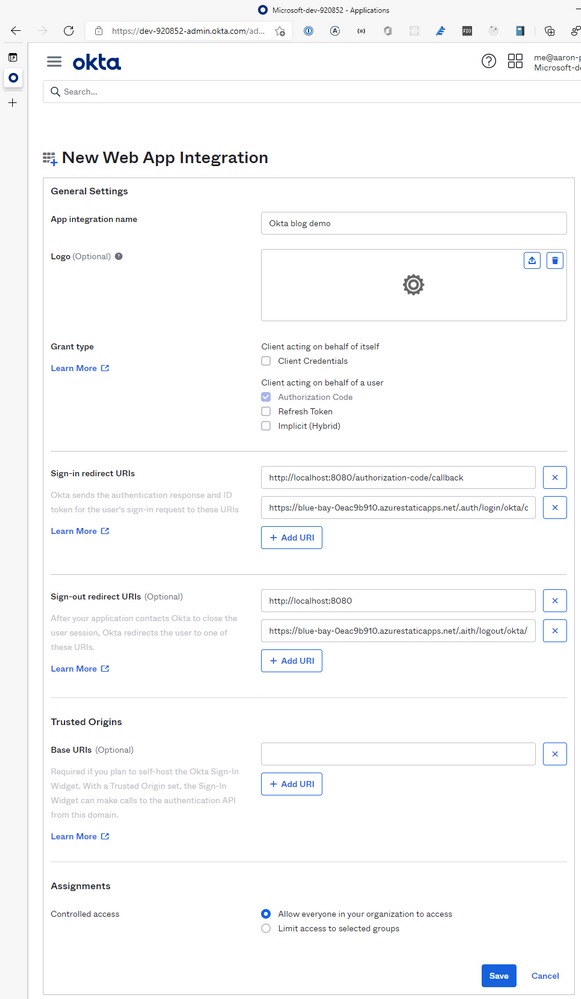

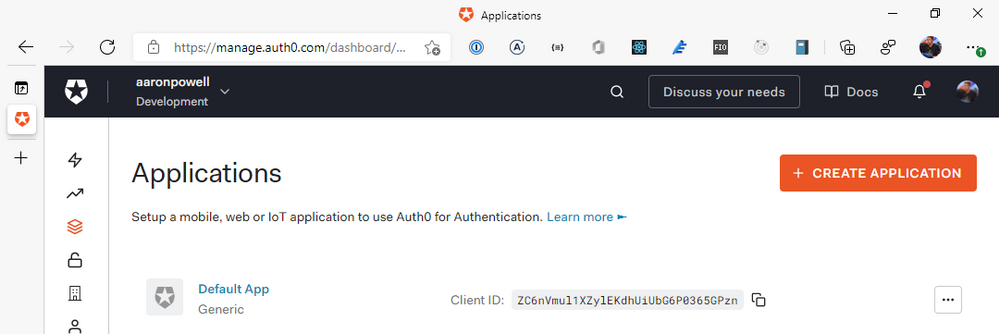

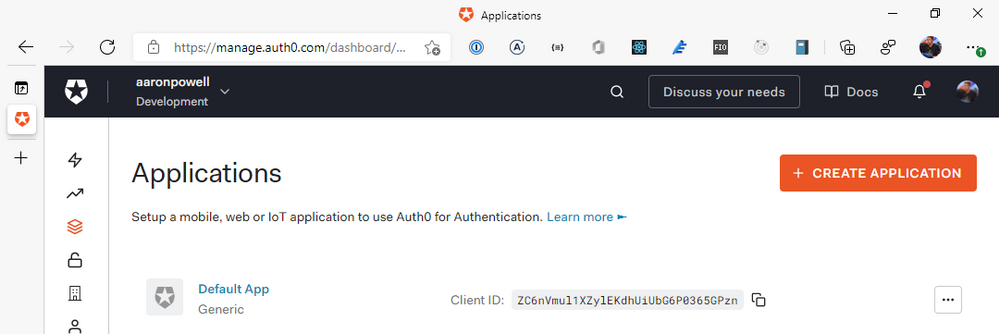

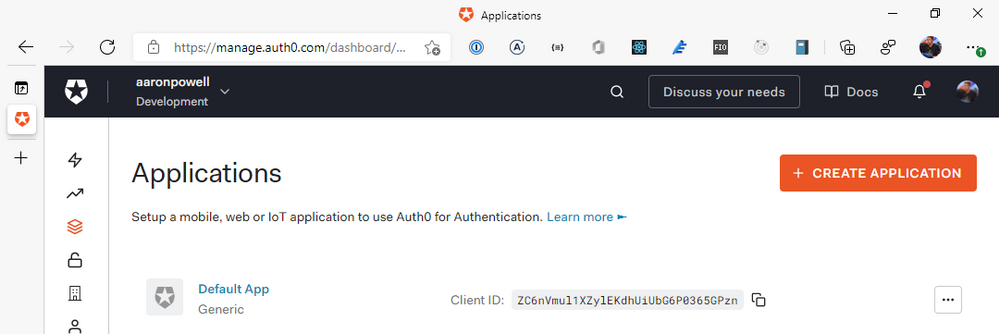

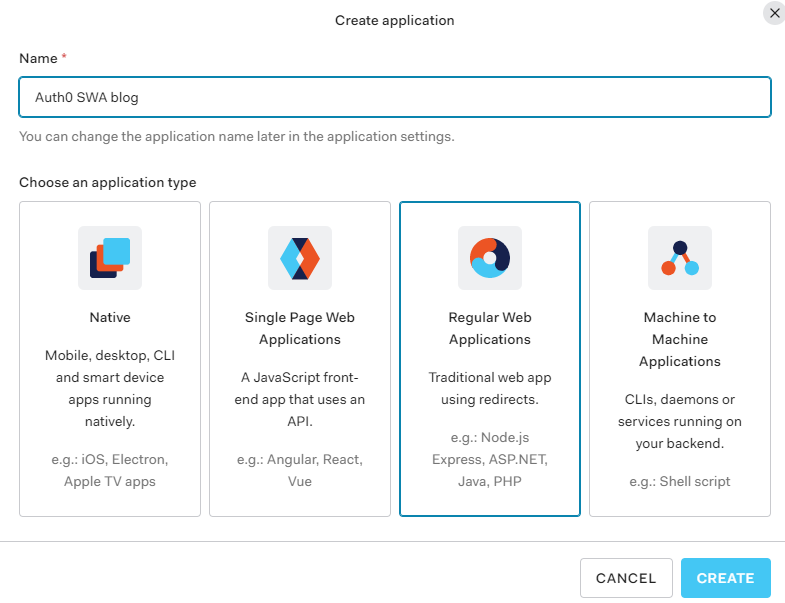

Log into the Auth0 dashboard and navigate through to the Applications section of the portal:

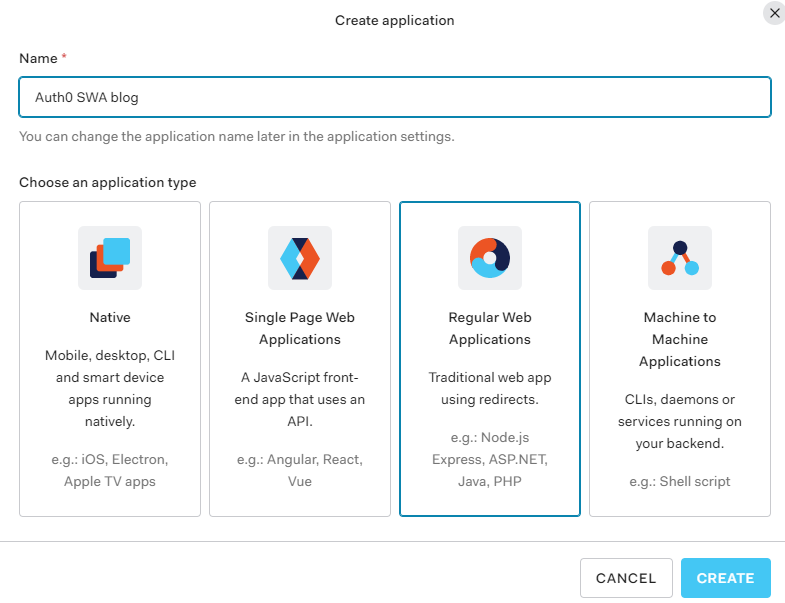

From here, we’re going to select Create Application, give it a name and select Regular Web Applications as the application type. You might be tempted to select the SPA option, given that we’re creating a JavaScript web application, but we don’t use it because SWA’s auth isn’t handled by your application itself; it’s handled by the underlying Azure service, which is a “web application” that then exposes the information you need.

With your application created, it’s time to configure it. We’ll skip the Quick Start options, as we’re really doing something more custom. Instead, head to Settings as we are going to need to provide the application with some redirect options for login/logout, so that SWA will know you’ve logged in and can unpack the basic user information.

In Settings, add https://<hostname>/.auth/login/auth0 as the Application Login URI, https://<hostname>/.auth/login/auth0/callback to Allowed Callback URLs, and https://<hostname>/.auth/logout/auth0/callback to Allowed Logout URLs. If you haven’t yet deployed to Azure, don’t worry about this step yet; we’ll do it once the SWA is created.

Quick note – the auth0 value here is going to be how we name the provider in the staticwebapp.config.json, so it can be anything you want, I just like to use the provider name so the config is easy to read.
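Because all three values are derived from the site hostname and the provider name, a small helper can generate them and keep them in sync with the config file. The Java sketch below is hypothetical, and the hostname in main is only a placeholder. It simply reproduces the URL patterns listed above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class Auth0AppUrls {
    // Builds the three values entered in the Auth0 application's Settings page.
    // providerName must match the key used under customOpenIdConnectProviders.
    static Map<String, String> forSite(String hostname, String providerName) {
        String base = "https://" + hostname + "/.auth";
        Map<String, String> urls = new LinkedHashMap<>(); // keeps the order of the Settings page
        urls.put("Application Login URI", base + "/login/" + providerName);
        urls.put("Allowed Callback URLs", base + "/login/" + providerName + "/callback");
        urls.put("Allowed Logout URLs", base + "/logout/" + providerName + "/callback");
        return urls;
    }

    public static void main(String[] args) {
        // Placeholder hostname; use your SWA's generated or custom domain.
        forSite("example.azurestaticapps.net", "auth0").forEach((k, v) -> System.out.println(k + ": " + v));
    }
}
```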

Scroll down and click Save Changes, and it’s time to finish off our SWA config file.

Completing our settings

With our Auth0 application set up, it’s time to complete our config file so it can use it. We’ll add a new configuration under customOpenIdConnectProviders for Auth0, and it’ll contain two core pieces of information: how to register the OIDC provider, and login information on how to talk to the provider.

Inside registration, we’ll add a clientIdSettingName field, which will point to an entry in the app settings that the SWA has. Next, we’ll need a clientCredential object with a clientSecretSettingName that points to the app settings entry for the OIDC client secret. Lastly, we’ll provide the openIdConnectConfiguration with a wellKnownOpenIdConfiguration endpoint, which is https://<your_auth0_domain>/.well-known/openid-configuration.

The config should now look like this:

```json
{
  "auth": {
    "identityProviders": {
      "customOpenIdConnectProviders": {
        "auth0": {
          "registration": {
            "clientIdSettingName": "AUTH0_ID",
            "clientCredential": {
              "clientSecretSettingName": "AUTH0_SECRET"
            },
            "openIdConnectConfiguration": {
              "wellKnownOpenIdConfiguration": "https://aaronpowell.au.auth0.com/.well-known/openid-configuration"
            }
          }
        }
      }
    }
  }
}
```

I use AUTH0_ID and AUTH0_SECRET as the names of the items I’ll be putting into app settings.

All this information will tell SWA how to issue a request against the right application in Auth0, but we still need to tell it how to make the request and handle the response. That’s what we use the login config for. With the login config, we provide a nameClaimType, which is a fully-qualified path to the claim that we want SWA to use as the userDetails field of the user info. Generally speaking, you’ll want this to be http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name, but if there’s a custom field in your response claims you want to use, make sure you provide that. The other bit of config we need here is what scopes to request from Auth0. For SWA, you only need openid and profile as the scopes, unless you’re wanting to use a nameClaimType other than standard.

Let’s finish off our SWA config:

```json
{
  "auth": {
    "identityProviders": {
      "customOpenIdConnectProviders": {
        "auth0": {
          "registration": {
            "clientIdSettingName": "AUTH0_ID",
            "clientCredential": {
              "clientSecretSettingName": "AUTH0_SECRET"
            },
            "openIdConnectConfiguration": {
              "wellKnownOpenIdConfiguration": "https://aaronpowell.au.auth0.com/.well-known/openid-configuration"
            }
          },
          "login": {
            "nameClaimType": "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name",
            "scopes": ["openid", "profile"]
          }
        }
      }
    }
  }
}
```

With the config ready you can create the SWA in Azure and kick off a deployment (don’t forget to update the Auth0 app with the login/logout callbacks). When the resource is created in Azure, copy the Client ID and Client secret from Auth0 and create app settings in Azure using the names in your config and the values from Auth0.

Using the provider

Once the provider is registered in the config file, it is usable just like the other providers SWA offers, with the login URL being /.auth/login/<provider_name>; in this case, the provider_name is auth0. The user information will then be exposed in the standard way to both the web and API components.
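SWA’s built-in auth endpoints also accept a post_login_redirect_uri (and, for logout, post_logout_redirect_uri) query parameter to send the user to a specific page afterwards. The Java sketch below is a hypothetical helper that builds such links for the auth0 provider. URL-encoding the return path is a cautious choice on our part rather than something the config requires.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

final class SwaAuthLinks {
    // Login link for a named provider; the user returns to returnPath after signing in.
    static String login(String providerName, String returnPath) {
        return "/.auth/login/" + providerName
                + "?post_login_redirect_uri=" + URLEncoder.encode(returnPath, StandardCharsets.UTF_8);
    }

    // Logout link; the user returns to returnPath after signing out.
    static String logout(String returnPath) {
        return "/.auth/logout?post_logout_redirect_uri=" + URLEncoder.encode(returnPath, StandardCharsets.UTF_8);
    }
}
```

For example, login("auth0", "/profile") produces a link that signs the user in through Auth0 and then lands them on /profile.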

If you’re building a React application, check out my React auth helper and for the API there is a companion.

Conclusion

I really like that with the GA of Static Web Apps we are now able to use custom OIDC providers with the platform. This makes it a lot easier to have controlled user access and integration with a more complex auth story when needed. Setting this up with Auth0 only takes a few lines of config.

You can check out a full code sample on my GitHub and a live demo here (but I’m not giving you my Auth0 credentials 😝).

by Contributed | May 13, 2021 | Technology

This article is contributed. See the original author and article here.

One of the most common ways to benefit from AI services in your apps is to use Speech to Text capabilities to tackle a range of scenarios, from providing captions for audio/video to transcribing phone conversations and meetings. Speech service, an Azure Cognitive Service, offers speech transcription via its Speech to Text API in over 94 languages/locales, and the list is growing.

In this article we are going to show you how to integrate real-time speech transcription into a mobile app for a simple note taking scenario. Users will be able to record notes and have the transcript show up as they speak. Our Speech SDK supports a variety of operating systems and programming languages. Here we are going to write this application in Java to run on Android.

Common Speech To Text scenarios

The Azure Speech Service provides accurate Speech to Text capabilities that can be used for a wide range of scenarios. Here are some common examples:

- Audio/Video captioning. Create captions for audio and video content using either batch transcription or real-time transcription.

- Call Center Transcription and Analytics. Gain insights from the interactions call center agents have with your customers by transcribing these calls and extracting insights from sentiment analysis, keyword extraction and more.

- Voice Assistants. Voice assistants built with the Speech service empower developers to create natural, human-like conversational interfaces for their applications and experiences. You can add voice-in and voice-out capabilities to your flexible and versatile bot built using Azure Bot Service with the Direct Line Speech channel, or leverage the simplicity of authoring a Custom Commands app for straightforward voice commanding scenarios.

- Meeting Transcription. Microsoft Teams provides live meeting transcription with speaker attribution that makes meetings more accessible and easier to follow. This capability is powered by the Azure Speech Service.

- Dictation. Microsoft Word provides the ability to dictate your documents powered by the Azure Speech Service. It’s a quick and easy way to get your thoughts out, create drafts or outlines, and capture notes.

How to build real-time speech transcription into your mobile app

Prerequisites

As a basis for our sample app we are going to use the “Recognize speech from a microphone in Java on Android” GitHub sample that can be found here. After cloning the cognitive-services-speech-sdk GitHub repo we can use Android Studio version 3.1 or higher to open the project under samples/java/android/sdkdemo. This repo also contains similar samples for various other operating systems and programming languages.

In order to use the Azure Speech Service you will have to create a Speech service resource in Azure as described here. This will provide you with the subscription key for your resource in your chosen service region that you need to use in the sample app.

The only thing you need to try out speech recognition with the sample app is to update the configuration for speech recognition by filling in your subscription key and service region at the top of the MainActivity.java source file:

```java
//
// Configuration for speech recognition
//

// Replace below with your own subscription key
private static final String SpeechSubscriptionKey = "YourSubscriptionKey";

// Replace below with your own service region (e.g., "westus").
private static final String SpeechRegion = "YourServiceRegion";
```

You can leave the configuration for intent recognition as-is since we are just interested in the speech to text functionality here.

After you have updated the configuration, you can build and run your sample. Ideally you run the application on an Android phone since you will need to have a microphone input.

Trying out the sample

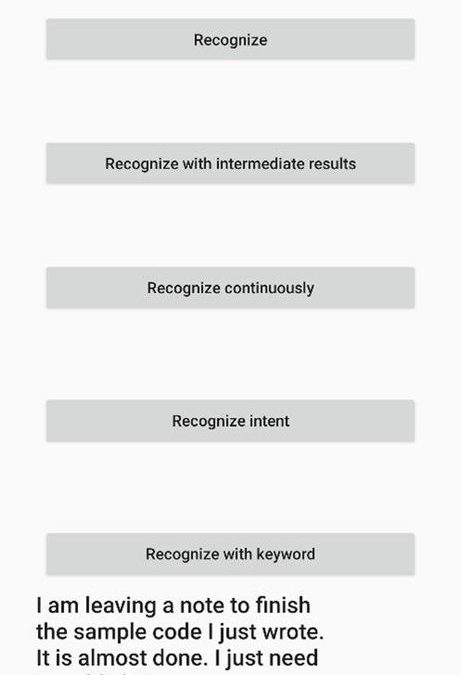

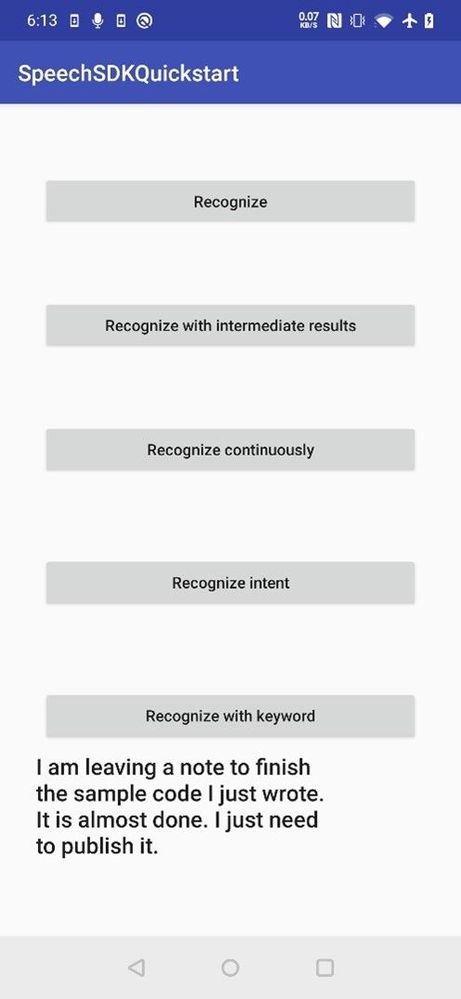

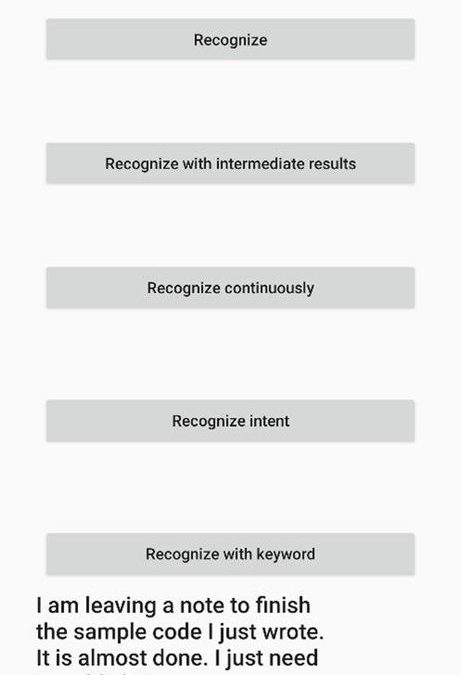

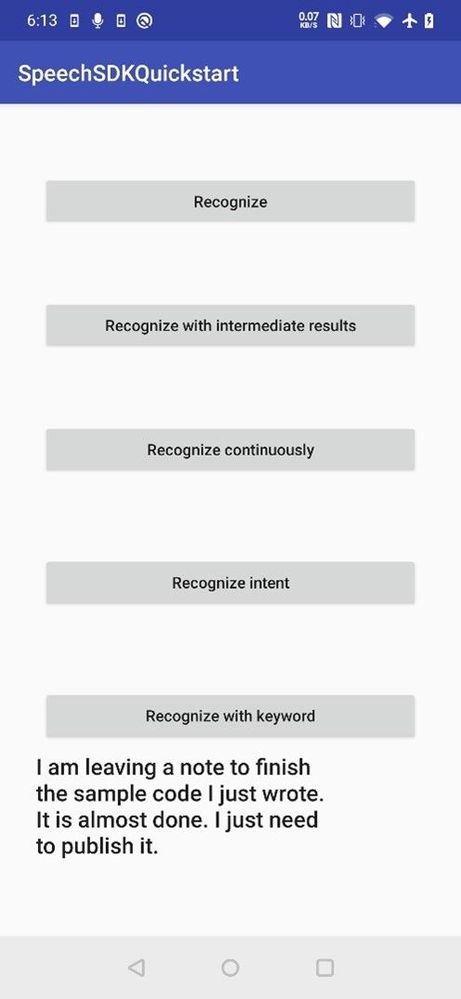

On first use, the application will ask you for the needed application permissions. Then the sample application provides a few options for you to use. Since we want users to be able to capture a longer note we will use the Recognize continuously option.

With this option the recognized text will show up at the bottom of the screen as you speak, and you can speak for a while with some longer pauses in between. Recognition will stop when you hit the stop button. So, this will allow you to capture a longer note.

This is what you should see when you try out the application:

Code Walkthrough

Now that you have this sample working and you have tried it out, let’s look at the key portions of the code that are needed to get the transcript. These can all be found in the MainActivity.java source file.

First, in the onCreate method, we ask for permission to access the microphone, the internet, and storage:

```java
int permissionRequestId = 5;

// Request permissions needed for speech recognition
ActivityCompat.requestPermissions(MainActivity.this, new String[]{RECORD_AUDIO, INTERNET, READ_EXTERNAL_STORAGE}, permissionRequestId);
```

Next, we need to create a SpeechConfig that provides the subscription key and region so we can access the speech service:

```java
// create config
final SpeechConfig speechConfig;
try {
    speechConfig = SpeechConfig.fromSubscription(SpeechSubscriptionKey, SpeechRegion);
} catch (Exception ex) {
    System.out.println(ex.getMessage());
    displayException(ex);
    return;
}
```

The main work of recognizing the spoken audio is done in the click listener attached to recognizeContinuousButton. This listener runs when the Recognize continuously button is pressed and its onClick event is triggered:

```java
///////////////////////////////////////////////////
// recognize continuously
///////////////////////////////////////////////////
recognizeContinuousButton.setOnClickListener(new View.OnClickListener() {
```

First, a new SpeechRecognizer is created from the speechConfig we created earlier and the audio input from the microphone:

```java
audioInput = AudioConfig.fromStreamInput(createMicrophoneStream());
reco = new SpeechRecognizer(speechConfig, audioInput);
```

Instead of a microphone stream, you could also use audio from a file or another stream. For example, AudioConfig.fromWavFileInput() reads audio from a WAV file.

Next, two event listeners are registered. The first handles the Recognizing event, which signals intermediate recognition results. These are preliminary versions of the recognized text, generated while words are still being recognized. The second handles the Recognized event, which signals that a recognition is complete. It is raised when a long enough pause in the speech is detected, and it carries the final recognition result for that part of the audio.

```java
reco.recognizing.addEventListener((o, speechRecognitionResultEventArgs) -> {
    final String s = speechRecognitionResultEventArgs.getResult().getText();
    Log.i(logTag, "Intermediate result received: " + s);
    content.add(s);
    setRecognizedText(TextUtils.join(" ", content));
    content.remove(content.size() - 1);
});

reco.recognized.addEventListener((o, speechRecognitionResultEventArgs) -> {
    final String s = speechRecognitionResultEventArgs.getResult().getText();
    Log.i(logTag, "Final result received: " + s);
    content.add(s);
    setRecognizedText(TextUtils.join(" ", content));
});
```
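The following SDK-free sketch mirrors the logic of the two handlers in plain Java, to show how they cooperate. TranscriptBuffer and its methods are hypothetical names, and String.join stands in for Android's TextUtils.join.

The key idea is that content only ever keeps final results. An intermediate result is appended just long enough to render the display text and is then removed. As a result, the next Recognizing or Recognized event replaces it rather than piling up.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, SDK-free model of how the sample's two event handlers build the
// on-screen transcript from a shared list of final results ("content").
final class TranscriptBuffer {

    // Final results only, in the order they were recognized
    private final List<String> content = new ArrayList<>();

    /** Mirrors the Recognizing handler: show the partial text without keeping it. */
    String onRecognizing(String partialText) {
        content.add(partialText);                    // temporarily append the partial result
        String display = String.join(" ", content);  // equivalent of TextUtils.join(" ", content)
        content.remove(content.size() - 1);          // drop it again; a later event replaces it
        return display;
    }

    /** Mirrors the Recognized handler: keep the final text permanently. */
    String onRecognized(String finalText) {
        content.add(finalText);
        return String.join(" ", content);
    }
}
```

Here is how a short sequence of events plays out:

- Recognizing("remember to") displays "remember to".
- Recognized("Remember to buy milk.") displays "Remember to buy milk.".
- Recognizing("and") then displays "Remember to buy milk. and".
- The partial "and" is dropped again until its final version arrives.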

Lastly, recognition is started using startContinuousRecognitionAsync(). Once it has started, the clicked button is relabeled Stop:

```java
final Future<Void> task = reco.startContinuousRecognitionAsync();
setOnTaskCompletedListener(task, result -> {
    continuousListeningStarted = true;
    MainActivity.this.runOnUiThread(() -> {
        buttonText = clickedButton.getText().toString();
        clickedButton.setText("Stop");
        clickedButton.setEnabled(true);
    });
});
```
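The snippet relies on a setOnTaskCompletedListener helper that the walkthrough does not show. The sketch below is one plausible way to write such a helper, assuming it simply waits on the Future in a background thread and then invokes a callback. The sample's actual implementation may differ in detail. TaskCompletion, OnTaskCompletedListener, and the daemon thread pool are assumptions made for this illustration.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Sketch of a "notify me when this Future completes" helper. Assumption: the
// sample's setOnTaskCompletedListener follows the same wait-then-callback idea.
final class TaskCompletion {

    /** Callback that receives the task's result once it has completed. */
    interface OnTaskCompletedListener<T> {
        void onCompleted(T taskResult);
    }

    // Daemon threads so that waiting helpers never keep the JVM alive on their own
    private static final ExecutorService EXECUTOR = Executors.newCachedThreadPool(runnable -> {
        Thread thread = new Thread(runnable, "task-completion");
        thread.setDaemon(true);
        return thread;
    });

    private TaskCompletion() {}

    /**
     * Waits for task on a background thread, then calls listener with its result.
     * If the task fails, the listener is skipped and the failure is kept in the
     * returned Future instead.
     */
    static <T> Future<?> setOnTaskCompletedListener(Future<T> task, OnTaskCompletedListener<T> listener) {
        return EXECUTOR.submit(() -> {
            T result = task.get();        // blocks this worker thread, not the caller (e.g. the UI thread)
            listener.onCompleted(result); // on Android, UI work in here still needs runOnUiThread
            return null;                  // Callable form, so get()'s checked exceptions may propagate
        });
    }
}
```

Because the listener runs on a background thread, any UI update inside it still has to go through runOnUiThread, as the snippet above does.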

When the Stop button is pressed, recognition is stopped by calling stopContinuousRecognitionAsync():

```java
if (continuousListeningStarted) {
    if (reco != null) {
        final Future<Void> task = reco.stopContinuousRecognitionAsync();
        setOnTaskCompletedListener(task, result -> {
            Log.i(logTag, "Continuous recognition stopped.");
            MainActivity.this.runOnUiThread(() -> {
                clickedButton.setText(buttonText);
            });
            enableButtons();
            continuousListeningStarted = false;
        });
    } else {
        continuousListeningStarted = false;
    }
    return;
}
```
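Taken together, the start and stop snippets turn a single button into a toggle driven by the continuousListeningStarted flag. The following hypothetical, SDK-free model captures that state logic so it can be reasoned about or unit-tested on its own. ContinuousToggle and its Action enum are illustrative and do not come from the sample.

The model makes two points explicit:

- The flag and the Stop label change only when the corresponding asynchronous start or stop operation reports completion.
- A click while the flag is set but no recognizer exists just resets the flag.

```java
// Hypothetical, SDK-free model of the "Recognize continuously" button as a toggle.
// The flag mirrors the sample's continuousListeningStarted field; onStarted() and
// onStopped() stand in for the completion listeners of the start and stop futures.
final class ContinuousToggle {

    /** What a click should trigger in the current state. */
    enum Action { START, STOP, RESET }

    private boolean continuousListeningStarted = false;
    private String buttonText;
    private String savedText;   // label to restore after stopping (the sample's buttonText)

    ContinuousToggle(String initialLabel) {
        this.buttonText = initialLabel;
    }

    /** Decides the action for a click; recognizerExists plays the role of reco != null. */
    Action onClick(boolean recognizerExists) {
        if (!continuousListeningStarted) {
            return Action.START;                 // would call startContinuousRecognitionAsync()
        }
        if (!recognizerExists) {
            continuousListeningStarted = false;  // nothing to stop, so only reset the flag
            return Action.RESET;
        }
        return Action.STOP;                      // would call stopContinuousRecognitionAsync()
    }

    /** Start completed: set the flag, remember the label, show "Stop". */
    void onStarted() {
        continuousListeningStarted = true;
        savedText = buttonText;
        buttonText = "Stop";
    }

    /** Stop completed: restore the original label and clear the flag. */
    void onStopped() {
        buttonText = savedText;
        continuousListeningStarted = false;
    }

    String buttonText() { return buttonText; }

    boolean isListening() { return continuousListeningStarted; }
}
```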

That is all that is needed to integrate Speech to Text into your application.

Next Steps:

![[Guest Blog] From Publishing to Power Apps: Making a Career Change into Tech](https://www.drware.com/wp-content/uploads/2021/05/fb_image-107.jpeg)

by Contributed | May 13, 2021 | Technology

This article is contributed. See the original author and article here.

This blog was written by Lisa Crosbie, Microsoft Business Applications MVP. Lisa shares how it feels to go from expert to novice, building a network from scratch, learning through a firehose, and why superpower skills are the most important thing.

When you make a mid-life career change, the first time you hear someone introduce their credentials by saying “I’ve been doing this for 20 years” is very confronting. That’s the moment you take stock and realise you may never get to say that, and it’s time to get brave and creative and establish your expertise and credentials in other ways.

When I was a kid, I wanted to be a librarian. Beyond that, I never really had any idea what I wanted to be when I “grew up” and I struggled with how I could ever make a career choice when I was equally interested in and good at both STEM and languages. After an unenthusiastic start with maths and computer science at university, I majored in linguistics and fulfilled my childhood dream of becoming a librarian, and then moved into my new dream career of book publishing.

I had an incredibly fulfilling career, working my way through the ranks to a senior management role. I spent 15 years at Oxford University Press, a dream come true for a long-time language and dictionary nerd. In my first role there, I got paid to visit bookshops and talk about dictionaries all day; now an incredible story of a different time, and a role that was and will always be a career highlight for me. Books were my first love. Even after all that time, the excitement of being in that place where such incredible books were made never wore off.

Unfortunately, my timing was less than perfect, having reached a mid-life career peak in an industry I loved but which was suffering death by a thousand cuts. Books will never die, but the onset of digital and new competitors meant costs went up as revenue stagnated and I found myself almost entirely focused on finding savings and efficiencies. I had stopped learning and growing and was struggling to see the future of my career. Many people talk about career change in mid-life, but very few do it – it’s a tough leap to make. By that stage of life, you’ve established yourself in a field, you have a network and a reputation, a family and/or financial commitments, and a relatively comfortable position of expertise.

I had a now or never moment, and the full support of my family. Life had taught me that there were no guarantees, and that you need to seize the moment when you can. So, I chose now.

Farewell gifts on my last day at Oxford University Press

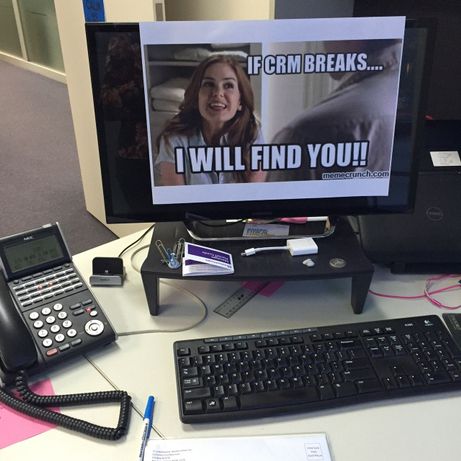


My staff decorated my office with memes on my last day

I took a massive leap that made no sense on paper, resigning from my big job and taking a contract role with the Dynamics 365 partner who had been our consultants. A year later I jumped right into the deep end at Barhead Solutions, just as Power Apps were gaining traction, and Dynamics 365 was getting major investment. The timing for my second career choice could not have been better, riding the wave of massive growth and innovation in Microsoft Business Applications. I took the approach of “say yes and figure it out” and started learning through a firehose. Going from expert to novice is unsettling and scary. I was so far out of my comfort zone, but I found resourcefulness and creativity beyond what I knew I was capable of. I joined conversations online and found myself part of a community of extraordinary people, building an entirely new network from scratch. I had awesome colleagues who were willing to help and share, and I wasn’t afraid to ask “dumb” questions.

Community and excitement – Microsoft Business Applications Summit 2019


Learning and Teaching: Running App in a Day

When I was considering career change, I read a lot of advice on finding my transferrable skills. The reality is that you have no idea what’s valuable in your desired new industry, or the terminology for it, until you get there. I wish I could go back and tell my previous self that “I came from the customer side” was the magic phrase to open doors.

Rather than transferrable skills, the real thing to focus on in changing careers is your “superpower” skills. What do you take for granted that might be rare in your new industry? When you work for the world’s largest dictionary publisher, great communication skills are pretty much an entry requirement, but in tech, they have helped me stand out. I have done more proofreading and writing in tech than I ever did in my former career.

I discovered my other superpower skill is my love of learning – a constant desire, ability, and commitment to learn a lot, quickly, often in my free time. When I first landed outside my comfort zone, I had a steep learning curve. Once I learned how to be comfortable outside my comfort zone (another superpower skill) I deliberately put myself on that steep learning curve over and over again. I looked back on my notes this week at jobs I was considering applying for in 2017. There is one there called “Technical Evangelist”, with my annotation ruling it out because it was too technical. That is now my day job.

The learning journey – preparing for my first tech exam over dumplings


Now 5 years in, I no longer find it confronting that I can’t say I’ve been doing this for 20 years, because I have found my strength in being uniquely me. In fact, I do have 20 years’ experience – skills in leadership, creativity, problem solving, collaboration, and building trust, just applied in completely different ways.

Best of all, there is still so much learning to do and so much to explore in this huge world outside my comfort zone, and that’s a very good place to be.

by Contributed | May 13, 2021 | Technology

This article is contributed. See the original author and article here.

Engaged communities are stronger communities. This is the ethos behind Code for South Florida, a Miami nonprofit that facilitates community feedback between governments and citizens with tech.

The group brings the area’s tech enthusiasts together to create solutions that support the youth and improve quality of life, in partnership with government departments, nonprofit organizations and colleges.

Regarded as the largest public interest technology nonprofit in the state, the group leverages public code and data to build prototypes and support data collaborations that make life better for the inhabitants of South Florida.

Ultimately, Code for South Florida seeks to elevate the voices of all who call the region home.

AI MVP and Miami native Noelle Silver says there is new momentum in South Florida that is embracing technology and its potential for social good.

“There are so many people hungry to learn and get into tech but few programs available and affordable to them,” Noelle says. “The Code for South Florida community was created to help more people by combining technology and the government.”

“I was raised in Miami and was not afforded the opportunity to learn about tech or coding. I had to leave and attend a university to get that exposure. I am now back, after travelling the country and working for Amazon, Microsoft, and IBM over the years.”

“South Florida is home to many underrepresented groups and this community serves them all. It allows for people to learn to code in their native language, to find like-minded, career-focused peers and create a social and professional network that will serve them for years.”

Connection and communication are vital to open-source communities like Code for South Florida. Noelle points to recent initiatives like Tech Hub Tech Talk – an educational series that inspires people to get involved in the Miami and South Florida tech scene – and the creation of Open Government and Haitians in Tech – smaller groups that help developers find a support network to call their own – in successfully uniting the many communities of Code for South Florida.

“What I love most about this community is its focus on connecting people together who can further inspire, contribute, and build a local culture of public service and technology,” Noelle says.

“I want to use the Code for South Florida initiative to help bring the idea of technical careers to junior and senior highs across the region. I am using Microsoft Learn as a platform for helping anyone who wants to learn to get the skills they need.”

The biggest challenge, Noelle says, is “trying to be heard above the noise and get this message to more people. This is where the MVP community can help!”

If you have a project you need help with or want to create a new initiative with the tech talent in South Florida, the community has a network of volunteers and staff ready to support. To join the group or for more information visit the Code for South Florida website.

by Contributed | May 13, 2021 | Technology

This article is contributed. See the original author and article here.

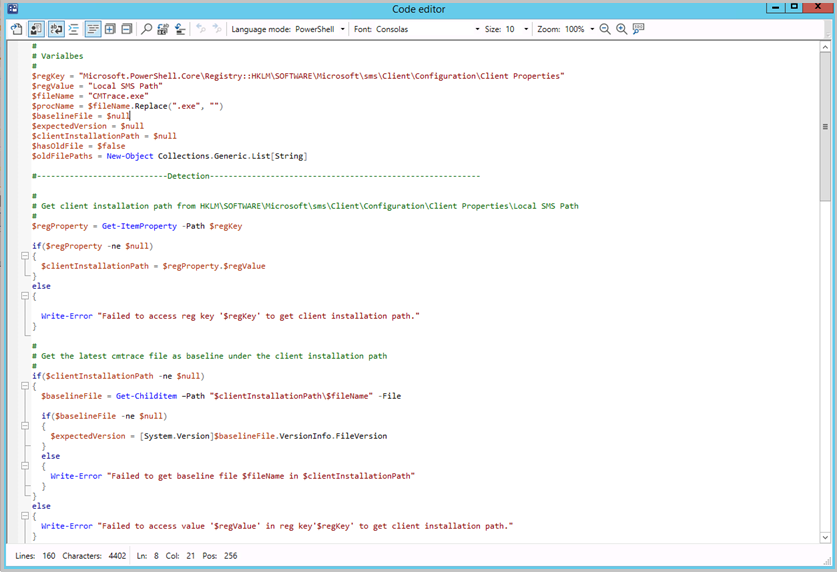

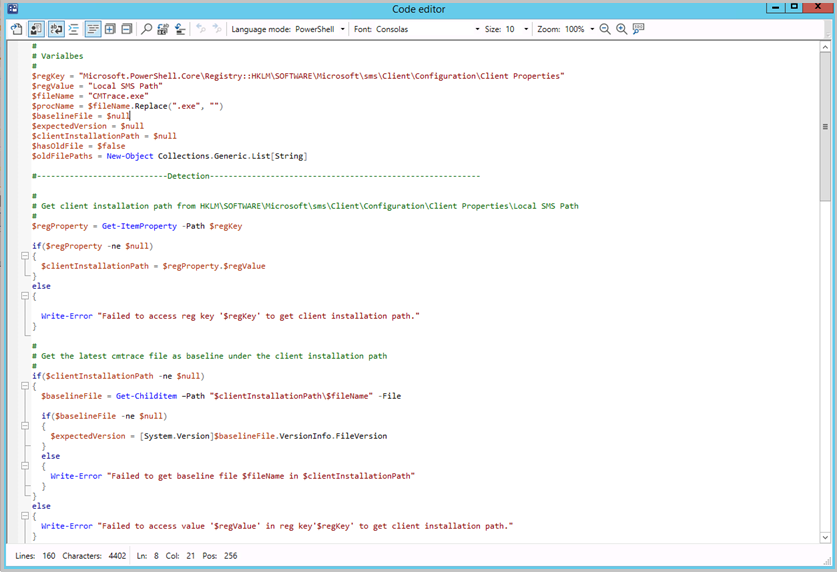

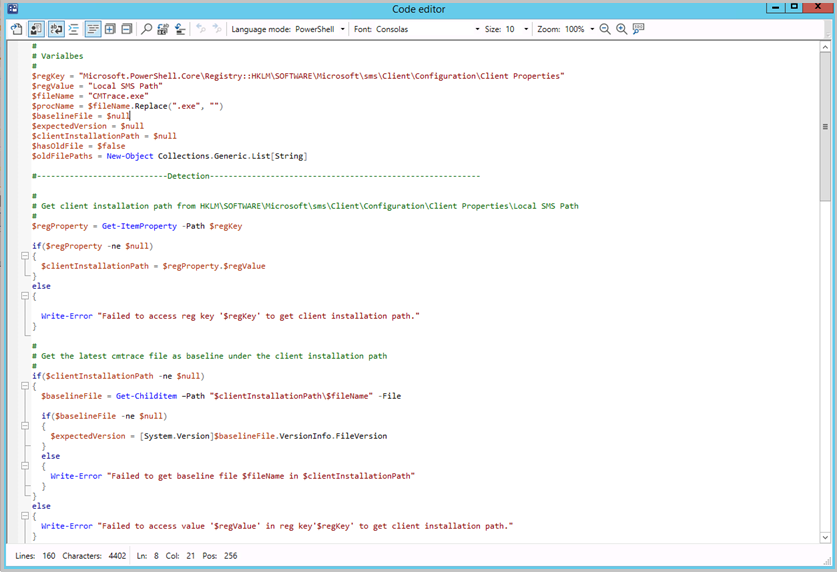

Update 2105 for the Technical Preview Branch of Microsoft Endpoint Configuration Manager has been released. Building on improvements in Configuration Manager 2010 for syntax highlighting and code folding, you now have the ability to edit scripts in an enhanced editor. The new editor supports syntax highlighting, code folding, word wrap, line numbers, and find and replace. The new editor is available in the console wherever scripts and queries can be viewed or edited.

Enhanced code editor in ConfigMgr console


For more information, see Enhanced Code Editor.

This preview release also includes:

Select VM size for CMG – When you deploy a cloud management gateway (CMG) with a virtual machine scale set, you can now choose the virtual machine (VM) size. The following three options are available:

- Lab: B2s

- Standard: A2_v2. This option continues to be the default setting.

- Large: D2_v3

This control gives you greater flexibility with your CMG deployment. You can adjust the size for test labs or if you support large environments.

Support Center dark and light themes – The Support Center tools now offer dark and light modes. Choose to use the system default color scheme, or override the system default by selecting either the dark or light theme.

Updated client deployment prerequisite – The Configuration Manager client requires the Microsoft Visual C++ Redistributable component (vcredist_x*.exe). When you install the client, it automatically installs this component if it doesn’t already exist. Starting in this release, it now uses the Microsoft Visual C++ 2015-2019 Redistributable version 14.28.29914.0. This version improves stability in Configuration Manager client operations.

Change to internet access requirements – To simplify the internet access requirements for Configuration Manager updates and servicing, this technical preview branch release downloads from configmgrbits.azureedge.net. This endpoint is already required, so should already be allowed through internet filters. With this change, the existing internet endpoint for technical preview releases is no longer required: cmupdatepackppe.blob.core.windows.net.

PowerShell release notes preview – These release notes summarize changes to the Configuration Manager PowerShell cmdlets in technical preview version 2105.

For more details and to view the full list of new features in this update, check out our Features in Configuration Manager technical preview version 2105 documentation.

Update 2105 for Technical Preview Branch is available in the Microsoft Endpoint Configuration Manager Technical Preview console. For new installations, the 2103 baseline version of Microsoft Endpoint Configuration Manager Technical Preview Branch is available on the Microsoft Evaluation Center. Technical Preview Branch releases give you an opportunity to try out new Configuration Manager features in a test environment before they are made generally available.

We would love to hear your thoughts about the latest Technical Preview! Send us feedback about product issues directly from the console and continue to share and vote on ideas about new features in Configuration Manager.

Thanks,

The Configuration Manager team

Configuration Manager Resources:

Documentation for Configuration Manager Technical Previews

Try the Configuration Manager Technical Preview Branch

Documentation for Configuration Manager

Configuration Manager Forums

Configuration Manager Support

by Contributed | May 13, 2021 | Technology

This article is contributed. See the original author and article here.

As part of our continued efforts to help customers be successful with Exchange Online, Microsoft has detected that some customers are using an unsupported journaling configuration in which the alternate journaling mailbox points to an Exchange Online mailbox. With the enforcement of mailbox receiving limits, as announced in our February blog post, this may result in the loss of journaling non-delivery reports. Cloud-hosted mailboxes are throttled when they exceed the limit of 3,600 messages received per rolling hour. We urge all customers to validate their journaling configuration and make sure they will not be affected.

Using an Exchange Online mailbox as a journaling alternate mailbox

Journaling in Exchange Online allows administrators to set an alternate journaling mailbox. This mailbox will accept non-delivery reports (also known as NDRs or bounce messages) if the primary journaling mailbox is unavailable, to catch undelivered journal reports and allow for re-sending when the journaling mailbox becomes available.

As the documentation states, the alternate journaling mailbox cannot be an Exchange Online mailbox. Cloud mailboxes are subject to our Exchange Online service limits and unsuitable for journaling.

How can I tell if I am affected?

Tenant administrators can check their configuration via the classic Exchange admin center. Navigate to the Journal Rules section and find the email address configured for non-delivery reports. If no address is configured or if the address is not an Exchange Online mailbox, administrators do not need to take action. If the address for journaling NDRs is set to an Exchange Online mailbox, administrators need to change this setting in order to avoid impact.

What do I do if I am affected?

Microsoft’s recommendation is to leverage our Microsoft 365 compliance solutions rather than journaling messages to another location. This suite of solutions provides data protection, data loss prevention, and data governance capabilities to meet customers’ diverse regulatory and compliance requirements.

If journaling is still needed, administrators should double check their settings and switch the alternate journaling address to a non-Exchange Online mailbox. Customers are urged to validate their configuration and take action by May 31st to avoid potential disruption.

Thank you for your attention to this!

The Exchange Transport team and the Microsoft 365 Information Governance team
