by Scott Muniz | Jun 16, 2021 | Security

This article was originally posted by the FTC. See the original article here.

If you’re considering getting a timeshare this vacation season, read on. Maybe you got a flyer in the mail with pictures of sunny beaches and beautiful resort suites. Sounds great, right? But before you sign a timeshare contract, make sure you understand what you’re getting into — and how to get out of it.

Not all timeshares work the same way. Some use points to determine where you can stay and for how long. Others get you one week a year at a resort, but it’s not always the same week. The cost also varies … a lot. Typically, timeshares require you to pay initial fees and yearly maintenance fees that may increase every year.

Promoters might offer you a gift or delicious meal to attend a timeshare presentation. If you decide to go, the sales staff may make a lot of promises and pitches designed to get you to buy right then and there without giving you time to think about it or do any of your own research.

So before you sign that timeshare agreement, ask yourself a few questions:

- If the timeshare is only for a specific property, is this where I want to vacation every year?

- Can I afford this timeshare, even if the maintenance fees go up?

- Do I have the time to deal with issues that may arise if I can’t book the resort I want during the time I want to travel?

- If I no longer can afford or want the timeshare, how can I sell it?


by Contributed | Jun 15, 2021 | Technology

This article is contributed. See the original author and article here.

Education IT admins around the world are already planning for the next Back-to-School (BTS) season. Whether your organization is returning to in-person learning, staying fully remote, or landing somewhere in between, Microsoft has you covered. Here are the latest Microsoft 365 product updates and roadmap items you need to know!

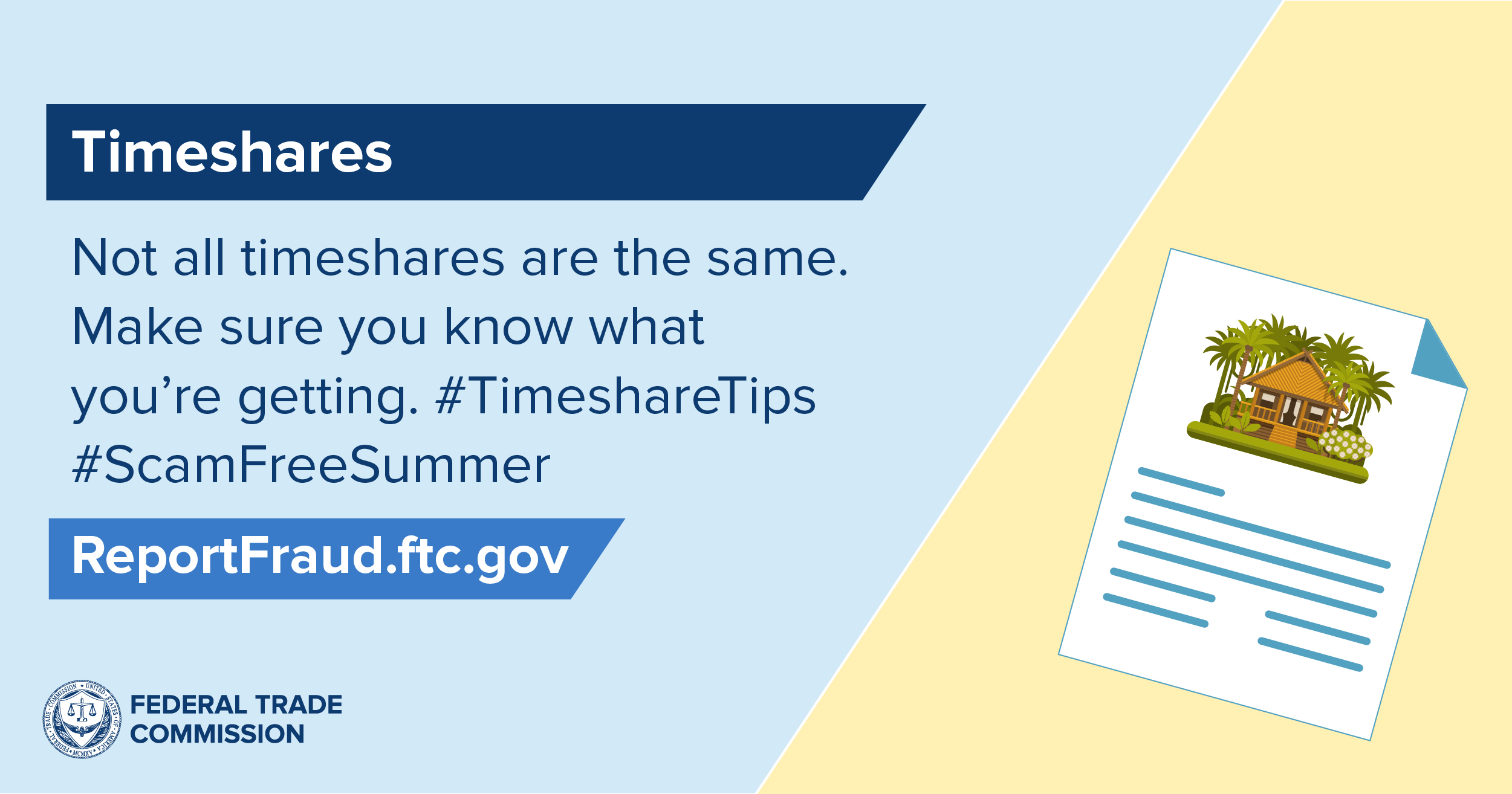

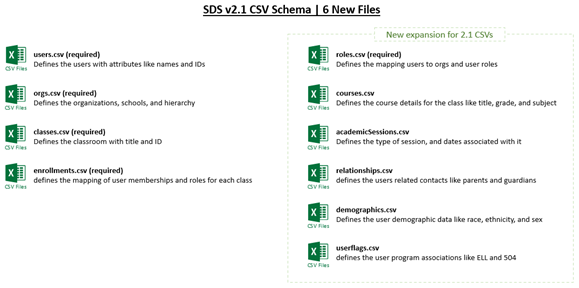

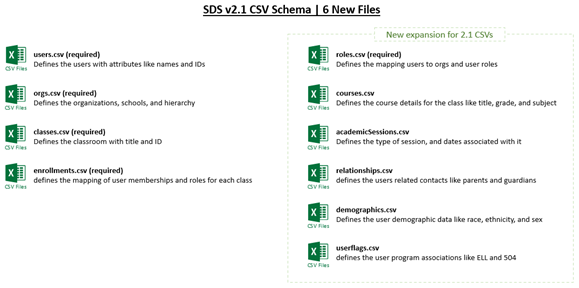

School Data Sync (SDS)

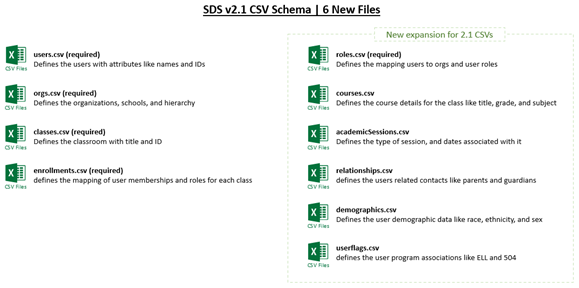

SDS is releasing 11 amazing updates for the upcoming BTS season, including a new enhanced v2.1 CSV data schema for Education Insights Premium, group provisioning support in v2.1 sync profiles, and several updates to the MS Graph APIs for SDS remote management and partner integration scenarios!

- v2.1 CSV Schema Update: SDS is evolving the v2 CSV file schema to include a variety of new data elements like demographics, flags, sessions, parents and guardians, and courses. These new data elements will be used for both SDS provisioning scenarios and Education Insights Premium enhancements, as described later in this post. The v2.1 schema still contains all of the Higher Education and K12 data elements introduced with the initial v2 release last summer. The new data schema is available now within SDS under the v2.1 CSV Sync Profile setup.

Release Planned: Available Now

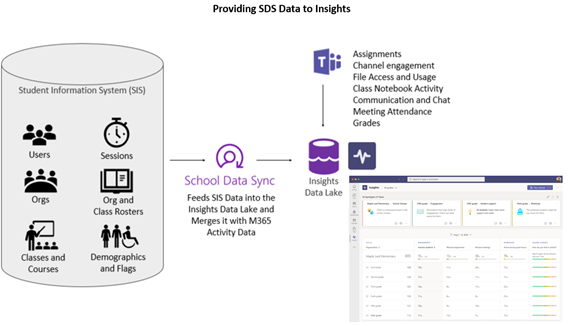

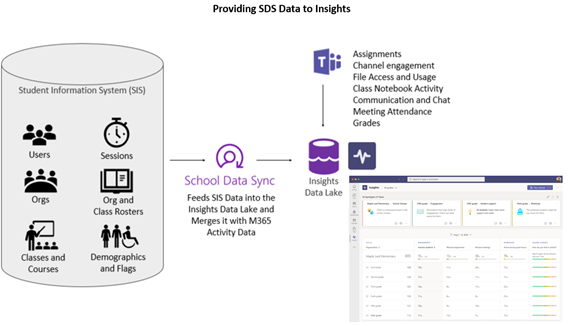

- SDS for Insights: Microsoft recently announced Education Insights Premium, which provides an enriched set of analytics capabilities and reports for organizational leaders within the tenant. To power the experience, SDS is introducing SDS for Insights, which merges the data ingested by SDS with the M365 activity data captured and used by the Insights app in Teams. The result is enhanced Insights with rich educational context. SDS also ingests organizational data like school memberships, which Education Insights Premium can use to give school leaders access to Insights for just their school, instead of Insights for the entire organization. Deploying SDS for Insights not only provides more data for Insights, it also sets the organizational boundaries for delegated access to a subset of Insights reports.

Release Planned: Available Now (public preview)

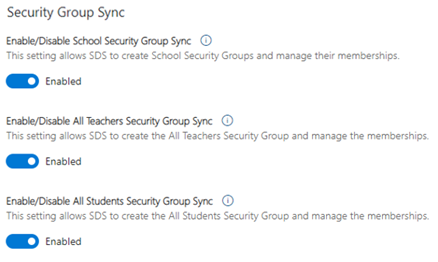

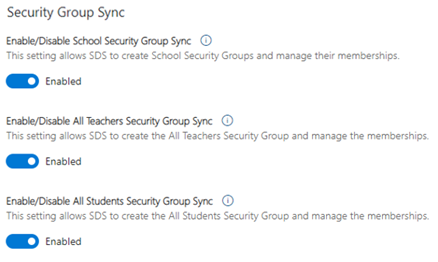

- v2.1 Security Group Sync: SDS is adding the ability to create dynamic Security Groups (SGs) when using the v2.1 CSV format. On the SDS settings page, an IT admin can easily enable the creation of Security Groups like All Teachers, All Students, and school-based SGs. Security Groups are broadly useful across M365, and can help IT admins manage users, devices, and applications across the platform. Find the settings below on the SDS settings page!

Release Planned: Available Now

- v2.1 Administrative Unit (AU) Sync: SDS is adding the ability to create dynamic School based AUs when using the v2.1 CSV format. New v2.1 sync profiles will create and manage these AUs by default, and the AUs will contain every student, teacher, and class within each school. AUs help IT admins enable delegated administrators with AU scoped roles, allowing them to manage subsets of the broader directory and tenant. See the collection of updates related to delegated administration in the M365 Admin Center, which SDS will help you deploy and utilize.

Release Planned: Available Now

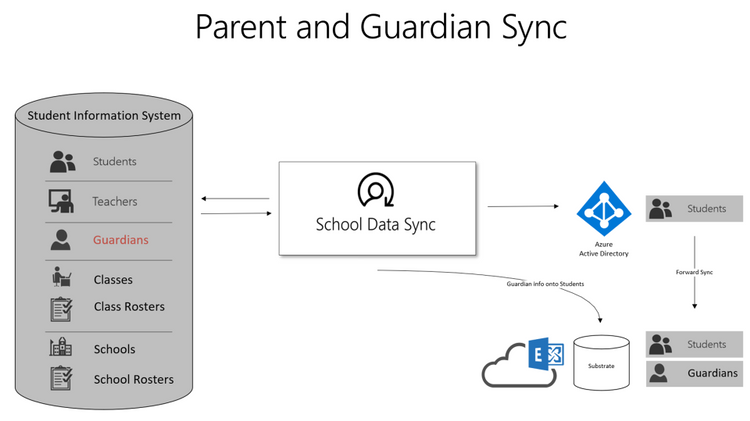

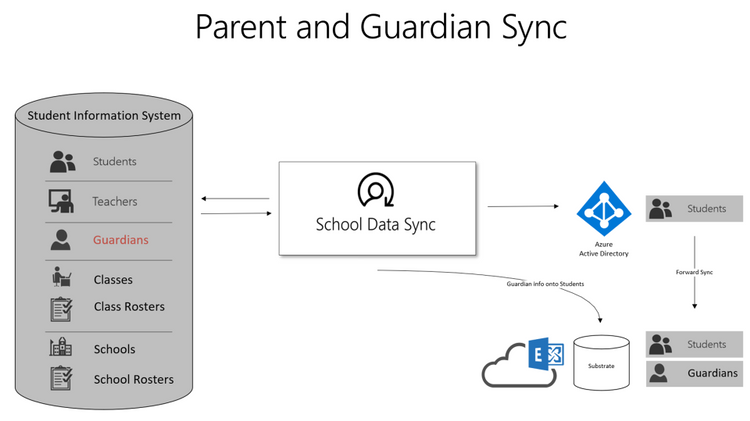

- v2.1 Parents & Guardians: SDS is adding the ability to create Parent and Guardian contacts when using the v2.1 CSV format. With the introduction of the new relationships.csv, IT admins can now optionally ingest Parent and Guardian data to provision each one as a contact within substrate. These contacts are then used within Teams for Education to send the Weekly Parent Email Digest. Also, stay tuned for new Parent and Guardian use cases coming soon to M365!

Release Planned: Available Now

- v2.1 writing more data to Azure AD: SDS is adding the ability to write more attributes onto synced users and groups in Azure AD. The new optional user extension attributes include grade and school associations which can be utilized by Azure AD Dynamic Groups provisioning engine and are also made available on MS Graph to complement app integration scenarios. New optional Groups attributes will include course title, grade, subject, and term/session details, which also may be used to enrich app integration scenarios and assist with group cleanup processes.

Release Planned: Available Now

- New OneRoster API Providers: SDS has just introduced a collection of new OneRoster API partners and providers, which simplify setup and configuration of SDS sync profiles because they do not require CSV export and import management. The newly released SDS OneRoster API providers include Arbor Education, Edge Learning, eSchooling, iSAMS, Rediker Software, and Skyward!

Release Planned: Available Now

- App-Only Sync Profile Management APIs: SDS provides a collection of Remote Management APIs on MS Graph within the broader Education API set. The APIs allow customers and partners to remotely manage and monitor their sync profiles. For Managed Service Providers, this previously required a persistent Global Admin account within each customer tenant. We are excited to announce support for app-only context within most remote management APIs, so that SDS sync profiles can be managed and monitored across multiple tenants from a single application or system, without a persistent Global Admin account in the tenant.

Release Planned: Available Now
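As a rough illustration of what "app-only context" means in practice, the sketch below builds (but does not send) an OAuth 2.0 client-credentials token request and a Graph request for sync profiles. The token endpoint and `.default` scope are the standard Microsoft identity platform values; the `PROFILES_URL` path, the function names, and the tenant and app identifiers are assumptions made for illustration and should be checked against the current Graph Education API reference.

```python
import urllib.parse
import urllib.request

GRAPH_SCOPE = "https://graph.microsoft.com/.default"
# Assumption: sync profiles are listed under the beta Education API at this path.
PROFILES_URL = "https://graph.microsoft.com/beta/education/synchronizationProfiles"


def build_token_request(tenant_id: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build an app-only (client credentials) token request without sending it."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": GRAPH_SCOPE,
    }).encode("ascii")
    return urllib.request.Request(url, data=body, method="POST")


def build_profiles_request(access_token: str) -> urllib.request.Request:
    """Build a GET request for sync profiles, authorized with a bearer token."""
    request = urllib.request.Request(PROFILES_URL, method="GET")
    request.add_header("Authorization", f"Bearer {access_token}")
    return request
```

A service provider could repeat the token request once per customer tenant ID and then call the same profile endpoint with each token, which is the multi-tenant pattern the announcement describes.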

- Sync Status API Update: SDS provides a Get Sync Status API on MS Graph to remotely monitor sync profile status. This API is critical for remote SDS management. The SDS team is introducing several enhancements to the Sync Status API so that it aligns with the sync status reported in the SDS application's user interface and gives deeper insight into the current state of sync.

Release Planned: Mid-July 2021

- Sync Performance Boost: When you configure SDS to start syncing data, each sync profile runs through a pre-sync validation process. During this process, SDS calls into Azure AD to check for valid/matching users before attempting to sync user data forward. This process can be time intensive and add several hours to first-time sync in large tenants. SDS will now pre-load Azure AD data into the SDS service, allowing pre-sync validation to run locally. The change reduces the validation checks from hours to just a few minutes, allowing the real sync to start and complete much faster.

Release Planned: Mid-July 2021

- Grade Sync adding new providers: Educators shouldn't have to copy and paste grades, ever. Grade Sync is the time-saving solution within Teams Assignments that automatically sends the grades you enter to your Student Information System (SIS) gradebook. Grade Sync now supports syncing to OneRoster v1.1 compliant providers, including these verified providers: Aequitas, eSchoolData, Infinite Campus, PowerSchool, and Skyward.

Release Planned: Summer 2021 (private preview)

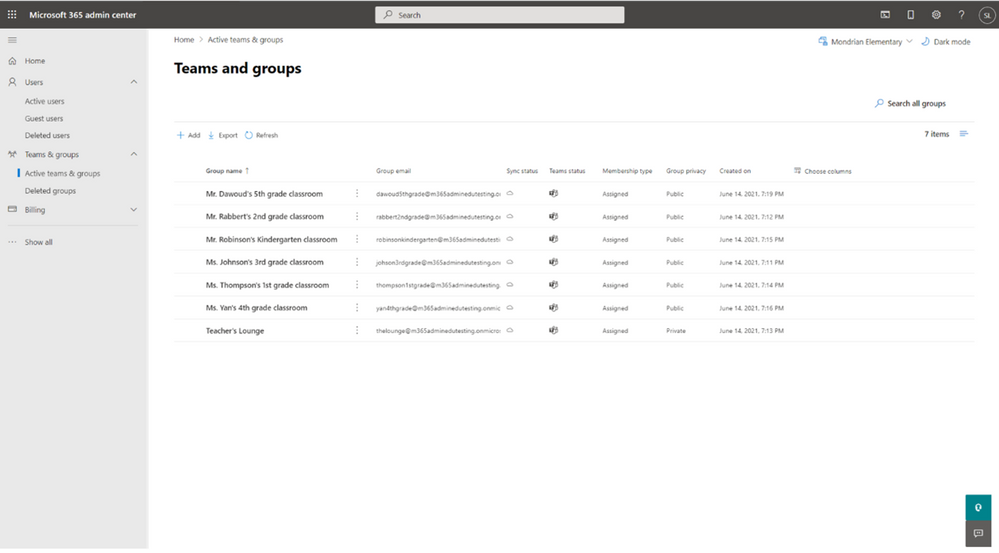

M365 Admin Center and School Level IT Administration

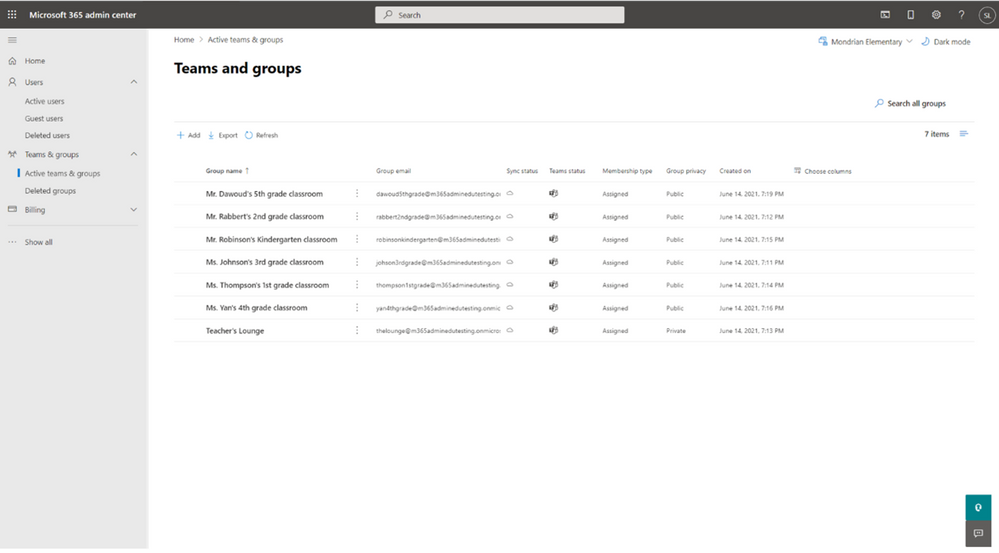

The Microsoft 365 Admin Center is releasing a brand-new experience for Education IT Admins for BTS! The new experience provides a single, centralized place for delegated school-level IT admins to perform and manage the most common administrative tasks across M365 workloads like Azure AD, Teams, Exchange, and SharePoint. This will help central IT teams and Global Administrators focus on higher privileged tasks within M365 while delegating operational tasks to others within the organization as appropriate. Using Administrative Unit (AU) scoping and RBAC role assignments, delegates will be empowered to manage the subset of users, groups, teams, and group-connected sites associated with their specific school, college, or subset of the broader tenant and directory.

- M365 Admin Center UX for School IT: The M365 Admin Center will provide a new streamlined experience for School level IT admins, which allows central IT teams and Global Administrators to delegate many common and repeatable administrative tasks to school level delegates. The solution ensures that school delegates can only manage the subset of users, groups, teams, sites, and objects associated with their school(s). The tasks and permissions within the solution span Azure AD, Teams, Exchange, and SharePoint:

- Manage Users, Attributes, Reset Passwords, and Assign Licenses

- Manage Groups, Teams, and Group Connected Sites

- Manage Email, Chat, External Sharing, and Privacy

- Manage Memberships and Access

- (Coming soon) Manage Teams channels, Security Groups, and add Groups and Teams to an AU

Release Planned: Mid-July (public preview)

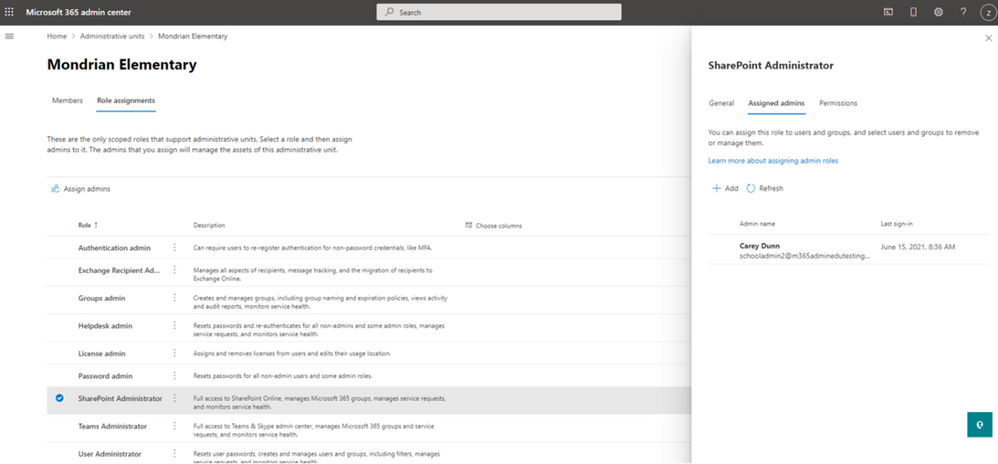

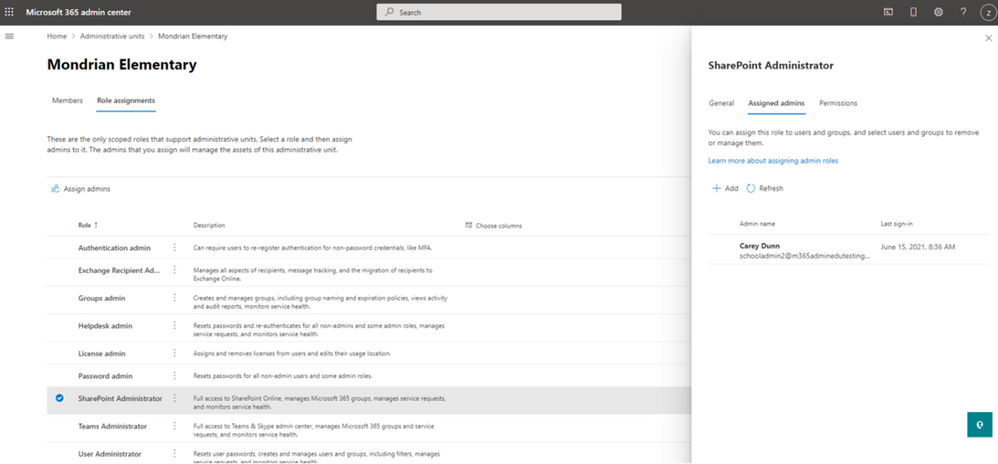

- Administrative Unit support across Teams, SharePoint, and Exchange: Administrative units provide a way to define the structure of an organization to assist with delegated management, for example one administrative unit for each school in a district. However, they have so far only supported management of users and Azure AD groups. To ensure delegated admins can manage across more Microsoft services, Microsoft extended administrative unit support to Teams, Microsoft Groups, and SharePoint sites. There are also three new scoped administration roles that can be assigned: Teams Admin, SharePoint Admin, and Exchange Recipient Admin. These roles can be assigned to an admin over a particular AU, which grants them rights to manage only the objects within that AU from the Microsoft 365 Admin Center. The AU scoped roles provide only a targeted subset of the role's broader functionality, while the un-scoped versions of these RBAC roles continue to allow IT admins to do much more within each service-specific admin portal.

Release Planned: Mid-July (public preview)

- Structure your tenant and delegate admin tasks across your organization: Administrative units let you subdivide your organization into a logical structure that meets your needs, and then assign specific administrators that can manage only the members of that unit. For example, you can use administrative units to delegate permissions to administrators so they can control access, manage users, and set policies only in the “School of Engineering,” instead of the entire university.

Global admins and privileged role admins can create and manage the membership of administrative units in the Microsoft 365 admin center today. We’ve also added 3 new scoped roles, increasing the total number of scoped role assignments a delegated admin can have to 9. We are going to continue adding functionality to the delegated admin experience, including support for bulk user membership assignments and dynamic user membership.

Release Planned: Mid-July (public preview)

- Delegated Password Reset with Password Writeback Support: One of the most common challenges for EDU IT Admins is the endless stream of student password reset requests. The M365 Admin Center recently released a new streamlined experience for Educators and School Leaders, allowing them to easily perform password resets for their students. To complete the story, the M365 Admin Center is adding support for delegated password reset on hybrid identities with password writeback enabled via AAD Connect Sync. Now every EDU organization can delegate password reset permissions to Educators and School Leaders!

Release Planned: Mid-July (public preview)

Duty of Care and Student Protection

Many EDU organizations deploy M365 tenants containing multiple schools, sometimes spanning hundreds or even thousands of schools within a single tenant. To protect the students within the tenant from bullying or unintended communications and content sharing, IT admins need a way to set boundaries within the tenant. M365 offers Information Barriers (IB) to provide boundaries for directory visibility, communication, and collaboration. We are pleased to announce a collection of new Information Barrier enhancements tailored for Education, planned for release this August.

- Users in Multiple IB Segments: Many EDU institutions have educators that teach across multiple schools, and to support school segmentation educators must be allowed to exist in multiple segments. In addition, Microsoft recommends IT create an All Staff IB segment and policy to facilitate broad communication and collaboration amongst all educators and staff within the tenant, while keeping students segmented to just the school(s) which they attend. To support these scenarios and provide EDU adequate flexibility in design and implementation, Information Barriers will support assigning users into multiple IB segments.

Release Planned: August 2021

- Support scale of 5K+ IB Segments per Tenant: Microsoft recommends deploying into a single tenant when the user population of a single organization is 1M users or less. To support 1M user tenants with school-by-school segmentation, Microsoft will begin supporting 5K segments per tenant. This scale increase should allow any organization with 1M users or less to deploy M365 in a single tenant model while also protecting the students and their data within the tenant.

Release Planned: August 2021

- Support for 4 IB Modes on Groups: User segments and IB policies will restrict people’s ability to see and find other users in a variety of people picker experiences like adding users to a chat and communicating via Teams. In addition, we are introducing IB modes on Microsoft 365 Groups to further strengthen IB compliance. The IB modes will evaluate group membership, access, and sharing controls.

- Open mode – no restrictions on the group or its content, anyone can be a member of the group.

- ownerModerated mode – the group membership is restricted to users from within the owner’s IB segments, and allows the owners to share content with other users within their segments.

- Implicit mode – the group membership is restricted to users within the group members' IB segments, and content is restricted to the group members.

- Explicit mode – the group membership is restricted to the users within the segments explicitly stamped on the group by IT admins. Content is restricted to users within the segments on the group and site access permissions.

Release Planned: August 2021
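To make the four modes easier to compare, here is a deliberately simplified sketch of the membership rule each one describes. It is not Microsoft's implementation: the `IBMode` enum, the `can_be_member` function, and the rule "a user qualifies if they share at least one segment with the relevant set" are all assumptions for illustration, and real IB policies also govern access and sharing.

```python
from enum import Enum


class IBMode(Enum):
    OPEN = "Open"
    OWNER_MODERATED = "ownerModerated"
    IMPLICIT = "Implicit"
    EXPLICIT = "Explicit"


def can_be_member(mode, user_segments, owner_segments=(), member_segments=(), group_segments=()):
    """Return True if a user may join a group under the simplified rule for `mode`."""
    user = set(user_segments)
    if mode is IBMode.OPEN:
        # No restrictions: anyone can be a member.
        return True
    if mode is IBMode.OWNER_MODERATED:
        # Restricted to users from within the owner's segments.
        return bool(user & set(owner_segments))
    if mode is IBMode.IMPLICIT:
        # Restricted to users within the existing members' segments.
        return bool(user & set(member_segments))
    if mode is IBMode.EXPLICIT:
        # Restricted to segments stamped on the group by IT admins.
        return bool(user & set(group_segments))
    raise ValueError(f"Unknown IB mode: {mode!r}")
```

For example, under this model a student in the hypothetical segment "School B" could not join an ownerModerated class group whose owner belongs only to "School A" and "All Staff".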

- Support IBs based on AU membership: Organizations that have deployed School Data Sync create an AU per school by default. To help schools onboard and adopt Information Barriers with ease, Microsoft will support creating IB segments based on the membership of an Administrative Unit. So, once you have set up SDS and created your school AUs, you can configure an IB policy per school and mirror each school AU membership with a corresponding school IB segment.

Release Planned: August 2021

- SDS provisioning of groups with IB Mode ownerModerated: School Data Sync will begin creating all Class Groups with IB mode ownerModerated, to prepare organizations for Information Barriers adoption. This setting will allow the educator (owner) to add and invite any other users from the segments which they are a member of, and share content with their segment members, putting them in control of their classes.

Release Planned: August 2021

- IBs + Address Book Policy in the same tenant: Information Barriers will be fully supported by Teams and SharePoint, but Outlook and OWA do not yet support IBs. To mitigate directory exposure and the ability to easily find and communicate with others outside of the intended segment(s), Microsoft will support configuring and running both Information Barriers and Address Book Policy within the same tenant, at the same time. This will allow IT to apply segmentation that spans all 3 core workloads: Teams, SharePoint, and Exchange.

Release Planned: August 2021

by Contributed | Jun 15, 2021 | Technology


Importing a BACPAC from the Azure portal fails if the database schema is large because of the number of tables, columns, indexes, and views:

"BadRequest ErrorMessage: The ImportExport operation with Request Id 'ed049000-1d74-46bf-9dd6-2375c5d079fa' failed due to 'An internal error occurred. Please contact Microsoft support and reference the server name, database name, and operation ID.'"

The SQL Server Management Studio "Import Data-tier Application" option fails after some time with an error indicating "not enough memory".

If the number of tables, indexes and views is large, import model preparation needs a lot of memory and time.

In this case, the solution is to use SqlPackage.exe with the "/p:Storage=File" option.

/p:Storage=({File|Memory}) Specifies how elements are stored when building the database model. For performance reasons the default is InMemory. For large databases, File backed storage is required.

Syntax

sqlpackage.exe /Action:Export /ssn:tcp:<servername>.database.windows.net,1433 /sdn:<source-database-name> /su:<username> /sp:<Password> /tf:.\export.bacpac /p:Storage=File /d:true /df:.\outputlog.log /p:LongRunningCommandTimeout=0

SqlPackage.exe /Action:Import /sf:.\bacpacfile.bacpac /tsn:<servername>.database.windows.net /tdn:<database-name> /p:Storage=File /tu:<username> /tp:********** /d:true /df:.\outputlog.log /p:LongRunningCommandTimeout=0

Note that some import steps can take a long time (hours), and it may look as if the process has hung. You can use the Windows "Resource Monitor" to watch SqlPackage.exe disk activity and confirm that it is still working.

Note: This solution also applies to export operations
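If you script these operations, it can help to build the argument list in one place so that the file-backed storage option is never forgotten. The sketch below is only an illustration: the function name `build_sqlpackage_args` and its parameters are hypothetical, and it uses only the switches already shown in the commands above.

```python
def build_sqlpackage_args(action, server, database, user, password, bacpac_path, log_path):
    """Return a SqlPackage argument list that always uses file-backed model storage."""
    if action == "Export":
        connection = [
            f"/ssn:tcp:{server},1433",  # source server
            f"/sdn:{database}",         # source database
            f"/su:{user}",
            f"/sp:{password}",
            f"/tf:{bacpac_path}",       # target .bacpac file
        ]
    elif action == "Import":
        connection = [
            f"/sf:{bacpac_path}",       # source .bacpac file
            f"/tsn:{server}",           # target server
            f"/tdn:{database}",         # target database
            f"/tu:{user}",
            f"/tp:{password}",
        ]
    else:
        raise ValueError("action must be 'Export' or 'Import'")
    return [
        "sqlpackage.exe",
        f"/Action:{action}",
        *connection,
        "/p:Storage=File",                 # build the model on disk, not in memory
        "/d:true",                         # diagnostics on
        f"/df:{log_path}",                 # diagnostics log file
        "/p:LongRunningCommandTimeout=0",  # no timeout for long-running commands
    ]
```

On a machine with SqlPackage installed, the resulting list could be passed to `subprocess.run(args, check=True)`.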

Regards, Paloma.-

by Contributed | Jun 15, 2021 | Technology


Background

Over the years, SAP BW system databases have grown substantially, which increases hardware cost when running the system on HANA. To reduce the size of the HANA hardware, customers can migrate their data to near-line storage (NLS). The adapter for SAP IQ as a near-line solution is delivered with the SAP BW system. Integrating SAP IQ makes it possible to separate frequently accessed data from infrequently accessed data, which reduces the resource demands on the BW system.

SAP IQ supports both a simplex and a multiplex architecture, but for the NLS solution only the simplex architecture is available/evaluated. Technically, SAP IQ high availability can be achieved using the IQ multiplex architecture, but the multiplex architecture does not meet our requirement for the NLS solution. On the other hand, SAP does not provide any features or procedures to run the SAP IQ simplex architecture in a high availability configuration. SAP IQ NLS high availability can still be achieved using custom solutions, which may require additional configuration or components such as Pacemaker, Microsoft Windows Server Failover Cluster, or shared disks.

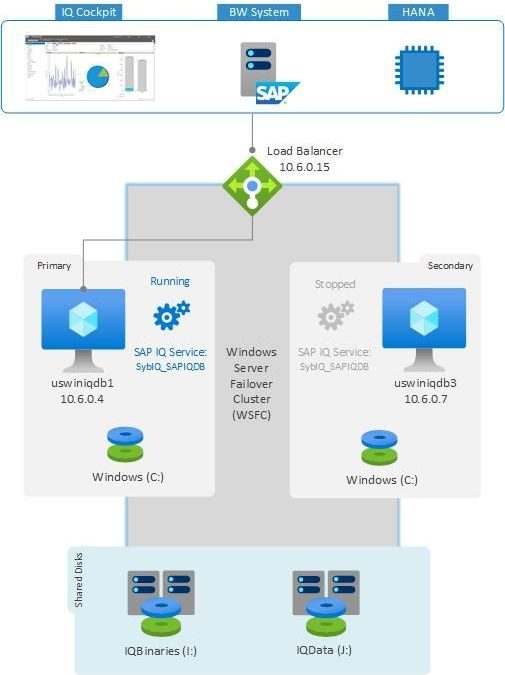

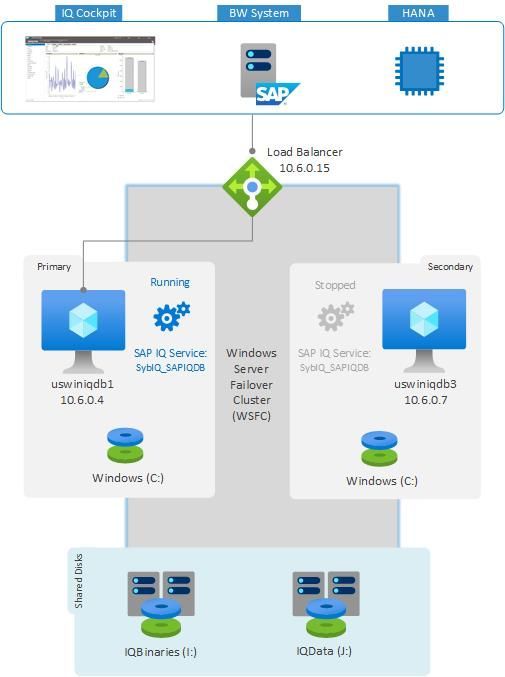

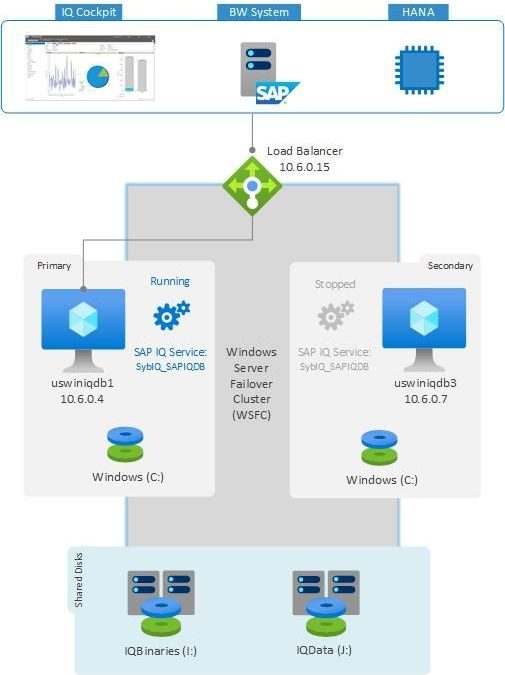

This blog discusses a SAP IQ NLS high availability solution using Azure shared disk on Windows Server 2016.

Solution Overview

This blog provides insights into a SAP IQ NLS high availability architecture on Windows Server. Windows Server Failover Cluster (WSFC) is the foundation of high availability for critical applications on Windows. A failover cluster is a group of 1+n independent servers (nodes) that work together to increase the availability of applications, databases, and services. If a node fails, WSFC calculates the number of failures that can occur while still maintaining a healthy cluster to provide applications and services.

Traditionally, for SAP NetWeaver running on a highly available WSFC, a SAP resource type is implemented using a dynamic library plug-in (saprc.dll), called the cluster resource DLL, which is loaded by the Windows cluster resource monitor process. For SAP IQ, there is no such dedicated resource type available. Instead, the Generic Service (clusres2.dll) resource type can be used to manage the SAP IQ database service. To understand more about failover cluster resources and resource types and what they do, see Windows Server 2016/2019 Cluster Resource / Resource Types.

In this example, two virtual machines (VMs) with Windows Server 2016 (uswiniqdb1 and uswiniqdb3) are configured with Windows Server Failover Cluster. Azure shared disk is a new feature of Azure managed disks that enables attaching an Azure managed disk to multiple VMs simultaneously. Attaching the managed disks IQBinaries (I:) and IQData (J:) to both VMs allows you to deploy a highly available clustered application on Azure. An Azure load balancer is required to provide a virtual IP address that is attached to the primary node. The Azure load balancer moves this virtual IP to the secondary node during failover. All client connections to SAP IQ go through this Azure load balancer.

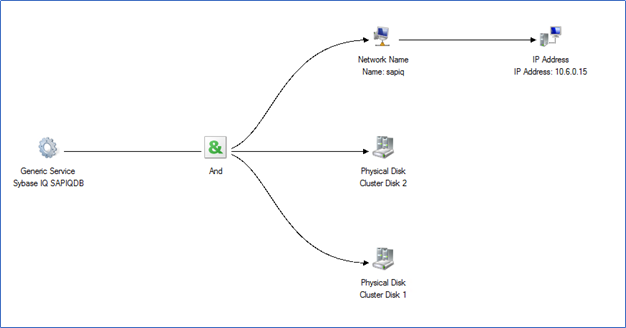

The SAP IQ database instance is registered and started as a Windows service. The role containing the SAP IQ service (SybIQ_SAPIQDB), the shared cluster disks (I: and J:), and the client access point (10.6.0.15) is configured for high availability. The cluster role monitors the SAP IQ service (SybIQ_SAPIQDB) and the shared disks. If any component in the cluster role crashes or the host is restarted, the cluster fails over the entire role to the secondary node and starts the SAP IQ service there.

CURRENT CLUSTER SETUP CONSTRAINT: The cluster setup using the Generic Service resource type will only fail over the resources in case of host failure or service crash. The cluster does not monitor the accessibility of the SAP IQ database. For example, the SAP IQ database service might be running while users or systems are unable to communicate with the database. In such a case, the cluster considers the service healthy and will not fail over the database. To achieve a failover in such scenarios, you need to design a highly available solution using the Generic Script resource type, which lets you control high availability for SAP IQ with a custom script. You can run SAP IQ as a scripted resource, which will stop, start, and monitor the SAP IQ database using the custom script. For more details, see Creating and Configuring a Generic Script Resource.
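The difference between "the service is running" and "the database is reachable" can be illustrated with a minimal reachability probe. The sketch below only shows the kind of check a custom monitoring script might perform; it is not a Generic Script resource itself, the function name `is_database_reachable` is hypothetical, and the host and port you would pass in are assumptions that depend on your SAP IQ configuration. A more thorough probe would log in and run a query.

```python
import socket


def is_database_reachable(host: str, port: int, timeout_seconds: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_seconds):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

A monitoring loop could call this against the cluster access point and report the resource as failed after several consecutive `False` results, rather than relying on the Windows service state alone.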

Infrastructure Preparation

This section describes the infrastructure provisioning for a SAP IQ deployment on Windows server with high availability. This blog is not intended to replace the standard SAP IQ deployment practices, rather it complements SAP’s documentation, which represent the primary resources for installation and deployment of SAP IQ NLS solution.

Refer to SAP Note 2780668 – SAP First Guidance – BW NLS Implementation with SAP IQ for information about SAP near-line storage (NLS) implementation for SAP BW, SAP BW on HANA, and SAP BW/4HANA.

Consideration for High Availability on Windows

Windows Server Failover Cluster

Windows Server Failover Cluster with Azure virtual machines (VMs) requires additional configuration steps. When you build a cluster, you need to set several IP addresses and virtual host names for SAP IQ database.

The Azure platform does not offer an option to configure virtual IP addresses, such as floating IP addresses. You need an alternate solution to set up a virtual IP address to reach the cluster resources in the cloud. Azure load balancer service provides an internal load balancer for Azure. With the internal load balancer, clients reach the cluster over the cluster virtual IP address.

Deploy the internal load balancer in the resource group that contains the cluster nodes. Configure all necessary port forwarding rules by using the probe ports of the internal load balancer. Clients can connect via the virtual host name. The DNS server resolves the cluster IP address, and the internal load balancer handles port forwarding to the active node of the cluster.

Azure shared disk

Azure shared disk is a feature of Azure managed disks that allows you to attach a managed disk to multiple VMs simultaneously. Azure shared disks do not natively offer a fully managed file system that can be accessed using the SMB/NFS protocol. Instead, you need to use a cluster manager like Windows Server Failover Cluster (WSFC) or Pacemaker to handle the cluster node communication and write locking.

A few things to consider when using Azure shared disk for SAP IQ high availability:

- Currently Azure shared disk is locally redundant, which means you will be operating Azure shared disk on one storage cluster. Azure managed disks with zone redundant storage (ZRS) are available in preview.

- Currently the only supported deployment is with Azure shared premium disk in availability set. Azure shared disk is not supported with Availability Zone deployments.

- Make sure to provision Azure premium disk with a minimum disk size as specified in Premium SSD ranges to be able to attach to the required number of VMs simultaneously.

- Azure shared Ultra disk is not supported for SAP workloads, as it does not support deployment in Availability set or zonal deployment.

For more details and limitations of Azure shared disk, review the Share an Azure managed disk documentation.

IMPORTANT: When deploying SAP IQ on Windows server failover cluster with Azure shared disk, be aware that your deployment will be operating with a single shared disk in one storage cluster. Your SAP IQ instance would be impacted, in case of issues with the storage cluster where the Azure shared disk is deployed.

With the above considerations and prerequisites in mind, below are the host names and IP addresses of the scenario presented in this blog.

| Host name role | Hostname | Static IP address | Availability Set |
| --- | --- | --- | --- |
| SAP IQ primary node in a cluster | uswiniqdb1 | 10.6.0.4 | sapiq-db-as |
| SAP IQ secondary node in a cluster | uswiniqdb3 | 10.6.0.7 | sapiq-db-as |
| Cluster network name | wiqclust | 10.6.0.20 | N/A |
| SAP IQ cluster network name | sapiq | 10.6.0.15 | N/A |

Virtual machine deployment

1. Create a resource group.

2. Create a virtual network.

3. Create an availability set.

   - Set the max update domain.

4. Create virtual machines uswiniqdb1 and uswiniqdb3.

   - Select the supported Windows image for SAP IQ from the Azure marketplace. In this example, Windows Server 2016 is used.

   - Select the availability set created in step 3.

Create Azure load balancer

To use a virtual IP address, an Azure load balancer is required. It is strongly recommended to use the Standard load balancer SKU.

IMPORTANT: Floating IP is not supported on a NIC secondary IP configuration in load-balancing scenarios. For details see Azure Load balancer Limitations. If you need an additional IP address for the VM, deploy a second NIC.

For a standard load balancer SKU, follow these configuration steps:

1. Frontend IP configuration

   - Static IP address 10.6.0.15

2. Backend pools

   - Add the virtual machines that should be part of the SAP IQ cluster. In this example, VMs uswiniqdb1 and uswiniqdb3.

3. Health probes (Probe port)

   - Protocol: TCP; Port: 62000; keep the default values for Interval and Unhealthy threshold.

4. Load balancing rules

   - Select the frontend IP address configured in step 1 and select HA ports.

   - Select the backend pool and health probe configured in steps 2 and 3.

   - Keep session persistence as none, and idle timeout as 30 minutes.

   - Enable floating IP.

Add registry entries on both cluster nodes

Azure load balancer may close connections, if the connections are idle for a period and exceed the idle timeout. To avoid the disruption for these connections, change the TCP/IP KeepAliveTime and KeepAliveInterval on both cluster nodes. The following registry entries must be changed on both cluster nodes:

| Path | Variable name | Variable type | Value | Documentation |
| --- | --- | --- | --- | --- |
| HKLM\SYSTEM\CurrentControlSet\Services\Tcpip\Parameters | KeepAliveTime | REG_DWORD (Decimal) | 120000 | KeepAliveTime |
| HKLM\SYSTEM\CurrentControlSet\Services\Tcpip\Parameters | KeepAliveInterval | REG_DWORD (Decimal) | 120000 | KeepAliveInterval |
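Because the same two values must be set on both cluster nodes, it can be convenient to generate the commands once and run them on each node. The sketch below only builds `reg add` command lines from the values in the table above; the function name `build_reg_add_commands` and the constant names are hypothetical, and the commands would need to be run in an elevated prompt on each node.

```python
TCPIP_PARAMETERS_KEY = r"HKLM\SYSTEM\CurrentControlSet\Services\Tcpip\Parameters"

# Values (decimal, in milliseconds) taken from the registry table above.
KEEPALIVE_SETTINGS = {
    "KeepAliveTime": 120000,
    "KeepAliveInterval": 120000,
}


def build_reg_add_commands(key=TCPIP_PARAMETERS_KEY, settings=KEEPALIVE_SETTINGS):
    """Return one 'reg add' argument list per registry value to set."""
    return [
        ["reg", "add", key, "/v", name, "/t", "REG_DWORD", "/d", str(value), "/f"]
        for name, value in settings.items()
    ]


if __name__ == "__main__":
    for command in build_reg_add_commands():
        print(" ".join(command))
```

Printing the commands rather than executing them keeps the sketch safe to run anywhere; on a Windows node each list could be passed to `subprocess.run`.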

Add Windows VMs to the domain

Assign static IP addresses to the VMs and then add both the virtual machines to the domain.

Configure Windows Server Failover Cluster

Installing failover clustering feature

You will first need to install the Failover-Clustering feature on each server that you want to include in the cluster. Once the feature installation is completed, reboot both cluster nodes.

# Hostname of windows cluster for SAP IQ

$SAPSID = "WIQ"

$ClusterNodes = ("uswiniqdb1","uswiniqdb3")

$ClusterName = $SAPSID.ToLower() + "clust"

# Install Windows features.

# After the feature installs, manually reboot both nodes

Invoke-Command $ClusterNodes {Install-WindowsFeature Failover-Clustering, FS-FileServer -IncludeAllSubFeature -IncludeManagementTools}

Test and configure Windows Server Failover Cluster

Before you can create a cluster, you need to run preliminary validation tests to confirm that your hardware and settings are compatible with failover clusters. These tests are grouped into categories such as inventory, system configuration, network, and storage. At this time, SAP IQ 16.x is not supported on Windows Server 2019.

# Hostnames of the Windows cluster for SAP IQ

$SAPSID = "WIQ"

$ClusterNodes = ("uswiniqdb1","uswiniqdb3")

$ClusterName = $SAPSID.ToLower() + "clust"

# IP address for cluster network name is needed ONLY on Windows Server 2016 cluster

$ClusterStaticIPAddress = "10.6.0.20"

# Test cluster

Test-Cluster -Node $ClusterNodes -Verbose

$ComputerInfo = Get-ComputerInfo

$WindowsVersion = $ComputerInfo.WindowsProductName

if($WindowsVersion -eq "Windows Server 2019 Datacenter"){

write-host "Configuring Windows Failover Cluster on Windows Server 2019 Datacenter..."

New-Cluster -Name $ClusterName -Node $ClusterNodes -Verbose

}elseif($WindowsVersion -eq "Windows Server 2016 Datacenter"){

write-host "Configuring Windows Failover Cluster on Windows Server 2016 Datacenter..."

New-Cluster -Name $ClusterName -Node $ClusterNodes -StaticAddress $ClusterStaticIPAddress -Verbose

}else{

Write-Error "Not supported Windows version!"

}

Configure cluster cloud quorum

If you are using Windows Server 2016 or higher, we recommend configuring an Azure Cloud Witness as the cluster quorum witness. A Cloud Witness is a type of failover cluster quorum witness that uses Microsoft Azure to provide a vote on cluster quorum.

To set up a Cloud Witness as a quorum witness for your cluster, complete the following steps:

- Create an Azure Storage Account to use as a Cloud Witness.

- Configure the Cloud Witness as a quorum witness for your cluster. Run the following command:

$AzureStorageAccountName = "clustcloudwitness"

Set-ClusterQuorum -CloudWitness -AccountName $AzureStorageAccountName -AccessKey <YourAzureStorageAccessKey> -Verbose

To get the access key for the command above, see the article on viewing and copying storage access keys for your Azure storage account.

NOTE: Azure Cloud Witness is not supported on Windows Server 2012.
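As an alternative to copying the key from the portal, the access key can be read with the Az PowerShell module. This is a sketch under assumptions: the Az.Storage module is available, you are signed in, and the storage account lives in the resource group named below (the article does not say which resource group holds it).

```powershell
# Assumption: the storage account is in this resource group; adjust as needed.
$ResourceGroupName = "win-sapiq"
$AzureStorageAccountName = "clustcloudwitness"

# Get-AzStorageAccountKey returns the account keys; take the value of the first one.
$keys = Get-AzStorageAccountKey -ResourceGroupName $ResourceGroupName -Name $AzureStorageAccountName
$AccessKey = $keys[0].Value

# Run this part on a cluster node, passing the key retrieved above.
Set-ClusterQuorum -CloudWitness -AccountName $AzureStorageAccountName -AccessKey $AccessKey -Verbose
```

If the Az module is not installed on the cluster nodes, retrieve the key in Azure Cloud Shell and pass it to Set-ClusterQuorum on the node.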

Tuning Windows Server Failover Cluster thresholds

After you successfully install the Windows Server Failover Cluster, adjust some thresholds so that they are suitable for clusters deployed in Azure. The parameters to be changed are documented in Tuning failover cluster network thresholds. If the two VMs that make up the Windows cluster configuration for SAP IQ are in the same subnet, change the following parameters to these values:

- SameSubnetDelay = 2000

- SameSubnetThreshold = 15

- RouteHistoryLength = 30

PS C:\> (get-cluster).SameSubnetThreshold = 15

PS C:\> (get-cluster).SameSubnetDelay = 2000

PS C:\> (get-cluster).RouteHistoryLength = 30

PS C:\> get-cluster | fl *subnet*

# CrossSubnetDelay : 1000

# CrossSubnetThreshold : 20

# PlumbAllCrossSubnetRoutes : 0

# SameSubnetDelay : 2000

# SameSubnetThreshold : 15

PS C:\> get-cluster | fl *RouteHistory*

# RouteHistoryLength : 30
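To see what these values mean in practice: the cluster sends a heartbeat every SameSubnetDelay milliseconds and treats a node as down after SameSubnetThreshold consecutive heartbeats are missed. A small arithmetic sketch, with variable names chosen here for illustration:

```powershell
# Values from the settings above
$SameSubnetDelay = 2000       # milliseconds between heartbeats
$SameSubnetThreshold = 15     # missed heartbeats tolerated

# Tolerance = delay x threshold, converted to seconds
$ToleranceSeconds = ($SameSubnetDelay * $SameSubnetThreshold) / 1000
"Same-subnet heartbeat tolerance: $ToleranceSeconds seconds"
```

With the values above, the cluster tolerates about 30 seconds of missed heartbeats before it acts.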

Configure Azure shared disk

Before provisioning an Azure shared disk, review the Azure shared disk section above.

Create and attach Azure shared disk

Run these commands in Azure Cloud Shell (PowerShell). You will need to adjust the values for your resource group, Azure region, SAPSID, and so on.

# Create Azure Shared Disk

$ResourceGroupName = "win-sapiq"

$location = "westus2"

$SAPSID = "WIQ"

$DiskSizeInGB = 512

$DiskName1 = "$($SAPSID)IQBinaries"

$DiskName2 = "$($SAPSID)IQData"

# With parameter '-MaxSharesCount', we define the maximum number of cluster nodes that can attach the shared disk

$NumberOfWindowsClusterNodes = 2

$diskConfig = New-AzDiskConfig -Location $location -SkuName Premium_LRS -CreateOption Empty -DiskSizeGB $DiskSizeInGB -MaxSharesCount $NumberOfWindowsClusterNodes

# Keep each disk in its own variable so that both can be attached later

$dataDisk1 = New-AzDisk -ResourceGroupName $ResourceGroupName -DiskName $DiskName1 -Disk $diskConfig

$dataDisk2 = New-AzDisk -ResourceGroupName $ResourceGroupName -DiskName $DiskName2 -Disk $diskConfig

# Attach the disks to the cluster VMs

# SAP IQ Cluster VM1

$IQClusterVM1 = "uswiniqdb1"

# SAP IQ Cluster VM2

$IQClusterVM2 = "uswiniqdb3"

# Add the Azure shared disks to cluster node 1

$vm = Get-AzVM -ResourceGroupName $ResourceGroupName -Name $IQClusterVM1

$vm = Add-AzVMDataDisk -VM $vm -Name $DiskName1 -CreateOption Attach -ManagedDiskId $dataDisk1.Id -Lun 0

$vm = Add-AzVMDataDisk -VM $vm -Name $DiskName2 -CreateOption Attach -ManagedDiskId $dataDisk2.Id -Lun 1

Update-AzVm -VM $vm -ResourceGroupName $ResourceGroupName -Verbose

# Add the Azure shared disks to cluster node 2

$vm = Get-AzVM -ResourceGroupName $ResourceGroupName -Name $IQClusterVM2

$vm = Add-AzVMDataDisk -VM $vm -Name $DiskName1 -CreateOption Attach -ManagedDiskId $dataDisk1.Id -Lun 0

$vm = Add-AzVMDataDisk -VM $vm -Name $DiskName2 -CreateOption Attach -ManagedDiskId $dataDisk2.Id -Lun 1

Update-AzVm -VM $vm -ResourceGroupName $ResourceGroupName -Verbose

Format Azure shared disk

- Get the disk number. Run these PowerShell commands on one of the cluster nodes:

Get-Disk | Where-Object PartitionStyle -Eq "RAW" | Format-Table -AutoSize

# Example output

# Number Friendly Name Serial Number HealthStatus OperationalStatus Total Size Partition Style

# ------ ------------- ------------- ------------ ----------------- ---------- ---------------

# 2 Msft Virtual Disk Healthy Online 512 GB RAW

# 3 Msft Virtual Disk Healthy Online 512 GB RAW

- Format the disk.

# Format the SAP IQ disks number 2 and 3, with drive letters I and J

$SAPSID = "WIQ"

$DiskNumber1 = 2

$DiskNumber2 = 3

$DriveLetter1 = "I"

$DriveLetter2 = "J"

$DiskLabel1 = "IQBinaries"

$DiskLabel2 = "IQData"

Get-Disk -Number $DiskNumber1 | Where-Object PartitionStyle -Eq "RAW" | Initialize-Disk -PartitionStyle GPT -PassThru | New-Partition -DriveLetter $DriveLetter1 -UseMaximumSize | Format-Volume -FileSystem ReFS -NewFileSystemLabel $DiskLabel1 -Force -Verbose

# Example output

# DriveLetter FileSystemLabel FileSystem DriveType HealthStatus OperationalStatus SizeRemaining Size

# ----------- --------------- ---------- --------- ------------ ----------------- ------------- ----

# I IQBinaries ReFS Fixed Healthy OK 504.98 GB 511.81 GB

Get-Disk -Number $DiskNumber2 | Where-Object PartitionStyle -Eq "RAW" | Initialize-Disk -PartitionStyle GPT -PassThru | New-Partition -DriveLetter $DriveLetter2 -UseMaximumSize | Format-Volume -FileSystem ReFS -NewFileSystemLabel $DiskLabel2 -Force -Verbose

# Example output

# DriveLetter FileSystemLabel FileSystem DriveType HealthStatus OperationalStatus SizeRemaining Size

# ----------- --------------- ---------- --------- ------------ ----------------- ------------- ----

# J IQData ReFS Fixed Healthy OK 504.98 GB 511.81 GB

- Verify that the disk is now visible as a cluster disk.

Get-ClusterAvailableDisk -All

# Example output

# Cluster : wiqclust

# Id : 7e5e30f6-c84e-4a23-ac2a-9909bc03eee2

# Name : Cluster Disk 1

# Number : 2

# Size : 549755813888

# Partitions : {\\?\GLOBALROOT\Device\Harddisk2\Partition2}

- Register the disk in the cluster.

Get-ClusterAvailableDisk -All | Add-ClusterDisk

# Example output

# Name State OwnerGroup ResourceType

# ---- ----- ---------- ------------

# Cluster Disk 1 Online Available Storage Physical Disk

# Cluster Disk 2 Online Available Storage Physical Disk

SAP IQ Configuration on Windows cluster

SAP IQ installation and configuration

This section does not cover the end-to-end installation procedure and database configuration for SAP IQ. For the most up-to-date information, follow SAP’s documentation for SAP IQ on Windows.

In this example, we installed SAP IQ 16.1 SP04 PL04 on Windows Server 2016 with 8 vCPUs and 64 GB RAM. The SAP IQ installation path is I:\SAPIQ. Using the utility database, we created a new database, SAPIQDB, which will be used for the NLS configuration.

To start the SAPIQDB database, we created a configuration file, SAPIQDB.cfg, and placed it in the database directory (J:SAPIQdatadb). We start the SAPIQDB database using the configuration file (SAPIQDB.cfg) and the database file (SAPIQDB.db).

I:SAPIQIQ-16_1Bin64> start_iq.exe @J:SAPIQdatadbSAPIQDB.cfg J:SAPIQdatadbSAPIQDB.db

Register SAP IQ as Windows service on primary node

Run SAP IQ as a Windows service to start the server. Services run in the background as long as Windows is running. We will configure high availability for SAP IQ using the “Generic Service” role in the Windows cluster. The cluster software starts the service and then periodically queries the Service Controller to determine whether the service appears to be running. If so, it is presumed to be online and is not restarted or failed over. For more information, see Creating a New Windows Service.

Perform the steps below on the node where your Azure shared disk is mounted. In this example, that is uswiniqdb1. The same steps are repeated on uswiniqdb3 later.

- Start SAP IQ Service Manager. On the server where you have performed SAP installation, it can be found under Start > SAP > SAP IQ Service Manager.

- Choose Create a New Service.

- Name the new service. In this example, we named it SAPIQDB.

- Add the appropriate start-up parameters.

Include the full path to the database file. The server cannot start without a valid database path name.

Service Name: SAPIQDB

Startup Parameters: @J:SAPIQdatadbSAPIQDB.cfg J:SAPIQdatadbSAPIQDB.db

- Click Apply.

- Restart Windows. On restart, Azure shared disk will move to another node (uswiniqdb3).

Because Windows service manager reads environmental variables only at system startup, you must restart Windows after you configure SAP IQ as a Windows service.

- In services.msc, verify that the “Sybase IQ SAPIQDB” service has been created.

Set environment variables in secondary server

SAP IQ is installed on the primary node, which means all environment variables are set on the primary node. We do not install SAP IQ on the secondary server. Instead, it is used as a failover server that takes over all the cluster disks and resources during a failure. For failover to happen smoothly, the secondary server needs the same environment variables as the primary server.

On the primary node (uswiniqdb1), open Control Panel > System and Security > System > Advanced system settings > Environment Variables.

Under System Variables, the following SAP IQ variables are set in this example. Check all SAP IQ variables on your own system.

| System Variable | Value |
|---|---|
| COCKPIT_JAVA_HOME | I:\SAPIQ\Shared\SAPJRE-8_1_062_64BIT |
| INCLUDE | I:\SAPIQ\OCS-16_0\include; |
| IQDIR16 | I:\SAPIQ\IQ-16_1 |
| IQLOGDIR16 | C:\ProgramData\SAPIQ\logfiles |
| LIB | I:\SAPIQ\OCS-16_0\lib; |
| Path | I:\SAPIQ\COCKPIT-4\bin;I:\SAPIQ\OCS-16_0\lib3p64;I:\SAPIQ\OCS-16_0\lib3p;I:\SAPIQ\OCS-16_0\dll;I:\SAPIQ\OCS-16_0\bin;I:\SAPIQ\IQ-16_1\Bin64;I:\SAPIQ\IQ-16_1\Bin32 |
| SAP_JRE8 | I:\SAPIQ\Shared\SAPJRE-8_1_062_64BIT |
| SAP_JRE8_32 | I:\SAPIQ\Shared\SAPJRE-8_1_062_32BIT |
| SAP_JRE8_64 | I:\SAPIQ\Shared\SAPJRE-8_1_062_64BIT |
| SYBASE | I:\SAPIQ |
| SYBASE_OCS | OCS-16_0 |
| SYBROOT | I:\SAPIQ |

Log in to the secondary server (uswiniqdb3) and configure all the environment variables that are set on the primary server (uswiniqdb1). You can use the PowerShell commands below, or configure each variable manually on the secondary system.

[Environment]::SetEnvironmentVariable('COCKPIT_JAVA_HOME','I:\SAPIQ\Shared\SAPJRE-8_1_062_64BIT',[System.EnvironmentVariableTarget]::Machine)

[Environment]::SetEnvironmentVariable('INCLUDE','I:\SAPIQ\OCS-16_0\include;',[System.EnvironmentVariableTarget]::Machine)

[Environment]::SetEnvironmentVariable('IQDIR16','I:\SAPIQ\IQ-16_1',[System.EnvironmentVariableTarget]::Machine)

[Environment]::SetEnvironmentVariable('IQLOGDIR16','C:\ProgramData\SAPIQ\logfiles',[System.EnvironmentVariableTarget]::Machine)

[Environment]::SetEnvironmentVariable('LIB','I:\SAPIQ\OCS-16_0\lib;',[System.EnvironmentVariableTarget]::Machine)

# Append to the existing machine-level PATH rather than to the combined process PATH

[Environment]::SetEnvironmentVariable('PATH', [Environment]::GetEnvironmentVariable('PATH',[System.EnvironmentVariableTarget]::Machine) + ';I:\SAPIQ\COCKPIT-4\bin;I:\SAPIQ\OCS-16_0\lib3p64;I:\SAPIQ\OCS-16_0\lib3p;I:\SAPIQ\OCS-16_0\dll;I:\SAPIQ\OCS-16_0\bin;I:\SAPIQ\IQ-16_1\Bin64;I:\SAPIQ\IQ-16_1\Bin32',[System.EnvironmentVariableTarget]::Machine)

[Environment]::SetEnvironmentVariable('SAP_JRE8','I:\SAPIQ\Shared\SAPJRE-8_1_062_64BIT',[System.EnvironmentVariableTarget]::Machine)

[Environment]::SetEnvironmentVariable('SAP_JRE8_32','I:\SAPIQ\Shared\SAPJRE-8_1_062_32BIT',[System.EnvironmentVariableTarget]::Machine)

[Environment]::SetEnvironmentVariable('SAP_JRE8_64','I:\SAPIQ\Shared\SAPJRE-8_1_062_64BIT',[System.EnvironmentVariableTarget]::Machine)

[Environment]::SetEnvironmentVariable('SYBASE','I:\SAPIQ',[System.EnvironmentVariableTarget]::Machine)

[Environment]::SetEnvironmentVariable('SYBASE_OCS','OCS-16_0',[System.EnvironmentVariableTarget]::Machine)

[Environment]::SetEnvironmentVariable('SYBROOT','I:\SAPIQ',[System.EnvironmentVariableTarget]::Machine)

After the environment variables are configured on the secondary server (uswiniqdb3), restart the secondary server for the environment variables to take effect.
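After the restart, a quick check on the secondary server can confirm that every variable is present at machine level. The following is a minimal sketch; the list of names is taken from the table in this example and should be adjusted to match your own system.

```powershell
# Variable names from this example; adjust the list to your installation.
$names = 'COCKPIT_JAVA_HOME','INCLUDE','IQDIR16','IQLOGDIR16','LIB',
         'SAP_JRE8','SAP_JRE8_32','SAP_JRE8_64','SYBASE','SYBASE_OCS','SYBROOT'

foreach ($name in $names) {
    # Read the machine-level value, not the value cached in the current session
    $value = [Environment]::GetEnvironmentVariable($name, [System.EnvironmentVariableTarget]::Machine)
    if ([string]::IsNullOrEmpty($value)) {
        Write-Warning "$name is not set at machine level"
    } else {
        "{0} = {1}" -f $name, $value
    }
}
```

Running the same loop on the primary node gives you a list to compare against.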

Register SAP IQ as Windows service on secondary server

On the secondary node, we need to register SAP IQ as a Windows service, because our cluster role is configured for the SAP IQ service. During a failover, the Azure shared disks move to the secondary node and the cluster starts the SAP IQ service there. The service will only start if the SAP IQ Windows service is registered on the secondary node as well.

To register the SAP IQ service on the secondary node, follow these steps:

- Stop the SAP IQ service on the primary node (uswiniqdb1).

- If your Azure shared disks are currently on the primary node (uswiniqdb1), move them to the secondary node. Run the PowerShell commands below:

PS C:\> Get-ClusterGroup

# Name OwnerNode State

# ---- --------- -----

# Available Storage uswiniqdb1 Online

# Cluster Group uswiniqdb3 Online

PS C:\> Get-ClusterGroup -Name 'Available Storage' | Get-ClusterResource

# Name State OwnerGroup ResourceType

# ---- ----- ---------- ------------

# Cluster Disk 1 Online Available Storage Physical Disk

# Cluster Disk 2 Online Available Storage Physical Disk

PS C:\> Move-ClusterGroup -Name 'Available Storage' -Node uswiniqdb3

# Name OwnerNode State

# ---- --------- -----

# Available Storage uswiniqdb3 Online

- Start the SAP IQ Service Manager. On the secondary node, the SAP IQ Service Manager is not visible in the Start menu. Instead, navigate to the path I:\SAPIQ\IQ-16_1\Bin64 and open SybaseIQservice16.exe.

- Choose Create a New Service.

- Name the new service. In this example, we named it SAPIQDB.

- Add the appropriate start-up parameters.

Include the full path to the database file. The server cannot start without a valid database path name.

Service Name: SAPIQDB

Startup Parameters: @J:SAPIQdatadbSAPIQDB.cfg J:SAPIQdatadbSAPIQDB.db

- Click Apply.

- Restart Windows. On restart, the Azure shared disks will move to the other node (uswiniqdb1 in this example).

Because Windows service manager reads environment variables only at system startup, you must restart Windows after you configure SAP IQ as a Windows service.

- In services.msc, verify that the “Sybase IQ SAPIQDB” service has been created.

After registering the service on each node, start the ‘Sybase IQ SAPIQDB’ service on that node to confirm that it starts without any issues.

Configure SAP IQ role – Windows failover cluster

Before running the commands below, make sure you have configured the cluster as described in the “Configure Windows Server Failover Cluster” section above.

# Check where your Azure shared disk is running

PS C:\> Get-ClusterGroup

# Name OwnerNode State

# ---- --------- -----

# Available Storage uswiniqdb1 Online

# Cluster Group uswiniqdb3 Online

# Check the cluster disk name of your Azure shared disk

PS C:\> Get-ClusterGroup -Name 'Available Storage' | Get-ClusterResource

# Name State OwnerGroup ResourceType

# ---- ----- ---------- ------------

# Cluster Disk 1 Online Available Storage Physical Disk

# Cluster Disk 2 Online Available Storage Physical Disk

# Command to get the service name for the "Sybase IQ SAPIQDB" service

PS C:\> Get-Service 'Sybase IQ SAPIQDB'

# Status Name DisplayName

# ------ ---- -----------

# Running SybIQ_SAPIQDB Sybase IQ SAPIQDB

# Command to create generic service role

# StaticAddress is the IP address of your Internal Load Balancer

PS C:\> Add-ClusterGenericServiceRole -ServiceName "SybIQ_SAPIQDB" -Name "sapiq" -StaticAddress "10.6.0.15" -Storage "Cluster Disk 1","Cluster Disk 2"

# Name OwnerNode State

# ---- --------- -----

# sapiq uswiniqdb1 Online

The internal load balancer IP address 10.6.0.15 is not reachable until a probe port is set on the cluster IP address resource. Run the commands below to set probe port 62000 for IP address 10.6.0.15.

PS C:\> Get-ClusterGroup

# Name OwnerNode State

# ---- --------- -----

# Available Storage uswiniqdb1 Offline

# Cluster Group uswiniqdb3 Online

# sapiq uswiniqdb1 Online

PS C:\> Get-ClusterGroup -Name 'sapiq' | Get-ClusterResource

# Name State OwnerGroup ResourceType

# ---- ----- ---------- ------------

# Cluster Disk 1 Online sapiq Physical Disk

# Cluster Disk 2 Online sapiq Physical Disk

# IP Address 10.6.0.15 Online sapiq IP Address

# sapiq Online sapiq Network Name

# Sybase IQ SAPIQDB Online sapiq Generic Service

PS C:\> Get-ClusterResource 'IP Address 10.6.0.15' | Get-ClusterParameter

# Object Name Value Type

# ------ ---- ----- ----

# IP Address 10.6.0.15 Network Cluster Network 1 String

# IP Address 10.6.0.15 Address 10.6.0.15 String

# IP Address 10.6.0.15 SubnetMask 255.255.255.0 String

# IP Address 10.6.0.15 EnableNetBIOS 0 UInt32

# IP Address 10.6.0.15 OverrideAddressMatch 0 UInt32

# IP Address 10.6.0.15 EnableDhcp 0 UInt32

# IP Address 10.6.0.15 ProbePort 0 UInt32

# IP Address 10.6.0.15 ProbeFailureThreshold 0 UInt32

# IP Address 10.6.0.15 LeaseObtainedTime 1/1/0001 12:00:00 AM DateTime

# IP Address 10.6.0.15 LeaseExpiresTime 1/1/0001 12:00:00 AM DateTime

# IP Address 10.6.0.15 DhcpServer 255.255.255.255 String

# IP Address 10.6.0.15 DhcpAddress 0.0.0.0 String

# IP Address 10.6.0.15 DhcpSubnetMask 255.0.0.0 String

PS C:\> Get-ClusterResource 'IP Address 10.6.0.15' | Set-ClusterParameter -Name ProbePort -Value 62000

# WARNING: The properties were stored, but not all changes will take effect until IP Address 10.6.0.15 is taken offline and then online again.

PS C:\> Stop-ClusterGroup -Name 'sapiq'

# Name OwnerNode State

# ---- --------- -----

# sapiq uswiniqdb1 Offline

PS C:\> Start-ClusterGroup -Name 'sapiq'

# Name OwnerNode State

# ---- --------- -----

# sapiq uswiniqdb1 Online

Bind the internal load balancer IP address (10.6.0.15) to the hostname sapiq.internal.contoso.net in DNS.

C:\>ping sapiq.internal.contoso.net

Pinging sapiq.internal.contoso.net [10.6.0.15] with 32 bytes of data:

Reply from 10.6.0.15: bytes=32 time=1ms TTL=128

Reply from 10.6.0.15: bytes=32 time=1ms TTL=128

Reply from 10.6.0.15: bytes=32 time<1ms TTL=128

Reply from 10.6.0.15: bytes=32 time<1ms TTL=128

Use the hostname sapiq.internal.contoso.net in all client connection strings.

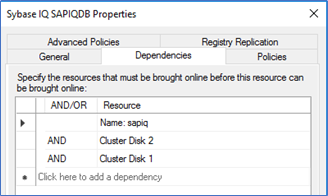

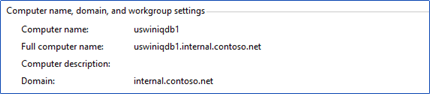

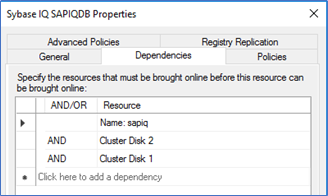

The “Sybase IQ SAPIQDB” service must start only after the resources “sapiq”, “Cluster Disk 1”, and “Cluster Disk 2” are online. You can confirm this in the dependency report for the role.

If the dependency is not set, open the properties of the “Sybase IQ SAPIQDB” resource and set the dependency there.
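The same dependency can also be inspected and set from PowerShell with the FailoverClusters module. This is a sketch, assuming the resource names match the ones shown in the Get-ClusterResource output of this example.

```powershell
# Show the current dependency expression of the generic service resource
Get-ClusterResourceDependency -Resource 'Sybase IQ SAPIQDB'

# Make the service depend on the network name and on both cluster disks.
# Resource names are the ones from this example; adjust them to your cluster.
Set-ClusterResourceDependency -Resource 'Sybase IQ SAPIQDB' -Dependency '[sapiq] and [Cluster Disk 1] and [Cluster Disk 2]'
```

Each resource name in the dependency expression is wrapped in square brackets, and the names are joined with "and" so that all three must be online first.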

Test SAP IQ cluster

For this failover test, our SAP IQ instance is running on node A (uswiniqdb1). You can use either Failover Cluster Manager or PowerShell to initiate a failover. In this example, node A represents uswiniqdb1 and node B represents uswiniqdb3.

- Move the cluster group to node B.

Get-ClusterGroup 'sapiq'

# Name OwnerNode State

# ---- --------- -----

# sapiq uswiniqdb1 Online

Move-ClusterGroup -Name 'sapiq'

# Name OwnerNode State

# ---- --------- -----

# sapiq uswiniqdb3 Online

Get-ClusterGroup 'sapiq' | Get-ClusterResource

# Name State OwnerGroup ResourceType

# ---- ----- ---------- ------------

# Cluster Disk 1 Online sapiq Physical Disk

# Cluster Disk 2 Online sapiq Physical Disk

# IP Address 10.6.0.15 Online sapiq IP Address

# sapiq Online sapiq Network Name

# Sybase IQ SAPIQDB Online sapiq Generic Service

C:\> hostname

uswiniqdb3

C:\> tasklist /FI "IMAGENAME eq iqsrv16.exe"

# Image Name PID Session Name Session# Mem Usage

# ========================= ======== ================ =========== ============

# iqsrv16.exe 16188 Services 0 798,340 K

- Kill ‘iqsrv16.exe’ process running on node B.

PS C:\> hostname

uswiniqdb3

PS C:\> tasklist /FI "IMAGENAME eq iqsrv16.exe"

# Image Name PID Session Name Session# Mem Usage

# ========================= ======== ================ =========== ============

# iqsrv16.exe 16188 Services 0 798,340 K

PS C:\> taskkill /F /PID 16188

SUCCESS: The process with PID 16188 has been terminated.

PS C:\> tasklist /FI "IMAGENAME eq iqsrv16.exe"

INFO: No tasks are running which match the specified criteria.

PS C:\> tasklist /FI "IMAGENAME eq iqsrv16.exe"

# Image Name PID Session Name Session# Mem Usage

# ========================= ======== ================ =========== ============

# iqsrv16.exe 6956 Services 0 740,624 K

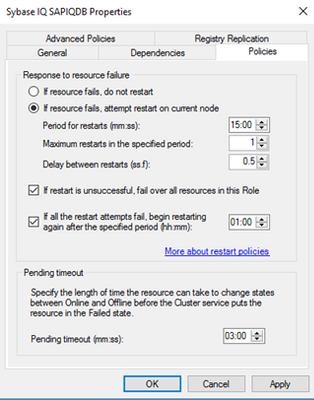

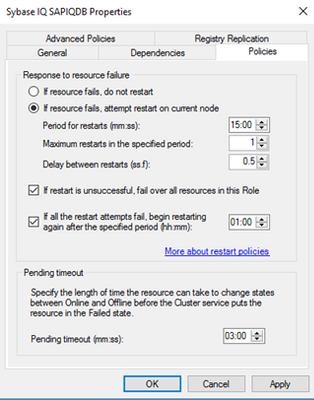

Here we killed iqsrv16.exe, but it started again on the same node B, because the response to resource failure for ‘Sybase IQ SAPIQDB’ is configured to restart the resource first. With this setting, the resource is restarted on the same server before it fails over to node A. If you want the resource to fail over without being restarted on the same node, set “Maximum restarts in the specified period” to 0.
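The restart policy can also be viewed and changed from PowerShell. This sketch assumes that the cluster resource property RestartThreshold is the one behind “Maximum restarts in the specified period” in Failover Cluster Manager. Verify this on your own cluster before relying on it.

```powershell
# Assumption: RestartThreshold maps to "Maximum restarts in the specified period".
$resource = Get-ClusterResource -Name 'Sybase IQ SAPIQDB'

# Show the current restart policy of the generic service resource
$resource | Format-List Name, RestartAction, RestartThreshold, RestartPeriod

# Allow 0 local restarts, so a failure leads to failover instead of a restart on the same node
$resource.RestartThreshold = 0
```

After changing the value, repeat the taskkill test above to confirm that the role now moves to the other node.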

- Restart the node where the iqsrv16.exe process is running.

PS C:\> hostname

uswiniqdb1

PS C:\> tasklist /FI "IMAGENAME eq iqsrv16.exe"

# Image Name PID Session Name Session# Mem Usage

# ========================= ======== ================ =========== ============

# iqsrv16.exe 18025 Services 0 798,340 K

PS C:\> shutdown /r

On restart of node A, the resources fail over to node B.

PS C:\> hostname

uswiniqdb3

PS C:\> Get-ClusterGroup 'sapiq' | Get-ClusterResource

# Name State OwnerGroup ResourceType

# ---- ----- ---------- ------------

# Cluster Disk 1 Online sapiq Physical Disk

# Cluster Disk 2 Online sapiq Physical Disk

# IP Address 10.6.0.15 Online sapiq IP Address

# sapiq Online sapiq Network Name

# Sybase IQ SAPIQDB Online sapiq Generic Service


by Contributed | Jun 15, 2021 | Technology


We just added new APIs in preview in the Azure IoT SDK for C# that will make it easier for device developers to implement IoT Plug and Play, and we’d love to have your feedback.

Since we released IoT Plug and Play in September 2020, we have provided PnP-specific helper functions in the form of samples to demonstrate the PnP conventions, e.g. how to format a PnP telemetry message, how to acknowledge property updates, etc.

As IoT Plug and Play is becoming the “new normal” for connecting devices to Azure IoT, it is important that there is a solid set of APIs in the C# client SDK to help you efficiently implement IoT Plug and Play in your devices. PnP models expose components, telemetry, commands, and properties. These new APIs format and send messages for telemetry and property updates. They can also help the client receive and ack writable property update requests and incoming command invocations.

These additions to the C# SDK can become the foundation of your solution, taking the load off your plate when it comes to formatting data and making your devices future-proof. We introduced these functions in preview for now, so you can test them in your scenarios and give us feedback on the design. As long as we are in preview, it is easy to change these APIs, so now is the right time to give them a try and let us know whether they fit your needs or whether you see room for improvement.

The NuGet package can be found here: https://www.nuget.org/packages/Microsoft.Azure.Devices.Client/1.38.0-preview-001

We have created a couple of samples to help you get started with these new APIs, have a look at:

https://github.com/Azure/azure-iot-sdk-csharp/blob/preview/iothub/device/samples/convention-based-samples/readme.md

In these new APIs, we introduced a couple of new types to help with convention operations. For telemetry, we expose TelemetryMessage that simplifies message formatting for the telemetry:

// Send telemetry "serialNumber".

string serialNumber = "SR-1234";

using var telemetryMessage = new TelemetryMessage

{

MessageId = Guid.NewGuid().ToString(),

Telemetry = { ["serialNumber"] = serialNumber },

};

await _deviceClient.SendTelemetryAsync(telemetryMessage, cancellationToken);

In the case of a component (here thermostat1), you can prepare the telemetry message this way:

using var telemetryMessage = new TelemetryMessage("thermostat1")

{

MessageId = Guid.NewGuid().ToString(),

Telemetry = { ["serialNumber"] = serialNumber },

};

await _deviceClient.SendTelemetryAsync(telemetryMessage, cancellationToken);

For properties, we introduced the type ClientProperties, which exposes the method TryGetValue.

With this new type, accessing a device twin property becomes:

// Retrieve the client's properties.

ClientProperties properties = await _deviceClient.GetClientPropertiesAsync(cancellationToken);

// To fetch the value of client reported property "serialNumber" under component "thermostat1".

bool isSerialNumberReported = properties.TryGetValue("thermostat1", "serialNumber", out string serialNumberReported);

// To fetch the value of service requested "targetTemperature" value under component "thermostat1".

bool isTargetTemperatureUpdateRequested = properties.Writable.TryGetValue("thermostat1", "targetTemperature", out double targetTemperatureUpdateRequest);

Note that in this case we have a component named thermostat1. First we get the reported serialNumber property; then, for a writable property, we use properties.Writable.

The same pattern applies to reporting properties: the new ClientPropertyCollection type helps update properties in batches, since it is a collection, and it exposes the method AddComponentProperty:

// Update the property "serialNumber" under component "thermostat1".

var propertiesToBeUpdated = new ClientPropertyCollection();

propertiesToBeUpdated.AddComponentProperty("thermostat1", "serialNumber", "SR-1234");

ClientPropertiesUpdateResponse updateResponse = await _deviceClient

.UpdateClientPropertiesAsync(propertiesToBeUpdated, cancellationToken);

long updatedVersion = updateResponse.Version;

With this, it becomes much easier to respond to top-level property update requests, even for a component model:

await _deviceClient.SubscribeToWritablePropertiesEventAsync(

async (writableProperties, userContext) =>

{

if (writableProperties.TryGetValue("thermostat1", "targetTemperature", out double targetTemperature))

{

IWritablePropertyResponse writableResponse = _deviceClient

.PayloadConvention

.PayloadSerializer

.CreateWritablePropertyResponse(targetTemperature, CommonClientResponseCodes.OK, writableProperties.Version, "The operation completed successfully.");

var propertiesToBeUpdated = new ClientPropertyCollection();

propertiesToBeUpdated.AddComponentProperty("thermostat1", "targetTemperature", writableResponse);

ClientPropertiesUpdateResponse updateResponse = await _deviceClient.UpdateClientPropertiesAsync(propertiesToBeUpdated, cancellationToken);

}

},

null,

cancellationToken);

As long as these APIs stay in preview, you’ll find the usual set of PnP samples, migrated to use these new APIs, in the preview branch of the code repository:

https://github.com/Azure/azure-iot-sdk-csharp/tree/preview/iothub/device/samples/convention-based-samples

See project Thermostat for the non-component sample and TemperatureController for the component sample.

Again, now is the right time to share any feedback and comments on these APIs. Contact us, open an issue, and help us provide the PnP API you need.

Happy testing,

Eric for the Azure IoT Managed SDK team

by Contributed | Jun 15, 2021 | Technology


Summary

As previously announced, we are deprecating all /microsoft org container images hosted in Docker Hub repositories on June 30th, 2021. This includes some old azure-cli images (pre v2.12.1), all of which are already available on Microsoft Container Registry (MCR).

In preparation for the deprecation, we are removing the latest tag from the microsoft/azure-cli container image in Docker Hub. If you are referencing microsoft/azure-cli:latest in your automation or Dockerfiles, you will see failures.

What should I do?

To avoid any impact on your development, deployment, or automation scripts, you should update docker pull commands, FROM statements in Dockerfiles, and other references to microsoft/azure-cli container images to explicitly reference mcr.microsoft.com/azure-cli instead.
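As an illustration of the change, the sketch below rewrites image references in a piece of Dockerfile text. The Dockerfile content is hypothetical and only serves as sample input; the replacement simply swaps the Docker Hub repository name for the MCR one.

```powershell
# Hypothetical Dockerfile content used only for illustration
$dockerfile = @'
FROM microsoft/azure-cli:latest
RUN az --version
'@

# Replace the Docker Hub repository name with the MCR repository name
$updated = $dockerfile -replace 'microsoft/azure-cli', 'mcr.microsoft.com/azure-cli'
$updated
```

To apply the same idea to real files, read each file with Get-Content -Raw, apply the replacement, review the result, and write it back with Set-Content.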

What’s next?

On June 30th we will remove all version tags from Docker Hub for microsoft/azure-cli. After that date, the only way to consume the container images will be via MCR.

How to get additional help?

We understand that there may be unanswered questions. You can get additional help by submitting an issue on GitHub.

by Contributed | Jun 15, 2021 | Technology


Today, I got a very interesting question about whether it is possible to use external tables to connect to Azure SQL Managed Instance, Azure SQL Database, or Synapse. In this article, I would like to explain how.

Besides the Linked Server option, my first choice was to use SQL Server 2019 and PolyBase. After installing PolyBase and running the following T-SQL statements, I was able to connect to Managed Instance, SQL Database, and Synapse from my on-premises server or Azure virtual machine.

CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'Password';

CREATE DATABASE SCOPED CREDENTIAL AzureSQLExternalTableCredentials WITH IDENTITY = 'UserName', Secret = 'Password';

CREATE EXTERNAL DATA SOURCE AzureSQLExternalTableDataSource WITH (LOCATION = 'sqlserver://servername.database.windows.net', PUSHDOWN = ON, CREDENTIAL = AzureSQLExternalTableCredentials);

CREATE EXTERNAL TABLE [dbo].[AzureSQLExternalTable_MyTable] ([id] [int] NOT NULL) WITH (DATA_SOURCE = AzureSQLExternalTableDataSource ,location='databasename.schemaname.TableName')

Running the query SELECT * FROM AzureSQLExternalTable_MyTable, I was able to obtain the data.

Unfortunately, it is not possible to insert data into the table AzureSQLExternalTable_MyTable, because external tables in Azure SQL, Synapse, and on-premises SQL Server do not support DML commands.

Enjoy!

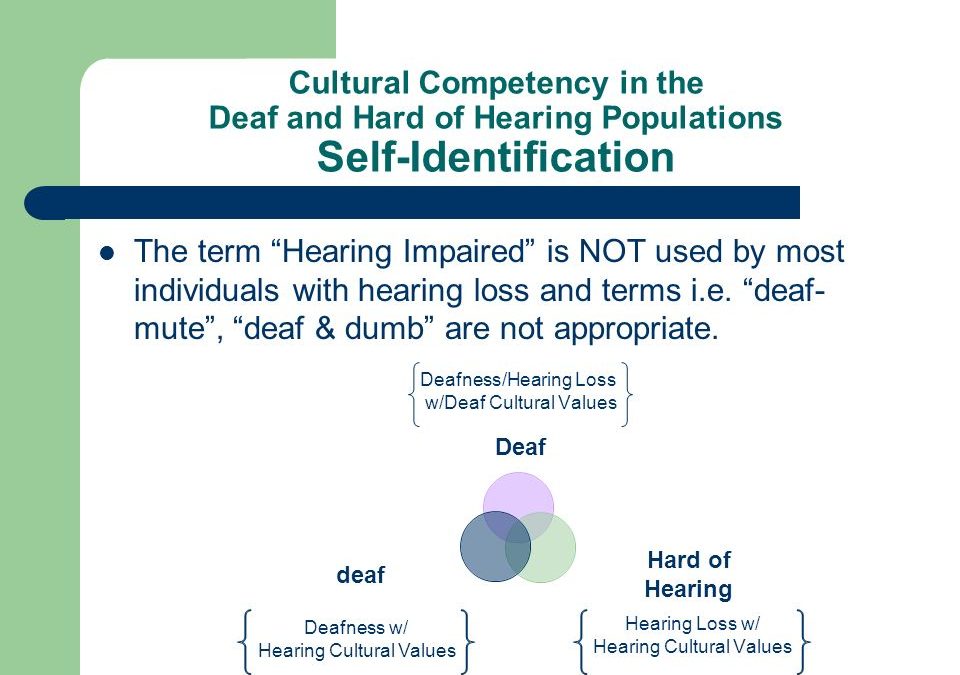

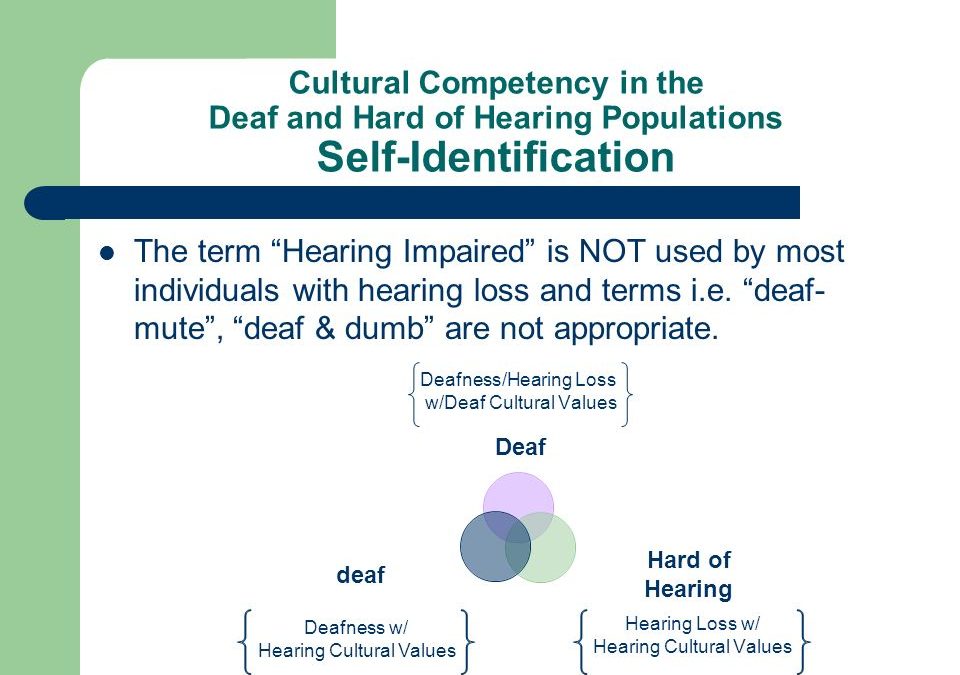

by Jenna Restuccia | Jun 15, 2021 | ADA Compliance, American Sign Language

Cultural mediation is a concept developed to help deaf people experiencing communication problems within their work communities. Cultural mediation involves cultural experts facilitating conversation and learning between the deaf or hard-of-hearing and hearing communities. These discussions work to build an environment that encourages a dialogue between the two groups. The goal is to foster better understanding between the two through careful communication to build trust and respect. Cultural mediators also help participants understand and appreciate a new language or culture, especially where a lack of consistent verbal communication restricts this.

Cultural mediation is a process of interaction that builds a bridge, rather than a divide, between two (or more) sides of a relationship. Cultural mediation assumes that all people have unique and valuable cultural values and experiences. If those qualities and ideas are appreciated and respected by others, a healthy relationship built on mutual respect can form between people from all backgrounds. Cultural mediation can occur in an office setting or group setting. It has been successful for all types of relationships.

In the case of a deaf or hard-of-hearing person, cultural mediators help a person who communicates in ASL connect with a person who communicates in English. Cultural mediation can help to overcome communication barriers that can affect the ability to engage in meaningful interactions. For example, a cultural mediator can make messages clear if there are specific requests to communicate. In addition, cultural mediators are skilled at getting two people on the same page, which is especially important if the issues at hand are at work.

Cultural mediation techniques can also be helpful when one party feels as though they are being talked down to or judged based on their culture. These issues may arise in areas such as language or behaviors. To provide a positive mediation experience, it is essential that the person with the deaf person can participate in the mediation. If a person cannot participate in cultural mediation, the relationship is less likely to thrive. The difference between the deaf and the hearing is a language difference, not a status inequality.

Some examples of cultural misunderstandings that can take place between the two parties are:

- Lack of eye contact can be regarded as rude in the deaf community and would be the equivalent of plugging your ears when someone is speaking to you.

- Looking at the interpreter/mediator instead of the deaf individual can cause feelings of exclusion.

- Stomping on the ground or switching lights off and on to get someone’s attention may be considered inappropriate in the workplace, even though it is an accepted and common practice in the Deaf community.

Mediation can allow for a safer work environment and more job satisfaction for all parties. When a conflict or concern arises between employees of varying cultures or companies, mediation can help eliminate the dispute and focus on a communal business goal. The goal of cultural mediation is to create a sense of unity and understanding between all parties so that everyone can serve on the team and work towards a common goal. As a result, a business is more likely to create a positive workplace culture that will benefit everyone.

by Contributed | Jun 15, 2021 | Technology

This article is contributed. See the original author and article here.

ORCA is an open-source software solution which helps academic institutions assess the effectiveness of online learning by analyzing data on students’ attendance and engagement with online platforms and content. As a group of students at University College London (UCL), we had the chance to develop ORCA through UCL’s Industry Exchange Network (IXN) programme in collaboration with Microsoft as part of a course within our degree.

Guest post by team leads Lydia Tsami and Omar Beyhum

Although we’re currently busy working on our dissertation projects as part of our degrees, two of our developers – Lydia Tsami and Omar Beyhum – are joining Ayca Bas from the Microsoft 365 Advocacy team in this webinar to talk about how we designed, developed, and delivered ORCA.

What is ORCA?

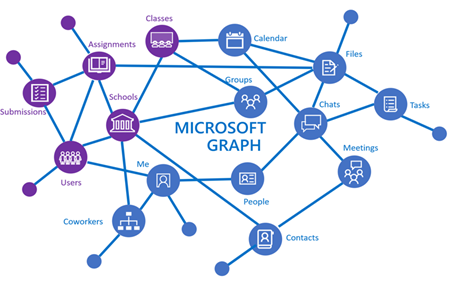

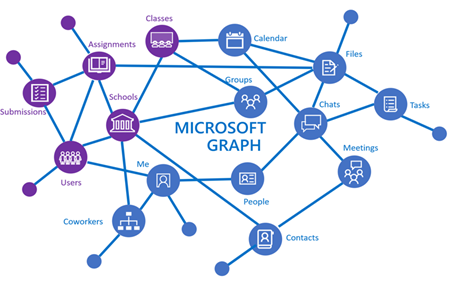

ORCA is designed to complement the online learning and collaboration tools of schools and universities, most notably Moodle and Microsoft Teams. In brief, it can generate visual reports based on student attendance and engagement metrics, and then provide them to the relevant teaching staff. To accomplish this, it leverages Microsoft Graph to cross-reference student identities across different platforms and listen to events such as participants joining meetings. Data can be synthesized via templated SharePoint lists or Power BI dashboards, then shared with the relevant staff members based on an institution’s Azure Active Directory.
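To make the cross-referencing idea concrete, here is a minimal Python sketch. It is not ORCA's actual implementation: the record shapes (`moodle_id`, `email`, `upn`, `meeting`) and the choice to match on a lowercased email address are assumptions made purely for illustration, and no Microsoft Graph calls are shown.

```python
from collections import defaultdict


def normalize_email(email: str) -> str:
    """Trim whitespace and lowercase so the same address compares equal."""
    return email.strip().lower()


def cross_reference(moodle_users, teams_events):
    """Group meeting-join events under the Moodle user with the same email.

    Both record shapes are hypothetical:
      moodle_users: [{"moodle_id": int, "email": str}, ...]
      teams_events: [{"upn": str, "meeting": str}, ...]
    Returns (attendance, unmatched): attendance maps moodle_id to a list of
    meeting names, unmatched holds events with no corresponding Moodle user.
    """
    by_email = {normalize_email(u["email"]): u["moodle_id"] for u in moodle_users}
    attendance = defaultdict(list)
    unmatched = []
    for event in teams_events:
        moodle_id = by_email.get(normalize_email(event["upn"]))
        if moodle_id is None:
            unmatched.append(event)
        else:
            attendance[moodle_id].append(event["meeting"])
    return dict(attendance), unmatched
```

Keeping unmatched events in their own list, rather than silently dropping them, makes it easier to spot guests or accounts that exist on only one platform.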

You can check the full webinar below if you’re interested in seeing a demo of ORCA in use, how it was implemented, and how to get started with installing it or contributing to the project!

Make your own apps

Keen on developing your own applications on top of services like Teams, SharePoint, and Microsoft Graph? Make sure to check out these resources; we found them pretty useful when we first got started:

by Contributed | Jun 15, 2021 | Technology

This article is contributed. See the original author and article here.

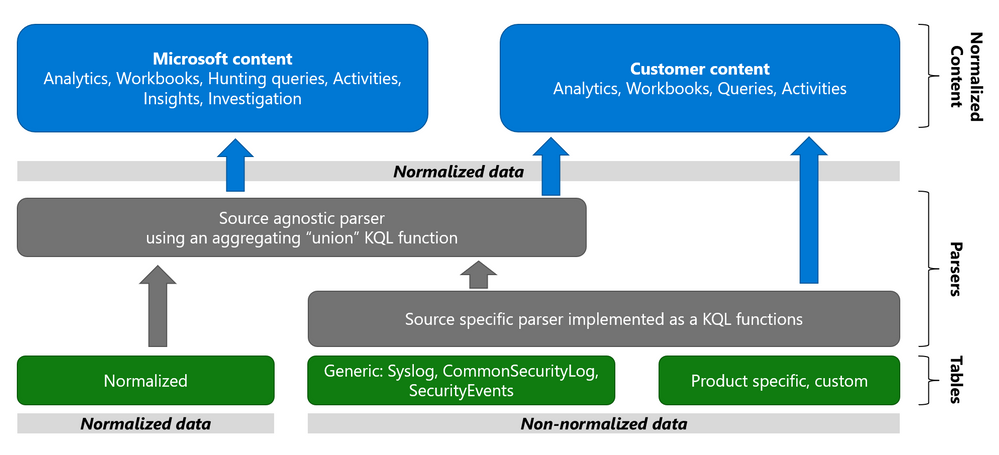

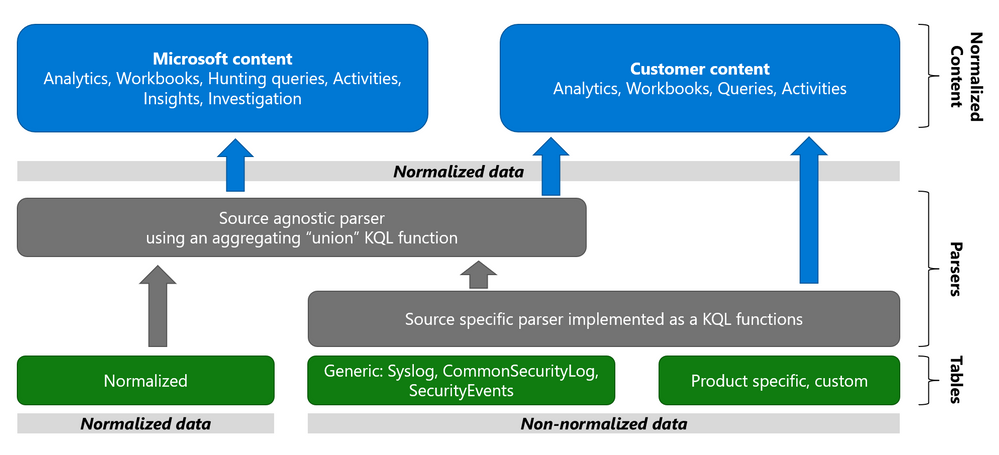

I’m excited to announce the second step in our normalization journey. Following our networking schema, we now extend our Azure Sentinel Information Model (ASIM) guidance and release our DNS schema. We expect to follow suit with additional schemas in the coming weeks.

Special thanks to Yaron Fruchtmann and Batami Gold, who made all this possible.

This release includes additional artifacts to ensure easier use of ASIM:

- All the normalizing parsers can be deployed in a click using an ARM template. The initial release contains normalizing parsers for Infoblox, Cisco Umbrella, and Microsoft DNS server.

- We have migrated analytic rules that worked on a single DNS source to use the normalized template. Those are available in GitHub and will be available in the in-product gallery in the coming days. You can find the list at the end of this post.

With single-click deployment and support for normalized content in analytic rules, we believe we will see accelerated adoption of the Azure Sentinel Information Model.

Join us to learn more about the Azure Sentinel Information Model in two webinars:

- The Information Model: Understanding Normalization in Azure Sentinel

- Deep Dive into Azure Sentinel Normalizing Parsers and Normalized Content

Why normalization, and what is the Azure Sentinel Information Model?

Working with various data types and tables together presents a challenge. You must become familiar with many different data types and schemas, and write and use a unique set of analytics rules, workbooks, and hunting queries for each, even for those that share commonalities (for example, DNS servers). Correlating the different data types, which is necessary for investigation and hunting, is also tricky.

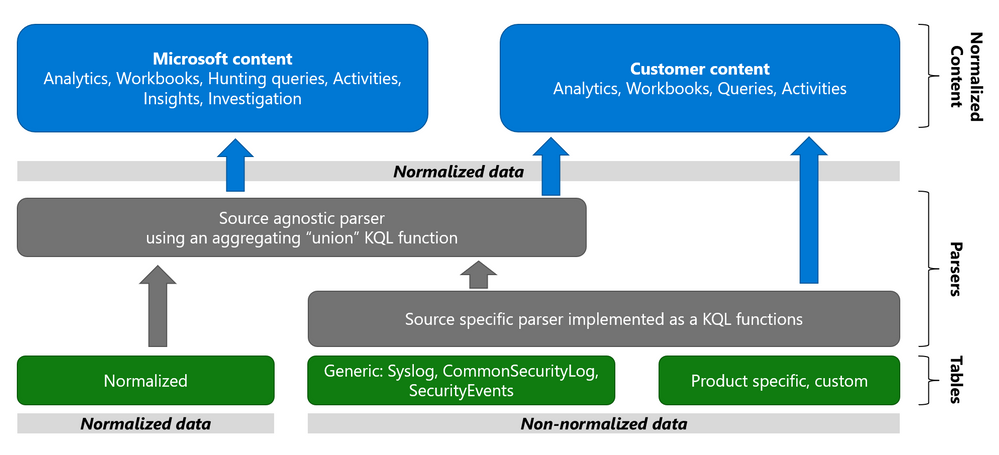

The Azure Sentinel Information Model (ASIM) provides a seamless experience for handling various sources in uniform, normalized views. ASIM aligns with the Open-Source Security Events Metadata (OSSEM) common information model, promoting vendor-agnostic, industry-wide normalization. ASIM:

- Allows source-agnostic content and solutions

- Simplifies analyst use of the data in Azure Sentinel workspaces

The current implementation is based on query-time normalization using KQL functions and includes the following:

- Normalized schemas cover standard sets of predictable event types that are easy to work with and to build unified capabilities on. The schema defines which fields should represent an event, a normalized column naming convention, and a standard format for the field values.

- Parsers map existing data to the normalized schemas. Parsers are implemented using KQL functions.

- Content for each normalized schema includes analytics rules, workbooks, hunting queries, and additional content. This content works on any normalized data without the need to create source-specific content.
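The relationship between schemas, parsers, and content can be sketched outside of KQL. The Python example below is only an analogy for query-time normalization, not the ASIM parsers themselves: the two source formats (`vendor_a`, `vendor_b`) and their raw field names are hypothetical, and the normalized field names are illustrative rather than a copy of the published schema.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, str]


def parse_vendor_a(raw: Record) -> Record:
    """Map a hypothetical vendor A log row (client, qname, rcode)."""
    return {
        "SrcIpAddr": raw["client"],
        "DnsQuery": raw["qname"].rstrip(".").lower(),
        "DnsResponseCodeName": raw["rcode"].upper(),
        "EventProduct": "VendorA",
    }


def parse_vendor_b(raw: Record) -> Record:
    """Map a hypothetical vendor B log row (src_ip, domain, response)."""
    return {
        "SrcIpAddr": raw["src_ip"],
        "DnsQuery": raw["domain"].rstrip(".").lower(),
        "DnsResponseCodeName": raw["response"].upper(),
        "EventProduct": "VendorB",
    }


# One parser per source; adding a source means adding one entry here.
PARSERS: Dict[str, Callable[[Record], Record]] = {
    "vendor_a": parse_vendor_a,
    "vendor_b": parse_vendor_b,
}


def normalized_dns(sources: Dict[str, Iterable[Record]]) -> List[Record]:
    """Return one unified view; raw rows are converted when read, not stored."""
    events: List[Record] = []
    for name, rows in sources.items():
        parser = PARSERS[name]
        events.extend(parser(row) for row in rows)
    return events
```

Because the raw rows are left untouched and converted only when the unified view is requested, supporting a new source is a matter of writing one more parser; anything written against the normalized field names keeps working unchanged.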

Why normalize DNS data?

ASIM is especially useful for DNS. Different DNS servers and DNS security solutions, such as Infoblox, Cisco Umbrella, and Microsoft DNS server, produce highly non-standard logs even though they all represent similar information, namely DNS protocol activity. With normalization, standard, source-agnostic content can apply to all DNS servers without being customized for each one. In addition, an analyst investigating an incident can query the DNS data in the system without specific knowledge of the source providing it.
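As a rough illustration of source-agnostic content, the Python sketch below flags clients with an unusually high number of NXDOMAIN responses, in the spirit of an "excessive NXDOMAIN" detection. It is not the logic of any shipped analytic rule: the field names, the sample events, and the threshold are assumptions chosen for the example.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping


def excessive_nxdomain(events: Iterable[Mapping[str, str]], threshold: int) -> Dict[str, int]:
    """Return clients whose NXDOMAIN count exceeds the threshold.

    The function only looks at normalized fields, so it does not need to
    know which DNS product produced each event.
    """
    counts = Counter(
        e["SrcIpAddr"] for e in events if e["DnsResponseCodeName"] == "NXDOMAIN"
    )
    return {ip: n for ip, n in counts.items() if n > threshold}


# Hypothetical, already-normalized events from two different products.
sample_events = [
    {"SrcIpAddr": "10.0.0.5", "DnsResponseCodeName": "NXDOMAIN", "EventProduct": "VendorA"},
    {"SrcIpAddr": "10.0.0.5", "DnsResponseCodeName": "NXDOMAIN", "EventProduct": "VendorB"},
    {"SrcIpAddr": "10.0.0.5", "DnsResponseCodeName": "NXDOMAIN", "EventProduct": "VendorA"},
    {"SrcIpAddr": "10.0.0.9", "DnsResponseCodeName": "NXDOMAIN", "EventProduct": "VendorB"},
    {"SrcIpAddr": "10.0.0.9", "DnsResponseCodeName": "NOERROR", "EventProduct": "VendorB"},
]

suspicious = excessive_nxdomain(sample_events, threshold=2)
```

Note that events from both hypothetical products count toward the same client total, which is the practical payoff of writing the check once against normalized fields.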

Analytic rules added or updated to work with ASIM DNS

- Added:

  - Excessive NXDOMAIN DNS Queries (Normalized DNS)

  - DNS events related to mining pools (Normalized DNS)

  - DNS events related to ToR proxies (Normalized DNS)

- Updated to include normalized DNS:

  - Known Barium domains

  - Known Barium IP addresses

  - Exchange Server Vulnerabilities Disclosed March 2021 IoC Match

  - Known GALLIUM domains and hashes

  - Known IRIDIUM IP

  - NOBELIUM – Domain and IP IOCs – March 2021

  - Known Phosphorus group domains/IP

  - Known STRONTIUM group domains – July 2019

  - Solorigate Network Beacon

  - THALLIUM domains included in DCU takedown

  - Known ZINC Comebacker and Klackring malware hashes
