by Contributed | Dec 11, 2021 | Technology

This article is contributed. See the original author and article here.

Before implementing data extraction from SAP systems, please always verify your licensing agreement.

Over the last five episodes, we’ve built quite a complex Synapse Pipeline that allows extracting SAP data using the OData protocol. Starting from a single activity in the pipeline, the solution grew, and it now allows processing multiple services in a single execution. We’ve implemented client-side caching to optimize the extraction runtime and eliminate short dumps at SAP. But that’s definitely not the end of the journey!

Today we will continue to optimize the performance of the data extraction. Just because the Sales Order OData service exposes 40 or 50 properties, it doesn’t mean you need all of them. One of the first things I always mention to customers, with whom I have the pleasure of working, is to carefully analyze the use case and identify the data they actually need. The less you copy from the SAP system, the faster and cheaper the process is, and the less trouble it causes for SAP application servers. If you require data only for a single company code, or just a few customers – do not extract everything just because you can. Focus on what you need and filter out any unnecessary information.

Fortunately, OData services provide capabilities to limit the amount of extracted data. You can filter out unnecessary records based on a property value, and you can extract only the selected columns that contain meaningful data.

Today I’ll show you how to implement two query parameters: $filter and $select to reduce the amount of data to extract. Knowing how to use them in the pipeline is essential for the next episode when I explain how to process only new and changed data from the OData service.

ODATA FILTERING AND SELECTION

To filter extracted data based on the field content, you can use the $filter query parameter. Using logical operators, you can build selection rules, for example, to extract only data for a single company code or a sold-to party. Such a query could look like this:

/API_SALES_ORDER_SRV/A_SalesOrder?$filter=SoldToParty eq 'AZ001'

The above query will only return records where the field SoldToParty equals AZ001. You can expand it with logical operators ‘and’ and ‘or’ to build complex selection rules. Below I’m using the ‘or’ operator to display data for two Sold-To Parties:

/API_SALES_ORDER_SRV/A_SalesOrder?$filter=SoldToParty eq 'AZ001' or SoldToParty eq 'AZ002'

You can mix and match the fields you’re interested in. Let’s say we would like to see orders for customers AZ001 and AZ002, but only where the total net amount of the order does not exceed 10000 (the ‘le’ operator means “less than or equal to”). Again, it’s quite simple to write a query to filter out data we’re not interested in:

/API_SALES_ORDER_SRV/A_SalesOrder?$filter=(SoldToParty eq 'AZ001' or SoldToParty eq 'AZ002') and TotalNetAmount le 10000.00

Let’s be honest, filtering data out is simple. Now, using the same logic, you can select only specific fields. This time, instead of the $filter query parameter, we will use the $select one. To get data only from SalesOrder, SoldToParty and TotalNetAmount fields, you can use the following query:

/API_SALES_ORDER_SRV/A_SalesOrder?$select=SalesOrder,SoldToParty,TotalNetAmount

There is nothing stopping you from mixing $select and $filter parameters in a single query. Let’s combine both above examples:

/API_SALES_ORDER_SRV/A_SalesOrder?$select=SalesOrder,SoldToParty,TotalNetAmount&$filter=(SoldToParty eq 'AZ001' or SoldToParty eq 'AZ002') and TotalNetAmount le 10000.00

By applying the above logic, the OData response time reduced from 105 seconds to only 15 seconds, and its size decreased by 97 per cent. That, of course, has a direct impact on the overall performance of the extraction process.
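The combined query above can also be assembled programmatically, which helps when testing a filter outside the pipeline. The following is a minimal Python sketch, not part of the original solution: the helper name `build_odata_query` is hypothetical, and the percent-encoding of spaces and quotes is an assumption about how you may want to send the request from a script (a browser or the pipeline connector usually encodes the URL for you).

```python
from urllib.parse import quote


def build_odata_query(entity_path, select=None, filter_expr=None):
    """Return entity_path with optional $select and $filter query options.

    Hypothetical helper for illustration only.
    """
    options = []
    if select:
        # Property names are joined with commas, as in the $select example.
        options.append("$select=" + ",".join(select))
    if filter_expr:
        # Percent-encode spaces and quotes; keep parentheses readable.
        options.append("$filter=" + quote(filter_expr, safe="()"))
    # Only add '?' when at least one query option is present.
    return entity_path + ("?" + "&".join(options) if options else "")


url = build_odata_query(
    "/API_SALES_ORDER_SRV/A_SalesOrder",
    select=["SalesOrder", "SoldToParty", "TotalNetAmount"],
    filter_expr="(SoldToParty eq 'AZ001' or SoldToParty eq 'AZ002') "
                "and TotalNetAmount le 10000.00",
)
print(url)
```

Leaving both arguments empty returns the bare entity path, which corresponds to extracting the whole entity.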

FILTERING AND SELECTION IN THE PIPELINE

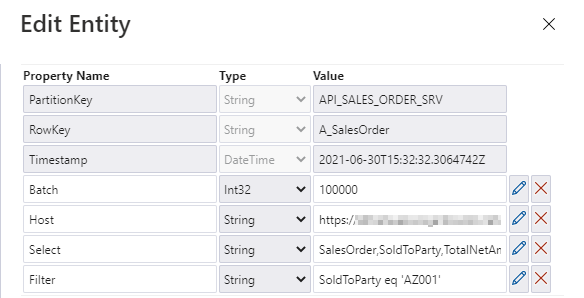

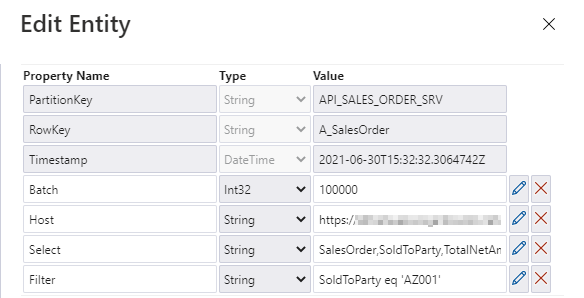

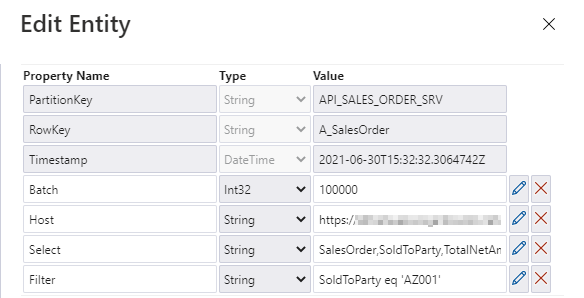

The filtering and selection options should be based on the entity level of the OData service. Each entity has a unique set of fields, and we may want to provide different filtering and selection rules. We will store the values for query parameters in the metadata store. Open it in the Storage Explorer and add two properties: filter and select.

I’m pretty sure that based on the previous episodes of the blog series, you could already implement the logic in the pipeline without my help. But there are two challenges we should be mindful of. Firstly, we should not assume that $filter and $select parameters will always contain a value. If you want to extract the whole entity, you can leave those fields empty, and we should not pass them to the SAP system. In addition, as we are using the client-side caching to chunk the requests into smaller pieces, we need to ensure that we pass the same filtering rules in the Lookup activity where we check the number of records in the OData service.

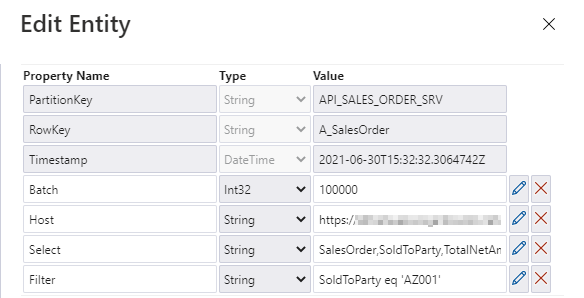

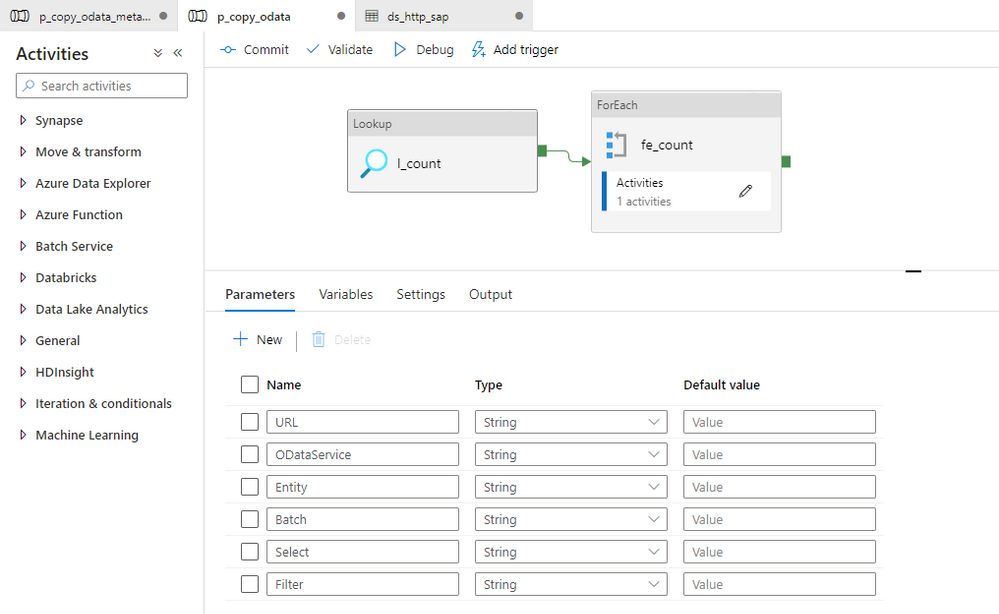

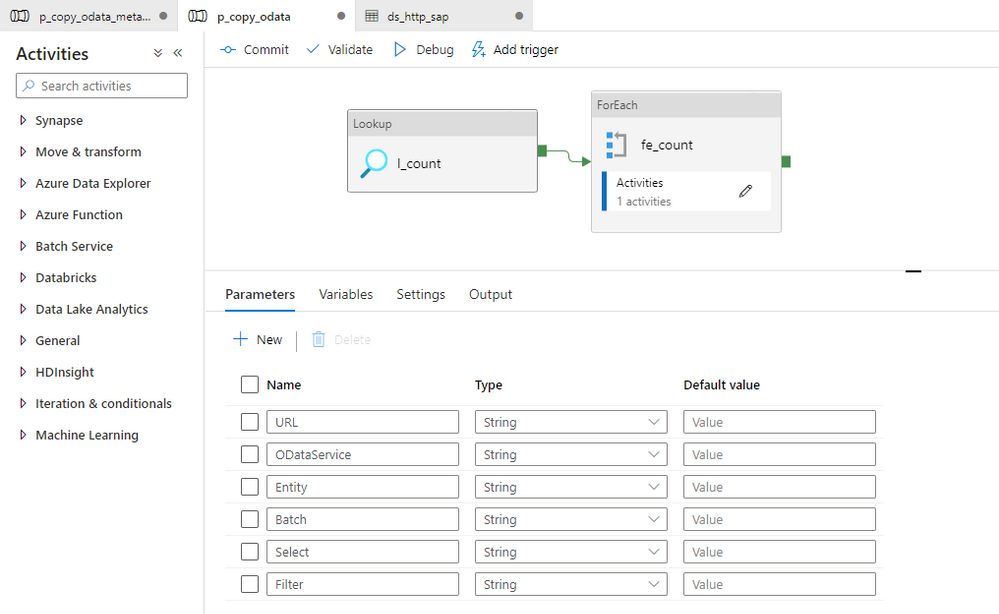

Let’s start by defining parameters in the child pipeline to pass the filter and select values from the metadata table. We’ve done that already in the third episode, so you know all the steps.

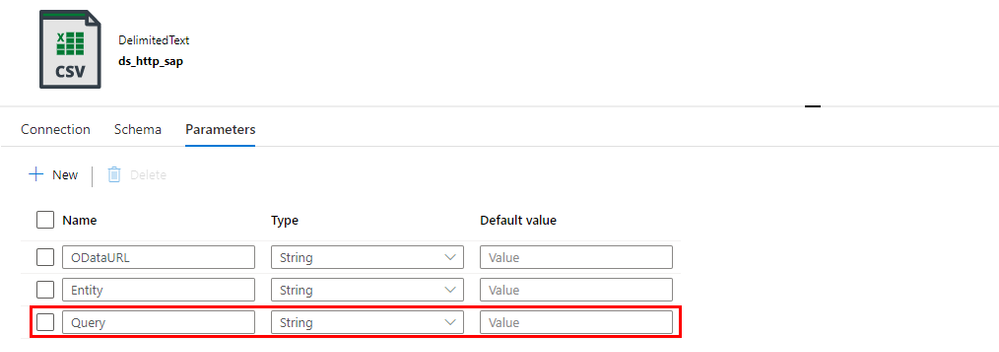

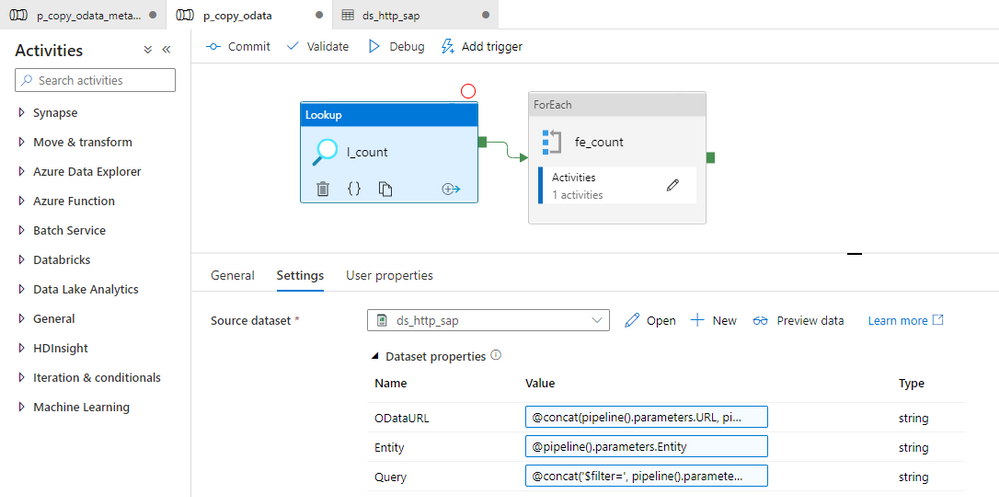

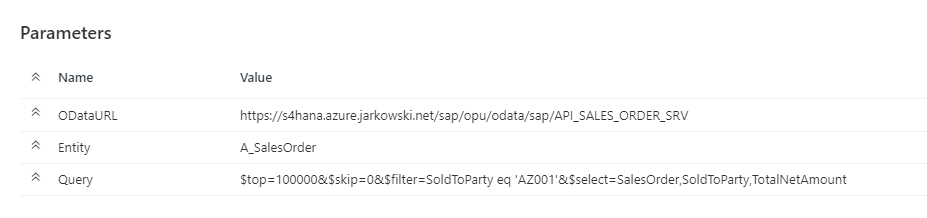

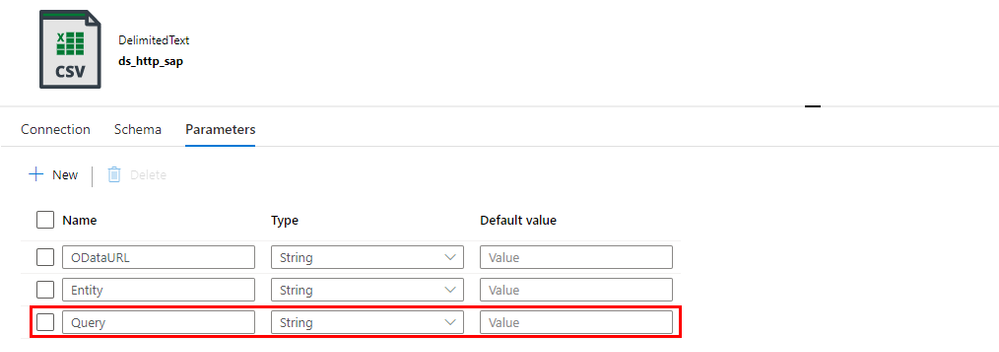

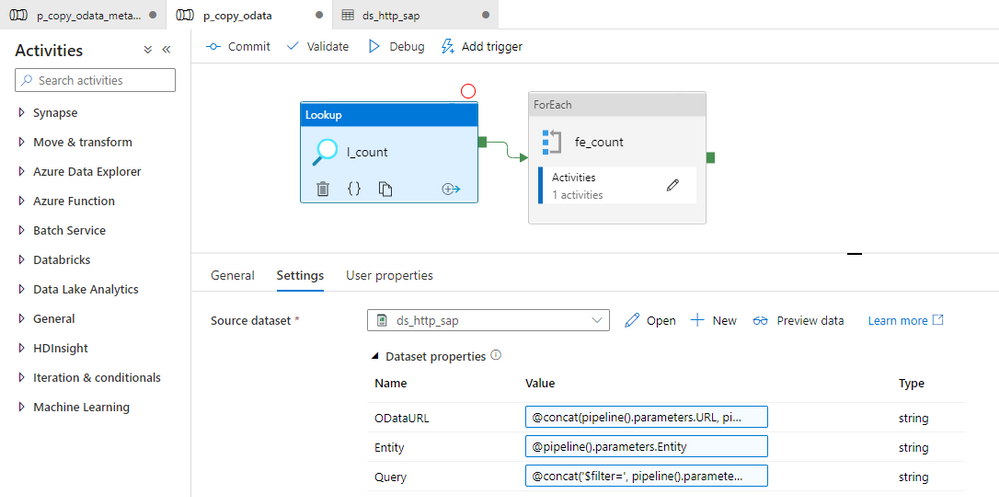

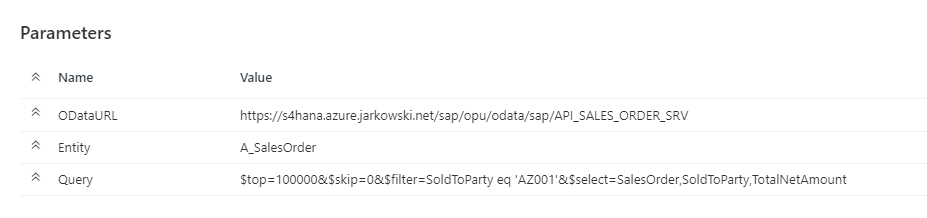

To correctly read the number of records, we have to consider how to combine these additional parameters with the OData URL in the Lookup activity. So far, the dataset accepts two dynamic fields: ODataURL and Entity. To pass the newly defined parameters, you have to add the Query one.

You can go back to the Lookup activity to define the expression that passes the $filter value to the Query field. It is very simple. I check if the Filter parameter in the metadata store contains any value. If not, then I’m passing an empty string. Otherwise, I concatenate the query parameter name with the value.

@if(empty(pipeline().parameters.Filter), '', concat('?$filter=', pipeline().parameters.Filter))

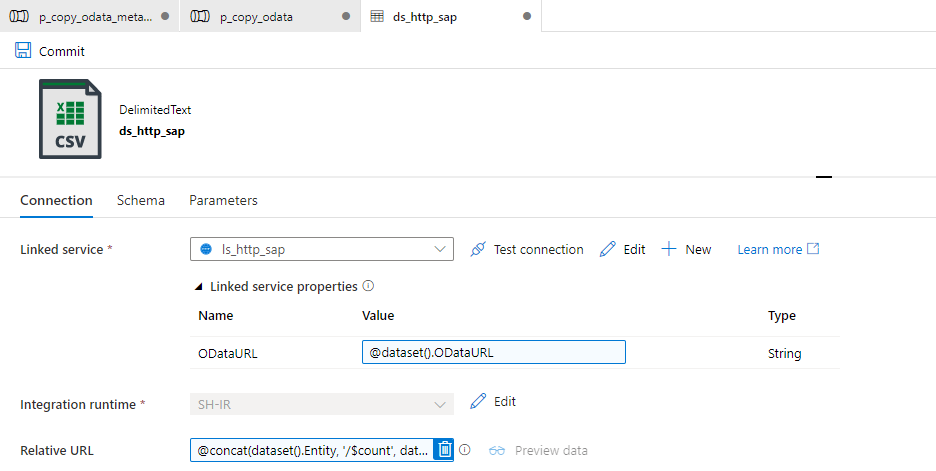

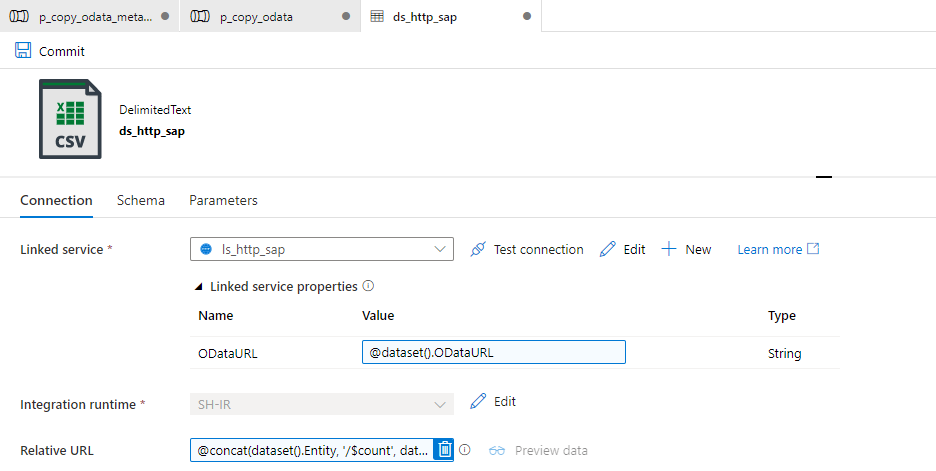

Finally, we can use the Query field in the Relative URL of the dataset. We already use that field to pass the entity name and the $count operator, so we have to slightly extend the expression.

@concat(dataset().Entity, '/$count', dataset().Query)
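To make the behavior of the two expressions above easier to check, here is a small Python sketch that mirrors them. It is an illustration only: the function names are hypothetical, and the pipeline itself keeps using the expression language shown above.

```python
def lookup_query(filter_value):
    """Mirror of the Lookup Query expression.

    Returns an empty string when no filter is maintained in the metadata
    store, otherwise '?$filter=' followed by the filter value.
    """
    return "" if not filter_value else "?$filter=" + filter_value


def count_relative_url(entity, query):
    """Mirror of the dataset Relative URL: entity + '/$count' + query."""
    return entity + "/$count" + query


# Entity without a filter: the record count covers the whole entity.
print(count_relative_url("A_SalesOrder", lookup_query("")))

# Entity with a filter: the count matches what the Copy Data activity extracts.
print(count_relative_url("A_SalesOrder", lookup_query("SoldToParty eq 'AZ001'")))
```

The point of passing the same filter to `$count` is that the number of pages is calculated from the filtered record count, not from the full entity.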

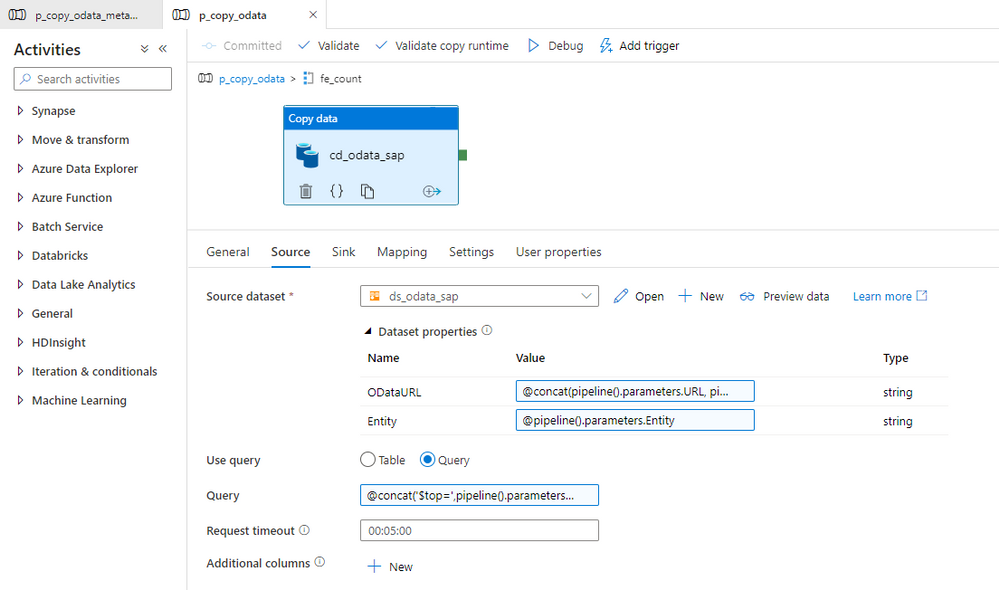

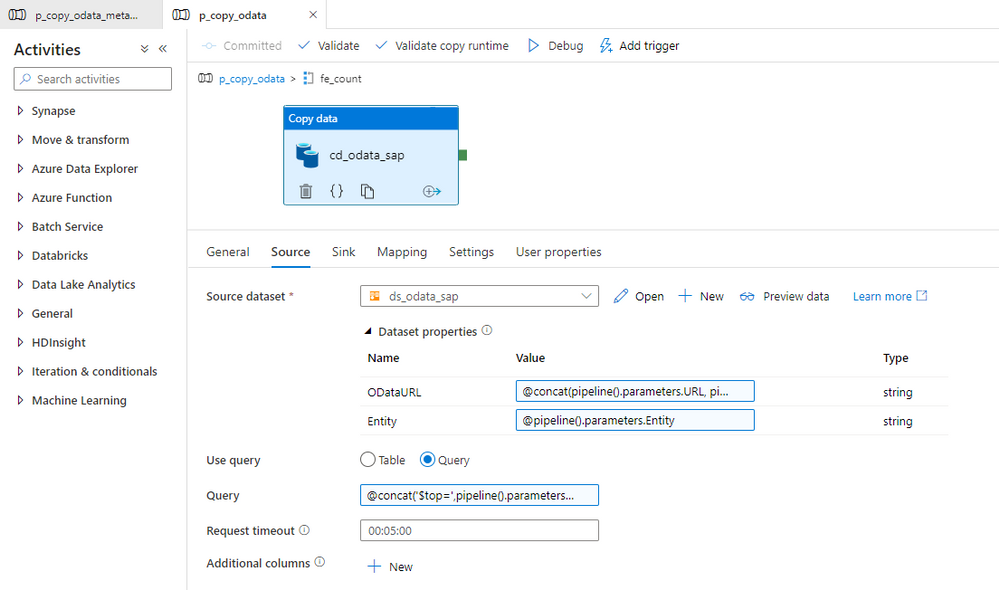

Changing the Copy Data activity is a bit more challenging. The Query field is already defined, but the expression we use should include the $top and $skip parameters, which we use for the client-side paging. Unlike in the Lookup activity, this time we also have to include both $select and $filter parameters and check if they contain a value. It makes the expression a bit lengthy.

@concat('$top=',pipeline().parameters.Batch, '&$skip=',string(mul(int(item()), int(pipeline().parameters.Batch))), if(empty(pipeline().parameters.Filter), '', concat('&$filter=',pipeline().parameters.Filter)), if(empty(pipeline().parameters.Select), '', concat('&$select=',pipeline().parameters.Select)))
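The expression is easier to reason about when written out step by step. The Python sketch below mirrors its logic under a few assumptions: the function name `copy_query` is hypothetical, the batch size and page index are passed as integers (in the pipeline they arrive as strings and are converted with `int()`), and the values 1000 and 0 to 2 are example inputs only.

```python
def copy_query(batch, index, filter_value="", select_value=""):
    """Mirror of the Copy Data Query expression.

    batch        -- number of records per request ($top)
    index        -- zero-based page number, item() in the ForEach loop
    filter_value -- optional $filter value from the metadata store
    select_value -- optional $select value from the metadata store
    """
    parts = ["$top=" + str(batch), "&$skip=" + str(index * batch)]
    if filter_value:
        # Appended only when the metadata store contains a filter.
        parts.append("&$filter=" + filter_value)
    if select_value:
        # Appended only when the metadata store contains a selection.
        parts.append("&$select=" + select_value)
    return "".join(parts)


# Queries for the first three pages of a filtered, three-column extraction.
for page in range(3):
    print(copy_query(1000, page, "SoldToParty eq 'AZ001'",
                     "SalesOrder,SoldToParty,TotalNetAmount"))
```

When both optional values are empty, the result contains only `$top` and `$skip`, so nothing unexpected is passed to the SAP system.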

With the above changes, the pipeline uses the filter and select values to extract only the data you need. It reduces the amount of processed data and improves the execution runtime.

IMPROVING MONITORING

As we develop the pipeline, the number of parameters and expressions grows. Ensuring that we haven’t made any mistakes becomes quite a difficult task. By default, the Monitoring view only gives us basic information on what the pipeline passes to the target system in the Copy Data activity. At the same time, parameters influence which data we extract. Wouldn’t it be useful to get a more detailed view?

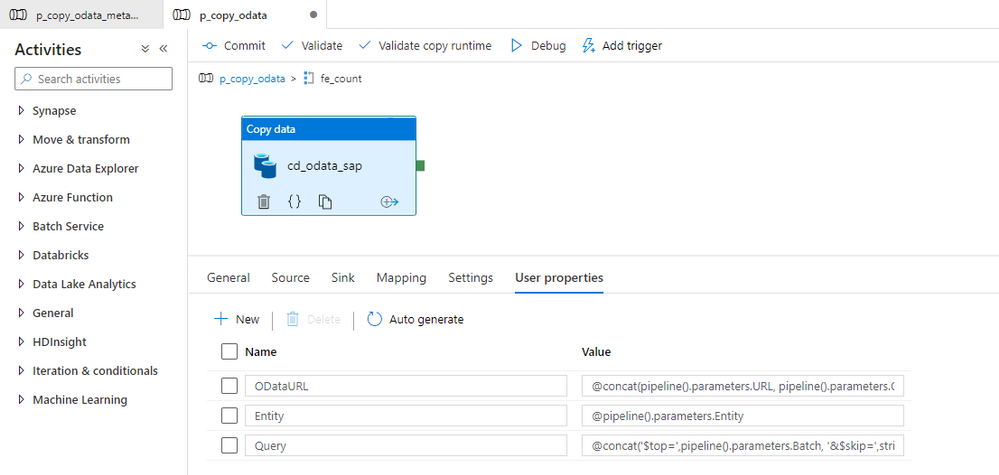

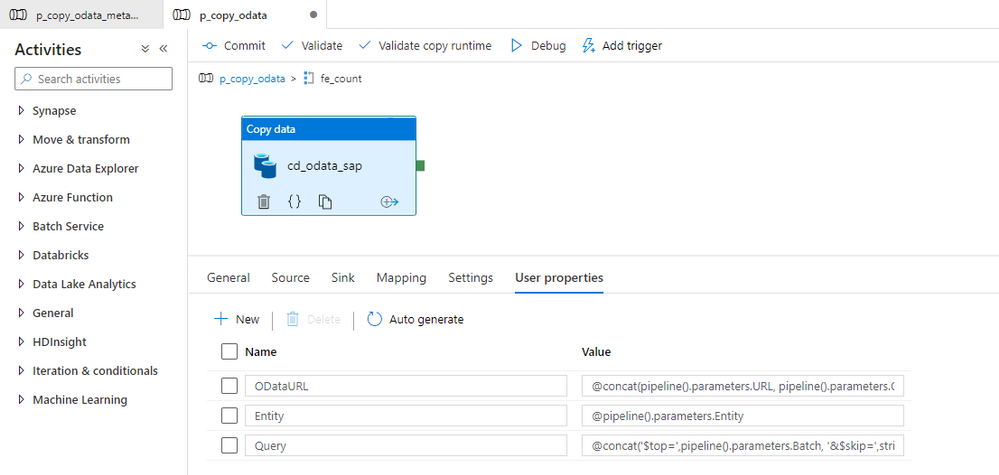

There is a way to do it! In Azure Synapse Pipelines, you can define User Properties, which are highly customizable fields that accept custom values and expressions. We will use them to verify that our pipeline works as expected.

Open the Copy Data activity and select the User Properties tab. Add three properties we want to monitor – the OData URL, entity name, and the query passed to the SAP system. Copy the expressions from the Copy Data activity. This ensures each property has the same value as the one passed to the SAP system.

Once the user properties are defined, we can start the extraction job.

MONITORING AND EXECUTION

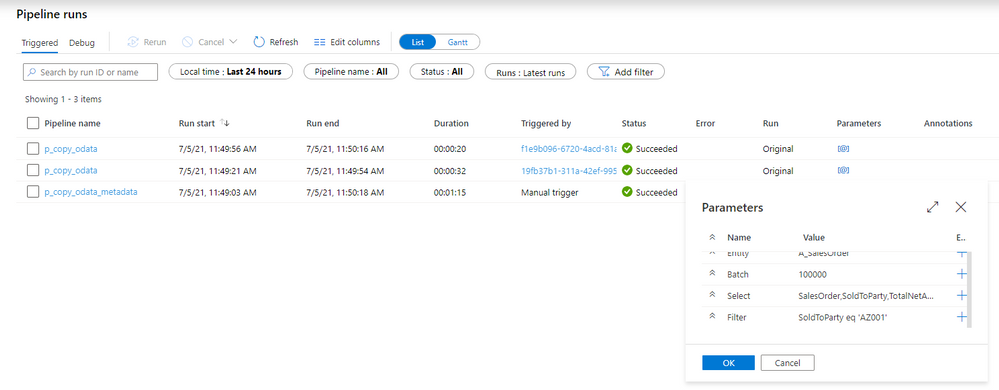

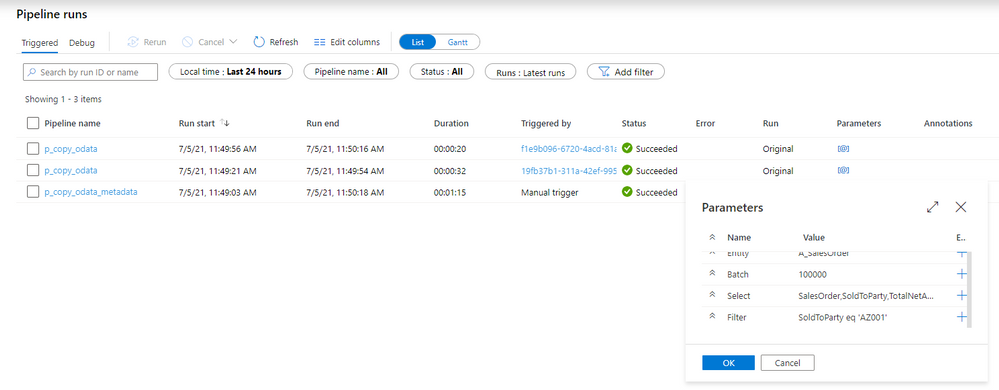

Let’s start the extraction. I process two OData services, but I have defined Filter and Select parameters for only one of them.

Once the processing has finished, open the Monitoring area. To see all parameters that the metadata pipeline passes to the child pipeline, click on the [@] sign:

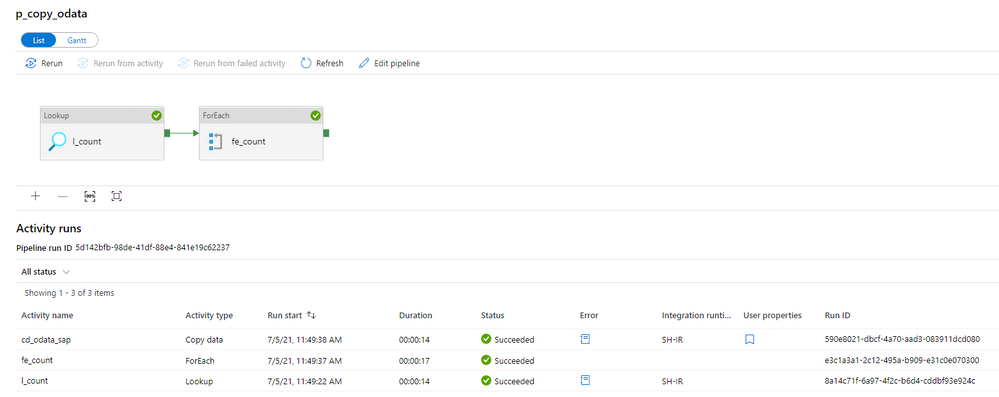

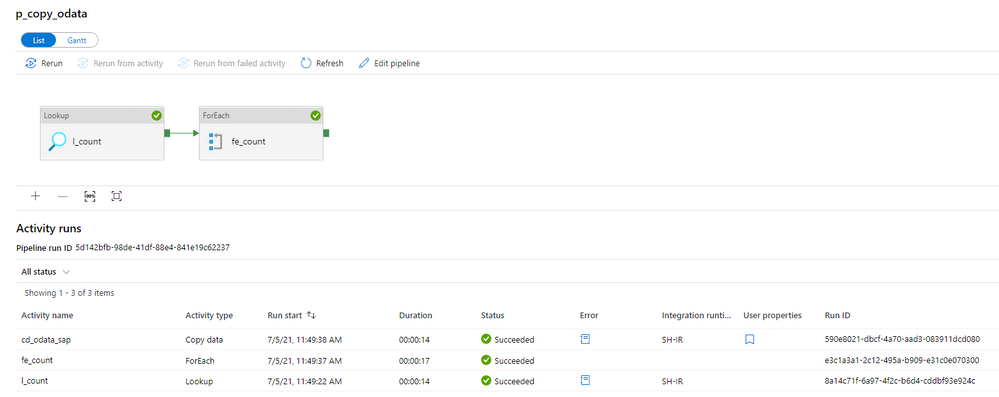

Now, enter the child pipeline to see the details of the Copy Data activity.

When you click on the icon in the User Properties column, you can display the defined user properties. As we use the same expressions as in the Copy Activity, we clearly see what was passed to the SAP system. In case of any problems with the data filtering, this is the first place to start the investigation.

The above properties are very useful when you need to troubleshoot the extraction process. Most importantly, they show you the full request query that is passed to the OData service – including the $top and $skip parameters that we defined in the previous episode.

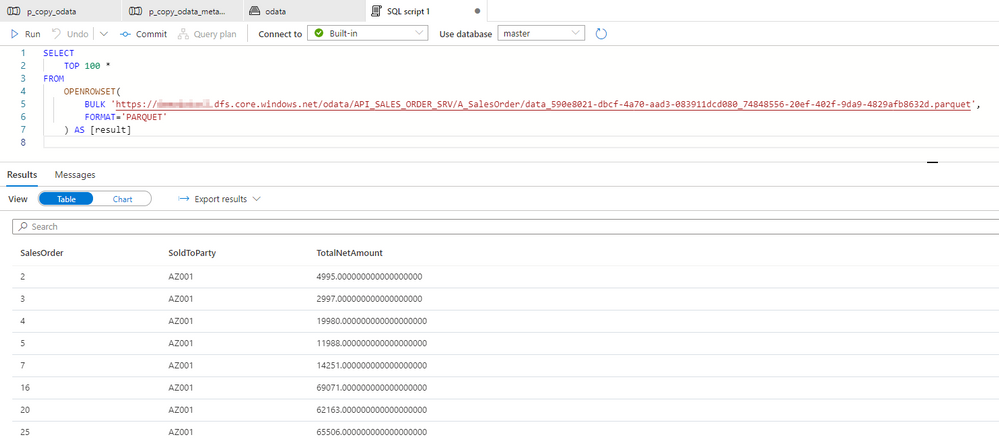

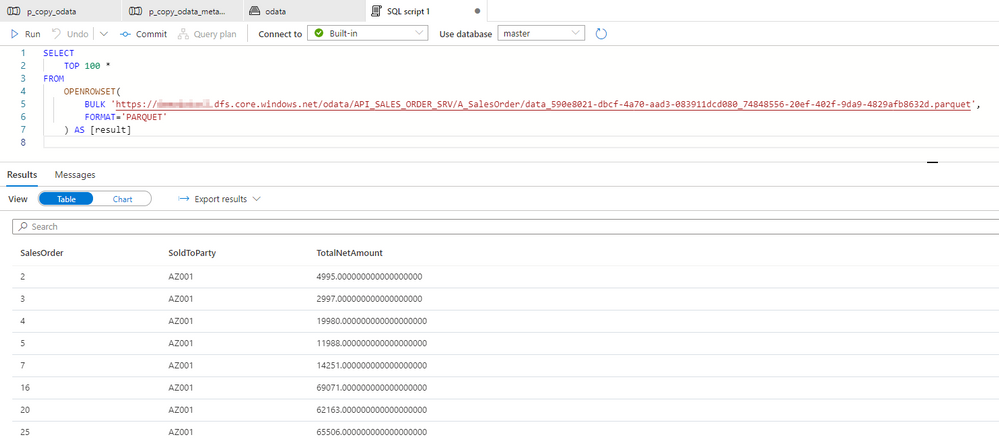

The extraction was successful, so let’s have a look at the extracted data in the lake.

There are only three columns, which we have selected using the $select parameter. Similarly, we can only see rows where SoldToParty equals AZ001 and TotalNetAmount is above 1000. It proves the filtering works fine.

I hope you enjoyed this episode. I will publish the next one in the upcoming week, and it will show you one of the ways to implement delta extraction, a topic that many of you have been waiting for!

by Scott Muniz | Dec 10, 2021 | Security, Technology


CISA has added thirteen new vulnerabilities to its Known Exploited Vulnerabilities Catalog, based on evidence that threat actors are actively exploiting the vulnerabilities listed in the table below. These types of vulnerabilities are a frequent attack vector for malicious cyber actors of all types and pose significant risk to the federal enterprise.

| CVE Number | CVE Title | Remediation Due Date |
|---|---|---|
| CVE-2021-44228 | Apache Log4j2 Remote Code Execution Vulnerability | 12/24/2021 |
| CVE-2021-44515 | Zoho Corp. Desktop Central Authentication Bypass Vulnerability | 12/24/2021 |
| CVE-2021-44168 | Fortinet FortiOS Arbitrary File Download Vulnerability | 12/24/2021 |
| CVE-2021-35394 | Realtek Jungle SDK Remote Code Execution Vulnerability | 12/24/2021 |
| CVE-2020-8816 | Pi-Hole AdminLTE Remote Code Execution Vulnerability | 6/10/2022 |
| CVE-2020-17463 | Fuel CMS SQL Injection Vulnerability | 6/10/2022 |
| CVE-2019-7238 | Sonatype Nexus Repository Manager Incorrect Access Control Vulnerability | 6/10/2022 |
| CVE-2019-13272 | Linux Kernel Improper Privilege Management Vulnerability | 6/10/2022 |
| CVE-2019-10758 | MongoDB mongo-express Remote Code Execution Vulnerability | 6/10/2022 |
| CVE-2019-0193 | Apache Solr DataImportHandler Code Injection Vulnerability | 6/10/2022 |
| CVE-2017-17562 | Embedthis GoAhead Remote Code Execution Vulnerability | 6/10/2022 |
| CVE-2017-12149 | Red Hat Jboss Application Server Remote Code Execution Vulnerability | 6/10/2022 |
| CVE-2010-1871 | Red Hat Linux JBoss Seam 2 Remote Code Execution Vulnerability | 6/10/2022 |

Binding Operational Directive (BOD) 22-01: Reducing the Significant Risk of Known Exploited Vulnerabilities established the Known Exploited Vulnerabilities Catalog as a living list of known CVEs that carry significant risk to the federal enterprise. BOD 22-01 requires FCEB agencies to remediate identified vulnerabilities by the due date to protect FCEB networks against active threats. See the BOD 22-01 Fact Sheet for more information.

Although BOD 22-01 only applies to FCEB agencies, CISA strongly urges all organizations to reduce their exposure to cyberattacks by prioritizing timely remediation of Catalog vulnerabilities as part of their vulnerability management practice. CISA will continue to add vulnerabilities to the Catalog that meet the specified criteria.

by Contributed | Dec 10, 2021 | Technology


The Azure PowerShell team is proud to announce a new major version of the Az PowerShell module. Following our release cadence, this is the second breaking change release for 2021. Because this release includes updates related to security and the switch to MS Graph, we recommend that you review the release notes before upgrading.

MS Graph support

Azure PowerShell offers a set of cmdlets allowing basic management of AzureAD resources (Applications, Service Principal, Users, and Groups). Through Az 6.x, those cmdlets were using the AzureAD Graph API. Starting with Az 7, those cmdlets are now using the Microsoft Graph API. Because the AzureAD Graph API has announced its retirement, we highly recommend that you consider upgrading to Az 7 at your earliest convenience.

The parameters required depend on the API definition and so does the object returned on the response of the API. Our north star in this effort has been to minimize the breaking changes exposed by the cmdlets. Because of the behavior differences between MS Graph API and AzureAD Graph API, some breaking changes could not be avoided. For example, the MS Graph API does not allow setting the password when creating a Service Principal. We removed this parameter from the new cmdlets.

In some cases, cmdlets of a service transparently execute Azure AD operations. For example, when creating an AKS cluster, a service principal will be created if it is not provided. Az.KeyVault, Az.AKS, Az.SQL have been updated and now use Microsoft Graph for those transparent operations. Az.HDInsights, Az.StorageSync, Az.Synapse and the Authorization cmdlets in Az.Resources will be updated shortly, and this change will be transparent.

For your convenience, we have compiled the breaking changes in the article: AzureAD to Microsoft Graph migration changes in Azure PowerShell.

Should you face issues with the Graph cmdlets, please consult our troubleshooting guide or open an issue on GitHub.

Invoke-AzRestMethod supports data plane and MS Graph

The purpose of the `Invoke-AzRestMethod` cmdlet is to offer a backup solution for when a native cmdlet does not exist for a given resource.

Our initial implementation of this cmdlet supported only management plane operations for Azure Resource Manager. With the support for MS Graph, we updated this cmdlet so it could also serve as a backup to manage MS Graph resources. From the module implementation perspective, the MS Graph API is treated like a data plane API, so we added support for MS Graph and any Azure data plane.

For example, the following command will retrieve information about the current signed in user via the MS Graph API:

Invoke-AzRestMethod -Uri https://graph.microsoft.com/v1.0/me

Security improvement

When connecting with a service principal, we identified that the secret associated with a service principal or the certificate password would be exposed in a nested property of the object returned by `Connect-AzAccount`. We removed the properties named `ServicePrincipalSecret` and `CertificatePassword` from this object.

Since this property could be exposed in logs or debugging traces of scripts running in automation environments like ADO, we highly recommend that you consider upgrading to the most recent version of Az.Accounts or Az.

Improved support for cloud native services (AKS and ACI updates)

We are continuing our efforts to improve the support of container-based services. In this release, we focused on AKS and ACI.

`Invoke-AzAksRunCommand` has been added to run a shell command using kubectl or helm against an AKS cluster. The response is available as a property of the returned object. This cmdlet greatly simplifies the management of the resources in a cluster. Since the cmdlet also supports file attachment, it is possible to manage Kubernetes clusters and associated applications (for example via a helm chart) directly from PowerShell.

We have greatly improved networking support of AKS clusters. We’ve added support for the following parameters: ‘NetworkPolicy’, ‘PodCidr’, ‘ServiceCidr’, ‘DnsServiceIP’, ‘DockerBridgeCidr’, ‘NodePoolLabel’, ‘AksCustomHeader’, ‘EnableNodePublicIp’, and ‘NodePublicIPPrefixID’.

We also improved the manageability of nodes in an AKS cluster using Azure PowerShell. It is now possible to perform the following operations:

- Change the number of nodes in a node pool

- Upgrade cluster when node pool version does not match the cluster version

We made two additions to the ACI (Azure Container Instance) module:

- `Invoke-AzContainerInstanceCommand` now establishes a connection with the container and returns the output of the command that was executed within the container.

- `Restart-AzContainerGroup` has been added. If a container image has been updated, containers will run with the new version.

We will continue to improve the PowerShell experience with services running cloud native applications.

Additional resources

The Azure PowerShell team is listening to your feedback on the following channels:

- GitHub issues to report issues or feature requests. We triage issues several times a week and provide an initial answer as soon as we can.

- GitHub discussions to open discussions or share best practices.

- @AzurePosh on Twitter to engage informally with the team.

Thank you!

Damien,

on behalf of the Azure PowerShell team

by Scott Muniz | Dec 10, 2021 | Security, Technology


The Apache Software Foundation has released a security advisory to address a remote code execution vulnerability (CVE-2021-44228) affecting Log4j versions 2.0-beta9 to 2.14.1. A remote attacker could exploit this vulnerability to take control of an affected system. Log4j is an open-source, Java-based logging utility widely used by enterprise applications and cloud services.

CISA encourages users and administrators to review the Apache Log4j 2.15.0 Announcement and upgrade to Log4j 2.15.0 or apply the recommended mitigations immediately.

by Scott Muniz | Dec 10, 2021 | Security, Technology


CISA has released an Industrial Controls Systems Medical Advisory (ICSMA) detailing a vulnerability in multiple Hillrom Welch Allyn cardiology products. An attacker could exploit this vulnerability to take control of an affected system.

CISA encourages technicians and administrators to review ICSMA-21-343-01: Hillrom Welch Allyn Cardio Products for more information and apply the necessary mitigations.

by Scott Muniz | Dec 9, 2021 | Security

This article was originally posted by the FTC. See the original article here.

Automakers are producing fewer new cars right now due to a computer chip shortage, and many people are looking at used cars instead. If you’re shopping for a used car and feeling rushed to buy a car before you can fully check it out — stop! Some used cars may have flood damage.

After a hurricane or flood, storm-damaged cars are sometimes cleaned up and taken out of state for sale. You may not know a car is damaged until you look at it closely. Here are some steps to take when you shop:

Check for signs and smells of flood damage. Is there mud or sand under the seats or dashboard? Is there rust around the doors? Is the carpet loose, stained, or mismatched? Do you smell mold or decay — or an odor of strong cleaning products — in the car or trunk?

Check for a history of flood damage. The National Insurance Crime Bureau’s (NICB) free database will show if a car was flood-damaged, stolen but not recovered, or otherwise declared as salvaged, but only if the car was insured when it was damaged.

Get a vehicle history report. Start at vehiclehistory.gov to get free information about a vehicle’s title, most recent odometer reading, and condition. For a fee, you can get other reports with additional information, like accident and repair history. The FTC doesn’t endorse any specific services. Learn more at ftc.gov/usedcars.

Get help from an independent mechanic. A mechanic can inspect the car for water damage that can slowly destroy mechanical and electrical systems and cause rust and corrosion.

Report fraud. If you suspect a dealer is knowingly selling a storm-damaged car or a salvaged vehicle as a good-condition used car, contact the NICB. Also tell the FTC at ReportFraud.ftc.gov and your state attorney general.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Dec 9, 2021 | Technology


This is the next segment of our blog series highlighting Microsoft Learn Student Ambassadors who achieved the Gold milestone and have recently graduated from university. Each blog in the series features a different student and highlights their accomplishments, their experience with the Student Ambassadors community, and what they’re up to now.

Today we meet Bethany Jepchumba, who is from Kenya and recently graduated from Jomo Kenyatta University of Agriculture and Technology with a degree in Business Innovation Technology Management.

Responses have been edited for clarity and length.

When you joined the Student Ambassador community in September of 2019, did you have specific goals you wanted to reach, such as a particular skill or quality? What were they? Did you achieve them? How has the community impacted you in general?

Coming from a non-technical background, tech communities had a profound impact on my journey in tech. I wanted to spread the technology gospel to all and have more learners join in, so I joined the Student Ambassador community.

As a Student Ambassador, what was the biggest accomplishment that you’re the proudest of and why?

I managed a Data Science and Artificial Intelligence community in Kenya with a co-lead in 2020 where we conducted 10+ events created to skill up beginners. We had over 500 learners in three months during the COVID-19 pandemic.

Additionally, I was an organizer of the first Microsoft Student Summit Africa in 2020. The event was a collaboration between Student Ambassadors from Kenya and Nigeria and received a total of 3,000+ RSVPs. There were 3 different tracks: Artificial Intelligence, Power Platform, Web Development. My main role was leading the team in designing the conference, moderating sessions, and preparing the speakers. I also stepped in to do an Introduction to DevOps session without any prior preparation when our speaker could not join the call.

I also led a team of five to win a five-week Game of Learners hackathon that had 60 participants. Winners were awarded one-on-one mentorship sessions with different industry professionals, including one with Microsoft’s Donovan Brown. I also delivered a workshop to 100+ on Manipulating and Cleaning Data to the Microsoft Reactor Community.

What are you doing now that you’ve graduated?

My journey in the Student Ambassador community pushed me to empower the next generation of techies. Currently, I am a Program Coordinator Associate at Andela, a unicorn that matches global companies to remote talent in Africa. I enable the skilling of over 50,000 learners through partnerships with global companies such as Microsoft, Google, Salesforce, and Facebook.

If you could redo your time as a Student Ambassador, is there anything you would have done differently?

In the program, I did my best, and I gave my best. If I could go back, I would do more of what I was able to accomplish, and I’d collaborate and speak up more.

If you were to describe the community to a student who is interested in joining, what would you say about it to convince them to join?

There is a lot of swag, free Azure credits, and certification vouchers for Student Ambassadors. You will get to make long-time friends and have access to Microsoft Cloud Advocates. The opportunities in the program are limitless, and you get to craft your own experience.

What advice would you give to new Student Ambassadors?

Collaborate. There is power in working together. If you have an idea for an event or engagement you want to organize, include others–the more the merrier. Make Microsoft Teams your friend, learn how to navigate it, and you will not miss any important collaborations. Lastly, ensure you have at least one Student Ambassador engagement per month, whether it is publishing a blog, speaking at an event, hosting your own sessions, or doing a certification. Ensure that you constantly take advantage of the program and all it offers. Remember, all the efforts you put in the program will be rewarded in equal measure.

Do you have a motto in life, a guiding principle that drives you?

“Do what you love, love what you do, and with all your heart give yourself to it.”

– Roy T. Bennett

What is one random fact few people know about you?

One thing on my bucket list is to visit an upside-down house, either in South Africa or the UK. I still cannot believe they exist.

Good luck to you in the future, Bethany!

Readers, you can keep in touch with Bethany on LinkedIn, GitHub, Instagram, Twitter, or on her blog.

by Scott Muniz | Dec 9, 2021 | Security, Technology


Cisco has released a security advisory to address Cisco products affected by multiple vulnerabilities in Apache HTTP Server 2.4.48 and earlier releases. An unauthenticated remote attacker could exploit these vulnerabilities to take control of an affected system.

CISA encourages users and administrators to review Cisco Advisory cisco-sa-apache-httpd-2.4.49-VWL69sWQ and apply the necessary updates.

by Scott Muniz | Dec 9, 2021 | Security, Technology


CISA has released Capacity Enhancement Guide (CEG): Social Media Account Protection, which details ways to protect the security of organization-run social media accounts. Malicious cyber actors that successfully compromise social media accounts—including accounts used by federal agencies—could spread false or sensitive information to a wide audience. The measures described in the CEG aim to reduce the risk of unauthorized access on platforms such as Twitter, Facebook, and Instagram.

CISA encourages social media account administrators to implement the protection measures described in CEG: Social Media Account Protection:

- Establish and maintain a social media policy

- Implement credential management

- Enforce multi-factor authentication (MFA)

- Manage account privacy settings

- Use trusted devices

- Vet third-party vendors

- Maintain situational awareness of cybersecurity threats

- Establish an incident response plan

Note: although CISA created the CEG primarily for federal agencies, the guidance is applicable to all organizations.

by Scott Muniz | Dec 8, 2021 | Security

This article was originally posted by the FTC. See the original article here.

One of our favorite things to do at the FTC — whenever possible — is to return money to people who lost it to scammers. That’s what we’re doing today. If you lost money to a business coaching scheme that did business as Coaching Department or Apply Knowledge, among other names, you should be getting a check in the mail soon.

According to the FTC, these companies made millions of dollars by falsely telling people they could earn thousands of dollars a month by paying for business coaching services and creating an online business. People spent thousands — and sometimes tens of thousands — of dollars on these coaching services before finding out they didn’t deliver on their promises.

This is the third check the FTC has been able to send to people who lost money to this business coaching scam and brings their total recovery to 48% of their losses. Previous checks were sent out in October 2019 and June 2020. The checks going out today total $25.6 million and average $2,388.

If you paid money to these companies but didn’t get the earlier checks, reach out to the refund administrator Analytics LLC at 1-844-982-1005. To learn more about the FTC’s refund program, visit ftc.gov/refunds. Remember that the FTC never requires people to pay money or give account information to cash a refund check. Spotted someone doing that? Report it at ReportFraud.ftc.gov.

