Business opportunities and trainings that claim you’ll make big money are often scams

This article was originally posted by the FTC. See the original article here.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

This article is contributed. See the original author and article here.

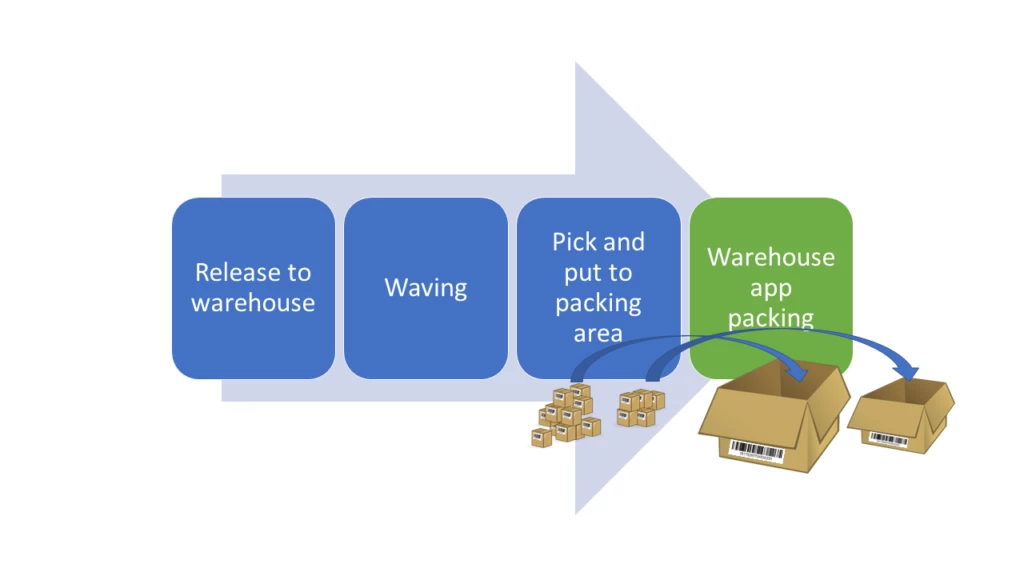

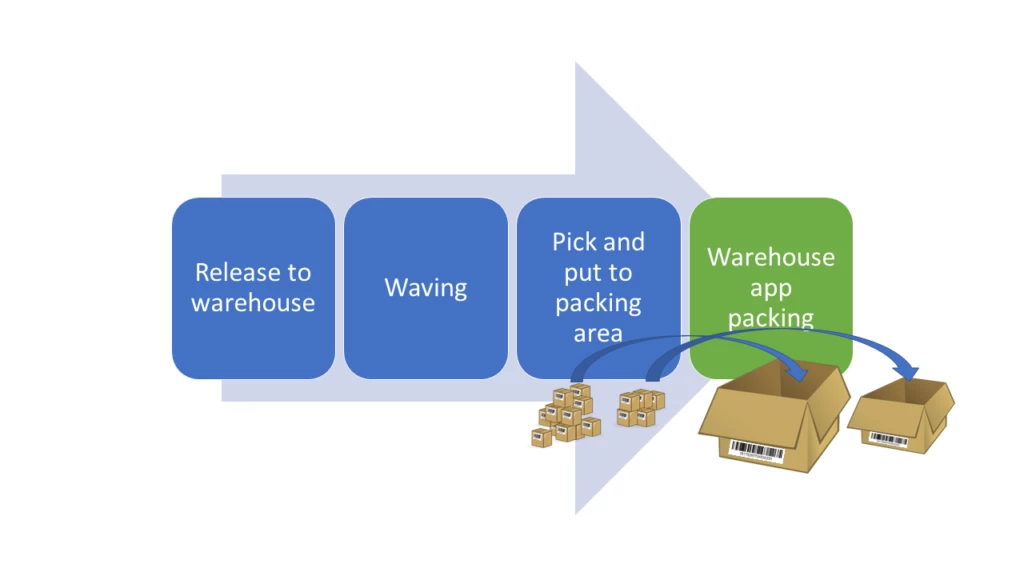

Traditionally, warehouse workers pack items for shipment at a specific packing station, using a process that’s optimized to ship small and medium-sized parcels. To improve packing efficiency when working in larger areas and with larger items, the Warehouse Management mobile app in Microsoft Dynamics 365 Supply Chain Management now provides a mobile packing experience that gives workers the freedom to move around while performing packing activities.

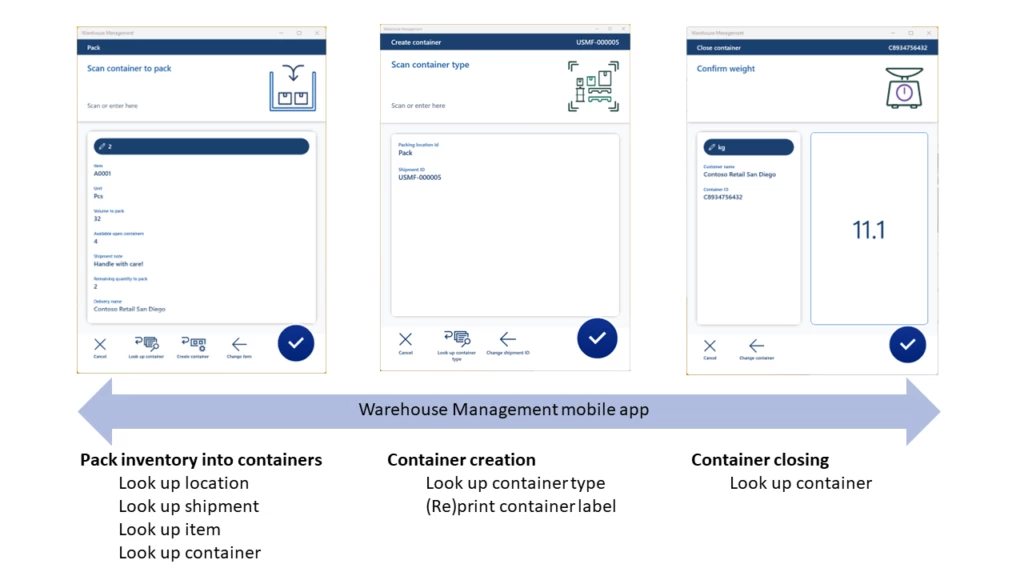

The packing flow in the Warehouse Management mobile app includes three main processes:

To provide for more flexibility, you can easily embed the container creation and closing processes in any packing operation step using a detour. The same applies to look-up requirements (for example, when not able to scan an item barcode label) by querying data using mobile app detours.

To improve packing efficiency even more, you can configure the packing process to automatically print a container label from the mobile app. Workers can apply the label to the container to ease the packing validation process when they are packing items into the container.

To learn more, read the documentation: Packing containers with the Warehouse Management mobile app | Microsoft Learn

Try out an example scenario.

The post Improve packing efficiency with the Warehouse Management mobile app appeared first on Microsoft Dynamics 365 Blog.

In the world of hybrid work, ensuring all participants feel included and can contribute their ideas in an effective and visual way is incredibly important. A successful meeting not only invites all participants but provides everyone with the tools to contribute to the conversation. For your next Microsoft Teams meeting, you can lead more visually engaging discussions that hit your objectives before, during, and after the meeting by using Microsoft Whiteboard. With new innovations in Whiteboard, you can feel empowered to create a strong and productive meeting – together.

Quickly set up your whiteboard with discussion objectives using one of the 60+ Whiteboard templates. Whether you’re leading a discussion for brainstorming, problem solving, or team bonding, you can easily use template search in the create panel. Template search will be generally available for Microsoft 365 commercial users in December 2022.

Looking to enhance your meeting whiteboard? Embedded online videos and links can add visual richness and make your meeting more dynamic. Avoid fussing with tab and window switching that interrupts the flow of a meeting by including supplemental videos directly on the whiteboard with embedded online videos. Embedded online videos and links is now generally available for Microsoft 365 commercial users.

An image of a user embedding a YouTube video and website link onto the whiteboard from the create panel in Whiteboard.

Engaging your participants from the moment they join the meeting is easy. Pull up the whiteboard you prepared earlier by using open existing board in Teams meetings, and your meeting is already off to a great start. Open existing board in Teams meetings is now generally available for Microsoft 365 commercial users.

Provide time for individual participants to brainstorm for inclusive discussions with timer in Whiteboard. Create focus, stay on-task, and keep your meeting moving by setting a timer for agenda activities. Timer will be generally available for Microsoft 365 commercial users early next calendar year.

Invite users on a whiteboard to follow your viewpoint as you navigate through the board for a more visually guided discussion. The facilitator originally locates the follow feature by selecting their profile picture in the Whiteboard roster. When follow is activated, a participant can pause following the facilitator to continue to move through and contribute to the board on their own. With just one click, the participant can resume follow to get back to where the facilitator is at any time. Microsoft Teams Rooms devices will also be enhanced with automatic follow sessions, bringing greater flexibility for you in the conference room for hybrid work. Follow will start rolling out to Microsoft 365 users with a work account by the end of the calendar year.[1]

Enhance the look and legibility of your whiteboard with automatic resizing of text inside sticky notes so you can add more lines of text without needing to scroll. Text inside sticky notes now also automatically scales in text size as you resize the sticky note, increasing legibility, even at lower zoom levels. Finally, text formatting features like bold, italics and underlining, as well as a new, more professional default font, help emphasize your fresh ideas across notes, text boxes, and shapes. For Microsoft 365 commercial users, auto-resize text in notes is now generally available and text formatting will be rolling out to general availability by the end of the calendar year.

An image displaying sticky notes that automatically resize the font with amount of text and text formatting options on Whiteboard.

Create collaborative relationships by knowing who is contributing notes and feedback with sticky note attribution. Sticky note attribution will start rolling out to Microsoft 365 commercial users by the end of the calendar year.

Whiteboard is a new Microsoft Loop endpoint, enabling you to copy and paste Loop components into Whiteboard from Teams chat and soon from Outlook mail and Word for the web. Loop components in Whiteboard help your team continue adding information to lists, tables and more while they brainstorm in Whiteboard. Changes made in Loop components in Whiteboard will stay in sync across all the places the component has been shared, ensuring everyone has the latest info. Copy and paste Loop components in Whiteboard will start rolling out to be generally available for Microsoft 365 commercial users in the coming months.

The collaboration doesn’t have to end after a synchronous meeting! The whiteboard you and your participants collaborated on can be accessed in the Teams chat under the Whiteboard tab. With the ease of Whiteboard integration in Teams, you and your participants can continue collaborating on the same whiteboard asynchronously. For your next synchronous meeting, start again with open existing Whiteboard in Teams Meetings and show the progress of iterative collaboration in between meetings.

An image demonstrating a magnifier window showcasing the Whiteboard tab in a Teams chat.

With comments you can share your thoughts, celebrate with your teammates, or just have a conversation. You can create an anchored comment that will stay connected to the content being shared, keeping things simple and effective, while comment bubbles help you understand the sentiment and find areas where there is more discussion on the content. Commenting will start rolling out to Microsoft 365 commercial users by the end of the calendar year.

We hear from customers every day that while the future of work may be evolving, one thing remains clear: it’s never been more important for people to be able to collaborate effectively wherever and whenever. We remain committed to and focused on meeting this need, so both remote and in-person attendees can visually collaborate across the same digital canvas. To get started, try Whiteboard today.

To learn more, visit the Whiteboard product page or the Whiteboard support site.

Did you know? The Microsoft 365 Roadmap is where you can get the latest updates on productivity apps and intelligent cloud services. Check out what features are in development or coming soon on the Microsoft 365 Roadmap, or view Microsoft Whiteboard roadmap items here.

Footnotes

[1] Follow will not be generally available for school accounts.

CISA released one Industrial Control Systems (ICS) advisory on November 15, 2022. This advisory provides timely information about current security issues, vulnerabilities, and exploits surrounding ICS.

CISA encourages users and administrators to review the newly released ICS advisory for technical details and mitigations:

The Transfer Files feature is being retired, but transferring files is becoming easier in Office Mobile.

As we evolve to offer the best of Microsoft 365 on mobile, we constantly look at new ways to bring value to our users while ensuring you remain productive across your devices.

To keep the Office app in tune with the needs of our users, the Transfer Files feature is being replaced by OneDrive, which is seamlessly integrated with the Office app. You will be able to use Transfer Files as a legacy feature on Android and iOS in parallel with OneDrive until December 31, 2022.

Rest assured that files that have been sent and received using the Transfer Files feature will remain in the Office app and will be unaffected by this change.

Learn more on how you can use OneDrive to share files here:

Many ISVs (Independent Software Vendors) exchange information with devices, applications, services, or humans. In many cases the information passed is a file or a blob, and each of these ISVs needs to implement a service for it. In the past few months, I discussed this capability with several (more than four) different customers, each with slightly unique needs. When I tried to generalize the need, it was clear: they wanted a quick, safe means to exchange files with customers or devices.

So, I tried to translate these asks into user stories:

As a service provider, I need my customers to upload content in a secure, easy-to-maintain micro-service exposed as an API (application programming interface) so that I can process the content uploaded and perform an action on it.

As a service provider, I would like to enable the download of specific files for authorized devices or humans so that they could download the content directly from storage.

As a service provider, I would like to offer my customers the ability to see what files they have already uploaded so that they can download them when needed with a time-restricted SAS (shared access signature) token.

Cool, nice start, but if we look at the underlying ask, does it have to be exposed to humans? Why not create a micro-service that handles this requirement and delegates the interaction with humans to the application already interacting with users?

I decided to use this opportunity to learn Azure Container Apps (ACA). For more information on ACA, please review this documentation.

The use of ACA provides significant security benefits (among others) with respect to VNet (Virtual Network) integration. I did consider using Azure Functions; however, when comparing the Azure Functions SKU that supports VNet integration with the potential cost of ACA, ACA would incur lower costs.

While ACA can integrate with a VNet, my initial sample repo does not include it yet. I decided to focus on minimal applicative and network capabilities to keep it simple.

I also wanted readers who want to experiment with the code to have a quick way to do it. This is why time was spent on creating the Bicep code that spins up the entire solution.

There are no application settings which include secrets; all connection strings or keys would be stored in Azure Key Vault, while the access to this vault is governed by RBAC (role-based access control) and only specific identities can access it.

I used .NET 6 as the platform, with C# as the language. The secured web API template was my starting point, as it provides most of what is required to create such a service; wrapping it as a container and deploying it to ACA was the additional effort.

Here is a diagram of the solution components:

With the initial drop, content validation is out-of-scope.

My repo (which will be moved to Azure Samples) also includes a few GitHub Actions that perform the following activities:

Bicep is used to provision all required resources, excluding the AAD (Azure Active Directory) entities and the resource group in which all components would be provisioned.

The best practice is to avoid using the “latest” tag; as a user of ACA, you currently do not have the ability to control the image-pulling trigger, which is the equivalent of “Image Pull Policy” in Kubernetes. Instead, use a unique, autogenerated tag, which can be generated by your CD (Continuous Deployment) pipeline. In the sample repo, the GitHub Action uses the git commit hash as the image tag.

When working locally, you can leverage the settings file, but when working with ACA, I decided to leverage environment variables. My next learning was based on the following question:

How am I going to inject these values into an environment provisioned by the Bicep script?

Well, the answer is to use environment variables. When working locally, you can use the pattern ‘AzureAd:Audience’. However, when using ACA, you need a slightly different pattern: ‘AzureAd__Audience’, with the double underscore indicating a section drill-down. (The reason is the operating system: the colon separator does not work in environment variable names on all platforms, while the double underscore does.)

Note: it can take minutes for changes to be reflected in the GUI (graphical user interface).
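The double-underscore pattern can be tried locally with a minimal sketch like the one below. It assumes an ASP.NET Core 6 project (so Microsoft.Extensions.Configuration and its environment variables provider are already referenced); the value `api://sample-audience` is a made-up placeholder, and setting the variable from code only simulates what the container environment would provide.

```csharp
using System;
using Microsoft.Extensions.Configuration;

// Simulate the variable that the container environment would supply.
// "api://sample-audience" is a hypothetical placeholder value.
Environment.SetEnvironmentVariable("AzureAd__Audience", "api://sample-audience");

IConfiguration config = new ConfigurationBuilder()
    .AddEnvironmentVariables()
    .Build();

// The environment variables provider exposes "__" as the ':' section separator,
// so both lookups below read the same value.
string? audience = config["AzureAd:Audience"];
string? viaSection = config.GetSection("AzureAd")["Audience"];

Console.WriteLine(audience);
Console.WriteLine(viaSection);
```

Because the mapping happens inside the configuration provider, application code can keep using the ‘AzureAd:Audience’ style keys whether the value comes from a settings file or from an environment variable.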

Using .NET 6 allows programmers to focus on the applicative content they want to create. It is, in some cases, a double-edged sword, since some of the logs and activities are masked.

For example, when you wish to use a secret from Azure Key Vault, you can access it as if it were part of your configuration, assuming you registered it correctly:

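    // Needs 'using Azure.Identity;' for DefaultAzureCredential, plus the
    // Azure.Extensions.AspNetCore.Configuration.Secrets package for AddAzureKeyVault.
    builder.Configuration.AddAzureKeyVault(
        new Uri($"https://{builder.Configuration["keyvault"]}.vault.azure.net/"),
        new DefaultAzureCredential(new DefaultAzureCredentialOptions
        {
            // Client ID of the user-assigned managed identity that reads the vault.
            ManagedIdentityClientId = builder.Configuration["AzureADManagedIdentityClientId"]
        }));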

This single statement (split across lines for ease of reading) registers the Key Vault as a configuration source, with the managed identity acting as its reader. Note that in many cases managed identities would require just a subset of the secrets; for further reading and best practices, please follow these guidelines.

Once you have done this, accessing a secret from your code looks like this, where ‘storagecs’ is a secret configured by the Bicep code.

string connectionString = _configuration.GetValue<string>("storagecs");
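With a storage connection string available, the time-restricted SAS download link from the third user story could be produced along these lines. This is a minimal sketch and not necessarily how the sample repo does it: it assumes the Azure.Storage.Blobs package, the helper name `CreateReadOnlyDownloadLink`, the container `uploads`, the blob `report.pdf`, and the `STORAGE_CONNECTION_STRING` variable are all hypothetical, and it falls back to the local storage emulator connection string so it can run without an Azure subscription.

```csharp
using System;
using Azure.Storage.Blobs;
using Azure.Storage.Sas;

// Hypothetical names; in the service the connection string would come from
// configuration (for example the 'storagecs' secret), not an environment variable.
string connectionString =
    Environment.GetEnvironmentVariable("STORAGE_CONNECTION_STRING")
    ?? "UseDevelopmentStorage=true"; // local storage emulator account

Uri link = CreateReadOnlyDownloadLink(
    connectionString, "uploads", "report.pdf", TimeSpan.FromMinutes(15));
Console.WriteLine(link);

// Builds a read-only SAS URI for one blob that expires after 'lifetime'.
static Uri CreateReadOnlyDownloadLink(
    string connectionString, string containerName, string blobName, TimeSpan lifetime)
{
    var blobClient = new BlobClient(connectionString, containerName, blobName);

    // Signing a SAS locally requires a client created with an account key.
    if (!blobClient.CanGenerateSasUri)
        throw new InvalidOperationException(
            "The client was not created with a shared key credential.");

    return blobClient.GenerateSasUri(
        BlobSasPermissions.Read, DateTimeOffset.UtcNow.Add(lifetime));
}
```

Signing happens locally, so no call is made to the storage account when the link is created. Keeping the lifetime short limits how long a leaked link remains usable.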

Adding authentication is similarly ‘difficult’:

    builder.Services.AddAuthentication(JwtBearerDefaults.AuthenticationScheme)
        .AddMicrosoftIdentityWebApi(builder.Configuration.GetSection("AzureAd"));

Again, one statement, which assumes you have a JSON (JavaScript Object Notation) section named ‘AzureAd’ in your app settings that contains all the details required to perform authentication and authorization.

    "AzureAd": {
      "Instance": "https://login.microsoftonline.com/",
      "Domain": "<your domain>",
      "ClientId": "<your app registration client id>",
      "TenantId": "<your tenant>",
      "Audience": "<your app registration client id>",
      "AllowWebApiToBeAuthorizedByACL": true
    }

Let us unpack the above settings. It took me some time to understand that without the last two items, the default authentication fails. The .NET platform checks that the value of the audience (‘aud’) claim in the JWT (JSON Web Token) matches the ‘Audience’ value configured for the registered application.

The last setting tells the platform not to check for any other claims or roles. If you need that type of authorization, it is up to you to implement it; here is an example how-to guide.
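When the audience check fails, it helps to look at what the token actually carries. The sketch below is a diagnostic aid only, under stated assumptions: it decodes the payload of a JWT and reads the ‘aud’ claim using only the base class library, it does not verify the signature, and it is in no way a replacement for the validation the platform performs. The GUID and the helper names are hypothetical, and the token is built locally (unsigned) so the sketch runs without calling Azure AD.

```csharp
using System;
using System.Text;
using System.Text.Json;

// Hypothetical value standing in for the "Audience" setting in the AzureAd section.
string expectedAudience = "11111111-2222-3333-4444-555555555555";

// An unsigned, locally built token so the sketch runs offline.
string token = BuildUnsignedDemoToken(expectedAudience);

string? actualAudience = ReadAudience(token);
Console.WriteLine(actualAudience == expectedAudience ? "audience matches" : "audience mismatch");

// Decodes the JWT payload and returns its 'aud' claim when it is a single string.
// Diagnostic only: the signature is NOT checked here.
static string? ReadAudience(string jwt)
{
    string[] parts = jwt.Split('.');
    if (parts.Length != 3)
        throw new FormatException("Expected header.payload.signature");

    string json = Encoding.UTF8.GetString(FromBase64Url(parts[1]));
    using JsonDocument doc = JsonDocument.Parse(json);
    return doc.RootElement.TryGetProperty("aud", out JsonElement aud)
           && aud.ValueKind == JsonValueKind.String
        ? aud.GetString()
        : null;
}

// Converts base64url (used by JWTs) back to bytes, restoring the padding.
static byte[] FromBase64Url(string value)
{
    string s = value.Replace('-', '+').Replace('_', '/');
    s = s.PadRight(s.Length + (4 - s.Length % 4) % 4, '=');
    return Convert.FromBase64String(s);
}

static string ToBase64Url(string text) =>
    Convert.ToBase64String(Encoding.UTF8.GetBytes(text))
        .TrimEnd('=').Replace('+', '-').Replace('/', '_');

// Builds header.payload. with an empty signature, for demonstration only.
static string BuildUnsignedDemoToken(string audience)
{
    string header = ToBase64Url("{\"alg\":\"none\"}");
    string payload = ToBase64Url(JsonSerializer.Serialize(new { aud = audience }));
    return $"{header}.{payload}.";
}
```

Pasting a real access token into `token` and comparing the result with the configured ‘Audience’ value is a quick way to see whether the two disagree.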

One of my initial dilemmas was how to spin up a fully functional environment, which requires an image to be available for a pull when the ACA is provisioned. With the help of Yuval Herziger, I created a GitHub Action that is triggered on a release, builds a vanilla image of my code, and stores it in GHCR (GitHub Container Registry).

Long story short, unless you know which flow you are trying to implement, you can find time passing with minimal progress. So, choose the right flow. Henrik Westergaard Hansen helped me here. He listened to my use cases and said my flow should be the client credentials flow, as it’s service-to-service communication. I cannot emphasize enough how important this is; the moment I understood it, the time to completion was hours.
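For readers who have not used the client credentials flow, a calling service could obtain a token and call the API roughly as follows. This is a sketch under assumptions, not the sample repo's client: it uses the Microsoft.Identity.Client (MSAL) package, every `DEMO_*` environment variable name is hypothetical, and the scope assumes the API's application ID URI has the default `api://<client id>` form. A real service would read the secret from Key Vault instead of an environment variable.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using Microsoft.Identity.Client;

// All DEMO_* names are hypothetical placeholders for this sketch.
string? tenantId = Environment.GetEnvironmentVariable("DEMO_TENANT_ID");
string? callerClientId = Environment.GetEnvironmentVariable("DEMO_CALLER_CLIENT_ID");
string? callerSecret = Environment.GetEnvironmentVariable("DEMO_CALLER_CLIENT_SECRET");
string? apiClientId = Environment.GetEnvironmentVariable("DEMO_API_CLIENT_ID");
string? apiUrl = Environment.GetEnvironmentVariable("DEMO_API_URL");

if (tenantId is null || callerClientId is null || callerSecret is null
    || apiClientId is null || apiUrl is null)
{
    Console.WriteLine("Set the DEMO_* environment variables to run this sketch.");
    return;
}

// The calling service authenticates as itself; no user is involved.
IConfidentialClientApplication caller = ConfidentialClientApplicationBuilder
    .Create(callerClientId)
    .WithClientSecret(callerSecret)
    .WithAuthority($"https://login.microsoftonline.com/{tenantId}")
    .Build();

// Client credentials requests use the '/.default' scope of the target API.
// Assumption: the API's application ID URI is 'api://<client id>'.
string[] scopes = { $"api://{apiClientId}/.default" };
AuthenticationResult result = await caller.AcquireTokenForClient(scopes).ExecuteAsync();

using var http = new HttpClient();
http.DefaultRequestHeaders.Authorization =
    new AuthenticationHeaderValue("Bearer", result.AccessToken);

HttpResponseMessage response = await http.GetAsync(apiUrl);
Console.WriteLine((int)response.StatusCode);
```

Because no user signs in, the resulting token identifies only the calling application, which fits the service-to-service use case described above.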

Happy Friday, MTC! It’s 11/11 – time to make a wish – and let’s see what’s been going on in the community this week!

MTC Moments of the Week

Our MTC Member of the Week spotlight is on @Chandrasekhar_Arya for being a rockstar in the Azure forums, both in starting discussions and helping out other MTC’ers! We really appreciate your contributions to the community :)

Next up, we had another double-header in community events this week! First was our Microsoft Viva Goals AMA, where MTC’ers had the opportunity to ask questions about the Viva Goals goal setting and OKR management solution and get answers from the experts, including @lucyhitz, @Ashwin_Jeyakumar, @gupta_amit, and @balajiseetharaman. In case you missed this event, you can head to Viva Community Discussion Space to ask your questions there!

We also had a brand-new episode of Tips and tricks featuring @Christiaan_Brinkhoff and one of our amazing Windows 365 MVPs, @Ola Ström. You can catch up on demand and hear about Ola’s experiences as well as register for the next event on the Windows in the Cloud page.

And over on the blogs this week, a reminder that all editions of Windows 10, version 21H1 will reach end of servicing on December 13, 2022. @Mabel_Gomes wrote a helpful article to guide you with the next steps, so make sure you check it out!

Unanswered Questions – Can you help them out?

Every week, users come to the MTC seeking guidance or technical support for their Microsoft solutions, and we want to help highlight a few of these each week in the hopes of getting these questions answered by our amazing community!

In the Excel Forum, new MTC’er @ankitsingh2063 is looking for guidance on where to start when writing a Power Query to ‘Get Data’ from a Google Drive folder.

Meanwhile, in the Intune Forum, @ashokdangol is asking the community for best practices to manage a shared PC with multiple users.

Next Week in Events – Mark Your Calendars!

These will be our last events for November before we take a holiday break, so make sure you RSVP!

And for today’s fun fact… did you know that Merriam Webster has a Time Traveler page where you can look up what year a word first entered the dictionary? You can even see what words were “born” the same year as you – “meh”, “photoshop”, and “URL” are just a few of mine. So interesting!

I hope you all have a great weekend and a Happy Veterans Day. Thank you for your service!
