
Neural Text-to-Speech (Neural TTS), part of Speech in Azure Cognitive Services, enables you to convert text to lifelike speech for more natural user interactions. One emerging solution area is creating an immersive virtual experience with an avatar that automatically animates its mouth movements to synchronize with the synthetic speech. Today, we introduce a new feature that lets developers synchronize mouth and face poses with TTS: viseme events.

What is a viseme

A viseme is the visual description of a phoneme in a spoken language. It defines the position of the face and the mouth when speaking a word. With the lip sync feature, developers can get the viseme sequence and the duration of each viseme from generated speech for facial expression synchronization. Visemes can be used to control the movement of 2D and 3D avatar models, matching mouth movements to synthetic speech.

Traditionally, avatar mouth movement is produced manually, frame by frame, which means long production cycles and high labor costs.

The viseme feature generates the corresponding facial parameters from the input text. It greatly expands the number of scenarios by making the avatar easier to use and control. Below are some example scenarios that can be augmented with the lip sync feature.

- Customer service agent: Create an animated virtual voice assistant for intelligent kiosks, building multimodal, integrated services for your customers;

- Newscast: Build immersive news broadcasts and make content consumption much easier with natural face and mouth movements;

- Entertainment: Build more interactive gaming avatars and cartoon characters that can speak with dynamic content;

- Education: Generate more intuitive language teaching videos that help language learners to understand the mouth behavior of each word and phoneme;

- Accessibility: Help the hearing-impaired to pick up sounds visually and “lip-read” any speech content.

How viseme works with Azure neural TTS

The viseme feature turns the input text or SSML (Speech Synthesis Markup Language) into a sequence of viseme IDs and audio offsets. These represent the key poses in observed speech, such as the position of the lips, jaw and tongue when producing a particular phoneme. With the help of a 2D or 3D rendering engine, you can use the viseme output to control the animation of your avatar.

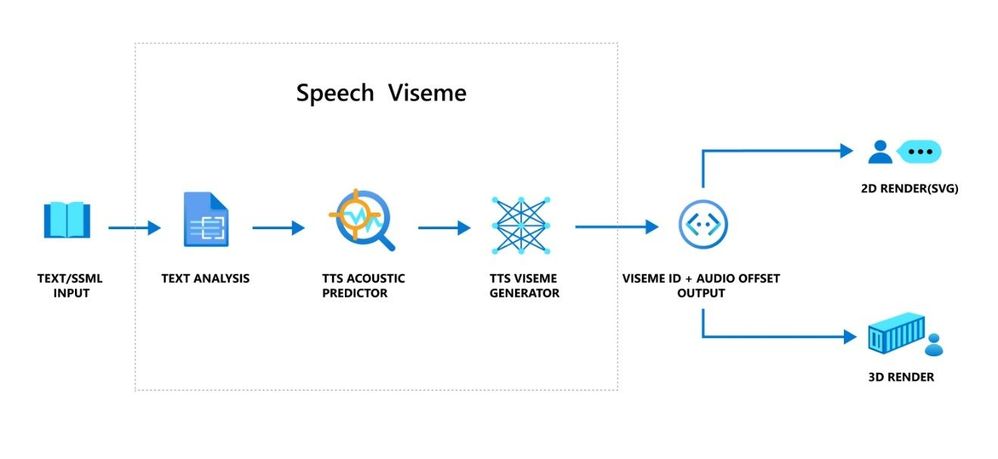

The overall workflow of viseme is depicted in the flowchart below.

The underlying technology for the Speech viseme feature consists of three components: Text Analyzer, TTS Acoustic Predictor, and TTS Viseme Generator.

To generate the viseme output for a given text, the text or SSML is first input into the Text Analyzer, which analyzes the text and provides output in the form of phoneme sequence. A phoneme is a basic unit of sound that distinguishes one word from another in a particular language. A sequence of phonemes defines the pronunciations of the words provided in the text.

Next, the phoneme sequence goes into the TTS Acoustic Predictor and the start time of each phoneme is predicted.

Then, the TTS Viseme Generator maps the phoneme sequence to the viseme sequence and marks the start time of each viseme in the output audio. Each viseme is represented by a serial number, and the start time of each viseme is represented by an audio offset. Often several phonemes correspond to a single viseme, because they look the same on the face when pronounced, such as ‘s’ and ‘z’.
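The many-to-one mapping from phonemes to visemes can be sketched in a few lines of C#. This is only an illustration of the idea, not the service's implementation: the table, its viseme IDs, the `ToVisemes` helper and the sample timings are all hypothetical. Merging adjacent phonemes that share a viseme is also an assumption of this example rather than documented behavior.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical phoneme-to-viseme table: the IDs are placeholders for
// illustration, not the service's actual viseme set.
var phonemeToViseme = new Dictionary<string, int>
{
    ["s"] = 1, ["z"] = 1,            // look alike on the face, so they share a viseme
    ["p"] = 2, ["b"] = 2, ["m"] = 2,
    ["i"] = 3,
};

// Maps timed phonemes to timed visemes. Consecutive phonemes that share a
// viseme are merged (an assumption of this sketch) so each pose starts once.
List<(int VisemeId, int StartMs)> ToVisemes(IEnumerable<(string Phoneme, int StartMs)> phonemes)
{
    var visemes = new List<(int VisemeId, int StartMs)>();
    foreach (var (phoneme, startMs) in phonemes)
    {
        int id = phonemeToViseme[phoneme];
        if (visemes.Count == 0 || visemes[^1].VisemeId != id)
        {
            visemes.Add((id, startMs));
        }
    }
    return visemes;
}

// Hypothetical phoneme start times in milliseconds.
var result = ToVisemes(new[] { ("b", 0), ("i", 80), ("s", 200), ("z", 290) });
foreach (var (id, startMs) in result)
{
    Console.WriteLine($"Viseme ID: {id}, Audio offset: {startMs}ms");
}
```

In this sample, four phonemes produce three visemes, because ‘s’ and ‘z’ collapse into a single mouth pose.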

Here is an example of the viseme output.

(Viseme), Viseme ID: 1, Audio offset: 200ms.

(Viseme), Viseme ID: 5, Audio offset: 850ms.

……

(Viseme), Viseme ID: 13, Audio offset: 2350ms.
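Because each event carries only a start offset, the time a viseme is held has to be derived from the next event. The C# sketch below shows one way to do that, assuming the last viseme lasts until the end of the audio. The `WithDurations` helper, the sample events and the audio length are hypothetical and chosen only for illustration.

```csharp
using System;
using System.Collections.Generic;

// Derives how long each viseme is held: a viseme lasts until the next one
// starts, and the final viseme lasts until the audio ends (an assumption).
List<(int VisemeId, int StartMs, int DurationMs)> WithDurations(
    IReadOnlyList<(int VisemeId, int OffsetMs)> events, int audioLengthMs)
{
    var timed = new List<(int VisemeId, int StartMs, int DurationMs)>();
    for (int i = 0; i < events.Count; i++)
    {
        int endMs = i + 1 < events.Count ? events[i + 1].OffsetMs : audioLengthMs;
        timed.Add((events[i].VisemeId, events[i].OffsetMs, endMs - events[i].OffsetMs));
    }
    return timed;
}

// Hypothetical events (viseme ID, offset in ms) and a hypothetical 450 ms clip.
var sample = new List<(int VisemeId, int OffsetMs)> { (3, 0), (8, 120), (15, 300) };
var timedVisemes = WithDurations(sample, 450);
foreach (var (id, startMs, durationMs) in timedVisemes)
{
    Console.WriteLine($"Viseme ID: {id}, start: {startMs}ms, duration: {durationMs}ms");
}
```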

This feature is built into the Speech SDK. With just a few lines of code, you can easily enable facial and mouth animation using the viseme events together with your TTS output.

How to use the viseme

To enable viseme, you need to subscribe to the VisemeReceived event in the Speech SDK (the TTS REST API doesn’t support viseme). The following snippet illustrates how to subscribe to the viseme event in C#. At the moment, viseme supports only English (United States) neural voices, but it will be extended to more languages later.

using (var synthesizer = new SpeechSynthesizer(speechConfig, audioConfig))
{
    // Subscribes to viseme received event
    synthesizer.VisemeReceived += (s, e) =>
    {
        // AudioOffset is in ticks (100 ns); dividing by 10000 gives milliseconds
        Console.WriteLine($"Viseme event received. Audio offset: " +
            $"{e.AudioOffset / 10000}ms, viseme id: {e.VisemeId}.");
    };

    var result = await synthesizer.SpeakSsmlAsync(ssml);
}

After obtaining the viseme output, you can use these outputs to drive character animation. You can build your own characters and automatically animate the characters.

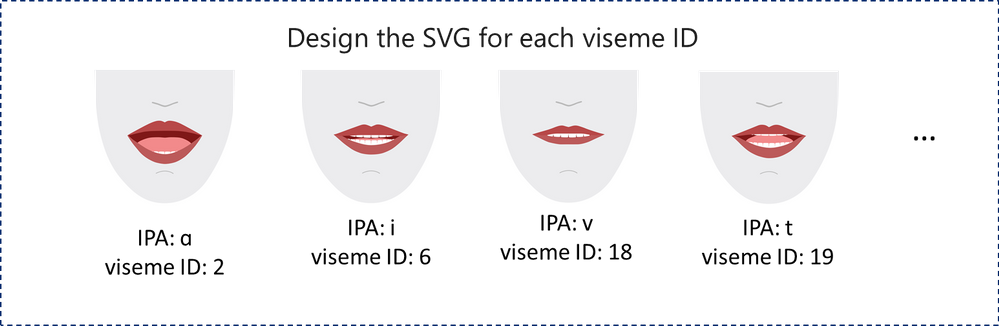

For 2D characters, you can design a character that suits your scenario and use a Scalable Vector Graphics (SVG) image for each viseme ID to get a time-based face position. Using the timing information provided by the viseme events, these SVGs are processed with smoothing modifications to give users a robust animation. For example, the illustration below shows a red lip character designed for language learning. Try the red lip animation experience in Bing Translator, and learn more about how visemes are used to demonstrate the correct pronunciations for words.
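For a 2D character, the core of the render loop is deciding which viseme image to show at the current playback time. Here is a minimal C# sketch of that lookup, assuming the viseme offsets have already been collected and sorted. The sample offsets, the `ActiveViseme` and `FrameFor` helpers and the `viseme-N.svg` naming scheme are hypothetical, and the smoothing step is left out.

```csharp
using System;

// Viseme start offsets in milliseconds, sorted ascending, and the viseme ID
// that starts at each offset. The values are hypothetical.
int[] offsetsMs = { 0, 120, 300 };
int[] visemeIds = { 3, 8, 15 };

// Returns the viseme ID active at the given playback time: the last viseme
// whose start offset is at or before that time.
int ActiveViseme(int playbackMs)
{
    int index = Array.BinarySearch(offsetsMs, playbackMs);
    if (index < 0)
    {
        // No exact match: ~index is the position of the first larger offset.
        index = ~index - 1;
    }
    // Before the first event, fall back to the first viseme.
    return visemeIds[Math.Max(index, 0)];
}

// Hypothetical asset naming scheme: one SVG file per viseme ID.
string FrameFor(int playbackMs) => $"viseme-{ActiveViseme(playbackMs)}.svg";

Console.WriteLine(FrameFor(150));
```

A binary search keeps the lookup cheap even for long utterances, which matters when it runs on every rendered frame.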

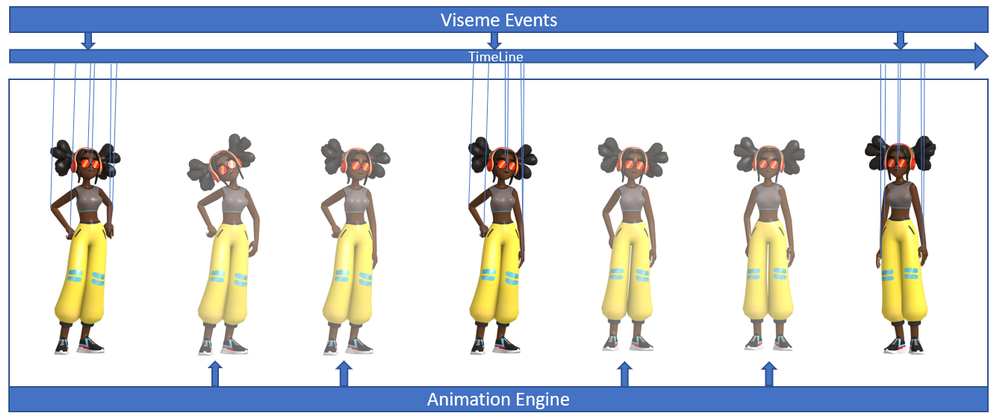

For 3D characters, think of the characters as string puppets. The puppet master pulls the strings from one state to another, and the laws of physics do the rest, driving the puppet to move fluidly. The viseme output acts as the puppet master and provides an action timeline. The animation engine defines the physical laws of action. By interpolating frames with easing algorithms, the engine can further generate high-quality animations.

(Note: the character image in this example is from Mixamo.)
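The interpolation between key poses can be sketched as follows. This is a simplified C# illustration, not what any particular animation engine does: it blends a single pose parameter between two consecutive visemes using smoothstep easing. The `EaseInOut` and `Interpolate` helpers, the "jaw opening" parameter and the sample values are all hypothetical.

```csharp
using System;

// Smoothstep easing: maps linear progress t in [0, 1] to a curve that
// starts and ends with zero velocity.
double EaseInOut(double t)
{
    t = Math.Clamp(t, 0.0, 1.0);
    return t * t * (3.0 - 2.0 * t);
}

// Blends one pose parameter (for example, how far the jaw is open) between
// two key poses. startMs and endMs are the audio offsets of two consecutive
// visemes; nowMs is the current playback time.
double Interpolate(double fromValue, double toValue, double startMs, double endMs, double nowMs)
{
    if (endMs <= startMs)
    {
        return toValue; // Degenerate interval: jump straight to the target pose.
    }
    double progress = (nowMs - startMs) / (endMs - startMs);
    return fromValue + (toValue - fromValue) * EaseInOut(progress);
}

// Hypothetical values: jaw opening moves from 0.2 to 0.8 between 200 ms and 400 ms.
for (double nowMs = 200; nowMs <= 400; nowMs += 50)
{
    Console.WriteLine($"{nowMs}ms: {Interpolate(0.2, 0.8, 200, 400, nowMs):F3}");
}
```

The easing curve is a design choice: smoothstep avoids the abrupt starts and stops of linear interpolation, and an engine could swap in any other easing function.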

Learn more about how to use the viseme feature to enable text-to-speech animation with the tutorial video below.

Get started

With the viseme feature, Azure neural TTS expands its support for more scenarios and enables developers to create an immersive virtual experience with automatic lip sync to synthetic speech.

Let us know how you are using or plan to use Neural TTS voices in this form. If you prefer, you can also contact us at mstts [at] microsoft.com. We look forward to hearing about your experience and to building more compelling services together with developers around the world.

See our documentation for Viseme

Add voice to your app in 15 minutes

Deploy Azure TTS voices on-prem with Speech Containers

Improve synthesis with the Audio Content Creation tool

Visit our Speech page to explore more speech scenarios
